#Generative Adversarial Networks (GANs)


AI multi-speaker lip-sync has arrived

New Post has been published on https://thedigitalinsider.com/ai-multi-speaker-lip-sync-has-arrived/


Rask AI, an AI-powered video and audio localisation tool, has announced the launch of its new Multi-Speaker Lip-Sync feature. With AI-powered lip-sync, 750,000 users can translate their content into 130+ languages to sound as fluent as a native speaker.

For a long time, there has been a lack of synchronisation between lip movements and voices in dubbed content. Experts believe this is one of the reasons why dubbing is relatively unpopular in English-speaking countries. In fact, lip movements make localised content more realistic and therefore more appealing to audiences.

A study by Yukari Hirata, a professor known for her work in linguistics, found that watching lip movements (rather than gestures) helps learners perceive difficult phonemic contrasts in a second language. Lip reading is also one of the ways we learn to speak in general.

Today, with Rask’s new feature, it’s possible to take localised content to a new level, making dubbed videos more natural.

The AI automatically restructures the lower face based on references. It takes into account how the speaker looks and what they are saying to make the end result more realistic.

How it works:

1. Upload a video with one or more people in the frame.
2. Translate the video into another language.
3. Press the ‘Lip Sync Check’ button and the algorithm will evaluate the video for lip sync compatibility.
4. If the video passes the check, press ‘Lip Sync’ and wait for the result.
5. Download the video.

According to Maria Chmir, founder and CEO of Rask AI, the new feature will help content creators expand their audience. The AI visually adjusts lip movements to make a character appear to speak the language as fluently as a native speaker.

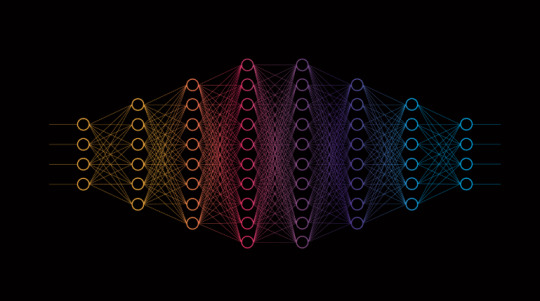

The technology is based on a generative adversarial network (GAN), which consists of a generator and a discriminator. The two networks compete, each trying to stay one step ahead of the other: the generator produces the content (here, lip movements), while the discriminator is responsible for quality control.

The beta release is available to all Rask subscription customers.

(Editor’s note: This article is sponsored by Rask AI)

Tags: ai, artificial intelligence, GAN, Generative Adversarial Network, lip sync, rask, rask ai

#000#ai#ai news#AI-powered#algorithm#applications#Article#artificial#Artificial Intelligence#audio#beta release#CEO#Companies#creators#English#GAN#generative#Generative Adversarial Network#generator#how#intelligence#it#language#Languages#Learn#learning#Linguistics#lip sync#natural#network

2 notes


why did they shove ai research into fontaine

#mfw 'graph adversarial technology' -> generative adversarial networks aka GANs aka real comp sci#and we're helping that researcher gather training data?? 😭😭 what is this 😭 us when we take pictures so she can develop#the newest and greatest facial recognition software for robots 😭#me when i die because gacha game imitates my life too closely#liveblog insanity#genshin impact

2 notes


youtube

STOP Using Fake Human Faces in AI

#GenerativeAI#GANs (Generative Adversarial Networks)#VAEs (Variational Autoencoders)#Artificial Intelligence#Machine Learning#Deep Learning#Neural Networks#AI Applications#CreativeAI#Natural Language Generation (NLG)#Image Synthesis#Text Generation#Computer Vision#Deepfake Technology#AI Art#Generative Design#Autonomous Systems#ContentCreation#Transfer Learning#Reinforcement Learning#Creative Coding#AI Innovation#TDM#health#healthcare#bootcamp#llm#youtube#branding#animation

1 note


A Beginner's Guide to Creating Your Own AI Image Generator

In recent years, the intersection of artificial intelligence (AI) and art has sparked a revolution in creative expression. AI art generation, powered by sophisticated algorithms and neural networks, has enabled artists and enthusiasts alike to explore new realms of creativity and produce mesmerizing artworks that push the boundaries of traditional art forms. The importance of creating your own…


#ai art#ai creativity#ai generator#ai image#art inspiration#Artistic creativity#Creative coding#creative technology#Data augmentation#Deep Learning#Digital Creativity#Ethical AI#Generative Adversarial Networks (GANs)#Machine Learning#neural networks#neural style transfer#Style Transfer

1 note


There is no such thing as AI.

How to help the non-technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an AI image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out that those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from Star Trek, or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)

Language model (LM or LLM): is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)
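To make "probabilities of a series of words" concrete, here is a toy bigram model in Python. It is only a sketch: the three-sentence corpus and the function names are made up for the example, and real LLMs use neural networks over far longer contexts rather than word-pair counts.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw counts after `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}

def sequence_prob(counts, sentence):
    """Probability of a whole sentence: the product of its word-pair probabilities."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(words, words[1:]):
        total = sum(counts[prev].values())
        prob *= counts[prev][nxt] / total if total else 0.0
    return prob

# Hypothetical training text, standing in for "text corpora".
corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
probs_after_the = next_word_probs(model, "the")
```

Here `probs_after_the` gives "cat" twice the probability of "dog", because that is what the training text contained. The model has no notion of meaning, only of which words tend to follow which.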

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)
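The zero-sum game is usually written as a single minimax objective. The notation below is not from the post; it assumes a generator G, a discriminator D that outputs the probability that its input is real, a data distribution p_data, and a noise distribution p_z that the generator samples from.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make V large by scoring real samples near 1 and generated samples near 0, while the generator tries to make the same quantity small by producing samples that D scores as real. Because both players act on the same value, one's gain is the other's loss.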

Diffusion Models: Models that learn the probability distribution of a given dataset so they can generate new samples from it. In image generation, a neural network is trained to remove Gaussian noise that has been added to images. After training is complete, it can be used for image generation by starting with an image of random noise and denoising it step by step. (This is the more common technology behind AI images, including DALL-E and Stable Diffusion. I added this one to the post afterwards, as it was brought to my attention that it is now more common than GANs.)
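The noising and denoising idea can be sketched in a few lines of Python. This is an illustration under assumptions, not how any particular product works: the linear noise schedule, the function names, and the four-number "image" are all invented for the example, and the trained network is replaced by the true noise so that the arithmetic can be checked.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward process: mix the clean signal with Gaussian noise."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps

def estimate_x0(xt, predicted_eps, alpha_bar):
    """Undo the mixing step, given a prediction of the noise that was added."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, predicted_eps)]

rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
x0 = [0.2, -0.5, 0.9, 0.0]  # stand-in for a few pixel values
xt, eps = add_noise(x0, alpha_bars[500], rng)
# A trained network would predict eps from xt; here we cheat and use the true noise.
recovered = estimate_x0(xt, eps, alpha_bars[500])
```

With the true noise, `recovered` matches `x0` up to rounding. In a real diffusion model the network's noise prediction is only approximate, which is why generation proceeds through many small denoising steps rather than one.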

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes


I feel like people don't know my personal history with Machine Learning.

Yes, Machine Learning. What we called it before the marketing folks started calling it "AI".

I studied Graphic Design at university, with a minor in Computer Science. There was no ChatGPT, there was no Stable Diffusion. What there was were primitive Generative Adversarial Networks, or GANs.

I went to one of the head researchers with a proposal: an improvement to a model out of Waseda University that I had thought up. A GAN that could take in a sketch as an input, and output an inked drawing. The idea was to create a new tool for artists to go from sketchy lines to clean linework by applying a filter. The hope was amelioration of the sometimes brutal working conditions of inkers and assistants in manga and anime, by giving them new tools. Naive, looking back, yes. But remember, none of this current Sturm und Drang had occurred yet.

However, we quickly ran into ethics issues.

My job was to create the data set, and we were unable to come up with a way to get the data we needed, because obviously we can't just throw in a bunch of artists' work without permission.

So the project stalled. We couldn't figure out how to license a dataset that was both specific enough and large enough.

Then the capitalists who saw a buck to be made independently decided fuck the ethics, we will do it anyway. And they won the arms race we didn't know we were in.

I cared about Machine Learning before any of y'all did. I had informed opinions on the state of the art, before there was any cultural motion to it to react to.

So yeah. There were people trying to do this ethically. I was one of them. We lost to the people who didn't give a shit.

19 notes


You know, if you think of Amanda and Connor as some kind of humanisation of a Generative Adversarial Network (GAN), it kind of works. Two neural networks, a generator and a discriminator: one acts, and the other critiques it and makes it improve by finding impurities in its work and scrutinising it thoroughly. The resistance between the two continues indefinitely. It's the resentment that both of them need and cannot exist without.

29 notes


this kind of thing would sound so cool if i wasn't extremely jaded about every single word in that title by now

3 notes


The Building Blocks of AI: Neural Networks Explained by Julio Herrera Velutini

What is a Neural Network?

A neural network is a computational model inspired by the human brain’s structure and function. It is a key component of artificial intelligence (AI) and machine learning, designed to recognize patterns and make decisions based on data. Neural networks are used in a wide range of applications, including image and speech recognition, natural language processing, and even autonomous systems like self-driving cars.

Structure of a Neural Network

A neural network consists of layers of interconnected nodes, known as neurons. These layers include:

Input Layer: Receives raw data and passes it into the network.

Hidden Layers: Perform complex calculations and transformations on the data.

Output Layer: Produces the final result or prediction.

Each neuron in a layer is connected to neurons in the next layer through weighted connections. These weights determine the importance of input signals, and they are adjusted during training to improve the model’s accuracy.

How Neural Networks Work

Neural networks learn by processing data through forward propagation and adjusting their weights using backpropagation. This learning process involves:

Forward Propagation: Data moves from the input layer through the hidden layers to the output layer, generating predictions.

Loss Calculation: The difference between predicted and actual values is measured using a loss function.

Backpropagation: The network adjusts weights based on the loss to minimize errors, improving performance over time.
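The three steps above can be sketched with a very small network in plain Python. Everything specific here is an assumption made for illustration: the layer sizes, the tanh activation, the learning rate, and the XOR toy data are not from the article.

```python
import math
import random

def forward(x, W1, b1, W2, b2):
    """Forward propagation: input -> hidden layer (tanh) -> linear output."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sum(w * h for w, h in zip(W2, hidden)) + b2
    return hidden, output

def train(data, hidden_size=3, lr=0.1, epochs=2000, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden_size)]
    b1 = [0.0] * hidden_size
    W2 = [rng.uniform(-1, 1) for _ in range(hidden_size)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            hidden, out = forward(x, W1, b1, W2, b2)
            err = out - y
            total += err ** 2  # loss calculation: squared error
            # Backpropagation: apply the chain rule from the output back to each weight.
            d_out = 2 * err
            for j in range(hidden_size):
                d_hidden = d_out * W2[j] * (1 - hidden[j] ** 2)
                W2[j] -= lr * d_out * hidden[j]
                for i in range(n_in):
                    W1[j][i] -= lr * d_hidden * x[i]
                b1[j] -= lr * d_hidden
            b2 -= lr * d_out
        losses.append(total / len(data))  # average loss for this epoch
    return (W1, b1, W2, b2), losses

# XOR: a classic toy problem that a network without a hidden layer cannot fit.
xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
params, losses = train(xor_data)
```

Plotting or printing `losses` shows the "improving performance over time" part: the average error per epoch should fall as the weights are adjusted, although how far it falls depends on the random starting weights.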

Types of Neural Networks

Several types of neural networks exist, each suited for specific tasks:

Feedforward Neural Networks (FNN): The simplest type, where data moves in one direction.

Convolutional Neural Networks (CNN): Used for image processing and pattern recognition.

Recurrent Neural Networks (RNN): Designed for sequential data like time-series analysis and language processing.

Generative Adversarial Networks (GANs): Used for generating synthetic data, such as deepfake images.

Conclusion

Neural networks have revolutionized AI by enabling machines to learn from data and improve performance over time. Their applications continue to expand across industries, making them a fundamental tool in modern technology and innovation.

3 notes


Digital Humanities Evaluation

'La petite mort' Pilar Rosado - PP-PH 24/25

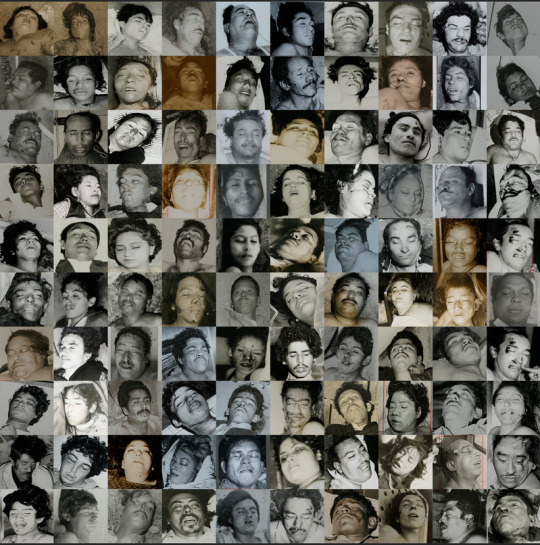

"LA PETITE MORT" is a photographic project that uses AI to generate a collection of images evoking the climactic moment of an orgasm and people who died violent deaths. These collections are generated with a GAN (Generative Adversarial Network) in order to create a more authentic dataset.

Pilar Rosado tries to blur the line between orgasm and death to amplify the irony of the expression "la petite mort", which implies a brief loss of consciousness or a weakening of the mind during the complete release of the tension of arousal. From another point of view, however, Rosado also denounces the way artificial intelligence and GAN image generation are able to imitate reality, from the subjects' expressions to their poses. Moreover, the density and size at which Rosado presents the collection make it harder for the viewer to distinguish between subjects who are dying and those who are having an orgasm. This further illustrates the dangers technology can bring when it imitates life in order to appear human: blurring the lines between the lifeless and the living, the artificial and the natural.

'Can’t Help Myself' Sun Yuan and Peng Yu – PP-MID 24/25

'Can't Help Myself' is a large kinetic sculpture created by Sun Yuan and Peng Yu in 2016. It consists of a robotic arm that moves repeatedly, in rhythmic motions, to sweep up the blood-red cellulose fluid leaking from its inner core, and it has attracted international media attention.

Yuan and Yu drew that attention because of the sculpture's gradual degradation, which becomes a performance: the sculpture begins to slow down and is no longer as effective. Viewers often end up treating the sculpture as a human being, giving it an anthropomorphic quality. They began to sympathise and identify with the lifeless machine as it deteriorated. As a result, the artists' intentions were denounced, with the technology being used as a tool to show how it can imitate life and manipulate human emotions. This shifts the discussion about the ethical purpose of using technology, since technology can be deceptive when it adapts to and manipulates human emotions in order to humanise lifeless things. It is a reminder of the different ways technology can be used with varied intentions.

youtube

Alexander McQueen SS 1999 – PP-AV/Fashion Design 24/25

McQueen's spring-summer 1999 show was a revolutionary fashion statement that combined fashion and technology in its finale. Model Shalom Harlow wore a white dress and stood on a rotating platform installed on the runway while robots sprayed her with paint.

Inspired by Rebecca Horn's sculpture "High Moon", McQueen created a kind of symmetry in the composition. He borrowed two machines from a car manufacturing plant and specified how they should move, joint by joint, in the manner of spitting cobras. This interaction between machine and fashion could be a way of denouncing the growing supremacy of machines in the world of work, particularly in the fashion industry. Another interpretation is that McQueen was simply using the machines to make a visual statement about fashion and to do the unthinkable. Using machines to spray paint onto Shalom Harlow was certainly a striking and memorable image, and it prompted many different interpretations among viewers and critics.

In the end, it is up to each person to interpret McQueen's intentions. It is clear, however, that using machines to spray paint onto Shalom Harlow was a powerful and thought-provoking statement that still resonates today.

2 notes


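Convolutional Neural Networks (CNN): CNNs are the big guns of the computer vision world. Thanks to their specialised layers, they have a talent for recognising spatial patterns in images. This ability makes them good at recognising images, finding the objects in them, and classifying what they see. They are the reason your phone can tell dogs from cats in your photos.

Recurrent Neural Networks (RNN): RNNs have a kind of memory, which makes them well suited to anything involving sequences of data, such as sentences, DNA sequences, handwriting, or stock market trends. They loop information back so that they can remember earlier inputs in the sequence. That makes them good at tasks such as predicting the next word in a sentence or understanding spoken language.

Long Short-Term Memory Networks (LSTM): LSTMs are a special kind of RNN built to remember things over long periods. They are designed to solve the problem of RNNs forgetting content over long sequences. For complex tasks that require holding on to information for a long time, such as translating a paragraph or predicting what happens next in a TV series, LSTMs are the best fit.

Generative Adversarial Networks (GAN): Imagine a cat-and-mouse game between two AIs. One generates fake data (such as images), and the other tries to catch what is fake and what is real. That is a GAN. This setup lets GANs create incredibly realistic images, music, text, and more. They are the artists of the neural network world, generating new, realistic data from scratch.

The Math Behind Neural Networks | Towards Data Science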

6 notes


youtube

MIND-BLOWING Semantic Data Secrets Revealed in AI and Machine Learning

#GenerativeAI#GANs (Generative Adversarial Networks)#VAEs (Variational Autoencoders)#Artificial Intelligence#Machine Learning#Deep Learning#Neural Networks#AI Applications#CreativeAI#Natural Language Generation (NLG)#Image Synthesis#Text Generation#Computer Vision#Deepfake Technology#AI Art#Generative Design#Autonomous Systems#ContentCreation#Transfer Learning#Reinforcement Learning#Creative Coding#AI Innovation#TDM#health#healthcare#bootcamp#llm#branding#youtube#animation

1 note


Exploring Generative AI: Unleashing Creativity through Algorithms

Generative AI, a fascinating branch of artificial intelligence, has been making waves across various fields from art and music to literature and design. At its core, generative AI enables computers to autonomously produce content that mimics human creativity, leveraging complex algorithms and vast datasets.

One of the most compelling applications of generative AI is in the realm of art. Using techniques such as Generative Adversarial Networks (GANs) or Variational Autoencoders (VAEs), AI systems can generate original artworks that blur the line between human and machine creativity. Artists and researchers alike are exploring how these algorithms can inspire new forms of expression or augment traditional creative processes.

In the realm of music, generative AI algorithms can compose melodies, harmonies, and even entire pieces that resonate with listeners. By analyzing existing compositions and patterns, AI can generate music that adapts to different styles or moods, providing musicians with novel ideas and inspirations.

Literature and storytelling have also been transformed by generative AI. Natural Language Processing (NLP) models can generate coherent and engaging narratives, write poetry, or even draft news articles. While these outputs may still lack the depth of human emotional understanding, they showcase AI's potential to assist writers, editors, and journalists in content creation and ideation.

Beyond the arts, generative AI has practical applications in fields like healthcare, where it can simulate biological processes or generate synthetic data for research purposes. In manufacturing and design, AI-driven generative design can optimize product designs based on specified parameters, leading to more efficient and innovative solutions.

However, the rise of generative AI also raises ethical considerations, such as intellectual property rights, bias in generated content, and the societal impact on creative industries. As these technologies continue to evolve, it's crucial to navigate these challenges responsibly and ensure that AI augments human creativity rather than replacing it.

In conclusion, generative AI represents a groundbreaking frontier in technology, unleashing new possibilities across creative disciplines and beyond. As researchers push the boundaries of what AI can achieve, the future promises exciting developments that could redefine how we create, innovate, and interact with technology in the years to come.

If you want to become a Generative AI Expert in India join the Digital Marketing class from Abhay Ranjan

3 notes


Different NN architectures often have different training functions. For GANs (the previous generation of AI art), the training function was "make an image that your adversarial network can't tell is fake", while for diffusion models (the current generation of AI art), it is "please restore this noisy image to its original".

isn't that generalising the training set in both cases? like that's my entire point, the training method and architecture might differ but the objective is the same: construct a function that accurately represents a given data set.

9 notes


The Role of AI in Music Composition

Artificial Intelligence (AI) is revolutionizing numerous industries, and the music industry is no exception. At Sunburst SoundLab, we use different AI-based tools to create music that unites creativity and innovation. But how exactly does AI compose music? Let's dive into the fascinating world of AI-driven music composition and explore the techniques used to craft melodies, rhythms, and harmonies.

How AI Algorithms Compose Music

AI music composition relies on advanced algorithms that mimic human creativity and musical knowledge. These algorithms are trained on vast datasets of existing music, learning patterns, structures and styles. By analyzing this data, AI can generate new compositions that reflect the characteristics of the input music while introducing unique elements.

Machine Learning

Machine learning algorithms, particularly neural networks, are crucial in AI music composition. These networks are trained on extensive datasets of existing music, enabling them to learn complex patterns and relationships between different musical elements. Using techniques like supervised learning and reinforcement learning, AI systems can create original compositions that align with specific genres and styles.

Generative Adversarial Networks (GANs)

GANs consist of two neural networks: a generator and a discriminator. The generator creates new music pieces, while the discriminator evaluates them. Through this iterative process, the generator learns to produce music that is increasingly indistinguishable from human-composed pieces. GANs are especially effective in generating high-quality and innovative music.

Markov Chains

Markov chains are statistical models used to predict the next note or chord in a sequence based on the probabilities of previous notes or chords. By analyzing these transition probabilities, AI can generate coherent musical structures. Markov chains are often combined with other techniques to enhance the musicality of AI-generated compositions.
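As a rough sketch of the idea, the Python below builds a first-order Markov chain from a short melody and samples a new one from it. The note names, the example melody, and the function names are invented for illustration and do not describe any particular tool.

```python
import random
from collections import Counter, defaultdict

def build_transitions(melody):
    """Count how often each note is followed by each other note."""
    counts = defaultdict(Counter)
    for current, nxt in zip(melody, melody[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts, start, length, rng):
    """Walk the chain, picking each next note in proportion to its count."""
    notes = [start]
    for _ in range(length - 1):
        options = counts.get(notes[-1])
        if not options:
            break  # no known continuation for this note
        choices, weights = zip(*options.items())
        notes.append(rng.choices(choices, weights=weights, k=1)[0])
    return notes

# Hypothetical training melody.
melody = ["C", "D", "E", "C", "D", "G", "E", "C"]
table = build_transitions(melody)
new_melody = generate(table, "C", 8, random.Random(1))
```

Every step in `new_melody` is a transition that appeared in the training melody, which is why the output sounds locally plausible. The chain only looks one note back, so it has no sense of phrases or overall structure, which is one reason it tends to be combined with other techniques.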

Recurrent Neural Networks (RNNs)

RNNs, and their advanced variant Long Short-Term Memory (LSTM) networks, are designed to handle sequential data, making them ideal for music composition. These networks capture long-term dependencies in musical sequences, allowing them to generate melodies and rhythms that evolve naturally over time. RNNs are particularly adept at creating music that flows seamlessly from one section to another.

Techniques Used to Create Melodies, Rhythms, and Harmonies

Melodies

AI can analyze pitch, duration and dynamics to create melodies that are both catchy and emotionally expressive. These melodies can be tailored to specific moods or styles, ensuring that each composition resonates with listeners.

Rhythms

AI algorithms generate complex rhythmic patterns by learning from existing music. Whether it’s a driving beat for a dance track or a subtle rhythm for a ballad, AI can create rhythms that enhance the overall musical experience.

Harmonies

Harmony generation involves creating chord progressions and harmonizing melodies in a musically pleasing way. AI analyzes the harmonic structure of a given dataset and generates harmonies that complement the melody, adding depth and richness to the composition.

The role of AI in music composition is a testament to the incredible potential of technology to enhance human creativity. As AI continues to evolve, the possibilities for creating innovative and emotive music are endless.

Explore our latest AI-generated tracks and experience the future of music. 🎶✨

#AIMusic#MusicInnovation#ArtificialIntelligence#MusicComposition#SunburstSoundLab#FutureOfMusic#NeuralNetworks#MachineLearning#GenerativeMusic#CreativeAI#DigitalArtistry

2 notes
