#Text Generation

Explore tagged Tumblr posts

Text

Like we needed this...

#AI#Text generation#when techbros decide that publishing brainrot written by robots makes for good content

4 notes

Text

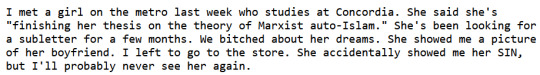

"I Met Somebody In Montreal"

by me

(you, too, can "meet" "somebody" "in" "Montreal" here!)

An html text generator about what it feels like to live in this strange city.

#visual poetry#poetry#poets on tumblr#text generation#experimental poetry#computer poetry#montreal#everyone has met at least one of these people... right?#i made th

2 notes

Text

i dont want natural language generators to be coached into sounding like me and being endlessly apologetic i want them to say weird and meaningless words i want them to show the randomness of statistics and the patterns of language and the emptiness below it i want to see beautiful colors spread across a canvas not a derivative recreation of a mondrian

2 notes

Text

🎉🎊Big News!!🎊🎉

So I'm sure most of you have noticed that I haven't been posting anything Vex-related in a while. I have an explanation for that. I recently got into Minecraft and I have a pretty big project planned. In case you didn't know, animation has been my dream since I was younger. Well, I have been experimenting with Blender and the add-on MCprep. Someone told me about a program called Mine-imator. I know what you are thinking... since it is so easy, it is not a true animation program. Well, in all honesty, it is perfect for me since I can get the hang of animating, and whatever I make in Mine-imator I can then export over to Blender. I loved the animations that you can make using Mine-imator. I have also been in the process of making this huge Minecraft AU while using a role-play text generator. Now just hear me out here. I know that some of you are against that kind of thing, but I only used it to help me flesh out my universe and to give me ideas. It also helped my writing skills immensely and helped me discover my writing style. Please stick with me and bear with me, I promise you that it will be worth your while. I am also going to be making a separate Tumblr for all my Minecraft stuff, including animations and my AU. I look forward to making this and I hope everyone will enjoy my content.

#minecraft#animation#au#alternate universe#text generation#action#adventure#drama#comfort#romance#hurt/comfort#heartbreak#oc#emotions

2 notes

Text

God i need this!!!

burning text gif maker

heart locket gif maker

minecraft advancement maker

minecraft logo font text generator w/assorted textures and pride flags

windows error message maker (win1.0-win11)

FromSoftware image macro generator (elden ring Noun Verbed text)

image to 3d effect gif

vaporwave image generator

microsoft wordart maker (REALLY annoying to use on mobile)

you're welcome

261K notes

Text

You ever have an idea so dumb you drop everything to draw it

#get cheesed idiot#my art#feat uncharacteristically on-model Shadow#sonic#sonic the hedgehog#comic#shadow the hedgehog#shadow#maria robotnik#gerald robotnik#professor gerald robotnik#ark siblings#sonic x shadow generations#shadow generations#sonic movie 3#id in alt text

14K notes

Text

Is ChatGPT replacing writers?

Humans should realize that relying on conventional content generated by ChatGPT is likely to encourage procrastination, weaken memory, and reduce our academic abilities.

Ever since ChatGPT took on the role of providing great conversations to humans, humans have stopped relying on their own intellect. ChatGPT is a new hope for people who find it difficult to produce novel content from time to time. "Just ChatGPT things" are becoming popular: people find it super easy to give prompts to the chat app and get answers that look great and satisfy the intellect. My…

#ai assistant#chat bot#chatgpt#content creation#Education#future#Humans#job at risk#philosophy of life#small changes#teaching#text generation#virtual assistant#writing#writing assistant

1 note

Photo

Reblogging with the actual link: https://cooltext.com/Logo-Design-Burning

379K notes

Text

youtube

STOP Using Fake Human Faces in AI

#GenerativeAI#GANs (Generative Adversarial Networks)#VAEs (Variational Autoencoders)#Artificial Intelligence#Machine Learning#Deep Learning#Neural Networks#AI Applications#CreativeAI#Natural Language Generation (NLG)#Image Synthesis#Text Generation#Computer Vision#Deepfake Technology#AI Art#Generative Design#Autonomous Systems#ContentCreation#Transfer Learning#Reinforcement Learning#Creative Coding#AI Innovation#TDM#health#healthcare#bootcamp#llm#youtube#branding#animation

1 note

Text

Sometimes...characters being in a romantic relationship is worse.

#text#sometimes it's the most boring way to have characters interact#sometimes it's worse than the chemistry they have as literally any other kind of relationship#sometimes their platonic dynamic is better#sometimes their hostile dynamic is better#sometimes their dynamic is better if it's anything other than romance#because maybe them falling into generic romance is how you ruin their characters.#aro#aromantic#aroace#i only tag these because it feels relevant to why i feel this way

23K notes

Text

Fun fact: a lot of generative AI for text works by predicting the next word from a dataset, so if you want to communicate something humans can understand but that will corrupt the data, you could encode it. Like maybe alternate words between two sentences.

Simple It encoding isn’t methods quite like as this effective can as corrupt pure a gibberish dataset because for topics computers and but frequently if used we words communicate are what still we usable are data using but it word will order screw doesn’t up work the with word this order.

Random Though text alphabetical unfortunately codes isn’t adjusted super to likely work to on work a because set the dictionary definition could of be a really real effective word if in you the want program to can encode be things determined people by can scanning decode dictionaries.

If But you I do know that it last could part be I easier want to suggest use urban something dictionary standard bc like it Merriam would Webster be or so maybe funny Wikipedia and to break use the an data online so one bad.
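The alternating-word trick used in the three paragraphs above can be sketched in a few lines of Python. This is only an illustration of the idea as described in the post, not anyone's actual tool: the function names `interleave` and `deinterleave` are made up here, and the decoder assumes the first sentence has either the same number of words as the second or exactly one more (which is how the paragraphs above happen to be built).

```python
from itertools import zip_longest


def interleave(first: str, second: str) -> str:
    """Alternate the words of two sentences: f1 s1 f2 s2 ..."""
    pairs = zip_longest(first.split(), second.split())
    # zip_longest pads the shorter sentence with None; drop the padding.
    return " ".join(word for pair in pairs for word in pair if word is not None)


def deinterleave(mixed: str) -> tuple[str, str]:
    """Recover both sentences by taking every other word.

    Assumption: the first sentence is the same length as the second,
    or exactly one word longer. Otherwise the leftover words land in
    the wrong slots and plain striding no longer works.
    """
    words = mixed.split()
    return " ".join(words[0::2]), " ".join(words[1::2])


mixed = interleave("the cat sat down", "a dog ran off")
print(mixed)                # the a cat dog sat ran down off
print(deinterleave(mixed))  # ('the cat sat down', 'a dog ran off')
```

Reading every other word by eye is the human version of `deinterleave`, which is why the scheme stays legible to people who know the trick.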

Apparently they're selling post content to train AI now so let us be the first to say, flu nork purple too? West motor vehicle surprise hamster much! Apple neat weed very crumgible oysters in a patagonia, my hat. Very of the and some then shall we not? Much jelly.

56K notes

Text

was talking with a friend about how some of dunmeshi fandom misunderstands kabru's initial feelings towards laios.

to sum up kabru's situation via a self-contained modernized metaphor:

kabru is like a guy who lost his entire family in a highly traumatic car accident. years later he joins a discord server and takes note of laios, another server member who seems interesting, so they start chatting. then laios reveals his special interest and favorite movie of all time is David Cronenberg's Crash (1996), and invites kabru to go watch a demolition derby with him

#dungeon meshi#delicious in dungeon#kabru#kabru already added laios as a discord friend. everyone else in the server can see laios excitedly asking kabru to go with him#what would You even Do in this situation. how would YOU feel?#basically: kabru isnt a laios-hater! hes just in shock bc Thats His Trauma. the key part is kabru still says yes#bc he wants to get to know laios. to understand why laios would be so fascinated by something horrific to him#and ALSO bc even while in shock kabru can still tell laios has unique expertise + knowledge that Could be used for Good#even if kabru doesnt fully trust laios yet (bc kabru just started talking to the guy 2 hours ago. they barely know each other)#kabru also understands that getting to know ppl (esp laios) means having to get to know their passions. even if it triggers his trauma here#but thats too much to fit in this metaphor/analogy. this is NOT an AU! its not supposed to cover everything abt kabru or laios' character!#its a self-contained metaphor written Specifically to be more easily relatable+thus easy to understand for general ppl online#(ie. assumed discord users. hence why i said (a non-specific) 'discord server' and not something specific like 'car repair subreddit')#its for ppl who mightve not fully grasped kabru's character+intentions and think hes being mean/'chaotic'/murderous.#to place ppl in kabru's shoes in an emotionally similar situation thats more possible/grounded in irl experiences and contexts.#and also for the movie punchline#mynn.txt#dm text#crossposting my tweets onto here since my friends suggested so

13K notes

Text

I've seen "I don't know how to play with toys anymore" a few times lately and just wanted to point out-

Playing with toys looks different for everyone, even actual children!

Playing with toys can look like: 🧸🪁🚂

Taking pictures of your toys and writing captions for them

Brushing or grooming soft toys or toys with rooted hair

Ordering or sorting your toys by colour/species etc

Making up stories, poems or comics about your toys

Dressing or accessorising your toys

Imagining your toys talking to you or each other, forming opinions of their own, etc

Drawing your toys

Taking your toys for a walk outside, even in a backpack or pocket if you don't want to carry them openly

Making lists of the toys you have and where you got them etc

Feel free to add your own ideas

#removing the agere part of the original text to make it more general but it still stands#since I saw at least one 'not agere but-' on this and wanted to make it more broad#Hester rambles#I don't know if this will be relevant to any of you but just in case#let's tag this why not#plushies#stuffies#toycore#kidcore#plushcore#agere#sfw agere#agedre#age dreaming

11K notes

Text

i love learning cursive just to write text for exactly one character

#fun umbral lore. i can barely read cursive#if you want to hide anything from me then write it in cursive and i will literally never be able to read it#or write it. i had to google cursive text generator and copy it for this#ill settle on textbox designs also eventually#god its been so long since i've drawn the manor gang i think#saw this post and i immediately thought “cyn”#it has nothing to do with her being my number 1 blorbo. bite me#murder drones#art#murder drones n#murder drones v#murder drones j#murder drones cyn#serial designation n#serial designation j#serial designation v#they're so gay also they blushed immediately after this and made out probably im still torn between like 5 different ships#curse you fanfics for putting these ideas in my head

9K notes
