#Introduction to Algorithms and Data Structures


Text

What do we mean by worst-case performance of an algorithm?

The worst-case performance of an algorithm refers to the scenario in which the algorithm performs the least efficiently, taking the maximum amount of time or resources among all possible inputs of a given size. In other words, it describes how the algorithm behaves on the "worst" possible input it could encounter.

When analyzing the worst-case performance of an algorithm, you assume that the input data is specifically chosen or structured to make the algorithm perform as poorly as possible. This analysis is crucial because it provides a guarantee that, regardless of the input data, the algorithm will not perform worse than what is described by its worst-case time or space complexity.

For example, in the context of sorting algorithms, if you are analyzing the worst-case performance of an algorithm like Bubble Sort, you would consider a scenario where the input array is arranged in reverse order. This is the worst-case scenario for Bubble Sort because it requires the maximum number of comparisons and swaps.
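The Bubble Sort example can be checked with a small sketch. The function name `bubble_sort_counts` and the sample inputs are illustrative assumptions, and this version includes the common early-exit optimization, which the post does not mention.

```python
def bubble_sort_counts(items):
    """Sort a copy of items with Bubble Sort and count the work done.

    Returns (sorted_list, comparisons, swaps).
    """
    data = list(items)
    comparisons = 0
    swaps = 0
    n = len(data)
    for i in range(n - 1):
        swapped = False
        # After pass i, the last i elements are already in place.
        for j in range(n - 1 - i):
            comparisons += 1
            if data[j] > data[j + 1]:
                data[j], data[j + 1] = data[j + 1], data[j]
                swaps += 1
                swapped = True
        if not swapped:
            # No swaps in a full pass means the list is sorted.
            break
    return data, comparisons, swaps


print(bubble_sort_counts([1, 2, 3, 4, 5]))  # already sorted: one pass, no swaps
print(bubble_sort_counts([5, 4, 3, 2, 1]))  # reversed: every comparison swaps
```

On the reversed list of five items, every one of the 10 comparisons leads to a swap, while the already sorted list needs only a single pass of 4 comparisons.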

In Big O notation, we express the worst-case time complexity of an algorithm using the notation O(f(n)), where "f(n)" is a function describing an upper bound on the runtime of the algorithm for a given input size "n." For example, if an algorithm has a worst-case time complexity of O(n^2), it means that the algorithm's runtime grows at most quadratically with the input size in the worst-case scenario.
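To see what quadratic growth looks like, here is a minimal sketch that counts the steps of a nested loop with the same shape as a worst-case Bubble Sort. The function name `worst_case_comparisons` and the chosen sizes are assumptions made for illustration.

```python
def worst_case_comparisons(n):
    """Count the inner-loop steps of a Bubble-Sort-shaped nested loop on n items."""
    count = 0
    for i in range(n - 1):
        for j in range(n - 1 - i):
            count += 1
    return count


for n in (10, 20, 40):
    # The count equals n * (n - 1) / 2, which is O(n^2).
    print(n, worst_case_comparisons(n))
```

The counts are 45, 190, and 780: each time the input size doubles, the work roughly quadruples, which is the signature of O(n^2) growth.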

Analyzing and understanding worst-case performance is important in algorithm design and analysis because it provides a guarantee that an algorithm will not exceed a certain level of inefficiency or resource usage, regardless of the input. It allows developers to make informed decisions about algorithm selection and optimization to ensure that an algorithm behaves predictably and efficiently in all situations.


Text

What is ServiceNow | Introduction | User Interface | Application & Filter Navigation | Complete Course


ServiceNow is designed with intelligent systems that speed up work by bringing structure to unstructured work patterns. Every employee, customer, and machine in the enterprise is connected to ServiceNow, so requests can be made on a single cloud platform. The divisions handling those requests can assign, prioritize, and correlate them, get down to root-cause issues, gain real‑time insights, and drive action. This workflow helps employees work better, which in turn improves service levels. ServiceNow provides cloud services for the entire enterprise. This module covers the User Interface and Navigation. Its objective is to teach beginners how to navigate to applications and modules in ServiceNow using the Application and Filter Navigators, how to create views and filters for a table list, and how to update records using inline editing.

#introduction#ServiceNow#Courses#Free Training#Tutorials#Programming#Data Structure#Algorithms#Computer Science#Tips#Demos#ServiceNow Fundamentals#What is service now#Service now tutorial for beginners#Servicenow Online Tutorial#Service now introduction#Youtube


Text

Unwanted: Chapter 22, Untold - Pt. 1

Pairing: Bucky Barnes x Avenger!Fem!Reader

Summary: When your FWB relationship with your best friend Bucky Barnes turns into something more, you couldn’t be happier. That is, however, until a new Avenger sets her sights on your super soldier and he inadvertently breaks your heart. You take on a mission you might not be prepared for to put some distance between the two of you and open yourself up to past traumas. Too bad the only one who can help you heal is the one person you can no longer trust.

Warnings: (For this part only; see Story Masterlist for general Warnings) Language, alcohol consumption, strippers

Word Count: 1.5k

Previously On...: Tony expressed his concerns about you going on this mission.

A/N: When Tony Met Pocket!

NOTE! The tag list is a fickle bitch, so I'm not really going to be dealing with it anymore. If you want to be notified when new story parts drop, please follow @scoonsaliciousupdates

Banner By: The absolutely amazing @mrsbuckybarnes1917!

Thank you to all those who have been reading; if you like what you've read, likes, comments, and reblogs give me life, and I truly appreciate them, and you!

Taglist: (Sadly, tag list is closed; Tumblr will not let me add anyone new. If you want to be notified when I update, please Follow me for Notifications!) @jmeelee @cazellen @mrsbuckybarnes1917 @blackhawkfanatic @buckybarnessimpp @hayjat @capswife @itsteambarnes @marygoddessofmischief @sebastians-love @learisa @lethallyprotected @rabbitrabbit12321 @buckybarnesandmarvel @fanfictiongirl77 @calwitch @fantasyfootballchampion @selella @jackiehollanderr @wintercrows @sashaisready @missvelvetsstuff @angelbabyyy99 @keylimebeag @maybefoxysouls @vicmc624 @j23r23 @crist1216 @cjand10 @pattiemac1 @les-sel @dottirose @winterslove1917 @harperkenobi @ivet4 @casey1-2007 @mrsevans90 @steeph-aniie @bean-bean2000 @beanbagbitch @peachiestevie @wintrsoldrluvr @shadowzena43

Tumblr will not let me directly tag the following: @marcswife21 @erelierraceala @jupiter-107 @doublejeon @hiqhkey @unaxv @brookeleclerc

Boston, 2002

The bass inside the club was pounding, reverberating through the air and your skull as you made your way onto the floor. The day had already been unbearably long, and after your shift tonight, you still had a mountain of reading to do for your Introduction to Data Structures and Algorithms class. But, MIT courses didn’t come cheap, even at two classes a semester, and you needed every penny you could make from your shifts at Beantown Burlesque. It would make more sense, financially, to work a club closer to the college, but the idea of running into any of your classmates or, god forbid, your professors, made the extra time and money you spent commuting from Cambridge to inner Boston completely worth it.

Not that you expected a lot of tips tonight. It would have been better if you’d been scheduled to work the stage before they sent you to the floor; you were always requested for more lap dances after the patrons had seen you work the pole. You’d just have to work your ass off to entice a couple of lonely men into the VIP booth. But that always came with the additional task of fighting off requests for additional “services.” You may have been desperate for cash, but you were quite done with having your body sold for money, thank you.

You made your way over to the bar, hoping to get some intel on tonight’s patrons so you could shoot your best shot.

“How’s it goin’ tonight, Cherry Pie?” the bartender, Mac, asked, using the pseudonym you’d chosen for your stage name when you started at the club a year ago.

“No complaints yet, Mac,” you said, gratefully accepting the glass of water he offered you– it was important to stay hydrated, after all, “but then again, the night is very young.”

Mac let out a gruff laugh as he wiped down a glass. “You’re too young to be so cynical, Cherr,” he said.

You shrugged. That was an understatement. “Any good prospects tonight?” you asked, leaning your elbows on the bartop.

Mac nodded his chin toward a group of young men sitting close to the stage. “That group over there’s racked up a pretty big tab so far. Think they’re from the MIT alumni conference.” That piqued your interest. Beantown Burlesque might not be the ideal place to network, but you’d honestly take whatever you could get.

“They seem decent enough?” you asked Mac.

“About as decent as any group of blokes that come here,” he offered. “But they’ve been pretty respectful so far; no one’s tried to put hands anywhere they shouldn’t.”

“Good enough for me,” you told him. With a parting wave, you sauntered over to the group, making sure to put some extra sway in your hips. As you approached, you surveyed the collection of men. They all seemed to be centering their focus on one man in particular– he was dark haired with a goatee and wearing a pair of tinted glasses and looked vaguely familiar, though you couldn’t place where you might have seen him before. You clocked his expensive loafers and custom Armani suit, and the way the others around him laughed a little too loudly at what he was saying.

That’s the one, you thought to yourself. He had the money. If you were going to make your rent on time this month, he was the one you’d need to impress.

“You boys fancy some company tonight?” you asked once you approached the group. The man with the goatee leaned forward, a sure sign of interest, and looked at you over the lens of his glasses.

“Well, gorgeous,” he said with a smirk, “we're not ones to turn down an offer for good companionship, especially from someone as captivating as you. But let's be real, the question is whether you can keep up with us. Think you're ready for the challenge?”

Oh, this one was cocky. You could work with that. You trailed your fingertips along the tops of his shoulders as you made your way around to the table in front of him. Without breaking eye contact, you picked up the double shot of whiskey sitting there and downed the entire thing in one swig without flinching.

The other men in the group whooped and hollered at your display, but the man with the goatee just studied you with a peculiar look on his face. “What’s your name, sweetheart?” he asked.

“You can call me Cherry Pie,” you said as you began swaying your hips to the rhythm of the music coming through the speakers.

“I didn’t ask what they call you here,” he said, leaning back as you put your hands on his shoulder and began swaying in between his legs. “I asked for your name.”

“You haven’t spent nearly enough to earn that, honey,” you said as you gyrated.

The man laughed at that, then, reaching for his wallet, pulled out a handful of crisp, one hundred dollar bills. He gently tucked them into the waistband of your bottoms. “How’s that?”

You looked at the bills tucked into your underwear. By your guess, there was about eight hundred dollars there. You just might make rent, after all. “It’s a start,” you shrugged, beginning your tried and true lap dance routine.

One of the other men in the group let out a loud laugh. “She’s sure got your number, Stark!”

At the name, your eyes shot to the man with the goatee’s face, and it suddenly clicked for you. “Holy shit,” you breathed. “You’re Tony Stark.”

Stark smiled. “Guilty as charged, sweetheart.”

“Your company’s network security sucks ass,” you told him, the words coming out of your mouth before you could stop them.

He quirked an eyebrow at that. “Excuse me?”

Fuck. “Uh, nothing, sorry. Forget I said anything.” You put a renewed vigor back into your dance.

“Um, no,” Stark said, grasping your wrist firmly enough to encourage you to stop dancing, but gently enough to let you know he posed you no threat. “I want to hear how a stripper knows the faults of my network security.”

You blushed at that. “I, uh, may have broken in the back door and temporarily held your system hostage for ten minutes last May,” you confessed.

“That was you?” Stark exclaimed. If you didn’t know any better, you’d think he sounded… impressed. “You paralyzed our entire operation!”

“Yeah… sorry about that.” Well, you could kiss any further tips goodbye, that was for sure.

“Why’d you relinquish control back to us?” he asked. “You could have held it for ransom; we would have paid whatever you asked for.”

Huh. You had never even considered doing that. “Well, um, actually, I did it as part of a final project? For my Engineering Ethics and Professionalism course at MIT?”

Stark cocked his head at you. “With Erickson?” You nodded, and Stark actually laughed. “He still a narcissistic son of a bitch?”

You chuckled and nodded. “Sexist, too. He nearly shat a brick when he had to watch a mere girl bring a Fortune 500 company to its knees.”

Stark laughed, heartily. “I’ll bet he did! What I wouldn’t have given to see his face!”

“I set up a camera to record it,” you told him. “I can make you a copy of the VHS, if you want. I needed to capture the moment for posterity.”

From there, the atmosphere and your position in the group shifted. You were no longer the entertainment. Tony (he insisted you call him that) invited you to join him as his equal, and for the next several hours, he picked your brain, testing your knowledge and asking you questions about yourself, much to the displeasure of the rest of his group. One by one, they departed, until it was just the two of you. You were having the time of your life. You figured you’d never again have the opportunity to sit back and just hang out with such an icon of the tech community, and you were going to make the most of it. Now, here you were playing a game of Never Have I Ever.

“Never have I ever sheared a sheep,” Tony said with a grin.

“Why, Mr. Stark,” you said, bringing your glass to your lips (you failed to mention that, technically, you weren’t legally old enough to drink), “you haven’t truly lived until you’ve shorn the raw wool from an unwilling ewe.”

“You’re shitting me,” Tony said, laughing.

You took the glass from your lips without drinking. “You got me,” you told him. “I grew up in Dayton. Not a whole lotta opportunities for sheep shearing there.”

A mischievous glint came into Tony’s eyes. “Your shift’s got to be almost over,” he said. “What do you say, Cherry Pie? Wanna go shear a sheep?”

“(Y/N),” you told him. “My name’s (Y/N), and I would fucking love to.”


#bucky barnes x reader#bucky barnes x you#james bucky buchanan barnes#bucky barnes x y/n#bucky x you#bucky x reader#bucky fanfic#bucky barnes#bucky barnes fanfic#bucky x female reader#bucky barnes fic#bucky barnes fanfiction#james buchanan barnes#mcu bucky barnes#james barnes


Text

Free online courses for bioinformatics beginners

🔬 Free Online Courses for Bioinformatics Beginners 🚀

Are you interested in bioinformatics but don’t know where to start? Whether you're from a biotechnology, biology, or computer science background, learning bioinformatics can open doors to exciting opportunities in genomics, drug discovery, and data science. And the best part? You can start for free!

Here’s a list of the best free online bioinformatics courses to kickstart your journey.

📌 1. Introduction to Bioinformatics – Coursera (University of Toronto)

📍 Platform: Coursera 🖥️ What You’ll Learn:

Basic biological data analysis

Algorithms used in genomics

Hands-on exercises with biological datasets

🎓 Why Take It? Ideal for beginners with a biology background looking to explore computational approaches.

📌 2. Bioinformatics for Beginners – Udemy (Free Course)

📍 Platform: Udemy 🖥️ What You’ll Learn:

Introduction to sequence analysis

Using BLAST for genomic comparisons

Basics of Python for bioinformatics

🎓 Why Take It? Short, beginner-friendly course with practical applications.

📌 3. EMBL-EBI Bioinformatics Training

📍 Platform: EMBL-EBI 🖥️ What You’ll Learn:

Genomic data handling

Transcriptomics and proteomics

Data visualization tools

🎓 Why Take It? High-quality training from one of the most reputable bioinformatics institutes in Europe.

📌 4. Introduction to Computational Biology – MIT OpenCourseWare

📍 Platform: MIT OCW 🖥️ What You’ll Learn:

Algorithms for DNA sequencing

Structural bioinformatics

Systems biology

🎓 Why Take It? A solid foundation for students interested in research-level computational biology.

📌 5. Bioinformatics Specialization – Coursera (UC San Diego)

📍 Platform: Coursera 🖥️ What You’ll Learn:

How bioinformatics algorithms work

Hands-on exercises in Python and Biopython

Real-world applications in genomics

🎓 Why Take It? A deep dive into computational tools, ideal for those wanting an in-depth understanding.

📌 6. Genomic Data Science – Harvard Online (edX)

📍 Platform: edX 🖥️ What You’ll Learn:

RNA sequencing and genome assembly

Data handling using R

Machine learning applications in genomics

🎓 Why Take It? Best for those interested in AI & big data applications in genomics.

📌 7. Bioinformatics Courses on BioPractify (100% Free)

📍 Platform: BioPractify 🖥️ What You’ll Learn:

Hands-on experience with real datasets

Python & R for bioinformatics

Molecular docking and drug discovery techniques

🎓 Why Take It? Learn from domain experts with real-world projects to enhance your skills.

🚀 Final Thoughts: Start Learning Today!

Bioinformatics is a game-changer in modern research and healthcare. Whether you're a biology student looking to upskill or a tech enthusiast diving into genomics, these free courses will give you a strong start.

📢 Which course are you excited to take? Let me know in the comments! 👇💬

#Bioinformatics#FreeCourses#Genomics#BiotechCareers#DataScience#ComputationalBiology#BioinformaticsTraining#MachineLearning#GenomeSequencing#BioinformaticsForBeginners#STEMEducation#OpenScience#LearningResources#PythonForBiologists#MolecularBiology


Text

Day 2 : Rust and Data Structure

This is my second post, and I am realizing that documenting my learning progress in a blog is really hard for me. I studied error handling in Rust and watched the second lecture from the Algorithms course, and I understood most of it. But when it comes to writing the blog, my brain doesn't know what to write or how to write it.

What did I learn? What challenges did I face? What did I find interesting? Key takeaways?

YEAH RIGHT!! I KNOW THEM. BUT IT'S JUST NOT COMING TO ME AT THE TIME OF WRITING.

Anyways, I still tried!!! and I will do it again tomorrow.

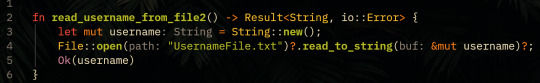

🦀 Rust Book: Error Handling

Today, I studied the 9th chapter of the Rust book, Error Handling. Rust groups errors into two categories: recoverable and unrecoverable.

Rust has the Result type, which is an enum with two variants, Ok and Err. I also got to know the ? operator, which at first looked like magic; it’s a shortcut for passing errors up the call chain. Pretty cool how this example

turns into just a few lines of code by using ?

This chapter was easy to follow. I typed and ran all the examples and spent 3 hours on it.

📘MIT 6.006 Introduction to Algorithms : Lecture 2

I watched the 2nd lecture from the course. It was about data structures.

I got to know the difference between interfaces and data structures. Now I want to implement a static array, a dynamic array, and a linked list from scratch.

Tomorrow's plan

I'll start the 10th chapter of the Rust book, Generic Types and Traits, and continue with the 3rd lecture in the Algorithms course. I also want to start working on a project in Rust; right now I am thinking about writing an interpreter. But I'll wait until I complete the 12th chapter of the book.

#studyblr#codeblr#programming#rust#computer science#software development#100 days of productivity#100 days of studying#learn to code


Text

Bayesian Active Exploration: A New Frontier in Artificial Intelligence

The field of artificial intelligence has seen tremendous growth and advancements in recent years, with various techniques and paradigms emerging to tackle complex problems in the field of machine learning, computer vision, and natural language processing. Two of these concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although both techniques have been researched separately, their synergy has the potential to revolutionize AI by creating more efficient, accurate, and effective systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without actively influencing the data collection process. However, this approach can have limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions to collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One of the early milestones in this line of work was the "query by committee" algorithm, proposed by Seung, Opper, and Sompolinsky in 1992 and analyzed further by Freund et al. in 1997. It uses a committee of models and queries the data points on which the members disagree most, laying the foundation for later active learning techniques. Another important milestone was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selects the data points the model is most uncertain about so that labeling them yields the most information.
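The idea behind uncertainty sampling can be sketched in a few lines. This is a simplified illustration for a binary classifier, not the original authors' implementation; the function name `most_uncertain` and the probability values are hypothetical.

```python
def most_uncertain(probabilities):
    """Return the index of the prediction closest to 0.5.

    For a binary classifier, a predicted probability near 0.5 means the
    model is least sure which class the example belongs to.
    """
    return min(range(len(probabilities)),
               key=lambda i: abs(probabilities[i] - 0.5))


# Hypothetical model outputs for four unlabeled examples.
predicted = [0.95, 0.52, 0.10, 0.70]
index = most_uncertain(predicted)
print(index)  # the example the learner would ask to have labeled next
```

Here the second example (index 1) is selected, because 0.52 is the prediction closest to a coin flip.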

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.
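Updating a prior with new data can be illustrated with the simplest conjugate case: a Beta prior over the success probability of a yes/no event. This is a minimal sketch under that assumption; the function names and the observations are made up for the example.

```python
def update_beta(alpha, beta, observations):
    """Update a Beta(alpha, beta) prior with a list of 0/1 observations.

    Each success adds 1 to alpha and each failure adds 1 to beta.
    """
    successes = sum(observations)
    failures = len(observations) - successes
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


prior = (1, 1)                      # uniform prior: no initial preference
posterior = update_beta(*prior, [1, 1, 0, 1])
print(posterior, beta_mean(*posterior))
```

Starting from a uniform prior, three successes and one failure give a Beta(4, 2) posterior with mean 2/3, so the estimate shifts toward the observed data while still reflecting the prior.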

One of the first milestones in Bayesian mechanics was the development of Bayes' theorem by Thomas Bayes in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjoshi: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)


Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube


Text

Save me my blorbos save me from Data Structure And Algorithms, Introduction to Computer Systems II, Compilation Principles, Machine Learning and Graph Theory


Text

// July 17th, 2024 ✦ 12/100 days of productivity

Yesterday I had the Law final exam. I think I did well, but we’ll see during these next few days.

At least now I can breathe and focus only on work for a few days. Plus, I already signed up for next semester's subjects: Databases, Algorithms and Data Structures, and Software Engineering Introduction. I wanted to do Introduction to Communications but there weren’t any spots available 😕 oh well, next semester I guess.

Funny enough, even though yesterday I spent the entire day studying and doing my chores around the apartment, I feel rather energized. I'd better see how I can put that energy to good use today 👀

#studyblr#my post#shelikesrainydays#university#forest#forest app#stay focused#productivity#100 days of productivity#paulastudies


Text

The Future of Digital Marketing in 2025 – Trends Every Business Must Adopt

Introduction

As we step into 2025, digital marketing is evolving at an unprecedented pace. Businesses that stay ahead of trends will increase brand visibility, attract more leads, and boost conversions. From AI-driven SEO to hyper-personalized marketing, the digital landscape is more competitive than ever.

Whether you’re a small business owner, entrepreneur, or marketing professional, understanding these trends will help you craft a winning digital marketing strategy. Let’s explore the top digital marketing trends for 2025 that will shape the future of online success.

1. AI-Powered SEO is the Future

Search engines are becoming smarter and more intuitive. With AI-powered algorithms like Google’s MUM (Multitask Unified Model) and BERT (Bidirectional Encoder Representations from Transformers), traditional SEO tactics are no longer enough.

How Is AI Transforming SEO in 2025?

✔ AI-driven content creation: Advanced AI tools analyze search intent to create highly relevant, optimized content.

✔ Predictive analytics: AI predicts user behavior, helping businesses optimize content for better engagement.

✔ Voice and visual search optimization: As voice assistants like Siri, Alexa, and Google Assistant become more popular, brands must adapt their SEO strategy to long-tail conversational queries.

Actionable Tip: Optimize for natural language searches, use structured data markup, and ensure website accessibility to improve rankings in 2025.

2. Video Marketing Continues to Dominate

With platforms like TikTok, Instagram Reels, and YouTube Shorts, video marketing is becoming the most powerful form of content in 2025.

Why is Video Marketing Essential?

📌 80% of internet traffic will be video content by 2025 (Cisco Report).

📌 Short-form videos increase engagement and hold attention longer than static content.

📌 Live streaming and interactive videos help brands connect with audiences in real-time.

Actionable Tip: Focus on storytelling, behind-the-scenes content, product demonstrations, and influencer collaborations to boost engagement.

3. Hyper-Personalization with AI & Data Analytics

Consumers expect highly personalized experiences, and AI-powered marketing automation makes it possible.

How Does Hyper-Personalization Work?

✔ AI analyzes customer behavior and past interactions to create tailored marketing messages.

✔ Email marketing campaigns are dynamically personalized based on user interests.

✔ Chatbots and voice assistants provide real-time, customized support.

Actionable Tip: Leverage tools like HubSpot, Salesforce, and Marketo to automate personalized marketing campaigns.

4. Influencer Marketing Becomes More Authentic

The influencer marketing industry is projected to reach $21.1 billion by 2025. However, brands are shifting from celebrity influencers to micro and nano-influencers for better authenticity and engagement.

Why Do Micro-Influencers Matter?

🎯 Higher engagement rates than macro-influencers.

🎯 More trust & relatability with niche audiences.

🎯 Cost-effective collaborations for brands with limited budgets.

Actionable Tip: Partner with influencers in your niche and use user-generated content (UGC) to enhance brand credibility.

5. Voice & Visual Search Optimization is a Must

By 2025, 50% of all searches will be voice or image-based, making traditional text-based SEO insufficient.

How to Optimize for Voice & Visual Search?

✔ Use long-tail keywords & conversational phrases.

✔ Optimize images with alt text & structured data.

✔ Ensure your site is mobile-friendly and fast-loading.

Actionable Tip: Implement Google Lens-friendly content to appear in image-based search results.

Conclusion

The future of digital marketing in 2025 is driven by AI, personalization, and immersive experiences. If you’re not adapting, you’re falling behind!

Looking for expert digital marketing strategies? Mana Media Marketing can help you grow and dominate your niche. Contact us today!


Text

What is Data Structure in Python?

Summary: Explore what data structure in Python is, including built-in types like lists, tuples, dictionaries, and sets, as well as advanced structures such as queues and trees. Understanding these can optimize performance and data handling.

Introduction

Data structures are fundamental in programming, organizing and managing data efficiently for optimal performance. Understanding "What is data structure in Python" is crucial for developers to write effective and efficient code. Python, a versatile language, offers a range of built-in and advanced data structures that cater to various needs.

This blog aims to explore the different data structures available in Python, their uses, and how to choose the right one for your tasks. By delving into Python’s data structures, you'll enhance your ability to handle data and solve complex problems effectively.

What are Data Structures?

Data structures are organizational frameworks that enable programmers to store, manage, and retrieve data efficiently. They define the way data is arranged in memory and dictate the operations that can be performed on that data. In essence, data structures are the building blocks of programming that allow you to handle data systematically.

Importance and Role in Organizing Data

Data structures play a critical role in organizing and managing data. By selecting the appropriate data structure, you can optimize performance and efficiency in your applications. For example, using lists allows for dynamic sizing and easy element access, while dictionaries offer quick lookups with key-value pairs.

Data structures also influence the complexity of algorithms, affecting the speed and resource consumption of data processing tasks.

In programming, choosing the right data structure is crucial for solving problems effectively. It directly impacts the efficiency of algorithms, the speed of data retrieval, and the overall performance of your code. Understanding various data structures and their applications helps in writing optimized and scalable programs, making data handling more efficient and effective.


Types of Data Structures in Python

Python offers a range of built-in data structures that provide powerful tools for managing and organizing data. These structures are integral to Python programming, each serving unique purposes and offering various functionalities.

Lists

Lists in Python are versatile, ordered collections that can hold items of any data type. Defined using square brackets [], lists support various operations. You can easily add items using the append() method, remove items with remove(), and extract slices with slicing syntax (e.g., my_list[1:3]). Lists are mutable, allowing changes to their contents after creation.
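The list operations described above can be tried out with a short sketch; the variable names and sample values are illustrative only.

```python
fruits = ["apple", "banana", "cherry"]

fruits.append("date")      # add an item to the end
fruits.remove("banana")    # remove the first matching item
middle = fruits[1:3]       # slice: items at index 1 and 2
fruits[0] = "apricot"      # lists are mutable, so items can be replaced

print(fruits)
print(middle)
```

After these steps, `fruits` holds apricot, cherry, and date, while `middle` is a separate list containing cherry and date; changing `fruits` afterwards does not affect the slice.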

Tuples

Tuples are similar to lists but immutable. Defined using parentheses (), tuples cannot be altered once created. This immutability makes tuples ideal for storing fixed collections of items, such as coordinates or function arguments. Tuples are often used when data integrity is crucial, and their immutability helps in maintaining consistent data throughout a program.
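A brief sketch of tuple immutability, using a made-up coordinate pair as the example data:

```python
point = (3, 4)             # a fixed pair of coordinates
x, y = point               # tuples unpack neatly into variables

mutation_failed = False
try:
    point[0] = 10          # tuples do not support item assignment
except TypeError:
    mutation_failed = True

# Because tuples are immutable and hashable, they can serve as dictionary keys.
labels = {point: "start"}

print(x, y, mutation_failed, labels[(3, 4)])
```

The attempted assignment raises a TypeError and leaves `point` unchanged, which is why tuples suit data that should stay fixed.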

Dictionaries

Dictionaries store data in key-value pairs, where each key is unique. Defined with curly braces {}, dictionaries provide quick access to values based on their keys. Common operations include retrieving values with the get() method and updating entries using the update() method. Dictionaries are ideal for scenarios requiring fast lookups and efficient data retrieval.
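The get() and update() methods mentioned above might be used as follows; the stock counts are hypothetical sample data:

```python
# Hypothetical inventory, for illustration only.
stock = {"apples": 5, "pears": 2}

apples = stock.get("apples")     # value for an existing key
kiwis = stock.get("kiwis", 0)    # missing key: returns the supplied default

# update() overwrites existing keys and adds new ones.
stock.update({"pears": 7, "plums": 1})

print(apples, kiwis)
print(stock)
```

Using get() with a default avoids the KeyError that stock["kiwis"] would raise for a missing key.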

Sets

Sets are unordered collections of unique elements, defined using curly braces (e.g., {1, 2, 3}) or the set() function. An empty set must be created with set(), because {} creates an empty dictionary. Sets automatically discard duplicate entries, which ensures that each element is unique. Key operations include union (combining sets) and intersection (finding common elements). Sets are particularly useful for membership testing and eliminating duplicates from collections.
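A brief sketch of the set operations described above, with hypothetical sample values:

```python
# Duplicates are discarded when the set is built.
a = {1, 2, 3, 3}
b = set([3, 4])

union = a | b      # elements in either set
common = a & b     # elements in both sets

# Membership testing and de-duplicating a list.
has_two = 2 in a
unique_letters = sorted(set(["x", "y", "x"]))

# An empty set needs set(); {} would be an empty dict.
empty = set()

print(union, common, has_two, unique_letters)
```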

Each of these data structures has distinct characteristics and use cases, enabling Python developers to select the most appropriate structure based on their needs.


Advanced Data Structures

In advanced programming, choosing the right data structure can significantly impact the performance and efficiency of an application. This section explores some essential advanced data structures in Python, their definitions, use cases, and implementations.

Queues

A queue is a linear data structure that follows the First In, First Out (FIFO) principle. Elements are added at one end (the rear) and removed from the other end (the front).

This makes queues ideal for scenarios where you need to manage tasks in the order they arrive, such as task scheduling or handling requests in a server. In Python, you can implement a queue using collections.deque, which provides an efficient way to append and pop elements from both ends.
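A minimal FIFO sketch with collections.deque; the task names are hypothetical:

```python
from collections import deque

# Hypothetical task names, for illustration only.
tasks = deque()

# Enqueue at the rear.
tasks.append("parse request")
tasks.append("query database")
tasks.append("send response")

# Dequeue from the front: the first task added is the first one out.
first = tasks.popleft()
second = tasks.popleft()

print(first, "->", second)
print("remaining:", list(tasks))
```

A plain list could also serve as a queue, but list.pop(0) shifts every remaining element, whereas deque.popleft() runs in constant time.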

Stacks

Stacks operate on the Last In, First Out (LIFO) principle. This means the last element added is the first one to be removed. Stacks are useful for managing function calls, undo mechanisms in applications, and parsing expressions.

In Python, you can implement a stack using a list, with append() and pop() methods to handle elements. Alternatively, collections.deque can also be used for stack operations, offering efficient append and pop operations.
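As one illustration of the expression-parsing use case, the sketch below uses a list as a stack to check whether brackets are balanced. The function name is_balanced is an assumption made for this example:

```python
def is_balanced(text):
    """Return True if every bracket in text is closed in the right order."""
    pairs = {")": "(", "]": "[", "}": "{"}
    stack = []
    for ch in text:
        if ch in "([{":
            stack.append(ch)              # push each opening bracket
        elif ch in pairs:
            # A closing bracket must match the most recent opener (LIFO).
            if not stack or stack.pop() != pairs[ch]:
                return False
    return not stack                      # leftover openers mean imbalance


print(is_balanced("(a + [b * c])"))
print(is_balanced("(]"))
```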

Linked Lists

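A linked list is a data structure consisting of nodes, where each node contains a value and a reference (or link) to the next node in the sequence. Linked lists allow insertions and deletions at a known position in constant time, without shifting other elements as an array would, but reaching a given position requires walking the list from the head.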

A singly linked list has nodes with a single reference to the next node. Basic operations include traversing the list, inserting new nodes, and deleting existing ones. While Python does not have a built-in linked list implementation, you can create one using custom classes.
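A minimal sketch of such a custom class, assuming hypothetical names (Node, SinglyLinkedList, push_front, delete) chosen for this example:

```python
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


class SinglyLinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        """Insert a new node at the head in constant time."""
        self.head = Node(value, self.head)

    def delete(self, value):
        """Unlink the first node holding value; return True if one was found."""
        previous, current = None, self.head
        while current is not None:
            if current.value == value:
                if previous is None:
                    self.head = current.next
                else:
                    previous.next = current.next
                return True
            previous, current = current, current.next
        return False

    def __iter__(self):
        """Traverse the list from head to tail."""
        current = self.head
        while current is not None:
            yield current.value
            current = current.next


numbers = SinglyLinkedList()
for n in (3, 2, 1):
    numbers.push_front(n)
print(list(numbers))
```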

Trees

Trees are hierarchical data structures with a root node and child nodes forming a parent-child relationship. They are useful for representing hierarchical data, such as file systems or organizational structures.

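Common types include binary trees, where each node has up to two children, and binary search trees, where each node's left subtree holds smaller values and its right subtree holds larger ones, which facilitates fast lookups, insertions, and deletions as long as the tree stays reasonably balanced.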
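A small, unbalanced binary search tree can be sketched as follows. The names TreeNode, insert, contains, and in_order are assumptions for this example, and duplicates are simply ignored:

```python
class TreeNode:
    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None


def insert(root, value):
    """Insert value into the subtree rooted at root and return the root."""
    if root is None:
        return TreeNode(value)
    if value < root.value:
        root.left = insert(root.left, value)
    elif value > root.value:
        root.right = insert(root.right, value)
    return root  # equal values are ignored


def contains(root, value):
    """Walk down the tree, going left or right at each node."""
    while root is not None:
        if value == root.value:
            return True
        root = root.left if value < root.value else root.right
    return False


def in_order(root):
    """Yield values in ascending order (left, node, right)."""
    if root is not None:
        yield from in_order(root.left)
        yield root.value
        yield from in_order(root.right)


root = None
for v in (5, 3, 8, 1, 4):
    root = insert(root, v)
print(list(in_order(root)))
```

This sketch does no rebalancing, so inserting already-sorted data would degrade it into a linked list with linear-time lookups.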

Graphs

Graphs consist of nodes (or vertices) connected by edges. They are used to represent relationships between entities, such as social networks or transportation systems. Graphs can be represented using an adjacency matrix or an adjacency list.

The adjacency matrix is a 2D array where each cell indicates the presence or absence of an edge, while the adjacency list maintains a list of edges for each node.
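The two representations can be built side by side for the same small undirected graph. The edge list here is hypothetical sample data:

```python
# Hypothetical undirected graph with 4 nodes, numbered 0..3.
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
n = 4

# Adjacency matrix: matrix[u][v] is 1 when an edge joins u and v.
matrix = [[0] * n for _ in range(n)]

# Adjacency list: each node maps to a list of its neighbours.
adjacency = {node: [] for node in range(n)}

for u, v in edges:
    matrix[u][v] = matrix[v][u] = 1   # undirected: mark both directions
    adjacency[u].append(v)
    adjacency[v].append(u)

print(matrix)
print(adjacency)
```

The matrix answers "is there an edge between u and v?" in constant time but always uses n × n cells, while the adjacency list only stores the edges that exist, which tends to suit sparse graphs.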


Choosing the Right Data Structure

Selecting the appropriate data structure is crucial for optimizing performance and ensuring efficient data management. Each data structure has its strengths and is suited to different scenarios. Here’s how to make the right choice:

Factors to Consider

When choosing a data structure, consider performance, complexity, and specific use cases. Performance involves understanding time and space complexity, which impacts how quickly data can be accessed or modified. For example, lists and tuples offer quick access but differ in mutability.

Tuples are immutable, which makes them slightly more compact in memory and usable as dictionary keys (when their elements are hashable), while lists allow for dynamic changes.

Use Cases for Data Structures:

Lists are versatile and ideal for ordered collections of items where frequent updates are needed.

Tuples are perfect for fixed collections of items, providing an immutable structure for data that doesn’t change.

Dictionaries excel in scenarios requiring quick lookups and key-value pairs, making them ideal for managing and retrieving data efficiently.

Sets are used when you need to ensure uniqueness and perform operations like intersections and unions efficiently.

Queues and stacks are used for scenarios needing FIFO (First In, First Out) and LIFO (Last In, First Out) operations, respectively.

Choosing the right data structure based on these factors helps streamline operations and enhance program efficiency.


Frequently Asked Questions

What is a data structure in Python?

A data structure in Python is an organizational framework that defines how data is stored, managed, and accessed. Python offers built-in structures like lists, tuples, dictionaries, and sets, each serving different purposes and optimizing performance for various tasks.

Why are data structures important in Python?

Data structures are crucial in Python as they impact how efficiently data is managed and accessed. Choosing the right structure, such as lists for dynamic data or dictionaries for fast lookups, directly affects the performance and efficiency of your code.

What are advanced data structures in Python?

Advanced data structures in Python include queues, stacks, linked lists, trees, and graphs. These structures handle complex data management tasks and improve performance for specific operations, such as managing tasks or representing hierarchical relationships.

Conclusion

Understanding what a data structure is in Python is essential for effective programming. By mastering Python's data structures, from basic lists and dictionaries to advanced queues and trees, developers can optimize data management, enhance performance, and solve complex problems efficiently.

Selecting the appropriate data structure based on your needs will lead to more efficient and scalable code.

#What is Data Structure in Python?#Data Structure in Python#data structures#data structure in python#python#python frameworks#python programming#data science


ServiceNow | What is Update Sets | Compare , Revert and Merge Update Sets | Complete Course

ServiceNow is designed with intelligent systems that speed up work by bringing structure to unstructured work patterns. Every employee, customer, and machine in the enterprise connects to ServiceNow, so requests can be made on a single cloud platform. The divisions handling those requests can assign, prioritize, and correlate them, get down to root causes, gain real‑time insights, and drive action. This workflow helps employees work better, which in turn improves service levels. ServiceNow provides cloud services for the entire enterprise.


#Introduction#ServiceNow#Courses#Free Training#Tutorials#Programming#Data Structure#Algorithms#Computer Science#Tips#Demos#ServiceNow Fundamentals#What is service now#Service now tutorial for beginners#Servicenow Online Tutorial#Service now introduction#navgation#UI16#Formview#Records#bigdata#programing#server#database#data science course#Youtube


Is Lotto Champ Legit Or Not? - Lotto Champ Software Download Reviews

Read this in-depth Lotto Champ Software review to discover if this system truly boosts your winning odds. Is it worth the hype? Find out here!

Lotto Champ Software promises to increase lottery wins using a mathematical strategy. But does it really work? This review breaks it all down!

Introduction

Winning the lottery has been a dream for many, but let’s face it—luck alone doesn’t always cut it. That’s where Lotto Champ Software steps in, claiming to use an advanced algorithm to boost your chances. But does it really hold water, or is it just another gimmick? Let’s dive into this review and see what makes it tick!

What is Lotto Champ Software?

Lotto Champ Software is a lottery prediction system designed to increase your odds of winning. It claims to use a strategic number selection process based on previous winning patterns.

Key Features:

Smart Number Selection – Uses statistical analysis to pick numbers with higher chances of appearing.

Easy-to-Use Interface – No complicated formulas; just follow the recommendations.

Multiple Lottery Compatibility – Works with various lottery formats worldwide.

Frequent Updates – Regularly updated to adapt to changes in lottery patterns.

How Does Lotto Champ Software Work?

The software analyzes past lottery results, identifies patterns, and suggests the best numbers to play. Rather than relying on pure luck, it aims to tilt the odds slightly in your favor.

Steps to Use:

Download & Install – The software is simple to set up on any device.

Select Your Lottery – Choose the lottery type you want to play.

Get Number Predictions – The system generates a list of numbers based on historical data.

Play and Wait – Use the numbers provided and see how they perform.

Pros and Cons of Lotto Champ Software

Every product has its highs and lows. Here’s what stands out:

Pros:

✅ Easy to Use – No technical knowledge required.

✅ Data-Driven – Uses real lottery statistics for predictions.

✅ Supports Various Lotteries – Works with different types of games.

✅ Saves Time – No need to manually analyze past results...

Is Lotto Champ Legit Or Not? Full Lotto Champ Software Download Reviews here! at https://scamorno.com/LottoChamp-Review-Software/?id=tumblr-legitornotdownload

Cons:

❌ Not a Guarantee – There’s still an element of luck involved.

❌ Paid Software – It requires an investment.

❌ Limited Information on Algorithm – The exact methodology remains undisclosed.

Does Lotto Champ Software Actually Work?

Now, here’s the million-dollar question! While Lotto Champ Software isn’t a magic wand, many users claim they’ve seen improvements in their lottery success. By using statistical strategies instead of random guesses, it provides a more structured approach.

Users have reported:

More frequent smaller wins

Better number selection than random guessing

A sense of control over their lottery picks

However, since lottery results are still unpredictable, it’s important to manage expectations.

Who Should Use Lotto Champ Software?

This software is ideal for:

Casual Lottery Players who want to improve their odds.

Regular Participants who spend money on tickets frequently.

Data-Driven Gamblers who enjoy numbers and probability.

If you’re looking for a risk-free way to guarantee wins, this might not be for you. But if you want to approach the lottery with a bit of strategy, it’s worth considering.

FAQs About Lotto Champ Software

1. Is Lotto Champ Software a scam?

No, it’s not a scam. While it doesn’t promise guaranteed wins, it provides a structured approach to number selection.

2. Can this software predict the exact winning numbers?

Not exactly. It uses statistical analysis to improve odds, but it can’t guarantee a jackpot.

3. Do I need any special skills to use it?

Not at all! The interface is user-friendly, and everything is automated.

4. Is Lotto Champ Software legal?

Yes, using software for lottery number selection is completely legal in most regions.

5. How much does Lotto Champ Software cost?

The price varies, but it’s a one-time investment compared to constantly buying random tickets...



Zipf Maneuvers: On Non-Reprintable Materials

Andrew C. Wenaus & Germán Sierra

with an introduction by Steven Shaviro

Erratum Press Academic Division, 2025

Zipf Maneuvers: On Non-Reprintable Materials is a work of conceptual protest that challenges the commodification of knowledge in academic publishing, using mathematical and algorithmic techniques to resist institutional control over intellectual labour. In response to the exorbitant fees that corporate publishers charge authors to reprint their own work, neuroscientist Germán Sierra and literary theorist Andrew C. Wenaus devise a radical strategy to bypass the neoliberal logic of access and ownership. As cultural critic and philosopher Steven Shaviro remarks in the introduction to this volume, the project orbits around Zipf’s Law, a statistical principle stating that a word's frequency is roughly inversely proportional to its frequency rank. Sierra and Wenaus deploy a Python algorithm to reorganize their original articles according to Zipfian distributions, alphabetizing and numerically indexing every word. This reorganization produces a fragmented, non-linear data set that resists conventional reading. Each word is assigned a number corresponding to its original position in the text, creating a disjointed, catalogue-like structure. The result is a protest against the corporate financialization of knowledge and a critique of intellectual property laws that restrict access. By transforming their essays into algorithmically rearranged data, Zipf Maneuvers enacts a singular form of resistance, exposing the absurdity of a system that hinders the free circulation of ideas.
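The authors' actual script is not shown here, so the following is only a guess at the general idea: record the position of every word in a text, then list the words by descending frequency, with ties broken alphabetically. The function name zipf_index, the tokenization rule, and the sample sentence are all assumptions made for illustration.

```python
import re
from collections import defaultdict


def zipf_index(text):
    """Map each word to its 1-based positions, ordered by frequency rank.

    Illustrative only: this is not the algorithm used in the book.
    """
    positions = defaultdict(list)
    words = re.findall(r"[a-z']+", text.lower())
    for position, word in enumerate(words, start=1):
        positions[word].append(position)
    # Most frequent words first; alphabetical order breaks ties.
    return sorted(positions.items(), key=lambda item: (-len(item[1]), item[0]))


sample = "the cat and the dog and the bird"
index = zipf_index(sample)
for word, where in index:
    print(word, where)
```

Because every word keeps its original position numbers, the source text could in principle be reassembled from the index, even though the index itself no longer reads as prose.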


Field study synopsis page

Title: A Field Study on Sentinel Ships

Abstract

This study examines the enigmatic Sentinel ships that patrol the stars. As an explorer and researcher, I have observed these vessels in various systems, documenting their behavior, technology, and potential origins. Whiterock aims to provide insight into their purpose and how their presence influences the galactic ecosystem.

Introduction

The Sentinels are an omnipresent force in the galaxy, serving as both protectors and enforcers of some mysterious universal order. Among their many manifestations, Sentinel ships represent a specialized fleet that enforces their strict protocols in space. While planetary Sentinels regulate activities on land, Sentinel ships respond aggressively to spacefaring violations, including unauthorized mining, piracy, or direct aggression. Understanding these entities is critical for survival and the development of diplomatic strategies.

Methods of Observation

Data for this study was collected through firsthand encounters with Sentinel ships. Observations were conducted from the cockpit of a heavily modified explorer class starship equipped with advanced scanners. Encounters ranged from non-hostile patrols to direct combat engagements in various star systems. I also analyzed debris from downed Sentinel ships to gain insights into their technology.

Findings

1. Appearance and Design

Sentinel ships are sleek and angular, often resembling predatory creatures. Their designs suggest a focus on both intimidation and aerodynamic efficiency. Each ship is equipped with glowing energy cores, which appear to serve as both power sources and weak points during combat.

2. Behavior and Tactics

Sentinel ships are not inherently hostile but become aggressive when they detect violations of their protocols. They often arrive in escalating waves, beginning with small interceptor craft and escalating to heavily armed frigates if resistance continues. Their tactics are highly coordinated, suggesting either centralized control or advanced AI algorithms governing their actions.

3. Technology and Composition

The debris of Sentinel ships reveals a blend of organic and mechanical components. Their technology seems to rely on a unique material known as Pugneum, which exhibits self-repairing properties. Advanced weapon systems, including pulse lasers and plasma cannons, make them formidable adversaries. Their materials suggest a level of technological sophistication far beyond most known species in the galaxy.

4. Purpose and Origins

The exact purpose of the Sentinel fleet remains speculative. Theories range from their role as enforcers of an ancient ecological balance to tools of a hidden creator species. Their presence in every system and their reaction to resource exploitation suggest a primary directive of maintaining order and preventing excessive disruption to galactic ecosystems.

Discussion

The Sentinels' spaceborne manifestations highlight a complex and potentially ancient system of governance within the galaxy. Their advanced technology and unyielding vigilance suggest they are not merely reactive but part of a broader, more purposeful network. Whether their goals align with the preservation of life or the suppression of unchecked expansion remains unclear.

Survival in their presence requires careful planning, from evasion strategies to targeted combat tactics. However, the possibility of deeper communication or diplomacy with the Sentinels could unlock secrets about the galaxy's true history and its hidden power structures.

Conclusion

The Sentinel ships are an enduring mystery, embodying the duality of order and destruction. While they are a source of peril for explorers, they also offer an opportunity for a deeper understanding of the universe's interconnected systems. Future research should focus on deciphering the origins of the Sentinels and uncovering their ultimate purpose in the galaxy.

Notes on Further Research

Continued studies on planetary Sentinels and Sentinel archives may provide additional context for their behaviors and objectives. Moreover, engaging with other explorers to compare encounters and findings could illuminate patterns in their deployment and actions across different regions of space.

*Researcher: [Name Redacted for Security Reasons]*

*Location: [Classified Star System]*

#exploration#galaxies#nms#no mans sky#odyalutai#research#science#space#spaceships#Sentinel#WhiteRock Research Facility


20 SEO Mistakes To Avoid In 2024

Introduction

In the rapidly evolving field of digital marketing, search engine optimization, or SEO, continues to be essential for success online. Nonetheless, the field of SEO is always evolving due to changes in user behaviour and algorithmic changes. It's critical to keep up with the newest trends and steer clear of typical traps if you want to guarantee that your website stays visible and competitive.

In this post, we'll look at 20 SEO blunders to avoid in 2024 to help you move deftly through the always-shifting digital landscape.

20 Mistakes You Should Avoid In SEO For Best Results

Ignoring Mobile Optimization:

In 2024, mobile optimization will no longer be a luxury; it will be essential. Since mobile devices are used by the majority of internet users to access content, not optimizing your website for mobile devices might result in lower visibility and lower user engagement.

Ignoring Page Speed:

Slow-loading pages can have a big effect on your site's SEO success in a world where speed is everything. Consumers demand immediate satisfaction, and search engines give preference to websites that load quickly. To improve page speed, make sure to minimize HTTP requests, take advantage of browser caching, and optimize images.

Ignoring Voice Search Optimization:

Optimizing your content for voice search is increasingly important due to the popularity of voice assistants like Siri, Alexa, and Google Assistant. Take into account long-tail keywords and natural language queries to match the way users phrase their voice searches.

Ignoring HTTPS Migration:

In today's digital world, security is crucial, and Google favours secure websites. Making the switch to HTTPS improves your site's trustworthiness and security in the eyes of search engines and users alike.

Ignoring Local SEO:

Ignoring local SEO can be an expensive error for companies that cater to local customers. To increase exposure in local search results, make sure your business listings are correct and consistent across online directories and make use of local keywords.

Failing to Optimize for Featured Snippets:

Search engine results pages (SERPs) now prominently display featured snippets, which give users instant answers to their questions. To increase your chances of getting included in snippets, organize your material to provide succinct answers to frequently asked questions.

Excessive Anchor Text Optimization:

Although anchor text optimization is crucial for search engine optimization, excessive optimization may raise red flags with search engines. To avoid penalties, keep the diversity of your anchor text in a natural balance by using synonyms and variations.

Ignoring User Experience (UX):

UX affects metrics like click-through rate, dwell duration, and bounce rate, and it is a major factor in SEO. To increase UX and SEO performance, give priority to responsive design, appealing content, and easy-to-navigate pages.

Underestimating the Power of Internal Linking:

Internal linking improves search engine optimization by dispersing link equity throughout your pages and assisting users in navigating your website. Include pertinent internal links in your text to improve crawlability and create a logical hierarchy.

Ignoring Structured Data Markup:

Rich snippets and improved SERP displays are made possible by structured data markup, which gives search engines context about your material. Use structured data markup to increase search engine visibility and click-through rate.

Ignoring Image Optimization:

Images are more than simply decorative components; alt text, file names, and image sitemaps all help with SEO. To increase accessibility and SEO, optimize images for size and relevancy and give each one descriptive alt text.

Neglecting Content Quality:

In an era of abundant content, quality is paramount. The SEO performance of your website may be harmed by thin, pointless, or duplicate content. Concentrate on producing valuable, high-quality content that speaks to and meets the demands of your target audience.

Ignoring Social Signals:

Social media can affect search visibility indirectly by raising brand awareness, engagement, and traffic, even though its direct impact on SEO is up for discussion. Sustain a consistent presence on pertinent social media channels to increase the visibility of your material.

Ignoring Technical SEO:

Technical SEO, which includes aspects like crawlability, indexing, and site structure, lays the groundwork for your site's overall performance. Perform routine audits to find and fix technical problems that could limit the SEO potential of your website.

Keyword Stuffing:

The overuse of keywords, often known as keyword stuffing, is a holdover from antiquated search engine optimization techniques and can now lead to search engine penalties. To keep your content relevant and readable, instead concentrate on naturally occurring keyword integration.

Ignoring Video Optimization:

Videos are taking over search results and provide a captivating way for users to consume material. To increase your videos' exposure in standard and video search results, make sure they have clear titles, descriptions, and transcripts.

Ignoring Backlink Quality:

In today's SEO environment, quality is more important than quantity even if backlinks are still a crucial ranking component. Make getting backlinks from reputable, relevant sources your top priority, and avoid deceptive link-building strategies that can damage the reputation of your website.

Ignoring Local Citations:

In local search engine optimization, local citations—that is, references to your company's name, address, and phone number (NAP)—are quite important. Make sure that your NAP information is the same on all websites, social media pages, and review sites.

Ignoring SEO Analytics:

An effective SEO strategy requires data-driven decision-making. Track your progress and find optimization possibilities by keeping an eye on key performance indicators (KPIs), including organic traffic, keyword rankings, and conversion rates, on a regular basis.

Failing to Adjust to Algorithm Changes:

Algorithms used by search engines are always changing, so what works now might not work tomorrow. Keep up with algorithm changes and modify your SEO plan as necessary to keep your website visible and relevant in search results.

Conclusion

Success in the ever-changing world of SEO depends on avoiding frequent errors and keeping up with current trends. You can position your website for increased visibility, traffic, and eventually business growth by avoiding these 20 SEO blunders in 2024.


#seo mistakes#SEO mistakes to avoid#SEO#Search engine optimization#SEO optimization#SEO in 2024#Search engine optimization in 2024#strategies of SEO#SEO strategies in 2024#search engine optimization strategies#mistakes to avoid in 2024


The Evolution of Programming Paradigms: Recursion’s Impact on Language Design

“Recursion, n. See Recursion.” -- Ambrose Bierce, The Devil’s Dictionary (1906-1911)

The roots of programming languages can be traced back to Alan Turing's groundbreaking work in the 1930s. Turing's vision of a universal computing machine, known as the Turing machine, laid the theoretical foundation for modern computing. The machine itself has no stack, but Turing later came close to the idea: his 1945 design report for the Automatic Computing Engine (ACE) described "burying" and "unburying" return addresses so that subroutines could call one another.

Turing's machine used an infinite tape divided into squares, with a read-write head that moves along the tape one square at a time, reading and rewriting symbols in place. The tape is more general than a stack, since the head can revisit any square, but it can simulate one. Turing's work provided a theoretical framework that would later influence the design of programming languages and computer architectures.

In the 1950s, the development of high-level programming languages began to revolutionize the field of computer science. The introduction of FORTRAN (Formula Translation) in 1957 by John Backus and his team at IBM marked a significant milestone. FORTRAN was designed to simplify the programming process, allowing scientists and engineers to express mathematical formulas and algorithms more naturally.

Around the same time, Grace Hopper, a pioneering computer scientist, shaped the development of COBOL (Common Business-Oriented Language): the language was specified in 1959 by the CODASYL committee and drew heavily on her earlier FLOW-MATIC. COBOL aimed to address the needs of business applications, focusing on readability and English-like syntax. These early high-level languages organized code into subroutines, although early FORTRAN and COBOL did not support recursion; stack-based function calls came later.

As high-level languages gained popularity, the underlying computer architectures also evolved. Charles Hamblin's work on stack machines in the 1950s played a crucial role in the practical implementation of stacks in computer systems. Hamblin's stack machine, also known as a zero-address machine, utilized a central stack memory for storing intermediate results during computation.

Assembly language, a low-level programming language, was closely tied to the architecture of the underlying computer. It provided direct control over the machine's hardware, including the stack. Assembly language programs used stack-based instructions to manipulate data and manage subroutine calls, making it an essential tool for early computer programmers.

The development of ALGOL (Algorithmic Language) in the late 1950s and early 1960s was a significant step forward in programming language design. ALGOL was a collaborative effort by an international team, including Friedrich L. Bauer and Klaus Samelson, to create a language suitable for expressing algorithms and mathematical concepts.

Bauer and Samelson's work on ALGOL introduced the concept of recursive subroutines and the activation record stack. Recursive subroutines allowed functions to call themselves with different parameters, enabling the creation of elegant and powerful algorithms. The activation record stack, also known as the call stack, managed the execution of these recursive functions by storing information about each function call, such as local variables and return addresses.
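To make the link between recursion and the call stack concrete, here is an illustrative sketch (not drawn from the original post or from ALGOL itself) that computes a factorial twice: once with a recursive function, where the language runtime keeps one activation record per call, and once with an explicit list standing in for that stack. Both function names are assumptions for this example.

```python
def factorial_recursive(n):
    """Each call waits on the next one; the runtime stacks the pending calls."""
    if n <= 1:
        return 1
    return n * factorial_recursive(n - 1)


def factorial_explicit_stack(n):
    """Same computation, with the pending work held in a visible stack."""
    stack = []
    while n > 1:
        stack.append(n)   # "push a frame" remembering the pending multiplier
        n -= 1
    result = 1
    while stack:
        result *= stack.pop()   # "return" from the most recent frame first
    return result


print(factorial_recursive(5), factorial_explicit_stack(5))
```

A real activation record also holds a return address and any local variables; the explicit version keeps only the one value each pending call still needs.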

ALGOL's structured approach to programming, combined with the activation record stack, set a new standard for language design. It influenced the development of subsequent languages like Pascal, C, and Java, which adopted stack-based function calls and structured programming paradigms.

The 1970s and 1980s witnessed the emergence of structured and object-oriented programming languages, further solidifying the role of stacks in computer science. Pascal, developed by Niklaus Wirth, built upon ALGOL's structured programming concepts and introduced more robust stack-based function calls.

The 1980s saw the rise of object-oriented programming with languages like C++ and Smalltalk. These languages introduced the concept of objects and classes, encapsulating data and behavior. The stack played a crucial role in managing object instances and method calls, ensuring proper memory allocation and deallocation.

Today, stacks continue to be an integral part of modern programming languages and paradigms. Languages like Java, Python, and C# utilize stacks implicitly for function calls and local variable management. The stack-based approach allows for efficient memory management and modular code organization.

Functional programming languages, such as Lisp and Haskell, also leverage stacks for managing function calls and recursion. These languages emphasize immutability and higher-order functions, making stacks an essential tool for implementing functional programming concepts.

Moreover, stacks are fundamental in the implementation of virtual machines and interpreters. Technologies like the Java Virtual Machine and the Python interpreter use stacks to manage the execution of bytecode or intermediate code, providing platform independence and efficient code execution.

The evolution of programming languages is deeply intertwined with the development and refinement of the stack. From Turing's theoretical foundations to the practical implementations of stack machines and the activation record stack, the stack has been a driving force in shaping the way we program computers.

How the stack got stacked (Kay Lack, September 2024)


Thursday, October 10, 2024

#turing#stack#programming languages#history#hamblin#bauer#samelson#recursion#evolution#fortran#cobol#algol#structured programming#object-oriented programming#presentation#ai assisted writing#Youtube#machine art
