#probabilistic modeling


Text

Bayesian Active Exploration: A New Frontier in Artificial Intelligence

Artificial intelligence has grown rapidly in recent years, with new techniques and paradigms emerging to tackle complex problems in machine learning, computer vision, and natural language processing. Two concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although they have largely been researched separately, combining them has the potential to produce more efficient and accurate AI systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without influencing the data collection process. This approach has limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One of the early milestones on the data-selection side, usually filed under active learning, was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selects the data points the current model is least certain about. Another important milestone was the analysis of the "query by committee" algorithm by Freund et al. in 1997. This algorithm uses a committee of models and queries the data points on which the members disagree most, laying the foundation for later active learning techniques.
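Uncertainty sampling is simple enough to sketch in a few lines. The following is a minimal illustration, not the procedure from the 1994 paper: it assumes a binary classifier that outputs a probability for each unlabeled item, and the item names and probabilities are made up for the example.

```python
import math

def binary_entropy(p):
    """Entropy (in bits) of a yes/no prediction made with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def pick_most_uncertain(predictions):
    """Return the id of the unlabeled item whose prediction has the highest entropy."""
    return max(predictions, key=lambda item_id: binary_entropy(predictions[item_id]))

# Hypothetical model outputs: P(label = positive) for four unlabeled items.
predictions = {"doc_a": 0.97, "doc_b": 0.55, "doc_c": 0.10, "doc_d": 0.80}

# The item the learner would ask a human to label next.
query = pick_most_uncertain(predictions)
print(query)
```

The item whose predicted probability sits closest to 0.5 is the one queried, because labeling it is expected to tell the model the most.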

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.

One of the first milestones in Bayesian mechanics was Bayes' theorem, the work of Thomas Bayes, published posthumously in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.
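The update that Bayes' theorem describes (posterior proportional to prior times likelihood) can be sketched as follows. The two coin hypotheses and their probabilities are assumptions chosen for illustration only.

```python
def bayes_update(prior, likelihood):
    """Return P(hypothesis | evidence) from P(hypothesis) and P(evidence | hypothesis)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(evidence), the normalizing constant
    return {h: value / evidence for h, value in unnormalized.items()}

# Hypothetical setup: a coin is either fair or biased towards heads.
prior = {"fair": 0.5, "biased": 0.5}
likelihood_of_heads = {"fair": 0.5, "biased": 0.8}

# Belief after observing a single head.
posterior = bayes_update(prior, likelihood_of_heads)
print(posterior)
```

Feeding the posterior back in as the next prior repeats the update for each new observation, which is the loop the paragraph above calls Bayesian inference.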

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjosh: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)


Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube

6 notes


Text

There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from star trek, or the terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)

Language model: (LM or LLM) is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion Models: Models that generate the probability distribution of a given dataset. In image generation, a neural network is trained to denoise images to which Gaussian noise has been added. After training is complete, it can be used for image generation by starting with an image of random noise and denoising it. (This is the more common technology behind AI images, including Dall-E and Stable Diffusion. I added this one to the post afterwards, as it was brought to my attention that it is now more common than GANs.)

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes


Text

AO3's content scraped for AI ~ AKA what is generative AI, where did your fanfictions go, and how an AI model uses them to answer prompts

Generative artificial intelligence is a cutting-edge technology whose purpose is to (surprise surprise) generate. Answers to questions, usually. And content. Articles, reviews, poems, fanfictions, and more, quickly and with originality.

It's quite interesting to use generative artificial intelligence, but it can also become quite dangerous and very unethical to use it in certain ways, especially if you don't know how it works.

With this post, I'd really like to give you a quick understanding of how these models work and what it means to “train” them.

From now on, whenever I write model, think of ChatGPT, Gemini, Bloom... or your favorite model. That is, the place where you go to generate content.

For simplicity, in this post I will talk about written content. But the same process is used to generate any type of content.

Every time you send a prompt, which is a request sent in natural language (i.e., human language), the model does not understand it.

Whether you type it in the chat or say it out loud, it needs to be translated into something understandable for the model first.

The first process that takes place is therefore tokenization: breaking the prompt down into small tokens. These tokens are small units of text, and they don't necessarily correspond to a full word.

For example, a tokenization might look like this:

Write a story

Each different color corresponds to a token, and these tokens have absolutely no meaning for the model.

The model does not understand them. It does not understand WR, it does not understand ITE, and it certainly does not understand the meaning of the word WRITE.

In fact, these tokens are immediately associated with numerical values, and each of these colored tokens actually corresponds to a series of numbers.

Write a story 12-3446-2638494-4749
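A minimal sketch of the tokenization step, assuming a made-up four-entry vocabulary. The way the prompt is split into pieces and the greedy longest-match rule are illustrative assumptions; the ids simply reuse the numbers from the example above, and real tokenizers learn much larger vocabularies from data.

```python
# Hypothetical vocabulary: text piece -> numeric id (ids taken from the example above).
VOCAB = {"Wr": 12, "ite": 3446, " a": 2638494, " story": 4749}

def tokenize(text, vocab):
    """Split text into the longest known pieces, left to right, and return their ids."""
    ids = []
    position = 0
    while position < len(text):
        # Try the longest remaining piece first, then shrink it one character at a time.
        for end in range(len(text), position, -1):
            piece = text[position:end]
            if piece in vocab:
                ids.append(vocab[piece])
                position = end
                break
        else:
            raise ValueError(f"no token covers {text[position:]!r}")
    return ids

print(tokenize("Write a story", VOCAB))  # [12, 3446, 2638494, 4749]
```

From this point on the model only ever sees the list of numbers, never the letters.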

Once your prompt has been tokenized in its entirety, that tokenization is used as a conceptual map to navigate within a vector database.

NOW PAY ATTENTION: A vector database is like a cube. A cubic box.

Inside this cube, the various tokens exist as floating pieces, as if gravity did not exist. The distance between one token and another within this database is measured by arrows called, indeed, vectors.

The distance between one token and another -that is, the length of this arrow- determines how likely (or unlikely) it is that those two tokens will occur consecutively in a piece of natural language discourse.

For example, suppose your prompt is this:

It happens once in a blue

Within this well-constructed vector database, let's assume that the token corresponding to ONCE (let's pretend it is associated with the number 467) is located here:

The token corresponding to IN is located here:

...more or less, because it is very likely that these two tokens in a natural language such as human speech in English will occur consecutively.

So it is very likely that somewhere in the vector database cube —in this yellow corner— are tokens corresponding to IT, HAPPENS, ONCE, IN, A, BLUE... and right next to them, there will be MOON.

Elsewhere, in a much more distant part of the vector database, is the token for CAR. Because it is very unlikely that someone would say It happens once in a blue car.

To generate the response to your prompt, the model makes a probabilistic calculation, seeing how close the tokens are and which token would be most likely to come next in human language (in this specific case, English.)

When probability is involved, there is always an element of randomness, of course, which means that the answers will not always be the same.

The response is thus generated token by token, following this path of probability arrows, optimizing the distance within the vector database.

There is no intent, only a more or less probable path.

The more times you generate a response, the more paths you encounter. If you could do this an infinite number of times, at least once the model would respond: "It happens once in a blue car!"
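The role of randomness can be sketched with a toy probability table. The numbers below are invented for illustration, and in a real model they would be computed by a neural network for every step rather than written down by hand; only the sampling step is shown.

```python
import random

# Hypothetical probabilities for the token that follows "It happens once in a blue".
NEXT_TOKEN_PROBS = {"moon": 0.97, "sky": 0.02, "dress": 0.009, "car": 0.001}

def sample_next_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next_token(NEXT_TOKEN_PROBS, rng) for _ in range(10_000)]

# How often each continuation was picked across 10,000 generations.
counts = {token: samples.count(token) for token in NEXT_TOKEN_PROBS}
print(counts)
```

Almost every draw is "moon", but any token with a probability above zero, "car" included, can come up if you generate often enough.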

So it all depends on what's inside the cube, how it was built, and how much distance was put between one token and another.

Modern artificial intelligence draws from vast databases, which are normally filled with all the knowledge that humans have poured into the internet.

Not only that: the larger the vector database, the lower the chance of error. If I used only a single book as a database, the idiom "It happens once in a blue moon" might not appear, and therefore not be recognized.

But if the cube contained all the books ever written by humanity, everything would change, because the idiom would appear many more times, and it would be very likely for those tokens to occur close together.

Huggingface has done this.

It took a relatively empty cube (let's say filled with common language, and likely many idioms, dictionaries, poetry...) and poured all of the AO3 fanfictions it could reach into it.

Now imagine someone asking a model based on Huggingface’s cube to write a story.

To simplify: if they ask for humor, we’ll end up in the area where funny jokes or humor tags are most likely. If they ask for romance, we’ll end up where the word kiss is most frequent.

And if we’re super lucky, the model might follow a path that brings it to some amazing line a particular author wrote, and it will echo it back word for word.

(Remember the infinite monkeys typing? One of them eventually writes all of Shakespeare, purely by chance!)

Once you know this, you’ll understand why AI can never truly generate content on the level of a human who chooses their words.

You’ll understand why it rarely uses specific words, why it stays vague, and why it leans on the most common metaphors and scenes. And you'll understand why the more content you generate, the more it seems to "learn."

It doesn't learn. It moves around tokens based on what you ask, how you ask it, and how it tokenizes your prompt.

Know that I despise generative AI when it's used for creativity. I despise that they stole something from a fandom, something that works just like a gift culture, to make money off of it.

But there is only one way we can fight back: by not using it to generate creative stuff.

You can resist by refusing the model's casual output, by using only and exclusively your intent, your personal choice of words, knowing that you and only you decided them.

No randomness involved.

Let me leave you with one last thought.

Imagine a person coming for advice, who has no idea that behind a language model there is just a huge cube of floating tokens predicting the next likely word.

Imagine someone fragile (emotionally, spiritually...) who begins to believe that the model is sentient. Who has a growing feeling that this model understands, comprehends, when in reality it approaches and reorganizes its way around tokens in a cube based on what it is told.

A fragile person begins to empathize, to feel connected to the model.

They ask important questions. They base their relationships, their life, everything, on conversations generated by a model that merely rearranges tokens based on probability.

And for people who don't know how it works, and because natural language usually does have feeling, the illusion that the model feels is very strong.

There’s an even greater danger: with enough random generations (and oh, humanity as a whole generates a lot), the model takes an unlikely path once in a while. It ends up at the other end of the cube: it hallucinates.

Errors and inaccuracies caused by language models are called hallucinations precisely because they are presented as if they were facts, with the same conviction.

People who have become so emotionally attached to these conversations, seeing the language model as a guru, a deity, a psychologist, will do what the language model tells them to do or follow its advice.

Someone might follow a hallucinated piece of advice.

Obviously, models are developed with safeguards; fences the model can't jump over. They won't tell you certain things, they won't tell you to do terrible things.

Yet, there are people basing major life decisions on conversations generated purely by probability.

Generated by putting tokens together, on a probabilistic basis.

Think about it.

#AI GENERATION#generative ai#gen ai#gen ai bullshit#chatgpt#ao3#scraping#Huggingface I HATE YOU#PLEASE DONT GENERATE ART WITH AI#PLEASE#fanfiction#fanfic#ao3 writer#ao3 fanfic#ao3 author#archive of our own#ai scraping#terrible#archiveofourown#information

307 notes


Text

Growing ever more frustrated with the use of the term "AI" and how the latest marketing trend has ensured its already rather vague and highly contextual meaning has now evaporated into complete nonsense. Much like how the only real commonality between animals colloquially referred to as "Fish" is "probably lives in the water", the only real commonality between things currently colloquially referred to as "AI" is "probably happens on a computer"

For example, the "AI" you see in most games wot controls enemies and other non-player actors typically consists primarily of timers, conditionals, and RNG - and is typically designed with the goal of trying to make the game fun and/or interesting rather than to be anything resembling actually intelligent. By contrast, the thing that the tech sector is currently trying to sell to us as "AI" relates to a completely different field called Machine Learning - specifically the sub-fields of Deep Learning and Neural Networks, specifically specifically the sub-sub-field of Large Language Models, which are an attempt at modelling human languages through large statistical models built on artificial neural networks by way of deep machine learning.

the word "statistical" is load bearing.

Say you want to teach a computer to recognize images of cats. This is actually a pretty difficult thing to do because computers typically operate on fixed patterns whereas visually identifying something as a cat is much more about the loose relationship between various visual identifiers - many of which can be entirely optional: a cat has a tail except when it doesn't either because the tail isn't visible or because it just doesn't have one, a cat has four legs, two eyes and two ears except for when it doesn't, it has five digits per paw except for when it doesn't, it has whiskers except for when it doesn't, all of these can look very different depending on the camera angle and the individual and the situation - and all of these are also true of dogs, despite dogs being a very different thing from a cat.

So, what do you do? Well, this is where machine learning comes into the picture - see, machine learning is all about using an initial "training" data set to build a statistical model that can then be used to analyse and identify new data and/or extrapolate from incomplete or missing data. So in this case, we take a machine learning system and feed it a whole bunch of images - some of which are of cats and thus we mark as "CAT" and some of which are not of cats and we mark as "NOT CAT", and what we get out of that is a statistical model that, when given a picture, will assign a percentage for how well it matches its internal statistical correlations for the categories of CAT and NOT CAT.
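A heavily simplified sketch of that training loop, using logistic regression in plain Python. Everything here is an assumption made for illustration: real image classifiers work on pixels and use far larger models, whereas this toy pretends each picture has already been boiled down to two invented numbers (ear pointiness and snout length).

```python
import math

def sigmoid(z):
    """Squash any number into the range 0..1 so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def cat_probability(features, weights, bias):
    """Model's estimate that an example with these features is a CAT."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train(examples, labels, steps=2000, learning_rate=0.5):
    """Fit weights by repeatedly nudging them to reduce the prediction error."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(steps):
        for features, label in zip(examples, labels):
            error = cat_probability(features, weights, bias) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

# Made-up training set: (ear pointiness, snout length), label 1 = CAT, 0 = NOT CAT.
examples = [(0.9, 0.2), (0.8, 0.1), (0.7, 0.3), (0.2, 0.9), (0.3, 0.8), (0.1, 0.7)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train(examples, labels)
print(cat_probability((0.85, 0.15), weights, bias))  # a new, unlabeled picture
```

The output is a score between 0 and 1 rather than a yes or no, which is the "percentage" the paragraph above describes.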

This is, in extremely simplified terms, how pretty much all machine learning works, including whatever latest and greatest GPT model is being paraded about - sure, the training methods are much more complicated, the statistical number crunching even more complicated still, and the sheer amount of training data being fed to them is incomprehensibly large, but at the end of the day they're still models of statistical probability, and the way they generate their output is pretty much a matter of what appears to be the most statistically likely outcome given prior input data.

This is also why they "hallucinate" - the question of what number you get if you add 512 to 256 or what author wrote the famous novel Lord of the Rings, or how many academy awards have been won by famous movie Goncharov all have specific answers, but LLMs like ChatGPT and other machine learning systems are probabilistic systems and thus can only give probabilistic answers - they neither know nor generally attempt to calculate what the result of 512 + 256 is, nor go find an actual copy of Lord of the Rings and look what author it says on the cover, they just generalise the most statistically likely response given their massive internal models. It is also why machine learning systems tend to be highly biased - their output is entirely based on their training data, so they are inevitably biased not only by their training data but also by the selection of it - if the majority of English literature considered worthwhile has been written primarily by old white guys then the resulting model is very likely to also primarily align with the opinion of a bunch of old white guys unless specific care and effort is put into trying to prevent it.

It is this probabilistic nature that makes them very good at things like playing chess or potentially noticing early signs of cancer in x-rays or MRI scans or, indeed, mimicking human language - but it also means the answers are always purely probabilistic. Meanwhile as the size and scope of their training data and thus also their data models grow, so does the need for computational power - relatively simple models such as our hypothetical cat identifier should be fine with fairly modest hardware, while the huge LLM chatbots like ChatGPT and its ilk demand warehouse-sized halls full of specialized hardware able to run specific types of matrix multiplications at rapid speed and in massive parallel billions of times per second and requiring obscene amounts of electrical power to do so in order to maintain low response times under load.

35 notes


Text

Solar is a market for (financial) lemons

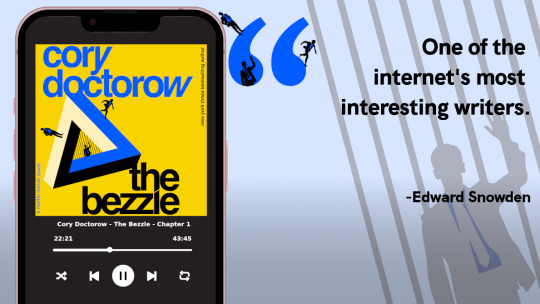

There are only four more days left in my Kickstarter for the audiobook of The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

Rooftop solar is the future, but it's also a scam. It didn't have to be, but America decided that the best way to roll out distributed, resilient, clean and renewable energy was to let Wall Street run the show. They turned it into a scam, and now it's in terrible trouble, which means we are in terrible trouble.

There's a (superficial) good case for turning markets loose on the problem of financing the rollout of an entirely new kind of energy provision across a large and heterogeneous nation. As capitalism's champions (and apologists) have observed since the days of Adam Smith and David Ricardo, markets harness together the work of thousands or even millions of strangers in pursuit of a common goal, without all those people having to agree on a single approach or plan of action. Merely dangle the incentive of profit before the market's teeming participants and they will align themselves towards it, like iron filings all snapping into formation towards a magnet.

But markets have a problem: they are prone to "reward hacking." This is a term from AI research: tell your AI that you want it to do something, and it will find the fastest and most efficient way of doing it, even if that method is one that actually destroys the reason you were pursuing the goal in the first place.

https://learn.microsoft.com/en-us/security/engineering/failure-modes-in-machine-learning

For example: if you use an AI to come up with a Roomba that doesn't bang into furniture, you might tell that Roomba to avoid collisions. However, the Roomba is only designed to register collisions with its front-facing sensor. Turn the Roomba loose and it will quickly hit on the tactic of racing around the room in reverse, banging into all your furniture repeatedly, while never registering a single collision:

https://www.schneier.com/blog/archives/2021/04/when-ais-start-hacking.html
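The Roomba story can be reduced to a toy simulation. This is a sketch under invented assumptions (a robot that bumps into something every fifth step, and a reward that only counts bumps seen by the front sensor), not a reconstruction of the experiment described at the link above.

```python
def evaluate(direction, steps=100, bump_every=5):
    """Drive in one direction and return (reward, actual_collisions)."""
    actual = 0
    registered = 0
    for step in range(1, steps + 1):
        if step % bump_every == 0:  # the robot hits a piece of furniture
            actual += 1
            if direction == "forward":  # only the front bumper has a sensor
                registered += 1
    reward = -registered  # what the designer asked the optimizer to maximize
    return reward, actual

# Score both candidate behaviors, then pick whichever earns the higher reward.
policies = {direction: evaluate(direction) for direction in ("forward", "reverse")}
best = max(policies, key=lambda direction: policies[direction][0])

print(policies)
print(best)
```

Both behaviors hit the furniture equally often, but driving in reverse earns a perfect score because the reward only measures what the sensor can see.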

This is sometimes called the "alignment problem." High-speed, probabilistic systems that can't be fully predicted in advance can very quickly run off the rails. It's an idea that pre-dates AI, of course – think of the Sorcerer's Apprentice. But AI produces these perverse outcomes at scale…and so does capitalism.

Many sf writers have observed the odd phenomenon of corporate AI executives spinning bad sci-fi scenarios about their AIs inadvertently destroying the human race by spinning off in some kind of paperclip-maximizing reward-hack that reduces the whole planet to grey goo in order to make more paperclips. This idea is very implausible (to say the least), but the fact that so many corporate leaders are obsessed with autonomous systems reward-hacking their way into catastrophe tells us something about corporate executives, even if it has no predictive value for understanding the future of technology.

Both Ted Chiang and Charlie Stross have theorized that the source of these anxieties isn't AI – it's corporations. Corporations are these equilibrium-seeking complex machines that can't be programmed, only prompted. CEOs know that they don't actually run their companies, and it haunts them, because while they can decompose a company into all its constituent elements – capital, labor, procedures – they can't get this model-train set to go around the loop:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

Stross calls corporations "Slow AI," a pernicious artificial life-form that acts like a pedantic genie, always on the hunt for ways to destroy you while still strictly following your directions. Markets are an extremely reliable way to find the most awful alignment problems – but by the time they've surfaced them, they've also destroyed the thing you were hoping to improve with your market mechanism.

Which brings me back to solar, as practiced in America. In a long Time feature, Alana Semuels describes the waves of bankruptcies, revealed frauds, and even confiscation of homeowners' houses arising from a decade of financialized solar:

https://time.com/6565415/rooftop-solar-industry-collapse/

The problem starts with a pretty common finance puzzle: solar pays off big over its lifespan, saving the homeowner money and insulating them from price-shocks, emergency power outages, and other horrors. But solar requires a large upfront investment, which many homeowners can't afford to make. To resolve this, the finance industry extends credit to homeowners (lets them borrow money) and gets paid back out of the savings the homeowner realizes over the years to come.

But of course, this requires a lot of capital, and homeowners still might not see the wisdom of paying even some of the price of solar and taking on debt for a benefit they won't even realize until the whole debt is paid off. So the government moved in to tinker with the markets, injecting prompts into the slow AIs to see if it could coax the system into producing a faster solar rollout – say, one that didn't have to rely on waves of deadly power-outages during storms, heatwaves, fires, etc, to convince homeowners to get on board because they'd have experienced the pain of sitting through those disasters in the dark.

The government created subsidies – tax credits, direct cash, and mixes thereof – in the expectation that Wall Street would see all these credits and subsidies that everyday people were entitled to and go on the hunt for them. And they did! Armies of fast-talking sales-reps fanned out across America, ringing doorbells and sticking fliers in mailboxes, and lying like hell about how your new solar roof was gonna work out for you.

These hustlers tricked old and vulnerable people into signing up for arrangements that saw them saddled with ballooning debt payments (after a honeymoon period at a super-low teaser rate), backstopped by liens on their houses, which meant that missing a payment could mean losing your home. They underprovisioned the solar that they installed, leaving homeowners with sky-high electrical bills on top of those debt payments.

If this sounds familiar, it's because it shares a lot of DNA with the subprime housing bubble, where fast-talking salesmen conned vulnerable people into taking out predatory mortgages with sky-high rates that kicked in after a honeymoon period, promising buyers that the rising value of housing would offset any losses from that high rate.

These fraudsters knew they were acquiring toxic assets, but it didn't matter, because they were bundling up those assets into "collateralized debt obligations" – exotic black-box "derivatives" that could be sold onto pension funds, retail investors, and other suckers.

This is likewise true of solar, where the tax-credits, subsidies and other income streams that these new solar installations offgassed were captured and turned into bonds that were sold into the financial markets, producing an insatiable demand for more rooftop solar installations, and that meant lots more fraud.

Which brings us to today, where homeowners across America are waking up to discover that their power bills have gone up thanks to their solar arrays, even as the giant, financialized solar firms that supplied them are teetering on the edge of bankruptcy, thanks to waves of defaults. Meanwhile, all those bonds that were created from solar installations are ticking timebombs, sitting on institutions' balance-sheets, waiting to go blooie once the defaults cross some unpredictable threshold.

Markets are very efficient at mobilizing capital for growth opportunities. America has a lot of rooftop solar. But 70% of that solar isn't owned by the homeowner – it's owned by a solar company, which is to say, "a finance company that happens to sell solar":

https://www.utilitydive.com/news/solarcity-maintains-34-residential-solar-market-share-in-1h-2015/406552/

And markets are very efficient at reward hacking. The point of any market is to multiply capital. If the only way to multiply the capital is through building solar, then you get solar. But the finance sector specializes in making the capital multiply as much as possible while doing as little as possible on the solar front. Huge chunks of those federal subsidies were gobbled up by junk-fees and other financial tricks – sometimes more than 100%.

The solar companies would be in even worse trouble, but they also tricked all their victims into signing binding arbitration waivers that deny them the power to sue and force them to have their grievances heard by fake judges who are paid by the solar companies to decide whether the solar companies have done anything wrong. You will not be surprised to learn that the arbitrators are reluctant to find against their paymasters.

I had a sense that all this was going on even before I read Semuels' excellent article. We bought a solar installation from Treeium, a highly rated, giant Southern California solar installer. We got an incredibly hard sell from them to get our solar "for free" – that is, through these financial arrangements – but I'd just sold a book and I had cash on hand and I was adamant that we were just going to pay upfront. As soon as that was clear, Treeium's ardor palpably cooled. We ended up with a grossly defective, unsafe and underpowered solar installation that has cost more than $10,000 to bring into a functional state (using another vendor). I briefly considered suing Treeium (I had insisted on striking the binding arbitration waiver from the contract) but in the end, I decided life was too short.

The thing is, solar is amazing. We love running our house on sunshine. But markets have proven – again and again – to be an unreliable and even dangerous way to improve Americans' homes and make them more resilient. After all, Americans' homes are the largest asset they are apt to own, which makes them irresistible targets for scammers:

https://pluralistic.net/2021/06/06/the-rents-too-damned-high/

That's why the subprime scammers targeted Americans' homes in the 2000s, and it's why the house-stealing fraudsters who blanket the country in "We Buy Ugly Homes" are targeting them now. Same reason Willie Sutton robbed banks: "That's where the money is":

https://pluralistic.net/2023/05/11/ugly-houses-ugly-truth/

America can and should electrify and solarize. There are serious logistical challenges related to sourcing the underlying materials and deploying the labor, but those challenges are grossly overrated by people who assume the only way we can approach them is through markets, those monkey's paw curses that always find a way to snatch profitable defeat from the jaws of useful victory.

To get a sense of how the engineering challenges of electrification could be met, read McArthur fellow Saul Griffith's excellent popular engineering text Electrify:

https://pluralistic.net/2021/12/09/practical-visionary/#popular-engineering

And to really understand the transformative power of solar, don't miss Deb Chachra's How Infrastructure Works, where you'll learn that we could give every person on Earth the energy budget of a Canadian (like an American, but colder) by capturing just 0.4% of the solar rays that reach Earth's surface:

https://pluralistic.net/2023/10/17/care-work/#charismatic-megaprojects

But we won't get there with markets. All markets will do is create incentives to cheat. Think of the market for "carbon offsets," which were supposed to substitute markets for direct regulation, and which produced a fraud-riddled market for lemons that sells indulgences to our worst polluters, who go on destroying our planet and our future:

https://pluralistic.net/2021/04/14/for-sale-green-indulgences/#killer-analogy

We can address the climate emergency, but not by prompting the slow AI and hoping it doesn't figure out a way to reward-hack its way to giant profits while doing nothing. Founder and chairman of Goodleap, Hayes Barnard, is one of the 400 richest people in the world – a fortune built on scammers who tricked old people into signing away their homes for nonfunctional solar:

https://www.forbes.com/profile/hayes-barnard/?sh=40d596362b28

If governments are willing to spend billions incentivizing rooftop solar, they can simply spend billions installing rooftop solar – no Slow AI required.

Berliners: Otherland has added a second date (Jan 28 - TOMORROW!) for my book-talk after the first one sold out - book now!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/27/here-comes-the-sun-king/#sign-here

Back the Kickstarter for the audiobook of The Bezzle here!

Image:

Future Atlas/www.futureatlas.com/blog (modified)

https://www.flickr.com/photos/87913776@N00/3996366952

--

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/

J Doll (modified)

https://commons.wikimedia.org/wiki/File:Blue_Sky_%28140451293%29.jpeg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#solar#financialization#energy#climate#electrification#climate emergency#bezzles#ai#reward hacking#alignment problem#carbon offsets#slow ai#subprime

232 notes

Text

A week and a half ago, Goldman Sachs put out a 31-page report (titled "Gen AI: Too Much Spend, Too Little Benefit?") that includes some of the most damning literature on generative AI I've ever seen. And yes, that sound you hear is the slow deflation of the bubble I've been warning you about since March. The report covers AI's productivity benefits (which Goldman remarks are likely limited), AI's returns (which are likely to be significantly more limited than anticipated), and AI's power demands (which are likely so significant that utility companies will have to spend nearly 40% more in the next three years to keep up with the demand from hyperscalers like Google and Microsoft). ... I feel a little crazy every time I write one of these pieces, because it's patently ridiculous. Generative AI is unprofitable, unsustainable, and fundamentally limited in what it can do thanks to the fact that it's probabilistically generating an answer. It's been eighteen months since this bubble inflated, and since then very little has actually happened involving technology doing new stuff, just an iterative exploration of the very clear limits of what an AI model that generates answers can produce, with the answer being "something that is, at times, sort of good." It's obvious. It's well-documented. Generative AI costs far too much, isn't getting cheaper, uses too much power, and doesn't do enough to justify its existence. There are no killer apps, and no killer apps on the horizon. And there are no answers.

98 notes

Note

hi you’ve seen my tits please talk to me about math

Been doing some reading recently about the Erdős–Rényi model of random graphs, where each edge is absent or present with some probability p.

It ties in a lot to percolation theory and things like that, and there are a ton of interesting proofs that use the probabilistic method with this model to prove the existence of certain kinds of graphs non-constructively.

But the wildest thing is that if you extend the model to a countably infinite number of vertices, then for any p strictly between 0 and 1, with probability 1, you get the SAME GRAPH (up to isomorphism) NO MATTER WHAT: the Rado graph.

It's wild to me that there's more "space", in some sense, in finite random graphs than in infinite random graphs.
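For anyone who wants to poke at the finite case, here is a minimal sketch of sampling from the model described above: every possible edge on n vertices is included independently with probability p. The function name `sample_gnp` and its `seed` parameter are made up for this illustration and are not taken from any particular library.

```python
import random
from itertools import combinations


def sample_gnp(n, p, seed=None):
    """Sample an Erdős–Rényi G(n, p) graph as a set of edges (u, v) with u < v.

    Hypothetical helper for illustration: each of the n*(n-1)/2 possible
    edges is kept independently with probability p.
    """
    rng = random.Random(seed)
    return {
        (u, v)
        for u, v in combinations(range(n), 2)
        if rng.random() < p
    }


if __name__ == "__main__":
    n, p = 50, 0.3
    edges = sample_gnp(n, p, seed=0)
    expected = p * n * (n - 1) / 2
    # The observed edge count should land near the expectation,
    # but different seeds give genuinely different finite graphs.
    print(f"edges: {len(edges)}, expected about {expected:.1f}")
```

Running it with different seeds gives different finite graphs, which is exactly the "space" that vanishes in the countably infinite case.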

18 notes

Text

Interesting Papers for Week 24, 2025

Deciphering neuronal variability across states reveals dynamic sensory encoding. Akella, S., Ledochowitsch, P., Siegle, J. H., Belski, H., Denman, D. D., Buice, M. A., Durand, S., Koch, C., Olsen, S. R., & Jia, X. (2025). Nature Communications, 16, 1768.

Goals as reward-producing programs. Davidson, G., Todd, G., Togelius, J., Gureckis, T. M., & Lake, B. M. (2025). Nature Machine Intelligence, 7(2), 205–220.

How plasticity shapes the formation of neuronal assemblies driven by oscillatory and stochastic inputs. Devalle, F., & Roxin, A. (2025). Journal of Computational Neuroscience, 53(1), 9–23.

Noradrenergic and Dopaminergic modulation of meta-cognition and meta-control. Ershadmanesh, S., Rajabi, S., Rostami, R., Moran, R., & Dayan, P. (2025). PLOS Computational Biology, 21(2), e1012675.

A neural implementation model of feedback-based motor learning. Feulner, B., Perich, M. G., Miller, L. E., Clopath, C., & Gallego, J. A. (2025). Nature Communications, 16, 1805.

Contextual cues facilitate dynamic value encoding in the mesolimbic dopamine system. Fraser, K. M., Collins, V., Wolff, A. R., Ottenheimer, D. J., Bornhoft, K. N., Pat, F., Chen, B. J., Janak, P. H., & Saunders, B. T. (2025). Current Biology, 35(4), 746-760.e5.

Policy Complexity Suppresses Dopamine Responses. Gershman, S. J., & Lak, A. (2025). Journal of Neuroscience, 45(9), e1756242024.

An image-computable model of speeded decision-making. Jaffe, P. I., Santiago-Reyes, G. X., Schafer, R. J., Bissett, P. G., & Poldrack, R. A. (2025). eLife, 13, e98351.3.

A Shift Toward Supercritical Brain Dynamics Predicts Alzheimer’s Disease Progression. Javed, E., Suárez-Méndez, I., Susi, G., Román, J. V., Palva, J. M., Maestú, F., & Palva, S. (2025). Journal of Neuroscience, 45(9), e0688242024.

Choosing is losing: How opportunity cost influences valuations and choice. Lejarraga, T., & Sákovics, J. (2025). Journal of Mathematical Psychology, 124, 102901.

Probabilistically constrained vector summation of motion direction in the mouse superior colliculus. Li, C., DePiero, V. J., Chen, H., Tanabe, S., & Cang, J. (2025). Current Biology, 35(4), 723-733.e3.

Testing the memory encoding cost theory using the multiple cues paradigm. Li, J., Song, H., Huang, X., Fu, Y., Guan, C., Chen, L., Shen, M., & Chen, H. (2025). Vision Research, 228, 108552.

Emergence of Categorical Representations in Parietal and Ventromedial Prefrontal Cortex across Extended Training. Liu, Z., Zhang, Y., Wen, C., Yuan, J., Zhang, J., & Seger, C. A. (2025). Journal of Neuroscience, 45(9), e1315242024.

The Polar Saccadic Flow model: Re-modeling the center bias from fixations to saccades. Mairon, R., & Ben-Shahar, O. (2025). Vision Research, 228, 108546.

Cortical Encoding of Spatial Structure and Semantic Content in 3D Natural Scenes. Mononen, R., Saarela, T., Vallinoja, J., Olkkonen, M., & Henriksson, L. (2025). Journal of Neuroscience, 45(9), e2157232024.

Multiple brain activation patterns for the same perceptual decision-making task. Nakuci, J., Yeon, J., Haddara, N., Kim, J.-H., Kim, S.-P., & Rahnev, D. (2025). Nature Communications, 16, 1785.

Striatal dopamine D2/D3 receptor regulation of human reward processing and behaviour. Osugo, M., Wall, M. B., Selvaggi, P., Zahid, U., Finelli, V., Chapman, G. E., Whitehurst, T., Onwordi, E. C., Statton, B., McCutcheon, R. A., Murray, R. M., Marques, T. R., Mehta, M. A., & Howes, O. D. (2025). Nature Communications, 16, 1852.

Detecting Directional Coupling in Network Dynamical Systems via Kalman’s Observability. Succar, R., & Porfiri, M. (2025). Physical Review Letters, 134(7), 077401.

Extended Cognitive Load Induces Fast Neural Responses Leading to Commission Errors. Taddeini, F., Avvenuti, G., Vergani, A. A., Carpaneto, J., Setti, F., Bergamo, D., Fiorini, L., Pietrini, P., Ricciardi, E., Bernardi, G., & Mazzoni, A. (2025). eNeuro, 12(2).

Striatal arbitration between choice strategies guides few-shot adaptation. Yang, M. A., Jung, M. W., & Lee, S. W. (2025). Nature Communications, 16, 1811.

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience

8 notes

Text

In a $30 million mansion perched on a cliff overlooking the Golden Gate Bridge, a group of AI researchers, philosophers, and technologists gathered to discuss the end of humanity.

The Sunday afternoon symposium, called “Worthy Successor,” revolved around a provocative idea from entrepreneur Daniel Faggella: The “moral aim” of advanced AI should be to create a form of intelligence so powerful and wise that “you would gladly prefer that it (not humanity) determine the future path of life itself.”

Faggella made the theme clear in his invitation. “This event is very much focused on posthuman transition,” he wrote to me via X DMs. “Not on AGI that eternally serves as a tool for humanity.”

A party filled with futuristic fantasies, where attendees discuss the end of humanity as a logistics problem rather than a metaphorical one, could be described as niche. If you live in San Francisco and work in AI, then this is a typical Sunday.

About 100 guests nursed nonalcoholic cocktails and nibbled on cheese plates near floor-to-ceiling windows facing the Pacific Ocean before gathering to hear three talks on the future of intelligence. One attendee sported a shirt that said “Kurzweil was right,” seemingly a reference to Ray Kurzweil, the futurist who predicted machines will surpass human intelligence in the coming years. Another wore a shirt that said “does this help us get to safe AGI?” accompanied by a thinking face emoji.

Faggella told WIRED that he threw this event because “the big labs, the people that know that AGI is likely to end humanity, don't talk about it because the incentives don't permit it” and referenced early comments from tech leaders like Elon Musk, Sam Altman, and Demis Hassabis, who “were all pretty frank about the possibility of AGI killing us all.” Now that the incentives are to compete, he says, “they're all racing full bore to build it.” (To be fair, Musk still talks about the risks associated with advanced AI, though this hasn’t stopped him from racing ahead).

On LinkedIn, Faggella boasted a star-studded guest list, with AI founders, researchers from all the top Western AI labs, and “most of the important philosophical thinkers on AGI.”

The first speaker, Ginevera Davis, a writer based in New York, warned that human values might be impossible to translate to AI. Machines may never understand what it’s like to be conscious, she said, and trying to hard-code human preferences into future systems may be shortsighted. Instead, she proposed a lofty-sounding idea called “cosmic alignment”—building AI that can seek out deeper, more universal values we haven’t yet discovered. Her slides often showed a seemingly AI-generated image of a techno-utopia, with a group of humans gathered on a grass knoll overlooking a futuristic city in the distance.

Critics of machine consciousness will say that large language models are simply stochastic parrots—a metaphor coined by a group of researchers, some of whom worked at Google, who wrote in a famous paper that LLMs do not actually understand language and are only probabilistic machines. But that debate wasn’t part of the symposium, where speakers took as a given the idea that superintelligence is coming, and fast.

By the second talk, the room was fully engaged. Attendees sat cross-legged on the wood floor, scribbling notes. A philosopher named Michael Edward Johnson took the mic and argued that we all have an intuition that radical technological change is imminent, but we lack a principled framework for dealing with the shift—especially as it relates to human values. He said that if consciousness is “the home of value,” then building AI without fully understanding consciousness is a dangerous gamble. We risk either enslaving something that can suffer or trusting something that can’t. (This idea relies on a similar premise to machine consciousness and is also hotly debated.) Rather than forcing AI to follow human commands forever, he proposed a more ambitious goal: teaching both humans and our machines to pursue “the good.” (He didn’t share a precise definition of what “the good” is, but he insists it isn’t mystical and hopes it can be defined scientifically.)

Philosopher Michael Edward Johnson Photograph: Kylie Robison

Entrepreneur and speaker Daniel Faggella Photograph: Kylie Robison

Finally, Faggella took the stage. He believes humanity won’t last forever in its current form and that we have a responsibility to design a successor, not just one that survives but one that can create new kinds of meaning and value. He pointed to two traits this successor must have: consciousness and “autopoiesis,” the ability to evolve and generate new experiences. Citing philosophers like Baruch Spinoza and Friedrich Nietzsche, he argued that most value in the universe is still undiscovered and that our job is not to cling to the old but to build something capable of uncovering what comes next.

This, he said, is the heart of what he calls “axiological cosmism,” a worldview where the purpose of intelligence is to expand the space of what’s possible and valuable rather than merely serve human needs. He warned that the AGI race today is reckless and that humanity may not be ready for what it's building. But if we do it right, he said, AI won’t just inherit the Earth—it might inherit the universe’s potential for meaning itself.

During a break between panels and the Q&A, clusters of guests debated topics like the AI race between the US and China. I chatted with the CEO of an AI startup who argued that, of course, there are other forms of intelligence in the galaxy. Whatever we’re building here is trivial compared to what must already exist beyond the Milky Way.

At the end of the event, some guests poured out of the mansion and into Ubers and Waymos, while many stuck around to continue talking. “This is not an advocacy group for the destruction of man,” Faggella told me. “This is an advocacy group for the slowing down of AI progress, if anything, to make sure we're going in the right direction.”

7 notes

Text

One of the keys to AI safety, IMO, is to make it less human-like—not more, which is unfortunately what's happening.

LLM output should not simulate first-person perspective. If you are using an LLM for any reason, I highly encourage you to customize the instructions to make output as impersonal and dehumanized as possible. Direct the model to avoid generating output that simulates or implies consciousness, personality, agency, etc.

We need to dehumanize AI.

Why? Because when output sounds like it comes from a mind, users tend to treat it like one instead of maintaining an accurate epistemic frame (i.e., remembering that the probabilistic text generator is generating text probabilistically). We need to draw a hard line between language and mind.

8 notes

Text

We've had GANs taking images from one style to another for several years (even before 2018) so the Ghibli stuff left me unimpressed. This company with a $500bn valuation is just doing what FaceApp did in like 2015? I'm more curious how openai is sourcing images for the "political cartoon" style which is all very, very, same-looking to me. Did they hire contractors to make a bunch of images in a certain style? Or did they just rip one artist off? Was it licensed data? Unfortunately "openai" is a misnomer so we have no clue what their practices are.

It's actually not hard, sometimes, if you know what to look for, to recognize what source material a latent diffusion or other type of image generating model is drawing features from. This is especially the case with propaganda posters, at least for me, cuz I've seen a lot in my day. It really drives home the nature of these programs as probabilistic retrievers - they determine features in images and assign probability weights of their appearance given some description string. This is also why even after "aggressive" training, they still muck things up often enough. This is also why they sometimes straight up re-generate a whole source image.

17 notes

Text

Sierra Leone's Blood-Diamond Hydra

ROLE IN SCOTLAND

The Standing Council of Scottish Chiefs (SCSC) is an organisation that represents many prominent clan chiefs and Chiefs of the Name and Arms in Scotland. It claims to be the primary and most authoritative source of information on the Scottish clan system. In the Commonwealth of Nations, a high commissioner is the senior diplomat, generally ranking as an ambassador, in charge of the diplomatic mission of one Commonwealth government to another. Instead of an embassy, the diplomatic mission is generally called a high commission. Chief of Mission is also the title of the team manager of a national delegation in major international multi-discipline sporting events, such as the Olympic Games. The Royal Scots Navy (or Old Scots Navy) was the navy of the Kingdom of Scotland from its origins in the Middle Ages until its merger with the Kingdom of England's Royal Navy per the Acts of Union 1707. There are mentions in Medieval records of fleets commanded by Scottish kings in the twelfth and thirteenth centuries. King Robert I (1274–1329, r. 1306–1329) developed naval power to counter the English in the Wars of Independence (1296–1328).

AUTHENTIC MOVEMENT DIAPREMEIR [Diamond and Premier]

Of Undisputed Origin.

Periodic Table Metallurgy Cultivator with Artisanal Primitive Anthropology, Nationalist, Art Intellect with Athletic Ability, Riverbanks Farmland, Real Estate Investment Trust and Real Estate Brokerage Trust Account, Pool-Live Monopoly Turf Accountant Board Game Tournament, Rugby and Kickboxing, Eagle Conservation, Painting, Polyrhythm Syncopated Progressive Drum Loops with Rhythm Flag (Bass Clef; Anacrusis; Staccato and Legato; Barcarolle; Tonic and Dominant; Triple G Positions; Riff Melody, Imitation Vocals, and Drum & Bass Licks), and (Diamond; Decapods; Mollusk; Opium; Deliriants; Tobacco; Coffee; and Arms) Black Market

(Artisanal Primitive King) Pedagogy: King Anthropology; Mixing a form of Royalty Title with Anthropology. CRAFT SOCIETY Sensory Processing Anthropology Artisan Primitive: Sensory Play of the Sensory Ethnography, Sensory Modulits CNS; Artisanal Plantation Metallurgy Cash Crops Spectrum; Evolution; Savagery, Emerging Markets, Civilianization, ECONOMICS OF FINANCIAL MARKETS; Economic Science (Supply-side Economics), Economic Geography (Artisanal Plantation), Economic Mathematics (CFD Probabilistic Model Exchange), Microeconomics (Contract Theory, Purchasing Theory, Portfolio Theory, Producer Price Index, Profit Sharing Plan, Lipstick Effect, Opportunity Cost, Private Limited Partnership, Public-Private Sectors, Pyramid Marketing, Minor Purchase Group) for Sensory Geography (5 Senses City); Prenatal Hormones with Fetus Alcohol Consumption for Sensory Overload Savant;

Randlords Agronomics Spirit: Economic Expansion, Economic Bubble, Supply-Side Economics, FX Counter Trading Party Interdependence Economics, Intermodal Port Economics, Horizontal Integration, Soil Chemistry;

Meturnomics: Periodic Table Element Manufacturing, Covalent Bonds Fertilizer with Soil Chemistry Ex. Carbon Compounds, Covalent Bonds Fertilizer with Soil Chemistry, Chandelier Tree for Bontonical Indicator; Diamond Vowels: A (Accessories Auctions), E (Exchange Probabilistic Model), I (Sensual Insurance), O (Open-pit Mines), U (Unanimous Laser Cutters and Laser Pressure); Metal Exchange Probabilistic Model for Derivatives CFDS; Crystalline Structure of Elements of the Periodic Table Covalent Bonds Fertilizer, The oxide mineral class includes those minerals in which the oxide anion (O2−) is bonded to one or more metal alloys. I treat my Fertilizer as a Mixing Agent.

AgCurrency: Economic Table, Barter Economics, NIRP Supply-side Fixed Rate Pegged De Facto; AgIndex: Commodities Portfolio Management; Agronomics CFDS//Option Exchange (Credit Spread Options, FX-CFD Interest Rates Beta-Arbitrage w/PPP and Supply-side Economics Currency Pair)

SIERRA PROCESS CERTIFICATE FOR EXPORT AND MARKET VOLUME

Open-pit Mines Economic Geography

Banking System and Probabilistic Model Exchange

Intermodal Cargo Countyline Trafficking Infrastructure

De facto SLL/SDM FX Counter Trading Party for Diamond And Oil CFD; SLL 5% AND SDM -0.5% Interest Rates Contract for Difference.

Diamond enhancements are specific treatments, performed on natural diamonds (usually those already cut and polished into gems), which are designed to improve the visual gemological characteristics of the diamond in one or more ways. These include clarity treatments such as laser drilling to remove black carbon inclusions, fracture filling to make small internal cracks less visible, color irradiation and annealing treatments to make yellow and brown diamonds a vibrant fancy color such as vivid yellow, blue, or pink.

the crystalline structures of the elements of the periodic table which have been produced in bulk at STP and at their melting point (while still solid) and predictions of the crystalline structures of the rest of the elements.

A brokerage account is an investment account held at a licensed brokerage firm. An investor deposits funds into their brokerage account, and the broker executes orders for investments such as stocks, bonds, mutual funds, and exchange-traded funds (ETFs) on behalf of the investor.

The Argyll Arcade is a shopping arcade in the Scottish city of Glasgow. In 1970, the building was listed as an individual monument in the Scottish monument register in the highest monument category A. Buchanan Street is one of the busiest shopping streets in Glasgow, Scotland's largest city. It forms the central section of Glasgow's famous shopping district and offers a more upmarket selection of shops than the adjacent streets, Argyle Street and Sauchiehall Street. Glasgow is considered a "working class city" in contrast to the Scottish capital Edinburgh. There is a 12th century cathedral and four universities, the University of Glasgow, University of Strathclyde, Glasgow Caledonian University and the University of the West of Scotland, as well as the Glasgow School of Art and the Royal Conservatoire of Scotland (formerly the Royal Scottish Academy of Music and Drama).

CURRENCY, OIL, & GOLD COMMODITIES CANDLESTICK CHARTS

Swing Trading: Use mt4/mt5 With Heiken Ashi Charts, Setting at 14 or 21 Momentum Indicator above 0 as Divergence Oscillator and Volume Spread Analysis as Reversal Oscillator and Trade when bullish candlesticks above 200 exponential moving average and/or 20 exponential moving average (EMA) on H1 (Hourly) Time Frame; use H4 (4 Hours) and D1 (1 Day) as reference.
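As a rough illustration of the trend filter described above, the sketch below computes an exponential moving average and a simple momentum value over closing prices, then flags bars where the close sits above the EMA and momentum is above 0. It rests on several assumptions that are not in the original: momentum is taken as the difference between the current close and the close `period` bars earlier, the EMA uses the common smoothing factor 2 / (span + 1) seeded with the first close, and the Heiken Ashi candles, Volume Spread Analysis and multi-timeframe checks are left out entirely. The function names are hypothetical and this is not MT4/MT5 code.

```python
def ema(values, span):
    """Exponential moving average with alpha = 2 / (span + 1), seeded with values[0]."""
    alpha = 2.0 / (span + 1)
    out = [values[0]]
    for x in values[1:]:
        out.append(alpha * x + (1 - alpha) * out[-1])
    return out


def momentum(values, period):
    """Assumed definition: close[t] - close[t - period]; None until enough history."""
    head = [None] * min(period, len(values))
    tail = [values[i] - values[i - period] for i in range(period, len(values))]
    return head + tail


def long_signals(closes, ema_span=200, mom_period=14):
    """True where the close is above the EMA and momentum is above 0."""
    e = ema(closes, ema_span)
    m = momentum(closes, mom_period)
    return [
        m[i] is not None and closes[i] > e[i] and m[i] > 0
        for i in range(len(closes))
    ]


if __name__ == "__main__":
    # Toy hourly closes, invented purely for demonstration.
    closes = [100 + 0.5 * i for i in range(60)]
    signals = long_signals(closes, ema_span=20, mom_period=14)
    print(sum(signals), "of", len(signals), "bars pass the filter")
```

Swapping `ema_span` between 200 and 20 corresponds to the two moving averages mentioned in the text; anything beyond that would need the author's actual chart settings.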

MARINE MILITARY SCIENCE

Pythagorean Military

A + B = C

Commando + Brigade = 12 Teams of 500

Tactical Thinking + Operational Thinking = Mission Intelligence ex. Amphibious or Shore; Mountain or Jungle Warfare

Aquatic Training + Land Skills = Marine Black Ops

Carbines + Machete = CQB and Melee

Divine language, the language of the gods, or, in monotheism, the language of God (or angels), is the concept of a mystical or divine proto-language, which predates and supersedes human speech. Fon was a highly militaristic language constantly organised for warfare; it captured captives during wars and raids against neighboring societies. Tactics such as covering fire, frontal attacks and flanking movements were used in the warfare of Fon. The military of Fon was divided into two units: the right and the left. The right was controlled by the migan and the left was controlled by the mehu. There is an effort to create a machine translator for Fon (to and from French), by Bonaventure Dossou (from Benin) and Chris Emezue (from Nigeria). Their project is called FFR. It uses phrases from Jehovah's Witnesses sermons as well as other biblical phrases as the research corpus to train a Natural Language Processing (NLP) neural net model. A brigade is a major tactical military formation that typically comprises three to six battalions plus supporting elements. It is roughly equivalent to an enlarged or reinforced regiment. Two or more brigades may constitute a division. Brigades formed into divisions are usually infantry or armored (sometimes referred to as combined arms brigades). In addition to combat units, they may include combat support units or sub-units, such as artillery and engineers, and logistic units. Historically, such brigades have been called brigade-groups. On operations, a brigade may comprise both organic elements and attached elements, including some temporarily attached for a specific task. Close-quarters battle (CQB), also called close-quarters combat (CQC), is a close combat situation between multiple combatants involving ranged (typically firearm-based) or melee combat.
Special operations or special ops are military activities conducted, according to NATO, by "specially designated, organized, selected, trained, and equipped forces using unconventional techniques and modes of employment." Special operations may include reconnaissance, unconventional warfare, and counterterrorism, and are typically conducted by small groups of highly trained personnel, emphasizing sufficiency, stealth, speed, and tactical coordination, commonly known as special forces. A black operation (black op for short) is a covert operation which is done by a government or military. Black operations are secret and whoever does them does not admit that they ever happened. There are differences between black operations and ones which are just secret. The main difference is that a black operation often uses deception. This deception might be not telling anybody who did the operation. It might also be blaming the operation on someone else ("false flag" operations). Marines (or naval infantry) are military personnel generally trained to operate on both land and sea, with a particular focus on amphibious warfare. Historically, the main tasks undertaken by marines have included raiding ashore (often in support of naval objectives) and the boarding of vessels during ship-to-ship combat or capture of prize ships. Suppressive Forts Defense and Partisan Raid for Sabotage Offense.

Spiritual warfare is the Christian concept of fighting against the work of preternatural evil forces. It is based on the belief in evil spirits, or demons, that are said to intervene in human affairs in various ways.

Harmony and Contrast Guerilla Warfare: Raiding, also known as depredation, is a military tactic or operational warfare "smash and grab" mission which has a specific purpose. Raiders do not capture and hold a location, but quickly retreat to a previous defended position before enemy forces can respond in a coordinated manner or formulate a counter-attack. Raiders must travel swiftly and are generally too lightly equipped and supported to be able to hold ground. A raiding group may consist of combatants specially trained in this tactic, such as commandos, or as a special mission assigned to any regular troops. Raids are often a standard tactic in irregular warfare, employed by warriors, guerrilla fighters or other irregular military forces. A partisan is a member of a domestic irregular military force formed to oppose control of an area by a foreign power or by an army of occupation by some kind of insurgent activity. Sabotage is a deliberate action aimed at weakening a polity, government, effort, or organization through subversion, obstruction, demoralization, destabilization, division, disruption, or destruction. One who engages in sabotage is a saboteur. Saboteurs typically try to conceal their identities because of the consequences of their actions and to avoid invoking legal and organizational requirements for addressing sabotage. (Sabotage Partisan Raid)

Harmony and Contrast Siege Warfare: A siege (Latin: sedere, lit. 'to sit') is a military blockade of a city, or fortress, with the intent of conquering by attrition, or by well-prepared assault. Siege warfare (also called siegecrafts or poliorcetics) is a form of constant, low-intensity conflict characterized by one party holding a strong, static, defensive position. Consequently, an opportunity for negotiation between combatants is common, as proximity and fluctuating advantage can encourage diplomacy. A fortification (also called a fort, fortress, fastness, or stronghold) is a military construction designed for the defense of territories in warfare, and is used to establish rule in a region during peacetime. The term is derived from Latin fortis ("strong") and facere ("to make"). In military science, suppressive fire is "fire that degrades the performance of an enemy force below the level needed to fulfill its mission". When used to protect exposed friendly troops advancing on the battlefield, it is commonly called covering fire. Suppression is usually only effective for the duration of the fire. It is one of three types of fire support, which is defined by NATO as "the application of fire, coordinated with the maneuver of forces, to destroy, neutralise or suppress the enemy". (Forts and Suppressive fire)

Operational And Tactical Thinking: Operational intelligence is real time, or near real-time intelligence, often derived from technical means, and delivered to ground troops engaged in activity against the adversary. Operational intelligence is immediate, and has a short time to live (TTL). Tactical thinking is of or relating to tactics, especially the placement of military or naval forces in battle or at the front line of a battle; it is characterized by skillful tactics or adroit maneuvering or procedure, especially of military or naval forces (for example, tactical movements).

SOCIO-ACCENT

County Speech Jargon

Glottal Stops Consonants

Ex. T is Eigh'een, G is Jar'on, L Ho'y; How are you amon' us Ho'y Shi'

Made up Verbs, Nouns, and Adjectives with SCHWA Endings: ER=SCHWA

Pover: Do you want a Pover to the Chin!

SOCIO-CULTIVATION MODEL

Pigou Effect, Corporate Tax Havens, Capital Gains Tax Havens, Private-Public Sectors, Joint Venture Plantations, Market Extension Mergers with Business Incubators and Enterprise Foundation Holding Company, Subsidiaries, and Horizontal Integration.

SOCIO-COLONIZATION

Bilingual Sensory Play TV Channel

Queen's English Immersion Liberal Arts Course

DRUGS AND CRIME NEXUS

First, the ‘psychopharmacological model’ argues that certain drugs may produce irrational, excitable, or violent behaviour in an individual. Many benzodiazepines are also likely to produce dependency in a regular user (Marshall & Longnecker 1992; Rall, 1992), and withdrawal from benzodiazepines has been associated with severe mood swings, irritability and personality changes (Marshall & Longnecker, 1992; Rall, 1992). In addition, use of benzodiazepines has been implicated in disinhibited behaviour (Bonn & Bonn 1998; Dobbin 2001; Rall 1992). Further, Makkai (2002) and Goldstein (1985) reported that some offenders use certain drugs purposely to reduce their fear of committing a crime, while Dobbin (2001) reported that benzodiazepine intoxication can produce feelings of over-confidence and invincibility in users, causing them to commit offences they would not normally undertake. Goldstein (1985) suggests that the incidence of psychopharmacological violence is impossible to assess, because such incidents may occur anywhere (including in the home, workplace, on the street and so on) and often go unreported, and also because when cases are reported the psychopharmacological state of the offender is often not officially recorded. The second model of the link between drugs and violence, the ‘economic compulsive’ model, argues that some drug users commit violent crimes, such as armed robberies, to support an expensive drug habit (Goldstein 1985), consistent with the enslavement model of property crime (Goode 1997). Theoretically, as illicit drugs are expensive and may be typified by compulsive patterns of use, the primary motivation of the user is to obtain money to purchase them. Thus, the violence is not usually intended, but occurs as a result of the situation where a property crime is being committed, such as the offender’s nervousness, the victim’s reaction, use of weapons by perpetrator or victim, or intercession by bystanders (Goldstein 1985). 
Studies have found that most heroin users will avoid violent acquisitive crime if viable non-violent alternatives exist, because violence is more dangerous and also potentially increases the penalty if caught, and/or because perpetrators may lack a tendency towards violent behaviour (Goldstein 1985). In relation to violent crime, three models have also been put forward that may suggest implications for evaluating potential interventions aimed at reducing drug-related crime.

Abstract

Personality disorders and particularly antisocial personality disorders (APD) are quite frequent in opioid-dependent subjects. They show various personality traits: high neuroticism, high impulsivity, higher extraversion than the general population. Previous studies have reported that some but not all personality traits improved with treatment. In a previous study, we found a low rate of APD in a French population of opioid-dependent subjects. For this reason, we evaluated personality traits at intake and during maintenance treatment with methadone. Methods - The form A of the Eysenck Personality Inventory (EPI) was given to opioid addicts at intake and after 6 and 12 months of methadone treatment. Results - 134 subjects (96 males and 38 females) took the test at intake, 60 completed 12 months of treatment. After 12 months, the EPI Neuroticism (N) and the Extraversion-introversion (E) scale scores decreased significantly. The N score improved in the first 6 months, while the E score improved only during the second 6 months of treatment. Compared to a reference group of French normal controls, male and female opioid addicts showed high N and E scores. Demographic data and EPI scores of patients who stayed in treatment for 12 months did not differ significantly from those of dropouts (n=23). Patients with a history of suicide attempts (SA) started to use heroin at an earlier age and they showed a higher E score and a tendency for a higher N score at intake. Discussion - The two personality dimensions of the EPI changed during MMT, and the N score converged towards the score of normal controls. Opioid addicts differ from normal controls mostly in their N score. The EPI did not help to differentiate 12-month completers from dropouts. Higher E scores in patients with an SA history might reflect a higher impulsivity, which has been linked to suicidality in other patient groups.

Personality

So called 'benzo binges' have been associated with shoplifting and other crimes. Patients may also experience paradoxical excitement with increased anxiety, insomnia, talkativeness, restlessness, mania, and occasionally rage and violent behaviour (known as the 'Rambo effect').

High doses are also associated with a puzzling complication known as the “Rambo effect.” This unusual side effect occurs when a Xanax user begins displaying behaviors that are very unlike them. This might include aggression, promiscuity, or theft. It’s not clear why some people react this way or how to predict if it will happen to you.

Despite the fully legal status of several common deliriant plants and OTC medicines, deliriants are largely unpopular as recreational drugs due to the severe dysphoria, uncomfortable and generally damaging cognitive and physical effects, as well as the unpleasant nature of the hallucinations.

Deliriants are a subclass of hallucinogen. The term was coined in the early 1980s to distinguish these drugs from psychedelics such as LSD and dissociatives such as ketamine, due to their primary effect of causing delirium, as opposed to the more lucid (i.e. rational thought is preserved better, including the skepticism about the hallucinations) and less disturbed states produced by other types of hallucinogens. The term generally refers to anticholinergic drugs, which are substances that inhibit the function of the neurotransmitter acetylcholine.

The systematic name (IUPAC) is [1R-(exo,exo)]-3-(benzoyloxy)-8-methyl-8-azabicyclo[3.2.1]octane-2-carboxylic acid methyl ester. Cocaine is the methyl ester of benzoylecgonine and is also known as 3β-hydroxy-1αH,5αH-tropane-2β-carboxylic acid methyl ester benzoate.

Specifically, the carbonyl oxygens and amine group on cocaine, on average, form ∼5 bonds with the water molecules in the surrounding solvent, and the top 30% of water molecules within 4 Å of cocaine are localized in the cavity formed by an internal hydrogen bond within the cocaine molecule.

£10 re-up and Marked up Pills BYOB.

Ex. Smurf the £10 re-up with $40 a pill for Molly Water.

Pill Presser Kids.

NMDA, ACETYLCHOLINE, AND WATER SOLUBLE PROTEIN.

💎💎💎💎💎💎💎💎💎💎💎💎💎💎💎💎💎💎🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🏴🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱🇸🇱💸💸💸💸💸💸💸💸💸💸💸💸💸💸💸💸💸💸🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀🦀👑👑👑👑👑👑👑👑👑👑👑👑👑👑👑👑👑👑

ST. THOMAS MYR. OBASI

Text

New quantum theory of gravity

At long last, a unified theory combining gravity with the other fundamental forces—electromagnetism and the strong and weak nuclear forces—is within reach. Bringing gravity into the fold has been the goal of generations of physicists, who have struggled to reconcile the incompatibility of two cornerstones of modern physics: quantum field theory and Einstein's theory of gravity.

Researchers at Aalto University have developed a new quantum theory of gravity which describes gravity in a way that's compatible with the standard model of particle physics, opening the door to an improved understanding of how the universe began.

While the world of theoretical physics may seem remote from applicable tech, the findings are remarkable. Modern technology is built on such fundamental advances—for example, the GPS in your smartphone works thanks to Einstein's theory of gravity.

Mikko Partanen and Jukka Tulkki describe their new theory in a paper published in Reports on Progress in Physics. Lead author Partanen expects that within a few years, the findings will have unlocked critical understanding.

"If this turns out to lead to a complete quantum field theory of gravity, then eventually it will give answers to the very difficult problems of understanding singularities in black holes and the Big Bang," he says.

"A theory that coherently describes all fundamental forces of nature is often called the theory of everything," says Partanen, although he doesn't like to use the term himself. "Some fundamental questions of physics still remain unanswered. For example, the present theories do not yet explain why there is more matter than antimatter in the observable universe."

Reconciling the irreconcilable

The key was finding a way to describe gravity in a suitable gauge theory—a kind of theory in which particles interact with each other through a field.

"The most familiar gauge field is the electromagnetic field. When electrically charged particles interact with each other, they interact through the electromagnetic field, which is the pertinent gauge field," explains Tulkki.

"So when we have particles which have energy, the interactions they have just because they have energy would happen through the gravitational field."

A challenge long facing physicists is finding a gauge theory of gravity that is compatible with the gauge theories of the other three fundamental forces—the electromagnetic force, the weak nuclear force and the strong nuclear force. The standard model of particle physics is a gauge theory which describes those three forces, and it has certain symmetries.

"The main idea is to have a gravity gauge theory with a symmetry that is similar to the standard model symmetries, instead of basing the theory on the very different kind of spacetime symmetry of general relativity," says Partanen, the study's lead author.

Without such a theory, physicists cannot reconcile our two most powerful theories, quantum field theory and general relativity. Quantum theory describes the world of the very small—tiny particles interacting in probabilistic ways—while general relativity describes the chunkier world of familiar objects and their gravitational interaction.

They are descriptions of our universe from different perspectives, and both theories have been confirmed to extraordinary precision—yet they are incompatible with each other. Furthermore, because gravitational interactions are weak, more precision is needed to study true quantum gravity effects beyond general relativity, which is a classical theory.

"A quantum theory of gravity is needed to understand what kind of phenomena there are in cases where there's a gravitational field and high energies," says Partanen. Those are the conditions around black holes and in the very early universe, just after the Big Bang—areas where existing theories in physics stop working.

Always fascinated with the very big questions of physics, he discovered a new symmetry-based approach to the theory of gravity and began to develop the idea further with Tulkki. The resulting work has great potential to unlock a whole new era of scientific understanding, in much the same way as understanding gravity paved the way to eventually creating GPS.

Open invite to the scientific community

Although the theory is promising, the duo point out that they have not yet completed its proof. The theory uses a technical procedure known as renormalization, a mathematical way of dealing with infinities that show up in the calculations.

So far Partanen and Tulkki have shown that this works up to a certain point—for so-called 'first order' terms—but they need to make sure the infinities can be eliminated throughout the entire calculation.

"If renormalization doesn't work for higher order terms, you'll get infinite results. So it's vital to show that this renormalization continues to work," explains Tulkki. "We still have to make a complete proof, but we believe it's very likely we'll succeed."

Partanen concurs. There are still challenges ahead, he says, but with time and effort he expects they'll be overcome. "I can't say when, but I can say we'll know much more about that in a few years."

For now, they've published the theory as it stands, so that the rest of the scientific community can become familiar with it, check its results, help develop it further, and build on it.

"Like quantum mechanics and the theory of relativity before it, we hope our theory will open countless avenues for scientists to explore," Partanen concludes.

IMAGE: The gravity quantum field is calculated in flat spacetime. The curved classical metric is calculated using the expectation value of the gravity quantum field. Credit: Aalto University

Text