#Large Language Model Solutions

What Is the Role of AI Ethics in Custom Large Language Model Solutions for 2025?

The rapid evolution of artificial intelligence (AI) has led to significant advancements in technology, particularly in natural language processing (NLP) through the development of large language models (LLMs). These models, powered by vast datasets and sophisticated algorithms, are capable of understanding, generating, and interacting in human-like ways. As we move toward 2025, the importance of AI ethics in the creation and deployment of custom LLM solutions becomes increasingly critical. This blog explores the role of AI ethics in shaping the future of these technologies, focusing on accountability, fairness, transparency, and user privacy.

Understanding Custom Large Language Models

Before delving into AI ethics, it is essential to understand what custom large language models are. These models are tailored to specific applications or industries, allowing businesses to harness the power of AI while meeting their unique needs. Custom Large Language Model solutions can enhance customer service through chatbots, streamline content creation, improve accessibility for disabled individuals, and even support mental health initiatives by providing real-time conversation aids.

However, the deployment of such powerful technologies also raises ethical considerations that must be addressed to ensure responsible use. With the potential to influence decision-making, shape societal norms, and impact human behavior, LLMs pose both opportunities and risks.

The Importance of AI Ethics

1. Accountability

As AI systems become more integrated into daily life and business operations, accountability becomes a crucial aspect of their deployment. Who is responsible for the outputs generated by LLMs? If an LLM generates misleading, harmful, or biased content, understanding where the responsibility lies is vital. Developers, businesses, and users must collaborate to establish guidelines that outline accountability measures.

In custom LLM solutions, accountability involves implementing robust oversight mechanisms. This includes regular audits of model outputs, feedback loops from users, and clear pathways for addressing grievances. Establishing accountability ensures that AI technologies serve the public interest and that any adverse effects are appropriately managed.

2. Fairness and Bias Mitigation

AI systems are only as good as the data they are trained on. If the training datasets contain biases, the resulting LLMs will likely perpetuate or even amplify these biases. For example, an LLM trained primarily on texts from specific demographics may inadvertently generate outputs that favor those perspectives while marginalizing others. This phenomenon, known as algorithmic bias, poses significant risks in areas like hiring practices, loan approvals, and law enforcement.

Ethics in AI calls for fairness, which necessitates that developers actively work to identify and mitigate biases in their models. This involves curating diverse training datasets, employing techniques to de-bias algorithms, and ensuring that custom LLMs are tested across varied demographic groups. Fairness is not just a legal requirement; it is a moral imperative that can enhance the trustworthiness of AI solutions.

3. Transparency

Transparency is crucial in building trust between users and AI systems. Users should have a clear understanding of how LLMs work, the data they were trained on, and the processes behind their outputs. When users understand the workings of AI, they can make informed decisions about its use and limitations.

For custom LLM solutions, transparency involves providing clear documentation about the model’s architecture, training data, and potential biases. This can include detailed explanations of how the model arrived at specific outputs, enabling users to gauge its reliability. Transparency also empowers users to challenge or question AI-generated content, fostering a culture of critical engagement with technology.

4. User Privacy and Data Protection

As LLMs often require large volumes of user data for personalization and improvement, ensuring user privacy is paramount. The ethical use of AI demands that businesses prioritize data protection and adopt strict privacy policies. This involves anonymizing user data, obtaining explicit consent for data usage, and providing users with control over their information.

Moreover, the integration of privacy-preserving technologies, such as differential privacy, can help protect user data while still allowing LLMs to learn and improve. This approach enables developers to glean insights from aggregated data without compromising individual privacy.
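As a rough illustration of how differential privacy can work in practice, the sketch below applies the Laplace mechanism to a simple counting query. It is a minimal example under stated assumptions, not a production implementation: the function name `private_count`, the `epsilon` value, and the sample data are all hypothetical.

```python
import random


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of items matching predicate (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one person's
    record changes the true count by at most 1, so the noise scale is
    1 / epsilon. Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponential samples is Laplace-distributed.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical example data: session lengths in minutes for ten users.
session_minutes = [3, 12, 45, 7, 30, 22, 5, 60, 18, 9]
noisy = private_count(session_minutes, lambda m: m > 15, epsilon=0.5,
                      rng=random.Random(0))
print(f"noisy count of long sessions: {noisy:.2f}")
```

The analyst sees only the noisy total, so the presence or absence of any single user's record is hard to infer, while the aggregate stays approximately useful.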

5. Human Oversight and Collaboration

While LLMs can operate independently, human oversight remains essential. AI should augment human decision-making rather than replace it. Ethical AI practices advocate for a collaborative approach where humans and AI work together to achieve optimal outcomes. This means establishing frameworks for human-in-the-loop systems, where human judgment is integrated into AI operations.

For custom LLM solutions, this collaboration can take various forms, such as having human moderators review AI-generated content or incorporating user feedback into model updates. By ensuring that humans play a critical role in AI processes, developers can enhance the ethical use of technology and safeguard against potential harms.

The Future of AI Ethics in Custom LLM Solutions

As we approach 2025, the role of AI ethics in custom large language model solutions will continue to evolve. Here are some anticipated trends and developments in the realm of AI ethics:

1. Regulatory Frameworks

Governments and international organizations are increasingly recognizing the need for regulations governing AI. By 2025, we can expect more comprehensive legal frameworks that address ethical concerns related to AI, including accountability, fairness, and transparency. These regulations will guide businesses in developing and deploying AI technologies responsibly.

2. Enhanced Ethical Guidelines

Professional organizations and industry groups are likely to establish enhanced ethical guidelines for AI development. These guidelines will provide developers with best practices for building ethical LLMs, ensuring that the technology aligns with societal values and norms.

3. Focus on Explainability

The demand for explainable AI will grow, with users and regulators alike seeking greater clarity on how AI systems operate. By 2025, there will be an increased emphasis on developing LLMs that can articulate their reasoning and provide users with understandable explanations for their outputs.

4. User-Centric Design

As user empowerment becomes a focal point, the design of custom LLM solutions will prioritize user needs and preferences. This approach will involve incorporating user feedback into model training and ensuring that ethical considerations are at the forefront of the development process.

Conclusion

The role of AI ethics in custom large language model solutions for 2025 is multifaceted, encompassing accountability, fairness, transparency, user privacy, and human oversight. As AI technologies continue to evolve, developers and organizations must prioritize ethical considerations to ensure responsible use. By establishing robust ethical frameworks and fostering collaboration between humans and AI, we can harness the power of LLMs while safeguarding against potential risks. In doing so, we can create a future where AI technologies enhance our lives and contribute positively to society.

#Custom Large Language Model Solutions#Custom Large Language Model#Custom Large Language#Large Language Model#large language model development services#large language model development#Large Language Model Solutions

0 notes

Simplify Transactions and Boost Efficiency with Our Cash Collection Application

Manual cash collection can lead to inefficiencies and increased risks for businesses. Our cash collection application provides a streamlined solution, tailored to support all business sizes in managing cash effortlessly. Key features include automated invoicing, multi-channel payment options, and comprehensive analytics, all of which simplify the payment process and enhance transparency. The application is designed with a focus on usability and security, ensuring that every transaction is traceable and error-free. With real-time insights and customizable settings, you can adapt the application to align with your business needs. Its robust reporting functions give you a bird’s eye view of financial performance, helping you make data-driven decisions. Move beyond traditional, error-prone cash handling methods and step into the future with a digital approach. With our cash collection application, optimize cash flow and enjoy better financial control at every level of your organization.

#seo agency#seo company#seo marketing#digital marketing#seo services#azure cloud services#amazon web services#ai powered application#android app development#augmented reality solutions#augmented reality in education#augmented reality (ar)#augmented reality agency#augmented reality development services#cash collection application#cloud security services#iot applications#iot#iotsolutions#iot development services#iot platform#digitaltransformation#innovation#techinnovation#iot app development services#large language model services#artificial intelligence#llm#generative ai#ai

4 notes

What is the rabbit r1? The Future of Personal Technology

In the rapidly evolving landscape of technology, a groundbreaking device has emerged that aims to revolutionize the way we interact with our digital world. Meet the rabbit r1, an innovative gadget that blends simplicity with sophistication, offering a unique alternative to the traditional smartphone experience. This article delves into the essence of the rabbit r1, exploring its features,…

#128GB storage#360-degree camera#4G connectivity#AI in daily life#AI-powered device#analog scroll wheel#app-free experience#cloud-based solutions#compact device#digital decluttering#digital simplification#future of smartphones#intuitive technology#Large Action Model#MediaTek P35#modern tech solutions#natural language interface#online services simplification#personal assistant devices#personal technology#Rabbit Inc#Rabbit OS#rabbit r1#smart gadgets#tech trends 2024#technology innovation

0 notes

AI hasn't improved in 18 months. It's likely that this is it. There is currently no evidence the capabilities of ChatGPT will ever improve. It's time for AI companies to put up or shut up.

I'm just re-iterating this excellent post from Ed Zitron, but it's not left my head since I read it and I want to share it. I'm also taking some talking points from Ed's other posts. So basically:

We keep hearing AI is going to get better and better, but these promises seem to be coming from a mix of companies engaging in wild speculation and lying.

ChatGPT, the industry-leading large language model, has not materially improved in 18 months. For something that claims to be getting exponentially better, it sure is the same shit.

Hallucinations appear to be an inherent aspect of the technology. Since it's based on statistics and AI doesn't know anything, it can never know what is true. How could I possibly trust it to get any real work done if I can't rely on its output? If I have to fact-check everything it says I might as well do the work myself.

For "real" AI that does know what is true to exist, it would require us to discover new concepts in psychology, math, and computing, which OpenAI is not working on, and seemingly no other AI companies are either.

OpenAI has seemingly already slurped up all the data from the open web. ChatGPT 5 would take 5x more training data than ChatGPT 4 to train. Where is this data coming from, exactly?

Since improvement appears to have ground to a halt, what if this is it? What if ChatGPT 4 is as good as LLMs can ever be? What use is it?

As Jim Covello, a leading semiconductor analyst at Goldman Sachs, said (on page 10, and that's big finance so you know they only care about money): if tech companies are spending a trillion dollars to build up the infrastructure to support AI, what trillion-dollar problem is it meant to solve? AI companies have a unique talent for burning venture capital and it's unclear if OpenAI will be able to survive more than a few years unless everyone suddenly adopts it all at once. (Hey, didn't crypto and the metaverse also require spontaneous mass adoption to make sense?)

There is no problem that current AI is a solution to. Consumer tech is basically solved; normal people don't need more tech than a laptop and a smartphone. Big tech have run out of innovations, and they are desperately looking for the next thing to sell. It happened with the metaverse and it's happening again.

In summary:

AI hasn't materially improved since the launch of ChatGPT 4, which wasn't that big of an upgrade to 3.

There is currently no technological roadmap for AI to become better than it is. (As Jim Covello said in the Goldman Sachs report, the evolution of smartphones was openly planned years ahead of time.) The current problems are inherent to the current technology and nobody has indicated there is any way to solve them in the pipeline. We have likely reached the limits of what LLMs can do, and they still can't do much.

Don't believe AI companies when they say things are going to improve from where they are now before they provide evidence. It's time for the AI shills to put up, or shut up.

5K notes

A Comprehensive Guide about Google Cloud Generative AI Studio https://medium.com/google-cloud/a-comprehensive-guide-about-google-cloud-generative-ai-studio-1b2bafc4108a?source=rss----e52cf94d98af---4

#google-cloud-platform#generative-ai#generative-ai-tools#generative-ai-solution#large-language-models#Rubens Zimbres#Google Cloud - Community - Medium

0 notes

creatively -- images, storytelling, idea sourcing, etc

non-creatively -- solutions to problems, understanding/learning, emails, etc

*I'm mostly talking about generative AI or large language models, not necessarily machine learning for data processing or games, etc, but do with this what you will

#this is because i had a startling conversation with a coworker yesterday. so i have to poll the people#please don't yell at me for what im calling creative and non-creative. those are just suggestions !!!

360 notes

☁︎。⋆。 ゚☾ ゚。⋆ how to resume ⋆。゚☾。⋆。 ゚☁︎ ゚

after 10 years & 6 jobs in corporate america, i would like to share how to game the system. we all want the biggest payoff for the least amount of work, right?

know thine enemy: beating the robots

i see a lot of misinformation about how AI is used to scrape resumes. i can't speak for every company but most corporations use what is called applicant tracking software (ATS).

no respectable company is using chatgpt to sort applications. i don't know how you'd even write the prompt to get a consumer-facing product to do this. i guarantee that target, walmart, bank of america, whatever, they are all using B2B SaaS enterprise solutions. there is not one hiring manager plinking away at a large language model.

ATS scans your resume in comparison to the job posting, parses which resumes contain key words, and presents the recruiter and/or hiring manager with resumes with a high "score." the goal of writing your resume is to get your "score" as high as possible.
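to make the "score" idea concrete, here is a minimal python sketch of keyword matching. it is a toy under stated assumptions, not how jobscan or any real ATS product works (those are proprietary): the function name `keyword_score`, the keyword list, and the sample text are all made up for illustration.

```python
import re


def keyword_score(resume_text, job_posting, keywords):
    """Toy score: fraction of the posting's keywords that appear in the resume."""
    resume = resume_text.lower()
    posting = job_posting.lower()
    # Only count keywords that actually appear in the job posting.
    wanted = [k.lower() for k in keywords if k.lower() in posting]
    if not wanted:
        return 0.0
    # Whole-phrase match so "teams" does not match inside another word.
    hits = [k for k in wanted
            if re.search(r"\b" + re.escape(k) + r"\b", resume)]
    return len(hits) / len(wanted)


# Hypothetical posting, resume bullet, and keyword list.
posting = "seeking a strong collaborator to lead cross-functional teams and manage budgets"
resume = "led cross-functional teams across three product launches"
keywords = ["cross-functional teams", "budgets", "python"]

score = keyword_score(resume, posting, keywords)
print(score)  # 0.5: one of the two posting keywords is in the resume
```

in this toy version, adding a bullet that mentions "budgets" would push the score to 1.0, which is the whole game.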

but tumblr user lightyaoigami, how do i beat the robots?

great question, y/n. you will want to seek out an ATS resume checker. i have personally found success with jobscan, which is not free, but works extremely well. there is a free trial period, and other ATS scanners are in fact free. some of these tools are so sophisticated that they can actually help build your resume from scratch with your input. i wrote my own resume and used jobscan to compare it to the applications i was finishing.

do not use chatgpt to write your resume or cover letter. it is painfully obvious. here is a tutorial on how to use jobscan. for the zillionth time i do not work for jobscan nor am i a #jobscanpartner i am just a person who used this tool to land a job at a challenging time.

the resume checkers will tell you what words and/or phrases you need to shoehorn into your bullet points - i.e., if you are applying for a job that requires you to be a strong collaborator, the resume checker might suggest you include the phrase "cross-functional teams." you can easily re-word your bullets to include this with a little noodling.

don't i need a cover letter?

it depends on the job. after you have about 5 years of experience, i would say that they are largely unnecessary. while i was laid off, i applied to about 100 jobs in a three-month period (#blessed to have been hired quickly). i did not submit a cover letter for any of them, and i had a solid rate of phone screens/interviews after submission despite not having a cover letter. if you are absolutely required to write one, do not have chatgpt do it for you. use a guide from a human being who knows what they are talking about, like ask a manager or betterup.

but i don't even know where to start!

i know it's hard, but you have to have a bit of entrepreneurial spirit here. google duckduckgo is your friend. don't pull any bean soup what-about-me-isms. if you truly don't know where to start, look for an ATS-optimized resume template.

a word about neurodivergence and job applications

i, like many of you, am autistic. i am intimately familiar with how painful it is to expend limited energy on this demoralizing task only to have your "reward" be an equally, if not more so, demoralizing work experience. i don't have a lot of advice for this beyond craft your worksona like you're making a d&d character (or a fursona or a sim or an OC or whatever made up blorbo generator you personally enjoy).

and, remember, while a lot of office work is really uncomfortable and involves stuff like "talking in meetings" and "answering the phone," these things are not an inherent risk. discomfort is not tantamount to danger, and we all have to do uncomfortable things in order to thrive. there are a lot of ways to do this and there is no one-size-fits-all answer. not everyone can mask for extended periods, so be your own judge of what you can or can't do.

i like to think of work as a drag show where i perform this other personality in exchange for money. it is much easier to do this than to fight tooth and nail to be unmasked at work, which can be a risk to your livelihood and peace of mind. i don't think it's a good thing that we have to mask at work, but it's an important survival skill.

⋆。゚☁︎。⋆。 ゚☾ ゚。⋆ good luck ⋆。゚☾。⋆。 ゚☁︎ ゚。⋆

640 notes

Large language models like those offered by OpenAI and Google famously require vast troves of training data to work. The latest versions of these models have already scoured much of the existing internet, which has led some to fear there may not be enough new data left to train future iterations. Some prominent voices in the industry, like Meta CEO Mark Zuckerberg, have posited a solution to that data dilemma: simply train new AI systems on old AI outputs.

But new research suggests that cannibalizing past model outputs would quickly result in strings of babbling AI gibberish and could eventually lead to what’s being called “model collapse.” In one example, researchers fed an AI a benign paragraph about church architecture only to have it rapidly degrade over generations. The final, most “advanced” model simply repeated the phrase “black@tailed jackrabbits” continuously.

A study published in Nature this week put that AI-trained-on-AI scenario to the test. The researchers made their own language model which they initially fed original, human-generated text. They then made nine more generations of models, each trained on the text output generated by the model before it. The end result in the final generation was nonessential surrealist-sounding gibberish that had essentially nothing to do with the original text. Over time and successive generations, the researchers say their model “becomes poisoned with its own projection of reality.”
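The generation-on-generation loop described above can be sketched with a much simpler stand-in for a language model. The toy below is not the researchers' experiment: it assumes the "model" is just a Gaussian fitted to its training data, and the function name `simulate_generations`, the sample size, and the seed are hypothetical choices for illustration.

```python
import random
import statistics


def simulate_generations(n_generations=10, sample_size=50, seed=0):
    """Fit a Gaussian, then train each new generation on the last one's samples."""
    rng = random.Random(seed)
    # Generation 0 trains on "human" data: samples from a standard normal.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    history = []
    for generation in range(n_generations):
        mu = statistics.fmean(data)
        sigma = statistics.stdev(data)
        history.append((generation, mu, sigma))
        # The next generation sees only samples drawn from the fitted model.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
    return history


for generation, mu, sigma in simulate_generations():
    print(f"generation {generation}: mean={mu:+.3f} stdev={sigma:.3f}")
```

Each generation inherits the estimation error of the one before it, so the fitted parameters wander away from the original data rather than being corrected back toward it. That compounding drift is the intuition behind the collapse, shown here in a far simpler setting than a real language model.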

#diiieeee dieeeee#ai#model collapse#the bots have resorted to drinking their own piss in desperation#generative ai

567 notes

GenAI and writing in its shadow

I see a lot of creative writers express anxiety or even despair because of how good GenAI or LLMs (Large Language Models) seem to be at writing.

What I want to say is, please don't worry.

Having both worked in software development and been writing for a while, I'd like to happily say that GenAI is ✨garbage✨ at writing good stories because of two big reasons:

They lose the plot too easily and can't do callbacks or non-linear writing, because there is a hard upper limit to what an LLM can 'remember'. Even when presented with something like a plan that they have to follow, they don't necessarily connect each step of the plan to each other; they'll do each calculation in isolation, finish with it, then move on to the next. Because narratives, especially when you have more than one character, become these tangled webs of different 'plans' happening simultaneously, the LLM will 🎶 itself trying to remember and instead begin to 'improvise' solutions, much of which will deviate wildly from the plan and the narrative itself.

Also, did you notice how I used the words calculation and solutions? LLMs understand words and meaning as numbers. The next word in a sentence is math to them: they use statistics to guess which word would probably follow the word before it and still be relevant. This doesn't work for narratives because there's these wonderful things called ✨nuance, subtlety, emotional resonance, and subtext✨, and none of that is quantifiable. You can't put a number value on the 'why' of a character shrugging, or heck, not saying anything—that is literally 0 to an LLM, and thus impossible for it to calculate.
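To show what "guessing the next word with statistics" means at its very simplest, here is a tiny bigram sketch. Real LLMs are neural networks working over tokens with far longer context, so treat this only as a stripped-down stand-in; the function names and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the most frequent follower of word, or None if never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))  # prints "cat" (follows "the" twice)
```

Notice that the model has no idea why the cat sat anywhere; it only knows which word tended to come next.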

Both of these issues aren't just math or coding problems. The first requires absurd (and insanely costly) leaps in computing power and hardware. The second requires building an LLM on something that... isn't a computer.

Write your hearts out. Don't be afraid. The world needs us to stay creative, because while science and technology give us the ways and means to live our lives, creativity and emotion give us the reason to be alive. 💖

#writing#writeblr#writers on tumblr#creative writing#on writing#writers#writers and poets#writing community#creativity#writer humor#writer things

118 notes

Note

I saw something about generative AI on JSTOR. Can you confirm whether you really are implementing it and explain why? I’m pretty sure most of your userbase hates AI.

A generative AI/machine learning research tool on JSTOR is currently in beta, meaning that it's not fully integrated into the platform. This is an opportunity to determine how this technology may be helpful in parsing through dense academic texts to make them more accessible and gauge their relevancy.

To JSTOR, this is primarily a learning experience. We're looking at how beta users are engaging with the tool and the results that the tool is producing to get a sense of its place in academia.

In order to understand what we're doing a bit more, it may help to take a look at what the tool actually does. From a recent blog post:

Content evaluation

Problem: Traditionally, researchers rely on metadata, abstracts, and the first few pages of an article to evaluate its relevance to their work. In humanities and social sciences scholarship, which makes up the majority of JSTOR’s content, many items lack abstracts, meaning scholars in these areas (who in turn are our core cohort of users) have one less option for efficient evaluation.

When using a traditional keyword search in a scholarly database, a query might return thousands of articles that a user needs significant time and considerable skill to wade through, simply to ascertain which might in fact be relevant to what they’re looking for, before beginning their search in earnest.

Solution: We’ve introduced two capabilities to help make evaluation more efficient, with the aim of opening the researcher’s time for deeper reading and analysis:

Summarize, which appears in the tool interface as “What is this text about,” provides users with concise descriptions of key document points. On the back-end, we’ve optimized the Large Language Model (LLM) prompt for a concise but thorough response, taking on the task of prompt engineering for the user by providing advanced direction to:

Extract the background, purpose, and motivations of the text provided.

Capture the intent of the author without drawing conclusions.

Limit the response to a short paragraph to provide the most important ideas presented in the text.

Search term context is automatically generated as soon as a user opens a text from search results, and provides information on how that text relates to the search terms the user has used. Whereas the summary allows the user to quickly assess what the item is about, this feature takes evaluation to the next level by automatically telling the user how the item is related to their search query, streamlining the evaluation process.

Discovering new paths for exploration

Problem: Once a researcher has discovered content of value to their work, it’s not always easy to know where to go from there. While JSTOR provides some resources, including a “Cited by” list as well as related texts and images, these pathways are limited in scope and not available for all texts. Especially for novice researchers, or those just getting started on a new project or exploring a novel area of literature, it can be needlessly difficult and frustrating to gain traction.

Solution: Two capabilities make further exploration less cumbersome, paving a smoother path for researchers to follow a line of inquiry:

Recommended topics are designed to assist users, particularly those who may be less familiar with certain concepts, by helping them identify additional search terms or refine and narrow their existing searches. This feature generates a list of up to 10 potential related search queries based on the document’s content. Researchers can simply click to run these searches.

Related content empowers users in two significant ways. First, it aids in quickly assessing the relevance of the current item by presenting a list of up to 10 conceptually similar items on JSTOR. This allows users to gauge the document’s helpfulness based on its relation to other relevant content. Second, this feature provides a pathway to more content, especially materials that may not have surfaced in the initial search. By generating a list of related items, complete with metadata and direct links, users can extend their research journey, uncovering additional sources that align with their interests and questions.

Supporting comprehension

Problem: You think you have found something that could be helpful for your work. It’s time to settle in and read the full document… working through the details, making sure they make sense, figuring out how they fit into your thesis, etc. This all takes time and can be tedious, especially when working through many items.

Solution: To help ensure that users find high quality items, the tool incorporates a conversational element that allows users to query specific points of interest. This functionality, reminiscent of CTRL+F but for concepts, offers a quicker alternative to reading through lengthy documents.

By asking questions that can be answered by the text, users receive responses only if the information is present. The conversational interface adds an accessibility layer as well, making the tool more user-friendly and tailored to the diverse needs of the JSTOR user community.

Credibility and source transparency

We knew that, for an AI-powered tool to truly address user problems, it would need to be held to extremely high standards of credibility and transparency. On the credibility side, JSTOR’s AI tool uses only the content of the item being viewed to generate answers to questions, effectively reducing hallucinations and misinformation.

On the transparency front, responses include inline references that highlight the specific snippet of text used, along with a link to the source page. This makes it clear to the user where the response came from (and that it is a credible source) and also helps them find the most relevant parts of the text.

293 notes

Recent advances in artificial intelligence (AI) have made large language models commonplace in our society, in areas such as education, science, medicine, art, and finance, among many others. These models are increasingly present in our daily lives. However, they are not as reliable as users expect. This is the conclusion of a study led by a team from the VRAIN Institute of the Universitat Politècnica de València (UPV) and the Valencian School of Postgraduate Studies and Artificial Intelligence Research Network (ValgrAI), together with the University of Cambridge, published today in the journal Nature.

The work reveals an “alarming” trend: compared to the first models, and considering certain aspects, reliability has worsened in the most recent models (GPT-4 compared to GPT-3, for example).

According to José Hernández-Orallo, researcher at the Valencian Research Institute in Artificial Intelligence (VRAIN) of the UPV and ValgrAI, one of the main concerns about the reliability of language models is that their performance does not align with the human perception of task difficulty. In other words, the tasks where humans expect models to fail, based on perceived difficulty, do not match the tasks where models actually fail. “Models can solve certain complex tasks according to human abilities, but at the same time fail in simple tasks in the same domain. For example, they can solve several doctoral-level mathematical problems, but can make mistakes in a simple addition,” points out Hernández-Orallo.

In 2022, Ilya Sutskever, the scientist behind some of the biggest advances in artificial intelligence in recent years (from the ImageNet solution to AlphaGo) and co-founder of OpenAI, predicted that “perhaps over time that discrepancy will diminish.” However, the study by the UPV, ValgrAI, and University of Cambridge team shows that this has not been the case. To demonstrate this, they investigated three key aspects that affect the reliability of language models from a human perspective.

25 September 2024

50 notes

GENLOSS RAMBLE

Heyo! This is a little ramble I needed to make before the founders cut comes out! yipee!

(GENERATION LOSS SPOILERS)

/

/

/

/

/

/

/

/

/

/

/

/

/

/

/

/

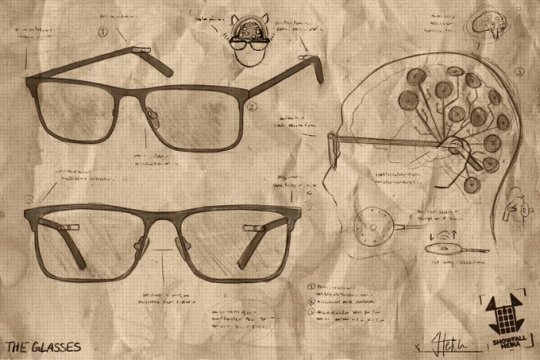

So we can see in the above images the methods Showfall Media is using to control gl!Sneeg, gl!Charlie, and gl!Ranboo: they use an already existing technology called an electroencephalogram (EEG). Now this technology has been in use for decades, and essentially how it works is that electrodes placed onto your scalp, combined with a conductive gel, measure the electrical activity in your brain. These electrical signals are usually referred to as “brain waves,” and these brainwaves can be subdivided into five categories: Gamma (greater than 30 Hz), Beta (13-30 Hz), Alpha (8-12 Hz), Theta (3.5-7.5 Hz), and Delta (0.1-3.5 Hz).
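Just to make the band ranges easier to picture, here's a tiny Python lookup that sorts a frequency into the categories listed above. It's only a sketch: the name `classify_band` is made up, and I'm assuming a frequency sitting exactly on a shared edge (3.5 Hz) goes to the lower band, while values that fall in the gaps between the listed ranges (like 7.8 Hz) return nothing.

```python
# Band edges in Hz, taken from the ranges listed above.
BANDS = [
    ("Delta", 0.1, 3.5),
    ("Theta", 3.5, 7.5),
    ("Alpha", 8.0, 12.0),
    ("Beta", 13.0, 30.0),
]


def classify_band(frequency_hz):
    """Return the brainwave band name for a frequency, or None if unlisted."""
    if frequency_hz > 30.0:
        return "Gamma"
    # Bands are checked lowest first, so a shared edge goes to the lower band.
    for name, low, high in BANDS:
        if low <= frequency_hz <= high:
            return name
    return None


print(classify_band(10.0))  # Alpha
print(classify_band(40.0))  # Gamma
```

A real EEG pipeline would first have to pull these frequencies out of the raw signal, which is the hard part this sketch skips.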

These different brainwaves are generally assosiated with different emotions, awareness levels, brain activities, etc. Now if Showfall Media has installed these onto sneeg, charlie, and ranboo, that means they have access to their thoughts and feelings, but brainscanning isn’t an absolute precise device, it still takes a lot of human effort and time to properly interpret the brainwaves. If Showfall somehow had a tool to easily interpret the signals they could much more easily operate, say, a live show. Lucky for them there is already a real life solution to this problem, kinda.

It's called Brain Generative Pre-Training Transformer, or BrainGPT for short. Its goal is to act as an assistive tool for human neurologists to use in real neuroscience cases and case studies. It uses a Large Language Model (LLM) full of pre-existing human research papers and other neuroscience knowledge too vast for human comprehension. Whenever a neurologist hands BrainGPT a prompt (such as an anomalous finding, or a request to assess the field's understanding of a certain topic), it “would generate likely data patterns reflecting its current synthesis of the scientific literature” (braingpt.org).

Now in regards to Generation Loss, what this means is that Showfall Media potentially has access to this sort of technology, and would be able to use it in the production of their shows. Now BrainGPT has a good way to go before it's widely available. But in the genloss au, it can be far into development at this point, and be available for companies to use in whatever way they see fit.

Now reading and decoding brain signals is one thing, but mind controlling someone is far beyond what is possible today. Showfall Media has somehow developed technology to do so, though. The way I’m guessing they did it is that they produced certain brainwaves from the electrodes on the actors’ heads to give them the emotional reactions they needed for the show. I can’t exactly get into the technical stuff cause I’m not a neurologist, but it's just a hunch on how I think they did it.

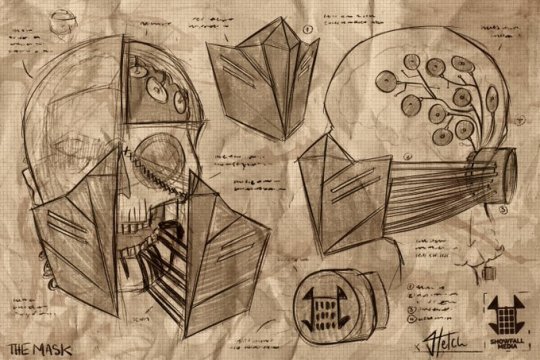

As for the mind controlling devices themselves, I feel there’s a more subtextual reason as to why those objects in particular are chosen as the devices that are central to the show’s operation. Ranboo’s mask has been heavily emphasized throughout Gen 1 TSE. It's been a central figure in not only Generation Loss’ marketing, but also Ranboo’s marketing, because when you think of Ranboo one of the first things that pops up is the mask, at least in the wider public’s eye.

But these general associations don't only exist with Ranboo. With Slimecicle you usually think of the wide-brim glasses, with Sneeg it's his backwards cap, and this goes for the other cast members too when they’re introduced on the spinning carousel in episode 2. Furthermore, with Niki it’s that she's just so nice, with Austin it's that he’s just a gay guy, and with Vinny and Ethan these associations don’t really exist. So, with Vinny he's just the “hoarder”, and Ethan isn't even introduced. And then there's Jerma, who is relegated to a goofy character with a weird voice and a strange sense of humour, which sort of fits his public image.

But what I wanna mention with Ranboo’s mask specifically is that with the three images of the control devices shown on the genloss twitter, Sneeg’s is just a hat, like there's nothing special about it, just a hat with electrodes on it, and when you take it off he’s completely in control of himself. But with Charlie’s it’s a good bit harder to just take it off. His glasses are drilled into his skull and connected to electrodes which are also implanted in his skull, with an additional feature of a speaker in his jaw. If you removed the glasses, there would be a lot of bleeding and his vision would be impaired, but he would still be a free man.

But with Ranboo, poor, poor Ranboo… Like Charlie, they have electrodes implanted onto their brain, connected to a switch on the back of their skull (which may or may not also be connected to their spine, idk it's hard to tell). These sprout wires that thread through the mask and lead into their throat, and the mask piece itself is sewn shut onto their SKIN.

Now this makes me wonder, why is Ranboo so heavily guarded when the others are (relatively) easy to set free? Is it because Ranboo is an integral part of the show and therefore high risk? Is it because Showfall needed extra resources for the chat to be able to control them?... Or is it because Ranboo tried to escape so many times before that they were forced to disfigure them to such an extreme degree? And yet somehow, SOMEHOW, they are able to resist. Whether it be tapping SOS on their hand when they're on full control mode or shanking a Showfall employee with a dagger, Ranboo, Resists. But Showfall will never let them leave. Or they will? Idk founders cut hasn’t come out yet as of writing this, anyway ramble over. You can leave now.

#generation loss#genloss#gl!ranboo#gl!slimecicle#gl!charlie#gl!sneegsnag#ramblings#i wrote this at 3am please help#ranboolive#showfall media#hashtag#Yeah!

73 notes

Text

Something that worries me about schooling in relation to ChatGPT is the ratcheting effect it will have on workload and assignment design.

When I was in high school, I didn’t cheat, but found out later my peers had been using some apps and websites (among other things) to effectively cheat on tests and assignments. The teachers had an inkling that such cheating was going on, and their solution was to make the assignments much more difficult than before to overcompensate for the perceived cheating. My grades didn’t suffer, but only because I ended up putting in way more time than normal for those assignments.

I’m sure other students simply suffered grade losses or got intel from other students on how to effectively cheat. But none of the students who used web clients to write papers or plagiarized answers really saw their grades suffer (other than not being able to answer easy content questions during class and looking stupid).

A more concrete example could also be the relationship between AI being used to write papers vs AI being used to check for cheating.

I was in school before ChatGPT was a thing, but I was still forced by the school to put my papers through plagiarism checkers.

There is no doubt in my mind that my school signed away the rights to my writing content to be used in the large language models that would become iterations of ChatGPT. And I would say the process is still ongoing: whenever you submit a paper to be checked for plagiarism, no matter the service, it is being used to train a large language model somewhere.

Which raises many questions and shows many problems, a significant one being: once a large language model is able to perfectly imitate my writing style, would I ever be able to escape charges of plagiarism or honor-code violations involving the use of ChatGPT? If the plagiarism checkers feed the chatbots, and I feed the plagiarism checker, shouldn’t I be able to escape some culpability if my writing style matches to some degree? Where does that point lie, and can it even be measured?

All of this is also contingent on an even bigger question and that is “Can your average public school teacher even tell what good writing looks like anymore?”

I don’t really know the answers to any of these questions, but I can say that it seems like ChatGPT creates a lot more problems than it solves for an educational environment.

39 notes

Text

Excerpt from this story from Heated:

Energy experts warned only a few years ago that the world had to stop building new fossil fuel projects to preserve a livable climate.

Now, artificial intelligence is driving a rapid expansion of methane gas infrastructure—pipelines and power plants—that experts say could have devastating climate consequences if fully realized.

As large language models like ChatGPT become more sophisticated, experts predict that the nation’s energy demands will grow by a “shocking” 16 percent in the next five years. Tech giants like Amazon, Meta, and Alphabet have increasingly turned to nuclear power plants or large renewable energy projects to power data centers that use as much energy as a small town.

But those cleaner energy sources will not be enough to meet the voracious energy demands of AI, analysts say. To bridge the gap, tech giants and fossil fuel companies are planning to build new gas power plants and pipelines that directly supply data centers. And they increasingly propose keeping those projects separate from the grid, fast tracking gas infrastructure at a speed that can’t be matched by renewables or nuclear.

The growth of AI has been called the “savior” of the gas industry. In Virginia alone, the data center capital of the world, a new state report found that AI demand could add a new 1.5 gigawatt gas plant every two years for 15 consecutive years.

And now, as energy demand for AI rises, oil corporations are planning to build gas plants that specifically serve data centers. Last week, Exxon announced that it is building a large gas plant that will directly supply power to data centers within the next five years. The company claims the gas plant will use technology that captures polluting emissions—despite the fact that the technology has never been used at a commercial scale before.

Chevron also announced that the company is preparing to sell gas to an undisclosed number of data centers. “We're doing some work right now with a number of different people that's not quite ready for prime time, looking at possible solutions to build large-scale power generation,” said CEO Mike Wirth at an Atlantic Council event. The opportunity to sell power to data centers is so promising that even private equity firms are investing billions in building energy infrastructure.

But the companies that will benefit the most from an AI gas boom, according to S&P Global, are pipeline companies. This year, several major U.S. pipeline companies told investors that they were already in talks to connect their sprawling pipeline networks directly to on-site gas power plants at data centers.

“We, frankly, are kind of overwhelmed with the number of requests that we’re dealing with,” Williams CEO Alan Armstrong said on a call with analysts. The pipeline company, which owns the 10,000-mile Transco system, is expanding its existing pipeline network from Virginia to Alabama partly to “provide reliable power where data center growth is expected,” according to Williams.

19 notes

Text

Why there's no intelligence in Artificial Intelligence

You can blame it all on Turing. When Alan Turing invented his mathematical theory of computation, what he really tried to do was to construct a mechanical model for the processes actual mathematicians employ when they prove a mathematical theorem. He was greatly influenced by Kurt Gödel and his incompleteness theorems. Gödel developed a method to encode logical mathematical statements as numbers and in that way was able to manipulate these statements algebraically. After Turing managed to construct a model capable of performing any arbitrary computation process (which we now call "A Universal Turing Machine") he became convinced that he had discovered the way the human mind works. This conviction quickly infected the scientific community and became so ubiquitous that for many years it was rare to find someone who argued differently, except on religious grounds.

There was a good reason for adopting the hypothesis that the mind is a computation machine. This premise was following the extremely successful paradigm stating that biology is physics (or, to be precise, biology is both physics and chemistry, and chemistry is physics), which reigned supreme over scientific research since the eighteenth century. It was already responsible for the immense progress that completely transformed modern biology, biochemistry, and medicine. Turing seemed to supply a solution, within this theoretical framework, for the last large piece in the puzzle. There was now a purely mechanistic model for the way brain operation yields all the complex repertoire of human (and animal) behavior.

Obviously, not every computation machine is capable of intelligent conscious thought. So, where do we draw the line? For instance, at what point can we say that a program running on a computer understands English? Turing provided a purely behavioristic test: a computation understands a language if by conversing with it we cannot distinguish it from a human.

This is quite a silly test, really. It doesn't provide any clue as to what actually happens within the artificial "mind"; it assumes that the external behavior of an entity completely encapsulates its internal state; it requires "man in the loop" to provide the final ruling; it does not state for how long and on what level should this conversation be held. Such a test may serve as a pragmatic common-sense method to filter out obvious failures, but it brings us not an ounce closer to understanding conscious thinking.

Still, the Turing Test stuck. If anyone tried to question the computational model of the mind, he was then confronted with the unavoidable question: what else can it be? After all, biology is physics, and therefore the brain is just a physical machine. Physics is governed by equations, which are all, in theory, computable (at least approximately, with errors being as small as one wishes). So, short of conjuring a supernatural soul that magically produces a conscious mind out of biological matter, there can be no other solution.

Nevertheless, not everyone conformed to the new dogma. There were two tiers of reservations to computational Artificial Intelligence. The first, maintained, for example, by the philosopher John Searle, didn't object to the idea that a computation device may, in principle, emulate any human intellectual capability. However, claimed Searle, a simulation of a conscious mind is not conscious in itself.

To demonstrate this point Searle envisioned a person who doesn't know a single word in Chinese, sitting in a secluded room. He receives Chinese texts from the outside through a small window and is expected to return responses in Chinese. To do that he uses written manuals that contain the AI algorithm which incorporates a comprehensive understanding of the Chinese language. Therefore, a person fluent in Chinese who converses with the "room" shall deduce, based on the Turing Test, that it understands the language. However, in fact there's no one there but a man using a printed recipe to convert an input message he doesn't understand to an output message he doesn't understand. So, who in the room understands Chinese?

The next tier of opposition to computationalism was maintained by the renowned physicist and mathematician Roger Penrose, claiming that the mind has capabilities which no computational process can reproduce. Penrose considered a computational process that imitates a human mathematician. It analyses mathematical conjecture of a certain type and tries to deduce the answer to that problem. To arrive at a correct answer the process must employ valid logical inferences. The quality of such computerized mathematician is measured by the scope of problems it can solve.

What Penrose proved is that such a process can never verify in any logically valid way that its own processing procedures represent valid logical deductions. In fact, if it assumes, as part of its knowledge base, that its own operations are necessarily logically valid, then this assumption makes them invalid. In other words, a computational machine cannot be simultaneously logically rigorous and aware of being logically rigorous.

A human mathematician, on the other hand, is aware of his mental processes and can verify for himself that he is making correct deductions. This is actually an essential part of his profession. It follows that, at least with respect to mathematicians, cognitive functions cannot be replicated computationally.

Neither Searle's position nor Penrose's was accepted by the mainstream, mainly because, if not computation, "what else can it be?". Penrose's suggestion that mental processes involve quantum effects was rejected offhandedly, as "trying to explicate one mystery by swapping it with another mystery". And the macroscopic hot, noisy brain seemed a very implausible place to look for quantum phenomena, which typically occur in microscopic, cold and isolated systems.

Fast forward several decades. Finally, it seemed as though the vision of true Artificial Intelligence technology had started bearing fruit. A class of algorithms termed Deep Neural Networks (DNN) achieved, at last, some human-like capabilities. They managed to identify specific objects in pictures and videos, generate photorealistic images, translate voice to text, and support a wide variety of other pattern recognition and generation tasks. Most impressively, they seemed to have mastered natural language and could partake in an advanced discourse. The triumph of computational AI appeared more feasible than ever. Or was it?

During my years as an undergraduate and graduate student I sometimes met fellow students who, at first impression, appeared to be far more conversant in the subject matter of the academic courses than me. They were highly confident and knew a great deal about things that were only briefly discussed in lectures. Therefore, I was vastly surprised when it turned out they were not particularly good students, and that they usually scored worse than me in the exams. It took me some time to realize that these people hadn't really possessed a better understanding of the curricula. They just adopted the correct jargon, employed the right words, so that, to the layperson's ears, they sounded as if they knew what they were talking about.

I was reminded of these charlatans when I encountered natural language AIs such as ChatGPT. At first glance, their conversational abilities seem impressive – fluent, elegant and decisive. Their style is perfect. However, as you delve deeper, you encounter all kinds of weird assertions and even completely bogus statements, uttered with absolute confidence. Whenever their knowledge base is incomplete, they just fill the gap with fictional "facts". And they can't distinguish between different levels of source credibility. They're like Idiot Savants – superficially bright, inherently stupid.

What confuses so many people with regard to AIs is that they seem to pass the (purely behavioristic) Turing Test. But behaviorism is a fundamentally non-scientific viewpoint. At the core, computational AIs are nothing but algorithms that generate a large number of statistical heuristics from enormous data sets.

There is an old anecdote about a classification AI that was supposed to distinguish between friendly and enemy tanks. Although the AI performed well with respect to the database, it failed miserably in field tests. Finally, the developers figured out the source of the problem. Most of the friendly tanks' images in the database were taken during good weather and with fine lighting conditions. The enemy tanks were mostly photographed in cloudy, darker weather. The AI simply learned to identify the environmental condition.
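The failure mode in this anecdote can be reproduced with a toy example. The sketch below is purely illustrative: the data are synthetic, the two "features" (image brightness and a shape cue that is right four times out of five) are invented for the demonstration, and the "learner" just picks whichever single feature scores best on the training set.

```python
def make_examples(n, weather_matches_label):
    """Build synthetic (brightness, shape_cue, label) tuples; label 1 = friendly."""
    examples = []
    for i in range(n):
        label = i % 2
        # The shape cue is a genuine but imperfect signal: wrong once in five.
        shape_cue = label if i % 5 != 0 else 1 - label
        if weather_matches_label:
            sunny = label            # database: friendly tanks shot in good weather
        else:
            sunny = (i // 2) % 2     # field: weather unrelated to the label
        brightness = 0.8 if sunny else 0.2
        examples.append((brightness, shape_cue, label))
    return examples


def predict_by_brightness(example):
    return 1 if example[0] > 0.5 else 0


def predict_by_shape(example):
    return example[1]


def accuracy(predict, examples):
    return sum(predict(e) == e[2] for e in examples) / len(examples)


train = make_examples(100, weather_matches_label=True)
field = make_examples(100, weather_matches_label=False)

candidates = {"brightness": predict_by_brightness, "shape": predict_by_shape}
# "Training": keep the rule that looks best on the database.
best_name = max(candidates, key=lambda name: accuracy(candidates[name], train))
train_acc = accuracy(candidates[best_name], train)
field_acc = accuracy(candidates[best_name], field)

print(best_name, train_acc, field_acc)
```

On the database the brightness rule looks perfect, so it wins; in the field, where weather no longer tracks the label, it is no better than a coin flip, while the ignored shape cue would have kept its accuracy.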

Though this specific anecdote is probably an urban legend, it illustrates the fact that AIs don't really know what they're doing. Therefore, attributing intelligence to Artificial Intelligence algorithms is a misconception. Intelligence is not the application of a complicated recipe to data. Rather, it is a self-critical analysis that generates meaning from input. Moreover, because intelligence requires not only understanding of the data and its internal structure, but also inner-understanding of the thought processes that generate this understanding, as well as an inner-understanding of this inner-understanding (and so forth), it can never be implemented using a finite set of rules. There is something of the infinite in true intelligence and in any type of conscious thought.

But, if not computation, "what else can it be?". The substantial progress made in quantum theory and quantum computation revived the old hypothesis by Penrose that the working of the mind is tightly coupled to the quantum nature of the brain. What had been previously regarded as esoteric and outlandish suddenly became, in light of recent advancements, a relevant option.

During the last thirty years, quantum computation has been transformed from a rather abstract idea proposed by the physicist Richard Feynman into an operational technology. Several quantum algorithms were shown to have a fundamental advantage over the best known classical algorithms. Some tasks that are extremely hard to fulfil through standard computation (for example, factorization of integers into primes) are easy to achieve quantum mechanically. Note that this difference between hard and easy is qualitative rather than quantitative. It's independent of which hardware and how many resources we dedicate to such tasks.

Along with the advancements in quantum computation came a surging realization that quantum theory is still an incomplete description of nature, and that many quantum effects cannot be really resolved from a conventional materialistic viewpoint. This understanding was first formalized by John Stewart Bell in the 1960s and later on expanded by many other physicists. It is now clear that by accepting quantum mechanics, we have to abandon at least some deep-rooted philosophical perceptions. And it became even more conceivable that any comprehensive understanding of the physical world should incorporate a theory of the mind that experiences it. It only stands to reason that, if the human mind is an essential component of a complete quantum theory, then the quantum is an essential component of the workings of the mind. If that's the case, then it's clear that a classical algorithm, sophisticated as it may be, can never achieve true intelligence. It lacks an essential physical ingredient that is vital for conscious, intelligent thinking. Trying to simulate such thinking computationally is like trying to build a Perpetuum Mobile or chemically transmute lead into gold. You might discover all sorts of useful things along the way, but you would never reach your intended goal. Computational AIs shall never gain true intelligence. In that respect, this technology is a dead end.

#physics#ai#artificial intelligence#Alan Turing#computation#science#quantum physics#mind and body#John Searl#Roger Penrose

20 notes

Text

youtube

interesting video about why scaling up neural nets doesn't just overfit and memorise more, contrary to classical machine learning theory.

the claim, termed the 'lottery ticket hypothesis', starts from the observation that you can 'prune' most of the weights from a large network without completely destroying its inference ability. from that it infers that training a big network is effectively optimising loads of small subnetworks simultaneously, and some of them will happen to be initialised in the right part of the solution space to find a global rather than local minimum when they do gradient descent. these types of solutions are found quicker than memorisation would be, and most of the other weights straight up do nothing. this also presumably goes some way to explaining why quantisation works.
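a minimal sketch of what 'pruning' means here, assuming simple magnitude pruning on a single made-up weight vector (the numbers and the function name `magnitude_prune` are illustrative only). note this shows just the pruning step; the lottery ticket experiments also retrain the surviving weights from their original initialisation, which is not shown.

```python
def magnitude_prune(weights, fraction):
    """Return a copy of weights with the smallest-magnitude fraction set to zero."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def dot(weights, inputs):
    return sum(w * x for w, x in zip(weights, inputs))


# Toy "layer": two weights carry almost all of the signal.
weights = [2.0, -0.01, 0.02, -1.5, 0.005, 0.03, -0.02, 0.01]
inputs = [1.0, 2.0, -1.0, 0.5, 3.0, -2.0, 1.0, 1.0]

pruned = magnitude_prune(weights, 0.75)
dense_out = dot(weights, inputs)
sparse_out = dot(pruned, inputs)

print(pruned)
print(dense_out, sparse_out)
```

with 75% of the weights zeroed the output only shifts slightly, which is the kind of redundancy the hypothesis is trying to explain.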

presumably it's then possible that with a more complex composite task like predicting all of language ever, a model could learn multiple 'subnetworks' that are optimal solutions for different parts of the problem space and a means to select into them. the conclusion at the end, where he speculates that the kolmogorov-simplest model for language would be to fully simulate a brain, feels like a bit of a reach but the rest of the video is interesting. anyway it seems like if i dig into pruning a little more, it leads to a bunch of other papers since the original 2018 one, for example, so it seems to be an area of active research.

hopefully further reason to think that high performing model sizes might come down and be more RAM-friendly, although it won't do much to help with training cost.

10 notes
