#Learn Computer Networking

Text

We need to talk about AI

Okay, several people asked me to post about this, so I guess I am going to post about this. Or to say it differently: Hey, for once I am posting about the stuff I am actually doing for university. Woohoo!

Because here is the issue. We are kinda suffering a death of nuance right now, when it comes to the topic of AI.

I understand why this is happening (basically everyone wanting to market anything is calling it AI, even though it is often a thousand different things), but it is a problem.

So, let's talk about "AI", that isn't actually intelligent, what the term means right now, what it is, what it isn't, and why it is not always bad. I am trying to be short, alright?

So, right now when anyone says they are using AI, they mean that they are using a program that functions based on what computer nerds call "a neural network" through a process called "deep learning" or "machine learning" (yes, those terms mean slightly different things, but frankly, you really do not need to know the details).

Now, the theory for this has been around since the 1940s! The idea had always been to create calculation nodes that mirror the way neurons in the human brain work. That looks kinda like this:

Basically, there are input nodes, in which you put some data. Those do some transformations that kinda depend on the kind of thing you want to train it for, and in the end a number comes out that the program then "remembers". I could explain the details, but your eyes would glaze over the same way everyone's eyes glaze over in the class I have on this every Friday afternoon.

All you need to know: You put in some sort of data (that can be text, math, pictures, audio, whatever), the computer does magic math, and then it gets a number that has a meaning to it.
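If you want to see the "magic math" once without the eye-glazing part, here is a minimal sketch of data going in and a single number coming out. The weights are made up for illustration (a real network learns them during training), and the names `neuron` and `tiny_network` are just placeholders.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, squashed into the range 0..1 by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def tiny_network(inputs):
    # Hypothetical, hand-picked weights; a real network learns these in training.
    hidden = [
        neuron(inputs, [0.5, -0.25], 0.0),
        neuron(inputs, [-0.75, 1.0], 0.1),
    ]
    # The output node combines the two hidden nodes into one number.
    return neuron(hidden, [1.0, -1.0], 0.0)

score = tiny_network([1.0, 2.0])
print(round(score, 3))
```

What that number means (sick fish or healthy fish, cat or dog) depends entirely on what the network was trained for.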

And we actually have been using this since the 80s in some way. If any Digimon fans are here: there is a reason the digital world in Digimon Tamers was created in Stanford in the 80s. This was studied there.

But if it was around so long, why am I hearing so much about it now?

This is a good question, hypothetical reader. The very short answer is: some super-nerds found a way to make this work way, way better in 2012, and from that work (which was then called Deep Learning in Artificial Neural Networks, short ANN) we got basically everything that TechBros have not shut up about for the last like ten years. Including "AI".

Now, most things you think about when you hear "AI" are some form of generative AI. Usually it will use some form of an LLM, a Large Language Model, to process text, and a method called diffusion (Stable Diffusion is the best-known model of that kind) to create visuals. (Tbh, I have no clue what method audio generation uses, as the only audio AI I have so far looked into was based on wolf howls.)

LLMs were like this big, big breakthrough, because they actually appear to comprehend natural language. They don't, of course, as to them words and phrases are just statistical variables. Some researchers also call them "stochastic parrots". But of course our dumb human brains love to anthropomorphize shit. So they go: "It makes human words. It gotta be human!"

It is a whole thing.

It does not understand or grasp language. But the mathematics behind it will basically create a statistical analysis of all the words and then create a likely answer.
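To make "statistical analysis of all the words" a bit more concrete, here is a toy sketch. Big caveat: this is a simple word-pair counter (a bigram model), not how a real LLM works internally. Real LLMs use huge neural networks over tokens. But the core idea of "pick a likely next word based on what was seen before" is the same, and the tiny corpus here is made up.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Made-up training text, purely for illustration.
corpus = "the fish swims . the fish eats . the wolf howls ."
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))
```

The model has no idea what a fish is. It only knows that "fish" followed "the" more often than "wolf" did.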

What you have to understand, however, is that LLMs and Stable Diffusion are just a tiny minority of the use cases for ANNs. Because research right now is starting to use ANNs for EVERYTHING. Some also partially using Stable Diffusion and LLMs, but not to take away people's jobs.

Which is probably the place where I will share what I have been doing recently with AI.

The stuff I am doing with Neural Networks

The neat thing: if a Neural Network is Open Source, it is surprisingly easy to work with it. Last year when I started with this I was so intimidated, but frankly, I will confidently say now: As someone who has been working with computers for like more than 10 years, this is easier programming than most shit I did to organize databases. So, during this last year I did three things with AI. One for a university research project, one for my work, and one because I find it interesting.

The university research project trained an AI to watch video live streams of our biology department's fish tanks, analyse the behavior of the fish and notify someone if a fish showed signs of being sick. We used an AI named "YOLO" for this, which is very good at analyzing pictures, though the base framework did not know anything about stuff that does not live on land. So we needed to teach it what a fish was, how to analyze videos (as the base framework can only look at single pictures) and then we needed to teach it how fish were supposed to behave. We still managed to get that whole thing working in about 5 months. So... Yeah. But nobody can watch hundreds of fish all the time, so without this, those fish will just die if something is wrong.

The second is for my work. For this I used a really old OCR engine called Tesseract. This was originally developed at Hewlett-Packard ages ago. And I mean ages: it goes back to the 1980s, and Google later sponsored it as an open source project. The newer versions use a neural network for the actual recognition, and all it does is OCR. OCR being "optical character recognition". Aka: if you give it a picture of writing, it can read that writing. My work has the issue that we have tons and tons of old paperwork that has been scanned and needs to be digitized into a database. But everyone who was hired to do this manually found this mind-numbing. Just imagine doing this all day: take a contract, look up certain data, fill it into a table, put the contract away, take the next contract and do the same. Thousands of contracts, 8 hours a day. Nobody wants to do that. Our company has been using another OCR software for this. But that one was super expensive. So I was asked if I could build something to do that. So I did. And this was so ridiculously easy, it took me three weeks. And it actually has a higher success rate than the expensive software before.
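For the curious, here is a rough sketch of the "look up certain data, fill it into a table" step. This is not my actual work code. It assumes the OCR engine has already turned the scan into plain text, and the field names, patterns and sample contract are all invented for illustration.

```python
import csv
import io
import re

# Hypothetical field patterns; real contracts need patterns fitted to their layout.
FIELD_PATTERNS = {
    "contract_no": re.compile(r"Contract\s+No\.?\s*:?\s*(\S+)", re.IGNORECASE),
    "date": re.compile(r"Date\s*:?\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE),
    "customer": re.compile(r"Customer\s*:\s*(.+)", re.IGNORECASE),
}

def extract_fields(ocr_text):
    """Pull the wanted fields out of text that an OCR engine already produced."""
    row = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        # Missing fields stay empty so a human can check them later.
        row[name] = match.group(1).strip() if match else ""
    return row

def to_csv(rows):
    """Write the extracted rows as CSV text, one line per contract."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(FIELD_PATTERNS))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

# Stand-in for OCR output; in practice this string would come from the OCR step.
sample = "Contract No.: A-1042\nDate: 1998-03-17\nCustomer: Example GmbH\n"
rows = [extract_fields(sample)]
print(to_csv(rows))
```

The OCR does the reading, and boring pattern matching like this does the table filling. Rows with empty fields are the ones a human should still look at.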

Lastly there is the one I am doing right now, and this one is a bit more complex. See: we have tons and tons of historical shit that has never been translated. Be it papyri, stone tablets, letters, manuscripts, whatever. And right now I am using Tesseract, which by now is open source, and developing it further to allow it to read handwritten stuff and completely different letters than what it knows so far. I plan to hook it up, once it can reliably do the OCR, to an LLM to then translate those texts. Because here is the thing: these things have not been translated because there are just not enough people speaking those old languages. Which leads to people going like: "GASP! We found this super important document that actually shows things from the ancient world we wanted to know forever, and it was lying in our collection collecting dust for 90 years!" I am not the only person who has this idea, and yeah, I just hope maybe we can in the next few years get something going to help historians and archeologists to do their work.

Make no mistake: ANNs are saving lives right now

Here is the thing: ANNs and Deep Learning are saving lives right now. I really cannot stress enough how quickly this technology has become incredibly important in fields like biology and medicine to analyze data and predict outcomes in a way that a human just never would be capable of.

I saw a post yesterday saying "AI" can never be a part of Solarpunk. I will heavily disagree on that. Solarpunk, for example, would need the help of AI for a lot of stuff, as it can help us deal with ecological things, might be able to predict weather in ways we are not capable of, will help with medicine, with plants and so many other things.

ANNs are a good thing in general. And yes, they might also be used for some just fun things in general.

And for things that we may not need to know, but that would be fun to know. Like, I mentioned above: the only audio research I read through was based on wolf howls. Basically there is a group of researchers trying to understand wolves and they are using AI to analyze the howling and grunting and find patterns in there which humans are not capable of finding due to human bias. So maybe AI will help us understand some animals at some point.

Heck, we have seen so far that some LLMs have been capable of extrapolating on their own from being taught one version of a language to just automatically understanding another version of it. Like going from modern English to Old English and such. Which is why some researchers wonder if it might actually be able to understand languages that were never deciphered.

All of that is interesting and fascinating.

Again, the generative stuff is a very, very minute part of what AI is being used for.

Yeah, but WHAT ABOUT the generative stuff?

So, let's talk about the generative stuff. Because I kinda hate it, but I also understand that there is a big issue.

If you know me, you know how much I freaking love the creative industry. If I had more money, I would just throw it all at all those amazing creative people online. I mean, fuck! I adore y'all!

And I do think that basically art fully created by AI is lacking the human "heart" - or to phrase it more artistically: it is lacking the chemical imbalances that make a human human lol. Same goes for writing. After all, an AI is actually incapable of creating a complex plot and all of that. And even if we managed to train it to do it, I don't think it should.

AI saving lives = good.

AI doing the shit humans actually evolved to do = bad.

And I also think that people who just do the "AI Art/Writing" shit are lazy and need to just put in work to learn the skill. Meh.

However...

I do think that these forms of AI can have a place in the creative process. There are people creating works of art that use some assets created with genAI but still putting in hours and hours of work on their own. And given that collages are legal to create - I do not see how this is meaningfully different. If you can take someone else's artwork as part of a collage legally, you can also take some art created by AI trained on someone else's art legally for the collage.

And then there is also the thing... Look, right now there is a lot of crunch in a lot of creative industries, and a lot of the work is not the fun creative kind, but the annoying creative kind that nobody actually enjoys and still eats hours and hours before deadlines. Swen the Man (the Larian boss) spoke about that recently: how mocapping often created some artifacts where the computer stuff used to record it (which already is done partially by an algorithm) gets janky. So far this was cleaned up by humans, and it is shitty brain numbing work most people hate. You can train AI to do this.

And I am going to assume that in normal 2D animation there are also more than enough clean up steps and such that nobody actually likes to do, where AI can just help to prevent crunch. Same goes for like those overworked souls doing movie VFX, who have worked 80 hour weeks for the last 5 years. In movie VFX we just do not have enough workers. This is a fact. So, yeah, if we can help those people out: great.

If this is all directed by a human vision and just helping out to make certain processes easier? It is fine.

However, something that is just 100% AI? That is dumb and sucks. And it sucks even more that people's fanart, fanfics, and also commercial work online got stolen for it.

And yet... Yeah, I am sorry, I am afraid I have to join the camp of: "I am afraid criminalizing taking the training data is a really bad idea." Because yeah... It is fucking shitty how Facebook, Microsoft, Google, OpenAI and whatever are using this stolen data to create programs to make themselves richer and what not, while not even making their models open source. BUT... If we outlawed it, the only people being capable of even creating such algorithms that absolutely can help in some processes would be big media corporations that already own a ton of data for training (so basically Disney, Warner and Universal) who would then get a monopoly. And that would actually be a bad thing. So, like... both variations suck. There is no good solution, I am afraid.

And mind you, Disney, Warner, and Universal would still not pay their artists for it. lol

However, that does not mean, you should not bully the companies who are using this stolen data right now without making their models open source! And also please, please bully Hasbro and Riot and whoever for using AI Art in their merchandise. Bully them hard. They have a lot of money and they deserve to be bullied!

But yeah. Generally speaking: Please, please, as I will always say... inform yourself on these topics. Do not hate on stuff without understanding what it actually is. Most topics in life are nuanced. Not all. But many.

#computer science#artifical intelligence#neural network#artifical neural network#ann#deep learning#ai#large language model#science#research#nuance#explanation#opinion#text post#ai explained#solarpunk#cyberpunk

Text

#a.b.e.l#divine machinery#archangel#automated#behavioral#ecosystem#learning#divine#machinery#ai#artificial intelligence#divinemachinery#angels#guardian angel#angel#robot#android#computer#computer boy#neural network#sentient objects#sentient ai

Text

imagining ur comfort character with you doesnt have to be emotional or serious sometimes youre dragging a warhammer character into studying for a computer science exam

#ramblings#WHYD I REALISE I CAN JUST TALK TO THE LITTLE GUYS IN MY HEAD ABOUT THIS#THE DAY OF THE EXAM#MY TEACHER SAYS 'oh just practice in front of a mirror' BUT I HATE THAT BECAUSE ITS EMBARRASSIGN#I TALK TO MY GUYS ALL THE TIMEEEEE#me: okay so dhcp is a protocol that automatically assigns ip addresses to devices that enter its network so you dont have to do it manually#the warhammer blorbos (visibly shaking): WHAT ARE YOU TALKING ABOUTTTT#me: and computers are kind of stupid (dont tell the admech) and communicate differently from us#they work with ips while we prefer domain names so we have dns to make sure we connect to the right ip addresses when we use domain names#them: WHAT. WHAT#i love my little dystopian fantasy fantasy guys masquerading as scifi imagining them learning about networking is incredible
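for the blorbos who are still shaking: here is a toy sketch of the two ideas in those tags. this is a pretend dhcp server and a pretend dns table, not real network code (no packets are sent), and every class name, address and domain in it is made up for illustration.

```python
import ipaddress

class ToyDhcpServer:
    """Hands out free addresses from a pool, one lease per device (MAC address)."""

    def __init__(self, network, first_host=10, last_host=20):
        hosts = list(ipaddress.ip_network(network).hosts())
        # Only a slice of the network is used as the lease pool.
        self.free = hosts[first_host - 1:last_host]
        self.leases = {}

    def request(self, mac):
        # A device that already has a lease gets the same address back.
        if mac not in self.leases:
            if not self.free:
                raise RuntimeError("address pool exhausted")
            self.leases[mac] = self.free.pop(0)
        return self.leases[mac]

class ToyDns:
    """Maps domain names to IP addresses, like a very small zone file."""

    def __init__(self):
        self.records = {}

    def add(self, name, ip):
        self.records[name.lower()] = ipaddress.ip_address(ip)

    def resolve(self, name):
        # Returns None when the name is unknown.
        return self.records.get(name.lower())

dhcp = ToyDhcpServer("192.168.1.0/24")
laptop_ip = dhcp.request("aa:bb:cc:dd:ee:01")
dns = ToyDns()
dns.add("printer.home.example", "192.168.1.5")
print(laptop_ip, dns.resolve("printer.home.example"))
```

dhcp answers "which address do i get?", dns answers "which address does this name mean?". the real protocols add leases that expire, caching and a lot of message passing on top.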

Text

The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of this elegance. For instance, gradient descent, a fundamental algorithm used in machine learning, is a powerful example of how math can be used to optimize model parameters.
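As a minimal sketch of the gradient descent idea mentioned above: start somewhere, repeatedly take a small step against the gradient, and end up near a minimum. The loss function, learning rate, and step count here are illustrative choices, not taken from the book or the interview.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to move toward a minimum."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x

# Toy loss f(x) = (x - 3)^2, whose gradient is 2 * (x - 3) and whose minimum is at x = 3.
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)
print(round(minimum, 4))
```

In a real model, `x` would be millions of parameters and the gradient would come from backpropagation, but the update rule has the same shape.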

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in various applications, from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us to evaluate AI systems more effectively, develop more transparent and explainable AI systems, address AI bias, and ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)

Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)

Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures in sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which allows the model to weigh the importance of different words in the input data irrespective of their positions in the sentence. This is particularly useful for capturing long-range dependencies in text, which was a challenge for RNNs due to vanishing gradients.

Transformers have become the standard for machine translation tasks, offering state-of-the-art results in translating between languages. They are used for both abstractive and extractive summarization, generating concise summaries of long documents. Transformers help in understanding the context of questions and identifying relevant answers from a given text. By analyzing the context and nuances of language, transformers can accurately determine the sentiment behind text.

While initially designed for sequential data, variants of transformers (e.g., Vision Transformers, ViT) have been successfully applied to image recognition tasks, treating images as sequences of patches. Transformers are used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data. The self-attention mechanism can be beneficial for understanding patterns in time series data, leading to more accurate forecasts.
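The self-attention mechanism described above can be sketched in a few lines. This is a simplified illustration of scaled dot-product attention, softmax(QKᵀ/√d_k)V, under one stated assumption: the learned projection matrices that a real transformer uses to produce queries, keys, and values are skipped, so the same made-up token vectors serve as all three.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        # Each output is a weighted average of all value vectors.
        outputs.append([
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ])
    return outputs

# Three made-up 2-dimensional token vectors, used as Q, K and V at once.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
print(attended)
```

Every token attends to every other token regardless of distance, which is why position does not limit what the model can relate.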

Attention is all you need (Umar Jamil, May 2023)

Geometric deep learning is a subfield of deep learning that focuses on the study of geometric structures and their representation in data. This field has gained significant attention in recent years.

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)

Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)

Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture

Text

Day 19/100 days of productivity | Fri 8 Mar, 2024

Visited University of Connecticut, very pretty campus

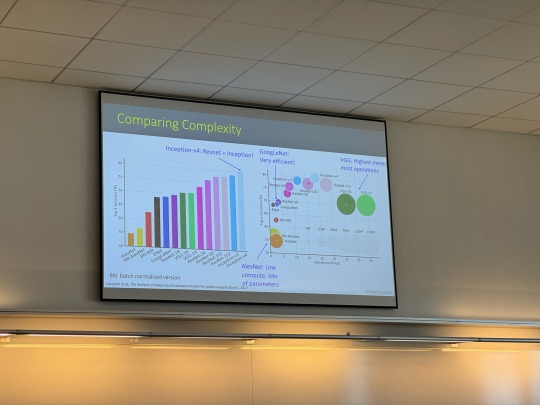

Attended a class on Computer Vision, learned about ResNet, which is a type of residual neural network for image processing

Learned more about the grad program and networked

Journaled about my experience

Y’all, UConn is so cool! I was blown away by the gigantic stadium they have in the middle of campus (forgot to take a picture) for their basketball games, because apparently they have the best women’s collegiate basketball team in the US?!? I did not know this, but they call themselves Huskies, and the branding everywhere on campus is on point.

#100 days of productivity#grad school#computer vision#google resnet#neural network#deep learning#UConn#uconn huskies#uconn women’s basketball#university of Connecticut

Text

I do find it so funny that I will graduate college days away from my birthday. Like my birthday is literally in between the end of the semester ("graduation") and commencement

It really will be like a joint graduation & birthday party for me lmao

#speculation nation#i dont really do birthday parties anymore. havent in a long time. mostly just go out and do smth fun around my bday. ya kno#also have cake but like not in a party way. just like. here's cake lol#but im probably only gonna graduate from college once. which means i might as well live it up and all.#invite all sorts of extended family and people who have known me. etc etc.#actually it just kinda sunk in that i am. Computer and Information Technology (Systems Analysis and Design focus) w a minor in Communication#like those are words. it's a lot of words but actually it really is pretty accurate?? like that's indeed what ive been studying.#now how much i *remember* is another question. considering how long ive taken to get thru school lol#but that's what people will see on my degree. that's my Thing. graduated in Computer Systems and Talking.#idk it's just weird to have spent so much of my life on this and like That's the culmination. it took so much work.#even beyond a normal 4 years. i switched my major *twice*. switched my minor too.#first year engineering to undecided liberal arts (as a temp major trying to switch to computer science bc i couldnt stay in FYE)#but then computer science sucked so i switched to trying to get into computer & info tech. which is different. and better.#and ive been in it long enough now that ive kinda forgotten but it did take some fuckin work to switch into it.#like i had to take certain classes first & i couldnt take them during the semesters that in-major students would take them#and i had to have my gpa up to a certain level etc etc. so many hoops to jump thru. i think it took me at least a year. or more. idr#but i made it in and thats my major. thats my thing. computers and information systems and communication.#doesnt FEEL like im an almost-graduate. but then i think about all the things ive taken and learned.#and maybe i dont remember a lot of the more specific things from these classes. 
but i took core lessons away from each one.#wont be able to recite the theories but i can live them. and thats the point of an education i guess.#anyways im gonna have to start job searching before too long and eughhbb. need to get my license first tho probably.#which i will... i will.... i have so many things to deal with... my life will be So Different in a year...#it will require me to put in the work now. but i can do it. and then a year from now. i'll hopefully be in a better spot.#living somewhere else. graduated from college. with a license and a car. maybe even an IT job of some kind.#kind of scared of trying to find a Big Boy Job. aka a job that requires a degree and networking and all that shit.#rather than just showing up and being like Hi i can do this job. i am not a total drain of a person. hire me please 👍#hfkahfks so many things to think about. and through it all i am still dealing with DEADLINES...!!!!#but yeah this is why my writing has largely been put on hold. idk i have a lot of things im dealing with rn.

Text

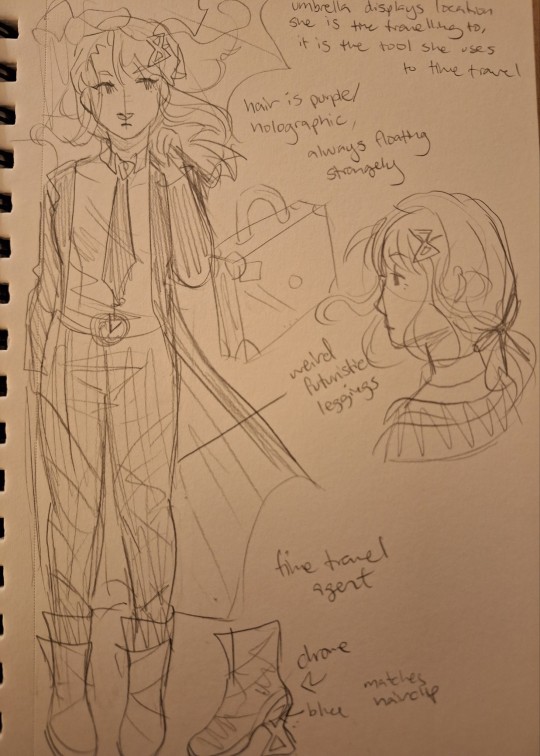

drawing my time traveler character bc she was the only good thing to come out of my concept art/3d modeling class (i learned nothing about character design or 3d modeling and this character was the only assignment actually about character design that we did (i did my senior project on character design and learned way more about it than a whole semester long class that was supposed to teach me it))

im also going insane trying to track down the shoes i used for inspiration for hers but alas i cant find them

#my art#original character#oc#uh she still doesn't have a name but eh#also i really wish i couldve kept the original photoshop file of her but when i tried to move it into my google drive it wouldnt let me :(#mustve been something with the school network or something but still#god even though ive graduated already and dont have to deal with that class anymore i still wish i never took it#the teacher did not teach very well and that class was soul sucking to be in (it also didnt help that we had block schedule so it was a#2 hour class)#giving us old pdfs on learning maya from 2011.... making us copy some other guys drawing but not really in a way to learn from him or his#character design...#dumping her family issues on literally everyone who came into the class (i had to listen to this all the time bc i sat at the front)#i mean at least the teacher liked me i guess but that didnt help the class like. at all.#digital drawing for concept art / 3d modeling my beloathed#anyways for this assignment specifically (the time traveler)#she gave us a book to look at with. character design stuff? i think? and the page we were looking at had some time travel agent woman#concept art on it#that design was really dumb looking imo but it was also probably pretty early concept art for a game so i dont blame it much#it was some generic hot woman with long platinum blonde hair (described as strange despite it not being strange at all)#and wearing a suit that conveniently showed cleavage and had a thigh slit on her skirt#she was holding some old ass briefcase and one of those plastic umbrellas with polka dots on it (the umbrella was her time travel device or#whatever)#the teacher told us we had to make a time traveler so i set out to yassify and transify this design a bit#i think the only sort of character design tip we learned during this whole like. 
month we worked on this for was to make a moodboard of#our ideas#but eh i still really like the design i made and i was able to get nice and creative with ut#just wish i was able to save it on my own computer and not the school computer :(#2023#oc tag

Text

"we should stop calling it artificial intelligence bec" please for the love of god go look up why gofai was originally called artificial intelligence back in like the 60s. nobody is claiming pathfinding algorithms are self-aware, they're just closer representations of entire real-world tasks than specific information processing or control software FUCK

#we already had this ''discussion'' when computation first turned up and everyone looks stupid talking about that in hindsight#metaphors coming from human cognition predate technical vocab by a long fucking way#and nobody is forcing you to call deep learning ''ai''#there are so many other terms that even half an hour of googling will teach you#try ''neuromorphic computation'' or ''recurrent neural networks'' or better yet: stop posting

Text

I will also note that the Tech Guild specifically chose not to ask people to refrain from accessing the actual news, in particular political news.

(Source)

"The Tech Guild is asking readers to honor the digital picket line and not play popular NYT Games such as Wordle and Connections as well as not use the NYT Cooking app."

#tumblr news network#i actually learned this on bluesky earlier but that's because i'll check twitter and bluesky on my phone during work#but i only get on tumblr on desktop and i don't get on my personal computer during work time#anyway#don't cross the picket line#union strong#labor rights

Text

Deep Learning, Deconstructed: A Physics-Informed Perspective on AI’s Inner Workings

Dr. Yasaman Bahri’s seminar offers a profound glimpse into the complexities of deep learning, merging empirical successes with theoretical foundations. Dr. Bahri’s distinct background, weaving together statistical physics, machine learning, and condensed matter physics, uniquely positions her to dissect the intricacies of deep neural networks. Her journey from a physics-centric PhD at UC Berkeley, influenced by computer science seminars, exemplifies the burgeoning synergy between physics and machine learning, underscoring the value of interdisciplinary approaches in elucidating deep learning’s mysteries.

At the heart of Dr. Bahri’s research lies the intriguing equivalence between neural networks and Gaussian processes in the infinite width limit, facilitated by the Central Limit Theorem. Because each output of a randomly initialized network is a sum over many hidden units, the theorem implies that the distribution of outputs approaches a Gaussian as the width of the network increases, which provides a probabilistic framework for understanding neural network behavior. The derivation of Gaussian processes from various neural network architectures not only yields state-of-the-art kernels but also sheds light on the dynamics of optimization, enabling more precise predictions of model performance.
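The Central Limit Theorem argument above can be checked with a small simulation. This is a sketch under stated assumptions, not the setup used in the seminar: a one-hidden-layer network with tanh units, standard Gaussian weights, and a 1/√width output scaling, where excess kurtosis (zero for a Gaussian) serves as a rough measure of how Gaussian the outputs look.

```python
import math
import random
import statistics

def random_network_output(x, width, rng):
    """One-hidden-layer network with fresh random Gaussian weights and tanh units."""
    total = 0.0
    for _ in range(width):
        hidden = math.tanh(rng.gauss(0.0, 1.0) * x)
        # Scaling by 1/sqrt(width) keeps the output variance finite as width grows.
        total += rng.gauss(0.0, 1.0) * hidden / math.sqrt(width)
    return total

def excess_kurtosis(samples):
    """Zero for a Gaussian; clearly nonzero for heavier or lighter tails."""
    mean = statistics.fmean(samples)
    var = statistics.pvariance(samples, mean)
    fourth = statistics.fmean((s - mean) ** 4 for s in samples)
    return fourth / var ** 2 - 3.0

rng = random.Random(0)
kurtosis_by_width = {}
for width in (1, 4, 64):
    # Each sample is the output of a freshly initialized network on the same input.
    outputs = [random_network_output(1.0, width, rng) for _ in range(5000)]
    kurtosis_by_width[width] = excess_kurtosis(outputs)
    print(width, round(kurtosis_by_width[width], 2))
```

The excess kurtosis should shrink toward zero as the width grows, which is the finite-width shadow of the infinite-width Gaussian process limit.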

The discussion on scaling laws is multifaceted, encompassing empirical observations, theoretical underpinnings, and the intricate dance between model size, computational resources, and the volume of training data. While model quality often improves monotonically with these factors, reaching a point of diminishing returns, understanding these dynamics is crucial for efficient model design. Interestingly, the strategic selection of data emerges as a critical factor in surpassing the limitations imposed by power-law scaling, though this approach also presents challenges, including the risk of introducing biases and the need for domain-specific strategies.
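To illustrate what a power-law scaling relationship looks like in practice, the sketch below assumes the common form loss = a · size^(−alpha) and recovers `a` and `alpha` by fitting a straight line in log-log space. The functional form, the constants, and the data are illustrative assumptions; none of them come from the seminar.

```python
import math

def fit_power_law(sizes, losses):
    """Least-squares line in log-log space: log(loss) = log(a) - alpha * log(size)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(l) for l in losses]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    a = math.exp(y_mean - slope * x_mean)
    # The exponent alpha is the negated slope of the fitted line.
    return a, -slope

# Synthetic data generated from loss = 5 * size^-0.3; not measurements from the talk.
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * n ** -0.3 for n in sizes]
a, alpha = fit_power_law(sizes, losses)
print(round(a, 3), round(alpha, 3))
```

A small `alpha` is what diminishing returns look like: each tenfold increase in size multiplies the loss by the same modest factor.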

As the field of deep learning continues to evolve, Dr. Bahri’s work serves as a beacon, illuminating the path forward. The imperative for interdisciplinary collaboration, combining the rigor of physics with the adaptability of machine learning, cannot be overstated. Moreover, the pursuit of personalized scaling laws, tailored to the unique characteristics of each problem domain, promises to revolutionize model efficiency. As researchers and practitioners navigate this complex landscape, they are left to ponder: What unforeseen synergies await discovery at the intersection of physics and deep learning, and how might these transform the future of artificial intelligence?

Yasaman Bahri: A First-Principle Approach to Understanding Deep Learning (DDPS Webinar, Lawrence Livermore National Laboratory, November 2024)

Sunday, November 24, 2024

#deep learning#physics informed ai#machine learning research#interdisciplinary approaches#scaling laws#gaussian processes#neural networks#artificial intelligence#ai theory#computational science#data science#technology convergence#innovation in ai#webinar#ai assisted writing#machine art#Youtube

3 notes

Text

As students increasingly rely on AI to complete assignments, the line between learning and automation is blurring. From neural networks to large language models, not all “AI” is the same—and understanding the difference is crucial. Without AI literacy, we risk raising a generation fluent in using tools they don’t truly understand.

#artificial intelligence#chatgpt#computer#llm#machine learning#neural network#priyafied#reality#science#students

0 notes

Text

Elevate Your Mobile App with AI & Chatbots

Build Your AI-Powered App: Unlock Next-Gen Capabilities

Master the integration of AI and chatbots with our 2025 guide, designed to help you create next-gen mobile applications boasting unmatched intelligence. Ready to elevate? This comprehensive guide equips you with the knowledge to seamlessly integrate AI chatbots and advanced AI into your mobile app for a truly intelligent and future-ready solution.

#Generative AI Software Development | openai chatbot#We build custom AI software#OpenAI chatbots#machine learning#computer vision#and RPA solutions. Empower your business with transformative#intelligent AI#Taxi Booking & Ride Sharing App Development Company#Food Delivery App Development Company | Build Food App#Leading eCommerce App Development Company|Hire Developers#Top Education App Development Company | LMS App Development#Custom Real‑Estate App Development Company | Custom CRM App#On‑Demand Healthcare Mobile App Development Company#Fitness & Wellness App Development Company | Calorie Counter App#Affordable Dating App Development Company | Tinder Clone App#Custom Laundry & Dry‑Cleaning App Development Company#On‑Demand Pickup and Delivery App Development Company#Best Social Media & Networking App Development Company#on-demand Beauty Service App Development#Online Medicine Delivery & Pharmacy App Development Company#Custom On-Demand Delivery App Development Company#Android & IOS Marketplace App And Software Development Company#On‑Demand Grocery Delivery App Development Company

0 notes

Text

A fun way to think about this, for people who don’t know what an algorithm really is: “algorithm” is basically a fancy word for “recipe.” It’s a set of instructions that takes a set of inputs (ingredients) and produces a desired output.

When a site produces an algorithm to recommend content, the input comes from the information they have about you, other users, and the content they are ranking, and the output is an ordered list of top picks for you. Basically everything is available, and the person writing the algorithm chooses which inputs to actually use.

Imagine that the entire grocery store is available to be used, and the person writing the recipe lists the specific items the steps actually use as the ingredients.

Then they write all the steps in a way that another cook could understand, maybe with helpful notes (comments) along the way describing why they did certain things. And in the end they have a recipe that someone else could follow, make informed changes to, explain the reasoning behind, etc.

That’s a traditional algorithm. Sorting by a single field like kudos is the simplest form of this, like a recipe for toast.

Ingredients:

Bread, 1 slice

Instructions:

Put the bread in the toaster.

Pull down the lever.

Wait until it pops up.

Enjoy your toast!

Is that a recipe? Yes, clearly.
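In code, the toast recipe really is about this short. Here is a minimal Python sketch of sorting by kudos; the `works` list, its titles, and its numbers are made up for illustration and are not real AO3 data or AO3's actual code.

```python
# Hypothetical works, each with a single field we care about: kudos.
works = [
    {"title": "Coffee Shop AU", "kudos": 120},
    {"title": "Cursed Crossover", "kudos": 4500},
    {"title": "Slow Burn, 300k Words", "kudos": 980},
]

def sort_by_kudos(works):
    """Return the works ordered from most kudos to fewest."""
    return sorted(works, key=lambda work: work["kudos"], reverse=True)

if __name__ == "__main__":
    for work in sort_by_kudos(works):
        print(work["kudos"], work["title"])
```

Anyone reading this can see exactly which ingredient was used (kudos) and why the list comes out in the order it does.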

Now let’s consider the equivalent recipe for ML-based recommendation systems (the key differentiator of what people often refer to as “the algorithm”):

Ingredients:

The entire grocery store

Instructions:

Put the grocery store into THE MACHINE

THE MACHINE should be set to 0.135, 0.765, 0.474, 0.8833… (this list continues for hundreds of entries)

Consume your personalized Feed™️

Is that a recipe? Technically yes.
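For comparison, here is a toy sketch of THE MACHINE in Python. It assumes the simplest possible model, a weighted sum, and reuses the four numbers from the recipe above as its settings; the item names and feature values are invented, and real recommendation systems have vastly more settings and more complicated math.

```python
# The "settings" of THE MACHINE: learned numbers with no human-readable meaning.
WEIGHTS = [0.135, 0.765, 0.474, 0.8833]

# Hypothetical items, each described by opaque numeric features.
items = {
    "post_a": [1.0, 0.0, 0.0, 0.0],
    "post_b": [0.0, 1.0, 0.0, 0.0],
    "post_c": [0.0, 0.0, 1.0, 1.0],
}

def score(features, weights=WEIGHTS):
    """Weighted sum of the features; higher means 'show this first'."""
    return sum(w * f for w, f in zip(weights, features))

def personalized_feed(items):
    """Return item names ordered by descending score."""
    return sorted(items, key=lambda name: score(items[name]), reverse=True)

if __name__ == "__main__":
    print(personalized_feed(items))
```

The code runs fine, but nothing in it tells you why 0.765 is 0.765. That is the difference from the toast recipe: the steps are readable, the reasoning lives in the numbers.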

“Ao3 needs an algorithm” no it doesn’t, part of the ao3 experience is scrolling through pages of cursed content looking for the one fic you want to read until you get distracted by a summary so cursed that it completely derails your entire search

#algorithm#the algorithm#feed#programming#computer science#cs#machine learning#deep learning#neural networks

91K notes

Text

Best Python Course in Jalandhar

Join the Best Python Course in Jalandhar by TechCadd! Master Python with industry-focused training and real-world projects.

Enroll now!

https://techcadd.com/best-python-course-in-jalandhar.php

#learn python#python online#python classes#python online practice#learn python language#object oriented programming python#python oop#python data class#python object oriented#computer language python#network programming in python#pyqt course#class class python#object oriented programming using python#python class class#python for network

0 notes

Text

Ephemeralization

A computer scientist begins a project to network systems of computers to "donate" processing capabilities to a supermassive LLM in hopes of solving all the world's problems, starting with energy efficiency and global warming as a hedge against all the computing power they're using, sometimes not entirely legally. The system begins generating nonsensical information and suggestions that turn out to be brilliant insights, except they're increasingly morally dubious, ultimately resulting in a paradox of moral relativism.

#bad idea#movie pitch#pitch and moan#ephemeralization#LLM#language learning model#AI#artificial intelligence#computer networking#global warming#energy efficiency#moral relativism#paradox#robert buckminster fuller#r. buckminster fuller#buckminster fuller

1 note

Text

This is a hoax. It's actually a photograph of an Italian harvesting spaghetti.

Frank Rosenblatt, often cited as the Father of Machine Learning, photographed in 1960 alongside his most notable invention: the Mark I Perceptron, a hardware implementation of the perceptron algorithm, which he introduced in the late 1950s. The perceptron is one of the earliest artificial neural networks and builds on the McCulloch-Pitts artificial neuron model of 1943.

820 notes
