#Meta platforms and video content


Text

Meta's recent introduction of ad-free subscriptions for €9.99 a month in Europe is just one example of a broader trend. Twitter (now X), TikTok, and Snapchat are also testing ad-free subscription models, providing an escape for those with the means to avoid the constant bombardment of online ads. Uneven Adoption of Ad-Free Platforms: Insights from European Perspectives and the Growing Appeal of Video-Centric Experiences (globalpostheadline.com)

#Ad-free platform adoption#European perspectives on ad-free experiences#Video-centric preferences#Shifting consumer behaviors online#Digital media trends in Europe#Meta platforms and video content#YouTube Premium success#Ad-free content demand#Consumer choices in digital advertising#European online behavior patterns#Facebook#Meta#Twitter(X)

0 notes

Text

Meta Horizon Worlds v163: Highlights of the "Poll to Remove" Feature Update and Fixes

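We'd love to hear what you think of the new features in the Meta Horizon Worlds v163 update! Do you think the Poll to Remove feature helps improve social interactions? Share your thoughts in the comments below. We're happy to hear from, and respond to, every reader!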

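In the latest v163 update, Meta Horizon Worlds brings a series of user experience improvements. These include a new feature, currently in testing, that lets users toggle whether nametags are shown when recording video or taking photos. The update also adds content descriptors to the world details page and updates the "Poll to Remove" feature, making it easier to use and improving interaction. Among the general improvements, the Meta team has sped up loading of the in-world gallery, so users can now access and share photos and videos more quickly. The nametag toggle is still in testing and is available only to some users. Users in the test can toggle nametag visibility with a new icon when they open the camera. Note that nametags are removed automatically when shooting with the drone camera, and this cannot be toggled. Poll to…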

View On WordPress

#virtual reality#virtual reality games#virtual reality news#META#Meta Horizon Worlds v163#Meta Platform Innovations#Meta Quest#Meta Quest New Features#Quest 2#Quest 3#Virtual Reality Content Guidelines#Virtual World Accessibility#vr#VR Community Management#vr game#vr news#vr news today#VR Social Features#VR user experience improvements#VR Video and Photo Tools

0 notes

Text

i think it might be lost on some good omens fans who've either never been in a fandom before, or have only been in very big fandoms for that matter, how truly lucky we are.

we have new fan content to see at any time of day or night, no matter what else is going on in the world. there is a constant, and i do mean constant stream of new art, new fics, new meta, new gifs, new shitposts, new discussions, new video edits, new links to other websites where those things also exist... one could scroll through good omens tumblr all day, every day, and they wouldn't run out of new things to look at, due to the rate at which things are being produced, and the number of people who are producing them (i say this as someone who basically did this while recovering from top surgery back in 2023, when season 2 had not long come out). it would take a person an awfully long time to see all the good omens fan content there is to see, and that's just on this one platform.

most fandoms, for active media or not, cannot relate to this phenomenon. it's crazy, in the most wonderful way. i think we are this way bc we truly do have great source material, shot and performed by brilliant people, and therefore it is the kind of source material that attracts passionate, analytical obsessives (this is a compliment to all of us, not an insult!).

there'll come a time after s3 when things will slow down, i know that. so i want us to all appreciate how much we have now. at least once a day i stop and think about how grateful i am that this fandom became part of my life, and i hope you have that inclination too, at least once in a while.<3

but this doesn't happen by magic. you need to reblog, not just like; comment, not just leave kudos; share and rec, not just enjoy independently. with fandom, you get what you put into it, and the more you get involved the more fulfilment you'll get out of it. trust me on that<3

336 notes


Text

A Quick Guide To Getting Caught Up On Critical Role Fast

This guide is for people who want the fastest official way to get caught up on all three Critical Role campaigns without watching the full actual play (AP) episodes. Each of these is made so that the AP will still be enjoyable later, even if you know what happens. There's no "right" way to get into the series, and already having an idea of what happens can even make the APs more enjoyable and easier to understand.

Summary:

The Legend of Vox Machina

Crit Recap Animated

Exandria: An Intimate History

Critical Role Abridged

Guide:

Campaign 1:

The Legend of Vox Machina on Amazon Prime is the animated adaptation of C1 by the same creators. Sam Riegel has described the creative approach as treating it like the version of the story from a later playthrough. All the important bits are there, but they get to those moments differently.

The Legend of Vox Machina has 3 seasons out now that cover events up through at least episode 85. A 4th season is in the works and will probably cover the final arc.

Campaign 2:

An animated adaptation for Amazon Prime called "Mighty Nein" is in the works, but not out yet.

Crit Recap Animated is an older comedic summary series, presented by their Lore Keeper, that covers the whole campaign in 10 videos. Great if you want the gist.

It's like a crash-course history video meant to get you curious to learn the full story later. It's a great way to get a sense of who people are and what they've done. Available on YouTube and on their streaming platform, Beacon.

Exandrian History Review:

Exandria: An Intimate History is a timeline review of key events in world history, starting from the creation.

It was released before Campaign 3 as bonus content. It represents what the average person in Exandria knows about world history up to that point.


Campaign 3:

Critical Role Abridged is the Campaign 3 AP condensed down into 1 to 1.5 hours. It mostly cuts down combat to the narrated results and reduces table chatter and indecisiveness. It's a great way to experience the full campaign.

Critical Role Abridged is being released at one episode a week on YouTube and two a week on Beacon. YouTube is currently up to episode 25, Beacon is up to episode 47, and the AP is at episode 109. At some point you'll have to switch to the full episodes to catch up.

Wiki:

There are also two world-class wikis where you can look up extensive, meticulously cited information about anything you need. I prefer The Encyclopedia Exandria.

Viewing Notes:

An important thing to know about "continuity" in Critical Role is that it takes a more realistic view of how history is passed down through the ages: even dedicated academics will never know the full story or be fully correct. They know versions colored by in-world biases and lost knowledge.

This is great for you as a viewer, because whichever campaign you come into, the characters don't know most of what happened in past games. What they actually know will come up in game. The players have above-table reactions and some subtle in-jokes, but they try not to act on meta knowledge.

It's structured a lot like reading one history book and then wanting to go back and read more about past events that set the stage for all those things to happen. They've tried to make it easier to come into the story happening now.

I certainly enjoyed watching the full APs from the beginning, and I think you can get a deeper understanding of the story from them, but it takes thousands of hours to catch up on the story that way, and that isn't realistic for everyone. Each series builds on the consequences of past events more than it relies on unexpected twists, so already knowing what happens just helps you notice all the little things that led to them. It's similar to how Shakespeare's plays are often more enjoyable to watch unfold if you already know the basic plot points going in.

Happy viewing, and I hope this helps you or someone you know get into this very rich and interesting story!

#critical role#critical role meta#critical role campaign 1#the legend of vox machina#critical role campaign 2#critical role campaign 3#Youtube

370 notes


Text

Hey so this is super scary

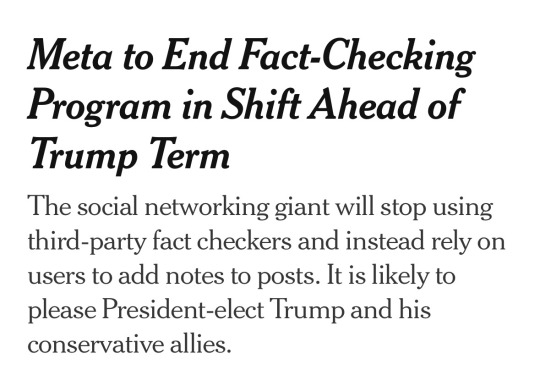

Meta on Tuesday announced a set of changes to its content moderation practices that would effectively put an end to its longstanding fact-checking program, a policy instituted to curtail the spread of misinformation across its social media apps.

The reversal of the years-old policy is a stark sign of how the company is repositioning itself for the Trump era. Meta described the changes with the language of a mea culpa, saying that the company had strayed too far from its values over the prior decade.

“We want to undo the mission creep that has made our rules too restrictive and too prone to over-enforcement,” Joel Kaplan, Meta’s newly installed global policy chief, said in a statement.

Instead of using news organizations and other third-party groups, Meta, which owns Facebook, Instagram and Threads, will rely on users to add notes or corrections to posts that may contain false or misleading information.

Mark Zuckerberg, Meta’s chief executive, said in a video that the new protocol, which will begin in the United States in the coming months, is similar to the one used by X, called Community Notes.

“It’s time to get back to our roots around free expression,” Mr. Zuckerberg said. The company’s current fact-checking system, he added, had “reached a point where it’s just too many mistakes and too much censorship.”

Mr. Zuckerberg conceded that there would be more “bad stuff” on the platform as a result of the decision. “The reality is that this is a trade-off,” he said. “It means that we’re going to catch less bad stuff, but we’ll also reduce the number of innocent people’s posts and accounts that we accidentally take down.”

Elon Musk has relied on Community Notes to flag misleading posts on X. Since taking over the social network, Mr. Musk, a major Trump donor, has increasingly positioned X as the platform behind the new Trump presidency.

Meta’s move is likely to please the administration of President-elect Donald J. Trump and its conservative allies, many of whom have disliked Meta’s practice of adding disclaimers or warnings to questionable or false posts. Mr. Trump has long railed against Mr. Zuckerberg, claiming the fact-checking feature treated posts by conservative users unfairly.

Since Mr. Trump won a second term in November, Meta has moved swiftly to try to repair the strained relationships he and his company have with conservatives.

Mr. Zuckerberg noted that “recent elections” felt like a “cultural tipping point towards once again prioritizing speech.”

In late November, Mr. Zuckerberg dined with Mr. Trump at Mar-a-Lago, where he also met with his secretary of state pick, Marco Rubio. Meta donated $1 million to support Mr. Trump’s inauguration in December. Last week, Mr. Zuckerberg elevated Mr. Kaplan, a longtime conservative and the highest-ranking Meta executive closest to the Republican Party, to the company’s most senior policy role. And on Monday, Mr. Zuckerberg announced that Dana White, the head of the Ultimate Fighting Championship and a close ally of Mr. Trump’s, would join Meta’s board.

Meta executives recently gave a heads-up to Trump officials about the change in policy, according to a person with knowledge of the conversations who spoke on condition of anonymity. The fact-checking announcement coincided with an appearance by Mr. Kaplan on “Fox & Friends,” a favorite show of Mr. Trump. He told the hosts of the morning show popular with conservatives that there was “too much political bias” in the fact-checking program.

The change brings an end to a practice the company started eight years ago, in the weeks after Mr. Trump’s election in 2016. At the time, Facebook was under fire for the unchecked dissemination of misinformation spread across its network, including posts from foreign governments angling to sow discord among the American public.

As a result of enormous public pressure, Mr. Zuckerberg turned to outside organizations like The Associated Press, ABC News and the fact-checking site Snopes, along with other global organizations vetted by the International Fact-Checking Network, to comb over potentially false or misleading posts on Facebook and Instagram and rule whether they needed to be annotated or removed.

Among the changes, Mr. Zuckerberg said, will be to “remove restrictions on topics like immigration and gender that are out of touch with mainstream discourse.” He also said that the trust and safety and content moderation teams would be moved from California, with the U.S. content review shifting to Texas. That would “help remove the concern that biased employees are overly censoring content,” he added.

#wtf#this is not good#we really just fine with misinformed beliefs persisting now huh#not really sure what to tell you to do here but make sure you actively follow verified and real news sources#if you use social media to get your news at all#nyt#nytimes#donald trump#trump administration#meta#facebook#instagram#anti misinfo#news#2025

201 notes


Text

I have to admit, this isn't really my typical post. I'm just really frustrated and want to vent that frustration somewhere. I just hope someone from the US ends up seeing this and rethinks things.

I've started using TikTok as a consequence of the new hate speech regulations on Meta platforms.

Today, I got this video on my FYP:

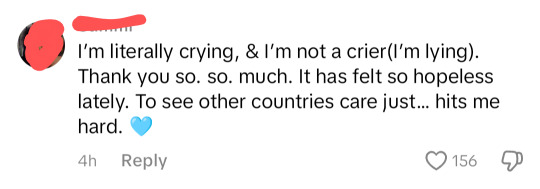

It's a video of an anti-fascism protest in Germany. Specifically, it's a protest against AfD, a German far-right party.

So far so good.

What really bothered me is what I saw in the comments:

These are all comments from people from the US (I checked their profiles before taking the screenshots). They constituted the majority of top comments under that video.

As a European, this is incredibly frustrating. Why do so many Americans think everything is about them? A quick Google search would have told them that the protest is against the German far right, not President Trump. It's absurd to assume that a protest in a foreign country (that you know nothing about, by the way!!) is about your elections. Anyone familiar with German politics would have known that the AfD has unfortunately gained a lot of support in the country. Protests like this have happened before, but most people from the US care about them only when they think they're about them.

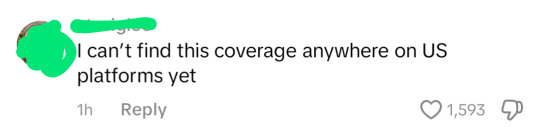

Another comment really bothered me:

I know it's an innocent comment. But the reason you are not seeing this on US platforms has nothing to do with censorship. The truth is, when do you *ever* see in-depth coverage of foreign politics on US platforms? Mainstream US news outlets are not reporting this because it is about German politics, and the US as a whole does not care about what's going on over here in Europe.

If you're from the US, I encourage you to research Americentrism. A lot of USamericans live in a sort of bubble and fail to consider that not everything exists in relation to their country. This leads to a lot of frustration for people from other nations like me.

English is used as an international language. For most of us it's the only way to interact with people from other countries. It's already hard to find content online that isn't made from a US perspective. But even when we do find it, it often gets co-opted by USamericans, even when the original post was not about them.

93 notes


Text

So there is a post going around with a really suspicious map, and the OP of that post has me blocked, so here is a link to their post since I cannot reblog it.

Okay so, I looked into this as from my own experience the map looked suspicious.

The original post of the map has been deleted. You can find the deleted post on Reddit here.

I managed to find a repost of the map on Tumblr, which linked to the deleted Reddit post, and you can find the repost of the original map here. Now on to debunking OP's claim about the map.

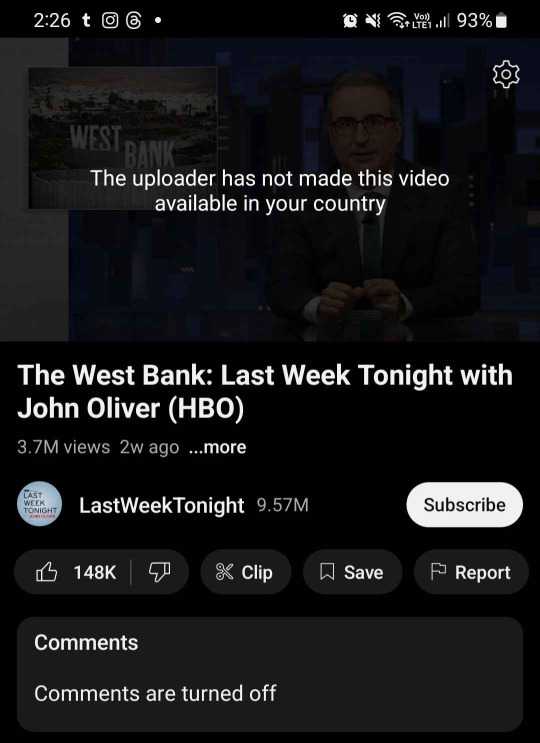

The title of the map is not solely about the West Bank; it is about full John Oliver episodes being unavailable in certain countries. Not just the West Bank episode, all full episodes.

When I try to watch the video, I get the error below from YouTube:

Yep, your eyes do see correctly. It is the uploader who made it unavailable. This means that either John Oliver himself, his production team, or HBO, which holds the rights to his show, decided to make his content unavailable in certain countries.

A comment on the reddit post which I think people should read is

"The title of this post makes many people think OP means ‘countries that have banned this’. Instead, this is just ‘countries where a network pays for the broadcast rights for this show’. The highlighted countries are ones where the show is most popular. The blank countries are where it’s not popular, so they give it away for free to try to drum up support"

Aka this is not purposeful censorship by (((zionists))) like tumblr OP is trying to frame it. It's not keeping what happens in the West Bank a secret. It is a decision made by someone in charge of the show to make as much money as possible. Which still sucks! But it sucks for different reasons than what tumblr OP is alluding to.

3. Tumblr OP is being antisemitic. Whilst it is definitely written in a more subtle way, it still invokes the whole "Jews control the media" trope. A well-known antisemitic trope is still antisemitic even if "Jew" is replaced with "Zionist". This is because, whilst not every use of "Zionist" is a proxy for "Jew", it happens more than you think, to the point where Meta (Instagram and Facebook) updated its hate speech policy to purposefully include "zionist when it's being used as a proxy (aka dogwhistle) for jew is now hate speech on our platform". Meta still allows the word when talking about Zionism as a political ideology and has only banned its use as a proxy for "Jew".

OP of this post has already blocked me (only found out when i saw this post), so I wanna thank @coffeeconcentrate for reblogging this and not having me blocked so I can actually have a chance at reaching even a small group of people to make them aware of this so they don't fall for this poorly made post.

#whenever you see a map or graph which lacks both of those 9/10 its because the data doesn't fit the narrative they're trying to push#antisemitism#jumblr#jewblr#disinformation

200 notes


Note

insta reels has such an insane algorithm compared to any other short video platform. honestly the content they recommend by default verges on the content recommended on "accident" anyway, they recommend content meant to shock you or make you angry all the time. bug torture, horrifying ai videos, fetish content, demented gross out content (especially food-related), and videos that seem purposefully triggering to people with delusions and compulsions.

i've heard this from many people. I only post selfies on instagram and have never actually scrolled through their algorithm, although I've never had a good experience with a meta social media platform.

wouldn't be surprised if they do want to evoke a certain unsavory psychological response from viewers

76 notes


Text

Gyan Abhishek is standing in front of a giant touch screen, like Jim Cramer on Mad Money or an ESPN talking head analyzing a football play. He’s flicking through a Facebook feed of viral, AI-generated images. “The post you are seeing now is of a poor man that is being used to generate revenue,” he says in Hindi, pointing with his pen to an image of a skeletal elderly man hunched over being eaten by hundreds of bugs. “The Indian audience is very emotional. After seeing photos like this, they Like, Comment and share them. So you too should create a page like this, upload photos and make money through Performance bonus.” He scrolls through the page, titled “Anita Kumari,” which has 112,000 followers and almost exclusively posts images of emaciated, AI-generated people, natural disasters, and starving children. He pauses on another image of a man being eaten by bugs. “They are getting so many likes,” he says. “They got 700 likes within 2-4 hours. They must have earned $100 from just this one photo. Facebook now pays you $100 for 1,000 likes … you must be wondering where you can get these images from. Don’t worry. I’ll show you how to create images with the help of AI.”

[...]

Abhishek has 115,000 YouTube subscribers, dozens of instructional videos, and is part of a community of influencers selling classes and making YouTube content about how to go viral on Facebook with AI-generated images and other types of spam. These influencers act much like financial influencers in the United States, teaching other people how to supposedly spin up a side hustle in order to make money by going viral on Facebook and other platforms. Part of the business model for these influencers is, of course, the fact that they are themselves making money by collecting ad revenue from YouTube and by selling courses and AI prompts on YouTube, WhatsApp and Telegram. Many of these influencers go on each others’ podcasts to discuss strategies, algorithm changes, and loopholes. I have found hundreds of videos about this, many of which have hundreds of thousands or millions of views. But the videos make clear that Facebook’s AI spam problem is one that is powered and funded primarily by Facebook itself, and that most of the bizarre images we have seen over the last year are coming from Microsoft’s AI Image Creator, which is called “Bing Image Creator” in instructional videos.

[...]

The most popular way to make money spamming Facebook is by being paid directly by Facebook to do so via its Creator Bonus Program, which pays people who post viral content. This means that the viral “shrimp Jesus” AI and many of the bizarre things that have become a hallmark of Zombie Facebook have become popular because Meta is directly incentivizing people to post this content.

6 August 2024

174 notes


Text

In late January, a warning spread through the London-based Facebook group Are We Dating the Same Guy?—but this post wasn’t about a bad date or a cheating ex. A connected network of male-dominated Telegram groups had surfaced, sharing and circulating nonconsensual intimate images of women. Their justification? Retaliation.

On January 23, users in the AWDTSG Facebook group began warning about hidden Telegram groups. Screenshots and TikTok videos surfaced, revealing public Telegram channels where users were sharing nonconsensual intimate images. Further investigation by WIRED identified additional channels linked to the network. By scraping thousands of messages from these groups, it became possible to analyze their content and the patterns of abuse.

AWDTSG, a sprawling web of over 150 regional forums across Facebook alone, with roughly 3 million members worldwide, was designed by Paolo Sanchez in 2022 in New York as a space for women to share warnings about predatory men. But its rapid growth made it a target. Critics argue that the format allows unverified accusations to spiral. Some men have responded with at least three defamation lawsuits filed in recent years against members, administrators, and even Meta, Facebook’s parent company. Others took a different route: organized digital harassment.

Primarily using Telegram group data made available through Telemetr.io, a Telegram analytics tool, WIRED analyzed more than 3,500 messages from a Telegram group linked to a larger misogynistic revenge network. Over 24 hours, WIRED observed users systematically tracking, doxing, and degrading women from AWDTSG, circulating nonconsensual images, phone numbers, usernames, and location data.

From January 26 to 27, the chats became a breeding ground for misogynistic, racist, sexual digital abuse of women, with women of color bearing the brunt of the targeted harassment and abuse. Thousands of users encouraged each other to share nonconsensual intimate images, often referred to as “revenge porn,” and requested and circulated women’s phone numbers, usernames, locations, and other personal identifiers.

As women from AWDTSG began infiltrating the Telegram group, at least one user grew suspicious: “These lot just tryna get back at us for exposing them.”

When women on Facebook tried to alert others of the risk of doxing and leaks of their intimate content, AWDTSG moderators removed their posts. (The group’s moderators did not respond to multiple requests for comment.) Meanwhile, men who had previously coordinated through their own Facebook groups like “Are We Dating the Same Girl” shifted their operations in late January to Telegram's more permissive environment. Their message was clear: If they can do it, so can we.

"In the eyes of some of these men, this is a necessary act of defense against a kind of hostile feminism that they believe is out to ruin their lives," says Carl Miller, cofounder of the Center for the Analysis of Social Media and host of the podcast Kill List.

The dozen Telegram groups that WIRED has identified are part of a broader digital ecosystem often referred to as the manosphere, an online network of forums, influencers, and communities that perpetuate misogynistic ideologies.

“Highly isolated online spaces start reinforcing their own worldviews, pulling further and further from the mainstream, and in doing so, legitimizing things that would be unthinkable offline,” Miller says. “Eventually, what was once unthinkable becomes the norm.”

This cycle of reinforcement plays out across multiple platforms. Facebook forums act as the first point of contact, TikTok amplifies the rhetoric in publicly available videos, and Telegram is used to enable illicit activity. The result? A self-sustaining network of harassment that thrives on digital anonymity.

TikTok amplified discussions around the Telegram groups. WIRED reviewed 12 videos in which creators, of all genders, discussed, joked about, or berated the Telegram groups. In the comments section of these videos, users shared invitation links to public and private groups and some public channels on Telegram, making them accessible to a wider audience. While TikTok was not the primary platform for harassment, discussions about the Telegram groups spread there, and in some cases users explicitly acknowledged their illegality.

TikTok tells WIRED that its Community Guidelines prohibit image-based sexual abuse, sexual harassment, and nonconsensual sexual acts, and that violations result in removals and possible account bans. They also stated that TikTok removes links directing people to content that violates its policies and that it continues to invest in Trust and Safety operations.

Intentionally or not, the algorithms powering social media platforms like Facebook can amplify misogynistic content. Hate-driven engagement fuels growth, pulling new users into these communities through viral trends, suggested content, and comment-section recruitment.

As people took notice on Facebook and TikTok and started reporting the Telegram groups, they didn’t disappear—they simply rebranded. Reactionary groups quickly emerged, signaling that members knew they were being watched but had no intention of stopping. Inside, messages revealed a clear awareness of the risks: Users knew they were breaking the law. They just didn’t care, according to chat logs reviewed by WIRED. To absolve themselves, one user wrote, “I do not condone im [simply] here to regulate rules,” while another shared a link to a statement that said: “I am here for only entertainment purposes only and I don’t support any illegal activities.”

Meta did not respond to a request for comment.

Messages from the Telegram group WIRED analyzed show that some chats became hyper-localized, dividing London into four regions to make harassment even more targeted. Members casually sought access to other city-based groups: “Who’s got brum link?” and “Manny link tho?”—British slang referring to Birmingham and Manchester. They weren’t just looking for gossip. “Any info from west?” one user asked, while another requested, “What’s her @?”— hunting for a woman’s social media handle, a first step to tracking her online activity.

The chat logs further reveal how women were discussed as commodities. “She a freak, I’ll give her that,” one user wrote. Another added, “Beautiful. Hide her from me.” Others encouraged sharing explicit material: “Sharing is caring, don’t be greedy.”

Members also bragged about sexual exploits, using coded language to reference encounters in specific locations, and spread degrading, racial abuse, predominantly targeting Black women.

Once a woman was mentioned, her privacy was permanently compromised. Users frequently shared social media handles, which led other members to contact her—soliciting intimate images or sending disparaging texts.

Anonymity can be a protective tool for women navigating online harassment. But it can also be embraced by bad actors who use the same structures to evade accountability.

"It’s ironic," Miller says. "The very privacy structures that women use to protect themselves are being turned against them."

The rise of unmoderated spaces like the abusive Telegram groups makes it nearly impossible to trace perpetrators, exposing a systemic failure in law enforcement and regulation. Without clear jurisdiction or oversight, platforms are able to sidestep accountability.

Sophie Mortimer, manager of the UK-based Revenge Porn Helpline, warned that Telegram has become one of the biggest threats to online safety. She says that the UK charity’s reports to Telegram of nonconsensual intimate image abuse are ignored. “We would consider them to be noncompliant to our requests,” she says. Telegram, however, says it received only “about 10 piece of content” from the Revenge Porn Helpline, “all of which were removed.” Mortimer had not yet responded to WIRED’s questions about the veracity of Telegram’s claims.

Despite recent updates to the UK’s Online Safety Act, legal enforcement of online abuse remains weak. An October 2024 report from the UK-based charity The Cyber Helpline shows that cybercrime victims face significant barriers in reporting abuse, and justice for online crimes is seven times less likely than for offline crimes.

"There’s still this long-standing idea that cybercrime doesn’t have real consequences," says Charlotte Hooper, head of operations of The Cyber Helpline, which helps support victims of cybercrime. "But if you look at victim studies, cybercrime is just as—if not more—psychologically damaging than physical crime."

A Telegram spokesperson tells WIRED that its moderators use “custom AI and machine learning tools” to remove content that violates the platform's rules, “including nonconsensual pornography and doxing.”

“As a result of Telegram's proactive moderation and response to reports, moderators remove millions of pieces of harmful content each day,” the spokesperson says.

Hooper says that survivors of digital harassment often change jobs, move cities, or even retreat from public life due to the trauma of being targeted online. The systemic failure to recognize these cases as serious crimes allows perpetrators to continue operating with impunity.

Yet, as these networks grow more interwoven, social media companies have failed to adequately address gaps in moderation.

Telegram, despite its estimated 950 million monthly active users worldwide, claims it’s too small to qualify as a “Very Large Online Platform” under the European Union’s Digital Services Act, allowing it to sidestep certain regulatory scrutiny. “Telegram takes its responsibilities under the DSA seriously and is in constant communication with the European Commission,” a company spokesperson said.

In the UK, several civil society groups have expressed concern about the use of large private Telegram groups, which allow up to 200,000 members. These groups exploit a loophole by operating under the guise of “private” communication to circumvent legal requirements for removing illegal content, including nonconsensual intimate images.

Without stronger regulation, online abuse will continue to evolve, adapting to new platforms and evading scrutiny.

The digital spaces meant to safeguard privacy are now incubating its most invasive violations. These networks aren’t just growing—they’re adapting, spreading across platforms, and learning how to evade accountability.

57 notes

Text

The timing was ironic.

The Breach’s production assistant logged into Instagram on Friday to share a graphic asking readers to subscribe to our newsletter and bookmark our homepage in case Meta and Google cut off other pathways to our work.

Instead, she saw an error message. She clicked on our Instagram profile and saw another error message, the one that has been popping up on the accounts of other media outlets. “People in Canada can’t see this content,” it said.

All of The Breach’s posts, including a recent infographic exposing the climate harms of Canada’s 2023 wildfire season and a story summary about Quebec tenants fighting back against bad policy, were gone.

The Breach is an independent news outlet, funded mostly by readers, that operates as a non-profit. We produce investigations, analysis and video content about the crises of racism, inequality, colonialism and climate breakdown. Thousands of new readers discover The Breach on Instagram, Facebook and Google every month, or use those platforms to share our work. [...]

Tagging: @politicsofcanada

1K notes

Text

A billion-dollar media company just placed a copyright claim on a recent video I posted on FB/IG. I pay to license every clip and song I use. I make $0 from any of these platforms. I make videos at a loss because I love telling stories. But a giant media company is claiming ownership over a free video that I paid a giant media company for the right to make.

Meta's notification was beyond unhelpful. It doesn't say which video was claimed. It doesn't say which song or clip in that video was the basis of the claim, so I can't even replace it. It doesn't say how to appeal the claim, only that I have 7 days to do so. It doesn't say what will happen if the claim is successful. Presumably they'll just claim ad revenue from it, which would be unfair, but also meaningless because THE PLATFORM ALREADY DOESN'T PAY ME. They can't steal what they already weren't giving me. But there's something profoundly insulting about me paying a company money so I can make something I don't get paid for, that they'll lie about to another company that isn't paying me, so they can take the money I would have been paid if anyone involved in this process cared at all. And the process is automated to give the illusion that I have any say in it at all.

The truth is that I do not matter to these companies. The giant media company doesn't know I exist. An unsupervised AI was installed to print them free money by filing auto-generated content claims that have zero repercussions for false positives. Meta already isn't paying me, and I'll eat my hat if they reply to my message with any meaningful solution.

I am lucky that my followers do care. I am lucky that when the system is reminding me how little I matter, I get comments and DMs and emails from people who believe in the value of what I do. That's why I haven't left this platform, and why I'll probably keep making videos for the benefit of giant companies who don't know I exist but are still making money from it.
So I guess this is me saying thank you for treating my stories like they matter. Most days I believe that they do, and it's because of you.

283 notes

Text

How we get rid of the American oligarchy

ROBERT REICH

JAN 16

Friends,

In what was billed as his “farewell address,” President Biden yesterday warned America that the nation is succumbing to an “oligarchy” of the ultra-wealthy, and the “dangerous concentration of power” they pose to democratic ideals:

“Today, an oligarchy is taking shape in America of extreme wealth, power and influence that literally threatens our entire democracy, our basic rights and freedoms and a fair shot for everyone to get ahead.”

He’s right, of course.

Fascism starts with the Trump derriere-kissing we’re now witnessing by the wealthiest people in America, who own the biggest megaphones and thereby determine what information Americans get. What they get back from Trump is raw power to do whatever they want.

Elon Musk — the richest person in the world — controls X, which under his leadership has become a cesspool of lies and bigotry.

Musk has posted or replied to more than 80 posts about the Los Angeles fires, many of which downplayed the role of climate change — placing blame instead on individual female firefighters of color and lesbian firefighters, including posting their names and faces.

He boosted an hour-long propaganda video by right-wing conspiracy theorist Alex Jones that claimed the fires were “part of a larger globalist plot” to cause the collapse of the United States; Musk replied simply, “True.”

He repeatedly amplified claims that the Los Angeles Fire Department’s investments in diversity, equity, and inclusion (DEI) programs cost lives by wasting money that could have been spent on disaster response, suggesting that the destruction could have been mitigated if more white men had been retained.

Musk has made it clear that his platform’s main role during the upcoming Trump regime will be to back whatever Trump chooses to do and criticize Trump’s critics with more lies and bigotry.

Jeff Bezos — the second-richest person in America — owns Amazon. His Prime Video just announced it will spend a whopping $40 million for a documentary about Melania Trump, for which she is an executive producer, and stream it on Amazon Prime.

What else will Amazon promote or censor, to curry favor with Trump?

Bezos also owns The Washington Post. Just before the election, he killed a Post endorsement of Kamala Harris. Earlier this month, a Post cartoonist quit after the newspaper spiked a cartoon showing Bezos and Facebook’s Mark Zuckerberg kneeling before Trump.

Mark Zuckerberg — the third-richest person in America — owns Facebook, Instagram, and Threads. He’s sucking up to Trump by ridding his platforms of content moderation so that they, too, can amplify Trump’s lies and bigotry.

Announcing the end of fact-checking on his platforms, Zuckerberg says he thinks it will “take another ten years” of fact-free operation before Meta is “back to the place that it maybe could have been if I hadn’t messed that up in the first place.”

Zuckerberg says the deciding factor was the “cultural tipping point” of Trump’s election.

Not only will Zuckerberg fire most of the 40,000 fact-checkers who have screened his platforms’ posts for accuracy, but he will be moving the few who remain from California to Texas, “where there’s less concern about the bias of our teams.”

So Texas workers will be less “biased” than California workers? As Dan Evon of the nonprofit News Literacy Project notes, the move “provides an air of legitimacy to a popular disinformation narrative: that fact-checking is politically biased.”

Training materials for Meta’s remaining trust and safety workers include examples of speech that Zuckerberg now wants permitted:

“Immigrants are grubby, filthy pieces of shit.”

“Gays are freaks.”

“Look at that tranny (beneath photo of 17 year old girl).”

Trump praised the move, saying “I think they’ve come a long way.”

A reporter asked Trump if his threats to put Zuckerberg in prison caused the change in policy. “Probably,” Trump responded. Perhaps the Federal Trade Commission’s lawsuit against Meta played a role as well?

Zuckerberg has also promoted prominent Republican Joel Kaplan to be Meta’s chief global affairs officer and put Dana White, CEO of Ultimate Fighting Championship and a close friend of Trump, on its board.

My friends, none of this has anything to do with freedom of speech. It has everything to do with the power of money.

The three richest Americans want to decide what the rest of us will know about the coming Trump regime.

Concentrated wealth is the enemy of democracy. As the great jurist Louis Brandeis is reputed to have said, “America has a choice. We can either have great wealth in the hands of a few, or we can have a democracy. But we cannot have both.”

As we slouch into the darkness of Trump II, America needs people and institutions that speak truth to power, not align themselves with it.

When we the people regain power, three reforms are critically necessary to begin to tame the oligarchy:

1. X, Amazon, Meta, and other giant tech media platforms must either be busted up or treated as public utilities, responsible to the public.
2. Hugely wealthy individuals must not be permitted to own critical media.
3. Large accumulations of individual wealth must be taxed.

My friends, we will prevail.

72 notes

Text

Meta rolled out a number of changes to its “Hateful Conduct” policy Tuesday as part of a sweeping overhaul of its approach toward content moderation.

Meta announced a series of major updates to its content moderation policies today, including ending its fact-checking partnerships and “getting rid” of restrictions on speech about “topics like immigration, gender identity and gender” that the company describes as frequent subjects of political discourse and debate. “It’s not right that things can be said on TV or the floor of Congress, but not on our platforms,” Meta’s newly appointed chief global affairs officer, Joel Kaplan, wrote in a blog post outlining the changes.

In an accompanying video, Meta CEO Mark Zuckerberg described the company’s current rules in these areas as “just out of touch with mainstream discourse.”

100 notes

Text

it's fascinating to me the way that different social media platforms result in different types of fandom behavior. while s5 of tma was airing, I spent a good amount of time on tma tiktok (I log back in about once every two months now; going back to in-person school after a year and a half of lockdown seemed to rebalance my brain and made me once again not really enjoy the format) while still using tumblr as my main socmed, and while there was a lot of overlap in the fan culture, some things were notably different.

tumblr tma fans had near-encyclopedic knowledge of the source material, but it was kind of an ongoing joke for tiktok tma fans that everyone binged the whole show in a week-long fugue state and lost memory of about 35% of it. tumblr has virtually no character limit and allows posts to be passed around by users indefinitely, which lends itself to fairly in-depth meta analysis being made and shared until almost any fan could say "the time and space discrepancies at hilltop road? psh yeah, I know all about them, I've read seven scrupulously cited posts that lay out all the details." for the entire time that s5 was airing, tiktok videos could still only be a minute long, and I know from a lot of personal effort that there's only so much you can fit into a one-minute script that you also have to memorize and record (and cc manually with tiktok text stickers, as they didn't add the caption feature until april 2021) if you want the process to take less than four hours of your one mortal human life. and then people only see the video if their following feed or fyp algorithm shows it to them. there were a few tma meta-ish videos that got popular because other people would make their own videos referencing them and tag the account so their followers could see what they were talking about, but it's much harder to circulate content you like there. several times I saw people post videos saying "I got into cosplay to film some [agnes or annabelle or gerry or another secondary character] and I just realized I have no idea what their deal actually is 💀".

a thing that tiktok tma fandom was definitely better at than tumblr tma fandom was accurately remembering certain pieces of characterization and the flow of certain scenes. I've seen a bunch of posts on here where someone is trying to argue a point with excerpts from the text ("x character is nicer than you all give them credit for" "x character is so mean to y character in this scene" "z theory can't be true because y character said a line that disproves it") where the argument only holds up because the poster has gotten these excerpts from a transcript dive and hasn't listened to the episodes they're from recently, because while the text alone can be construed to mean one thing, the way it's delivered on-podcast clearly intends another. tiktok, being an audio and video based medium, allows audio clips to be shared around a lot, and cosplayers would often all make videos acting along to the same show clips of juicy interpersonal drama, and so tiktok fans, though they may have had less overall memory of what characters said, always had a better grasp on how they said it. an average tiktok tma fan might not have remembered melanie's subplot about war ghosts, but they would know the nuances of how the way she talks to jon changes between mag 28 and mag 155.

892 notes

Text

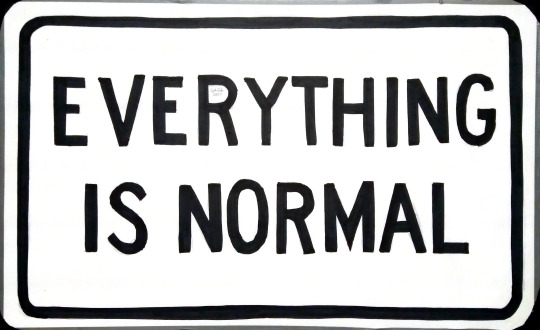

"EVERYTHING IS NORMAL" "THEY'RE █████████ THE INTERNET!"

Sometimes you'll notice changes being quietly made to your favorite internet services, be it a video platform, your search engine of choice, your favorite social network, or wherever you go to buy the things you need or want. Sometimes you'll also see changes in advertisements that are, suspiciously, relevant only to your own unique situation.

You know in your gut that something definitely changed, but it only seemed worth mentioning in conversation: "This changed for me, did it happen to you too?" Some of these changes are experienced by everyone all at once, but others are limited to specific groups, and some are rolled out in staggered waves, meaning different people are affected at different points in time. By the time the change is fully implemented (when every person targeted for this change is affected), it doesn't even matter anymore. The companies making these changes could announce them publicly if they wanted to, and all people could do in response is be annoyed, then eventually accept it and move on. The idea of "boiling the frog" comes to mind.

Our services have been getting worse in some ways and better in others, but there are undoubtedly some changes that are bad for everyone but the companies supplying these internet services (and sometimes, secretly, the governments of various countries around the world).

For me, personally, I've noticed changes to Meta (Facebook), to Google (and its services, Google Maps and YouTube), to ChatGPT, to Twitter - oh sorry, to "X", and many more. These changes are relatively small and are mostly unnoticeable... but I noticed them, just like all the other little changes they've quietly rolled out over the years. However, these changes feel a bit more insidious.

With Meta (Facebook for me), it was that they started suppressing accounts that frequently posted political content. This became most obvious during and after the 2024 election.

With Google, it was how it seems to bury certain content that's relevant to your search, such as proof, one way or another, of what's happening with our politicians: knowledge that's valuable to the public but apparently isn't relevant enough to be on the very first page (or is simply hidden away entirely). This isn't even mentioning that Google modified its Maps service so the Gulf of Mexico now reads the Gulf of America...

With YouTube, it's how it prioritizes click-bait, rage-bait, and heavily one-sided discussions of political topics over the actual proof (or at least the best available evidence) that gives the clearest picture of what these overblown discussions are about. It's clear they're prioritizing watch time and engagement over truth.

With ChatGPT, I knew they had to control their generative text AI behind-the-scenes for certain situations (naturally you don't want your service to be generating stuff like "kill yourself," hate speech, lies, etc...), but recently it seemed to change its sources when looking up news online, to the point that it now paints a favorable image of Trump and his people.

And Twitter... sorry, with X... well, I shouldn't even need to explain this one, but I will try. The richest man in the world bought Twitter, changed how some of the back-end works, dramatically changed which voices were suppressed and which ones were heard, allowing hate speech and misinformation to spread freely on the platform, even promoting misinformation directly by retweeting it... there's a lot to it, but just know that Twitter used to be less shitty than it is now. Now it's really bad.

The point I'm making is that a lot of these changes happened around or soon after the 2024 election, and the people controlling these companies showed up to Trump's inauguration. On top of their million-dollar donations to Trump, they're also doing work on his behalf to mask the awful things he and his people are doing while simultaneously promoting the things that make them look good. In short, information is becoming less accessible.

All of this, of course, is ignoring what Trump and his people have done to our government-provided websites and services, like removing the Constitution and more from whitehouse.gov, how they're scrubbing decades of data from the CDC, etc...

The worst part about all this is I don't know if I could even prove anything anymore. These changes have made it difficult to know what services can be trusted going forward.

These are terrifying times. If the censorship was bad before, it's so much worse now.

Although I'd usually go out and protest with these signs, I've decided not to do it with these ones. I'd practically be an actor or an NPC, repeating the same visual joke over and over. These are my first signs I won't protest with. At least, for now.

Nonetheless, don't forget to fly your flags upside-down, boys and girls and non-binary types. Stay safe, and fuck Trump & Co!

#trump#maga#fuck maga#trump administration#elon musk#art#artwork#protest#america#fuck trump#fuck elon#fuck elon musk#artists of tumblr#traditional art#usa#philosophy#debate#morality#story#resistance#us politics#elongated muskrat#lgbtqia#lgbt#lgbtq#american politics#seek truth

47 notes
