#OpenGL API


Text

I want to make this piece of software. I want this piece of software to be a good piece of software. As part of making it a good piece of software, i want it to be fast. As part of making it fast, i want to be able to parallelize what i can. As part of that parallelization, i want to use compute shaders. To use compute shaders, i need some interface to graphics processors. After determining that Vulkan is not an API that is meant to be used by anybody, i decided to use OpenGL instead. In order for using OpenGL to be useful, i need some way to show the results to the user and get input from the user. I can do this by means of the Wayland API. In order to bridge the gap between Wayland and OpenGL, i need to be able to create an OpenGL context where the default framebuffer is the same as the Wayland surface that i've set to be a window. I can do this by means of EGL. In order to use EGL to create an OpenGL context, i need to select a config for the context.

Unfortunately, it just so happens that on my Linux partition, the implementation of EGL does not support the config that i would need for this piece of software.
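For anyone unfamiliar with what "selecting a config" involves: the request is a flat, `EGL_NONE`-terminated list of attribute/value pairs handed to `eglChooseConfig`. The sketch below only builds such a list with `ctypes` and does not load libEGL. The post does not say which attributes were needed, so the requested values (desktop OpenGL, window surface, 8 bits per channel) and the helper name `build_attrib_list` are assumptions for illustration.

```python
import ctypes

# Token values as defined in the Khronos EGL headers.
EGL_ALPHA_SIZE = 0x3021
EGL_BLUE_SIZE = 0x3022
EGL_GREEN_SIZE = 0x3023
EGL_RED_SIZE = 0x3024
EGL_SURFACE_TYPE = 0x3033
EGL_NONE = 0x3038
EGL_RENDERABLE_TYPE = 0x3040
EGL_WINDOW_BIT = 0x0004
EGL_OPENGL_BIT = 0x0008


def build_attrib_list(attribs):
    """Flatten {attribute: value} into an EGL_NONE-terminated EGLint array."""
    flat = []
    for name, value in attribs.items():
        flat.extend((name, value))
    flat.append(EGL_NONE)
    return (ctypes.c_int32 * len(flat))(*flat)


# Hypothetical request: these values are assumed, not taken from the post.
wanted = {
    EGL_SURFACE_TYPE: EGL_WINDOW_BIT,
    EGL_RENDERABLE_TYPE: EGL_OPENGL_BIT,
    EGL_RED_SIZE: 8,
    EGL_GREEN_SIZE: 8,
    EGL_BLUE_SIZE: 8,
    EGL_ALPHA_SIZE: 8,
}
attrib_list = build_attrib_list(wanted)
```

If an implementation has no config matching every requested attribute, `eglChooseConfig` reports zero matching configs, which is the kind of dead end described above.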

Therefore, i am going to write this piece of software for 9front instead, using my 9front partition.

#Update#Programming#Technology#Wayland#OpenGL#Computers#Operating systems#EGL (API)#Windowing systems#3D graphics#Wayland (protocol)#Computer standards#Code#Computer graphics#Standards#Graphics#Computing standards#3D computer graphics#OpenGL API#EGL#Computer programming#Computation#Coding#OpenGL graphics API#Wayland protocol#Implementation of standards#Computational technology#Computing#OpenGL (API)#Process of implementation of standards


Text

I really want a Murder Drones boomer shooter

ok so I've mentioned before that I play Doom, and it's my hope to one day make a boomer shooter similar to modern Doom set in the Murder Drones universe. I started learning SFML for this purpose recently, but being a university student takes a lot of time and graphics programming is hard to learn (even OpenGL, which is the "easier" of the graphics APIs :3).

However, in an effort to "ghost of done" this one, I'm going to post some various thoughts I had about what I'd like to see in a Murder Drones boomer shooter. This was inspired by a post I saw that was the Doom cover but redrawn completely with various worker drones and disassembly drones populating the area around Doomguy, who was replaced by Khan. The word "Doom" was humorously replaced with the word "Door" and was stylized in the same way as the Doom logo typically is. I believe the art was made by Animate-a-thing

So, okay, I had two ideas, one of which is exceedingly far from canon and probably deserves its own post because it falls firmly into the realm of "fanfiction" rather than being a natural/reasonable continuation to the story that Liam told. So I'll talk about the other one here, which stems from an idea on Reddit that I had seen (that doesn't seem to exist anymore? I couldn't find it, at the least).

The actual idea lol

Okay, so, picture this: Some time post ep8, the Solver is reawakening in Uzi and is beginning to take control again. Not only that, it's begun to spread (somehow) to other Worker Drones. Now, the Solver has an incredibly powerful Uzi and an army of Worker Drones on its side. Moreover, N is in denial. He believes that there must be some way to free Uzi from the Solver, so, of his own free will, he chooses to defend and support Uzi even though he is capable of singlehandedly solving this conflict.

The only Worker Drone who can put an end to this madness is Khan Doorman. His engineering prowess gave him the ability to construct a number of guns, including an improved railgun that can shoot more than once before needing to recharge. He also created some personal equipment that allows him to jump much higher into the air and dash at incredible speeds. It's up to him to fight through the hordes of corrupted Worker Drones (and maybe even some Disassembly Drones?) that the Solver has set up.

I like this because Khan being an engineer makes this idea make a lot of sense flavorwise. I also think it could be interesting mechanically. For example, if you have to fight some Disassembly Drones, since, in the show, they have the ability to heal themselves (unless they get too damaged, in which case they Solver), that could be represented in game mechanically as "If a Disassembly Drone goes too long without taking damage, it will begin to heal over time. So, keep attacking it and don't take your attention away from it to ensure it can't heal, or use a railgun shot to defeat it instantly." I think this setting allows the story to complement the gameplay really nicely.
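As a rough sketch of how that healing rule might look in code: the numbers (3 seconds before healing starts, 10 health per second) and all names here are made up for illustration, and a real game would tune them.

```python
from dataclasses import dataclass


@dataclass
class DisassemblyDrone:
    health: float = 100.0
    max_health: float = 100.0
    heal_delay: float = 3.0   # seconds without damage before healing starts (assumed)
    heal_rate: float = 10.0   # health regained per second while healing (assumed)
    since_hit: float = 0.0    # seconds since the drone last took damage

    def take_damage(self, amount, railgun=False):
        """Any hit resets the heal timer; a railgun shot defeats the drone outright."""
        self.health = 0.0 if railgun else max(0.0, self.health - amount)
        self.since_hit = 0.0

    def update(self, dt):
        """Advance the drone by dt seconds, healing if it has been left alone."""
        if self.health <= 0.0:
            return
        self.since_hit += dt
        if self.since_hit >= self.heal_delay:
            self.health = min(self.max_health, self.health + self.heal_rate * dt)
```

Calling `update` once per frame with the frame time is enough to make "keep attacking it" the right play.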


Text

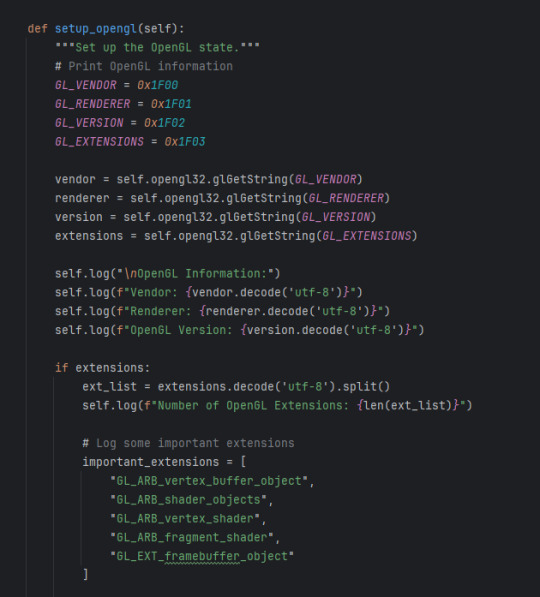

Programs get so spooky when you start using specific hex values as your constants

so because i am a crazy person i recently decided to try and learn how to use driver APIs to use the GPU in python without any pip-installed libraries. in going down this rabbit hole i have discovered that these APIs take specific hex values as constants to indicate different meanings. and it feels so spooky to just use magic numbers pulled from the ether

This has all been in service of being able to run shit like OpenGL in vanilla ArcGIS Pro, with no added libraries, so that i could develop a toolbox that could be shared and would run on anyone's project right out of the gate.

so here is a rotating cube as an arcgis toolbox
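To make the "magic numbers" a bit less spooky: they are just the values the Khronos headers assign to named tokens, so giving them the same names in Python goes a long way. A small sketch, with values from the OpenGL headers (the `describe_mask` helper is hypothetical and not part of the toolbox described above):

```python
# Token values as defined in the Khronos OpenGL headers.
GL_TRIANGLES = 0x0004
GL_DEPTH_TEST = 0x0B71
GL_DEPTH_BUFFER_BIT = 0x00000100
GL_COLOR_BUFFER_BIT = 0x00004000

# Bitmask arguments (for example, to glClear) are combined with bitwise OR.
clear_mask = GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT

BUFFER_BIT_NAMES = {
    GL_DEPTH_BUFFER_BIT: "GL_DEPTH_BUFFER_BIT",
    GL_COLOR_BUFFER_BIT: "GL_COLOR_BUFFER_BIT",
}


def describe_mask(mask):
    """Return the names of the known buffer bits set in mask."""
    return [name for bit, name in BUFFER_BIT_NAMES.items() if mask & bit]
```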


Note

hi julie! hope you're doing well. i'm not up to much new other than silly programming stuff with my partner, it was really fun learning opengl! i don't have any news regarding my sister but i wanted to say hi anyway. also i will be opening a new blog sometime soon so i can ask non-anonymously. anyway,

how are you? i try to keep up with your posts but there may be some that i missed. sisterly bliss looks fun! might try it myself. and i hope you figure out what that weird feeling was :>

that's all i think :3

- 🍇

Cute! I've never been good at programming, the best i could do was python and I am bad at that. "Cross platform graphics API" is magic words i barely understand, BUT i do feel confident in saying that OpenGL stands for Open Girls Love.

I very rarely get non anonymous asks (understandably) so yay that'll be fun and I hope you enjoy having a dedicated blog.

I'm all over the place honestly but mostly chill! Sisterly Bliss has been pretty good so far! I've been enjoying the story a lot and it's cool having a lot of the conflict based around their mum. although i will say that $24.95 USD is not a fun money conversion :(

At least I have the game DRM free so i can always just send it to people if they want (if i can work out an easy way to do that lol).

I'm mostly feeling better now, not really sure what the feeling was but at least it's gone. thank u for the lovely message and it's nice to hear from u :)

#askies#🍇 anon#not a fun currency conversion for me. probably worse for u if you are in turkey although honestly I'm not sure!#presumably it is worse though#do i get in trouble for saying i can give ppl the zip file? idk#i mean it's not the worst conversion in the world but it still feels like a bit of money for a kinda short visual novel yknow?


Text

Apple Unveils Mac OS X

Next Generation OS Features New “Aqua” User Interface

MACWORLD EXPO, SAN FRANCISCO

January 5, 2000

Reasserting its leadership in personal computer operating systems, Apple® today unveiled Mac® OS X, the next generation Macintosh® operating system. Steve Jobs demonstrated Mac OS X to an audience of over 4,000 people during his Macworld Expo keynote today, and over 100 developers have pledged their support for the new operating system, including Adobe and Microsoft. Pre-release versions of Mac OS X will be delivered to Macintosh software developers by the end of this month, and will be commercially released this summer.

“Mac OS X will delight consumers with its simplicity and amaze professionals with its power,” said Steve Jobs, Apple’s iCEO. “Apple’s innovation is leading the way in personal computer operating systems once again.”

The new technology Aqua, created by Apple, is a major advancement in personal computer user interfaces. Aqua features the “Dock” — a revolutionary new way to organize everything from applications and documents to web sites and streaming video. Aqua also features a completely new Finder which dramatically simplifies the storing, organizing and retrieving of files—and unifies these functions on the host computer and across local area networks and the Internet. Aqua offers a stunning new visual appearance, with luminous and semi-transparent elements such as buttons, scroll bars and windows, and features fluid animation to enhance the user’s experience. Aqua is a major advancement in personal computer user interfaces, from the same company that started it all in 1984 with the original Macintosh.

Aqua is made possible by Mac OS X’s new graphics system, which features all-new 2D, 3D and multimedia graphics. 2D graphics are performed by Apple’s new “Quartz” graphics system which is based on the PDF Internet standard and features on-the-fly PDF rendering, anti-aliasing and compositing—a first for any operating system. 3D graphics are based on OpenGL, the industry’s most-widely supported 3D graphics technology, and multimedia is based on the QuickTime™ industry standard for digital multimedia.

At the core of Mac OS X is Darwin, Apple’s advanced operating system kernel. Darwin is Linux-like, featuring the same Free BSD Unix support and open-source model. Darwin brings an entirely new foundation to the Mac OS, offering Mac users true memory protection for higher reliability, preemptive multitasking for smoother operation among multiple applications and fully Internet-standard TCP/IP networking. As a result, Mac OS X is the most reliable and robust Apple operating system ever.

Gentle Migration

Apple has designed Mac OS X to enable a gentle migration for its customers and developers from their current installed base of Macintosh operating systems. Mac OS X can run most of the over 13,000 existing Macintosh applications without modification. However, to take full advantage of Mac OS X’s new features, developers must “tune-up” their applications to use “Carbon”, the updated version of APIs (Application Program Interfaces) used to program Macintosh computers. Apple expects most of the popular Macintosh applications to be available in “Carbonized” versions this summer.

Developer Support

Apple today also announced that more than 100 leading developers have pledged their support for the new operating system, including Adobe, Agfa, Connectix, id, Macromedia, Metrowerks, Microsoft, Palm Computing, Quark, SPSS and Wolfram (see related supporting quote sheet).

Availability

Mac OS X will be rolled out over a 12 month period. Macintosh developers have already received two pre-releases of the software, and they will receive another pre-release later this month—the first to incorporate Aqua. Developers will receive the final “beta” pre-release this spring. Mac OS X will go on sale as a shrink-wrapped software product this summer, and will be pre-loaded as the standard operating system on all Macintosh computers beginning in early 2001. Mac OS X is designed to run on all Apple Macintosh computers using PowerPC G3 and G4 processor chips, and requires a minimum of 64 MB of memory.


Text

every time i look at game development

my brain hurts a lot. because it isn't the game development i'm interested in. it's the graphics stuff like opengl or vulkan and shaders and all that. i need to do something to figure out how to balance work and life better. i've got a course on vulkan i got a year ago i still haven't touched and every year that passes that i don't understand how to do graphics api stuffs, the more i scream internally. like i'd love to just sit down with a cup of tea and vibe out to learning how to draw lines. i'm just in the wrong kind of job tbh. a lot of life path stuff i coulda shoulda woulda. oh well.


Text

thinking of writing a self-aggrandizing "i'm a programmer transmigrated to a fantasy world but MAGIC is PROGRAMMING?!" story only the protagonist stumbles through 50 chapters of agonizing opengl tutorialization to create a 700-phoneme spell that makes a red light that slowly turns blue over ten seconds. this common spell doesn't have the common interface safeties for the purposes of simplicity and so if you mispronounce the magic words for 'ten seconds' maybe it'll continue for five million years or until you die of mana depletion, whichever comes first. what do you mean cast fireballs those involve a totally different api; none of that transfers between light-element spells and fire-element spells

chapter 51 is the protag giving up at being a wizard and settling down to be a middling accountant


Note

Not sure if you've been asked/ have answered this before but do you have any particular recommendations for learning to work with Vulkan? Any particular documentation or projects that you found particularly engaging/helpful?

vkguide is def a really great resource! Though I would def approach vulkan after probably learning a simpler graphics API like OpenGL and such, since there are a lot of additional intuitions to understand around why Vulkan/DX12/Metal are designed the way that they are.

Also I personally use Vulkan-Hpp to help make my vulkan code a lot cleaner and more C++-like rather than directly using the C api and exposing myself to the possibility of more mistakes (like forgetting to put the right structure type enum and such). It comes with some utils and macros for making some of the detailed bits of Vulkan a bit easier to manage like pNext chains and such!
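To illustrate the "forgetting the structure type enum" class of mistake: in the C API every create-info struct starts with an `sType` field that the caller must set by hand. The sketch below mirrors `VkInstanceCreateInfo` with `ctypes` and uses a small factory that always fills in `sType`, which is the general idea behind wrappers like Vulkan-Hpp. This is Python, not Vulkan-Hpp itself, and `make_instance_create_info` is a hypothetical helper; pointer fields are simplified to `c_void_p`.

```python
import ctypes

VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO = 1  # value from vulkan_core.h


class VkInstanceCreateInfo(ctypes.Structure):
    # Field order follows the C struct; pointers are simplified to void*.
    _fields_ = [
        ("sType", ctypes.c_int32),
        ("pNext", ctypes.c_void_p),
        ("flags", ctypes.c_uint32),
        ("pApplicationInfo", ctypes.c_void_p),
        ("enabledLayerCount", ctypes.c_uint32),
        ("ppEnabledLayerNames", ctypes.c_void_p),
        ("enabledExtensionCount", ctypes.c_uint32),
        ("ppEnabledExtensionNames", ctypes.c_void_p),
    ]


def make_instance_create_info(**fields):
    """Build the struct with sType always set, so it cannot be forgotten."""
    info = VkInstanceCreateInfo(**fields)
    info.sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO
    return info


info = make_instance_create_info(enabledLayerCount=0)
```

A zero-initialised struct built by hand would have `sType == 0`, which is a different structure type and exactly the sort of slip the validation layers complain about.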


Note

Hi! 😄

Trying to use reshade on Disney Dreamlight Valley, and I’m using a YouTube video for all games as I haven’t found a tutorial for strictly DDV. The first problem I’ve run into is I’m apparently supposed to click home when I get to the menu of the game but nothing pops up so I can’t even get past that. It shows everything downloaded in the DDV files tho. 😅 never done this before so I’m very confused. Thanks!

I haven't played DDV so I'm not sure if it has any quirks or special requirements for ReShade.

Still, for general troubleshooting, make sure that you've installed ReShade into the same folder where the main DDV exe is located.

Does the ReShade banner come up at the top when you start the game? If so, then ReShade is at least installed in the correct place.

If it doesn't show up despite being in the correct location, make sure you've selected the correct rendering api as part of the installation process. You know the part where it asks you to choose between directx 9/10/11/12 or OpenGL/Vulkan? If you choose the wrong one of those ReShade won't load.

You can usually find out the rendering api of most games on PC Gaming Wiki. Here's the page for Disney Dreamlight Valley. It seems to suggest it supports Direct X 10 and 11.

If you're certain everything is installed correctly and the banner is showing up as expected but you still can't open the ReShade menu, you can change the Home key to something else just in case DDV is blocking the use of that for some reason.

Open reshade.ini in a text editor and look for the INPUT section.

You'll see a line that says

KeyOverlay=36,0,0,0

36 is the keycode for Home (ReShade stores Windows virtual-key codes here, which match the javascript keycodes for keys like these). You can change that to something else.

Check the game's hotkeys to find something that isn't already assigned to a command. For example, a lot of games use F5 to quick save, and F9 to quick load, so you might need to avoid using those. In TS4 at the moment I use F6 to open the overlay, because it's not assigned to anything in the game. You can choose whatever you want. You can find a list of javascript keycodes here. F6, for example, is 117, so you'd change the line to read

KeyOverlay=117,0,0,0

But you can choose whatever you want. Just remember to check it isn't already used by the game.

Note: you can usually do this in the ReShade menu, but since it isn't opening for you at the moment this is a way to change that key manually
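If you'd rather script the change than edit by hand, here is a minimal sketch using Python's standard `configparser`. It assumes the file has an `[INPUT]` section with a `KeyOverlay` line exactly as shown above; the function name is made up, and the sample text stands in for a real reshade.ini.

```python
import configparser
import io

VK_HOME = 0x24  # 36
VK_F6 = 0x75    # 117


def set_overlay_key(ini_text, keycode):
    """Return ini_text with the first number of INPUT/KeyOverlay replaced."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key names like KeyOverlay case-sensitive
    parser.read_string(ini_text)
    parts = parser["INPUT"]["KeyOverlay"].split(",")
    parts[0] = str(keycode)  # the remaining numbers are left untouched
    parser["INPUT"]["KeyOverlay"] = ",".join(parts)
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


sample = "[INPUT]\nKeyOverlay=36,0,0,0\n"
updated = set_overlay_key(sample, VK_F6)
```

Back up the original file first: `configparser` rewrites the whole file and drops any comments in it.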

Beyond that, I'm not sure what would be stopping the menu from opening. If you've exhausted the options above, you can try asking over in the official ReShade discord server. Please give them as much information as possible in as clear and uncluttered and to-the-point language as possible to increase your chances of someone being able (and willing) to help.


Text

Wish List For A Game Profiler

I want a profiler for game development. No existing profiler currently collects the data I need. No existing profiler displays it in the format I want. No existing profiler filters and aggregates profiling data for games specifically.

I want to know what makes my game lag. Sure, I also care about certain operations taking longer than usual, or about inefficient resource usage in the worker thread. The most important question that no current profiler answers is: In the frames that currently do lag, what is the critical path that makes them take too long? Which function should I optimise first to reduce lag the most?

I know that, with the right profiler, these questions could be answered automatically.

Hybrid Sampling Profiler

My dream profiler would be a hybrid sampling/instrumenting design. It would be a sampling profiler like Austin (https://github.com/P403n1x87/austin), but a handful of key functions would be instrumented in addition to the sampling: Displaying a new frame/waiting for vsync, reading inputs, draw calls to the GPU, spawning threads, opening files and sockets, and similar operations should always be tracked. Even if displaying a frame is not a heavy operation, it is still important to measure exactly when it happens, if not how long it takes. If a draw call returns right away, and the real work on the GPU begins immediately, it’s still useful to know when the GPU started working. Without knowing exactly when inputs are read, and when a frame is displayed, it is difficult to know if a frame is lagging. Especially when those operations are fast, they are likely to be missed by a sampling profiler.
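The instrumenting half could be as small as a decorator that timestamps every call to a key function, however short the call is. A minimal sketch, assuming a global `EVENTS` list as the collection point (all names here are hypothetical, and the sampling half is not shown):

```python
import time
from functools import wraps

EVENTS = []  # (name, start_seconds, duration_seconds), in call order


def instrumented(name):
    """Record when each call starts and how long it takes, even if it is fast."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                EVENTS.append((name, start, time.perf_counter() - start))
        return wrapper
    return decorator


@instrumented("present_frame")
def present_frame():
    """Stand-in for swapping buffers / waiting for vsync."""
    return None


present_frame()
```

The start timestamps of consecutive `present_frame` events give exact frame boundaries, which the sampled stacks can then be bucketed into.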

Tracking Other Resources

It would be a good idea to collect CPU core utilisation, GPU utilisation, and memory allocation/usage as well. What does it mean when one thread spends all of its time in that function? Is it idling? Is it busy-waiting? Is it waiting for another thread? Which one?

It would also be nice to know if a thread is waiting for IO. This is probably a “heavy” operation and would slow the game down.

There are many different vendor-specific tools for GPU debugging, some old ones that worked well for OpenGL but are no longer developed, open-source tools that require source code changes in your game, and the newest ones directly from GPU manufacturers that only support DirectX 12 or Vulkan, but no OpenGL or graphics card that was built before 2018. It would probably be better to err on the side of collecting less data and supporting more hardware and graphics APIs.

The profiler should collect enough data to answer questions like: Why is my game lagging even though the CPU is utilised at 60% and the GPU is utilised at 30%? During that function call in the main thread, was the GPU doing something, and were the other cores idling?

Engine/Framework/Scripting Aware

The profiler knows which samples/stack frames are inside gameplay or engine code, native or interpreted code, project-specific or third-party code.

In my experience, it’s not particularly useful to know that the code spent 50% of the time in ceval.c, or 40% of the time in SDL_LowerBlit, but that’s the level of granularity provided by many profilers.

Instead, the profiler should record interpreted code, and allow the game to set a hint if the game is in turn interpreting code. For example, if there is a dialogue engine, that engine could set a global “interpreting dialogue” flag and a “current conversation file and line” variable based on source maps, and the profiler would record those, instead of stopping at the dialogue interpreter-loop function.

Of course, this feature requires some cooperation from the game engine or scripting language.
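On the game side, that cooperation might look like a context manager that pushes a hint while interpreted content is running. This is a sketch under assumptions: `profiler_hint` and `current_hint` are invented names, and the file name and line number are placeholders.

```python
from contextlib import contextmanager

_hint_stack = []  # innermost hint is last


@contextmanager
def profiler_hint(kind, **details):
    """Mark the enclosed code as interpreting something the profiler should record."""
    _hint_stack.append((kind, details))
    try:
        yield
    finally:
        _hint_stack.pop()


def current_hint():
    """What a sampler would read alongside the native call stack."""
    return _hint_stack[-1] if _hint_stack else None


# Hypothetical dialogue engine stepping through a conversation file.
with profiler_hint("dialogue", file="intro.yarn", line=12):
    seen_inside = current_hint()
seen_outside = current_hint()
```

A sampler that reads `current_hint()` with each sample can attribute time to the conversation line instead of to the interpreter loop.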

Catching Common Performance Mistakes

With a hybrid sampling/instrumenting profiler that knows about frames or game state update steps, it is possible to instrument many or most “heavy“ functions. Maybe this functionality should be turned off by default. If most “heavy functions“, for example “parsing a TTF file to create a font object“, are instrumented, the profiler can automatically highlight a mistake when the programmer loads a font from disk during every frame, a hundred frames in a row.

This would not be part of the sampling stage, but part of the visualisation/analysis stage.
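As a sketch of that analysis step: given, for each frame, the set of instrumented "heavy" calls that happened in it, find the ones that recur in long unbroken runs of frames. The labels and the threshold of 100 frames are illustrative, and the function name is hypothetical.

```python
def find_repeated_heavy_calls(frames, min_run=100):
    """frames: iterable of sets of heavy-call labels, one set per frame.

    Returns {label: longest run of consecutive frames} for labels whose
    longest run is at least min_run.
    """
    current = {}  # label -> length of the run ending at the current frame
    longest = {}
    for calls in frames:
        for label in list(current):
            if label not in calls:
                del current[label]  # the run is broken
        for label in calls:
            current[label] = current.get(label, 0) + 1
            longest[label] = max(longest.get(label, 0), current[label])
    return {label: run for label, run in longest.items() if run >= min_run}


# One legitimate load at startup, then a font parsed every frame for 100 frames.
frames = [{"load_level"}] + [{"parse_ttf"} for _ in range(100)]
suspects = find_repeated_heavy_calls(frames)
```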

Filtering for User Experience

If the profiler knows how long a frame takes, and how much time is spent waiting during each frame, we can safely disregard those frames that complete quickly, with some time to spare. The frames that concern us are those that lag, or those that are dropped. For example, imagine a game spends 30% of its CPU time on culling, and 10% on collision detection. You would think to optimise the culling. What if the collision detection takes 1 ms during most frames, culling always takes 8 ms, but whenever the player fires a bullet, the collision detection causes a lag spike? The time spent on culling is not the problem here.

This would probably not be part of the sampling stage, but part of the visualisation/analysis stage. Still, you could use this information to discard “fast enough“ frames and re-use the memory, and only focus on keeping profiling information from the worst cases.
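A small sketch of that filter, using the culling/collision example: the 8 ms and 1 ms figures come from the text, while the 20 ms spike, the 16.7 ms frame budget, and the function name are assumptions.

```python
from collections import defaultdict

FRAME_BUDGET_MS = 16.7  # assumed 60 fps target


def slowest_in_lagging_frames(frames, budget_ms=FRAME_BUDGET_MS):
    """Sum per-function time over frames that blew the budget, worst first."""
    totals = defaultdict(float)
    for frame in frames:
        if sum(frame.values()) > budget_ms:
            for func, ms in frame.items():
                totals[func] += ms
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


normal = {"culling": 8.0, "collision": 1.0}
spike = {"culling": 8.0, "collision": 20.0}  # assumed spike when a bullet is fired
frames = [normal] * 98 + [spike] * 2

ranking = slowest_in_lagging_frames(frames)
```

Across all 100 frames culling costs far more in total (800 ms against 138 ms), but in the two frames that actually lag, collision detection is the larger share.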

Aggregating By Code Paths

This is easier when you don’t use an engine, but it can probably also be done if the profiler is “engine-aware”. It would require some per-engine custom code though. Instead of saying “The game spent 30% of the time doing vector addition“, or smarter “The game spent 10% of the frames that lagged most in the MobAIRebuildMesh function“, I want the game to distinguish between game states like “inventory menu“, “spell targeting (first person)“ or “switching to adjacent area“. If the game does not use a data-driven engine, but multiple hand-written game loops, these states can easily be distinguished (but perhaps not labelled) by comparing call stacks: Different states with different game loops call the code to update the screen from different places – and different code paths could have completely different performance characteristics, so it makes sense to evaluate them separately.

Because the hypothetical hybrid profiler instruments key functions, enough call stack information to distinguish different code paths is usually available, and the profiler might be able to automatically distinguish between the loading screen, the main menu, and the game world, without any need for the code to give hints to the profiler.

This could also help to keep the memory usage of the profiler down without discarding too much interesting information, by only keeping the 100 worst frames per code path. This way, the profiler can collect performance data on the gameplay without running out of RAM during the loading screen.
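Keeping only the N worst frames per code path is a bounded min-heap per key. A minimal sketch, assuming the code path is represented as a tuple of function names taken from the call stack of the instrumented frame-display call (the class and its methods are hypothetical):

```python
import heapq
from collections import defaultdict


class WorstFrames:
    """Keep only the `keep` slowest frames for each code path."""

    def __init__(self, keep=100):
        self.keep = keep
        self._heaps = defaultdict(list)  # code path -> min-heap of (duration_ms, frame_id)

    def record(self, code_path, frame_id, duration_ms):
        heap = self._heaps[code_path]
        item = (duration_ms, frame_id)
        if len(heap) < self.keep:
            heapq.heappush(heap, item)
        elif item > heap[0]:
            heapq.heapreplace(heap, item)  # evict the fastest frame kept so far

    def worst(self, code_path):
        """Return the kept frames for a code path, slowest first."""
        return sorted(self._heaps.get(code_path, []), reverse=True)


tracker = WorstFrames(keep=2)
menu = ("main", "menu_loop", "present_frame")
for frame_id, ms in enumerate([5.0, 30.0, 12.0]):
    tracker.record(menu, frame_id, ms)
```

Memory use is bounded by `keep` times the number of distinct code paths, so a long loading screen cannot crowd out gameplay frames.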

In a data-driven engine like Unity, I’d expect everything to happen all the time, on the same, well-optimised code path. But this is not a wish list for a Unity profiler. This is a wish list for a profiler for your own custom game engine, glue code, and dialogue trees.

All I need is a profiler that is a little smarter, that is aware of SDL, OpenGL, Vulkan, and YarnSpinner or Ink. Ideally, I would need somebody else to write it for me.


Text

Writeup: Forcing Minecraft to play on a Trident Blade 3D.

The first official companion writeup to a video I've put out!

youtube

So. Uh, yeah. Trident Blade 3D. If you've seen the video already, it's... not good. Especially in OpenGL.

Let's kick things off with a quick rundown of the specs of the card, according to AIDA64:

Trident Blade 3D - specs

Year released: 1999

Core: 3Dimage 9880, 0.25um (250nm) manufacturing node, 110MHz

Driver version: 4.12.01.2229

Interface: AGP 2x @ 1x speed (wouldn't go above 1x despite driver and BIOS support)

PCI device ID: 1023-9880 / 1023-9880 (Rev 3A)

Mem clock: 110MHz real/effective

Mem bus/type: 8MB 64-bit SDRAM, 880MB/s bandwidth

ROPs/TMUs/Vertex Shaders/Pixel Shaders/T&L hardware: 1/1/0/0/No

DirectX support: DirectX 6

OpenGL support:

- 100% (native) OpenGL 1.1 compliant

- 25% (native) OpenGL 1.2 compliant

- 0% compliant beyond OpenGL 1.2

- Vendor string:

Vendor: Trident

Renderer: Blade 3D

Version: 1.1.0

And as for the rest of the system:

Windows 98 SE w/KernelEX 2019 updates installed

ECS K7VTA3 3.x

AMD Athlon XP 1900+ @ 1466MHz

512MB DDR PC3200 (single stick of OCZ OCZ400512P3) 3.0-4-4-8 (CL-RCD-RP-RAS)

Hitachi Travelstar DK23AA-51 4200RPM 5GB HDD

IDK what that CPU cooler is but it does the job pretty well

And now, with specs done and out of the way, my notes!

As mentioned earlier, the Trident Blade 3D is mind-numbingly slow when it comes to OpenGL. As in, to the point where at least natively during actual gameplay (Minecraft, because I can), it is absolutely beaten to a pulp using AltOGL, an OpenGL-to-Direct3D6 "wrapper" that translates OpenGL API calls to DirectX ones.

Normally, it can be expected that performance using the wrapper is about equal to native OpenGL, give or take some fps depending on driver optimization, but this card?

The Blade 3D may as well be better off like the S3 ViRGE by having no OpenGL ICD shipped in any driver release, period.

For the purposes of this writeup, I will stick to a very specific version of Minecraft: in-20091223-1459, the very first version of what would soon become Minecraft's "Indev" phase, though this version notably lacks any survival features and aside from the MD3 models present, is indistinguishable from previous versions of Classic. All settings are at their absolute minimum, and the window size is left at default, with a desktop resolution of 1024x768 and 16-bit color depth.

(Also the 1.5-era launcher I use is incapable of launching anything older than this version anyway)

Though known to be unstable (as seen in the full video), gameplay in Minecraft Classic using AltOGL reaches a steady 15 fps, nearly triple that of the native OpenGL ICD that ships with Trident's drivers for the card. AltOGL also is known to often have issues with fog rendering on older cards, and the Blade 3D is no exception... though, I believe it may be far more preferable to have no working fog than... well, whatever the heck the Blade 3D is trying to do with its native ICD.

See for yourself: (don't mind the weirdness at the very beginning. OBS had a couple of hiccups)

youtube

youtube

Later versions of Minecraft were also tested, where I found that the Trident Blade 3D follows the same, as I call them, "version boundaries" as the SiS 315(E) and the ATi Rage 128, both of which are cards that easily run circles around the Blade 3D.

Version ranges mentioned are inclusive of their endpoints.

Infdev 1.136 (inf-20100627) through Beta b1.5_01 exhibit world-load crashes on both the SiS 315(E) and Trident Blade 3D.

Alpha a1.0.4 through Beta b1.3_01/PC-Gamer demo crash on the title screen due to the animated "falling blocks"-style Minecraft logo on both the ATi Rage 128 and Trident Blade 3D.

All the bugginess of two much better cards, and none of the performance that came with those bugs.

Interestingly, versions even up to and including Minecraft release 1.5.2 are able to launch to the main menu, though by then the already-terrible lag present in all prior versions of the game when run on the Blade 3D makes it practically impossible to even press the necessary buttons to load into a world in the first place. Though this card is running in AGP 1x mode, I sincerely doubt that running it at its supposedly-supported 2x mode would bring much if any meaningful performance increase.

Lastly, ClassiCube. ClassiCube is a completely open-source reimplementation of Minecraft Classic in C, which allows it to bypass the overhead normally associated with Java's VM platform. However, this does not grant it any escape from the black hole of performance that is the Trident Blade 3D's OpenGL ICD. Not only this, but oddly, the red and blue color channels appear to be switched by the Blade 3D, resulting in a very strange looking game that chugs along at single-digits. As for the game's DirectX-compatible version, the requirement of DirectX 9 support locks out any chance for the Blade 3D to run ClassiCube with any semblance of performance. Also AltOGL is known to crash ClassiCube so hard that a power cycle is required.
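For reference, this is what a red/blue swap does to pixel data. In general this symptom often comes from an RGBA/BGRA byte-order mismatch somewhere between the application and the driver, but I have not established that this is the cause on the Blade 3D; the sketch only shows the effect on tightly packed 8-bit RGBA pixels, and the function name is made up.

```python
def swap_red_blue(pixels: bytes) -> bytes:
    """Swap the R and B bytes of tightly packed 4-byte pixels (RGBA <-> BGRA)."""
    if len(pixels) % 4:
        raise ValueError("expected 4 bytes per pixel")
    out = bytearray(pixels)
    out[0::4], out[2::4] = pixels[2::4], pixels[0::4]
    return bytes(out)


pure_red = bytes([255, 0, 0, 255])
shown_as = swap_red_blue(pure_red)  # comes out as pure blue
```

Applying the swap twice returns the original data, so green and grey surfaces look normal while anything red or blue looks wrong.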

Interestingly, a solid half of the accelerated pixel formats supported by the Blade 3D, according to the utility GLInfo, are "render to bitmap" modes, which I'm told is a "render to texture" feature that normally isn't seen on cards as old as the Blade 3D. Or in fact, at least in my experience, any cards outside of the Blade 3D. I've searched through my saved GLInfo reports across many different cards, only to find each one supporting the usual "render to window" pixel format.

And with that, for now, this is the end of the very first post-video writeup on this blog. Thank you for reading if you've made it this far.

I leave you with this delightfully-crunchy clip of the card's native OpenGL ICD running in 256-color mode, which fixes the rendering problems but... uh, yeah. It's a supported accelerated pixel format, but "accelerated" is a stretch like none other. 32-bit color is supported as well, but it performs about identically to the 8-bit color mode--that is, even worse than 16-bit color performs.

At least it fixes the rendering issues I guess.

youtube

youtube

#youtube#techblog#not radioshack#my posts#writeup#Forcing Minecraft to play on a Trident Blade 3D#Trident Blade 3D#Trident Blade 3D 9880


Text

Mesh topologies: done!

Followers may recall that on Thursday I implemented wireframes and Phong shading in my open-source Vulkan project. Both these features make sense only for 3-D meshes composed of polygons (triangles in my case).

The next big milestone was to support meshes composed of lines. Because of how Vulkan handles line meshes, the additional effort to support other "topologies" (point meshes, triangle-fan meshes, line-strip meshes, and so on) is slight, so I decided to support those as well.

I had a false start where I was using integer codes to represent different topologies. Eventually I realized that defining an "enum" would be a better design, so I did some rework to make it so.
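To show why the enum is the better design, here is a sketch of the two approaches side by side. The project itself is written in Java; this is Python purely for illustration, and the names are not taken from the repo. The numeric values match Vulkan's `VkPrimitiveTopology`.

```python
from enum import IntEnum


class Topology(IntEnum):
    POINT_LIST = 0
    LINE_LIST = 1
    LINE_STRIP = 2
    TRIANGLE_LIST = 3
    TRIANGLE_STRIP = 4
    TRIANGLE_FAN = 5


def primitive_count(topology: Topology, vertex_count: int) -> int:
    """Number of points, lines, or triangles drawn from vertex_count vertices."""
    if topology is Topology.POINT_LIST:
        return vertex_count
    if topology is Topology.LINE_LIST:
        return vertex_count // 2
    if topology is Topology.LINE_STRIP:
        return max(vertex_count - 1, 0)
    if topology is Topology.TRIANGLE_LIST:
        return vertex_count // 3
    # TRIANGLE_STRIP and TRIANGLE_FAN both add one triangle per extra vertex.
    return max(vertex_count - 2, 0)
```

With bare integer codes, a typo like `7` flows through silently; with the enum, `Topology(7)` raises `ValueError` at the point where the bad value enters.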

I achieved the line-mesh milestone earlier today (Monday) at commit ce7a409. No screenshots yet, but I'll post one soon.

In parallel with this effort, I've been doing what I call "reconciliation" between my Vulkan graphics engine and the OpenGL engine that Yanis and I wrote last April. Reconciliation occurs when I have 2 classes that do very similar things, but the code can't be reused in the form of a library. The idea is to make the source code of the 2 versions as similar as possible, so I can easily see the differences. This facilitates porting features and fixes back and forth between the 2 versions.

I'm highly motivated to make my 2 engine repos as similar as possible: not just similar APIs, but also the same class/method/variable names, the same coding style, similar directory structures, and so on. Once I can easily port code back and forth, my progress on the Vulkan engine should accelerate considerably. (That's how the Vulkan project got user-input handling so quickly, for instance.)

The OpenGL engine will also benefit from reconciliation. Already it's gained a model-import package. That's something we never bothered to implement last year. Last month I wrote one for the Vulkan engine (as part of the tutorial) and already the projects are similar enough that I was able to port it over without much difficulty.

#open source#vulkan#java#software development#accomplishments#github#3d graphics#coding#jvm#3d mesh#opengl#polygon#3d model#making progress#work in progress#topology#milestones

2 notes

·

View notes

Text

(the 'one company' in the Flash case is primarily Apple, although the decision to decisively deprecate and kill it was made across all the browser manufacturers. Apple are also the ones who decided not to let Vulkan and soon OpenGL run on their devices and to have their own graphics API, which leads to the current messy situation where graphics APIs seem to be multiplying endlessly and you have to rely on some kind of abstraction package to transpile between Vulkan, DX12, Metal, WebGPU, OpenGL, ...)

1 note

·

View note

Text

How C and C++ Power the Modern World: Key Applications Explained

In an era driven by digital innovation, some of the most impactful technologies are built upon languages that have stood the test of time. Among them, C and C++ remain foundational to the software ecosystem, serving as the backbone of countless systems and applications that fuel the modern world. With exceptional performance, low-level memory control, and unparalleled portability, these languages continue to be indispensable in various domains.

Operating Systems and Kernels

Virtually every modern operating system owes its existence to C and C++. Windows, macOS, Linux, and countless UNIX variants are either fully or partially written in these languages. The reason is clear—these systems demand high efficiency, direct hardware interaction, and fine-grained resource control.

C and C++ programming applications in OS development enable systems to manage memory, execute processes, and handle user interactions with minimal latency. The modular architecture of kernels, drivers, and libraries is often sculpted in C for stability and maintainability, while C++ adds object-oriented capabilities when needed.

Embedded Systems and IoT

Embedded systems—the silent enablers of everyday devices—rely heavily on C and C++. From microwave ovens and washing machines to automotive control systems and industrial automation, these languages are instrumental in programming microcontrollers and real-time processors.

Due to the deterministic execution and small memory footprint required in embedded environments, C and C++ programming applications dominate the firmware layer. In the rapidly expanding Internet of Things (IoT) landscape, where devices must function autonomously with minimal energy consumption, the control and optimization offered by these languages are irreplaceable.

Game Development and Graphics Engines

Speed and performance are paramount in the gaming world. Game engines like Unreal Engine are written in C++, and graphics APIs such as OpenGL and Vulkan are specified as C interfaces whose drivers are typically implemented in C and C++. This direct access to GPU hardware and system memory allows developers to craft graphically rich, high-performance games.

From rendering photorealistic environments to simulating physics engines in real time, C and C++ programming applications provide the precision and power that immersive gaming demands. Moreover, their scalability supports development across platforms—PC, console, and mobile.

Financial Systems and High-Frequency Trading

In finance, microseconds can make or break a deal. High-frequency trading platforms and real-time data processing engines depend on the unmatched speed of C and C++. These languages enable systems to handle vast volumes of data and execute trades with ultra-low latency.

C and C++ programming applications in fintech range from algorithmic trading engines and risk analysis tools to database systems and high-performance APIs. Their deterministic behavior and optimized resource utilization ensure reliability in environments where failure is not an option.

Web Browsers and Rendering Engines

Behind every sleek user interface of a web browser lies a robust core built with C and C++. Google Chrome’s V8 JavaScript engine and Mozilla Firefox’s Gecko rendering engine are developed using these languages. They parse, compile, and execute web content at blazing speeds.

C and C++ programming applications in browser architecture enable low-level system access for networking, security protocols, and multimedia rendering. These capabilities translate into faster load times, improved stability, and better overall performance.

Database Management Systems

Databases are at the heart of enterprise computing. Many relational database systems, including MySQL, PostgreSQL, and Oracle, are built using C and C++. The need for high throughput, efficient memory management, and concurrent processing makes these languages the go-to choice.

C and C++ programming applications allow databases to handle complex queries, transaction management, and data indexing with remarkable efficiency. Their capacity to manage and manipulate large datasets in real time is crucial for big data and analytics applications.

C and C++ continue to thrive not because they are relics of the past, but because they are still among the most effective tools for building high-performance, scalable, and secure systems. The diversity and depth of C and C++ programming applications underscore their enduring relevance in powering the technologies that shape our digital lives. From embedded controllers to the engines behind global finance, these languages remain quietly omnipresent and unmistakably essential.

0 notes

Photo

China's first 6nm domestic GPU, the G100 from Lisuan Technology, has successfully powered on, signaling a major step in China's tech independence. This graphics card promises performance comparable to the RTX 4060, although skepticism remains about its actual capabilities.

Developed using a 6nm process node, the G100 is based on Lisuan's proprietary TrueGPU architecture, which is rumored to be entirely in-house. While details are scarce, it is likely produced by China's leading foundry, SMIC, given U.S. export restrictions.

Targeting the gaming market, the G100 supports APIs like DirectX 12, Vulkan 1.3, and OpenGL 4.6, hinting at decent performance for lightweight gaming and AI workloads. Software optimization and driver maturity will be critical for its success.

Though commercialization may not happen until 2026, this milestone demonstrates significant progress for China's GPU efforts. It highlights the importance of innovation, extensive R&D, and overcoming supply chain hurdles. Will this mark the beginning of a new era in Chinese chip manufacturing? Stay tuned as benchmark tests and further details emerge!

What are your thoughts on China's push for independent GPU development? Share below!

#ChinaTech #GPUInnovation #GraphicsCard #GamingHardware #MadeInChina #TechIndependence #AI #ChipManufacturing #SMIC #TechProgress #FutureOfGPU

0 notes