#Satellite Receiver Software


1506TV NEW SOFTWARE SEPTEMBER 2024 DOWNLOAD

#1506tv all software#1506TV DVB Finder Software#1506TV to 1506FV Software#Download#Receiver Software#Satellite Receiver Software#Sunplus Receiver

0 notes


Aip Manufacturers in India

#time and frequency synchronization solutions asia#meinberg products india#IEEE 1588 Solutions#PCI Express Radio Clocks Solutions#USB Radio Clocks Solutions#GNSS Systems#GPS satellite receiver#GLONASS satellite receiver#Galileo satellite receiver#BeiDou satellite receiver#Meinberg Software#Meinberg Germany#Distributor of Meinberg Germany#Solutions of Meinberg Germany#network time servers india#meinberg software maharashtra

0 notes


A new working conspiracy theory regarding election integrity is spreading among Democratic voters after it was revealed that Starlink, the satellite internet service operated by Elon Musk’s SpaceX, was used for connectivity on voting tabulators.

A few counties across the U.S., notably several in swing states, have come forward about connectivity and functional issues with the voting tabulators that were used on Election Day. On top of that, some of these polling places received bomb threats and had to be evacuated.

This, alongside Musk’s comments about knowing the election results 4 hours before the race was called by the Associated Press, seems suspicious at the very least to many voters.

Many are taking this as more fuel to demand an investigation into both Trump and Musk’s involvement in the 2024 U.S. presidential election.

Starlink “connectivity” issue:

youtube

Cochise County, AZ:

Centre County, PA:

Battle Creek, MI:

[ID:

Five screenshots.

The first is a screenshot of search results that shows two separate articles from KOLD and KGUN 9 about bomb threats that shut down the elections office in Cochise County, AZ.

The second is a Facebook update by the Cochise County, AZ government. It reads:

"Cochise County Election Update: The tabulation machines are not working properly and the vendor, Election Systems & Software (ESS) has been notified of the situation. Their technicians will arrive this afternoon to address the issue. The Secretary of State has been made aware of our tabulators. Once the tabulators are working, we will send another notification to the public and continue tabulating ballots. Thank you for your continued patience."

The third is a headline of an article by Geoff Ruston that reads "Centre County Elections Office Temporarily Evacuated After Bomb Threat."

The fourth is a screenshot of an article by Newsweek showing as a search result. The headline reads "Centre County Rescans 13,000 Ballots After Software Issue Delayed Election ..."

The final screenshot is of an article by the Detroit Free Press as a search result. The headline reads "Battle Creek election glitch undercounted thousands of absentee..."

/end ID]

#us news#starlink#elon musk#elongated muskrat#us elections#presidential election#2024 presidential election#conspiracies#Youtube

25 notes


NASA and Italian Space Agency test future lunar navigation technology

As the Artemis campaign leads humanity to the moon and eventually Mars, NASA is refining its state-of-the-art navigation and positioning technologies to guide a new era of lunar exploration.

A technology demonstration helping pave the way for these developments is the Lunar GNSS Receiver Experiment (LuGRE) payload, a joint effort between NASA and the Italian Space Agency to demonstrate the viability of using existing GNSS (Global Navigation Satellite System) signals for positioning, navigation, and timing on the moon.

During its voyage on an upcoming delivery to the moon as part of NASA's CLPS (Commercial Lunar Payload Services) initiative, LuGRE would demonstrate acquiring and tracking signals from both the U.S. GPS and European Union Galileo GNSS constellations during transit to the moon, during lunar orbit, and finally for up to two weeks on the lunar surface itself.

The LuGRE payload is one of the first demonstrations of GNSS signal reception and navigation on and around the lunar surface, an important milestone for how lunar missions will access navigation and positioning technology.

If successful, LuGRE would demonstrate that spacecraft can use signals from existing GNSS satellites at lunar distances, reducing their reliance on ground-based stations on the Earth for lunar navigation.

Today, GNSS constellations support essential services like navigation, banking, power grid synchronization, cellular networks, and telecommunications. Near-Earth space missions use these signals in flight to determine critical operational information like location, velocity, and time.

NASA and the Italian Space Agency want to expand the boundaries of GNSS use cases. In 2019, the Magnetospheric Multiscale (MMS) mission broke the world record for farthest GPS signal acquisition 116,300 miles from the Earth's surface—nearly half of the 238,900 miles between Earth and the moon. Now, LuGRE could double that distance.

"GPS makes our lives safer and more viable here on Earth," said Kevin Coggins, NASA deputy associate administrator and SCaN (Space Communications and Navigation) Program manager at NASA Headquarters in Washington. "As we seek to extend humanity beyond our home planet, LuGRE should confirm that this extraordinary technology can do the same for us on the moon."

Reliable space communication and navigation systems play a vital role in all NASA missions, providing crucial connections from space to Earth for crewed and uncrewed missions alike. Using a blend of government and commercial assets, NASA's Near Space and Deep Space Networks support science, technology demonstrations, and human spaceflight missions across the solar system.

"This mission is more than a technological milestone," said Joel Parker, policy lead for positioning, navigation, and timing at NASA's Goddard Space Flight Center in Greenbelt, Maryland.

"We want to enable more and better missions to the moon for the benefit of everyone, and we want to do it together with our international partners."

The data-gathering LuGRE payload combines NASA-led systems engineering and mission management with receiver software and hardware developed by the Italian Space Agency and their industry partner Qascom—the first Italian-built hardware to operate on the lunar surface.

Any data LuGRE collects is intended to open the door for use of GNSS to all lunar missions, not just those by NASA or the Italian Space Agency. Approximately six months after LuGRE completes its operations, the agencies will release its mission data to broaden public and commercial access to lunar GNSS research.

"A project like LuGRE isn't about NASA alone," said NASA Goddard navigation and mission design engineer Lauren Konitzer. "It's something we're doing for the benefit of humanity. We're working to prove that lunar GNSS can work, and we're sharing our discoveries with the world."

The LuGRE payload is one of 10 science experiments launching to the lunar surface on this delivery through NASA's CLPS initiative.

Through CLPS, NASA works with American companies to provide delivery and quantity contracts for commercial deliveries to further lunar exploration and the development of a sustainable lunar economy. As of 2024, the agency has 14 private partners on contract for current and future CLPS missions.

Demonstrations like LuGRE could lay the groundwork for GNSS-based navigation systems on the lunar surface. Bridging these existing systems with emerging lunar-specific navigation solutions has the potential to define how all spacecraft navigate lunar terrain in the Artemis era.

The payload is a collaborative effort between NASA's Goddard Space Flight Center and the Italian Space Agency.

8 notes


SkyRoof: New Ham Satellite Tracking and SDR Receiver Software

https://www.rtl-sdr.com/skyroof-new-ham-satellite-tracking-and-sdr-receiver-software/

3 notes


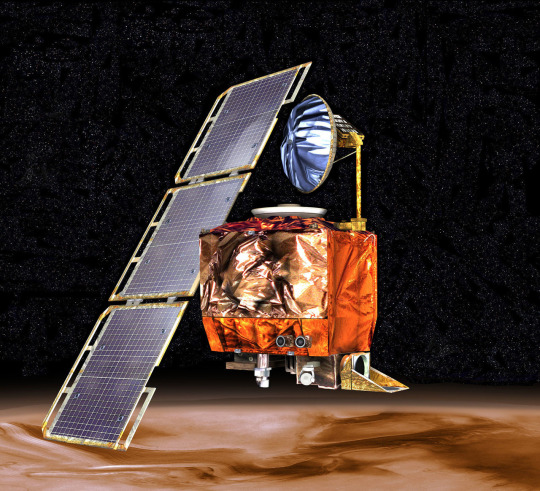

The Mars Climate Orbiter and 1700s pirates

Today I will be engaging in my favorite sport of "extreme leaps of logic" by blaming the failure of the Mars Climate Orbiter in 1999 on Caribbean piracy in the late 1700s.

America, being brand-new right around 1793, had some leeway in choosing which systems to officially adopt. Thomas Jefferson wanted to look into the also-new metric system from France. The metric system had been devised as part of the French Revolution's removal of archaic systems (not everything caught on; there was also a decimalized calendar and a clock with 10 hours per day, 100 minutes per hour, etc.).

At Jefferson's request, France sent a scientist, Joseph Dombey, to America, and Dombey packed along a set of tools and calibrated items to demonstrate the system. Dombey's ship went off course in a storm and never made it to America. British privateers stole Dombey's tools and, not knowing what they were, auctioned them off. Dombey died in their captivity, and Jefferson never received what he would need to properly introduce America to the metric system.

So, how does that relate to something that happened 206 years later? NASA as a rule uses the metric system, just because it's a little silly to build spaceships with measurements based on how long Henry I's arm was. The Mars Climate Orbiter, a satellite designed to orbit Mars and monitor long-term changes in its climate, was not built directly by NASA, though. It was built (and programmed) by contractor Lockheed Martin, who very much did use imperial units in their software.

After a 9.5-month, 669 million km trip to Mars, the Mars Climate Orbiter smashed into the Martian atmosphere and likely disintegrated. As a rule, that's not what satellites are supposed to do. NASA's control software had told it to maintain a height of 110 km from the surface. This is done by firing retrothrusters with very precise amounts of force, which NASA's software expected to be reported in newton-seconds. However, Lockheed Martin used the much sillier unit of "pound-force seconds" in their software. One pound-force second is about 4.45 newton-seconds, so each thruster firing pushed the satellite roughly 4.45 times harder than NASA's numbers said. The satellite drifted off course, came in far too low, and was destroyed.

Lockheed Martin was the source of the unit conversion problem, but NASA maintains that responsibility for the failure rests with the space agency itself, for not having sufficient quality control over the contractor-produced software. I, though, am going to place the full blame on those pirates in 1793, without whom this whole mess could have been avoided.
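For anyone who wants to see the size of the mix-up in numbers, here is a minimal sketch. It assumes only the standard definition of the pound-force (1 lbf = 4.4482216152605 N); the function name and the sample impulse value are made up for illustration and are not taken from the actual flight software.

```python
# Standard conversion factor: one pound-force expressed in newtons.
LBF_TO_N = 4.4482216152605


def lbf_s_to_n_s(impulse_lbf_s: float) -> float:
    """Convert an impulse from pound-force seconds to newton-seconds."""
    return impulse_lbf_s * LBF_TO_N


# Hypothetical thruster impulse as written out by the contractor's software (lbf*s).
reported = 10.0

# What that number really means in SI units.
actual_n_s = lbf_s_to_n_s(reported)

# What you get if the same number is read as though it were already in N*s.
misread_n_s = reported

print(f"actual impulse:  {actual_n_s:.2f} N*s")
print(f"misread impulse: {misread_n_s:.2f} N*s")
print(f"off by a factor of {actual_n_s / misread_n_s:.2f}")
```

The factor is the same for any impulse value, which is why small errors from many thruster firings could pile up over a months-long trip.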

2 notes


December - April CAS Project - CanSat

Our CAS project was part of a larger project we are taking part in, the CanSat competition. The deadline for the CDR (Critical Design Review) report was in January, so we started working on it in the middle of December. We started by carefully planning all the tests we had to complete. To be honest, we chose the tests largely based on whether we would be able to get data that could be displayed visually to show our progress.

In the following weeks, we conducted the tests. We tested the parachute (once again; we do that quite a lot because all the prototypes have some flaws that we try to fix). We also tested the barometer’s ability to detect changes in height above ground and the thermometer’s precision. We worked on the radio, though we did not manage to get it to work before the CDR deadline, and we did GPS tests. While the rest of the team was working on integrating the elements, I did a lot of analysis of the data we collected and made graphs, maps, and tables to display it. It would have been much harder if we had not learned those specific data analysis skills in Physics class with Mr. Piotr Morawiecki, so it went very well. It was pretty cool to actually use those skills in this context.

Once we had completed everything we planned, we started working on the report itself. Since it’s a 30-page document, it took a lot of time and caused me a lot of stress about finishing it in time. We worked on the report in a shared Word document, which gets very laggy in such situations, so that was also very annoying. Then came the formatting in Overleaf, another very annoying piece of software. Filip and I spent at least half of the weekend (10-15 hours) correcting the text and formatting it, finishing the whole thing… 10 minutes before the deadline. It got super stressful at the end, and because of way too little proofreading time we accidentally misspelled one of our team members’ names in the report (oops). But the feeling of relief after sending the report was the best.

Initially we planned that the CAS project would end after the CDR, but we decided to extend it to the end of the competition because it makes more sense that way; it’s more "full" like that. We worked on things like the CanSat case, integrating all the components, and assembling the final version of the satellite. Due to lack of time, we spent nearly all of the four days before the final deadline working on the CanSat. We made a lot of progress during those four days and managed to build the satellite fully, but unfortunately did not have the time to properly test and document it. On the second of April we received the results, and unfortunately we were not among the competition’s finalists, the best 6 teams in Poland. That was slightly disappointing, but honestly we expected it after sending in the Final Design Review report.

Regardless of the "failure" to qualify for the finals, I’m proud of our group because we put in a lot of work and spent a lot of time on the project. We definitely learned a lot in the process, mainly the importance of proper time management. I personally had a lot of fun, but the deadlines were very stressful, especially since we always ended up doing the work at the last minute. This experience also helped me develop some teamwork skills and maybe even some leadership skills. I kind of wish we had done the project last year, when we had little to no school work, as that would have allowed us to focus much more on the competition and maybe get better results.

2 notes


♠️♥️House of Cards♣️♦️

Act Two Part Four - Back Online

CW: Violence and injury, minor character death

“There it fucking is.”

Ace trudges forth into a small hidden clearing where a ground receiver sits, covered in snow and dead icy undergrowth. He holsters his gun and kneels down, feeling the ice melt into the fabric of his pants. He shudders, his breath fogging as it escapes from his lips.

He reaches into his bag for the tools he brought along, his other hand brushing the debris off the satellite dish. Ace looks over the generator to assess for any further damage but it just seems like the signal was disrupted because the boom arm was dislodged from its place. It looked like the work of several people.

Hopefully it isn’t, Ace thinks. It would be another issue and a half if the enemy had stumbled across this site. He only figured they hadn’t because intel reports that none of the patrol routes were near this clearing but the prints in the snow didn’t suggest it was the work of a passing animal. Ace put the boom arm into place, giving the base unit a good pat as he hooked his laptop to boot up the software.

Watching the progress bar extend slowly was a bit of a bore. The flashing line seemingly taunted Ace as it slowed to a stop at about the halfway mark. He sighed, letting out a groan of frustration before turning his attention to look over his shoulder.

From the beginning of this mission, Ace had felt eyes on him throughout as he traversed the cold terrain. Again, Ace chalks it up to him being truly alone with no way to reach back to base but that didn’t really stop his mind from trailing as he sat and listened to the eerie silence of the environment.

Ace had never been one for the wilderness but he suspects something is lurking nearby. Mother Nature isn’t exactly known for her silence, especially in the desolate snowy landscape Ace finds himself in. A single cry of a lone crow shrieks overhead, tearing into the still air. Ace watches as the bird disappears between the towering peaks of the pines.

A chill causes the hairs on the back of Ace’s neck to stand. He shudders, eyeing the screen out the corner of his vision as he readjusts his scarf. The same heavy dread looms over his shoulders as Ace watches the progress bar inch closer to the end. He forgets the dread for a moment, readying his comm device to report back to HQ and call for exfil.

The line crackles to life as Ace powers it on again, static ringing in the air as Ace counts down the last few remaining seconds. He winces, busying himself as he fiddles with his GPS.

“HQ, this is Ace. Objective has been completed and requesting exfil,” Ace says, his voice rumbling into the microphone as he holds down the push-to-talk.

“Roger, meet you at the LZ in ten minutes.”

Ace was met with the same irritating static for a second but he was back online and transport had been secured, allowing Ace to start to pack up shop.

He was met with silence again, the only sounds being the zipper of his bag as he finished up, securing his laptop back inside. This seemed easy enough, Ace thought to himself.

The crunch of snow doesn’t go unnoticed amid Ace’s bustling; he whips his head towards the source quickly enough for a bullet to just miss his face. The sound cracks through the otherwise peaceful scene, sending birds away from their spots in the trees.

Jumping back, Ace scrambles for his gun as his assailant takes aim again. Adrenaline rushes through his veins as Ace’s finger finds the trigger but it was a split second too late. Another gunshot is fired and Ace lands awkwardly as the bullet lodges into his knee. He grunts, pain searing through his body like fire.

His attacker mutters something into his jacket collar, possibly reporting back to his own men or calling for backup for whatever reason. Not to be beaten again, Ace points the muzzle of his gun towards his enemy. His finger barely squeezes the trigger before the armed enemy before him gets his head blown open.

Ace watches with wide eyes as the body descends with the red mist still hanging in the air, flopping sideways and sinking into the snow. He wasn’t the one with the smoking gun. Ace whips his head towards the side where the bullet could have come from.

There in the distant horizon where the top of the snowy hill was beginning to be bathed in golden sunset light, the glint of a sniper’s scope. It was small, almost unnoticeable if not a little annoying now that Ace noticed it, the sight almost familiar. He didn’t even know he would be getting sniper support, seeing that King had sent him on a solo mission.

The sounds of several men rapidly approaching his location brought Ace out of his trance. He stumbles to his feet, hissing at the sharp pain in his knee. The bastard could literally have shot him elsewhere. Ace doesn’t waste another second, limping towards the pickup point. Just when things were starting to look up.

Ace disappears back between the trees, weaving between the trunks and crouching into bushes, careful not to leave a trail of red in his wake as he hobbles along. He watches as the earlier patrol passes his hiding spot, hand on his gun in case their mutt manages to sniff him out.

With bated breath, Ace stalks the enemies as they disappear from sight and from there makes a dash for the LZ. He turns back a final time just in time to watch the glint disappear from its spot just as the sound of a chopper overhead is heard.

“God,” Ace thinks to himself as he clambers into the helo, wincing as he assesses his injury. “I need a bloody break.”

Quicksaving...

#House of Cards AU#Act 2 Part 4#cod oc#call of duty oc#oc: LT Ace#mailman rants#fanfic#fanfiction#Task Force Cards

12 notes


#TheeForestKingdom #TreePeople

{Terrestrial Kind}

Creating a Tree Citizenship Identification and Serial Number System (#TheeForestKingdom) is an ambitious and environmentally-conscious initiative. Here’s a structured proposal for its development:

Project Overview

The Tree Citizenship Identification system aims to assign every tree in California a unique identifier, track its health, and integrate it into a registry, recognizing trees as part of a terrestrial citizenry. This system will emphasize environmental stewardship, ecological research, and forest management.

Phases of Implementation

Preparation Phase

Objective: Lay the groundwork for tree registration and tracking.

Actions:

Partner with environmental organizations, tech companies, and forestry departments.

Secure access to satellite imaging and LiDAR mapping systems.

Design a digital database capable of handling millions of records.

Tree Identification System Development

Components:

Label and Identity Creation: Assign a unique ID to each tree based on location and attributes. Example: CA-Tree-XXXXXX (state-code, tree-type, unique number).

Attributes to Record:

Health: Regular updates using AI for disease detection.

Age: Approximate based on species and growth patterns.

Type: Species and subspecies classification.

Class: Size, ecological importance, and biodiversity contribution.

Rank: Priority based on cultural, historical, or environmental significance.
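As a rough illustration of the labeling scheme above, here is a minimal sketch of building and parsing IDs in the CA-Tree-XXXXXX style. The exact layout (two-letter state code, alphabetic tree type, six-digit serial) and the function names `make_tree_id` and `parse_tree_id` are assumptions made for this example; the proposal itself does not fix them.

```python
import re

# Assumed layout, following the "CA-Tree-XXXXXX" example:
# <two-letter state code>-<tree type>-<six-digit serial number>.
ID_PATTERN = re.compile(r"([A-Z]{2})-([A-Za-z]+)-(\d{6})")


def make_tree_id(state_code: str, tree_type: str, serial: int) -> str:
    """Build an ID such as 'CA-Oak-000042' from its three parts."""
    tree_id = f"{state_code.upper()}-{tree_type.capitalize()}-{serial:06d}"
    # Reject anything that does not fit the assumed layout
    # (for example a negative or seven-digit serial).
    if not ID_PATTERN.fullmatch(tree_id):
        raise ValueError(f"cannot build a valid tree ID: {tree_id!r}")
    return tree_id


def parse_tree_id(tree_id: str) -> tuple:
    """Split an ID back into (state code, tree type, serial number)."""
    match = ID_PATTERN.fullmatch(tree_id)
    if match is None:
        raise ValueError(f"not a valid tree ID: {tree_id!r}")
    state_code, tree_type, serial = match.groups()
    return state_code, tree_type, int(serial)


print(make_tree_id("ca", "oak", 42))
print(parse_tree_id("CA-Redwood-001337"))
```

A six-digit serial only covers one million trees per state and type, so a statewide registry would likely need a wider number field.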

Data Collection

Technologies to Use:

Satellite Imaging: To locate and identify tree clusters.

LiDAR Scanning: For precise 3D modeling of forests.

On-the-Ground Surveys: To supplement remote sensing data.

AI Analysis: For rapid categorization and health diagnostics.

Registration and Citizenship Assignment

Tree Dossier: Each tree receives a profile in the database with all its recorded attributes.

Citizen Designation: Trees are acknowledged as citizens of Thee Forest Kingdom, emphasizing ecological respect.

Legal Framework: Advocate for laws to grant trees specific rights under environmental protection acts.

Maintenance and Monitoring

Health Monitoring: Use drones, satellite updates, and IoT sensors for ongoing health assessments.

Database Updates: Incorporate real-time data about environmental changes and tree growth.

Public Involvement

Encourage people to "adopt" trees and participate in their preservation.

Create educational programs to promote awareness of tree citizenship.

Key Benefits

Environmental Conservation: Improved forest management through real-time data and protection of ecosystems.

Cultural Recognition: Trees are recognized as a vital part of the Earth’s community, honoring their importance.

Ecological Advocacy: Strengthened legal protections for forests and individual trees.

Next Steps

Assemble a task force of environmental scientists, satellite imaging experts, and software developers.

Secure funding through governmental grants, non-profits, and eco-conscious corporations.

Pilot the program in a smaller region before scaling to all of California.

Would you like me to draft a more detailed technical or legal framework for this system?

The implementation of the Tree Citizenship Identification Network and System (#TheeForestKingdom) requires a robust technological and operational framework to ensure efficiency, accuracy, and sustainability. Below is an implementation plan divided into major components.

System Architecture

a. Centralized Tree Database

Purpose: To store and manage tree data including ID, attributes, location, and health.

Components:

Cloud-based storage for scalability.

Data categorization based on regions, species, and priority.

Integration with satellite and IoT data streams.

b. Satellite & Imaging Integration

Use satellite data from agencies such as NASA and ESA for large-scale tree mapping.

Incorporate LiDAR and aerial drone data for detailed imaging.

AI/ML algorithms to process images and distinguish tree types.

c. IoT Sensor Network

Deploy sensors in forests to monitor:

Soil moisture and nutrient levels.

Air quality and temperature.

Tree health metrics like growth rate and disease markers.

d. Public Access Portal

Create a user-friendly website and mobile application for:

Viewing registered trees.

Citizen participation in tree adoption and reporting.

Data visualization (e.g., tree density, health status by region).

Core Technologies

a. Software and Tools

Geographic Information System (GIS): Software like ArcGIS for mapping and spatial analysis.

Database Management System (DBMS): SQL-based systems for structured data; NoSQL for unstructured data.

Artificial Intelligence (AI): Tools for image recognition, species classification, and health prediction.

Blockchain (Optional): To ensure transparency and immutability of tree citizen data.

b. Hardware

Servers: Cloud-based (AWS, Azure, or Google Cloud) for scalability.

Sensors: Low-power IoT devices for on-ground monitoring.

Drones: Equipped with cameras and sensors for aerial surveys.

Network Design

a. Data Flow

Input Sources:

Satellite and aerial imagery.

IoT sensors deployed in forests.

Citizen-reported data via mobile app.

Data Processing:

Use AI to analyze images and sensor inputs.

Automate ID assignment and attribute categorization.

Data Output:

Visualized maps and health reports on the public portal.

Alerts for areas with declining tree health.

b. Communication Network

Fiber-optic backbone: For high-speed data transmission between regions.

Cellular Networks: To connect IoT sensors in remote areas.

Satellite Communication: For remote regions without cellular coverage.

Implementation Plan

a. Phase 1: Pilot Program

Choose a smaller, biodiverse region in California (e.g., Redwood National Park).

Test satellite and drone mapping combined with IoT sensors.

Develop the prototype of the centralized database and public portal.

b. Phase 2: Statewide Rollout

Expand mapping and registration to all California regions.

Deploy IoT sensors in vulnerable or high-priority areas.

Scale up database capacity and integrate additional satellite providers.

c. Phase 3: Maintenance & Updates

Establish a monitoring team to oversee system health and data quality.

Update the network with new technologies (e.g., advanced AI models).

Periodically review and revise data collection protocols.

Partnerships

Government Agencies: U.S. Forest Service, California Department of Forestry and Fire Protection.

Tech Companies: Collaborate with Google Earth, Amazon AWS, or Microsoft for cloud and AI capabilities.

Environmental Organizations: Partner with WWF, Sierra Club, or TreePeople for funding and advocacy.

Budget Estimation

Initial Investment:

Satellite data and licenses: $10M

IoT sensors and deployment: $5M

Database and app development: $3M

Operational Costs:

Cloud services and data storage: $2M/year

Maintenance and monitoring: $1M/year

Research and development: $1M/year
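The figures above can be combined into a simple multi-year total. This is a minimal sketch that uses only the numbers listed in the estimate; the function name `total_cost_musd` and the chosen time horizons are illustrative, and no inflation or scaling costs are modeled.

```python
# One-time costs from the estimate, in millions of US dollars.
INITIAL_COSTS_MUSD = {
    "satellite data and licenses": 10,
    "IoT sensors and deployment": 5,
    "database and app development": 3,
}

# Recurring costs from the estimate, in millions of US dollars per year.
ANNUAL_COSTS_MUSD = {
    "cloud services and data storage": 2,
    "maintenance and monitoring": 1,
    "research and development": 1,
}


def total_cost_musd(years: int) -> int:
    """Initial investment plus operating costs over the given number of years."""
    initial = sum(INITIAL_COSTS_MUSD.values())
    annual = sum(ANNUAL_COSTS_MUSD.values())
    return initial + years * annual


for years in (1, 5, 10):
    print(f"{years:>2} years: ${total_cost_musd(years)}M")
```

With these inputs the initial investment is $18M and operations add $4M per year.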

Long-term Vision

Expand the system to other states or globally.

Use the database for reforestation efforts and combating deforestation.

Develop educational programs to teach communities about the importance of trees as citizens.

Would you like me to assist in designing the user interface for the public portal or drafting partnership proposals for funding?

To begin building the program for the Tree Citizenship Identification System, we’ll start with a scalable framework that allows us to process, store, and analyze data while preparing for future expansions. Here's an actionable development plan with an initial focus on foundational code and infrastructure:

Step-by-Step Development

Phase 1: Setting Up the Backend Infrastructure

Objective: Create the core database and develop the data pipeline for processing tree data.

Technologies:

Programming Languages: Python, JavaScript (Node.js), SQL.

Frameworks: Flask or FastAPI for APIs, Django for web application backend.

Database: PostgreSQL for structured data, MongoDB for unstructured data.

Code Snippet 1: Database Schema Design (PostgreSQL)

    -- The GEOGRAPHY type below requires the PostGIS extension
    CREATE EXTENSION IF NOT EXISTS postgis;

    -- Table for Tree Registry
    CREATE TABLE trees (
        tree_id       SERIAL PRIMARY KEY,        -- Unique identifier
        location      GEOGRAPHY(POINT, 4326),    -- Geolocation of the tree
        species       VARCHAR(100),              -- Species name
        age           INTEGER,                   -- Approximate age in years
        health_status VARCHAR(50),               -- e.g., Healthy, Diseased
        height        FLOAT,                     -- Tree height in meters
        canopy_width  FLOAT,                     -- Canopy width in meters
        citizen_rank  VARCHAR(50),               -- Class or rank of the tree
        last_updated  TIMESTAMP DEFAULT NOW()    -- Timestamp for last update
    );

    -- Table for Sensor Data (IoT Integration)
    CREATE TABLE tree_sensors (
        sensor_id     SERIAL PRIMARY KEY,                -- Unique identifier for sensor
        tree_id       INT REFERENCES trees(tree_id),     -- Linked to tree
        soil_moisture FLOAT,                             -- Soil moisture level
        air_quality   FLOAT,                             -- Air quality index
        temperature   FLOAT,                             -- Surrounding temperature
        last_updated  TIMESTAMP DEFAULT NOW()            -- Timestamp for last reading
    );

Code Snippet 2: Backend API for Tree Registration (Python with Flask)

    from flask import Flask, request, jsonify
    from sqlalchemy import create_engine, text
    from sqlalchemy.orm import sessionmaker

    app = Flask(__name__)

    # Database configuration (placeholder credentials)
    DATABASE_URL = "postgresql://username:password@localhost/tree_registry"
    engine = create_engine(DATABASE_URL)
    Session = sessionmaker(bind=engine)

    @app.route('/register_tree', methods=['POST'])
    def register_tree():
        data = request.json
        new_tree = {
            "species": data['species'],
            # WKT points are written as "POINT(longitude latitude)"
            "location": f"POINT({data['longitude']} {data['latitude']})",
            "age": data['age'],
            "health_status": data['health_status'],
            "height": data['height'],
            "canopy_width": data['canopy_width'],
            "citizen_rank": data['citizen_rank']
        }
        # Raw SQL strings must be wrapped in text() in SQLAlchemy 2.0;
        # a fresh session per request avoids sharing state between requests.
        with Session() as session:
            session.execute(text("""
                INSERT INTO trees (species, location, age, health_status, height, canopy_width, citizen_rank)
                VALUES (:species, ST_GeomFromText(:location, 4326), :age, :health_status, :height, :canopy_width, :citizen_rank)
            """), new_tree)
            session.commit()
        return jsonify({"message": "Tree registered successfully!"}), 201

    if __name__ == '__main__':
        app.run(debug=True)
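The endpoint above trusts whatever JSON it receives. As a possible companion, here is a minimal sketch of a validation step that could run before the INSERT. The helper name `validate_tree_payload` and the specific checks are assumptions for illustration, not part of the original plan; the field names are the ones the endpoint already reads.

```python
# Fields the /register_tree endpoint reads from the request body.
REQUIRED_FIELDS = (
    "species", "latitude", "longitude", "age",
    "health_status", "height", "canopy_width", "citizen_rank",
)


def validate_tree_payload(data: dict) -> dict:
    """Check a registration payload and return the SQL parameters for the INSERT."""
    missing = [field for field in REQUIRED_FIELDS if field not in data]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")

    latitude = float(data["latitude"])
    longitude = float(data["longitude"])
    if not -90.0 <= latitude <= 90.0:
        raise ValueError("latitude must be between -90 and 90")
    if not -180.0 <= longitude <= 180.0:
        raise ValueError("longitude must be between -180 and 180")

    # Copy every field except the coordinates, which are merged into one WKT point.
    params = {
        field: data[field]
        for field in REQUIRED_FIELDS
        if field not in ("latitude", "longitude")
    }
    # WKT uses "POINT(longitude latitude)" order.
    params["location"] = f"POINT({longitude} {latitude})"
    return params


example = {
    "species": "Sequoia sempervirens", "latitude": 41.2132, "longitude": -124.0046,
    "age": 600, "health_status": "Healthy", "height": 95.0,
    "canopy_width": 12.5, "citizen_rank": "Elder",
}
print(validate_tree_payload(example)["location"])
```

Inside the Flask view, a `ValueError` from this helper could be turned into a 400 response instead of letting a bad request reach the database.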

Phase 2: Satellite Data Integration

Objective: Use satellite and LiDAR data to identify and register trees automatically.

Tools:

Google Earth Engine for large-scale mapping.

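Sentinel-2 (10 m resolution) or Landsat (30 m resolution) satellite data for regional imagery; picking out individual trees would rely on the LiDAR and drone data mentioned earlier.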

Example Workflow:

Process satellite data using Google Earth Engine.

Identify tree clusters using image segmentation.

Generate geolocations and pass data into the backend.
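The workflow above can be sketched on a toy example. This is a simplified stand-in, not Google Earth Engine code: it assumes the imagery has already been reduced to a small binary "tree / no tree" grid, uses plain connected-component labeling in place of a real segmentation model, and converts pixels to coordinates with a made-up origin and pixel size. The function names are hypothetical.

```python
from collections import deque


def find_clusters(mask):
    """Group neighbouring tree pixels (value 1) into 4-connected clusters."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    clusters = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill starting from this unvisited tree pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            cells = []
            while queue:
                cr, cc = queue.popleft()
                cells.append((cr, cc))
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] == 1 and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            clusters.append(cells)
    return clusters


def centroid_lonlat(cells, origin_lon, origin_lat, pixel_deg):
    """Convert a cluster's mean pixel position to (longitude, latitude).

    Assumes a north-up grid whose top-left corner is (origin_lon, origin_lat).
    """
    mean_row = sum(r for r, _ in cells) / len(cells)
    mean_col = sum(c for _, c in cells) / len(cells)
    lon = origin_lon + (mean_col + 0.5) * pixel_deg
    lat = origin_lat - (mean_row + 0.5) * pixel_deg
    return lon, lat


# Toy classification result: 1 = tree canopy, 0 = anything else.
mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
]
for cells in find_clusters(mask):
    # Hypothetical grid origin and pixel size in degrees.
    lon, lat = centroid_lonlat(cells, origin_lon=-124.0, origin_lat=41.0, pixel_deg=0.001)
    print(f"cluster of {len(cells)} pixels near lon={lon:.4f}, lat={lat:.4f}")
```

Each resulting coordinate pair could then be sent to the backend as the longitude and latitude of a registration request.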

Phase 3: IoT Sensor Integration

Deploy IoT devices to monitor health metrics of specific high-priority trees.

Use MQTT protocol for real-time data transmission.

Code Snippet: Sensor Data Processing (Node.js)

    const mqtt = require('mqtt');
    const { Client } = require('pg');

    // PostgreSQL client; connection settings are read from the standard PG* environment variables
    const dbClient = new Client();
    dbClient.connect().catch((err) => console.error('Database connection failed', err));

    const client = mqtt.connect('mqtt://broker.hivemq.com');

    client.on('connect', () => {
      console.log('Connected to MQTT Broker');
      client.subscribe('tree/sensor_data');
    });

    client.on('message', (topic, message) => {
      const sensorData = JSON.parse(message.toString());
      console.log(`Received data: ${JSON.stringify(sensorData)}`);
      // Save data to database (Example for PostgreSQL)
      saveToDatabase(sensorData);
    });

    function saveToDatabase(data) {
      const query = `
        INSERT INTO tree_sensors (tree_id, soil_moisture, air_quality, temperature)
        VALUES ($1, $2, $3, $4)
      `;
      const values = [data.tree_id, data.soil_moisture, data.air_quality, data.temperature];
      dbClient.query(query, values, (err) => {
        if (err) console.error('Error saving to database', err);
        else console.log('Sensor data saved successfully!');
      });
    }
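Since the backend in this plan is written in Python, the same message handling could also live there. Below is a minimal sketch of the parsing and validation step only, with no broker or database connection. The function name `parse_sensor_message` is an assumption for this example; the field names match the `tree_sensors` columns used above.

```python
import json

# Fields expected in every sensor message, matching the tree_sensors columns.
SENSOR_FIELDS = ("tree_id", "soil_moisture", "air_quality", "temperature")


def parse_sensor_message(raw: bytes) -> tuple:
    """Decode one MQTT payload into the value tuple for the INSERT statement."""
    data = json.loads(raw.decode("utf-8"))
    if not isinstance(data, dict):
        raise ValueError("sensor message must be a JSON object")
    missing = [field for field in SENSOR_FIELDS if field not in data]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    # Coerce types so malformed values fail here rather than in the database.
    return (
        int(data["tree_id"]),
        float(data["soil_moisture"]),
        float(data["air_quality"]),
        float(data["temperature"]),
    )


payload = b'{"tree_id": 7, "soil_moisture": 0.31, "air_quality": 42, "temperature": 18.5}'
print(parse_sensor_message(payload))
```

Rejecting incomplete messages up front keeps half-filled rows out of the sensor table.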

Phase 4: Public Portal Development

Frontend Technologies:

React.js or Vue.js for interactive user interfaces.

Mapbox or Leaflet.js for tree visualization on maps.

Features:

Interactive tree maps with search functionality.

User registration for tree adoption programs.

Deployment Plan

Use Docker to containerize the application.

Deploy on AWS, Azure, or Google Cloud for scalability.

Monitor system health using Prometheus and Grafana.

Next Steps

Develop a testing environment for pilot deployment.

Add AI-based disease detection and prediction using TensorFlow or PyTorch.

Create an adoption feature where citizens can adopt trees and track their health.

Would you like me to set up a GitHub repository with this initial framework or assist further with deployment?

I'll prepare the project framework and outline the repository structure for the Tree Citizenship Identification System. Below is the proposed structure for the repository, followed by details of the files and components.

Proposed Repository Structure

tree-citizenship-id-system/
├── backend/
│   ├── app.py                        # Flask application for backend API
│   ├── database/
│   │   ├── schema.sql                # Database schema for PostgreSQL
│   │   ├── db_config.py              # Database connection configuration
│   │   └── seed_data.sql             # Initial sample data for testing
│   ├── services/
│   │   ├── tree_registration.py      # Functions for tree registration
│   │   └── sensor_data.py            # Functions for processing IoT sensor data
│   └── requirements.txt              # Python dependencies
├── frontend/
│   ├── public/
│   │   ├── index.html                # Main HTML file
│   │   └── favicon.ico               # Favicon for the app
│   ├── src/
│   │   ├── App.js                    # Main React/Vue app file
│   │   ├── components/
│   │   │   ├── TreeMap.js            # Map component for visualizing trees
│   │   │   └── TreeDetails.js        # Component for detailed tree view
│   │   └── styles.css                # Styling for the frontend
│   └── package.json                  # Frontend dependencies
├── scripts/
│   ├── satellite_data_processor.py   # Script for processing satellite data
│   └── sensor_data_simulator.py      # Mock data generator for IoT sensors
├── deployment/
│   ├── Dockerfile                    # Docker configuration
│   ├── docker-compose.yml            # Docker Compose for multi-service setup
│   └── cloud_setup_instructions.md   # Deployment guide for cloud platforms
├── tests/
│   ├── test_backend.py               # Unit tests for backend API
│   └── test_frontend.js              # Unit tests for frontend
├── README.md                         # Overview of the project
└── LICENSE                           # Project license

Repository Details

Backend:

app.py: Main Flask app for handling API requests like tree registration and sensor data.

database/schema.sql: SQL script for creating necessary tables.

services/: Directory for modular backend logic.

Frontend:

App.js: Main entry point for the frontend application.

TreeMap.js: Integrates Mapbox/Leaflet.js for displaying tree locations.

TreeDetails.js: Displays detailed information about a selected tree.

Scripts:

satellite_data_processor.py: Automates the extraction and classification of tree data from satellite imagery.

sensor_data_simulator.py: Generates fake sensor readings for development and testing.

Deployment:

Docker configuration ensures that the app runs consistently across environments.

Cloud setup instructions provide guidelines for deploying on platforms like AWS, Azure, or GCP.

Tests:

Unit tests ensure the reliability of both the backend and frontend.

Next Steps

I will initialize the repository structure locally.

Package the files and components needed for the first version.

Provide a link to the repository for access.

I’ll get started. Please hold on for a moment.

4 notes

Text

sweet is sleep to me and even more to be of stone, while the wrong and shame endure.

To be without sight or sense is a most happy change for me, therefore do not rouse me. hush! speak low. (opening poem, ep 1 - ergo proxy) 13/04/2024: evening drawing for an anime show called 'Ergo Proxy' from 2006. I rewatched it while drawing this piece. It's such an underrated show.

Any fan of sci-fi thrillers will enjoy this show a lot, especially these days, when there isn't much sci-fi anime around. So it's always cool to revisit classics.

tool: wacom pen

Software: adobe photoshop & illustrator cs3.

wiki: Ergo Proxy is a Japanese cyberpunk anime television series, produced by Manglobe, directed by Shūkō Murase and written by Dai Satō. The series ran for 23 episodes from February to August 2006 on the Wowow satellite network. It is set in a post-apocalyptic future where humans and AutoReiv androids coexist peacefully until a virus gives the androids self-awareness, causing them to commit a series of murders. Inspector Re-L Mayer is assigned to investigate, discovering a more complicated plot behind it that involves a humanoid species known as "Proxy" who are the subject of secret government experiments. The series, which is heavily influenced by philosophy and Gnosticism, features a combination of 2D digital cel animation, 3D computer modeling, and digital special effects. After its release in Japan, the anime was licensed for a DVD release by Geneon Entertainment, with a subsequent television broadcast on Fuse in the United States. The show was also distributed to Australian, British and Canadian anime markets. Since its release, Ergo Proxy has received mostly favorable reviews which praised its visuals and themes.

#Ergo Proxy#vector#illustration#khuan tru#photoshop#fan art#london#pen tool#anime#pen drawing#fan drawing#manga

7 notes

Text

Finding Resolve

We’ve all done it. We are all part of this new phenomenon, something that barely existed before this century, and only truly gained momentum in the last decade. The worst part is, most of us have forgotten exactly how much we are involved with it, because it is hard to remember what and how much these phenomena cost.

I am talking about the subscription economy, that magical place where software and streaming services are the product, and our monthly bill is usually on autopay. It ranges from SaaS (Software as a Service) providers like Adobe and Microsoft, to all the music, movies, and more that we stream into our homes, cars, and mobile devices.

And it is eating us alive.

How many subscriptions do you have? Let’s start with your vehicle. Do you have satellite radio? That’s one. Do you subscribe to cloud-based software? That can be one or more. What about streaming tunes like Spotify or Apple Music? There you go. The list is getting longer.

And then there are all the streaming TV choices, which run from services like YouTube TV to Netflix, Paramount+, Apple TV+, Peacock, Max, Hulu, Disney…I could go on. You may have cut the cable at home, but you tethered yourself in other ways to the extent that the net effect is little different.

Then there’s the gaming community, if that’s your thing. More dinero. Maybe you fell for the premium version of an app, like Accuweather. If you’re a regular Amazon shopper, you no doubt have Prime, which costs $139 a year, plus the vitamins and supplements I receive every month from them. Like listening to books? There’s Audible. Old newspapers? There’s Newspapers.com, one of my favorite sites to do research. Cloud storage? Good Lord, I have several, for my thousands of photos and documents.

So successful has the subscription model been that paywalls have appeared everywhere online, like the New York Times, Washington Post, and Atlantic Monthly, each of which has amazing content, a feast for my eyes and brain. Alas, I have drawn the line, because I sense it has long spun out of control. And if CNN goes ahead and paywalls its app and site, I guess I won’t be reading them anymore.

Because I, like many people, have subscription fatigue. I simply cannot begin to consume all of this media. Sadly, I cannot remember all of the services to which I subscribe, and if you aren’t there yet, I bet you will be soon enough. The only way to know for sure is to carefully track your credit card statements to look for monthly billing.

That, of course, is the problem, because we willingly provided our billing data so that we do not have to do this every month. As long as that credit card is valid, those providers will keep hitting your card every month. It is only when your card is about to expire that you get a notification. And if you were not careful and instead provided a bank routing and account number, they can keep sticking their hand into your pocket as long as you have that account.

Ironically, there are new subscription management sites and apps that supposedly make it easy to track and opt out of all the things, but they are subscription services themselves. That’s like replacing one drug with another. You’re still on the hook.

It all starts so easily, because many of the subscription services are technically just micro payments, only $5 or $10. We see that as pocket change. Other services offer annual payment options, which provide a slight discount for paying in full in advance. But many of the once-cheap micro payments have started to get expensive, like Netflix and Spotify (I am speaking from experience). They are no longer minor indulgences.

Were these tangible products we had to buy in a store, I bet we would all be a lot more careful. The friction of having to be somewhere to even just tap your credit card would probably be enough to cause us to think. But it is simply too easy in the digital world to keep subscribing, because once we get in that loop, there is never any friction.

We are all going to have to muster a lot more resolve to win this fight, as well as start keeping meticulous records. Otherwise, these things develop lives of their own, lives that will continue hitting credit cards even after our own lives are over. I’m pretty sure none of us will be consuming anything at that point, and there’s no use paying for it.

We don’t have to wait for New Year’s Day to make this resolution.

Dr “I Honestly Can’t Remember All Of Them” Gerlich

Audio Blog

2 notes

Text

NEXT KANKY GOLD SOFTWARE UPDATE

NEXT KANKY GOLD Satellite Receiver New Firmware NEXT KANKY GOLD Satellite Receiver Software Latest Update. Update Next HD Receiver Firmware with New Option. Also Download Next User Manual in English and Turkish. Software PC Next Receiver Fat32 Formatter and Next Receiver STB Link. For All Model Next HD Receiver, Latest Software Update Click Here. New Update and For any Help Contact Us @ Facebook…

#Download#Next#Next Receiver Software#next satellite receiver#nextstar uydu alıcısı#Receiver Software

0 notes

Text

time and frequency synchronization solutions

#meinberg software india#meinberg software Mumbai#solutions of meinberg germany india#solutions of meinberg germany mumbai#solutions of meinberg germany maharashtra#network time servers#ntp time server#ptp time server india#ptp time server#time and frequency synchronization solutions#time and frequency synchronization solutions for industries#time and frequency synchronization solutions india#time and frequency synchronization solutions asia#meinberg products india#IEEE 1588 Solutions#PCI Express Radio Clocks Solutions#USB Radio Clocks Solutions#GNSS Systems#GPS satellite receiver#GLONASS satellite receiver

0 notes

Text

How IPTV Functions!

IPTV, or Internet Protocol Television, operates by delivering television content over Internet Protocol (IP) networks. Here's a step-by-step explanation of how it works:

Content Acquisition: First, we obtain TV channels and other content, including live shows, recorded programs, and on-demand videos, from various sources.

Processing and Storage: Next, we process the content, converting it into a format that can be streamed. The converted content is then stored on servers.

Content Delivery Network (CDN): We use a network of servers in different locations to distribute the content efficiently, so that users get quick and reliable access.

Internet Transmission: When a customer requests a program, we fulfill the request by transmitting the content over the internet using IP protocols. This is distinct from the cable or satellite signals used by traditional TV.

User's Device: The video content can be sent to a device such as a smart TV, mobile phone, laptop, or set-top box. The user then plays and controls the content from that device.

Decoding: On receiving the content, the user's device decodes it into audio and video so that it can be played on the screen.

User Interaction: We provide software that lets users control what they watch: selecting a channel, pausing, rewinding, or choosing on-demand content. A feature that stands out against traditional TV is that users can also interact with the content.
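The steps above can be sketched as a toy model in Python. It is only an illustration of the flow: a byte string stands in for video, fixed-size slicing stands in for encoding, and joining the pieces stands in for decoding. The class and function names, and the idea of picking the server with the lowest latency, are assumptions made for the example rather than a description of any particular IPTV provider.

```python
class EdgeServer:
    """One CDN node: a name, a latency to the viewer, and stored segments per channel."""

    def __init__(self, name, latency_ms, segments=None):
        self.name = name
        self.latency_ms = latency_ms
        self.segments = segments or {}


def encode_to_segments(content, segment_size):
    """Processing step: split content into fixed-size stream segments."""
    return [content[i:i + segment_size] for i in range(0, len(content), segment_size)]


def pick_server(servers, channel):
    """CDN step: choose the lowest-latency server that stores the channel."""
    candidates = [s for s in servers if channel in s.segments]
    if not candidates:
        raise LookupError(f"no server holds channel {channel!r}")
    return min(candidates, key=lambda s: s.latency_ms)


def stream(servers, channel):
    """Transmission step: yield the channel's segments in order from the chosen server."""
    server = pick_server(servers, channel)
    yield from server.segments[channel]


def play(servers, channel):
    """Device step: reassemble received segments (stands in for real decoding)."""
    return b"".join(stream(servers, channel))
```

Because `stream` is a generator, the device can start handling the first segment before the last one has been sent, which is the basic difference between streaming and downloading a whole file first.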

#EchoesOfWisdom#TheLastofUs#jjk271#John Wick#Helly Hansen#OQTF#Philippine#iptv#french iptv#abonnement iptv#best iptv

2 notes

Text

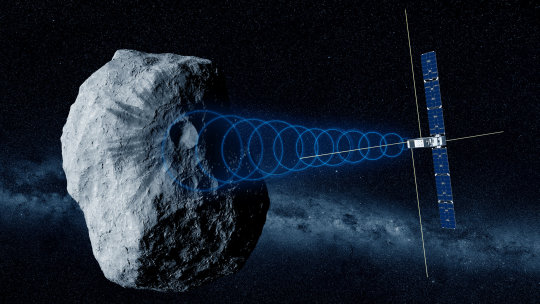

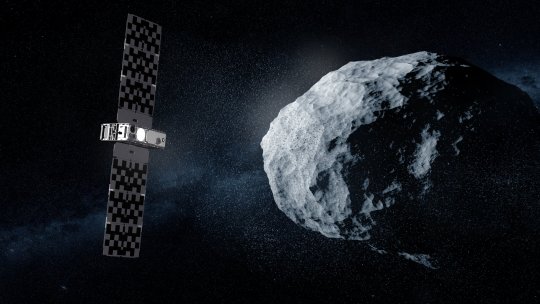

Hera asteroid mission's CubeSat passengers signal home

The two CubeSat passengers aboard ESA's Hera mission for planetary defense have exchanged their first signals with Earth, confirming their nominal status. The pair were switched on to check out all their systems, marking the first operation of ESA CubeSats in deep space.

"Each CubeSat was activated for about an hour in turn, in live sessions with the ground to perform commissioning—what we call 'are you alive?' and 'stowed checkout' tests," explains ESA's Hera CubeSats Engineer Franco Perez Lissi.

"The pair are currently stowed within their Deep Space Deployers, but we were able to activate every onboard system in turn, including their platform avionics, instruments and the inter-satellite links they will use to talk to Hera, as well as spinning up and down their reaction wheels which will be employed for attitude control."

Launched on 7 October, Hera is ESA's first planetary defense mission, headed to the first solar system body to have had its orbit shifted by human action: the Dimorphos asteroid, which was impacted by NASA's DART spacecraft in 2022.

Traveling with Hera are two shoebox-sized "CubeSats" built up from standardized 10-cm boxes. These miniature spacecraft will fly closer to the asteroid than their mothership, taking additional risks to acquire valuable bonus data.

Juventas, produced for ESA by GOMspace in Luxembourg, will make the first radar probe within an asteroid, while Milani, produced for ESA by Tyvak International in Italy, will perform multispectral mineral prospecting.

The commissioning took place from ESA's ESOC mission control center in Darmstadt in Germany, linked in turn to ESEC, the European Space Security and Education Center, at Redu in Belgium. This site hosts Hera's CubeSat Mission Operations Center, from where the CubeSats will be overseen once they are flying freely in space.

Juventas was activated on 17 October, at 4 million km away from Earth, while Milani followed on 24 October, nearly twice as far at 7.9 million km away.

The distances involved meant the team had to put up with tense waits for signals to pass between Earth and deep space, involving a 32.6 second round-trip delay for Juventas and a 52 second round-trip delay for Milani.
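These delays follow from the time radio signals, which travel at the speed of light, need to cover the distance twice. The short sketch below does that arithmetic; the function names are made up for the example, and it ignores any processing time on the ground or aboard the spacecraft.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s


def round_trip_delay_s(distance_km):
    """Time for a signal to reach the spacecraft and for the reply to return."""
    return 2 * distance_km / SPEED_OF_LIGHT_KM_S


def distance_for_delay_km(round_trip_s):
    """Distance implied by a given round-trip light time."""
    return round_trip_s * SPEED_OF_LIGHT_KM_S / 2
```

With these, `round_trip_delay_s(7.9e6)` comes to about 52.7 seconds, in line with the figure quoted for Milani. For Juventas, `distance_for_delay_km(32.6)` gives roughly 4.9 million km, so the "4 million km" quoted above is presumably a rounded figure or refers to a slightly different moment; the article does not say which.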

"During this CubeSat commissioning, we have not only confirmed the CubeSat instruments and systems work as planned but also validated the entire ground command infrastructure," explains Sylvain Lodiot, Hera Operations Manager.

"This involves a complex setup where data are received here at the Hera Missions Operations Center at ESOC but telemetry also goes to the CMOC at Redu, overseen by a Spacebel team, passed in turn to the CubeSat Mission Control Centers of the respective companies, to be checked in real time. Verification of this arrangement is good preparation for the free-flying operational phase once Hera reaches Dimorphos."

Andrea Zanotti, Milani's Lead Software Engineer at Tyvak, adds, "Milani didn't experience any computer resets or out of limits currents or voltages, despite its deep space environment which involves increased exposure to cosmic rays. The same is true of Juventas."

Camiel Plevier, Juventas's Lead Software Engineer at GomSpace, notes, "More than a week after launch, with 'fridge' temperatures of around 5°C in the Deep Space Deployers, the batteries of both CubeSats maintained a proper high state of charge. And it was nice to see how the checkout activity inside the CubeSats consistently warmed the temperature sensors throughout the CubeSats and the Deep Space Deployers."

The CubeSats will stay within their Deployers until the mission reaches Dimorphos towards the end of 2026, when they will be deployed at very low velocity of just a few centimeters per second. Any faster and—in the ultra-low gravitational field of the Great Pyramid-sized asteroid—they might risk being lost in space.

Franco adds, "This commissioning is a significant achievement for ESA and our industrial partners, involving many different interfaces that all had to work as planned: all the centers on Earth, then also on the Hera side, including the dedicated Life Support Interface Boards that connect the main spacecraft with the Deployers and CubeSats.

"The concept that a spacecraft can work with smaller companion spacecraft aboard them has been successfully demonstrated, which is going to be followed by more missions in the future, starting with ESA's Ramses mission for planetary defense and then the Comet Interceptor spacecraft."

From this point, the CubeSats will be switched on every two months during Hera's cruise phase, to undergo routine operations such as checkouts, battery conditioning and software updates.

TOP IMAGE: Juventas studies asteroid's internal structure. Credit: ESA/Science Office

LOWER IMAGE: Milani studies asteroid dust. Credit: ESA-Science Office

3 notes

Text

Skywave Linux v5 is Now on Debian Sid!

Enjoy shortwave radio and overseas broadcasts, no matter where you are. Skywave Linux brings the signals to you. Broadcasting, amateur radio, maritime, and military signals are available at your fingertips.

Skywave Linux is a free and live computing environment you boot from a flash drive on your PC. Start it up, pick a radio server somewhere in the world, and tune in some stations.

Skywave Linux brings you the signals, whether or not you have a big outdoor antenna or can afford an expensive communications receiver. Hundreds and hundreds of volunteer operated radio servers are on the internet, which let you tune the airwaves and pick up broadcasts in excellent locations and on high performance equipment.

If you are into FT-8, PSK-31, JT-65, or other digimodes, you can decode the signals in Skywave Linux. It also has tools for decoding weather satellites, ACARS, and ADS-B signals.

Not only is Skywave Linux a prime system for software defined radio, but also for programming and coding. It has the Neovim editor and support for several programming languages: Python, Lua, Go, and JavaScript. It is a great system for Web developers.

Debian Sid is now the base operating system which Skywave Linux builds upon. It is debloated, tuned, and tweaked for speed, so that you get the best possible computing performance. It works nicely on old laptops; it is super fast on a multi core, high spec PC.

For shortwave listening, weather satellite decoding, or airband monitoring, Skywave Linux is the system you want!

4 notes
