# Streaming Software Comparison

A Beginner's Guide to Streaming: Unveiling the Power of OBS Software and Hardware Encoding

Introduction: In today’s digital age, streaming has become an increasingly popular way to connect, share content, and interact with a global audience. Whether you’re an aspiring gamer, a talented musician, or a knowledgeable creator, streaming allows you to showcase your talents and engage with viewers in real time. To embark on this exciting journey, you’ll need the right tools and knowledge. In…

#Best Streaming Practices#Encoding Methods Comparison#Hardware Encoding Benefits#Hardware vs. Software Encoding#How to Start Streaming#Live Streaming Essentials#Live Streaming Setup#OBS Features and Functions#OBS Scene Creation#OBS Software Guide#OBS Tutorial#OBS User Interface#Software Encoding Explained#Stream Quality Optimization#Streaming Equipment Guide#Streaming for Beginners#Streaming Platform Choices#Streaming Software Comparison#Streaming Tips and Tricks

What is the best and safest YouTube to MP4 converter for a Windows PC?

1. SameMovie VideoOne Downloader. Pros: Clean UI, supports HD/4K downloads, fast speed, no ads, can download entire playlists & metadata. Great balance of ease of use and performance. Cons: Some advanced features are in the paid version (but worth it if you're a regular user).

2. 4K Video Downloader. Pros: Good for batch downloads, subtitles, and high-res videos. Cons: The free version limits downloads, and updates are sometimes buggy.

3. yt-dlp (command-line tool). Pros: Extremely powerful, fully customizable, works with many sites. Cons: Requires technical know-how; no GUI unless you add one.

4. SnapDownloader. Pros: Multi-platform, fast, supports tons of websites. Cons: Paid after the free trial; the UI isn’t the most intuitive.

5. Online tools (like y2mate, SaveFrom). Pros: Quick and browser-based, no installation needed. Cons: Ads everywhere, limited output quality, not secure for regular use.

#youtube downloader#video downloader#download youtube videos#streaming tools#tech tips#digital tools#download tutorial#yt downloader#save youtube videos#software comparison#video saving tips#content creator tools#streaming hacks#media downloader

Jellyfin vs Plex: Best Self-hosted Media Server

Two names generally come up when self-hosting your own media files: the Jellyfin and Plex streaming platforms. Both have great capabilities, so let’s look at Jellyfin vs Plex and see which one might be the best fit for hosting video and audio files on your home media server. We will compare Plex vs Jellyfin in the following categories for media servers: Device compatibility, User…

#hardware transcoding#Jellyfin media server#Jellyfin vs Plex#live TV support#media server software comparison#media streaming solutions#open-source media server#Plex media server#Plex Pass features#remote media access

the scale of AI's ecological footprint

standalone version of my response to the following:

"you need soulless art? [...] why should you get to use all that computing power and electricity to produce some shitty AI art? i don’t actually think you’re entitled to consume those resources." "i think we all deserve nice things. [...] AI art is not a nice thing. it doesn’t meaningfully contribute to us thriving and the cost in terms of energy use [...] is too fucking much. none of us can afford to foot the bill." "go watch some tv show or consume some art that already exists. […] you know what’s more environmentally and economically sustainable […]? museums. galleries. being in nature."

you can run free and open source AI art programs on your personal computer, with no internet connection. this doesn't require much more electricity than running a resource-intensive video game on that same computer. i think it's important to consume less. but if you make these arguments about AI, do you apply them to video games too? do you tell Fortnite players to play board games and go to museums instead?

speaking of museums: if you drive 3 miles total to a museum and back home, you have created about as much CO2 as generating AI images for 24 hours straight (this comes out to roughly 1,440 AI images). "being in nature" also involves at least this much driving, usually. i don't think these are more environmentally-conscious alternatives.

obviously, an AI image model costs energy to train in the first place, but take Stable Diffusion v2 as an example: it took 40,000 to 60,000 kWh to train. let's go with the upper bound. if you assume ~125g of CO2 per kWh, that's ~7.5 tons of CO2. to put this into perspective, a single person driving a single car for 12 months emits 4.6 tons of CO2. meanwhile, for example, the creation of a high-budget movie emits 2840 tons of CO2.

is the carbon cost of a single car being driven for 20 months, or 1/378th of a Marvel movie, worth letting anyone with a mid-range computer, anywhere, run free offline software that consumes a gaming session's worth of electricity to produce hundreds of images? i would say yes. in a heartbeat.

even if you see creating AI images as "less soulful" than consuming Marvel/Fortnite content, it's undeniably "more useful" to humanity as a tool. not to mention this usefulness includes reducing the footprint of creating media. AI is more environment-friendly than human labor on digital creative tasks, since it can get a task done with much less computer usage, doesn't commute to work, and doesn't eat.

and speaking of eating, another comparison: if you made an AI image program generate images non-stop for every second of every day for an entire year, you could offset your carbon footprint by… eating 30% less beef and lamb. not pork. not even meat in general. just beef and lamb.

the tech industry is guilty of plenty of horrendous stuff. but when it comes to the individual impact of AI, saying "i don’t actually think you’re entitled to consume those resources. do you need this? is this making you thrive?" to an individual running an AI program for 45 minutes a day for a month is equivalent to questioning whether that person is entitled to a single 3 mile car drive once per month or a single meatball's worth of beef once per month. because all of these have roughly the same CO2 footprint.

so yeah. i agree, i think we should drive less, eat less beef, stream less video, consume less. but i don't think we should tell people "stop using AI programs, just watch a TV show, go to a museum, go hiking, etc", for the same reason i wouldn't tell someone "stop playing video games and play board games instead". i don't think this is a productive angle.

(sources and number-crunching under the cut.)

good general resource: GiovanH's article "Is AI eating all the energy?", which highlights the negligible costs of running an AI program, the moderate costs of creating an AI model, and the actual indefensible energy waste coming from specific companies deploying AI irresponsibly.

CO2 emissions from running AI art programs: a) one AI image takes 3 Wh of electricity. b) one AI image takes 1 minute in, for example, Midjourney. c) so if you create 1 AI image per minute for 24 hours straight (1,440 images × 3 Wh), or for roughly 45 minutes per day for a month, you've consumed 4.3 kWh. d) using the UK electric grid through 2024 as an example, the production of 1 kWh releases 124g of CO2. therefore the production of 4.3 kWh releases 533g (~0.5 kg) of CO2.

CO2 emissions from driving your car: cars in the EU emit 106.4g of CO2 per km. that's about 171.2g for 1 mile, or 513g (~0.5 kg) for 3 miles.
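for anyone who wants to check the AI-vs-driving comparison themselves, here's a minimal Python sketch that just redoes the arithmetic above. every input is one of the figures already quoted (3 Wh per image, 1 image per minute, 124g CO2/kWh, 106.4g CO2/km) plus the standard 1.609344 km per mile; the function names are made up for illustration.

```python
# Redoes the AI-images vs. 3-mile-drive comparison using the figures quoted above.
WH_PER_IMAGE = 3.0          # electricity per generated image
IMAGES_PER_MINUTE = 1       # generation speed assumed in the post
GRID_G_CO2_PER_KWH = 124.0  # UK grid, 2024
CAR_G_CO2_PER_KM = 106.4    # average EU car
KM_PER_MILE = 1.609344


def ai_session_co2_g(minutes: float) -> float:
    """Grams of CO2 for generating images non-stop for `minutes`."""
    images = minutes * IMAGES_PER_MINUTE
    kwh = images * WH_PER_IMAGE / 1000
    return kwh * GRID_G_CO2_PER_KWH


def drive_co2_g(miles: float) -> float:
    """Grams of CO2 for driving `miles` in an average EU car."""
    return miles * KM_PER_MILE * CAR_G_CO2_PER_KM


if __name__ == "__main__":
    print(f"24 h of AI images: {ai_session_co2_g(24 * 60):.0f} g CO2")  # 536
    print(f"3-mile drive:      {drive_co2_g(3):.0f} g CO2")             # 514
```

both land at roughly half a kilogram (536g vs. 514g); the small gap from the 533g figure is just from rounding 4.32 kWh down to 4.3.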

costs of training the Stable Diffusion v2 model: quoting GiovanH's article linked above: "Generative models go through the same process of training. The Stable Diffusion v2 model was trained on A100 PCIe 40 GB cards running for a combined 200,000 hours, which is a specialized AI GPU that can pull a maximum of 300 W. 300 W for 200,000 hours gives a total energy consumption of 60,000 kWh. This is a high bound that assumes full usage of every chip for the entire period; SD2’s own carbon emission report indicates it likely used significantly less power than this, and other research has shown it can be done for less." at 124g of CO2 per kWh, this comes out to 7440 kg.
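the training numbers can be redone the same way. this sketch takes the quoted upper bound (200,000 GPU-hours at a 300 W maximum draw) and the 124g/kWh grid figure, then converts the result into the comparisons from the main post, using its 4.6 tons/year car and 2840 tons/movie figures. the variable names are just illustrative.

```python
# Upper-bound training estimate for Stable Diffusion v2, from the quoted figures.
GPU_HOURS = 200_000
GPU_MAX_WATTS = 300
GRID_KG_CO2_PER_KWH = 0.124
CAR_KG_CO2_PER_YEAR = 4_600   # one person driving one car for 12 months
MOVIE_KG_CO2 = 2_840_000      # one high-budget movie

energy_kwh = GPU_HOURS * GPU_MAX_WATTS / 1000     # watt-hours -> kWh
training_kg = energy_kwh * GRID_KG_CO2_PER_KWH

car_months = training_kg / CAR_KG_CO2_PER_YEAR * 12
movie_fraction = MOVIE_KG_CO2 / training_kg      # training = 1/N of a movie

print(f"energy: {energy_kwh:,.0f} kWh")                  # 60,000 kWh
print(f"CO2: {training_kg:,.0f} kg")                     # 7,440 kg
print(f"~{car_months:.1f} months of driving")            # ~19.4
print(f"~1/{movie_fraction:.0f} of a high-budget movie") # ~1/382
```

with 124g/kWh this gives about 19.4 months of driving and roughly 1/382 of a movie; the "20 months" and "1/378th" in the main post come from rounding up to ~125g/kWh and 7.5 tons.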

CO2 emissions from red meat: a) carbon footprint of eating plenty of red meat, some red meat, only white meat, no meat, and no animal products: the difference between a beef/lamb diet and a no-beef-or-lamb diet comes down to 600 kg of CO2 per year. b) Americans consume 42g of beef per day. this doesn't really account for lamb (egads! my math is ruined!) but that's about 1.2 kg per month or 15 kg per year. that single 42g portion has a ~1.65kg CO2 footprint. so our 3 mile drive/4.3 kWh of AI usage has about the same carbon footprint as a 12g piece of beef. roughly the size of a meatball [citation needed].
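and the beef math, as a sketch: it derives a per-gram footprint from the 600 kg/year diet gap and the 42g/day figure, then converts the ~0.5 kg drive/AI figure into grams of beef. like the post, it ignores lamb and pins the whole 600 kg on beef, so it's a rough estimate, not a lifecycle analysis. it also checks the "a year of non-stop AI images vs. eating 30% less beef and lamb" claim, assuming 1 image per minute at 3 Wh and 124g/kWh.

```python
# Rough beef-equivalent math from the figures above (lamb ignored, as in the post).
DIET_GAP_KG_CO2_PER_YEAR = 600   # beef/lamb diet vs. no-beef-or-lamb diet
BEEF_G_PER_DAY = 42              # average American beef consumption
DAYS_PER_YEAR = 365

beef_g_per_year = BEEF_G_PER_DAY * DAYS_PER_YEAR                        # 15,330 g
co2_g_per_g_beef = DIET_GAP_KG_CO2_PER_YEAR * 1000 / beef_g_per_year    # ~39 g
daily_portion_kg_co2 = BEEF_G_PER_DAY * co2_g_per_g_beef / 1000


def beef_equivalent_g(co2_g: float) -> float:
    """Grams of beef with the same footprint as `co2_g` grams of CO2."""
    return co2_g / co2_g_per_g_beef


# A full year of generating 1 image/minute at 3 Wh each, on a 124 g/kWh grid.
ai_year_kg = 60 * 24 * DAYS_PER_YEAR * 3 / 1000 * 124 / 1000

print(f"42 g of beef: {daily_portion_kg_co2:.2f} kg CO2")            # 1.64
print(f"0.5 kg CO2 = {beef_equivalent_g(500):.1f} g of beef")        # 12.8
print(f"a year of AI images = {ai_year_kg / DIET_GAP_KG_CO2_PER_YEAR:.0%} of the diet gap")  # 33%
```

the results (about 1.64 kg per portion, about 12.8g of beef per 0.5 kg of CO2, and a year of non-stop images at about a third of the diet gap) line up with the rounded figures in the post.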

The Artisul team was kind enough to send me their Artisul D16 display tablet to review! Timelapse and review can be found under the read more.

I have been using the same model of display tablet for over 10 years now (a Wacom Cintiq 22HD) and feel like I might be set in my ways, so getting the chance to try a different brand of display tablet was also a new experience for me!

The Unboxing

The tablet arrived in high-quality packaging with enough protection that none of the components got scratched or banged up in the shipping process. I was pleasantly surprised that in addition to the tablet, pen, stand, cables and nibs, it also included a smudge guard glove and a pen case.

The stand is very lightweight and I was at first worried that it would not be able to hold up the tablet safely, but it held up really well. I appreciated that it offered steeper levels of inclination for the tablet, since I have seen plenty of other display tablets that don’t offer that level of ergonomics for artists. My only gripe is that you can’t anchor the tablet to the stand. It rests on the stand and can easily be taken on or off, but that also means that you can bump into it and dislodge it from the stand if you aren’t careful. It would require significant force, but as a cat owner, I know that a scenario like that is more likely than I’d like.

Another thing I noticed is how light the tablet is in comparison to my Cintiq. Granted, my Cintiq is larger (22 inches vs the 15.8 inches of the Artisul D16), but the Artisul D16 weighs only about 1.5 kg. While I don’t consider display tablets that require a PC and an outlet to work really portable, it would be a lot easier to move the Artisul D16 from one space to another. In comparison, my Cintiq weighs in at a proud 8.5 kg, making it a chore to move around. I have it hooked up to a monitor stand to be able to move it more easily across my desk.

The Setup

The setup of the tablet was quick as well, with only minor hiccups. The drivers installed quickly and basic setup was done in a matter of minutes. That doesn’t mean it came without issues: the cursor vanished as soon as I hovered over the driver window, making it a guessing game where I was clicking, and the pen calibration refused to work on the tablet screen and always defaulted to my regular screen instead. I ended up using the out-of-the-box pen calibration for my test drawing, which worked well enough.

The tablet comes with customizable hot-keys that you can reassign in the driver software. I did not end up using the hot-keys, since I use a Razer Tartarus for all my shortcuts, but I did play around with them to get a feel for them. The zoom wheel had a very satisfying haptic feel, which I really enjoyed, and as far as I could tell, you can map a lot of shortcuts to the buttons, including with modifier keys like ctrl, shift, alt and the win key. I noticed that there was no option to map numpad keys to these buttons, but I was informed by my stream viewers that very few people have a full-size keyboard with a numpad anymore.

The pen comes with two buttons as well. Unlike the hot-keys on the side of the tablet, these are barely customizable. I was only able to assign mouse clicks to them (right, left, scroll wheel click, etc.) and no other hotkeys. On my Cintiq, I have the alt key mapped to one pen button, enabling me to color pick with a single click of the pen. The other button is mapped to the tablet menu for easy display switches. Not having this level of customization was a bit of a bummer, but I just ended up mapping the alt key to a new button on my Razer Tartarus and moved on.

The pen was very similar in size to my Wacom pen, but significantly lighter. It also rattled slightly when shaken, but on inspection this was just the buttons clicking against the outer case and not an internal issue. The pen is made from one material, with a smooth plastic finish. I would have liked a rubber-like material at the grip, like on the Wacom pen, for better handling, but it still worked fine without it.

Despite not being able to calibrate the pen for the display tablet, the cursor offset was minimal. It took me a while at the beginning to adjust to the slight difference from my current tablet, but it was easy to get used to and I was able to smoothly ink and color with the tablet. The screen surface was very smooth, reminding me more of an iPad surface. The included smudge guard glove helped mitigate any slipping or sliding this might have caused, enabling me to draw smoothly. As with the cursor offset, it took me a while to get used to the different pressure sensitivity of the tablet, but I adapted quickly.

So what do I think of it?

Overall, drawing felt different on this tablet, but I can easily see myself getting used to its quirks with time. Most of the issues I had came down to QoL (quality-of-life) features I am used to from my existing tablet.

But I think that’s where the most important argument for the tablet comes in: the price.

I love my Cintiq. I can do professional-grade work on it, and I liked it so much that I rebought the same model after my old one developed screen issues. But it still costs more than 1,000 €, even after being on the market for over 10 years (for reference, I bought it refurbished for about 1,500 € in 2014). The Artisul D16, on the other hand, runs you a bit more than 200 €. That is a significant price difference! I often get asked by aspiring artists what tools I use, and while I am always honest with them, I also preface it by saying that they should not invest in a Cintiq if they are just starting out. They are high-quality professional tools and have a price point that reflects that. You do not need these expensive tools to create art. You can get great results with much cheaper alternatives! I do this for a living, so I can justify paying extra for the QoL upgrades the Cintiq offers me, but I have no illusion that it is an accessible tool for most people.

I can recommend the Artisul D16 as a beginner screen tablet for people who are just getting into art or want to try out a display tablet. I wouldn’t give up my Cintiq for it, but I can appreciate the value it offers for the competitive price point. If you want to get an Artisul D16 for yourself, you can check out their shop at the links below!

AMAZON.US: https://www.amazon.com/dp/B07TQLGC81

AMAZON.JP: https://www.amazon.co.jp/dp/B07T6ZT84V

AMAZON.MX: https://www.amazon.com.mx/dp/B07T6ZT84V

Once again thank you to the Artisul team for giving me the opportunity to review their display tablet!

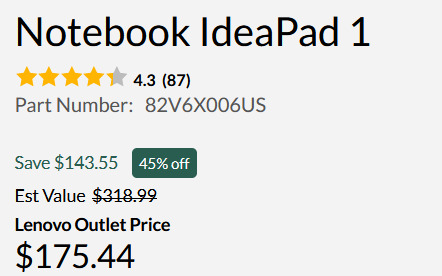

How to Buy a Computer for Cheaper

Buy refurbished. I'm going to show you how, and, in general, how to buy a better computer than you currently have. I'm fairly tech-knowledgeable, but not an expert. But this is how I've bought my last three computers for personal use and business (graphics). I'm writing this for people who barely know computers. If you have a techie friend or family member, having them help can take a lot of the stress out of buying a new computer.

There are three numbers you want to know from your current computer: hard drive size, RAM, and processor speed (slightly less important, unless you're gaming, doing 3D rendering, or something else like that).

We're going to assume you use Windows, because if you use Apple I can't help, sorry.

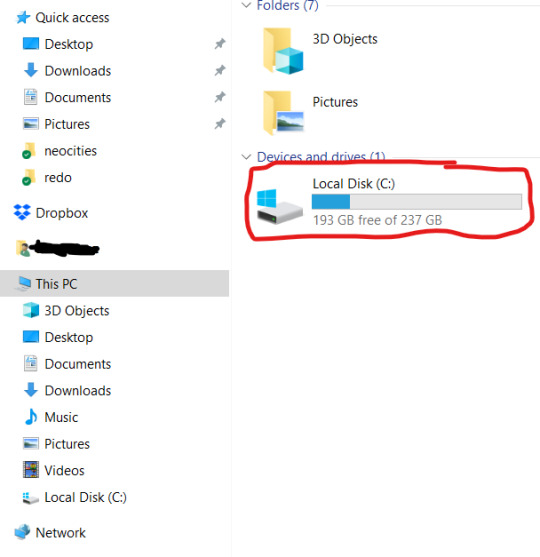

First is the hard drive. This is how much space you have to store files, measured in bytes. These days all hard drives are measured in gigabytes or terabytes (1000 gigabytes = 1 terabyte). To get your hard drive size, open Windows Explorer and go to This PC (or My Computer if you have a really old OS).

To get more details, you can right-click on the drive and open Properties. But now you know your hard drive size: 237 GB in this case (this is rather small, but that's okay for this laptop). If you're planning on storing a lot of videos or big photos, or have a lot of applications, you want a MINIMUM of 500 GB. You can always have external drives as well.
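If you're curious (or your techie friend is), the same number can be read with a few lines of Python. This is a minimal sketch using the standard library's `shutil.disk_usage`, and the function name is made up for this example. It also shows why the size Windows gives you looks smaller than what stores advertise: stores count 1 GB as 1,000,000,000 bytes, while Windows divides by 1024 three times but still calls it "GB", so a drive sold as 256 GB shows up as roughly 238 GB.

```python
import os
import shutil


def drive_size_report(path: str) -> dict:
    """Total and free space for the drive containing `path`, in two kinds of "GB"."""
    usage = shutil.disk_usage(path)  # named tuple of total, used, free (in bytes)
    return {
        # Decimal gigabytes, the way drive makers and stores label sizes.
        "total_gb_as_sold": usage.total / 1000**3,
        # Binary gigabytes, the way Windows Explorer reports "GB".
        "total_gb_as_windows_shows": usage.total / 1024**3,
        "free_gb_as_windows_shows": usage.free / 1024**3,
    }


if __name__ == "__main__":
    # os.sep is "\\" on Windows and "/" elsewhere; abspath turns it into the
    # root of the current drive (e.g. "C:\\") or the filesystem root ("/").
    root = os.path.abspath(os.sep)
    for label, value in drive_size_report(root).items():
        print(f"{label}: {value:.0f}")
```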

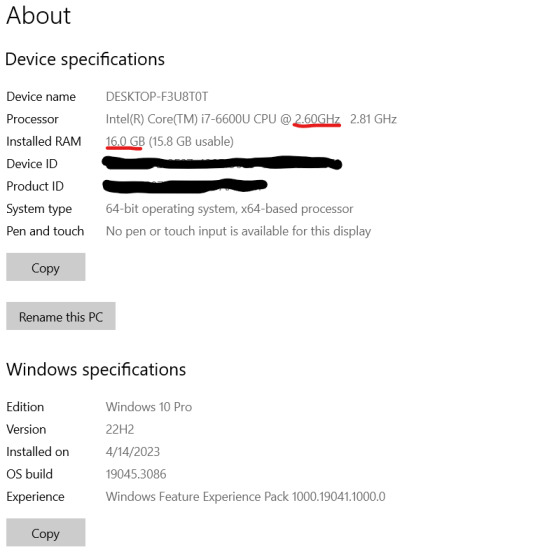

While you've got this open, right-click on This PC (or My Computer) and choose Properties. This'll give you a lot of information that can be useful if you're trying to get tech support.

I've underlined in red the two key things. Processor: it can help to know the whole bit (or at least the Intel i# bit) just so you don't buy one that's a bunch older, but processor models are confusing and beyond me. The absolutely important bit is the speed, in gigahertz (GHz). Bigger is faster. The processor speed is how fast your computer can run. In this case the processor is 2.60 GHz, which is just fine for most things.

The other bit is RAM. This is "random-access memory", aka memory, which is easy to confuse with how much space you have. No. RAM is basically how much your computer can work on at once, which affects how fast it can open and switch between things. This laptop has 16 GB RAM. Make sure you note that this is the RAM, because it and the hard drive use the same units.

If you're mostly writing, using spreadsheets, streaming video, or doing light graphics work, 16 GB is fine. If you have a lot of things open at a time, or you're gaming, doing 3D modeling, or doing digital art, get at least 32 GB or it's gonna lag a lot.

In general, if you find your current laptop slow, you want a new one with more RAM and a processor that's at least slightly faster. If you're getting a new computer to use new software, look at the system requirements and exceed them.
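If it helps to see that rule of thumb spelled out, here's a small Python sketch that compares the three numbers. It's only an illustration: the `Specs` class and both function names are made up for this example, the requirements in the usage lines are invented numbers rather than any real program's, and GHz is treated as a simple stand-in for speed even though, as mentioned above, processor models muddy that comparison.

```python
from dataclasses import dataclass


@dataclass
class Specs:
    ram_gb: float      # RAM ("memory")
    storage_gb: float  # hard drive / SSD size
    cpu_ghz: float     # processor speed


def meets_requirements(pc: Specs, required: Specs) -> bool:
    """True if every number is at least what the program asks for."""
    return (
        pc.ram_gb >= required.ram_gb
        and pc.storage_gb >= required.storage_gb
        and pc.cpu_ghz >= required.cpu_ghz
    )


def is_upgrade(candidate: Specs, current: Specs) -> bool:
    """Rule of thumb: more RAM and a processor that's at least slightly faster."""
    return candidate.ram_gb > current.ram_gb and candidate.cpu_ghz > current.cpu_ghz


if __name__ == "__main__":
    current = Specs(ram_gb=16, storage_gb=237, cpu_ghz=2.60)    # the laptop in the screenshots
    candidate = Specs(ram_gb=32, storage_gb=512, cpu_ghz=2.80)  # hypothetical refurb listing
    made_up_requirements = Specs(ram_gb=8, storage_gb=20, cpu_ghz=2.0)  # invented numbers

    print(meets_requirements(candidate, made_up_requirements))  # True
    print(is_upgrade(candidate, current))                        # True
```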

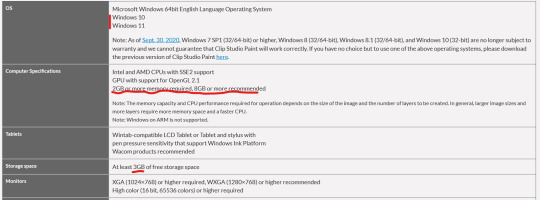

I'll show you an example of that. Let's say I wanted to start doing digital art on this computer, using Clip Studio Paint. Generally the easiest way to find the requirements is to search for 'program name system requirements' in your search engine of choice. You can click around their website if you want, but just searching is a lot faster.

That gives me this page

(Clip Studio does not have very heavy requirements).

Under Computer Specs it tells you the processor types and your RAM requirements. You're basically going to be good for the processor, no matter what. That 2 GB minimum of memory is, again, the RAM.

Storage space is how much space on your hard drive it needs.

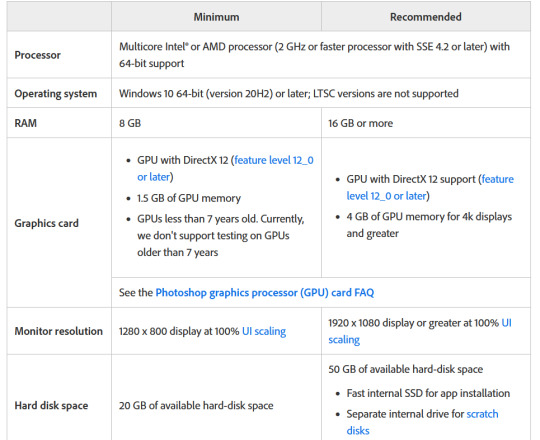

Actually for comparison, let's look at the current Photoshop requirements.

Photoshop wants LOTS of speed and space, greedy bastard that it is. (The graphics card bit is somewhat beyond my expertise, sorry.)

But now you have your three numbers: hard drive space, RAM (memory) and processor (CPU). Now we're going to find a computer that's better and cheaper than buying new!

We're going to buy ~refurbished~

A refurbished computer is one that was used, returned, and fixed up to sell again. It may have wear on the keyboard or case, but everything inside (aside from the battery, which may hold less charge) should be like new. A good dealer will note the condition. And refurbished means any flaws in the hardware should have been fixed. Refurbished units go through individual quality control that new products usually don't.

I've bought four computers refurbished and only had one dud (Windows kept crashing during set-up). The dud has been returned and we're waiting for the new one.

You can buy refurbished computers from the manufacturers (Lenovo, Dell, Apple, etc.) or from online computer stores (Best Buy and my favorite, Newegg). You want to buy from a reputable store because they'll offer warranties and have a good return policy.

I'm going to show you how to find a refurbished computer on Newegg.

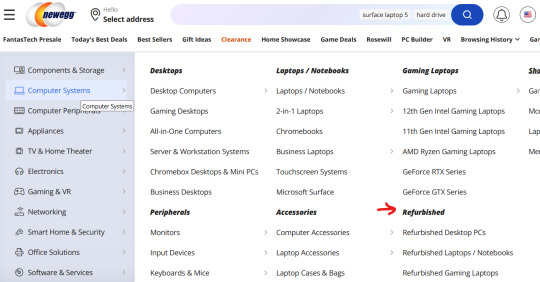

You're going to go to Newegg.com, you're gonna go to computer systems in their menu, and you're gonna find refurbished.

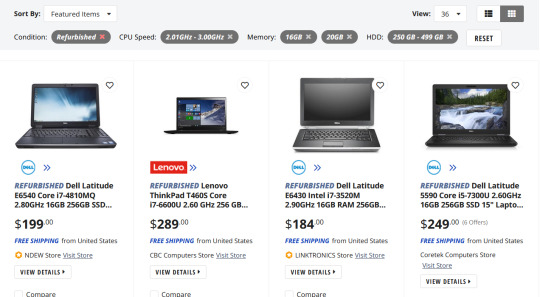

Then, down the side there's a ton of checkboxes where you can select your specifications. If there's a brand you prefer, select that (I like Lenovos A LOT - they last a long time and have very few problems, in my experience. Yes, this is a recommendation).

Put in your memory (RAM), put in your hard drive, put in your CPU speed (processor), and any other preferences like monitor size or which version of Windows you want (I don't want Windows 11 any time soon). I generally just do RAM and hard drive and manually check the CPU, but that's a personal preference. Then hit apply and it'll filter down.

I'm going to say right now, if you are getting a laptop and you can afford to get an SSD, do it. SSD is a solid-state drive, vs a normal hard drive (HDD, hard disk drive). They're less prone to breaking down and they're faster. But they're also more expensive.
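The checkbox filtering on Newegg boils down to something like this Python sketch. The listings are invented examples, not real products or prices, and the function name is made up; the point is just that every box you tick drops anything below your minimums (optionally including anything without an SSD), and what's left can be sorted by price before you go read reviews.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    name: str
    ram_gb: int
    storage_gb: int
    cpu_ghz: float
    has_ssd: bool
    price_usd: float


def filter_listings(listings, min_ram_gb, min_storage_gb, min_cpu_ghz=0.0, ssd_only=False):
    """Keep listings that meet every minimum, cheapest first."""
    matches = [
        item
        for item in listings
        if item.ram_gb >= min_ram_gb
        and item.storage_gb >= min_storage_gb
        and item.cpu_ghz >= min_cpu_ghz
        and (item.has_ssd or not ssd_only)
    ]
    return sorted(matches, key=lambda item: item.price_usd)


# Invented example listings, for illustration only.
LISTINGS = [
    Listing("Laptop A", 16, 512, 2.6, True, 329.0),
    Listing("Laptop B", 8, 1000, 2.4, False, 249.0),
    Listing("Laptop C", 32, 512, 2.8, True, 459.0),
    Listing("Laptop D", 16, 256, 2.7, True, 279.0),
]

if __name__ == "__main__":
    picks = filter_listings(LISTINGS, min_ram_gb=16, min_storage_gb=500, min_cpu_ghz=2.5, ssd_only=True)
    print([item.name for item in picks])  # ['Laptop A', 'Laptop C']
```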

Anyway, we have our filtered list of possible laptops. Now what?

Well, now comes the annoying part. Every model of computer can be different - it can have a better or worse display, it can have a crappy keyboard, or whatever. So you find a computer that looks okay, and you then look for reviews.

Here's our first row of results

Let's take a look at the Lenovo, because I like Lenovos and I loathe Dells (they're... fine...). That ThinkPad T460s is the part to Google (search for 'Lenovo ThinkPad T460s reviews'). Good websites that I trust include PCMag, LaptopMag.com, and Notebookcheck.com (which is VERY techie about displays). But every reviewer will probably have tested one with different specs than the one you're looking at.

Here are key things that will be the same across all of them: keyboard (is it comfortable, etc), battery life, how good is the trackpad/nub mouse (nub mice are immensely superior to trackpads imho), weight, how many and what kind of ports does it have (for USB, an external monitor, etc). Monitors can vary depending on the specs, so you'll have to compare those. Mostly you're making sure it doesn't completely suck.

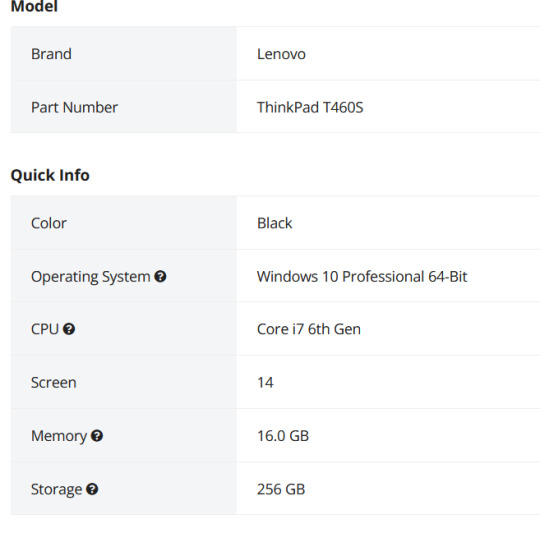

Let's go back to Newegg and look at the specs of that Lenovo. Newegg makes it easy, with tabs for whatever the seller wants to say, the specs, reviews, and Q&A (which is usually empty).

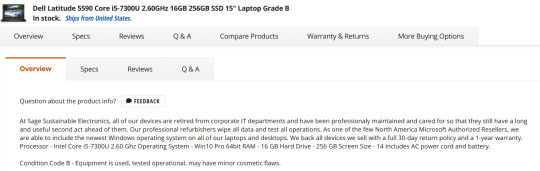

This is the start of the specs. This is actually a lesser model than the laptop we were getting the specs for. It's okay. What I don't like is that the seller gives very little other info, for example on condition. Here's a Dell with much better information - condition and warranty info.

One thing you'll want to do on Newegg is check the seller's reviews. Like on eBay or Etsy, you have to use some judgement. If you worry about that, going to the manufacturer's online outlet is a safer bet, but you won't get quite as good a deal. But the deals are still pretty damn good, as this random computer on Lenovo's outlet shows.

Okay, so I think I've covered everything. I do recommend having a techie friend either help or double check things if you're not especially techie. But this can save you hundreds of dollars or allow you to get a better computer than you were thinking.

i think one of the fundamental problems with the word "techbro" is that it has multiple meanings, some of which contradict each other.

the original term brogrammer referred to programmers who act in a very stereotypical masculine way, as a pejorative. the word "techbro" was sometimes used as a synonym for this. this is why the word "bro" is there, because it's a comparison to frat bros. this is also the only sense mentioned on the wikipedia page. this is also the sense i see the least usage of on tumblr; it was really more of a thing back in 2012-2013 or so.

people also use it to refer to people who are pushing the latest fad; web 3.0, blockchain shit, NFTs, LLMs, whatever. this usage does not require that the person actually knows anything about programming. some of these people genuinely believe in what they're advocating for, some of them are just hopping onto the latest money-making thing. this is the y combinator set.

a third usage is to refer to people who are very into self-hosting, and "own your hardware" type stuff and don't understand that computing is a compromise and not everyone wants to spend all their effort getting stuff to work. this is the rms type. unlike the second definition, this one requires the person to have fairly deep technical knowledge. theoretically you could have someone who doesn't know a lot about computers but is real big into this kind of stuff, but in practice that never happens.

(i'm broadly sympathetic to this type; i avoid music streaming and sync all my music using open-source software, that sort of thing. the "techbro" part, in theory, comes when they look down on others for not making the same choices. of course, the line between "you're looking down on me" and "you're arrogant for simply believing that you're right" is thin.)

in particular, sense-2 and sense-3 "techbros" have completely opposite beliefs! one wants to run everything "in the cloud", the other wants to run everything locally. one wants to let chatgpt run your life, the other hates the idea of something they can't audit being that important. both tend to be very "technology will save us" types, but the way they go about that is very different. one makes very sleek-looking but extremely limited UI, the other will make ultra-customizable, ultra-functional UI that's the most hideous and hard-to-use thing you've seen in your life.

and so you can see here the problem: what can we actually say about "techbros" that's meaningful, other than "techbro is when i don't like someone who likes technology"? if a word isn't used as a self-descriptor, but only as an insult, what stops it from becoming broader and broader until it loses all usefulness?

more on art production ~under capitalism~

reading Who Owns This Sentence?, a very engaging and fiercely critical history of the concept of copyright, and it's pretty fire. there's all sorts of fascinating intricacies in the way the notion of IP formed around the world (albeit so far the narrative has mainly focused on Europe, and to a limited extent China), and the different ideologies that justified the types of monopolies that it granted. the last chapter i read skewers the idea that the ability to exploit copyright and patents is what motivates the writing of books and research/invention, and I'll try and pull out the shape of the argument tomorrow. so far I'm only up to the 18th century; I'm looking forward to the rest of their story of how copyright grew from the limited forms of that period into the monster it is today.

it's on libgen if you wanna read it! i feel like the authors would be hypocrites to object :p

it is making me think about the differences between the making of books and other media, from (since this has been rattling around my head lately) an economic angle...

writing books, at least in the case of fiction, is usually done on a prospective, spec-work kind of basis (you write your novel with no guarantee it will get published unless you're already an established author under contract). admittedly, a lot of us probably read books by authors who managed to 'make it' as professional authors and write full time - but this is not a lucrative thing to do, and to make it work you need truly exceptional luck to get a major hit, or to be extremely prolific in things people want to read.

the films and games most of us watch and play are, by contrast, generally made by teams of salaried people - and thus rarely get made without the belief that they will be profitable. if you went on about your 'monetisation model' when writing a book, people would look at you funny and rightly so, but it's one of the first questions that gets asked when pitching a game.

open source software is a notable comparison here. a lot of it is done for its own sake without any expectation of profit, taking untold hours, but large free software projects tend to sprout foundations, which take donations (typically from companies that use the software) to pay for full time developers. mozilla, notably, gets a huge part of its funding from google paying for their search engine to be the default in Firefox; this in turn drives development of not just Firefox itself but also the Rust programming language (as discussed in this very enlightening talk by Evan Czaplicki). Blender is rightly celebrated as one of the best open source projects for its incredibly fast development, but they do have an office in amsterdam and a number of full time devs.

what money buys in regards to creative works is not motivation, but time - time to work on a project, iterate and polish and all that. in societies where you have to buy food etc. to survive, your options for existence are basically:

- work at a job
- own capital
- rely on someone else (e.g. a parent or partner)
- rely on state benefits if you can get them
- beg
- steal

if you're working at a job, this takes up a lot of your time and energy. you can definitely make art anyway, loads of people do, but you're much more limited in how you can work at it compared to someone who doesn't have to work another job.

so again, what money buys in art is the means of subsistence for someone, freeing them to work fully on realising a project.

where does the money come from that lets people work full time on art? a few places.

one is selling copies of the work itself. what's remarkable is that, when nearly everything can be pirated without a great deal of effort, it is still possible to do this to some degree - though in many ways the ease of digital copying (or at least the fear of it) has forced new models for purely digital creations, which either trade on convenience (streaming services) or, in the case of games, find some way to enforce scarcity, like requiring connection to a central server and including 'in-app purchases', where you pay to have the software record that you are the nebulous owner of an imaginary thing and display this to other players. anyway, whichever exact model, the idea is that you turn the IP into capital which you then use to manufacture a product like 'legal copies', 'subscriptions' or 'accounts with a rare skin unlocked'.

the second is using the work to promote some other, more profitable thing - merchandising, an original work, etc. this is the main way that something like anime makes money (for the production committee, if not the studio) - the anime is, economics-wise, effectively an ad for its own source manga, figurines, shirts etc. the reason why there is so much pro media chasing the tastes of otaku is partly because otaku spend a lot on merch. (though it's also because the doujin scene kind of feeds into 'pro' production)

the third is some kind of patronage relationship, notably government grants, but also academic funding bodies, or selling commissions, or subscriptions on a streaming platform/patreon etc.

grants are how most European animated films are funded, and they often open with the logos of a huge list of arts organisations in different countries. the more places you can get involved, the more funds you can pull on. now, instead of working out how to sell your creation to customers who might buy a copy, under this model you need to convince funding bodies that it fits their remit. requesting grants involves its own specialised language.

in general the issue with the audience patronage model is that it only really pays enough to live on if you're working on a pretty huge scale. a minority make a fortune; the vast majority get a pittance at most, and if they do 'make it', it takes years of persistence.

the fourth is, for physical media, to sell an original. this only works if you can accumulate enough prestige, and the idea is to operate on extreme scarcity. the brief fad of NFTs attempted to abstract the idea of 'owning' an original from the legal right to control the physical object to something completely nebulous. in practice this largely ended up just being a speculative bubble - but then again, a lot of the reason fine art is bought and sold for such eye-watering sums is pretty much the same: the artwork is an arbitrary vehicle for investment.

the fifth is artworks which are kind of intrinsically scarce, like live performances. you can only fit so many people in the house. and in many cases people will pay to see something that could be copied, if it's shown in unique circumstances, like seeing a film at a cinema or festival - though this is a special case of selling copies.

the sixth is to sell advertising: turn your audience into the product, and your artwork into the bait on the hook.

the alternative to all of these options is unpaid volunteer work, like a collab project. the participants are limited to the time and energy they have left after taking care of survival. this can still lead to great things, but it tends to be more unstable by its nature. many of these projects lose steam, or participants flake, and they never get finished - and that's fine! still, huge huge amounts of things already get created on this kind of hobby/indie/doujin basis, generally (tho not always) with no expectation of making enough money to sustain someone.

in every single one of these cases, the economic forces shape the types of artwork that will get made. different media are more or less demanding of labour, and that in turn shapes what types of projects are viable.

books can be written solo, and usually are - collaborations are not the norm there. the same goes for illustrations. on the other hand, if you want to make a hefty CRPG or an action game or a feature length movie, and you're trying to fit that project around your day job... i won't say it's impossible, I can think of some exceptional examples, but it won't be easy, and for many people it just won't be possible.

so, that's a survey of possibilities under the current regime. how vital is copyright really to this whole affair?

one thing that is strange to me is that there aren't a lot of open source games. there are some - i have memories of seeing Tux Racer, but a more recent example would be Barotrauma (which is open source but not free, and does not take contributions from outside the company). could it work? could you pay the salaries of, say, 10 devs on a 'pay what you can' model?

it feels like the only solution to all of this in the long run is some kind of UBI type of thing - that or a very generous art grants regime. if people were free to work on what they wanted and didn't need to be paid, you wouldn't have any reason for copyright. the creations could be publicly archived. but then the question i have is, what types of artwork would thrive in that kind of ecosystem?

I've barely talked about the book that inspired this, but i think it was worth the trouble to get the contours of this kind of analysis down outside my head...

Text

tier list where it's "comparison of the state of the art vs its best open source competitor"

S tier: FOSS is undisputed SOTA:

cryptography

wiki software

compilers and languages

database systems

A tier: FOSS is comparable with SOTA but closed-source competitors exist:

web browsers

operating systems (industry)

graphics libraries

streaming and recording software

text editing

B tier: FOSS can do the job at a professional level, but most prefer closed-source products:

operating systems (consumer)

beer

3d modeling

word processing

messaging applications

C tier: FOSS exists but does not work nearly as well as SOTA:

social media

processor architectures

payment processing

video games

CAD software

digital audio workstations

D tier: FOSS alternatives basically nonexistent:

dating apps

Text

When you're a Writer and a Vtuber...

If you like storytelling, Vtubing, or both, this one's for you 🥰

You'd think there'd be more overlap of "People who want to be fantasy characters and hide their face" and "Writers" but there really isn't. I know, like, me and @moonfeatherblue and that's it for the writing/worldbuilding and Vtuber overlap lololol. I think there should be more of us!

So, let me convince you to become a storytelling/worldbuilding/writing Vtuber like me...

In addition to just loving all the cool things you can do with Live2D as a software (if you like art and you don't already stare at rigging showcases on YouTube, you should. It's so cool!), I also love storytelling in a social media space. Like, how do you tell a story over time? How much information should you reveal and when? What colors, images, and sounds evoke the feelings you want? And what feelings will keep people coming back for more? Basically, Vtubers have made me fall in love with marketing because the best marketing is just storytelling with some sort of call-to-action at the end.

Am I GOOD at marketing? No, lol, but maybe YOU would be! KEEP READING to find out XD

On the flipside, so so many Vtubers are like "I'm the embodiment of sin and also a gamer and a singer" or "I'm a cottagecore whale who is also the collector of lost souls and I play video games" and it's like... clearly Vtuber audiences LIKE the fantasy aspects of this. Why is the Vtuber default just gaming? Why is there not waaaaay more whimsy and storytelling? (This isn't to say Gaming Bad TM. I'm literally a game dev. I NEED streamers to play my games. I love them.) There's just so much opportunity for cool storytelling with Vtubers!

If you wanna get into being a fictional character/having a kayfabe-like wrestling persona for your writing, you should 100% get into Vtubing. You don't even need to stream to be a Vtuber -- and honestly I'm not even sure you need to post videos necessarily lol -- GIFs and pictures could probably get you pretty far on the right platforms. You could start out using a PNG -- there are so many good, free PNGtuber programs, and you could use Picrew images (with the right permissions!) for your PNGtuber to start. Or if you can draw, you can just DO THAT.

Or if you have like $50 USD, Raindrop Atelier has a FULLY rigged Vtuber "Picrew" with chibi models that are so high quality and cute! Or if you have like $300 USD, you could get one of the Picrew-like Vtubers from Charat Genesis. (Yes, that's a lot BUT most Vtuber models — 2D and 3D alike — run you from $2000 to $8000 sooooo $300 is a steal in comparison.)

And then, over time, you could post and reveal facts about yourself and tidbits of your lore! And you could give writing advice or talk about your worldbuilding in-character! I've had this idea to make a fantasy creature mockumentary for years now, actually, and I'm just trying to find a good scope for it...

On the downside, as with all "storytelling in real time", it can be a little discouraging at the start when you don't have a big audience. And this specific niche is especially underdeveloped so it's definitely hard to find a foothold. BUT I find that, because it's all play -- it's all FANTASY -- I have a lot more energy for this kind of marketing than I would if I were promoting myself as "just a writer, trying to sell my writing." Getting people to like me is exhausting. Getting people to like my writing is part of the writing process!

Cuz like, aside from just "inhabiting a fictional character" and "reducing your face presence online while still giving your personal brand a face", being a Vtuber is also a fun way to tie into your work.

Like, my Vtuber model is Arlasaire and she's the protagonist of my (probably) upcoming RPG, Untitled Yssaia Game (not the final name, real name pending lol). She talks about cooking and music and geography over on my YouTube channel and it's all infused with cool fantasy music and sound effects. She speaks in and teaches you about conlangs in the world. And she goes on fantasy dates or fantasy vacations! So now, I'm getting people attached to this character and her world BEFORE I even get into her actual story. And all her merchandising and stuff is really just game merchandising and so on and so forth... and that's just good branding! Hopefully some day, this translates into more people playing the game and seeing more of my work!

But obviously, I'm very new to marketing or else this blog post would convince more writers to become Vtubers and more Vtubers to write lol.

Anyway, here's some Arlasaire art (art: LexiKoumori on IG, rig: Kanijam) AS WELL AS some of her earlier model sketches before I asked for her hair to be silkier and less feathery! And lastly, a short unedited video of me being cringe so you KNOW you could do better :DDDD

#writeblr#worldbuilding#live2d#vtuber#marketing#pngtuber#vtuber model#vtubing#fantasy writing#fantasy lore#amaiguri

Text

This Week in Rust 572

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on X (formerly Twitter) or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Want TWIR in your inbox? Subscribe here.

Updates from Rust Community

Official

October project goals update

Next Steps on the Rust Trademark Policy

This Development-cycle in Cargo: 1.83

Re-organising the compiler team and recognising our team members

This Month in Our Test Infra: October 2024

Call for proposals: Rust 2025h1 project goals

Foundation

Q3 2024 Recap from Rebecca Rumbul

Rust Foundation Member Announcement: CodeDay, OpenSource Science(OS-Sci), & PROMOTIC

Newsletters

The Embedded Rustacean Issue #31

Project/Tooling Updates

Announcing Intentrace, an alternative strace for everyone

Ractor Quickstart

Announcing Sycamore v0.9.0

CXX-Qt 0.7 Release

An 'Educational' Platformer for Kids to Learn Math and Reading—and Bevy for the Devs

[ZH][EN] Select HTML Components in Declarative Rust

Observations/Thoughts

Safety in an unsafe world

MinPin: yet another pin proposal

Reached the recursion limit... at build time?

Building Trustworthy Software: The Power of Testing in Rust

Async Rust is not safe with io_uring

Macros, Safety, and SOA

how big is your future?

A comparison of Rust’s borrow checker to the one in C#

Streaming Audio APIs in Rust pt. 3: Audio Decoding

[audio] InfinyOn with Deb Roy Chowdhury

Rust Walkthroughs

Difference Between iter() and into_iter() in Rust

Rust's Sneaky Deadlock With if let Blocks

Why I love Rust for tokenising and parsing

"German string" optimizations in Spellbook

Rust's Most Subtle Syntax

Parsing arguments in Rust with no dependencies

Simple way to make i18n support in Rust with examples and tests

How to shallow clone a Cow

Beginner Rust ESP32 development - Snake

[video] Rust Collections & Iterators Demystified 🪄

Research

Charon: An Analysis Framework for Rust

Crux, a Precise Verifier for Rust and Other Languages

Miscellaneous

Feds: Critical Software Must Drop C/C++ by 2026 or Face Risk

[audio] Let's talk about Rust with John Arundel

[audio] Exploring Rust for Embedded Systems with Philip Markgraf

Crate of the Week

This week's crate is wtransport, an implementation of the WebTransport specification, a successor to WebSockets with many additional features.

Thanks to Josh Triplett for the suggestion!

Please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

RFCs

No calls for testing were issued this week.

Rust

No calls for testing were issued this week.

Rustup

No calls for testing were issued this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

Updates from the Rust Project

473 pull requests were merged in the last week

account for late-bound depth when capturing all opaque lifetimes

add --print host-tuple to print host target tuple

add f16 and f128 to invalid_nan_comparison

add lp64e RISC-V ABI

also treat impl definition parent as transparent regarding modules

cleanup attributes around unchecked shifts and unchecked negation in const

cleanup op lookup in HIR typeck

collect item bounds for RPITITs from trait where clauses just like associated types

do not enforce ~const constness effects in typeck if rustc_do_not_const_check

don't lint irrefutable_let_patterns on leading patterns if else if let-chains

double-check conditional constness in MIR

ensure that resume arg outlives region bound for coroutines

find the generic container rather than simply looking up for the assoc with const arg

fix compiler panic with a large number of threads

fix suggestion for diagnostic error E0027

fix validation when lowering ? trait bounds

implement suggestion for never type fallback lints

improve missing_abi lint

improve duplicate derive Copy/Clone diagnostics

llvm: match new LLVM 128-bit integer alignment on sparc

make codegen help output more consistent

make sure type_param_predicates resolves correctly for RPITIT

pass RUSTC_HOST_FLAGS at once without the for loop

port most of --print=target-cpus to Rust

register ~const preds for Deref adjustments in HIR typeck

reject generic self types

remap impl-trait lifetimes on HIR instead of AST lowering

remove "" case from RISC-V llvm_abiname match statement

remove do_not_const_check from Iterator methods

remove region from adjustments

remove support for -Zprofile (gcov-style coverage instrumentation)

replace manual time conversions with std ones, comptime time format parsing

suggest creating unary tuples when types don't match a trait

support clobber_abi and vector registers (clobber-only) in PowerPC inline assembly

try to point out when edition 2024 lifetime capture rules cause borrowck issues

typingMode: merge intercrate, reveal, and defining_opaque_types

miri: change futex_wait errno from Scalar to IoError

stabilize const_arguments_as_str

stabilize if_let_rescope

mark str::is_char_boundary and str::split_at* unstably const

remove const-support for align_offset and is_aligned

unstably add ptr::byte_sub_ptr

implement From<&mut {slice}> for Box/Rc/Arc<{slice}>

rc/Arc: don't leak the allocation if drop panics

add LowerExp and UpperExp implementations to NonZero

use Hacker's Delight impl in i64::midpoint instead of wide i128 impl

xous: sync: remove rustc_const_stable attribute on Condvar and Mutex new()

add const_panic macro to make it easier to fall back to non-formatting panic in const

cargo: downgrade version-exists error to warning on dry-run

cargo: add more metadata to rustc_fingerprint

cargo: add transactional semantics to rustfix

cargo: add unstable -Zroot-dir flag to configure the path from which rustc should be invoked

cargo: allow build scripts to report error messages through cargo::error

cargo: change config paths to only check CARGO_HOME for cargo-script

cargo: download targeted transitive deps of with artifact deps' target platform

cargo fix: track version in fingerprint dep-info files

cargo: remove requirement for --target when invoking Cargo with -Zbuild-std

rustdoc: Fix --show-coverage when JSON output format is used

rustdoc: Unify variant struct fields margins with struct fields

rustdoc: make doctest span tweak a 2024 edition change

rustdoc: skip stability inheritance for some item kinds

mdbook: improve theme support when JS is disabled

mdbook: load the sidebar toc from a shared JS file or iframe

clippy: infinite_loops: fix incorrect suggestions on async functions/closures

clippy: needless_continue: check labels consistency before warning

clippy: no_mangle attribute requires unsafe in Rust 2024

clippy: add new trivial_map_over_range lint

clippy: cleanup code suggestion for into_iter_without_iter

clippy: do not use gen as a variable name

clippy: don't lint unnamed consts and nested items within functions in missing_docs_in_private_items

clippy: extend large_include_file lint to also work on attributes

clippy: fix allow_attributes when expanded from some macros

clippy: improve display of clippy lints page when JS is disabled

clippy: new lint map_all_any_identity

clippy: new lint needless_as_bytes

clippy: new lint source_item_ordering

clippy: return iterator must not capture lifetimes in Rust 2024

clippy: use match ergonomics compatible with editions 2021 and 2024

rust-analyzer: allow interpreting consts and statics with interpret function command

rust-analyzer: avoid interior mutability in TyLoweringContext

rust-analyzer: do not render meta info when hovering usages

rust-analyzer: add assist to generate a type alias for a function

rust-analyzer: render extern blocks in file_structure

rust-analyzer: show static values on hover

rust-analyzer: auto-complete import for aliased function and module

rust-analyzer: fix the server not honoring diagnostic refresh support

rust-analyzer: only parse safe as contextual kw in extern blocks

rust-analyzer: parse patterns with leading pipe properly in all places

rust-analyzer: support new #[rustc_intrinsic] attribute and fallback bodies

Rust Compiler Performance Triage

A week dominated by one large improvement and one large regression where, luckily, the improvement had a larger impact. The regression seems to have been caused by a newly introduced lint that might have performance issues. The improvement came from building rustc with protected visibility, which reduces the number of dynamic relocations needed and leads to some nice performance gains. Across a large swath of the perf suite, the compiler is on average 1% faster after this week compared to last week.

Triage done by @rylev. Revision range: c8a8c820..27e38f8f

Summary:

| (instructions:u) | mean | range | count |
|---|---|---|---|
| Regressions ❌ (primary) | 0.8% | [0.1%, 2.0%] | 80 |
| Regressions ❌ (secondary) | 1.9% | [0.2%, 3.4%] | 45 |
| Improvements ✅ (primary) | -1.9% | [-31.6%, -0.1%] | 148 |
| Improvements ✅ (secondary) | -5.1% | [-27.8%, -0.1%] | 180 |
| All ❌✅ (primary) | -1.0% | [-31.6%, 2.0%] | 228 |

1 Regression, 1 Improvement, 5 Mixed; 3 of them in rollups. 46 artifact comparisons made in total.

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

[RFC] Default field values

RFC: Give users control over feature unification

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

[disposition: merge] Add support for use Trait::func

Tracking Issues & PRs

Rust

[disposition: merge] Stabilize Arm64EC inline assembly

[disposition: merge] Stabilize s390x inline assembly

[disposition: merge] rustdoc-search: simplify rules for generics and type params

[disposition: merge] Fix ICE when passing DefId-creating args to legacy_const_generics.

[disposition: merge] Tracking Issue for const_option_ext

[disposition: merge] Tracking Issue for const_unicode_case_lookup

[disposition: merge] Reject raw lifetime followed by ', like regular lifetimes do

[disposition: merge] Enforce that raw lifetimes must be valid raw identifiers

[disposition: merge] Stabilize WebAssembly multivalue, reference-types, and tail-call target features

Cargo

No Cargo Tracking Issues or PRs entered Final Comment Period this week.

Language Team

No Language Team Proposals entered Final Comment Period this week.

Language Reference

No Language Reference RFCs entered Final Comment Period this week.

Unsafe Code Guidelines

No Unsafe Code Guideline Tracking Issues or PRs entered Final Comment Period this week.

New and Updated RFCs

[new] Implement The Update Framework for Project Signing

[new] [RFC] Static Function Argument Unpacking

[new] [RFC] Explicit ABI in extern

[new] Add homogeneous_try_blocks RFC

Upcoming Events

Rusty Events between 2024-11-06 - 2024-12-04 🦀

Virtual

2024-11-06 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2024-11-07 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-11-08 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2024-11-12 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2024-11-14 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-11-14 | Virtual and In-Person (Lehi, UT, US) | Utah Rust

Green Thumb: Building a Bluetooth-Enabled Plant Waterer with Rust and Microbit

2024-11-14 | Virtual and In-Person (Seattle, WA, US) | Seattle Rust User Group

November Meetup

2024-11-15 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2024-11-19 | Virtual (Los Angeles, CA, US) | DevTalk LA

Discussion - Topic: Rust for UI

2024-11-19 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2024-11-20 | Virtual and In-Person (Vancouver, BC, CA) | Vancouver Rust

Embedded Rust Workshop

2024-11-21 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-11-21 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Trustworthy IoT with Rust--and passwords!

2024-11-21 | Virtual (Rotterdam, NL) | Bevy Game Development

Bevy Meetup #7

2024-11-25 | Bratislava, SK | Bratislava Rust Meetup Group

ONLINE Talk, sponsored by Sonalake - Bratislava Rust Meetup

2024-11-26 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2024-11-28 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-12-03 | Virtual (Buffalo, NY, US) | Buffalo Rust Meetup

Buffalo Rust User Group

Asia

2024-11-28 | Bangalore/Bengaluru, IN | Rust Bangalore

RustTechX Summit 2024 BOSCH

2024-11-30 | Tokyo, JP | Rust Tokyo

Rust.Tokyo 2024

Europe

2024-11-06 | Oxford, UK | Oxford Rust Meetup Group

Oxford Rust and C++ social

2024-11-06 | Paris, FR | Paris Rustaceans

Rust Meetup in Paris

2024-11-09 - 2024-11-11 | Florence, IT | Rust Lab

Rust Lab 2024: The International Conference on Rust in Florence

2024-11-12 | Zurich, CH | Rust Zurich

Encrypted/distributed filesystems, wasm-bindgen

2024-11-13 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup

2024-11-14 | Stockholm, SE | Stockholm Rust

Rust Meetup @UXStream

2024-11-19 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

Backing Up Data with ZFS (and Rust)

2024-11-21 | Edinburgh, UK | Rust and Friends

Rust and Friends (pub)

2024-11-21 | Oslo, NO | Rust Oslo

Rust Hack'n'Learn at Kampen Bistro

2024-11-23 | Basel, CH | Rust Basel

Rust + HTMX - Workshop #3

2024-11-27 | Dortmund, DE | Rust Dortmund

Rust Dortmund

2024-11-28 | Aarhus, DK | Rust Aarhus

Talk Night at Lind Capital

2024-11-28 | Augsburg, DE | Rust Meetup Augsburg

Augsburg Rust Meetup #10

2024-11-28 | Berlin, DE | OpenTechSchool Berlin + Rust Berlin

Rust and Tell - Title

North America

2024-11-07 | Chicago, IL, US | Chicago Rust Meetup

Chicago Rust Meetup

2024-11-07 | Montréal, QC, CA | Rust Montréal

November Monthly Social

2024-11-07 | St. Louis, MO, US | STL Rust

Game development with Rust and the Bevy engine

2024-11-12 | Ann Arbor, MI, US | Detroit Rust

Rust Community Meetup - Ann Arbor

2024-11-14 | Mountain View, CA, US | Hacker Dojo

Rust Meetup at Hacker Dojo

2024-11-15 | Mexico City, DF, MX | Rust MX

Multithreading and Async in Rust, Part 2 - Smart Pointers and Closures

2024-11-15 | Somerville, MA, US | Boston Rust Meetup

Ball Square Rust Lunch, Nov 15

2024-11-19 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2024-11-23 | Boston, MA, US | Boston Rust Meetup

Boston Common Rust Lunch, Nov 23

2024-11-25 | Ferndale, MI, US | Detroit Rust

Rust Community Meetup - Ferndale

2024-11-27 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

Oceania

2024-11-12 | Christchurch, NZ | Christchurch Rust Meetup Group

Christchurch Rust Meetup

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

Any sufficiently complicated C project contains an adhoc, informally specified, bug ridden, slow implementation of half of cargo.

– Folkert de Vries at RustNL 2024 (youtube recording)

Thanks to Collin Richards for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

Text

STREAM-OF-CONSCIOUSNESS "ESSAY" ABOUT MY KINTYPE + A LITTLE BIT MORE.

------

I used to label myself a Felidae cladotherian, but it just wasn't enough. At the moment, "polymorphic shape-changer" feels far closer to the truth. It may even actually be the truth. I pulled the term out of my ass like a week ago, frankly, but after some discussion with others within the OtherConnect Discord server, I've found a way to define it that is basically perfect, in my opinion. Read my Pinned post on this side-blog if you want the definition as I see it.

I am the sole individual who utilizes this label, so far. It's probably gonna be that way for a very long while. Maybe one day that'll change and I can create a designated Discord server for the lot of us, who knows. I'm no good at designing flags or whatnot, but perhaps I can commission someone to make some stuff for me like that eventually.

I feel very close with the idea of werecats, AKA ailuranthropes. (Like how werewolves can be referred to as lycanthropes.) I dunno when precisely or why this feline fixation of mine ignited; loving cats is a big part of my earliest memories. I tend to simply shrug and claim that I was born enamored with these creatures. It's hardwired into my brain, my DNA, my soul. I am an example of metaphysical feline software running on flesh-and-blood physical human hardware. For as long as I can look back, I've felt like I should be one of them, that they were my kith and kin -- no irony intended -- and that I should have at least one around me in my personal living space at all times.

However, when I was little, rarely did any cat like me or even tolerate me in return. I was too loud, too obnoxious and rudely grabby. The numerous scratches I rightfully received from all those cats never, ever deterred me at all. Sure, it made me heartbroken at the time, but I'd bounce back soon enough. Now that I'm older, calmer, more self-aware and respectful of cats in general, they usually allow me into their proximity for affection, if they're the type of kitty to want it.

Moving on to my teen years, I was but a fledgling, insecure and depressed alterhuman, with no clue what my true identity was yet. I tried identifying as many things, fictotypes, theriotypes... all brief, including my tenure as Bluefur/-star from Warrior Cats. Didn't work out with her in particular, but something in me said that I was on the right track by looking into her, if only because of the felinity. I mislabeled myself many, many times as I aged throughout my youth, my journey of growth. Self-discovery was eager to smack me in the face every once in a while. Lots of embarrassment for me as I continued to mislabel myself over and over, with lots of encouragement and pep talks from my online, fellow alterhuman friends to not give up.

Now, I am cognizant of myself being both a cat person and a literal cat-person. The latter is what my base/default form resembles at basically all times, now that I think about it. It took some serious questioning from my previously mentioned online buddies to help me uncover what I was probably critically overthinking. I have a sense that my base/default form is going to gain yet more defining physical traits as time goes on and I dig up these things like a work-weary archaeologist. The exact details of my own appearance frequently change: coloration, eye color, and markings, as well as fur length and texture. One day they may just stabilize with finality, and I'll feel like I can drop the "polymorphic shape-changer" name and substitute it with just "shape-shifter/-changer" for certain, forevermore.

Not that that's something I particularly dearly wish for. I can remain a polymorphic shape-changer for the rest of my days if need be. Whatever happens will happen.

Anyway, I can clearly see now that I've always had some degree and measure of felinity within me. It's integral to me on the inside. All my other forms I take are secondary in comparison, not lesser but also not as "big." My felinity is the most spiritual part of my identity, alongside what I do as a seemingly "average human being" out in the big wide world. Human isn't how I identify, but yeah, it's nowhere near all-bad. I like it at times. Doesn't change that my felinity means more to me than it.

I have a fiction novel planned that features werecats as the main characters. It's urban fantasy + magic realism. Untitled right now, slowly being built and penned. The first draft isn't even completed yet...or started. I have a long notes Word document about it though. I realize now that I subconsciously and heavily based and modeled the werecats in the tale after my own base/default form. One day, I'll publish it and then excitedly scour the online alterhuman community to check if anybody else identifies as the type of werecat in my book. Honestly, I'll welcome anyone who identifies as any of my OCs!! I'd adore that, or even as a noncanon member of the species. The second thing I look forward to most is the fanfiction and fanart folks will surely make of my media. There might even be a film or TV adaptation of it...but I'm getting way overly ahead of myself by daydreaming so much.

On the note of artwork, I viscerally hate it when artists put the warning "do not tag as ID or me" under their pieces. Like, so sorry your art was so amazing that it resonated with me on a deeply personal, important level. Jerk. I know certain artists do it because the art is of their own alterhuman memories or noemata and the like, but normal human creators? What excuses do you have? Why do you care so badly? ...In the end, unfortunately, I cannot dictate how any other artist wants their works to be seen or used. Sigh. But I can definitely whine and bitch about it -- as evidenced.

#leon speaks#my post#alterhuman#polymorph#polymorphic shape changer#otherkin#shapeshifter#ok to reply#essay#i wrote the rough draft of this first in my handwritten journal#and then i digitalized it for y'all#journal#outdated#like GREATLY outdated now#oh well


how i make gifs using filmora x (for anon ❤️)

read under the cut!

hey!! thanks for reading this! just a few notes before i explain about my editing process:

filmora x works very differently from photoshop. it's video-editing software anyway, so treat it like that!

always use high-quality sources!! most of my issues with grainy gifs come from using low-quality sources. so, i always make sure my sources are at least 1080p!

if i'm creating multiple gifs from one match, i usually download the entire match (which is a hugeeee file 😵💫 but it's so worth it!!). but if it's only a scene or two, i screen record them! i have an astro supersport subscription and a beinsports account, so i don't have an issue screen recording clips, as their content is always high-quality! but if you're using other sources or streams, then do make sure the quality is good!!

if the only available source is low-quality, my trick is to make smaller gifs! for smaller gifs, i usually keep a 1:1 or 4:3 aspect ratio, and post them side-by-side in a single post, usually two in a row!

general colouring stuff applies here as well, you can check out the photoshop guide i've linked in the ask!

remember, there isn't one "correct" way to gif, you can gif however you like!

and now, without further ado:

step one: adjust video settings, speed and length

after importing the clip into filmora and setting the aspect ratio, resolution and frame rate as you like, the first thing i do is adjust the speed of the clip. i like to slow them down, so i usually go for 0.5x speed. you can always adjust the speed to your preference!

i like to keep my gifs 3 to 5 seconds long, depending on the content, so i'll trim the clip or adjust the speed as needed. if the clip is shaky, i usually add stabilization at about 10%, but you can adjust that as you like! here's an example of a clip before and after speed reduction:
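if it helps to plan the trim, here's the arithmetic behind it as a tiny python sketch. the function names are made up for illustration, and it assumes speed acts as a simple multiplier (0.5x means the clip plays twice as long as the footage it came from), which is an assumption rather than something taken from filmora's docs.

```python
# Hypothetical helpers for planning a gif's length around a speed change.
# Assumption: speed is a plain multiplier, so 0.5 means half speed and the
# clip ends up twice as long as the footage it came from.


def output_seconds(source_seconds: float, speed: float) -> float:
    """Length of the clip after applying the speed change."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return source_seconds / speed


def source_seconds_needed(target_seconds: float, speed: float) -> float:
    """How much original footage to keep to end up at target_seconds."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return target_seconds * speed


if __name__ == "__main__":
    # At 0.5x, a 3 to 5 second gif needs 1.5 to 2.5 seconds of footage.
    for target in (3, 5):
        print(f"{target}s gif -> keep {source_seconds_needed(target, 0.5)}s of footage")
```

so at 0.5x, trimming to roughly 1.5 to 2.5 seconds of the original clip lands you in the 3 to 5 second range.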

step two: auto-enhance

once the clip is at your preferred length, size and speed, it's time to make it look pretty! filmora has an 'auto-enhance' feature, so i usually begin with that, setting it somewhere between 50% and 100%. here's an example of what it looks like before and after auto-enhancing at 100%:

step three: colour correction

head over to 'advanced colour correction', where you can either use the given presets or adjust things manually to your liking. i always adjust them manually!! you can also start off with a preset and make additional manual adjustments, as i did below! here, i darkened the clip, then adjusted the colour enhancement, white balance (hue and tint), colour (exposure, brightness, contrast, vibrance, saturation), lighting (highlight, shadow, black, white), and hsl (for this example, i adjusted only the red).

you can also save your adjustments as custom presets so that you can use them again in the future!

here's a quick look at how i do the colouring! from the before and after colour correction examples, you can see that this is the most important part of the whole process!!

step four: sharpen

once i'm satisfied with my colouring, i sharpen the clip by adding the 'luma sharp' effect (usually at 50% or 70% alpha and 50% intensity).

here's what it looks like before and after sharpening:

step five: final touches and exporting

before i export, i make some final tweaks to the brightness, contrast, saturation, etc. ... and voila!! there are also many other effects available for you to add (grainy effect, blur effect, etc.), so feel free to play around!

once you're satisfied with your result, it's time to export! now, video-editing software HATES gifs. you can always export as a gif directly from filmora, but i don't really like the way it turns out 😭 so, i export them as video (.mp4) and use external gif makers (like ezgif!) to convert them from video to gif!

aaaand that's all!! here's a comparison of the original clip vs the end result!

final note: remember to size your gifs correctly for tumblr (540px wide for full width, and 268px for half width), and keep each gif under the 10mb size limit!! if your gifs exceed the size limit, try reducing the number of frames or removing duplicate frames, increasing the contrast, or cropping the height if necessary.
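for planning sizes and frame counts, here's a rough python sketch. the 540px/268px widths and the 10mb limit come from the note above; the function names are hypothetical, and reading 10mb as 10,000,000 bytes is an assumption (it errs on the safe side). "duplicate frames" here just means a frame identical to the one right before it.

```python
# Rough planning helpers for tumblr gifs (names are hypothetical).
# Widths and the 10mb limit come from the note above; reading 10mb as
# 10,000,000 bytes is an assumption that errs on the safe side.

TUMBLR_WIDTHS = {"full": 540, "half": 268}
SIZE_LIMIT_BYTES = 10_000_000


def gif_height(layout: str, ratio_w: int, ratio_h: int) -> int:
    """Height in px for a layout width and aspect ratio, e.g. 4:3."""
    width = TUMBLR_WIDTHS[layout]
    return round(width * ratio_h / ratio_w)


def frame_count(seconds: float, fps: float) -> int:
    """Number of frames in a clip of the given length and frame rate."""
    return round(seconds * fps)


def drop_duplicate_frames(frames: list) -> list:
    """Keep a frame only if it differs from the frame right before it.

    Frames can be anything comparable with ==; strings stand in for image
    data below. A real tool would also lengthen the kept frame's delay so
    the timing doesn't change.
    """
    kept = []
    for frame in frames:
        if not kept or frame != kept[-1]:
            kept.append(frame)
    return kept


def within_limit(size_bytes: int) -> bool:
    """True if a file of size_bytes fits under the assumed limit."""
    return size_bytes <= SIZE_LIMIT_BYTES


if __name__ == "__main__":
    print(gif_height("half", 1, 1))  # 268
    print(gif_height("half", 4, 3))  # 201
    print(frame_count(4, 25))        # 100
    print(drop_duplicate_frames(["a", "a", "b", "c", "c", "a"]))  # ['a', 'b', 'c', 'a']
```

so two 1:1 gifs in a row would each be 268x268, and two 4:3 gifs would be 268x201.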

if you have any questions about making gifs using filmora, feel free to reach out! thank you for reading, mwah mwah!! 💞


Thor (Pirate Software)'s coverage of the Stop Killing Games Initiative has been deeply frustrating to me, because one of the main points he's been getting on their ass about is being vague, yet there have been several times when he has been incredibly vague about the information he used to draw the conclusions he presented and where he got that information from.

The example of this that I'm going to use is from his first video, when he talks about The Crew.*1 There's a bit where he claims that the game was always marketed as online only, and the only source he cites for this is, legitimately, "everything I've found online." I'm not saying this is false, but I have so little information on why he thinks the game was only ever marketed as online only that I can't say it's true with 100% confidence, especially knowing that The Crew had a story campaign that could be played entirely in single player.*2 Not a perfect comparison, but the majority of Splatoon 1's marketing, at least from trailers, was based around its multiplayer (I'm not about to do the same thing I'm criticizing him for right after getting on his ass about it; I do have some self-awareness), and yet you can still play its single player campaign after the servers are dead. And I'm not saying the single player mode was ignored in the marketing; like I said, it's not a perfect comparison to the claim he's making, but the vast majority of the marketing for that game showed its multiplayer gameplay and features. Even so, the vast majority of my playtime with that game, as well as the rest of the series, has been single player. Just because a game is marketed on its multiplayer content doesn't mean people won't play it only for its single player content, or that the single player content can't be a major selling point, and he made no effort to show that this wasn't the case for The Crew. Show some trailers, a screenshot of the game's storefront page from when it was being sold, anything more specific than "Everything I've found."

And if we want to talk about being vague, how about deleting 2 weeks' worth of stream content, including all the VODs in which he talked about the initiative? I would say deleting multiple hours of your coverage of a topic is not the best way to keep the specific details of your argument intact. I simply refuse to believe that he managed to fit all of his opinions and takes about the initiative into 23 minutes and 9 seconds, and I'm not going to hunt for clips on TikTok, YouTube or Twitter just for the sake of understanding the perspective of someone who doesn't know I exist. If he wanted his perspective to be understood, he would consistently show, clearly and specifically, what information he uses to come to his conclusions and where he got that information from.

I have a lot more thoughts, but I am wayy too tired to get them down now, so I'll cut this off here. TLDR: put in the bare minimum of effort to show where you're getting your information from, jesus christ.

*1 There used to be a bit where I said The Crew's Wikipedia page was the only source he used for information about the game. This is false: he also cites Steam's active player count tracker to show that the game had a large drop in players when The Crew 2 came out. This is the kind of thing I'm talking about, and I wish he were more consistent about showing information like this. He also shows the release dates of the sequels The Crew received, but I don't count this as showing sources for information about The Crew 1, and I forgot about him showing the player tracker when I was writing this initially.

*2 I realised I did the thing here. This Steam discussion post and this Reddit post contain people discussing the single player campaign, which is how I know about it. Cite your fucking sources, me. Also, The Crew's Steam page lists the ability to "fly solo" as a key feature of the game, as well as boasting a 30+ hour story campaign in the Content section. Most of it is focused on multiplayer, but saying the game was only ever marketed as online only isn't even true.

#“It turns out that all of the cars in the game were licensed from the car companies”#I'm sure they were but I would like you to show me how you know that#If I had the energy I'd go through the whole video and write a paragraph of similar length about every time he does this#And then do that with the second video#I am petty enough for that#But I'm too tired and it's not worth the effort#Pirate Software#Stop Killing Games


The PlayStation 3* was the best video game console of all time

It could play CDs, DVDs, and Blu-rays, PS1, PS2, and PS3 games, as well as save pictures, music, videos, and games to its internal hard drive. It could surf the internet, it could stream video (back in the day you put a disc from Netflix in the PS3 to run the Netflix software to stream stuff) and online play for all games was free.

The PS2 probably has a better overall library but it’s hard to actually make that comparison, and since the original run of PS3s literally had a physical PS2 built into them (it wasn’t emulation!) the PS3 was basically just a PS2-2, just a bigger, better version of the previous generation, everything a sequel should be.

I love a lot of consoles but the PlayStation 3 was, and is, the GOAT

*The original run of PS3s, and the second run as well, but not the later runs or the slims, since the early production runs had backwards compatibility with the PS2 and, by extension, the PS1. The original run did this by simply sticking the physical guts of a PS2 inside the PS3; the second run relied on software emulation instead.


The assumption that all production is a monolith is certainly a choice here. Two major things on cinematography:

Cinematographers are generally good at their job. They know way more than you could ever know about lighting a scene. Someone taking a screenshot and "brightening" it in software like Photoshop is NEVER going to prove the point you think you're proving, because lighting doesn't work that way: you're taking a 2D still of a 3D film that has already been processed and compressed, and running a brightening tool over it. That's not how any of this works. This is a dumb comparison to make. You don't have the original captured image and all of its data, so you can't meaningfully adjust the brightness from a screenshot: you need the full image data to do that.
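To make the "you don't have the data" point concrete, here is a toy Python model. It is a deliberate simplification, not how any real camera or grading pipeline works: scene brightness is stored as 0.0 to 1.0 values, the screenshot keeps only 8-bit integer codes (0 to 255), and "brightening" just multiplies those stored codes. The function names and the sample numbers are made up for illustration.

```python
# Toy model (a simplification, not a real camera or grading pipeline):
# scene brightness is 0.0-1.0, a screenshot stores 8-bit codes 0-255,
# and "brightening" a screenshot just multiplies those codes.


def to_8bit(scene_values, exposure=1.0):
    """Quantize scene values to 0-255 integer codes, clipping at both ends."""
    return [min(255, max(0, round(v * exposure * 255))) for v in scene_values]


def brighten(codes, gain):
    """Naive screenshot brightening: scale the stored codes, clip at 255."""
    return [min(255, round(c * gain)) for c in codes]


# Five distinct dark tones in the original scene.
scene = [0.010, 0.011, 0.012, 0.013, 0.014]

screenshot = to_8bit(scene)
print(screenshot)                  # [3, 3, 3, 3, 4]
print(brighten(screenshot, 8))     # [24, 24, 24, 24, 32]

# Brightening before the data is thrown away keeps the tones separate.
print(to_8bit(scene, exposure=8))  # [20, 22, 24, 27, 29]
```

In this model, five distinct dark tones collapse into two codes in the screenshot, and multiplying those codes can never bring the lost three back; only working from the fuller original data keeps all five apart.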

A big catch, though: movies are lit specifically for the best-case scenario of lighting and color nuance. Theater screens and projectors are supposed to be kept clean, working, and up to spec, and movies are lit with that in mind. A movie is rarely "too dark"; it's just that you're watching it sub-optimally on a computer, phone, or TV, or god forbid on a movie projector that hasn't had its routine maintenance (something that notably got worse because of the pandemic!). This isn't your fault as a consumer btw, it's just a stupid disparity that exists.

Cinematographers light differently than you'd expect. White is meant to be seen as the BRIGHTEST thing on the screen, and every other brightness level is lit relative to that. Your computer screen? There are things on it just as bright as white because of the way brightness works on computer monitors. Cinematographers use a system called False Color to identify and "visualize" brightness levels: