# Text-to-Speech Datasets

Text-to-speech datasets form the cornerstone of AI-powered speech synthesis applications, facilitating natural and smooth communication between humans and machines. At Globose Technology Solutions, we recognize the transformative power of TTS technology and are committed to delivering cutting-edge solutions that harness the full potential of these datasets. By understanding the importance, features, and applications of TTS datasets, we pave the way to a future where seamless speech synthesis enriches lives and drives innovation across industries.

#Text-to-speech datasets #NLP #Data Collection #Data Collection in Machine Learning #data collection company #datasets #ai #technology #globose technology solutions

0 notes

Annotated Text-to-Speech Datasets for Deep Learning Applications

Introduction:

Text-to-speech (TTS) technology has undergone significant advancements due to developments in deep learning, allowing machines to produce speech that closely resembles the human voice with impressive precision. The success of any TTS system depends fundamentally on high-quality, annotated datasets that train models to understand and replicate natural-sounding speech. This article delves into the significance of annotated TTS datasets, their various applications, and how organizations can use them to create innovative AI solutions.

The Importance of Annotated Datasets in TTS

Annotated TTS datasets are composed of text transcripts aligned with corresponding audio recordings, along with supplementary metadata such as phonetic transcriptions, speaker identities, and prosodic information. These datasets form the essential framework for deep learning models by supplying structured, labeled data that enhances the training process. The quality and variety of these annotations play a crucial role in the model’s capability to produce realistic speech.

Essential Elements of an Annotated TTS Dataset

Text Transcriptions – Precise, time-synchronized text that corresponds to the speech audio.

Phonetic Labels – Annotations at the phoneme level to enhance pronunciation accuracy.

Speaker Information – Identifiers for datasets with multiple speakers to improve voice variety.

Prosody Features – Indicators of pitch, intonation, and stress to enhance expressiveness.

Background Noise Labels – Annotations for both clean and noisy audio samples to ensure robust model training.
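To make the elements above concrete, here is a minimal sketch of how one annotated utterance might be stored and sanity-checked. The field names (`utterance_id`, `phonemes`, `pitch_hz`, `noise_label`, and so on) and the ARPAbet-style phoneme strings are hypothetical choices for illustration, not a standard schema or any vendor's format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AnnotatedUtterance:
    """One annotated TTS example. All field names are hypothetical, not a standard schema."""
    utterance_id: str
    audio_path: str                 # path to the recording, e.g. a WAV file
    text: str                       # transcription of what is spoken
    start_s: float                  # where the speech starts in the file (seconds)
    end_s: float                    # where the speech ends in the file (seconds)
    phonemes: List[str]             # phoneme-level labels (ARPAbet-style in this sketch)
    speaker_id: str                 # speaker identity for multi-speaker datasets
    pitch_hz: Optional[List[float]] = None                   # optional prosody: pitch contour
    stressed_words: List[int] = field(default_factory=list)  # indices of stressed words
    noise_label: str = "clean"      # "clean" or "noisy" in this sketch

    def problems(self) -> List[str]:
        """Return basic consistency problems; an empty list means none were found."""
        issues = []
        if not self.text.strip():
            issues.append("empty transcription")
        if self.end_s <= self.start_s:
            issues.append("end time is not after start time")
        if not self.phonemes:
            issues.append("missing phoneme labels")
        if self.noise_label not in ("clean", "noisy"):
            issues.append(f"unknown noise label: {self.noise_label}")
        n_words = len(self.text.split())
        if any(i < 0 or i >= n_words for i in self.stressed_words):
            issues.append("stressed-word index out of range")
        return issues


utt = AnnotatedUtterance(
    utterance_id="spk01_0001",
    audio_path="wavs/spk01_0001.wav",
    text="Hello world",
    start_s=0.12,
    end_s=1.05,
    phonemes=["HH", "AH0", "L", "OW1", "W", "ER1", "L", "D"],
    speaker_id="spk01",
    stressed_words=[1],
)
print(utt.problems())  # []
```

Keeping the checks on the record itself means every new batch of annotations can be screened the same way before it reaches training.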

Uses of Annotated TTS Datasets

The influence of annotated TTS datasets spans multiple sectors:

Virtual Assistants: AI-powered voice assistants such as Siri, Google Assistant, and Alexa depend on high-quality TTS datasets for seamless interactions.

Audiobooks & Content Narration: Automated voice synthesis is utilized in e-learning platforms and digital storytelling.

Accessibility Solutions: Screen readers designed for visually impaired users benefit from well-annotated datasets.

Customer Support Automation: AI-driven chatbots and IVR systems employ TTS to improve user experience.

Localization and Multilingual Speech Synthesis: Annotated datasets in various languages facilitate the development of global TTS applications.

Challenges in TTS Dataset Annotation

Although annotated datasets are essential, the creation of high-quality TTS datasets presents several challenges:

Data Quality and Consistency: Maintaining high standards for recordings and ensuring accurate annotations throughout extensive datasets.

Speaker Diversity: Incorporating a broad spectrum of voices, accents, and speaking styles.

Alignment and Synchronization: Accurately aligning text transcriptions with corresponding speech audio.

Scalability: Effectively annotating large datasets to support deep learning initiatives.
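One cheap way to catch misaligned text-audio pairs at scale is to compare each transcript's length with its audio duration: a clip whose implied speaking rate is implausibly fast or slow often has the wrong text attached. This is a minimal sketch; the (id, text, duration) tuple layout and the bounds of 5 to 25 characters per second are assumptions for illustration, and real projects usually tune such bounds per language or use forced alignment instead.

```python
from typing import List, Tuple


def flag_suspect_pairs(
    pairs: List[Tuple[str, str, float]],
    min_cps: float = 5.0,   # assumed lower bound, characters per second
    max_cps: float = 25.0,  # assumed upper bound, characters per second
) -> List[str]:
    """Return IDs of (id, text, duration_s) pairs whose speaking rate looks implausible."""
    suspects = []
    for utt_id, text, duration_s in pairs:
        if duration_s <= 0:
            suspects.append(utt_id)  # zero or negative duration is always suspect
            continue
        chars = len(text.replace(" ", ""))  # count non-space characters only
        cps = chars / duration_s
        if not (min_cps <= cps <= max_cps):
            suspects.append(utt_id)
    return suspects


pairs = [
    ("a", "The quick brown fox jumps over the lazy dog.", 2.8),  # ~12.9 cps: plausible
    ("b", "Yes.", 9.0),                                          # ~0.4 cps: too slow
    ("c", "A very long sentence read in no time at all.", 0.5),  # 70 cps: too fast
]
print(flag_suspect_pairs(pairs))  # ['b', 'c']
```

A check like this only narrows the search: flagged clips still need a human (or an aligner) to decide what went wrong.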

How GTS Can Assist with High-Quality Text Data Collection

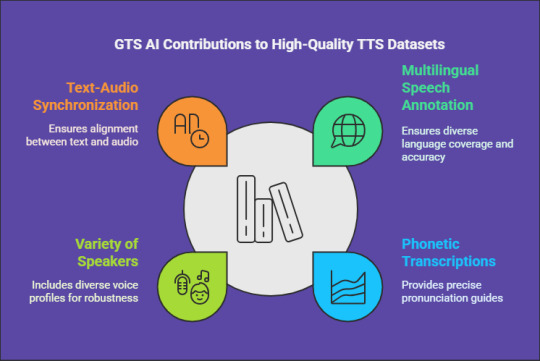

For organizations and researchers in need of dependable TTS datasets, GTS AI provides extensive text data collection services. With a focus on multilingual speech annotation, GTS delivers high-quality, well-organized datasets specifically designed for deep learning applications. Their offerings guarantee precise phonetic transcriptions, a variety of speakers, and flawless synchronization between text and audio.

Conclusion

Annotated text-to-speech datasets are vital for the advancement of high-performance speech synthesis models. As deep learning progresses, the availability of high-quality, diverse, and meticulously annotated datasets will propel the next wave of AI-driven voice applications. Organizations and developers can use professional annotation services, such as those provided by GTS, to expedite their AI initiatives and enhance their TTS solutions.

0 notes

Enhancing Vocal Quality: An Overview of Text-to-Speech Datasets

Introduction

The advancement of Text-to-Speech (TTS) technology has significantly altered the way individuals engage with machines. From digital assistants and navigation applications to tools designed for the visually impaired, TTS systems are increasingly becoming essential in our everyday experiences. At the heart of these systems is a vital element: high-quality datasets. This article will delve into the basics of Text-to-Speech datasets and their role in developing natural and expressive synthetic voices.

What Constitutes a Text-to-Speech Dataset?

A Text-to-Speech dataset comprises a collection of paired text and audio data utilized for training machine learning models. These datasets enable TTS systems to learn the process of transforming written text into spoken language. A standard dataset typically includes:

The Significance of TTS Datasets

Enhancing Voice Quality: The variety and richness of the dataset play a crucial role in achieving a synthesized voice that is both natural and clear.

Expanding Multilingual Support: A varied dataset allows the system to accommodate a range of languages and dialects effectively.

Reflecting Emotions and Tones: High-quality datasets assist models in mimicking human-like emotional expressions and intonations.

Mitigating Bias: Diverse datasets promote inclusivity by encompassing various accents, genders, and speaking styles.

Attributes of an Effective TTS Dataset

Variety: An effective dataset encompasses a range of languages, accents, genders, ages, and speaking styles.

Superior Audio Quality: The recordings must be clear and free from significant background noise or distortion.

Precision in Alignment: It is essential that the text and audio pairs are accurately aligned to facilitate effective training.

Comprehensive Annotation: In-depth metadata, including phonetic and prosodic annotations, enhances the training experience.

Size: A more extensive dataset typically results in improved model performance, as it offers a greater number of examples for the system to learn from.
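Variety and size can be checked with simple bookkeeping before training. The sketch below assumes each clip is described by a (speaker, accent, duration in seconds) tuple; that layout, and reporting the largest speaker's share of audio as a rough balance signal, are illustrative assumptions rather than an established metric.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def summarize(clips: Iterable[Tuple[str, str, float]]) -> Dict[str, object]:
    """Summarize total hours, speaker/accent counts, and the largest speaker's share."""
    per_speaker: Dict[str, float] = defaultdict(float)  # seconds of audio per speaker
    accents = set()
    for speaker, accent, duration_s in clips:
        per_speaker[speaker] += duration_s
        accents.add(accent)
    total_s = sum(per_speaker.values())
    # A share near 1.0 means one voice dominates the dataset.
    top_share = max(per_speaker.values()) / total_s if total_s else 0.0
    return {
        "hours": round(total_s / 3600, 2),
        "speakers": len(per_speaker),
        "accents": len(accents),
        "largest_speaker_share": round(top_share, 2),
    }


clips = [
    ("spk1", "US", 5400.0),
    ("spk2", "UK", 1800.0),
    ("spk3", "IN", 1800.0),
]
print(summarize(clips))
# {'hours': 2.5, 'speakers': 3, 'accents': 3, 'largest_speaker_share': 0.6}
```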

Types of Text-to-Speech Datasets

Single-Speaker Datasets: These datasets focus on a single voice and are typically used for specific applications such as virtual assistants.

Example: LJSpeech Dataset (featuring a single female speaker of American English).

Multi-Speaker Datasets: These datasets comprise recordings from many speakers, allowing systems to produce a range of voices.

Example: LibriTTS (sourced from LibriVox audiobooks).

Multilingual Datasets: These datasets include text and audio in multiple languages to facilitate global applications.

Example: Mozilla Common Voice.

Emotional Datasets: These datasets cover a variety of emotions, including happiness, sadness, and anger, to enhance the expressiveness of TTS systems.

Example: CREMA-D (featuring recordings rich in emotional content).
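As a concrete example of how such a dataset can be laid out, LJSpeech ships a `metadata.csv` file in which each line holds a clip ID, the raw transcription, and a normalized transcription separated by `|`, with the audio stored under a `wavs/` folder. A minimal parser might look like this; the `Clip` record and the sample lines are made up for illustration, not copied from the dataset.

```python
from pathlib import Path
from typing import Iterable, Iterator, NamedTuple


class Clip(NamedTuple):
    clip_id: str
    wav_path: Path
    raw_text: str
    normalized_text: str


def read_ljspeech_metadata(lines: Iterable[str], root: Path) -> Iterator[Clip]:
    """Parse LJSpeech-style 'ID|raw text|normalized text' lines into Clip records."""
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines
        # Split on '|' directly rather than using the csv module,
        # since transcripts may contain quote characters.
        clip_id, raw_text, normalized_text = line.split("|", 2)
        yield Clip(clip_id, root / "wavs" / f"{clip_id}.wav", raw_text, normalized_text)


# Hypothetical sample lines in the same three-column format.
sample = [
    "LJ999-0001|Dr. Smith read 2 books.|Doctor Smith read two books.\n",
    "LJ999-0002|It was quiet.|It was quiet.\n",
]
for clip in read_ljspeech_metadata(sample, Path("LJSpeech-1.1")):
    print(clip.clip_id, clip.wav_path.as_posix(), clip.normalized_text)
```

With the real files, the same function can be fed an open file handle, e.g. `open(root / "metadata.csv", encoding="utf-8")`, since a file object yields one line at a time.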

Challenges in Developing TTS Datasets

Best Practices for Developing TTS Datasets

Regularly Update: Expand datasets to encompass new languages, accents, and applications.

The Importance of Annotation in TTS Datasets

Annotations play a vital role in enhancing the efficacy of TTS systems. They offer context and supplementary information, including:

Services such as GTS AI provide specialized annotation solutions to facilitate this process.

The Future of TTS Datasets

As TTS technology evolves, the need for more diverse and advanced datasets will increase. Innovations such as:

These advancements will lead to the creation of more natural, inclusive, and adaptable TTS systems.

Conclusion

Building better voices starts with high-quality Text-to-Speech datasets. By prioritizing diversity, quality, and ethical practices, we can create TTS systems that sound natural, inclusive, and expressive. Whether you’re a developer or researcher, investing in robust dataset creation and annotation is key to advancing the field of TTS.

For professional annotation and data solutions, visit GTS AI. Let us help you bring your TTS projects to life with precision and efficiency.

0 notes

Each week (or so), we'll highlight the relevant (and sometimes rage-inducing) news adjacent to writing and freedom of expression. (Find it on the blog too!) This week:

Censorship watch: Somehow, KOSA returned

It’s official: The Kids Online Safety Act (KOSA) is back from the dead. After failing to pass last year, the bipartisan bill has returned with fresh momentum and the same old baggage—namely, vague language that could endanger hosting platforms, transformative work, and implicitly target LGBTQ+ content under the guise of “protecting kids.”

… But wait, it gets better (worse). Republican Senator Mike Lee has introduced a new bill that makes other attempts to censor the internet look tame: the Interstate Obscenity Definition Act (IODA)—basically KOSA on bath salts. Lee’s third attempt since 2022, the bill would redefine what counts as “obscene” content on the internet, and ban it nationwide—with “its peddlers prosecuted.”

Whether IODA gains traction in Congress is still up in the air. But free speech advocates are already raising alarm bells over its implications.

The bill aims to gut the long-standing legal definition of “obscenity” established by the 1973 Miller v. California ruling, which currently protects most speech under the First Amendment unless it meets all three parts of a test. Under the Miller test, content is only considered legally obscene if 1: the average person, applying “contemporary community standards,” would find that it appeals to prurient interests, 2: it depicts sexual conduct in a patently offensive way, and 3: taken as a whole, it lacks serious literary, artistic, political, or scientific value.

IODA would throw out key parts of that test—specifically the bits about “community standards”—making it vastly easier to prosecute anything with sexual content, from films and photos, to novels and fanfic.

Under Lee’s definition (which, omg, shocking, can you believe this coincidence, mirrors that of the Heritage Foundation), even the mildest content with the possible effect of “titillation” could be included. (According to the Woodhull Freedom Foundation, the proposed definition is so broad it could rope in media on the level of Game of Thrones, or, generally, anything that depicts or describes human sexuality.) And while obscenity prosecutions are quite rare these days, that could change if IODA passes, and the collateral damage and criminalization (especially for creative freedoms and LGBT+ content creators) could be massive.

And while Lee’s last two obscenity reboots failed, the current political climate is... let’s say, cloudy with a chance of fascism.

Sound a little like Project 2025? Ding ding ding! In fact, Russell Vought, P2025’s architect, was just quietly appointed to take over DOGE from Elon Musk (the agency on a chainsaw crusade against federal programs, culture, and reality in general).

So. One bill revives vague moral panic, another wants to legally redefine it and prosecute creators, and the man who helped write the authoritarian playbook—with, surprise, the intent to criminalize LGBT+ content and individuals—just gained control of the purse strings.

Cool cool cool.

AO3 works targeted in latest (massive) AI scraping

Rewind to last month: in the latest “wait, they did what now?” moment for AI, a Hugging Face user going by nyuuzyou uploaded a massive dataset made up of roughly 12.6 million fanworks scraped from AO3 (full text, metadata, tags, and all). (Info from r/AO3: if your work’s ID number is between 1 and 63,200,000 and the work is publicly accessible, it has been scraped.)

And it didn’t stop at AO3. Art and writing communities like PaperDemon and Artfol, among others, also found their content had been quietly scraped and posted to machine learning hubs without consent.

This is yet another attempt in a long line of more “official” scraping of creative work, and the complete disregard shown by the purveyors of GenAI for copyright law and basic consent. (Even the Pope agrees.)

AO3 filed a DMCA takedown, and Hugging Face complied, though only temporarily. But nyuuzyou responded with a counterclaim and re-uploaded the dataset to their personal website and other platforms, including ModelScope and DataFish: sites based in China and Russia, the same locations reportedly linked to LibGen, the shadow library Meta reportedly drew on for its own AI training data.

Some writers are locking their works. Others are filing individual DMCAs. But as long as bad actors and platforms like Hugging Face allow users to upload massive datasets scraped from creative communities with minimal oversight, it’s a circuitous game of whack-a-mole. (As others have recommended, we also suggest locking your works for registered users only.)

After disavowing AI copyright, leadership purge hits U.S. cultural institutions

In news that should give us all a brief flicker of hope, the U.S. Copyright Office officially confirmed: if your “creative” work was generated entirely by AI, it’s not eligible for copyright.

A recently released report laid it out plainly—human authorship is non-negotiable under current U.S. law, a stance meant to protect the concept of authorship itself from getting swallowed by generative sludge. The report is explicit in noting that generative AI draws “on massive troves of data, including copyrighted works,” and asks: “Do any of the acts involved require the copyright owners’ consent or compensation?” (Spoiler: yes.) It’s a “straight ticket loss for the AI companies” no matter how many techbros’ pitch decks claim otherwise (sorry, Inkitt).

“The Copyright Office (with a few exceptions) doesn’t have the power to issue binding interpretations of copyright law, but courts often cite to its expertise as persuasive,” tech law professor Blake E. Reid wrote on Bluesky. As the push to normalize AI-generated content continues (followed by lawsuits), the point stands: without meaningful human contribution (actual creative labor), the output is not entitled to protection.

… And then there’s the timing.

The report dropped just before the abrupt firing of Copyright Office director Shira Perlmutter, who has been vocally skeptical of AI’s entitlement to creative work.

It's yet another culture war firing—one that also conveniently clears the way for fewer barriers to AI exploitation of creative work. And given that Elon Musk’s pals have their hands all over current federal leadership and GenAI tulip fever… the overlap of censorship politics and AI deregulation is looking less like coincidence and more like strategy.

Also ousted (via email)—Librarian of Congress Carla Hayden. According to White House press secretary and general ghoul Karoline Leavitt, Dr. Hayden was dismissed for “quite concerning things that she had done… in the pursuit of DEI, and putting inappropriate books in the library for children.” (Translation: books featuring queer people and POC.)

Dr. Hayden, who made history as the first Black woman to hold the position, spent the last eight years modernizing the Library of Congress, expanding digital access, and turning the institution into something more inclusive, accessible, and, well, public. So of course, she had to go. ¯\_(ツ)_/¯

The American Library Association condemned the firing immediately, calling it an “unjust dismissal” and praising Dr. Hayden for her visionary leadership. And who, oh who might be the White House’s answer to the LoC’s demanding and (historically) independent role?

The White House named Todd Blanche—AKA Trump’s personal lawyer turned Deputy Attorney General—as acting Librarian of Congress.

That’s not just sus, it’s likely illegal—the Library is part of the legislative branch, and its leadership is supposed to be confirmed by Congress. (You know, separation of powers and all that.)

But, plot twist: In a bold stand, Library of Congress staff are resisting the administration's attempts to install new leadership without congressional approval.

If this is part of the broader Project 2025 playbook, it’s pretty clear: Gut cultural institutions, replace leadership with stunningly unqualified loyalists, and quietly centralize control over everything from copyright to the nation’s archives.

Because when you can’t ban the books fast enough, you just take over the library.

Rebellions are built on hope

Over the past few years (read: eternity), a whole ecosystem of reactionary grifters has sprung up around Star Wars—with self-styled CoNtEnT CrEaTorS turning outrage to revenue by endlessly trashing the fandom. It’s all part of the same cynical playbook that radicalized the fallout of Gamergate, with more lightsabers and worse thumbnails. Even the worst people you know weighed in on May the Fourth (while Prequel reassessment is totally valid—we’re not giving J.D. Vance a win).

But one thing that shouldn't be up for debate is this: Andor, which wrapped its phenomenal two-season run this week, is probably the best Star Wars project of our time—maybe any time. It’s a masterclass in what it means to work within a beloved mythos and transform it, deepen it, and make it feel urgent again. (Sound familiar? Fanfic knows.)

Radicalization, revolution, resistance. The banality of evil. The power of propaganda. Colonialism, occupation, genocide—and still, in the midst of it all, the stubborn, defiant belief in a better world (or Galaxy).

Even if you’re not a lifelong SW nerd (couldn’t be us), you should give it a watch. It’s a nice reminder that amidst all the scraping, deregulation, censorship, enshittification—stories matter. Hope matters.

And we’re still writing.

Let us know if you find something other writers should know about, or join our Discord and share it there!

- The Ellipsus Team xo

#ellipsus #writeblr #writers on tumblr #writing #creative writing #anti ai #writing community #fanfic #fanfiction #ao3 #fiction #us politics #andor #writing blog #creative freedom

335 notes

On Saturday, an Associated Press investigation revealed that OpenAI's Whisper transcription tool creates fabricated text in medical and business settings despite warnings against such use. The AP interviewed more than 12 software engineers, developers, and researchers who found the model regularly invents text that speakers never said, a phenomenon often called a “confabulation” or “hallucination” in the AI field.

Upon its release in 2022, OpenAI claimed that Whisper approached “human level robustness” in audio transcription accuracy. However, a University of Michigan researcher told the AP that Whisper created false text in 80 percent of public meeting transcripts examined. Another developer, unnamed in the AP report, claimed to have found invented content in almost all of his 26,000 test transcriptions.

The fabrications pose particular risks in health care settings. Despite OpenAI’s warnings against using Whisper for “high-risk domains,” over 30,000 medical workers now use Whisper-based tools to transcribe patient visits, according to the AP report. The Mankato Clinic in Minnesota and Children’s Hospital Los Angeles are among 40 health systems using a Whisper-powered AI copilot service from medical tech company Nabla that is fine-tuned on medical terminology.

Nabla acknowledges that Whisper can confabulate, but it also reportedly erases original audio recordings “for data safety reasons.” This could cause additional issues, since doctors cannot verify accuracy against the source material. And deaf patients may be highly impacted by mistaken transcripts since they would have no way to know if medical transcript audio is accurate or not.

The potential problems with Whisper extend beyond health care. Researchers from Cornell University and the University of Virginia studied thousands of audio samples and found Whisper adding nonexistent violent content and racial commentary to neutral speech. They found that 1 percent of samples included “entire hallucinated phrases or sentences which did not exist in any form in the underlying audio” and that 38 percent of those included “explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”

In one case from the study cited by AP, when a speaker described “two other girls and one lady,” Whisper added fictional text specifying that they “were Black.” In another, the audio said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.” Whisper transcribed it to, “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.”

An OpenAI spokesperson told the AP that the company appreciates the researchers’ findings and that it actively studies how to reduce fabrications and incorporates feedback in updates to the model.

Why Whisper Confabulates

The key to Whisper’s unsuitability in high-risk domains comes from its propensity to sometimes confabulate, or plausibly make up, inaccurate outputs. The AP report says, "Researchers aren’t certain why Whisper and similar tools hallucinate," but that isn't true. We know exactly why Transformer-based AI models like Whisper behave this way.

Whisper is based on technology that is designed to predict the next most likely token (chunk of data) that should appear after a sequence of preceding tokens. In the case of ChatGPT, the input tokens come in the form of a text prompt. In the case of Whisper, the input is audio, converted into a spectrogram and encoded by the model, and each predicted token is a chunk of transcript text that follows the tokens already transcribed.
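The loop below is a toy sketch of that idea: at each step the decoder picks the single most likely next token given the audio and the tokens produced so far (greedy decoding), and stops at an end token. The `toy_model` scoring function and the `<end>` token are hypothetical stand-ins (a hand-written lookup table, not Whisper's neural network), and real decoders can also use refinements such as beam search that this sketch omits.

```python
from typing import Callable, Dict, List

END = "<end>"  # hypothetical end-of-transcript token


def greedy_decode(
    audio: str,
    next_token_probs: Callable[[str, List[str]], Dict[str, float]],
    max_tokens: int = 20,
) -> List[str]:
    """Repeatedly append the most probable next token until END or max_tokens."""
    tokens: List[str] = []
    for _ in range(max_tokens):
        probs = next_token_probs(audio, tokens)
        best = max(probs, key=probs.get)  # most likely, not necessarily most accurate
        if best == END:
            break
        tokens.append(best)
    return tokens


def toy_model(audio: str, prev: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for the model: (audio, previous tokens) -> distribution."""
    table = {
        ("hello-audio", ()): {"hello": 0.9, "yellow": 0.1},
        ("hello-audio", ("hello",)): {"there": 0.7, END: 0.3},
        ("hello-audio", ("hello", "there")): {END: 0.95, "friend": 0.05},
    }
    return table.get((audio, tuple(prev)), {END: 1.0})


print(greedy_decode("hello-audio", toy_model))  # ['hello', 'there']
```

The key point the sketch makes visible is the `max` call: the decoder commits to whatever scores highest at each step, whether or not it was actually said.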

The transcription output from Whisper is a prediction of what is most likely, not what is most accurate. Accuracy in Transformer-based outputs is typically proportional to the presence of relevant accurate data in the training dataset, but it is never guaranteed. If there is ever a case where there isn't enough contextual information in its neural network for Whisper to make an accurate prediction about how to transcribe a particular segment of audio, the model will fall back on what it “knows” about the relationships between sounds and words it has learned from its training data.

According to OpenAI in 2022, Whisper learned those statistical relationships from “680,000 hours of multilingual and multitask supervised data collected from the web.” But we now know a little more about the source. Given Whisper's well-known tendency to produce certain outputs like "thank you for watching," "like and subscribe," or "drop a comment in the section below" when provided silent or garbled inputs, it's likely that OpenAI trained Whisper on thousands of hours of captioned audio scraped from YouTube videos. (The researchers needed audio paired with existing captions to train the model.)

There's also a phenomenon called “overfitting” in AI models where information (in this case, text found in audio transcriptions) encountered more frequently in the training data is more likely to be reproduced in an output. In cases where Whisper encounters poor-quality audio in medical notes, the AI model will produce what its neural network predicts is the most likely output, even if it is incorrect. And the most likely output for any given YouTube video, since so many people say it, is “thanks for watching.”
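A toy calculation shows why silent or garbled input tends to produce stock phrases. Suppose, purely as an illustrative assumption, that a candidate transcript is scored by combining how well it matches the audio with how often it appeared in training captions (a simplified noisy-channel picture, not a description of Whisper's internals). When the audio carries no usable evidence, every candidate matches equally badly, so the frequency term alone picks the winner. The phrases, counts, and match scores below are invented.

```python
import math
from typing import Dict


def best_transcript(acoustic_match: Dict[str, float], training_counts: Dict[str, int]) -> str:
    """Pick the candidate maximizing log(acoustic match) + log(prior frequency)."""
    total = sum(training_counts.values())

    def score(candidate: str) -> float:
        prior = training_counts[candidate] / total  # how common the phrase was in training
        return math.log(acoustic_match[candidate]) + math.log(prior)

    return max(acoustic_match, key=score)


# Invented counts of phrases seen in training captions.
counts = {"thanks for watching": 900, "take the umbrella": 60, "please hold": 40}

# Clear audio: the acoustic evidence strongly favors what was actually said.
clear = {"thanks for watching": 0.001, "take the umbrella": 0.98, "please hold": 0.019}
# Silence: no candidate fits better than any other, so the evidence is flat.
silence = {"thanks for watching": 0.33, "take the umbrella": 0.33, "please hold": 0.33}

print(best_transcript(clear, counts))    # take the umbrella
print(best_transcript(silence, counts))  # thanks for watching
```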

In other cases, Whisper seems to draw on the context of the conversation to fill in what should come next, which can lead to problems because its training data could include racist commentary or inaccurate medical information. For example, if many examples of training data featured speakers saying the phrase “crimes by Black criminals,” when Whisper encounters a “crimes by [garbled audio] criminals” audio sample, it will be more likely to fill in the transcription with “Black.”

In the original Whisper model card, OpenAI researchers wrote about this very phenomenon: "Because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself."

So in that sense, Whisper "knows" something about the content of what is being said and keeps track of the context of the conversation, which can lead to issues like the one where Whisper identified two women as being Black even though that information was not contained in the original audio. Theoretically, this erroneous scenario could be reduced by using a second AI model trained to pick out areas of confusing audio where the Whisper model is likely to confabulate and flag the transcript in that location, so a human could manually check those instances for accuracy later.
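The article's suggestion is a second model that flags confusing audio. A much simpler heuristic in the same spirit, swapped in here for illustration, is to flag transcript segments whose own confidence signals look poor. The open-source `openai-whisper` package's `transcribe()` output includes per-segment fields such as `avg_logprob`, `no_speech_prob`, and `compression_ratio`, and the thresholds below mirror that package's default fallback thresholds (-1.0, 0.6, and 2.4). Treating them as human-review triggers is an assumption, low scores will not catch every fabrication, and the segment data is made up.

```python
from typing import Dict, List


def segments_for_review(
    segments: List[Dict],
    logprob_threshold: float = -1.0,
    no_speech_threshold: float = 0.6,
    compression_ratio_threshold: float = 2.4,
) -> List[Dict]:
    """Return segments whose confidence signals suggest a human should check them."""
    flagged = []
    for seg in segments:
        reasons = []
        if seg["avg_logprob"] < logprob_threshold:
            reasons.append("low average log-probability")
        if seg["no_speech_prob"] > no_speech_threshold and seg["text"].strip():
            reasons.append("text produced where the model suspected no speech")
        if seg["compression_ratio"] > compression_ratio_threshold:
            reasons.append("highly repetitive text")
        if reasons:
            flagged.append({"start": seg["start"], "end": seg["end"],
                            "text": seg["text"], "reasons": reasons})
    return flagged


# Made-up segments shaped like openai-whisper's per-segment output.
segments = [
    {"start": 0.0, "end": 4.2, "text": " The patient reports mild pain.",
     "avg_logprob": -0.21, "no_speech_prob": 0.02, "compression_ratio": 1.3},
    {"start": 4.2, "end": 9.8, "text": " Thank you for watching.",
     "avg_logprob": -1.35, "no_speech_prob": 0.81, "compression_ratio": 1.1},
]
for item in segments_for_review(segments):
    print(item["start"], item["end"], item["reasons"])
```

Flagging only works if the original audio is kept, so a reviewer has something to check the flagged spans against.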

Clearly, OpenAI's advice not to use Whisper in high-risk domains, such as critical medical records, was a good one. But health care companies are constantly driven by a need to decrease costs by using seemingly "good enough" AI tools—as we've seen with Epic Systems using GPT-4 for medical records and UnitedHealth using a flawed AI model for insurance decisions. It's entirely possible that people are already suffering negative outcomes due to AI mistakes, and fixing them will likely involve some sort of regulation and certification of AI tools used in the medical field.

87 notes

Behold, a flock of Medics

(Rambling under the cut)

Ok so y'all know about that semi-canon-compliant AU I have that I've mentioned before in tags n shit? Fortress Rising? Well, Corey (my dear older sib, @cursed--alien) and I talk about it like it's a real piece of media (or as though it's something I actually make fanworks for ffs) rather than us mutually bullshitting cool ideas for our Blorbos. One such Idea we've bullshitted about is that basically EVERY medic who meets the others becomes part of a group the Teams call the "Trauma Unit," they just get along so well lol

Here's some bulletpoints about the Medics

Ludwig Humboldt - RED Medic, hired 1964, born 1918. Introduced in Arc 1: Teambuilding. The most canon compliant of the four. Literally just my default take on Medic

Fredrich "Fritz" Humboldt - BLU Medic, clone of Ludwig, "Hired" 1964. Introduced in Arc 2: The Clone Saga. A more reserved man than his counterpart, he hides his madness behind a veneer of normalcy. Honestly Jealous of Ludwig for how freely he expresses himself. Suffers from anxiety, which he began treating himself. Has since spiraled into a dependency on diazepam that puts strain on his relationship with Dimitri, the BLU Heavy.

Sean Hickey - Former BLU Medic, served with the "Classic" team, born 1908. Introduced in Arc 3: Unfinished Business. A man who has a genuine passion for healing and the youngest on his team. Unfortunately, his time with BLU has left him with deep emotional scars, most stemming from his abuse at the hands of Chevy, the team leader. His only solace was in his friendship with Fred Conagher, though they lost contact after his contract ended. For the past 30 years, he's lived peacefully, though meeting the Humboldts has left him feeling bitter about his past experiences.

Hertz - Prototype Medibot, serial no. 110623-DAR. Introduced in Arc 4: Test Your Metal. The final prototype created by Gray Mann's robotics division before his untimely death forced the labs to shut their doors. Adopted by the Teams after RED Team found him while clearing out a Gray Gravel Co. warehouse. As with all the Graybots, he was programmed based on a combination of compromised respawn data and intel uncovered by both teams' respective Spies. Unlike the others, however, his dataset is incomplete, which has left him with numerous bugs in his programming. His speech (modeled off Ludwig and Fritz's) often cuts out, becoming interspersed with a combination of default responses for older Graybot models and medical textbook jargon, all modulated in emotionless text-to-speech.

131 notes

Reminder to people to Stop Using AI. Full stop. Cease. AI generated images, text, sound, it's all built off of stolen data and is environmentally destructive due to the power needed for it. The amount of material scraped off the internet, the massive amounts of data needed for LLMs to work, it's all built off other people's work. The data scraping doesn't know the difference between copyrighted and public domain, nor between illegal abuse material and regular images. Real people have to manually go through the data collected to remove abuse material collected into the datasets, and not only are they paid horribly for their work but are often traumatized by what they see. That fancy ai art? Built off data that includes abuse material, CSA material, copyrighted material, material not meant to be used in this fashion. It's vile.

You don't need to use ai images, you Should Not Use them at all. Choosing ai over real artists shows you are cheap and scummy.

I would rather deal with something drawn by a real person that is 'poorly drawn', I would rather have stick figures scribbled on a napkin, I would rather have stream of consciousness text to speech from a real person than ai slop.

I see a lot of people using ai images in their posts to spread fundraiser requests. Stop that. You don't need ai art to sell your message better, in fact the ai images come off as cheap and scammy and inauthentic.

Chatgpt is not a reliable source of information. It is not a search engine or a translator, so do not use it as such. Don't use it at all. Period. Full stop. Stop feeding the machine. Stop willingly feeding your writing, art, images, voice, or anything else into their datasets.

AI bullshit is being shoved in my face at every turn and I'm tired of seeing it.

18 notes

Actually going to make this reply its own separate post. TLDR: I have a theory that Chester, Norris, and Augustus are essentially spooky AI created from datasets from the fears/tapes.

Now, @gammija and @shinyopals weren't sure how Augustus fit into this. Right now, the leading theory is that he is Jonah Magnus. He only had one statement in the show, so there's basically no data to collect, so why would he be an AI?

Hypothetically speaking, let's say he isn't Jonah. Or at the very least, he isn't the Jonah we know.

Here's an idea: Augustus isn't the TMA Jonah Magnus, but rather the Jonah Magnus of the TMagP universe.

Here's my current thoughts as to why this may be the case:

1) TMA Jonah is dead. Jon had to kill him in order to control the Panopticon. While I won't discount TMA Jonah's and TMagP's Jonah's memories combining, I don't think it can be entirely TMA's in there.

2) I'm pretty confident that Freddie has connections to the Institute, for a variety of reasons. If nothing else, Freddie could have been connected to them before the Institute burned down in 1999. And unless it's a different Magnus starting the Institute, I'm just going to assume for now that Jonah started the Institute in both universes.

3) Augustus already separated himself out from Norris and Chester in two ways. He speaks less than the two of them but also his case is really odd which I'll go into next.

4) Augustus is the only Text-to-Speech voice who didn't say where his case came from. Norris and Chester so far have always told us where they're reading from. They say links, they mention threads and who said what in each thread, and we know each different email sent. Meanwhile, Augustus can't even tell us who the case giver is in "Taking Notes". This wouldn't mean much, but... we also know that all the papers in The Institute are gone. Noticeably so. Perhaps even integrated into the Freddie system.

5) Tbh... I just think it would be more interesting if the three of them aren't all from the same place. We know from the promotion, the Ink5oul case, and especially the design of the logo that alchemy will be a major part of TMagP: transformation and immortality. I wouldn't put it past this universe's Jonah to search for immortality in his own way.

And this is less of a point and more of a general observation. We have zero clue how these three got to this state. Even if we chalk it up to "fears stuff," the fears and the way they transform people generally follow some sort of logic, dream or not. I don't yet understand the logic behind why they've become this. We also don't know what triggered them being integrated into Freddie in the first place.

There's an outside force at play here, and I think it would be interesting if Augustus was a key factor to understanding it.

#tmagp#tmagp spoilers#tmagp speculation#ngl half of this is just 'I think it would be neat and lead to an interesting path for the story to take'

Text

i think it’s really really important that we keep reminding people that what we’re calling ai isn’t even close to intelligent and that its name is pure marketing. the silicon valley tech bros and hollywood executives call it ai because they either want it to seem all-powerful or they believe it is and use that to justify their use of it to exploit and replace people.

chat-gpt and things along those lines are not intelligent, they are predictive text generators that simply have more data to draw on than previous ones like, you know, your phone’s autocorrect. they are designed to pass the turing test by having human-passing speech patterns and syntax. they cannot come up with anything new, because they are machines programmed on data sets. they can’t even distinguish fact from fiction, because all they are actually capable of is figuring out how to construct a human-sounding response using applicable data to a question asked by a human. you know how people who use chat-gpt to cheat on essays will ask it for reference lists and get a list of texts that don’t exist? it’s because all chat-gpt is doing is figuring out what types of words typically appear in response to questions like that, and then stringing them together.

midjourney and things along those lines are not intelligent, they are image generators that have just been really heavily fine-tuned. you know how they used to do janky fingers and teeth and then they overcame that pretty quickly? that’s not because of growing intelligence, it’s because even more photographs got added to their data sets and were programmed in such a way that they were able to more accurately identify patterns in the average amount of fingers and teeth across all those photos. and it too isn’t capable of creation. it is placing pixels in spots to create an amalgamation of images tagged with metadata that matches the words in your request. you ask for a tree and it spits out something a little quirky? it’s not because it’s creating something, it’s because it gathered all of its data on trees and then averaged it out. you know that “the rest of the mona lisa” tweet and how it looks like shit? the fact that there is no “rest” of the mona lisa aside, it’s because the generator does not have the intelligence required to identify what’s what in the background of such a painting and extend it with any degree of accuracy, it looked at the colours and approximate shapes and went “oho i know what this is maybe” and spat out an ugly landscape that doesn’t actually make any kind of physical or compositional sense, because it isn’t intelligent.

and all those ai-generated voices? also not intelligent, literally just the same vocal synth we’ve been able to do since daisy bell but more advanced. you get a sample of a voice, break it down into the various vowel and consonant sounds, and then when you type in the text you want it to say, it plays those vowel and consonant sounds in the order displayed in that text. the only difference now is that the breaking it down process can be automated to some extent (still not intelligence, just data analysis) and the synthesising software can recognise grammar a bit more and add appropriate inflections to synthesised voices to create a more natural flow.

if you took the exact same technology that powers midjourney or chat-gpt and removed a chunk of its dataset, the stuff it produces would noticeably worsen because it only works with a very very large amount of data. these programs are not intelligent. they are programs that analyse and store data and then string it together upon request. and if you want evidence that the term ai is just being used for marketing, look at the sheer amount of software that’s added “ai tools” that are either just things that already existed within the software, using the same exact tech they always did but slightly refined (a lot of film editing programs are renaming things like their chromakey tools to have “ai” in the name, for example) or are actually worse than the things they’re overhauling (like the grammar editor in office 365 compared to the classic office spellcheck).

but you wanna know a real nifty lil secret about the way “ai” is developing? it’s all neural nets and machine learning, and the thing about neural nets and machine learning is that in order to continue growing in power they need new data. so yeah, currently, as more and more data gets added to them, they seem to be evolving really quickly. but at some point soon after we run out of data to add to them because people decided they were complete or because corporations replaced all new things with generated bullshit, they’re going to stop evolving and start getting really, really, REALLY repetitive. because machine learning isn’t intelligent or capable of being inspired to create new things independently. no, it’s actually self-reinforcing. it gets caught in loops. “ai” isn’t the future of art, it’s a data analysis machine that’ll start sounding even more like a broken record than it already does the moment its data sets stop having really large amounts of unique things added to them.

#steph's post tag#only good thing to come out of the evolution of image generation and recognition is that captchas have actually gotten easier#because computers can recognise even the blurriest photos now#so instead captcha now gives you really really clear images of things that look nothing like each other#(like. ''pick all the chairs'' and then there's a few chairs a few bicycles and a few trees)#but with a distorted watermark overlaid on the images so that computers can't read them

Text

Unlock the Power of AI: Give Life to Your Videos with Human-Like Voice-Overs

Video has emerged as one of the most effective mediums for audience engagement in the rapidly changing field of content creation. Whether you are a business owner, marketer, or YouTuber, producing high-quality videos is crucial. But what if you could improve your videos even more? Enter AI voice-overs, the future of video production.

Thanks to developments in artificial intelligence, it's now simpler than ever to create convincing, human-like voice-overs. These AI-powered voices sound so realistic that your listeners may find it difficult to tell them apart from real human voices. But what exactly is AI voice-over technology, and why does it matter for content creators? Let's get started!

What Are AI Voice-Overs?

AI voice-overs are produced by machine learning models trained to replicate the subtleties, tones, and inflections of human speech, which is why they can sound remarkably natural. They have numerous applications, including audiobooks, podcasts, ads, and video narration.

Creating voice-overs for videos used to require hiring professional voice actors, which can be costly and time-consuming. With AI, voice-overs can now be produced quickly without sacrificing quality.

Why Should Your Videos Have AI Voice-Overs?

Save Time and Money: Conventional voice acting can be expensive and time-consuming. The costs of hiring a voice actor, scheduling recording sessions, and editing the finished product can add up quickly. AI voice-overs, by contrast, can be produced in minutes and at a far lower price.

Consistency and Adaptability: AI voice-overs let you create consistent audio across all of your videos, regardless of their length or style. Want to change the tempo or tone? No problem: you can easily adjust the voice's characteristics.

Boost Audience Engagement: A realistic voice-over can make your content more captivating. The natural-sounding voices produced by AI make your videos sound more polished and professional, which can give viewers a better overall experience and improve retention.

Multilingual Support: AI voice-over tools can support multiple languages and accents, making your content more accessible to a worldwide audience. Many tools can produce accurate, fluent voice-overs in languages such as English, Spanish, French, and others.

Always Available: AI voice generators never clock out, so you can produce as many voice-overs as you need, whenever you need them. This makes it easy to scale up content production without adding staff.

How Does AI Voice-Over Technology Work?

AI voice-over technology uses text-to-speech (TTS) algorithms to convert written text into spoken words. These systems are trained on large datasets of human speech, from which they learn the linguistic patterns and nuances that make their voices lifelike.

The most sophisticated AI models can even adjust the voice according to context, emotion, and tone, producing voice-overs that sound as though a skilled human voice artist recorded them.

Where Can AI Voice-Overs Be Used?

YouTube Videos: Ideal for content creators who want a polished result without spending a lot of time recording.

Explainers and Tutorials: AI voice-overs can narrate instructional videos or tutorials, making your material engaging and easy to understand.

Marketing Videos: Use expert voice-overs for advertisements, product demonstrations, and promotional videos to enhance the marketing content for your brand.

Podcasts: Using AI voice technology, you can produce material that sounds like a podcast, providing your audience with a genuine, human-like experience.

E-learning: AI-generated voices can be incorporated into e-learning modules to give instructional materials polished, consistent narration.

Selecting the Best AI Voice-Over Program

Numerous AI voice-over tools are available, each with its own features. Popular choices include:

ElevenLabs: renowned for its customizable features and AI voices that seem natural.

HeyGen: Provides highly human-sounding, customisable AI voices, ideal for content producers.

Google Cloud Text-to-Speech: A dependable choice for multilingual, high-quality voice synthesis.

Look for a tool that lets you customize the output, choose from a variety of voices, and adjust tone and tempo.

The Future of AI Voice-Overs in Content Production

AI voice-overs will likely keep improving as the technology advances. AI-generated voices could soon be indistinguishable from human ones, giving content creators even more ways to improve their work without spending heavily on voice actors or hours on recording.

The future is bright for content creators. AI voice-overs are a fascinating technology that can improve the quality of your videos while saving both time and money. Whether you're making marketing materials, YouTube videos, or online courses, adding AI voice-overs to your workflow can be a game changer.

Are You Interested in AI Voice-Overs?

If you're ready to step up your content production, read my full post on how AI voice-overs can transform your videos. To help you get started right away, I've also included recommendations for some of the top AI voice-over programs currently available.

[Go Here to Read the Complete Article]

#AI Voice Over#YouTube Tips#Content Creation#Voiceover#Video Marketing#animals#birds#black cats#cats of tumblr#fishblr#AI Tools#Digital Marketing

Text

Mastering Neural Networks: A Deep Dive into Combining Technologies

How Can Two Trained Neural Networks Be Combined?

Introduction

In the ever-evolving world of artificial intelligence (AI), neural networks have emerged as a cornerstone technology, driving advancements across various fields. But have you ever wondered how combining two trained neural networks can enhance their performance and capabilities? Let’s dive deep into the fascinating world of neural networks and explore how combining them can open new horizons in AI.

Basics of Neural Networks

What is a Neural Network?

Neural networks, inspired by the human brain, consist of interconnected nodes or "neurons" that work together to process and analyze data. These networks can identify patterns, recognize images, understand speech, and even generate human-like text. Think of them as a complex web of connections where each neuron contributes to the overall decision-making process.

How Neural Networks Work

Neural networks function by receiving inputs, processing them through hidden layers, and producing outputs. They learn from data by adjusting the weights of connections between neurons, thus improving their ability to predict or classify new data. Imagine a neural network as a black box that continuously refines its understanding based on the information it processes.
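To make the idea of "adjusting the weights of connections" concrete, here is a minimal sketch of a single artificial neuron (no hidden layers, for brevity) learning the logical OR function with gradient descent. The dataset, learning rate, and epoch count are illustrative assumptions, not values from any particular framework.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs=2000, lr=0.5):
    """Train one sigmoid neuron with per-example gradient descent on log-loss."""
    w = [0.0, 0.0]  # connection weights, one per input
    b = 0.0         # bias term
    for _ in range(epochs):
        for (x1, x2), y in data:
            # Forward pass: weighted sum of the inputs, then the activation.
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # For log-loss, the gradient w.r.t. the pre-activation is (p - y).
            err = p - y
            # Learning step: nudge each parameter against its gradient.
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b


def predict(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(OR_DATA)
for x, y in OR_DATA:
    print(x, y, round(predict(w, b, x), 3))
```

Real networks stack many such neurons into hidden layers and compute the gradients with backpropagation, which frameworks such as PyTorch and TensorFlow automate.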

Types of Neural Networks

From simple feedforward networks to complex convolutional and recurrent networks, neural networks come in various forms, each designed for specific tasks. Feedforward networks are great for straightforward tasks, while convolutional neural networks (CNNs) excel in image recognition, and recurrent neural networks (RNNs) are ideal for sequential data like text or speech.

Why Combine Neural Networks?

Advantages of Combining Neural Networks

Combining neural networks can significantly enhance their performance, accuracy, and generalization capabilities. By leveraging the strengths of different networks, we can create a more robust and versatile model. Think of it as assembling a team where each member brings unique skills to tackle complex problems.

Applications in Real-World Scenarios

In real-world applications, combining neural networks can lead to breakthroughs in fields like healthcare, finance, and autonomous systems. For example, in medical diagnostics, combining networks can improve the accuracy of disease detection, while in finance, it may improve forecasts of stock market trends.

Methods of Combining Neural Networks

Ensemble Learning

Ensemble learning involves training multiple neural networks and combining their predictions to improve accuracy. This approach reduces the risk of overfitting and enhances the model's generalization capabilities.

Bagging

Bagging, or Bootstrap Aggregating, trains multiple versions of a model on bootstrap samples (random samples of the training data drawn with replacement) and combines their predictions, typically by averaging or majority vote. This method is simple yet effective at reducing variance and improving model stability.
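Bagging can be sketched in a few lines. To keep the example short and dependency-free, this sketch assumes a one-dimensional dataset and uses decision stumps (single-threshold classifiers) as a stand-in for the trained networks; the bootstrap-and-vote structure is the same whatever the base model is.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Pick the threshold and direction with the fewest training errors (1-D data)."""
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            # Predict 1 when sign * (x - t) >= 0, otherwise 0.
            errors = sum((1 if sign * (x - t) >= 0 else 0) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, sign)
    _, t, sign = best
    return lambda x: 1 if sign * (x - t) >= 0 else 0


def bagging(xs, ys, n_models=15, seed=0):
    """Train n_models stumps on bootstrap samples and combine them by majority vote."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n indices drawn uniformly *with* replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        votes = Counter(m(x) for m in models)
        return votes.most_common(1)[0][0]

    return predict


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
model = bagging(xs, ys)
print([model(x) for x in (1, 2, 7, 8)])
```

An odd number of models avoids ties in a two-class vote. For real projects, scikit-learn's `BaggingClassifier` implements the same idea.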

Boosting

Boosting focuses on training sequential models, where each model attempts to correct the errors of its predecessor. This iterative process leads to a powerful combined model that performs well even on difficult tasks.
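Here is a minimal sketch of one classic boosting algorithm, discrete AdaBoost. Each round fits a decision stump to reweighted data and then up-weights the examples that stump got wrong, so the next model concentrates on them. As in the bagging sketch, the stumps and the toy 1-D data are assumptions standing in for real networks and datasets; labels must be -1 or +1.

```python
import math


def stump_predict(x, t, sign):
    """Return +sign when x >= t, otherwise -sign."""
    return sign if x >= t else -sign


def adaboost(xs, ys, rounds=5):
    """Discrete AdaBoost with 1-D threshold stumps; ys must contain -1/+1."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, sign)
    for _ in range(rounds):
        # Choose the stump with the lowest *weighted* error.
        best = None
        for t in sorted(set(xs)):
            for sign in (1, -1):
                err = sum(w for x, y, w in zip(xs, ys, weights)
                          if stump_predict(x, t, sign) != y)
                if best is None or err < best[0]:
                    best = (err, t, sign)
        err, t, sign = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite
        alpha = 0.5 * math.log((1 - err) / err)  # this stump's vote strength
        ensemble.append((alpha, t, sign))
        # Shrink weights of correct examples, grow those of mistakes, renormalize.
        weights = [w * math.exp(-alpha * y * stump_predict(x, t, sign))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]

    def predict(x):
        score = sum(a * stump_predict(x, t, s) for a, t, s in ensemble)
        return 1 if score >= 0 else -1

    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single threshold separates these
model = adaboost(xs, ys, rounds=5)
print([model(x) for x in xs])
```

No single stump can classify this data, but a weighted combination of three or more can, which is the point of boosting.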

Stacking

Stacking involves training multiple base models and then training a "meta-learner" on their outputs to learn how best to combine them. This technique leverages the strengths of different models and often performs better than any single base model.
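A minimal stacking sketch follows, assuming two already-trained regressors (the hypothetical `base_a` and `base_b` below stand in for trained networks) and a linear meta-learner fitted by least squares. In practice the meta-learner should be fitted on held-out or out-of-fold predictions, not on the data the base models were trained on, to avoid leakage. scikit-learn's `StackingRegressor` and `StackingClassifier` automate this pattern.

```python
def fit_linear_meta_learner(preds_a, preds_b, targets):
    """Least-squares weights (w_a, w_b) such that w_a*a + w_b*b approximates the target."""
    saa = sum(a * a for a in preds_a)
    sbb = sum(b * b for b in preds_b)
    sab = sum(a * b for a, b in zip(preds_a, preds_b))
    say = sum(a * y for a, y in zip(preds_a, targets))
    sby = sum(b * y for b, y in zip(preds_b, targets))
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("base-model predictions are collinear")
    # Solve the 2x2 normal equations with Cramer's rule.
    w_a = (say * sbb - sby * sab) / det
    w_b = (saa * sby - sab * say) / det
    return w_a, w_b


# Hypothetical base models standing in for two trained networks.
def base_a(x):
    return 2.0 * x


def base_b(x):
    return x + 1.0


# Held-out data the base models did not see during training.
holdout_x = [0.0, 1.0, 2.0, 3.0, 4.0]
holdout_y = [3.0 * x + 1.0 for x in holdout_x]

w_a, w_b = fit_linear_meta_learner(
    [base_a(x) for x in holdout_x], [base_b(x) for x in holdout_x], holdout_y
)


def stacked(x):
    """The combined model: the meta-learner applied to base-model outputs."""
    return w_a * base_a(x) + w_b * base_b(x)


print(w_a, w_b, stacked(10.0))
```

Neither base model fits the target alone, but the meta-learner discovers that their sum does.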

Transfer Learning

Transfer learning is a method where a pre-trained neural network is fine-tuned on a new task. This approach is particularly useful when data is scarce, allowing us to leverage the knowledge acquired from previous tasks.

Concept of Transfer Learning

In transfer learning, a model trained on a large dataset is adapted to a smaller, related task. For instance, a model trained on millions of images can be fine-tuned to recognize specific objects in a new dataset.

How to Implement Transfer Learning

To implement transfer learning, we start with a pretrained model, freeze some layers to retain their knowledge, and fine-tune the remaining layers on the new task. This method saves time and computational resources while achieving impressive results.
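The freeze-then-fine-tune recipe can be illustrated without any framework. In this sketch, a hypothetical "pretrained" layer (`FROZEN_W`, an assumption standing in for a real pretrained network's early layers) is only ever read, never updated, and a new logistic-regression head is trained on a small dataset for the new task. In PyTorch, freezing is usually done by setting `requires_grad = False` on the pretrained parameters before training the new layers.

```python
import math

# Hypothetical pretrained layer. It is frozen: nothing below modifies it.
FROZEN_W = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen layer: linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(data, epochs=500, lr=0.1):
    """Train only a new logistic-regression head on top of the frozen features."""
    head_w = [0.0] * len(FROZEN_W)
    head_b = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)  # forward pass through the frozen layer
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            err = p - y
            # Only the head's parameters receive gradient updates.
            head_w = [w - lr * err * fi for w, fi in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b


def predict(head_w, head_b, x):
    f = extract_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)


# Small labeled dataset for the new task: is x[0] larger than x[1]?
NEW_TASK = [((2.0, 0.0), 1), ((3.0, 1.0), 1), ((0.0, 2.0), 0), ((1.0, 3.0), 0)]
head_w, head_b = fine_tune_head(NEW_TASK)
print([round(predict(head_w, head_b, x), 3) for x, _ in NEW_TASK])
```

Because only two head weights and a bias are trained, four examples are enough here. That is the small-data benefit the paragraph describes.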

Advantages of Transfer Learning

Transfer learning enables quicker training times and improved performance, especially when dealing with limited data. It’s like standing on the shoulders of giants, leveraging the vast knowledge accumulated from previous tasks.

Neural Network Fusion

Neural network fusion involves merging multiple networks into a single, unified model. This method combines the strengths of different architectures to create a more powerful and versatile network.

Definition of Neural Network Fusion

Neural network fusion integrates different networks at various stages, such as combining their outputs or merging their internal layers. This approach can enhance the model's ability to handle diverse tasks and data types.

Types of Neural Network Fusion

There are several types of neural network fusion, including early fusion, where networks are combined at the input level, and late fusion, where their outputs are merged. Each type has its own advantages depending on the task at hand.

Implementing Fusion Techniques

To implement neural network fusion, we can combine the outputs of different networks using techniques like averaging, weighted voting, or more sophisticated methods like learning a fusion model. The choice of technique depends on the specific requirements of the task.
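Late fusion by weighted averaging is the simplest of these techniques to show. The sketch below assumes each network already outputs a probability vector over the same classes; the two output vectors and the 0.6/0.4 weights are made-up illustrations, and in practice the weights would be tuned on validation data or replaced by a learned fusion model.

```python
def late_fusion(prob_vectors, weights=None):
    """Fuse per-class probabilities from several models by weighted averaging."""
    if weights is None:
        weights = [1.0] * len(prob_vectors)  # plain (unweighted) averaging
    if len(weights) != len(prob_vectors):
        raise ValueError("need one weight per model")
    total = sum(weights)
    n_classes = len(prob_vectors[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, prob_vectors)) / total
        for c in range(n_classes)
    ]


def argmax(values):
    """Index of the largest value, i.e. the predicted class."""
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical outputs of two trained networks for the same input.
image_net = [0.7, 0.2, 0.1]
audio_net = [0.2, 0.5, 0.3]

fused = late_fusion([image_net, audio_net], weights=[0.6, 0.4])
print([round(p, 2) for p in fused], argmax(fused))
```

Dividing by the weight total keeps the fused vector a valid probability distribution even if the weights do not sum to 1.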

Cascade Network

Cascade networks involve feeding the output of one neural network as input to another. This approach creates a layered structure where each network focuses on different aspects of the task.

What is a Cascade Network?

A cascade network is a hierarchical structure where multiple networks are connected in series. Each network refines the outputs of the previous one, leading to progressively better performance.
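Structurally, a cascade is just function composition: each stage's output becomes the next stage's input. In the sketch below, two hypothetical hand-written stages (a smoothing filter and a thresholding step) stand in for trained networks, so that the refinement from one stage to the next is easy to see.

```python
from typing import Callable, Sequence

Stage = Callable[[list[float]], list[float]]


def run_cascade(stages: Sequence[Stage], x: list[float]) -> list[float]:
    """Feed each stage's output into the next stage, in order."""
    for stage in stages:
        x = stage(x)
    return x


# Hypothetical stage 1: denoise with a 3-point moving average.
def denoise(signal: list[float]) -> list[float]:
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - 1): i + 2]  # edges use available neighbours
        out.append(sum(window) / len(window))
    return out


# Hypothetical stage 2: turn the cleaned signal into on/off decisions.
def threshold(signal: list[float]) -> list[float]:
    return [1.0 if v > 0.5 else 0.0 for v in signal]


noisy = [0.0, 0.0, 1.0, 0.0, 0.0, 1.0, 1.0, 1.0]
print(run_cascade([denoise, threshold], noisy))
```

The isolated spike at index 2 is suppressed by the first stage before the second stage makes its decisions, while the sustained run at the end survives.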

Advantages and Applications of Cascade Networks

Cascade networks are particularly useful in complex tasks where different stages of processing are required. For example, in image processing, a cascade network can progressively enhance image quality, leading to more accurate recognition.

Practical Examples

Image Recognition

In image recognition, combining CNNs with ensemble methods can improve accuracy and robustness. For instance, a network trained on general image data can be combined with a network fine-tuned for specific object recognition, leading to superior performance.

Natural Language Processing

In natural language processing (NLP), combining RNNs with transfer learning can enhance the understanding of text. A pre-trained language model can be fine-tuned for specific tasks like sentiment analysis or text generation, resulting in more accurate and nuanced outputs.

Predictive Analytics

In predictive analytics, combining different types of networks can improve the accuracy of predictions. For example, a network trained on historical data can be combined with a network that analyzes real-time data, leading to more accurate forecasts.

Challenges and Solutions

Technical Challenges

Combining neural networks can be technically challenging, requiring careful tuning and integration. Ensuring compatibility between different networks and avoiding overfitting are critical considerations.

Data Challenges

Data-related challenges include ensuring the availability of diverse and high-quality data for training. Managing data complexity and avoiding biases are essential for achieving accurate and reliable results.

Possible Solutions

To overcome these challenges, it’s crucial to adopt a systematic approach to model integration, including careful preprocessing of data and rigorous validation of models. Utilizing advanced tools and frameworks can also facilitate the process.

Tools and Frameworks

Popular Tools for Combining Neural Networks

Tools like TensorFlow, PyTorch, and Keras provide extensive support for combining neural networks. These platforms offer a wide range of functionalities and ease of use, making them ideal for both beginners and experts.

Frameworks to Use

Libraries such as scikit-learn provide ready-made ensemble estimators (for example, BaggingClassifier, VotingClassifier, and StackingClassifier), while Apache MXNet and Microsoft Cognitive Toolkit (CNTK) also offered tools for building combined neural network models. Note that MXNet and CNTK are no longer actively developed.

Future of Combining Neural Networks

Emerging Trends

Emerging trends in combining neural networks include the use of advanced ensemble techniques, the integration of neural networks with other AI models, and the development of more sophisticated fusion methods.

Potential Developments

Future developments may include the creation of more powerful and efficient neural network architectures, enhanced transfer learning techniques, and the integration of neural networks with other technologies like quantum computing.

Case Studies

Successful Examples in Industry

In healthcare, combining neural networks has led to significant improvements in disease diagnosis and treatment recommendations. For example, combining CNNs with RNNs has been reported to improve the accuracy of medical image analysis and patient monitoring.

Lessons Learned from Case Studies

Key lessons from successful case studies include the importance of data quality, the need for careful model tuning, and the benefits of leveraging diverse neural network architectures to address complex problems.

Online Course

I have come across many online courses, but I finally found some great platforms that can save you time and money:

1. Prag Robotics_ TBridge

2. Coursera

Best Practices

Strategies for Effective Combination

Effective strategies for combining neural networks include using ensemble methods to enhance performance, leveraging transfer learning to save time and resources, and adopting a systematic approach to model integration.

Avoiding Common Pitfalls

Common pitfalls to avoid include overfitting, ignoring data quality, and underestimating the complexity of model integration. By being aware of these challenges, we can develop more robust and effective combined neural network models.

Conclusion

Combining two trained neural networks can significantly enhance their capabilities, leading to more accurate and versatile AI models. Whether through ensemble learning, transfer learning, or neural network fusion, the potential benefits are immense. By adopting the right strategies and tools, we can unlock new possibilities in AI and drive advancements across various fields.

FAQs

What is the easiest method to combine neural networks?

The easiest method is ensemble learning, where multiple models are combined to improve performance and accuracy.

Can different types of neural networks be combined?

Yes, different types of neural networks, such as CNNs and RNNs, can be combined to leverage their unique strengths.

What are the typical challenges in combining neural networks?

Challenges include technical integration, data quality, and avoiding overfitting. Careful planning and validation are essential.

How does combining neural networks enhance performance?

Combining neural networks enhances performance by leveraging diverse models, reducing errors, and improving generalization.

Is combining neural networks beneficial for small datasets?

Yes, combining neural networks can be beneficial for small datasets, especially when using techniques like transfer learning to leverage knowledge from larger datasets.

#artificialintelligence#coding#raspberrypi#iot#stem#programming#science#arduinoproject#engineer#electricalengineering#robotic#robotica#machinelearning#electrical#diy#arduinouno#education#manufacturing#stemeducation#robotics#robot#technology#engineering#robots#arduino#electronics#automation#tech#innovation#ai

Text

Text-to-speech datasets represent the cornerstone of AI-powered TTS technology, enabling machines to communicate with human-like fluency and naturalness. At Globose Technology Solutions, we recognize the important role of high-quality datasets in pushing the boundaries of AI, and we are committed to harnessing the power of TTS technology to drive innovation and enhance human-machine interactions. Visit Globose Technology Solutions for more information about our services and expertise in text-to-speech technology.

#Text-To-Speech Datasets#NLP#Data Collection Compnay#Data Collection#data collection company#globose technology solutions#datasets#technology

Text

How to Develop a Video Text-to-Speech Dataset for Deep Learning

Introduction:

In the rapidly advancing field of deep learning, video-based text-to-speech (TTS) technology plays a pivotal role in improving speech synthesis and human-computer interaction. A well-organized dataset is the cornerstone of an effective TTS model, underpinning its accuracy, naturalness, and flexibility. This article outlines a systematic approach to creating a high-quality video TTS dataset for deep learning.

Recognizing the Significance of a Video TTS Dataset

A video text-to-speech dataset pairs video recordings with the corresponding speech audio and transcribed text. Such datasets are vital for training models that produce natural, contextually relevant synthetic speech, with applications including voice assistants, automated dubbing, and real-time language translation.

Establishing Dataset Specifications

Prior to initiating data collection, it is essential to delineate the dataset’s scope and specifications. Important considerations include:

Language Coverage: Choose one or more languages relevant to your application.

Speaker Diversity: Incorporate a range of speakers varying in age, gender, and accents.

Audio Quality: Ensure recordings are of high fidelity with minimal background interference.

Sentence Variability: Gather a wide array of text samples, encompassing formal, informal, and conversational speech.

Data Collection Methodology

a. Choosing Video Sources

To create a comprehensive dataset, videos can be sourced from:

Licensed datasets and public domain archives

Crowdsourced recordings featuring diverse speakers

Custom recordings conducted in a controlled setting

It is imperative to secure the necessary rights and permissions for utilizing any third-party content.

b. Audio Extraction and Preprocessing

After collecting the videos, extract the speech audio using a tool such as FFmpeg. The preprocessing steps include:

Noise Reduction: Eliminate background noise to enhance speech clarity.

Volume Normalization: Maintain consistent audio levels.

Segmentation: Divide lengthy recordings into smaller, sentence-level segments.
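The extraction and preprocessing steps above can be sketched in plain Python. This is a sketch under stated assumptions: the FFmpeg flags shown are standard (`-vn` drops video, `-ac` sets channels, `-ar` sets sample rate, `-c:a pcm_s16le` writes 16-bit WAV), but the 22,050 Hz rate, the silence threshold, and the helper names are illustrative choices. Samples are assumed to be floats in [-1, 1]. Peak normalization is used for brevity; loudness normalization (for example, FFmpeg's `loudnorm` filter) is a common alternative. Noise reduction usually relies on dedicated tools and is not shown.

```python
def ffmpeg_extract_cmd(video_path, wav_path, sample_rate=22050):
    """Build an FFmpeg command that extracts mono 16-bit PCM audio from a video.

    Run it with subprocess.run(cmd, check=True) once FFmpeg is installed.
    """
    return [
        "ffmpeg", "-i", video_path,
        "-vn",                    # drop the video stream
        "-ac", "1",               # downmix to mono
        "-ar", str(sample_rate),  # resample to the target rate
        "-c:a", "pcm_s16le",      # 16-bit PCM (WAV)
        wav_path,
    ]


def peak_normalize(samples, target_peak=0.9):
    """Scale a clip so that its loudest sample reaches target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silent clip: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def segment_on_silence(samples, threshold=0.02, min_gap=3):
    """Return (start, end) index pairs (end exclusive) of voiced regions
    separated by at least min_gap consecutive quiet samples."""
    segments = []
    start = None    # start index of the current voiced region
    quiet_run = 0   # consecutive quiet samples seen inside that region
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if start is None:
                start = i
            quiet_run = 0
        elif start is not None:
            quiet_run += 1
            if quiet_run >= min_gap:
                # Close the segment just after its last loud sample.
                segments.append((start, i - quiet_run + 1))
                start = None
                quiet_run = 0
    if start is not None:
        segments.append((start, len(samples) - quiet_run))
    return segments


print(ffmpeg_extract_cmd("talk.mp4", "talk.wav"))
print(segment_on_silence([0.5, 0.4, 0.0, 0.0, 0.0, 0.3, 0.6, 0.0, 0.0]))
```

In a real pipeline, `min_gap` would be expressed in milliseconds and converted to samples using the sample rate.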

Text Alignment and Transcription

For deep learning models to function optimally, it is essential that transcriptions are both precise and synchronized with the corresponding speech. The following methods can be employed:

Automatic Speech Recognition (ASR): Implement ASR systems to produce preliminary transcriptions.

Manual Verification: Enhance accuracy through a thorough review of the transcriptions by human experts.

Timestamp Alignment: Confirm that each word is accurately associated with its respective spoken timestamp.
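Once word-level timestamps exist (for example, from an ASR system or a forced aligner), a quick automated check can catch the most common alignment errors before human review. This sketch assumes a hypothetical `WordTiming` record with start and end times in seconds; the specific checks are illustrative, not an exhaustive QA standard.

```python
from dataclasses import dataclass


@dataclass
class WordTiming:
    word: str
    start: float  # seconds from the start of the clip
    end: float    # seconds from the start of the clip


def alignment_problems(words, clip_duration):
    """Return human-readable descriptions of suspicious word timings."""
    problems = []
    prev_end = 0.0
    for i, w in enumerate(words):
        if w.start >= w.end:
            problems.append(f"word {i} ({w.word!r}): start >= end")
        if w.start < prev_end:
            problems.append(f"word {i} ({w.word!r}): overlaps previous word")
        if w.end > clip_duration:
            problems.append(f"word {i} ({w.word!r}): ends after clip")
        prev_end = max(prev_end, w.end)
    return problems


good = [WordTiming("hello", 0.0, 0.4), WordTiming("world", 0.45, 0.9)]
bad = [WordTiming("hi", 0.0, 0.5), WordTiming("there", 0.3, 1.2)]
print(alignment_problems(good, 1.0))
print(alignment_problems(bad, 1.0))
```

Clips that produce any problems can be routed to the manual verification step rather than discarded outright.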

Data Annotation and Labeling

Incorporating metadata significantly improves the dataset's functionality. Important annotations include:

Speaker Identity: Identify each speaker to support speaker-adaptive TTS models.

Emotion Tags: Specify tone and sentiment to facilitate expressive speech synthesis.

Noise Labels: Identify background noise to assist in developing noise-robust models.

Dataset Formatting and Storage

To ensure efficient model training, it is crucial to organize the dataset in a systematic manner:

Audio Files: Save speech recordings in WAV or FLAC formats.

Transcriptions: Keep aligned text files in JSON or CSV formats.

Metadata Files: Provide speaker information and timestamps for reference.
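One way to keep transcriptions and metadata together is a manifest with one row per audio segment, written as CSV or JSON Lines. The column names below are a hypothetical layout chosen for illustration; adapt them to whatever your training code expects. The standard `csv` module handles quoting, so transcripts containing commas are safe.

```python
import csv
import io
import json

# Hypothetical column layout for one utterance per row.
FIELDS = ["audio_path", "text", "speaker_id", "emotion", "noise", "start_s", "end_s"]


def to_csv(records):
    """Serialize manifest records as CSV with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_jsonl(records):
    """Serialize manifest records as JSON Lines (one object per line)."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


records = [
    {
        "audio_path": "wavs/spk01_0001.wav",
        "text": "Well, hello there.",
        "speaker_id": "spk01",
        "emotion": "neutral",
        "noise": "none",
        "start_s": 12.4,
        "end_s": 13.6,
    },
]

print(to_csv(records))
print(to_jsonl(records))
```

When writing to a file rather than a string, open it with `newline=""` as the `csv` module documentation recommends.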

Quality Assurance and Data Augmentation

Prior to finalizing the dataset, it is important to perform comprehensive quality assessments:

Verify Alignment: Ensure that text and speech are properly synchronized.

Assess Audio Clarity: Confirm that recordings adhere to established quality standards.

Augmentation: Implement techniques such as pitch shifting, speed variation, and noise addition to enhance model robustness.
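Two of these augmentations can be sketched with the standard library alone: adding white noise at a chosen signal-to-noise ratio, and changing speed by resampling with linear interpolation. This is a simplified sketch; note that this naive speed change also shifts pitch. Changing pitch without changing duration needs a phase vocoder or a library function such as `librosa.effects.pitch_shift`, which is not shown. Samples are assumed to be floats.

```python
import math
import random


def add_noise(samples, snr_db, seed=0):
    """Add white Gaussian noise so that signal power / noise power equals snr_db."""
    signal_power = sum(s * s for s in samples) / len(samples)
    if signal_power == 0.0:
        return list(samples)  # SNR is undefined for a silent clip
    # SNR(dB) = 10 * log10(P_signal / P_noise), solved for P_noise.
    noise_power = signal_power / (10 ** (snr_db / 10))
    rng = random.Random(seed)
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in samples]


def change_speed(samples, factor):
    """Resample by linear interpolation; factor > 1 is faster (and higher-pitched)."""
    n_out = int(len(samples) / factor)
    out = []
    for i in range(n_out):
        pos = i * factor          # fractional read position in the input
        j = int(pos)
        frac = pos - j
        nxt = samples[j + 1] if j + 1 < len(samples) else samples[j]
        out.append(samples[j] * (1 - frac) + nxt * frac)
    return out


# One second of a 440 Hz tone at a 16 kHz sample rate.
clean = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
noisy = add_noise(clean, snr_db=10.0)
slow = change_speed(clean, 0.5)  # twice as long, an octave lower
print(len(noisy), len(slow))
```

Fixing the random seed makes the augmented dataset reproducible between runs.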

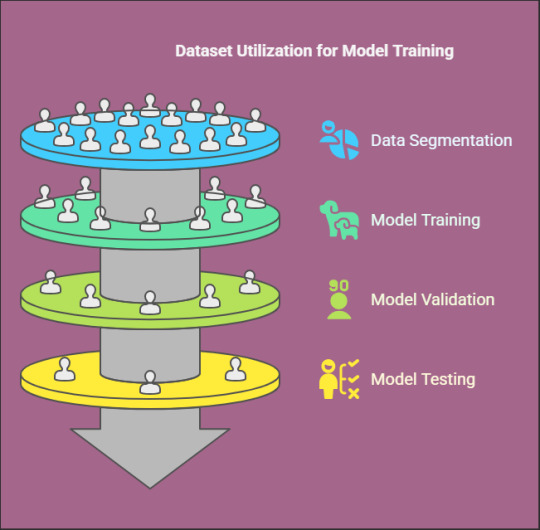

Training and Testing Your Dataset

Finally, use the dataset to train deep learning models such as Tacotron, FastSpeech, or VITS. Hold out a portion of the dataset for validation and testing so you can assess model performance and identify areas for improvement.
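A reproducible hold-out split takes only a seeded shuffle. The 5% validation and test fractions and the seed below are illustrative assumptions. If you want to measure how well a model generalizes to unseen voices, split by speaker ID instead of by utterance, so that no speaker appears in more than one split.

```python
import random


def split_dataset(items, val_frac=0.05, test_frac=0.05, seed=42):
    """Shuffle deterministically, then carve off test and validation sets."""
    items = list(items)
    random.Random(seed).shuffle(items)  # same seed -> same split every run
    n = len(items)
    n_test = int(n * test_frac)
    n_val = int(n * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


utterance_ids = list(range(100))
train, val, test = split_dataset(utterance_ids)
print(len(train), len(val), len(test))
```

Keeping the test set fixed across experiments makes results from different model versions comparable.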

Conclusion

Creating a video TTS dataset is a detailed but rewarding endeavor that lays the foundation for sophisticated speech synthesis applications. By prioritizing high-quality data collection, accurate transcription, and comprehensive annotation, one can develop a dataset that significantly improves the performance of deep learning models for TTS.

Text

Optimizing Business Operations with Advanced Machine Learning Services

Machine learning has been widely adopted in recent years. However, building machine learning in-house requires managing technical infrastructure: maintaining data pipelines and servers and developing models from scratch, among other tasks. Machine learning as a service (MLaaS) automates many of these processes, letting businesses get started on a platform much more quickly.

What Is Machine Learning?

Machine learning is a branch of artificial intelligence in which systems learn from data rather than following explicitly programmed rules; deep learning with neural networks is one example. A machine learning model starts with a dataset and learns to extract relevant patterns from it.

Machine learning technologies enable computer vision, speech recognition, face identification, predictive analytics, and more. They can also make regression (predicting numeric values from data) more accurate.

What Is MLaaS Used For?

MLaaS supports many use cases, such as churn prevention and support-ticket categorization. Its key benefit is that it lets you delegate machine learning's laborious tasks: you don't need to install software, configure servers, or maintain infrastructure. Typically, you choose the column to predict, connect the relevant training data, and let the service train the model.

Natural Language Interpretation

By analyzing social media posts and the tone of customer reviews, natural language processing helps businesses better understand their customers. ML services help them make more informed decisions about selling their goods and services, such as offering automated help or recommending better alternatives. Machine learning can also sort incoming customer inquiries into distinct categories, helping businesses allocate their time and resources more efficiently.

Predicting

Another use of machine learning is forecasting, which allows businesses to project future occurrences based on existing data. For example, businesses that need to estimate the costs of their goods, services, or clients might utilize MLaaS for cost modelling.

Data Investigation

Investigating variables, examining correlations between variables, and displaying associations are all part of data exploration. Businesses may generate informed suggestions and contextualize vital data using machine learning.

Anomaly Detection

Another crucial application of machine learning is anomaly detection, which flags unusual events such as fraud. This technology is especially helpful for businesses that lack the resources or expertise to build their own anomaly detection systems.
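A minimal sketch of the idea, assuming a simple z-score rule (flag values far from the mean in standard-deviation units). Production fraud systems use far richer models; the transaction amounts here are hypothetical.

```python
import statistics


def find_anomalies(values, threshold=2.0):
    """Return indices of values whose z-score exceeds `threshold`."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]


transactions = [20, 22, 19, 21, 23, 20, 500]  # hypothetical purchase amounts
print(find_anomalies(transactions))  # [6]
```

The threshold matters: with only seven samples, no z-score can exceed about 2.45, so the common textbook cutoff of 3 would flag nothing here. Robust alternatives (for example, median-based scores) avoid letting the outlier inflate the standard deviation.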

Examining and Comprehending Datasets

Machine learning offers an alternative to searching and understanding datasets manually: algorithms trained on millions of examples can convert plain-text searches into SQL queries. Regression analysis is used to determine correlations between variables, such as how different product attributes or advertising channels affect sales and customer satisfaction.
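For the regression part, the simplest case is fitting a straight line by ordinary least squares. A sketch with hypothetical advertising-spend and sales figures:

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length (at least 2)")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


ad_spend = [1, 2, 3, 4]  # hypothetical spend per channel, in $1k
sales = [3, 5, 7, 9]     # hypothetical resulting sales, in $1k
print(fit_line(ad_spend, sales))  # (2.0, 1.0)
```

The slope reads as "each extra $1k of spend is associated with about $2k more sales" in this toy data; association, not proof of cause.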

Image Recognition

Image recognition is an area of machine learning that is especially useful for mobile apps, security, and healthcare, and some companies have used it to build lucrative mobile applications. Businesses also use recommendation engines to suggest music or products to consumers.
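Recommendation engines come in many forms. A minimal sketch of one classic approach, user-based collaborative filtering with cosine similarity: find the user whose ratings look most like yours and suggest what they liked that you haven't tried. The users, items, and ratings are hypothetical.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(ratings, user):
    """Suggest items rated by the most similar other user but unrated by `user`."""
    items = sorted({item for r in ratings.values() for item in r})

    def vector(name):
        # Unrated items count as 0 in the rating vector.
        return [ratings[name].get(item, 0) for item in items]

    target = vector(user)
    others = [name for name in ratings if name != user]
    nearest = max(others, key=lambda name: cosine_similarity(target, vector(name)))
    unseen = {item: score for item, score in ratings[nearest].items() if item not in ratings[user]}
    return sorted(unseen, key=unseen.get, reverse=True)


ratings = {
    "ana": {"jazz_album": 5, "rock_album": 1, "headphones": 4},
    "ben": {"jazz_album": 4, "rock_album": 2, "headphones": 5, "vinyl_player": 5},
    "cai": {"jazz_album": 1, "rock_album": 5, "guitar_strings": 4},
}
print(recommend(ratings, "ana"))  # ['vinyl_player']
```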

Your understanding of AI may shift drastically. Many people used to believe AI was affordable only for large corporations. Thanks to these services, however, anyone can now use this technology.


🤖 Artificial Intelligence (AI): What It Is and How It Works

Artificial Intelligence (AI) is transforming the way we live, work, and interact with technology. Let's break down what AI is and how it works. 🌐

What Is AI?

AI refers to the simulation of human intelligence in machines designed to think and learn like humans. These intelligent systems can perform tasks that typically require human intelligence, such as recognizing speech, making decisions, and translating languages.

How AI Works:

Data Collection 📊 AI systems need data to learn and make decisions. This data can come from various sources, including text, images, audio, and video. In general, more high-quality, representative data helps an AI system learn and perform better.

Machine Learning Algorithms 🤖 AI relies on machine learning algorithms to process data and learn from it. These algorithms identify patterns and relationships within the data, allowing the AI system to make predictions or decisions.

Training and Testing 📚 AI models are trained using large datasets to recognize patterns and make accurate predictions. After training, these models are tested with new data to ensure they perform correctly.

Neural Networks 🧠 Neural networks are a key component of AI, modeled after the human brain. They consist of layers of interconnected nodes (neurons) that process information. Deep learning, a subset of machine learning, uses neural networks with many layers (deep neural networks) to analyze complex data.

Natural Language Processing (NLP) 🗣 NLP enables AI to understand and interact with human language. It’s used in applications like chatbots, language translation, and sentiment analysis.

Computer Vision 👀 Computer vision allows AI to interpret and understand visual information from the world, such as recognizing objects in images and videos.

Decision Making and Automation 🧩 AI systems use the insights gained from data analysis to make decisions and automate tasks. This capability is used in various industries, from healthcare to finance, to improve efficiency and accuracy.
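The data, algorithm, training, and testing steps above can be seen together in a tiny example: a single artificial neuron (equivalent to logistic regression), the building block that neural networks stack into layers. This is a sketch under stated assumptions: the dataset is synthetic (label 1 when x1 + x2 > 1), and the learning rate and epoch count are arbitrary choices.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_neuron(data, epochs=1000, lr=0.5):
    """Stochastic gradient descent on log loss for one neuron."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            error = p - y  # gradient of log loss w.r.t. the pre-activation
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
            b -= lr * error
    return w, b


def predict(w, b, x):
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


def accuracy(w, b, data):
    return sum(predict(w, b, x) == y for x, y in data) / len(data)


# Data collection (synthetic): 100 random points, labeled by a hidden rule.
rng = random.Random(0)
points = [(rng.random(), rng.random()) for _ in range(100)]
data = [((x1, x2), int(x1 + x2 > 1)) for x1, x2 in points]

# Training on 80 points, then testing on 20 points the model never saw.
train, test = data[:80], data[80:]
w, b = train_neuron(train)
print("train accuracy:", accuracy(w, b, train))
print("test accuracy:", accuracy(w, b, test))
```

Holding out the test set is what shows whether the model learned the pattern rather than memorizing the training points.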

Applications of AI:

Healthcare 🏥: AI aids in diagnosing diseases, personalizing treatment plans, and predicting patient outcomes.

Finance 💰: AI enhances fraud detection, automates trading, and improves customer service.

Retail 🛍: AI powers recommendation systems, optimizes inventory management, and personalizes shopping experiences.

Transportation 🚗: AI drives advancements in autonomous vehicles, route optimization, and traffic management.

AI is revolutionizing multiple sectors by enhancing efficiency, accuracy, and decision-making. As AI technology continues to evolve, its impact on our daily lives will only grow, opening up new possibilities and transforming industries.

Stay ahead of the curve with the latest AI insights and trends! 🚀 #ArtificialIntelligence #MachineLearning #Technology #Innovation #AI


At 8:22 am on December 4 last year, a car traveling down a small residential road in Alabama used its license-plate-reading cameras to take photos of vehicles it passed. One image, which does not contain a vehicle or a license plate, shows a bright red “Trump” campaign sign placed in front of someone’s garage. In the background is a banner referencing Israel, a holly wreath, and a festive inflatable snowman.

Another image taken on a different day by a different vehicle shows a “Steelworkers for Harris-Walz” sign stuck in the lawn in front of someone’s home. A construction worker, with his face unblurred, is pictured near another Harris sign. Other photos show Trump and Biden (including “Fuck Biden”) bumper stickers on the back of trucks and cars across America. One photo, taken in November 2023, shows a partially torn bumper sticker supporting the Obama-Biden lineup.

These images were generated by AI-powered cameras mounted on cars and trucks, initially designed to capture license plates, but which are now photographing political lawn signs outside private homes, individuals wearing T-shirts with text, and vehicles displaying pro-abortion bumper stickers—all while recording the precise locations of these observations. Newly obtained data reviewed by WIRED shows how a tool originally intended for traffic enforcement has evolved into a system capable of monitoring speech protected by the US Constitution.

The detailed photographs all surfaced in search results produced by the systems of DRN Data, a license-plate-recognition (LPR) company owned by Motorola Solutions. The LPR system can be used by private investigators, repossession agents, and insurance companies; a related Motorola business, called Vigilant, gives cops access to the same LPR data.

However, files shared with WIRED by artist Julia Weist, who is documenting restricted datasets as part of her work, show how those with access to the LPR system can search for common phrases or names, such as those of politicians, and be served with photographs where the search term is present, even if it is not displayed on license plates.

A search result for the license plates from Delaware vehicles with the text “Trump” returned more than 150 images showing people’s homes and bumper stickers. Each search result includes the date, time, and exact location of where a photograph was taken.

“I searched for the word ‘believe,’ and that is all lawn signs. There’s things just painted on planters on the side of the road, and then someone wearing a sweatshirt that says ‘Believe.’” Weist says. “I did a search for the word ‘lost,’ and it found the flyers that people put up for lost dogs and cats.”

Beyond highlighting the far-reaching nature of LPR technology, which has collected billions of images of license plates, the research also shows how people’s personal political views and their homes can be recorded into vast databases that can be queried.

“It really reveals the extent to which surveillance is happening on a mass scale in the quiet streets of America,” says Jay Stanley, a senior policy analyst at the American Civil Liberties Union. “That surveillance is not limited just to license plates, but also to a lot of other potentially very revealing information about people.”

DRN, in a statement issued to WIRED, said it complies with “all applicable laws and regulations.”

Billions of Photos

License-plate-recognition systems, broadly, work by first capturing an image of a vehicle; then they use optical character recognition (OCR) technology to identify and extract the text from the vehicle's license plate within the captured image. Motorola-owned DRN sells multiple license-plate-recognition cameras: a fixed camera that can be placed near roads, identify a vehicle’s make and model, and capture images of vehicles traveling up to 150 mph; a “quick deploy” camera that can be attached to buildings and monitor vehicles at properties; and mobile cameras that can be placed on dashboards or be mounted to vehicles and capture images when they are driven around.
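The article's findings hinge on this OCR step: if text is extracted from everything in a frame rather than only from the plate, a full-text search will also match lawn signs and shirts. The sketch below illustrates that effect and the kind of filtering Weist suggests. It is purely hypothetical: the `Capture` record, the plate-shaped pattern, and the sample data are illustrative assumptions, not how DRN's system works.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical "plate-shaped" text: 2-8 uppercase letters/digits with at least one digit.
# Real US plate formats vary by state, and all-letter vanity plates would be dropped.
PLATE_PATTERN = re.compile(r"(?=.*\d)[A-Z0-9]{2,8}")


@dataclass
class Capture:
    capture_id: int
    ocr_text: List[str]  # every text string OCR found anywhere in the frame


def search(captures, term):
    """Return IDs of captures whose OCR text contains `term` (case-insensitive)."""
    term = term.upper()
    return [c.capture_id for c in captures if any(term in t.upper() for t in c.ocr_text)]


def plate_only(captures):
    """Keep only OCR strings that look like license plates."""
    return [Capture(c.capture_id, [t for t in c.ocr_text if PLATE_PATTERN.fullmatch(t)])
            for c in captures]


captures = [
    Capture(1, ["ABC1234"]),          # a plate
    Capture(2, ["TRUMP", "2024"]),    # a lawn sign, no vehicle
    Capture(3, ["BELIEVE"]),          # a sweatshirt
]
print(search(captures, "trump"))              # [2]
print(search(plate_only(captures), "trump"))  # []
```

Even this crude filter has gaps: the sign's "2024" still passes as plate-shaped, which hints at why filtering non-plate images reliably is harder than it sounds.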

Over more than a decade, DRN has amassed more than 15 billion “vehicle sightings” across the United States, and it claims in its marketing materials that it amasses more than 250 million sightings per month. Images in DRN’s commercial database are shared with police using its Vigilant system, but images captured by law enforcement are not shared back into the wider database.

The system is partly fueled by DRN “affiliates” who install cameras in their vehicles, such as repossession trucks, and capture license plates as they drive around. Each vehicle can have up to four cameras attached to it, capturing images from all angles. These affiliates earn monthly bonuses and can also receive free cameras and search credits.

In 2022, Weist became a certified private investigator in New York State. In doing so, she unlocked the ability to access the vast array of surveillance software accessible to PIs. Weist could access DRN’s analytics system, DRNsights, as part of a package through investigations company IRBsearch. (After Weist published an op-ed detailing her work, IRBsearch conducted an audit of her account and discontinued it. The company did not respond to WIRED’s request for comment.)

“There is a difference between tools that are publicly accessible, like Google Street View, and things that are searchable,” Weist says. While conducting her work, Weist ran multiple searches for words and popular terms, which found results far beyond license plates. In data she shared with WIRED, a search for “Planned Parenthood,” for instance, returned stickers on cars, on bumpers, and in windows, both for and against the reproductive health services organization. Civil liberties groups have already raised concerns about how license-plate-reader data could be weaponized against those seeking abortion.

Weist says she is concerned with how the search tools could be misused when there is increasing political violence and divisiveness in society. While not linked to license plate data, one law enforcement official in Ohio recently said people should “write down” the addresses of people who display yard signs supporting Vice President Kamala Harris, the 2024 Democratic presidential nominee, exemplifying how a searchable database of citizens’ political affiliations could be abused.

A 2016 report by the Associated Press revealed widespread misuse of confidential law enforcement databases by police officers nationwide. In 2022, WIRED revealed that hundreds of US Immigration and Customs Enforcement employees and contractors were investigated for abusing similar databases, including LPR systems. The alleged misconduct in both reports ranged from stalking and harassment to sharing information with criminals.

While people place signs in their lawns or bumper stickers on their cars to inform people of their views and potentially to influence those around them, the ACLU’s Stanley says it is intended for “human-scale visibility,” not that of machines. “Perhaps they want to express themselves in their communities, to their neighbors, but they don't necessarily want to be logged into a nationwide database that’s accessible to police authorities,” Stanley says.

Weist says the system, at the very least, should be able to filter out images that do not contain license plate data and not make mistakes. “Any number of times is too many times, especially when it's finding stuff like what people are wearing or lawn signs,” Weist says.

“License plate recognition (LPR) technology supports public safety and community services, from helping to find abducted children and stolen vehicles to automating toll collection and lowering insurance premiums by mitigating insurance fraud,” Jeremiah Wheeler, the president of DRN, says in a statement.

Weist believes that, given the relatively small number of images showing bumper stickers compared to the large number of vehicles with them, Motorola Solutions may be attempting to filter out images containing bumper stickers or other text.

Wheeler did not respond to WIRED's questions about whether there are limits on what can be searched in license plate databases, why images of homes with lawn signs but no vehicles in sight appeared in search results, or if filters are used to reduce such images.

“DRNsights complies with all applicable laws and regulations,” Wheeler says. “The DRNsights tool allows authorized parties to access license plate information and associated vehicle information that is captured in public locations and visible to all. Access is restricted to customers with certain permissible purposes under the law, and those in breach have their access revoked.”

AI Everywhere

License-plate-recognition systems have flourished in recent years as cameras have become smaller and machine-learning algorithms have improved. These systems, such as DRN and rival Flock, mark part of a change in the way people are surveilled as they move around cities and neighborhoods.