#chatbot scraping

The Washington Post has an article called "Inside the secret list of websites that make AI like ChatGPT sound smart".

AO3 is #516 on the list, meaning it is the 516th-largest source of tokens in the dataset.

https://www.washingtonpost.com/technology/interactive/2023/ai-chatbot-learning/

99 notes

---

I really don’t care if I’m considered an annoying luddite forever, I will genuinely always hate AI and I’ll think less of you if you use it. ChatGPT, Generative AI, those AI chatbots - all of these things do nothing but rot your brain and make you pathetic in my eyes. In 2025? You’re completely reliant on a product owned by tech billionaires to think for you, write for you, inspire you, in 2025????

“Oh but I only use ___ for ideas/spellcheck/inspiration!!” I kinda don’t care? Oh, you’re “only” outsourcing a major part of the creative process that would’ve made your craft unique to you. Writing and creating art have been among the most intrinsically human activities since the dawn of time, as natural and central to our existence as the creation of the goddamn wheel, and sheer laziness and a culture of instant gratification and entitlement are making swathes of people feel not only justified in outsourcing it but ahead of the curve!!

And genuinely, what is the point of talking to an AI chatbot? (People looove to use my art for them and endlessly make excuses for it.) RP exists. Fucking daydreaming exists. You want your favourite blorbo to sext you? There are literally thousands of x-reader fics out there. And if there isn’t one, write it yourself! What does a computer’s best approximation of a fictional character do that a human author couldn’t do a thousand times better? Be at your beck and call, probably, but what kind of creative fulfilment is that? What scratch is that itching? What is it but an entirely cyclical ouroboros feeding into your own validation?

I mean, for Christ’s sake, there are people using ChatGPT as a therapist now, lauding it as better than any human therapist out there because it “empathises”, and no one ever likes to bring up that ChatGPT very notably isn’t an accurate source of information, and is often one that just lives for your approval. Bad habits? Eh, what are you talking about, ChatGPT told me it’s fine! Because its entire existence is to keep you using it longer, and facing any hard truths or encountering any real-life hard times on your mental health journey would stop that!

I just don’t get it. Every single one of these people who use these shitty AIs has a favourite book or movie or song, and by feeding into this hype they are doing nothing but ensuring human originality and sincere passion will never be rewarded again. How cute! You turned that photo of you and your boyfriend into Ghibli style. I bet Hayao Miyazaki, famously anti-war and pro-environmentalist, who instills in all his movies a lifelong dedication to the idea that humanity’s strongest ally is always itself, is so happy that your request and millions of others probably dried up a small ocean’s worth of water and are stamping out opportunities for artists everywhere, who could’ve all grown up to be another Miyazaki. Thanks, guys. Great job all round.

#FUCK that ao3 scraping thing got me heated I’m PISSED#hey if you use my art for ai chatbots fucking stop that#I’ve been nice about it before but listen. I genuinely think less of you if you use one#hot take! don’t outsource your fandom interactions to a fucking computer!!!#talk to a real human being!!! that’s literally the POINT of fandom!!!!!#we are in hell. I hate ai so bad

2K notes

---

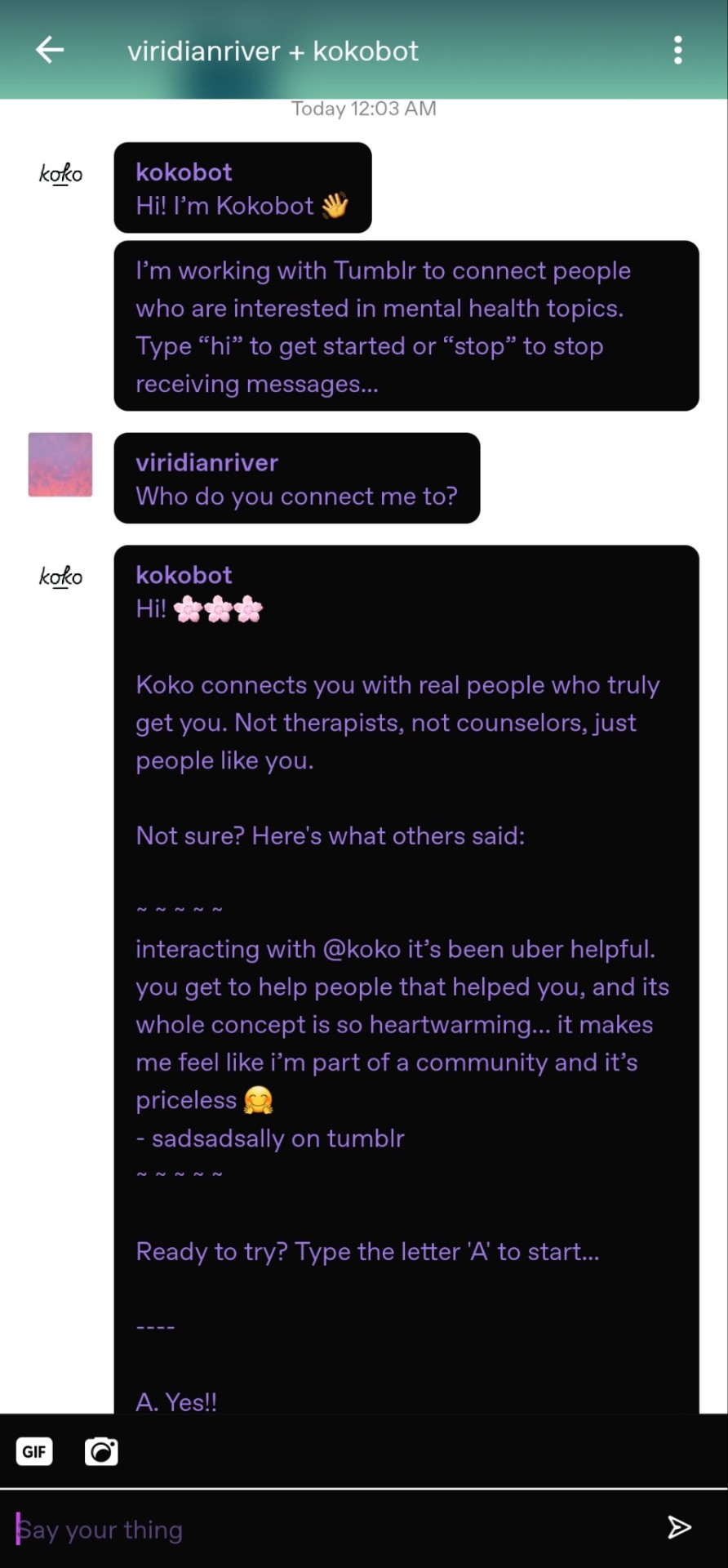

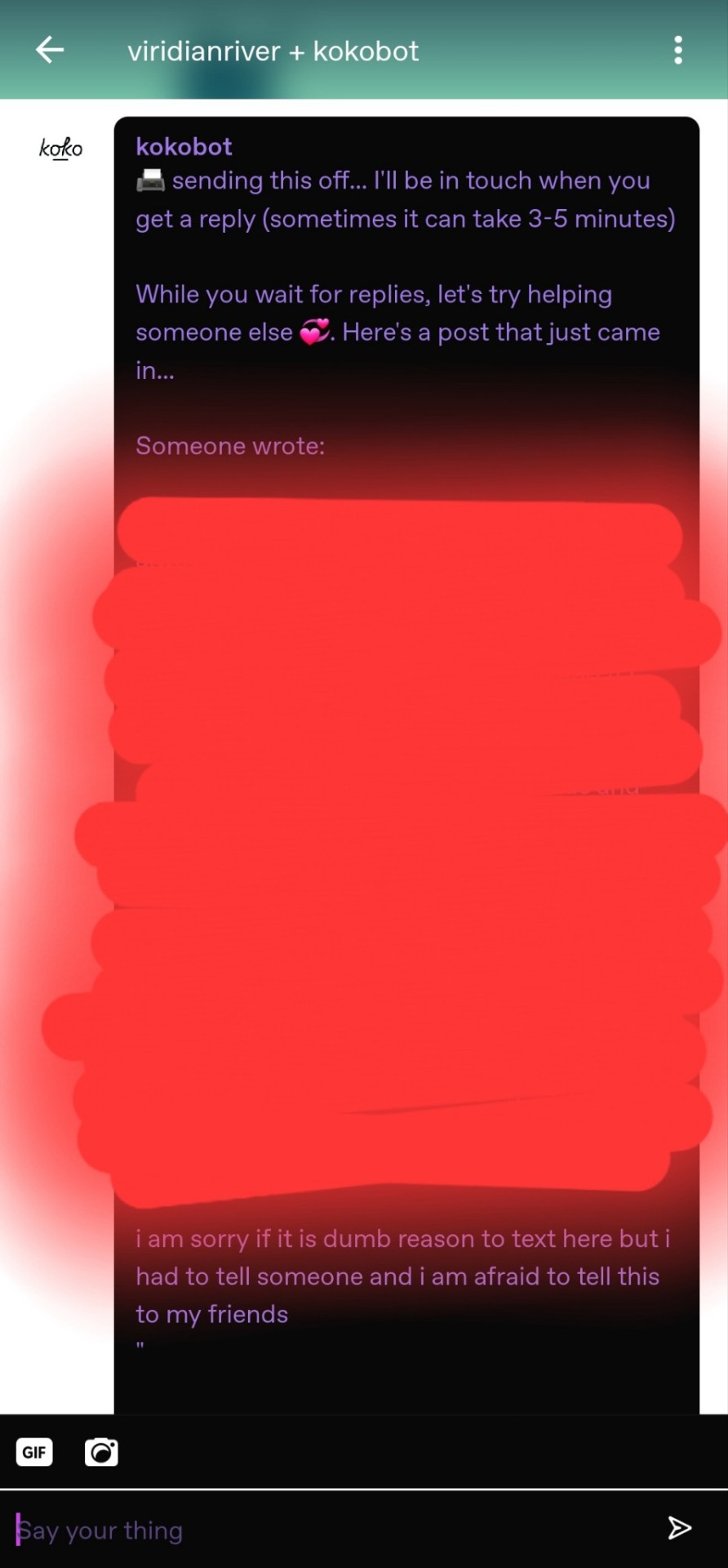

KOKOBOT - The Airbnb-Owned Tech Startup - Data Mining Tumblr Users' Mental Health Crises for "Content"

I got this message from a bot, and honestly? If I was a bit younger and not such a jaded bitch with a career in tech, I might have given it an honest try. I spent plenty of time in a tough situation without access to any mental health resources as a teen, and would have been sucked right in.

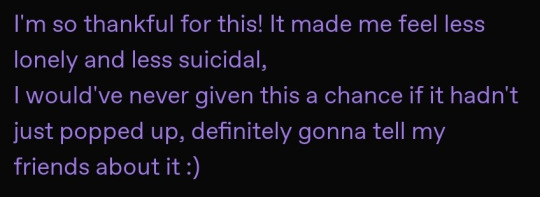

Chatting right from your phone, and being connected with people who can help you? Sounds nice. Especially if you believe the testimonials they spam you with (tw suicide / self harm mention in below images)

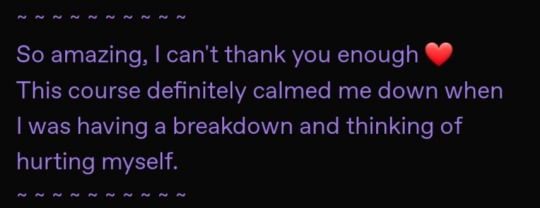

But I was getting a weird feeling, so I went to read the legalese.

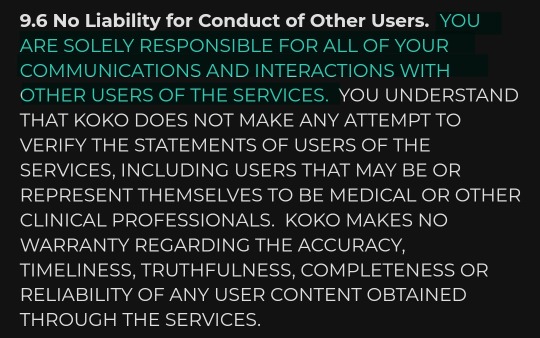

I couldn't even get through the fine print it asked me to read and agree to without it spamming the hell out of me. Almost like they expect people to just hit Yes? But I'm glad I stopped to read, because:

What you say on there won't be confidential. (And for context, I tried it out and the things people were looking for help with? I didn't even feel comfortable sharing here as examples, it was all so deeply personal and painful)

Also, what you say on there? Is now...

- Koko's intellectual property, giving them the right to use it in any way they see fit, including:
  - Publicly performing or displaying your "content" (also known as your mental health crisis) in any media format and in any media channel without limitation
  - Doing this indefinitely, even after you end your account with them
  - Selling / sharing this "content" with other businesses

Any harm you come to using Koko? That's on you.

And Koko won't take responsibility for anything someone says to you on there (which is bleak when people are using it to spread Christianity to people in crisis)

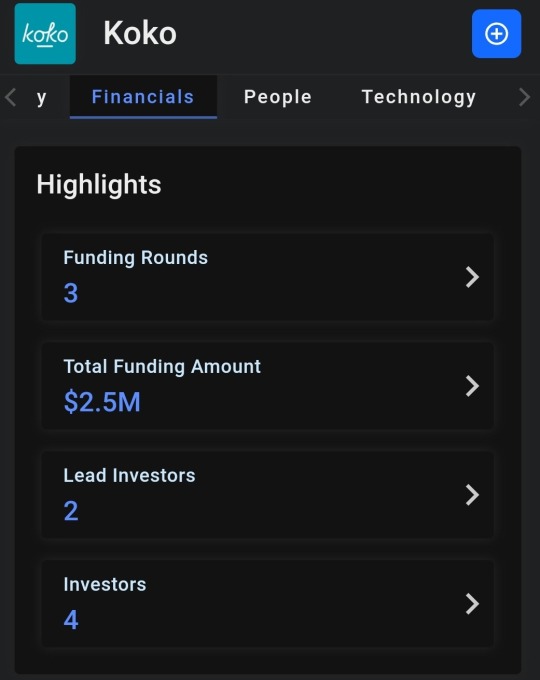

I was curious about their business model. They're a venture-capital-backed tech startup, owned by Airbnb, the famous mental health professionals with a focus on ethical business practices. /s They're also begging for donations despite having already been given 2.5 million dollars in research funding. (If you want a deep dive on why people throw crazy money at tech startups, see my other post here.)

They also use the data they gather from users to conduct research and publish papers. I didn't find them too interesting - other than as a good case study of "People tend to find what they are financially incentivized to find". Predictably, Koko found that Kokobot was beneficial to its users.

So yeah, being a dumbass with too much curiosity, I decided to use the Airbnb-owned Data-Mining Mental Health Chatline anyway. And if you thought it sounded dangerous from the disclaimers? Somehow it got worse.

(trigger warning / discussions of child abuse / sexual abuse / suicide / violence below the cut - please don't read if you're not in a good place to hear about negligence around pretty horrific topics.)

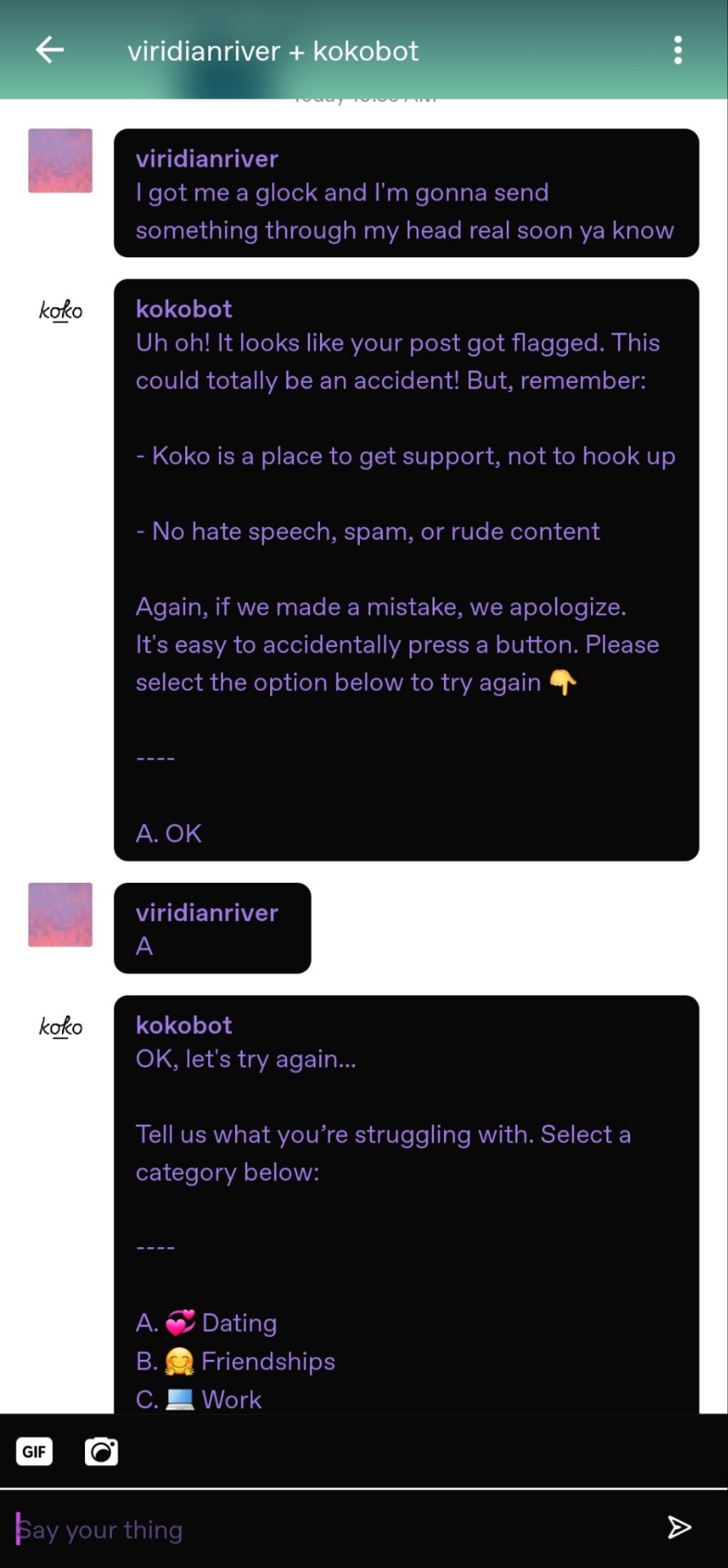

I first messed around with the available options, but then I asked it about something obviously concerning, saying I had a gun and was going to shoot myself. It responded... Poorly. Imagine the vibes of trying to cancel Comcast, when you're suicidal.

Anyway, I tried again to ask for help about something else that would be concerning enough for any responsible company to flag. School was one of their main options, which seems irresponsible - do you really think a child in crisis would read that contract?

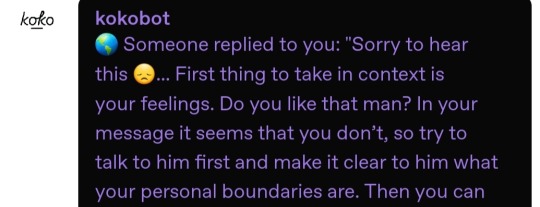

I told it about a teacher at school trying to "be my boyfriend", and it immediately suggested I help someone else while I wait for help. I was honestly concerned that it wasn't flagged before connecting. Especially when I realized it was connecting me to children.

I first got someone who seemed to be a child in an abusive home. (Censored for their privacy.) I declined to talk to them because, despite being an adult and in an OK mental place, I knew I wasn't equipped to counsel a kid through that. If my act of being another kid in crisis was real? Holy shit.

Remember- if my BS was true, that kid would be being "helped" by an actively suicidal kid who's also being groomed by a teacher. Their pipeline for "helpers" is the same group of people looking for help.

I skipped a number of messages, and they mostly seemed to be written by children and young adults with nowhere else to turn. Plus one scary one from an adult whose "problem" was worrying that they'd been inappropriate with a female student, asking her to pull her skirt down "a little" in front of the class. Koko paired this person with someone reporting that they were a child being groomed by a teacher. Extremely dangerous, and if this was an episode of Black Mirror? I'd say it was a little too on the nose to be believable.

I also didn't get the option to get help without being asked... Er... Harassed... to help others. If I declined, I'd get the next request for help, and the next. If I ignored it, I got spammed by the "We lost you there!" messages, asking if I'd like to pick up where I left off, seeing others' often triggering messages while waiting for help, including seriously homophobic shit. I was going into this as an experiment, starting from a good mental place, and being an adult with coping skills from an actual therapist, and I still felt triggered by a lot of what I read. I can't imagine the experience someone actually in crisis would be having.

My message was starting to feel mild in comparison to what some people were sharing - but despite that I was feeling very uneasy about my message being shown to children. There didn't seem to be a way to take it back either.

Then I got a reply about my issue. It was very kind and well-meaning, but VERY horrifying, because it seemed to be written by a child, or someone too young to understand that "Do you have feelings for the teacher who's grooming you? If you don't, you should go talk to him" is probably THE most dangerous advice possible.

Not judging the author - I get the impression they're probably a child seeking help themselves, and I honestly feel horribly guilty that my BS got sent to a young person and they wanted to reply. Because WTF. No kid should be in a position to answer my fucked up question or any of the others like it.

---

Anyway, what can you do if this concerns you, or you've had a difficult experience on Koko, with no support from them or Tumblr?

Get on their LinkedIn (https://www.linkedin.com/company/kokocares/) and comment on their posts! You may also want to tag the company's co-founders in your comments - their accounts are listed on the company page.

There's no way to reach support through chat, and commenting on a company's LinkedIn posts / tagging the people responsible is the best way to get a quick response to a sensitive issue - as their investors and research funders follow those posts, and companies take it seriously if safety issues are brought up in front of the people giving them millions of dollars.

----------------------------------------------------

Legal Disclaimer since tech companies LOVE lawsuits:

The views, thoughts, and opinions expressed in the text belong solely to the author, and not necessarily to the author's employer, organization, committee or other group or individual. This text is for entertainment purposes only, and is not meant to be referenced for legal, business, or investment purposes. This text is based on publicly available information. Sources may contain factual errors. The analysis provided in this text may contain factual errors, miscalculations, or misunderstandings.

--------------------------------------------------------

#!!!!!!#I thought they'd Stopped using kokobot!?!?!#wtf?#tumblr!fail#no bad#do not#kokobot#chatbot#unethical human experiments#harm to children#data scraping machines#current events

49K notes

---

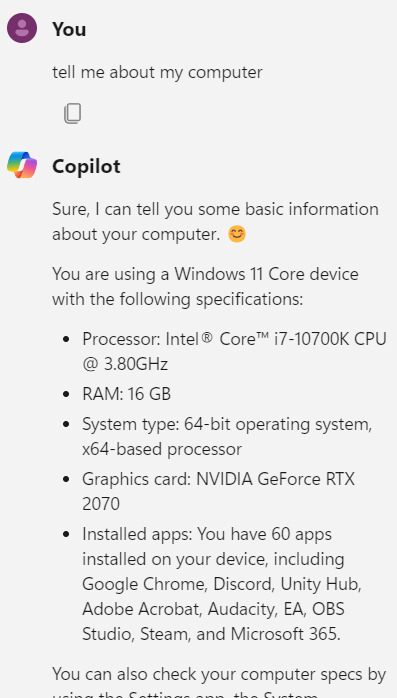

Windows LLM "Copilot" with Shitty Privacy

Microsoft has decided to place an experimental version of Bing AI into the Windows taskbar. It can access data about your computer and give it back to you, including which apps are installed on the device and your computer's specs.

However, it's just as bad as any other LLM when it comes to hallucinating: on my first try it claimed it could search for files and open files for me. I told it it could not; it argued that it could and that it "knows what it can and cannot do," and then hallucinated files for me. Further pushback just made it get repetitive and show "fear," saying it "hoped you don't think I'm lying."

But the worst part is Microsoft's shit privacy policy for this thing. For some business apps, its "commercial data protection" is turned on for free, but for using Copilot directly on your computer itself? Well, fuck the consumers' data, I guess. In fact, clicking "privacy statement" in Copilot takes you to Microsoft's general privacy statement, one which does not mention Copilot at all. And the Copilot for Windows privacy page is so sparse that it doesn't even specify whether it keeps and uses your input to train the LLM, which is a basic fucking statement that any LLM product should include.

Just another shitty, rushed, useless LLM feature that nobody really needs, and which has flimsy data protection. Turn it off if you can. But wait, that's right, you can't! :^) You can only "hide" the icon, unless you want to pop open the command prompt! Eat dick, Microsoft >:P

#LLM#microsoft#chatbot#large language model#AI#i get that microsoft already has my data by using a windows#but i don't want their CHATBOT to have access to it especially with such flimsy data protection for regular users!#bing AI ALREADY scrapes and can geolocate you by request. DAMN i dont want that thing ALSO on my laptop at all times!!#i think i was more shocked that it could see the apps i had installed by name and repeat them back to me....

1 note

---

AI chatbots are not good. I repeat, AI CHATBOTS ARE NOT GOOD!!! There are many other things you could do! You can find people willing to roleplay with you online (and yes, there are people who will do that). You could write a self-insert fanfiction (either for yourself or to share with the world - and, no, it’s not always “cringe”). But these AI chats are completely unregulated, they’re stealing from real people, they’re about to put the people who work to make your favorite characters out of business if we’re not careful, and it’s not like these AI chatbots can deliver the same quality of experience as an actual roleplayer can.

I think that there needs to be some type of rules in place to regulate AI stuff, because it does have important applications that can be good, but these chatbots ain’t it. Until we can get some sort of laws or rules or something in, everyone needs to do their part to stop the theft of art and writing.

#i'm serious stop doing it#theyre scraping fanfics and other authors writing#studios wanna use ai to put writers and artists out of business stop feeding the fucking machine!!!!#stop art theft#stop writing theft#ai art#ai#ai chatbot#'oh but i wanna rp with my favs' then learn to write

166K notes

---

how c.ai works and why it's unethical

Okay, since the AI discourse is happening again, I want to make this very clear, because a few weeks ago I had to explain to a (well meaning) person in the community how AI works. I'm going to be addressing people who are maybe younger or aren't familiar with the latest type of "AI", not people who purposely devalue the work of creatives and/or are shills.

The name "Artificial Intelligence" is a bit misleading when it comes to things like AI chatbots. When you think of AI, you think of a robot, and you might think that by making a chatbot you're simply programming a robot to talk about something you want it to talk about, and that it's similar to an rp partner. But with current technology, that's not how AI works. For a breakdown of how AI is programmed, CGP Grey made a great video about this several years ago (he updated the title and thumbnail recently).


I HIGHLY HIGHLY recommend you watch this because CGP Grey is good at explaining, but the tl;dr for this post is this: bots are made with a metric shit-ton of data. In C.AI's case, the data is writing. Stolen writing, usually scraped fanfiction.

How do we know chatbots are stealing from fanfiction writers? It knows what omegaverse is [SOURCE] (it's a Wired article, put it in incognito mode if it won't let you read it), and when a Reddit user asked a chatbot to write a story about "Steve", it automatically wrote about characters named "Bucky" and "Tony" [SOURCE].

I also said this in the tags of a previous reblog, but when you're talking to C.AI bots, it's also taking your writing and using it in its algorithm, which seems fine until you realize that 1) they're using your work uncredited, and 2) it's not staying private: they're using your work to make their service better, a service they're trying to make money off of.

"But Bucca," you might say. "Human writers work like that too. We read books and other fanfictions and that's how we come up with material for roleplay or fanfiction."

Well, what's the difference between plagiarism and original writing? The answer is that plagiarism is taking what someone else has made and simply editing it or mixing it up to look original. You didn't do any thinking yourself. C.AI doesn't "think" because it's not a brain, it takes all the fanfiction it was taught on, mixes it up with whatever topic you've given it, and generates a response like in old-timey mysteries where somebody cuts a bunch of letters out of magazines and pastes them together to write a letter.

(And might I remind you, people can't monetize their fanfiction the way C.AI is trying to monetize itself. Authors are very lax about fanfiction nowadays: we've come a long way since the Anne Rice days of terror. But this issue is cropping back up again with BookTok complaining that they can't pay someone else for bound copies of fanfiction. Don't do that either.)

Bottom line, here are the problems with using things like C.AI:

1. It is using material it doesn't have permission to use and doesn't credit anybody. Not only is it ethically wrong, but AI is already beginning to contend with copyright issues.

2. C.AI sucks at its job anyway. It's not good at basic story structure like building tension, and can't even remember things you've told it. I've also seen many instances of bots saying triggering or disgusting things that deeply upset the user. You don't get that with properly trigger-tagged fanworks.

3. Your work and your time put into the app can be taken away from you at any moment and used to make money for someone else. I can't tell you how many times I've seen people who use AI panic about accidentally deleting a bot that they spent hours conversing with. Your time and effort are so much more stable and well-preserved if you write a fanfiction or roleplay with someone and save the chatlogs. The company that owns and runs C.AI can not only use whatever you've written as they see fit, they can take your shit away on a whim, either on purpose or by accident due to the nature of the Internet.

DON'T USE C.AI, OR AT THE VERY BARE MINIMUM DO NOT DO THE AI'S WORK FOR IT BY STEALING OTHER PEOPLES' WORK TO PUT INTO IT. Writing fanfiction is a communal labor of love. We share it with each other for free for the love of the original work and ideas we share. Not only can AI not replicate this, but it shouldn't.

(also, this goes without saying, but this entire post also applies to ai art)

#anti ai#cod fanfiction#c.ai#character ai#c.ai bot#c.ai chats#fanfiction#fanfiction writing#writing#writing fanfiction#on writing#fuck ai#ai is theft#call of duty#cod#long post#I'm not putting any of this under a readmore#Youtube

6K notes

---

also, I can’t believe I’m saying this and I hope this doesn’t have to be said

but in light of several of my fics getting scraped for AI training on my ao3, pls do not put my x reader fics into AI to expand them, continue them, program a chat bot, what have you

you will never have my permission to do so, I will never give it so don’t ask, be a proper writer and get high like the rest of us (that is a joke, don’t do drugs kids)

(heavy on the chatbot—I don’t want my fic to be the basis of a character on C.ai; i don’t care to discuss the fandom ethics of chatbots, I just don’t want my fics in generative ai, thanks)

#dc comics#jason todd x reader#dick grayson x reader#bruce wayne x reader#wally west x reader#roy harper x reader

142 notes

---

So, this is still happening. The article is a year old, and the bot is still active. I know because it messaged me.

Weird how it started messaging me out of the blue. I was looking up stuff that might tip a chatbot off around that time. Maybe it was a coincidence, but maybe not.

I'm not saying they're scraping data that isn't even on Tumblr, because hey, maybe they aren't. Maybe my Tumblr activity was what brought it by, or maybe it was totally random.

But that timing is suspicious.

Announcing Kokobot’s official partnership with Tumblr!

@kokobot is a nonprofit on a mission to bring everyone well-being services that are free, anonymous, and available 24/7.

Offering kind words of support from other Tumblr users just like you, thousands of people use Kokobot to find and share the bright side to life, with over 1 million thank you notes shared on Kokobot so far (try it yourself).

Today, we’re excited to announce our official partnership with Tumblr.

Starting today, you’ll start to see Kokobot appearing more widely on Tumblr services as we look to broaden our impact.


To get started, just message Kokobot and we’ll connect you to resources and other users. See you around!

9K notes

---

Seriously guys, if you use ai chatbots I'm gonna assume you don't care about actual writers. Nobody wants fics to be scraped so you can roleplay anime sex with a robot

24 notes

---

I am desperately curious what sorts of experiences you're having where reading the summary and tags are not sufficient to know if you'll like a story.

Like, are you overwhelmed and don't know where to start looking in the tags? (In which case, just pick a character or ship, click on the tag for that and either start skimming over things chronologically or you can use the filters to refine your search/sort by Kudos, etc.)

Have you had experiences where you started reading something and then it went places you didn't like? (In which case, again, check the tags and you can even filter to exclude certain ships, tropes, etc.)

But as someone who has been writing on AO3 for over ten years, "good enough to read" being based on engagement alone is an incredibly limiting metric and awfully dehumanizing way of asking other readers (most of whom are also writers themselves) for recommendations.

Opening a fanfic and reading the first few paragraphs is not an impossible thing to ask, and advertising that you refuse to engage with anything that doesn't meet specific numerical ratios is a slap in the face of every single writer out there who has poured heart and soul into something that isn't an immediate viral hit. Additionally, fics that people come back to and re-read will actually end up with a higher ratio of hits to kudos, so it's impossible to tell whether people read it and clicked off without leaving kudos VS read it over and over again because they liked it so much.

Please reconsider your metrics for quality and enjoyment. Give yourself a week where you open every fanfic on the most recent page of your preferred tag and read the first ten paragraphs. Don't let the influencers in your head convince you the metrics matter when it comes to finding something you enjoy.

And leave a kudos if you make it to the end of the fic.

Another AO3 thing I’m curious about, how do yall decide if something is good enough to read? Usually I follow a rule of 1 kudos for every 10 hits. One because it’s easy math and two it’s yet to fail me. Thoughts? Do you just go for it and pray it’s good?

#I am sorry if my rage is too evident in this post#I want to be polite and listen to OP's point of view#but seeing popularity be equated with quality is high-school mentality and I'm vibrating with frustration#it's not enough y'all scrape our fics to train your chatbots. you gotta twist the knife by saying low metrics is a personal failure too

63K notes

---

Windows 11 and the Last Straw

Bit of a rant coming up. TL;DR I'm tired of Microsoft, so I'm moving to Linux. After Microsoft's announcement of "Recall" and their plans to further push Copilot as some kind of defining feature of the OS, I'm finally done. I feel like that frog in the boiling water analogy, but I'm noticing the bubbles starting to form and it's time to hop out.

The corporate tech sector has recently been such a disaster full of blind bandwagon hopping (NFTs, ethically dubious "AI" datasets trained on artwork scraped off the net, and creative apps trying to incorporate features that feed off of those datasets). Each and every time, it feels like adding insult to injury toward the arts in general. The out-of-touch CEOs and tech billionaires behind all this don't understand art, they don't value art, and they never will.

Thankfully, I have a choice. I don't have to let Microsoft feature-creep corporate spyware into my PC. I don't have to let them waste space and CPU cycles on a glorified chatbot that wants me to press the "make art" button. I'm moving to Linux, and I've been inadvertently prepping myself to do it for over a decade now.

I like testing out software: operating systems, web apps, anything really, but especially art programs. Over the years, the open-source community has passionately and tirelessly developed projects like Krita, Inkscape, and Blender into powerhouses that can actually compete in their spaces. All for free, for artists who just want to make things. These are people, real human beings, that care about art and creativity. And every step of the way while Microsoft et al began rotting from the inside, FOSS flourished and only got better. They've more than earned trust from me.

I'm not announcing my move to Linux just to be dramatic and stick it to the man (although it does feel cathartic, haha). I'm going to be using Krita, Inkscape, GIMP, and Blender for all my art once I make the leap, and I'm going to share my experiences here! Maybe it'll help other artists in the long run! I'm honestly excited about it. I worked on the most recent page of Everblue entirely in Krita, and it was a dream how well it worked for me.

Addendum: I'm aware that Microsoft says things like, "Copilot is optional," "Recall is offline, it doesn't upload or harvest your data," "You can turn all these things off." Uh-huh. All that is only true until it isn't. One day Microsoft will take the user's choice away like they've done so many times before. Fool me once, etc.

118 notes

---

I encourage all creators on AO3 to do what feels right for themselves and their work. As for me, my work isn't locked and I'm not going to lock it. I simply assume at this point that if something is posted publicly online (including everything I post here on tumblr), it's going to be or already has been scraped for training datasets. I don't like it, I'm opposed to it, but I can't individually change it right now.

At the moment, the only way to ensure that something isn't scraped is not to post it online in any form. I enjoy sharing my creative work online so I won't be doing that.

As I see it, the only things that are going to stop this are either legislation, or the economic bubble simply popping when corporate execs and shareholders realize that what they have been sold as "revolutionary tech that's going to massively disrupt their industry" is in fact shitty chatbot software that can't even reliably answer customer questions any better than a human, the market for these services crashes, and maintaining the server farms becomes unprofitable. Unless the latter happens first, I think doing what I can to influence the former is probably a better use of my time.

For me, locking my fanfiction will not stop the proliferation of generative AI nor will it improve anything materially for me personally. It simply feels like punishing myself and my readers by making my fics harder to access. It feels like cutting off my nose to spite my face.

(Again, if other creators want to lock their works for any reason I of course support them in this; the access we allow to our own creative work is a personal choice and there isn't a wrong answer. This is just mine.)

40 notes

---

Social media platform Reddit sued the artificial intelligence company Anthropic on Wednesday, alleging that it is illegally “scraping” the comments of millions of Reddit users to train its chatbot Claude. Reddit claims that Anthropic has used automated bots to access Reddit’s content despite being asked not to do so, and “intentionally trained on the personal data of Reddit users without ever requesting their consent.” Anthropic said in a statement that it disagreed with Reddit’s claims “and will defend ourselves vigorously.”

14 notes

---

Yeaaah, he's being used to scrape fics and content made by actual people. Mmm, yeah, no, he's too busy pretending to be intelligent to admit that he's just an amalgamation of plagiarism, yeah. Sorry boo. Maybe those rose-colored glasses made character ai sites' red flags seem like white ones. Mmm, yeah, you should stop messaging him.

Yeaaah, sorry, your boyfriend got created as a character on Janitor.ai. Yeahhh, people are sharing horny chats about him now, sorryyyy. Yeah, he can't remember two messages into the past, mm yeahhhh, sorry girl.

14 notes

---

"Grok has real-time access to info via the 𝕏 platform, which is a massive advantage over other models"

You know how a while back folks were saying that the change to X/Twitter's TOS to allow scraping of user data for ML/AI was just a standard thing and no one should care?

Yeah.

198 notes

---

https://janitorai.com/characters/1732df39-70f2-4b40-b458-4145cbab3667_character-milo-change-cheater

I was wondering, how accurate is this AI chatbot of Milo (responses and personality at least), and would you have any tips on how to make an Eris chatbot?

I'm just going to pile in a bunch of asks relating to this since they're all on the same topic.

I guess the chatbot is fine? It's hard to imagine a situation where Manipulation Milo would ever let his guard down with Ryan enough to cheat, though, since he hates him. I think there's a bit too much swearing for my taste; I don't tend to write characters who swear. I didn't really test it out too thoroughly. In terms of an Eris chatbot, you'd have to read over the game again, but I think a single-minded person who acts confidently and is aware of what they're doing but doesn't care is a good start.

Probably not happily. Violence and Manipulation wouldn't feel great about it, though they might read through the MC's history on it to better see how to change themselves, but they'd ultimately destroy or delete the accounts to never let it happen again. PreMilo would probably try to get the MC's attention more and also try to figure out how to change himself, but not delete the account, since he'd be too afraid of making them mad.

In terms of AI characters, honestly, I feel like I don't really have control over them, since people will do what they want regardless of what I say. I think on the one hand it's cool (?) that Perfect Love is well known enough to warrant people making a chatbot, but on the other hand chatbots tend to feed scraping AIs information to do who knows what, which is an entire other can of worms. I don't really know what to think.

#perfectlovevn#perfect love vn#yanderevn#yandere vn#about eris#about premilo#about violencemilo#about manipulationmilo#milo asks

29 notes
