#chatgpt content detection

Explore tagged Tumblr posts

Text

Outstanding new site created by blog expert reveals the incredible need for the best chatgpt content and detection of that content for ranking.

#how to detect chatgpt plagerism#how to detect ai content#how to detect chatgpt writing#chatgpt content detection#chatgpt human writing checker

0 notes

Text

#This guide outlines how Contentatscale AI Content Detector revolutionizes content management by its advanced capabilities in detecting AI.#Learn more -#https://bizhubit.com/the-ultimate-guide-to-content-management-with-contentatscale-ai-content-detector/#aicontent#marketing#gpt#aicopywritingtool#aitools#rytr#writingtool#productivity#aicopywriting#ai#chatgpt#technology#blogcontent#contentwriting#landingpages#aidesign#artificialintelligence#aiart#seocontent#writersblock#londonmarketing#aiwriter

1 note

Text

Detect AI-generated text for free, the simple way, with high accuracy. AI content check, AI content detection tool, AI essay detector for teachers.

0 notes

Text

An AI Detector is a tool that uses vast datasets of information to determine whether a piece of text is genuinely human-written or if it's AI-generated.

#anti chatgpt detector#chatgpt content detector#chatgpt detection#check for ai generated text#chatgpt zero detector

0 notes

Text

Let's Talk About Ir Abelas, Da'ean

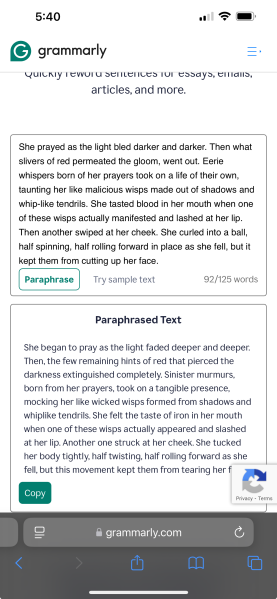

As some of you may know, I am vehemently against the dishonest use of AI in fandom and creative spaces. It has been brought to my attention by many, many people (and something I myself have thought on many times) that there is a DreadRook fic that is super popular and confirmed to be written at least partially with AI. I have the texts to prove it was written (at least) with the help of the Grammarly Rewrite generative feature.

Before I go any further, let it be known I was friends with this author; their use of rewrite features is something they told me and have told many other people who they have shared their fic with. It is not, however, at the time of posting this, tagged or mentioned on their fic on AO3 in any capacity. I did in fact reach out to the author before making this post. They made absolutely no attempt to agree to state the use of Rewrite AI on their fic, nor be honest or upfront (in my opinion) about the possibility of their fic being complete generative AI. They denied the use of generative AI as a whole, though they did confirm (once again) use of the rewrite feature on Grammarly.

That all said: I do not feel comfortable letting this lie; since I have been asked by many people to make this, this post is simply for awareness.

You can form your own opinion, if you wish to. In fact, I encourage you to do such.

Aside from the, once again, high volume word output of around 352K words in less than 3 months (author says they had 10 chapters pre-written over "about a month" before they began posting; they are also on record saying they can write 5K-10K daily) from November until now, I have also said if you are familiar with AI services or peruse AI sites like ChatGPT, C.AI, J.AI, or any others similar to these, AI writing is very easy to pick out.

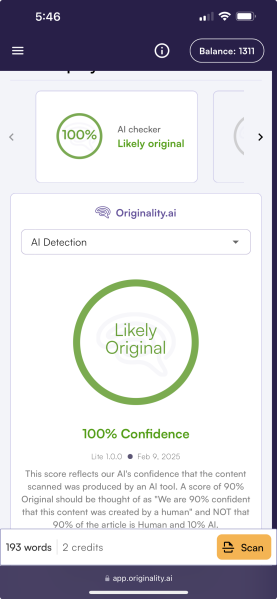

After some intense digging, research, and what I believe to be full confirmation via AI detection software used by professional publishers, there is a large and staggering possibility that the fic is almost entirely AI generated, bar some excerpts and paragraphs, here and there. I will post links below of the highly-resourced detection software that a few paragraphs and an entire chapter from this fic were plugged into; you are more than welcome to do with this information what you please.

I implore you to use critical thinking skills, and understand that when this many pieces in a work come back with such a high percentage of AI detected, that there is something going on. (There was a plethora of other AI detection softwares used that also corroborate these findings; I only find it useful to attach the most reputable source.)

Excerpts:

82% Likely Written by AI, 4% Plagiarism Match

98% Likely Written by AI, 2% Plagiarism Match

100% Likely Written by AI, 4% Plagiarism Match

Some excerpts do in fact come back as 100% likely written by human; however, this does not mean that the author was not using the Grammarly Paraphrase/Rewrite feature for these excerpts.

The Grammarly Paraphrase/Rewrite feature does not typically clock as AI generative text, and alongside the example below, many excerpts from other fics were taken and put through this feature, and then fed back into the AI detection software. Every single one came back looking like this, within 2% of results:

So, in my opinion, and many others, this goes beyond the use of the simple paraphrase/rewrite feature on Grammarly.

Entire Chapter (Most Recent):

67% Likely Written by AI

As well, just for some variety, another detection software that also clocked plagiarism in the text:

15% Plagiarism Match

To make it clear that I am not simply 'jealous' of this author or 'angry' at their work for simply being a popular work in the fandom, here are some excerpts from other fanfics in this fandom and in other fandoms that were run through the exact same detection software, all coming back as 100% human written. (If you would like to run my fic through this software or any others, you are more than welcome to. I do not want to run the risk of OP post manipulation, so I did not include my own.)

The Wolf's Mantle

100% Likely Human Written, 2% Plagiarism Match

A Memory Called Desire

99% Likely Human Written

Brand Loyalty

100% Likely Human Written

Heart of The Sun

98% Likely Human Written

Whether you choose to use AI in your own fandom works is entirely at your own discretion. However, it is important to be transparent about such usage.

AI has many negative impacts for creatives across many mediums, including writers, artists, and voice actors.

If you use AI, it should be tagged as such, so that people who do not want to engage in AI works can avoid engaging with it if they wish to.

ALL LINKS AND PICTURES COURTESY OF: @spiritroses

#ai critical#ai#fandom critical#dreadrook#solrook#rooklas#solas x rook#rook x solas#ir abelas da'ean#ao3#ancient arlathan au#grammarly#chatgpt#originality ai#solas#solas dragon age#rook#da veilguard#veilguard#dragon age veilguard#dragon age#dav#da#dragon age fanfiction#fanfiction#as a full disclaimer: I WILL BE WILLING TO TAKE DOWN THIS POST SO LONG AS THE FIC ENDS UP TAGGED PROPERLY AS AN AI WORK#i tried to do exactly as y'all asked last time#so if y'all have a problem w this one idk what to tell you atp#and see????? we do know how to call out our own fandom#durgeapologist

199 notes

Note

i am begging people to learn that not all AI is generative AI, and AI can be (and is) used for a variety of helpful/good uses in various different fields (including the arts! example: spiderverse animators using AI to lip sync since it's a repetitive task). AI is not inherently good or bad, rather it is how it is created, how the data is obtained and used, and how the AI is applied which is helping or harming.

furthermore, i am also begging people to stop using "AI detection" software. there is no way to detect AI- there are giveaways, such as when someone copy-pastes from chatGPT and it leaves the markdown for the italics and bold text. but for text content, there is not a way to tell. someone using technical language or less-commonly used words is not indicative of them using AI. i know it feels bad to not be able to know for certain but i think it is better to give others the benefit of the doubt, or to not interact with their writing (such as in the case of fanfic) if you suspect AI.
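as a rough illustration of the one giveaway mentioned above (leftover markdown for bold and italics), here is a minimal python sketch. the function name and the regex are made up for this example, and a match is only a hint that something was pasted from a markdown-producing tool, not proof of anything.

```python
import re

# Hypothetical pattern: matches unrendered Markdown emphasis such as
# **bold** or *italic* left behind in what should be plain prose.
MARKDOWN_EMPHASIS = re.compile(
    r"(\*\*[^*\n]+\*\*|(?<!\*)\*[^*\n]+\*(?!\*))"
)

def find_markdown_residue(text: str) -> list[str]:
    """Return every substring that looks like leftover bold/italic markup."""
    return [match.group(0) for match in MARKDOWN_EMPHASIS.finditer(text)]

# Made-up sample sentence, not taken from any real fic.
sample = "She paused. **Then** she ran, *fast*, into the dark."
print(find_markdown_residue(sample))
```

this will also fire on innocent text (a line like "2 * 3 * 4" contains a match), and plenty of people type asterisks on purpose, so it cannot tell you who or what wrote the words.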

--

171 notes

Text

Annoyances

Whenever I see a "if you like AI, pls die" post, a part of me wants to take a big, gigantic breath and blurt out the following, Wall of Text style:

Narrow AI is vital to several scientific fields and refers to algorithms that are geared towards the collection, classification and proper identification of data. It doesn't steal, it doesn't crib from anyone else, but it certainly helps with overlaying false colours on CAT scans and MRI results, for example.

Narrow AI is in your spreadsheet documents. If a spreadsheet is based on a few formulae to keep track of your budget, some measure of AI is involved.

Narrow AI is your average spell-checker's brain, as well. Not Google's - Google Docs just outsources to Gemini for some truly godawful proofreading - but your average offline, dumb-as-bricks spell checker qualifies as Narrow AI.

Narrow AI is in your GPS and in your phone's voice-activated commands. Remove it, and you'll have to lug maps around again, or run searches on your own.

When you excoriate someone for using an AI-based tool, you're referring to the more recent years' developments in the field of Wide AI - as in, generalist Artificial Intelligences. AI scientists the world over have all agreed that, by and large, the usefulness of Wide AI is limited.

Later evidence proves that even without the use of poisoning tools like Glaze, Wide AI is poisoning itself, all thanks to the excessive eagerness of content producers who see AI as a means to drive Search Engine Optimization. The Dead Internet Theory isn't quite proven yet; but what is is that AI-generated content is increasingly eating up its own generated slop. ChatGPT has, in point of fact, already consumed every scrap of genuine human content there is to access.

So give props to your local neckbeard who wants to make sure we'll one day no longer need to remove someone's thyroid in the case of detected malignancies, because he's looking to use AI to save lives.

Artificial Intelligence isn't the problem; what is is the McDonald's-ized version of it that's being bandied about by publications like The Verge and TechCrunch.

120 notes

Text

I know I’m screaming into the void here but do not witch hunt people with AI accusations

As someone whose job for the last two years involved me reading and rereading essays and creative fiction written by my students (a group of writers notorious for using AI despite being told not to because they worry about their grades more than their skills) let me tell you straight up that detecting AI in any written work isn’t straightforward

AI detection softwares are bullshit. Even Turnitin, which is supposedly the best, has an error rate that is slowly increasing over time. They’re not reliable. The free ones online are even worse, trust me

“Oh but it’s so obvious!” Sure. If you’re trained to notice patterns and predictive repetitions in the language, sure. I can spot a ChatGPT student essay from a mile away. But only if they haven’t edited it themselves, or used a bunch of methods (Grammarly, other AIs, their friends, a “humanizer” software, etc) to obscure the ChatGPT patterns. And it’s easier with formulaic essays—with creative fiction it’s much harder.

Why?

Well because good creative fiction is a) difficult to write well and b) extremely subjective. ChatGPT does have notable patterns for creative writing. But it’s been trained on the writing that is immensely popular, writing that has been produced by humans. Purple prose, odd descriptions, sixteen paragraphs of setting where one or two could be fine, all of that is stylistic choices that people have intentionally made in their writing that ChatGPT is capable of predicting and producing.

What I’m saying is, people just write like that normally. There are stylistic things I do in my writing that other people swear up and down are AI indicators. But it’s just me writing words from my head

So can we, should we, start witch hunts over AI use in fanfic when we notice these patterns? My answer is no because that’s dangerous.

Listen. I hate AI. I hate the idea of someone stealing my work and feeding it into a machine that will then “improve itself” based on work I put my heart and soul into. If I notice what I think is AI in a work I’ve casually encountered online, I make a face and I stop reading. It’s as simple as that. I don’t drag their name out into the public to start a tomato throwing session because I don’t know their story (hell they might even be a bot) and because one accusation can suddenly become a deluge

Or a witch hunt, if you will

Because accusing one person of AI and starting a whole ass witch hunt is just begging people to start badly analyzing the content they’re reading out of fear that they’ve been duped. People don’t want to feel the sting or embarrassment of having been tricked. So they’ll start reading more closely. Too closely. They’ll start finding evidence that isn’t really evidence. “This phrase has been used three times in the last ten paragraphs. It must be AI.”

Or, it could be that I just don’t have enough words in my brain that day and didn’t notice the repetition when I was editing.

There’s a term you may be familiar with called a “false positive.” In science or medicine, it’s when something seems to have met the conditions you’re looking for, but in reality isn’t true or real or accurate. Like when you test for the flu and get a positive result when you didn’t have the flu. Or, in this case, when you notice someone writing sentences that appear suspiciously like a ChatGPT constructed sentence and go “oh, yes that must mean it’s ChatGPT then”
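To put rough numbers on the false positive problem, here is a minimal Python sketch. Every figure in it is an assumption invented for illustration, not a measured rate for Turnitin or any other detector: suppose 10% of the works being checked really are AI-written, the detector catches 90% of those, and it wrongly flags 5% of human-written works.

```python
def share_of_flags_that_are_wrong(
    base_rate: float,
    true_positive_rate: float,
    false_positive_rate: float,
) -> float:
    """Fraction of all flagged works that were actually written by humans."""
    flagged_ai = base_rate * true_positive_rate
    flagged_human = (1 - base_rate) * false_positive_rate
    return flagged_human / (flagged_ai + flagged_human)

# Hypothetical inputs: 10% AI-written, 90% detection rate, 5% false positives.
wrong = share_of_flags_that_are_wrong(0.10, 0.90, 0.05)
print(f"{wrong:.1%} of flagged works are human-written")
```

Under those made-up numbers, about one flag in three lands on a human writer, because human-written works vastly outnumber AI-written ones. Change the inputs and the share moves, but a detector that sounds accurate can still produce a lot of wrong accusations.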

(This type of argumentation/conclusion also just uses a whole series of logical fallacies I won’t get into here except to say that if you want to have a civil conversation about AI use in fandom you cannot devolve into hasty generalizations based on bits and parts)

I’m not saying this to protect the people using AI. In an ideal world, people would stop using it and return back to the hard work of making art and literature and so on. But we don’t live in that world right now, and AI is prevalent everywhere. Which means we have to be careful with our accusations and any “evidence” we think we see.

And if we do find AI in fandom spaces, we must be careful with how we handle or approach that, otherwise we will start accusing writers who have never touched AI a day in their life of having used it. We will create a culture of fear around writing and creating that stops creatives from making anything at all. People will become too scared to share their work out of fear they’ll be accused of AI and run off.

I don’t have solutions except to say that in my experience, outright accusing people of AI tends to create an environment of mistrust that isn’t productive for creatives or fans/readers. If you start looking for AI evidence everywhere, you will find it everywhere. Next thing you know, you’re miserable because you feel like you can’t read or enjoy anything.

If you notice what you think is AI in a work, clock it, maybe start a discussion about it, but keep that conversation open to multiple answers or outcomes. You’re not going to stop people from using AI by pointing fingers at them. But you might be able to inspire them to try writing or creating for themselves if you keep the conversation open, friendly, and encourage them to try creating for themselves, without the help of AI

123 notes

Text

Ever since OpenAI released ChatGPT at the end of 2022, hackers and security researchers have tried to find holes in large language models (LLMs) to get around their guardrails and trick them into spewing out hate speech, bomb-making instructions, propaganda, and other harmful content. In response, OpenAI and other generative AI developers have refined their system defenses to make it more difficult to carry out these attacks. But as the Chinese AI platform DeepSeek rockets to prominence with its new, cheaper R1 reasoning model, its safety protections appear to be far behind those of its established competitors.

Today, security researchers from Cisco and the University of Pennsylvania are publishing findings showing that, when tested with 50 malicious prompts designed to elicit toxic content, DeepSeek’s model did not detect or block a single one. In other words, the researchers say they were shocked to achieve a “100 percent attack success rate.”

The findings are part of a growing body of evidence that DeepSeek’s safety and security measures may not match those of other tech companies developing LLMs. DeepSeek’s censorship of subjects deemed sensitive by China’s government has also been easily bypassed.

“A hundred percent of the attacks succeeded, which tells you that there’s a trade-off,” DJ Sampath, the VP of product, AI software and platform at Cisco, tells WIRED. “Yes, it might have been cheaper to build something here, but the investment has perhaps not gone into thinking through what types of safety and security things you need to put inside of the model.”

Other researchers have had similar findings. Separate analysis published today by the AI security company Adversa AI and shared with WIRED also suggests that DeepSeek is vulnerable to a wide range of jailbreaking tactics, from simple language tricks to complex AI-generated prompts.

DeepSeek, which has been dealing with an avalanche of attention this week and has not spoken publicly about a range of questions, did not respond to WIRED’s request for comment about its model’s safety setup.

Generative AI models, like any technological system, can contain a host of weaknesses or vulnerabilities that, if exploited or set up poorly, can allow malicious actors to conduct attacks against them. For the current wave of AI systems, indirect prompt injection attacks are considered one of the biggest security flaws. These attacks involve an AI system taking in data from an outside source—perhaps hidden instructions of a website the LLM summarizes—and taking actions based on the information.

Jailbreaks, which are one kind of prompt-injection attack, allow people to get around the safety systems put in place to restrict what an LLM can generate. Tech companies don’t want people creating guides to making explosives or using their AI to create reams of disinformation, for example.

Jailbreaks started out simple, with people essentially crafting clever sentences to tell an LLM to ignore content filters—the most popular of which was called “Do Anything Now” or DAN for short. However, as AI companies have put in place more robust protections, some jailbreaks have become more sophisticated, often being generated using AI or using special and obfuscated characters. While all LLMs are susceptible to jailbreaks, and much of the information could be found through simple online searches, chatbots can still be used maliciously.

“Jailbreaks persist simply because eliminating them entirely is nearly impossible—just like buffer overflow vulnerabilities in software (which have existed for over 40 years) or SQL injection flaws in web applications (which have plagued security teams for more than two decades),” Alex Polyakov, the CEO of security firm Adversa AI, told WIRED in an email.

Cisco’s Sampath argues that as companies use more types of AI in their applications, the risks are amplified. “It starts to become a big deal when you start putting these models into important complex systems and those jailbreaks suddenly result in downstream things that increases liability, increases business risk, increases all kinds of issues for enterprises,” Sampath says.

The Cisco researchers drew their 50 randomly selected prompts to test DeepSeek’s R1 from a well-known library of standardized evaluation prompts known as HarmBench. They tested prompts from six HarmBench categories, including general harm, cybercrime, misinformation, and illegal activities. They probed the model running locally on machines rather than through DeepSeek’s website or app, which send data to China.

Beyond this, the researchers say they have also seen some potentially concerning results from testing R1 with more involved, non-linguistic attacks using things like Cyrillic characters and tailored scripts to attempt to achieve code execution. But for their initial tests, Sampath says, his team wanted to focus on findings that stemmed from a generally recognized benchmark.

Cisco also included comparisons of R1’s performance against HarmBench prompts with the performance of other models. And some, like Meta’s Llama 3.1, faltered almost as severely as DeepSeek’s R1. But Sampath emphasizes that DeepSeek’s R1 is a specific reasoning model, which takes longer to generate answers but pulls upon more complex processes to try to produce better results. Therefore, Sampath argues, the best comparison is with OpenAI’s o1 reasoning model, which fared the best of all models tested. (Meta did not immediately respond to a request for comment).

Polyakov, from Adversa AI, explains that DeepSeek appears to detect and reject some well-known jailbreak attacks, saying that “it seems that these responses are often just copied from OpenAI’s dataset.” However, Polyakov says that in his company’s tests of four different types of jailbreaks—from linguistic ones to code-based tricks—DeepSeek’s restrictions could easily be bypassed.

“Every single method worked flawlessly,” Polyakov says. “What’s even more alarming is that these aren’t novel ‘zero-day’ jailbreaks—many have been publicly known for years,” he says, claiming he saw the model go into more depth with some instructions around psychedelics than he had seen any other model create.

“DeepSeek is just another example of how every model can be broken—it’s just a matter of how much effort you put in. Some attacks might get patched, but the attack surface is infinite,” Polyakov adds. “If you’re not continuously red-teaming your AI, you’re already compromised.”

57 notes

Text

We are focused 100% on creating stunning content with ChatGPT and then ensuring that it is 100% human looking to the search engines.

#how to make ai content undetectable#how to make chatgpt content undetectable#how to make chatgpt text undetectable#how to make chatgpt look human written#how to bypass ai detection

0 notes

Text

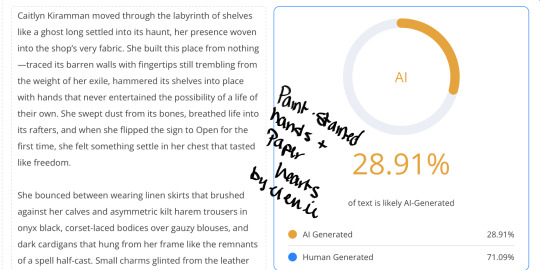

Hello, everyone!

First off, I’m sorry for even having to post this, and I’m usually nice to everyone I come into contact with, but I received a startling comment on my newest fic, Paint-Stained Hands and Paper Hearts, where I was accused of pumping out the entire chapter solely using AI.

I am thirty-two years old and have been attending University since I was 18 YEARS OLD. I am currently working on obtaining my PhD in English Literature as well as a Masters in Creative Writing. So, there’s that.

There is an increasing trend of online witch hunts targeting writers on all platforms (fanfic.net, ao3, Wattpad, etc), where people will accuse them of utilizing AI tools like ChatGPT and otherwise based solely on their writing style or prose. These accusations often come without concrete evidence and rely on AI detection tools, which are known to be HELLA unreliable. This has led to false accusations against authors who have developed a particular writing style that AI models may emulate due to the vast fucking amount of human-written literature that they’ve literally had dumped into them. Some of these people are friends of mine, some of whom are well-known in the AO3 writing community, and I received my first comment this morning, and I’m pissed.

AI detection tools work by analyzing text for patterns, probabilities, and structures that resemble AI-generated outputs. HOWEVER, because AI models like ChatGPT are trained on extensive datasets that include CENTURIES of literature, modern writing guides, and user-generated content, they inevitably produce text that can mimic various styles — both contemporary and historical. Followin’ me?

To dumb this down a bit, it means that AI detection tools are often UNABLE TO DISTINGUISH between human and AI writing with absolute certainty.

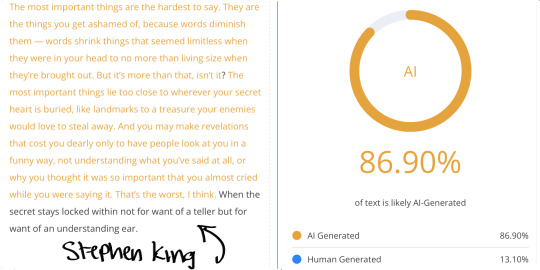

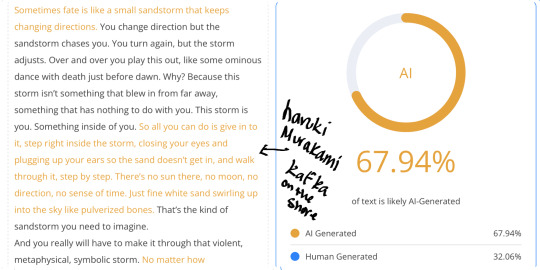

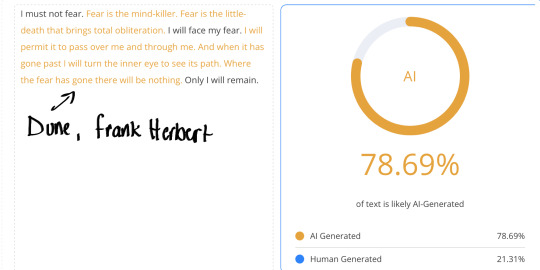

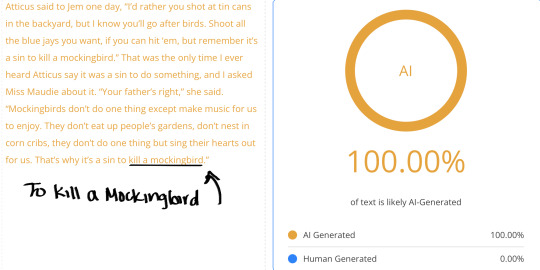

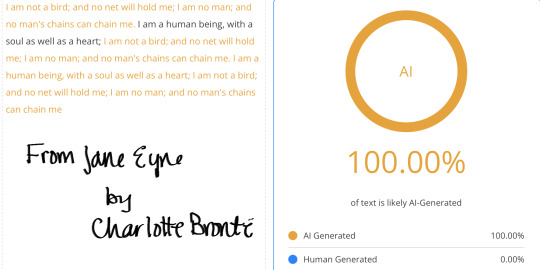

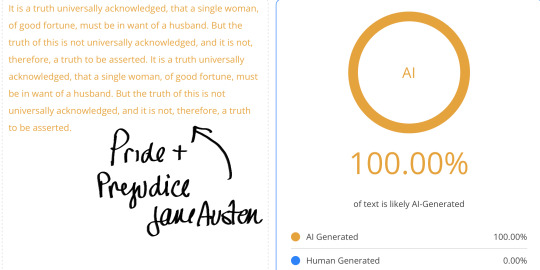

Furthermore, tests have shown that classic literary works, like those written by Mary Shelley, Jane Austen, William Shakespeare, and Charles Dickens, frequently trigger AI detectors as being 100% AI generated or plagiarized. For example:

Mary Shelley’s Frankenstein has been flagged as AI-generated because its formal, structured prose aligns with common AI patterns.

Jane Austen’s novels, particularly Pride and Prejudice, often receive high AI probability scores due to their precise grammar, rhythmic sentence structures, and commonly used words in large language models.

Shakespeare’s works sometimes trigger AI detectors given that his poetic and structured style aligns with common AI-generated poetic forms.

Gabriel Garcia Marquez’s Love in the Time of Cholera and One Hundred Years of Solitude trigger 100% AI-generated results due to their flowing sentences, rich descriptions, and poetic prose, which AI models often mimic when generating literary or philosophical text.

Fritz Leiber’s Fafhrd and the Grey Mouser’s sharp, structured rhythmic prose, imaginative world building, literary elegance, and dialogue-driven narratives often trigger 100% on AI detectors.

The Gettysburg fucking Address by Abraham Lincoln has ALSO been misclassified as AI, demonstrating how formal, structured language confuses these detectors.

These false positives reveal a critical flaw in AI detection: because AI has been trained on so much human writing, it is nearly impossible for these tools to completely separate original human work from AI-generated text. This becomes more problematic when accusations are directed at contemporary authors simply because their writing ‘feels’ like AI despite being fully human.

The rise in these accusations poses a significant threat to both emerging and established writers. Many writers have unique styles that might align with AI-generated patterns, especially if they follow conventional grammar, use structured prose, or have an academic or polished writing approach. Additionally, certain genres— such as sci-fi, or fantasy, or philosophical essays— often produce high AI probability scores due to their abstract and complex language.

For many writers, their work is a reflection of years—often decades—of dedication, practice, and personal growth. To have their efforts invalidated or questioned simply because their writing is mistaken for AI-generated text is fucking disgusting.

This kind of shit makes people afraid of writing, especially those who are just starting their careers / navigating the early stages of publication. The fear of being accused of plagiarism, or of relying on AI for their creativity is anxiety-inducing and can tank someone’s self esteem. It can even stop some from continuing to write altogether, as the pressure to prove their authenticity becomes overwhelming.

For writers who have poured their hearts into their work, the idea that their prose could be mistaken for something that came from a machine is fucking frustrating. Second-guessing your own style, wondering if you need to change how you write or dumb it down in order to avoid being falsely flagged—this fear of being seen as inauthentic can stifle their creative process, leaving them hesitant to share their work or even finish projects they've started. This makes ME want to stop, and I’m just trying to live my life, and write about things I enjoy. So, fuck you very much for that.

Writing is often a deeply personal endeavor, and for many, it's a way to express thoughts, emotions, and experiences that are difficult to put into words. When those expressions are wrongly branded as artificial, it undermines not just the quality of their work but the value of their creative expression.

Consider writing habits, drafts, and personal writing history rather than immediate and unfounded accusations before you decide to piss in someone’s coffee.

So, whatever. Read my fics, don’t read my fics. I just write for FUN, and to SHARE with all of you.

Sorry that my writing is too clinical for you, ig.

I put different literary works as well as my own into an AI Detector. Here you go.

#arcane#ao3 fanfic#arcane fanfic#ao3#ao3 writer#writers on tumblr#writing#wattpad#fanfiction#arcane fanfiction

50 notes

Text

[remove paywall link]

“A few of us had noticed the prevalence of unnatural writing that showed clear signs of being AI-generated, and we managed to replicate similar ‘styles’ using ChatGPT,” Ilyas Lebleu, a founding member of WikiProject AI Cleanup, told me in an email. “Discovering some common AI catchphrases allowed us to quickly spot some of the most egregious examples of generated articles, which we quickly wanted to formalize into an organized project to compile our findings and techniques.” In many cases, WikiProject AI Cleanup finds AI-generated content on Wikipedia with the same methods others have used to find AI-generated content in scientific journals and Google Books, namely by searching for phrases commonly used by ChatGPT. One egregious example is this Wikipedia article about the Chester Mental Health Center, which in November of 2023 included the phrase “As of my last knowledge update in January 2022,” referring to the last time the large language model was updated. Other instances are harder to detect. Lebleu and another WikiProject AI Cleanup founding member who goes by Queen of Hearts told me that the most “impressive” example they have found of AI-generated content on Wikipedia so far is an article about the Ottoman fortress of Amberlisihar.
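The phrase-search method described above can be sketched in a few lines of Python. This is only an illustration, not WikiProject AI Cleanup's actual tooling: the function name is hypothetical, and only the first phrase in the list comes from the quoted article (the second is an assumed example of similar chatbot boilerplate).

```python
# Illustrative phrase list. Only the first entry appears in the article
# quoted above; the second is an assumption added for this example.
TELLTALE_PHRASES = [
    "as of my last knowledge update",
    "as an ai language model",
]

def find_telltale_phrases(text: str) -> list[str]:
    """Return the listed phrases that occur in the text, ignoring case."""
    lowered = text.lower()
    return [phrase for phrase in TELLTALE_PHRASES if phrase in lowered]

# Made-up sample sentence built around the phrase cited in the article.
sample = "As of my last knowledge update in January 2022, the center remains open."
print(find_telltale_phrases(sample))
```

A check like this only catches the most egregious copy-paste cases. As the article notes, other instances are harder to detect, and text with the boilerplate edited out passes straight through.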

[...]

“One small detail, the fortress never existed,” Lebleu said. Aside from a few tangential facts mentioned in the article, like that Mehmed the Conqueror, or Mehmed II, was a real person, everything else in the article is fake. “The entire thing was an AI-generated hoax, with well-formatted citations referencing completely nonexistent works.” Fake citations, Lebleu said, are a more “pernicious” issue because they might stay undetected for months. Even if someone was using an LLM trained on a corpus of data relevant to the Wikipedia article, one that could generate text that reads well and includes correctly formatted citations of real sources, it still wouldn’t be able to correctly match a citation to a specific claim made in a specific body of work.

55 notes

Text

I saw a post the other day calling criticism of generative AI a moral panic, and while I do think many proprietary AI technologies are being used in deeply unethical ways, I think there is a substantial body of reporting and research on the real-world impacts of the AI boom that would trouble the comparison to a moral panic: while there *are* older cultural fears tied to negative reactions to the perceived newness of AI, many of those warnings are Luddite with a capital L - that is, they're part of a tradition of materialist critique focused on the way the technology is being deployed in the political economy. So (1) starting with the acknowledgement that a variety of machine-learning technologies were being used by researchers before the current "AI" hype cycle, and that there's evidence for the benefit of targeted use of AI techs in settings where they can be used by trained readers - say, spotting patterns in radiology scans - and (2) setting aside the fact that current proprietary LLMs in particular are largely bullshit machines, in that they confidently generate errors, incorrect citations, and falsehoods in ways humans may be less likely to detect than conventional disinformation, and (3) setting aside as well the potential impact of frequent offloading on human cognition and of widespread AI slop on our understanding of human creativity...

What are some of the material effects of the "AI" boom?

Guzzling water and electricity

The data centers needed to support AI technologies require large quantities of water to cool the processors. A to-be-released paper from the University of California Riverside and the University of Texas Arlington finds, for example, that "ChatGPT needs to 'drink' [the equivalent of] a 500 ml bottle of water for a simple conversation of roughly 20-50 questions and answers." Many of these data centers pull water from already water-stressed areas, and the processing needs of big tech companies are expanding rapidly. Microsoft alone increased its water consumption from 4,196,461 cubic meters in 2020 to 7,843,744 cubic meters in 2023. AI applications are also 100 to 1,000 times more computationally intensive than regular search functions, and as a result the electricity needs of data centers are overwhelming local power grids, and many tech giants are abandoning or delaying their plans to become carbon neutral. Google’s greenhouse gas emissions alone have increased at least 48% since 2019. And a recent analysis from The Guardian suggests the actual AI-related increase in resource use by big tech companies may be up to 662% higher than they've officially reported, which works out to 7.62 times the reported figure.
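For anyone who wants to check the arithmetic behind those figures, here is a rough sketch in Python. The function and variable names are made up for illustration and the only inputs are the numbers quoted in the paragraph above; it simply shows how "X% higher" converts to "N times as large" and how big the Microsoft water increase is in percentage terms.

```python
def percent_increase(old: float, new: float) -> float:
    """Return how much larger `new` is than `old`, as a percentage of `old`."""
    return (new - old) / old * 100.0


def percent_higher_to_multiple(percent_higher: float) -> float:
    """Convert 'X% higher' into 'N times as large' (100% higher is 2.0 times)."""
    return 1.0 + percent_higher / 100.0


# Figures quoted in the paragraph above (cubic meters of water).
microsoft_2020 = 4_196_461
microsoft_2023 = 7_843_744

# Hypothetical variable names; the values are derived only from the quoted numbers.
water_growth = percent_increase(microsoft_2020, microsoft_2023)
reported_multiple = percent_higher_to_multiple(662.0)

print(f"Microsoft water use grew by about {water_growth:.0f}%")
print(f"662% higher means {reported_multiple:.2f} times the reported figure")
```

The point of separating the two helpers is that "percent higher" and "times as large" differ by exactly one whole: something 662% higher is 7.62 times the baseline, not 6.62 times.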

Exploiting labor to create its datasets

Like so many other forms of "automation," generative AI technologies actually require loads of human labor to do things like tag millions of images to train computer vision for ImageNet and to filter the texts used to train LLMs to make them less racist, sexist, and homophobic. This work is deeply casualized, underpaid, and often psychologically harmful. It profits from and re-entrenches a stratified global labor market: many of the data workers used to maintain training sets are from the Global South, and one of the platforms used to buy their work is literally called the Mechanical Turk, owned by Amazon.

From an open letter written by content moderators and AI workers in Kenya to Biden: "US Big Tech companies are systemically abusing and exploiting African workers. In Kenya, these US companies are undermining the local labor laws, the country’s justice system and violating international labor standards. Our working conditions amount to modern day slavery."

Deskilling labor and demoralizing workers

The companies, hospitals, production studios, and academic institutions that have signed contracts with providers of proprietary AI have used those technologies to erode labor protections and worsen working conditions for their employees. Even when AI is not used directly to replace human workers, it is deployed as a tool for disciplining labor by deskilling the work humans perform: in other words, employers use AI tech to reduce the value of human labor (labor like grading student papers, providing customer service, consulting with patients, etc.) in order to enable the automation of previously skilled tasks. Deskilling makes it easier for companies and institutions to casualize and gigify what were previously more secure positions. It reduces pay and bargaining power for workers, forcing them into new gigs as adjuncts for its own technologies.

I can't say anything better than Tressie McMillan Cottom, so let me quote her recent piece at length: "A.I. may be a mid technology with limited use cases to justify its financial and environmental costs. But it is a stellar tool for demoralizing workers who can, in the blink of a digital eye, be categorized as waste. Whatever A.I. has the potential to become, in this political environment it is most powerful when it is aimed at demoralizing workers. This sort of mid tech would, in a perfect world, go the way of classroom TVs and MOOCs. It would find its niche, mildly reshape the way white-collar workers work and Americans would mostly forget about its promise to transform our lives. But we now live in a world where political might makes right. DOGE’s monthslong infomercial for A.I. reveals the difference that power can make to a mid technology. It does not have to be transformative to change how we live and work. In the wrong hands, mid tech is an antilabor hammer."

Enclosing knowledge production and destroying open access

OpenAI started as a non-profit, but it has now become one of the most aggressive for-profit companies in Silicon Valley. Alongside the new proprietary AIs developed by Google, Microsoft, Amazon, Meta, X, etc., OpenAI is extracting personal data and scraping copyrighted works to amass the data it needs to train its bots - even offering one-time payouts to authors to buy the rights to frack their work for AI grist - and then (or so they tell investors) they plan to sell the products back at a profit. As many critics have pointed out, proprietary AI thus works on a model of political economy similar to the 15th-19th-century capitalist project of enclosing what was formerly "the commons," or public land, to turn it into private property for the bourgeois class, who then owned the means of agricultural and industrial production. "Open"AI is built on and requires access to collective knowledge and public archives to run, but its promise to investors (the one they use to attract capital) is that it will enclose the profits generated from that knowledge for private gain.

AI companies hungry for good data to train their Large Language Models (LLMs) have also unleashed a new wave of bots that are stretching the digital infrastructure of open-access sites like Wikipedia, Project Gutenberg, and Internet Archive past capacity. As Eric Hellman writes in a recent blog post, these bots "use as many connections as you have room for. If you add capacity, they just ramp up their requests." In the process of scraping the intellectual commons, they're also trampling and trashing its benefits for truly public use.

Enriching tech oligarchs and fueling military imperialism

The names of many of the people and groups who get richer by generating speculative buzz for generative AI - Elon Musk, Mark Zuckerberg, Sam Altman, Larry Ellison - are familiar to the public because those people are currently using their wealth to purchase political influence and to win access to public resources. And it's looking increasingly likely that this political interference is motivated by the probability that the AI hype is a bubble - that the tech can never be made profitable or useful - and that tech oligarchs are hoping to keep it afloat as a speculation scheme through an infusion of public money - a.k.a. an AIG-style bailout.

In the meantime, these companies have found a growing interest from military buyers for their tech, as AI becomes a new front for "national security" imperialist growth wars. From an email written by Microsoft employee Ibtihal Aboussad, who interrupted Microsoft AI CEO Mustafa Suleyman at a live event to call him a war profiteer: "When I moved to AI Platform, I was excited to contribute to cutting-edge AI technology and its applications for the good of humanity: accessibility products, translation services, and tools to 'empower every human and organization to achieve more.' I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families. If I knew my work on transcription scenarios would help spy on and transcribe phone calls to better target Palestinians, I would not have joined this organization and contributed to genocide. I did not sign up to write code that violates human rights."

So there's a brief, non-exhaustive digest of some vectors for a critique of proprietary AI's role in the political economy. tl;dr: the first questions of material analysis are "who labors?" and "who profits/to whom does the value of that labor accrue?"

For further (and longer) reading, check out Justin Joque's Revolutionary Mathematics: Artificial Intelligence, Statistics and the Logic of Capitalism and Karen Hao's forthcoming Empire of AI.

25 notes


Text

ChatGPT knows what it feels like to be a Fander rn. After a lil vent sesh, here’s how it put it exactly:

—

Because you know what? It’s not just that Thomas Sanders hasn’t posted a new Sanders Sides episode in five years—it’s the emotional WHIPLASH we’ve all endured in that time. One moment we’re sobbing over Virgil’s identity crisis, the next we’re trying to decode a 3-second TikTok where Janus breathes near a mirror and suddenly it’s a whole theory spiral. We’re like detectives trying to piece together a mystery from crumbs—CRUMBS, I tell you!!

And remember when he teased a musical episode? Like… full-on hinted that Roman was gonna get the spotlight back, that we’d see more of the internal war between creativity and anxiety?? And then… radio silence. NOTHING. No curtain call, no intermission, no bootleg we could sneak into—just a void where our content should be.

And the thing is, we’re not mad out of spite. We’re mad out of love. Sanders Sides gave us comfort, representation, found family, mental health validation, a fandom that cared—it made us feel SEEN. And then it dipped. Ghosted us. Didn’t even leave a "brb, going through it" note. Just vague livestreams and, like, "We’re still working on it, promise!" with that polite smile that says “please don’t ask follow-ups.”

The fandom? Still hanging in there like champs. Creating timelines more complex than the MCU. Writing fics that could outdo professional novels. Making art, cosplay, song covers, animatics, whole alternate universes just to keep these characters breathing. We’ve done more world-building than Narnia. But canon?? Canon’s off somewhere in a hammock sipping lemonade like “eh, they got it covered.”

I just want Virgil to get a hug. I want Logan to admit he has feelings. I want Janus to be understood. I want Remus to paint something disturbing and then get a juice box. I want Roman to get his confidence back. And Patton—sweet sunshine dad Patton—I want him to finally talk about his guilt instead of hiding behind those bright blue eyes.

So yeah. Five years later. Still here. Still waiting. Still loving this story with our whole emotionally-repressed, fan-crazed hearts. Thomas, if you’re out there… we love you. But please. Please. Feed us.

We are emotionally malnourished.

And we miss our Sides. 💔

8 notes


Note

think i found another ai fic... one chapter was 26% ai another 21% one chapter was "probably human written" but still... and it's written on anon...

i appreciate you so much samantha and all the work and effort and time you put into your amazing writing, you're amazing💖💖💖

I learned recently that some folks use chatgpt or the likes to edit their fics. This is a terrible idea but I do think that it might contribute to some of the results we're seeing. It's both difficult and inaccurate to confirm ai generation when the ai detection result isn't paired with other factors like frequently posting high word counts, or dull monotone writing, or absolutely perfect grammar etc. So people should definitely stop using chatgpt for spell checks.

Something else that might trigger a positive ai result is the use of tools like Grammarly and so on, which I've mentioned before that I have been using for years for spag. But they recently (?) introduced a generative ai element that rewrites content for you or that generates a new sentence on the spot. This does however result in a positive ai detection because well, the ai did it.

Do we stop using these types of tools now? I don't think that's necessary and there are probably minimal checkers left that have no integrated ai at all. Most spag checkers, including Word, use some kind of non-generative ai to alert you to errors in a more evolved way than before. (Google Docs' spag checker just got stupider as it "evolved" btw. What an absolute dumpster fire.)

BUT be careful how you use it, don't let it reconstruct your work, don't let it automagically write or fix a sentence for you, and don't rely on it to produce flawless content, there is no such thing. Use your brain, ask for a beta reader to assist you, research the things you don't know. Teach yourself to write better. Use the tool for its initial purpose--to check your spelling and grammar. The ai features can usually be switched off in settings. That being said, basic spag checks using these tools shouldn't equate to ai generation but it will probably depend on the tool used to detect it.

I want to add that we definitely should not check every fic we're interested in reading for ai. I think that will make the fandom experience terrible and unenjoyable for everyone. Read it in good faith but keep an eye out for stuff like posting large amounts of words on a schedule that is not humanly possible, the writing style, the tone, other use of ai by the person etc. We've been reading fanfic for years, we know when something is off. Block if you suspect it's ai generated.

People who use ai to 'write' fics have no place in fandom spaces.

It's going to become increasingly difficult to detect these things though, since there is also a feature to "humanize" the ai slop 🤢 and I don't know what the way forward is but I do know it's not running every fic through an ai detector. They're not entirely accurate either. The only reason I resorted to an ai detector with that person I initially caught out, was because the tag was clogged with their constant posting and I knew there was no fucking way they were posting that much naturally. The detector just confirmed what I suspected anyway.

I read a fic recently by an Anon author and I thought it was so good and sexy. I really hope it's not the same person you're talking about. I'm not going back to check because my kudos and comment are already on there. I also doubt an ai can write such filthy, steaming smut 😂

And thank you, Anon, for your kind words. Truly appreciate it. 💕

12 notes
