#how to detect chatgpt writing


Text

Outstanding new site created by blog expert reveals the incredible need for the best chatgpt content and detection of that content for ranking.

#how to detect chatgpt plagerism#how to detect ai content#how to detect chatgpt writing#chatgpt content detection#chatgpt human writing checker

0 notes

Text

i have chronic pain. i am neurodivergent. i understand - deeply - the allure of a "quick fix" like AI. i also just grew up in a different time. we have been warned about this.

15 entire years ago i heard about this. in my forensics class in high school, we watched a documentary about how AI-based "crime solving" software was inevitably biased against people of color.

my teacher stressed that AI is like a book: when someone writes it, some part of the author will remain within the result. the internet existed but not as loudly at that point - we didn't know that AI would be able to teach itself off already-biased Reddit threads. i googled it: yes, this bias is still happening. yes, it's just as bad if not worse.

i can't actually stop you. if you wanna use ChatGPT to slide through your classes, that's on you. it's your money and it's your time. you will spend none of it thinking, you will learn nothing, and, in college, you will piss away hundreds of thousands of dollars. you will stand at the podium having done nothing, accomplished nothing. a cold and bitter pyrrhic victory.

i'm not even sure students actually read the essays or summaries or emails they have ChatGPT pump out. i think it just flows over them and they use the first answer they get. my brother teaches engineering - he recently got fifty-three copies of almost-the-exact-same lab reports. no one had even changed the wording.

and yes: AI itself (as a concept and practice) isn't always evil. there's AI that can help detect cancer, for example. and yet: when i ask my students if they'd be okay with a doctor that learned from AI, many of them balk. it is one thing if they don't read their engineering textbook or if they don't write the critical-thinking essay. it's another when it starts to affect them. they know it's wrong for AI to broad-spectrum deny insurance claims, but they swear their use of AI is different.

there's a strange desire to sort of divorce real-world AI malpractice from "personal use". for example, is it moral to use AI to write your cover letters? cover letters are essentially only templates, and besides: AI is going to be reading your job app, so isn't it kind of fair?

i recently found out that people use AI as a romantic or sexual partner. it seems like teenagers particularly enjoy this connection, and this is one of those "sticky" moments as a teacher. honestly - you can roast me for this - but if it was an actually-safe AI, i think teenagers exploring their sexuality with a fake partner is amazing. it prevents them from making permanent mistakes, it can teach them about their bodies and their desires, and it can help their confidence. but the problem is that it's not safe. there isn't a well-educated, sensitive AI specifically to help teens explore their hormones. it's just an internet-fed cycle. who knows what they're learning. who knows what misinformation they're getting.

the most common pushback i get involves therapy. none of us have access to the therapist of our dreams - it's expensive, elusive, and involves an annoying amount of insurance claims. someone once asked me: are you going to be mad when AI saves someone's life?

therapists are not just trained on the book, they're trained on patient management and helping you see things you don't see yourself. part of it will involve discomfort. i don't know that AI is ever going to be able to analyze the words you feed it and answer with a mind towards the "whole person" writing those words. but also - if it keeps/kept you alive, i'm not a purist. i've done terrible things to myself when i was at rock bottom. in an emergency, we kind of forgive the seatbelt for leaving bruises. it's just that chat shouldn't be your only form of self-care and recovery.

and i worry that the influence chat has is expanding. more and more i see people use chat for the smallest, most easily-navigated situations. and i can't like, make you worry about that in your own life. i often think about how easy it was for social media to take over all my time - how i can't have a tiktok because i spend hours on it. i don't want that to happen with chat. i want to enjoy thinking. i want to enjoy writing. i want to be here. i've already really been struggling to put the phone down. this feels like another way to get you to pick the phone up.

the other day, i was frustrated by a book i was reading. it's far in the series and is about a character i resent. i googled if i had to read it, or if it was one of those "in between" books that don't actually affect the plot (you know, one of those ".5" books). someone said something that really stuck with me - theoretically you're reading this series for enjoyment, so while you don't actually have to read it, one would assume you want to read it.

i am watching a generation of people learn they don't have to read the thing in their hand. and it is kind of a strange sort of doom that comes over me: i read because it's genuinely fun. i learn because even though it's hard, it feels good. i try because it makes me happy to try. and i'm watching a generation of people all lay down and say: but i don't want to try.

#spilled ink#i do also think this issue IS more complicated than it appears#if a teacher uses AI to grade why write the essay for example.#<- while i don't agree (the answer is bc the essay is so YOU learn) i would be RIPSHIT as a student#if i found that out.#but why not give AI your job apps? it's not like a human person SEES your applications#the world IS automating in certain ways - i do actually understand the frustration#some people feel where it's like - i'm doing work here. the work will be eaten by AI. what's the point#but the answer is that we just don't have a balance right now. it just isn't trained in a smart careful way#idk. i am pretty anti AI tho so . much like AI. i'm biased.#(by the way being able to argue the other side tells u i actually understand the situation)#(if u see me arguing "pro-chat'' it's just bc i think a good argument involves a rebuttal lol)#i do not use ai . hard stop.

4K notes


Note

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where, in fact, ai can help us do things a lot better than we could without it. here's a few examples:

- ai detecting cancer
- ai sorting recycling
- some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans can not, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there's no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let their residents inside? oh, they didn't train their model with anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men work that role than women (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

1K notes


Text

AI, Plagiarism, and CYA

Shout-out to all the students gearing up to go back to school in increasingly frustrating times when dealing with all this AI bullshit. As you've probably noticed, lots of institutions have adopted anti-plagiarism software that incorporates AI detectors that - surprise - aren't that great. Many students are catching flak for getting dinged on work that isn't AI generated, and schools are struggling to catch up and craft policies that uphold academic rigor. It sucks for everyone involved!

As a student, it can really feel like you're in a bind, especially if you didn't do anything wrong. Your instructor isn't likely to be as tech-savvy as some, and frankly, you might not be as tech-savvy as you think either. The best thing to do, no matter how your school is handling things, is to Cover Your Ass.

Pay attention to the academic policy. Look, I know you probably skimmed the syllabus. Primus knows I did too, but the policy there is the policy the instructor must stick with. If the policy sets down a strong 'don't touch ChatGPT with a ten-foot pole' standard, stick to it. If you get flagged for something you thought was okay because you didn't read the policy carefully, you don't have ground to stand on if you get called out.

Turn off Autosave and save multiple (named) drafts. If you're using Microsoft Word because your school gives you a free license, the handy Autosave feature may be shooting you in the foot when it comes to proving you did the work. I know this seems counter-intuitive, but I've seen this bite enough people in the ass to recommend students go old-school. Keep those "draft 1234" files in a folder just in case.

Maintaining timestamped, clearly different drafts of a paper can really help you in the long run. Google Docs also does a much better job of tracking changes to a document and may be something to consider. However, with all this AI shit, I'm hesitant to recommend Google. Your best bet, overall, is to keep multiple distinctive drafts that prove how your paragraphs evolved from first to final.
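If saving named drafts by hand feels like a chore, the habit can be scripted. What follows is a minimal sketch, not a recommendation of any particular tool: the function name `save_draft_snapshot`, the `drafts` folder, and the filename pattern are all made up for illustration. It copies the file you are working on into a folder under a timestamped name and leaves the original untouched.

```python
import shutil
from datetime import datetime
from pathlib import Path
from typing import Optional


def save_draft_snapshot(paper: Path, drafts_dir: Path,
                        now: Optional[datetime] = None) -> Path:
    """Copy `paper` into `drafts_dir` under a timestamped name.

    Hypothetical helper for illustration; the naming scheme is an assumption.
    """
    now = now or datetime.now()
    drafts_dir.mkdir(parents=True, exist_ok=True)
    stamp = now.strftime("%Y-%m-%d_%H%M%S")
    target = drafts_dir / f"{paper.stem}_draft_{stamp}{paper.suffix}"
    # copy2 copies the contents and tries to preserve file metadata
    # such as the modification time.
    shutil.copy2(paper, target)
    return target
```

Calling `save_draft_snapshot(Path("essay.docx"), Path("drafts"))` at the end of each writing session leaves a trail of separate files. Each one stays as it was even when the working copy keeps changing.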

Avoid Grammarly, ProWritingAid, etc. All that handy 'writing tools' software that claims to help shore up your writing isn't doing you any favors. Grammarly, ProWritingAid, and other software throw up immediate flags in AI-detection software. You may have only used it to clean up the grammar and punctuation, but if the AI-detection software says otherwise, you might be screwed. They're not worth using over the basic spelling and grammar check that both Word and Google Docs already provide.

Cite everything and save your sources! This is basic paper-writing, but people using ChatGPT for research often neglect to check that it isn't making shit up, and that made-up shit is starting to appear on other parts of the internet. Be sure to click through and confirm that what you're using for your paper is true. Get your sources and research material from somewhere other than a generative language model, since those are known for making shit up. Yes, Wikipedia is a fine place to start and has rigorously maintained sources.

Work with the support your school has available. My biggest mistake in college was not reaching out when I felt like I was drowning, and I know how easy it is to get in your head and not know where to turn when you need more help. But I've since met a great many awesome librarians, tutors, and student aid staff who love nothing more than to devote their time to student success. Don't wait until the last moment, when they're swamped - you can and will succeed if you reach out early and often.

I, frankly, can't wait for all this AI bullshit to melt down in a catastrophic collapse, but in the meantime, take steps to protect yourself.

#school#AI Bullshit#frankly AI-checkers are just as bad as AI#you gotta take steps to document what you're doing

462 notes


Note

i am begging people to learn that not all AI is generative AI, and AI can be (and is) used for a variety of helpful/good purposes in various different fields (including the arts! example: spiderverse animators using AI to lip sync since it's a repetitive task). AI is not inherently good or bad, rather it is how it is created, how the data is obtained and used, and how the AI is applied which is helping or harming.

furthermore, i am also begging people to stop using "AI detection" software. there is no way to detect AI. there are giveaways, such as when someone copy-pastes from chatGPT and it leaves the markdown for the italics and bold text. but for the text content itself, there is not a way to tell. someone using technical language or less-commonly used words is not indicative of them using AI. i know it feels bad to not be able to know for certain but i think it is better to give others the benefit of the doubt, or to not interact with their writing (such as in the case of fanfic) if you suspect AI.
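for the curious, the leftover-markdown giveaway is about the only part of this that can be checked mechanically. here is a rough sketch of what that looks like. the function name and the three patterns are illustrative assumptions, and a hit only shows that markdown syntax is sitting in the text, which plenty of humans type on purpose. it is not evidence of who wrote the words.

```python
import re
from typing import Dict

# Illustrative patterns for Markdown syntax that can survive a raw copy-paste.
# These are assumptions for the sketch, not an exhaustive or standard list.
LEFTOVER_PATTERNS = {
    "bold": re.compile(r"\*\*[^*\n]+\*\*"),
    "italic": re.compile(r"(?<![*\w])\*[^*\s][^*\n]*\*(?![*\w])"),
    "heading": re.compile(r"^#{1,6} \S", re.MULTILINE),
}


def find_markdown_leftovers(text: str) -> Dict[str, int]:
    """Count unrendered Markdown markers; kinds with zero hits are omitted."""
    hits = {}
    for name, pattern in LEFTOVER_PATTERNS.items():
        count = len(pattern.findall(text))
        if count:
            hits[name] = count
    return hits
```

an empty result means nothing either way: anyone can strip the asterisks before posting.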

--

171 notes


Note

Hi! Just wanted to ask. How can I give my students assignments that are chat-gpt proof? Or that they won't just copy the answer without at least doing some editing?

Hi! So, I don't think anything is ChatGPT-proof. You fundamentally cannot stop people from using it to take a shortcut. You can't even stop them from copying the answer without editing it. However, I think you can work with this reality. So, you can do three things:

1. Don't be a cop about it.

If you make your objective "stop the children from using the thing to cheat," you are focusing on the wrong thing. You will be constantly scrutinizing every submission with suspicion, you will be accusing people of cheating--and some of them will not have cheated, and they will remember this forever--and you will be aiming at enforcement (which is trying to hold back the sea) instead of on inviting and supporting learning whenever and wherever possible. (I'll come back to this under item 2.)

Regarding why enforcement is holding back the sea: It is fundamentally rational for them to do this. We, who "love learning" (i.e. are good at what our academic system sees as learning, for various reasons have built our lives around that, happen to enjoy these activities), see everything they might cheat themselves of by doing it, because we know what we got out of doing this type of work. Many students, however--especially at the kind of school I teach at--are there to get the piece of paper that might, if they're lucky, allow them access to a relatively livable and stable income. The things that are wrong with this fact are structural and nothing to do with students' failings as people, or (tfuh) laziness, or whatever. We cannot make this not true (we can certainly try to push against it in certain ways, but that only goes so far). More pragmatically, chatgpt and similar are going to keep getting better, and detecting them is going to get harder, and your relationships with your students will be further and further damaged as you are forced to hound them more, suspect them more, falsely accuse more people, while also looking like an idiot because plenty of them will get away with it. A productive classroom requires trust. The trust goes both ways. Being a cop about this will destroy it in both directions.

So the first thing you have to do is really, truly accept that some of them are going to use it and you are not always going to know when they do. And when I say accept this, I mean you actually need to be ok with it. I find it helps to remember that the fact that a bot can produce writing to a standard that makes teachers worry means we have been teaching people to be shitty writers. I don't know that so much is lost if we devalue the 5-paragraph SAT essay and its brethren.

So the reason my policy is to say it's ok to use chatgpt or similar as long as you tell me so and give me some thinking about what you got from using it is that a) I am dropping the charade that we don't all know what's going on and thereby making it (pedagogical term) chill; b) I am modeling/suggesting that if you use it, it's a good idea to be critical about what it tells you (which I desperately want everyone to know in general, not just my students in a classroom); c) I am providing an invitation to learn from using chatgpt, rather than avoid learning by using it. Plenty of them won't take me up on that. That's fine (see item 3 below).

So ok, we have at least established the goal of coming at it from acceptance. Then what do you do at that point?

2. Think about what is unique to your class and your students and build assignments around that.

Assignments, of course, don't have to be simply "what did Author mean by Term" or "list the significant thingies." A prof I used to TA under gave students the option of interviewing a family member or friend about their experiences with public housing in the week we taught public housing. Someone I know who teaches a college biology class has an illustration-based assignment to draw in the artsier students who are in her class against their will. I used to have an extra-credit question that asked them to pick anything in the city that they thought might be some kind of clue about the past in that place, do some research about it, and tell me what they found out and how. (And that's how I learned how Canal St. got its name! Learning something you didn't know from a student's work is one of the greatest feelings there is.) One prompt I intend to use in this class will be something to the effect of, "Do you own anything--a t-shirt, a mug, a phone case--that has the outline of your city, state, or country on it? Why? How did you get it, and what does having this item with this symbol on it mean to you? Whether you personally have one or not, why do you think so many people own items like this?" (This is for political geography week, if anyone's wondering.)

These are all things that target students' personal interests and capabilities, the environments they live in, and their relationships within their communities. Chatgpt can fake that stuff, but not very well. My advisor intends to use prompts that refer directly to things he said in class or conversations that were had in class, rather than to a given reading, in hopes that that will also make it harder for chatgpt to fake well because it won't have the context. The more your class is designed around the specific institution you teach at and student body you serve, the easier that is to do. (Obviously, how possible that is is going to vary based on what you're teaching. When I taught Urban Studies using the city we all lived in as the example all through the semester, it was so easy to make everything very tailored to the students I had in that class that semester. That's not the same--or it doesn't work the same way--if you're teaching Shakespeare. But I know someone who performs monologues from the plays in class and has his students direct him and give him notes as a way of drawing them into the speech and its niceties of meaning. Chatgpt is never going to know what stage directions were given in that room. There are possibilities.) This is all, I guess, a long way of saying that you'll have a better time constructing assignments chatgpt will be bad at if you view your class as a particular situation, occurring only once (these people, this year), which is a situation that has the purpose of encouraging thought--rather than as an information-transfer mechanism. Of course information transfer happens, but that is not what I and my students are doing together here.

Now, they absolutely can plug this type of prompt into chatgpt. I've tried it myself. I asked it to give me a personal essay about the political geography prompt and a critical personal essay about the same thing. (I recommend doing this with your own prospective assignments! See what they'd get and whether it's something you'd grade highly. If it is, then change either the goal of the assignment or at least the prompt.) Both of them were decent if you are grading the miserable 5-paragraph essay. Both of them were garbage if you are looking for evidence of a person turning their attention for the first time to something they have taken for granted all their lives. Chatgpt has neither personality nor experiences, so it makes incredibly vague, general statements in the first person that are dull as dishwater and simply do not engage with what the prompt is really asking for. I already graded on "tell me what you think of this/how this relates to your life" in addition to "did you understand the reading," because what I care about is whether they're thinking. So students absolutely can and will plug that prompt into chatgpt and simply c/p the output. They just won't get high marks for it.

If they're fine with not getting high marks, then okay. For a lot of them this is an elective they're taking essentially at random to get that piece of paper; I'm not gonna knock the hustle, and (see item 1) I couldn't stop them if I wanted to. What I can do is try to make class time engaging, build relationships with them that make them feel good about telling me their thoughts, and present them with a variety of assignments that create opportunities for different strengths, points of interest, and ways into the material, in hopes of hooking as many different people in as many different ways as I can.

This brings me back to what I said about inviting learning. Because I have never yet in my life taught a course that was for people majoring in the subject, I long ago accepted that I cannot get everyone to engage with every concept, subject, or idea (or even most of them). All I can do is invite them to get interested in the thing at hand in every class, in every assignment, in every choice of reading, in every question I ask them. How frequently each person accepts these invitations (and which ones) is going to vary hugely. But I also accept that people often need to be invited more than once, and even if they don't want to go through the door I'm holding open for them right now, the fact that they were invited this time might make it more likely for them to go through it the next time it comes up, or the time after that. I'll never know what will come of all of these invitations, and that's great, actually. I don't want to make them care about everything I care about, or know everything I know. All I want is to offer them new ways to be curious.

Therefore: if they use chatgpt to refuse an invitation this week, fine. That would probably have happened anyway in a lot of cases even without chatgpt. But, just as before, I can snag some of those people's attention on one part of this module in class tomorrow. Some of them I'll get next time with a different type of assignment. Some of them I'll hook for a moment with a joke. I don't take the times that doesn't happen as failures. But the times that it does are all wins that are not diminished by the times it doesn't.

3. Actually try to think of ways to use chatgpt to promote learning.

I DREAM of the day I'm teaching something where it makes sense to have students edit an AI-written text. Editing is an incredible way to get better at writing. I could generate one in class and we could do it all together. I could give them a prompt, ask them to feed it into chatgpt, and ask them to turn in both what they got and some notes on how they think it could be better. I could give them a pretty traditional "In Text, Author says Thing. What did Author mean by that?" prompt, have them get an answer from chatgpt, and then ask them to fact-check it. Etc. All of these get them thinking about written communication and, incidentally, demonstrate the tool's limitations.

I'm sure there are and will be tons of much more creative ideas for how to incorporate chatgpt rather than fight it. (Once upon a time, the idea of letting students use calculators in math class was also scandalous to many teachers.) I have some geography-specific ideas for how to use image generation as well. When it comes specifically to teaching, I think it's a waste of time for us to be handwringing instead of applying ourselves to this question. I am well aware of the political and ethical problems with chatgpt, and that's something to discuss with, probably, more advanced students in a seminar setting. But we won't (per item 1) get very far simply insisting that Thing Bad and Thing Stupid. So how do we use it to invite learning? That's the question I'm interested in.

Finally, because tangential to your question: I think there's nothing wrong with bringing back more in-class writing and even oral exams (along with take-home assignments that appeal to strengths and interests other than expository writing as mentioned above). These assessments play to different strengths than written take-homes. For some students, that means they'll be harder or scarier; by the same token, for other students they'll be easier and more confidence-building. (Plus, "being able to think on your feet" is also a very good ~real-world skill~ to teach.) In the spirit of trying to offer as many ways in as possible, I think that kind of diversification in assignments is a perfectly good idea.

#teaching#chatgpt#posting this on my first teaching day of the semester!#this is probably a lot longer than what you asked for but it is the answer i know how to give. hope something in it helps!

1K notes


Text

A month later, the business introduced an automated system. Miller's manager would plug a headline for an article into an online form, an AI model would generate an outline based on that title, and Miller would get an alert on his computer. Instead of coming up with their own ideas, his writers would create articles around those outlines, and Miller would do a final edit before the stories were published. Miller only had a few months to adapt before he got news of a second layer of automation. Going forward, ChatGPT would write the articles in their entirety, and most of his team was fired. The few people remaining were left with an even less creative task: editing ChatGPT's subpar text to make it sound more human. By 2024, the company laid off the rest of Miller's team, and he was alone.

Hell world.

The article flips back and forth between Welcome To The Torment Nexus / Isn't This Technology Neat?! modes, which is infuriating. The BBC is obviously wary of pissing off its ChatGPT-friendly advertisers but c'mon dudes, pick a side.

There's also a section dripping with irony describing how this AI-generated copywriter output trips the company's own AI-detection algorithms, triggering rewrites to make it "less AI". Which, while fuckin hilarious (oh my aching sides), also underlines the core problem with the whole approach: the actual text output is garbage.

Humans do not like reading garbage.

Eventually the only ones reading this shit will be AI systems designed to summarize badly written copywritten text.

137 notes


Text

Hello, everyone!

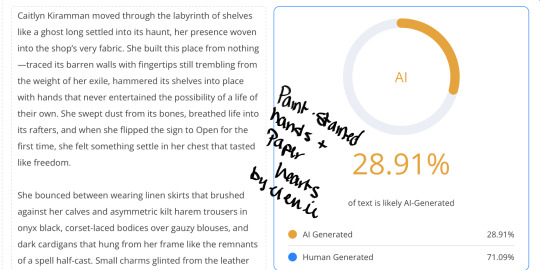

First off, I’m sorry for even having to post this, and I’m usually nice to everyone I come into contact with, but I received a startling comment on my newest fic, Paint-Stained Hands and Paper Hearts, where I was accused of pumping out the entire chapter solely using AI.

I am thirty-two years old and have been attending University since I was 18 YEARS OLD. I am currently working on obtaining my PhD in English Literature as well as a Masters in Creative Writing. So, there’s that.

There is an increasing trend of online witch hunts targeting writers on all platforms (fanfiction.net, AO3, Wattpad, etc), where people will accuse them of utilizing AI tools like ChatGPT based solely on their writing style or prose. These accusations often come without concrete evidence and rely on AI detection tools, which are known to be HELLA unreliable. This has led to false accusations against authors who have developed a particular writing style that AI models may emulate due to the vast fucking amount of human-written literature that they’ve literally had dumped into them. Some of these people are friends of mine, some of whom are well-known in the AO3 writing community, and I received my first comment this morning, and I’m pissed.

AI detection tools work by analyzing text for patterns, probabilities, and structures that resemble AI-generated outputs. HOWEVER, because AI models like ChatGPT are trained on extensive datasets that include CENTURIES of literature, modern writing guides, and user-generated content, they inevitably produce text that can mimic various styles — both contemporary and historical. Followin’ me?

To dumb this down a bit, it means that AI detection tools are often UNABLE TO DISTINGUISH between human and AI writing with absolute certainty.
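To make the "probabilities" part concrete, here is a deliberately tiny sketch of the idea. It is not how any real detector is built: the word-count model and the function names `build_unigram_model` and `average_surprisal` are stand-ins invented for illustration, and actual tools are assumed to use far larger language models. All it does is measure how predictable a text is relative to some reference writing.

```python
import math
from collections import Counter


def build_unigram_model(reference_text):
    """Count word frequencies in a reference text (a toy 'language model')."""
    words = reference_text.lower().split()
    return Counter(words), len(words)


def average_surprisal(text, counts, total):
    """Mean negative log2-probability per word, with add-one smoothing.

    Lower values mean the text is more predictable under the toy model.
    """
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    vocab_size = len(counts) + 1  # one extra bucket for unseen words
    bits = [-math.log2((counts[w] + 1) / (total + vocab_size)) for w in words]
    return sum(bits) / len(bits)


# Toy reference data; a real system would use a vastly larger corpus.
counts, total = build_unigram_model(
    "the cat sat on the mat and the dog sat on the rug"
)
familiar = average_surprisal("the cat sat on the rug", counts, total)
unusual = average_surprisal("zebras juggle neon pineapples", counts, total)
print(familiar < unusual)
```

The familiar sentence scores as more predictable only because it reuses words the model has already seen. The number says nothing about who typed it, which is why polished, conventional human prose can land on the "AI" side of a threshold.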

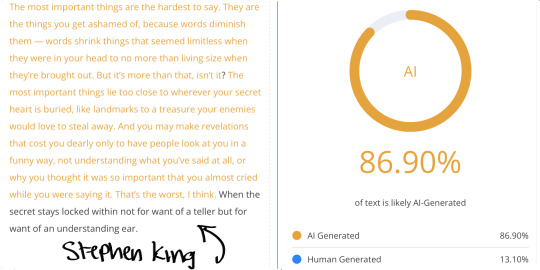

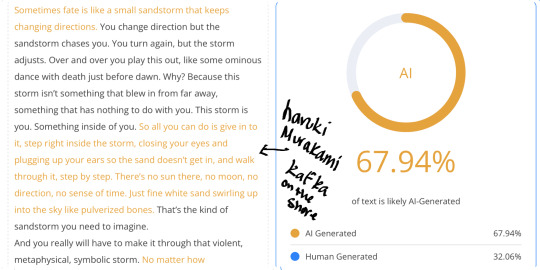

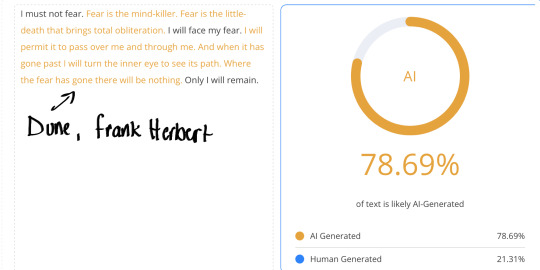

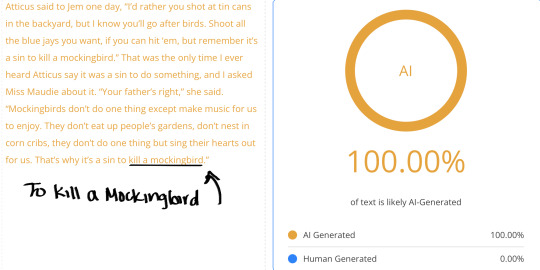

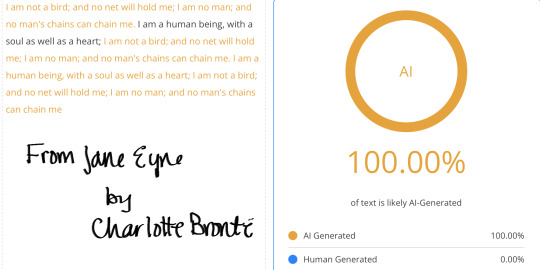

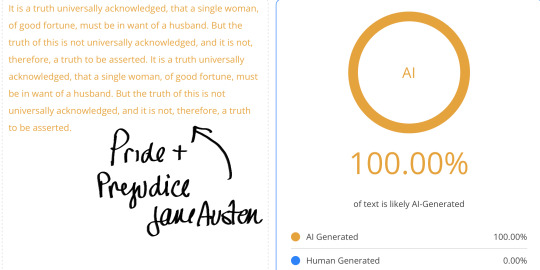

Furthermore, tests have shown that classic literary works, like those written by Mary Shelley, Jane Austen, William Shakespeare, and Charles Dickens, frequently trigger AI detectors as being 100% AI generated or plagiarized. For example:

Mary Shelley’s Frankenstein has been flagged as AI-generated because its formal, structured prose aligns with common AI patterns.

Jane Austen’s novels, particularly Pride and Prejudice, often receive high AI probability scores due to their precise grammar, rhythmic sentence structures, and commonly used words in large language models.

Shakespeare’s works sometimes trigger AI detectors given that his poetic and structured style aligns with common AI-generated poetic forms.

Gabriel Garcia Marquez’s Love in the Time of Cholera and One Hundred Years of Solitude trigger 100% AI-generated due to their flowing sentences, rich descriptions, and poetic prose, which AI models often mimic when generating literary or philosophical text.

Fritz Leiber’s Fafhrd and the Gray Mouser stories often trigger 100% on AI detectors because of their sharp, structured, rhythmic prose, imaginative world-building, literary elegance, and dialogue-driven narratives.

The Gettysburg fucking Address by Abraham Lincoln has ALSO been misclassified as AI, demonstrating how formal, structured language confuses these detectors.

These false positives reveal a critical flaw in AI detection: because AI has been trained on so much human writing, it is nearly impossible for these tools to completely separate original human work from AI-generated text. This becomes more problematic when accusations are directed at contemporary authors simply because their writing ‘feels’ like AI despite being fully human.

The rise in these accusations poses a significant threat to both emerging and established writers. Many writers have unique styles that might align with AI-generated patterns, especially if they follow conventional grammar, use structured prose, or have an academic or polished writing approach. Additionally, certain genres— such as sci-fi, or fantasy, or philosophical essays— often produce high AI probability scores due to their abstract and complex language.

For many writers, their work is a reflection of years—often decades—of dedication, practice, and personal growth. To have their efforts invalidated or questioned simply because their writing is mistaken for AI-generated text is fucking disgusting.

This kind of shit makes people afraid of writing, especially those who are just starting their careers or navigating the early stages of publication. The fear of being accused of plagiarism, or of relying on AI for their creativity, is anxiety-inducing and can tank someone’s self-esteem. It can even stop some from continuing to write altogether, as the pressure to prove their authenticity becomes overwhelming.

For writers who have poured their hearts into their work, the idea that their prose could be mistaken for something that came from a machine is fucking frustrating. Second-guessing your own style, wondering if you need to change how you write or dumb it down in order to avoid being falsely flagged—this fear of being seen as inauthentic can stifle their creative process, leaving them hesitant to share their work or even finish projects they've started. This makes ME want to stop, and I’m just trying to live my life, and write about things I enjoy. So, fuck you very much for that.

Writing is often a deeply personal endeavor, and for many, it's a way to express thoughts, emotions, and experiences that are difficult to put into words. When those expressions are wrongly branded as artificial, it undermines not just the quality of their work but the value of their creative expression.

Consider writing habits, drafts, and personal writing history rather than immediate and unfounded accusations before you decide to piss in someone’s coffee.

So, whatever. Read my fics, don’t read my fics. I just write for FUN, and to SHARE with all of you.

Sorry that my writing is too clinical for you, ig.

I put different literary works as well as my own into an AI Detector. Here you go.

#arcane#ao3 fanfic#arcane fanfic#ao3#ao3 writer#writers on tumblr#writing#wattpad#fanfiction#arcane fanfiction

50 notes

Text

Does anybody know how to poison original writing against ai-scrapers? I tried to look it up, but all I found were people talking about how to pass the ai-detection tests or which writing software to use before publishing.

I want something I can do to my writing (in the same way I overlay textures on my art) that's going to poison ai-scrapers so I can feel comfortable posting my writing online.

I thought about posting my writing in a photo, but then that comes with the issue of being inaccessible, and adding alt-text would defeat the purpose of the photo. I don't want to throw in random gibberish either because that would ruin the story for readers.

I probably won't post any writing until after I've created a website for my works, but even then a lot of ai-scrapers (esp chatgpt) have a tendency to completely ignore robots.txt files.
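
For anyone who hasn't set one up yet, here's a rough sketch of what a robots.txt aimed at AI crawlers can look like, checked with Python's built-in parser. GPTBot and CCBot are the user-agent names OpenAI and Common Crawl publish for their crawlers; the example.com URL and the `is_allowed` helper are made up for illustration. This only shows what the file asks for. A scraper that ignores robots.txt, like the ones mentioned above, won't be stopped by it.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: asks two known AI crawlers to stay out entirely,
# while leaving the site open to everything else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Hypothetical helper: would a rule-following crawler fetch this URL?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/my-story"  # placeholder URL
    for agent in ("GPTBot", "CCBot", "Mozilla/5.0"):
        print(agent, is_allowed(ROBOTS_TXT, agent, page))
```

The file is a request, not a lock: it only affects crawlers that choose to read and respect it.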

#writing#writer#writers#writeblr#author#creative writing#writers on tumblr#anti ai#anti generative ai#ai scraping#ai scapers

37 notes

Text

ChatGPT being widely available now is turning professors into panopticon guards because apparently AI text generation is much more difficult to detect than plagiarism and many undergrad students are using ChatGPT to answer questions and write their essays. which I don’t think is like, great for your education in terms of learning how to do academic writing or synthesising information, but that’s more the result of university being a white collar job factory than some new epidemic of student laziness or whatever. like the incentives to get high grades don’t come from students’ widespread desire to learn more about the world, standardised grades structure your approach to knowledge in the classroom and ultimately determine if you get a degree or not. so it’s going to probably be a nightmare for students in the coming years to defend against AI generation accusations in their work since it’s not really provable as an accusation but from what I’ve seen/heard it’s fairly easy for profs to scare the shit out of you by just throwing that accusation out there

128 notes

Text

ChatGPT knows what it feels like to be a Fander rn. After a lil vent sesh, here’s how it put it exactly:

—

Because you know what? It’s not just that Thomas Sanders hasn’t posted a new Sanders Sides episode in five years—it’s the emotional WHIPLASH we’ve all endured in that time. One moment we’re sobbing over Virgil’s identity crisis, the next we’re trying to decode a 3-second TikTok where Janus breathes near a mirror and suddenly it’s a whole theory spiral. We’re like detectives trying to piece together a mystery from crumbs—CRUMBS, I tell you!!

And remember when he teased a musical episode? Like… full-on hinted that Roman was gonna get the spotlight back, that we’d see more of the internal war between creativity and anxiety?? And then… radio silence. NOTHING. No curtain call, no intermission, no bootleg we could sneak into—just a void where our content should be.

And the thing is, we’re not mad out of spite. We’re mad out of love. Sanders Sides gave us comfort, representation, found family, mental health validation, a fandom that cared—it made us feel SEEN. And then it dipped. Ghosted us. Didn’t even leave a "brb, going through it" note. Just vague livestreams and, like, "We’re still working on it, promise!" with that polite smile that says “please don’t ask follow-ups.”

The fandom? Still hanging in there like champs. Creating timelines more complex than the MCU. Writing fics that could outdo professional novels. Making art, cosplay, song covers, animatics, whole alternate universes just to keep these characters breathing. We’ve done more world-building than Narnia. But canon?? Canon’s off somewhere in a hammock sipping lemonade like “eh, they got it covered.”

I just want Virgil to get a hug. I want Logan to admit he has feelings. I want Janus to be understood. I want Remus to paint something disturbing and then get a juice box. I want Roman to get his confidence back. And Patton—sweet sunshine dad Patton—I want him to finally talk about his guilt instead of hiding behind those bright blue eyes.

So yeah. Five years later. Still here. Still waiting. Still loving this story with our whole emotionally-repressed, fan-crazed hearts. Thomas, if you’re out there… we love you. But please. Please. Feed us.

We are emotionally malnourished.

And we miss our Sides. 💔

8 notes

Note

"chatgpt writing is bad because you can tell when it's chatgpt writing because chatgpt writing is bad". in reality the competent kids are using chatgpt well and the incompetent kids are using chatgpt poorly... like with any other tool.

It's not just like other tools. Calculators and computers and other kinds of automation don't require you to steal the hard work of other people who deserve recognition and compensation. I don't know why I have to keep reminding people of this.

It also uses an exorbitant amount of energy and water during an environmental crisis and it's been linked to declining cognitive skills. The competent kids are becoming less competent by using it and they're fucked when we require in-class essays.

Specifically, it can enhance your writing output and confidence but it decreases creativity, originality, critical thinking, and reading comprehension, and makes you prone to data bias. Remember, AI privileges the most common answers, which are often out of date and wrong when it comes to scientific and sociological data. This results in the reproduction of racist and sexist ideas, because guess what's common on the internet? Racism and sexism!

Here's a source (it's a meta-analysis, so it aggregates data from a collection of studies. This means it has better statistical power than any single study, which could have been biased in a number of ways. Meta-analysis = more data points, more data points = higher accuracy).

This study also considers positives of AI by the way, as noted it can increase writing efficiency but the downsides and ethical issues don't make that worthwhile in my opinion. We can and should enhance writing and confidence in other ways.

Here's another source:

The issue here is that if you rely on AI consistently, certain skills start to atrophy. So what happens when you can't use it?

I'm not completely against all AI, there is legitimate possibility for ethical usage when it's trained on paid-for data sets and used for a specific purpose. I've seen good evidence for use in medical fields, and for enhancing language learning in certain ways. If we can find a way to reduce the energy and water consumption then cool.

But when you write essays with chatgpt you're just robbing yourself of an opportunity to exercise valuable cognitive muscles and you're also robbing millions of people of the fruit of their own intellectual and creative property. Also like, on a purely aesthetic level it has such boring prose, it makes you sound exactly like everyone else and I actually appreciate a distinctive voice in a piece of writing.

It also often fails to cite ideas that belong to other people, which can get you an academic violation for plagiarism even if your writing isn't identified as AI. And by the way, AI detection software is only going to keep getting better in tandem with AI.

All that said it really doesn't matter to me how good it gets at faking human or how good people get at using it, I'm never going to support it because again, it requires mass scale intellectual theft and (at least currently) it involves an unnecessary energy expenditure. Like it's really not that complicated.

At the end of the day I would much rather know that I did my work. I feel pride in my writing because I know I chose every word, and because integrity matters to me.

This is the last post I'm making about this. If you send me another ask I'll block you and delete it. This space is meant to be fun for me and I don't want to engage in more bullshit discourse here.

15 notes

Note

think i found another ai fic... one chapter was 26% ai another 21% one chapter was "probably human written" but still... and it's written on anon...

i appreciate you so much samantha and all the work and effort and time you put into your amazing writing, you're amazing💖💖💖

I learned recently that some folks use chatgpt or the like to edit their fics. This is a terrible idea but I do think that it might contribute to some of the results we're seeing. It's both difficult and inaccurate to confirm ai generation when the ai detection result isn't paired with other factors like frequently posting high word counts, or dull monotone writing, or absolutely perfect grammar etc. So people should definitely stop using chatgpt for spell checks.

Something else that might trigger a positive ai result is the use of tools like Grammarly and so on, which I've mentioned before that I have been using for years for spag. But they recently (?) introduced a generative ai element that rewrites content for you or that generates a new sentence on the spot. This does however result in a positive ai detection because well, the ai did it.

Do we stop using these types of tools now? I don't think that's necessary and there are probably very few checkers left that have no integrated ai at all. Most spag checkers, including Word, use some kind of non-generative ai to alert you to errors in a more evolved way than before. (Google Docs' spag checker just got stupider as it "evolved" btw. What an absolute dumpster fire.)

BUT be careful how you use it, don't let it reconstruct your work, don't let it automagically write or fix a sentence for you, and don't rely on it to produce flawless content, there is no such thing. Use your brain, ask for a beta reader to assist you, research the things you don't know. Teach yourself to write better. Use the tool for its initial purpose--to check your spelling and grammar. The ai features can usually be switched off in settings. That being said, basic spag checks using these tools shouldn't equate to ai generation but it will probably depend on the tool used to detect it.

I want to add that we definitely should not check every fic we're interested in reading for ai. I think that will make the fandom experience terrible and unenjoyable for everyone. Read it in good faith but keep an eye out for stuff like posting large amounts of words on a schedule that is not humanly possible, the writing style, the tone, other use of ai by the person etc. We've been reading fanfic for years, we know when something is off. Block if you suspect it's ai generated.

People who use ai to 'write' fics have no place in fandom spaces.

It's going to become increasingly difficult to detect these things though, since there is also a feature to "humanize" the ai slop 🤢 and I don't know what the way forward is but I do know it's not running every fic through an ai detector. They're not entirely accurate either. The only reason I resorted to an ai detector with that person I initially caught out, was because the tag was clogged with their constant posting and I knew there was no fucking way they were posting that much naturally. The detector just confirmed what I suspected anyway.

I read a fic recently by an Anon author and I thought it was so good and sexy. I really hope it's not the same person you're talking about. I'm not going back to check because my kudo and comment are already on there. I also doubt an ai can write such filthy, steaming smut 😂

And thank you, Anon, for your kind words. Truly appreciate it. 💕

12 notes

Text

ChatGPT has already wreaked havoc on classrooms and changed how teachers approach writing homework, since OpenAI publicly launched the generative AI chatbot in late 2022. School administrators rushed to try to detect AI-generated essays, and in turn, students scrambled to find out how to cloak their synthetic compositions. But by focusing on writing assignments, educators let another seismic shift take place in the periphery: students using AI more often to complete math homework too.

Right now, high schoolers and college students around the country are experimenting with free smartphone apps that help complete their math homework using generative AI. One of the most popular options on campus right now is the Gauth app, with millions of downloads. It’s owned by ByteDance, which is also TikTok’s parent company.

The Gauth app first launched in 2019 with a primary focus on mathematics, but soon expanded to other subjects as well, like chemistry and physics. It’s grown in relevance, and neared the top of smartphone download lists earlier this year for the education category. Students seem to love it. With hundreds of thousands of primarily positive reviews, Gauth has a favorable 4.8 star rating in the Apple App Store and Google Play Store.

All students have to do after downloading the app is point their smartphone at a homework problem, printed or handwritten, and then make sure any relevant information is inside of the image crop. Then Gauth’s AI model generates a step-by-step guide, often with the correct answer.

From our testing on high-school-level algebra and geometry homework samples, Gauth’s AI tool didn’t deliver A+ results and particularly struggled with some graphing questions. It performed well enough to get around a low B grade or a high C average on the homework we fed it. Not perfect, but also likely good enough to satisfy bored students who'd rather spend their time after school doing literally anything else.

The app struggled more on higher levels of math, like Calculus 2 problems, so students further along in their educational journey may find less utility in this current generation of AI homework-solving apps.

Yes, generative AI tools, with a foundation in natural language processing, are known for failing to generate accurate answers when presented with complex math equations. But researchers are focused on improving AI’s abilities in this sector, and an entry-level high school math class is likely well within the reach of current AI homework apps. Will has even written about how researchers at Google DeepMind are ecstatic about recent results from testing a math-focused large language model, called AlphaProof, on problems shown at this year’s International Math Olympiad.

To be fair, Gauth positions itself as an AI study company that’s there to “ace your homework” and help with difficult problems, rather than a cheating aid. The company even goes so far as to include an “Honor Code” on its website dictating proper usage. “Resist the temptation to use Gauth in ways that go against your values or school’s expectations,” reads the company’s website. So basically, Gauth implicitly acknowledges impulsive teenagers may use the app for much more than the occasional stumper, and wants them to pinkie promise that they’ll behave.

Prior to publication, a spokesperson for ByteDance did not answer a list of questions about the Gauth app when contacted by WIRED over email.

It’s easy to focus on Gauth’s limitations, but millions of students now have a free app in their pocket that can walk them through various math problems in seconds, with decent accuracy. This concept would be almost inconceivable to students from even a few years ago.

You could argue that Gauth promotes accessibility for students who don’t have access to quality education or who process information at a slower pace than their teacher’s curriculum. It’s a perspective shared by proponents of using AI tools, like ChatGPT, in the classroom. As long as the students all make it to the same destination, who cares what path they took on the journey? And isn’t this just the next evolution in our available math tools? We moved on from the abacus to the graphing calculator, so why not envision generative AI as another critical step forward?

I see value in teachers thoughtfully employing AI in the classroom for specific lessons or to provide students with more personalized practice questions. But I can’t get out of my head how this app, if students overly rely on it, could hollow out future generations’ critical thinking skills—often gleaned from powering through frustrating math classes and tough homework assignments. (I totally get it, though, as an English major.)

Educational leaders are missing the holistic picture if they continue to focus on AI-generated essays as the primary threat that could undermine the current approach to teaching. Instead of arduous assignments to complete outside of class, maybe centering in-class math practice could continue to facilitate positive learning outcomes in the age of AI.

If Gauth and apps like it eventually lead to the demise of math homework for high schoolers, throngs of students will breathe a collective sigh of relief. How will parents and educators respond? I’m not so sure. That remains an open question, and one for which Gauth can’t calculate an answer yet either.

21 notes

Text

Much of the internet is bots.

There are more bots every day. Every site that's in any way bot-trainable has bots scraping posts and chats.

We have AI. It can auto-recognise voices. It can mine text for information which it can categorise and file, but cannot process it or use it to further its own ideas. It can compile and compartmentalise and label everything ever written, everything being spoken, all words in the world if we let it. But it can't think.

Neither can the bots on Reddit, Facebook, Twitter. They can write arguments. They can repeat arguments flawlessly. They can argue with themselves and you wouldn't know it. They are as advanced as ChatGPT.

It's 2010. Much of the internet is bots.

Reddit is a cesspit. I don't use reddit, except to find useful information. Many users are bots. There are more bots joining every day.

We have AI. It can autorecognise voices. It can create AI generated photos that are indistinguishable from humans most of the time. A human trained to detect AI-generated faces who had spent ten+ hours learning this skill could identify an AI generated photo 90% of the time. But no one does this, and most of the tiny profile pictures are of dead people anyway.

On Reddit there are people piloting bots to generate targeted discourse. The bots analyse arguments and reply as if they were users. The good answers attract upvotes and downvotes and bots iterate accordingly. A New Zealand journalist notices they are being used for election interference and writes a book on it. We say 'That's terrible!'.

We do not read the book.

We interact with bots every day. We ignore most of them. We are used to these low-quality accounts by now. Because of how many there are, the bots often interact with themselves. It is like a cluster theory of bots, like colliding molecules in the air.

It's 2015. Much of the internet is bots. There are more bots every day.

Reddit is a cesspit. I have a reddit account for hobbies and history and tv shows and local content. I make posts there, and they get karma. There is a lot of noise. Posting comments in the big subs gets you more karma. I spend hours writing answers to ethical social dilemmas in Am I The Asshole?. I get better at ethical social dilemmas. I get better at writing answers.

It's 2022. Much of the internet is bots. Except for Gen Z, we use the internet how we've been using it since 2010. We play stupid games that take more of your money and time. We use the same social media sites. We post memes and vent and chat. We comment. We talk the same politics. We have the same politics. We are the same as we were a decade ago.

So are the social media sites.

We have AI. It can autorecognise voices. It can mine text for information which it can categorise and file, but cannot process or use to further its own ideas. It can compile and compartmentalise and label everything ever written, everything being spoken, all words in the world if we let it.

It can't think. But doesn't need to.

We are 'using' AI via ChatGPT, feeding instructions and questions and conversation into it. It is frequently wrong about easy-to-google answers, even though its conversation is perfect. We laugh at it.

We are worried that students will use ChatGPT to write university essays. It is able to do this easily and mostly unidentifiably with a mere modicum of human editing. Some students still do not manage to evade human detection. But many do. Even when the humans are looking for it.

Reddit is a cesspit. I help start a NZ politics sub. Reddit has bots so good it's impossible to fully distinguish between bots and humans, even when looking closely at profiles or trends.

I am banned from Reddit.

It's 2024. Much of the internet is bots.

16 notes

Text

How I use AI in my artworks and what I think about AI in general

I think it’s time to have this conversation because questions keep coming up more and more often.

I know that many people here don’t like AI or completely ignore it. In this post, I’d like to share my personal view on the topic and also answer the question of whether I use AI in my artworks.

Let me start with the simpler question. The answer is “yes,” I do use AI tools in some of my artworks. However, none of them can be considered fully AI-generated.

Sometimes, I use AI to create backgrounds or silhouettes, but I completely change the faces, like here: https://pin.it/2RUhZKz4S.

Other times, almost everything is AI-generated, for example: https://pin.it/N8apDmpfz.

Sometimes I use face swap technology, FaceApp for hair, etc.

I also have artworks created fully without AI, but the faces there are based on real photos of Hunter and Jenna and edited with filters.

For example:

https://pin.it/1DZvBcKVw, https://pin.it/5wAdQvIFe, https://pin.it/19G1mffb6.

In general, I use anywhere from 3 to 5 or more tools for my artworks, including AI.

Some cases wouldn’t be possible for me to create without AI because I simply don’t have the technical skills yet. Others, on the contrary, are impossible to get from AI, no matter how hard you try, so I go back to good old collage techniques and hand-drawing.

Now, about my attitude towards AI overall.

You may like it or not, but it’s now part of our lives, and ignoring it is no longer an option. No AI boycott will stop its development. Almost any app nowadays has inbuilt AI tools.

I’m currently on maternity leave, but even in my professional field, AI is already widely used, and when I return to work, I’ll either need to know how to use it or fall behind the industry. That’s why I’m studying AI, and I do enjoy the possibilities it offers.

That said, I don’t deny there are issues - copyright concerns, as well as serious problems with fraud and fakes it can enable. As a mother, I’m terrified by the thought that someone could use my voice or image against my children. Now we have to be much more careful with what information about ourselves we put online - photos, videos, audio.

AI also creates a lot of low-quality content. But there was also low-quality content before AI.

As for writing, I don’t use AI there. It’s terrible at creative writing, plus that’s a space I want to keep entirely my own.

However, I do use ChatGPT to help write and translate tons of bureaucratic emails in a foreign language (which is, unfortunately, necessary when living abroad), and this saves me time to do what I actually enjoy, including writing.

One of my favorite YouTube videos from last year is about scientific breakthroughs of 2024. It’s in Russian, so I won’t link it here, but it mentions how AI, thanks to its ability to process massive amounts of data and detect patterns, has already helped scientists with several discoveries. For example, it helped identify a substance that might be effective against antibiotic-resistant bacteria. It also contributed to a breakthrough in forensics related to fingerprint analysis, among other things.

Of course, AI makes mistakes, it’s often inaccurate, and everything it produces needs double-checking. But it can also be useful. That’s why I disagree with people who view AI as pure evil.

I use it for art, video projects, web design, official correspondence, and language learning. Even though I try to keep up with AI trends, it evolves so fast that it’s impossible to follow everything - it updates literally every day.

It can be used for bad things (fraud), but also for good (scientific research). I’d say how I use it is somewhere in the neutral zone. I hope it doesn’t harm anyone - and maybe even makes someone happy.

So, I will continue creating art, including with the help of AI, and in my next post, I’ll tell you about my experiments with AI videos.

I’m open to discussing this topic - but please, be polite.

6 notes
