#clockspeed


Link

Gear up for a revolution in desktop processing power. Intel has unveiled its latest flagship, the 14th-generation Core i9-14900KS, a processor designed to push the boundaries of performance for both gamers and content creators. This successor to the i9-13900KS boasts a record-breaking maximum clock speed of 6.2 GHz, ushering in a new era of desktop computing. Let's delve deeper into the Intel Core i9-14900KS, exploring its features, specifications, and potential impact on the market.

Blazing Performance: Core i9-14900KS Specifications

The heart of the i9-14900KS lies in its core configuration. This 24-core processor packs 8 high-performance cores (P-cores) designed to tackle demanding tasks like video editing and 3D rendering, plus 16 efficient cores (E-cores) that handle background processes and keep multitasking smooth. Because each P-core runs two threads via Hyper-Threading while each E-core runs one, the chip exposes 32 threads (8 × 2 + 16 × 1), allowing it to work on many instruction streams simultaneously.

But the real headline is clock speed. The i9-14900KS has a base clock of 3.2 GHz for the P-cores and 2.4 GHz for the E-cores. With Intel Thermal Velocity Boost, which raises frequency further when thermal headroom permits, the P-cores can reach 6.2 GHz. This translates to significantly faster processing, especially for single-threaded workloads that benefit most from high clock speeds.

Power Delivery and Memory Support

The i9-14900KS has a 150W Processor Base Power rating (Intel's current term for TDP). Under heavy loads, it can draw up to its 253W Maximum Turbo Power. Additionally, an "extreme" power profile lets experienced users push the limit further, to a maximum turbo power of 320W. Such extreme power profiles require robust cooling to keep temperatures under control.

The i9-14900KS caters to the ever-growing memory demands of modern applications. It supports up to 192GB of DDR5 RAM at speeds of up to 5600 MT/s. This bandwidth moves data quickly between the CPU and memory, minimizing bottlenecks in memory-intensive tasks like video editing and complex simulations. For users who prefer DDR4, the i9-14900KS also supports DDR4 at speeds of up to 3200 MT/s.

Connectivity and Compatibility

The i9-14900KS isn't just about raw processing power; it also offers robust connectivity. The processor provides 20 PCIe lanes: 16 PCIe 5.0 lanes and four PCIe 4.0 lanes. These enable fast data transfer for high-performance graphics cards, solid-state drives (SSDs), and other peripherals, which helps in tasks such as gaming with high-resolution textures and working with large media files.

For motherboard compatibility, the i9-14900KS works with Z790 and Z690 chipsets. However, Intel recommends updating the motherboard BIOS to the latest version to ensure optimal performance and compatibility with the processor's advanced features.

Performance Gains and Target Audience

The 14th-generation Core i9-14900KS promises significant performance improvements over its predecessors. Benchmark results suggest up to a 15% overall performance increase compared to the i9-13900KS, and specific tasks like 3D rendering and content creation can see gains of up to 73%. These gains cater to a specific audience:

Gamers: The i9-14900KS unlocks the potential for ultra-high frame rates in even the most demanding games. With its high clock speeds and excellent single-threaded performance, it helps deliver smoother gameplay with fewer frame drops, even at high resolutions and with demanding graphics settings.

Content Creators: Editors, animators, and 3D modelers will appreciate the significant performance boost offered by the i9-14900KS. Faster rendering times allow for quicker project completion and increased productivity.

The Future of Desktop Processing: Conclusion

The arrival of the Intel Core i9-14900KS marks a significant leap in desktop processor technology. With its record-breaking clock speeds, impressive core configuration, and robust features, this CPU caters to the most demanding users, including hardcore gamers, content creators, and professionals who rely on exceptional processing power. While the price tag reflects its top-tier performance, the i9-14900KS is poised to become the heart of powerful custom-built PCs and pre-built desktops designed to handle the most intensive workloads.

FAQs

Q: How much faster is the i9-14900KS compared to the i9-13900KS?
A: Benchmarks suggest an overall performance increase of up to 15% over its predecessor. Specific tasks like 3D rendering can see even larger improvements, reaching up to 73%.

Q: Is the i9-14900KS compatible with my existing motherboard?
A: The i9-14900KS is compatible with Z790 and Z690 motherboards. However, for optimal performance, updating the motherboard BIOS to the latest version is highly recommended.

Q: When will the i9-14900KS be available in India?
A: While the processor is already available in some regions, an official launch date and pricing for the Indian market haven't been announced yet. Stay tuned for further updates.

Q: Is the i9-14900KS worth the price?
A: The i9-14900KS delivers top-tier performance, making it ideal for demanding users like gamers, content creators, and professionals. If you require the absolute best processing power for your needs, the price might be justifiable. However, for more budget-conscious users, alternative processors might offer a better value proposition.
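The thread count and memory figures in the article follow from simple arithmetic. The Python sketch below reproduces them under assumptions the article does not spell out: each P-core runs two threads (Hyper-Threading), each E-core runs one, and memory is dual-channel with a 64-bit (8-byte) data path per channel. The bandwidth it computes is a theoretical peak, not a measured result.

```python
# Sketch only: derives i9-14900KS thread count and theoretical peak memory
# bandwidth from the quoted specs. Assumes 2 threads per P-core, 1 per E-core,
# and 2 memory channels of 64 bits (8 bytes) each.

def thread_count(p_cores: int, e_cores: int, p_smt: int = 2, e_smt: int = 1) -> int:
    """Total hardware threads on a hybrid CPU."""
    return p_cores * p_smt + e_cores * e_smt


def peak_bandwidth_gbs(mt_per_s: int, channels: int = 2, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in decimal GB/s, ignoring all overhead."""
    return mt_per_s * 1e6 * bytes_per_transfer * channels / 1e9


print(thread_count(p_cores=8, e_cores=16))   # 32
print(round(peak_bandwidth_gbs(5600), 1))    # 89.6 (DDR5-5600)
print(round(peak_bandwidth_gbs(3200), 1))    # 51.2 (DDR4-3200)
```

Real-world bandwidth is lower than this peak because of refresh cycles, command overhead, and access patterns.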

#14thGenIntelCoreProcessor#clockspeed#compatibility#contentcreation#cores#CPU#desktopprocessor#Gaming#IntelCorei914900KS#IntelCorei914900KSDesktopProcessor#MemorySupport#PCIe#Performance#price#rendering#Threads

0 notes

Text

having very strange issues with my gpu. the amdgpu driver occasionally crashes, which makes my screen go black and sets the fan speed to 100%, the system at this point is still responsive, but a reboot is needed to have graphics again. there's also a mild static on my second monitor when GPU utilization is very high, such as when playing games, but this isn't present on windows for some reason, even when the clocks and power draw are identical, which makes me think it's the software. also, bizarrely, in its default configuration the linux drivers set the maximum clock speed to 3000mhz, which is 400mhz more than its maximum boost clock.

this baffled me because that's higher than even what the most aggressive overclocking guides recommend. I had to manually lower clocks (with LACT) to fix this, but for some reason the card still targets around 150mhz more than the user set (e.g. setting it to 2500mhz it will peak at 2650mhz). it is unstable in its default configuration, so I had to underclock it even more and undervolt it to make it more stable, despite this not being needed on windows (although admittedly I did test it less)

that being said, it somehow performs well undervolted and underclocked compared with the stock configuration on windows. in the dawntrail benchmark it's like 15% better with the clocks set to 100mhz less than stock. I've no fucking clue what's going on

11 notes


Note

I couldn't find it if it's been asked before, but what is a mule/mule kick?

A semiohazard is deleterious information that can be encoded into any stimulus. A tripwire is the medium that a semiohazard occupies, whether it’s a precise sight, sound, temperature sequence, or an injected thought. A mulekick is the effect of perceiving a hazard, ranging in severity from dizziness, to unconsciousness, to autoimmune response, to mind death. A mule can reproduce a predetermined semiohazard with its body. They are like walking bombs.

Whether a mule is immune to its own kick depends on how worthwhile their deployer deems the mule’s survival or reuse. Some mules are not immune, so when they detonate their semiohazard, they mulekick themselves and anyone who witnesses them. Some mules are immune for reasons I’ll describe, and they can be reused after detonation.

A mule can either be an organism (an animal/human, plant, microbe) or a seedlet (conscious artificial intelligence).

There are four methods of mitigating a semiohazard:

Having…

Fewer senses to perceive the semiohazard in the first place

More acidic whiterooms (mental immune system)

Slower clockspeed (cognition rate)

Fewer life systems vulnerable to mulekick (typically this means using a seedlet instead of a human since seedlets don’t have organs that can be shut off)

Seedlets are uniquely suited to deal in semiohazards. However, a human can don sensory cancellation apparel, be nootically subtracted (cognitively engineered before embryonic development) to have a slower clockspeed and potent whiteroom, have mulekick-vulnerable organs artificially replaced, and/or be born without certain senses at all (blind/deaf/anosmic/anaptic). These are called lightfooted soldiers since they don't "trip wires."

Slower clockspeed can only be created prenatally. It can either be fixed (clunky but consistent) or toggleable (finicky but versatile). Clockspeed can only be slowed, not quickened.

Some clockspeeds have an oscillating or randomized walk to disarm timing-based tripwires. In other words, the perceiver’s sense of time is warped, eliminating the threat of a hazard that needs to be perceived in exact sequence.

Having a slower rate at ingesting information allows a person’s whiteroom or accompanying mustard (a seedlet specialized in detecting/filtering semiohazards) to find and eliminate the hazard before it mulekicks the perceiver.

Lightfooted soldiers and semiotic disposal technicians can get away with preserving some of their senses when handling hazards. This is until the far future discovery of the theoretical universal silencer, or UNISILE, a semiohazard capable of killing organics and seedlets by targeting the anima, or measurable soul, via a minimally complex tripwire.

But they don’t have to worry about that right now.

327 notes


Text

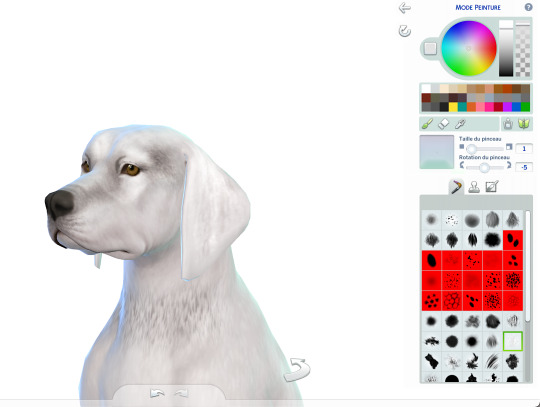

Some tricks to paint your animals in CAS

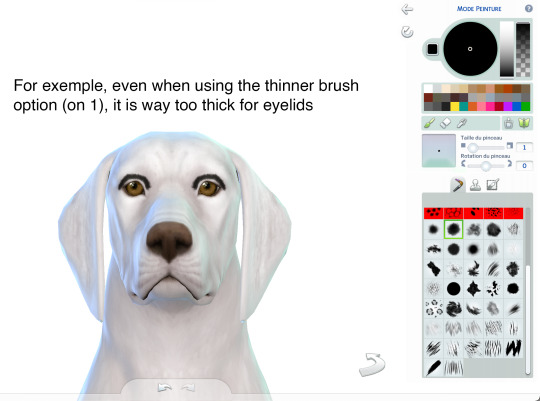

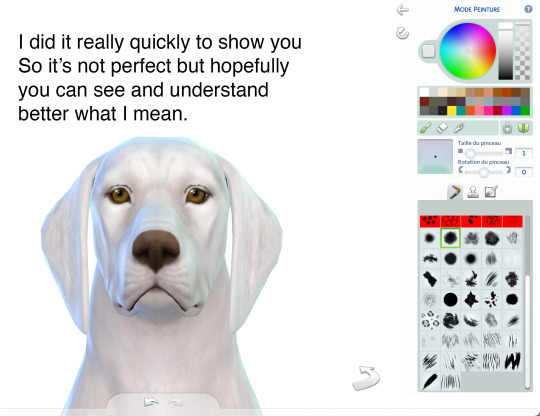

I won't make a tutorial because I wouldn't know where to start, and honestly, it's mostly through practice that you'll improve at painting. But here are a few tricks that might help you.

1. Enter the cheat code "cas.clockspeed 2" to almost pause time in CAS. When the animal is breathing too fast, it's much harder to paint details.
2. When you want to paint small details on your pet's face, click "edit details", then double-click anywhere else to exit detail painting mode without zooming out. You'll get a closer look at what you're doing.
3. Be aware that you can get a much darker black by pulling the slider lower than where it is set by default.

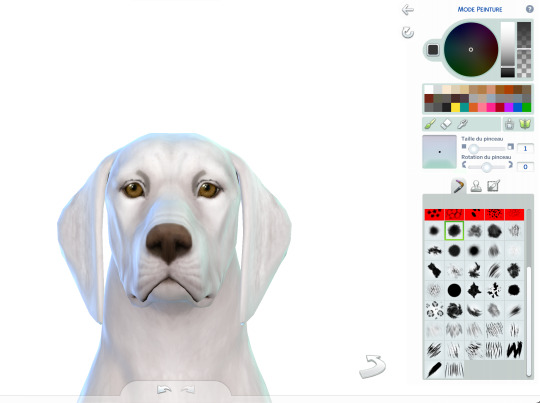

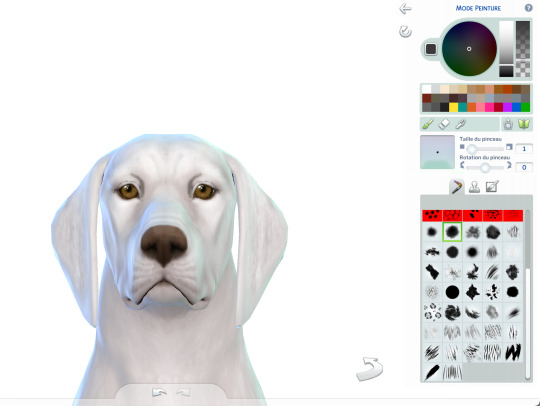

4. Use pictures as references. It can sound silly, but it isn't just for inspiration: it will really help you see where you are supposed to paint the highlights. Aaand they are really important, since they give your animals much more depth and, therefore, realism.
5. Paint the eyelids and the nose of your pets. Trust me, they will look much more expressive and realistic.
6. You can paint thinner lines with a simple technique. First, draw the line you want in the colour you want, usually darker than your pet's colour.

Once you've drawn your line the way you want it, select the pet's base colour (white in this example) and draw another line right next to the first one. It has to be very close, so that it erases some of the first line but not all of it.

Same here:

7. Make a hairy effect using the fur brushes. Use different ones: set them to the smallest option on the face and somewhat larger on the body, depending on the hair length. Also, you can start with a dark colour and draw lighter ones on top. Pay attention to the orientation of your brushes; respect the hair's direction.
8. Don't hesitate to mess around with different colours and opacity.
9. Use fur brushes with a darker colour to give some shadows and depth to your animals.

That's it, I'll update this post if I can think of anything else. I hope some of you will find it useful. Have fun ! ᵔᴥᵔ

#sims 4#ts4#s4#sims 4 pets#sims 4 cc finds#sims 4 cats and dogs#sims 4 dog#sims 4 tutorial#sims 4 tricks#sims 4 painting tricks#sims 4 help#sims 4 realistic pets

308 notes


Text

DO NOT WORRY ABOUT FLAMEWARS

One of the oldest rules of the internet.

The old internet was a wild-wild west. Back then, my high school was next to a university, so we had access to the internet before... pretty much anyone else did. I was told that it was impossible to search for porn on it.

Before this, I had found a way to hack the library's computer system. I didn't do anything with it, but it gave my computer access to more network power and priority.

So, when I found out about the internet thing, my first instinct was to try to break it. "Impossible" ended up being 3 clicks from the school's webpage. Did I ever do this again at school? No. But this was my introduction to Pamela Anderson's implants. It was only later that porn figured out the business model for online businesses, so most websites were:

hosted on someone's computer

entirely volunteer

works of passion

Before porn, the most used website was Slashdot, "News for Nerds, stuff that matters."

This was home to the Slashdot Effect, an accidental DDoS. Like I said, most websites were hosted at home. A megabit connection was something epic that only the biggest corporations had. Clock speeds were so low that games were tuned to them and increasing the clock speed would ruin them, so we had a button to slow it down.

When a news site with thousands of users links to you, your usage rate increases several thousand times. The world wide web was so small that you could - literally - visit every - single website on a topic.

So, what did nerds do on a worldwide communication tool?

Well, what do you think?

e-mail/bbs

Computing Science

Nerd Out

Dodge Nuclear Weapons

We argued, about anything and everything. One of the biggest topic was gay Star Trek erotica, because women have to ruin everything.

In a nerd environment, arguing is not just considered normal, it's considered good. If you don't have an impassioned argument on the internet about (nerd topic), do you even care about it? The answer is no.

Thanks to the near exclusivity of the nerd (or erotica) community, there was no fear of people bringing stupid human emotions into your passionate nerd debate.

Well, then someone put Pamela's Anderson's implants on the internet, and all of a sudden normies had a reason to come here. And now when you call someone a paedo gayfag who doesn't know the meaning of xor, and they go crying to the police. And then gayfags made it against the law to call them gayfags, instead of pulling up their goddamn big girl panties and arguing about which Star Trek ship is better.

To be fair, we now have vtubers. So, silver linings.

#yesteryear#internet#world wide web#www#flamewar#flame war#the modular kernel is so obviously superior that you know bill gates commits war crimes for insisting on a monolithic one#and publicly admitting he wants to depopulate the world with a forced vaccine.

3 notes


Text

This was an art piece for a pseudo "series" of art I made.

Mk #2-1 (MK.2 Series, Art Piece Number 1) "Clockspeed"

Probably going to put it on merch honestly I rly like this design

4 notes


Note

Imagine mech preds that have differing designs, sometimes the quality of acid is not as good because it's all the maker could afford, so digestion takes looong and you're just sloshing around in there while the engines hum and the machine does whatever it needs to.... Or a mech pred that's so high end and "fuel efficient" that it's capable of processing several humanoids in a really quick time, so it always has a high "intake"...

"Yeah, I'm an older model... they don't really make my acid composition anymore, and the new stuff corrodes my stomach lining... sorry. I don't know if that's even gonna get through your scales. Um. Let's just give it a few hours, okay?"

And cue a not-quite-endo ride as I hear it whirr into overtime sloooowly breaking me down. By the time it checks in on me I'm *too* digested to be spat out, but still clearly going to take days to work me over.

vs.

"I got a top-of-the-line gut. Wanna see?"

And with its high clockspeed I don't even get to respond before I'm gulped down, and I simply melt completely on contact with that acid, glorping in an instant to become instant fuel...

What I'm saying is they should both make out with me in the middle to see which fate I get....

#ask#kobbleyips#mech preds#imagining the former with a very boxy 70's style#and the latter as one of those sleek beveled macines

18 notes


Text

My laptop's thermals are so bad that I'm limiting my CPU to about 50% of its max clock speed just to play modded Minecraft at 25fps. I am truly one with those teenage girls playing modded Sims on a MacBook

#lmao shut up haz#I'm gonna take it somewhere to get the thermal paste replaced I think. That and get the dust blasted the hell out of it lol#it's about 3 years old and has seen some crazy use over that time so the paste being slightly worn out is hopefully the issue

8 notes


Text

Daylight saving time, but instead of your actual clock, your CPU's clock speed goes down or up by 0.5 GHz for every 1 GHz your CPU has.

2 notes


Text

Thinking about how we keep pushing for faster and faster clockspeeds, pushing our ability to force the voltage into a square wave in the allotted time, and how the brain doesn't need nearly the Hz. Squeezing blood from a stone while saying all the other stones are too inefficient.

Perhaps it's time that we reexamine the fundamental architecture. Asynchronous processing sounds interesting if you can wrap your head around it.

Also thinking about a relativistic approach, maybe the state of the whole machine doesn't need to matter, and each component only has to care about itself in relation to the things it is directly interacting with...

0 notes

Text

CUDIMM in Action: Case Studies and Practical Applications

The CUDIMM Standard Will Make Desktop Memory More Robust and a Little Smarter

While the new CAMM and LPCAMM memory modules for laptops have attracted notice in recent months, the PC memory sector is changing beyond mobile too. The desktop memory market will get a new DIMM type, the Clocked Unbuffered DIMM (CUDIMM), designed to increase DIMM performance. At this year's Computex trade expo, numerous memory makers displayed their first CUDIMM products, offering a glimpse into the future of desktop memory.

Clocked UDIMMs and SODIMMs are another answer to DDR5 memory's signal integrity issues. DDR5 allows fast transfer rates with removable (and easily installed) DIMMs, but further performance increases are running into the laws of physics: supporting memory on a stick is electrically challenging, especially with so many capacity/performance combinations on the market today. While these issues aren't insurmountable, DDR5 (and eventually DDR6) will need more electrically robust DIMMs to keep speeds growing, which is why the CUDIMM was created.

CUDIMMs, standardised by JEDEC earlier this year as JESD323, add a clock driver (CKD) to the unbuffered DIMM to drive the memory chips. By regenerating a clean clock locally on the DIMM, rather than using the clock signal from the CPU directly as is the case today, CUDIMMs can improve stability and reliability at high memory speeds and keep electrical issues from turning into reliability problems. A clock driver is needed to keep DDR5 running reliably at high clock speeds.

JEDEC recommends CUDIMMs for DDR5-6400 speeds and above, with the initial version covering DDR5-7200. On paper, the new DIMMs will be drop-in compatible with existing systems, using the same 288-pin socket as the regular DDR5 UDIMM and allowing a smooth transition to higher DDR5 clockspeeds.

CUDIMMs = Faster DDR5

High-clocked memory subsystems struggle to preserve signal integrity, especially over long distances and with many interconnections (e.g., multiple DIMMs per channel). Since UDIMMs are dumb devices, the memory controller/CPU and motherboard have traditionally carried this load. CUDIMMs make the DIMM itself smarter and help it maintain signal integrity.

The main change is the clock driver (CKD), which accepts the base clock signal and regenerates it for the memory components on the module. CKDs buffer and amplify the clock signal before driving it to the DIMM's memory chips, and they use signal conditioning features such as duty cycle correction to reduce jitter and clock timing fluctuations.

Another important CKD function is minimising clock skew, the difference in arrival times of the clock signal at various components. By matching the propagation delay of each clock path, the CKD keeps the memory chips on the DIMM synchronised.
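A simplified way to picture skew compensation: treat each CKD output path as having a fixed propagation delay, then pad the faster paths so every clock edge arrives together with the slowest one. The Python sketch below is a hypothetical model for illustration only; the function names and picosecond values are made up, and a real CKD does this in hardware with PLL and phase-adjustment circuitry rather than in software.

```python
# Hypothetical model of clock-skew compensation (not a real CKD algorithm).

def skew_ps(delays_ps: list[float]) -> float:
    """Clock skew: spread between the earliest and latest arrival times."""
    return max(delays_ps) - min(delays_ps)


def delay_padding_ps(delays_ps: list[float]) -> list[float]:
    """Extra delay each path needs so it arrives together with the slowest path."""
    slowest = max(delays_ps)
    return [slowest - d for d in delays_ps]


# Made-up propagation delays (picoseconds) from a CKD to four DRAM chips.
paths = [112.0, 98.5, 120.0, 105.0]
padding = delay_padding_ps(paths)
aligned = [d + p for d, p in zip(paths, padding)]

print(skew_ps(paths))    # 21.5
print(padding)           # [8.0, 21.5, 0.0, 15.0]
print(skew_ps(aligned))  # 0.0
```

Padding toward the slowest path is the simplest equalisation strategy; it trades a little added latency for zero skew in this idealised model.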

Phase adjustment lets the CKD align the clock signal with component timing requirements, which means more work for the memory module builder. Many memory module makers have yet to demonstrate their CKD-enabled devices, since they are still learning the technology. Embedding clock drivers in DIMMs isn't a novel idea, though: a CUDIMM is essentially a scaled-down version of the Registered DIMM (RDIMM), which has been used in servers for years and is the only DDR5 DIMM type supported by Intel's and AMD's server (and workstation) CPUs.

RDIMMs buffer the command and address buses along with the clock signal, but CUDIMMs just buffer the clock signal. In that sense, CUDIMMs are half-RDIMMs.

While some CPU designers would be thrilled if all systems used RDIMMs (and ECC), consumer PC economics favour cheaper and simpler solutions where available. A JEDEC-standard CKD has 35 pins, about half of which are voltage/ground pins. CKDs add to DIMM construction costs, but CUDIMMs are deliberately cheaper to build than RDIMMs.

CKDs will be available for all JEDEC DDR5 memory form factors: clocked SODIMMs, CUDIMMs, and DDR5 CAMM2 memory modules will all need clock drivers at high speeds. Because the need for a clock driver depends on memory frequency, CUDIMMs and their variants remain backwards compatible with existing DDR5 systems and memory controllers, and CUDIMMs use the same 288-pin DIMM slot as regular DDR5 DIMMs.

A CUDIMM's CKD can either drive the clock signal through its PLL buffers or operate in PLL Bypass mode, passing the clock through. Bypass mode is only supported up to DDR5-6000 (a 3000MHz clock), so JEDEC-compliant DIMMs will use CKD mode (single or dual PLL) at DDR5-6400 and higher. This lets a CUDIMM run in bypass mode with a slower or older DDR5 memory controller, while DIMMs without a CKD can't reach the higher speeds (at JEDEC-standard voltages and timings).
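The speed thresholds above reduce to a simple rule. The Python sketch below encodes only the figures given in this article (bypass up to DDR5-6000, CKD mode from DDR5-6400) plus the double-data-rate relationship that makes DDR5-6000 a 3000MHz clock. The constant and function names are hypothetical, and this is an illustration, not a JEDEC-defined procedure.

```python
# Thresholds taken from the article; names are hypothetical.
BYPASS_MAX_MTS = 6000  # PLL Bypass supported up to DDR5-6000
CKD_MIN_MTS = 6400     # JEDEC-compliant CUDIMMs use CKD mode from DDR5-6400


def clock_mhz(mt_per_s: int) -> float:
    """DDR moves data on both clock edges, so the clock is half the transfer rate."""
    return mt_per_s / 2


def ckd_mode(mt_per_s: int) -> str:
    """Return which CKD operating mode the article's rules allow at a given speed."""
    if mt_per_s <= BYPASS_MAX_MTS:
        return "PLL bypass supported"
    if mt_per_s >= CKD_MIN_MTS:
        return "CKD mode (single or dual PLL) required"
    return "between the thresholds the article gives"


for speed in (4800, 6000, 6400, 7200):
    print(f"DDR5-{speed}: {clock_mhz(speed):.0f} MHz, {ckd_mode(speed)}")
```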

CUDIMMs, CSODIMMs at Computex

Biwin, G.Skill, TeamGroup, and V-Color displayed CUDIMMs and CSODIMMs at Computex. Memory vendors aren't revealing many details because these new DIMMs are tied to new systems. However, since they displayed the hardware, don't be surprised if the modules reach production systems (and retail shelves) shortly.

While Biwin already sells high-performance products under the Acer Predator brand, enthusiast-grade memory modules are a newer line for the company. Its 16 GB and 32 GB modules can operate at 6400–8800 MT/s, which is faster than "regular" enthusiast-grade DIMMs. These modules will launch in September.

G.Skill, a longtime enthusiast memory vendor, showed its Trident Z5 CK CUDIMMs at Computex. The company did not highlight performance results, perhaps because it is still refining its CKD-enabled products rather than chasing records. After all, G.Skill has already shown a heavily overclocked system running ordinary DDR5 modules at DDR5-10600, so early CUDIMMs look less impressive by comparison.

At the trade show, V-Color displayed CUDIMMs and CSODIMMs, showing that it is taking advantage of CKD chips for high-performance memory. The company plans to sell 16 GB and 24 GB CUDIMMs with performance bins between 6400 MT/s and 9000 MT/s at 1.1 V–1.45 V. This is meant to demonstrate the benefits of clocked unbuffered memory modules, as 9000 MT/s is faster than any widely available enthusiast-class memory kit.

Four of the many high-performance memory module providers showed CUDIMMs at Computex, but only two discussed CUDIMM performance (TeamGroup's demonstration seemed incomplete). Since the JEDEC standard has been in place for about half a year, many more PC memory providers should join them soon.

Read more on govindhtech.com

#CUDIMM#LPCAMM#DDR5#DDR5memory#ddr6#memorychip#pcs#cpu#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Note

question: would nosciocircus (i Will spell it right one day) Kinger be rehabbable or would the shock of being disconnected effectively just euthanize him

“Nosciocircus” is a valid spelling, it’s more phonetic!

Kinger is in an incredibly difficult spot for rehabilitation, but he carries some unexpected aces.

He has been sieved for years and his body’s receptiveness to a middleman is weak. Despite this, he has unintentionally prepared himself for blindness by covering his avatar’s “eyes” in the latter years of insertion. His flashes of sharp consciousness continue into his new life, but it takes a year of nootic remapping therapy to grip tight enough to a communication schema to prove it.

While in the circus, Kinger’s whiteroom (mental immune system) channeled one of the mulekicks into his clockspeed (cognitive rate) as a desperate measure against boredom, grief, and unpredictable damage to mental architecture. As a result, the long hours in silence may pass calmly and with less cognitive activity than expected. This unfortunately also impacts his reaction speeds.

Once he can express yes-or-no, he is given the option of euthanasia. He chooses against it, but not lightly.

93 notes


Text

Nothing Phone (2a): The Midrange Killer?

Nothing's "a" series was launched to give consumers a premium experience for under Rs.30,000.

With the launch of this phone, Nothing has shown the world that it is focusing on the Indian market and taking Indian consumers very seriously as its customers. This is the reason Nothing launched this phone in India.

The Nothing Phone (2a) was launched on 5th March, 2024. It comes with a 6.7" FHD+ flexible AMOLED touchscreen display with a resolution of 1080x2412 px, which makes colors look vivid and beautiful. The display has a 120 Hz refresh rate, which makes the touch experience ultra smooth and lag-free.
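For context on the resolution figure, pixel density can be worked out from the numbers above: the diagonal pixel count divided by the diagonal size in inches. This Python sketch assumes the marketed 6.7-inch diagonal is exact; since screen sizes are rounded, treat the result as approximate.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal resolution divided by diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in


print(round(ppi(1080, 2412, 6.7)))  # 394
```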

It has a MediaTek Dimensity 7200 Pro processor, a 5G chip built on an efficient 4nm process with clock speeds as high as 2.8 GHz. It supports 13 5G bands globally, which makes this smartphone future-ready. It runs Nothing OS 2.5, based on Android 14.

Now, talking about the camera, it has a dual rear camera setup plus a front camera. Both rear cameras are 50MP: one is the main camera and the other is an ultrawide. In normal conditions the cameras provide good colors with good dynamic range. The main sensor has OIS support for videography. It comes with a plethora of basic features like slow motion, time-lapse, portrait mode and panorama mode. The photo quality is satisfactory. The front camera is a 32MP shooter that provides near-natural skin tones, which is a good thing Nothing has done. After you take a shot, the AI processes the image, so it takes a few seconds, but the wait is worth it.

In short, the front camera is really good.

Now, the best part of this product, and the one the brand highlights the most, is the design of the phone. Nothing wants to be recognized for uniquely designed phones, especially through its "Glyph light", which makes them stand out from the competition. The Glyph light is a hit-or-miss feature: consumers may or may not like it, but it is really useful in certain ways. The phone has a plastic back that picks up fingerprints easily if used without a case.

Nothing has done a great job of keeping the overall weight in check despite packing a massive 5000 mAh battery, which lasts up to one whole day on a single charge. The phone weighs around 190 g. It also supports fast charging, which charges the battery from 0 to 100% in less than an hour. Nothing has also done a good job by offering two beautiful and timeless color options: black and white.

When you open the box, you will first see the device itself. Below the device, the box contains documentation, a SIM card tool and a USB Type-C to Type-C cable. No charger is included in the box; you have to buy it separately.

So, talking about reviews of this phone, Nothing has done a really good job, considering this is only its third phone. They have tried to provide a premium flagship experience at an affordable price, and this phone has really increased the competition among its rivals. It comes in 3 variants: Rs.23,999 for 8GB RAM + 128GB storage, Rs.25,999 for 8GB RAM + 256GB storage, and Rs.27,999 for 12GB RAM + 256GB storage. I would say the phone is best priced under Rs.25,000 for the specifications it offers. It is surely a value-for-money smartphone. So if you have a decent budget and are willing to buy a new smartphone, then you can look at this phone as a prime choice.

Nothing Phone (2a) Full Specifications

SPECIFICATIONS:

1. BASIC

Brand: Nothing

Model: Phone (2a)

Price in India: Rs.23,999 (8GB + 128GB), Rs.25,999 (8GB + 256GB), Rs.27,999 (12GB + 256GB)

Release date: 5th March, 2024 (released)

Weight: 190 g (approx.)

Battery capacity: 5000 mAh

Fast charging: Yes (45W wired)

Wireless charging: No

Colors: Black and White

2. SCREEN (DISPLAY)

Refresh rate: 120 Hz

Screen size: 6.7" flexible AMOLED

Touchscreen: Yes

Resolution: 1080x2412 pixels

Protection type: Corning Gorilla Glass 5

Screen-to-body ratio: 91.65%

Touch sampling rate: 240 Hz

3. PERFORMANCE

CPU cores: Octa-core

Processor: MediaTek Dimensity 7200 Pro

RAM: 8GB, 12GB

Internal storage: 128GB, 256GB

Expandable storage: No

Expandable RAM: Up to 20GB (12GB + 8GB virtual)

4. CAMERA CONFIGURATION

Rear camera: 50MP main (f/1.9) + 50MP ultrawide (f/2.2, 114° field of view)

Number of rear cameras: 2

Rear flash: Yes

Front (selfie) camera: 32MP (f/2.2, wide)

5. OPERATING SYSTEM

OS: Android 14

Software: Nothing OS 2.5

6. DESIGN

Back: Hard plastic

Type: Transparent

Additional features: Glyph lighting

7. CONNECTIVITY FEATURES

Wi-Fi: Yes, 802.11

Bluetooth: Yes, v5.3

NFC: Yes

USB Type-C: Yes

Headphone jack: No

5G: Yes (supports 13 bands globally)

8. SECURITY

Fingerprint sensor: Yes (in-display)

Face unlock: Yes

1 note


Text

HP 17 Laptop, 17.3” HD+ Display, 11th Gen Intel Core i3-1125G4 Processor, 32GB RAM, 1TB SSD, Wi-Fi, HDMI, Webcam, Windows 11 Home, Silver

Price: (as of – Details)

Key Features and Benefits:

CPU: 11th Gen Intel Core i3-1125G4 Processor (4 Cores, 8 Threads, 8MB L3 Smart Cache, Clock speed 2.0 GHz, Up to 3.7 GHz at Max Turbo Frequency)

Memory: Up to 32GB DDR4 RAM

Hard Drive: Up to 2TB PCIe NVMe M.2 Solid State Drive

Operating System: Windows 11 Home

Graphics: Intel UHD Graphics

Display: 17.3″ diagonal, HD+ (1600 x 900), anti-glare, 250…


0 notes

Text

But compared to a Phoenix (1) chip with Zen 4 cores, this is a significant and notable difference. Whereas all 8 cores on Phoenix can get to 4GHz+ when power and thermal conditions allow it, there’s no surpassing the lower clockspeeds of Zen 4c. In that respect, Zen 4c is not equivalent to Zen 4; it’s markedly slower. In practice, things aren’t going to be this disparate, of course. In a 15W device there’s little room for a 6/8 core Zen 4 setup to hit those clockspeeds, and we have no reason to doubt the accuracy of AMD’s performance graphs from their slides. Phoenix 2 probably is more efficient – and thus higher scoring – in heavily multithreaded scenarios. But the central problem remains: AMD is not doing themselves a favor by failing to disclose the maximum clockspeeds of the Zen 4c cores. Despite AMD’s desire to paper over the differences, Zen 4 and Zen 4c are not identical CPU cores. Zen 4c is for all practical purposes AMD’s efficiency core, and it needs to be treated as such. Which is to say that its clockspeeds need to be disclosed separately from the other cores, similar to the kind of disclosures that Intel and Qualcomm make today.

yeah but also they made their efficiency core logically equivalent to their performance core that's so interesting

#wow anandtech still occasionally puts out stuff worth reading#now do it more than once every few months. pls.

GeForce or … 🤔! | List of Best Graphics Cards

Intro: You may ask, “GeForce or … 🤔!” Here are the top GPUs for gaming:

The GPU is the beating heart of any gaming PC: it pours the pixels onto your screen. While there is no one-size-fits-all answer, we’re here to present some options. Some people want the fastest graphics card, others want the best value, and still others want the best GPU their budget allows. Because no other component has as much impact on your gaming experience as your graphics card, it’s critical to strike a good balance between performance, price, features, and efficiency.

The GPUs below are sorted by performance and our own rankings. We weigh performance, price, power, and features before writing each review, though opinions will undoubtedly differ. Furthermore, with prices as volatile as they are nowadays, it’s very hard to rank anything definitively.

1. GeForce RTX 3080:

SPECIFICATIONS: GPU: Ampere (GA102)

GPU Cores: 8704

Boost Clock: 1,710 MHz

Video RAM: 10GB GDDR6X 19 Gbps

TDP: 320 watts

Advantages: 1. Can run 4K Ultra at 60 frames per second. 2. Significantly quicker than previous-generation GPUs. 3. Good price compared to the 3090. 4. Good performance.

Disadvantages: 1. Power consumption is 320W. 2. Usually costs a lot more than its list price.

GeForce RTX 3080 Performance: Nvidia’s GeForce RTX 3080 uses the upgraded Ampere architecture. It’s about 30% faster than the previous-generation RTX 2080 Ti. This is the card you should get if you want to play at 4K or 1440p.

Ampere adds improved tensor cores for DLSS. A huge number of games now use DLSS 2.0, partly because enabling it in Unreal Engine and Unity takes little more than a toggle and a UI update. Nvidia’s ray tracing and DLSS performance is also far better than that of AMD’s new RX 6000 cards, which helps offset the fact that Nvidia can lag behind in traditional rasterization performance.

2. Radeon RX 6800 XT:

SPECIFICATIONS: GPU: Navi 21 XT

GPU Cores: 4608

Boost Clock: 2,250 MHz

Video RAM: 16GB GDDR6 16 Gbps

TDP: 300 watts

Advantages: 1. The new RDNA 2 architecture provides outstanding performance. 2. Beats the 3080 in rasterized games. 3. Well suited to 4K and 1440p resolutions.

Disadvantages: 1. Ray tracing performance is poor.

Radeon RX 6800 XT Performance: In comparison to the RX 5700 XT, the RX 6800 XT offers a significant increase in performance and functionality. It adds ray tracing functionality and speeds up our test suite by 70-90 percent.

Radeon RX 6800 XT Clock Speed: Navi 21 doubles both the RAM and the number of shader cores of Navi 10. Clock rates also rise by around 300 MHz, to 2.1-2.3 GHz depending on the card model, which is a very high clock speed for a GPU. AMD managed this without significantly increasing power consumption: the RX 6800 XT’s TDP is 300W, a little less than the RTX 3080’s 320W.
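
The shader counts and clock speeds above can be turned into a rough theoretical peak. A minimal sketch, assuming the common rule of thumb of 2 FP32 operations per shader per clock (one fused multiply-add) and the RX 5700 XT’s 2,560 shaders at a 1,905 MHz boost clock, which are not listed in this article; `peak_fp32_tflops` is a hypothetical helper name.

```python
def peak_fp32_tflops(shaders: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes every shader retires one fused multiply-add (2 FLOPs) per clock.
    """
    return shaders * 2 * boost_mhz * 1e6 / 1e12


rx_6800_xt = peak_fp32_tflops(4608, 2250)  # Navi 21 XT, from the spec list above
rx_5700_xt = peak_fp32_tflops(2560, 1905)  # Navi 10, assumed reference figures

print(f"RX 6800 XT: {rx_6800_xt:.2f} TFLOPS")
print(f"RX 5700 XT: {rx_5700_xt:.2f} TFLOPS")
print(f"Theoretical ratio: {rx_6800_xt / rx_5700_xt:.2f}x")
```

The theoretical ratio comes out a little above 2x, while real games rarely reach peak throughput, which fits the 70-90 percent gains measured in practice.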

Radeon RX 6800 XT Infinity Cache: The huge 128MB Infinity Cache is responsible for a large portion of AMD’s speed. According to AMD, the effective bandwidth has improved by 119 percent.

3. GeForce RTX 3090:

SPECIFICATIONS: GPU: Ampere (GA102)

GPU Cores: 10496

Boost Clock: 1,695 MHz

Video RAM: 24GB GDDR6X 19.5 Gbps

TDP: 350 watts

Advantages: 1. Very fast GPU. 2. Handles 4K and possibly 8K gaming. 3. Approximately 30% faster than the 3080 in professional apps.

Disadvantages: 1. Availability is quite limited. 2. Power requirements are high.

GeForce RTX 3090 Performance: For some people, the best GPUs are the fastest, regardless of the price. The GeForce RTX 3090 is very suitable for this type of customer. It’s a Titan RTX alternative.

The 3090 brings Titan-class performance and features (particularly its 24GB of VRAM) to the GeForce brand. If you simply need the fastest graphics card available, the RTX 3090 is it.

GeForce RTX 3090 NVLink: Of course, it’s not just about gaming. The RTX 3090 is the only GeForce Ampere card with NVLink support, which is probably more useful for professional applications and GPU computing than for SLI gaming. Its 24GB of GDDR6X memory is also well suited to content creation applications.

4. GeForce RTX 3060 Ti:

SPECIFICATIONS: GPU: Ampere (GA104)

GPU Cores: 4864

Boost Clock: 1,665 MHz

Video RAM: 8GB GDDR6 14 Gbps

TDP: 200 watts

Advantages: 1. Good overall value (frames per dollar). 2. Good for 1440p RT with DLSS.

Disadvantages: 1. In the long run, 8GB of VRAM might not be enough.

GeForce RTX 3060 Ti Comparison: The GeForce RTX 3060 Ti includes all of the same features as the rest of the RTX 30 series.

The 3060 Ti outperforms the previous-generation RTX 2080 Super, is 9% slower than the 3070, and costs 20% less. If you’re still using an older card such as an RX Vega 56 or GTX 1070, the 3060 Ti is a substantial upgrade.
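
The value claim is easy to check with the two percentages quoted above: a card with 91 percent of the 3070’s performance at 80 percent of its price offers 0.91 / 0.80 times the performance per dollar. A minimal sketch that uses only those relative figures; no actual prices are assumed.

```python
# Both figures are relative to the RTX 3070 (= 1.0), taken from the text above.
relative_performance = 1 - 0.09  # 9% slower
relative_price = 1 - 0.20        # 20% cheaper

value_ratio = relative_performance / relative_price
print(f"3060 Ti performance per dollar vs. 3070: {value_ratio:.2f}x")
```

That works out to roughly 14 percent more performance per dollar, which is why the 3060 Ti scores so well on value.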

GeForce RTX 3060 Ti VRAM: The limited 8GB of VRAM is one of the major concerns. That said, you can usually lower texture quality a notch without noticing any difference.

5. GeForce RTX 3070:

SPECIFICATIONS: GPU: Ampere (GA104)

GPU Cores: 5888

Boost Clock: 1,730 MHz

Video RAM: 8GB GDDR6 14 Gbps

TDP: 220 watts

Advantages: 1. All of the Ampere improvements. 2. Not as energy-hungry as the 3080.

Disadvantages: 1. Can’t hold 60 frames per second at 4K ultra in some games. 2. Only 8GB of VRAM.

GeForce RTX 3070 Performance: The 3070 is less expensive than AMD’s new GPUs while still providing superior ray tracing performance and DLSS. For $100 extra, the new RTX 3070 Ti offers slightly higher performance, but it also consumes 30% more power.

GeForce RTX 3070 Ray Tracing: While 1440p and 4K gaming are both viable, 4K at maximum quality frequently falls below 60 frames per second. DLSS can help where a game supports it, but even with DLSS, ray tracing at 4K often means 40-50 frames per second.
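
Frame-rate targets are easier to compare as per-frame time budgets. A minimal sketch of the standard conversion (frame time in milliseconds = 1000 / fps), applied to the 60 fps target and the 40-50 fps range mentioned above; `frame_time_ms` is an illustrative helper name.

```python
def frame_time_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


for fps in (60, 50, 40):
    print(f"{fps} fps -> {frame_time_ms(fps):.1f} ms per frame")
```

At 40 fps each frame takes 25 ms, half again as long as the roughly 16.7 ms budget for 60 fps.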

GeForce RTX 3070 Clock Speed: The 8GB of GDDR6 is a source of concern. Compared with the 3080, the 3070 has less memory (8GB versus 10GB) on a narrower bus (256-bit versus 320-bit), and that memory also runs at a lower speed (14 Gbps versus 19 Gbps).
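
Bus width and memory speed combine into peak memory bandwidth: the bus width in bytes times the per-pin data rate. A minimal sketch, assuming a 256-bit bus for the RTX 3070 and a 320-bit bus for the RTX 3080 (bus widths are not part of the spec lists above); the data rates come from the article, and `bandwidth_gbs` is a hypothetical helper name.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


rtx_3070 = bandwidth_gbs(256, 14)  # GDDR6 at 14 Gbps, 256-bit bus assumed
rtx_3080 = bandwidth_gbs(320, 19)  # GDDR6X at 19 Gbps, 320-bit bus assumed

print(f"RTX 3070: {rtx_3070:.0f} GB/s")
print(f"RTX 3080: {rtx_3080:.0f} GB/s")
print(f"The 3070 has {1 - rtx_3070 / rtx_3080:.0%} less peak bandwidth")
```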

General Verdict: Overall, it’s one of the best all-around cards. If you’ve always wanted an RTX 2080 Ti but couldn’t justify the price, the GeForce RTX 3070 is the card to choose.

6. Radeon RX 6700 XT:

SPECIFICATIONS: GPU: Navi 22

GPU Cores: 2560

Boost Clock: 2581 MHz

Video RAM: 12GB GDDR6 16 Gbps

TDP: 230 watts

Advantages: 1. Very good 1440p performance. 2. Plenty of VRAM. 3. Comes close to the 3070 in games without ray tracing.

Disadvantages: 1. Ray tracing performance is mediocre. 2. FSR can’t match DLSS.

RX 6700 XT Performance: The RX 6700 XT has the same number of GPU cores as the previous-generation RX 5700 XT, but higher clock speeds and a larger cache give it a 25% performance improvement at higher settings and resolutions.

RX 6700 XT Clock Speed: AMD’s RX 6700 XT has some of the highest clock speeds we’ve ever seen on a GPU, reaching 2.5GHz and higher during gaming.

7. GeForce RTX 3060 12GB:

SPECIFICATIONS: GPU: Ampere (GA106)

GPU Cores: 3584

Boost Clock: 1,777 MHz

Video RAM: 12GB GDDR6 15 Gbps

TDP: 170 watts

Advantages: 1. Excellent value for 1080p/1440p. 2. Enough VRAM for the average user.

Disadvantages: 1. Not a good choice for 1440p.

GeForce RTX 3060 Performance: With a 192-bit memory interface and 12GB of VRAM, this is the first GA106 card. It has 26 percent fewer GPU cores and lower memory bandwidth than the 3060 Ti, so overall performance is only on par with the RTX 2070.
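
Both gaps in this paragraph can be checked with simple arithmetic. A minimal sketch, assuming 3,584 CUDA cores for the RTX 3060 (Nvidia’s published count, and the figure consistent with the 26 percent claim) and a 256-bit bus for the 3060 Ti (not listed in this article); the 192-bit interface and both data rates come from the text, and `bandwidth_gbs` is a hypothetical helper name.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# Core counts: 4864 from the 3060 Ti spec list; 3584 for the 3060 is assumed.
core_deficit = 1 - 3584 / 4864

# 192-bit bus stated for the 3060; 256-bit bus for the 3060 Ti is assumed.
bw_3060 = bandwidth_gbs(192, 15)
bw_3060_ti = bandwidth_gbs(256, 14)

print(f"Core deficit vs. 3060 Ti: {core_deficit:.0%}")
print(f"Peak bandwidth: {bw_3060:.0f} GB/s vs. {bw_3060_ti:.0f} GB/s")
```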

GeForce RTX 3060 VRAM Capacity: VRAM capacity isn’t an issue, and the 3060 12GB starts to narrow the gap with the 3060 Ti in a few situations.

GeForce RTX 3060 Comparison: If you ignore ray tracing and DLSS, the RTX 3060 has nearly the same performance as AMD’s RX 5700 XT.

8. Radeon RX 6600 XT:

SPECIFICATIONS: GPU: Navi 23

GPU Cores: 2048

Boost Clock: 2,589MHz

Video RAM: 8GB GDDR6 16 Gbps

TDP: 160 watts

Advantages: 1. Faster than the RX 5700 XT and the 3060. 2. Very good 1080p performance. 3. The 32MB Infinity Cache is still effective.

Disadvantages: 1. Ray tracing performance is poor.

Radeon RX 6600 XT Performance: The Radeon RX 6600 XT performs somewhat better than the previous-generation RX 5700 XT. However, its 8GB of VRAM is a concern, and there are plenty of situations where the RTX 3060 is better. Given its narrow 128-bit memory bus, it’s still astonishing how much the 32MB Infinity Cache appears to improve performance.
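
The bandwidth gap that the Infinity Cache has to make up can be sketched with the usual peak-bandwidth formula. This assumes a 128-bit bus for the RX 6600 XT and a 256-bit bus with 14 Gbps GDDR6 for the RX 5700 XT (reference figures not listed in this article); the 6600 XT’s 16 Gbps data rate comes from its spec list, and `bandwidth_gbs` is a hypothetical helper name.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


rx_6600_xt = bandwidth_gbs(128, 16)  # 16 Gbps from the spec list, 128-bit bus assumed
rx_5700_xt = bandwidth_gbs(256, 14)  # assumed reference figures for Navi 10

print(f"RX 6600 XT: {rx_6600_xt:.0f} GB/s")
print(f"RX 5700 XT: {rx_5700_xt:.0f} GB/s")
print(f"The 6600 XT has {1 - rx_6600_xt / rx_5700_xt:.0%} less raw bandwidth")
```

Despite roughly 43 percent less raw DRAM bandwidth, the 6600 XT still edges out the 5700 XT, which is the gap the 32MB cache is credited with closing.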

Radeon RX 6600 XT Ray Tracing: In several games with DXR (DirectX Raytracing) effects enabled, it couldn’t even manage 20 frames per second at 1080p.

9. Radeon RX 6900 XT:

SPECIFICATIONS: GPU: Navi 21 XTX

GPU Cores: 5120

Boost Clock: 2250 MHz

Video RAM: 16GB GDDR6 16 Gbps

TDP: 300 watts

Advantages: 1. Fantastic performance. 2. Infinity Cache and a lot of VRAM. 3. SPECviewperf results are very good.

Disadvantages: 1. It’s not that much quicker than the 6800 XT.

Navi 21: With the RX 6900 XT, AMD pulled out all the stops. It uses a fully enabled Navi 21 GPU, which contributes to its scarcity.

Radeon RX 6900 XT Ray Tracing: The same red flags remain, such as the lack of a direct alternative to DLSS and inadequate ray tracing performance.

General Verdict: The 6900 XT is good for those who simply want one of the quickest AMD GPUs.

10. GTX 1660 Super:

SPECIFICATIONS: GPU: Turing (TU116)

GPU Cores: 1408

Boost Clock: 1,785 MHz

Video RAM: 6GB GDDR6 14 Gbps

TDP: 125 watts

Advantages: 1. The price is reasonable. 2. GDDR6 memory gives it a significant performance improvement over the GDDR5-equipped GTX 1660.

Disadvantages: 1. No hardware support for ray tracing.

GTX 1660 Super Performance: The GTX 1660 Super offers the same level of performance as the older GTX 1070. It also includes upgraded Turing NVENC, making it an excellent choice for streaming video.

GTX 1660 Super Comparison: The GTX 1660 Super is 15% quicker than the standard GTX 1660 and nearly 20% faster than the RX 5500 XT 8GB.

GTX 1660 Super Power Consumption: Although its chip is built on TSMC’s 12nm FinFET process, its actual power consumption is nearly equal to that of AMD’s Navi 14 chips, which are made on TSMC’s 7nm FinFET process.

11. GTX 1650 Super:

SPECIFICATIONS: GPU: Turing (TU116)

GPU Cores: 1280

Boost Clock: 1,725 MHz

Video RAM: 4GB GDDR6 12 Gbps

TDP: 100 watts

Advantages: 1. Reasonable frame rates. 2. Highly efficient architecture. 3. The latest version of NVENC is excellent for video.

Disadvantages: 1. 4GB VRAM is insufficient.

GTX 1650 Super Comparison: The GTX 1650 Super has displaced the RX 570 4GB while consuming significantly less power, and it outperforms the standard GTX 1650 by roughly 30%.

GTX 1650 Super for Gaming: The latest NVENC hardware is a big plus for the 1650 Super. If you’re building a low-cost streaming PC, it will serve well, especially for games like CS:GO or LoL.

GTX 1650 Super Power: Keep in mind that, unlike the previous-generation GTX 1050 cards, the 1650 Super requires a 6-pin PCIe power connector.

12. RX 5500 XT 4GB:

SPECIFICATIONS: GPU: Navi 14

GPU Cores: 1408

Boost Clock: 1,845 MHz

Video RAM: 4GB GDDR6 14 Gbps

TDP: 100 watts

Advantages: 1. Fast enough to run any current game. 2. The 7nm process is highly efficient. 3. Less expensive than the 8GB models.

Disadvantages: 1. VRAM is still limited to 4GB. 2. Prices are also inflated. 3. Requires a 6-pin power connector.

RX 5500 XT Performance: The GTX 1650 Super and the RX 5500 XT 4GB are essentially tied, with nearly identical performance and power consumption.

RX 5500 XT for gaming: The RX 5500 XT is a capable graphics card that can run any game at 1080p and medium to high quality, though not always at 60 frames per second. The 5500 XT averaged nearly 90 frames per second in our 1080p medium testing.

This review is adapted from some trusted reviews in this field.

#GeForce#pixels#Specifications#ampere#amperearchitecture#nvidia#navi21xt#raytracing#navi21#navi10#clockrates#amd#8kgames#Series30#FramesPerSecond#1440P#4kgaming#clockspeed#GDDR6#navi22#1080p#navi23#DXR#navi21xtx#infinitycache#SPECviewperf#navi14#getreview4u
