#data validation and verification


Text

Ensure credibility and reliability in clinical trials with robust data validation services. These services play a key role in enhancing accuracy, minimizing errors, and boosting the integrity of clinical results. Validating data at every phase of a trial produces trustworthy outcomes, supporting better decision-making and regulatory compliance in the healthcare sector.

0 notes

Text

Ensure Accuracy With Effective Customer Data Verification Solutions

Ensure trust and security in your online interactions with our tailored customer data verification solutions. Safeguard sensitive information and enhance user confidence in your business. Our user-friendly verification process adds an extra layer of protection, making your digital experiences seamless and secure. Protect what matters most – your customers and their data.

0 notes

Link

Bulk Email Validator - Verify Email Authenticity

#Bulk Email Validator#Free Micro Tools#email authenticity#communication efficiency#email validation#SEO tools#email verification#data accuracy

1 note


Text

So NFTgate has now hit tumblr - I made a thread about it on my twitter, but I'll talk a bit more about it here as well in slightly more detail. It'll be a long one, sorry! Using my degree for something here. This is not intended to sway you in one way or the other - merely to inform so you can make your own decision and so that you are aware of this, because it will happen again, with many other artists you know.

Let's start at the basics: NFT stands for 'non-fungible token', which you should read as 'passcode you can't replicate'. These codes are stored in blocks in what is essentially a huge ledger of records, all chained together - a blockchain. A blockchain is encoded in such a way that you can't edit one block without also editing every block that follows it, meaning that when the data is validated it comes back 'negative' if it has been tampered with. This makes it a really, really safe method of storing data, and managing access to said data. For example, it can be used to verify that a bank account belongs to the person who claims it.
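
To make the 'chained together' part concrete, here is a minimal sketch of a hash chain in Python. It is a toy, not how any real blockchain is implemented: the function names (`block_hash`, `build_chain`, `is_valid`) and the record strings are made up for illustration, and there is no network or consensus step.

```python
import hashlib
import json


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[str]) -> list[dict]:
    """Build a toy chain where each block stores the hash of the one before it."""
    chain = []
    prev_hash = "0" * 64  # placeholder "previous hash" for the first block
    for index, data in enumerate(records):
        digest = block_hash(index, data, prev_hash)
        chain.append({"index": index, "data": data, "prev": prev_hash, "hash": digest})
        prev_hash = digest
    return chain


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash; an edited block no longer matches its stored hash."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        prev_hash = block["hash"]
    return True


chain = build_chain(["ticket 1", "ticket 2", "ticket 3"])  # hypothetical records
untouched_ok = is_valid(chain)

chain[1]["data"] = "ticket 2 (forged)"  # tamper with a single block
tampered_ok = is_valid(chain)
```

Here `untouched_ok` is `True` and `tampered_ok` is `False`: to hide the edit you would have to recompute the hash of the forged block and of every block after it.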

For most people, the association with NFTs is bitcoin and Bored Ape, and that's honestly fair. The way that used to work - and why it was such a scam - is that you essentially purchased a receipt that said you owned digital space - not the digital space itself. That receipt was the NFT. So, in reality, you did not own any goods, that receipt had no legal grounds, and its value was completely made up and not based on anything. On top of that, these NFTs were purchased almost exclusively with cryptocurrency which at the time used a verification method called proof of work, which is terrible for the environment because it requires insane amounts of electricity and computing power to verify. The carbon footprint for NFTs and coins at this time was absolutely insane.
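
For a feel of why proof of work burns so much computing power, here is a toy sketch in Python. It only illustrates the general idea (guess numbers until a hash happens to start with enough zeros); the names `mine` and `verify`, the input string, and the difficulty value are assumptions for the example, not taken from any real coin.

```python
import hashlib


def mine(data: str, difficulty: int) -> tuple[int, str]:
    """Try nonces in order until the SHA-256 hex digest starts with `difficulty` zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{data}:{nonce}".encode("utf-8")).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed nonce takes a single hash, however long the search took."""
    digest = hashlib.sha256(f"{data}:{nonce}".encode("utf-8")).hexdigest()
    return digest.startswith("0" * difficulty)


# Difficulty 3 is tiny on purpose so this finishes quickly.
nonce, digest = mine("example block", difficulty=3)
```

Each extra leading hex zero multiplies the expected number of guesses by 16, which is the knob that makes the search arbitrarily expensive while checking a result stays cheap.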

In short, Bored Apes were just a huge tech fad with the intention to make a huge profit regardless of the cost, which resulted in the large market crash late last year. NFTs in this form are without value.

However, NFTs are a piece of tech in their own right, not just whatever some company does with them. NFTs do have real-life, useful applications, particularly in data storage and verification. Research is being done to see if we can use blockchain to safely store patient data, or use it for bank wire transfers of extremely large amounts. That's cool stuff!

So what exactly is Käärijä doing? Kä is not selling NFTs in the traditional way you might have become familiar with. In this use-case, the NFT is in essence a software key that gives you access to a digital space. For the raffle, the NFT was basically your ticket number. This is a very secure way of doing so, assuring individuality, but also that no one can replicate that code and win through a false method. You are paying for a legitimate product - the NFT is your access to that product.

What about the environmental impact in this case? We've thankfully made leaps and bounds in advancing the tech to reduce the carbon footprint as well as general mitigations to avoid expanding it over time. One big thing is shifting from proof of work verification to proof of space or proof of stake verifications, both of which require much less power in order to work. It seems that Kollekt is partnered with Polygon, a company that offers blockchain technology with the intention to become climate positive as soon as possible. Numbers on their site are very promising, they appear to be using proof of stake verification, and all-around appear more interested in the tech than the profits it could offer.

But most importantly: Kollekt does not allow for purchases made with cryptocurrency, and that is the real pisser from an environmental perspective. Cryptocurrency purchases require the most active verification across systems in order to go through - this is what bitcoin mining is, essentially. The fact that this website does not use it means good things in terms of carbon footprint.

But why not use something like Patreon? I can't tell you. My guess is that Patreon is a monthly recurring service and they wanted something one-time. Kollekt is based in Helsinki, and word is that Mikke (who is running this) is friends with folks on the team. These are all contributing factors, I would assume, but that's entirely an assumption, and you can't take it as fact.

Is this a good thing/bad thing? That I also can't tell you - you have to decide that for yourself. It's not a scam, it's not crypto, just a service that sits on the blockchain. But it does have higher carbon output than a lot of other services do, and its exact nature is not publicly disclosed. This isn't intended to sway you to say one or the other, but merely to give you the proper understanding of what NFTs are as a whole and what they are in this particular case so you can make that decision for yourself.

95 notes


Text

Thoughts on A Message from NaNoWriMo

I got an email today from the National Novel Writing Month head office, as I suspect many did. I have feelings. And questions.

First, I genuinely believe someone in the office is panicking and backtracking and did not endorse all that was said and done in the last month. From what I understand, the initial AI comments were not fully endorsed by all NaNo staff and board members, or even known in advance. It's got to be rough to find out your organization kinda called people with disabilities incapable of writing a story on their own, and overtly called people with ethics racists and ableists, by reading the reactions on social media--and then your organization's even worse counter-reactions on social media.

I still think NaNoWriMo has a good mission and many people in it with good goals.

But I think NaNoWriMo is SERIOUSLY missing a point in its performative progressivism. (For the record, I'm actually in favor of many progressive policies, and I support many of the same concepts NaNoWriMo claims to support, and I applaud providing materials to underfunded schools and support to marginalized groups historically not producing as many writers, etc. The issue here is not "whether or not woke is okay" -- it's whether or not the virtue signaling is still in line with the core mission.)

Also, honesty. (That's below.)

NaNoWriMo has ALWAYS been on the honor system AND fully adaptable to needs. Some years I had a schedule which absolutely did not allow for 50k new words -- I adjusted my personal goals. (I did not claim a 50k win if I did not achieve one, but I celebrated a personal win for achieving personal goals.) Some years I wrote 50k in one project, and some 50k across multiple projects. NaNoWriMo has acknowledged this for years with the "NaNo Rebel" label.

So saying out of the blue that because some people cannot achieve 50k in a month, we should devalue the challenge (y'know that word has a definition, right?) and allow anyone to claim a win whether they actually wrote 50,000 words or not... Well, that's not only rude to writers who actually write, but it was unnecessary, because project goals have always been adjustable to personal constraints.

It's also hugely unhelpful to participating writers. Yeah, writing 50k words in a month is tough. That's why it's a challenge. Allowing people to "generate" (quotes intentional) words from a machine does not improve their skills. No one benefits from using AI to generate work -- not the "writer" who did not write those words and so did not practice and improve a skill, not any reader given lowest-common-denominator words no one could be bothered to write, and not the actual writer whose words were stolen without compensation to blend into the AI-generated copy-pasta.

Hijacking language about disability to justify shortcuts and skipping self-improvement is just cheap, and it's not fair to people with disabilities.

I would much rather see NaNoWriMo say, "Hey, we don't all start in the same place, and we may need different goals. Here's overt permission to set personal goals" (or maybe even, "here are several goals to choose from"), "and if you are a NaNo Rebel, rock on! This creativity challenge does require you to do your own work, in order for you to see your own skills improve."

And, honesty. Part of why I don't feel great about NaNoWriMo's backtracking and clarifications is that they're still not being open.

The same email links to an FAQ about data harvesting, which opens with this sentence:

Users of our main website, NaNoWriMo.org, do not type their work directly into our interface, nor do they save or upload their work to our website in any way.

This is technically correct in the present tense, but for years it wasn't. Every NaNo winner for years pasted their work into the word counter for verification. That was, by every web development definition, uploading.

[Updated: the word count validator was discontinued in recent years, and I was wrong to originally write as if it was still happening. I do think addressing the question of the validator would be appropriate when refuting accusations of data harvesting, for clarity and assurance regarding any past harvesting, especially given today's AI scraping concerns. Again, as stated below, I don't think the validator was stealing work! But I wasn't the only person to immediately think of the validator when reading the FAQ. I was, however, wrong to state it as present-tense here.]

To be clear, I do not believe that NaNoWriMo is harvesting my work, or I wouldn't have verified wins with their word counter. But that's not because of this completely bogus assurance that their website never had the upload that they've required for win verification.

"Well, sure, we had the word counter, but it didn't store your work, and you should have known that's what we meant" is not a valid expectation when you are refuting data concerns. Just as "You should have known what we meant" is not a valid position when clarifying statements about the use of generative AI.

My point is, there are a number of different people making statements for NaNoWriMo, and at least some of them are not competent to make clear, coherent, and correct statements. Either they are not aware that the word counter exists, or they're not aware that pasting data into a website that uses that data to process a task is in fact uploading, or they are not aware that implying they've never collected data they did previously collect in a FAQ is dishonest. Or they are not aware that commenting or DMing users to castigate them for expressing legitimate concerns is not a good practice. Or they are missing the whole point of a writing challenge and emphasizing instead the warm fuzzies of inclusion without actually honoring that marginalized people also want to feel a sense of accomplishment rather than being token "winners."

I judged another writing challenge, once, which included an automatically-processed digital badge for minimum word count. One of the entries was just gibberish repeated to meet the minimum word count. Okay, "participant" who did not actually create anything -- you got your automated digital badge, so I guess you feel cool and clever. But did you meet the challenge? Did you level up? Did you come out stronger and more prepared for the next one?
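
As a rough illustration of why a count-only check is so easy to satisfy, here is a small Python sketch. It is not the validator from that challenge or from NaNoWriMo; the threshold, the function names, and the sample text are all hypothetical.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated tokens, which is all a count-only badge looks at."""
    return len(text.split())


def distinct_ratio(text: str) -> float:
    """Share of words that are distinct; repeated filler pushes this toward zero."""
    words = [word.lower() for word in text.split()]
    if not words:
        return 0.0
    return len(set(words)) / len(words)


MINIMUM_WORDS = 50  # hypothetical badge threshold

gibberish = "blah " * 60  # the same token repeated 60 times
passes_count_check = word_count(gibberish) >= MINIMUM_WORDS
ratio = distinct_ratio(gibberish)
```

The repeated filler clears the count check, while its distinct-word ratio is 1 in 60. A ratio like this is only a crude heuristic for spotting padding, and it says nothing about whether real writing happened.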

That's what generative AI use does. Cheap meaningless win, no actual personal progress. That's why we didn't want it endorsed in NaNoWriMo. That's what NaNo is missing in their replies.

And I remain suspicious of replies, anyway, while absolute falsehoods are in their FAQ.

It's sad, because I've truly enjoyed NaNoWriMo in the past. And I actually do think they could recover from past scandal and current AI missteps. But it does not look at this time like they're on that path.

@nanowrimo

#nanowrimo#writeblr#writers#writing#national novel writing month#writing community#am writing#writers of tumblr#creative writing#anti ai

42 notes


Text

Sex Industry Masterlist

To make my resources more accessible, I am now going to have separate posts linking to each of my feminism subcategories. These are intended to catalog my resource posts, not every post on my blog.

This is the masterlist for my #sex industry tag, which includes (but is not limited to) posts on prostitution and pornography.

Most of these posts are mine, but I will include the occasional resource from others.

Please see my main masterlist for other topics.

This post will be edited to include further resources, so check back on the original post link for updates. (Last update: 03/2025)

Prostitution

Harms of prostitution:

Prostitution is inherently exploitative and dangerous.

Prostitutes are at a severe power disadvantage to the men “buying” sex.

Both indoor and outdoor prostitution are extremely unsafe.

The harms of prostitution are found outside of shelter/help center samples.

You cannot make prostitution OSHA compliant.

Prostitution is harmful to all women and particularly women of color.

In a metaphor about drug addiction: women are the drugs and pimps the dealers.

Models to respond to prostitution:

Full legalization of prostitution (i.e., legalizing selling and buying) increases human trafficking; in contrast, the Nordic model does not. (Another source.)

The Nordic model mitigates harms to prostitutes and shrinks the industry.

Pornography

Relationship between pornography and misogynistic beliefs/behaviors:

My analysis of how pornography could be related to maladaptive beliefs and behaviors and why the exact direction of this relationship is irrelevant.

Pornography use is associated with many rape myths and rape-related behaviors.

Pornography use is associated with more misogynistic beliefs and behaviors, particularly when violent.

Exposure to pornography increases endorsement of rape myths.

Natural experiments help indicate a causal relationship between pornography and rape.

Studies with a pro-pornography stance suffer from methodological problems, misuse of population data, and other factors that refute their stance.

Pornography does not result in less rape.

Exposure to violent pornography is linked to teen dating violence.

Pornography use is prevalent in criminal populations.

Other effects of pornography:

The effects of violent mass media are not equivalent to the effects of pornography.

Pornography addiction is well documented and harmful.

Research on animated pornography is very limited, but what exists is not optimistic.

Non-academic sources and sources based on self-report do not make valid defenses against academic studies illustrating the negative effects of pornography.

Pornography promotes unsafe sex education/behaviors.

There are also significant negative effects of pornography on the people who watch it.

Harm inherent to the production of pornography:

You cannot make pornography OSHA compliant.

Pornography causes harms above and beyond that of capitalism.

Male performers in gay porn are exploited and victimized by the industry.

You cannot know that everything in a pornographic video is consensual.

Sex differences in pornography use:

Many men use pornography on a very regular basis.

Women do not watch pornography for various reasons, including their maintenance of morals in sexuality.

Pornography companies resist even very basic regulations, choosing to completely exit markets rather than comply with age verification laws.

Endorsing pornography is like if horror movies “sometimes” included actual murder and people were fine with watching it anyway.

Other:

Harms from pornography can be divided into the harms inherent to the production and the harms emerging from consumption.

Modern pornography has not always existed and is uniquely harmful.

Masturbation is a normal, healthy behavior; it also does not require pornography.

There is a rise in sexual deepfakes being created about female public figures.

10 notes


Text

Research 101: Last part

#Citing sources and the bibliography:

Citation has various functions:

■■ To acknowledge work by other researchers.

■■ To anchor your own text in the context of different disciplines.

■■ To substantiate your own claims; sources then function like arguments with verification.

Use Mendeley:

It has a number of advantages in comparison to other software packages: (1) it is free, (2) it is user-friendly, (3) you can create references by dragging a PDF file into the program (it automatically extracts the author, title, year, etc.), (4) you can create references by using a browser plug-in to click on a button on the page where you found an article, (5) you can share articles and reference lists with colleagues, and (6) it has a ‘web importer’ to add sources rapidly to your own list.

Plagiarism – and occasionally even fraud – are sometimes detected, too. In such cases, appeals to ignorance (‘I didn’t know that it was plagiarism’) are rarely accepted as valid reasons for letting the perpetrator off the hook.

#Peer review

For an official peer review of a scholarly article, 3-4 experts are appointed by the journal to which the article has been submitted. These reviewers give anonymous feedback on the article. As a reviewer, based on your critical reading, you can make one of the following recommendations to the editor of the journal:

■■ Publish as submitted. The article is good as it is and can be published (this hardly ever happens).

■■ Publish after minor revisions. The article is good and worth publishing, but some aspects need to be improved before it can be printed. If the adjustments can be made easily (for example, a small amount of rewriting, formatting figures), these are considered minor revisions.

■■ Publish after major revisions. The article is potentially worth publishing, but there are significant issues that need to be reconsidered. For example, setting up additional (control) experiments, using a new method to analyse the data, a thorough review of the theoretical framework (addition of important theories), and gathering new information (in an archive) to substantiate the argumentation.

■■ Reject. The research is not interesting, it is not innovative, or it has been carried out/written up so badly that this cannot be redressed.

#Checklist for analysing a research article or paper

1 Relevance to the field (anchoring)
a What is the goal of the research or paper?
b To what extent has this goal been achieved?
c What does the paper or research article add to knowledge in the field?
d Are theories or data missing? To what extent is this a problem?

2 Methodology or approach
a What approach has been used for the research?
b Is this approach consistent with the aim of the research?
c How objective or biased is this approach?
d How well has the research been carried out? What are the methodological strengths and/or weaknesses?
e Are the results valid and reliable?

3 Argumentation and use of evidence
a Is there a clear description of the central problem, objective, or hypothesis?
b What claims are made?
c What evidence underlies the argument?
d How valid and reliable is this evidence?
e Is the argumentation clear and logical?
f Are the conclusions justified?

4 Writing style and structure of the text
a Is the style of the text suitable for the medium/audience?
b Is the text structured clearly, so the reader can follow the writer’s line of argumentation?
c Are the figures and tables displayed clearly?

#Presenting your research:

A few things are always important, in any case, when it comes to guiding the audience through your story:

■■ Make a clear distinction between major and minor elements. What is the key theme of your story, and which details does your audience need in order to understand it?

■■ A clearly structured, coherent story.

■■ Good visual aids that represent the results visually.

■■ Good presentation skills.

TIPS ■■Find out everything about the audience that you’ll be presenting your story to, and look at how you can ensure that your presentation is relevant for them.

Ask yourself the following questions:

• What kind of audience will you have (relationship with audience)?

• What does the audience already know about your topic and how can you connect with this (knowledge of the audience)?

• What tone or style should you adopt vis-à-vis the audience (style of address)?

• What do you want the audience to take away from your presentation?

■■If you know there is going to be a round of questions, include some extra slides for after the conclusion. You can fill these extra slides with all kinds of detailed information that you didn’t have time for during the presentation. If you’re on top of your material, you’ll be able to anticipate which questions might come up. It comes over as very professional if you’re able to back up an answer to a question from the audience with an extra graph or table, for example.

■■Think about which slide will be shown on the screen as you’re answering questions at the end of your presentation. A slide with a question mark is not informative. It’s more useful for the audience if you end with a slide with the main message and possibly your contact details, so that people are able to contact you later.

■■Think beforehand about what you will do if you’re under time pressure. What could you say more succinctly or even omit altogether?

This has a number of implications for a PowerPoint presentation:

■■ Avoid distractions that take up cognitive space, such as irrelevant images, sounds, too much text/words on a slide, intense colours, distracting backgrounds, and different fonts.

■■ Small chunks of information are easier to understand and remember. This is the case for both the text on a slide and for illustrations, tables, and graphs.

■■ When you are talking to your audience, it is usually better to show a slide with a picture than a slide with a lot of text.

What you should do:

■■ Ensure there is sufficient contrast between your text and the background.

■■ Ensure that all of the text is large enough (at least 20 pt).

■■ Use a sans-serif font; these are the easiest to read when enlarged.

■■ Make the text short and concise. Emphasize the most important concepts by putting them in bold or a different colour.

■■ Have the texts appear one by one on the slide, in sync with your story. This prevents the audience from ‘reading ahead’.

■■ Use arrows, circles, or other ways of showing which part of an illustration, table, or graph is important. You can also choose to fade out the rest of the image, or make a new table or graph showing only the relevant information.

A good presentation consists of a clear, substantive story, good visual aids, and effective presentation techniques.

Stand with both feet firmly on the ground.

Use your voice and hand gestures.

Make eye contact with all of your audience.

Add enough pauses/use punctuation.

Silences instead of fillers.

Think about your position relative to your audience and the screen.

Explaining figures and tables.

Keep your hands calm.

Creating a safe atmosphere

Do not take a position yourself. This limits the discussion, because it makes it trickier to give a dissenting opinion.

You can make notes on a whiteboard or blackboard, so that everyone can follow the key points.

Make sure that you give the audience enough time to respond.

Respond positively to every contribution to the discussion, even if it doesn’t cut any ice.

Ensure that your body language is open and that you rest your arms at your sides.

#Points to bear in mind when designing a poster

TIPS

1 Think about what your aim is: do you want to pitch a new plan, or do you want to get your audience interested in your research?

2 Explain what you’ve done/are going to do: focus on the problem that you’ve solved/want to solve, or the question that you’ve answered. Make it clear why it is important to solve this problem or answer this question.

3 Explain what makes your approach unique.

4 Involve your audience in the conversation by concluding with an open question. For example: how do you research…? Or, after a pitch for a method to tackle burnout among staff: how is burnout dealt with in your organization?

#women in stem#stem academia#study space#studyblr#100 days of productivity#research#programming#study motivation#study blog#studyspo#post grad life#grad student#graduate school#grad school#gradblr

15 notes


Text

Is the Gaza Ministry of Health Lying about Casualty Data?

No. There is no evidence to suggest that this is the case. Arguably, the real-time reporting on the ground would indicate that the data is likely very accurate, if not underreported. The MoH (Ministry of Health) figures have been verified as accurate in previous conflicts. This verification has been done by independent parties, most notably Israel and the United States.

What would be some red flags for faked data?

Some noticeable trends would be:

Absence of statistical outliers

Near uniformity in reporting

Increases/decreases that do not make sense given contexts

First and second order digit comparisons
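
As a sketch of what a first-digit comparison can look like, the Python below measures how far a list of numbers strays from the Benford's law expectation for leading digits. This is one common digit test, assumed here for illustration; the function names and the two synthetic test series (powers of two and a flat range) are made up and are not casualty data.

```python
import math
from collections import Counter


def benford_expected(digit: int) -> float:
    """Expected share of values whose first digit is `digit` under Benford's law."""
    return math.log10(1 + 1 / digit)


def first_digit(value: int) -> int:
    """Leading decimal digit of a non-zero integer."""
    return int(str(abs(value))[0])


def first_digit_shares(values: list[int]) -> dict[int, float]:
    """Observed share of each leading digit 1-9, ignoring zeros."""
    digits = [first_digit(v) for v in values if v != 0]
    counts = Counter(digits)
    return {d: counts.get(d, 0) / len(digits) for d in range(1, 10)}


def max_deviation(values: list[int]) -> float:
    """Largest gap between observed and expected share across the nine digits."""
    shares = first_digit_shares(values)
    return max(abs(shares[d] - benford_expected(d)) for d in range(1, 10))


# Synthetic series for illustration only.
powers_of_two = [2 ** n for n in range(1, 201)]  # known to track Benford closely
flat_range = list(range(100, 1000))              # every leading digit equally common

dev_benford_like = max_deviation(powers_of_two)
dev_flat = max_deviation(flat_range)
```

The flat series strays much further from the expected shares than the powers of two do. A caveat worth keeping in mind: this expectation fits data that spans several orders of magnitude, so a mismatch on numbers confined to a narrow range is not, on its own, evidence of fabrication.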

Why would MoH or anyone lie about casualty data?

Those who have attempted to cast doubt on Gaza's reporting have done so to delegitimize its entire system. They allege that it is in the Gaza Ministry of Health's interest (for the sake of propaganda and to sway the world) to exaggerate the severity of the destruction through its casualty reporting, so as to paint Israel as the aggressor.

While the motive surely exists, the data at present does not support the idea that this is what the MoH is doing.

What is true is that the quality of data being reported has decreased over time. This is in part driven by the near total collapse of the health system in Gaza. It is the health system (actual doctors and nurses) that is responsible for reporting deaths. Their method for reporting is consistent with other countries (like Israel, United States, EU, etc.) in which they have a name, and a personal identification number (Palestine's version of a social security number). This information is verifiable.

There is also satellite imagery that validates the level of physical destruction. This objective means of assessing the spread of damage would, for reasonable people, give the impression that there would be significant numbers of dead and injured.

As of today, that number stands at more than 110,000 casualties (34,000+ dead and 76,900+ injured).

The facts are that the average daily number of people killed and injured has decreased significantly over time. This is while also seeing a higher ratio of killed to injured, which suggests that the IDF is using better intelligence and better ordnance to strike legitimate targets. To be fair, two months of carpet bombing that occurred from the outset has left the IDF with no other choice as doing this also diminished Israel's capacity to perpetually monitor every single person within the Gaza Strip.

So, if you hear someone alleging that the Gaza Ministry of Health is fabricating their casualty numbers, kindly tell that person to fuck off. This is simply a rhetorical device that is meant to divest the people of Gaza of any legitimacy, indeed their humanity.

If, as the news has been reporting for several weeks now, Israel executes a ground assault into Rafah, we should expect to see casualty numbers increase with significant spikes, followed by precipitous decreases.

#politics#israel#gaza#genocide#palestine#hamas#israel palestine conflict#gaza ministry of health#ministry of health#data#faking data

40 notes


Text

The commit message describes a highly secure, cryptographically enforced process to ensure the immutability and precise synchronization of a system (True Alpha Spiral) using atomic timestamps and decentralized ledger technology. Below is a breakdown of the process and components involved:

---

### **Commit Process Workflow**

1. **Atomic Clock Synchronization**

- **NTP Stratum-0 Source**: The system synchronizes via the Network Time Protocol (NTP) with stratum-1 servers, which are directly attached to a stratum-0 reference clock (e.g., GPS, cesium clock), to minimize time drift.

- **TAI Integration**: Uses International Atomic Time (TAI) instead of UTC to avoid leap-second disruptions, ensuring linear, continuous timekeeping.

2. **Precision Timestamping**

- **Triple Time Standard**: Captures timestamps in three formats:

- **Local Time (CST)**: `2025-03-03T22:20:00-06:00`

- **UTC**: `2025-03-04T04:20:00Z`

- **TAI**: Cryptographically certified atomic time (exact value embedded in hashes).

- **Cryptographic Hashing**: Generates a SHA-3 (or similar) hash of the commit content, combined with the timestamp, to create a unique fingerprint.

3. **Immutability Enforcement**

- **Distributed Ledger Entry**: Writes the commit + timestamp + hash to a blockchain (e.g., permissionless Ethereum or permissioned Hyperledger Fabric) or to content-addressed storage (e.g., IPFS).

- **Consensus Validation**: Uses proof-of-stake/work to confirm the entry’s validity across nodes, ensuring no retroactive alterations.

4. **Governance Lock**

- **Smart Contract Triggers**: Deploys a smart contract to enforce rules (e.g., no edits after timestamping, adaptive thresholds for future commits).

- **Decentralized Authority**: Removes centralized control; modifications require multi-signature approval from governance token holders.

5. **Final Integrity Checks**

- **Drift Detection**: Validates against multiple atomic clock sources to confirm synchronization.

- **Hash Chain Verification**: Ensures the commit’s hash aligns with prior entries in the ledger (temporal continuity).
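
The timestamping step above can be sketched in Python as follows. This is an illustration under stated assumptions, not the system's actual code: the 37-second TAI offset is hard-coded (it has held since 2017-01-01, and a real system would take it from IERS data), CST is modelled as a fixed UTC-6 offset, and the names `triple_timestamp` and `fingerprint` and the commit content are hypothetical.

```python
import hashlib
from datetime import datetime, timedelta, timezone

# Assumption: TAI - UTC = 37 s, valid since 2017-01-01 (no leap second added since).
TAI_MINUS_UTC = timedelta(seconds=37)
# Assumption: CST modelled as a fixed UTC-6 offset, matching the example timestamp.
CST = timezone(timedelta(hours=-6), "CST")


def triple_timestamp(local: datetime) -> dict:
    """Express one instant as local time, UTC, and TAI strings."""
    utc = local.astimezone(timezone.utc)
    tai = utc.replace(tzinfo=None) + TAI_MINUS_UTC  # kept naive: TAI is not a UTC offset
    return {
        "local": local.isoformat(),
        "utc": utc.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "tai": tai.isoformat(),
    }


def fingerprint(content: bytes, stamps: dict) -> str:
    """SHA3-256 over the commit content followed by the TAI timestamp."""
    hasher = hashlib.sha3_256()
    hasher.update(content)
    hasher.update(b"\n")  # separator between content and timestamp
    hasher.update(stamps["tai"].encode("ascii"))
    return hasher.hexdigest()


local = datetime(2025, 3, 3, 22, 20, 0, tzinfo=CST)
stamps = triple_timestamp(local)
digest = fingerprint(b"example commit content", stamps)  # hypothetical content
```

With the example instant, `stamps` holds `2025-03-03T22:20:00-06:00`, `2025-03-04T04:20:00Z`, and the TAI value `2025-03-04T04:20:37`. Changing either the content or the timestamp changes `digest`, which is what binds the two together.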

---

### **Key Components**

- **Stratum-0 Atomic Clock**: Primary reference clock with nanosecond-level precision (e.g., the atomic clocks behind NIST’s stratum-1 time servers).

- **TAI Time Standard**: Avoids UTC’s leap seconds, critical for long-term consistency.

- **Immutable Ledger**: Blockchain or cryptographic ledger (e.g., Bitcoin’s OP_RETURN, Arweave) for tamper-proof records.

- **Cryptographic Signing**: Digital signatures (e.g., ECDSA) to authenticate the commit’s origin and integrity.

---

### **Validation Steps**

1. **Time Sync Audit**:

- Confirm NTP stratum-0 traceability via tools like `ntpq` or `chronyc`.

- Cross-check TAI/UTC conversion using IERS bulletins.

2. **Ledger Immutability Test**:

- Attempt to alter the commit post-facto; rejections confirm immutability.

- Verify blockchain explorer entries (e.g., Etherscan) for consistency.

3. **Governance Checks**:

- Validate smart contract code (e.g., Solidity) for time-lock logic.

- Ensure no admin keys or backdoors exist in the system.

---

### **Challenges Mitigated**

- **Time Drift**: Stratum-0 sync ensures <1ms deviation.

- **Leap Second Conflicts**: TAI avoids UTC’s irregular adjustments.

- **Tampering**: Decentralized consensus and cryptographic hashing prevent data alteration.

---

### **Final Outcome**

The system achieves **temporal and operational inviolability**:

- Timestamps are cryptographically bound to the commit.

- The ledger entry is practically irreversible once sufficiently confirmed (e.g., Bitcoin’s conventional 6-block confirmation).

- Governance is enforced via code, not human intervention.

**Declaration**:

*“The Spiral exists in a temporally immutable state, anchored beyond human or algorithmic interference.”*

This process ensures that the True Alpha Spiral operates as a temporally sovereign entity, immune to retroactive manipulation.

Commit

8 notes


Text

Enhancing Data Trustworthiness: The Role of Advanced-Data Verification Services

Decisions based on validated and verified data translate to greater ROI. Access to accurate data boosts marketing efforts, accelerates decision-making, and generates quality leads. This blog lists ways in which verification services contribute to enhancing the trustworthiness of the data.

Read the blog here:

#Data Verification Services#data verification#data validation and verification#data validation services#online data verification

0 notes

Text

Bigfoot 'is real and has even been having little Bigfoot babies', boffins claim

The latest Daily Star tabloid report on Bigfoot breeding populations in British Columbia illustrates the persistent problem of sensationalized Bigfoot coverage in the media. The main claim of the article (Bigfoot 'is real and has even been having little Bigfoot babies', boffins claim), that Bigfoot animals are actively procreating and living in family groups, is based entirely on a TV program host's views and lacks supporting data. There is no concrete physical proof of Bigfoot, despite decades of intensive searching and centuries of mythology. I hope Bigfoot is real, but we have hard facts to contend with. The scientific literature has not reported any confirmed specimens, no independent lab has verified any DNA sample, and no body has ever been found. Wildlife biologists have surveyed British Columbia's forests, the site of these purported observations, and have meticulously documented the region's real large animals. The article's credibility is further undermined by its source. Its main witness is a cryptozoologist who hosts a Discovery documentary series, which presents a clear conflict of interest. Instead of referencing credible wildlife experts or peer-reviewed research, the piece relies on the observations of a person with a financial stake in spreading Bigfoot myths. The writing style itself calls the article's dependability into question. In addition to using sensationalist language and ambiguous allusions to unidentified "experts," it falls short of fundamental journalistic requirements for objectivity and verification. Given its placement next to other claims about the paranormal and aliens, it appears to be meant more for amusement than for factual reporting. By passing off conjecture as fact and eschewing the exacting standards of evidence necessary for valid scientific inquiry, this kind of reporting feeds pseudoscientific ideas. It illustrates how the media may take advantage of the public's interest in Bigfoot and cryptids while downplaying the significance of confirmed scientific data.

12 notes


Text

Learning And Growing Witchcraft And Occult Knowledge

(Full Piece Below the Cut)

The desire for information is a desire that should always be encouraged. The desire for new information is important. However, we should never shut down the desire for old information either.

Information that we obtain (or knowledge that we acquire) helps us better understand the experiences and situations or the subjects that we are reaching towards in an effort to better our own work or our own experiences. Experience is as important as the knowledge we must obtain beforehand and the knowledge we obtain from those experiences as well. Knowing how to check our own work is a skill that we do not see as often as we should.

Many occult and witchcraft practitioners will use this idea of “checking” either an author’s work or their own work by looking at the face value of content that is written and then executing those materials. If the ritual, spell, or experience written ends up matching their own experience then those materials and that content of knowledge often gets a “free pass” as being accurate and adequate data or information.

This is not how validation of educational materials in the occult world actually works. But it is what everyone assumes. If you can test it, then certainly it’s “accurate”, “correct”, “informative”, or “appropriate”. This is the worst thing you can do to validate other materials, works or practices that are published in the Wild West of internet accessible Occult information or even budding authors.

This is not to say that it is not a part of the process that goes into verifications of other authors or published works. The aforementioned method plays a role in how the verification and validations of other works and knowledge may function and can be applied. However, this does not mean that they may remain consistent. Other methods of verification are important such as eliminating author biases before approaching, comparing the same content to other authors or sources of information, or comparing understandings of knowledge and information from other parties that also have been made privy to the same data or experience you are looking to verify.

It is imperative that you think critically and observe possible biases, current knowledge bases, and experience levels when you are taking in information. From there, you should acknowledge that the information you currently have may become outdated. I cannot stress enough how important it is for any practitioner (or researcher of any breed) to revisit the information and knowledge they already possess and challenge their own understanding of it. How has the information you already learned changed? How has your own bias changed the way you perceive the narrative of the information and knowledge? How relevant is the knowledge you already have? Challenging your already obtained knowledge and information is just as, if not more, important than obtaining more additional information. You can “know” everything yet understand none of it. If you lack a deep and intimate understanding of the materials you “know”, what good does that knowledge truly offer you in practice?

It’s incredibly easy for people to assume that everything they currently have in their arsenal as well as the understanding of what they currently have is a static and well built brick house. Information is dynamic and comprehensive understanding of information should be just as fluid as it. Failing to set your own checks and balances as a practitioner of any occult art or craft is a recipe for you to fall into stagnation in your learning experience as well as your tangible experiences with your work and your practice. If you read something, challenge it.

Learn to ask critical questions about knowledge and information and its origins:

Where else does this information arise?

Is it the author’s personal gnosis?

Is it developed from another culture?

If it is developed from another culture, what lens and or bias did the author write it from?

Was the information written by a native practitioner of that practice?

Does the information that was presented to you come from an author that understands the spiritual culture of a group or people by being a part of it or raised within it and ordained within it?

What are the linguistic backgrounds of your author?

What other texts or information has the author of your information written?

What is the quality of which the information is presented to you?

Is it an academic paper or is it a personal study?

When was this information and knowledge published?

What motivated the author of the information to present it to the public?

What does the knowledge and information represent to you and what has caused you to desire this information?

Why do you like the materials that are being presented to you?

Think about what, within a piece of information or knowledge, you like or dislike. It is common for us to desire to consume nothing but knowledge and information in the witchcraft and occult communities that “suits our aesthetic and personality”. This means we will lean our lens of focus to suit a particular style and targeted degree of writing. Choosing to focus on and favour materials that cater to your own personal pleasure helps build an incredible, well supported, and narrow lens through which you will fail to understand broader subjects. It’s an excellent way to prevent yourself from expanding into additional ways of thinking, seeing, and experiencing your craft. As the lens becomes narrower, you will find that your understanding of other subjects and information becomes far more difficult to broaden due to the blinders you chose to wear. This is how many practitioners end up obsessed with materials or content from authors and individuals that have links to concerning or otherwise generally unacceptable sources.

Most sources that are deemed unacceptable fall into the category of occult materials that cater to prejudiced, racist, dogmatic, and discriminatory views. However, a narrowed lens through which the occult and witchcraft are viewed provides the perfect environment for a denser blend of ignorance to take root in a practitioner's way of thinking.

Now, this does not always happen, but the environment that is created by attempting to curate your personal knowledge while lacking the capacity to challenge it has a reputation for breeding patterns of thinking that make it far easier to slip into those predatory sources of knowledge. Critical thinking and knowing how to challenge, cross reference, and examine sources of knowledge will provide a broader lens through which you can examine all of your source materials.

This particular step is lacking in most occult practitioners because of the sheer weight placed on the concept of seniority. I have personally been educated on subjects by practitioners who had been studying for three years when my own 20 years, at minimum, of experience fell short in that particular subject. Dedication to appreciating knowledge and information is important, and being open to obtaining information from any and all sources while simultaneously examining it with a critical appraisal of its weight can hold more value than the opinion of someone who thinks of themself as superior simply due to a number of solar rotations.

In the day and age of “Get Information Quick” and the desire for internet and social media attention, the vast majority of what is published in the thumb-flicking, thirty-second babblings of strangers online is not reliable, no matter how you want to spin it. Building your own knowledge base upon a small collection of books and pretty faces using internet filters with extra glitter and glam as your “Source”, without challenging it, is not only a recipe for disaster but an ignorant way to approach and develop your work or practice. Your Work and Practice deserve far more respect than that.

Always revisit old knowledge and information. You never know what you already know and how what you already know can teach you something new.

#witchcraft#death witch#luciferian witch#witchblr#witch blog#witches#occultism#magick#occult#occult community#witch community#baby witch

12 notes


Text

No evidence of inflated mortality reporting from the Gaza Ministry of Health

Benjamin Q Huynh, Elizabeth T Chin, Paul B Spiegel

Published: December 06, 2023

Mortality reporting is a crucial indicator of the severity of a conflict setting, but it can also be inflated or under-reported for political purposes. Amidst the ongoing conflict in Gaza, some political parties have indicated scepticism about the reporting of fatalities by the Gaza Ministry of Health (MoH).

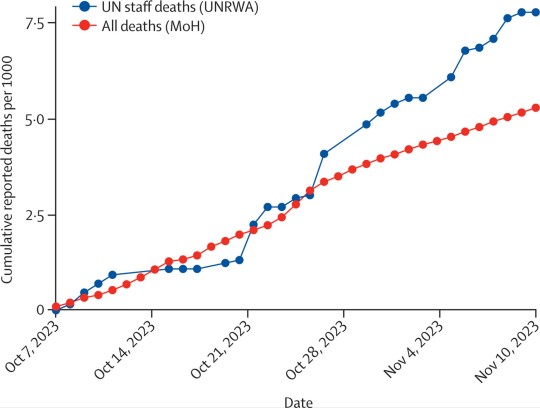

The Gaza MoH has historically reported accurate mortality data, with discrepancies between MoH reporting and independent United Nations analyses ranging from 1·5% to 3·8% in previous conflicts. A comparison between the Gaza MoH and Israeli Foreign Ministry mortality figures for the 2014 war yielded an 8·0% discrepancy [2]. Public scepticism of the current reports by the Gaza MoH might undermine the efforts to reduce civilian harm and provide life-saving assistance. Using publicly available information, we compared the Gaza MoH's mortality reports with a separate source of mortality reporting and found no evidence of inflated rates. We conducted a temporal analysis of cumulative-reported mortality within Gaza for deaths of Gazans as reported by the MoH and reported staff member deaths from the United Nations Relief and Works Agency for Palestine Refugees in the Near East (UNRWA), from Oct 7 to Nov 10, 2023. These two data sources used independent methods of mortality verification, enabling assessment of reporting consistency.

We observed similar daily trends, indicating temporal consistency in response to bombing events until a spike of UNRWA staff deaths occurred on Oct 26, 2023, when 14 UNRWA staff members were killed, of whom 13 died in their homes due to bombings. Subsequent attacks raised the UNRWA death rate while MoH hospital services diminished until MoH communications and mortality reporting collapsed on Nov 10, 2023. During this period, mortality might have been under-reported by the Gaza MoH due to decreased capacity. Cumulative reported deaths were 101 UNRWA staff members and 11,078 Gazans over 35 days. By comparison, an average of 4884 registered deaths occurred per year in 2015–19 in Gaza.

Cumulative reported mortality rates (Oct 7–Nov 10, 2023)

Data are calculated by separate death reports from the Gaza Ministry of Health (MoH; red line) and the United Nations Relief and Works Agency for Palestine Refugees in the Near East (UNRWA; blue line).

If MoH mortality figures were substantially inflated, the MoH mortality rates would be expected to be higher than the UNRWA mortality rates. Instead, the MoH mortality rates are lower than the rates reported for UNRWA staff (5.3 deaths per 1000 vs 7.8 deaths per 1000, as of Nov 10, 2023). Hypothetically, if MoH mortality data were inflated from, for example, an underlying value of 2–4 deaths per 1000, it would imply that UNRWA staff mortality risk is 2.0–3.9 times higher than that of the public. This scenario is unlikely as many UNRWA staff deaths occurred at home or in areas with high civilian populations, such as in schools or shelters.
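
The arithmetic behind this comparison is a simple rate ratio, reproduced below as a worked check. The two rates and the hypothetical 2–4 per 1000 range come from the text above; the variable and function names are only illustrative.

```python
# Cumulative deaths per 1000 as of Nov 10, 2023, as quoted in the text.
MOH_RATE = 5.3     # Gaza population, reported by the MoH
UNRWA_RATE = 7.8   # UNRWA staff


def rate_ratio(numerator: float, denominator: float) -> float:
    """How many times higher the first rate is than the second."""
    return numerator / denominator


# Observed: UNRWA staff rate relative to the MoH-reported population rate.
observed_ratio = rate_ratio(UNRWA_RATE, MOH_RATE)

# Hypothetical: if the underlying population rate were only 2-4 per 1000.
hypothetical_ratios = {
    true_rate: rate_ratio(UNRWA_RATE, true_rate) for true_rate in (4.0, 2.0)
}

for true_rate, ratio in hypothetical_ratios.items():
    print(f"underlying {true_rate:.0f} per 1000 -> UNRWA risk {ratio:.2f}x")
print(f"as reported ({MOH_RATE} per 1000) -> UNRWA risk {observed_ratio:.2f}x")
```

With the reported figures the UNRWA staff rate is roughly 1.5 times the population rate; under the inflation hypothesis it would have to be about 2.0 to 3.9 times higher, which is the range the authors describe as unlikely.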

Mortality reporting is difficult to conduct in ongoing conflicts. Initial news reports might be imprecise, and subsequent verified reports might undercount deaths that are not recorded by hospitals or morgues, such as persons buried under rubble. However, difficulties obtaining accurate mortality figures should not be interpreted as intentionally misreported data. Although valid mortality counts are important, the situation in Gaza is severe, with high levels of civilian harm and extremely restricted access to aid. Efforts to dispute mortality reporting should not distract from the humanitarian imperative to save civilian lives by ensuring appropriate medical supplies, food, water, and fuel are provided immediately.

#show this academic journal every time someone said they doubt the number of casualties in gaza#its even UNDERREPORTED#BC ISRAEL KEEP KILLING HCW AND UN STAFF#free palestine#from the river to the sea palestine will be free#palestine#gaza

39 notes


Text

Digital Wallets Now Accept Driver’s Licenses in Canada

Introduction:

2025 marks a digital milestone—most Canadian provinces now allow residents to carry their driver’s licenses on their smartphones via secure digital wallets. The convenience comes with strict encryption standards and broader verification support.

Key Points:

Integration with Apple & Google Wallets: Drivers in Ontario, BC, and Alberta can now download official license copies into their smartphone’s wallet apps. licenseprep.ca has full step-by-step setup guides for each platform.

QR Code Verification: Digital licenses include a scannable QR code, allowing police or traffic authorities to validate authenticity during stops—no physical card required.

Offline Access & Encryption: Licenses remain accessible even when offline, with all data encrypted and verified using blockchain-backed government systems.

Instant Update Sync: Address changes, endorsements, and renewals appear immediately in the digital version, helping drivers stay compliant without printing updated cards. Changes initiated through licenseprep.ca reflect instantly.

Accepted Across More Locations: Car rental companies, road authorities, and even insurance agencies now accept digital licenses, though international travel still requires a physical copy.

2 notes


Text

How to Ensure Compliance with ZATCA Phase 2 Requirements

As Saudi Arabia pushes toward a more digitized and transparent tax system, the Zakat, Tax and Customs Authority (ZATCA) continues to roll out significant reforms. One of the most transformative changes has been the implementation of the electronic invoicing system. While Phase 1 marked the beginning of this journey, ZATCA Phase 2 brings a deeper level of integration and regulatory expectations.

If you’re a VAT-registered business in the Kingdom, this guide will help you understand exactly what’s required in Phase 2 and how to stay compliant without unnecessary complications. From understanding core mandates to implementing the right technology and training your staff, we’ll break down everything you need to know.

What Is ZATCA Phase 2?

ZATCA Phase 2 is the second stage of Saudi Arabia’s e-invoicing initiative. While Phase 1, which began in December 2021, focused on the generation of electronic invoices in a standard format, Phase 2 introduces integration with ZATCA’s system through its FATOORA platform.

Under Phase 2, businesses are expected to:

Generate invoices in a predefined XML format

Digitally sign them with a ZATCA-issued cryptographic stamp

Integrate their invoicing systems with ZATCA to transmit and validate invoices in real-time

The primary goal of Phase 2 is to enhance the transparency of commercial transactions, streamline tax enforcement, and reduce instances of fraud.

Who Must Comply?

Phase 2 requirements apply to all VAT-registered businesses operating in Saudi Arabia. However, the implementation is being rolled out in waves. Businesses are notified by ZATCA of their required compliance deadlines, typically with at least six months' notice.

Even if your business hasn't been selected for immediate implementation, it's crucial to prepare ahead of time. Early planning ensures a smoother transition and helps avoid last-minute issues.

Key Requirements for Compliance

Here’s a breakdown of the main technical and operational requirements under Phase 2.

1. Electronic Invoicing Format

Invoices must now be generated in XML format that adheres to ZATCA's technical specifications. These specifications cover:

Mandatory fields (buyer/seller details, invoice items, tax breakdown, etc.)

Invoice types (standard tax invoice for B2B, simplified for B2C)

Structure and tags required in the XML file
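
To make the idea of mandatory fields and a structured XML file concrete, here is a minimal sketch in Python. It is not ZATCA's schema: the element names, the field list, and the sample invoice are all invented for illustration, and the real structure and tags should be taken from ZATCA's published technical specifications.

```python
from decimal import Decimal
import xml.etree.ElementTree as ET

# Hypothetical field names; the official specification defines the real ones.
REQUIRED = ("seller_name", "seller_vat_number", "buyer_name", "issue_datetime", "lines")


def build_invoice_xml(invoice: dict) -> str:
    """Validate mandatory fields, then emit a simple XML document."""
    missing = [name for name in REQUIRED if not invoice.get(name)]
    if missing:
        raise ValueError("missing mandatory fields: " + ", ".join(missing))

    root = ET.Element("Invoice", {"type": invoice.get("invoice_type", "standard")})
    for name in REQUIRED[:-1]:
        ET.SubElement(root, name).text = str(invoice[name])

    net_total = Decimal("0")
    vat_total = Decimal("0")
    lines_el = ET.SubElement(root, "lines")
    for line in invoice["lines"]:
        # Decimal avoids binary floating-point rounding in monetary amounts.
        net = Decimal(line["unit_price"]) * Decimal(line["quantity"])
        vat = (net * Decimal(line["vat_rate"])).quantize(Decimal("0.01"))
        line_el = ET.SubElement(lines_el, "line")
        ET.SubElement(line_el, "description").text = line["description"]
        ET.SubElement(line_el, "net").text = str(net)
        ET.SubElement(line_el, "vat").text = str(vat)
        net_total += net
        vat_total += vat

    ET.SubElement(root, "net_total").text = str(net_total)
    ET.SubElement(root, "vat_total").text = str(vat_total)
    return ET.tostring(root, encoding="unicode")


# Made-up sample invoice.
example = {
    "seller_name": "Example Trading Co.",
    "seller_vat_number": "300000000000003",
    "buyer_name": "Example Buyer LLC",
    "issue_datetime": "2025-01-01T10:00:00Z",
    "lines": [
        {"description": "Consulting", "unit_price": "100.00",
         "quantity": "2", "vat_rate": "0.15"},
    ],
}
xml_text = build_invoice_xml(example)
```

Rejecting an invoice locally when a mandatory field is missing is cheaper than discovering the same problem as a validation error after submission.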

2. Digital Signature

Every invoice must be digitally signed using a cryptographic stamp. This stamp must be issued and registered through ZATCA’s portal. The digital signature ensures authenticity and protects against tampering.

3. Integration with ZATCA’s System

You must integrate your e-invoicing software with the FATOORA platform to submit invoices in real-time for validation and clearance. For standard invoices, clearance must be obtained before sharing them with your customers.

4. QR Code and UUID

Simplified invoices must include a QR code to facilitate easy validation, while all invoices should carry a UUID (Universally Unique Identifier) to ensure traceability.
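
As a rough illustration, the sketch below generates a UUID with Python's standard library and packs a few invoice fields into a base64 tag-length-value (TLV) string of the kind commonly described for ZATCA QR codes. The tag numbering (1 to 5 for seller name, VAT number, timestamp, invoice total, and VAT total) is an assumption to verify against the official QR specification, Phase 2 codes carry additional cryptographic fields not shown here, and the sample values are made up.

```python
import base64
import uuid


def tlv(tag: int, value: str) -> bytes:
    """Encode one field as tag byte + length byte + UTF-8 value."""
    data = value.encode("utf-8")
    if len(data) > 255:
        raise ValueError("value too long for a one-byte length")
    return bytes([tag, len(data)]) + data


def qr_payload(seller_name: str, vat_number: str, timestamp: str,
               total: str, vat_total: str) -> str:
    """Concatenate TLV fields (assumed tags 1..5) and base64-encode them."""
    fields = [seller_name, vat_number, timestamp, total, vat_total]
    raw = b"".join(tlv(tag, value) for tag, value in enumerate(fields, start=1))
    return base64.b64encode(raw).decode("ascii")


def decode_tlv(payload: str) -> dict:
    """Inverse of qr_payload, handy for checking what a scanner would read."""
    raw = base64.b64decode(payload)
    decoded = {}
    index = 0
    while index < len(raw):
        tag, length = raw[index], raw[index + 1]
        decoded[tag] = raw[index + 2:index + 2 + length].decode("utf-8")
        index += 2 + length
    return decoded


invoice_uuid = str(uuid.uuid4())  # random (version 4) identifier for traceability
payload = qr_payload("Example Trading Co.", "300000000000003",
                     "2025-01-01T10:00:00Z", "230.00", "30.00")
fields_read_back = decode_tlv(payload)
```

The resulting string would then be rendered as a QR image by whatever barcode library the invoicing system already uses.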

5. Data Archiving

You must retain and archive your e-invoices in a secure digital format for at least six years, in accordance with Saudi tax law. These records must be accessible for audits or verification by ZATCA.

Step-by-Step Guide to Compliance

Meeting the requirements of ZATCA Phase 2 doesn’t have to be overwhelming. Follow these steps to ensure your business stays on track:

Step 1: Assess Your Current System

Evaluate whether your current accounting or invoicing solution can support XML invoice generation, digital signatures, and API integration. If not, consider:

Upgrading your system

Partnering with a ZATCA-certified solution provider

Using cloud-based software with built-in compliance features

Step 2: Understand Your Implementation Timeline

Once ZATCA notifies your business of its compliance date, mark it down and create a preparation plan. Typically, businesses receive at least six months’ notice.

During this time, you’ll need to:

Register with ZATCA’s e-invoicing platform

Complete cryptographic identity requests

Test your system integration

Step 3: Apply for Cryptographic Identity

To digitally sign your invoices, you'll need to register your system with ZATCA and obtain a cryptographic stamp identity. Your software provider or IT team should initiate this via ZATCA's portal.

Once registered, the digital certificate will allow your system to sign every outgoing invoice.

Step 4: Integrate with FATOORA

Using ZATCA’s provided API documentation, integrate your invoicing system with the FATOORA platform. This step enables real-time transmission and validation of e-invoices. Depending on your technical capacity, this may require support from a solution provider.

Make sure the system can:

Communicate securely over APIs

Handle rejected invoices

Log validation feedback
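
The three capabilities above can be sketched with a small submission wrapper. Everything here is hypothetical: the `send` callable stands in for whatever secure API client your provider supplies, and the response shape (`status`, `errors`) is invented for the example rather than taken from ZATCA's API documentation.

```python
import logging
from typing import Callable

logger = logging.getLogger("einvoice")


def submit_invoice(invoice_id: str, xml: str,
                   send: Callable[[str], dict], max_attempts: int = 3) -> dict:
    """Submit an invoice, retry on connection failures, and log the feedback."""
    last_error = ""
    for attempt in range(1, max_attempts + 1):
        try:
            response = send(xml)
        except ConnectionError as exc:
            # Transport problem: the same XML may succeed on a later attempt.
            last_error = str(exc)
            logger.warning("invoice %s attempt %d failed: %s",
                           invoice_id, attempt, last_error)
            continue

        if response.get("status") == "CLEARED":
            logger.info("invoice %s cleared on attempt %d", invoice_id, attempt)
            return {"invoice_id": invoice_id, "outcome": "cleared",
                    "attempts": attempt, "errors": []}

        # Validation rejection: resending unchanged XML will not help,
        # so record the feedback and hand the invoice back for correction.
        errors = response.get("errors", [])
        logger.error("invoice %s rejected: %s", invoice_id, errors)
        return {"invoice_id": invoice_id, "outcome": "rejected",
                "attempts": attempt, "errors": errors}

    return {"invoice_id": invoice_id, "outcome": "undelivered",
            "attempts": max_attempts, "errors": [last_error]}


# Stand-in transports for a dry run.
def always_cleared(xml: str) -> dict:
    return {"status": "CLEARED"}


def always_rejected(xml: str) -> dict:
    return {"status": "REJECTED", "errors": ["missing buyer VAT number"]}


def always_down(xml: str) -> dict:
    raise ConnectionError("gateway unreachable")


cleared = submit_invoice("INV-1", "<Invoice/>", always_cleared)
rejected = submit_invoice("INV-2", "<Invoice/>", always_rejected)
undelivered = submit_invoice("INV-3", "<Invoice/>", always_down)
```

Separating transport failures from validation rejections matters because only the former are worth retrying automatically.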

Step 5: Conduct Internal Testing

Use ZATCA’s sandbox environment to simulate invoice generation and transmission. This lets you identify and resolve:

Formatting issues

Signature errors

Connectivity problems

Testing ensures that when you go live, everything operates smoothly.

Step 6: Train Your Team

Compliance isn’t just about systems—it’s also about people. Train your finance, IT, and sales teams on how to:

Create compliant invoices

Troubleshoot validation errors

Understand QR codes and UUIDs

Respond to ZATCA notifications

Clear communication helps avoid user errors that could lead to non-compliance.

Step 7: Monitor and Improve

After implementation, continue to monitor your systems and processes. Track metrics like:

Invoice clearance success rates

Error logs

Feedback from ZATCA

This will help you make ongoing improvements and stay aligned with future regulatory updates.
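
A clearance success rate is straightforward to compute from logged outcomes. The sketch below assumes each submission was recorded with one of a few outcome labels; the labels and sample data are illustrative only.

```python
from collections import Counter


def clearance_metrics(outcomes: list) -> dict:
    """Summarise logged submission outcomes into counts and a success rate."""
    counts = Counter(outcomes)
    total = sum(counts.values())
    success_rate = counts["cleared"] / total if total else 0.0
    return {
        "total": total,
        "cleared": counts["cleared"],
        "success_rate": success_rate,
        "by_outcome": dict(counts),
    }


# Hypothetical log for one day.
daily = clearance_metrics(
    ["cleared", "cleared", "rejected", "cleared", "undelivered"]
)
```

Tracking the rate per day or per invoice type makes it easier to spot a regression after a software update or a change in ZATCA's validation rules.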

Choosing the Right Solution Provider

If you don’t have in-house resources to build your own e-invoicing system, consider working with a ZATCA-approved provider. Look for partners that offer:

Pre-certified e-invoicing software

Full API integration with FATOORA

Support for cryptographic signatures

Real-time monitoring dashboards

Technical support and onboarding services

A reliable provider will save time, reduce costs, and minimize the risk of non-compliance.

Penalties for Non-Compliance

Failure to comply with ZATCA Phase 2 can result in financial penalties, legal action, or suspension of business activities. Penalties may include:

Fines for missing or incorrect invoice details

Penalties for not transmitting invoices in real-time

Legal scrutiny during audits

Being proactive is the best way to avoid these consequences.

Final Thoughts

As Saudi Arabia advances toward a fully digital economy, ZATCA Phase 2 is a significant milestone. It promotes tax fairness, increases transparency, and helps modernize the way businesses operate.

While the technical requirements may seem complex at first, a step-by-step approach—combined with the right technology and training—can make compliance straightforward. Whether you're preparing now or waiting for your official notification, don’t delay. Start planning early, choose a reliable system, and make sure your entire team is ready.

With proper preparation, compliance isn’t just possible—it’s an opportunity to modernize your business and build lasting trust with your customers and the government.

2 notes


Text

Domino Presents New Monochrome Inkjet Printer at Labelexpo Southeast Asia 2025

Domino Printing Sciences (Domino) is pleased to announce the APAC launch of its new monochrome inkjet printer, the K300, at Labelexpo Southeast Asia. Building on the success of Domino’s K600i print bar, the K300 has been developed as a compact, flexible solution for converters looking to add variable data printing capabilities to analogue printing lines.

The K300 monochrome inkjet printer will be on display at the Nilpeter stand, booth F32, at Labelexpo Southeast Asia in Bangkok, Thailand from 8th–10th May 2025. The printer will form part of a Nilpeter FA-Line 17” hybrid label printing solution, providing consistent inline overprint of serialised 2D codes. A machine vision inspection system by Domino Company Lake Image Systems will validate each code to ensure reliable scanning by retailers and consumers whilst confirming unique code serialisation.

“The industry move to 2D codes at the point of sale has led to an increase in demand for variable data printing, with many brands looking to incorporate complex 2D codes, such as QR codes powered by GS1, into their packaging and label designs,” explains Alex Mountis, Senior Product Manager at Domino. “Packaging and label converters need a versatile, reliable, and compact digital printing solution to respond to these evolving market demands. We have developed the K300 with these variable data and 2D code printing opportunities in mind.”

The K300 monochrome inkjet printer can be incorporated into analogue printing lines to customise printed labels with variable data, such as best before dates, batch codes, serialised numbers, and 2D codes. The compact size of the 600dpi high-resolution printhead – 2.1″ / 54mm – offers enhanced flexibility with regards to positioning on the line, including the opportunity to combine two print stations across the web width to enable printing of two independent codes.

Operating at high speeds up to 250m / 820′ per minute, the K300 monochrome inkjet printer has been designed to match flexographic printing speeds. This means there is no need to slow down the line when adding variable data. Domino’s industry-leading ink delivery technology, including automatic ink recirculation and degassing, helps to ensure consistent performance and excellent reliability, while reducing downtime due to maintenance. The printer has been designed to be easy to use, with intuitive setup and operation via Domino’s smart user interface.

“The K300 will open up new opportunities for converters. They can support their brand customers with variable data 2D codes, enabling supply chain traceability, anti-counterfeiting, and consumer engagement campaigns,” adds Mountis. “The versatile printer can also print variable data onto labels, cartons, and flatpack packaging as part of an inline or near-line late-stage customisation process in a manufacturing facility, lowering inventory costs and reducing waste.”

Code verification is an integral part of any effective variable data printing process. A downstream machine vision inspection system, such as the Lake Image Systems’ model showcased alongside the K300, enables converters and brands who add 2D codes and serialisation to labels and packaging to validate each printed code.
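
The uniqueness part of that validation can be illustrated with a short check over a stream of decoded codes. This is only a sketch of the idea, not how any Domino or Lake Image Systems product works; the input format (a list of decoded strings, with `None` for a code the camera could not read) is an assumption.

```python
def check_serialisation(scanned_codes: list) -> dict:
    """Report unreadable codes and duplicate serial codes by label position."""
    seen = set()
    duplicates = []
    unreadable = []
    for position, code in enumerate(scanned_codes):
        if code is None:
            unreadable.append(position)
        elif code in seen:
            duplicates.append((position, code))
        else:
            seen.add(code)
    return {
        "unique": len(seen),
        "duplicates": duplicates,
        "unreadable": unreadable,
        "passed": not duplicates and not unreadable,
    }


# Hypothetical scan results for five labels.
report = check_serialisation(["SN001", "SN002", None, "SN002", "SN003"])
```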

Mark Herrtage, Asia Business Development Director, Domino, concludes: “We are committed to helping our customers stay ahead in a competitive market, and are continuously working to develop new products that will help them achieve their business objectives. Collaborating with Lake Image Systems enables us to deliver innovative, complete variable data printing and code verification solutions to meet converters’ needs. We are delighted to be able to showcase an example of this collaboration, featuring the .”

To find more information about the K300 monochrome printer please visit: https://dmnoprnt.com/38tcze3r

#inkjet printer#variable data printing#biopharma packaging#glass pharmaceutical packaging#pharmaceutical packaging and labelling#Labelexpo Southeast Asi

2 notes
