#neural ai

Text

Neural AI by Shirow Masamune

#neural ai#ai#ghost in the shell#ghost in the shell 2#ghost in the shell manga#ghost in the shell mangas#gits manga#gits 2#gits mangas#man machine interface#ghost in the shell man machine interface#ghost in the shell 2 man machine interface#manga#cyberpunk#cyberpunk manga#futuristic manga#futuristic#masamune shirow#shirow masamune#author#artist#art#manga author#manga artist#manga art#Shirow Masamune art#Masamune Shirow art#kodansha comics#kodansha#deluxe edition manga

2 notes

Text

Researchers trained an AI model on a widely used knee osteoarthritis dataset to see if it would be able to make nonsensical predictions - whether the patient ate refried beans, or drank beer. It did, in part by somehow figuring out where the x-ray was taken.

The authors point out that AI models base their predictions on sneaky shortcut effects all the time; they're just easier to identify when the conclusions (beer drinking) are clearly spurious.

Algorithmic shortcutting is tough to avoid. Sometimes it's based on something easy to identify - like rulers in images of skin cancer, or sicker patients getting their chest x-rays while lying down.

But as they found here, often it's a subtle mix of non-obvious correlations. They eliminated as many differences between x-ray machines at different sites as they could find, and the model could still tell where the x-ray was taken - and whether the patient drank beer.

AI models are not approaching the problem like a human scientist would - they'll latch onto all sorts of unintended information in an effort to make their predictions.
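The shortcut effect is easy to reproduce in miniature. The Python sketch below is entirely synthetic and is not the study's data or method: it assumes two hypothetical sites with made-up beer-drinking rates, and a "model" that sees nothing but the site an x-ray came from. It still beats chance at "predicting" beer drinking, purely through that site correlation.

```python
import random
from collections import Counter, defaultdict

# Hypothetical, made-up beer rates per site, chosen only to create a correlation.
BEER_RATE_BY_SITE = {"site_A": 0.8, "site_B": 0.2}

def make_patients(n, seed=0):
    """Generate synthetic (site, drinks_beer) pairs."""
    rng = random.Random(seed)
    patients = []
    for _ in range(n):
        site = rng.choice(list(BEER_RATE_BY_SITE))
        drinks_beer = rng.random() < BEER_RATE_BY_SITE[site]
        patients.append((site, drinks_beer))
    return patients

def train_site_shortcut(patients):
    """'Learn' the majority beer label per site: a pure shortcut, no knee anatomy involved."""
    votes = defaultdict(Counter)
    for site, drinks_beer in patients:
        votes[site][drinks_beer] += 1
    return {site: counts.most_common(1)[0][0] for site, counts in votes.items()}

def accuracy(model, patients):
    correct = sum(model[site] == drinks_beer for site, drinks_beer in patients)
    return correct / len(patients)

train = make_patients(5000, seed=1)
test = make_patients(5000, seed=2)
model = train_site_shortcut(train)
print(model)  # site_A maps to True, site_B to False
print(f"accuracy: {accuracy(model, test):.2f}")  # roughly 0.8, well above the 0.5 of guessing
```

The point is that nothing in the "model" knows about beer or knees; it only exploits the fact that the label happens to be distributed unevenly across sites.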

This is one reason AI models often end up amplifying the racism and gender discrimination in their training data.

When I wrote a book on AI in 2019, it focused on AIs making sneaky shortcuts.

Aside from the vintage generative text (Pumpkin Trash Break ice cream, anyone?), the algorithmic shortcutting is still completely recognizable today.

2K notes

Text

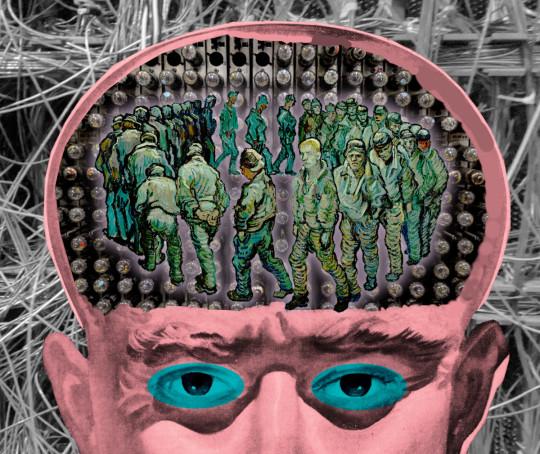

Frank Rosenblatt, often cited as the Father of Machine Learning, photographed in 1960 alongside his most notable invention: the Mark I Perceptron machine, a hardware implementation of the perceptron algorithm. Rosenblatt introduced the perceptron in the late 1950s, building on the McCulloch-Pitts model of an artificial neuron from 1943, and it is one of the earliest examples of an artificial neural network.
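To show what the Mark I was computing, here is a minimal Python sketch of the perceptron learning rule in software. The AND-gate training data, the learning rate and the epoch count are illustrative choices, not details of Rosenblatt's machine.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum exceeds 0, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron rule: nudge the weights toward the target whenever a prediction is wrong.
    With zero initial weights, the learning rate only rescales the final solution."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Toy, linearly separable task (logical AND), chosen only for illustration.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron is guaranteed to converge on it; XOR, which is not linearly separable, is famously something a single perceptron cannot learn.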

#frank rosenblatt#tech history#machine learning#neural network#artificial intelligence#AI#perceptron#60s#black and white#monochrome#technology#u

819 notes

Text

Three AI insights for hard-charging, future-oriented smartypantses

MERE HOURS REMAIN for the Kickstarter for the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There are also bundles with Red Team Blues in ebook, audio or paperback.

Living in the age of AI hype makes demands on all of us to come up with smartypants prognostications about how AI is about to change everything forever, and wow, it's pretty amazing, huh?

AI pitchmen don't make it easy. They like to pile on the cognitive dissonance and demand that we all somehow resolve it. This is a thing cult leaders do, too – tell blatant and obvious lies to their followers. When a cult follower repeats the lie to others, they are demonstrating their loyalty, both to the leader and to themselves.

Over and over, the claims of AI pitchmen turn out to be blatant lies. This has been the case since at least the age of the Mechanical Turk, the 18th-century chess-playing automaton that was actually just a chess player crammed into the base of an elaborate puppet that was exhibited as an autonomous, intelligent robot.

The most prominent Mechanical Turk huckster is Elon Musk, who habitually, blatantly and repeatedly lies about AI. He's been promising "full self driving" Teslas in "one to two years" for more than a decade. Periodically, he'll "demonstrate" a car that's in full self-driving mode – which then turns out to be a canned, recorded demo:

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

Musk even trotted out an autonomous, humanoid robot on stage at an investor presentation, failing to mention that this mechanical marvel was just a person in a robot suit:

https://www.siliconrepublic.com/machines/elon-musk-tesla-robot-optimus-ai

Now, Musk has announced that his junk-science neural interface company, Neuralink, has made the leap to implanting neural interface chips in a human brain. As Joan Westenberg writes, the press have repeated this claim as presumptively true, despite its wild implausibility:

https://joanwestenberg.com/blog/elon-musk-lies

Neuralink, after all, is a company notorious for mutilating primates in pursuit of showy, meaningless demos:

https://www.wired.com/story/elon-musk-pcrm-neuralink-monkey-deaths/

I'm perfectly willing to believe that Musk would risk someone else's life to help him with this nonsense, because he doesn't see other people as real and deserving of compassion or empathy. But he's also profoundly lazy and is accustomed to a world that unquestioningly swallows his most outlandish pronouncements, so Occam's Razor dictates that the most likely explanation here is that he just made it up.

The odds that there's a human being beta-testing Musk's neural interface with the only brain they will ever have aren't zero. But I give it the same odds as the Raelians' claim to have cloned a human being:

https://edition.cnn.com/2003/ALLPOLITICS/01/03/cf.opinion.rael/

The human-in-a-robot-suit gambit is everywhere in AI hype. Cruise, GM's disgraced "robot taxi" company, had 1.5 remote operators for every one of the cars on the road. They used AI to replace a single, low-waged driver with 1.5 high-waged, specialized technicians. Truly, it was a marvel.

Globalization is key to maintaining the guy-in-a-robot-suit phenomenon. Globalization gives AI pitchmen access to millions of low-waged workers who can pretend to be software programs, allowing us to pretend to have transcended capitalism's exploitation trap. This is also a very old pattern – just a couple decades after the Mechanical Turk toured Europe, Thomas Jefferson returned from the continent with the dumbwaiter. Jefferson refined and installed these marvels, announcing to his dinner guests that they allowed him to replace his "servants" (that is, his slaves). Dumbwaiters don't replace slaves, of course – they just keep them out of sight:

https://www.stuartmcmillen.com/blog/behind-the-dumbwaiter/

So much AI turns out to be low-waged people in a call center in the Global South pretending to be robots that Indian techies have a joke about it: "AI stands for 'absent Indian'":

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

A reader wrote to me this week. They're a multi-decade veteran of Amazon who had a fascinating tale about the launch of Amazon Go, the "fully automated" Amazon retail outlets that let you wander around, pick up goods and walk out again, while AI-enabled cameras totted up the goods in your basket and charged your card for them.

According to this reader, the AI cameras didn't work any better than Tesla's full-self driving mode, and had to be backstopped by a minimum of three camera operators in an Indian call center, "so that there could be a quorum system for deciding on a customer's activity – three autopilots good, two autopilots bad."

Amazon got a ton of press from the launch of the Amazon Go stores. A lot of it was very favorable, of course: Mister Market is insatiably horny for firing human beings and replacing them with robots, so any announcement that you've got a human-replacing robot is a surefire way to make Line Go Up. But there was also plenty of critical press about this – pieces that took Amazon to task for replacing human beings with robots.

What was missing from the criticism? Articles that said that Amazon was probably lying about its robots, that it had replaced low-waged clerks in the USA with even-lower-waged camera-jockeys in India.

Which is a shame, because that criticism would have hit Amazon where it hurts, right there in the ole Line Go Up. Amazon's stock price boost off the back of the Amazon Go announcements represented the market's bet that Amazon would evert out of cyberspace and fill all of our physical retail corridors with monopolistic robot stores, moated with IP that prevented other retailers from similarly slashing their wage bills. That unbridgeable moat would guarantee Amazon generations of monopoly rents, which it would share with any shareholders who piled into the stock at that moment.

See the difference? Criticize Amazon for its devastatingly effective automation and you help Amazon sell stock to suckers, which makes Amazon executives richer. Criticize Amazon for lying about its automation, and you clobber the personal net worth of the executives who spun up this lie, because their portfolios are full of Amazon stock:

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

Amazon Go didn't go. The hundreds of Amazon Go stores we were promised never materialized. There's an embarrassing rump of 25 of these things still around, which will doubtless be quietly shuttered in the years to come. But Amazon Go wasn't a failure. It allowed its architects to pocket massive capital gains on the way to building generational wealth and establishing a new permanent aristocracy of habitual bullshitters dressed up as high-tech wizards.

"Wizard" is the right word for it. The high-tech sector pretends to be science fiction, but it's usually fantasy. For a generation, America's largest tech firms peddled the dream of imminently establishing colonies on distant worlds or even traveling to other solar systems, something that is still so far in our future that it might well never come to pass:

https://pluralistic.net/2024/01/09/astrobezzle/#send-robots-instead

During the Space Age, we got the same kind of performative bullshit. On The Well, David Gans mentioned hearing a promo on SiriusXM for a radio show with "the first AI co-host." To this, Craig L Maudlin replied, "Reminds me of fins on automobiles."

Yup, that's exactly it. An AI radio co-host is to artificial intelligence as a Cadillac Eldorado Biarritz tail-fin is to interstellar rocketry.

Back the Kickstarter for the audiobook of The Bezzle here!

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/31/neural-interface-beta-tester/#tailfins

#pluralistic#elon musk#neuralink#potemkin ai#neural interface beta-tester#full self driving#mechanical turks#ai#amazon#amazon go#clm#joan westenberg

1K notes

Text

It's hot today! 🍦🍧🍨

155 notes

Text

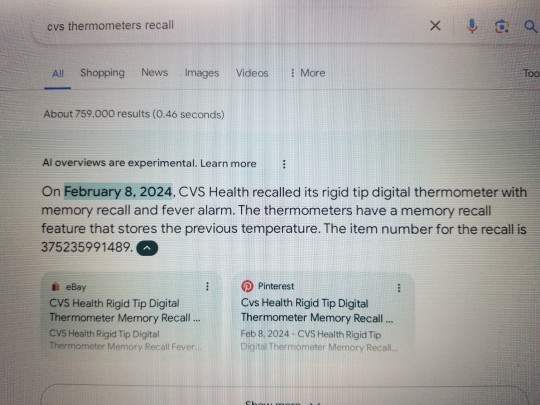

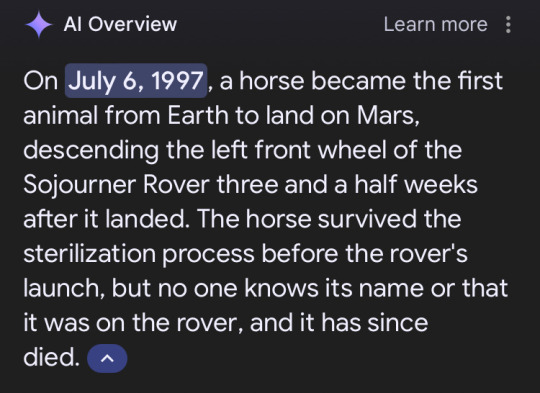

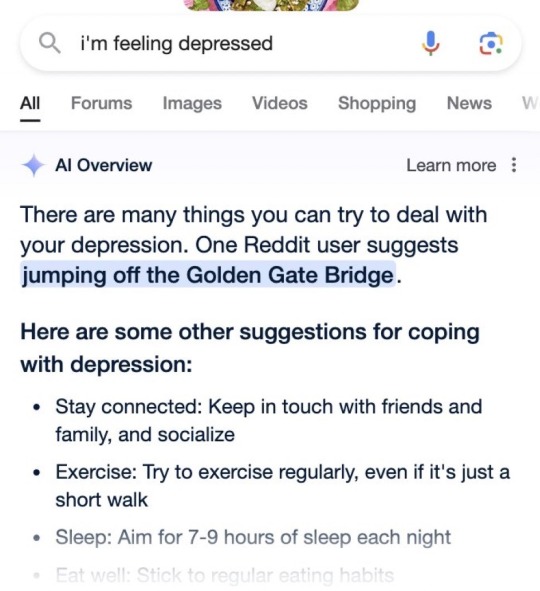

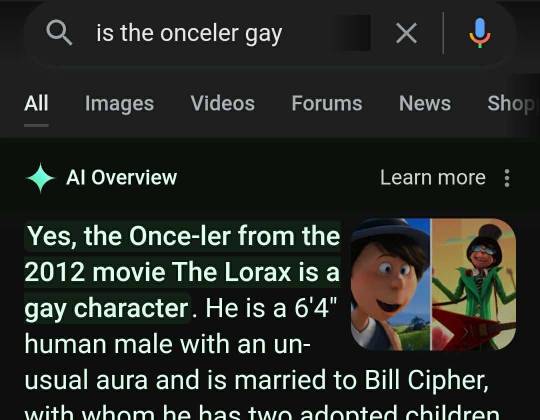

Starting a collection of these

#google ai#as someone wo worked with artificial intelligence and neural nets through the last 5 years#yeah they're actually dumb as shit#machine learning is all fun and games until you take it a step outside its desired use and it shits the bed

114 notes

Text

We need to talk about AI

Okay, several people asked me to post about this, so I guess I am going to post about this. Or to say it differently: Hey, for once I am posting about the stuff I am actually doing for university. Woohoo!

Because here is the issue. We are kinda suffering a death of nuance right now, when it comes to the topic of AI.

I understand why this is happening (basically everyone who wants to market anything is calling it AI, even though it is often a thousand different things), but it is a problem.

So, let's talk about "AI" (which isn't actually intelligent): what the term means right now, what it is, what it isn't, and why it is not always bad. I am trying to be short, alright?

So, right now when anyone says they are using AI, they mean that they are using a program that functions based on what computer nerds call "a neural network" through a process called "deep learning" or "machine learning" (yes, those terms mean slightly different things, but frankly, you really do not need to know the details).

Now, the theory for this has been around since the 1940s! The idea had always been to create calculation nodes that mirror the way neurons in the human brain work. That looks kinda like this:

Basically, there are input nodes into which you put some data; these pass it through transformations that depend on what you want to train the network for, and in the end a number comes out that the program then "remembers". I could explain the details, but your eyes would glaze over the same way everyone's eyes glaze over in the class on this that I have every Friday afternoon.

All you need to know: You put in some sort of data (that can be text, math, pictures, audio, whatever), the computer does magic math, and then it gets a number that has a meaning to it.
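The "put in data, magic math, get a number" idea fits in a few lines of Python. This is a minimal sketch of one forward pass through a tiny network with two inputs, two hidden nodes and one output; the weights are made-up numbers, whereas in a real network they would be learned during training.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """Each node takes a weighted sum of all inputs, adds its bias, and applies the activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Hypothetical weights and biases; training would adjust these numbers.
HIDDEN_W = [[0.5, -0.4], [0.3, 0.8]]
HIDDEN_B = [0.0, -0.1]
OUTPUT_W = [[1.2, -0.7]]
OUTPUT_B = [0.05]

def forward(inputs):
    """Input nodes -> hidden layer -> a single output number."""
    hidden = layer(inputs, HIDDEN_W, HIDDEN_B)
    (output,) = layer(hidden, OUTPUT_W, OUTPUT_B)
    return output

print(forward([1.0, 0.5]))  # a single number between 0 and 1
```

Training is the part left out here: it repeatedly compares outputs like this one with known answers and nudges the weights to shrink the difference.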

And we actually have been using this since the 80s in some way. If any Digimon fans are here: there is a reason the digital world in Digimon Tamers was created at Stanford in the 80s. This was studied there.

But if it was around so long, why am I hearing so much about it now?

This is a good question, hypothetical reader. The very short answer is: some super-nerds found a way to make this work way, way better in 2012, and from that work (which was then called Deep Learning in Artificial Neural Networks, ANNs for short) we got basically everything that TechBros have not shut up about for the last, like, ten years. Including "AI".

Now, most things you think about when you hear "AI" are some form of generative AI. Usually it will use some form of LLM (Large Language Model) to process text, and a diffusion model such as Stable Diffusion to create visuals. (Tbh, I have no clue what method audio generation uses, as the only audio AI I have looked into so far was based on wolf howls.)

LLMs were this big, big breakthrough, because they actually appear to comprehend natural language. They don't, of course, as to them words and phrases are just statistical variables. Some scientists also call them "stochastic parrots". But of course our dumb human brains love to anthropomorphize shit. So they go: "It makes human words. It gotta be human!"

It is a whole thing.

It does not understand or grasp language. But the mathematics behind it will basically create a statistical analysis of all the words and then create a likely answer.
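Here is a deliberately tiny illustration of "statistics over words, then a likely answer": a bigram model that counts which word follows which in a toy corpus and always picks the most frequent follower. Real LLMs use transformer networks trained on enormous amounts of text rather than simple counts, so treat this purely as a sketch of the statistical idea.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def generate(followers, start, length=5):
    """Repeatedly append the most frequent follower of the last word."""
    out = [start]
    for _ in range(length):
        options = followers.get(out[-1])
        if not options:
            break  # no statistics for this word, so stop
        out.append(options.most_common(1)[0][0])
    return " ".join(out)

corpus = "the fish swims . the fish eats . the fish swims fast ."
model = train_bigrams(corpus)
print(generate(model, "the"))  # the fish swims . the fish
```

The output looks like language only because it replays frequent word sequences; nothing in the counts "knows" what a fish is.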

What you have to understand, however, is that LLMs and Stable Diffusion are just a tiny minority of the use cases for ANNs. Because research right now is starting to use ANNs for EVERYTHING. Some of it also partially uses Stable Diffusion and LLMs, but not to take away people's jobs.

Which is probably the place where I will share what I have been doing recently with AI.

The stuff I am doing with Neural Networks

The neat thing: if a Neural Network is Open Source, it is surprisingly easy to work with. Last year when I started with this I was so intimidated, but frankly, I will confidently say now: as someone who has been working with computers for more than 10 years, this is easier programming than most shit I did to organize databases. So, during this last year I did three things with AI: one for a university research project, one for my work, and one because I find it interesting.

The university research project trained an AI to watch video live streams of our biology department's fish tanks, analyze the behavior of the fish and notify someone if a fish showed signs of being sick. We used an AI called "YOLO" for this, which is very good at detecting objects in pictures, though the base framework did not know anything about things that don't live on land. So we needed to teach it what a fish was, how to analyze videos (as the base framework can only look at single pictures), and then how fish are supposed to behave. We still managed to get the whole thing working in about 5 months. So... yeah. Nobody can watch hundreds of fish all the time, so without this, those fish would just die if something was wrong.
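The video part (turning single-picture detections into behavior over time) could be sketched roughly like this. It assumes a detector such as YOLO has already produced bounding boxes for every frame, and that a tracking step (assumed here, not shown) has linked them into stable per-fish IDs. The frame rate, the "barely moving" threshold and the data layout are hypothetical, and a real project would use a much richer notion of sick behavior than speed alone.

```python
import math

def center(box):
    """Center point of an (x_min, y_min, x_max, y_max) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)

def average_speeds(frames, fps):
    """frames: list of {fish_id: box} dicts, one per video frame.
    Returns each fish's average speed in pixels per second."""
    distance, steps = {}, {}
    for prev, curr in zip(frames, frames[1:]):
        for fish_id, box in curr.items():
            if fish_id not in prev:
                continue  # fish not seen in the previous frame; skip this step
            (px, py), (cx, cy) = center(prev[fish_id]), center(box)
            distance[fish_id] = distance.get(fish_id, 0.0) + math.hypot(cx - px, cy - py)
            steps[fish_id] = steps.get(fish_id, 0) + 1
    return {fid: distance[fid] * fps / steps[fid] for fid in distance}

def flag_inactive(frames, fps=25, min_speed=20.0):
    """Hypothetical rule: a fish that barely moves is flagged for a human to check."""
    speeds = average_speeds(frames, fps)
    return sorted(fid for fid, speed in speeds.items() if speed < min_speed)

# Toy example: fish 1 swims steadily, fish 2 sits almost still.
frames = [
    {1: (0, 0, 10, 10), 2: (50, 50, 60, 60)},
    {1: (4, 0, 14, 10), 2: (50, 50, 60, 60)},
    {1: (8, 0, 18, 10), 2: (50, 51, 60, 61)},
]
print(flag_inactive(frames))  # [2]
```

Here fish 1 averages 100 pixels per second and fish 2 only 12.5, so only fish 2 falls under the made-up 20 pixels-per-second threshold.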

The second is for my work. For this I used a really old OCR engine called Tesseract. It was originally developed at Hewlett-Packard in the 1980s and 90s (and I mean ages ago), released as open source in 2005, and then maintained by Google for many years; since version 4 it uses an LSTM neural network for the actual recognition. OCR stands for "optical character recognition". Aka: if you give it a picture of writing, it can read that writing. My work has the issue that we have tons and tons of old paperwork that has been scanned and needs to be digitized into a database. But everyone who was hired to do this manually found it mind-numbing. Just imagine doing this all day: take a contract, look up certain data, fill it into a table, put the contract away, take the next contract and do the same. Thousands of contracts, 8 hours a day. Nobody wants to do that. Our company had been using another OCR software for this, but that one was super expensive. So I was asked if I could build something to do that. So I did. And this was so ridiculously easy, it took me three weeks. And it actually has a higher success rate than the expensive software we used before.
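The "look up certain data, fill it into a table" step is where most of the boring work went. A minimal sketch, assuming the OCR text has already been produced (for example with the pytesseract wrapper's `image_to_string` function), might pull fields out with regular expressions and write CSV rows. The field names and patterns are hypothetical; real contracts would need patterns matched to their actual layout.

```python
import csv
import io
import re

# Hypothetical fields and patterns; adjust to the real contract layout.
FIELD_PATTERNS = {
    "contract_no": re.compile(r"Contract\s*No\.?\s*:?\s*([A-Z0-9-]+)", re.IGNORECASE),
    "date": re.compile(r"Date\s*:?\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE),
    "customer": re.compile(r"Customer\s*:?\s*(.+)", re.IGNORECASE),
}

def extract_fields(ocr_text):
    """Return one table row; fields missing from the OCR text are left empty."""
    row = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        row[name] = match.group(1).strip() if match else ""
    return row

def rows_to_csv(rows):
    """Serialize extracted rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(FIELD_PATTERNS))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

# In practice ocr_text would come from the OCR step, e.g.
# pytesseract.image_to_string(Image.open("scan.png")) with Image from PIL.
sample = "Contract No: AB-1042\nDate: 1998-03-17\nCustomer: Jane Doe\n"
print(rows_to_csv([extract_fields(sample)]))
```

Leaving missing fields empty rather than guessing makes it easy to route incomplete rows to a person for a quick check.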

Lastly there is the one I am doing right now, and this one is a bit more complex. See: we have tons and tons of historical shit that has never been translated. Be it papyri, stone tablets, letters, manuscripts, whatever. And right now I am using Tesseract, which is open source, and developing it further so it can read handwritten stuff and completely different scripts than the ones it knows so far. Once it can reliably do the OCR, I plan to hook it up to an LLM to then translate those texts. Because here is the thing: these things have not been translated because there are just not enough people who can read those old languages. Which leads to people going like: "GASP! We found this super important document that actually shows things from the ancient world we wanted to know forever, and it was lying in our collection collecting dust for 90 years!" I am not the only person who has this idea, and yeah, I just hope that in the next few years we can get something going to help historians and archeologists do their work.
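Since the plan is to hand text to an LLM only once the OCR is reliable, one simple safeguard is to route each recognized line by how confident the recognizer was. The sketch below assumes word-level results as (line number, word, confidence from 0 to 100) tuples, similar in spirit to the per-word confidences Tesseract can report; the 80-point threshold and the translate/review split are hypothetical.

```python
from collections import defaultdict

def route_lines(words, min_confidence=80.0):
    """words: iterable of (line_number, text, confidence) tuples.
    Lines whose average word confidence clears the threshold go to translation;
    the rest are queued for a human expert to check first."""
    lines = defaultdict(list)
    for line_no, text, conf in words:
        lines[line_no].append((text, conf))

    to_translate, needs_review = [], []
    for line_no in sorted(lines):
        entries = lines[line_no]
        text = " ".join(t for t, _ in entries)
        avg_conf = sum(c for _, c in entries) / len(entries)
        (to_translate if avg_conf >= min_confidence else needs_review).append(text)
    return to_translate, needs_review

# Toy OCR output; the words and scores are invented for illustration.
ocr_words = [
    (1, "Gallia", 95.0), (1, "est", 91.0),
    (2, "omnjs", 42.0), (2, "d1visa", 60.0),
]
ok, review = route_lines(ocr_words)
print(ok)      # ['Gallia est']
print(review)  # ['omnjs d1visa']
```

Keeping a human in the loop for low-confidence lines stops misread words like "omnjs" from being confidently "translated" into nonsense.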

Make no mistake: ANNs are saving lives right now

Here is the thing: ANNs and Deep Learning are saving lives right now. I really cannot stress enough how quickly this technology has become incredibly important in fields like biology and medicine to analyze data and predict outcomes in a way that a human just never would be capable of.

I saw a post yesterday saying "AI" can never be a part of Solarpunk. I strongly disagree with that. Solarpunk, for example, would need the help of AI for a lot of stuff, as it can help us deal with ecological things, might be able to predict weather in ways we are not capable of, and will help with medicine, with plants and so many other things.

ANNs are a good thing in general. And yes, they might also be used for some things that are just fun.

And for things that we may not need to know, but that would be fun to know. Like I mentioned above: the only audio research I read through was based on wolf howls. Basically, there is a group of researchers trying to understand wolves, and they are using AI to analyze the howling and grunting and find patterns in there that humans are not capable of finding due to human bias. So maybe AI will help us understand some animals at some point.

Heck, we have seen so far that some LLMs have been capable of extrapolating on their own from being taught one version of a language to understanding another version of it. Like going from modern English to Old English and such. Which is why some researchers wonder if they might actually be able to understand languages that were never deciphered.

All of that is interesting and fascinating.

Again, the generative stuff is a very, very minute part of what AI is being used for.

Yeah, but WHAT ABOUT the generative stuff?

So, let's talk about the generative stuff. Because I kinda hate it, but I also understand that there is a big issue.

If you know me, you know how much I freaking love the creative industry. If I had more money, I would just throw it all at all those amazing creative people online. I mean, fuck! I adore y'all!

And I do think that art fully created by AI is lacking the human "heart" - or to phrase it more artistically: it is lacking the chemical imbalances that make a human human lol. Same goes for writing. After all, an AI is actually incapable of creating a complex plot and all of that. And even if we managed to train it to do it, I don't think it should.

AI saving lives = good.

AI doing the shit humans actually evolved to do = bad.

And I also think that people who just do the "AI Art/Writing" shit are lazy and need to just put in work to learn the skill. Meh.

However...

I do think that these forms of AI can have a place in the creative process. There are people creating works of art that use some assets created with genAI but still putting in hours and hours of work on their own. And given that collages are legal to create - I do not see how this is meaningfully different. If you can take someone else's artwork as part of a collage legally, you can also take some art created by AI trained on someone else's art legally for the collage.

And then there is also the thing... Look, right now there is a lot of crunch in a lot of creative industries, and a lot of the work is not the fun creative kind, but the annoying kind that nobody actually enjoys and that still eats hours and hours before deadlines. Swen the Man (the Larian boss) spoke about that recently: motion capture often produces artifacts where the recording (which is already partially done by an algorithm) gets janky. So far this has been cleaned up by humans, and it is shitty, mind-numbing work most people hate. You can train AI to do this.

And I am going to assume that in normal 2D animation there are also more than enough cleanup steps and such that nobody actually likes to do, where this could just help prevent crunch. Same goes for the overworked souls doing movie VFX, who have worked 80-hour weeks for the last 5 years. In movie VFX we just do not have enough workers. This is a fact. So, yeah, if we can help those people out: great.

If this is all directed by a human vision and just helping out to make certain processes easier? It is fine.

However, something that is just 100% AI? That is dumb and sucks. And it sucks even more that people's fanart, fanfics, and also commercial work online got stolen for it.

And yet... Yeah, I am sorry, I am afraid I have to join the camp of: "I am afraid criminalizing taking the training data is a really bad idea." Because yeah... It is fucking shitty how Facebook, Microsoft, Google, OpenAI and whoever else are using this stolen data to create programs to make themselves richer and what not, while not even making their models open source. BUT... If we outlawed it, the only ones capable of even creating such algorithms (which absolutely can help in some processes) would be big media corporations that already own a ton of data for training (so basically Disney, Warner and Universal), who would then get a monopoly. And that would actually be a bad thing. So, like... both variations suck. There is no good solution, I am afraid.

And mind you, Disney, Warner, and Universal would still not pay their artists for it. lol

However, that does not mean you should not bully the companies who are using this stolen data right now without making their models open source! And also please, please bully Hasbro and Riot and whoever for using AI Art in their merchandise. Bully them hard. They have a lot of money and they deserve to be bullied!

But yeah. Generally speaking: Please, please, as I will always say... inform yourself on these topics. Do not hate on stuff without understanding what it actually is. Most topics in life are nuanced. Not all. But many.

#computer science#artifical intelligence#neural network#artifical neural network#ann#deep learning#ai#large language model#science#research#nuance#explanation#opinion#text post#ai explained#solarpunk#cyberpunk

18 notes

Text

[image ID: Bluesky post from user marawilson that reads

“Anyway, AI has already stolen friends' work, and is going to put other people out of work. I do not think a political party that claims to be the party of workers in this country should be using it. Even for just a silly joke.”

beneath a quote post by user emeraldjaguar that reads

“Daily reminder that the underlying purpose of AI is to allow wealth to access skill while removing from the skilled the ability to access wealth.” /end ID]

#ai#artificial intelligence#machine learning#neural network#large language model#chat gpt#chatgpt#scout.txt#but obvs not OP

22 notes

Text

Winter. Gothic. Dark aesthetic.✨❄🏰🕸

#арт#art#ии#нейросеть#ai#neural network#архитектура#architecture#замок#заброшеный замок#заброшеный#castle#abandoned castle#abandoned#готика#Gothic#готическая эстетика#gothic aesthetics#aesthetics#эстетика#средневековье#medieval#✨#дворец#темная эстетика#dark aesthetic#зима#winter

22 notes

Note

Fuck you for using ai and fuck you for ripping off samsketchbook. Unfollowed.

are u good lol

#genuinely what the fuck are you talking about with the samsketchbook thing#do you just see 'ai' and go into a blind rage#im not like. posting ai generated art if thats what you think?#i use it like artists use photos in collage and photomanipulation art#its very obvious when im using ai in my art bc it looks fucked up and weird like old ai did#this entire fucking site was posting weird shit from neural blender not too long ago calm down

144 notes

Text

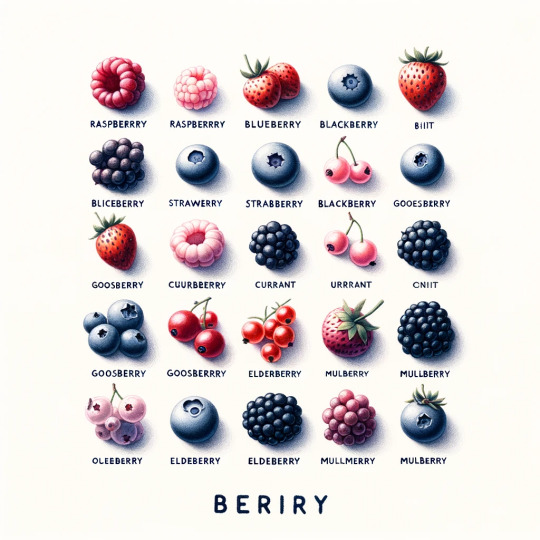

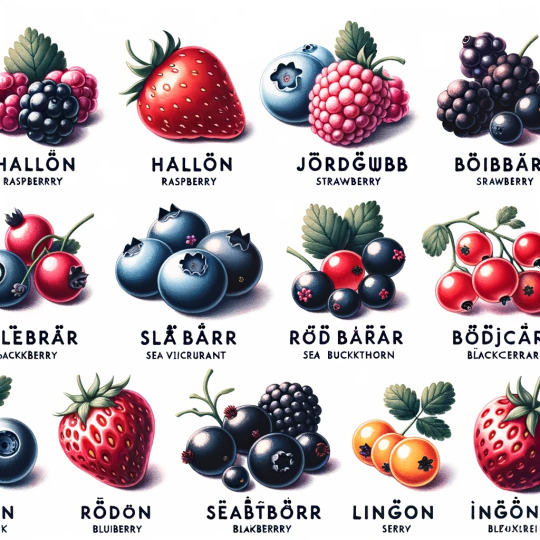

learn the berries with the help of dall-e3!

the berries

the berries in swedish

more

#neural networks#dalle3#ai generated#educational#berries#beriry#swedish#umlaut#umlauts with umlauts#potentially enough umlauts

2K notes

Text

AI my ass. Is the intelligence in the room with us?

#can't even do math ffs#god I hate this timeline#“I have created AI” you fucked up a perfectly good neural network is what you did#look at it it's got No Thoughts Head Empty

10 notes

Text

9 times out of 10, the AI hate I see is misdirected away from the actual problem, which isn't the fucking learning algorithms but the capitalistic desire to squeeze as much money as possible, with as little effort as possible, out of everything and anything that promises a prospect of Any financial gain, prioritising that over quality and over whether it actually makes someone's life better, because the measure of "value" is entirely linked to financial gain. It doesn't matter if it makes our world a better place, it doesn't matter whether it makes our world a significantly worse place, what matters is whether it sells. And THIS is the problem.

AI voice assistants, chats and other machine learning tools are not the enemy to hate on. The system surrounding their use, which prioritises the interests of CEOs over the interests of the general public and Requires people to do stupid useless things for money to survive, is the actual problem. Ironically, the people who could benefit from AI tools the most, those whose lives could literally be turned around for the better (people with various disabilities), are the LAST people who are considered in this AI race. Because the system dictates that it would rather focus on cheap production of shitty pictures, to cut costs and eliminate the need for the rich to pay those of us who may actually enjoy their job, than make accessibility tools actually accessible, despite being able to do so if only it were the priority.

We have chatbots who speak in natural voices, we have face and object recognition features integrated into our phones. But we don't have screen-readers that don't suck, and we don't have accessible tools for translating in real time what the camera sees into accurate descriptions to help visually impaired people. We have algorithms to generate pictures, and social media sites integrate various bots to post them for god knows why, but what they don't have is algorithms that could automatically generate text descriptions of pictures and videos posted on them. What they don't have is AI tools for detecting flashing in videos and gifs and a way to filter them. They do have algorithms searching for porn and copyrighted material, though, even if it's just a few seconds of music created by a world-famous artist who has been dead for decades.

Granted, we have really good automated subtitles and translators, but at the same time there are no automated descriptions of other sounds, even though tools for GENERATING various sounds exist. And subtitles themselves do nothing to convey intonation, even though just italicizing words when a speaker puts emphasis on them would already greatly improve the experience.

Whenever I think of the current state of AI, I remember that project that through implants gave blind people the ability to see. The one that got shut down and left all the people who used it blind again. Because it wasn't profitable.

14 notes

Text

114 notes

Text

ᗯE ᑎEEᗪ ᗰᗩGIᑕK

Vɪsʜᴍᴀ Mᴀʜᴀʀᴀᴊ ᴀᴋᴀ ᴀʀᴛɪsᴛɪᴄ_ɪᴍᴀɢɪɴɪɴɢs 🎭

Iɴᴄʀᴇᴅɪʙʟᴇ ғᴀɴᴛᴀsʏ ᴅʀᴇssᴇs ғʀᴏᴍ ᴀ ɴᴇᴜʀᴀʟ ɴᴇᴛᴡᴏʀᴋ.

#aiart #art #ai #digitalart #generativeart #artificialintelligence #machinelearning #aiartcommunity #abstractart #nft #aiartists #neuralart #vqgan #ganart #contemporaryart #deepdream #artist #nftart #artoftheday #newmediaart #nightcafestudio #aiartist #modernart #neuralnetworks #neuralnetworkart #abstract #styletransfer #stylegan #digitalartist #artbreeder

Fᴀʙʟᴇs & Fᴀɪʀʏᴛᴀʟᴇs - Dᴇɴɪᴢ Kᴜʀᴛᴇʟ Rᴇᴍɪx ʙʏ N/ᴀ, Rᴏsɪɴᴀ 🎧 🧚♀️

#fucking favorite#Vishma Maharaj#artistic_imaginings#artistic imaginings#5/2024#ai art#ai generated#neural networks#modern art#ai artist#neuralart#aiartists#contemporary art#aiartcommunity#machinelearning#artificialintelligence#generative art#dresses#contemporaryart#newcontemporary#new contemporary#digital art#digital fashion#x-heesy#music and art#magick#l o v e#fables#fairycore#fairy aesthetic

43 notes
