#nlp in artificial intelligence


Smarter Than You Think: NLP-Powered Voice Assistants

Smarter Than You Think: How NLP-Powered Voice Assistants Are Outpacing Human Intelligence

Imagine a world where your voice assistant knows your preferences so well that it can predict your needs before you even ask. How close are we to achieving such a seamless interaction? With the global voice assistant market projected to surpass $47 billion by 2032, growing at a CAGR of 26.45%, the future of human-technology interaction is not just promising; it is imminent. By the end of this year, over 8 billion digital voice assistants will be in use worldwide, exceeding the global population. How has this rapid adoption transformed industries, and what innovations lie ahead?

Voice assistants are no longer confined to simple tasks like setting alarms or playing music. They are now integral to complex operations in healthcare, customer service, and smart homes. How did we get here, and what role does Natural Language Processing (NLP) play in this evolution? This article delves into the rise of voice assistants, the groundbreaking advances in NLP, and their real-world applications. We will also explore expert insights and future prospects to build a comprehensive picture of how these technologies are reshaping our world.

The Rise of Voice Assistants

Voice assistants have evolved from rudimentary voice-activated tools to sophisticated AI-powered systems capable of understanding and processing complex commands. What key milestones have marked this journey, and who are the major players driving this transformation?

Historical Context

The concept of voice-controlled devices dates back to the 1960s with IBM's Shoebox, which could recognize and respond to 16 spoken words. However, it was not until the early 2010s that voice assistants began to gain mainstream attention. In 2011, Apple introduced Siri, the first voice assistant integrated into a smartphone, followed by the launch of Google Now in 2012 and of Microsoft's Cortana and Amazon's Alexa in 2014. How have these early versions laid the groundwork for today's advanced voice assistants?

Adoption Metrics

The rapid adoption of voice assistants is reflected in various metrics and statistics. What are the key figures that illustrate this trend?

Market Growth

According to Astute Analytica, the global voice assistant market is expected to reach $47 billion by 2032, growing at a CAGR of 26.45%.

User Base

By 2023, the number of voice assistant users in the United States alone hit approximately 125 million, accounting for almost 40% of the population.

Usage Patterns

Voicebot.ai reports that smart speaker owners use their devices for an average of 7.5 tasks, illustrating the diverse applications of voice assistants in everyday life. Furthermore, voice shopping is projected to hit $20 billion in sales by the end of 2023, up from just $2 billion in 2018.
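The growth implied by the voice shopping figures above can be checked with the standard compound annual growth rate formula. This is a minimal sketch, assuming the $2 billion (2018) and $20 billion (2023) figures are the start and end values of a five-year span; the function name `cagr` is illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Voice shopping figures quoted above: $2B in 2018 growing to $20B in 2023.
voice_shopping_rate = cagr(2.0, 20.0, 2023 - 2018)
print(f"Implied CAGR: {voice_shopping_rate:.1%}")
```

Under those assumptions the tenfold increase works out to roughly 58% growth per year, well above the 26.45% rate quoted for the overall market.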

User Engagement

Voice assistants are not just widely adopted; they are also highly engaged. According to Edison Research, 62% of Americans used a voice assistant at least once a month in 2021.

Natural Language Processing: The Backbone of Voice Assistants

Natural Language Processing (NLP) technology allows voice assistants to understand, interpret, and respond to human language. By combining computational linguistics with machine learning and deep learning models, NLP enables machines to process and analyze large amounts of natural language data. The advancements in NLP are pivotal to the sophisticated capabilities of modern voice assistants.

Improved Algorithms and Models

The recent progress in NLP can be attributed to developing advanced algorithms and models that significantly enhance language understanding and generation.

Transformers and BERT

Transformers: Introduced in the paper "Attention is All You Need" by Vaswani et al. (2017), transformers have revolutionized NLP by enabling models to consider the entire context of a sentence simultaneously, which is a significant departure from traditional models that process words sequentially.
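The idea of looking at the whole sentence at once can be illustrated with scaled dot-product attention, the core operation of the transformer. The sketch below is a simplified, pure-Python version. It assumes toy two-dimensional embeddings with made-up values and leaves out the learned query, key, and value projections and the multiple heads that real models use.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this token's query with the key of every token in the sentence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy embeddings for a three-token sentence (illustrative numbers only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token draws on every other token, which is what lets the model use the full context instead of reading strictly left to right.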

BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT allows models to understand the context of a word based on its surrounding words, improving tasks such as question answering and sentiment analysis. Since its release, BERT has become a benchmark in NLP, significantly improving the accuracy of voice assistants. For instance, Google's search engine, powered by BERT, understands queries better, leading to more relevant search results.

OpenAI's GPT Models

With 175 billion parameters, GPT-3 set new benchmarks in NLP, and its successor GPT-4, whose parameter count OpenAI has not disclosed, extends those capabilities. These models can generate human-like text, understand nuanced prompts, and engage in more coherent and contextually relevant conversations. Models of this kind increasingly underpin advanced voice assistants, enhancing their ability to generate natural, fluid, and contextually appropriate responses.

Speech Recognition

Accurate speech recognition is critical for the effective functioning of voice assistants. Recent advancements have significantly improved the accuracy and efficiency of speech-to-text conversion.

End-to-End Models

Deep Speech by Baidu: Traditional speech recognition systems involve complex pipelines, but modern end-to-end models like Deep Speech streamline the process, leading to faster and more accurate recognition. These models can process audio inputs directly, converting them into text with minimal latency.

Error Rates: The word error rate (WER) for speech recognition systems has dropped drastically. Google's reported WER improved from 23% in 2013 to 4.9% in 2017, making voice assistants more reliable and user-friendly.
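Word error rate is conventionally computed as the word-level edit distance between a reference transcript and the recognizer's output, divided by the number of reference words. The sketch below shows that calculation. The example sentences are invented, and the lowercase-and-split-on-whitespace normalization is an assumption, as real evaluations usually normalize text more carefully.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] holds the edit distance between the processed prefix of ref and hyp[:j].
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# One dropped word ("the") and one substituted word ("lights" -> "light").
wer = word_error_rate("turn on the kitchen lights", "turn on kitchen light")
print(f"WER: {wer:.0%}")
```

Here two errors against a five-word reference give a WER of 40%.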

Real-World Application

Healthcare

Mayo Clinic uses advanced speech recognition in its patient monitoring systems, allowing doctors to transcribe notes accurately and quickly during consultations. This reduces the administrative burden while enhancing patient care by enabling real-time documentation.

Contextual Understanding

The ability of voice assistants to maintain context and understand the nuances of human language is critical for meaningful interactions.

Context Carryover

Conversational AI: Modern voice assistants can maintain context across multiple interactions. For example, if you ask, "Who is the president of the United States?" followed by "How old is he?", the assistant understands that "he" refers to the president mentioned in the previous query. This ability to carry over context improves the fluidity and coherence of conversations.

Personalization: Assistants like Google Assistant and Amazon Alexa use context to provide personalized responses. They remember user preferences and previous interactions, allowing for a more tailored experience. For instance, if you frequently ask about the weather, the assistant might proactively provide weather updates based on your location and routine.
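Context carryover can be sketched as a small piece of dialogue state that remembers the last entity mentioned and substitutes it for later pronouns. The class below is a deliberately naive illustration. `DialogueContext`, its methods, and the pronoun list are hypothetical, and production assistants rely on trained coreference and dialogue-state models, not string replacement.

```python
from dataclasses import dataclass, field
from typing import Optional

PRONOUNS = {"he", "she", "it", "they", "him", "her", "them"}


@dataclass
class DialogueContext:
    """Remembers the most recent entity so later pronouns can be resolved."""
    last_entity: Optional[str] = None
    history: list = field(default_factory=list)

    def remember(self, entity: str) -> None:
        self.last_entity = entity

    def resolve(self, utterance: str) -> str:
        """Replace pronouns with the remembered entity, if there is one."""
        self.history.append(utterance)
        if self.last_entity is None:
            return utterance
        words = []
        for word in utterance.split():
            core = word.strip("?.,!")  # ignore trailing punctuation when matching
            if core.lower() in PRONOUNS:
                word = word.replace(core, self.last_entity)
            words.append(word)
        return " ".join(words)


context = DialogueContext()
# After answering the first question, the assistant stores the entity it was about.
context.remember("the president of the United States")
resolved = context.resolve("How old is he?")
print(resolved)
```

The follow-up question is rewritten as "How old is the president of the United States?" before it is answered, which is the behavior described in the example above.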

Sentiment Analysis

Emotional Recognition: Advanced NLP models can detect the sentiment behind a user's request, enabling voice assistants to respond more empathetically. This is particularly useful in customer service applications, where understanding the user's emotional state can lead to better service. For example, if a user sounds frustrated, the assistant might quickly escalate the query to a human representative.
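The escalation rule described above can be sketched as a score plus a threshold. The keyword list and threshold below are hypothetical stand-ins. A real system would more likely use a trained sentiment classifier, possibly combined with acoustic cues such as tone of voice, but the routing logic would look similar.

```python
# Hypothetical lexicon and threshold, chosen only for illustration.
FRUSTRATION_TERMS = {"frustrated", "angry", "useless", "terrible", "annoyed", "ridiculous"}
ESCALATION_THRESHOLD = 2


def frustration_score(utterance: str) -> int:
    """Count how many words in the utterance signal frustration."""
    words = [w.strip(".,!?").lower() for w in utterance.split()]
    return sum(1 for w in words if w in FRUSTRATION_TERMS)


def route(utterance: str) -> str:
    """Send clearly frustrated users to a human; keep everyone else with the assistant."""
    if frustration_score(utterance) >= ESCALATION_THRESHOLD:
        return "human_agent"
    return "assistant"


print(route("This is ridiculous, your app is useless!"))
print(route("What is my account balance?"))
```

The first request is routed to a human agent and the second stays with the assistant.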

Practical Applications and Impact

The advancements in NLP have broad implications across various industries, significantly enhancing the capabilities and applications of voice assistants.

Healthcare

Voice assistants are revolutionizing healthcare by providing hands-free, voice-activated assistance to medical professionals and patients.

Remote Patient Monitoring

Mayo Clinic uses Amazon Alexa to monitor patients remotely. Patients can report symptoms, receive medication reminders, and access health information through voice commands. This integration has improved patient engagement and adherence to treatment plans.

Surgical Assistance

Voice assistants integrated with AI-powered surgical tools help surgeons access patient data, medical images, and procedural guidelines without leaving the sterile field. This can reduce surgery time and enhance precision, ultimately improving patient outcomes.

Customer Service

Companies leverage voice assistants to enhance customer service by providing instant, 24/7 support.

Banking

Bank of America introduced Erica, a virtual assistant that helps customers with tasks like checking balances, transferring money, and paying bills. Since its launch, Erica has handled over 400 million customer interactions, demonstrating the potential of voice assistants in improving customer service efficiency.

E-commerce

Walmart's voice assistant allows customers to add items to their shopping carts, check order statuses, and receive personalized shopping recommendations, enhancing the overall shopping experience. This seamless integration of voice technology into e-commerce platforms has increased customer satisfaction and loyalty.

Smart Homes

Voice assistants are central to the smart home ecosystem, enabling users to control devices and manage their homes effortlessly.

Home Automation

Devices like Amazon Echo and Google Nest allow users to control lights, thermostats, and security systems through voice commands. IDC states that smart home device shipments are expected to reach 1.6 billion units by 2023, driven by voice assistant integration.

Energy Management

Companies like Nest Labs use voice assistants to optimize energy consumption by adjusting heating and cooling systems based on user preferences and occupancy patterns. This enhances convenience and leads to significant energy savings and reduced utility bills.

The advancements in NLP have been instrumental in transforming voice assistants from basic tools into sophisticated, AI-powered systems capable of understanding and responding to complex human language. These technologies are now integral to various industries, enhancing efficiency, personalization, and user experience.

Real-Life Applications

The advancements in voice assistants and Natural Language Processing (NLP) have transcended theoretical improvements and are now making a tangible impact across various industries. These technologies, from healthcare and customer service to smart homes, enhance efficiency, user experience, and operational capabilities. This section delves into real-life applications and provides detailed case studies showcasing the transformative power of voice assistants and NLP.

Mayo Clinic: Enhancing Patient Care with Alexa

The Mayo Clinic's integration of Amazon Alexa for remote patient monitoring is a prime example of how voice assistants can improve healthcare delivery. Patients, especially those with chronic conditions, can use Alexa to report their daily symptoms, receive medication reminders, and access educational content about their health conditions. This system has increased patient engagement and provided healthcare providers valuable data to monitor patient health more effectively. The result is a more proactive approach to healthcare, reducing the need for frequent hospital visits and improving overall patient outcomes.

Bank of America: Revolutionizing Banking with Erica

Bank of America's Erica is an AI-driven virtual assistant designed to help customers with everyday banking needs. Erica uses advanced NLP to understand customer queries and provide accurate responses. For example, customers can ask Erica to check their account balance, transfer funds, pay bills, and even receive insights on their spending habits. The virtual assistant has been a game-changer in customer service, handling millions of interactions and significantly reducing the workload on human agents. This has led to improved customer satisfaction and operational efficiency.

Walmart: Streamlining Shopping with Voice Assistants

Walmart's integration of voice assistants into its shopping experience showcases how retail can benefit from this technology. Customers can use voice commands to add items to their shopping carts, check order statuses, and receive personalized shopping recommendations. This functionality is particularly beneficial for busy customers who can manage their shopping lists while multitasking. The result is a more convenient and efficient shopping experience, contributing to increased customer loyalty and sales.

All these examples highlight the transformative power of voice assistants and NLP across various industries. From improving patient care in healthcare to enhancing customer service in banking and retail, these technologies drive significant improvements in efficiency, user experience, and operational capabilities.

Challenges and Ethical Considerations

While the advancements in voice assistants and Natural Language Processing (NLP) are impressive, they also bring several challenges and ethical considerations that must be addressed to ensure their responsible use and deployment.

Privacy and Security

Voice assistants constantly listen for wake words, which raises significant privacy and data security concerns. These devices have microphones that can record conversations without the user's consent, leading to fears about unauthorized data collection and breaches.

Data Collection

Always Listening: Voice assistants must always listen for wake words like "Hey Siri" or "Alexa", which means they continuously record short audio snippets. Although these snippets are usually discarded if the wake word is not detected, there is a risk that they could be accidentally stored and analyzed. According to a survey by Astute Analytica, only 10% of respondents trust that their voice assistant data is secure.
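The "record briefly, discard unless the wake word is heard" behavior can be sketched with a fixed-size rolling buffer. This is an illustrative model only. Audio frames are represented as text strings, the substring check stands in for an on-device acoustic wake-word detector, and the buffer size is an arbitrary assumption.

```python
from collections import deque

WAKE_WORD = "alexa"
BUFFER_FRAMES = 3  # hypothetical size of the rolling pre-wake buffer


def listen(frames, buffer_frames=BUFFER_FRAMES):
    """Return only the frames heard after the wake word; earlier audio is dropped."""
    rolling = deque(maxlen=buffer_frames)  # the oldest frame is discarded automatically
    captured = []
    awake = False
    for frame in frames:
        if awake:
            captured.append(frame)
        else:
            rolling.append(frame)
            # Stand-in for an on-device wake-word detector.
            if WAKE_WORD in frame.lower():
                awake = True
                rolling.clear()  # pre-wake audio is never kept or sent anywhere
    return captured


stream = ["private chat", "more chat", "Alexa", "what's the weather", "today"]
request = listen(stream)
print(request)
```

Only the two frames after the wake word are captured. The privacy risk described above corresponds to a failure of this logic, for example a false wake-word match or a buffer that is stored when it should be cleared.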

Data Usage: Companies collect voice data to improve the accuracy and functionality of their voice assistants. However, this data can be sensitive and personal, raising concerns about how it is stored, used, and potentially shared. Data breaches have occurred, such as the exposure of over 2.8 million voice recordings in 2020.

Security Measures

Encryption and Anonymization: To mitigate these risks, companies must implement robust security measures, including encryption and anonymization of voice data. For example, Apple emphasizes using on-device processing for Siri requests, minimizing the data sent to its servers.

Regulations and Compliance: Adhering to data protection regulations such as Europe's General Data Protection Regulation (GDPR) is crucial. These regulations mandate strict guidelines for data collection, storage, and usage, protecting user privacy.

Bias and Fairness

NLP models can inadvertently learn and propagate biases in their training data, leading to unfair treatment of certain user groups. Addressing these biases is critical to ensure that voice assistants provide equitable and accurate user interactions.

Training Data Bias

Representation Issues: NLP models are trained on vast datasets that may contain biases reflecting societal prejudices. For example, a 2020 study led by Stanford University researchers found that major speech recognition systems had an average word error rate of 35% for Black speakers compared with 19% for white speakers.
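A disparity of this kind is typically surfaced by breaking an evaluation set down by speaker group and comparing error rates. The sketch below shows that bookkeeping. The group labels and counts are made up for illustration and are not taken from any study.

```python
from collections import defaultdict


def error_rate_by_group(results):
    """results: iterable of (group, word_errors, reference_words) tuples."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, n_errors, n_words in results:
        errors[group] += n_errors
        words[group] += n_words
    return {group: errors[group] / words[group] for group in words}


# Made-up evaluation results, for illustration only.
results = [
    ("group_a", 3, 20),
    ("group_a", 1, 20),
    ("group_b", 7, 20),
    ("group_b", 5, 20),
]
rates = error_rate_by_group(results)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap: {gap:.0%}")
```

Tracking the gap between the best-served and worst-served groups, not just the overall average, is what makes this kind of bias visible.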

Mitigation Strategies: Companies are developing more inclusive datasets and employing data augmentation and adversarial training techniques to combat these biases. Google and Microsoft have launched initiatives to diversify their training data and improve the fairness of their models.

Algorithmic Fairness

Bias Detection and Correction: Tools and frameworks for detecting and correcting bias in NLP models are becoming increasingly sophisticated. Techniques such as fairness constraints and bias mitigation algorithms help ensure that voice assistants treat all users equitably.

Ethical AI Practices: Implementing ethical AI practices involves regular audits, transparency in algorithm development, and involving diverse teams in creating and testing NLP models. OpenAI and other leading AI research organizations advocate for these practices to build more trustworthy and fair AI systems.

Ethical Use and User Consent

The ethical use of voice assistants requires transparency and obtaining informed user consent for data collection and processing.

Transparency

Clear Communication: Companies must communicate how voice data is used, stored, and protected. This includes detailed privacy policies and regular updates to users about changes in data practices.

User Control: It is essential to provide users with control over their data. Options to review, manage, and delete voice recordings should be readily available. Amazon, for example, allows users to delete their voice recordings through the Alexa app.

Informed Consent

Explicit Consent: Users should be explicitly informed about what data is collected and how it will be used. Clear and concise consent forms and prompts during the voice assistant's initial setup can achieve this.

Opt-In Features: Making data sharing opt-in, rather than enabling it by default and requiring users to opt out, ensures that users actively choose to share their data. This approach respects user autonomy and builds trust.
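In code, opt-in usually comes down to what the default values are. The sketch below assumes a hypothetical `PrivacySettings` record with invented field names. Every data-sharing option starts disabled, so nothing is stored or shared until the user turns it on.

```python
from dataclasses import dataclass


@dataclass
class PrivacySettings:
    """Hypothetical per-user settings; every sharing option starts disabled."""
    store_recordings: bool = False
    share_for_model_training: bool = False
    personalized_suggestions: bool = False


def may_store(settings: PrivacySettings) -> bool:
    """A recording may be kept only if the user has explicitly opted in."""
    return settings.store_recordings


new_user = PrivacySettings()                       # untouched defaults
opted_in = PrivacySettings(store_recordings=True)  # user enabled one option

print(may_store(new_user), may_store(opted_in))
```

Because each option is separate, agreeing to store recordings does not also enable sharing them for model training.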

Future Prospects and Innovation

The future of voice assistants and NLP looks promising, with several innovations on the horizon that promise to further enhance their capabilities and integration into daily life.

Multimodal Interactions

Voice and Visual Integration: Combining voice with visual inputs can provide more comprehensive assistance. For instance, smart displays like Amazon Echo Show and Google Nest Hub use voice and screen interactions to offer richer user experiences. This multimodal approach can provide visual cues, detailed information, and interactive elements that voice alone cannot convey.

Augmented Reality (AR): Future integrations could include AR, where voice commands control AR experiences. For example, users could use voice commands to navigate through AR-enhanced retail environments or educational content, seamlessly blending the physical and digital worlds.

Emotional Intelligence

Sentiment Analysis and Emotional Recognition: Researchers are developing voice assistants capable of recognizing and responding to human emotions. This involves advanced sentiment analysis and emotional recognition algorithms, enabling more empathetic interactions. For instance, a voice assistant could detect stress or frustration in a user's voice and offer calming suggestions or escalate the interaction to a human representative.

Personalized Interactions: Emotionally intelligent voice assistants could tailor responses based on the user's emotional state, improving the overall user experience. For example, if a user feels down, the assistant could suggest uplifting music or activities.

Domain-Specific Assistants

Specialized Voice Assistants: Another direction is creating voice assistants tailored to specific industries such as healthcare, finance, and education. These assistants would have deep domain knowledge, providing more accurate and relevant assistance. For instance, a healthcare-specific assistant could offer detailed medical advice and support for chronic disease management, while a finance-specific assistant could provide real-time financial analytics and advice.

Professional Applications: Domain-specific voice assistants could streamline workflows and enhance productivity in professional settings. For example, a legal assistant could help lawyers manage case files, schedule appointments, and provide quick access to legal precedents.

Enhanced Personalization

User Profiles and Preferences: Future voice assistants will increasingly leverage user profiles and preferences to offer personalized experiences. By learning from past interactions, these assistants can predict user needs and preferences, providing proactive assistance. For example, a voice assistant could remind users of upcoming appointments, suggest meal plans based on dietary choices, or provide personalized news updates.

Adaptive Learning: Voice assistants could employ adaptive learning techniques to continually refine their understanding of individual users. This would enable them to improve their accuracy and relevance over time, offering a more tailored and effective user experience.

Improved Accessibility

Inclusive Design: Innovations in voice assistants aim to improve accessibility for individuals with disabilities. For instance, voice assistants can help visually impaired users navigate their devices and environments more easily. Additionally, speech-to-text and text-to-speech can assist users with hearing or speech impairments.

Language and Dialect Support: Enhancing the ability of voice assistants to understand and respond to a wider range of languages and dialects, including major global languages, regional dialects, and minority languages, will make voice assistants more inclusive and accessible to diverse populations.

Concluding Thoughts

The advancements in voice assistants and NLP are not just incremental improvements but transformative shifts reshaping how we interact with technology. From enhancing healthcare delivery and customer service to revolutionizing smart homes and professional applications, the impact of these technologies is profound and far-reaching. However, as we continue integrating voice assistants into more aspects of our lives, addressing the associated challenges and ethical considerations is crucial. Ensuring data privacy and security, mitigating biases in NLP models, and maintaining transparency and user consent are essential for these technologies' responsible development and deployment.

#NLP#Natural Language Processing#AI#AI in healthcare#smart home#home automation#AI and customer service#AI voice assistant#NLP AI#NLP in artificial intelligence#language processing AI


Natural Language Processing in Machine Learning

Discover how natural language processing works its magic in machine learning and artificial intelligence, breaking down complex algorithms into simple, everyday language. Visit this blog link for more details.


Abathur

At Abathur, we believe technology should empower, not complicate.

Our mission is to provide seamless, scalable, and secure solutions for businesses of all sizes. With a team of experts specializing in various tech domains, we ensure our clients stay ahead in an ever-evolving digital landscape.

Why Choose Us?

Expert-Led Innovation: Our team is built on experience and expertise.

Security First Approach: Cybersecurity is embedded in all our solutions.

Scalable & Future-Proof: We design solutions that grow with you.

Client-Centric Focus: Your success is our priority.

#Software Development#Web Development#Mobile App Development#API Integration#Artificial Intelligence#Machine Learning#Predictive Analytics#AI Automation#NLP#Data Analytics#Business Intelligence#Big Data#Cybersecurity#Risk Management#Penetration Testing#Cloud Security#Network Security#Compliance#Networking#IT Support#Cloud Management#AWS#Azure#DevOps#Server Management#Digital Marketing#SEO#Social Media Marketing#Paid Ads#Content Marketing


Tom and Robotic Mouse | @futuretiative

Tom's job security takes a hit with the arrival of a new, robotic mouse catcher.

#TomAndJerry #AIJobLoss #CartoonHumor #ClassicAnimation #RobotMouse #ArtificialIntelligence #CatAndMouse #TechTakesOver #FunnyCartoons #TomTheCat

Keywords: Tom and Jerry, cartoon, animation, cat, mouse, robot, artificial intelligence, job loss, humor, classic, Machine Learning, Deep Learning, Natural Language Processing (NLP), Generative AI, AI Chatbots, AI Ethics, Computer Vision, Robotics, AI Applications, Neural Networks

Tom was the first guy who lost his job because of AI

(and what you can do instead)

⤵

"AI took my job" isn't a story anymore.

It's reality.

But here's the plot twist:

While Tom was complaining,

others were adapting.

The math is simple:

➝ AI isn't slowing down

➝ Skills gap is widening

➝ Opportunities are multiplying

Here's the truth:

The future doesn't care about your comfort zone.

It rewards those who embrace change and innovate.

Stop viewing AI as your replacement.

Start seeing it as your rocket fuel.

Because in 2025:

➝ Learners will lead

➝ Adapters will advance

➝ Complainers will vanish

The choice?

It's always been yours.

It goes even further - now AI has been trained to create consistent.

//

Repost this ⇄

//

Follow me for daily posts on emerging tech and growth

#ai#artificialintelligence#innovation#tech#technology#aitools#machinelearning#automation#techreview#education#meme#Tom and Jerry#cartoon#animation#cat#mouse#robot#artificial intelligence#job loss#humor#classic#Machine Learning#Deep Learning#Natural Language Processing (NLP)#Generative AI#AI Chatbots#AI Ethics#Computer Vision#Robotics#AI Applications


Learn how Mistral-NeMo-Minitron 8B, a collaboration between NVIDIA and Mistral AI, is revolutionizing Large Language Models (LLMs). This open-source model uses advanced pruning and distillation techniques to achieve top accuracy on 9 benchmarks while being highly efficient.

#MistralNeMoMinitron#AI#ModelCompression#OpenSource#MachineLearning#DeepLearning#NVIDIA#MistralAI#artificial intelligence#open source#machine learning#software engineering#programming#nlp


Growing in a tall man's shadow

youtube

There is a debate happening in the halls of linguistics and the implications are not insignificant.

At issue is the idea of recursion: since the 1950s, linguists have held that recursion is a defining characteristic of human language.

What happens then, when a human language is found to be non-recursive?

Here, Noam Chomsky, who first placed the idea of recursion on the table, is the tall man.

And Daniel Everett, a former missionary to the Pirahã tribe in the Amazon forest, is the upstart.

At stake is one of the most important ideas in modern linguistics: recursion.

Does a human language have to be recursive? That is the question Everett poses, and he advances the argument that recursion is not inherent to being human.

From the Youtube description of the documentary:

Deep in the Amazon rainforest, the Pirahã people speak a language that defies everything we thought we knew about human communication. No words for colors. No numbers. No past. No future. Their unique way of speaking has ignited one of the most heated debates in linguistic history. For 30 years, one man tried to decode their near-indecipherable language—described by The New Yorker as “a profusion of songbirds” and “barely discernible as speech”. In the process, he shook the very foundations of modern linguistics and challenged one of the most dominant theories of the last 50 years: Noam Chomsky’s Universal Grammar. According to this theory, all human languages share a deep, innate structure—something we are born with rather than learn. But if the Pirahã language truly exists outside these rules, does it mean that everything we believed about language was wrong? If so, one of the most powerful ideas in linguistics could crumble.

Documentary: The Amazon Code

Directed by: Randal Wood, Michael O’Neill

Production: Essential Media, Entertainment Production, ABC Australia, Smithsonian Networks & Arte France

=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-==-=-=-=-=-=-=-

I think that there is more to come with this story, so here is a running list of info by and from people who interact with the idea on a regular basis and actually know what they're talking about:

The Battle of the Linguists - Piraha Part 2 by K. Klein

#language#linguistics#documentary#natural language processing#NLP#large language model#LLM#chatgpt#artificial intelligence#Youtube


The DeepSeek Diaries

In the ever-evolving world of artificial intelligence, DeepSeek AI has emerged as a groundbreaking platform that is redefining how businesses and individuals interact with AI technologies. Whether you’re a developer, a business owner, or simply an AI enthusiast, DeepSeek offers a suite of powerful tools and features designed to simplify complex tasks, enhance productivity, and drive innovation.…


ChatGPT Invention 😀😀

ChatGPT is not new. Courage the Cowardly Dog was the first to use ChatGPT 😀😀😀😀

#chatgpt#chatbots#ai technology#openai#machine learning#nlp#ai generated#ai chatbot#artificial intelligence#stock market#conversational ai#ai#ai art#ai image#ai artwork#technology#discord


Future of AI 2025: Key AI Predictions for 2025 by Dr. Imad Syed | PiLog Group

Artificial Intelligence (AI) is rapidly transforming every sector, from healthcare to manufacturing, finance, and beyond. As we look ahead to 2025, the integration of AI into business and everyday life is set to redefine innovation and productivity.

In a recent insightful video, Dr. Imad Syed, a renowned thought leader and digital transformation expert, shares his predictions about the future of AI in 2025 and what businesses must prepare for.

Watch Dr. Imad Syed’s Predictions on AI 2025 Here:

youtube

Key Insights on the Future of AI by Dr. Imad Syed

1. AI and Human Collaboration: AI will not replace humans but enhance their capabilities, enabling smarter decision-making and better productivity.

2. Personalized AI Experiences: From customer service to healthcare, AI will offer hyper-personalized experiences tailored to individual needs.

3. Ethics and AI Governance: With AI becoming deeply integrated into society, ethical considerations and governance frameworks will play a critical role.

4. AI in Cybersecurity: AI will become central in predicting, preventing, and managing cybersecurity threats.

5. AI in Business Automation: Industries will leverage AI-driven automation to streamline operations and reduce costs.

Why Should You Care About These Predictions?

Understanding the future of AI is not just for tech enthusiasts — it’s a strategic advantage for business leaders, entrepreneurs, and decision-makers. Dr. Imad Syed’s insights provide a roadmap for adapting and thriving in a world powered by AI.

If you’re in technology, business management, or innovation strategy, this video is a must-watch.

Gain Exclusive Insights Now: Future of AI 2025 — Dr. Imad Syed

Global Impact of AI by 2025

By 2025, AI will:

1. Create smarter supply chains and logistics.

2. Revolutionize healthcare with predictive diagnostics.

3. Improve environmental sustainability through smart energy solutions.

4. Drive unparalleled innovation in financial services.

The future is AI-driven, and staying informed about these trends is the key to leading, not following.

Final Thoughts: Prepare for an AI-Powered Future

Dr. Imad Syed’s predictions are not just forecasts — they are action points for businesses and individuals who want to stay ahead of the curve.

Don’t miss this chance to gain valuable insights from a global leader in AI and digital transformation.

Watch Now: Future of AI 2025 — Dr. Imad Syed

Let us know your thoughts and predictions in the comments below. Are you ready for the AI revolution?

#futureofai#aiagents#generativeai#nlp#llms#aitools#ai2025#techtrends#aipredictions#shorts#drimadsyed#piloggroup#ai trends#generative ai#artificial intelligence#future of artificial intelligence#artificial intelligence trends#Youtube


Perplexity AI: A Game-changer for Accurate Information

Artificial Intelligence has revolutionized how we access and process information, making tools that simplify searches and answer questions incredibly valuable. Perplexity AI is one such tool that stands out for its ability to quickly answer queries using AI technology. Designed to function as a smart search engine and question-answering tool, it leverages advanced natural language processing (NLP) to give accurate, easy-to-understand responses. In this blog, we will explore Perplexity’s features, its benefits, and alternatives for those considering this tool.

What is Perplexity AI?

Perplexity AI is an artificial intelligence tool that provides direct answers to user questions. Unlike traditional search engines, which display a list of relevant web pages, it interprets user queries and delivers clear answers. It gathers information from multiple sources to provide users with the most accurate and useful responses.

Using natural language processing, Perplexity AI lets users ask questions in a conversational style, which feels more natural than a traditional search engine. Whether you’re conducting research or need quick answers on a topic, it simplifies the search process, offering direct responses without the need to sift through numerous links or websites. Perplexity AI was founded in 2022 by Aravind Srinivas, Johnny Ho, Denis Yarats, and Andy Konwinski. It has around 10 million monthly active users and 50 million visitors per month.

Features of Perplexity AI

Advanced Natural Language Processing (NLP):

Perplexity AI uses NLP to understand and interpret human language accurately. This allows users to phrase their questions naturally, as they would ask a person, and receive relevant answers. NLP helps the tool analyze the context of the query to deliver accurate and meaningful responses.

Question-Answering System:

Instead of presenting a list of web results like traditional search engines, Perplexity AI provides a clear, concise answer to your question. This feature is particularly helpful when users need immediate information without the hassle of navigating through multiple sources.

Real-Time Data:

Perplexity AI uses real-time information, ensuring that users receive the most current and relevant answers. This is essential for queries that require up-to-date information, such as news events or trends.

Mobile and Desktop Availability:

Perplexity AI is accessible on both desktop and mobile devices, making it convenient for users to get answers whether they’re at their computer or on their phone.

Benefits of using Perplexity AI:

Time-Saving

One of the biggest advantages of using Perplexity AI is the time it saves. Traditional search engines often require users to browse through many web pages before finding the right information. Perplexity AI removes this step by providing direct answers, reducing the time spent searching and reading through multiple results.

User-Friendly Interface

With its conversational, intuitive format, Perplexity AI is easy to use. Whether you are a tech expert or new to AI-powered tools, its simple design allows users of all experience levels to navigate the platform easily. This is one of the tool’s key benefits.

Accurate Information

With the ability to pull data from multiple sources, Perplexity AI provides well-rounded, accurate answers. This makes it a valuable tool for research purposes, as it reduces the chances of misinformation or incomplete responses.

Versatile (Adaptable)

Perplexity AI is versatile enough to be used by a variety of individuals, from students looking for quick answers for their studies to professionals who need reliable data for decision-making. Its adaptability makes it suitable for different fields, including education, business, and research.

Alternatives to Perplexity AI:

ChatGPT

ChatGPT, developed by OpenAI, is an advanced language model capable of generating human-like responses. While it does not always provide direct answers to factual questions as Perplexity AI does, ChatGPT is great for more detailed, conversational-style interactions.

Google Bard

Google Bard focuses on providing real-time data and generating accurate responses, and it can translate into more than 100 languages. Like Perplexity AI, it aims to give users a more direct answer to their questions, which makes it a strong alternative.

Microsoft Copilot

Microsoft Copilot generates automated content and creates drafts in email and Word based on user prompts. Its features include data analysis, content generation, intelligent email management, idea creation, and more. It also streamlines complex data analysis, making it easier for users to manage extensive datasets and extract valuable insights.

Conclusion:

Perplexity AI is a powerful and user-friendly tool that simplifies the search process by providing direct answers to queries. Its use of natural language processing, source citation, and real-time data makes it a leading tool among AI-driven search platforms. Whether you’re looking to save time, get accurate information, or improve your understanding of a topic, Perplexity AI delivers an efficient solution. Staying updated on the latest AI trends is also crucial, as the technology evolves rapidly: read informative AI blogs and news, and schedule time regularly to absorb new information and practice with the latest AI innovations.

#ai#artificial intelligence#chatgpt#technology#digital marketing#aionlinemoney.com#perplexity#natural language processing#nlp#search engines

Text

Do people even use search engines to solve assignments anymore?

#comics#original comic#simple art#comedy#digital art#four panel comic#comic strip#cheese#comicart#saycheeze#ai revolution#artificial intelligence#nlp#chatgpt#google

Text

AI-Powered Medical Records Summarization: A Game-Changer

In the world of healthcare, medical records are the lifeblood of patient care. They contain crucial information about a patient's medical history, diagnosis, treatment, doctor's notes, prescriptions, and progress. These records are paramount to healthcare providers, legal firms, and insurance companies.

Doctors and caregivers need timely access to patients' medical histories and health reports to make precise diagnoses and develop effective treatment plans. Similarly, legal firms rely on these records to establish relevant facts and prepare a solid case.

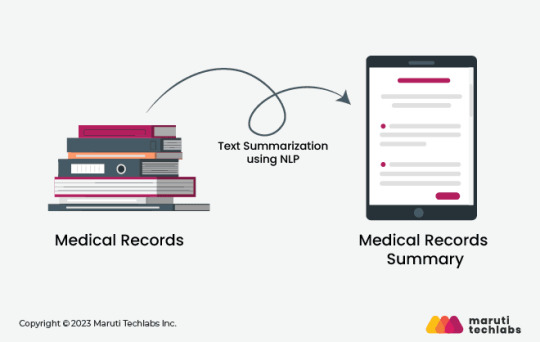

However, managing extensive and complex medical records with specialized terminology takes time and effort. Professionals spend hours navigating through stacks of documents, and missing or misplacing crucial information can have serious consequences. This is where medical records summarization comes in.

Medical records summarization condenses a patient’s entire medical history into a concise summary. It highlights all the essential information in a structured manner, which helps professionals review medical records quickly and accurately.

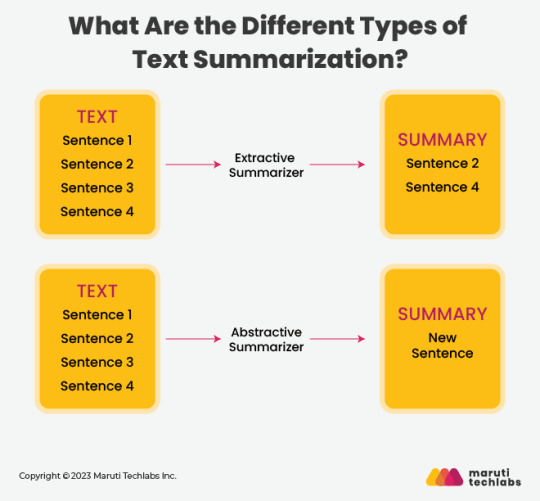

Text summarization is an essential Natural Language Processing (NLP) task that involves constructing a brief and well-structured summary of a lengthy text document. This process entails identifying and emphasizing the key information and essential points within the text. When applied to a specific document, the process is referred to as document summarization.

Document summarization falls into three major types:

Extractive: In an extractive summary, the output comprises the most relevant and important information from the source document.

Abstractive: In an abstractive summary, the output is more creative and insightful. The content is not copied from the original document.

Mixed: In a mixed approach, the summary is newly generated but may have some details intact from the original document.

The comprehensive and concise nature of medical record summaries greatly contributes to the effectiveness and efficiency of both the healthcare and legal sectors.

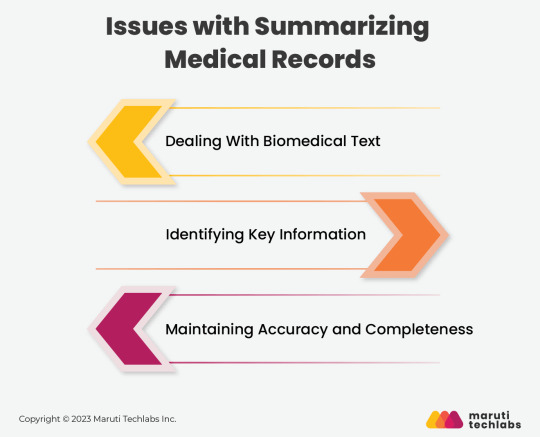

Issues With Summarizing Medical Records

Though summarizing medical records has several benefits, it also has its challenges. Even automated summary generation for medical records is not 100% accurate.

Some of the most common issues with summarizing medical records include:

Dealing With Biomedical Text

Summarizing biomedical texts can be challenging, as clinical documents often contain specific values of high significance. Here, lexical choices, numbers, and units matter a lot: a dose or lab value that is reworded or rounded can change the clinical meaning. Hence, creating an abstractive summary of such texts becomes a significant challenge.

Identifying Key Information

Medical records contain a large amount of information. But the summary must only include relevant information that aligns with the intended purpose. Identifying and extracting relevant information from medical records can be challenging.

Maintaining Accuracy and Completeness

The medical records summarization process must include all the key components of a case. These components include:

Consent for treatment

Legal documents such as referral letters

Discharge summary

Admission notes, clinical progress notes, and nurse progress notes

Operation notes

Investigation reports like X-ray and histopathology reports

Orders for treatment and modification forms listing daily medications ordered

Signatures of doctors and nurse administrations

Maintaining accuracy and completeness in a summary can be a challenge, considering the complexity of medical documents.

What Are The Different Types Of Text Summarization?

There are two main approaches to getting an accurate summary and analysis of medical records: extractive summarization and abstractive summarization.

Extractive Summarization

Extractive summarization involves selecting essential phrases and sentences from the original document and combining them, unchanged, to compose the summary. LexRank, Luhn, and TextRank are among the most widely used algorithms for extractive summarization.
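A small sketch can make the extractive idea concrete. The code below is a simplified word-frequency scorer in the spirit of Luhn's method, not an implementation of LexRank or TextRank. The function names, the tiny stopword list, and the sample note are all assumptions made for illustration, and the naive sentence splitter would need replacing for real clinical text, where abbreviations such as "Dr." contain periods.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption; real systems use a fuller one).
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "for",
             "was", "is", "were", "with", "at", "by", "he", "she", "it"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(sentence):
    """Lowercased alphanumeric tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z0-9]+", sentence.lower())
            if w not in STOPWORDS]


def extractive_summary(text, max_sentences=2):
    """Return the highest-scoring sentences, verbatim and in original order."""
    sentences = split_sentences(text)
    # Document-wide frequency of each content word.
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence):
        words = content_words(sentence)
        # Average frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore document order
    return [sentences[i] for i in keep]


note = ("Patient reports chest pain. Chest pain started two days ago. "
        "The weather was sunny.")
print(extractive_summary(note, max_sentences=2))
```

Because every output sentence is copied unchanged from the input, numbers and units survive exactly as written, which is the main appeal of extractive methods for clinical text.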

Abstractive Summarization

In abstractive summarization, the summarizer paraphrases sections of the source document, creating new text that did not exist in the original. The new text represents the most critical insights from the original document. BARD and GPT-3 are some of the top tools for abstractive summarization.

Comparison Between Extractive and Abstractive Summarization

When comparing abstractive and extractive approaches in text summarization, abstractive summaries tend to be more coherent but less informative than extractive summaries.

Abstractive summarization models often employ attention mechanisms, which can pose challenges when applied to lengthy texts.
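One way to see why length is a problem: in standard full self-attention, every token is compared with every other token, so the number of attention scores grows with the square of the input length. The sketch below only does that arithmetic. It assumes plain full attention with a single head and layer (many models use sparse or windowed variants that scale better), and the function name is hypothetical.

```python
def attention_score_entries(num_tokens):
    """Pairwise scores computed by one full self-attention head in one layer."""
    return num_tokens * num_tokens


for n in (512, 4096, 32768):
    print(f"{n:>6} tokens -> {attention_score_entries(n):>13,} scores")

# An input 8 times longer needs 64 times as many scores.
print(attention_score_entries(4096) // attention_score_entries(512))
```

A multi-year medical record can run to many thousands of tokens, which is why long inputs are often split or condensed before an abstractive model sees them.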

Extractive summarization algorithms, on the other hand, are relatively easy to develop and may not require specific datasets, whereas abstractive approaches typically require large numbers of specially annotated texts for training.

Text

Learn how Qwen2.5-Coder is revolutionizing code generation with training data of 5.5 trillion tokens and support for 92 languages. This open-source model excels in benchmarks like HumanEval and MultiPL-E, offering advanced code intelligence and long-context support up to 128K tokens. Discover its capabilities and how it outperforms other models.

#Qwen2.5Coder#OpenSource#CodeModels#AI#MachineLearning#Coding#TechTrends#SoftwareDevelopment#AIModels#artificial intelligence#open source#machine learning#software engineering#programming#python#nlp

Text

http://www.gqattech.com/

https://www.instagram.com/gqattech/

https://x.com/GQATTECH

#seo#seo services#aeo#digital marketing#blog#AITesting#QualityAssurance#SoftwareTesting#TestAutomation#GQATTech#IntelligentQA#BugFreeSoftware#MLinQA#AgileTesting#STLC#AI Testing Services#Artificial Intelligence in QA#AI-Powered Software Testing#AI Automation in Testing#Machine Learning for QA#Intelligent Test Automation#Smart Software Testing#Predictive Bug Detection#AI Regression Testing#NLP in QA Testing#Software Testing Services#Quality Assurance Experts#End-to-End QA Solutions#Test Case Automation#Software QA Company
