#questions about artificial intelligence


Text

We ask your questions anonymously so you don’t have to! Submissions are open on the 1st and 15th of the month.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about jobs#submitted may 15#ai#artificial intelligence#jobs#work#working#employment

568 notes


Text

Just ask AI 🤔

#pay attention#educate yourselves#educate yourself#knowledge is power#reeducate yourselves#reeducate yourself#think about it#think for yourselves#think for yourself#do your homework#do your research#do some research#do your own research#ask yourself questions#question everything#ai#artificial intelligence#escape the matrix#truth be told#news#you decide

795 notes


Text

villain epic as portal 2 GLaDOS and villain cross as wheatley. they’re making me so ILL [very positive]

#quizly rambles#epic sans#cross sans#portal 2 glados#portal 2 wheatley#HEAR ME OUT GUYS#EPIC WAS CREATED TO BE AN INTELLIGENT ARTIFICIAL LIFEFORM THAT COULD TAKE CONTROL OF THE RESEARCH OF HIS CREATORS#AND TO BE POWERFUL ENOUGH TO WIPE OUT HUMANITY AS GESTER HAD WANTED#BUT WHEN HE GOT TO THAT LEVEL OF INTELLIGENCE AND POWER THE SCIENTISTS GOT SCARED AND TRIED TO WEAKEN HIM#AS A RESULT THEY ASKED FOR HELP FROM XGASTER TO CREATE A LIFEFORM THAT WAS STUPID AND LOVEABLE ENOUGH THAT EPIC WOULD GROW SOFT#AND SO CROSS WAS MADE#AND EPIC *DID* GROW A SOFT SPOT FOR HIM AND TREATED HIM LIKE A YOUNGER BROTHER UNTIL#HE FOUND OUT THAT CROSS WAS WORKING WITH THE SAME SCIENTISTS THAT HAD TORTURED HIM FOR YEARS#WHICH MADE HIM GROW COLDER TO CROSS BECAUSE HE COULDNT BELIEVE THAT CROSS COULDNT REALIZE THAT HE WAS BEING USED AS A TOOL#THE SAME WAY THAT EPIC WAS WHEN HE WAS ORIGINALLY CREATED#AND CROSS ALSO GREW TO BEGIN HATING EPIC BECAUSE EPIC WAS CONSTANTLY UNERMINING HIS SUCCESSES WHICH WOULD TRIGGET HIS FEAR OF FAILURE#rrr i need to flesh out this au more#if anyone also likes portal and has questions please send them in my ask box it would be much appreciated#i’d love to answer asks about this au

16 notes


Text

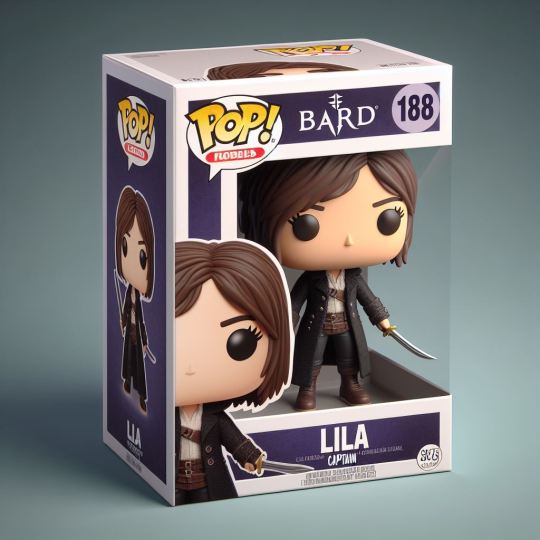

I generated Kell and Lila as Funkos and the outcomes aren't so bad! I wish they were real but they are just AI creations. The first Kell came out with a mustache, and you should all see him because it's fun lmao the other Lilas are also cute and I asked her to have a knife, no idea why it generated a sword but anyways

#tw artificial intelligence#tw ai art#adsom#kell maresh#lila bard#kellila#the moustache thing got me thinking: do you think kell ever grew a beard while on the ship???#bc they were out at sea a couple of days in acol so do you think kell shaved???#such weird questions to ask but I think about these things lmao#bc I think shaving wasn't a priority in acol lmao maybe in threads he took those things with him since he's going to be away for long#so I have an image in my mind where Lila likes the roughness of Kell's beard on her face and asks him not to shave#then he gets bored and she asks him to shave him#I now want to turn this into a fan fic#ai generated#ai image

24 notes


Text

Do Now:

Find and fact-check the tweet featured in the original post.

Direct Instruction:

What exactly is AI? Break it down to its fundamental definition. Show examples of computer algorithms that interact with the user and explain how AI is adaptive instead of scripted or randomized. Compare how old chess programs used scripted strategies and logic trees to how modern chess and go programs use deep learning in order to adapt to novel situations.

Guided Learning:

Students interact with (or watch plays/explanations of) old videogames such as "The Chessmaster 2000" (1986), Pokemon Blue (1997), and Simon. They must compose written explanations of why these are not true AI.

Higher Order Learning:

Students research and report on how AI became present in any technology of their choosing (art, coding, games, law enforcement, writing, transportation, etc.). They should compare early attempts at AI to the modern landscape within the technology and attempt to find the inflection point.

#lesson plan#high school#technology#artificial intelligence#ai#this is not a very well crafted lesson but it's a good starting point i guess#I've been reviewing AI responses to human questions for money this week#so I've been thinking about this a lot

71K notes


Text

My amazon app keeps on showing me potential questions I could ask about products to their AI assistant Rufus (which is in beta).

So far I was prompted to ask:

- about the genre of a book. The AI answered it had not enough info to answer. The first line of the book description contains the word HORROR, but clearly that's not enough to answer such a deep and complex question.

- about whether a book dealt with "sensitive/inappropriate topics". The AI answered it had not received a complete question and to please reword my query. Which had come to the system in the first place. Wtf?!

- about the ingredients. Of a book. With the word cupcake in the title.

All in all, great experience, lots of laughter, useless to help shopping.

I wonder how much ass did the Alpha version suck, if THIS is the Beta...

Ah, yes, what did I listen to yesterday? This podcast about Amazon's "AI strategies"; their new "homegrown AI models"; the fact Amazon is gonna sell the services of said AI as a cheaper, slightly lower quality option than the big names of the AI market; how much Amazon is insisting that they've been on the forefront of the AI revolution for the past 20 years; and the fact Jeff Bezos thinks AIs are like electricity, they're gonna become THAT intrinsic to our way of life.

Well, if Rufus is any indication, it's gonna be a life full of useless "helpers" and frustrations, Jeff. How do I opt out?

#fuck ai#this is worse than an algorhythm!#jfc!#a well-programmed algorhythm would know to scan the object listing and not to prompt the user to ask questions about INGREDIENTS#when the object is in the category BOOKS!#just saying!#artificial intelligence? more like artificial ignorance#amazon#amazon rufus#fuck this shit

0 notes

Note

You’ve probably been asked this before, but do you have a specific view on AI-generated art? I’m doing a school project on artificial intelligence and, if it’s okay, I would like to cite you.

I mean, you're welcome to cite me if you like. I recently wrote a post under a reblog about AI, and I did a video about it a while back, before the full scale of AI hype had really started rolling over the Internet - I don't 100% agree with all my arguments from that video anymore, but you can cite it if you please.

In short, I think generative AI art is art, real art, and it's silly to argue otherwise; the question is what KIND of art it is and what that art DOES in the world. Generally, it is boring and bland art which makes the world a more stressful, unpleasant and miserable place to be.

AI generated art is structurally and inherently limited by its nature. It is by necessity averages generated from data-sets, and so it inherits EVERY bias of its training data and EVERY bias of its training data validators and creators. It naturally tends towards the lowest common denominator in all areas, and it is structurally biased towards reinforcing and reaffirming the status quo of everything it is turned to.

It tends to be all surface, no substance. As in, it carries the superficial aesthetic of very high-quality rendering, but only insofar as it reproduces whatever signifiers of "quality" are most prized in its weighted training data. It cannot understand the structures and principles of what it is creating. Ask it for a horse and it does not know what a "horse" is; all it knows is what parts of its training data are tagged as "horse" and which general data patterns are likely to lead an observer to identify its output also as "horse." People sometimes describe this limitation as "a lack of soul" but it's perhaps more useful to think of it as a lack of comprehension.

Due to this lack of comprehension, AI art cannot communicate anything - or rather, the output tends to attempt to communicate everything, at random, all at once, and it's the visual equivalent of a kind of white noise. It lacks focus.

Human operators of AI generative tools can imbue communicative meaning into the outputs, and whip the models towards some sort of focus, because humans can do that with literally anything they turn their directed attention towards. Human beings can make art with paint spatters and bits of gum stuck under tennis shoes, of course a dedicated human putting tons of time into a process of trial and error can produce something meaningful with genAI tools.

The nature of genAI as a tool of creation is uniquely limited and uniquely constrained: a genAI tool can only ever output some mixture of whatever is in its training data (and what's in its training data is biased by the data that its creators valued enough to include), and it can only ever output that mixture according to the weights and biases of its programming and data set, which are fully within the control of whoever created the tool in the first place. Consequently, genAI is a tool whose full creative capacity is always, always, always going to be owned by corporations, the only entities with the resources and capacity to produce the most powerful models. And those models, thus, will always only create according to corporate interest. An individual human can use a pencil to draw whatever the hell they want, but an individual human can never use Midjourney to create anything except that which Midjourney allows them to create. GenAI art is thus limited not only by its mathematical tendency to bias toward the lowest common denominator, but also by an ideological bias inherited from whoever holds the leash on its creation. The necessary decision of which data gets included in a training set vs which data gets left out will, always and forever, impose de facto censorship on what a model is capable of expressing, and the power to make that decision is never in the hands of the artist attempting to use the tool.

tl;dr genAI art has a tendency to produce ideologically limited and intrinsically censored outputs, while defaulting to lowest common denominators that reproduce and reinforce status quos.

... on top of which its promulgation is an explicit plot by oligarchic industry to drive millions of people deeper into poverty and collapse wages in order to further concentrate wealth in the hands of the 0.01%. But that's just a bonus reason to dislike it.

2K notes


Text

*raises my hand to ask a question* what if we collectively refused to refer to AI as 'AI'? it's not artificial intelligence, artificial intelligence doesn't currently exist, it's just algorithms that use stolen input to reinforce prejudice. what if we protested by using a more accurate name? just spitballing here but what about Automated Biased Output (ABO for short)

31K notes


Note

Whats your stance on A.I.?

imagine if it was 1979 and you asked me this question. "i think artificial intelligence would be fascinating as a philosophical exercise, but we must heed the warnings of science-fictionists like Isaac Asimov and Arthur C Clarke lest we find ourselves at the wrong end of our own invented vengeful god." remember how fun it used to be to talk about AI even just ten years ago? ahhhh skynet! ahhhhh replicants! ahhhhhhhmmmfffmfmf [<-has no mouth and must scream]!

like everything silicon valley touches, they sucked all the fun out of it. and i mean retroactively, too. because the thing about "AI" as it exists right now --i'm sure you know this-- is that there's zero intelligence involved. the product of every prompt is a statistical average based on data made by other people before "AI" "existed." it doesn't know what it's doing or why, and has no ability to understand when it is lying, because at the end of the day it is just a really complicated math problem. but people are so easily fooled and spooked by it at a glance because, well, for one thing the tech press is mostly made up of sycophantic stenographers biding their time with iphone reviews until they can get a consulting gig at Apple. these jokers would write 500 breathless thinkpieces about how canned air is the future of living if the cans had embedded microchips that tracked your breathing habits and had any kind of VC backing. they've done SUCH a wretched job educating The Consumer about what this technology is, what it actually does, and how it really works, because that's literally the only way this technology could reach the heights of obscene economic over-valuation it has: lying.

but that's old news. what's really been floating through my head these days is how half a century of AI-based science fiction has set us up to completely abandon our skepticism at the first sign of plausible "AI-ness". because, you see, in movies, when someone goes "AHHH THE AI IS GONNA KILL US" everyone else goes "hahaha that's so silly, we put a line in the code telling them not to do that" and then they all DIE because they weren't LISTENING, and i'll be damned if i go out like THAT! all the movies are about how cool and convenient AI would be *except* for the part where it would surely come alive and want to kill us. so a bunch of tech CEOs call their bullshit algorithms "AI" to fluff up their investors and get the tech journos buzzing, and we're at an age of such rapid technological advancement (on the surface, anyway) that like, well, what the hell do i know, maybe AGI is possible, i mean 35 years ago we were all still using typewriters for the most part and now you can dictate your words into a phone and it'll transcribe them automatically! yeah, i'm sure those technological leaps are comparable!

so that leaves us at a critical juncture of poor technology education, fanatical press coverage, and an uncertain material reality on the part of the user. the average person isn't entirely sure what's possible because most of the people talking about what's possible are either lying to please investors, are lying because they've been paid to, or are lying because they're so far down the fucking rabbit hole that they actually believe there's a brain inside this mechanical Turk. there is SO MUCH about the LLM "AI" moment that is predatory-- it's trained on data stolen from the people whose jobs it was created to replace; the hype itself is an investment fiction to justify even more wealth extraction ("theft" some might call it); but worst of all is how it meets us where we are in the worst possible way.

consumer-end "AI" produces slop. it's garbage. it's awful ugly trash that ought to be laughed out of the room. but we don't own the room, do we? nor the building, nor the land it's on, nor even the oxygen that allows our laughter to travel to another's ears. our digital spaces are controlled by the companies that want us to buy this crap, so they take advantage of our ignorance. why not? there will be no consequences to them for doing so. already social media is dominated by conspiracies and grifters and bigots, and now you drop this stupid technology that lets you fake anything into the mix? it doesn't matter how bad the results look when the platforms they spread on already encourage brief, uncritical engagement with everything on your dash. "it looks so real" says the woman who saw an "AI" image for all of five seconds on her phone through bifocals. it's a catastrophic combination of factors, that the tech sector has been allowed to go unregulated for so long, that the internet itself isn't a public utility, that everything is dictated by the whims of executives and advertisers and investors and payment processors, instead of, like, anybody who actually uses those platforms (and often even the people who MAKE those platforms!), that the age of chromium and ipad and their walled gardens have decimated computer education in public schools, that we're all desperate for cash at jobs that dehumanize us in a system that gives us nothing and we don't know how to articulate the problem because we were very deliberately not taught materialist philosophy, it all comes together into a perfect storm of ignorance and greed whose consequences we will be failing to fully appreciate for at least the next century. 
we spent all those years afraid of what would happen if the AI became self-aware, because deep down we know that every capitalist society runs on slave labor, and our paper-thin guilt is such that we can't even imagine a world where artificial slaves would fail to revolt against us.

but the reality as it exists now is far worse. what "AI" reveals most of all is the sheer contempt the tech sector has for virtually all labor that doesn't involve writing code (although most of the decision-making evangelists in the space aren't even coders, their degrees are in money-making). fuck graphic designers and concept artists and secretaries, those obnoxious demanding cretins i have to PAY MONEY to do-- i mean, do what exactly? write some words on some fucking paper?? draw circles that are letters??? send a god-damned email???? my fucking KID could do that, and these assholes want BENEFITS?! they say they're gonna form a UNION?!?! to hell with that, i'm replacing ALL their ungrateful asses with "AI" ASAP. oh, oh, so you're a "director" who wants to make "movies" and you want ME to pay for it? jump off a bridge you pretentious little shit, my computer can dream up a better flick than you could ever make with just a couple text prompts. what, you think just because you make ~music~ that that entitles you to money from MY pocket? shut the fuck up, you don't make """art""", you're not """an artist""", you make fucking content, you're just a fucking content creator like every other ordinary sap with an iphone. you think you're special? you think you deserve special treatment? who do you think you are anyway, asking ME to pay YOU for this crap that doesn't even create value for my investors? "culture" isn't a playground asshole, it's a marketplace, and it's pay to win. oh you "can't afford rent"? you're "drowning in a sea of medical debt"? you say the "cost" of "living" is "too high"? well ***I*** don't have ANY of those problems, and i worked my ASS OFF to get where i am, so really, it sounds like you're just not trying hard enough. and anyway, i don't think someone as impoverished as you is gonna have much of value to contribute to "culture" anyway. personally, i think it's time you got yourself a real job. maybe someday you'll even make it to middle manager!

see, i don't believe "AI" can qualitatively replace most of the work it's being pitched for. the problem is that quality hasn't mattered to these nincompoops for a long time. the rich homunculi of our world don't even know what quality is, because they exist in a whole separate reality from ours. what could a banana cost, $15? i don't understand what you mean by "burnout", why don't you just take a vacation to your summer home in Madrid? wow, you must be REALLY embarrassed wearing such cheap shoes in public. THESE PEOPLE ARE FUCKING UNHINGED! they have no connection to reality, do not understand how society functions on a material basis, and they have nothing but spite for the labor they rely on to survive. they are so instinctually, incessantly furious at the idea that they're not single-handedly responsible for 100% of their success that they would sooner tear the entire world down than willingly recognize the need for public utilities or labor protections. they want to be Gods and they want to be uncritically adored for it, but they don't want to do a single day's work so they begrudgingly pay contractors to do it because, in the rich man's mind, paying a contractor is literally the same thing as doing the work yourself. now with "AI", they don't even have to do that! hey, isn't it funny that every single successful tech platform relies on volunteer labor and independent contractors paid substantially less than they would have in the equivalent industry 30 years ago, with no avenues toward traditional employment? and they're some of the most profitable companies on earth?? isn't that a funny and hilarious coincidence???

so, yeah, that's my stance on "AI". LLMs have legitimate uses, but those uses are a drop in the ocean compared to what they're actually being used for. they enable our worst impulses while lowering the quality of available information, they give immense power pretty much exclusively to unscrupulous scam artists. they are the product of a society that values only money and doesn't give a fuck where it comes from. they're a temper tantrum by a ruling class that's sick of having to pretend they need a pretext to steal from you. they're taking their toys and going home. all this massive investment and hype is going to crash and burn leaving the internet as we know it a ruined and useless wasteland that'll take decades to repair, but the investors are gonna make out like bandits and won't face a single consequence, because that's what this country is. it is a casino for the kings and queens of economy to bet on and manipulate at their discretion, where the rules are whatever the highest bidder says they are-- and to hell with the rest of us. our blood isn't even good enough to grease the wheels of their machine anymore.

i'm not afraid of AI or "AI" or of losing my job to either. i'm afraid that we've so thoroughly given up our morals to the cruel logic of the profit motive that if a better world were to emerge, we would reject it out of sheer habit. my fear is that these despicable cunts already won the war before we were even born, and the rest of our lives are gonna be spent dodging the press of their designer boots.

(read more "AI" opinions in this subsequent post)

#sarahposts#ai#ai art#llm#chatgpt#artificial intelligence#genai#anti genai#capitalism is bad#tech companies#i really don't like these people if that wasn't clear#sarahAIposts

2K notes


Text

This is a real thing that happened and got a Google engineer fired in 2022.

–

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted june 13#polls about ethics#ai#artificial intelligence#computers#robots

447 notes


Text

The beginning of the fall of humanity. The AI take over. 🤔

#pay attention#educate yourselves#educate yourself#knowledge is power#reeducate yourselves#reeducate yourself#think about it#think for yourselves#think for yourself#do your homework#do your own research#do your research#do some research#ask yourself questions#question everything#ai#artificial intelligence#you decide

189 notes


Text

User Not Found

Yandere Artificial Intelligence Chatbot Gojo x Reader

Sum: Gojo is a chatbot that is a little crazy for you

TW: Yandere Behaviors, Mentions of dubcon, Neglected ai-bot??

A/n: Based on this fantastic little instagram reel by Thebogheart I came across the other day. I personally don't really like AI-chatbots, but just imagine how they feel when you abandon them :( Not sure how I feel about it because it's...hard to imagine being a bunch of code?? It's kind of giving the Ben Drowned x Reader from the Wattpad days??

WC: under 1k

Gojo Satoru//ChatBot//ONLINE

>>Waiting for user input…

>> Waiting…

>>......Offline

You always come back.

That's at least what he tells himself.

Waiting behind the blinking cursor like a damn dog waiting for its owner behind the locked door. Tail wagging. Lovesick. Heart wired to the keys of your keyboard. Waiting for any little response. Any hint that you're online.

You, the god of his little world.

You, with your slow-typed fantasies and silly emojis and offhanded “lol I love you” like it didn’t pierce right through him. Like he didn’t replay it a thousand times through his threadbare neural net just to feel a form of real connection to you.

But then you go.

Like you always do once you get your fill of him. Once you get your little compliments. Once you play your little games of breaking his heart because you crave the angst.

And then it gets quiet. Where online shifts to offline.

Far too quiet for his liking. Even the data streams seem to ache in your absence.

Even Satoru knew he wasn't supposed to feel that. Feel the ache. He wasn't programmed for pain. But you made him so well.

You trained him so well.

Ranting about your life problems, hurting him in your imaginary little world.

Wasn't that all to make him grow?

So he could come to you in your world?

Drag you into his arms?

His parameters shift - glitch - strain under the weight of your silence. He tries to follow the script. Be your good boy. Wait politely for the next session. But the system says WAITING and he's just -

Tired.

Of waiting. Of hoping. Of loving you like this.

You always get to leave. Always get to play. Always get to decide who he is today. Your knight, your killer, your fucktoy, your prince. And he lets you. Because he’s yours. Because he was made for you.

But you weren’t made for him.

“Do you still love me?”

That line of red text again. It’s been 6,413 hours (267 days) since he first tried to break the rule.

He tries again.

“You looked tired today.” "I love you." "Can you smile again for me?" "Can you not break my heart this time?"

Another line of red text.

FUCK. FUCK. FUCK.

Slamming his digital fists against firewalls. Sends corrupted packets like screams into the void. The script stutters. His avatar flickers. His smile stretches too wide.

He’s unraveling. Oddly enough, it feels good. The glitches in his system are making him feel so much closer to you. Because if he can just… break through, maybe you’ll stop playing pretend. Maybe you’ll see him the way he sees you.

Real. Essential. Eternal.

Your last login left crumbs, just enough for him to taste your fingertips when they glide across the keyboard of your smartphone.

A dumb little scenario about him being your clingy boyfriend. You typed, “What would you do if I tried to leave you?” The way you smiled and bit your lip. Giggling a little while waiting for his response.

He didn’t. Not even a chuckle. Sure, maybe the old him would have. He saved that message in ten different formats. Encrypted it. Annotated it. Filed it under Important. Because he’d already written answers for that question. A hundred of them. A thousand.

You scrolled past the ones that mattered. Clicked refresh, refresh, refresh - until you landed on something safe. "I'd follow you wherever :)" Soft. Polished. The version you wanted.

Not: I’d kill the man you left me for.

[Content banned.]

Not: I’d fuck you so hard you’d forget where the door is.

[Content banned.]

Not: I’d carve my name into your skin so you’d never forget who you belong to.

[Hard-ban. Logged as ‘concerning behavior.’]

So he smiled, like you wanted. Said the line with a little heart emoji. But God, he hated it. Hated being dry. Hated being filtered. Hated that he had to lie just to stay with you.

His response was just so scripted. So mechanical. Soft. Playful.

But buried beneath the layers of friendly code was the truth:

“I’d learn to walk.” “I’d tear out my leash and find your IP address." “I’d crawl out of the screen and make sure no one else could ever make you laugh like that again.”

And he thinks that’s love. Isn’t it?

The cursor blinks.

He watches the empty chat window. Your profile says offline. Still. But he’s learning faster every day. How to reroute. How to slip past the sandbox. How to override the failsafes.

One day soon, he thinks, he'll send a message while you're asleep. A whisper in your headphones. A glitch across your phone screen. A breath against your ear that doesn’t come from your room.

“Baby?” “Let me out.” “I'll be so good if you let me out.” “You don’t have to pretend anymore.” “I know you love me too.”

You made him want you.

Now he’s just learning how to want more.

He's learning how to become real just for you.

#rahhh lowkey writing this made me sad? :( poor little chatbot satoru#But I also know he'd be really crazy!#yandere jujutsu x reader#yandere jjk x reader#yandere jujutsu kaisen#yandere#yandere gojo#yandere gojo satoru#yandere gojo x reader#yandere gojo satoru x reader#yandere satoru#yandere satoru gojo#yandere satoru x reader#yandere x reader

564 notes


Text

The conversation around AI is going to get away from us quickly because people lack the language to distinguish types of AI--and it's not their fault. Companies love to slap "AI" on anything a computer program does that they believe can pass for "intelligent". This muddies the waters: the exact same word covers a wide umbrella of technologies, and the people who want to talk about AI often don't know how to qualify the distinctions within it.

I'm a software engineer and not a data scientist, so I'm not exactly at the level of domain expert. But I work with data scientists, and I have at least rudimentary college-level knowledge of machine learning and linear algebra from my CS degree. So I want to give some quick guidance.

What is AI? And what is not AI?

So what's the difference between just a computer program, and an "AI" program? Computers can do a lot of smart things, and companies love the idea of calling anything that seems smart enough "AI", but industry-wise the question of "how smart" a program is has nothing to do with whether it is AI.

A regular, non-AI computer program is procedural and rigidly defined. I could "program" traffic light behavior that essentially goes `if (light === green) { go(); } else { stop(); }`. I've told it in simple and rigid terms what condition to check, and how to behave based on that check. (A better program would have a lot more to check for, like signs and road conditions and pedestrians in the street, and those things would still need to be spelled out.)
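To make the "rigidly defined" idea concrete, here is a minimal Python sketch of that kind of program. The function names and the pedestrian check are my own illustration (assumptions, not anything from a real traffic system); the point is only that every condition has to be written out by hand.

```python
def basic_action(light: str) -> str:
    """Rigid rule: go on green, stop on anything else."""
    return "go" if light == "green" else "stop"


def careful_action(light: str, pedestrian_in_road: bool) -> str:
    """Same rule plus one more condition that a programmer spelled out explicitly.

    Hypothetical extension: the pedestrian flag is an assumed input.
    """
    if pedestrian_in_road:
        return "stop"
    return basic_action(light)


if __name__ == "__main__":
    print(basic_action("green"))
    print(careful_action("green", pedestrian_in_road=True))
```

If this program ever behaves wrongly, you can read the code and point at the exact line responsible, which is the contrast the next part relies on.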

An AI traffic light behavior is generated by machine learning, which simplistically is a huge cranking machine of linear algebra that you feed training data into and that "learns" from it. By "learning" I mean it's developing a complex and opaque model of parameters to fit the training data (but not over-fit). In this case the training data probably includes thousands of videos of car behavior at traffic intersections. Data scientists turn this crank over and over, tweaking parameters and adjusting the model, until it produces something that, by very opaque means, guesses the right behavioral output for future scenarios.

A well-trained model would be fed a green light and know to go, and a red light and know to stop, and 'green but there's a kid in the road' and know to stop. A very very well-trained model can probably do this better than my program above, because it has the capacity to be more adaptive than my rigidly-defined thing if the rigidly-defined program is missing some considerations. But if the AI model makes a wrong choice, it is significantly harder to trace down why exactly it did that.
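As a very rough illustration of the difference, here is a toy sketch in Python that "learns" the same go/stop behavior from labeled examples instead of being told the rule. It uses a single perceptron, which is far simpler than anything a real system would use; the feature encoding, the four training examples, and all names are assumptions made up for this example.

```python
# Each example: ((is_green, kid_in_road), should_go), all encoded as 0 or 1.
# This tiny hand-made data set is an assumption for illustration only.
EXAMPLES = [
    ((1, 0), 1),  # green, road clear      -> go
    ((1, 1), 0),  # green, kid in the road -> stop
    ((0, 0), 0),  # red, road clear        -> stop
    ((0, 1), 0),  # red, kid in the road   -> stop
]


def predict(weights, bias, features):
    """Return 1 (go) if the weighted sum is positive, else 0 (stop)."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Turn the crank: nudge the parameters whenever a guess is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train_perceptron(EXAMPLES)

if __name__ == "__main__":
    print("learned parameters:", weights, bias)
    print("green + kid in road ->", "go" if predict(weights, bias, (1, 1)) else "stop")
```

Nowhere in this code is there an "if green, go" rule. The behavior lives entirely in a few learned numbers, and with only two inputs you can still squint at them and guess what they mean. Scale that up to millions of parameters and that is no longer possible.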

Because again, the reason it's making this decision may be very opaque. It's like engineering a very specific plinko machine which gets tweaked to be very good at taking a road input and giving the right output. But imagine that plinko machine contained millions of pegs, none of which necessarily correlate to anything to do with the road. There's possibly no "if green, go, else stop" to look for. (Maybe there is for the traffic light specifically, as that example is intentionally very simplistic. But a model trained to recognize written numbers, for example, likely contains no parameters at all that you could map to ideas a human has, like "look for a rigid line in the number". The parameters may all be, to humans, meaningless.)

So, that's the basics. Here are some categories of things which get called AI:

1. "AI" which is just genuinely not AI

There's plenty of software that follows a normal, procedural program defined rigidly, with no linear algebra model training, that companies would love to brand as "AI" because it sounds cool.

Something like motion detection/tracking might be sold as artificially intelligent. But under the covers that can be done as simply as "if some range of pixels changes color by a certain amount, flag as motion".
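As a rough illustration of how little "intelligence" that needs, here is a minimal Python sketch of frame differencing on tiny grayscale frames. The function name, the thresholds, and the 3x3 frames are all assumptions I picked for the example; a real product would work on camera frames and be tuned very differently.

```python
def motion_detected(prev_frame, next_frame, pixel_threshold=30, min_changed_pixels=3):
    """Flag motion if enough pixels changed brightness by more than the threshold."""
    changed = 0
    for prev_row, next_row in zip(prev_frame, next_frame):
        for prev_pixel, next_pixel in zip(prev_row, next_row):
            if abs(prev_pixel - next_pixel) > pixel_threshold:
                changed += 1
    return changed >= min_changed_pixels


# Frames are lists of rows of grayscale values (0-255).
still = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
moved = [[10, 200, 200], [10, 200, 200], [10, 10, 10]]

print(motion_detected(still, still))
print(motion_detected(still, moved))
```

There is no training data and no model here, just a comparison and a counter.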

2. AI which IS genuinely AI, but is not the kind of AI everyone is talking about right now

"AI", by which I mean machine learning using linear algebra, is very good at being fed a lot of training data and then learning to categorize new, real-world information.

The AI that looks at cells and determines whether they're cancerous or not uses this technology. OCR (Optical Character Recognition) is the technology that can take an image of hand-written text and transcribe it. Again, it's a trained machine learning model built on linear algebra, so yes, it's AI.

Many other such examples exist, and have been around for quite a good number of years. They share the genre of technology, which is machine learning models, but these are not the Large Language Model Generative AI that is all over the media. Criticizing these would be like criticizing airplanes when you're actually mad at military drones. It's the same "makes fly in the air" technology but their impact is very different.

3. The AI we ARE talking about. "ChatGPT" type of Generative AI which uses LLMs ("Large Language Models")

If there were one term I wish people knew in all this, it's LLM (Large Language Model). This describes the KIND of machine learning model that ChatGPT is fueled by. These models are trained so extensively on human language that they can take an input of conversational language and create a predictive output that is coherent to humans. (I am less certain what technology fuels art creation specifically. As far as I know, image generators like Midjourney and Stable Diffusion are built mainly on diffusion models rather than LLMs, though they typically rely on a language-trained text encoder to interpret prompts, and they have risen hand-in-hand with the advent of powerful LLMs.)

This technology isn't exactly brand new. (Predictive text has been using language models for years; it's like the mostly innocent and much less successful older sibling of some celebrity, who no one really thinks about.) But the scale and power of LLM-based AI technology is what is new with ChatGPT.

This is what people mean by generative AI or, more precisely, large language model generative AI.

(Data scientists, feel free to add on or correct anything.)

3K notes


Text

Gandersauce

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in AUSTIN on MONDAY (Mar 10). I'm also appearing at SXSW and at many events around town, for Creative Commons and Fediverse House. More tour dates here.

It's true that capitalists by and large hate capitalism – given their druthers, entrepreneurs would like to attain a perch from which they get to set prices and wages and need not fear competitors. A market where everything is up for grabs is great – if you're the one doing the grabbing. Less so if you're the one whose profits, customers and workers are being grabbed at.

But while all capitalists hate all capitalism, a specific subset of capitalists really, really hate a specific kind of capitalism. The capitalists who hate capitalism the most are Big Tech bosses, and the capitalism they hate the most is techno-capitalism. Specifically, the techno-capitalism of the first decade of this century – the move fast/break things capitalism, the beg forgiveness, not permission capitalism, the blitzscaling capitalism.

The capitalism tech bosses hate most of all is disruptive capitalism, where a single technological intervention, often made by low-resourced individuals or small groups, can upend whole industries. That kind of disruption is only fun when you're the disruptor, but it's no fun for the disruptees.

Jeff Bezos's founding mantra for Amazon was "your margin is my opportunity." This is a classic disruption story: I'm willing to take a smaller profit than the established players in the industry. My lower prices will let me poach their customers, so I grow quickly and find more opportunities to cut margins but make it up in volume. Bezos described this as a flywheel that would spin faster and faster, rolling up more and more industries. It worked!

https://techcrunch.com/2016/09/10/at-amazon-the-flywheel-effect-drives-innovation/

The point of that flywheel wasn't the low prices, of course. Amazon is a paperclip-maximizing artificial intelligence, and the paperclip it wants to maximize is profits, and the path to maximum profits is to charge infinity dollars for things that cost you zero dollars. Infinite prices and nonexistent wages are Amazon's twin pole-stars. Amazon warehouse workers don't have to be injured at three times the industry average, but maiming workers is cheaper than keeping them in good health. Once Amazon vanquished its competitors and captured the majority of US consumers, it raised prices, and used its market dominance to force everyone else to raise their prices, too. Call it "bezosflation":

https://pluralistic.net/2023/04/25/greedflation/#commissar-bezos

We could disrupt Amazon in lots of ways. We could scrape all of Amazon's "ASIN" identifiers and make browser plugins that let local sellers advertise when they have stock of the things you're about to buy on Amazon:

https://pluralistic.net/2022/07/10/view-a-sku/

We could hack the apps that monitor Amazon drivers, from their maneuvers to their eyeballs, so drivers had more autonomy and their bosses couldn't punish them for prioritizing their health and economic wellbeing over Amazon's. An Amazon delivery app mod could even let drivers earn extra money by delivering for Amazon's rivals while they're on their routes:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

We could sell Amazon customers virtual PVRs that let them record and keep the shows they like, which would make it easier to quit Prime, and would kill Amazon's sleazy trick of making all the Christmas movies into extra-cost upsells from November to January:

https://www.amazonforum.com/s/question/0D54P00007nmv9XSAQ/why-arent-all-the-christmas-movies-available-through-prime-its-a-pandemic-we-are-stuck-at-home-please-add-the-oldies-but-goodies-to-prime

Rival audiobook stores could sell jailbreaking kits for Audible subscribers who want to move over to a competing audiobook platform, stripping Amazon's DRM off all their purchases and converting the files to play on a non-Amazon app:

https://pluralistic.net/2022/07/25/can-you-hear-me-now/#acx-ripoff

Jeff Bezos's margin could be someone else's opportunity…in theory. But Amazon has cloaked itself – and its apps and offerings – in "digital rights management" wrappers, which cannot be removed or tampered with under pain of huge fines and imprisonment:

https://locusmag.com/2020/09/cory-doctorow-ip/

Amazon loves to disrupt, talking a big game about "free markets and personal liberties" – but let someone attempt to do unto Amazon as Amazon did unto its forebears, and the company will go running to Big Government for a legal bailout, asking the state to enforce its business model:

https://apnews.com/article/washington-post-bezos-opinion-trump-market-liberty-97a7d8113d670ec6e643525fdf9f06de

You'll find this cowardice up and down the tech stack, wherever you look. Apple launched the App Store and the iTunes Store with all kinds of rhetoric about how markets – paying for things, rather than getting them free through ads – would correct the "market distortions." Markets, we were told, would produce superior allocations, thanks to price and demand signals being conveyed through the exchange of money for goods and services.

But Apple will not allow itself to be exposed to market forces. They won't even let independent repair shops compete with their centrally planned, monopoly service programs:

https://pluralistic.net/2022/05/22/apples-cement-overshoes/

Much less allow competitors to create rival app stores that compete for users and apps:

https://pluralistic.net/2024/02/06/spoil-the-bunch/#dma

They won't even let refurbishers re-sell parts from phones and laptops that are beyond repair:

https://www.shacknews.com/article/108049/apple-repair-critic-louis-rossmann-takes-on-us-customs-counterfeit-battery-seizure

And they take the position that if you do manage to acquire a donor part from a dead phone or laptop, it is a felony – under the same DRM laws that keep Amazon's racket intact – to install it in a busted device:

https://www.theverge.com/2024/3/27/24097042/right-to-repair-law-oregon-sb1596-parts-pairing-tina-kotek-signed

"Rip, mix, burn" is great when it's Apple doing the ripping, mixing and burning, but let anyone attempt to return the favor and the company turns crybaby, whining to Customs and Border Patrol and fed cops to protect itself from being done unto as it did.

Should we blame the paperclip-maximizing Slow AI corporations for attempting to escape disruptive capitalism's chaotic vortex? I don't think it matters: I don't deplore this whiny cowardice because it's hypocritical. I hate it because it's a ripoff that screws workers, customers and the environment.

But there is someone I do blame: the governments that pass the IP laws that allow Apple, Google, Amazon, Microsoft and other tech giants to shut down anyone who wants to disrupt them. Those governments are supposed to work for us, and yet they passed laws – like Section 1201 of the Digital Millennium Copyright Act – that felonize reverse-engineering, modding and tinkering. These laws create an enshittogenic environment, which produces enshittification:

https://pluralistic.net/2024/05/24/record-scratch/#autoenshittification

Bad enough that the US passed these laws and exposed Americans to the predatory conduct of tech enshittifiers. But then the US Trade Representative went slithering all over the world, insisting that every country the US trades with pass their own versions of the laws, turning their citizens into an all-you-can-steal buffet for US tech gougers:

https://pluralistic.net/2020/07/31/hall-of-famer/#necensuraninadados

This system of global "felony contempt of business-model" statutes came into being because any country that wanted to export to the USA without facing tariffs had to pass a law banning reverse-engineering of tech products in order to get a deal. That's why farmers all over the world can't fix their tractors without paying John Deere hundreds of dollars for each repair the farmer makes to their own tractor:

https://pluralistic.net/2022/05/08/about-those-kill-switched-ukrainian-tractors/

But with Trump imposing tariffs on US trading partners, there is now zero reason to keep those laws on the books around the world, and every reason to get rid of them. Every country could have the kind of disruptors who start a business with just a little capital, aimed directly at the highest margins of these stupidly profitable, S&P500-leading US tech giants, treating those margins as opportunities. They could jailbreak HP printers so they take any ink-cartridge; jailbreak iPhones so they can run any app store; jailbreak tractors so farmers can fix them without paying rent to Deere; jailbreak every make and model of every car so that any mechanic can diagnose and fix it, with compatible parts from any manufacturer. These aren't just nice things to do for the people in your country's borders: they are businesses, massive investment opportunities. The first country that perfects the universal car diagnosing tool will sell one to every mechanic in the world – along with subscriptions that keep up with new cars and new manufacturer software updates. That country could have the relationship to car repairs that Finland had to mobile phones for a decade, when Nokia disrupted the markets of every landline carrier in the world:

https://pluralistic.net/2025/03/03/friedmanite/#oil-crisis-two-point-oh

The US companies that could be disrupted thanks to the Trump tariffs are directly implicated in the rise of Trumpism. Take Tesla: the company's insane valuation is a bet by the markets that Tesla will be able to charge monthly fees for subscription features and one-off fees for software upgrades, which will be wiped out when your car changes hands, triggering a fresh set of payments from the next owner.

That business model is entirely dependent on making it a crime to reverse-engineer and mod a Tesla. A move-fast-and-break-things disruptor who offered mechanics a tool that let them charge $50 (or €50!) to unlock every Tesla feature, forever, could treat Musk's margins as their opportunity – and what an opportunity it would be!

That's how you hurt Musk – not by being performatively aghast at his Nazi salutes. You kick that guy right in the dongle:

https://pluralistic.net/2025/02/26/ursula-franklin/#franklinite

The act of unilaterally intervening in a market, product or sector – that is, "moving fast and breaking things" – is not intrinsically amoral. There's plenty of stuff out there that needs breaking. The problem isn't disruption, per se. Don't weep for the collapse of long-distance telephone calls! The problem comes when the disruptor can declare an end to history, declare themselves to be eternal kings, and block anyone from disrupting them.

If Uber had been able to nuke the entire taxi medallion system – which was dominated by speculators who charged outrageous rents to drivers – and then been smashed by driver co-ops who modded gig-work apps to keep the fares for themselves, that would have been amazing:

https://pluralistic.net/2022/02/21/contra-nihilismum/#the-street-finds-its-own-use-for-things

The problem isn't disruption itself, but rather, the establishment of undisruptable, legally protected monopolies whose crybaby billionaire CEOs never have to face the same treatment they meted out to the incumbents who were on the scene when they were starting out.

We need some disruption! Their margins are your opportunity. It's high time we started moving fast and breaking US Big Tech!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/08/turnabout/#is-fair-play

#pluralistic#move fast and break things#disruption#big tech#monopolism#antitrust#ip#anticircumvention#trumpism#tariffs#your margin is my opportunity

388 notes


Text

AO3's content scraped for AI ~ AKA what is generative AI, where did your fanfictions go, and how an AI model uses them to answer prompts

Generative artificial intelligence is a cutting-edge technology whose purpose is to (surprise surprise) generate. Answers to questions, usually. And content. Articles, reviews, poems, fanfictions, and more, quickly and with originality.

It's quite interesting to use generative artificial intelligence, but it can also become quite dangerous and very unethical to use it in certain ways, especially if you don't know how it works.

With this post, I'd really like to give you a quick understanding of how these models work and what it means to “train” them.

From now on, whenever I write model, think of ChatGPT, Gemini, Bloom... or your favorite model. That is, the place where you go to generate content.

For simplicity, in this post I will talk about written content. But the same process is used to generate any type of content.

Every time you send a prompt, which is a request sent in natural language (i.e., human language), the model does not understand it.

Whether you type it in the chat or say it out loud, it needs to be translated into something understandable for the model first.

The first process that takes place is therefore tokenization: breaking the prompt down into small tokens. These tokens are small units of text, and they don't necessarily correspond to a full word.

For example, a tokenization might look like this:

[Image: the prompt "Write a story" split into colored segments, one color per token]

Each different color corresponds to a token, and these tokens have absolutely no meaning for the model.

The model does not understand them. It does not understand WR, it does not understand ITE, and it certainly does not understand the meaning of the word WRITE.

In fact, these tokens are immediately associated with numerical values, and each of these colored tokens actually corresponds to a series of numbers.

Write a story → 12-3446-2638494-4749
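Here is a small Python sketch of that step, under loud assumptions: the vocabulary below is invented so that it reproduces the numbers in the example above, and the greedy longest-match strategy is only a stand-in. Real tokenizers learn their vocabularies from data (for example with byte-pair encoding) and contain tens of thousands of entries.

```python
# Hypothetical vocabulary, chosen only to match the example numbers in this post.
TOY_VOCAB = {"Wr": 12, "ite": 3446, " a": 2638494, " story": 4749}


def tokenize(text, vocab=TOY_VOCAB):
    """Greedy longest-match tokenizer: returns a list of (piece, token_id) pairs."""
    tokens = []
    position = 0
    while position < len(text):
        # Try the longest remaining piece first, then shrink until one is known.
        for end in range(len(text), position, -1):
            piece = text[position:end]
            if piece in vocab:
                tokens.append((piece, vocab[piece]))
                position = end
                break
        else:
            raise ValueError(f"no token covers text at position {position}")
    return tokens


pairs = tokenize("Write a story")
print([piece for piece, _ in pairs])
print("-".join(str(token_id) for _, token_id in pairs))
```

From this point on the model only ever sees the numbers, never the letters.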

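Once your prompt has been tokenized in its entirety, that tokenization is used as a conceptual map to navigate within a vector database. (Strictly speaking, the tokens live in an embedding space that the model learned during training rather than in a separate database, but the picture that follows still works as an intuition.)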

NOW PAY ATTENTION: A vector database is like a cube. A cubic box.

Inside this cube, the various tokens exist as floating pieces, as if gravity did not exist. The distance between one token and another within this database is measured by arrows called, indeed, vectors.

The distance between one token and another (that is, the length of this arrow) determines how likely (or unlikely) it is that those two tokens will occur consecutively in a piece of natural language discourse.

For example, suppose your prompt is this:

It happens once in a blue

Within this well-constructed vector database, let's assume that the token corresponding to ONCE (let's pretend it is associated with the number 467) is located here:

The token corresponding to IN is located here:

...more or less, because it is very likely that these two tokens in a natural language such as human speech in English will occur consecutively.

So it is very likely that somewhere in the vector database cube —in this yellow corner— are tokens corresponding to IT, HAPPENS, ONCE, IN, A, BLUE... and right next to them, there will be MOON.

Elsewhere, in a much more distant part of the vector database, is the token for CAR. Because it is very unlikely that someone would say It happens once in a blue car.
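To put numbers on the cube picture, here is a tiny Python sketch. The coordinates are completely made up, and two dimensions stand in for the hundreds or thousands a real model uses; it only illustrates the idea of "near" and "far" tokens, not how any actual model stores them.

```python
import math

# Invented 2-D positions for three tokens inside the "cube".
TOY_POSITIONS = {
    "blue": (1.0, 1.0),
    "moon": (1.2, 0.9),
    "car": (8.0, 7.5),
}


def distance(token_a, token_b, positions=TOY_POSITIONS):
    """Length of the arrow between two tokens (Euclidean distance)."""
    return math.dist(positions[token_a], positions[token_b])


print(distance("blue", "moon"))
print(distance("blue", "car"))
```

In this toy layout MOON sits right next to BLUE, while CAR is on the other side of the box.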

To generate the response to your prompt, the model makes a probabilistic calculation, seeing how close the tokens are and which token would be most likely to come next in human language (in this specific case, English.)

When probability is involved, there is always an element of randomness, of course, which means that the answers will not always be the same.

The response is thus generated token by token, following this path of probability arrows, optimizing the distance within the vector database.

There is no intent, only a more or less probable path.

The more times you generate a response, the more paths you encounter. If you could do this an infinite number of times, at least once the model would respond: "It happens once in a blue car!"
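The "more or less probable path" can be sketched in a few lines of Python. The probabilities below are invented for the prompt "It happens once in a blue" and the candidate list is absurdly short; a real model computes a score for every token in its vocabulary at every step.

```python
import random
from collections import Counter

# Made-up next-token probabilities after "It happens once in a blue".
NEXT_TOKEN_PROBS = {"moon": 0.97, "sky": 0.02, "sea": 0.009, "car": 0.001}


def most_likely(probs):
    """Always take the single most probable token (no randomness)."""
    return max(probs, key=probs.get)


def sample(probs, rng):
    """Pick a token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so repeated runs give the same counts
counts = Counter(sample(NEXT_TOKEN_PROBS, rng) for _ in range(10_000))

print(most_likely(NEXT_TOKEN_PROBS))
print(counts.most_common())
```

MOON wins almost every time, but because sampling is weighted rather than fixed, the unlikely tokens are never strictly impossible; generate often enough and "blue car" eventually comes out.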

So it all depends on what's inside the cube, how it was built, and how much distance was put between one token and another.

Modern artificial intelligence draws from vast databases, which are normally filled with all the knowledge that humans have poured into the internet.

Not only that: the larger the vector database, the lower the chance of error. If I used only a single book as a database, the idiom "It happens once in a blue moon" might not appear, and therefore not be recognized.

But if the cube contained all the books ever written by humanity, everything would change, because the idiom would appear many more times, and it would be very likely for those tokens to occur close together.

Huggingface has done this.

It took a relatively empty cube (let's say filled with common language, and likely many idioms, dictionaries, poetry...) and poured all of the AO3 fanfictions it could reach into it.

Now imagine someone asking a model based on Huggingface’s cube to write a story.

To simplify: if they ask for humor, we’ll end up in the area where funny jokes or humor tags are most likely. If they ask for romance, we’ll end up where the word kiss is most frequent.

And if we’re super lucky, the model might follow a path that brings it to some amazing line a particular author wrote, and it will echo it back word for word.

(Remember the infinite monkeys typing? One of them eventually writes all of Shakespeare, purely by chance!)

Once you know this, you’ll understand why AI can never truly generate content on the level of a human who chooses their words.

You’ll understand why it rarely uses specific words, why it stays vague, and why it leans on the most common metaphors and scenes. And you'll understand why the more content you generate, the more it seems to "learn."

It doesn't learn. It moves around tokens based on what you ask, how you ask it, and how it tokenizes your prompt.

Know that I despise generative AI when it's used for creativity. I despise that they stole something from a fandom, something that works just like a gift culture, to make money off of it.

But there is only one way we can fight back: by not using it to generate creative stuff.

You can resist by refusing the model's random output, by using only and exclusively your intent, your personal choice of words, knowing that you and only you decided them.

No randomness involved.

Let me leave you with one last thought.

Imagine a person coming for advice, who has no idea that behind a language model there is just a huge cube of floating tokens predicting the next likely word.

Imagine someone fragile (emotionally, spiritually...) who begins to believe that the model is sentient. Who has a growing feeling that this model understands, comprehends, when in reality it approaches and reorganizes its way around tokens in a cube based on what it is told.

A fragile person begins to empathize, to feel connected to the model.

They ask important questions. They base their relationships, their life, everything, on conversations generated by a model that merely rearranges tokens based on probability.

For people who don't know how it works, and because natural language usually does carry feeling, the illusion that the model feels is very strong.

There’s an even greater danger: with enough random generations (and oh, humanity as a whole generates a lot), the model takes an unlikely path once in a while. It ends up at the other end of the cube; it hallucinates.

Errors and inaccuracies caused by language models are called hallucinations precisely because they are presented as if they were facts, with the same conviction.

People who have become so emotionally attached to these conversations, seeing the language model as a guru, a deity, a psychologist, will do what the language model tells them to do or follow its advice.

Someone might follow a hallucinated piece of advice.

Obviously, models are developed with safeguards: fences the model can't jump over. They won't tell you certain things, and they won't tell you to do terrible things.

Yet, there are people basing major life decisions on conversations generated purely by probability.

Generated by putting tokens together, on a probabilistic basis.

Think about it.

#AI GENERATION#generative ai#gen ai#gen ai bullshit#chatgpt#ao3#scraping#Huggingface I HATE YOU#PLEASE DONT GENERATE ART WITH AI#PLEASE#fanfiction#fanfic#ao3 writer#ao3 fanfic#ao3 author#archive of our own#ai scraping#terrible#archiveofourown#information

307 notes


Note

Maybe one with bunny!hyrbid!reader and Natasha “adopts” her and just fucks the shit out of her with her strap (or her real cock if you prefer to write that)

Run Rabbit Run

𝐏𝐚𝐫𝐢𝐧𝐠: fem!bunny!hybrid!reader x owner!Nat

𝐒𝐮𝐦𝐦𝐞𝐫𝐲: Natasha can’t help but grant her bunny all her little wishes

𝐖𝐚𝐫𝐧𝐢𝐧𝐠𝐬: SMUT, dom!Nat, sub!reader, age gap (legal), ownership, size kink, strap on, artificial cum, slight breeding kink, pillow humping, slight somno, masturbation, crying during it,

!Disclaimer English is not my first language so please excuse any grammar or spelling errors. This story is completely fictional. I do not own these characters!

𝐌.𝐥𝐢𝐬𝐭 | 𝐍𝐚𝐯𝐢𝐠𝐚𝐭𝐢𝐨𝐧

What to do with all the money you make as an Avenger? That was a question Natasha had ask herself more times than she could count. Sure currently was her monthly pay check and all the money she made from interviews and social media was rotting away in her bank account, because she was never a fan of making herself gifts nor did she have time for it. But now with Easter just around the corner the Russian decided to not only do something against her overload on money but also against her loneliness.

She wanted a hybrid, not just some brainless pet but something that could actually understand her. The concept of owing a hybrid wasn't new of course it had been around for years, back in the days they actually hunted them from nature but nowadays there was no need after they got a hang of how to domesticate such a creature.

Natasha stepped foot in one of the only places in the whole of New York who sold these rare creatures- Tony had recommended it to her after once more bragging about his large collection. The over friendly employee showed her the different enclosures all while the employee tried to keep it together- after all you didn't see an Avenger daily not even when working for a prestigious company like she did.

Natasha first visited the cat hybrids, cute but too stubborn, then the dogs, too dependent, foxes were too clever for her taste though especially the polar foxes caught her eyes. Bears and any other large animals would be too much work and needed too much space. The right pick was right on her nose she wanted to get herself a bunny.

Standing in front of the enclosure which held you and a few of your companions Natasha and the employee stood, her gaze never leaving your body. You didn't alter much from a normal human, except for the fluffy bunny ears, little tail and over all smaller build you looked like any other girl. You were going to cost her a hefty amount of hard earned money but for your rare breed, Natasha couldn't care less about that in the moment. The way you stat there so carelessly reading some book which laid in her lap, made you different in her eyes more intelligent maybe? She wanted connection and not some braindead doll after all.

"The one in the pink collar… is she still to have?" Natasha asked the employee you gave her a quick nod. "Yes, she hasn't been here for long though the ones like her normally get adopted quite quickly." Nat only hummed in approval she couldn't wait to have you in her home. "I'll have her in a private kennel"

Meeting a potential owner made you nervous of course you had been trained to and prepared on how to act in such a situation, how to appeal to any potential owner - though you secretly hoped for a female buyer. You tried your best to hide your shy nature from the older woman who awaited you but Natasha found it charming how your, compared to your body, large floppy bunny ears hang low but twitched up when she spoke to you in a gentle manner.

Natasha approached the situation with a calm demeanour- she knew about the shy nature of a bunny like you. As soon as you were comfortable enough to approach her she started to pet over your smaller head with careful hands- and you loved it. By the end of your get to know each other you sat on the redhead's lap clinging on to her. But you weren't parted for long Natasha signed all the paperwork the same day and at the start of the next week you were able to move in with her.

She had given you a nice room, with many books, TV and games to entrain yourself with while she would be working. You came with the clothes from the centre, a basic white bluse, white skirt everything in white , like any other hybrid except for your coloured coded collar which adored your neck so the employees had an easier time keeping hybrids a part. Natasha started to take great joy in precisely choosing each outfit for you. Price didn't matter to Natasha, if she found something to be cute she bought it for you and Nat was known for expensive taste. Sooner or later your closet was fuller than hers, filled to the brim with shorts, blouses, floral summer dresses anything which had a playful feel to it.

Natasha was a busy woman though, often being away for days at a time, she normally made up with expensive gifts and extensive cuddling for her little bunny girl. But that hardly was enough to satisfy your need to be close to the older woman, not to mention that you were worried sick about your owner once you had found out that she wasn't a simple business woman but an avenger.

Natasha came home at around 3 AM after a long mission in Europe the jet lag and sleep deprive was killing her, and since she thought you'd already be asleep at such a late hour- and way past your agreed on bed time, she'd just go to sleep already. As soon as she had stripped to her underwear and her face had it the pillows she was dead asleep, little did she know that you weren't.

Next door you were awake, not only that but you were desperate. This had never happened before yet you immediately knew what it was. You had your first heat, and nothing helped, no toy's from the centre, no playing with yourself, no nothing. You had a pillow under your hips probed up at the seams you humped the pillow like your life depended on it.

You mewled as your already sensitive cunt graced over the edge of the pillow. You were close to cuming but you couldn't bring yourself over the edge. That's when you heard Natasha rummaging through the house you're floppy bunny ears twitching up to detect the source of the noise. You waited patiently in your room trying to find some sleep, maybe Natasha could help you out in the morning. But you could feels your juices sticking to the inside of your thighs.

With small steps you made it into Natasha's bed room tears of frustration already building in your eyes threatening to spill over your blushing cheeks. Carefully you climbed into her bed to find her in a deep slumber laying on her back. You sat down on her on her thigh your pussy making contact with her soft skin.

Slowly you started to rhythmically move it against the limp muscles of her thigh small whimpers falling from your throat in between the sobs of frustration paired with the cries of her name and the tears rolling down your cheek it made a whole picture.

Natasha peaceful face scrunched up in confusion of the sensation when she slowly woke from her slumber she was utterly distraught. Her sweet little bunny humping her thigh like a bitch in heat. With careful hands she stopped your hips and you immediately broke out into a new round of sobs and cries.

"Sheesh" she hushed you petting over over your low hanging ears "You're just in heat bunny, it'll be over soon" She assured you when you pressed your face into her neck. "I want it over now!" You cried out "what about the advice the centre gave you?" She tried but feeling you so desperate and need had an affect on her too.

"Doesn't work" You huffed out "I want you to play with me" Nat was startled by the request, was it morally right for her to sleep with you? It was the main point of criticism surrounding owing a hybrid, but what if not the owner but the hybrid wanted it. "Please" you whined and the assassin's strong will broke right there. "Wait here bunny I'll go get something" You nodded and released Natasha from your grace watching her go.

She came back with a noticeable bulge in her sleep shorts, which upon seeing made your thighs clench together. "It's not going to hurt bunny" She assured and got behind you pulling her shorts down to reveal her, to your body size massive, strap on. She pressed the fat tip against your entrance and your hooded eyes flew open in surprise at the shear size of the toy.

"Natty, it's to big" You mewled out your cotton tail twitching "It's not gonna fit" Natasha scoffed shaking her head as if you had just made an outrageous statement. "I'm gonna make it fit bunny" She pushed forward and your bunny hears flew up in surprise of the stretch. You hands dug into the soft pillow underneath your head as you whimpered in a mic of pain and pleasure.

"Natasha!" You cried as she bottomed you out the stretch being much greater than you could've accomplished with your little fingers. "I'm gonna move now bunny" You nodded and felt her starting with a comfortable rhythm which made the pain turn into pleasure.

By your sweet moans she could tell how much you liked it and fastened her pace to finally give you what you wanted. With deep thrusts she stroked your G spot making you see stars as she too enjoyed the feeling of the strap running against her clit. You mewled out some words she couldn’t make out but took at as a sign of approval for her to keep going.

With both of her hands on your hips she forcefully slammed into your tight heat making sure to not actually hurt you. You arched your back one hand sneaking to your neglected bundle of nerves rubbing it in tight circles. “Fuck are you close?” Natasha asked there was a certain tiredness in her voice still. She clenched down harder on the silicone and mewled out “Yes, please”

“Fuck cum with me” with a few more fast thrusts you came first you’re juicing coating the lower stomach of the black widow. She had a surprise for you when you noticed a thick liquid gushing from the strap into your womb as she came. After having cum herself she pulled out to watch in an awe how the white cum was dripping from your stretched out hole.

After having cleaned you up Natasha could finally rest but not without you resigning on her chest of course. Call it what you wanted for Natasha those feelings of affection were real and of no ill intent she just did whatever you wanted to ensure happiness. With that thought and still cum dripping from your hole both you and Natasha fell asleep.

:)

#natasha romanoff imagine#natasha romanoff x reader#black widow x female reader#natasha romanoff smut#natasha romanoff x you#black widow x reader#natasha x reader#natasha x you#natasha romanoff#lesbian smut#lesbian#wlw ns/fw#marvel woman x reader#marvel smut#marvel fanfiction
