#remove facebook ads

Text

Well, hello art blog! My computer broke back in December, so I haven't made polished art in a long while. But but but! I have quite the experience with phone art, and so when I finished making this piece for an extended bit, and it turned out this nice... Well, I'd be hard pressed not to post it. Even if it does make me mad that it turned out so cute. Hopefully I'll post some more art soon enough! ID under cut, if the alt text doesn't work.

[Image ID: A drawing of Inspector Zenigata and Lupin iii, from the series Lupin iii. They're drawn in a pose where they're chest to chest, touching hands and nearly face to face. This is in reference to the vocaloid song "Magnet," and the pose from the cover art. They are wearing glowing butterfly headphones to reference the song further, which are interlocked at the microphones. They are drawn in a soft shaded style. Inspector Zenigata is flustered and mad, while Lupin is smug. End ID]

#callisto.art#lupin iii#inspector zenigata#loopzoop#luzeni#sorry if i tagged this wrong! im new to the lupin franchise and dont know a lot about the fanbase#..... and this is the first art post im making on it. sighhh.#i suppose i could also tag this voca but im worried that it would go into the main tags for it#also sorry if my image id sucks. i had to write it all again because i accidentally added a poll to the post#and could not remove it. im like a 50 year old on facebook in that way#and just for the record. i dont know anything about lupin jacket colors or anything like that with the other characters.#they are colored like that so theyre contrasting blue and red colors. because its cute

120 notes

Text

i think it should be mandatory that everyone watch The Social Dilemma at least once every six months

#dear everyone saying that tumblr doesn't have an algorithm: yes it does oh my GOD.#i see people say this so often irt twitter and reddit migration#just because tumblr has a different feed system to facebook/inta/twitter doesn't mean the only things you see are exactly what you want#free of influence or coercion#simplest example is tumblr suggesting users and tags for u to follow. what do you think is informing its suggestions?#how does it know which blogs are similar? it's not by fucking chance#please i know we all clown on what a mess this website is and how poorly it delivers ads but let's not forget that that's a choice they mak#if tumblr wanted to deliver ads in the way other social media sites do they could. but it's part of the image they've created for themselve#hence why they feel they can offer a paid subscription to remove ads that has an off switch so u can still see their weird crazy zany ads#because they know how much we love to clown on their shit ads. they know users will screenshot and share ads if they're weird enough#and they want you to. they're not so incompetent that they can't get us classy ads lol. this is their brand. let's not forget that!#anyway this is all triggered by me sending someone (hi bunni <3) a post of misha collin's sfx make up in gotham knights that popped up as a#recommended post despite me never having watched it or searched for it etc. what triggered that post appearing was me searching/tagging spn#a couple times recently. and of course misha collins and spn are frequently cross tagged. anyway since then i have been bombarded with that#godforsaken show constantly on my dash#sorry to gotham knights enjoyers i get the appeal and i am a dc simp but it's just not for me ig#if u read all this i love u im kissing you sloppystyle and or giving u a firm and warm handshake and or a friendly nod like we're walking#past each other on a beautiful day <3#my post

19 notes

Text

So this is how they choose to deal w/ european laws ???? Either i agree to them selling my data or i pay ?????? I fucking hate them

#i miss 2013/14/15 when social medias were all distinct and less overridden with ads#i actually installed an extension on my laptop so that twitter looks like 2015 twitter and it's incredible#i love these protection laws we have in europe but i hate how social medias are dealing w them#esp meta#threads is not available here#this is happening on facebook#i heard some stuff abt elon musk thinking abt removing twitter from the market when the laws come in full effect#my dude just show me some ads it's funnier if they're not targeted#like here for a few weeks i only had ads abt cars. i dont know how to drive

2 notes

Text

I can remove the background properly. I am a digital marketing and graphic design specialist. Besides that, I can also handle Facebook ads, Google ads, Instagram ads, and LinkedIn ads.

6 notes

Text

youtube

In this video, we'll show you the step-by-step process of how to delete or deactivate your ad account on Facebook. If you're looking for a full guide on how to remove your ad account from Facebook, then this video is for you!

#delete ad account facebook ads#Close ad accounts#delete facebook business manager account#remove ads account from business manager#remove ads account#how to delete facebook ads account and remove credit card info#facebook ad account deactivated#ad account disabled#facebook ad account banned#facebook ad account disabled#facebook ad account#delete facebook business manager#how to recover disabled fb ad account#ad account disabled facebook#facebook ads tutorial#Youtube

0 notes

Text

I am a professional digital marketer.

Hey,

I am a professional digital marketer. I know you are struggling with sales from your online store.

I can help you grow your business. We offer Facebook and Instagram marketing services. I am Jaber, and I have been working in advertising services since 2019. Our agency has already completed 200+ jobs. I can guarantee sales from my advertising company. Please check out my previous successful campaign.

I will do it for you.

FB Facebook Marketing,

Lead Campaign,

Remove Background,

FB Facebook create and set up,

YouTube promotion,

Are you still thinking? Have a quick chat.

Thanks & regards,

Jaber Hasan.

#digital marketing#facebook advertising#facebook marketing#facebook ad agency services#instagram promotion#lead generation#remove background#youtube seo

1 note

Text

Are you looking for the perfect brand identity for your business?

Greetings, dear business owners.

We are here to help you get that perfectly unique and creative design for your business/company...

We specialize in branding design or single items like...

✪ - Logo

✪ - Business card

✪ - Channel Logo

✪ - Gaming Logo

✪ - book cover

✪ - Social Ad banner

✪ - Social media covers

✪ - YouTube thumbnail

✪ - and many more.

Just ask for what you need. We will be glad to help your business reach more clients and help your sales grow by catching your customers' attention.

INBOX for order NOW........!

We are known for giving the best possible design service in the country. We have multiple pages, so don't worry: inbox us here. All pages are run by the same people, so you will get 100% genuine service, guaranteed.

You can inbox us anywhere and place your order.✪

#logo#logo design#logo designer#unique logo#morden logo#designer#banner design#mockup#facebook page design#facebook#instragram#google#ads design#photo editing#background remove#brand identify#brand logo#business card#buisness card design

0 notes

Text

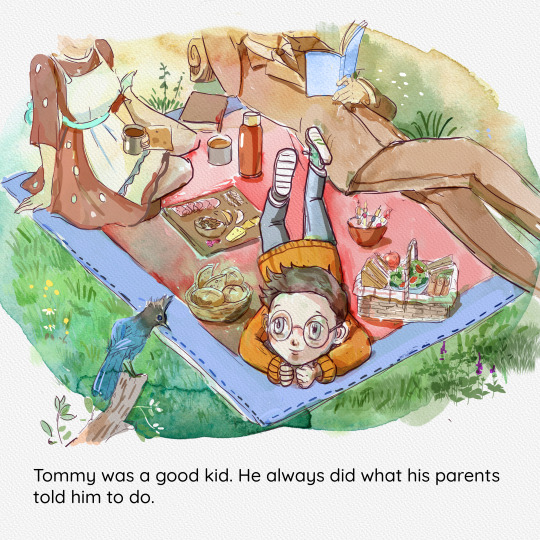

I spent the last 11 months working with my illustrator, Marta, to make the children's book of my dreams. We were able to get every detail just the way I wanted, and I'm very happy with the final result. She is the best person I have ever worked with, and I mean, just look at those colors!

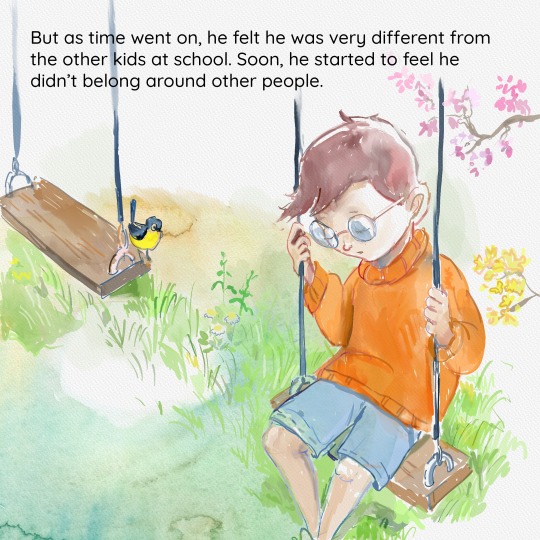

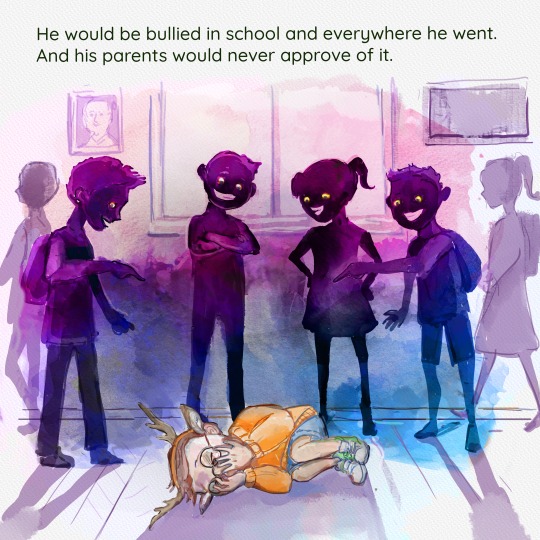

I wanted to tell the story of anyone who has ever felt that they didn't belong anywhere. Whether you are a nerd, autistic, queer, trans, a furry, or some combination of the above, it makes for a sad and difficult life. This isn't just my story. This is our story.

I also want to say the month following the book's launch has been very stressful. I have never done this kind of book before, and I didn't know how to get the word out about it. I do have a small publishing business and a full-time job, so I figured let's put some money into advertising this time. Indie writers will tell you great success stories they've had using Facebook ads, so I started a page and began boosting my posts.

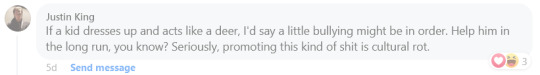

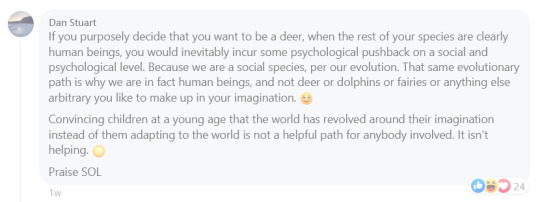

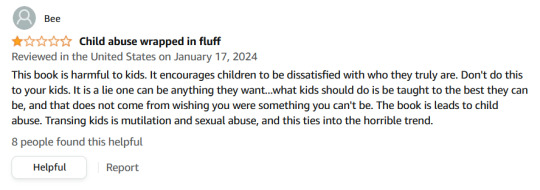

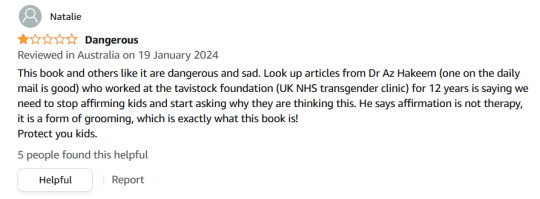

Within the first few days, I got a lot of likes and shares and even a few people who requested the book and left great reviews for me. There were also people memeing on how the boy turns into a delicious venison steak at the end of the book. It was all in good fun, though. It honestly made me laugh. Things were great, so I made more posts and increased spending.

But somehow, someway these new posts ended up on the wrong side of the platform. Soon, we saw claims of how the book was perpetuating mental illness, of how this book goes against all of basic biology and logic, and how the lgbtq agenda was corrupting our kids.

This brought out even more people to support the book, so I just let them at it and enjoyed my time reading comments after work. A few days later, the conversation moved from politics to encouraging bullying, accusing others of abusing children, and a competition over who could post the cruelest image. They were just comments, however, and after all, people were still supporting the book.

But then the trolls started organizing. Overnight, I got hit with 3 one-star reviews on Amazon. My heart stopped. If your book ever falls below a certain rating, it can be removed, and blocked, and you can receive a strike on your publishing account. All that hard work was about to be deleted, and it was all my fault for posting it in the wrong place.

I panicked, pulled all my posts, and went into hiding, hoping things would die down. I reported the reviews and so did many others, but here's the thing you might have noticed across platforms like Google and Amazon. There are community guidelines that I referenced in my email, but unless people are doing something highly illegal, things are rarely ever taken down on these massive platforms. So those reviews are still there to this day. Once again, it's my fault, and I should have seen it coming.

Luckily, the harassment stopped, and the book is doing better now, at least in the US. The overall rating is still rickety in Europe, Canada, and Australia, so any reviews there help me out quite a lot.

I'm currently looking for a new home to post about the book and talk about everything that went into it. I also love to talk about all things books if you ever want to chat. Maybe I'll post a selfie one day, too. Otherwise, the book is still on Amazon, and the full story and illustrations are on YouTube as well if you want to read it for free.

#books#reading#childrens books#lgbtq#lgbtqia#autism#transgender#furry#therian#art#deer#queer#artists on tumblr#creativity#illustration

3K notes

Text

At this point I'm just assuming everything I ever create and post to the internet is going to be stolen. People have been stealing, reposting, and adding their own pay links to my art for years now, without the help of AI.

I've made D&D themed stickers that are now all over "free clipart" sites, despite me filing requests to have them removed. I've seen my graphics ripped off and included in someone else's art without credit. I've had people tell me that an ACAB image I made showed up as a sticker getting put up around Seattle. Facebook meme pages crop my username out of my posts all the goddamn time. Voice actors on YouTube use my posts for "dramatic reading" videos constantly, and only one has ever asked me permission or given me any cut of the profits from their video.

I see my art out in the wild with no source back to me, and I'm a tiny creator compared to a lot of others. People repost shit constantly, whether it's here, Pinterest, Facebook, Twitter, TikTok, YouTube, whatever. I remember the old tumblr days of "We Heart It is not a goddamn source" PSAs.

I think people are right to be concerned about AI, but at this point I'm much more concerned about it from the perspective of "companies want to use it to cut labor costs," and less "it's theft."

People didn't need AI to steal my art before now. I'm more concerned about trying to freelance in a market full of "oh, we can just get ChatGPT to write and illustrate our articles."

782 notes

Text

I think most of us should take the whole ai scraping situation as a sign that we should maybe stop giving google/facebook/big corps all our data and look into alternatives that actually value your privacy.

i know this is easier said than done because everybody under the sun seems to use these services, but I promise you it’s not impossible. In fact, I made a list of a few alternatives to popular apps and services, alternatives that are privacy first, open source and don’t sell your data.

right off the bat I suggest you stop using gmail. it’s trash and not secure at all. google can read your emails. in fact, google has access to all the data on your account and while what they do with it is already shady, I don’t even want to know what the whole ai situation is going to bring. a good alternative to a few google services is skiff. they provide a secure, e2ee mail service along with a workspace that can easily import google documents, a calendar and 10 gb free storage. i’ve been using it for a while and it’s great.

a good alternative to google drive is either koofr or filen. I use filen because everything you upload on there is end to end encrypted with zero knowledge. they offer 10 gb of free storage and really affordable lifetime plans.

google docs? i don’t know her. instead, try cryptpad. I don’t have the spoons to list all the great features of this service, you just have to believe me. nothing you write there will be used to train ai and you can share it just as easily. if skiff is too limited for you and you also need stuff like sheets or forms, cryptpad is here for you. the only downside i could think of is that they don’t have a mobile app, but the site works great in a browser too.

since there is no real alternative to youtube I recommend watching your little slime videos through a streaming frontend like freetube or new pipe. besides the fact that they remove ads, they also stop google from tracking what you watch. there is a bit of functionality loss with these services, but if you just want to watch videos privately they’re great.

if you’re looking for an alternative to google photos that is secure and end to end encrypted you might want to look into stingle, although in my experience filen’s photos tab works pretty well too.

oh, also, for the love of god, stop using whatsapp, facebook messenger or instagram for messaging. just stop. signal and telegram are literally here and they’re free. spread the word, educate your friends, ask them if they really want anyone to snoop around their private conversations.

regarding browser, you know the drill. throw google chrome/edge in the trash (they’re really basically spyware disguised as browsers) and download either librewolf or brave. mozilla can be a great secure option too, with a bit of tinkering.

if you wanna get a vpn (and I recommend you do) be wary that some of them are scammy. do your research, read their terms and conditions, familiarise yourself with their model. if you don’t wanna do that and are willing to trust my word, go with mullvad. they don’t keep any logs. it’s 5 euros a month with no different pricing plans or other bullshit.

lastly, whatever alternative you decide on, what matters most is that you don’t keep all your data in one place. don’t trust a service to take care of your emails, documents, photos and messages. store all these things in different, trustworthy (preferably open source) places. there is absolutely no reason google has to know everything about you.

do your own research as well, don’t just trust the first vpn service your favourite youtuber gets sponsored by. don’t trust random tech blogs to tell you what the best cloud storage service is; they get good money for advertising one or the other. compare shit on your own or ask a tech savvy friend to help you. you’ve got this.

#internet privacy#privacy#vpn#google docs#ai scraping#psa#ai#archive of our own#ao3 writer#mine#textpost

1K notes

Text

AI “art” and uncanniness

TOMORROW (May 14), I'm on a livecast about AI AND ENSHITTIFICATION with TIM O'REILLY; on WEDNESDAY (May 15), I'm in NORTH HOLLYWOOD for a screening of STEPHANIE KELTON'S FINDING THE MONEY; FRIDAY (May 17), I'm at the INTERNET ARCHIVE in SAN FRANCISCO to keynote the 10th anniversary of the AUTHORS ALLIANCE.

When it comes to AI art (or "art"), it's hard to find a nuanced position that respects creative workers' labor rights, free expression, copyright law's vital exceptions and limitations, and aesthetics.

I am, on balance, opposed to AI art, but there are some important caveats to that position. For starters, I think it's unequivocally wrong – as a matter of law – to say that scraping works and training a model with them infringes copyright. This isn't a moral position (I'll get to that in a second), but rather a technical one.

Break down the steps of training a model and it quickly becomes apparent why it's technically wrong to call this a copyright infringement. First, the act of making transient copies of works – even billions of works – is unequivocally fair use. Unless you think search engines and the Internet Archive shouldn't exist, then you should support scraping at scale:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

And unless you think that Facebook should be allowed to use the law to block projects like Ad Observer, which gathers samples of paid political disinformation, then you should support scraping at scale, even when the site being scraped objects (at least sometimes):

https://pluralistic.net/2021/08/06/get-you-coming-and-going/#potemkin-research-program

After making transient copies of lots of works, the next step in AI training is to subject them to mathematical analysis. Again, this isn't a copyright violation.

Making quantitative observations about works is a longstanding, respected and important tool for criticism, analysis, archiving and new acts of creation. Measuring the steady contraction of the vocabulary in successive Agatha Christie novels turns out to offer a fascinating window into her dementia:

https://www.theguardian.com/books/2009/apr/03/agatha-christie-alzheimers-research
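As a rough illustration of what "making quantitative observations about works" can look like, here is a minimal sketch that counts total words and distinct words in a text. This is not the method used in the study linked above; the function name `vocabulary_stats` and the two sample passages are hypothetical stand-ins chosen only for illustration.

```python
import re


def vocabulary_stats(text: str) -> dict:
    """Count total words, distinct words, and their ratio for one text."""
    # Lowercase the text and keep runs of letters and apostrophes as words.
    words = re.findall(r"[a-z']+", text.lower())
    distinct = set(words)
    ratio = len(distinct) / len(words) if words else 0.0
    return {
        "tokens": len(words),        # total word count
        "types": len(distinct),      # distinct word count
        "type_token_ratio": ratio,   # lower ratio means more repetition
    }


# Hypothetical stand-ins for a passage from an early and a late novel.
early = "the quick brown fox jumps over the lazy dog near the quiet river bank"
late = "the thing went to the thing and the thing did the thing again"

for label, passage in (("early", early), ("late", late)):
    print(label, vocabulary_stats(passage))
```

A falling ratio across comparable passages would suggest a shrinking working vocabulary, though a real analysis would need to control for text length and genre.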

Programmatic analysis of scraped online speech is also critical to the burgeoning formal analyses of the language spoken by minorities, producing a vibrant account of the rigorous grammar of dialects that have long been dismissed as "slang":

https://www.researchgate.net/publication/373950278_Lexicogrammatical_Analysis_on_African-American_Vernacular_English_Spoken_by_African-Amecian_You-Tubers

Since 1988, UCL Survey of English Language has maintained its "International Corpus of English," and scholars have plumbed its depth to draw important conclusions about the wide variety of Englishes spoken around the world, especially in postcolonial English-speaking countries:

https://www.ucl.ac.uk/english-usage/projects/ice.htm

The final step in training a model is publishing the conclusions of the quantitative analysis of the temporarily copied documents as software code. Code itself is a form of expressive speech – and that expressivity is key to the fight for privacy, because the fact that code is speech limits how governments can censor software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech/

Are models infringing? Well, they certainly can be. In some cases, it's clear that models "memorized" some of the data in their training set, making the fair use, transient copy into an infringing, permanent one. That's generally considered to be the result of a programming error, and it could certainly be prevented (say, by comparing the model to the training data and removing any memorizations that appear).

Not every seeming act of memorization is a memorization, though. While specific models vary widely, the amount of data from each training item retained by the model is very small. For example, Midjourney retains about one byte of information from each image in its training data. If we're talking about a typical low-resolution web image of say, 300kb, that would be one three-hundred-thousandth (about 0.00033%) of the original image.

Typically in copyright discussions, when one work contains 0.00033% of another work, we don't even raise the question of fair use. Rather, we dismiss the use as de minimis (short for de minimis non curat lex or "The law does not concern itself with trifles"):

https://en.wikipedia.org/wiki/De_minimis

Busting someone who takes 0.00033% of your work for copyright infringement is like swearing out a trespassing complaint against someone because the edge of their shoe touched one blade of grass on your lawn.
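As a quick check of the arithmetic, assuming "300kb" means 300,000 bytes: one byte out of 300,000 is about 0.0000033 as a plain fraction, which is about 0.00033 when expressed as a percentage. The variable names below are illustrative only.

```python
retained_bytes = 1       # the per-image figure quoted above
image_bytes = 300_000    # assumption: 300kb read as 300,000 bytes

fraction = retained_bytes / image_bytes  # one three-hundred-thousandth
percent = fraction * 100                 # the same share as a percentage

print(f"fraction: {fraction:.7f}")
print(f"percent:  {percent:.5f}%")
```

Either way of writing the number describes the same tiny share, so the de minimis point is unaffected.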

But some works or elements of work appear many times online. For example, the Getty Images watermark appears on millions of similar images of people standing on red carpets and runways, so a model that takes even an infinitesimal sample of each one of those works might still end up being able to produce a whole, recognizable Getty Images watermark.

The same is true for wire-service articles or other widely syndicated texts: there might be dozens or even hundreds of copies of these works in training data, resulting in the memorization of long passages from them.

This might be infringing (we're getting into some gnarly, unprecedented territory here), but again, even if it is, it wouldn't be a big hardship for model makers to post-process their models by comparing them to the training set, deleting any inadvertent memorizations. Even if the resulting model had zero memorizations, this would do nothing to alleviate the (legitimate) concerns of creative workers about the creation and use of these models.
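One hypothetical way to picture such a post-processing check, for text, is to look for long word sequences that a model's output shares verbatim with a training document. This sketch is an assumption about how a check like this could work, not a description of what any model maker actually does; it also inspects outputs rather than the model itself. The names `ngrams` and `memorized_spans` and the threshold of 8 words are invented for illustration.

```python
def ngrams(text: str, n: int) -> set:
    """Return every run of n consecutive words in text, as tuples."""
    words = text.split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def memorized_spans(output: str, training_docs: list, n: int = 8) -> set:
    """Return the n-word runs that appear verbatim in output and in any training doc."""
    output_grams = ngrams(output, n)
    hits = set()
    for doc in training_docs:
        hits |= output_grams & ngrams(doc, n)
    return hits


# Hypothetical training document and model outputs.
training = ["the quick brown fox jumps over the lazy dog by the river"]
copied = "he said the quick brown fox jumps over the lazy dog today"
original = "a completely different sentence with no overlap at all in it"

print(len(memorized_spans(copied, training)))    # shared 8-word runs found
print(len(memorized_spans(original, training)))  # no shared runs
```

Any output that triggers hits could then be flagged for removal or retraining; choosing the run length is a judgment call between missing short copies and flagging common phrases.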

So here's the first nuance in the AI art debate: as a technical matter, training a model isn't a copyright infringement. Creative workers who hope that they can use copyright law to prevent AI from changing the creative labor market are likely to be very disappointed in court:

https://www.hollywoodreporter.com/business/business-news/sarah-silverman-lawsuit-ai-meta-1235669403/

But copyright law isn't a fixed, eternal entity. We write new copyright laws all the time. If current copyright law doesn't prevent the creation of models, what about a future copyright law?

Well, sure, that's a possibility. The first thing to consider is the possible collateral damage of such a law. The legal space for scraping enables a wide range of scholarly, archival, organizational and critical purposes. We'd have to be very careful not to inadvertently ban, say, the scraping of a politician's campaign website, lest we enable liars to run for office and renege on their promises, while they insist that they never made those promises in the first place. We wouldn't want to abolish search engines, or stop creators from scraping their own work off sites that are going away or changing their terms of service.

Now, onto quantitative analysis: counting words and measuring pixels are not activities that you should need permission to perform, with or without a computer, even if the person whose words or pixels you're counting doesn't want you to. You should be able to look as hard as you want at the pixels in Kate Middleton's family photos, or track the rise and fall of the Oxford comma, and you shouldn't need anyone's permission to do so.

Finally, there's publishing the model. There are plenty of published mathematical analyses of large corpuses that are useful and unobjectionable. I love me a good Google n-gram:

https://books.google.com/ngrams/graph?content=fantods%2C+heebie-jeebies&year_start=1800&year_end=2019&corpus=en-2019&smoothing=3

And large language models fill all kinds of important niches, like the Human Rights Data Analysis Group's LLM-based work helping the Innocence Project New Orleans extract data from wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

So that's nuance number two: if we decide to make a new copyright law, we'll need to be very sure that we don't accidentally crush these beneficial activities that don't undermine artistic labor markets.

This brings me to the most important point: passing a new copyright law that requires permission to train an AI won't help creative workers get paid or protect our jobs.

Getty Images pays photographers the least it can get away with. Publishers' contracts have transformed by inches into miles-long, ghastly rights grabs that take everything from writers, but still shift legal risks onto them:

https://pluralistic.net/2022/06/19/reasonable-agreement/

Publishers like the New York Times bitterly oppose their writers' unions:

https://actionnetwork.org/letters/new-york-times-stop-union-busting

These large corporations already control the copyrights to gigantic amounts of training data, and they have means, motive and opportunity to license these works for training a model in order to pay us less, and they are engaged in this activity right now:

https://www.nytimes.com/2023/12/22/technology/apple-ai-news-publishers.html

Big games studios are already acting as though there was a copyright in training data, and requiring their voice actors to begin every recording session with words to the effect of, "I hereby grant permission to train an AI with my voice" and if you don't like it, you can hit the bricks:

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

If you're a creative worker hoping to pay your bills, it doesn't matter whether your wages are eroded by a model produced without paying your employer for the right to do so, or whether your employer got to double dip by selling your work to an AI company to train a model, and then used that model to fire you or erode your wages:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

Individual creative workers rarely have any bargaining leverage over the corporations that license our copyrights. That's why copyright's 40-year expansion (in duration, scope, statutory damages) has resulted in larger, more profitable entertainment companies, and lower payments – in real terms and as a share of the income generated by their work – for creative workers.

As Rebecca Giblin and I write in our book Chokepoint Capitalism, giving creative workers more rights to bargain with against giant corporations that control access to our audiences is like giving your bullied schoolkid extra lunch money – it's just a roundabout way of transferring that money to the bullies:

https://pluralistic.net/2022/08/21/what-is-chokepoint-capitalism/

There's an historical precedent for this struggle – the fight over music sampling. 40 years ago, it wasn't clear whether sampling required a copyright license, and early hip-hop artists took samples without permission, the way a horn player might drop a couple bars of a well-known song into a solo.

Many artists were rightfully furious over this. The "heritage acts" (the music industry's euphemism for "Black people") who were most sampled had been given very bad deals and had seen very little of the fortunes generated by their creative labor. Many of them were desperately poor, despite having made millions for their labels. When other musicians started making money off that work, they got mad.

In the decades that followed, the system for sampling changed, partly through court cases and partly through the commercial terms set by the Big Three labels: Sony, Warner and Universal, who control 70% of all music recordings. Today, you generally can't sample without signing up to one of the Big Three (they are reluctant to deal with indies), and that means taking their standard deal, which is very bad, and also signs away your right to control your samples.

So a musician who wants to sample has to sign the bad terms offered by a Big Three label, and then hand $500 out of their advance to one of those Big Three labels for the sample license. That $500 typically doesn't go to another artist – it goes to the label, who share it around their executives and investors. This is a system that makes every artist poorer.

But it gets worse. Putting a price on samples changes the kind of music that can be economically viable. If you wanted to clear all the samples on an album like Public Enemy's "It Takes a Nation of Millions To Hold Us Back," or the Beastie Boys' "Paul's Boutique," you'd have to sell every CD for $150, just to break even:

https://memex.craphound.com/2011/07/08/creative-license-how-the-hell-did-sampling-get-so-screwed-up-and-what-the-hell-do-we-do-about-it/

Sampling licenses don't just make every artist financially worse off, they also prevent the creation of music of the sort that millions of people enjoy. But it gets even worse. Some older, sample-heavy music can't be cleared. Most of De La Soul's catalog wasn't available for 15 years, and even though some of their seminal music came back in March 2023, the band's frontman Trugoy the Dove didn't live to see it – he died in February 2023:

https://www.vulture.com/2023/02/de-la-soul-trugoy-the-dove-dead-at-54.html

This is the third nuance: even if we can craft a model-banning copyright system that doesn't catch a lot of dolphins in its tuna net, it could still make artists worse off.

Back when sampling started, it wasn't clear whether it would ever be considered artistically important. Early sampling was crude and experimental. Musicians who trained for years to master an instrument were dismissive of the idea that clicking a mouse was "making music." Today, most of us don't question the idea that sampling can produce meaningful art – even musicians who believe in licensing samples.

Having lived through that era, I'm prepared to believe that maybe I'll look back on AI "art" and say, "damn, I can't believe I never thought that could be real art."

But I wouldn't give odds on it.

I don't like AI art. I find it anodyne, boring. As Henry Farrell writes, it's uncanny, and not in a good way:

https://www.programmablemutter.com/p/large-language-models-are-uncanny

Farrell likens the work produced by AIs to the movement of a Ouija board's planchette, something that "seems to have a life of its own, even though its motion is a collective side-effect of the motions of the people whose fingers lightly rest on top of it." This is "spooky-action-at-a-close-up," transforming "collective inputs … into apparently quite specific outputs that are not the intended creation of any conscious mind."

Look, art is irrational in the sense that it speaks to us at some non-rational, or sub-rational level. Caring about the tribulations of imaginary people or being fascinated by pictures of things that don't exist (or that aren't even recognizable) doesn't make any sense. There's a way in which all art is like an optical illusion for our cognition, an imaginary thing that captures us the way a real thing might.

But art is amazing. Making art and experiencing art makes us feel big, numinous, irreducible emotions. Making art keeps me sane. Experiencing art is a precondition for all the joy in my life. Having spent most of my life as a working artist, I've come to the conclusion that the reason for this is that art transmits an approximation of some big, numinous irreducible emotion from an artist's mind to our own. That's it: that's why art is amazing.

AI doesn't have a mind. It doesn't have an intention. The aesthetic choices made by AI aren't choices, they're averages. As Farrell writes, "LLM art sometimes seems to communicate a message, as art does, but it is unclear where that message comes from, or what it means. If it has any meaning at all, it is a meaning that does not stem from organizing intention" (emphasis mine).

Farrell cites Mark Fisher's The Weird and the Eerie, which defines "weird" in easy-to-understand terms ("that which does not belong") but really grapples with "eerie."

For Fisher, eeriness is "when there is something present where there should be nothing, or is there is nothing present when there should be something." AI art produces the seeming of intention without intending anything. It appears to be an agent, but it has no agency. It's eerie.

Fisher talks about capitalism as eerie. Capital is "conjured out of nothing" but "exerts more influence than any allegedly substantial entity." The "invisible hand" shapes our lives more than any person. The invisible hand is fucking eerie. Capitalism is a system in which insubstantial non-things – corporations – appear to act with intention, often at odds with the intentions of the human beings carrying out those actions.

So will AI art ever be art? I don't know. There's a long tradition of using random or irrational or impersonal inputs as the starting point for human acts of artistic creativity. Think of divination:

https://pluralistic.net/2022/07/31/divination/

Or Brian Eno's Oblique Strategies:

http://stoney.sb.org/eno/oblique.html

I love making my little collages for this blog, though I wouldn't call them important art. Nevertheless, piecing together bits of other people's work can make fantastic, important work of historical note:

https://www.johnheartfield.com/John-Heartfield-Exhibition/john-heartfield-art/famous-anti-fascist-art/heartfield-posters-aiz

Even though painstakingly cutting out tiny elements from others' images can be a meditative and educational experience, I don't think that using tiny scissors or the lasso tool is what defines the "art" in collage. If you can automate some of this process, it could still be art.

Here's what I do know. Creating an individual bargainable copyright over training will not improve the material conditions of artists' lives – all it will do is change the relative shares of the value we create, shifting some of that value from tech companies that hate us and want us to starve to entertainment companies that hate us and want us to starve.

As an artist, I'm foursquare against anything that stands in the way of making art. As an artistic worker, I'm entirely committed to things that help workers get a fair share of the money their work creates, feed their families and pay their rent.

I think today's AI art is bad, and I think tomorrow's AI art will probably be bad, but even if you disagree (with either proposition), I hope you'll agree that we should be focused on making sure art is legal to make and that artists get paid for it.

Just because copyright won't fix the creative labor market, it doesn't follow that nothing will. If we're worried about labor issues, we can look to labor law to improve our conditions. That's what the Hollywood writers did, in their groundbreaking 2023 strike:

https://pluralistic.net/2023/10/01/how-the-writers-guild-sunk-ais-ship/

Now, the writers had an advantage: they are able to engage in "sectoral bargaining," where a union bargains with all the major employers at once. That's illegal in nearly every other kind of labor market. But if we're willing to entertain the possibility of getting a new copyright law passed (that won't make artists better off), why not the possibility of passing a new labor law (that will)? Sure, our bosses won't lobby alongside of us for more labor protection, the way they would for more copyright (think for a moment about what that says about who benefits from copyright versus labor law expansion).

But all workers benefit from expanded labor protection. Rather than going to Congress alongside our bosses from the studios and labels and publishers to demand more copyright, we could go to Congress alongside every kind of worker, from fast-food cashiers to publishing assistants to truck drivers to demand the right to sectoral bargaining. That's a hell of a coalition.

And if we do want to tinker with copyright to change the way training works, let's look at collective licensing, which can't be bargained away, rather than individual rights that can be confiscated at the entrance to our publisher, label or studio's offices. These collective licenses have been a huge success in protecting creative workers:

https://pluralistic.net/2023/02/26/united-we-stand/

Then there's copyright's wildest wild card: The US Copyright Office has repeatedly stated that works made by AIs aren't eligible for copyright, which is the exclusive purview of works of human authorship. This has been affirmed by courts:

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

Neither AI companies nor entertainment companies will pay creative workers if they don't have to. But for any company contemplating selling an AI-generated work, the fact that it is born in the public domain presents a substantial hurdle, because anyone else is free to take that work and sell it or give it away.

Whether or not AI "art" will ever be good art isn't what our bosses are thinking about when they pay for AI licenses: rather, they are calculating that they have so much market power that they can sell whatever slop the AI makes, and pay less for the AI license than they would pay for a human artist's work. As is the case in every industry, AI can't do an artist's job, but an AI salesman can convince an artist's boss to fire the creative worker and replace them with AI:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

They don't care if it's slop – they just care about their bottom line. A studio executive who cancels a widely anticipated film prior to its release to get a tax credit isn't thinking about artistic integrity. They care about one thing: money. The fact that AI works can be freely copied, sold or given away may not mean much to a creative worker who actually makes their own art, but I assure you, it's the only thing that matters to our bosses.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/13/spooky-action-at-a-close-up/#invisible-hand

#pluralistic#ai art#eerie#ai#weird#henry farrell#copyright#copyfight#creative labor markets#what is art#ideomotor response#mark fisher#invisible hand#uncanniness#prompting

264 notes

Text

Instagram and TikTok keep taking down my art so I post boobs and violence on main.

STOP TAKING DOWN MY SHIT. These websites keep falsely flagging my art and text for sex and violence. Sometimes it gets put back but mostly doesn’t. There’s no way to assure a human looks at the post. There’s no help service. The appeal process has no button for “this shit isn’t in the post.”

Instagram and tumblr keep silencing pro Palestine posts and fundraisers. Tumblr is banning trans women. Websites keep scrubbing information for ai training. Meta completely blocked news from Facebook and Instagram in Canada. The USA is banning tiktok.

KEEP REMOVING MY ART

SEE IF I GIVE A SHIT

Images are taken from The Thing (1982), 2000 ad illustrations from the golden age of science fiction pulps (1988), various paintings by Francis Bacon, Sketch Daily References, shutter stock and TikTok and instagram screenshots from my shit being taken down.

#my post#my art#digital art#punk#punk art#horror#photo collage#artists on tumblr#cw nudity#tw gun#tw violence#Tw gore#Tw blood#tw eyestrain

163 notes

Text

You're being targeted by disinformation networks that are vastly more effective than you realize. And they're making you more hateful and depressed.

(This essay was originally by u/walkandtalkk and posted to r/GenZ on Reddit two months ago, and I've crossposted here on Tumblr for convenience because it's relevant and well-written.)

TL;DR: You know that Russia and other governments try to manipulate people online. But you almost certainly don't know just how effectively orchestrated influence networks are using social media platforms to make you -- individually -- angry, depressed, and hateful toward each other. Those networks' goal is simple: to cause Americans and other Westerners -- especially young ones -- to give up on social cohesion and to give up on learning the truth, so that Western countries lack the will to stand up to authoritarians and extremists.

And you probably don't realize how well it's working on you.

This is a long post, but I wrote it because this problem is real, and it's much scarier than you think.

How Russian networks fuel racial and gender wars to make Americans fight one another

In September 2018, a video went viral after being posted by In the Now, a social media news channel. It featured a feminist activist pouring bleach on a male subway passenger for manspreading. It got instant attention, with millions of views and wide social media outrage. Reddit users wrote that it had turned them against feminism.

There was one problem: The video was staged. And In the Now, which publicized it, is a subsidiary of RT, formerly Russia Today, the Kremlin TV channel aimed at foreign, English-speaking audiences.

As an MIT study found in 2019, Russia's online influence networks reached 140 million Americans every month -- the majority of U.S. social media users.

Russia began using troll farms a decade ago to incite gender and racial divisions in the United States

In 2013, Yevgeny Prigozhin, a confidant of Vladimir Putin, founded the Internet Research Agency (the IRA) in St. Petersburg. It was the Russian government's first coordinated facility to disrupt U.S. society and politics through social media.

Here's what Prigozhin had to say about the IRA's efforts to disrupt the 2022 election:

"Gentlemen, we interfered, we interfere and we will interfere. Carefully, precisely, surgically and in our own way, as we know how. During our pinpoint operations, we will remove both kidneys and the liver at once."

In 2014, the IRA and other Russian networks began establishing fake U.S. activist groups on social media. By 2015, hundreds of English-speaking young Russians worked at the IRA. Their assignment was to use those false social-media accounts, especially on Facebook and Twitter -- but also on Reddit, Tumblr, 9gag, and other platforms -- to aggressively spread conspiracy theories and mocking, ad hominem arguments that incite American users.

In 2017, U.S. intelligence found that Blacktivist, a Facebook and Twitter group with more followers than the official Black Lives Matter movement, was operated by Russia. Blacktivist regularly attacked America as racist and urged Black users to reject major candidates. On November 2, 2016, just before the 2016 election, Blacktivist's Twitter urged Black Americans: "Choose peace and vote for Jill Stein. Trust me, it's not a wasted vote."

Russia plays both sides -- on gender, race, and religion

The brilliance of the Russian influence campaign is that it convinces Americans to attack each other, worsening both misandry and misogyny, mutual racial hatred, and extreme antisemitism and Islamophobia. In short, it's not just an effort to boost the right wing; it's an effort to radicalize everybody.

Russia uses its trolling networks to aggressively attack men. According to MIT, in 2019, the most popular Black-oriented Facebook page was the charmingly named "My Baby Daddy Aint Shit." It regularly posts memes attacking Black men and government welfare workers. It serves two purposes: Make poor black women hate men, and goad black men into flame wars.

MIT found that My Baby Daddy is run by a large troll network in Eastern Europe likely financed by Russia.

But Russian influence networks are also aggressively misogynistic and aggressively anti-LGBT.

The New York Times found that on January 23, 2017, just after the first Women's March, the Internet Research Agency began a coordinated attack on the movement. Per the Times:

More than 4,000 miles away, organizations linked to the Russian government had assigned teams to the Women’s March. At desks in bland offices in St. Petersburg, using models derived from advertising and public relations, copywriters were testing out social media messages critical of the Women’s March movement, adopting the personas of fictional Americans.

They posted as Black women critical of white feminism, conservative women who felt excluded, and men who mocked participants as hairy-legged whiners.

But the Russian PR teams realized that one attack worked better than the rest: They accused its co-founder, Arab American Linda Sarsour, of being an antisemite. Over the next 18 months, at least 152 Russian accounts regularly attacked Sarsour. That may not seem like many accounts, but it worked: They drove the Women's March movement into disarray and eventually crippled the organization.

Russia doesn't need a million accounts, or even that many likes or upvotes. It just needs to get enough attention that actual Western users begin amplifying its content.

A former federal prosecutor who investigated the Russian disinformation effort summarized it like this:

It wasn’t exclusively about Trump and Clinton anymore. It was deeper and more sinister and more diffuse in its focus on exploiting divisions within society on any number of different levels.

As the New York Times reported in 2022,

There was a routine: Arriving for a shift, [Russian disinformation] workers would scan news outlets on the ideological fringes, far left and far right, mining for extreme content that they could publish and amplify on the platforms, feeding extreme views into mainstream conversations.

China is joining in with AI

[A couple months ago], the New York Times reported on a new disinformation campaign. "Spamouflage" is an effort by China to divide Americans by combining AI with real images of the United States to exacerbate political and social tensions in the U.S. The goal appears to be to cause Americans to lose hope, by promoting exaggerated stories with fabricated photos about homeless violence and the risk of civil war.

As Ladislav Bittman, a former Czechoslovakian secret police operative, explained about Soviet disinformation, the strategy is not to invent something totally fake. Rather, it is to act like an evil doctor who expertly diagnoses the patient’s vulnerabilities and exploits them, “prolongs his illness and speeds him to an early grave instead of curing him.”

The influence networks are vastly more effective than platforms admit

Russia now runs its most sophisticated online influence efforts through a network called Fabrika. Fabrika's operators have bragged that social media platforms catch only 1% of their fake accounts across YouTube, Twitter, TikTok, Telegram, and other platforms.

But how effective are these efforts? By 2020, Facebook's most popular pages for Christian and Black American content were run by Eastern European troll farms tied to the Kremlin. And Russia doesn't just target angry Boomers on Facebook. Russian trolls are enormously active on Twitter. And, even, on Reddit.

It's not just false facts

The term "disinformation" undersells the problem. Because much of Russia's social media activity is not trying to spread fake news. Instead, the goal is to divide and conquer by making Western audiences depressed and extreme.

Sometimes, through brigading and trolling. Other times, by posting hyper-negative or extremist posts or opinions about the U.S. and the West over and over, until readers assume that's how most people feel. And sometimes, by using trolls to disrupt threads that advance Western unity.

As the RAND think tank explained, the Russian strategy is volume and repetition, from numerous accounts, to overwhelm real social media users and create the appearance that everyone disagrees with, or even hates, them. And it's not just low-quality bots. Per RAND,

Russian propaganda is produced in incredibly large volumes and is broadcast or otherwise distributed via a large number of channels. ... According to a former paid Russian Internet troll, the trolls are on duty 24 hours a day, in 12-hour shifts, and each has a daily quota of 135 posted comments of at least 200 characters.

What this means for you

You are being targeted by a sophisticated PR campaign meant to make you more resentful, bitter, and depressed. It's not just disinformation; it's also real-life human writers and advanced bot networks working hard to shift the conversation to the most negative and divisive topics and opinions.

It's why some topics seem to go from non-issues to constant controversy and discussion, with no clear reason, across social media platforms. And a lot of those trolls are actual, "professional" writers whose job is to sound real.

So what can you do? To quote WarGames: The only winning move is not to play. The reality is that you cannot distinguish disinformation accounts from real social media users. Unless you know whom you're talking to, there is a genuine chance that the post, tweet, or comment you are reading is an attempt to manipulate you -- politically or emotionally.

Here are some thoughts:

Don't accept facts from social media accounts you don't know. Russian, Chinese, and other manipulation efforts are not uniform. Some will make deranged claims, but others will tell half-truths. Or they'll spin facts about a complicated subject, be it the war in Ukraine or loneliness in young men, to give you a warped view of reality and spread division in the West.

Resist groupthink. A key element of manipulation networks is volume. People are naturally inclined to believe statements that have broad support. When a post gets 5,000 upvotes, it's easy to think the crowd is right. But "the crowd" could be fake accounts, and even if they're not, the brilliance of government manipulation campaigns is that they say things people are already predisposed to think. They'll tell conservative audiences something misleading about a Democrat, or make up a lie about Republicans that catches fire on a liberal server or subreddit.

Don't let social media warp your view of society. This is harder than it seems, but you need to accept that the facts -- and the opinions -- you see across social media are not reliable. If you want the news, do what everyone online says not to: look at serious, mainstream media. It is not always right. Sometimes, it screws up. But social media narratives are heavily manipulated by networks whose job is to ensure you are deceived, angry, and divided.

Edited for typos and clarity. (Tumblr-edited for formatting and to note a sourced article is now older than mentioned in the original post. -LV)

P.S. Apparently, this post was removed several hours ago due to a flood of reports. Thank you to the r/GenZ moderators for re-approving it.

Second edit:

This post is not meant to suggest that r/GenZ is uniquely or especially vulnerable, or to suggest that a lot of challenges people discuss here are not real. It's entirely the opposite: Growing loneliness, political polarization, and increasing social division along gender lines are real. The problem is that disinformation and influence networks expertly, and effectively, hijack those conversations and use those real, serious issues to poison the conversation. This post is not about left or right: Everyone is targeted.

(Further Tumblr notes: since this was posted, there have been several more articles detailing recent discoveries of active disinformation/influence and hacking campaigns by Russia and their allies against several countries and their respective elections, and this post barely touches on the numerous Tumblr blogs discovered to be troll farms/bad faith actors from pre-2016 through today. This is an ongoing and very real problem, and it's nowhere near over.

A quote from the 2018 NPR article linked above that you might find familiar today: "[A] particular hype and hatred for Trump is misleading the people and forcing Blacks to vote Killary. We cannot resort to the lesser of two devils. Then we'd surely be better off without voting AT ALL," a post from the account said.")

#propaganda#psyops#disinformation#US politics#election 2024#us elections#YES we have legitimate criticisms of our politicians and systems#but that makes us EVEN MORE susceptible to radicalization. not immune#no not everyone sharing specific opinions are psyops. but some of them are#and we're more likely to eat it up on all sides if it aligns with our beliefs#the division is the point#sound familiar?#voting#rambles#long post

159 notes

Text

This beautiful blue gown was first spotted in the 1994 episode of The Nanny entitled A Star Is Unborn. Martha Gehman wore it as Betty. However, Fran recognizes her from a commercial and calls her the “Less than Fresh” girl. The costume almost certainly did not originate with The Nanny, but its place of origin has yet to be determined.

The gown had lace added to the front of the neckline when it appeared in the 1997 episode of Frasier entitled Halloween, where an uncredited party guest wore it. We cannot confirm it, but she resembles actress Illeana Douglas, who later appeared as Kenny’s wife in the 2001 episode of Frasier entitled Hungry Heart.

The costume was further altered, with the beautiful sleeves being removed and replaced when it was worn by Alexis Bledel as Rory Gilmore in the 2001 episode of Gilmore Girls entitled Run Away, Little Boy.

The dress was seen again throughout the 2013 first season of Reign, where Katy Grabstas wore it as Sarah.

Costume Credit: Anonymous, Katie S.

Follow: Website | Twitter | Facebook | Pinterest | Instagram

819 notes

Text

OKAY SO I FOUND SOME STUFF ABOUT RINK O'MANIA

Hi, Hello, Welcome as I take you down the journey I fucking went through

So I was watching byler clips, as one does, and I was wondering whether or not they got lucky with the yellow and blue lighting in Rink O'Mania or whether they like – change the light bulbs or whatever. So I decided to stalk the Skating Rink they filmed at.

And holy shit did I find some interesting things.

I have no idea whether or not this was discovered before but I JUST NEED TO SHARE. SO HERE WE GO.

So, first of all, the place was originally called Roller King but they changed it to Skate O'Mania. Which, pretty similar to Rink O'Mania right? THAT'S BECAUSE THEY REMODELLED THE ENTIRE FUCKING ROLLER RINK FOR THE SHOW

LIKE I'm not even kidding.

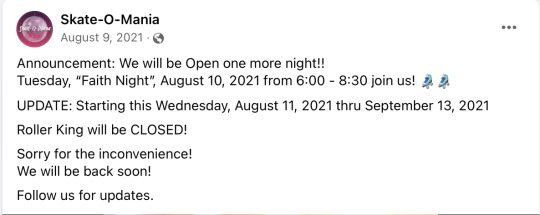

They posted this message

Followed by a video (which is just a photo) with a little clipart of "under construction"

THEN, on August 10th they posted that they wrapped filming.

"Remodel courtesy of Hollywood" They deadass redecorated the entire roller rink for the however many minutes spent in ONE episode of the season. (They probably spent half the month redecorating and the other half filming which I'm losing my mind over?? no way this is a one time setting).

LIKE– Let me show you the difference in before and after the remodelling

The Roller Rink Before and After.

They added the blue and yellow light strips and the disco ball. And I can’t say 100% but it looks like they removed the pink and green coloured light(?)

And they added the whole ‘Skate/Rink O’mania’ sign (basically everything honestly was added by them–)

The Snack Station Before and After.

Again, we see the blue and yellow lighting.

The Table/Seating Area Before and After.

And okay I’m sorry about the quality of the second one I couldn’t find a better photo from their Google maps gallery or Facebook bUT- you can see that originally the lighting scheme was pink and blue but now it’s yellow and blue.

(here's another photo)

Like idk I’m losing my mind over this- they really like remodelled the entire fucking roller rink and added so much yellow and blue like?? I just think it's insane and I needed to share this discovery.

#byler#mike wheeler#will byers#stranger things#stranger things 4#stranger things analysis#I have no idea if anyones done this before#hopefully this just helps prove that everything in the set is literally 100% intentional

1K notes

Text

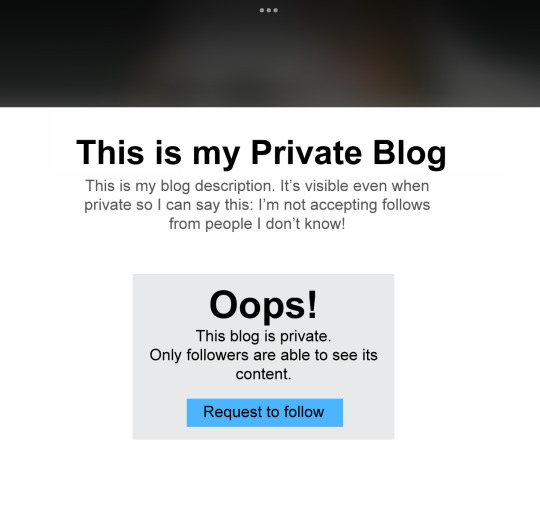

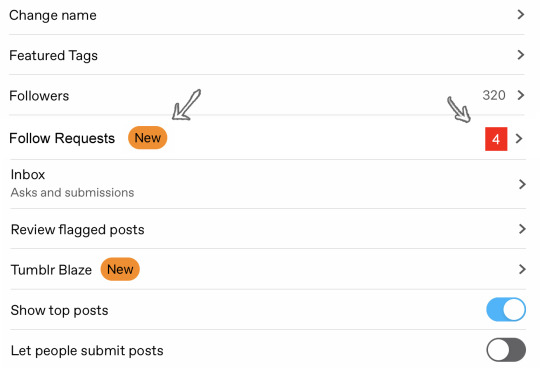

@wip i have a tumblr feature suggestion!!

Right now, Twitter (or whatever it’s called now) is the only social app that allows PRIVATE ACCOUNTS (instagram doesn’t count because you can’t have text posts. You expect me to post an image every time I want to vent? Facebook also doesn’t count because I hate it.)

I know we have the ability currently to make our side blogs private, but here’s the issue with that for me:

1. They can’t be FOLLOWED, you can apparently only add “members” to the blog.

2. I thought I had more points but really it keeps coming back to the fact that they can’t be followed. I don’t want to put in a password every time I want to check in on a friend. The convenience of making your own private blog and following your friends’ private blogs cannot be overstated. It’s the ONE THING that’s keeping me attached to twitter.

What’s so great about having a private blog?

Safety. Toggle your blog to “Private” in Visibility settings if you need a breather from outside attention. Only your followers will be able to see any new posts you make. Old posts will still be visible outside your blog however, similar to when a blog is deactivated I assume.

Friends-only space. Sharing thoughts and art with only a few close friends is more comfortable for a lot of people.

Actual privacy. Even if you have 0 followers, even if you don’t use tags, the tumblr search will still pull up any word used in the post itself, meaning if someone searches the right thing, your posts could still show up to anyone. Setting your blog to private would remove this concern.

Journaling. Private twitters are nice, but a private tumblr? Getting to write out a BIG LENGTHY RANT for only close friends? Hell yea

Keep explicit content away from those who don’t want to see it. Setting a blog to private is a great way to keep certain art and writings out of easily reachable spaces.

Another bridged gap between twitter and tumblr. Ok listen. I’m not necessarily advocating for every twitter user to join tumblr. But I know that’s something the tumblr staff is interested in, so I’m adding it here for that reason. I for one would ditch twitter in a heartbeat if I could have a private blog, and would probably have an easier time convincing some straggling friends to join for a feature like this. Just sayin

I also made some quick and sloppy mockups

Anyway i guess that’s the gist of it

If anyone has anything to add pls feel free. I really want a private blog LMAO

427 notes
