#social media content moderation

Text

youtube

a u.s. supreme court case for 2023 may change the internet. legal eagle (above video) explains.

Gonzalez v. Google LLC is about whether youtube can be held liable for the content that its algorithm recommends to viewers. it stems from a terrorist attack by isis and the plaintiffs' claim that youtube's algorithm recommended isis videos to its users.

#u.s. supreme court#u.s. law#2023 u.s. supreme court cases#youtube algorithm#communications decency act#section 230#social media content moderation#isis#youtube recommendations#online radicalization#this dystopian nightmare of a country#duty not to support terrorists (ATA)#Youtube#Gonzalez v. Google LLC#legaleagle#antiterrorism act (ATA)#3rd party content moderation#FOSTA#SESTA


Text

👉 How Agile Content Moderation Process Improves a Brand’s Online Visibility

🤷‍♀️ Agile content moderation enhances a brand’s online presence by swiftly addressing and adapting to evolving content challenges. This dynamic approach ensures a safer and more positive digital environment, boosting visibility and trust.

🔊 Read the blog: https://www.sitepronews.com/2022/12/20/how-agile-content-moderation-process-improves-a-brands-online-visibility/


#content moderation#content moderation solution#Outsource Content Moderation#Outsource content moderation services#social media content moderation


Link

#Social Media Content Moderation#United States#social networking sites#objectionable content#Content moderation services#content moderators#social media platforms#Pre-moderation#post-moderation#reactive moderation#spam contents#Unwanted Contents#facebook#linkedin#twitter#Instagram#YouTube#Tumblr#TikTok


Text

Better failure for social media

Content moderation is fundamentally about making social media work better, but there are two other considerations that determine how social media fails: end-to-end (E2E) and freedom of exit. These are much neglected, and that’s a pity, because how a system fails is every bit as important as how it works.

Of course, commercial social media sites don’t want to be good, they want to be profitable. The unique dynamics of social media allow the companies to uncouple quality from profit, and more’s the pity.

Social media grows thanks to network effects — you join Twitter to hang out with the people who are there, and then other people join to hang out with you. The more users Twitter accumulates, the more users it can accumulate. But social media sites stay big thanks to high switching costs: the more you have to give up to leave a social media site, the harder it is to go:

https://www.eff.org/deeplinks/2021/08/facebooks-secret-war-switching-costs

Nature bestows some built-in switching costs on social media, primarily the coordination problem of reaching consensus on where you and the people in your community should go next. The more friends you share a social media platform with, the higher these costs are. If you’ve ever tried to get ten friends to agree on where to go for dinner, you know how this works. Now imagine trying to get all your friends to agree on where to go for dinner, for the rest of their lives!

But these costs aren’t insurmountable. Network effects, after all, are a double-edged sword. Some users are above-average draws for others, and if a critical mass of these important nodes in the network map depart for a new service — like, say, Mastodon — that service becomes the presumptive successor to the existing giants.

When that happens — when Mastodon becomes “the place we’ll all go when Twitter finally becomes unbearable” — the downsides of network effects kick in and the double-edged sword begins to carve away at a service’s user-base. It’s one thing to argue about which restaurant we should go to tonight, it’s another to ask whether we should join our friends at the new restaurant where they’re already eating.

Social media sites that want to keep their users’ business walk a fine line: they can simply treat those users well, showing them the things they ask to see, not spying on them, paying to police their service to reduce harassment, etc. But these are costly choices: if you show users the things they ask to see, you can’t charge businesses to show them things they don’t want to see. If you don’t spy on users, you can’t sell targeting services to people who want to force them to look at things they’re uninterested in. Every moderator you pay to reduce harassment draws a salary at the expense of your shareholders, and every catastrophe that moderator prevents is a catastrophe you can’t turn into monetizable attention as gawking users flock to it.

So social media sites are always trying to optimize their mistreatment of users, mistreating them (and thus profiting from them) right up to the point where they are ready to switch, but without actually pushing them over the edge.

One way to keep dissatisfied users from leaving is by exacting a penalty from them for their disloyalty. You can lock in their data, their social relationships, or, if they’re “creators” (and disproportionately likely to be key network nodes whose defection to a rival triggers mass departures from their fans), you can take their audiences hostage.

The dominant social media firms all practice a low-grade, tacit form of hostage-taking. Facebook downranks content that links to other sites on the internet. Instagram prohibits links in posts, limiting creators to “Links in bio.” TikTok doesn’t even allow links. All of this serves as a brake on high-follower users who seek to migrate their audiences to better platforms.

But these strategies are unstable. When a platform becomes worse for users (say, because it mandates nonconsensual surveillance and ramps up advertising), they may actively seek out other places on which to follow each other, and the creators they enjoy. When a rival platform emerges as the presumptive successor to an incumbent, users no longer face the friction of knowing which rival they should resettle to.

When platforms’ enshittification strategies overshoot this way, users flee in droves, and then it’s time for the desperate platform managers to abandon the pretense of providing a public square. Yesterday, Elon Musk’s Twitter rolled out a policy prohibiting users from posting links to rival platforms:

https://web.archive.org/web/20221218173806/https://help.twitter.com/en/rules-and-policies/social-platforms-policy

This policy was explicitly aimed at preventing users from telling each other where they could be found after they leave Twitter:

https://web.archive.org/web/20221219015355/https://twitter.com/TwitterSupport/status/1604531261791522817

This, in turn, was a response to many users posting regular messages explaining why they were leaving Twitter and how they could be found on other platforms. In particular, Twitter management was concerned with departures by high-follower users like Taylor Lorenz, who was retroactively punished for violating the policy, though it didn’t exist when she violated it:

https://deadline.com/2022/12/washington-post-journalist-taylor-lorenz-suspended-twitter-1235202034/

As Elon Musk wrote last spring: “The acid test for two competing socioeconomic systems is which side needs to build a wall to keep people from escaping? That’s the bad one!”

https://twitter.com/elonmusk/status/1533616384747442176

This isn’t particularly insightful. It’s obvious that any system that requires high walls and punishments to stay in business isn’t serving its users, whose presence is attributable to coercion, not fulfillment. Of course, the people who operate these systems have all manner of rationalizations for them.

The Berlin Wall, we were told, wasn’t there to keep East Germans in — rather, it was there to keep the teeming hordes clamoring to live in the workers’ paradise out. In the same way, platforms will claim that they’re not blocking outlinks or sideloading because they want to prevent users from defecting to a competitor, but rather, to protect those users from external threats.

This rationalization quickly wears thin, and then new ones step in. For example, you might claim that telling your friends that you’re leaving and asking them to meet you elsewhere is like “giv[ing] a talk for a corporation [and] promot[ing] other corporations”:

https://mobile.twitter.com/mayemusk/status/1604550452447690752

Or you might claim that it’s like “running Wendy’s ads [on] McDonalds property,” rather than turning to your friends and saying, “The food at McDonalds sucks, let’s go eat at Wendy’s instead”:

https://twitter.com/doctorow/status/1604559316237037568

The truth is that any service that won’t let you leave isn’t in the business of serving you; it’s in the business of harming you. The only reason to build a wall around your service (that is, to impose any switching costs on users) is so that you can fuck them over without risking their departure.

The platforms want to be Anatevka, and want us to be the villagers of Fiddler on the Roof, kept plodding the muddy, Cossack-haunted roads by the threat of losing all our friends if we try to leave:

https://doctorow.medium.com/how-to-leave-dying-social-media-platforms-9fc550fe5abf

That’s where freedom of exit comes in. The public should have the right to leave, and companies should not be permitted to make that departure burdensome. Any burden we permit companies to impose is an invitation to abuse their users.

This is why governments are handing down new interoperability mandates: the EU’s Digital Markets Act forces the largest companies to offer APIs so that smaller rivals can plug into them and let users walk away from Big Tech into new kinds of platforms (small businesses, co-ops, nonprofits, hobby sites) that treat them better. These small players are overwhelmingly part of the fediverse: the federated social media sites that allow users to connect to one another irrespective of which server or service they use.

The creators of these platforms have pledged themselves to freedom of exit. Mastodon ships with a “Move Followers” and “Move Following” feature that lets you quit one server and set up shop on another, without losing any of the accounts you follow or the accounts that follow you:

https://codingitwrong.com/2022/10/10/migrating-a-mastodon-account.html

This feature is as yet obscure, because the exodus to Mastodon is still young. Users who flocked to servers without knowing much about their managers have, by and large, not yet run into problems with the site operators. The early trickle of horror stories about petty authoritarianism from Mastodon sysops conspicuously fails to mention that if the management of a particular instance turns tyrant, you can click two links, export your whole social graph, sign up for a rival, click two more links and be back at it.

This feature will become more prominent, because there is nothing about running a Mastodon server that means that you are good at running a Mastodon server. Elon Musk isn’t an evil genius — he’s an ordinary mediocrity who lucked into a lot of power and very little accountability. Some Mastodon operators will have Musk-like tendencies that they will unleash on their users, and the difference will be that those users can click two links and move elsewhere. Bye-eee!

Freedom of exit isn’t just a matter of the human right of movement, it’s also a labor issue. Online creators constitute a serious draw for social media services. All things being equal, these services would rather coerce creators’ participation — by holding their audiences hostage — than persuade creators to remain by offering them an honest chance to ply their trade.

Platforms have a variety of strategies for chaining creators to their services: in addition to making it harder for creators to coordinate with their audiences in a mass departure, platforms can use DRM, as Audible does, to prevent creators’ customers from moving the media they purchase to a rival’s app or player.

Then there’s “freedom of reach”: platforms routinely and deceptively conflate recommending a creator’s work with showing that creator’s work to the people who explicitly asked to see it.

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

When you follow or subscribe to a feed, that is not a “signal” to be mixed into the recommendation system. It’s an order: “Show me this.” Not “Show me things like this.”

Show.

Me.

This.
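To make the distinction concrete, here is a minimal, hypothetical sketch in Python of a follow treated as an order versus a follow treated as one ranking signal among many. Everything in it is an illustrative assumption: the `Post` fields, the promotion boost, and the fixed number of feed slots are invented for the example and do not describe how Twitter, Facebook, or any other platform actually ranks its feeds.

```python
from dataclasses import dataclass


@dataclass
class Post:
    author: str
    timestamp: int            # hypothetical: seconds since epoch
    text: str
    promoted: bool = False    # hypothetical: the poster paid for placement
    engagement: float = 0.0   # hypothetical engagement score


def follow_feed(posts, following):
    """A follow as an order: every post from a followed account, newest first, nothing else."""
    return sorted(
        (p for p in posts if p.author in following),
        key=lambda p: p.timestamp,
        reverse=True,
    )


def ranked_feed(posts, following, follow_weight=1.0, promo_boost=10.0, slots=3):
    """A follow as one signal: followed posts compete with paid and viral posts for a few slots."""
    def score(p):
        s = p.engagement
        if p.author in following:
            s += follow_weight
        if p.promoted:
            s += promo_boost
        return s

    # sorted() is stable, so posts with equal scores keep their input order
    return sorted(posts, key=score, reverse=True)[:slots]


SAMPLE_POSTS = [
    Post("alice", 300, "my new zine is out"),
    Post("bob", 200, "meet me on the other server"),
    Post("brand", 250, "buy now", promoted=True),
    Post("stranger", 100, "viral clip", engagement=5.0),
]
FOLLOWING = {"alice", "bob"}

if __name__ == "__main__":
    print([p.author for p in follow_feed(SAMPLE_POSTS, FOLLOWING)])
    print([p.author for p in ranked_feed(SAMPLE_POSTS, FOLLOWING)])
```

With these sample posts, `follow_feed` returns exactly Alice's and Bob's posts, while `ranked_feed` fills its three slots with the promoted post, the viral post, and Alice's post, so Bob's post, which his followers explicitly asked to see, never appears.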

But there’s no money in showing people the things they tell you they want to see. If Amazon showed shoppers the products they searched for, they couldn’t earn $31b/year on an “ad business” that fills the first six screens of results with rival products who’ve paid to be displayed over the product you’re seeking:

https://pluralistic.net/2022/11/28/enshittification/#relentless-payola

If Spotify played you the albums you searched for, it couldn’t redirect you to playlists artists have to shell out payola to be included on:

https://pluralistic.net/2022/09/12/streaming-doesnt-pay/#stunt-publishing

And if you only see what you ask for, then product managers whose KPI is whether they entice you to “discover” something else won’t get a bonus every time you fatfinger a part of your screen that navigates you away from the thing you specifically requested:

https://doctorow.medium.com/the-fatfinger-economy-7c7b3b54925c

Musk, meanwhile, has announced that you won’t see messages from the people you follow unless they pay for Twitter Blue:

https://www.wired.com/story/what-is-twitter-blue/

And also that you will be nonconsensually opted into seeing more “recommended” content from people you don’t follow (but who can be extorted out of payola for the privilege):

https://www.socialmediatoday.com/news/Twitter-Expands-Content-Recommendations/637697/

Musk sees Twitter as a publisher, not a social media site:

https://twitter.com/elonmusk/status/1604588904828600320

Which is why he’s so indifferent to the collateral damage from this payola/hostage scam. Yes, Twitter is a place where famous and semi-famous people talk to their audiences, but it is primarily a place where those audiences talk to each other — that is, a public square.

This is the Facebook death-spiral: charging the people you follow to reach you, and burying the things they say in a torrent of payola-funded spam. It’s the vision of someone who thinks of other people as things to use (to pump up your share price or market your goods to), not as people worthy of consideration.

As Terry Pratchett’s Granny Weatherwax put it: “Sin is when you treat people like things. Including yourself. That’s what sin is.”

Mastodon isn’t perfect, but its flaws are neither fatal nor permanent. The idea that centralized media is “easier” surely reflects the hundreds of billions of dollars that have been pumped into refining social media Roach Motels (“users check in, but they don’t check out”).

Until a comparable sum has been spent refining decentralized, federated services, any claims about the impossibility of making the fediverse work for mass audiences should be treated as unfalsifiable, motivated reasoning.

Meanwhile, Mastodon has gotten two things right that no social media giant has even seriously attempted:

I. If you follow someone on Mastodon, you’ll see everything they post; and

II. If you leave a Mastodon server, you can take both your followers and the people you follow with you.

The most common criticism of Mastodon is that you must rely on individual moderators who may be underresourced, incompetent, or malicious. This is indeed a serious problem, but it isn’t the same serious problem that Twitter has. When Twitter is incompetent, malicious, or underresourced, your departure comes at a dear price.

On Mastodon, your choice is: tolerate bad moderation, or click two links and move somewhere else.

On Twitter, your choice is: tolerate bad moderation, or lose contact with all the people you care about and all the people who care about you.

The interoperability mandates in the Digital Markets Act (and in the US ACCESS Act, which seems unlikely to get a vote in this session of Congress) only force the largest platforms to open up, but Mastodon shows us the utility of interop for smaller services, too.

There are lots of domains in which “dominance” shouldn’t be the sole criterion for whether you are expected to treat your customers fairly.

A doctor with a small practice who leaks all ten patients’ data harms those patients as surely as a hospital system with a million patients would. A small-time wedding photographer who refuses to turn over your pictures unless you pay a surprise bill is every bit as harmful to you as a giant chain that has the same practice.

As we move into the realm of smalltime, community-oriented social media servers, we should be looking to avoid the pitfalls of the social media bubble that’s bursting around us. No matter what the size of the service, let’s ensure that it lets us leave, and respects the end-to-end principle, that any two people who want to talk to each other should be allowed to do so, without interference from the people who operate their communications infrastructure.

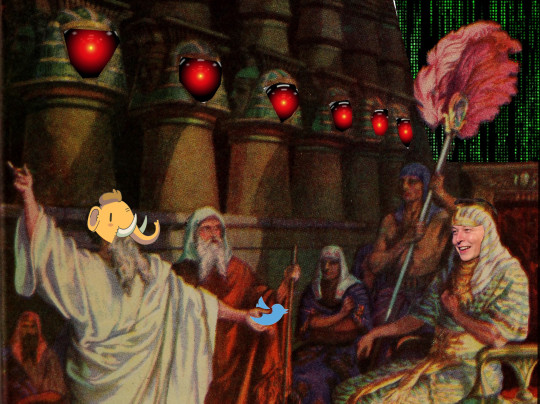

Image: Cryteria (modified), https://commons.wikimedia.org/wiki/File:HAL9000.svg, CC BY 3.0: https://creativecommons.org/licenses/by/3.0/deed.en

Heisenberg Media (modified), https://commons.wikimedia.org/wiki/File:Elon_Musk_-_The_Summit_2013.jpg, CC BY 2.0: https://creativecommons.org/licenses/by/2.0/deed.en

[Image ID: Moses confronting the Pharaoh, demanding that he release the Hebrews. Pharaoh’s face has been replaced with Elon Musk’s. Moses holds a Twitter logo in his outstretched hand. The faces embossed in the columns of Pharaoh’s audience hall have been replaced with the menacing red eye of HAL9000 from 2001: A Space Odyssey. The wall over Pharaoh’s head has been replaced with a Matrix ‘code waterfall’ effect. Moses’s head has been replaced with that of the Mastodon mascot.]

#pluralistic#e2e#end-to-end#freedom of reach#mastodon#interoperability#social media#twitter#economy#creator economy#artists rights#content moderation#como#right of exodus#let my tweeters go#exodus#right of exit#technological self-determination


Text

Social Media is Nice in Theory, but...

Something I cannot help but think about is how awesome social media can be (theoretically) and how much it sucks for the most part.

I am a twitter refugee. I came to tumblr after Elmo bought twitter and made the platform a right-wing haven. But, I mean... There is a general issue with pretty much all social media, right? Like, most people will hate on one platform specifically and stuff, while praising another platform. But let's be honest... They all suck. Just in different ways.

And the main reasons for them sucking are all the same, right? For one, there is advertising, and with that the need to make the platform advertiser-friendly. But then there is also just the impossibility of properly moderating a platform used by millions of people.

I mean, the advertisement stuff is already a big issue. Because... Sure, big platforms need big money because they are hosting just so much stuff in videos, images and what not. Hence, duh, they do need to get the money somewhere. And right now the only way to really make enough money is advertisement. Because we live under capitalism and it sucks.

And with this comes the need to make everything advertiser-friendly. On one hand this can be good, because it creates incentives for the platform to not host stuff like... I don't know. Holocaust denial and shit. The kinda stuff that makes most advertisers pull out. But on the other hand...

Well, we all know the issue: Porn bans. And not only porn bans, but also policing of anything connected to nude bodies. Especially nude bodies that are perceived to be female. Because society still holds onto those ideas that female bodies need to be regulated and controlled.

We only recently had a big crackdown on NSFW content even on sites that are not primarily advertiser-driven, like Gumroad and Patreon. Because... Well, folks are very interested in outlawing any form of porn. Often because they claim to want to protect children. The truth, of course, is that they often do quite the opposite. Driving everyone away from properly vetted websites means, on one hand, that kids are more likely to come across the really bad stuff. And on the other hand, well... The dingier the websites folks consume their porn on, the more likely it is that stuff like CP and snuff shows up there. Which then gets more attention this way.

But there is also the less capitalist issue of moderating content. Which is... kinda hard on a lot of websites. Of course, to save money a lot of the big social media platforms are not really trying, because they do not want to pay for proper moderators. But that makes it more likely for really bad stuff to happen. Like doxxing and what not.

I mean, like with everything: I do think that social media could be a much better place if only we did not have capitalism. But I also think that a lot about the way social media is constructed (with the anonymity and stuff, but also things like this dopamine rush when people like your stuff) will not just change if you get rid of capitalism.

#solarpunk#lunarpunk#social media#social networks#facebook#twitter#tiktok#anti capitalism#content moderation#fuck capitalism


Text

so mozilla is making a Mastodon instance which is fine but i really like their approach to moderation policies (emph. mine):

You’ll notice a big difference in our content moderation approach compared to other major social media platforms. We’re not building another self-declared “neutral” platform. We believe that far too often, “neutrality” is used as an excuse to allow behaviors and content that’s designed to harass and harm those from communities that have always faced harassment and violence. Our content moderation plan is rooted in the goals and values expressed in our Mozilla Manifesto — human dignity, inclusion, security, individual expression and collaboration. We understand that individual expression is often seen, particularly in the US, as an absolute right to free speech at any cost. Even if that cost is harm to others. We do not subscribe to this view. We want to be clear about this. We’re building an awesome sandbox for us all to play in, but it comes with rules governing how we engage with one another. You’re completely free to go elsewhere if you don’t like them.

yes yes yes yessss. i really wish platforms would make these kinds of considerations and try and avoid this idea of "neutrality".

neutral is just another way of upholding the status quo


Quote

The end state of a platform that allows hate speech but gives you the option to "hide" it is that hate speech proliferates. Mobs form. Assholes coordinate their abuse and use sock-puppets to boost their presence, and eventually this spills over into real-world harm.

Some (Probably) Worthless Thoughts About Content Moderation


Text

The Supreme Court on Monday grappled with knotty free speech questions as it weighed laws in Florida and Texas that seek to impose restrictions on the ability of social media companies to moderate content.

After almost four hours of oral arguments, a majority of the justices appeared skeptical that states can prohibit platforms from barring or limiting the reach of some problematic users without violating the free speech rights of the companies.

But justices from across the ideological spectrum raised fears about the power and influence of big social media platforms like YouTube and Facebook and questioned whether the laws should be blocked entirely.

Trade groups NetChoice and the Computer and Communications Industry Association, known as CCIA, say that both laws infringe upon the free speech rights of companies under the Constitution’s First Amendment by restricting their ability to choose what content they wish to publish on their platforms.

First Amendment free speech protections apply to government actions, not those by private entities, including companies.

"Why isn't that, you know, a classic First Amendment violation for the state to come in and say, 'We're not going to allow you to enforce those sorts of restrictions'?" asked liberal Justice Elena Kagan, in reference to the Florida law's content moderation provisions.

As Chief Justice John Roberts put it, because the companies are not bound by the First Amendment, "they can discriminate against particular groups that they don't like."

Some justices, however, suggested the laws might have some legitimate applications against other platforms or services, including messaging applications, which could mean the court stops short of striking them down.

The eventual ruling could lead to further litigation in lower courts as to whether the laws should be blocked. Both are currently on hold.

"Separating the wheat from the chaff here is pretty difficult," said conservative Justice Neil Gorsuch.

Fellow conservatives Clarence Thomas and Samuel Alito seemed most sympathetic to the states.

At one point, Alito appeared to openly mock the concept of content moderation.

"Is it anything more than a euphemism for censorship?" he asked.

The laws were enacted by the Republican-led states in 2021 after Twitter, Facebook and others banned former President Donald Trump after his effort to overturn the 2020 presidential election ended in his supporters storming the U.S. Capitol on Jan. 6, 2021.

This was before Twitter was taken over the following year by billionaire Elon Musk, who has allied himself with conservative critics of the platform and allowed various banned users, including Trump, to return.

Both laws seek to impose restrictions on content moderation and require companies to provide individualized explanations to users when content is removed.

The Florida law among other things prevents companies from banning public figures running for political office and restricts “shadow banning,” whereby certain user content is made difficult to find by other users. The state claims that such actions are a form of censorship.

The Texas law similarly prevents platforms from banning users based on the views they express. Each law requires the companies to disclose their moderation policies.

The states seek to equate social media companies with the telecommunications industry, which transmits speech but has no editorial input. These “common carriers” are heavily regulated by the government and do not implicate free speech issues.

As the argument session unfolded it appeared clear that justices were concerned the laws could apply well beyond the traditional social media giants to companies like Uber and Etsy that allow some user-created content.

Similarly, some social media companies, including Facebook, allow direct messaging. One of the biggest tech companies, YouTube owner Google, operates the Gmail email service.

Application of the laws against direct messaging or email services would not raise the same free speech concerns, and justices seemed hesitant about blocking the laws entirely.

"It makes me a little nervous," said conservative Justice Amy Coney Barrett.

The cases have a political edge, with President Joe Biden’s administration filing a brief backing the legal challenges and Trump supporting the laws.

In May 2022, after the New Orleans-based 5th U.S. Circuit Court of Appeals declined to put the Texas law on hold, the Supreme Court stepped in, preventing it from going into effect. At the time, four of the nine justices said the court should not have intervened at that stage.

The Florida measure was blocked by the Atlanta-based 11th U.S. Circuit Court of Appeals, prompting the state to appeal to the Supreme Court.

The challenges to the Texas and Florida laws are among several legal questions related to social media that the Supreme Court is currently grappling with.

A legal question not present in the case but lurking in the background is the legal immunity that internet companies have long enjoyed for content posted by their users. Last year, the court sidestepped a ruling on that issue.

Alito suggested the social media companies were guilty of hypocrisy in adopting a free speech argument now when the liability shield was premised on them giving free rein to users to post whatever they want.

He paraphrased the companies' arguments as: "It's your message when you want to escape state regulation. But it's not your message when you want to escape liability."

#us politics#news#nbc news#us supreme court#1st amendment#first amendment#us constitution#freedom of speech#free speech#content moderation#social media#2024


Text

By: Anna Davis

Published: Oct 23, 2024

Internet users should be able to choose what content they see instead of tech companies censoring on their behalf, a report said on Wednesday.

Author Michael Shellenberger said the power to filter content should be given to social media and internet users rather than tech firms or governments in a bid to ensure freedom of speech.

In his report Free Speech and Big Tech: The Case for User Control, Mr Shellenberger warned that if governments or large tech companies had power over the legal speech of citizens they would have unprecedented influence over thoughts, opinions and “accepted views” in society.

He warned that attempts to censor the internet to protect the public from disinformation could be abused and end up limiting free speech.

He wrote: “Regulation of speech on social media platforms such as Facebook, X (Twitter), Instagram, and YouTube has increasingly escalated in attempts to prevent ‘hate speech’, ‘misinformation’ and online ‘harm’.”

This represents a “fundamental shift in our approach to freedom of speech and censorship” he said, warning that legal content was being policed. He added: “The message is clear: potential ‘harm’ from words alone is beginning to take precedence over free speech.”

Mr Shellenberger’s paper is published today ahead of next week’s Alliance for Responsible Citizenship conference in Greenwich, where he will speak along with Kemi Badenoch MP and former minister Michael Gove. Free speech is one of the themes the conference will cover.

Explaining how his system of “user control” would work, Mr Shellenberger wrote: “When you use Google, YouTube, Facebook, X/Twitter, or any other social media platform, the company would be required to ask you how you want to moderate your content. Would you like to see the posts of the people you follow in chronological or reverse chronological order? Or would you like to use a filter by a group or person you trust?

“On what basis would you like your search results to be ranked? Users would have infinite content moderation filters to choose from. This would require social media companies to allow them the freedom to choose.”
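The article does not describe an implementation, so the following is only a hypothetical sketch in Python of what the described “user control” settings could look like: the user chooses chronological or reverse-chronological ordering of the accounts they follow and, optionally, a filter published by a group they trust. The names `UserSettings`, `label_filter`, and `build_feed`, and the idea of a label-based blocklist, are illustrative assumptions rather than anything proposed in the report.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional, Set


@dataclass
class Post:
    author: str
    timestamp: int
    labels: Set[str] = field(default_factory=set)  # hypothetical labels from third-party labelers


@dataclass
class UserSettings:
    order: str = "reverse_chronological"  # or "chronological"
    trusted_filter: Optional[Callable[[Post], bool]] = None  # returns True to keep a post


def label_filter(blocked_labels: Iterable[str]) -> Callable[[Post], bool]:
    """Build a filter from a label blocklist published by a group the user trusts (hypothetical)."""
    blocked = frozenset(blocked_labels)

    def keep(post: Post) -> bool:
        return not (post.labels & blocked)

    return keep


def build_feed(posts: List[Post], following: Set[str], settings: UserSettings) -> List[Post]:
    """Apply only the user's own choices: followed accounts, optional trusted filter, chosen order."""
    feed = [p for p in posts if p.author in following]
    if settings.trusted_filter is not None:
        feed = [p for p in feed if settings.trusted_filter(p)]
    if settings.order == "chronological":
        feed.sort(key=lambda p: p.timestamp)
    elif settings.order == "reverse_chronological":
        feed.sort(key=lambda p: p.timestamp, reverse=True)
    else:
        raise ValueError(f"unknown order: {settings.order!r}")
    return feed


if __name__ == "__main__":
    posts = [Post("ann", 1), Post("ben", 3, {"spam"}), Post("cat", 2)]
    following = {"ann", "ben", "cat"}
    print([p.author for p in build_feed(posts, following, UserSettings())])
    picky = UserSettings(order="chronological", trusted_filter=label_filter({"spam"}))
    print([p.author for p in build_feed(posts, following, picky)])
```

The design choice here is that the platform only executes settings the user picked; the filter itself is an ordinary function, so in this sketch any group could publish one and users could swap between them.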

--

By: Michael Shellenberger

“Speech is fundamental to our humanity because of its inextricable link to thought. One cannot think freely without being able to freely express those thoughts and ideas through speech. Today, this fundamental right is under attack.”

In this paper, Michael Shellenberger exposes the extent of speech censorship online and proposes a ‘Bill of Rights’ for online freedom of speech that would restore content moderation to the hands of users.

Summary of Research Paper

In this paper:

- The War on Free Speech
- Giving users control of moderation
- A Bill of Rights for Online Freedom of Speech

The Right to Speak Freely Online

The advent of the internet gave us a double-edged sword: the greatest opportunity for freedom of speech and information ever known to humanity, but also the greatest danger of mass censorship ever seen.

Thirty years on, the world has largely experienced the former. However, in recent years, the tide has been turning as governments and tech companies become increasingly fearful of what the internet has unleashed. In particular, regulation of speech on social media platforms such as Facebook, X (formerly Twitter), Instagram, and YouTube has increased in attempts to prevent “hate speech”, “misinformation”, and online “harm”.

Pending regulatory legislation across the West represents a fundamental shift in our approach to freedom of speech and censorship. The proposed laws explicitly provide a basis for the policing of legal content, and in some cases for the deplatforming or even prosecution of its producers. Beneath this shift is an overt decision to eradicate speech deemed to have the potential to “escalate” into something dangerous: essentially prosecuting in anticipation of crime rather than its occurrence. The message is clear: potential “harm” from words alone is beginning to take precedence over free speech, neglecting the foundational importance of the latter to our humanity.

This shift has also profoundly altered the power of the state and Big Tech companies in society. If both are able to moderate which views are seen as “acceptable” and have the power to censor legal expressions of speech and opinion, their ability to shape the thought and political freedom of citizens will threaten the liberal, democratic norms we have come to take for granted.

Citizens should have the right to speak freely—within the bounds of the law—whether in person or online. Therefore, it is imperative that we find another way, and halt the advance of government and tech regulation of our speech.

Network Effects and User Control

There is a clear path forward which protects freedom of speech, allows users to moderate what they engage with, and limits state and tech power to impose their own agendas. It is, in essence, very simple: if we believe in personal freedom with responsibility, then we must return content moderation to the hands of users.

Social media platforms such as Facebook, X, Instagram, and YouTube could offer users a wide range of filters regarding content they would like to see, or not see. Users can then evaluate for themselves which of these filters they would like to deploy, if any. These simple steps can allow individuals to curate the content they wish to engage with, without infringing on another’s right to free speech.
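The paper does not describe any implementation, so the following is only a minimal sketch of what a user-chosen feed could look like, assuming each post carries visible classification labels and the user picks either no filter or one filter plus a chronological or reverse-chronological order. The names `Post`, `hide_labels` and `build_feed` are hypothetical and are not any platform's API.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Optional


@dataclass(frozen=True)
class Post:
    author: str
    text: str
    created: datetime
    # Hypothetical classifications, visible to the user rather than secret.
    labels: frozenset = frozenset()


# A filter decides whether a post is shown; passing None means "no filter".
Filter = Callable[[Post], bool]


def hide_labels(blocked: set) -> Filter:
    """Hypothetical filter: hide posts carrying any label the user chose to block."""
    return lambda post: not (post.labels & blocked)


def build_feed(posts: list, newest_first: bool = True,
               chosen_filter: Optional[Filter] = None) -> list:
    """Apply only the user's own choices: an optional filter, then time ordering."""
    visible = [p for p in posts if chosen_filter is None or chosen_filter(p)]
    return sorted(visible, key=lambda p: p.created, reverse=newest_first)


posts = [
    Post("a", "hello", datetime(2024, 1, 1)),
    Post("b", "buy now", datetime(2024, 1, 3), frozenset({"spam"})),
    Post("c", "news", datetime(2024, 1, 2)),
]
for post in build_feed(posts, chosen_filter=hide_labels({"spam"})):
    print(post.author, post.text)
```

Because the filter is a value the user supplies, "no filter" and "filter by a group I trust" are simply different arguments to the same function; nothing is removed from the platform, only from that user's view.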

User moderation also provides tech companies with new metrics with which to target advertisements and should increase user satisfaction with their respective platforms. Governments can also return to the subsidiary role of fostering an environment in which people flourish and can freely exchange ideas.

These proposals turn on the principle of regulating social media platforms according to their “network effects”, which are generated when a product or service delivers greater economic and social value the more people use it. Many network effects, including those realised by the internet and social media platforms, are public goods: that is, a product or service that is non-excludable, where everyone benefits equally and enjoys access to it. As social media platforms are indispensable for communication, the framework that regulates online discourse must take into account the way in which these private platforms deliver a public good in the form of network effects.

A Bill of Rights for Online Freedom of Speech

This paper provides a digital “Bill of Rights”, outlining the key principles needed to safeguard freedom of speech online:

Users decide their own content moderation by choosing “no filter”, or content moderation filters offered by platforms.

All content moderation must occur through filters whose classifications are transparent to users.

No secret content moderation.

Companies must keep a transparent log of content moderation requests and decisions.

Users own their data and can leave the platform with it.

No deplatforming for legal content.

Private right of action is provided for users who believe companies are violating these legal provisions.

If such a charter were embraced, the internet could once again fulfil its potential to become the democratiser of ideas, speech, and information of our generation, while giving individuals the freedom to choose the content they engage with, free from government or tech imposition.

--

youtube

When people ask me, you know, to make the case for free speech, I sort of smile because, of course, I'm a little disoriented by the experience of needing to defend free speech.

Literally, just a few years ago, I would have thought that anybody who had to defend free speech was sort of cringe, like, that's silly. Like, who would need to defend free speech? But here we find ourselves having to defend all of the pillars of civilization: free speech, law and order, meritocracy, cheap and abundant energy, food and housing. These all seem obviously to be the pillars of a free, liberal, democratic society, but they're clearly not accepted as such. And so you get the sense that there has been a takeover by a particularly totalitarian worldview which is suggesting that something comes before free speech.

Well, what is that thing? That thing they say is "reducing harm." And so what we're seeing is safety-ism emerging out of the culture and being demanded and enforced by powerful institutions in society.

I think ARC has so much promise to reaffirm the pillars of civilization. It's not complicated. It's freedom of speech. It's cheap and abundant energy, food and housing, law and order. It's meritocracy.

If we don't have those things, we do not have a functioning liberal, democratic civilization. And that means that it needs to do the work to make the case intellectually, communicate it well in a pro-human, pro-civilization, pro-Western way.

The world wants these things. We are dealing with crises, you know, chaos on our borders, both in Europe and the United States, because people around the world want to live in a free society. They want abundance and prosperity and freedom. They don't want to live in authoritarian or totalitarian regimes.

So we know what the secret ingredients of success are. We need to reaffirm them because the greatest threats are really coming from within. And that means that the reaction and the affirmation of civilizational values also needs to come from within.

#Michael Shellenberger#free speech#freedom of speech#bill of rights#content moderation#censorship#social media#hate speech#misinformation#online harm#religion is a mental illness

2 notes


Link

A different culture

What I missed about Mastodon was its very different culture. Ad-driven social media platforms are willing to tolerate monumental volumes of abusive users. They’ve discovered the same thing the mainstream media did: negative emotions grip people’s attention harder than positive ones. Hate and fear drive engagement, and engagement drives ad impressions.

Mastodon is not an ad-driven platform. There is absolutely zero incentive to let awful people run amok in the name of engagement. The goal of Mastodon is to build a friendly collection of communities, not an attention-leeching hate mill. As a result, most Mastodon instance operators have come to a consensus that hate speech shouldn’t be allowed. That alone sets it far apart from Twitter, but wait, there’s more. When it comes to other topics, what is and isn’t allowed is decided on an instance-by-instance basis, so you can choose your own adventure.

You might be wondering, how can you maintain a friendly atmosphere if anyone can just start their own Mastodon instance and spew hate? This is the part I misunderstood when I said Mastodon wouldn’t scale.

The accountability economy

Most Mastodon servers run on donations, which creates a very different dynamic. It is very easy for a toxic platform to generate revenue through ad impressions, but most people are not willing to pay hard-earned money to get yelled at by extremists all day. This is why Twitter’s subscription model will never work. With Mastodon, people find a community they enjoy and are thus happy to donate to maintain it. That adds a further dynamic.

Since Mastodon is basically a network of communities, moderators are expected to be responsible for their own community, lowering the burden for everyone. Let’s say you run a Mastodon instance and a user of another instance has become problematic towards your users. You report them to their instance’s moderators, but the moderators decline to act. What can you do? Well, a lot, actually.

1. You can block the user’s account from communicating with your instance, so their posts cannot reach anyone on your instance.

2. You can block the user’s entire instance from communicating with yours, so that nobody on that instance can communicate with anyone on your own.

Now, #2 may sound excessive and overly dramatic, but it’s what makes Mastodon so beautiful. Let me explain.

Instead of expecting instance moderators to moderate incoming toxicity from thousands of other instances, instance owners can be held heavily accountable for their own users. If an instance is blocked (de-federated) due to insufficient moderation, everyone on that instance can no longer reach anyone on any instance that has blocked it, which sucks. This not only degrades the experience of users on the de-federated instance, but also gives them a feeling of guilt by association. But, Marcus, how is collateral damage a good thing at all, you may ask?
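To make the two blocking options and their collateral effect concrete, here is a toy model, assuming handles of the form `user@domain` and one block list per instance for accounts and one for whole domains. `Instance`, `accepts_from` and `reachable_audience` are hypothetical names; real Mastodon servers implement blocking through their own moderation tools, and this is not that code.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Toy model of one server's block lists (hypothetical, not Mastodon's API)."""
    domain: str
    blocked_accounts: set = field(default_factory=set)  # option 1: single accounts
    blocked_domains: set = field(default_factory=set)   # option 2: whole instances

    def accepts_from(self, handle: str) -> bool:
        """Return True if a post from `handle` (user@domain) may reach this instance."""
        _, _, domain = handle.partition("@")
        if domain in self.blocked_domains:
            return False  # de-federated: nobody on that instance gets through
        return handle not in self.blocked_accounts


def reachable_audience(sender: str, instances: list) -> list:
    """Domains whose users can still see posts from `sender`."""
    return [i.domain for i in instances if i.accepts_from(sender)]


home = Instance("home.example", blocked_accounts={"troll@lax.example"})
strict = Instance("strict.example", blocked_domains={"lax.example"})
open_server = Instance("open.example")

# A well-behaved user on the lax instance still loses strict.example:
print(reachable_audience("friend@lax.example", [home, strict, open_server]))
```

The last line shows the "guilt by association" cost: the innocent account keeps the instances that only blocked the troll, but loses every instance that blocked its whole domain, which is what gives users a reason to pressure their moderators or move.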

Democratic self-regulation

Well, Mastodon users have the ability to transfer their accounts between instances. If your instance starts welcoming extremists, you can move. If your instance starts getting de-federated for abuse, you can move. Many of us, myself included, left Twitter due to the proliferation of extremism. This had a huge personal cost, as there is no other Twitter. On Mastodon, there’s always another instance. You can move to another instance, have the same features, keep all your followers, and still communicate with people on other instances via the fediverse.

The ability for users to choose whether they wish to be collateral damage is what makes Mastodon work. If an instance is de-federated due to extremism, the users can pressure their moderators to act in order to regain federation. Otherwise, they must decide whether to go down with the ship or simply move. This creates a healthy, self-regulating ecosystem: once an instance starts to get de-federated, reasonable users move their accounts, leaving behind unreasonable ones, which further justifies de-federation and leads more and more instances to de-federate the offending one.

Since instance owners are either running their instance for community purposes or for profit via donations, user loss is very harmful. As such, there is an economy of accountability which shifts moderation to the source rather than the destination. It’s in an instance owner’s best interest to maintain the friendliest possible community, as this is what attracts new users and keeps existing ones. With instance moderators held accountable for their own instances, the moderation burden on other instances is significantly reduced. It also creates a double-moderation system: if some badness does slip past the source instance’s moderators, moderators on the recipient instances can pick up the slack.

Obviously, de-federation can and does happen for asinine reasons. But this is also a good thing. Recently, the instance I use was blocked by a few smaller instances for working with the Cybersecurity and Infrastructure Security Agency (CISA). CISA’s goal is to improve cybersecurity and protect organizations from hackers, but it’s also part of the Department of Homeland Security (DHS). DHS is also responsible for Immigration and Customs Enforcement (ICE), an agency with a track record of rampant human rights abuses. Understandably, people are hostile towards anyone working with DHS. The best we can do is explain that CISA is not ICE; it is a benevolent agency that serves to help us. If people still choose to maintain hostilities towards us, then it is for the best that they block our instance, because clearly our views are fundamentally incompatible and will only lead to further unfriendliness on both sides.

Happy to be wrong

Having spent time on Mastodon, I now realize how hilariously wrong I was about how moderation would work. I was seeing Mastodon through the lens of Twitter, rather than as a different culture with different technology. I’m now fairly confident in saying Mastodon is friendlier than Twitter and will remain so, regardless of who joins and how many.

I enjoy that everyone is on the same page about not having rampant extremism. I can’t believe that is even a thing that has to be said in this day and age, but I digress (no really, I don’t). But more than that, I like the choose-your-own-adventure nature of Mastodon. If something isn’t blocked and you wish it was, you can move to an instance where it is. On the flip side, if something is blocked and you wish it wasn’t, you can move to an instance where it isn’t.

The crucial mistake of social media was trying to force people with wildly incompatible views to coexist in the same space. In real life, I can choose who I associate with. Now, I’m most certainly not looking for an echo chamber. I need my views to be questioned and debated, but it must be by people capable of civil discourse. We should discuss, we should reassess, we should admit when we’re wrong. That is not the status quo online.

Traditional social media does not promote diversity of thought; it is, for all intents and purposes, an echo chamber, albeit one that encourages piling on passers-by who hold different opinions. Mastodon feels much closer to hanging out with reasonable friends and acquaintances, whereas Twitter is equivalent to having 15 racist drunk uncles assigned to follow you wherever you go.

I really hope federated networks can be the future of social media.

#opinions#article#mastodon#content moderation#internet#Social media#fediverse#cultural critique#cultural criticism

15 notes


Text

#there is a way. social media is not like live tv which has predetermined commercial schedules#so social media can simply implement moderating buffer time to screen all media contents.#social media simply has to employ an army of screeners to kill off live streaming of violence.#and the internet domain controller can also shut down a site that’s streaming directly. they just have to implement and act#taiwantalk#Israel#Hamas#live streaming of execution

3 notes


Text

.

#sorry i do find the current fic being fed into ai thing is getting a bit overblown sorry#like i understand why people are uncomfortable#and i would prefer that we all get a bit more control over our online data and how it's stored and processed#and there probably should be some talk about consenting to having your writing used or whatever#but it's getting a bit moral panicky now ngl#like i really dont think this is the great issue of our time#also im 90% sure that chatGPT doesn't learn from conversations/submissions#at least not in a straightforward way#it's relying on its own old database not what you give it#anyway my real issue with ai is the issue of content moderation and the exploitation of moderators#which is an issue that goes far beyond just ai and is inherent to all social media#i would much rather scroll through endless posts about that issue than fearmongering abt random teenagers#who use ai to write boring bad endings for fics they like#as if chatGPT will produce anything worth reading anyway

2 notes


Text

Mandatory use of clear names for a peaceful Internet?

On our blog, we report on network policy, data protection and online security. We already covered the real-name requirement in a blog post last year. In view of the current debate in Austria, we have decided to take another, closer look at the topic. This article is therefore intended to provide an overview of the current lines of discussion, empirical findings and…


#clear name requirement#community platform#content moderation#online community#social media#user verification

0 notes

Text

Uncover the Influence of Social Media Moderation: Explore the transformative impact of moderation tools on your brand image. Gain insights into various moderation types, and discover essential tips to ensure a thriving online presence that not only stays relevant but also seamlessly aligns with your brand identity.

Ready to harness the full potential of social media moderation? Dive into a journey where strategic moderation not only shapes perception but also propels your brand towards unparalleled success.

#social media#ugc#user-generated content#tumbler#tools#social media tools#content moderation#insights#facebook#instagram#linkedin#influencers#digital creator#branding#technology#tech

0 notes

Text

Keeping it Clean: The Role of Content Moderation

Explore the role content moderation plays in maintaining the integrity and safety of online platforms. This insightful work dives into the multifaceted responsibilities of content moderators, examining their role as guardians of digital communities.

"Keeping it Clean" illuminates the essential role content moderation plays in fostering inclusive and respectful digital spaces for users around the globe.

0 notes

Text

posted self-harm harm-reduction advice on twitter and got locked for encouraging self-harm

1 note
