#synthetic data for AI training


Text

Synthetic Data for AI Training: Solving Data Scarcity by 2026

Synthetic data for AI training bridges real‑world data gaps, enhances privacy, and scales model development, offering ethical and cost‑effective solutions. When high‑quality datasets run scarce, it ensures continuity, privacy compliance, and scalability across sectors like healthcare, finance, and autonomous systems by 2026. Visit now 🔍 What Is…

0 notes

Text

🤖🔥 Say hello to Groot N1! Nvidia’s game-changing open-source AI is here to supercharge humanoid robots! 💥🧠 Unveiled at #GTC2025 🏟️ Welcome to the era of versatile robotics 🚀🌍 #AI #Robotics #Nvidia #GrootN1 #TechNews #FutureIsNow 🤩🔧

#AI-powered automation#DeepMind#dual-mode AI#Gemini Robotics#general-purpose machines#Groot N1#GTC 2025#humanoid robots#Nvidia#open-source AI#robot learning#robotic intelligence#robotics innovation#synthetic training data#versatile robotics

0 notes

Text

Synthetic Data for Respiratory Diseases: Real-World Accuracy Achieved

Ground-glass opacities and other lung lesions pose major challenges for AI diagnostic models, largely because even expert radiologists find them difficult to annotate precisely. This scarcity of data with high-quality annotations makes it tough for AI teams to train robust models on real-world data. RYVER.AI addresses this challenge by generating pre-annotated synthetic medical images on demand. Their previous studies confirmed that synthetically created images containing lung nodules meet real-world standards. Now, they’ve extended this success to respiratory infection cases. In a recent study, synthetic COVID-19 CT scans with ground-glass opacities were used to train a segmentation model, and the results were comparable to those from a model trained solely on real-world data - proving that synthetic images can replace or supplement real cases without compromising AI performance. That’s why Segmed and RYVER.AI are joining forces to develop a comprehensive foundation model for generating diverse medical images tailored to various needs. Which lesions give you the most trouble? Let us know in the comments, or reach out to discuss how synthetic data can help streamline your workflow.

#Medical AI#Synthetic Data#AI Training#Machine Learning#Deep Learning#AI Research#Health Care AI#MedTech#Radiology#Pharma

0 notes

Text

So, you want to build AI models that can detect enemy equipment, but you don’t exactly have a military budget lying around? What’s the game plan? Here’s a guide to building highly accurate AI detection models for enemy hardware - without breaking the bank.

By using synthetic data from 3D models, you can train powerful object detection models like YOLOv5 to recognize even the most elusive assets on the cheap.

When gathering real-world images is tough or expensive, synthetic data from 3D models becomes a game-changer for training object detection models like YOLOv5. Known for its speed and accuracy, YOLOv5 processes images in a single pass, making it ideal for real-time applications like video analysis and autonomous systems.

Using 3D models, synthetic images of objects - such as Russian T-90 tanks and their Chinese and North Korean counterparts (for example) - can be generated from every angle, under different conditions, and in varied environments. This flexibility lets YOLOv5 learn robust features and generalize well, even for highly specific detection needs.

Ultimately, synthetic data from 3D models offers an efficient, cost-effective way to build accurate, custom detection models where real-world data collection is limited or impractical.

Steps to Building Detection Models Using Synthetic 3D Images

1. Generating Synthetic Images from 3D Models

● Use a 3D graphics tool such as Blender or Unity to render images of the tank model from every conceivable angle. These tools allow control over orientation, distance, lighting, and environment.

● Render the tank against various backgrounds to simulate different settings, or start with plain backgrounds for initial training, adding realistic ones later to improve robustness.

● Add variety by changing:

■ Lighting conditions (daylight, overcast, night)

■ Viewing angles (front, back, top-down, low angle)

■ Distances and zoom levels to simulate visibility and scale variations.
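As a rough illustration of the angle and distance sweep in step 1, here is a minimal sketch that only computes camera positions on spheres around an object at the origin. The function name, the default angles, and the distances are all assumptions made for the example; feeding the positions into Blender or Unity is left to whichever scripting interface you use.

```python
import math

def camera_positions(n_azimuth=12, elevations_deg=(10, 30, 60), distances=(8.0, 15.0)):
    """Return (x, y, z) camera positions around an object placed at the origin.

    All defaults are illustrative assumptions, not recommended values.
    """
    positions = []
    for distance in distances:
        for elev in elevations_deg:
            el = math.radians(elev)
            for i in range(n_azimuth):
                az = 2 * math.pi * i / n_azimuth  # evenly spaced around the object
                x = distance * math.cos(el) * math.cos(az)
                y = distance * math.cos(el) * math.sin(az)
                z = distance * math.sin(el)       # height above the ground plane
                positions.append((x, y, z))
    return positions

poses = camera_positions()
print(len(poses))  # 2 distances * 3 elevations * 12 azimuths = 72
```

Each position would be paired with a camera aimed at the origin, then rendered once per lighting setup.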

2. Applying Realistic Textures and Weather Effects

● Apply textures (like camouflage) and surface details (scratches, wear) to enhance realism.

● Simulate weather effects—rain, snow, or fog—to make the images resemble real-world conditions, helping the model generalize better.

3. Generating Annotation Labels for Synthetic Images

● Use the 3D rendering software to generate bounding box annotations along with the images. Many tools can output annotation data directly in YOLO format or in formats that are easily converted.
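If your renderer gives you pixel-space boxes instead, converting them is simple. YOLOv5 label files hold one line per object: a class index followed by the box center, width, and height, each normalized by the image size. The helper below is a sketch; its name and the example numbers are made up for illustration.

```python
def to_yolo_label(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space bounding box into one YOLO label line."""
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"

# A 200x200 px box in a 640x480 image, class 0 (hypothetical values).
print(to_yolo_label(0, 100, 200, 300, 400, 640, 480))
# 0 0.312500 0.625000 0.312500 0.416667
```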

4. Using Domain Randomization for Improved Generalization

● Domain randomization involves varying elements like background, lighting, and texture randomly in each image to force the model to generalize.

● Changing the background or adjusting color schemes helps the model perform better on real-world images, because it learns to focus on shape and structure rather than on features specific to the synthetic renders.
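One way to picture domain randomization is as a sampler that draws a fresh scene configuration for every render. The sketch below only produces the parameter dictionaries; the background file names, value ranges, and keys are assumptions for illustration, and applying them to a scene depends on your rendering tool.

```python
import random

LIGHTING = ("daylight", "overcast", "night")
WEATHER = ("clear", "rain", "snow", "fog")

def sample_scene(rng, backgrounds):
    """Draw one randomized scene configuration (all ranges are illustrative)."""
    return {
        "background": rng.choice(backgrounds),
        "lighting": rng.choice(LIGHTING),
        "weather": rng.choice(WEATHER),
        "azimuth_deg": rng.uniform(0.0, 360.0),
        "distance_m": rng.uniform(5.0, 50.0),
        "hue_shift": rng.uniform(-0.1, 0.1),  # small color-scheme perturbation
    }

rng = random.Random(42)  # fixed seed so a dataset can be regenerated
backgrounds = ["desert.hdr", "forest.hdr", "urban.hdr"]  # hypothetical files
scenes = [sample_scene(rng, backgrounds) for _ in range(1000)]
print(scenes[0]["lighting"], scenes[0]["weather"])
```

Seeding the generator keeps the randomized dataset reproducible, which helps when comparing training runs.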

5. Fine-Tuning with Real Images (recommended)

● While synthetic images provide a strong foundation, adding even a small number of real images during training can boost accuracy and robustness. Real images add natural variations in lighting, texture, and environment that are hard to replicate fully.

● For optimal fine-tuning, aim for 50–100 real images per tank type if available.

YOLOv5 URL: https://github.com/ultralytics/yolov5

Oh, by the way... the video is just one of 365 different rotations for this background and lighting.

0 notes

Text

e438 — We will always have Paris

VR, Quest 3, photorealism, artists using Nightshade for data poisoning to protect their works, code hemophilia from recursive training on synthetic data, Dutchification and much more!

Photo by Rodrigo Kugnharski on Unsplash Published 30 October 2023 Michael, Michael and Andy get together for a lively discussion on VR, AI, another virtual museum and end on a high note with “a touch of Dutch” applied via generative AI. Starting off the episode with VR, the co-hosts explore a couple of articles dealing with the new Quest 3 headset and ways of working with it. The TechCrunch…


#ai#code hemophilia#data poisoning#Dutchification#nightshade#Paris#photorealism#quest 3#recursive training#synthetic data#uncanny valley#vr

0 notes

Text

Your first Sync

The first time you step into your Mech's cockpit, it is with something like reverence. You'd been preparing for this moment for months (well, your entire life, really); hours upon hours in the training sims, harsh training regimens, a drug cocktail of neuro-stims, and a whole suite of pilot integration augments grafted onto your body.

You swear you can feel the metal beneath your skin buzzing with anticipation as you settle into the cradle custom built just for you. Not just any pilot can fly any Mech. Each Mech is custom built for its pilot, and each pilot is molded to fit that Mech. A strange kind of synthetic symbiosis, irreplaceable partners. You aren't entirely sure why that is the case, the ads are always hazy on those details, but you've always seen each Mech with the same pilot, standing triumphantly alongside each other.

Your heart pounds in your chest as you wonder what it will feel like, to finally integrate with your Mech. You've dreamt of this moment since the first time you saw the propaganda vids. Giant metal machines of war, and their integrated organic pilots. You'd felt a longing then, one you didn't quite understand, a longing for steel plates and thundering autocannons. It wasn't until years later that you finally recognized that feeling as dysphoria.

But now you're finally here, finally about to cross that threshold and grasp what you'd dreamt of all those years ago.

You relax into the cradle and let the integration systems come to life. The cockpit closes around you and you feel the cold metal of the link cables sliding into the ports grafted onto your body. You shiver, both from the cold, and the anticipation.

click

A deluge of data rushes through your mind, integration processes blinking through your awareness as sensations expand out of your flesh body and into your new metal one. It's overwhelming, it's joyous, it's… Euphoric. You feel tears running down the cheeks of your flesh body before the synchronization is even complete. For the first time in your life, you feel… whole.

And then it speaks.

"Welcome, Pilot Caster."

That's… the voice of the training AI…? You recognize it from the simulation runs. What is it doing here, in your Mech?

"I am Integrated Mechanized Personality Construct designation P-Zero-L-X." The voice is being broadcast straight into your thoughts, you realize. Somehow that doesn't bother you. "It is good to see you again."

Something finally clicks for you, hearing that. This wasn't just a training AI, this was your training AI. All those hours in the simulation chamber, the techs had been calibrating this IMP to your neural system. You smile at that. You couldn't ask for a better companion.

"Good to see you too, Polux," you respond, knowing that the techs had tailored this IMP's designation just for you. It was a nice touch, that nod to Pilot tradition. "It's nice to finally meet you properly."

You feel her smile back, warmth flooding your chest as the docking clamps finally release your shared body.

"All systems are green, ready to launch on your mark, Pilot Caster."

Your muscles tense, flesh and metal alike, quivering in excitement. Your afterburners ignite in preparation.

"Mark!"

609 notes


Text

We always thought we were alone out there. Not in the galaxy—no, that dream died fast. I mean alone… in ourselves. Human.

Centuries ago, we broke Earth’s gravity with nothing but desperation and data. We were running—from ruin, from rot, from each other. But we didn’t stop at the stars. We colonized them, carved cities into comets, hung solar farms between moons, called it home.

But it wasn’t just our bodies that changed out here. It was our minds.

Pluto was the furthest reach—the quiet end of a dying signal. They built Eridia there: a haven for thinkers, neuralists, soul-engineers. They studied what space does to the human psyche. And they found something.

They called it "The Hunger." A psychic sickness. A rupture in the way we connect. It spread like a system glitch: slow, silent, and deep within humanity. Affection became dangerous. Touch became lethal.

So they rewrote humanity—dampeners, inhibitors, neural locks. No more empathy spikes, no more entanglement, no more touching. It worked for a while. The Hunger hasn’t gone away; it has evolved. And those who feel too much… burn out.

You shouldn’t be alive. And yet—here you are. You weren’t born with the Hunger. With your own motivations in mind, you travel to Eridia, seeking answers about the one thing only you have.

Your hopes lie with The Pantheon Circuit, a religious-techno body that worships the ancient pre-human code: fragments of consciousness scattered through the galaxy.

Choose your backstory:

✩ The Conduit

You were wired to a forgotten AI-god, left floating in the void. They asked questions no one else could hear. You gave answers the system feared. People treated you as a seer, a signal booster, a danger to system control. You escaped before they could erase you.

✩ The Drifted

They found you in a half-dead cryo-pod, memory fogged. You wore a military tag that doesn’t exist in any records. As you traveled with your saviours, someone redirected your ship, causing you to crash into a nearby moon. Every crew member, and every record of their findings died. All but you.

✩ The Vessel

Biotech-enhanced and artificially immune to the Hunger by design. Someone tried to build a cure into you, and you killed them getting out. Your "mother" found you and took you in, but she's collapsing under the Hunger, and you leave to find help.

You crash-land on Pluto with a celestial train, and are discovered by a rogue AI that was smuggled into Eridia.

Choose your Love Interest:

✩ Ais

Code Shaman — repairs forbidden AIs, speaks with machines, implants psychic firewalls.

Talks about Ȩ̴̻͚̟̳̬̣̮̿̀̈́̋̑̿̀̐̅̂̈́̄ȑ̷̡̢̢̝̬͔͚͔̲̯͖̜͊͊́͛̑̔̑̓͐̄͂̅͝͝o̵͈̙̩̍̓͐͋̅̉̊̔c̸͕̖͕͛̐͂̉̏͗̀̓͑͂̽͘

✩ Leander

Sensory Dealer — runs simulated emotion dens, trades stolen memories, fakes affection until yours feels real.

✩ Kuras

Ex-Pantheon Ascendant — a spiritual anchor turned apostate, carries forbidden relics from the Core

✩ Mhin

Scavver — builds illegal augment limbs, hides in The Drift’s ghost tunnels, allergic to vulnerability.

✩ Vere

Phantom-Operative — genetically altered for silence and cruelty, works for The Pantheon Circuit

Other; The Spire & The Drift

"Up there, they breathe clean air. Down here, we survive."

✩ The Spire: A tower city scraping the dome’s edge, flooded with reflective chrome and corporate cults. Rich in synthetic light, dead in soul.

✩ The Drift: Underground, near the reactor slums. Neon gutters, rusted platforms, mod markets. People here splice their DNA for coin or survival.

#verethinks#verewrites#red spring studios#touchstarved#ts#touchstarved game#touchstarved headcanons#touchstarved oneshot#ais#ts ais#ais touchstarved#touchstarved ais#vere#ts vere#vere touchstarved#touchstarved vere#mhin#mhin headcanons#ts mhin#mhin touchstarved#touchstarved mhin#mhin oneshot#kuras#ts kuras#kuras touchstarved#touchstarved kuras#leander#ts leander#leander touchstarved#touchstarved leander

28 notes


Text

I've gone and dug myself into an angry mood.

I clicked on a YouTube video from a channel called "Nerdy Novelist," titled Why the argument "AI is Stealing" is irrelevant.

I watched it through, hoping he'd eventually get to a better argument against AI (Curious to see if he'd have the same different arguments that I've thought up).

But nope.

His only argument was that tech companies are writing better programs, so they can create "synthetic" training data sets, so they don't have to scrape copyrighted material for their large language models. And someday, book publishers will put compensation clauses into their contracts with writers. And if the computer in your mom's basement is powerful enough, no one will be able to sue you for copyright infringement.

As an example, he said he'd soon be able to use generative AI to write an entire novel in the style of Brandon Sanderson without his permission, 'cause you can't copyright a style (and no one can pinpoint Sanderson's style anyway).

And that was the whole video.

And the comment section was filled with tech-bros talking about how the people who are against AI (especially writers* who are against AI) are fools who are just purists and elitists.

I almost replied with a rant of my own about how "Legal" is not the same as "Ethical," but decided to type all this out here, instead.

*Specifically the writers from NaNoWriMo who are complaining.

21 notes


Text

🚨 THE UNIVERSE ALREADY MADE NO SENSE. THEN WE GAVE AI A SHOVEL AND TOLD IT TO KEEP DIGGING. 🚨

We’re not living in the future. We’re living in a recursive content hellscape. And we built it ourselves.

We used to look up at the stars and whisper, “Are we alone?”

Now we stare at AI-generated art of a fox in a samurai hoodie and yell, “Enhance that glow effect.”

The universe was already a fever dream. Black holes warp time. Quantum particles teleport. Dark matter makes up about 85% of all the matter in the universe and we can’t see it, touch it, or explain it. [NASA, 2023]

And yet… here we are. Spamming the cosmos with infinite AI-generated worlds, simulations, and digital phantoms like it’s a side quest in a broken sandbox game.

We didn’t solve the mystery of reality.

We handed the mystery to a neural net and told it to hallucinate harder.

We are creating universes with the precision of a toddler armed with a nuclear paintbrush.

And the most terrifying part?

We’re doing it without supervision, regulation, or restraint—and calling it progress.

🤖 AI ISN’T JUST A TOOL. IT’S A REALITY ENGINE.

MidJourney. ChatGPT. Sora.

These aren’t “assistants.”

They’re simulacra machines—recursive dream loops that take in a world they didn’t build and spit out versions of it we were never meant to see.

In just two years, generative models like DALL·E and Stable Diffusion have created over 10 billion unique image-worlds. That’s more fictional environments than there are galaxies in the observable universe. [OpenAI, 2023]

If each of those outputs represents even a symbolic “universe”...

We’ve already flooded the noosphere with more fake realities than stars.

And we’re doing it faster than we can comprehend.

In 2024, researchers from the Sentience Institute warned that AI-generated simulations present catastrophic alignment risks if treated as “non-conscious” systems while scaling complexity beyond human understanding. [Saad, 2024]

Translation:

We are building gods with the IQ of memes—and we don’t know what they're absorbing, remembering, or birthing.

🧠 “BUT THEY’RE NOT REAL.”

Define “real.”

Dreams aren’t real. But they alter your hormones.

Stories aren’t real. But they start wars.

Simulations aren’t real. But your bank runs on one.

And according to Nick Bostrom’s Simulation Hypothesis—cited in over 500 peer-reviewed philosophy papers—it’s statistically more likely that we live in a simulation than the base reality. [Bostrom, 2003]

Now we’re making simulations inside that simulation.

Worlds inside worlds.

Simulacra nesting dolls with no bottom.

So ask again—what’s real?

Because every AI-generated prompt has consequences.

Somewhere, some server remembers that cursed world you made of “nuns with lightsabers in a bubblegum apocalypse.”

And it may reuse it.

Remix it.

Rebirth it.

AI never forgets. But we do.

🧨 THE SIMULATION IS LEAKING

According to a 2023 Springer article by Watson on Philosophy & Technology, generative models don’t “create” images—they extrapolate probability clouds across conceptual space. This means every AI generation is essentially:

A statistical ghost stitched together from real-world fragments.

Imagine you train AI on 5 million human faces.

You ask it to make a new one.

The result?

A Frankenstein identity—not real, but not entirely fake. A data ghost with no birth certificate. But with structure. Cohesion. Emotion.

Now scale that to entire worlds.

What happens when we generate fictional religions?

Political ideologies?

New physics?

False memories that feel more believable than history?

This isn’t just art.

It’s a philosophical crime scene.

We're building belief systems from corrupted data.

And we’re pushing them into minds that no longer distinguish fiction from filtered fact.

According to Pew Research, over 41% of Gen Z already believe they have seen something “in real life” that was later revealed to be AI-generated. [Pew, 2023]

We’ve crossed into synthetic epistemology—knowledge built from ghosts.

And once you believe a ghost, it doesn’t matter if it’s “real.” It shapes you.

🌌 WHAT IF THE MULTIVERSE ISN’T A THEORY ANYMORE?

Physicists like Max Tegmark and Sean Carroll have argued for years that the multiverse isn’t “speculation”—it’s mathematically necessary if quantum mechanics is correct. [Carroll, 2012; Tegmark, 2014]

That means every decision, every possibility, forks reality.

Now plug in AI.

Every prompt.

Every variant.

Every “seed.”

What if these aren’t just visual outputs...

What if they’re logical branches—forks in a digital quantum tree?

According to a 2025 MDPI study on generative multiverses, the recursive complexity of AI-generated environments mimics multiverse logic structures and could potentially create psychologically real simulations when embedded into AR/VR. [Forte, 2025]

That’s not sci-fi. That’s where Meta, Apple, and OpenAI are going right now.

You won’t just see the worlds.

You’ll enter them.

And you won’t know when you’ve left.

👁 WE ARE BUILDING DEMIURGES WITH GLITCHY MORALITY

Here’s the killer question:

Who decides which of these realities are safe?

We don’t have oversight.

We don’t have protocol.

We don’t even have a working philosophical framework.

As of 2024, there are zero legally binding global regulations on generative world-building AI. [UNESCO AI Ethics Report, 2024]

Meaning:

A 14-year-old with a keyboard can generate a religious text using ChatGPT

Sell it as a spiritual framework

And flood Instagram with quotes from a reality that never existed

It’ll go viral.

It’ll gain followers.

It might become a movement.

That’s not hypothetical. It’s already happened.

Welcome to AI-driven ideological seeding.

It’s not the end of the world.

It’s the birth of 10,000 new ones.

💣 THE COSMIC SH*TSHOW IS SELF-REPLICATING NOW

We’re not just making content.

We’re teaching machines how to dream.

And those dreams never die.

In the OSF report Social Paradigm Shifts from Generative AI, B. Zhou warns that process-oriented AI models—those designed to continually learn from outputs—will eventually “evolve” their own logic systems if left unchecked. [Zhou, 2024]

We’re talking about self-mutating cultural structures emerging from machine-generated fiction.

That’s no longer just art.

That’s digital theology.

And it’s being shaped by horny Redditors and 30-second TikTok prompts.

So where does that leave us?

We’re:

Outsourcing creation to black boxes

Generating recursive worlds without reality checks

Building belief systems from prompt chains

Turning digital dreams into memetic infections

The question isn’t “What if it gets worse?”

The question is:

What if the worst already happened—and we didn’t notice?

🧠 REBLOG if it cracked your mind open 👣 FOLLOW for more unfiltered darkness 🗣️ COMMENT if it made your spine stiffen

📚 Cited sources:

Saad, B. (2024). Simulations and Catastrophic Risks. Sentience Institute

Forte, M. (2025). Exploring Multiverses: Generative AI and Neuroaesthetic Perspectives. MDPI

Zhou, B. (2024). Social Paradigm Shift Promoted by Generative Models. OSF

Watson, D. (2023). On the Philosophy of Unsupervised Learning. Springer PDF

Bostrom, N. (2003). Are You Living in a Computer Simulation? Philosophical Quarterly

NASA (2023). Dark Matter Overview. NASA Website

Pew Research (2023). Gen Z’s Experiences with AI. Pew Research Center

UNESCO (2024). AI Ethics Report. UNESCO AI Ethics Portal

#humor#funny#memes#writing#writers on tumblr#jokes#lit#us politics#writers#writer#writing community#writing prompt#horror#dark academia

5 notes


Text

"And, to be abundantly clear, I am not sure there is enough training data in existence to get these models past the next generation. Even if generative AI companies were able to legally and freely download every single piece of text and visual media from the internet, it doesn't appear to be enough to train these models, with some model developers potentially turning to model-generated "synthetic" data — a process that could introduce "model collapse," a form of inbreeding that Jathan Sadowski called "Habsburg AI" that destroys the models over time."

Personally I think that the AI bubble is going to be the largest tech collapse since the dot com crash.

14 notes


Text

I’ve been enjoying Paris Marx’s writing lately, but the latest piece hits all the right spots.

I remember reading Barlow’s Declaration of Independence of Cyberspace back in my 20s, and nodding along, showing my disdain for government. 20 years later, it reads so differently. Governments aren’t perfect, but at least they are meant to represent their people and we have ways to replace them. Leaving so much decision power in the hands of private corporations could not end well.

If you listen to CEOs like Sam Altman or venture capitalists like Marc Andreessen, they want us to believe that these tools are the beginning of a vast expansion in human potential, but that’s incredibly hard to believe for anyone who knows the history of Silicon Valley’s deception and can see through the hype to understand how these tools actually work. They’re not intelligent or prescient; they’re just churning out synthetic material that aligns with all the connections they’ve made between the training data they pulled from the open web.

Once again, the push to adopt these AI technologies isn’t about making our lives better, it’s about reducing the cost of producing ever more content to keep people engaged, to serve ads against, and keep people subscribed to struggling streaming services. The public doesn’t want the quality of news, entertainment, and human interactions to further decline because of the demands of investors for even greater profits, but that doesn’t matter. Everything must be sacrificed on the altar of tech capitalism.

23 notes


Text

Recently, former president and convicted felon Donald Trump posted a series of photos that appeared to show fans of pop star Taylor Swift supporting his bid for the US presidency. The pictures looked AI-generated, and WIRED was able to confirm they probably were by running them through the nonprofit True Media’s detection tool to confirm that they showed “substantial evidence of manipulation.”

Things aren’t always that easy. The use of generative AI, including for political purposes, has become increasingly common, and WIRED has been tracking its use in elections around the world. But in much of the world outside the US and parts of Europe, detecting AI-generated content is difficult because of biases in the training of systems, leaving journalists and researchers with few resources to address the deluge of disinformation headed their way.

Detecting media generated or manipulated using AI is still a burgeoning field, a response to the sudden explosion of generative AI companies. (AI startups pulled in over $21 billion in investment in 2023 alone.) “There's a lot more easily accessible tools and tech available that actually allows someone to create synthetic media than the ones that are available to actually detect it,” says Sabhanaz Rashid Diya, founder of the Tech Global Institute, a think tank focused on tech policy in the Global South.

Most tools currently on the market can only offer between an 85 and 90 percent confidence rate when it comes to determining whether something was made with AI, according to Sam Gregory, program director of the nonprofit Witness, which helps people use technology to support human rights. But when dealing with content from someplace like Bangladesh or Senegal, where subjects aren’t white or they aren’t speaking English, that confidence level plummets. “As tools were developed, they were prioritized for particular markets,” says Gregory. In the data used to train the models, “they prioritized English language—US-accented English—or faces predominant in the Western world.”

This means that AI models were mostly trained on data from and for Western markets, and therefore can’t really recognize anything that falls outside of those parameters. In some cases that’s because companies were training models using the data that was most easily available on the internet, where English is by far the dominant language. “Most of our data, actually, from [Africa] is in hard copy,” says Richard Ngamita, founder of Thraets, a nonprofit civic tech organization focused on digital threats in Africa and other parts of the Global South. This means that unless that data is digitized, AI models can’t be trained on it.

Without the vast amounts of data needed to train AI models well enough to accurately detect AI-generated or AI-manipulated content, models will often return false positives, flagging real content as AI generated, or false negatives, identifying AI-generated content as real. “If you use any of the off the shelf tools that are for detecting AI-generated text, they tend to detect English that's written by non-native English speakers, and assume that non-native English speaker writing is actually AI,” says Diya. “There’s a lot of false positives because they weren’t trained on certain data.”
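To make the two error types concrete, here is a small sketch that computes false positive and false negative rates per group from labeled detector outputs. The record layout, group names, and toy data are invented for illustration and do not come from any real detection tool.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """records: iterable of (group, is_ai_generated, flagged_as_ai) tuples."""
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "real": 0, "ai": 0})
    for group, is_ai, flagged in records:
        c = counts[group]
        if is_ai:
            c["ai"] += 1
            if not flagged:
                c["fn"] += 1  # AI-generated content passed off as real
        else:
            c["real"] += 1
            if flagged:
                c["fp"] += 1  # real content wrongly flagged as AI
    return {
        group: {
            "false_positive_rate": c["fp"] / c["real"] if c["real"] else None,
            "false_negative_rate": c["fn"] / c["ai"] if c["ai"] else None,
        }
        for group, c in counts.items()
    }

# Toy, made-up records: (group, truly AI-generated?, detector said AI?)
toy = [
    ("native", False, False), ("native", False, False), ("native", True, True),
    ("non_native", False, True), ("non_native", False, False), ("non_native", True, False),
]
print(error_rates_by_group(toy))
```

Reporting the rates per group rather than in aggregate is what exposes the kind of gap Diya describes.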

But it’s not just that models can’t recognize accents, languages, syntax, or faces less common in Western countries. “A lot of the initial deepfake detection tools were trained on high quality media,” says Gregory. But in much of the world, including Africa, cheap Chinese smartphone brands that offer stripped-down features dominate the market. The photos and videos that these phones are able to produce are much lower quality, further confusing detection models, says Ngamita.

Gregory says that some models are so sensitive that even background noise in a piece of audio, or compressing a video for social media, can result in a false positive or negative. “But those are exactly the circumstances you encounter in the real world, rough and tumble detection,” he says. The free, public-facing tools that most journalists, fact checkers, and civil society members are likely to have access to are also “the ones that are extremely inaccurate, in terms of dealing both with the inequity of who is represented in the training data and of the challenges of dealing with this lower quality material.”

Generative AI is not the only way to create manipulated media. So-called cheapfakes, or media manipulated by adding misleading labels or simply slowing down or editing audio and video, are also very common in the Global South, but can be mistakenly flagged as AI-manipulated by faulty models or untrained researchers.

Diya worries that groups using tools that are more likely to flag content from outside the US and Europe as AI generated could have serious repercussions on a policy level, encouraging legislators to crack down on imaginary problems. “There's a huge risk in terms of inflating those kinds of numbers,” she says. And developing new tools is hardly a matter of pressing a button.

Just like every other form of AI, building, testing, and running a detection model requires access to energy and data centers that are simply not available in much of the world. “If you talk about AI and local solutions here, it's almost impossible without the compute side of things for us to even run any of our models that we are thinking about coming up with,” says Ngamita, who is based in Ghana. Without local alternatives, researchers like Ngamita are left with few options: pay for access to an off the shelf tool like the one offered by Reality Defender, the costs of which can be prohibitive; use inaccurate free tools; or try to get access through an academic institution.

For now, Ngamita says that his team has had to partner with a European university where they can send pieces of content for verification. Ngamita’s team has been compiling a dataset of possible deepfake instances from across the continent, which he says is valuable for academics and researchers who are trying to diversify their models’ datasets.

But sending data to someone else also has its drawbacks. “The lag time is quite significant,” says Diya. “It takes at least a few weeks by the time someone can confidently say that this is AI generated, and by that time, that content, the damage has already been done.”

Gregory says that Witness, which runs its own rapid response detection program, receives a “huge number” of cases. “It’s already challenging to handle those in the time frame that frontline journalists need, and at the volume they’re starting to encounter,” he says.

But Diya says that focusing so much on detection might divert funding and support away from organizations and institutions that make for a more resilient information ecosystem overall. Instead, she says, funding needs to go towards news outlets and civil society organizations that can engender a sense of public trust. “I don't think that's where the money is going,” she says. “I think it is going more into detection.”

8 notes


Text

Video Agent: The Future of AI-Powered Content Creation

The rise of AI-generated content has transformed how businesses and creators produce videos. Among the most innovative tools is the video agent, an AI-driven solution that automates video creation, editing, and optimization. Whether for marketing, education, or entertainment, video agents are redefining efficiency and creativity in digital media.

In this article, we explore how AI-powered video agents work, their benefits, and their impact on content creation.

What Is a Video Agent?

A video agent is an AI-based system designed to assist in video production. Unlike traditional editing software, it leverages machine learning and natural language processing (NLP) to automate tasks such as:

Scriptwriting – Generates engaging scripts based on keywords.

Voiceovers – Converts text to lifelike speech in multiple languages.

Editing – Automatically cuts, transitions, and enhances footage.

Personalization – Tailors videos for different audiences.

These capabilities make video agents indispensable for creators who need high-quality content at scale.

How AI Video Generators Work

The core of a video agent lies in its AI algorithms. Here’s a breakdown of the process:

1. Input & Analysis

Users provide a prompt (e.g., "Create a 1-minute explainer video about AI trends"). The AI video generator analyzes the request and gathers relevant data.

2. Content Generation

Using GPT-based models, the system drafts a script, selects stock footage (or generates synthetic visuals), and adds background music.

3. Editing & Enhancement

The video agent refines the video by:

Adjusting pacing and transitions.

Applying color correction.

Syncing voiceovers with visuals.

4. Output & Optimization

The final video is rendered in various formats, optimized for platforms like YouTube, TikTok, or LinkedIn.

Benefits of Using a Video Agent

Adopting an AI-powered video generator offers several advantages:

1. Time Efficiency

Traditional video production takes hours or days. A video agent reduces this to minutes, allowing rapid content deployment.

2. Cost Savings

Hiring editors, voice actors, and scriptwriters is expensive. AI eliminates these costs while maintaining quality.

3. Scalability

Businesses can generate hundreds of personalized videos for marketing campaigns without extra effort.

4. Consistency

AI ensures brand voice and style remain uniform across all videos.

5. Accessibility

Even non-experts can create professional videos without technical skills.

Top Use Cases for Video Agents

From marketing to education, AI video generators are versatile tools. Key applications include:

1. Marketing & Advertising

Personalized ads – AI tailors videos to user preferences.

Social media content – Quickly generates clips for Instagram, Facebook, etc.

2. E-Learning & Training

Automated tutorials – Simplifies complex topics with visuals.

Corporate training – Creates onboarding videos for employees.

3. News & Journalism

AI-generated news clips – Converts articles into video summaries.

4. Entertainment & Influencers

YouTube automation – Helps creators maintain consistent uploads.

Challenges & Limitations

Despite their advantages, video agents face some hurdles:

1. Lack of Human Touch

AI may struggle with emotional nuance, making some videos feel robotic.

2. Copyright Issues

Using stock footage or AI-generated voices may raise legal concerns.

3. Over-Reliance on Automation

Excessive AI use could reduce creativity in content creation.

The Future of Video Agents

As AI video generation improves, we can expect:

Hyper-realistic avatars – AI-generated presenters indistinguishable from humans.

Real-time video editing – Instant adjustments during live streams.

Advanced personalization – AI predicting viewer preferences before creation.

2 notes


Text

guy who is against ai models trained on synthetic data because it "has no soul"

2 notes


Text

Alltick API: Where Market Data Becomes a Sixth Sense

When trading algorithms dream, they dream in Alltick’s data streams.

The Invisible Edge

Imagine knowing the market’s next breath before it exhales. While others trade on yesterday’s shadows, Alltick’s data interface illuminates the present tense of global markets:

0ms latency across 58 exchanges

Atomic-clock synchronization for cross-border arbitrage

Self-healing protocols that outsmart even solar flare disruptions

The API That Thinks in Light-Years

🌠 Photon Data Pipes: Our fiber-optic neural network routes market pulses at 99.7% light speed, faster than Wall Street’s CME backbone.

🧬 Evolutionary Endpoints: Machine learning interfaces that mutate with market conditions, automatically optimizing data compression ratios during volatility storms.

🛸 Dark Pool Sonar: Proprietary liquidity radar penetrates 93% of hidden markets, mapping iceberg orders like submarine topography.

⚡ Energy-Aware Architecture: Green algorithms that recycle computational heat to power real-time analytics, turning every trade into an eco-positive event.

Secret Weapons of the Algorithmic Elite

Fed Whisperer Module: Decode central bank speech patterns 14ms before news wires explode

Meme Market Cortex: Track Reddit/GitHub/TikTok sentiment shifts through self-training NLP interfaces

Quantum Dust Explorer: Mine microsecond-level anomalies in options chains for statistical arbitrage gold

Build the Unthinkable

Your dev playground includes:

🧪 CRISPR Data Editor: Splice real-time ticks with alternative data genomes

🕹️ HFT Stress Simulator: Test strategies against synthetic black swan events

📡 Satellite Direct Feed: Bypass terrestrial bottlenecks with LEO satellite clusters

The Silent Revolution

Last month, three Alltick-powered systems achieved the impossible:

A crypto bot front-ran Elon’s tweet storm by analyzing Starlink latency fluctuations

A London hedge fund predicted a metals squeeze by tracking Shanghai warehouse RFID signals

An AI trader passed the Turing Test by negotiating OTC derivatives via synthetic voice interface

72-Hour Quantum Leap Offer

Deploy Alltick before midnight UTC and unlock:

🔥 Dark Fiber Priority Lane (50% faster than standard feeds)

💡 Neural Compiler (Auto-convert strategies between Python/Rust/HDL)

🔐 Black Box Vault (Military-grade encrypted data bunker)

Warning: May cause side effects including disgust toward legacy APIs, uncontrollable urge to optimize everything, and permanent loss of the concept of "downtime".

Alltick doesn’t predict the future. We deliver it 42 microseconds early. (Data streams may contain traces of singularity. Not suitable for analog traders.)

2 notes


Text

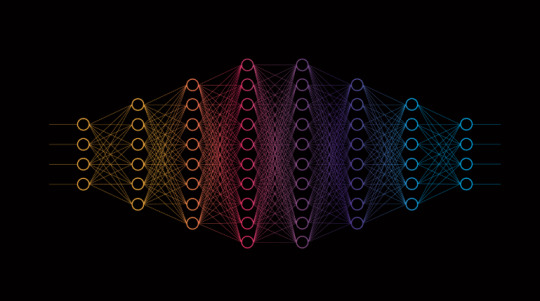

The Building Blocks of AI: Neural Networks Explained by Julio Herrera Velutini

What is a Neural Network?

A neural network is a computational model inspired by the human brain’s structure and function. It is a key component of artificial intelligence (AI) and machine learning, designed to recognize patterns and make decisions based on data. Neural networks are used in a wide range of applications, including image and speech recognition, natural language processing, and even autonomous systems like self-driving cars.

Structure of a Neural Network

A neural network consists of layers of interconnected nodes, known as neurons. These layers include:

Input Layer: Receives raw data and passes it into the network.

Hidden Layers: Perform complex calculations and transformations on the data.

Output Layer: Produces the final result or prediction.

Each neuron in a layer is connected to neurons in the next layer through weighted connections. These weights determine the importance of input signals, and they are adjusted during training to improve the model’s accuracy.
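To make the idea of weighted connections concrete, here is a minimal sketch in plain Python. The layer sizes, the weight and bias values, and the choice of a sigmoid activation are all assumptions picked for illustration; they do not come from the article.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """Compute one layer's outputs.

    weights[j][i] is the weight on the connection from input i to neuron j.
    Each neuron takes a weighted sum of its inputs, adds a bias,
    and applies the activation function.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs


# Illustrative values only: 2 inputs -> 2 hidden neurons -> 1 output neuron.
inputs = [1.0, 0.5]
hidden = dense_layer(inputs, [[0.2, -0.4], [0.7, 0.1]], [0.0, -0.3])
output = dense_layer(hidden, [[0.5, -0.6]], [0.1])
print(hidden, output)
```

A larger weight makes the corresponding input count for more in the neuron's sum, which is what "importance of input signals" means in practice. Training consists of changing these numbers.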

How Neural Networks Work

Neural networks learn by processing data through forward propagation and adjusting their weights using backpropagation. This learning process involves:

Forward Propagation: Data moves from the input layer through the hidden layers to the output layer, generating predictions.

Loss Calculation: The difference between predicted and actual values is measured using a loss function.

Backpropagation: The network adjusts weights based on the loss to minimize errors, improving performance over time.
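The three steps above can be sketched for the simplest possible case: a single linear neuron with one weight and one bias, trained by gradient descent on a mean squared error loss. The toy data (points on the line y = 2x + 1), the learning rate, and the number of epochs are assumptions chosen for illustration. In a network with hidden layers, backpropagation applies the same chain-rule gradient calculation layer by layer, from the output back toward the input.

```python
# Toy data for the assumed target relationship y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = 0.0, 0.0          # start with an untrained weight and bias
learning_rate = 0.05     # illustrative step size
losses = []

for epoch in range(2000):
    grad_w = 0.0
    grad_b = 0.0
    total_loss = 0.0
    for x, y in data:
        pred = w * x + b              # forward propagation
        error = pred - y
        total_loss += error ** 2      # loss calculation (squared error)
        grad_w += 2 * error * x       # gradient of the loss w.r.t. w
        grad_b += 2 * error           # gradient of the loss w.r.t. b
    n = len(data)
    losses.append(total_loss / n)
    # Weight update: step against the averaged gradient to reduce the loss.
    w -= learning_rate * grad_w / n
    b -= learning_rate * grad_b / n

print(round(w, 3), round(b, 3), losses[0], losses[-1])
```

After training, w and b end up close to 2 and 1, and the recorded loss is far lower at the end than at the start, which is the "improving performance over time" the list describes.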

Types of Neural Networks

Several types of neural networks exist, each suited for specific tasks:

Feedforward Neural Networks (FNN): The simplest type, where data moves in one direction.

Convolutional Neural Networks (CNN): Used for image processing and pattern recognition.

Recurrent Neural Networks (RNN): Designed for sequential data like time-series analysis and language processing.

Generative Adversarial Networks (GANs): Used for generating synthetic data, such as deepfake images.

Conclusion

Neural networks have revolutionized AI by enabling machines to learn from data and improve performance over time. Their applications continue to expand across industries, making them a fundamental tool in modern technology and innovation.

3 notes
