#AI chip design

Explore tagged Tumblr posts

Text

Discover the Synopsys stock price forecast for 2025–2029, with insights into financial performance, AI-driven growth, and investment tips. #Synopsys #SNPS #SNPSstock #stockpriceforecast #EDAsoftware #semiconductorIP #AIchipdesign #stockinvestment #financialperformance #Ansysacquisition #sharebuyback

#AI chip design#Ansys acquisition#Best semiconductor stocks to buy#EDA software#Financial performance#Investment#Investment Insights#Is Synopsys a good investment#semiconductor IP#share buyback#SNPS#SNPS stock#SNPS stock analysis 2025–2029#Stock Forecast#Stock Insights#stock investment#Stock Price Forecast#Synopsys#Synopsys AI-driven chip design#Synopsys Ansys acquisition impact#Synopsys financial performance 2024#Synopsys share buyback program#Synopsys stock#Synopsys stock buy or sell#Synopsys stock price forecast 2025#Synopsys stock price target 2025

0 notes

Text

Will AI Eliminate Verification?

A recent blog post looked at the impact artificial intelligence (AI) is having on chip development, focusing on register-transfer-level (RTL) design in general, and the hardware-software interface (HSI) in particular. It seems natural to extend this topic to ask how AI is affecting functional verification of chip designs. Again, there’s plenty of hype on this topic, but it’s important to separate “perhaps someday” visions from the current reality of what AI can accomplish.

AI and IP Verification

The recent post noted that the generation of RTL designs is a domain where AI has shown some success. It used the example of asking ChatGPT to “generate SystemVerilog code for an 8-bit priority encoder” and showed its output. In theory, such generated designs should be “correct by construction” since AI tools are trained on huge datasets that include many successful real-world RTL IP designs. Does this mean that they don’t need to be verified?

The answer, of course, is “no!” Training datasets may include designs with bugs in them, so a large language model (LLM) trained on them can reproduce those errors. In addition, the well-documented tendency of AI tools to hallucinate means that the output may sometimes not make sense. It is important to verify any RTL code generated by AI, just as with code written by humans. This verification may happen in a standalone IP block testbench, in higher levels of the chip hierarchy, or both.

This leads to the question of whether AI can generate the verification testbench and tests. Typing “generate SystemVerilog UVM testbench and tests for an 8-bit priority encoder” into ChatGPT yields a basic solution that includes a top-level testbench, UVM agent, driver, monitor, and scoreboard, plus a sequence (shown below) with a few common tests. It’s a good start, although an experienced verification engineer would want to add coverage and some additional tests.

```systemverilog
import uvm_pkg::*;
`include "uvm_macros.svh"

class priority_encoder_sequence extends uvm_sequence #(priority_encoder_transaction);
  // Register with the UVM factory so the sequence can be created via type_id
  `uvm_object_utils(priority_encoder_sequence)

  // Constructor
  function new(string name = "priority_encoder_sequence");
    super.new(name);
  endfunction

  // Generate test cases; each item is a fresh object so a handle already
  // passed to the driver is never modified afterwards
  virtual task body();
    priority_encoder_transaction tx;

    // Test case 1: All zeros
    tx = priority_encoder_transaction::type_id::create("tx");
    tx.in_data = 8'b00000000;
    start_item(tx);
    finish_item(tx);

    // Test case 2: Only one bit set
    tx = priority_encoder_transaction::type_id::create("tx");
    tx.in_data = 8'b00000100;
    start_item(tx);
    finish_item(tx);

    // Test case 3: Multiple bits set
    tx = priority_encoder_transaction::type_id::create("tx");
    tx.in_data = 8'b11000000;
    start_item(tx);
    finish_item(tx);

    // Test case 4: All ones
    tx = priority_encoder_transaction::type_id::create("tx");
    tx.in_data = 8'b11111111;
    start_item(tx);
    finish_item(tx);
  endtask
endclass
```
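The scoreboard in such a testbench needs a reference model to compare the DUT against. The generated RTL is not reproduced here, so the Python sketch below is a hypothetical model (`priority_encode` is an illustrative name). It assumes the common convention that bit 7 has the highest priority and that a separate valid flag is cleared for an all-zeros input. It computes the expected results for the four stimulus values used in the sequence.

```python
def priority_encode(in_data: int) -> tuple[int, bool]:
    """Reference model for an 8-bit priority encoder (assumed MSB-first priority).

    Returns (index of the highest set bit, valid flag). For an all-zeros
    input there is no set bit, so the index is 0 and valid is False.
    """
    if not 0 <= in_data <= 0xFF:
        raise ValueError("in_data must fit in 8 bits")
    if in_data == 0:
        return 0, False
    # bit_length() - 1 is the position of the most significant set bit
    return in_data.bit_length() - 1, True


# Expected scoreboard results for the four stimulus values in the sequence
stimulus = [0b00000000, 0b00000100, 0b11000000, 0b11111111]
for value in stimulus:
    index, valid = priority_encode(value)
    print(f"in_data={value:08b} -> out={index:03b} valid={int(valid)}")
```

If the generated RTL uses LSB-first priority instead, only the line computing the index changes.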

AI and SoC Verification

It seems likely that any AI tool capable of generating an RTL IP block is also capable of generating a standalone testbench and some basic tests. These could also be used to verify a hand-written IP block, or one generated by a different AI tool or LLM. Of course, it is important to verify at least some of the functionality of an IP block once it is instantiated into the chip design. Since AI cannot yet generate the RTL design for a complete chip, it is unreasonable to expect that it could verify one.

Fortunately, there are some aspects of a complex chip that can be verified by current AI tools. The previous post focused on the HSI, which is an essential part of any system on chip (SoC), and on the generation of the memory-mapped, addressable control and status registers (CSRs) that form the hardware side of the HSI. Generating a testbench and tests to verify a CSR block certainly seems within the scope of AI as well.

Thorough hardware-software verification of the HSI involves both the CSR hardware and the low-level software (such as microcode and device drivers) that programs the registers. HSI verification requires tests that exercise the registers and check the results. Many of these tests could potentially be generated by AI tools, although custom sequences that access the registers may be needed. Thus, there must be a way to specify these sequences as well as the set of registers.
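As a minimal sketch of what such a register test checks, the Python below models a tiny CSR block in memory and runs a reset-value and write/read-back test against it. The register names, addresses, reset values, access types, and the `CsrBlock` class are all hypothetical stand-ins for the CSR hardware. A real test would access the registers through the bus, the driver, or a register model.

```python
from dataclasses import dataclass


@dataclass
class Register:
    name: str
    addr: int
    reset: int
    access: str  # "RW" (read/write) or "RO" (read-only); a hypothetical subset


class CsrBlock:
    """Hypothetical in-memory stand-in for a memory-mapped CSR block."""

    def __init__(self, registers):
        self.regs = {r.addr: r for r in registers}
        self.values = {r.addr: r.reset for r in registers}

    def write(self, addr, data):
        # Writes to read-only registers are silently ignored
        if self.regs[addr].access == "RW":
            self.values[addr] = data & 0xFFFFFFFF

    def read(self, addr):
        return self.values[addr]


def run_csr_tests(dut, registers):
    """Check reset values, then write a pattern and check the read-back."""
    failures = []
    pattern = 0xA5A5A5A5
    for reg in registers:
        if dut.read(reg.addr) != reg.reset:
            failures.append(f"{reg.name}: bad reset value")
        dut.write(reg.addr, pattern)
        # RW registers should return the pattern; RO registers keep their reset value
        expected = pattern if reg.access == "RW" else reg.reset
        if dut.read(reg.addr) != expected:
            failures.append(f"{reg.name}: read-back mismatch")
    return failures


regs = [
    Register("CTRL", 0x00, 0x00000000, "RW"),
    Register("STATUS", 0x04, 0x00000001, "RO"),
]
print(run_csr_tests(CsrBlock(regs), regs) or "all CSR tests passed")
```

Special register types such as lock or shadow registers would each need their own expected-value rule in `run_csr_tests`.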

Agnisys AI Verification Solutions

The Agnisys IDesignSpec™ Suite is a specification automation solution that generates register RTL code, SystemVerilog Universal Verification Methodology (UVM) testbenches and tests, C/C++ tests, and combined hardware-software verification environments. As discussed in the earlier post, the Agnisys SmartDatasheet site uses leading-edge LLM and natural language processing (NLP) technology to interpret natural language datasheets with CSR definitions to generate the RTL code.

IDesignSpec uses the same register definitions, whether from datasheets or from standard formats such as SystemRDL and IP-XACT, to generate verification testbenches and tests. The C/C++ tests can be run on UVM testbenches, co-verification environments, emulators, and even actual chips. The tests are fine-tuned for any special registers specified, including indirect, indexed, read-only/write-only, alias, lock, shadow, interrupt, counter, paged, virtual, external, and read/write pairs.

When custom CSR programming sequences are required, Agnisys provides an AI solution as well. Users can write these sequences in natural language, which is converted via NLP into UVM and C/C++ sequences. Assertions, an important part of RTL verification, can also be specified as statements of design intent in natural language. The Agnisys iSpec.ai site converts these statements into SystemVerilog Assertions (SVA), which could be for the CSRs or any other parts of the design.

Summary

AI is a long way from generating a full-chip testbench and a complete set of tests, but it adds value to the verification flow today for assertions, CSR programming sequences, and HSI testbenches. This frees up a lot of time for the verification engineers, enabling them to focus on more complex chip-level tests. Agnisys is a proven industry leader in using AI for SoC and IP development, with additional capabilities in development.

0 notes

Text

https://deepmind.google/discover/blog/how-alphachip-transformed-computer-chip-design/

1 note


Text

AI for Good: A Balanced Look at the Positive Potential of Artificial Intelligence

After exploring the unsettling possibilities of AI through the lens of Roko’s Basilisk, it’s only fair to look at the opposite end of the spectrum: the ways in which artificial intelligence is being harnessed for positive change. Recently, I watched a video that delved into the more hopeful and constructive uses of AI, which provided an important counterbalance to the existential fears many…

#AI benefits#AI chip design#AI ethics#AI for good#AI in conservation#AI in healthcare#AI job displacement#AI prosthetics#AI regulation#AI risks#AI-powered technology#artificial intelligence#balanced AI perspective#environmental conservation#neural networks#Roko’s Basilisk

0 notes

Text

3 notes


Text

Chinese Researchers Release World’s First AI-Based Fully Automated Processor Chip Design System

— Global Times | June 10, 2025

Photo: VCG

Chinese researchers have released the world’s first fully automated processor chip design system based on artificial intelligence (AI) technology, making AI-designed chips a reality. With designs comparable to those of human experts across multiple key metrics, the system represents a significant step toward fully automated chip design, potentially revolutionizing how chips are designed and manufactured.

The system, named QiMeng, was jointly released by the Institute of Computing Technology of the Chinese Academy of Sciences (CAS) and the Institute of Software of CAS, and details were recently published on arXiv.org, the Science and Technology Daily reported on Tuesday.

QiMeng works like an automated architect and builder for computer chips. Instead of engineers manually designing every component, it uses AI to handle both the hardware and software aspects of chip creation.

China’s AI Chip Tool QiMeng Beats Engineers, Designs Processors In Just Days! With QiMeng, China’s Top Scientists Have Used AI To Build Processors Comparable To Intel’s 486 and Arm’s Cortex A53. (Aamir Khollam, InterestingEngineering.com, June 10, 2025)

Representational image of AI-driven chip design process in China. Михаил Руденко/iStock.

Expected to transform the design paradigms for processor chip hardware and software, the system not only significantly reduces human involvement, enhances design efficiency, and shortens development cycles, but also enables rapid, customized designs tailored to specific application scenarios, flexibly meeting the increasingly diverse demands of chip design, the Science and Technology Daily reported.

Processor chips are hailed as the “Crown Jewel” of modern science and technology, with their design processes being highly complex, precise, and requiring a very high level of expertise. Traditional processor chip design relies heavily on experienced expert teams, often involving hundreds of people and taking months or even years to complete, resulting in high costs and lengthy development cycles.

Meanwhile, with the development of emerging technologies such as AI, cloud computing and edge computing, market demands for specialized processor chips and their corresponding foundational software optimization are growing rapidly. However, the number of professionals engaged in the processor chip industry in China is severely insufficient to meet this increasing demand.

In response to these challenges, the QiMeng system leverages advanced AI technologies such as large models to realize automated CPU design. It can also automatically configure corresponding foundational software for the chip, including operating systems, compilers and high-performance kernel libraries, according to the report.

#China 🇨🇳#Global Times#Chinese Researchers#World’s First AI-Based Fully Automated Processor Chip Design System#Artificial Intelligence (AI) Technology#Institute of Computing Technology | Chinese Academy of Sciences (CAS) | Institute of Software of CAS#“Crown Jewel”

0 notes

Text

Machine learning applications in semiconductor manufacturing

Machine Learning Applications in Semiconductor Manufacturing: Revolutionizing the Industry

The semiconductor industry is the backbone of modern technology, powering everything from smartphones and computers to autonomous vehicles and IoT devices. As the demand for faster, smaller, and more efficient chips grows, semiconductor manufacturers face increasing challenges in maintaining precision, reducing costs, and improving yields. Enter machine learning (ML)—a transformative technology that is revolutionizing semiconductor manufacturing. By leveraging ML, manufacturers can optimize processes, enhance quality control, and accelerate innovation. In this blog post, we’ll explore the key applications of machine learning in semiconductor manufacturing and how it is shaping the future of the industry.

Predictive Maintenance

Semiconductor manufacturing involves highly complex and expensive equipment, such as lithography machines and etchers. Unplanned downtime due to equipment failure can cost millions of dollars and disrupt production schedules. Machine learning enables predictive maintenance by analyzing sensor data from equipment to predict potential failures before they occur.

How It Works: ML algorithms process real-time data from sensors, such as temperature, vibration, and pressure, to identify patterns indicative of wear and tear. By predicting when a component is likely to fail, manufacturers can schedule maintenance proactively, minimizing downtime.

Impact: Predictive maintenance reduces equipment downtime, extends the lifespan of machinery, and lowers maintenance costs.
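A trained model is beyond a short example. The core idea of flagging sensor readings that drift away from recent normal behaviour can instead be sketched with a rolling z-score, which is a simple statistical baseline rather than a learned model. The window size, threshold, and vibration readings below are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Hypothetical vibration readings (mm/s) from one etch chamber pump
vibration = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 1.9, 2.1, 4.8, 2.0]
print(flag_anomalies(vibration))  # → [8]
```

An ML approach would replace the fixed threshold with a model trained on labelled failure history. The surrounding loop, which scores each new reading and raises a maintenance flag, stays much the same.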

Defect Detection and Quality Control

Defects in semiconductor wafers can lead to significant yield losses. Traditional defect detection methods rely on manual inspection or rule-based systems, which are time-consuming and prone to errors. Machine learning, particularly computer vision, is transforming defect detection by automating and enhancing the process.

How It Works: ML models are trained on vast datasets of wafer images to identify defects such as scratches, particles, and pattern irregularities. Deep learning algorithms, such as convolutional neural networks (CNNs), excel at detecting even the smallest defects with high accuracy.

Impact: Automated defect detection improves yield rates, reduces waste, and ensures consistent product quality.
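At the heart of a CNN is the 2D convolution: a small kernel slides over the image and responds strongly where the local pattern matches. The pure-Python sketch below shows just that operation on a tiny hypothetical grayscale wafer patch. It uses a hand-picked Laplacian-style kernel instead of learned weights, so it illustrates the mechanism rather than a trained defect classifier.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for y in range(out_h):
        for x in range(out_w):
            out[y][x] = sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
    return out


# Hypothetical 5x5 patch of a wafer image: uniform background with one bright particle
patch = [
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 90, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
]
# Laplacian-style kernel: zero response on flat regions, strong on isolated spots
kernel = [
    [0, -1, 0],
    [-1, 4, -1],
    [0, -1, 0],
]
response = conv2d(patch, kernel)

# Each output cell (y, x) is centred on input pixel (y + 1, x + 1) for a 3x3 kernel
defects = [(y + 1, x + 1) for y, row in enumerate(response) for x, v in enumerate(row) if v > 100]
print(defects)  # → [(2, 2)]
```

In a real CNN, many such kernels are learned from labelled wafer images and stacked with nonlinearities. The fixed threshold of 100 here is also an arbitrary illustrative choice.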

Process Optimization

Semiconductor manufacturing involves hundreds of intricate steps, each requiring precise control of parameters such as temperature, pressure, and chemical concentrations. Machine learning optimizes these processes by identifying the optimal settings for maximum efficiency and yield.

How It Works: ML algorithms analyze historical process data to identify correlations between input parameters and output quality. Techniques like reinforcement learning can dynamically adjust process parameters in real-time to achieve the desired outcomes.

Impact: Process optimization reduces material waste, improves yield, and enhances overall production efficiency.
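The step of identifying correlations between inputs and output quality can be illustrated with the Pearson correlation coefficient. The sketch computes it between each input parameter and yield across past runs. It is a deliberately simple stand-in for the ML models described above, and the parameter names and run data are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical history of six process runs
runs = {
    "chamber_temp_C": [350, 352, 348, 355, 351, 349],
    "pressure_mTorr": [20, 25, 22, 30, 21, 24],
}
yield_pct = [92.0, 88.5, 91.0, 84.0, 92.5, 89.0]

# Rank parameters by the strength (absolute value) of their correlation with yield
ranked = sorted(runs, key=lambda p: abs(pearson(runs[p], yield_pct)), reverse=True)
for name in ranked:
    print(f"{name}: r = {pearson(runs[name], yield_pct):+.2f}")
```

Correlation only points at candidates. Confirming that a parameter actually drives yield still takes designed experiments or a model that accounts for interactions between parameters.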

Yield Prediction and Improvement

Yield—the percentage of functional chips produced from a wafer—is a critical metric in semiconductor manufacturing. Low yields can result from various factors, including process variations, equipment malfunctions, and environmental conditions. Machine learning helps predict and improve yields by analyzing complex datasets.

How It Works: ML models analyze data from multiple sources, including process parameters, equipment performance, and environmental conditions, to predict yield outcomes. By identifying the root causes of yield loss, manufacturers can implement targeted improvements.

Impact: Yield prediction enables proactive interventions, leading to higher productivity and profitability.
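Yield itself is a simple ratio: good dies divided by total dies. The prediction side can be sketched with an ordinary least-squares line fitted to past lots and then used to estimate the yield of a new lot from one parameter. Real yield models use many inputs and more capable learners. The single feature (`defect_density`) and the lot data here are hypothetical.

```python
def yield_pct(good_dies, total_dies):
    """Yield: percentage of functional dies on a wafer."""
    return 100.0 * good_dies / total_dies


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


# Hypothetical past lots: measured defect density (defects/cm^2) and yield
defect_density = [0.10, 0.20, 0.30, 0.40]
lot_yields = [yield_pct(g, 500) for g in (475, 450, 425, 400)]  # 95, 90, 85, 80 %

slope, intercept = fit_line(defect_density, lot_yields)
predicted = slope * 0.25 + intercept
print(f"predicted yield at 0.25 defects/cm^2: {predicted:.1f}%")
```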

Supply Chain Optimization

The semiconductor supply chain is highly complex, involving multiple suppliers, manufacturers, and distributors. Delays or disruptions in the supply chain can have a cascading effect on production schedules. Machine learning optimizes supply chain operations by forecasting demand, managing inventory, and identifying potential bottlenecks.

How It Works: ML algorithms analyze historical sales data, market trends, and external factors (e.g., geopolitical events) to predict demand and optimize inventory levels. Predictive analytics also helps identify risks and mitigate disruptions.

Impact: Supply chain optimization reduces costs, minimizes delays, and ensures timely delivery of materials.
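Demand forecasting can be as elaborate as described above, but a classic baseline is simple exponential smoothing. Each forecast blends the latest observation with the previous forecast. The sketch below pairs it with a basic reorder-point rule. The smoothing factor `alpha`, the lead time, the safety stock, and the demand history are all assumptions.

```python
def exp_smooth_forecast(history, alpha=0.5):
    """Simple exponential smoothing: one-step-ahead forecast for the next period."""
    forecast = history[0]
    for demand in history[1:]:
        forecast = alpha * demand + (1 - alpha) * forecast
    return forecast


def reorder_point(forecast_per_week, lead_time_weeks, safety_stock):
    """Reorder when stock falls to the expected lead-time demand plus a buffer."""
    return forecast_per_week * lead_time_weeks + safety_stock


# Hypothetical weekly demand for one process chemical (litres)
weekly_demand = [100, 120, 110, 130]
forecast = exp_smooth_forecast(weekly_demand, alpha=0.5)
print(forecast)  # → 120.0
print(reorder_point(forecast, lead_time_weeks=3, safety_stock=50))  # → 410.0
```

A larger `alpha` reacts faster to recent demand but also passes more noise into the forecast. ML forecasters mainly add external signals, such as the market trends and geopolitical events mentioned above.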

Advanced Process Control (APC)

Advanced Process Control (APC) is critical for maintaining consistency and precision in semiconductor manufacturing. Machine learning enhances APC by enabling real-time monitoring and control of manufacturing processes.

How It Works: ML models analyze real-time data from sensors and equipment to detect deviations from desired process parameters. They can automatically adjust settings to maintain optimal conditions, ensuring consistent product quality.

Impact: APC improves process stability, reduces variability, and enhances overall product quality.
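A long-established APC technique is the EWMA run-to-run controller. It is classical statistical control rather than machine learning; the ML approaches described above would replace its simple process model with a learned one. The controller assumes a linear model `output = offset + gain * setting`. After each run it updates an estimate of the drifting offset with an exponentially weighted moving average, then chooses the next setting to hit the target. The gain, target, weight `lam`, and simulated drift below are hypothetical.

```python
def ewma_run_to_run(target, gain, lam, true_offsets, initial_offset_estimate=0.0):
    """Simulate an EWMA run-to-run controller for y = offset + gain * u.

    Returns the process output for each run.
    """
    offset_hat = initial_offset_estimate
    outputs = []
    for true_offset in true_offsets:
        u = (target - offset_hat) / gain  # choose setting from the current model
        y = true_offset + gain * u        # run the (simulated) process
        outputs.append(y)
        # Blend the offset observed in this run into the running estimate
        offset_hat = lam * (y - gain * u) + (1 - lam) * offset_hat
    return outputs


# Hypothetical etch step: target depth 100 nm, 2 nm per second of etch time,
# with a chamber offset that starts at 5 nm and drifts upward by 1 nm per run
drift = [5.0 + run for run in range(10)]
outputs = ewma_run_to_run(target=100.0, gain=2.0, lam=0.5, true_offsets=drift)
print([round(y, 1) for y in outputs])
```

Without control, the output in this simulation would climb from 105 to 114 nm. With control, it settles about 2 nm above target, because a plain EWMA controller under a constant drift lags by roughly drift divided by `lam`. Double-EWMA variants exist to remove that lag.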

Design Optimization

The design of semiconductor devices is becoming increasingly complex as manufacturers strive to pack more functionality into smaller chips. Machine learning accelerates the design process by optimizing chip layouts and predicting performance outcomes.

How It Works: ML algorithms analyze design data to identify patterns and optimize layouts for performance, power efficiency, and manufacturability. Generative design techniques can even create novel chip architectures that meet specific requirements.

Impact: Design optimization reduces time-to-market, lowers development costs, and enables the creation of more advanced chips.
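Layout optimization is commonly driven by a cost function such as half-perimeter wirelength (HPWL). The sketch below is not machine learning. It scores a placement with HPWL and greedily swaps cell positions while the cost keeps improving, which is the kind of search loop that ML-guided tools aim to steer more effectively. The cells, nets, and grid positions are hypothetical.

```python
from itertools import combinations


def hpwl(placement, nets):
    """Half-perimeter wirelength: sum over nets of bounding-box width + height."""
    total = 0
    for net in nets:
        xs = [placement[c][0] for c in net]
        ys = [placement[c][1] for c in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def greedy_swap(placement, nets):
    """Repeatedly apply the first cell swap that lowers HPWL, until none does."""
    placement = dict(placement)
    improved = True
    while improved:
        improved = False
        current = hpwl(placement, nets)
        for a, b in combinations(placement, 2):
            placement[a], placement[b] = placement[b], placement[a]
            if hpwl(placement, nets) < current:
                improved = True
                break
            placement[a], placement[b] = placement[b], placement[a]  # undo swap
    return placement


# Hypothetical 2x2 grid of cells connected by three two-pin nets
start = {"A": (0, 0), "B": (1, 1), "C": (1, 0), "D": (0, 1)}
nets = [("A", "B"), ("B", "C"), ("C", "D")]
best = greedy_swap(start, nets)
print(hpwl(start, nets), "->", hpwl(best, nets))  # → 5 -> 3
```

Greedy swapping stops at the first local minimum. Production placers use stronger searches, which is where learned heuristics can help.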

Fault Diagnosis and Root Cause Analysis

When defects or failures occur, identifying the root cause can be challenging due to the complexity of semiconductor manufacturing processes. Machine learning simplifies fault diagnosis by analyzing vast amounts of data to pinpoint the source of problems.

How It Works: ML models analyze data from multiple stages of the manufacturing process to identify correlations between process parameters and defects. Techniques like decision trees and clustering help isolate the root cause of issues.

Impact: Faster fault diagnosis reduces downtime, improves yield, and enhances process reliability.
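The decision-tree idea can be shown with its smallest building block, a single split (a "decision stump"). For each candidate factor, the wafers are grouped by that factor's value, and Gini impurity measures how cleanly the groups separate good wafers from defective ones. The factor with the lowest weighted impurity is the strongest root-cause candidate. The wafer records and factor names below are hypothetical.

```python
from collections import Counter, defaultdict


def gini(labels):
    """Gini impurity of a list of labels (0 means the group is pure)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(records, factor, label_key="defective"):
    """Weighted Gini impurity after grouping records by the value of `factor`."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[factor]].append(rec[label_key])
    n = len(records)
    return sum(len(g) / n * gini(g) for g in groups.values())


# Hypothetical wafer history: which tools processed each wafer, and the outcome
wafers = [
    {"etch_chamber": "E1", "litho_tool": "L1", "defective": False},
    {"etch_chamber": "E2", "litho_tool": "L1", "defective": True},
    {"etch_chamber": "E1", "litho_tool": "L2", "defective": False},
    {"etch_chamber": "E2", "litho_tool": "L2", "defective": True},
    {"etch_chamber": "E1", "litho_tool": "L1", "defective": False},
    {"etch_chamber": "E2", "litho_tool": "L2", "defective": True},
]
scores = {f: split_impurity(wafers, f) for f in ("etch_chamber", "litho_tool")}
print(min(scores, key=scores.get), scores)
```

A full decision tree applies the same split search recursively within each group. Clustering approaches instead group wafers by similar sensor or defect signatures before this kind of analysis.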

Energy Efficiency and Sustainability

Semiconductor manufacturing is energy-intensive, with significant environmental impacts. Machine learning helps reduce energy consumption and improve sustainability by optimizing resource usage.

How It Works: ML algorithms analyze energy consumption data to identify inefficiencies and recommend energy-saving measures. For example, they can optimize the operation of HVAC systems and reduce idle time for equipment.

Impact: Energy optimization lowers operational costs and reduces the environmental footprint of semiconductor manufacturing.

Accelerating Research and Development

The semiconductor industry is driven by continuous innovation, with new materials, processes, and technologies being developed regularly. Machine learning accelerates R&D by analyzing experimental data and predicting outcomes.

How It Works: ML models analyze data from experiments to identify promising materials, processes, or designs. They can also simulate the performance of new technologies, reducing the need for physical prototypes.

Impact: Faster R&D cycles enable manufacturers to bring cutting-edge technologies to market more quickly.

Challenges and Future Directions

While machine learning offers immense potential for semiconductor manufacturing, there are challenges to overcome. These include the need for high-quality data, the complexity of integrating ML into existing workflows, and the shortage of skilled professionals. However, as ML technologies continue to evolve, these challenges are being addressed through advancements in data collection, model interpretability, and workforce training.

Looking ahead, the integration of machine learning with other emerging technologies, such as the Internet of Things (IoT) and digital twins, will further enhance its impact on semiconductor manufacturing. By embracing ML, manufacturers can stay competitive in an increasingly demanding and fast-paced industry.

Conclusion

Machine learning is transforming semiconductor manufacturing by enabling predictive maintenance, defect detection, process optimization, and more. As the industry continues to evolve, ML will play an increasingly critical role in driving innovation, improving efficiency, and ensuring sustainability. By harnessing the power of machine learning, semiconductor manufacturers can overcome challenges, reduce costs, and deliver cutting-edge technologies that power the future.


#semiconductor manufacturing#Machine learning in semiconductor manufacturing#AI in semiconductor industry#Predictive maintenance in chip manufacturing#Defect detection in semiconductor wafers#Semiconductor process optimization#Yield prediction in semiconductor manufacturing#Advanced Process Control (APC) in semiconductors#Semiconductor supply chain optimization#Fault diagnosis in chip manufacturing#Energy efficiency in semiconductor production#Deep learning for semiconductor defects#Computer vision in wafer inspection#Reinforcement learning in semiconductor processes#Semiconductor yield improvement using AI#Smart manufacturing in semiconductors#AI-driven semiconductor design#Root cause analysis in chip manufacturing#Sustainable semiconductor manufacturing#IoT in semiconductor production#Digital twins in semiconductor manufacturing

0 notes

Text

0 notes

Photo

(via "NJ Comic Book Superhero Character with Guns" iPhone Wallet for Sale by NeutrinoJem)

#findyourthing#redbubble#neutrinojem#Tec AI Computer Chip Design for Tech Lovers Cyber Punk Sci-fi lovers Neutrino Cyborg Robot Neon Cyber Punk Sci-Fi Design for Tech Lovers Sci#Tec AIComputer ChipDesign for Tech LoversCyber PunkSci-fi loversNeutrinoCyborg RobotNeon Cyber Punk Sci-Fi#Neutrino

0 notes

Text

A summary of the Chinese AI situation, for the uninitiated.

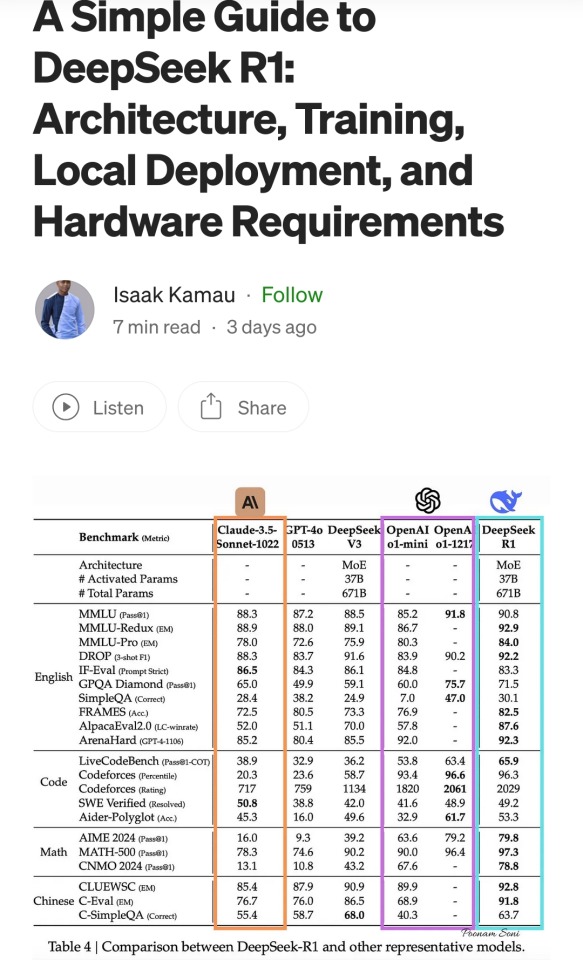

These are scores on different tests designed to measure how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partnered with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that was released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them from the servers of the companies that made them for a subscription, or you can download them to install locally on your own computer. However, the hardware requirements so far are so high that only a few people currently have machines at home capable of running them.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think of how the Ford Model T belched black smoke, much of it unburned petrol. Over the following hundred years, combustion engines became much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, they're all around the same accuracy. These tests are constantly evolving as well: as soon as they start becoming obsolete, new ones are released to provide a more demanding benchmark. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Ford T.

However, computing power requirements kept scaling up, so you're either tied to the subscription or forced to pay for a latest-gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing heavily in much more powerful GPUs and NPUs. For now, all we need to know about those is that they're expensive, use a lot of electricity, and are required to operate the bots at superhuman speed (literally, all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is roughly a small chunk of text, often a short word or part of a longer one (it's more complicated than that but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Ford T to a Subaru in terms of pollution and water use.
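The price quoted in that post came from a screenshot that isn't reproduced here, so the arithmetic below keeps it as a parameter. It estimates the input cost of a text from its word count. The ratio of about 1.3 tokens per English word is a rough rule of thumb that varies by tokenizer. The word count and price in the usage line are placeholders, not DeepSeek's actual rates.

```python
def input_cost_usd(words, price_per_million_tokens, tokens_per_word=1.3):
    """Estimate the cost of feeding `words` words of text into a model API.

    tokens_per_word is a rough assumption; the true ratio depends on the
    tokenizer and the language of the text.
    """
    tokens = words * tokens_per_word
    return tokens / 1_000_000 * price_per_million_tokens


# Placeholder inputs: 2 million words at a hypothetical $0.30 per million tokens
print(f"${input_cost_usd(2_000_000, 0.30):.2f}")  # → $0.78
```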

The issue here is not only input cost, though; all that data needs to be available live, in the RAM; this is why you need powerful, expensive chips in order to-

Holy shit.

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.
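A rough way to see why an older card can cope: a model's weights alone take about (number of parameters) × (bits per parameter) / 8 bytes of memory. The sketch below assumes a hypothetical 32-billion-parameter model. It ignores the extra memory needed for activations and the context cache, so its figures are lower bounds. The RTX 3090's 24 GB of VRAM is the only real hardware figure used.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


RTX_3090_VRAM_GB = 24  # the RTX 3090 ships with 24 GB of GDDR6X

for bits in (16, 8, 4):
    need = weight_memory_gb(32e9, bits)  # hypothetical 32B-parameter model
    verdict = "fits" if need < RTX_3090_VRAM_GB else "does not fit"
    print(f"32B params at {bits}-bit: {need:.0f} GB of weights, {verdict} in 24 GB")
```

Quantizing to fewer bits per weight makes the weights fit. The trade-off is some loss of accuracy, and how much depends on the model and the quantization method.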

What this is telling all those people who just sold their high-end gaming rig to afford a machine powerful enough to run the latest AI models locally is that the person who bought their old rig can run something basically just as powerful on it.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum several years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

433 notes


Text

FINAL ROUND

Propaganda from submitters below the cut; I will reblog any additions!

GLaDOS (Portal)

- murderous ai
- please don't ask me about the veracity of the cake
- GLaDOS isn't just a computer, she is the entirety of Aperture. She was created to contain the mind of a separate lady but seems to have her own personality, and her creators hate her and she killed them. I love her. I will forgive all her crimes. Yeah she's a bully but she's also severely hurt; she can kill all she wants. (reblog link)

Data (Star Trek)

- Data is a sentient synthetic life form designed in the likeness of Doctor Noonien Soong, his creator. In his growth as an individual, Data was later augmented with an emotion chip to help him better understand human behavior and increase his own humanity.
- reblog link for images (1)
- reblog link for images (2)

#ultimate artificial showdown#character tournament#bracket tournament#tournament poll#character bracket#tumblr bracket#tumblr tournament#fandom bracket#poll#glados#portal#data#star trek#data star trek

921 notes


Text

Industry First: UCIe Optical Chiplet Unveiled by Ayar Labs

New Post has been published on https://thedigitalinsider.com/industry-first-ucie-optical-chiplet-unveiled-by-ayar-labs/


Ayar Labs has unveiled the industry’s first Universal Chiplet Interconnect Express (UCIe) optical interconnect chiplet, designed specifically to maximize AI infrastructure performance and efficiency while reducing latency and power consumption for large-scale AI workloads.

This breakthrough will help address the increasing demands of advanced computing architectures, especially as AI systems continue to scale. By incorporating a UCIe electrical interface, the new chiplet is designed to eliminate data bottlenecks while enabling seamless integration with chips from different vendors, fostering a more accessible and cost-effective ecosystem for adopting advanced optical technologies.

The chiplet, named TeraPHY™, achieves 8 Tbps bandwidth and is powered by Ayar Labs’ 16-wavelength SuperNova™ light source. This optical interconnect technology aims to overcome the limitations of traditional copper interconnects, particularly for data-intensive AI applications.

“Optical interconnects are needed to solve power density challenges in scale-up AI fabrics,” said Mark Wade, CEO of Ayar Labs.

The integration with the UCIe standard is particularly significant as it allows chiplets from different manufacturers to work together seamlessly. This interoperability is critical for the future of chip design, which is increasingly moving toward multi-vendor, modular approaches.

The UCIe Standard: Creating an Open Chiplet Ecosystem

The UCIe Consortium, which developed the standard, aims to build “an open ecosystem of chiplets for on-package innovations.” Their Universal Chiplet Interconnect Express specification addresses industry demands for more customizable, package-level integration by combining high-performance die-to-die interconnect technology with multi-vendor interoperability.

“The advancement of the UCIe standard marks significant progress toward creating more integrated and efficient AI infrastructure thanks to an ecosystem of interoperable chiplets,” said Dr. Debendra Das Sharma, Chair of the UCIe Consortium.

The standard establishes a universal interconnect at the package level, enabling chip designers to mix and match components from different vendors to create more specialized and efficient systems. The UCIe Consortium recently announced its UCIe 2.0 Specification release, indicating the standard’s continued development and refinement.

Industry Support and Implications

The announcement has garnered strong endorsements from major players in the semiconductor and AI industries, all members of the UCIe Consortium.

Mark Papermaster from AMD emphasized the importance of open standards: “The robust, open and vendor neutral chiplet ecosystem provided by UCIe is critical to meeting the challenge of scaling networking solutions to deliver on the full potential of AI. We’re excited that Ayar Labs is one of the first deployments that leverages the UCIe platform to its full extent.”

This sentiment was echoed by Kevin Soukup from GlobalFoundries, who noted, “As the industry transitions to a chiplet-based approach to system partitioning, the UCIe interface for chiplet-to-chiplet communication is rapidly becoming a de facto standard. We are excited to see Ayar Labs demonstrating the UCIe standard over an optical interface, a pivotal technology for scale-up networks.”

Technical Advantages and Future Applications

The convergence of UCIe and optical interconnects represents a paradigm shift in computing architecture. By combining silicon photonics in a chiplet form factor with the UCIe standard, the technology allows GPUs and other accelerators to “communicate across a wide range of distances, from millimeters to kilometers, while effectively functioning as a single, giant GPU.”

The technology also facilitates Co-Packaged Optics (CPO), with multinational manufacturing company Jabil already showcasing a model featuring Ayar Labs’ light sources capable of “up to a petabit per second of bi-directional bandwidth.” This approach promises greater compute density per rack, enhanced cooling efficiency, and support for hot-swap capability.

“Co-packaged optical (CPO) chiplets are set to transform the way we address data bottlenecks in large-scale AI computing,” said Lucas Tsai from Taiwan Semiconductor Manufacturing Company (TSMC). “The availability of UCIe optical chiplets will foster a strong ecosystem, ultimately driving both broader adoption and continued innovation across the industry.”

Transforming the Future of Computing

As AI workloads continue to grow in complexity and scale, the semiconductor industry is increasingly looking toward chiplet-based architectures as a more flexible and collaborative approach to chip design. Ayar Labs’ introduction of the first UCIe optical chiplet addresses the bandwidth and power consumption challenges that have become bottlenecks for high-performance computing and AI workloads.

The combination of the open UCIe standard with advanced optical interconnect technology promises to revolutionize system-level integration and drive the future of scalable, efficient computing infrastructure, particularly for the demanding requirements of next-generation AI systems.

The strong industry support for this development indicates the potential for a rapidly expanding ecosystem of UCIe-compatible technologies, which could accelerate innovation across the semiconductor industry while making advanced optical interconnect solutions more widely available and cost-effective.

#accelerators#adoption#ai#AI chips#AI Infrastructure#AI systems#amd#Announcements#applications#approach#architecture#bi#CEO#challenge#chip#Chip Design#chips#collaborative#communication#complexity#computing#cooling#data#Design#designers#development#driving#efficiency#express#factor


Time to Orbit: Unknown liveblog Chapters 011-020

Chapters 001-010

So recently I've been reading Time to Orbit: Unknown by @derinthescarletpescatarian (who may or may not appreciate being tagged in this thing again), a sci-fi mystery you've probably heard about if you're on this webbed site. I am definitely having Thoughts about it, so I'm abandoning my uncomfortably long post for a shiny new one. I'm also grabbing the opportunity to organise some of those thoughts: we have 180+ chapters and any minor detail might be key. It's only getting more complicated, so let us go through unanswered questions and assorted fuckery. Mysterious, frankly bizarre, and/or outright shady behaviour exhibited by characters:

- Captain Joshua Reimann: grabbed an axe and started attacking the walls. Wrecked CR1 and his own arm in the process. Died of an untreated infection.
- Science Officer Claire Rynn-Hatson, possibly also Science Officer Mohammed Aziz and/or Maintenance Officer Ash Dornae: did some sort of experiment involving dangerous chemicals. The experiment ended in disaster, killing Rynn-Hatson on the spot and Aziz and Dornae later due to poisoning. The experiment was conducted for unknown reasons despite the lack of any available medical professionals.
- Captain Kinoshita Keiko: did not authorise the jettisoning of CR1, even though it cut more than half the crew off from her and made it impossible to turn the fore engines on from her position. To be fair, it's kind of understandable considering the number of people in there. She also died trying to move a giant, heavy crate of protein bars for some reason.
- Engineer Leilea Arc Hess: spilled coffee all over a keyboard and didn't clean it up. Also kept a physical calendar even though I don't think you need the AI for the calendar or timed reminders to work.
- The ship's AI: so many things. Didn't wake any new crewmembers when the deaths started; didn't decrease "gravity" or do anything else to save Captain Kinoshita; woke Aspen and Aspen alone when the fore engines needed turned on; needed Aspen to identify by chip even though it was the one that woke them up just a bit ago (who the fuck else would they be?); is definitely lying about CR1; is definitely acting outside its parameters; other stuff probably.
- The organisation that sent them up here in the first place: doesn't allow personal effects, which is comic-book villain behaviour. Also made the AI.
- Doctor Aspen Greaves: upset the bees.

My questions at this point:

- Why did Captain Reimann try to damage the ship? I've read Solaris; I know that sci-fi characters don't just go crazy for no reason.
- Why did no one treat Captain Reimann's wounds?
- Whose body is missing and where is it? There were only three frozen corpses for four potential dead people in the back of the ship.
- What is in CR1?
- How did the 120-something people die there? If a guy with an axe in the process of being subdued can actually cause a hull breach, then that's not a spaceship I'd like to travel on.
- When and why was CR1 locked? We know when it was damaged but not when it was password-locked. Which captain did it? Reimann probably didn't have the opportunity (it was still open during his rampage, and I sure wouldn't have allowed him computer access after). If it was Kinoshita, why?
- Why didn't the two halves of the crew reestablish contact?
- What killed the people at the front of the ship?
- What's up with the disgusting air filter?
- What was the experiment that killed three members of the crew?
- Why can't the new captain override the previous one's orders? Captain locks a door, dies, door is locked forever. That's just bad design.
- How did the aft engines get irreparably damaged?
- What happened when the ship lurched sideways? It can't have been just the rotations slowing, because that would decrease gravity, unless there's a complicated science reason as to why it doesn't. There can't be a complicated science reason, because Derin explains those immediately.
- Did the crew keep logs? If yes, read them.

Current suspects:

- Captain Reimann: convenient scapegoat but probably not the root of the problems.
- The AI: could be. Computers sometimes do stupid shit. My company had to change one of their domains once because a widely used cybersecurity AI decided that we were a phishing scam pretending to be ourselves and wouldn't let the programmers whitelist us.
- The organisation that launched the Courageous, whatever their name is: programmed the AI.
- Aspen: no, that's stupid.


Disclaimer that this is a post mostly motivated by frustration at a cultural trend, not at any individual people/posters. Vagueing to avoid it seeming like a callout but I know how Tumblr is so we'll see I guess. Putting it after a read-more because I think it's going to spiral out of control.

Recent discourse around obnoxious Linux shills chiming in on posts about how difficult it can be to pick up computer literacy these days has made me feel old and tired. I get that people just want computers to Work and they don't want to have to put any extra effort into getting it to Do The Thing, that's not unreasonable, I want the same!

(I also want obnoxious Linux shills to not chip in on my posts (unless I am posting because my Linux has exploded and I need help) so I sympathise with that angle too, 'just use Linux' is not the catch-all solution you think it is my friend.)

But I keep seeing this broad sense of learned helplessness around having to learn about what the computer is actually doing without having your hand held by a massive faceless corporation, and I just feel like it isn't a healthy relationship to have with your tech.

The industry is getting worse and worse in their lack of respect to the consumer every quarter. Microsoft is comfortable pivoting their entire business to push AI on every part of their infrastructure and in every service, in part because their customers aren't going anywhere and won't push back in the numbers that might make a difference. Windows 11 has hidden even more functionality behind layers of streamlining and obfuscation and integrated even more spyware and telemetry that won't tell you shit about what it's doing and that you can't turn off without violating the EULA. They're going to keep pursuing this kind of shit in more and more obvious ways because that's all they can do in the quest for endless year on year growth.

Unfortunately, switching to Linux will force you to learn how to use it. That sucks when it's being pushed as an immediate solution to a specific problem you're having! Not going to deny that. FOSS folks need to realise that 'just pivot your entire day to day workflow to a new suite of tools designed by hobby engineers with really specific chips on their shoulders' does not work as a method of evangelism. But if you approach it more like learning to understand and control your tech, I think maybe it could be a bit more palatable? It's more like a set of techniques and strategies than learning a specific workflow. Once you pick up the basic patterns, you can apply them to the novel problems that inevitably crop up. It's still painful, particularly if you're messing around with audio or graphics drivers, but importantly, you are always the one in control. You might not know how to drive, and the engine might be on fire, but you're not locked in a burning Tesla.

Now that I write this it sounds more like a set of coping mechanisms, but to be honest I do not have a healthy relationship with xorg.conf and probably should seek therapy.

It's a bit of a stretch but I almost feel like a bit of friction with tech is necessary to develop a good relationship with it? Growing up on MS-DOS and earlier versions of Windows has given me a healthy suspicion of any time my computer does something without me telling it to, and if I can't then see what it did, something's very off. If I can't get at the setting and properties panel for something, my immediate inclination is to uninstall it and do without.

And like yeah as a final note, I too find it frustrating when Linux decides to shit itself and the latest relevant thread I can find on the matter is from 2006 and every participant has been Raptured since, but at least threads exist. At least they're not Microsoft Community hellscapes where every second response is a sales rep telling them to open a support ticket. At least there's some transparency and openness around how the operating system is made and how it works. At least you have alternatives if one doesn't do the job for you.

This is long and meandering and probably misses the point of the discourse I'm dragging but I felt obligated to make it. Ubuntu Noble Numbat is pretty good and I haven't had any issues with it out of the box (compared to EndeavourOS becoming a hellscape whenever I wanted my computer to make a sound or render a graphic) so I recommend it. Yay FOSS.


What im hearing is:

Little crow feet outside my window bcs im feeding them- that's beside the point!

Is there magic??? His shovel looks magic and they look magic

And do give me every detail you are thinking of for the series even if it's in the distant future or not that relevant but you want to share

Crows!! Cute!! Also sorry i didnt get to this sooner my laptop BROKE (still broken but usable) and my mom and i have been looking for someone to fix it. Ive been drawing with it sparingly to be careful.

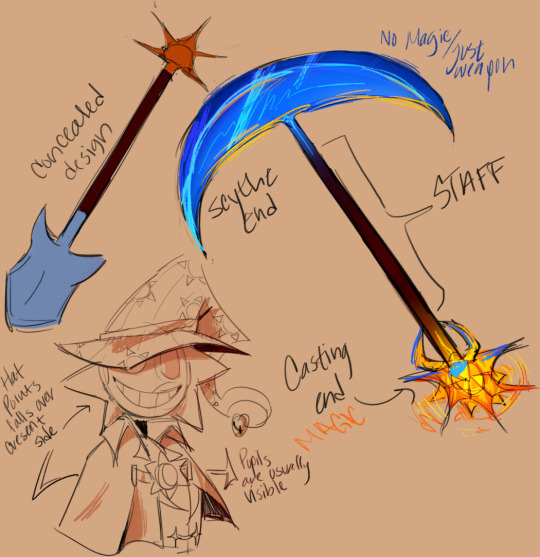

YES there is magic. Of course im still working on this storywise but im getting character designs n whatnot done right now. Dynamics n stuff. BUT i do have some refs i made before my laptop broke.

I like to draw out certain stuff so that it helps with descriptions in the future; i have the worst memory so it helps to be able to do so. (More beneath cut)

Im so excited for moon's shadow form. Oh my god. It's probably my favorite thing right now.

Fun thing about it is that in this form he can touch you but you cant touch him. Something something you can be cast in shadow but you cant take it off yk? He's still light sensitive like this though, so if the area he's in isn't dark enough or he's hit with anything too bright he just reverts back. At that point he'd just have to rely on normal hand to hand stuff and his sand lol. The shadow form is just better for sneaking and speed. Really, he's some amalgamative idea of the sandman and boogieman. I thought it fit well with his whole "naptime attendant gone wrong" thing.

Sun's design, however, is more like if you mixed a cowboy, wizard, and gravedigger together. I made it a while ago on a whim with no intention behind it but then i ended up thinking "ykw would be so awesome".

The hat dips to cover the crescent side of his face (not intentional on his part) to symbolize his resentment towards moon and how he basically damned him to an hourglass. His eyes are easier to see bc of this which could seem more trusting (eyes are the window to the soul or whatever), but the thing is that's not normal for him (as we know) so it's meant to make him look suspicious and looming to 4th wall viewers. There's also the fact that i just thought it was cool too.

He also doesn't get a second form. Moon's sneaky and weird so i thought it would fit to give him some freaky thing iykwim. Sun, however, is a pretty "in your face" kinda guy, so his look and fight style is more extravagant and boisterous. Lots of swinging and yelling and boom bang zap! Despite the showiness he's actually a longer range fighter. Mainly because unlike moon, thousands of years ago, he wasn't often one to get violent with his hands. His weapon is just obnoxiously large too though.

They are still one animatronic and their transformation is still triggered by light. Instead of an AI chip though (which is still in there but long dead), they live through the work of a soul. They're still physically inorganic but spiritually they're as close as they're gonna get to being human. Their life and functionality is derived from the magic itself, not the machinery. Like if for some reason they lost all their magic they'd just drop dead from a battery life long since drained.

The hourglass has a carousel-like design to it purely as reference to moon's level in Help Wanted 2.

Sorry for rambling so much but this is what i've got for you so far! I have a general idea for the plot but im trying to make it more than what it is rn, so i don't wanna share too much of that just yet in case i change or completely toss away an idea.

#fnaf daycare attendant#fnaf sun#fnaf security breach#sundrop#fnaf moon#moondrop#binary resurgence: round 2 au#binary resurgence#my asks#mikas stuff#dca x reader#dca x y/n#sun x reader#moon x reader
