#AIPC

Video

youtube

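Intel abandons on-package memory: will it miss out on the AIPC era too? 20241122 Intel recently announced that it is abandoning its design that packages memory inside the CPU. I think this is a classic "innovator's dilemma" that forfeits the future of the AIPC market. On-package memory is key to AI computing, and future AIPCs must support larger AI models. Intel should push innovation forward; only then can it truly seize the opportunity of the AI era.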

0 notes

Text

RAPIDS cuDF Boosts Python Pandas On RTX-powered AI PCs

This article is a part of the AI Decoded series, which shows off new RTX workstation and PC hardware, software, tools, and accelerations while demystifying AI by making the technology more approachable.

AI is fostering innovation and increasing efficiency across sectors, but to reach its full potential, AI systems must be trained on enormous volumes of high-quality data.

Data scientists are crucial to preparing this data, particularly in domain-specific industries where improving AI capabilities requires specialized, sometimes private data.

To assist data scientists who face growing workloads, NVIDIA revealed that RAPIDS cuDF, a library that makes data manipulation easier, speeds up the pandas software library without requiring any code modifications. Pandas is a popular, robust, and flexible data analysis and manipulation library for the Python programming language. With RAPIDS cuDF, data scientists can now keep using their favorite code base without sacrificing data processing speed.

NVIDIA RTX AI hardware and technologies also help speed up data processing. These include powerful GPUs that provide the computing capacity needed to accelerate AI quickly and efficiently across the board, from data science operations to model training and customization on PCs and workstations.

Python Pandas

Tabular data, arranged in rows and columns, is the most commonly used data format. Spreadsheet programs such as Excel can handle smaller datasets; however, modeling pipelines and datasets with tens of millions of rows usually need data frame libraries in Python or other programming languages.

Python is a popular choice for data analysis, largely because of the pandas library, which has an intuitive application programming interface (API). However, pandas runs into processing speed and efficiency problems on CPU-only systems as datasets grow. Large language models need enormous text-heavy datasets, which the library is notoriously bad at handling.

When their data needs exceed pandas’ capabilities, data scientists face a choice: put up with lengthy processing times, or make the difficult and costly move to more complicated tools that are less user-friendly.

RAPIDS cuDF-Accelerated Preprocessing Pipelines

Data scientists may utilize their favorite code base without compromising processing performance using RAPIDS cuDF.

RAPIDS is an open-source suite of GPU-accelerated Python libraries designed to improve data science and analytics pipelines. RAPIDS cuDF is a GPU DataFrame library that provides a pandas-like API for loading, filtering, and manipulating data.

Image credit: NVIDIA

With RAPIDS cuDF’s “pandas accelerator mode,” data scientists can run their existing pandas code on GPUs to take advantage of powerful parallel processing, knowing that the code will fall back to the CPU when an operation is not supported on the GPU. This compatibility delivers fast, reliable performance.
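As a rough illustration of the accelerator mode described above, the sketch below enables `cudf.pandas` when the cuDF package is installed and otherwise runs the same code on plain pandas. The toy `sales` data and the `mean_price_by_category` helper are hypothetical names made up for this example; only `cudf.pandas.install()` and the standard pandas calls come from the libraries themselves.

```python
# Enable the cuDF pandas accelerator if it is available.
# install() must be called before pandas is imported.
try:
    import cudf.pandas  # part of RAPIDS cuDF; requires a supported NVIDIA GPU
    cudf.pandas.install()
    ACCELERATED = True
except ImportError:
    ACCELERATED = False  # cuDF not installed: the code below runs on plain pandas

import pandas as pd


def mean_price_by_category(df):
    """Ordinary pandas code; nothing in here is cuDF-specific."""
    cleaned = df[df["price"] > 0]  # drop invalid rows
    return cleaned.groupby("category")["price"].mean().sort_index()


# Hypothetical toy data, for illustration only.
sales = pd.DataFrame({
    "category": ["gpu", "cpu", "gpu", "cpu", "ram"],
    "price": [1600.0, 400.0, 1200.0, -1.0, 80.0],
})

result = mean_price_by_category(sales)
print("accelerated:", ACCELERATED)
print(result)
```

The same effect is available without touching the script at all, by launching it with `python -m cudf.pandas script.py` or by running `%load_ext cudf.pandas` at the top of a Jupyter notebook.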

Larger datasets and billions of rows of tabular text data are supported by the most recent version of RAPIDS cuDF. This makes it possible for data scientists to preprocess data for generative AI use cases using pandas code.

NVIDIA RTX-Powered AI Workstations and PCs Improve Data Science

A recent poll indicated that 57% of data scientists use PCs, desktops, or workstations locally.

Data scientists can see significant speedups starting with the NVIDIA GeForce RTX 4090 GPU. As datasets grow and processing becomes more memory-intensive, cuDF can deliver up to 100x better performance than conventional CPU-based solutions when running on NVIDIA RTX 6000 Ada Generation GPUs in workstations.

Image credit: NVIDIA

NVIDIA AI Workbench helps data scientists get started quickly with RAPIDS cuDF. This free, container-powered developer environment manager lets data scientists and developers create, collaborate on, and migrate AI and data science workloads across GPU systems. Several sample projects, such as the cuDF AI Workbench project, are available in the NVIDIA GitHub repository to help users get started.

Additionally, cuDF is pre-installed on HP AI Studio, a centralized data science platform designed to help AI professionals move their development environment smoothly from the desktop to the cloud. This lets them set up, develop, and collaborate across multiple environments.

Beyond improving performance, cuDF on RTX-powered AI workstations and PCs has further advantages. It also:

Offers fixed-cost local development on strong GPUs that replicates smoothly to on-premises servers or cloud instances, saving time and money.

Enables data scientists to explore, improve, and extract insights from datasets at interactive rates by enabling faster data processing for quicker iterations.

Provides more effective data processing later on in the pipeline for improved model results.

A New Data Science Era

As AI and data science continue to advance and enable breakthroughs across sectors, the ability to process and analyze large datasets quickly will become a critical differentiator. RAPIDS cuDF offers a platform for next-generation data processing, whether for building complex machine learning models, carrying out intricate statistical analysis, or exploring generative AI.

To build on this foundation, NVIDIA is adding support for the most widely used data frame tools, such as Polars, one of the fastest-growing Python libraries, which out of the box dramatically speeds up data processing compared with other CPU-only tools.

This month, Polars revealed the availability of the RAPIDS cuDF-powered Polars GPU Engine in open beta. Users of Polars may now increase the already blazingly fast dataframe library’s speed by up to 13 times.

Read more on govindhtech.com

#RAPIDScuDFBoosts#PythonPandas#RTXpowered#aipc#NVIDIARTX#applicationprogramminginterface#API#Python#generativeAI#GeForceRTX4090#news#cloud#NVIDIARTX6000#artificialintelligence#RAPIDScuDF#DataScienceEra#technology#technews#govindhtech

0 notes

Text

AI-Capable PCs Capture 14% of Global Q2 Shipments

Although AIPCs were introduced in the middle of Q2, they still captured 14% of global shipments, according to a report released Tuesday by research firm Canalys. https://tinyurl.com/24xwj9p7

0 notes

Text

Microsoft Introduces Copilot Key on Windows Keyboards for AI Conversations

Microsoft has announced a significant addition to upcoming Windows PCs: the Copilot key, designed to facilitate text conversations with the tech giant’s virtual assistant. This innovation marks one of the most prominent updates to Windows keyboards since the introduction of the Windows key in 1994, revolutionizing user interaction with the operating system.

The Role, Integration, and Accessibility

The Copilot feature in Windows harnesses the power of artificial intelligence models developed by OpenAI, a startup backed by Microsoft. Leveraging the capabilities of OpenAI’s ChatGPT chatbot, Copilot can generate human-like text responses based on minimal written input. Users can command Copilot to compose emails, address inquiries, create visuals, and even activate various PC functionalities. Additionally, subscribers to Copilot for Microsoft 365 in business settings can receive chat highlights from Teams and seek assistance in crafting Word documents.

Copilot first launched on Windows 11 and has since come to PCs running Windows 10, the world’s most widely used OS. To activate Copilot, users can hold down the Windows key and press the C key, summoning the virtual assistant instantly. Microsoft has now streamlined access further by introducing a dedicated Copilot key on keyboards.

Introducing a new Copilot key for Windows 11 PCs

Outlook for 2024

While the dominance of Windows has diminished, it still contributes significantly to Microsoft’s revenue, accounting for approximately 10%. Introducing innovations like Copilot aims to stimulate a surge in PC upgrades, benefiting companies like Dell and HP seeking to replace devices purchased during the COVID-19 pandemic. This aligns with the tech industry’s vision of AI-enabled PCs, emphasizing specialized chip components within devices to efficiently execute demanding computational models.

Yusuf Mehdi, Microsoft’s head of Windows and Surface, envisions 2024 as the “year of the AI PC.” He anticipates substantial advancements in Windows, PC enhancements, and chip-level improvements, setting the stage for the proliferation of AI-driven computing devices.

Implementation Details

Manufacturers will unveil Copilot-equipped PCs ahead of the CES conference in Las Vegas, with availability slated to commence later this month. Microsoft Surface PCs, among others, will prominently feature the new Copilot key, signaling a pivotal step in user interaction and AI integration within computing devices.

According to a Microsoft spokesperson, in some instances, the Copilot key may replace the Menu key or the Right Control key. Larger computers, however, will accommodate both the Copilot key and the right Control key, ensuring seamless integration without compromising existing functionalities.

Microsoft’s initiative to introduce the Copilot key signifies a deliberate stride towards enhancing user experiences and integrating AI capabilities within the realm of everyday computing, promising a transformative shift in how users interact with their Windows PCs.

Curious to learn more? Explore our articles on Enterprise Wired

0 notes

Text

🤍🖤🧋🎀

#my own stuff#mine ❤️#my details#moments#mobilephoto#studyblr#medical studyblr#my outfits#fashion#coffelover#coffeetime#my coffee#بانجو من غير بانجو#AIPC 2025

29 notes

Text

DELL US Home Page History on January 9, 2025!

youtube

#homepageexplorer#Dell#CES2025#DellPro14Premium#AIPCs#TechInnovation#LaptopRevolution#OLEDDisplay#IntelLunarLake#ProductRebranding#DellProMax#Youtube

0 notes

Text

Eleven Years Later

I am a regular contributor to The Printer, the online magazine of the Australian Photographic Society’s Print Group. This article first was published on pages 17-20 of that magazine’s December 2024 issue here. Back in 2013, a rather different version of that publication (but also known as The Printer) featured images by Print Group members in each issue. In the September issue of that year, I…

View On WordPress

#Australian Digital Photography Awards (ADPA)#Australian Interstate Photographic Competition (AIPC)#Canberra Photographic Society#Clouds#Dance Routes#Flora#Fuji Landscape Photographer of the Year#High Country#Michael Weston#Pelican#Photoart#photography#Rainmist#Rekha Tandon#Robyn Beeche#Robyn Swadling#Sunlight#Wollongong

0 notes

Text

[Hands-on with the real device!] A serious review of the Copilot+ PC, the PC that will change the world! Microsoft Surface Laptop [No punches pulled!]

#AIモデル解説#ainews#xelite#xplus#評価#copilotai#snapdragonx#解説動画#microsoftnews#windows11#性能#aipc#benchmarks#PC#コパイロット#win11#パソコン#コパイロットpc#cpu#生成AI#GPU#コパイロット使い方#ガジェット紹介#AI#copilotpc#リコール#パソコン初心者#chatgpt#Recall#ImageCreator

0 notes

Link

Get ready for a revolution in PC performance and AI capabilities. At Computex 2024, AMD unveiled its Zen 5 architecture, which powers the next generation of Ryzen processors. The lineup includes the all-new Ryzen 9000 series for desktop PCs and the 3rd generation Ryzen AI processors for ultrabooks.

A New Era of Desktop Processing: The Ryzen 9000 Series

AMD has taken the crown for the most advanced desktop processors with the Ryzen 9000 series. Built on the AM5 platform, these processors support PCIe 5.0 and DDR5. They also deliver a 16% improvement in instructions per clock (IPC) compared to their Zen 4 predecessors. Here's a closer look at the Ryzen 9000 family:

Flagship Performance: The Ryzen 9 9950X leads the lineup with 16 cores, 32 threads, and a clock speed of up to 5.7 GHz. It surpasses the competition in graphics bandwidth and AI acceleration, which translates to performance gains in creative applications like Blender (up to 56% faster) and higher frame rates in demanding games (up to 23% improvement).

Multiple Options: The Ryzen 9000 series also includes the Ryzen 9 9900X, Ryzen 7 9700X, and Ryzen 5 9600X processors, with core counts, thread counts, and clock speeds suited to gamers, content creators, and professionals alike.

Availability: The Ryzen 9000 series is slated for release in July 2024.

Ryzen AI 300: Unleashing On-Device AI Power for Next-Gen Laptops

Designed for ultrabooks, the Ryzen AI 300 series processors integrate a dedicated Neural Processing Unit (NPU) capable of delivering 50 trillion operations per second (TOPS). This enables on-device AI experiences, including:

Real-time translation: Break down language barriers with real-time translation powered by the NPU.

Live captioning: Follow along during meetings or lectures with live captions.

Co-creation: Use AI-assisted tools that enhance your creative workflow.

The Ryzen AI 300 series comes in two variants:

Ryzen AI 9 HX 370: This flagship model offers the full power of the NPU with 50 TOPS and 16 compute units, ideal for demanding AI workloads.

Ryzen AI 9 365: This processor delivers 40 TOPS of AI performance with 10 CPU cores, catering to a wide range of AI applications.

The Ryzen AI 300 series will appear in upcoming Copilot+ PCs and AI+ PCs starting July 2024.

Frequently Asked Questions

Q: When will the Ryzen 9000 series and Ryzen AI 300 processors be available?
A: Both processor lines are expected to hit the market in July 2024.

Q: What are the key benefits of the Ryzen 9000 series?
A: A 16% IPC improvement over Zen 4 processors, support for PCIe 5.0 and DDR5, and a wide range of processor options for various needs and budgets.

Q: What kind of AI experiences can I expect with the Ryzen AI 300 series?
A: On-device AI capabilities including real-time language translation, live captioning for videos and meetings, and AI-powered co-creation tools.

Q: Which laptops will feature the Ryzen AI 300 processors?
A: Look for the Ryzen AI 300 series in upcoming Copilot+ PCs and AI+ PCs from various manufacturers.

#AIPCs#AItasks#AM5platform#amdradeongraphics#AMDRyzenAI9HX370#amdzen5#Computex2024#CPU#DDR5#desktopprocessors#gamingPCs.#GPU#NeuralProcessingUnit#PCIe5.0#RDNA3.5architecture#Ryzen9000series#RyzenAI300series#TOPS#ultrabooks

0 notes

Text

Friday Night Funkin: AIPC season 6

New things explained

Sierra belongs to sieraluvv on Instagram

Sunny Honey and Zoey are half sisters in my AU

Two belong to @luminarystarfall

There’s gonna be a FNF AIPC x Nicktoon all star brawl after hours crossover event soon

#friday night funkin#fnf mod#fnf headcanons#fnf au#friday night funkin : adventures in parodies city#nicktoons unite#nickelodeon all star brawl

11 notes

Text

AMD Instinct MI300X GPU Accelerators With Meta’s Llama 3.2

AMD applauds Meta for their most recent Llama 3.2 release. Llama 3.2 is intended to increase developer productivity by assisting them in creating the experiences of the future and reducing development time, while placing a stronger emphasis on data protection and ethical AI innovation. The focus on flexibility and openness has resulted in a tenfold increase in Llama model downloads this year over last, positioning it as a top option for developers looking for effective, user-friendly AI solutions.

Llama 3.2 and AMD Instinct MI300X GPU Accelerators

AMD Instinct MI300X accelerators are changing the world of multimodal AI models. One example is Llama 3.2, which includes 11B and 90B parameter models. To analyze text and visual data, these models need a tremendous amount of processing power and memory capacity.

AMD and Meta have a long-standing cooperative relationship. AMD is still working to improve AI performance for Meta models, including Llama 3.2, on all AMD platforms. AMD's partnership with Meta allows Llama 3.2 developers to create novel, highly performant, and power-efficient agentic apps and tailored AI experiences on AI PCs and from the cloud to the edge.

AMD Instinct accelerators offer unrivaled memory capability, as earlier demonstrations around the launch of Llama 3.1 showed. A single server with 8 MI300X GPUs can fit the largest open-source model currently available, with 405B parameters in the FP16 datatype, something that no other 8x GPU platform can accomplish. With the release of Llama 3.2, AMD Instinct MI300X GPUs can now support both the latest and next iterations of these multimodal models with exceptional memory efficiency.
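The memory claim above can be sanity-checked with simple arithmetic. The sketch below counts the model weights only (no KV cache, activations, or framework overhead) and assumes 192 GB of HBM per MI300X, a figure taken from AMD's published specifications rather than from this article. The 80 GB comparison value is a hypothetical smaller capacity included purely for contrast.

```python
# Back-of-the-envelope check: do FP16 weights for a 405B-parameter model
# fit in the combined GPU memory of one 8-GPU server?
PARAMS = 405_000_000_000     # 405B parameters (from the text)
BYTES_PER_PARAM_FP16 = 2     # FP16 stores each parameter in 2 bytes
NUM_GPUS = 8                 # GPUs per server (from the text)
MI300X_HBM_GB = 192          # assumption: per-GPU memory, not stated in the text
COMPARISON_HBM_GB = 80       # hypothetical smaller per-GPU memory, for contrast


def weights_gb(params: int, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def fits(required_gb: float, per_gpu_gb: int, num_gpus: int) -> bool:
    """True if the server's aggregate GPU memory covers the requirement."""
    return required_gb <= per_gpu_gb * num_gpus


required = weights_gb(PARAMS, BYTES_PER_PARAM_FP16)
headroom = NUM_GPUS * MI300X_HBM_GB - required

print(f"FP16 weights: {required:.0f} GB")
print(f"8 x {MI300X_HBM_GB} GB server fits: {fits(required, MI300X_HBM_GB, NUM_GPUS)}")
print(f"8 x {COMPARISON_HBM_GB} GB server fits: {fits(required, COMPARISON_HBM_GB, NUM_GPUS)}")
print(f"Headroom on the larger server: {headroom:.0f} GB")
```

Under these assumptions the weights alone need about 810 GB, so the remaining memory on the larger server is what is left for the KV cache and activations during inference.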

By lowering the complexity of distributing memory across multiple devices, this industry-leading memory capacity makes infrastructure management easier. It also allows for quick training, real-time inference, and the smooth handling of large datasets across modalities, such as text and images, without compromising performance or adding network overhead from distributing across multiple servers.

With the powerful memory capabilities of the AMD Instinct MI300X platform, this may result in considerable cost savings, improved performance efficiency, and simpler operations for enterprises.

Throughout crucial phases of the development of Llama 3.2, Meta has also made use of AMD ROCm software and AMD Instinct MI300X accelerators, enhancing their long-standing partnership with AMD and their dedication to an open software approach to AI. AMD’s scalable infrastructure offers open-model flexibility and performance to match closed models, allowing developers to create powerful visual reasoning and understanding applications.

With the release of the Llama 3.2 generation of models, developers now have Day-0 support for the newest frontier models from Meta on the most recent generation of AMD Instinct MI300X GPUs. This gives developers access to a wider selection of GPU hardware and an open software stack, ROCm, for future application development.

AMD EPYC CPUs and Llama 3.2

Nowadays, many AI tasks run on CPUs, either alone or in conjunction with GPUs. AMD EPYC processors provide the power and efficiency needed to run the cutting-edge models created by Meta, such as the recently released Llama 3.2. Although most recent attention has gone to LLM (large language model) breakthroughs with massive data sets, the rise of SLMs (small language models) is noteworthy.

These smaller models need far fewer processing resources, help reduce risks related to the security and privacy of sensitive data, and can be customized and tailored to particular company datasets. Because they are designed to be nimble, efficient, and performant, these models are well sized for a variety of corporate and sector-specific applications.

The Llama 3.2 release includes new capabilities, namely multimodal models and smaller model options, that are representative of many mass-market enterprise deployment scenarios, particularly for customers investigating CPU-based AI solutions.

When consolidating their data center infrastructure, businesses can run the Llama 3.2 models on leading AMD EPYC processors to achieve compelling performance and efficiency. As needed, AMD EPYC CPUs can be combined with AMD Instinct GPUs to support CPU- or GPU-based deployments of larger AI models.

Llama 3.2 on AMD AI PCs with Ryzen and Radeon

For customers who choose to run Llama 3.2 locally on their own PCs, AMD and Meta have collaborated extensively to optimize the most recent versions for AMD Ryzen AI PCs and AMD Radeon graphics cards. Llama 3.2 can also run locally through DirectML AI frameworks optimized for AMD, on AMD AI PCs with AMD GPUs that support DirectML. Through AMD partner LM Studio, Windows users will soon be able to enjoy multimodal Llama 3.2 in an approachable package.

The newest AMD Radeon graphics cards, the AMD Radeon PRO W7900 Series with up to 48GB and the AMD Radeon RX 7900 Series with up to 24GB, include up to 192 AI accelerators. They can run state-of-the-art models such as Llama 3.2-11B Vision. Using the same AMD ROCm 6.2 optimized architecture that came out of the collaboration between AMD and Meta, customers can test the newest models today on PCs with these cards installed.

AMD and Meta: Progress via Partnership

To sum up, AMD is working with Meta to advance generative AI research and to make sure developers have everything they need to handle every new release smoothly, including Day-0 support across AMD's entire AI portfolio. Llama 3.2’s integration with AMD Ryzen AI, AMD Radeon GPUs, AMD EPYC CPUs, AMD Instinct MI300X GPUs, and AMD ROCm software offers customers a wide range of solution options to power their innovations across cloud, edge, and AI PCs.

Read more on govindhtech.com

#AMDInstinctMI300X#GPUAccelerators#AIsolutions#MetaLlama32#AImodels#aipc#AMDEPYCCPU#smalllanguagemodels#AMDEPYCprocessors#AMDInstinctMI300XGPU#AMDRyzenAI#LMStudio#graphicscards#AMDRadeongpu#amd#gpu#mi300x#technology#technews#govindhtech

0 notes

Text

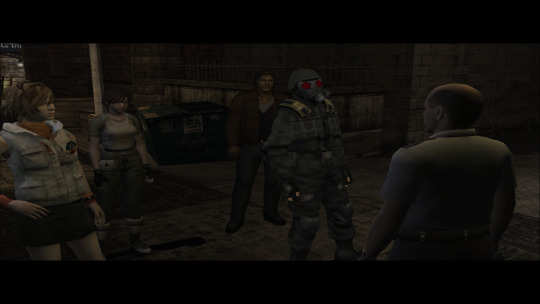

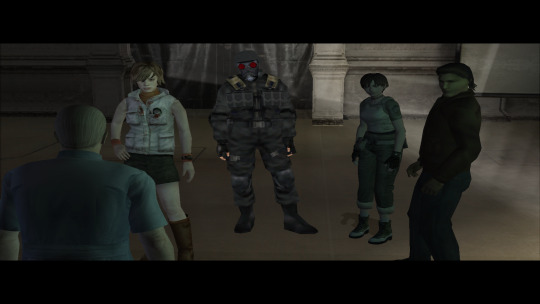

Now I'm back... back again, with my RE Outbreak junk, this is the Japanese version of the game, online is actually pretty fun with this game, though I'd have to say I prefer File 1's scenarios over File 2's, save Hellfire, though Decisions Decisions does feel more conclusive than EOTR. Though I had to mod over the cast along with their costumes, cause there isn't a code to change AIPCs into the NPCs you can get. I do have James, Mary, Maria, and Henry also modded in, but had to make some adjustments with them, plus did become a little glitchy afterwards, with Mary I noticed... but what can you do?

Yeah, Silent Hill characters can't catch a break now can they?

#silent hill#resident evil#resident evil outbreak#modding#crossover#harry mason#heather mason#rebecca chambers#hunk resident evil#just for fun

21 notes
