#Biomedical data analysis

Explore tagged Tumblr posts

Text

Job - Alert 📢

🌟 Kickstart Your Career with Us! 🌟

The Leibniz-Institut für Analytische Wissenschaften – ISAS – e.V. is inviting applications for a Master's Intern (m/f/d) in Machine Learning and Bioimage Analysis in our Dortmund team!

📅 Application Deadline: March 31, 2025

💼 Opportunity to work on cutting-edge bioimage analysis projects!

If you're enrolled in a Master's program in computer science, statistics, or related fields, and have a passion for data analysis, we want to hear from you!

📲 Apply through our applicant portal or reach out with informal inquiries (Ref: 344_2025) at

https://www.academiceurope.com/job/?id=7080

Join us in advancing precision medicine! 🙌

#jobs#hiring#science#jobseekers#master study#internship#computer science#biomedical science#mathematics#statistics#data analysis

0 notes

Text

"Revolutionizing Biotech: How AI is Transforming the Industry"

The biotech industry is on the cusp of a revolution, and Artificial Intelligence (AI) is leading the charge. AI is transforming the way biotech researchers and developers work, enabling them to make groundbreaking discoveries and develop innovative solutions at an unprecedented pace. “Accelerating Scientific Discovery with AI” AI is augmenting human capabilities in biotech research, enabling…

#Agricultural Biotech#agriculture#ai#Artificial Intelligence#bioinformatics#Biological Systems#Biomedical Engineering.#Biotech#Data Analysis#Drug Discovery#Environment#genetics#genomics#healthcare#innovation#Machine Learning#personalized medicine#Precision Medicine#Research#science#synthetic biology#technology

0 notes

Text

people who work/study in quantitative bio-adjacent fields, rise up. computational neuroscience where you get to see someone's thoughts and feelings in graph form??? so cool. biophysics where you can pass blood plasma through an electric field to determine whether a patient has cancer or not?? unbelievable. biomedical engineering where you can literally build a device to pump someone's heart and be the difference between their life and death??? oh my god. disease modelling, being able to predict AND prevent communities being affected by disease on a large scale through your analysis of data??? i love science

#biomedical engineering#bme#biophysics#healthcare#science academic#chemblr#bioblr#physics#physblr#neuroscience#brains#cell bio#research#sciblr#studyblr

229 notes

Text

Almost Human (TV series, 2013-2014) and Detroit: Become Human (video game, 2018) similarities. PART 2

Meeting the Creator. The founder of the robotics corporation, the most gifted roboticist of his generation. (What is he up to?)

"The Luger test" and "the Kamski test." Tests were created to identify defective androids.

Android children.

Dorian's and Markus' heterochromia (Dorian is malfunctioning here. His eyes are actually blue).

An android wakes another android to give it a better life.

Androids question the meaning of life and are afraid of death.

Androids shut down after being emotionally overwhelmed by stressful situations.

Androids seem to have emotional connections.

Android abilities: facial recognition, biomedical scanner, chemical analysis, data tracking, the ability to speed read data, the ability to read other androids' memory, the ability to speak in different languages, singing, parkour ability, combat, wireless communication, voice mimicry, skin regeneration...

Android LED.

Interfacing.

Android hands.

Skinless androids.

Blue blood. Androids are blue-blooded.

Giraffes (giraffe became a symbol of the Almost Human fandom).

+Extra fun facts:

Almost Human was filmed in Canada. The show uses exterior shots of the city of Vancouver. The events of Detroit: Become Human are connected to Canada too.

The 2036 Detroit Olympics were mentioned in one of the episodes of Almost Human.

[PART 1]

#almost human#dbh#detroit become human#bad robot#quantic dream#j.h. wyman#j.j. abrams#david cage#tv shows#games#sci-fi#robots#androids#synthetics#john kennex#dorian#mx-43#richard paul#valerie stahl#dbh kamski#dbh connor#dbh markus#dbh kara#dbh north#mijchi

56 notes

Text

Also preserved on our archive

New research has found that 33.6% of surveyed healthcare workers in England report symptoms consistent with post-COVID syndrome.

New research from the Institute of Psychiatry, Psychology & Neuroscience (IoPPN) at King's College London, and University College London has found that 33.6% of surveyed healthcare workers in England report symptoms consistent with post-COVID syndrome (PCS), more commonly known as Long COVID. Yet only 7.4% of respondents reported that they have received a formal diagnosis.

The research is part of the wider long-term NHS CHECK study that is tracking the mental and physical health of NHS staff throughout and beyond the COVID-19 pandemic. Other research by NHS CHECK has included healthcare workers’ experiences of support services, prevalence of mental health problems, moral injury, and suicidal thoughts.

The study used the NICE definition of Long COVID, which includes symptoms such as fatigue, cognitive difficulties, and anxiety lasting 12 weeks or more after a COVID-19 infection.

Four and a half years after it was first described, there is still a lot to learn about Long COVID. This study sought to explore how common Long COVID is among healthcare workers and whether certain people are more likely to develop it than others.

“PCS can have a dramatic impact on a person’s day to day life. If we are to ensure that the healthcare workers, and wider population, affected by it receive the best possible care and support, we need to address both the physiological and psychosocial mechanisms behind it.”

-Dr Sharon Stevelink, Reader in Epidemiology and one of the study’s authors from King's IoPPN

The research was led by Dr Danielle Lamb, Senior Research Fellow at University College London’s Institute of Epidemiology & Health Care, who said “COVID-19 has not gone away. We know that more infections mean more people are at risk of developing Long COVID. This research shows that we should be particularly concerned about the impacts of this on the health and social care sector, especially in older and female workers, and staff with pre-existing physical and mental health conditions. We now need to better understand the complex interplay between biomedical, psychological, and social factors that affect people's experiences of Long COVID, and how healthcare workers with this condition can best be supported.”

The study team collaborated with a Patient and Public Involvement and Engagement (PPIE) panel of 16 healthcare workers with Long COVID. The panel helped design the research by developing the study questions, shaping the analysis, and interpreting the results.

The study’s Co-Lead, Dr Brendan Dempsey, Research Fellow at University College London, said “Collaborating with the healthcare workers who formed our PPIE group has been really important in making sure that we are conducting research that is relevant to them. They also helped interpret our results, sharing their own experiences of living with Long COVID and working in the NHS.”

Data were gathered from over 5,000 healthcare workers across three surveys spanning 32 months. The research found that potential risk factors for Long COVID included being female, being between 51 and 60 years of age, working directly with COVID-19 patients, having pre-existing respiratory conditions, and having existing mental health issues.

The lack of formal diagnosis, despite the widespread prevalence of symptoms, raises concerns that healthcare professionals with Long COVID symptoms are not seeking care or are not being diagnosed. The research team calls for urgent improvements in diagnostic practices and access to support for those living with Long COVID in the healthcare sector.

The research was funded by The Colt Foundation and supported by the National Institute for Health Research (NIHR) Applied Research Collaboration North Thames. It was a collaboration between University College London, King’s College London, and 18 participating NHS Trusts.

Study Link: oem.bmj.com/content/early/2024/10/01/oemed-2024-109621.info

#long covid#mask up#covid#pandemic#covid 19#wear a mask#coronavirus#public health#sars cov 2#still coviding#wear a respirator

25 notes

Text

Low gravity in space travel found to weaken and disrupt normal rhythm in heart muscle cells

Johns Hopkins Medicine scientists who arranged for 48 human bioengineered heart tissue samples to spend 30 days at the International Space Station report evidence that the low gravity conditions in space weakened the tissues and disrupted their normal rhythmic beats when compared to Earth-bound samples from the same source.

The scientists said the heart tissues "really don't fare well in space," and over time, the tissues aboard the space station beat about half as strongly as tissues from the same source kept on Earth.

The findings, they say, expand scientists' knowledge of low gravity's potential effects on astronauts' survival and health during long space missions, and the tissues may serve as models for studying heart muscle aging and therapeutics on Earth.

A report of the scientists' analysis of the tissues is published in the Proceedings of the National Academy of Sciences.

Previous studies showed that some astronauts return to Earth from outer space with age-related conditions, including reduced heart muscle function and arrhythmias (irregular heartbeats), and that some, but not all, of these effects dissipate over time after their return.

But scientists have sought ways to study such effects at a cellular and molecular level in a bid to find ways to keep astronauts safe during long spaceflights, says Deok-Ho Kim, Ph.D., a professor of biomedical engineering and medicine at the Johns Hopkins University School of Medicine. Kim led the project to send heart tissue to the space station.

To create the cardiac payload, scientist Jonathan Tsui, Ph.D. coaxed human induced pluripotent stem cells (iPSCs) to develop into heart muscle cells (cardiomyocytes). Tsui, who was a Ph.D. student in Kim's lab at the University of Washington, accompanied Kim as a postdoctoral fellow when Kim moved to Johns Hopkins University in 2019. They continued the space biology research at Johns Hopkins.

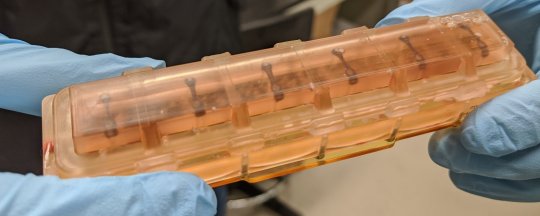

Tsui then placed the tissues in a bioengineered, miniaturized tissue chip that strings the tissues between two posts to collect data about how the tissues beat (contract). The cells' 3D housing was designed to mimic the environment of an adult human heart in a chamber half the size of a cell phone.

To get the tissues aboard the SpaceX CRS-20 mission, which launched in March 2020 bound for the space station, Tsui says he had to hand-carry the tissue chambers on a plane to Florida, and continue caring for the tissues for a month at the Kennedy Space Center. Tsui is now a scientist at Tenaya Therapeutics, a company focused on heart disease prevention and treatment.

Once the tissues were on the space station, the scientists received real-time data for 10 seconds every 30 minutes about the cells' strength of contraction, known as twitch forces, and on any irregular beating patterns. Astronaut Jessica Meir, Ph.D., M.S. changed the liquid nutrients surrounding the tissues once each week and preserved tissues at specific intervals for later gene readout and imaging analyses.

The research team kept a set of cardiac tissues developed the same way on Earth, housed in the same type of chamber, for comparison with the tissues in space.

When the tissue chambers returned to Earth, Tsui continued to maintain and collect data from the tissues.

"An incredible amount of cutting-edge technology in the areas of stem cell and tissue engineering, biosensors and bioelectronics, and microfabrication went into ensuring the viability of these tissues in space," says Kim, whose team developed the tissue chip for this project and subsequent ones.

Devin Mair, Ph.D., a former Ph.D. student in Kim's lab and now a postdoctoral fellow at Johns Hopkins, then analyzed the tissues' ability to contract.

In addition to losing strength, the heart muscle tissues in space developed irregular beating (arrhythmias)—disruptions that can cause a human heart to fail. Normally, the time between one beat of cardiac tissue and the next is about a second. This measure, in the tissues aboard the space station, grew to be nearly five times longer than those on Earth, although the time between beats returned nearly to normal when the tissues returned to Earth.
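To make the beat-interval comparison concrete, here is a minimal sketch, assuming beat times (in seconds) have already been detected in a twitch-force recording. The function names and the made-up beat times are hypothetical, chosen only to illustrate a roughly fivefold lengthening; this is not the study's analysis code.

```python
from statistics import mean


def inter_beat_intervals(beat_times):
    """Return the time (s) between each pair of consecutive beats."""
    # Pair each beat with the next one and take the difference.
    return [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]


def interval_ratio(test_beats, control_beats):
    """Ratio of the mean inter-beat interval of a test sample to a control sample."""
    return mean(inter_beat_intervals(test_beats)) / mean(inter_beat_intervals(control_beats))


# Hypothetical beat times: a control tissue beating about once per second,
# and a test tissue whose beats are spaced about five times further apart.
control_beats = [0.0, 1.0, 2.0, 3.0, 4.0]
slowed_beats = [0.0, 5.0, 10.0, 15.0, 20.0]

print(inter_beat_intervals(control_beats))          # [1.0, 1.0, 1.0, 1.0]
print(interval_ratio(slowed_beats, control_beats))  # 5.0
```

Comparing mean intervals is the simplest choice; irregularity itself could also be summarized by the spread of the intervals, for example their standard deviation.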

The scientists also found, in the tissues that went to space, that sarcomeres—the protein bundles in muscle cells that help them contract—became shorter and more disordered, a hallmark of human heart disease.

In addition, energy-producing mitochondria in the space-bound cells grew larger, rounder and lost the characteristic folds that help the cells use and produce energy.

Finally, Mair; Eun Hyun Ahn, Ph.D., an assistant research professor of biomedical engineering; and Zhipeng Dong, a Johns Hopkins Ph.D. student, studied the gene readout in the tissues housed in space and on Earth. The tissues at the space station showed increased expression of genes involved in inflammation and oxidative damage, also hallmarks of heart disease.

"Many of these markers of oxidative damage and inflammation are consistently demonstrated in post-flight checks of astronauts," says Mair.

Kim's lab sent a second batch of 3D engineered heart tissues to the space station in 2023 to screen for drugs that may protect the cells from the effects of low gravity. This study is ongoing, and according to the scientists, these same drugs may help people maintain heart function as they get older.

The scientists are continuing to improve their "tissue on a chip" system and are studying the effects of radiation on heart tissues at the NASA Space Radiation Laboratory. The space station is in low Earth orbit, where the planet's magnetic field shields occupants from most of the effects of space radiation.

IMAGE: Heart tissues within one of the launch-ready chambers. Credit: Jonathan Tsui

5 notes

Text

The Role of Photon Insights in Helping Academic Research

In recent years, the integration of Artificial Intelligence (AI) into academic research has gained significant momentum, offering transformative opportunities across many fields. One area where AI has had a significant impact is photonics, the science of generating, manipulating, and detecting photons, with applications in medicine, telecommunications, and materials science. AI has also shown its ability to enhance data analysis, encourage collaboration, and propel the development of new technologies.

Understanding the Landscape of Photonics

Photonics covers a broad range of technologies, from fibre optics and lasers to sensors and imaging systems. As research in this field grows more complex, sophisticated analytical tools become essential. Traditional methods of data processing and interpretation can be slow and inefficient, often holding back the pace of discovery. This is where AI is emerging as a game changer, with robust solutions that improve research processes and reveal new knowledge.

Researchers can, for instance, use deep learning methods to enhance image processing in applications such as biomedical imaging. AI-driven algorithms can improve image resolution, reduce noise, and even automate feature extraction, which can lead to more precise diagnoses. By automating this process, experts can concentrate on interpreting results instead of getting caught up in managing data.

Accelerating Material Discovery

Research in photonics often involves investigating new materials, such as photonic crystals or metamaterials, that can drastically alter the propagation of light. Conventional methods of materials discovery are time-consuming and laborious, often requiring extensive experiments and testing. AI can speed up the process through predictive models and simulations.

Facilitating Collaboration

At a time when interdisciplinary collaboration is vital, AI tools are bridging the gap between researchers from various disciplines. Photonics research typically intersects with fields like engineering, computer science, and biology. AI-powered platforms aid this collaboration by providing central databases and shared information, making it easier for researchers to access relevant data and tools.

Cloud-based AI solutions can provide shared datasets, allowing researchers to collaborate without geographic limitations. Collaboration is essential in photonics, where combining diverse expertise can result in revolutionary advances in technology and its applications.

Automating Experimental Procedures

Automation is another area in which AI is becoming a major factor in academic photonics research. Automated labs equipped with AI-driven technology can carry out experiments without direct human involvement. These systems can adjust parameters continuously based on feedback, tuning experimental conditions to produce the highest-quality outcomes.

Furthermore, robotic systems integrated with AI can perform routine tasks such as sample preparation and measurement. This is not only more efficient but also reduces human error, which leads to more accurate results. Through automation, researchers can devote more time to analysis and development, which speeds up the overall research process.
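The feedback-driven parameter adjustment described in this section can be illustrated with a minimal closed-loop sketch: a hill-climbing controller repeatedly changes one experimental setting and keeps any change that improves a measured score. The `run_experiment` function is a hypothetical stand-in for a real instrument, and hill climbing is just one simple optimizer chosen for illustration; automated labs often use more sample-efficient methods such as Bayesian optimization.

```python
def run_experiment(power_mw):
    """Hypothetical stand-in for an instrument reading: returns a quality score.

    Here the score simply peaks at 42 mW; a real lab would measure it.
    """
    return -(power_mw - 42.0) ** 2


def hill_climb(measure, start, step=8.0, min_step=0.01, max_iters=1000):
    """Tune one parameter using feedback from each measurement.

    Try a step in each direction and move if the score improves;
    otherwise halve the step. Stop once the step is very small.
    """
    best_x, best_score = start, measure(start)
    for _ in range(max_iters):
        if step < min_step:
            break
        improved = False
        for candidate in (best_x + step, best_x - step):
            score = measure(candidate)
            if score > best_score:  # feedback: keep only improvements
                best_x, best_score = candidate, score
                improved = True
                break
        if not improved:
            step /= 2  # refine the search around the current best setting
    return best_x, best_score


setting, score = hill_climb(run_experiment, start=10.0)
print(setting)  # 42.0
```

Each call to `measure` corresponds to one experiment, so a real system would also cap the number of runs; `max_iters` plays a rough version of that role here.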

Predictive Analytics for Research Trends

The predictive capabilities of AI are valuable for analyzing and forecasting research trends in photonics. By studying the existing literature and research outputs, AI algorithms can pinpoint emerging themes and areas of research. This insight can help researchers prioritize their work and identify emerging trends that are likely to be highly impactful.

For organizations and funding bodies, these insights are essential for resource allocation and strategic planning. By understanding where research is heading, they can support projects that align with future needs, ultimately leading to improvements that benefit society as a whole.

Ethical Considerations and Challenges

While the advantages of AI in speeding up photonics research are evident, ethical considerations must also be taken into account. Issues such as data privacy, algorithmic bias, and the potential misuse of AI technology warrant careful consideration. Institutions and researchers must adopt responsible AI practices to ensure that the applications they use enhance human decision-making rather than replace it.

In addition, incorporating AI into academic research calls for a level of digital literacy that not every researcher has attained. Investing in education and training in AI methods and tools is therefore vital to realizing its full potential.

Conclusion

The significance of AI in accelerating university research, especially in photonics, is extensive and multifaceted. By improving data analysis, speeding up materials discovery, encouraging collaboration, automating experimental procedures, and providing predictive insights, AI is reshaping the research landscape. As photonics continues to grow, the integration of AI technologies is likely to be a key factor in fostering innovation and expanding our knowledge of light-based applications.

By embracing these developments, scientists can open up new possibilities for research that may ultimately lead to significant scientific and technological advances. As we move forward on this new frontier, collaboration between AI and academic researchers will prove essential to address the challenges and opportunities ahead. The synergy between AI and photonics will not only speed up discovery in photonics but also has the potential to change how we understand and interact with the world around us.

2 notes

Text

Impact of Digital Signal Processing in Electrical Engineering - Arya College

Arya College of Engineering & I.T. is the best college in Jaipur. Digital Signal Processing (DSP) has become a cornerstone of modern electrical engineering, influencing a wide range of applications and driving significant technological advancements. This comprehensive overview explores the impact of DSP in electrical engineering, highlighting its applications, benefits, and emerging trends.

Understanding Digital Signal Processing

Definition and Fundamentals

Digital Signal Processing involves the manipulation of signals that have been converted into a digital format. This process typically includes sampling, quantization, and various mathematical operations to analyze and modify the signals. The primary goal of DSP is to enhance the quality and functionality of signals, making them more suitable for various applications.
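Sampling and quantization can be sketched in a few lines of Python. This is a minimal illustration, assuming an ideal sampler and a uniform quantizer over a fixed input range; the function names, the 100 Hz sample rate, and the 3-bit depth are arbitrary choices for the example, not properties of any particular ADC.

```python
import math


def sample(signal, sample_rate_hz, num_samples):
    """Evaluate a continuous-time signal at evenly spaced instants t = k / fs."""
    return [signal(k / sample_rate_hz) for k in range(num_samples)]


def quantize(x, bits, full_scale=1.0):
    """Map x in [-full_scale, full_scale] to an integer code in 0 .. 2**bits - 1.

    Values outside the range are clipped, mimicking ADC saturation.
    """
    levels = 2 ** bits
    step = 2 * full_scale / (levels - 1)          # spacing between adjacent levels
    clipped = max(-full_scale, min(full_scale, x))
    return round((clipped + full_scale) / step)   # index of the nearest level


def reconstruct(code, bits, full_scale=1.0):
    """Convert an integer code back to the amplitude level it represents."""
    step = 2 * full_scale / (2 ** bits - 1)
    return code * step - full_scale


def tone(t):
    """A 5 Hz sine wave standing in for an analog input."""
    return math.sin(2 * math.pi * 5 * t)


samples = sample(tone, sample_rate_hz=100, num_samples=20)
codes = [quantize(s, bits=3) for s in samples]
errors = [abs(s - reconstruct(c, bits=3)) for s, c in zip(samples, codes)]
print(codes)
print(max(errors))  # never more than half a step (1/7 for 3 bits over [-1, 1])
```

The gap between each sample and its reconstructed level is the quantization error; adding bits shrinks the step and therefore the error.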

Key components of DSP include:

Analog-to-Digital Conversion (ADC): This process converts analog signals into digital form, allowing for digital manipulation.

Digital Filters: These algorithms are used to enhance or suppress certain aspects of a signal, such as noise reduction or frequency shaping.

Fourier Transform: A mathematical technique that transforms signals from the time domain to the frequency domain, enabling frequency analysis.
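The filter and Fourier-transform components above can be illustrated together. The sketch below assumes a simple moving-average (FIR) low-pass filter and a naive O(N²) discrete Fourier transform rather than an optimized FFT; the test signal (a 4 Hz tone plus a weaker 20 Hz component standing in for noise) and all names are illustrative.

```python
import cmath
import math


def moving_average(x, taps):
    """FIR low-pass filter: each output is the mean of the last `taps` inputs."""
    out = []
    for n in range(len(x)):
        window = x[max(0, n - taps + 1): n + 1]  # shorter window at the start
        out.append(sum(window) / len(window))
    return out


def dft(x):
    """Naive discrete Fourier transform: X[k] = sum_n x[n] * exp(-2j*pi*k*n/N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
            for k in range(N)]


def dominant_frequency(x, sample_rate_hz):
    """Frequency (Hz) of the largest positive-frequency bin, ignoring DC."""
    spectrum = dft(x)
    half = len(x) // 2
    k = max(range(1, half), key=lambda i: abs(spectrum[i]))
    return k * sample_rate_hz / len(x)


fs = 64  # samples per second; 64 samples give 1 Hz bins
signal = [math.sin(2 * math.pi * 4 * n / fs) + 0.3 * math.sin(2 * math.pi * 20 * n / fs)
          for n in range(fs)]
smoothed = moving_average(signal, taps=4)

print(dominant_frequency(signal, fs))    # 4.0
print(round(abs(dft(signal)[20]), 1))    # 9.6 (20 Hz component before filtering)
print(round(abs(dft(smoothed)[20]), 1))  # much smaller after filtering
```

The moving average passes the slow 4 Hz tone almost unchanged while strongly attenuating the 20 Hz component, which the DFT makes visible as a drop in that bin's magnitude.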

Importance of DSP in Electrical Engineering

DSP has revolutionized the way engineers approach signal processing, offering numerous advantages over traditional analog methods:

Precision and Accuracy: Digital systems can achieve higher precision and reduce errors through error detection and correction algorithms.

Flexibility: DSP systems can be easily reprogrammed or updated to accommodate new requirements or improvements, making them adaptable to changing technologies.

Complex Processing Capabilities: Digital processors can perform complex mathematical operations that are difficult to achieve with analog systems, enabling advanced applications such as real-time image processing and speech recognition.

Applications of Digital Signal Processing

The versatility of DSP has led to its adoption across various fields within electrical engineering and beyond:

1. Audio and Speech Processing

DSP is extensively used in audio applications, including:

Audio Compression: Techniques like MP3 and AAC reduce file sizes while preserving sound quality, making audio files easier to store and transmit.

Speech Recognition: DSP algorithms are crucial for converting spoken language into text, enabling voice-activated assistants and transcription services.

2. Image and Video Processing

In the realm of visual media, DSP techniques enhance the quality and efficiency of image and video data:

Digital Image Processing: Applications include noise reduction, image enhancement, and feature extraction, which are essential for fields such as medical imaging and remote sensing.

Video Compression: Standards like H.264 and HEVC enable efficient storage and streaming of high-definition video content.
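As one concrete example of image noise reduction, the sketch below applies a median filter, a classic nonlinear filter that is effective against isolated "salt" pixels. It is only one of many denoising techniques; the grayscale image is represented here as a plain list of rows, and the tiny test image is made up for illustration.

```python
from statistics import median


def median_filter(image, radius=1):
    """Replace each pixel with the median of its (2r+1) x (2r+1) neighborhood.

    Border pixels use whatever neighbors exist, so their windows are smaller.
    """
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [image[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(median(window))
        out.append(row)
    return out


# A flat gray 5x5 image with one bright impulse-noise pixel in the middle.
img = [[10] * 5 for _ in range(5)]
img[2][2] = 255
clean = median_filter(img)
print(clean[2][2])  # 10
```

Unlike averaging, the median ignores a single outlier entirely, so edges are preserved better while impulse noise disappears.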

3. Telecommunications

DSP plays a vital role in modern communication systems:

Modulation and Demodulation: DSP techniques are used in encoding and decoding signals for transmission over various media, including wireless and optical networks.

Error Correction: Algorithms such as Reed-Solomon and Turbo codes enhance data integrity during transmission, ensuring reliable communication.
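Reed-Solomon and Turbo codes are too involved for a short example, but the shared idea of adding redundancy so a receiver can repair errors can be shown with the much simpler Hamming(7,4) code, swapped in here purely for illustration. The sketch encodes 4 data bits into a 7-bit codeword and corrects any single flipped bit.

```python
def hamming74_encode(data):
    """Encode 4 data bits as 7 bits (positions 1..7 = p1 p2 d1 p4 d2 d3 d4)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(code):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = [0] + list(code)  # pad so c[1]..c[7] match the 1-based positions
    s1 = c[1] ^ c[3] ^ c[5] ^ c[7]
    s2 = c[2] ^ c[3] ^ c[6] ^ c[7]
    s4 = c[4] ^ c[5] ^ c[6] ^ c[7]
    error_pos = s1 + 2 * s2 + 4 * s4  # syndrome: 0 means no error detected
    if error_pos:
        c[error_pos] ^= 1  # flip the bit the syndrome points to
    return [c[3], c[5], c[6], c[7]]


message = [1, 0, 1, 1]
sent = hamming74_encode(message)
received = sent.copy()
received[4] ^= 1  # simulate noise flipping one bit in transit
print(hamming74_decode(received))  # [1, 0, 1, 1]
```

The three parity checks overlap so that each single-bit error produces a unique syndrome, which directly names the position to fix; production codes such as Reed-Solomon extend this idea to correct bursts of errors.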

4. Radar and Sonar Systems

DSP is fundamental in radar and sonar applications, where it is used for:

Object Detection: DSP processes signals to identify and track objects, crucial for air traffic control and maritime navigation.

Environmental Monitoring: Sonar systems utilize DSP to analyze underwater acoustics for applications in marine biology and oceanography.
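One common DSP building block behind radar and sonar ranging is cross-correlating the received signal with the transmitted pulse to estimate the echo's round-trip delay, then converting delay to distance as range = speed × delay / 2. The sketch below assumes a noiseless echo at a whole-sample delay; the 7-chip Barker code pulse (chosen because its correlation peak is sharp), the 10 kHz sample rate, and the 1,500 m/s sound speed (roughly that of seawater) are illustrative assumptions.

```python
def cross_correlate(received, pulse):
    """Correlation of `pulse` against `received` at every non-negative lag."""
    return [sum(pulse[i] * received[lag + i] for i in range(len(pulse)))
            for lag in range(len(received) - len(pulse) + 1)]


def estimate_range(received, pulse, sample_rate_hz, wave_speed_m_s):
    """Estimate target distance (m) from the lag of peak correlation."""
    corr = cross_correlate(received, pulse)
    best_lag = max(range(len(corr)), key=lambda lag: corr[lag])
    round_trip_s = best_lag / sample_rate_hz
    return wave_speed_m_s * round_trip_s / 2  # halve it: the wave goes out and back


# Hypothetical sonar ping: a 7-chip Barker code echoed back after 200 samples.
pulse = [1, 1, 1, -1, -1, 1, -1]
fs = 10_000  # samples per second
received = [0.0] * 1000
for i, p in enumerate(pulse):
    received[200 + i] += 0.5 * p  # attenuated echo

print(estimate_range(received, pulse, fs, wave_speed_m_s=1500.0))  # 15.0
```

With real data the echo is buried in noise, and correlating against the known pulse is what lets the peak stand out; sub-sample interpolation would be needed for finer range resolution.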

5. Biomedical Engineering

In healthcare, DSP enhances diagnostic and therapeutic technologies:

Medical Imaging: Techniques such as MRI and CT scans rely on DSP for image reconstruction and analysis, improving diagnostic accuracy.

Wearable Health Monitoring: Devices that track physiological signals (e.g., heart rate, glucose levels) use DSP to process and interpret data in real time.

Trends in Digital Signal Processing

As technology evolves, several trends are shaping the future of DSP:

1. Integration with Artificial Intelligence

The convergence of DSP and AI is leading to smarter systems capable of learning and adapting to user needs. Machine learning algorithms can enhance traditional DSP techniques, enabling more sophisticated applications in areas like autonomous vehicles and smart home devices.

2. Increased Use of FPGAs and ASICs

Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) are increasingly used for implementing DSP algorithms. These technologies offer high performance and efficiency, making them suitable for real-time processing in demanding applications such as telecommunications and multimedia.

3. Internet of Things (IoT)

The proliferation of IoT devices is driving demand for efficient DSP solutions that can process data locally. This trend emphasizes the need for low-power, high-performance DSP algorithms that can operate on resource-constrained devices.

4. Cloud-Based DSP

Cloud computing is transforming how DSP is implemented, allowing for scalable processing power and storage. This shift enables complex signal processing tasks to be performed remotely, facilitating real-time analysis and data sharing across devices.

Conclusion

Digital Signal Processing has significantly impacted electrical engineering, enhancing the quality and functionality of signals across various applications. Its versatility and adaptability make it a critical component of modern technology, driving innovations in audio, image processing, telecommunications, and biomedical fields. As DSP continues to evolve, emerging trends such as AI integration, IoT, and cloud computing will further expand its capabilities and applications, ensuring that it remains at the forefront of technological advancement. The ongoing development of DSP technologies promises to enhance our ability to process and utilize information in increasingly sophisticated ways, shaping the future of engineering and technology.

2 notes

Text

How to write a good abstract

Writing a compelling and effective abstract is crucial for communicating the essence of your research succinctly and clearly. A well-crafted abstract not only summarizes your study but also emphasizes its significance, thereby attracting the attention of the intended audience, including researchers, practitioners, and policymakers. Below are essential guidelines and a structured approach to writing a high-quality abstract for scientific papers, particularly in the biomedical field, though the principles can be adapted for other disciplines.

Key Elements of a Good Abstract:

1. Declarative Title:

Your title should be clear and direct, reflecting the main findings of your study. It should convey the primary message accurately, ensuring that even those who only read the title understand the core outcome of your research.

2. Introduction to the Problem:

Start with a sentence that introduces a significant problem or field of interest. In biomedical sciences, this could involve highlighting a critical health issue. The goal is to establish the relevance of your research by showing the urgency or importance of the problem.

3. Identification of a Significant Challenge:

Clearly state the specific challenge or barrier that is hindering progress in your field. This sets the stage for your study by pinpointing the precise issue you aim to address without yet delving into your methodology.

4. Opportunity for Advancement:

Introduce a recent advancement or opportunity that makes addressing the identified challenge feasible. This could be a technological innovation, new data availability, or a novel methodological approach that provides a fresh perspective on the problem.

5. Description of Your Study:

Summarize the core of your study in 1–2 sentences. Describe what you did and how you leveraged the identified opportunity to tackle the challenge. This should provide a brief but comprehensive overview of your approach.

6. Key Results:

Highlight the main findings of your study in 2–3 sentences. These results should directly support the conclusions stated in your title and demonstrate the impact of your research.

7. Implications and Broader Impact:

Conclude with a sentence on the potential impact of your findings. Explain how your results could change current practices, inform future research, or have broader implications for the field.

Example of an Abstract Using These Guidelines:

Title: Data-driven Prediction of Drug Effects and Interactions

Abstract: Adverse drug events remain a leading cause of morbidity and mortality worldwide. Many such events are undetected during clinical trials before a drug receives approval for clinical use. Regulatory agencies maintain extensive collections of adverse event reports as part of post marketing surveillance, presenting an opportunity to study drug effects using patient population data. However, confounding factors such as concomitant medications, patient demographics, medical histories, and prescribing reasons are often uncharacterized in spontaneous reporting systems, limiting quantitative signal detection methods. Here, we present an adaptive data-driven approach for correcting these confounding factors in cases with unknown or unmeasured covariates and combine this approach with existing methods to improve drug effect analyses using three test datasets. We also introduce comprehensive databases of drug effects (OffSIDES) and drug-drug interaction side effects (TwoSIDES). To demonstrate the utility of these resources, we identified drug targets, predicted drug indications, and discovered drug class interactions, corroborating 47 (P < 0.0001) interactions using independent electronic medical record analysis. Our findings suggest that combined treatment with selective serotonin reuptake inhibitors and thiazides significantly increases the incidence of prolonged QT intervals. We conclude that controlling for confounding effects in observational clinical data enhances the detection and prediction of adverse drug effects and interactions.

Investing in your academic future with Dissertation Writing Help For Students means choosing a dedicated professional who understands the complexities of dissertation writing and is committed to your success. With a comprehensive range of services, personalized attention, and a proven track record of helping students achieve their academic goals, I am here to support you at every stage of your dissertation journey.

Feel free to reach out to me at [email protected] to begin a collaborative endeavor toward scholarly excellence and to discuss how I can help you realize your academic aspirations. Whether you seek guidance in crafting a compelling research proposal, require comprehensive editing to refine your dissertation, or need support in conducting a thorough literature review, I am here to facilitate your journey toward academic success.

#academics#education#grad school#gradblr#phd#phd life#phd research#phd student#phdblr#study#essays#literature#writters on tumblr#my writing#writeblr#writing#writers on tumblr#thesis#dissertation#university student#university#uniblr#stanford university#case study#student life#students#studyblr#studying#studyspo#student

3 notes


Text

Building a Biomedical Data & ML-ops Platform: From Collection to Discoveries

Life science research presents a series of hurdles, from the moment you collect your data to the moment you gain meaningful insights. Biomedical data is messy and complex, and it comes in all shapes and sizes. Turning this data into groundbreaking discoveries takes serious work, and each step brings its own challenges.


#Biomedical#Biomedical Data#Machine learning#Biomedical research#Biomedical data analysis#Biomedical technology

0 notes

Text

I was originally going to argue, but you are right. But asdccscushhhhullyy, here is a sneak peek <3

If one considers the physical laws of the universe, and by analogy how hydrogen arose after the universe's opaque stage following the initial singularity, then by postulating an abductive hypothesis one could consider that the process of "human" evolution started as soon as time began to flow in one direction, and that the future of conscious existence will most likely depend on memetic and biomedical developments in our time. Taking the liberty of treating this hypothesis as sound and valid, it would follow that every thought or cognitive process that occurs in an individual, consciously or unconsciously, has or is a corresponding change in the real universe and, by extension, affects the physical world to some degree.

The model of human experience I proposed can be understood through the study of perspective in art. Using mathematics, deductive, inductive, and abductive logic, and abstraction in parallel with reduction, along with any basic form of taxonomy, it is possible to remake most things that can be described or observed into an axiom. This includes the external and the internal, and the objective, the subjective, and the inductive. One point can be described with multiple axioms, and suspension of disbelief can be used to reduce conflicts between them. These axioms can be arranged via taxonomic and set-theoretic (logical) principles to form a network or grouping of axioms, in which any logical rule can be assigned to any axiom, arranged in any order or structure described in mathematics. The axiom systems themselves can be abstracted or reduced into new axioms to form systems of infinite complexity for various purposes.

Current ISR, mass-data, and biometric technologies, as well as spectrum analysis, could falsify any hypothesis that this abductive theory generates. One could potentially see the expression of culture as a sort of extracellular matrix, in which humans create and secrete physical structures in the real world as a result of physical, technological, or biological processes.

2 notes


Text

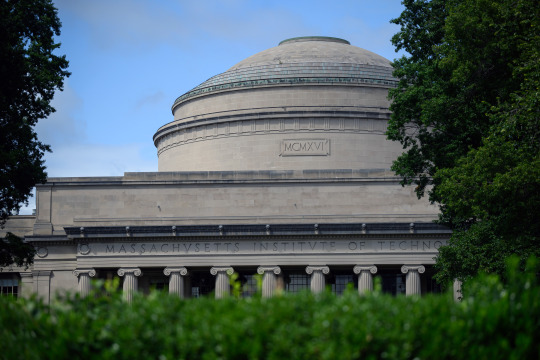

MIT named No. 2 university by U.S. News for 2024-25

New Post has been published on https://thedigitalinsider.com/mit-named-no-2-university-by-u-s-news-for-2024-25/


MIT has placed second in U.S. News and World Report’s annual rankings of the nation’s best colleges and universities, announced today.

As in past years, MIT’s engineering program continues to lead the list of undergraduate engineering programs at a doctoral institution. The Institute also placed first in six out of nine engineering disciplines.

U.S. News placed MIT second in its evaluation of undergraduate computer science programs, along with Carnegie Mellon University and the University of California at Berkeley. The Institute placed first in four out of 10 computer science disciplines.

MIT remains the No. 2 undergraduate business program, a ranking it shares with UC Berkeley. Among business subfields, MIT is ranked first in three out of 10 specialties.

Within the magazine’s rankings of “academic programs to look for,” MIT topped the list in the category of undergraduate research and creative projects. The Institute also ranks as the third most innovative national university and the third best value, according to the U.S. News peer assessment survey of top academics.

MIT placed first in six engineering specialties: aerospace/aeronautical/astronautical engineering; chemical engineering; computer engineering; electrical/electronic/communication engineering; materials engineering; and mechanical engineering. It placed within the top five in two other engineering areas: biomedical engineering and civil engineering.

Other schools in the top five overall for undergraduate engineering programs are Stanford University, UC Berkeley, Georgia Tech, Caltech, the University of Illinois at Urbana-Champaign, and the University of Michigan at Ann Arbor.

In computer science, MIT placed first in four specialties: biocomputing/bioinformatics/biotechnology; computer systems; programming languages; and theory. It placed in the top five of five other disciplines: artificial intelligence; cybersecurity; data analytics/science; mobile/web applications; and software engineering.

The No. 1-ranked undergraduate computer science program overall is at Stanford. Other schools in the top five overall for undergraduate computer science programs are Carnegie Mellon, Stanford, UC Berkeley, Princeton University, and the University of Illinois at Urbana-Champaign.

Among undergraduate business specialties, the MIT Sloan School of Management leads in analytics; production/operations management; and quantitative analysis. It also placed within the top five in three other categories: entrepreneurship; management information systems; and supply chain management/logistics.

The No. 1-ranked undergraduate business program overall is at the University of Pennsylvania; other schools ranking in the top five include UC Berkeley, the University of Michigan at Ann Arbor, and New York University.

#2024#aerospace#Analysis#Analytics#applications#artificial#Artificial Intelligence#assessment#Biocomputing#Biomedical engineering#biotechnology#Business#Business and management#california#caltech#Carnegie Mellon University#chemical#Chemical engineering#civil engineering#colleges#communication#computer#Computer Science#Computer science and technology#creative projects#cybersecurity#data#data analytics#Education#teaching

0 notes

Text

Brain Implants Industry is projected to expand at a 9.3% CAGR through 2032

The global Brain Implants Industry is projected to surpass US$ 14.24 billion by 2032, growing at a CAGR of 9.3% from 2023 to 2032 | Towards Healthcare
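The two headline figures are linked by the standard compound-growth relation, end = start × (1 + CAGR)^years. A minimal sketch, assuming the 9.3% rate compounds annually over nine periods (2023 to 2032); the report does not state its 2023 base value here, so the starting figure below is back-calculated from the quoted numbers, not taken from the report.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value: end = start * (1 + cagr) ** years."""
    return end_value / (1 + cagr) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: 2023 -> 2032 counts as 9 annual compounding periods
base_2023 = implied_start_value(14.24, 0.093, 9)
print(f"Implied 2023 size: about US$ {base_2023:.2f} billion")
```

Under that assumption the implied 2023 size comes out to roughly US$ 6.4 billion; counting ten periods instead would give a smaller base, so the period convention matters when comparing reports.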

Download FREE SAMPLE COPY @ https://www.towardshealthcare.com/personalized-scope/5067

This report estimates the Industry volume with the help of extensive quantitative and qualitative insights and forecasts. It presents a breakdown of the Industry into forthcoming and niche segments. Additionally, this research study gauges Industry revenue growth and its trajectory at the global, regional, and country levels from 2023 to 2032. It evaluates the Brain Implants Industry on a global and regional level and offers a thorough analysis of the Industry's status, growth, and forecast for the period from 2023 to 2032.

Industry Overview

This Brain Implants Industry report studies Industry dynamics, status, and outlook, especially in North America, Europe, Asia-Pacific, Latin America, and the Middle East and Africa. It offers scenarios and forecasts (revenue/volume). The report also studies global Industry prominence, the competitive landscape, Industry share, growth rate, Industry dynamics such as drivers, restraints, and opportunities, and distributors and sales channels.

This research study also integrates Industry Chain analysis and Porter's Five Forces analysis. Further, it offers a competitive scenario comprising collaborations, the Industry concentration rate, expansions, and mergers & acquisitions undertaken by companies.

Some of the prominent players in the Brain Implants Industry include:

Neuralink

Medtronic

Boston Scientific Corporation

St. Jude Medical (Abbott)

NeuroPace, Inc.

Nevro Corporation

Synapse Biomedical Inc.

Aleva Neurotherapeutics SA

Industry Segmentations:

By Product Type

Deep Brain Stimulator

Vagus Nerve Stimulator

Spinal Cord Stimulator

By Applications

Parkinson’s Disease

Epilepsy

Chronic Pain

Alzheimer’s Disease

Depression

Essential Tremor

By Geography

North America

Europe

Asia-Pacific

Latin America

The Middle East and Africa

Key Points Covered in Brain Implants Industry Study:

Growth of Brain Implants in 2023

Industry Estimates and Forecasts (2023-2032)

Brand Share and Industry Share Analysis

Key Drivers and Restraints Shaping Industry Growth

Segment-wise, Country-wise, and Region-wise Analysis

Competition Mapping and Benchmarking

Recommendation on Key Winning Strategies

COVID-19 Impact on Demand for Brain Implants and How to Navigate

Key Product Innovations and Regulatory Climate

Brain Implants Consumption Analysis

Brain Implants Production Analysis

Brain Implants and Management

Research Methodology

A unique research methodology has been used by Precedence Research to conduct comprehensive research on the growth of the global Brain Implants Industry and to arrive at conclusions on its growth prospects. This methodology combines primary and secondary research, which helps analysts ensure the accuracy and reliability of the conclusions drawn.

Precedence Research employs a comprehensive and iterative research methodology focused on minimizing deviance in order to provide the most accurate estimates and forecasts possible. The company uses a combination of bottom-up and top-down approaches for segmenting and estimating quantitative aspects of the Industry. In addition, a recurring theme across all our research reports is data triangulation, which looks at the Industry from three different perspectives. Critical elements of the methodology employed for all our studies include:

Preliminary data mining

Raw Industry data is obtained and collated on a broad front. Data is continuously filtered to ensure that only validated and authenticated sources are considered. In addition, data is mined from a host of reports in our repository, as well as a number of reputed paid databases. A comprehensive understanding of the Industry requires understanding the complete value chain; to facilitate this, we collect data from raw material suppliers, distributors, and buyers.

Technical issues and trends are obtained from surveys, technical symposia, and trade journals. Technical data is also gathered from an intellectual property perspective, focusing on white space and freedom to operate. Industry dynamics with respect to drivers, restraints, and pricing trends are also gathered. As a result, the material developed contains a wide range of original data that is then cross-validated and authenticated with published sources.

Statistical model

Our Industry estimates and forecasts are derived through simulation models, with a custom model created for each study. Information gathered on Industry dynamics, the technology landscape, application development, and pricing trends is fed into the model and analyzed simultaneously. These factors are studied on a comparative basis, and their impact over the forecast period is quantified with the help of correlation, regression, and time series analysis. Industry forecasting is performed via a combination of economic tools, technological analysis, Industry experience, and domain expertise.

Econometric models are generally used for short-term forecasting, while technological Industry models are used for long-term forecasting. These are based on an amalgamation of the technology landscape, regulatory frameworks, economic outlook, and business principles. A bottom-up approach to Industry estimation is preferred, with key regional industries analyzed as separate entities and their data integrated to obtain global estimates. This is critical for a deep understanding of the Industry and for minimizing errors. Some of the parameters considered for forecasting include:

• Industry drivers and restraints, along with their current and expected impact
• Raw material scenario and supply vs. price trends
• Regulatory scenario and expected developments
• Current capacity and expected capacity additions up to 2032

We assign weights to these parameters and quantify their Industry impact using weighted average analysis, to derive an expected Industry growth rate.
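The weighting step described above can be illustrated with a plain weighted average of per-factor growth estimates. The report publishes neither its weights nor its per-factor estimates, so every number and factor key below is hypothetical; the sketch only shows the arithmetic of combining weighted factors into one expected growth rate.

```python
def weighted_growth_rate(factors: dict[str, tuple[float, float]]) -> float:
    """Combine per-factor growth estimates into a single expected rate.

    `factors` maps a factor name to (weight, growth_rate). Weights need not
    sum to 1; they are normalized by their total.
    """
    total_weight = sum(w for w, _ in factors.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * g for w, g in factors.values()) / total_weight


# Hypothetical weights and per-factor growth estimates, for illustration only
factors = {
    "drivers_and_restraints": (0.4, 0.10),
    "raw_material_supply_vs_price": (0.2, 0.08),
    "regulatory_scenario": (0.2, 0.07),
    "capacity_additions": (0.2, 0.11),
}
print(f"Expected growth rate: {weighted_growth_rate(factors):.1%}")
```

Normalizing by the total weight keeps the result a proper average even if the chosen weights do not add up to exactly 1.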

Primary validation

This is the final step in estimating and forecasting for our reports. Exhaustive primary interviews are conducted, face to face as well as over the phone, to validate our findings and the assumptions used to obtain them. Interviewees are approached from leading companies across the value chain, including suppliers, technology providers, domain experts, and buyers, so as to ensure a holistic and unbiased picture of the Industry. These interviews are conducted across the globe, with language barriers overcome with the aid of local staff and interpreters.

Primary interviews not only help in data validation but also provide critical insights into the Industry, the current business scenario, and future expectations, and they enhance the quality of our reports. All our estimates and forecasts are verified through exhaustive primary research with Key Industry Participants (KIPs), which typically include:

• Industry-leading companies
• Raw material suppliers
• Product distributors
• Buyers

The key objectives of primary research are as follows:

• To validate our data in terms of accuracy and acceptability
• To gain insight into the current Industry and future expectations

Secondary Validation

Secondary research sources referred to by analysts during the production of the global Brain Implants Industry report include statistics from company annual reports, SEC filings, company websites, investor presentations, regulatory databases, government publications, and Industry white papers. Analysts have also interviewed senior managers, product portfolio managers, CEOs, VPs, and Industry intelligence managers, who contributed to the production of Precedence Research’s study on the Brain Implants Industry as primary methods.

Why should you invest in this report?

If you are aiming to enter the global Brain Implants Industry, this report is a comprehensive guide that provides crystal clear insights into this niche Industry. All the major application areas for Brain Implants are covered in this report and information is given on the important regions of the world where this Industry is likely to boom during the forecast period of 2023-2032 so that you can plan your strategies to enter this Industry accordingly.

Besides, through this report, you can gain a complete grasp of the level of competition you will face in this highly competitive Industry. If you are already an established player, this report will help you gauge the strategies your competitors have adopted to remain Industry leaders. For new entrants, the voluminous data provided in this report is invaluable.

Contact Us

Towards Healthcare

Web: https://www.towardshealthcare.com/

To place an order or ask any questions, please feel free to contact us at:

Email: [email protected]

About Us

We are a global strategy consulting firm that assists business leaders in gaining a competitive edge and accelerating growth. We are a provider of technological solutions, clinical research services, and advanced analytics to the healthcare sector, committed to forming creative connections that result in actionable insights and creative innovations.

1 note


Text

How SHPL Supports NABH Re-Accreditation and Continuous Quality Improvement

Achieving NABH accreditation is a significant milestone for any hospital—but maintaining it is an ongoing challenge. Hospitals often struggle to keep up with evolving standards, documentation practices, and internal audits. Re-accreditation isn’t just about passing another inspection—it’s about proving that quality care is being sustained every day.

This is where SHPL Management Consultancy plays a crucial role, offering structured, continuous support to help hospitals retain their NABH status and improve clinical and operational quality over time.

Why Re-Accreditation Matters

After the initial accreditation, hospitals are expected to:

Maintain continuous adherence to NABH standards

Improve based on previous audit feedback

Demonstrate regular internal audits and quality reviews

Show updated SOPs and staff training records

Enhance patient safety and satisfaction metrics

Falling short in any of these areas can lead to non-compliance or even loss of accreditation.

SHPL’s Re-Accreditation Support Framework

1. Quality Gap Assessment

SHPL begins with a full review of the hospital’s current documentation, processes, and compliance status compared to NABH standards. We identify gaps and risk areas well before the audit cycle begins.

2. Update and Realignment of SOPs and Policies

NABH standards are regularly updated. SHPL ensures that:

SOPs are revised to meet current requirements

Policy documents reflect recent changes in regulations

Staff understand and implement changes effectively

3. Internal Audits and Departmental Reviews

We conduct:

Scheduled mock audits for every department

Internal checks on clinical practices, patient safety, documentation, and HR processes

Corrective action planning and root cause analysis for any non-conformities

4. Staff Training and Awareness Programs

Ongoing training is critical. SHPL organizes:

Quality improvement sessions for key staff

Daily checklist reviews and compliance briefings

Refresher programs on patient rights, biomedical waste handling, infection control, etc.

5. Quality Indicators and Data Monitoring

We help hospitals develop systems to track and report:

Infection rates, patient falls, adverse events

Patient feedback scores and grievance redressal timelines

Medication errors and incident reports

This data is vital for NABH re-accreditation and internal improvement.

6. NABH Documentation and Audit Preparation

SHPL ensures that:

All required files, registers, and logs are up-to-date and audit-ready

The Quality Council and internal audit teams are active and functional

Coordinators are prepared to present data confidently during audits

Outcome of SHPL’s Re-Accreditation Support

Hospitals working with SHPL have reported:

Zero major non-conformities in final audits

Reduced stress and confusion during audit cycles

Improved patient outcomes due to ongoing quality focus

Higher staff confidence and involvement in quality systems

Conclusion

NABH re-accreditation is not a formality—it’s a statement of a hospital’s commitment to excellence. With SHPL Management Consultancy, hospitals move beyond checklists to build a real culture of continuous quality improvement.

#NABHReaccreditation#SHPLManagementConsultancy#HospitalQualityImprovement#HealthcareCompliance#AccreditationSupport#HospitalAuditReadiness#SOPUpdateHealthcare#PatientSafetyStandards#MedicalConsultingIndia#HealthcareAccreditationExperts

0 notes

Text

Reference archived on our website

Published in the Summer of 2021. Those "experts" who are shocked to find out that covid has lingering effects aren't as expert or informed as they like to act.

Abstract

Background: There is growing concern about possible cognitive consequences of COVID-19, with reports of ‘Long COVID’ symptoms persisting into the chronic phase and case studies revealing neurological problems in severely affected patients. However, there is little information regarding the nature and broader prevalence of cognitive problems post-infection or across the full spread of disease severity.

Methods: We sought to confirm whether there was an association between cross-sectional cognitive performance data from 81,337 participants who between January and December 2020 undertook a clinically validated web-optimized assessment as part of the Great British Intelligence Test, and questionnaire items capturing self-report of suspected and confirmed COVID-19 infection and respiratory symptoms.

Findings: People who had recovered from COVID-19, including those no longer reporting symptoms, exhibited significant cognitive deficits versus controls when controlling for age, gender, education level, income, racial-ethnic group, pre-existing medical disorders, tiredness, depression and anxiety. The deficits were of substantial effect size for people who had been hospitalised (N = 192), but also for non-hospitalised cases who had biological confirmation of COVID-19 infection (N = 326). Analysing markers of premorbid intelligence did not support these differences being present prior to infection. Finer grained analysis of performance across sub-tests supported the hypothesis that COVID-19 has a multi-domain impact on human cognition.

Interpretation: These results accord with reports of ‘Long Covid’ cognitive symptoms that persist into the early-chronic phase. They should act as a clarion call for further research with longitudinal and neuroimaging cohorts to plot recovery trajectories and identify the biological basis of cognitive deficits in SARS-CoV-2 survivors.

Funding: AH is supported by the UK Dementia Research Institute Care Research and Technology Centre and Biomedical Research Centre at Imperial College London. WT is supported by the EPSRC Centre for Doctoral Training in Neurotechnology. SRC is funded by a Wellcome Trust Clinical Fellowship 110049/Z/15/Z. JMB is supported by the Medical Research Council (MR/N013700/1). MAM, SCRW and PJH are, in part, supported by the National Institute for Health Research (NIHR) Biomedical Research Centre at South London and Maudsley NHS Foundation Trust and King's College London.

#mask up#covid#pandemic#wear a mask#covid 19#public health#coronavirus#sars cov 2#still coviding#wear a respirator#long covid#covid conscious#covid is airborne

21 notes
