#DeepFake Technology


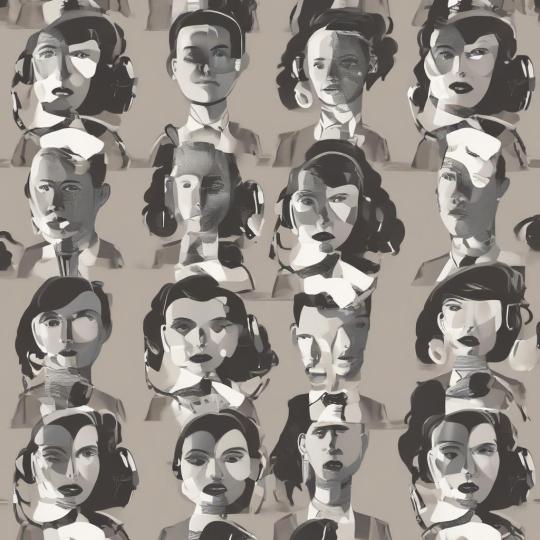

STOP Using Fake Human Faces in AI

#GenerativeAI#GANs (Generative Adversarial Networks)#VAEs (Variational Autoencoders)#Artificial Intelligence#Machine Learning#Deep Learning#Neural Networks#AI Applications#CreativeAI#Natural Language Generation (NLG)#Image Synthesis#Text Generation#Computer Vision#Deepfake Technology#AI Art#Generative Design#Autonomous Systems#ContentCreation#Transfer Learning#Reinforcement Learning#Creative Coding#AI Innovation#TDM#health#healthcare#bootcamp#llm#youtube#branding#animation


Deep Dive into Deepfake Technology | Digi Telegraph

In the past year, you may have come across the viral story of Twitch streamers dealing with the non-consensual circulation of fake pornographic content depicting them.

Non-consensual, AI-generated sexually explicit videos of popular streamers, primarily women, were available for sale online. Perhaps more recognisably, you may have seen the video for Kendrick Lamar’s song ‘The Heart Part 5’, which garnered over 40 million views.


The Deepfake Dilemma: Understanding the Technology Behind Artificial Media Manipulation

Introduction

In our visually-driven world, the adage “seeing is believing” has taken on a new dimension with the advent of deepfake technology. This innovative yet controversial facet of artificial intelligence (AI) has the remarkable ability to manipulate audiovisual content, blurring the lines between reality and fabrication. As we delve into the complexities of deepfakes, we uncover a realm…


Are Deepfakes The New Spam Calls? Here’s How To Protect Against Them

New Post has been published on https://thedigitalinsider.com/are-deepfakes-the-new-spam-calls-heres-how-to-protect-against-them/


If you saw a deepfake of your company’s CEO, would you be able to tell it wasn’t real? This is a concerning challenge that organizations around the globe are dealing with on a frequent basis. In fact, just recently, an advertising giant was the target of a deepfake of its CEO. A publicly available image of the executive was used to set up a Microsoft Teams meeting in which a voice clone of said executive – sourced from a YouTube video – was deployed. While this specific attack was unsuccessful, it paints a larger picture of the emerging tactics cybercriminals are using with publicly available information – and this is just the tip of the iceberg.

Technology has become so sophisticated that only about half of IT leaders today have high confidence in their ability to detect a deepfake of their CEO. Making matters worse, cybercriminals are not only impersonating CEOs, but the entire leadership team, with CFOs becoming popular targets, as well. Deepfakes are becoming increasingly easy to create. In fact, a quick Google search of “how to create a deepfake” produces various articles and YouTube tutorials on exactly how to create one. Costs are becoming negligible, meaning that deepfakes are essentially the new spam calls.

Spam calls are all too common today. In fact, the Federal Communications Commission (FCC) claims that U.S. consumers receive approximately 4 billion robocalls per month, and advancements in technology make them extremely cheap and highly lucrative, even with a low success rate. Deepfakes are following suit. Cybercriminals will utilize deepfake technology to trick unsuspecting employees even more so than they are today, and deepfakes will eventually become an everyday occurrence for the average consumer. Let’s explore strategies that leaders can implement to best protect their organization, employees, and customers from these threats.

Establish Strong Guidelines

First, leaders need to establish strong guidelines within their organization. These guidelines need to come from the very top, starting with the CEO, and be communicated frequently. For example, the CEO needs to firmly explain to the entire company that they will never make an odd or random request to an employee, such as buying several $100 gift cards, a frequent phishing tactic. These attacks are often successful because they appear to come from leadership and aren’t questioned. However, as CEO deepfakes become more common, employees are becoming more aware that such requests are, in fact, not real. As a result, I anticipate attackers will work their way down the organization, impersonating VPs, directors, front-line managers and even peers.

Just think: having a peer or your immediate manager make a request of you is pretty common. Why would you question it? Guidelines can also cover the use of deepfake tools within your organization, including banning their use on company-owned technology. Setting these guidelines and guardrails is just the first step.

Confirm Requests Through Multiple Channels

Second, when requests do need to be made, there should be a strategy in place to confirm them via multiple modes of communication. For example, a request from the CEO might be shared over email and followed up via the instant messaging platform used in the workplace, such as Slack. If there is no follow-up, the employee should either ignore the request or proactively confirm it over Slack themselves, then notify internal security teams per their security policy. Similarly, a request made in a Teams meeting, as in the advertising company deepfake, needs an email confirmation and/or a Slack confirmation. Better yet, it should be confirmed via a quick phone call if walking over to the requester’s desk is not an option. These processes should be communicated often and to the entire organization to keep them top of mind. Then, when an attempt is detected, there should be a process to share the example broadly throughout the organization to build pattern recognition of the types of threats everyone should be aware of.
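The multi-channel confirmation policy can be sketched in code. This is a minimal Python illustration, not a real security tool. The `Request` class, the channel names, and the `next_step` helper are hypothetical names invented for this sketch. The rule it encodes (at least one confirmation on a channel other than the one the request arrived on) is one assumed reading of the policy.

```python
from dataclasses import dataclass, field

# Hypothetical channel names, chosen only for illustration.
CHANNELS = {"email", "chat", "video_meeting", "phone", "in_person"}


@dataclass
class Request:
    """A request attributed to a colleague (hypothetical model)."""
    requester: str
    origin_channel: str
    confirmations: set = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        """Record that the request was confirmed over the given channel."""
        if channel not in CHANNELS:
            raise ValueError(f"unknown channel: {channel}")
        self.confirmations.add(channel)


def is_verified(request: Request, required: int = 1) -> bool:
    """Return True only if the request was confirmed on at least `required`
    channels other than the one it originally arrived on."""
    independent = request.confirmations - {request.origin_channel}
    return len(independent) >= required


def next_step(request: Request) -> str:
    """Assumed policy: act only on verified requests, otherwise escalate."""
    return "proceed" if is_verified(request) else "hold and notify security"


if __name__ == "__main__":
    req = Request(requester="CEO", origin_channel="video_meeting")
    print(next_step(req))   # not yet confirmed anywhere else
    req.confirm("phone")    # confirmed over an independent channel
    print(next_step(req))
```

A confirmation on the same channel the request came from does not count here. If an attacker controls that channel (for example, a spoofed meeting), a second message over it adds no assurance.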

Hold Frequent Trainings

Third, organizations should implement frequent company-wide training to keep deepfakes, and other types of identity fraud attacks, at the forefront of employees’ minds. This training is helpful for a few reasons. An employee may not even know what a deepfake is or that voices and videos can be faked. Additionally, employees may default to an “out of sight, out of mind” mindset: if deepfakes aren’t top of mind, they may easily fall victim to an attack. Research shows that employees who received cybersecurity training demonstrated a significantly improved ability to recognize potential cyber threats.

Deepfakes aren’t going anywhere, and they are becoming increasingly frequent and hard to detect. However, by establishing guidelines, verifying requests via multiple routes, and implementing consistent training across our organizations, we can be better prepared to protect against these threats. In an increasingly digital world, our diligence to trust less and verify more will be essential in maintaining the security and integrity of our digital identities.

#advertising#Articles#billion#CEO#CFOs#challenge#clone#communication#communications#consumers#cyber#Cyber Threats#cybercriminals#cybersecurity#cybersecurity training#deep fakes#deepfake#deepfake technology#deepfakes#digital identity#easy#email#employees#federal#fraud#Google#google search#guidelines#how#how to


Deepfake Technology: An Overview of its Impact on Society | USAII®

Deepfake technology is another double-edged sword in the field of AI, with both positive and negative impacts on society. Learn more about it in this comprehensive guide.

Read more: https://shorturl.at/yEBOa

AI and deep learning techniques, deepfake course, deepfake AI detection tools, deepfake applications, deepfake algorithms, AI Engineer, AI professionals, AI technology, AI Certification Programs, USAII Certifications, Best AI Certifications


Navigating Ethical Concerns Around Using AI to Clone Voices for Political Messaging


The use of artificial intelligence (AI) for voice cloning in political messaging is a complex and nuanced issue that raises significant ethical concerns. As technology continues to advance, it’s crucial to understand the implications and navigate these challenges responsibly.

Understanding AI Voice Cloning in…


Why Deepfakes Are Dangerous and How to Identify Them

Spotting deepfakes requires a keen eye and an understanding of telltale signs. Unnatural facial expressions, awkward body movements and inconsistencies in coloring or alignment can betray the artificial nature of manipulated media. Lack of emotion or unusual eye movements may also indicate a deepfake. You can check whether a video is real by looking at news from reliable sources and searching for similar images online. These checks can help reveal alterations or defects in an AI-generated video.


This video is all about the dangers of deepfake technology. In short, deepfake technology is a type of AI that is able to generate realistic, fake images of people. This technology has the potential to be used for a wide variety of nefarious purposes, from porn to political manipulation.

Deepfake technology has emerged as a significant concern in the digital age, raising alarm about its potential dangers and the need for effective detection methods. Deepfakes refer to manipulated or synthesized media content, such as images, videos, or audio recordings, that convincingly replicate real people saying or doing things they never did. While deepfakes can have legitimate applications in entertainment and creative fields, their malicious use poses serious threats to individuals, organizations, and society as a whole.

The dangers of deepfakes are not widely known, and this in itself poses a threat. There is no guarantee that what you see online is real, and deepfakes have narrowed the gap between fake and real content. Even though the technology can be used for creating innovative entertainment projects, it is also being heavily misused by cybercriminals. Additionally, if the technology is not monitored properly by law enforcement, things will likely get out of hand quickly.

Deepfakes can be used to spread false information, which can have severe consequences for public opinion, political discourse, and trust in institutions. A realistic deepfake video of a public figure could be used to disseminate fabricated statements or actions, leading to confusion and the potential for societal unrest.

Cybercriminals can exploit deepfake technology for financial gain. By impersonating someone's voice or face, scammers could trick individuals into divulging sensitive information, making fraudulent transactions, or believing they are communicating with a trusted source.

Deepfakes have the potential to disrupt democratic processes by distorting the truth during elections or important political events. Fake videos of candidates making controversial statements could sway public opinion or incite conflict.

The Dangers of Deepfake Technology and How to Spot Them

#the dangers of deepfake technology and how to spot them#the dangers of deepfake technology#deepfake technology#deepfake#artificial intelligence#deep fake#LimitLess Tech 888#how are deepfakes dangerous#the dangers of deepfakes#how to spot a deepfake#deepfakes#deepfake explained#what is a deepfake#dangers of deepfake#deepfake video#effects of deepfakes#how deepfakes work#deepfakes explained 2023#deepfake dangers#deepfake ai technology#Youtube


MIND-BLOWING Semantic Data Secrets Revealed in AI and Machine Learning

#GenerativeAI#GANs (Generative Adversarial Networks)#VAEs (Variational Autoencoders)#Artificial Intelligence#Machine Learning#Deep Learning#Neural Networks#AI Applications#CreativeAI#Natural Language Generation (NLG)#Image Synthesis#Text Generation#Computer Vision#Deepfake Technology#AI Art#Generative Design#Autonomous Systems#ContentCreation#Transfer Learning#Reinforcement Learning#Creative Coding#AI Innovation#TDM#health#healthcare#bootcamp#llm#branding#youtube#animation


What Is Deepfake

Found a good article about deepfakes. It mainly covers:

An overview of Deepfakes

Deepfake technology development

How to make deepfake content

The pros and cons of deepfakes

Read the article: What Is Deepfake



New Post has been published on https://www.lokapriya.com/deepfake-technology-the-potential-risks-of-future-part-i/

DeepFake Technology: The Potential Risks of Future! Part I

Imagine you are watching a video of your favorite celebrity giving a speech. You are impressed by their eloquence and charisma, and you agree with their message. But then you find out that the video was not real. It was a deepfake, a piece of synthetic media created by AI (artificial intelligence) that can manipulate the appearance and voice of anyone. You feel deceived and confused.

This is no longer a hypothetical scenario; it is now real. Several deepfakes of prominent actors, celebrities, politicians, and influencers are circulating on the internet. Examples include deepfakes of film actors such as Tom Cruise and Keanu Reeves on TikTok. Even a deepfake video of Indian PM Narendra Modi has been made.

In simple terms, Deepfakes are AI-generated videos and images that can alter or fabricate the reality of people, events, and objects. This technology is a type of artificial intelligence that can create or manipulate images, videos, and audio that look and sound realistic but are not authentic. Deepfake technology is becoming more sophisticated and accessible every day. It can be used for various purposes, such as in entertainment, education, research, or art. However, it can also pose serious risks to individuals and society, such as spreading misinformation, violating privacy, damaging reputation, impersonating identity, and influencing public opinion.

In this article, I will be exploring the dangers of deep fake technology and how we can protect ourselves from its potential harm.

How is Deepfake Technology a Potential Threat to Society?

Deepfake technology is a potential threat to society because it can:

Spread misinformation and fake news that can influence public opinion, undermine democracy, and cause social unrest.

Violate privacy and consent by using personal data without permission, and creating image-based sexual abuse, blackmail, or harassment.

Damage reputation and credibility by impersonating or defaming individuals, organizations, or brands.

Create security risks by enabling identity theft, fraud, or cyber attacks.

Deepfake technology can also erode trust and confidence in the digital ecosystem, making it harder to verify the authenticity and source of information.

The Dangers and Negative Uses of Deepfake Technology

As much as there may be some positives to deepfake technology, the negatives easily overwhelm the positives in our growing society. Some of the negative uses of deepfakes include:

Deepfakes can be used to create fake adult material featuring celebrities or regular people without their consent, violating their privacy and dignity. This is possible because it has become very easy to replace one face with another and to change a voice in a video. Surprising, but true.

Deepfakes can be used to spread misinformation and fake news that can deceive or manipulate the public. Deepfakes can be used to create hoax material, such as fake speeches, interviews, or events, involving politicians, celebrities, or other influential figures.

Since face swaps and voice changes can be carried out with deepfake technology, it can be used to undermine democracy and social stability by influencing public opinion, inciting violence, or disrupting elections.

False propaganda can be created: fake voice messages and videos that are very hard to distinguish from real ones can be used to influence public opinion, or to slander or blackmail political candidates, parties, or leaders.

Deepfakes can be used to damage reputation and credibility by impersonating or defaming individuals, organizations, or brands. Imagine a deepfake of Keanu Reeves on TikTok being used to create fake reviews, testimonials, or endorsements involving customers, employees, or competitors.

People who are unaware of deepfakes are easy to convince, and if something goes wrong, the result can be reputational damage and a loss of trust in the actor.

Ethical, Legal, and Social Implications of Deepfake Technology

Ethical Implications

Deepfake technology can violate the moral rights and dignity of the people whose images or voices are used without their consent, such as creating fake pornographic material, slanderous material, or identity theft involving celebrities or regular people. Deepfake technology can also undermine the values of truth, trust, and accountability in society when used to spread misinformation, fake news, or propaganda that can deceive or manipulate the public.

Legal Implications

Deepfake technology can pose challenges to the existing legal frameworks and regulations that protect intellectual property rights, defamation rights, and contract rights, as it can infringe on the copyright, trademark, or publicity rights of the people whose images or voices are used without their permission.

Deepfake technology can violate the privacy rights of the people whose personal data are used without their consent. It can defame the reputation or character of the people who are falsely portrayed in a negative or harmful way.

Social Implications

Deepfake technology can have negative impacts on the social well-being and cohesion of individuals and groups, as it can cause psychological, emotional, or financial harm to the victims of deepfake manipulation, who may suffer from distress, anxiety, depression, or loss of income. It can also create social divisions and conflicts among different groups or communities, inciting violence, hatred, or discrimination against certain groups based on their race, gender, religion, or political affiliation.

Imagine having deepfake videos of world leaders declaring war, making false confessions, or endorsing extremist ideologies. That could be very detrimental to the world at large.

I am afraid that in the future, deepfake technology could be used to create more sophisticated and malicious forms of disinformation and propaganda if not controlled. It could also be used to create fake evidence of crimes, scandals, or corruption involving political opponents or activists or to create fake testimonials, endorsements, or reviews involving customers, employees, or competitors.

Read More: Detecting and Regulating Deepfake Technology: The Challenges! Part II

#ai#ai-and-deepfakes#cybersecurity#Deep Fake#DeepFake Technology#deepfakes#generative-ai#Modi#Narendra Modi#Security#social media#synthetic-media#web


Deepfake Technology: Exploring The Potential Risks Of AI-Generated Videos

Recent advances in the field of artificial intelligence (AI) have led to the emergence of a concerning technology called DeepFake. DeepFake refers to the use of AI algorithms to produce highly realistic fake videos and photos that appear to be genuine. DeepFake content can be entertaining and amusing, but it also carries substantial risks and dangers. This article delves into the potential risks associated with AI-generated videos and images, shedding light on the adverse effects they may have on individuals, society, and even national security.

The rise of DeepFake Technology

Deepfake and AI technology is gaining popularity due to its ability to produce convincing false content. Using advanced algorithms and machine learning techniques, AI allows the creation of videos with facial expressions and voices that appear authentic. AI-generated videos could be used to depict people doing or saying things that never actually happened, which could have grave implications. See https://ca.linkedin.com/in/pamkaweske for more detailed information about deepfakes and AI.

Security threats to privacy

The privacy implications of DeepFake are one of its greatest dangers. Its ability to make fake videos, or to superimpose faces onto the bodies of other individuals, can lead to people being targeted and harmed. DeepFake can be used as a tool to defame people, or to blackmail them by making it appear they're involved in criminal activities. The victims can suffer lasting psychological and emotional trauma.

False Information and Misinformation

Deepfake and AI technology exacerbates the problem of false news and misleading information. Artificially generated videos and images can be used to deceive the public, influence perceptions and create false narratives. The high level of realism offered by DeepFake makes it difficult to distinguish between fake and real videos. The result is a loss of trust in the media, which can have serious consequences for democratic values and social harmony.

Political Manipulation

DeepFake has the ability to manipulate and disrupt political processes. By creating realistic fake photos and videos of politicians, it becomes possible to show them engaged in criminal conduct or unethical acts, influencing public opinion and causing instability in government institutions. The use of deepfakes and AI during political campaigns could spread false information. It can also distort democratic decision-making, which undermines the foundations of a fair and equitable society.

Threats to National Security

Beyond personal and societal implications, DeepFake technology also poses dangers to national security. DeepFake videos can be used to impersonate high-ranking officials or military personnel, potentially leading to confusion, misinformation, or even conflict. National security could also be compromised by convincing fake videos of sensitive intelligence or military operations.

Impacts on the Financial and Economic System

The risks of DeepFake technology extend to the financial and economic sectors too. Artificially generated video can be used to create fraudulent content, for example, fake interviews with prominent executives, false stock market predictions, or manipulated corporate announcements. This can result in financial losses, market instability and a decline in confidence in the business world. In order to protect investors and ensure the integrity of the financial system, it is crucial to detect and mitigate the risks associated with DeepFake.

DeepFake Technology: How to combat it

To address the threats posed by DeepFake, a multifaceted approach is needed. Technological advances focused on reliable detection methods and authentication tools are essential. It is also important to raise public awareness about DeepFake and its implications. Education and media literacy initiatives are important to help people evaluate digital information more critically and become less prone to the false information spread through DeepFake.

Bottom Line

DeepFake is a serious threat to privacy and security. It can affect societal stability as well as politics. The ability to make highly realistic fake videos and images can cause serious harm, including reputational damage, distorted public opinion and disruption of democratic processes. Individuals, technologists and policymakers need to collaborate to devise effective countermeasures and strategies to combat the risks of DeepFake.


How To Stay Away From Deepfakes?

Generative AI is getting more proficient at creating deepfakes that can sound and look realistic. As a result, some of the more sophisticated spoofers have taken social engineering attacks to a more sinister level.


AI-Generated Voice Firm Clamps Down After 4chan Makes Celebrity Voices for Abuse

4chan members used ElevenLabs to make deepfake voices of Emma Watson, Joe Rogan, and others saying racist, transphobic, and violent things.

It was only a matter of time before the wave of artificial intelligence-generated voice startups became a plaything of internet trolls. On Monday, ElevenLabs, founded by ex-Google and Palantir staffers, said it had found an “increasing number of voice cloning misuse cases” during its recently launched beta. ElevenLabs didn’t point to any particular instances of abuse, but Motherboard found 4chan members appear to have used the product to generate voices that sound like Joe Rogan, Ben Shapiro, and Emma Watson to spew racist and other sorts of material. ElevenLabs said it is exploring more safeguards around its technology.

Source: Motherboard // Vice; 30 January 2023
