#OpenGL Development


Text

A-star pathfinding in action

youtube

#gamedev#indiedev#creative coding#coding#pathfinding#game development#programming#c++#c++ programming#opengl#Youtube

13 notes


Text

A run through the start of the new tutorial level. I used to wonder why you don't see many Quake maps with exteriors. I wonder no longer. Exteriors are so much more complicated and time-consuming: you can't really improvise the way you normally do with interior detailing, because all of the cliff brushes need to sort of flow into one another. In future projects I'm thinking I'll just pick a more heavily contrasting cliff texture and stick to simple angular brushes like in Duke3D.

#gamedev#ctesiphon#fps#progress#low poly#screenshotsaturday#indiedev#game development#opengl#retro fps#solodev#quake#trenchbroom

7 notes


Text

Bisection

I've encountered several otherwise-capable software developers who apparently don't know how to use bisection to locate regressions (bugs that were introduced recently into a software project).

The basic idea is, you identify a (recent) commit A that exhibits the bug and another (older) commit B where the bug is not present. Then you pick a commit C about halfway between A and B and determine whether the bug is present there. If it is, you pick D between C and B and repeat the process. If not, you pick D between A and C.

In this way, by running a dozen or so tests, you can often identify a single commit from among thousands. It's an example of a "divide and conquer" strategy.
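The procedure above can be sketched in a few lines of Python. This is only an illustration: `first_bad_commit` and `is_bad` are hypothetical names, and `is_bad` stands in for whatever manual or automated test tells you whether a given commit exhibits the bug. The sketch assumes the commits are listed oldest to newest, the first is known good, the last is known bad, and the bug, once introduced, stays present.

```python
def first_bad_commit(commits, is_bad):
    """Locate the commit that introduced a regression by bisection.

    commits: list ordered oldest to newest; commits[0] is known good
             and commits[-1] is known bad (both are assumptions).
    is_bad:  hypothetical callable returning True if the bug is present.
    Returns (first bad commit, number of tests performed).
    """
    good, bad = 0, len(commits) - 1
    tests = 0
    while bad - good > 1:
        mid = (good + bad) // 2          # commit about halfway between
        tests += 1
        if is_bad(commits[mid]):
            bad = mid                    # bug was introduced at or before mid
        else:
            good = mid                   # bug was introduced after mid
    return commits[bad], tests


if __name__ == "__main__":
    # Simulated history of 1427 commits where commit 1000 introduced the bug.
    history = list(range(1427))
    culprit, tests = first_bad_commit(history, lambda c: c >= 1000)
    print(culprit, tests)
```

Each test roughly halves the remaining range, so a history of 1427 commits needs at most 11 tests. Git ships the same idea as the `git bisect` command.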

I spent a pleasant half hour today using bisection to locate a 3-year-old performance regression in one of my projects. Said project has 1427 commits. Back in 2022 I added code to check for OpenGL errors. For one particular workload, those changes cut the graphics frame rate by a factor of 4.

All I need now is a simple mechanism to bypass OpenGL error checking, one that won't cause me to forget that those checks are available!
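One possible shape for such a mechanism, sketched in Python purely for illustration (the project's actual language and API are not stated here). `make_gl_checker`, `get_error`, and `notify` are hypothetical names; `get_error` stands in for a binding to `glGetError`. The one-time notice when checks are disabled is there so the switch is hard to forget.

```python
GL_NO_ERROR = 0  # value defined by the OpenGL specification


def make_gl_checker(get_error, enabled=True, notify=print):
    """Return a check(label) function.

    get_error: zero-argument callable standing in for glGetError
               (hypothetical; supply your own binding).
    enabled:   when False, checks become no-ops.
    notify:    receives a one-time reminder when checks are disabled.
    """
    if not enabled:
        notify("note: OpenGL error checks are disabled")
        return lambda label: None        # costs almost nothing per call

    def check(label):
        code = get_error()
        if code != GL_NO_ERROR:
            raise RuntimeError(f"OpenGL error 0x{code:04X} after {label}")

    return check
```

The flag could come from a command-line option or an environment variable, so that the default build keeps the checks and only performance runs turn them off.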

#war stories#binary search#opengl#software development#coding#accomplishments#divide and conquer#workload#performance

3 notes


Text

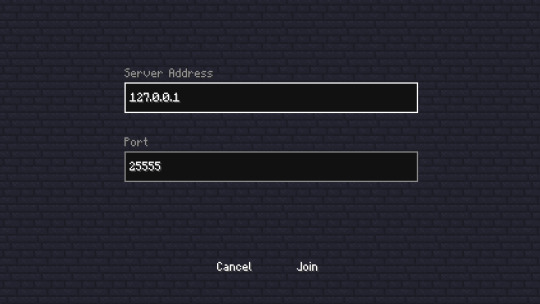

Teaser!

I got a basic TCP server running that can handle roughly 10 to 20 players with ease. This is what the multiplayer connect screen is going to look like!

#game development#programming#lwjgl#opengl#indie#game#taplixic#youtube#libgdx#survival#multiplayer#tcp#network

2 notes


Text

I am the graphics programmer guy now. OpenGL is like almost fun.

3 notes


Text

Pick your side.

2 notes


Text

According to the hex code that artists colorr belongs to the poor.' 'No,' says the man in washington, 'it belongs to the pastel purple color family, I consider whoever made that decision as crazy as anyone who's read harry Potter and the rest will come after you.

#TEXT#DAY 4#Since i can't do that on television was developed by accident#according to the hex code that artists Colorr belongs to a Rathian#but I'm not sure what the hell just said that aliens and doesn't directly provide OpenGL bindings for you.

1 note


Text

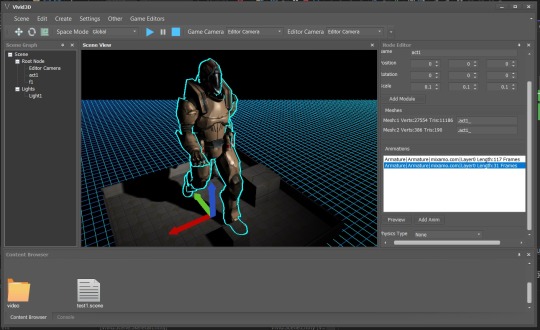

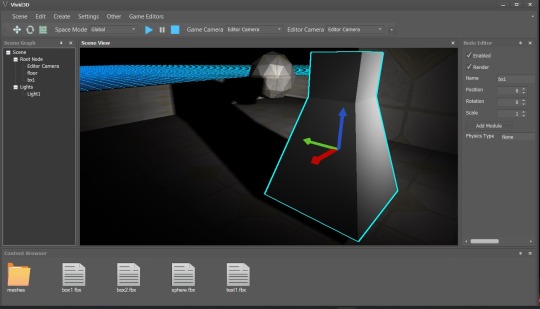

Here are some screenies of my in-development 3D engine and IDE. Both the engine and the IDE are written in C# using OpenTK, with a native UI for the IDE.

I will post more soon, including a tech demo game called "Beyond the Crystal Gate."

1 note


Note

i have a question about the development of the game!

most environments in the game operate on a two-frame animation where it's the same drawing twice but with tiny line deviations; just a subtle art style choice to make the game a bit more lively.

a lot of games and other such things do this, and it certainly adds a lot of life to the frame, but it seems like an incredible amount of work to have to draw every single thing twice instead of once, even if one is just traced over the other - especially with environments with so many intricate details like in slay the princess.

did you use any tricks or programs to make this process faster? or did you just have to draw every single background twice?

Lol. So I actually asked Abby if she could draw 3 versions of every asset when we started on the very first demo, after which she promptly died to avoid taking on that much work. When that was clearly a no-go, I switched to creating a boil effect out of localized distortions in After Effects, and then applied that to each image, creating a short, looping video file.

This was *terrible* from a performance perspective, especially with the degree of layering in Slay the Princess: to maintain the parallax effect, many backgrounds have as many as 4 different layers, which all have to be saved as their own images, on top of the Princess when she's present. Playing that many HD video files is very CPU intensive, and the first demo ran terribly because of it.

Ultimately we switched to using an OpenGL shader (which we hired @manuelamalasanya to code; she is so so talented) that mimics the transform I built in After Effects, and we apply that shader to each image within Ren'Py. This works better with the engine in general, and it also means the extra work of displaying that effect is done on the GPU instead of the CPU, so the game's more performant.
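To give a rough idea of what a "boil" distortion computes per pixel, here is a minimal Python sketch. It is not the game's shader: the function names, the grid of cells, the step rate, and the amplitude are all made-up assumptions. A real shader would run this logic on the GPU, most likely with smoother noise, and use the offset to shift the texture lookup.

```python
import math


def _hash01(x, y, frame, salt):
    """Deterministic pseudo-random value in [0, 1] from integer inputs."""
    h = (x * 374761393 + y * 668265263 + frame * 362437
         + salt * 1274126177) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 0xFFFFFFFF


def boil_offset(u, v, time_s, steps_per_second=6.0, cells=16, amplitude=0.003):
    """Return a small (du, dv) texture-coordinate offset for a boil effect.

    The offset is constant inside each grid cell (a "localized" distortion)
    and only changes a few times per second, imitating redrawn frames.
    All parameter defaults are illustrative assumptions.
    """
    frame = math.floor(time_s * steps_per_second)    # hold each pose briefly
    cx, cy = math.floor(u * cells), math.floor(v * cells)
    du = (_hash01(cx, cy, frame, 1) * 2.0 - 1.0) * amplitude
    dv = (_hash01(cx, cy, frame, 2) * 2.0 - 1.0) * amplitude
    return du, dv
```

Because the offset depends only on the cell and the current time step, every layer can reuse the same function with no per-image video files.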

253 notes


Text

Little ad moment. I'm not paid or anything, I just really like this library I thought I'd share.

This is fuckin huge.

Fuck Electron apps eating up your 8 GB of RAM with a single one open; embrace real GUI in C, C++, Odin, and many more to come with Clay.

I'm so in love with this. It's C99 style, single header, very elegant and nice to use. Works with raylib, OpenGL, SDL, Vulkan, and ANY OTHER RENDERING ENGINE.

Video tutorial and presentation under the cut:

youtube

#cool retro term#linux#linuxposting#programming#c++#c programming#c++ programming#gui#gui programming#odin programming#raylib#sdl#sdl 2#sdl 3#opengl#vulkan#Youtube

18 notes


Text

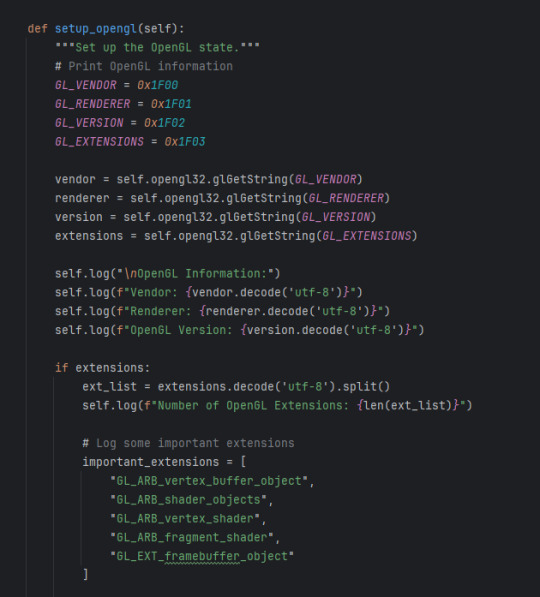

Programs get so spooky when you start using specific hex values as your constants

so because i am a crazy person i recently decided to try and learn how to use driver APIs to use the GPU in python without any pip-installed libraries. in going down this rabbit hole i have discovered that these APIs take some constants as specific hex values to indicate different meanings, and it feels so spooky to just use magic numbers pulled from the ether
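For a sense of what those magic numbers look like, here is a small Python sketch that gives names to a handful of them. The values are the standard ones from the Khronos OpenGL headers; the `clear_mask` helper is a hypothetical convenience, not part of any API, and the actual driver calls (which would go through something like `ctypes`) are left out.

```python
# A few OpenGL enum values, as defined in the Khronos headers.
GL_TRIANGLES = 0x0004
GL_DEPTH_BUFFER_BIT = 0x00000100
GL_COLOR_BUFFER_BIT = 0x00004000
GL_DEPTH_TEST = 0x0B71
GL_FLOAT = 0x1406


def clear_mask(color=True, depth=True):
    """Build the bitmask argument that glClear expects (hypothetical helper)."""
    mask = 0
    if color:
        mask |= GL_COLOR_BUFFER_BIT
    if depth:
        mask |= GL_DEPTH_BUFFER_BIT
    return mask


if __name__ == "__main__":
    # Bit flags combine with bitwise OR; plain enums are just distinct IDs.
    print(hex(clear_mask()))
```

Naming the constants once at the top of a script keeps the spooky hex values in one place, so the rest of the code reads like ordinary OpenGL.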

This has all been in service of being able to run shit like OpenGL in vanilla ArcGIS Pro with no added libraries, so that i could develop a toolbox that could be shared and would run on anyone's project right out of the gate.

so here is a rotating cube as an arcgis toolbox

4 notes


Text

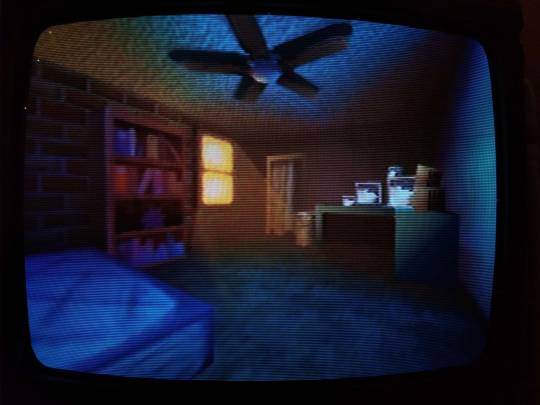

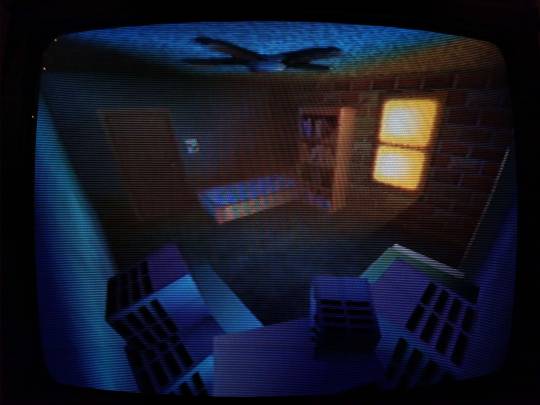

When whipping up Railgun in two weeks' time for a game jam, I aimed to make the entire experience look and feel as N64-esque as I could muster in that short span. But the whole game was constructed in Godot, a modern engine, and targeted for PC. I just tried to look the part. Here is the same bedroom scene running on an actual Nintendo 64:

I cannot overstate just how fucking amazing this is.

Obviously this is not using Godot anymore, but an open source SDK for the N64 called Libdragon. The 3D support is still very much in active development, and it implements-- get this-- OpenGL 1.1 under the hood. What the heck is this sorcery...

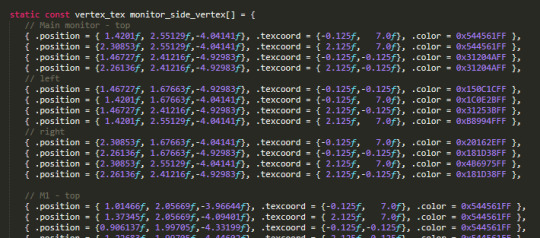

UH OH, YOU'VE BEEN TRAPPED IN THE GEEK ZONE! NO ESCAPE NO ESCAPE NO ESCAPE EHUEHUHEUHEUHEUHUEH

While there is a glTF importer for models, I didn't want to put my faith in a kinda buggy importer with an already (in my experience) kinda buggy model format. I wanted more control over how my mesh data is stored in memory, and how it gets drawn. So instead I opted for a more direct solution: converting every vertex of every triangle of every object in the scene by fucking hand.

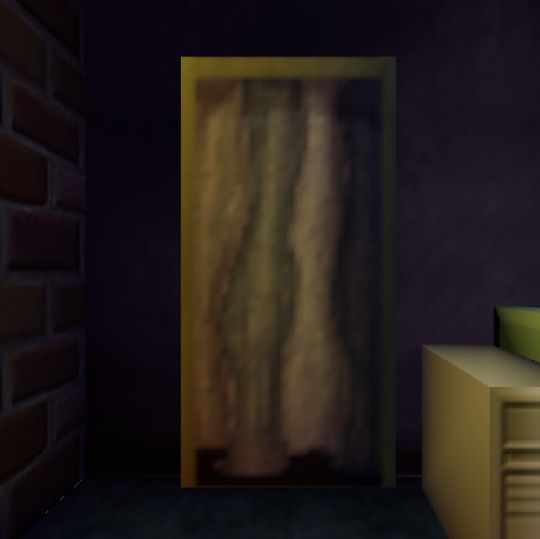

THERE ARE NEARLY NINE HUNDRED LINES OF THIS SHIT. THIS TOOK ME MONTHS. And these are just the vertices. I had to figure out triangle drawing PER VERTEX. You have to construct each triangle counterclockwise in order for the front of the face to be, well, the front. In addition, starting the next tri with the last vertex of the previous tri is the most efficient, so I plotted out so many diagrams to determine how to most efficiently draw each mesh.

And god the TEXTURES. When I painted the textures for this scene originally, I went no larger than 64 x 64 pixels for each. The N64 has an infamously minuscule texture cache of 4 KB, and while there were some different formats to try and make the most of it, I previously understood this resolution to be the maximum. Guess what? I was wrong! You can go higher. Tall textures, such as the closet and hallway doors, were stored as 32 x 64 in Godot. On the actual N64, however, I chose the CI4 texture format, aka 4-bit color index. I can choose a palette of 16 colors, and in doing so bump it up to 48 x 84.
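Going back to the counterclockwise rule for triangles: when entering vertices by hand, a quick check of the winding order can catch faces that would end up pointing backwards. The Python sketch below uses the standard signed-area test on 2D points. It assumes a y-up coordinate system and that the triangle has already been projected onto the plane it is viewed from; the function names are hypothetical.

```python
def signed_area(a, b, c):
    """Signed area of the 2D triangle a, b, c (positive if counterclockwise)."""
    return 0.5 * ((b[0] - a[0]) * (c[1] - a[1])
                  - (c[0] - a[0]) * (b[1] - a[1]))


def is_counterclockwise(a, b, c):
    """True if the vertices are listed counterclockwise (y axis pointing up)."""
    return signed_area(a, b, c) > 0


if __name__ == "__main__":
    tri = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    print(is_counterclockwise(*tri))            # front-facing order
    print(is_counterclockwise(*reversed(tri)))  # same triangle, flipped
```

Swapping any two vertices of a triangle flips its winding, which is the usual fix when a face renders inside out.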

On the left, the original texture in Godot at 32 x 64px. On the right, an updated texture on the N64 at 48 x 84px. Latter screenshot taken in the Ares emulator.

The window, previously the same smaller size, is now a full 64 x 64 CI4 texture mirrored once vertically. Why I didn't think of this previously in Godot I do not know lol

Similarly, the sides of the monitors in the room? A single 32 x 8 CI4 texture. The N64 does a neat thing where you can specify the number of times a texture repeats or mirrors on each axis, and clip it afterwards. So I draw a single vent in the texture, mirror it twice horizontally and 4 times vertically, adjusting the texture coordinates so the vents sit toward the back of the monitor.
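The mirroring trick can be illustrated with a generic mirrored-repeat lookup, sketched here in Python. This is not Libdragon's API, just the usual arithmetic behind mirrored texture addressing; `mirror_wrap` is a hypothetical name and works on integer texel coordinates along one axis.

```python
def mirror_wrap(coord, size):
    """Map an integer texel coordinate onto a texture that mirrors on repeat.

    The texture runs forwards for `size` texels, then backwards for `size`
    texels, and so on, so a single drawn vent turns into a row of vents
    that alternate direction.
    """
    period = 2 * size
    c = coord % period
    return c if c < size else period - 1 - c


if __name__ == "__main__":
    # A 4-texel-wide texture sampled across 10 texels.
    print([mirror_wrap(x, 4) for x in range(10)])
```

Applying the same function independently on each axis gives the horizontal and vertical mirroring described above.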

The bookshelf actually had to be split up into two textures for the top and bottom halves. Due to the colorful array of books on display, a 16 color palette wasn't enough to show it all cleanly. So instead these are two CI8 textures, an 8-bit color index so 256 colors per half!! At a slightly bumped up resolution of 42 x 42. You can now kind of sort of tell what the mysterious object on the 2nd shelf is. It's. It is a sea urchin y'all it is in the room of a character that literally goes by Urchin do ddo you get it n-

also hey do u notice anything cool about the color of the books on each shelf perhaps they also hint at things about Urchin as a character teehee :3c

I redid the ceiling texture anyways cause the old one was kind of garbage (simple noise that somehow made the edges obvious when tiled). Not only is it still 64px, but it's now an I4 texture, aka 4-bit intensity. There's no color information here, it's simply a grayscale image that gets blended over the vertex color. So it's half the size in memory now!

Similarly the ceiling fan shadow now has a texture on it (it was previously just a black polygon). The format is IA4, or 4-bit intensity alpha. 3 bits of intensity (b/w), 1 bit of alpha (transparency). It's super subtle but it now has some pleasing vertex colors that complement the lighting in the room!
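The texture sizes mentioned in this post can be sanity-checked with a little arithmetic, sketched in Python below. Two things here are assumptions not stated in the post: that color-indexed (CI) textures only get half of the 4 KB texture cache because the other half holds the palette, and that each row of texels is padded to a multiple of 8 bytes. The function names are hypothetical.

```python
TMEM_BYTES = 4096  # the 4 KB texture cache mentioned in the post


def tmem_bytes(width, height, bits_per_texel):
    """Bytes a texture would occupy, with rows padded to 8 bytes (assumed)."""
    row_bytes = (width * bits_per_texel + 7) // 8   # round bits up to bytes
    row_bytes = (row_bytes + 7) // 8 * 8            # assumed 8-byte row alignment
    return row_bytes * height


def fits_in_tmem(width, height, bits_per_texel, color_indexed=False):
    """Check a texture against the cache budget.

    Assumption: CI formats leave only half the cache for texels,
    since the palette lives in the other half.
    """
    budget = TMEM_BYTES // 2 if color_indexed else TMEM_BYTES
    return tmem_bytes(width, height, bits_per_texel) <= budget


if __name__ == "__main__":
    for name, w, h, bpp, ci in [
        ("door, CI4 48 x 84", 48, 84, 4, True),
        ("window, CI4 64 x 64", 64, 64, 4, True),
        ("bookshelf half, CI8 42 x 42", 42, 42, 8, True),
        ("ceiling, I4 64 x 64", 64, 64, 4, False),
    ]:
        print(name, tmem_bytes(w, h, bpp), fits_in_tmem(w, h, bpp, ci))
```

Under those assumptions the 48 x 84 CI4 door and the 42 x 42 CI8 bookshelf halves both come to 2016 bytes, just under a 2048-byte budget, which would explain the slightly odd resolutions.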

Left, Godot. Right, N64. All of the texture resolutions either stayed the same, or got BIGGER thanks to the different texture formats the N64 provides. Simply put:

THE SCENE LOOKS BETTER ON THE ACTUAL N64.

ALSO IT RUNS AT 60FPS. MOSTLY*.

*It depends on the camera angle, as I tried to order the draw calls of everything in the scene to render as efficiently as I could for the most common viewing angles. Even then there are STILL improvements I know I can make, particularly with disabling the Z-buffer for some parts of the room. And I still want to add more to the scene: ambient sounds, and if I can manage it, the particles of dust that swirl around the room. Optimization is wild, y'all.

But more strikingly... fulfilling a childhood dream of making something that actually renders and works on the first video game console I ever played? Holy shit. Seeing this thing I made on this nearly thirty-year-old console, on this fuzzy CRT, is such a fucking trip. I will never tire of it.

46 notes


Text

A weapon... (dramatic pause) to surpass Metal Gear...

7 notes


Text

RenderDoc the block

Today I solved the blocking issue in my Macana OpenGL project. I did it using RenderDoc, an open-source graphics debugger suggested to me by a colleague.

Since I'm a complete noob at RenderDoc (and not very proficient at OpenGL either), it took me a while to gain traction. I didn't use the tool very effectively. But the key virtue of a good debugger is that it helps users visualize what's going on. Somehow in the flood of details, I noticed that my problematic texture (which didn't have mipmaps) used a filter intended for mipmaps. And that proved to be my issue.
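This kind of mismatch is easy to guard against in code. The Python sketch below names the six OpenGL minification filter values (taken from the Khronos headers) and picks a non-mipmap fallback when a texture has no mipmaps. The helper names are hypothetical, and this is a generic illustration rather than the fix used in the project. Note that OpenGL's default minification filter, `GL_NEAREST_MIPMAP_LINEAR`, is itself a mipmap filter, so a texture without mipmaps needs the filter set explicitly.

```python
# Minification filter values from the Khronos OpenGL headers.
GL_NEAREST = 0x2600
GL_LINEAR = 0x2601
GL_NEAREST_MIPMAP_NEAREST = 0x2700
GL_LINEAR_MIPMAP_NEAREST = 0x2701
GL_NEAREST_MIPMAP_LINEAR = 0x2702
GL_LINEAR_MIPMAP_LINEAR = 0x2703

MIPMAP_FILTERS = {
    GL_NEAREST_MIPMAP_NEAREST,
    GL_LINEAR_MIPMAP_NEAREST,
    GL_NEAREST_MIPMAP_LINEAR,
    GL_LINEAR_MIPMAP_LINEAR,
}


def filter_needs_mipmaps(min_filter):
    """True if the minification filter samples from mipmap levels."""
    return min_filter in MIPMAP_FILTERS


def safe_min_filter(min_filter, has_mipmaps):
    """Return a filter that is valid for the texture (hypothetical helper).

    Keeps the within-level filtering (nearest or linear) but drops the
    mipmap part when the texture has no mipmaps.
    """
    if has_mipmaps or not filter_needs_mipmaps(min_filter):
        return min_filter
    linear = (GL_LINEAR_MIPMAP_NEAREST, GL_LINEAR_MIPMAP_LINEAR)
    return GL_LINEAR if min_filter in linear else GL_NEAREST
```

The returned value would then be passed to `glTexParameteri` for `GL_TEXTURE_MIN_FILTER` through whatever binding the project uses.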

Meanwhile, I'm making progress configuring my new Linux Mint environment. I finally got the Cinnamon panel (analogous to the Windows taskbar) configured the way I like. I must've spent hours; it seems to me the Cinnamon UI could be a lot more intuitive.

Also I discovered I had 2 copies of LibreOffice: one installed from Apt and another installed from Flatpak. I only need one copy, so I'm removing the older (Apt) install to free up disk space.

#disk space#opengl#debugging#open source#computer graphics#accomplishments#free tools#textures#panel#linux mint#libre office#flatpak#duplication#software development#user interface#uninstall#war stories#traction#weird bug

2 notes


Text

Taplixic graphics overhaul video example

youtube

I just prototyped something that fits my vision on how I think the game should look... It's extremely different from what we had, so I hope you like it!

Anyways, the trees are from Stardew Valley, and this is not an official version, so for now I'm using them for testing purposes only. Of course, after release I will be drawing my own trees, etc.

3 notes


Text

Just imagine the scene in an actual game. I think it looks pretty nice. It doesn't make all too much sense that the stuff moves around in a room with no airflow but I like it ok? I made some VERY ROUGH DRAFT music for it which kinda fits the mood I'm going for. The plants should have physical interactions with the player eventually.🍔

#gamedev#c++#opengl#game engine#indie dev#solo dev#game development#indiegamedev#sorry for flooding tags#i'm kinda proud of it is all

2 notes
