#Python development process


Text

Learn how to streamline Python packaging and distribution from code to PyPI! This comprehensive guide by BoTree Technologies covers essential steps, best practices, and tips to create and share your Python packages efficiently. Whether you're a developer looking to share your libraries or a team ensuring seamless distribution, this resource is your go-to for mastering Python packaging. Explore the ins and outs of packaging, versioning, metadata, dependencies, and more. Elevate your Python development process and make your packages accessible to the wider community. Dive into this informative guide and enhance your Python packaging skills today!

0 notes

Text

Need a Python expert? I offer freelance Python development for web apps, data processing, and automation. With strong experience in Django, Flask, and data analysis, I provide efficient, scalable solutions tailored to your needs. Let’s bring your project to life with robust Python development. For more information, visit my website: www.karandeeparora.com

#web services#python developers#data processing#django#data analysis#flask#python development#automation

0 notes

Text

Django Middleware: What, Why, and How

Django is a robust web framework that provides a variety of features to simplify web development. One of these powerful features is middleware. Middleware is a way to process requests globally before they reach the view or after the view has processed them. This blog post will delve into what Django middleware is, why it’s essential, and how you can create and use your own middleware in Django…
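A minimal sketch of the middleware shape described above, written as plain Python so it runs without Django installed. Django's class-based middleware receives a `get_response` callable in `__init__` and handles each request in `__call__`. Everything else here is an assumption for illustration: the `TimingMiddleware` name, the `hello_view` function, and the plain dicts standing in for Django's request and response objects.

```python
import time


class TimingMiddleware:
    """Runs code before and after the view, following Django's middleware shape."""

    def __init__(self, get_response):
        # Called once at startup with the next handler in the chain.
        self.get_response = get_response

    def __call__(self, request):
        # Code here runs before the view (and any later middleware).
        started = time.perf_counter()

        response = self.get_response(request)

        # Code here runs after the view has produced a response.
        response["X-Elapsed-Seconds"] = f"{time.perf_counter() - started:.6f}"
        return response


def hello_view(request):
    # Hypothetical view: a plain dict stands in for a real HTTP response object.
    return {"status": 200, "body": f"hello from {request['path']}"}


handler = TimingMiddleware(hello_view)
response = handler({"path": "/demo/"})
```

In a real project the class would be listed in the `MIDDLEWARE` setting rather than wrapped around a view by hand.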


#custom middleware#Django middleware#Django Tutorial#middleware best practices#Python#request processing#response processing#web development

0 notes

Text

#DSA#web#development#machine#learning#ml#data#science#datascience#python#java#c++#sql#git#devops#android#nlp#natural#language#processing#compsci#computer

0 notes

Text

What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Superbowl Ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, Perl and Python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Superbowl Ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of Worldcom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, Worldcom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning TensorFlow and PyTorch. These nominally open source tools are bound, respectively, to Google and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2X2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10/month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100/month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morgan Stanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest x-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, it could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals a system that reduces the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models that are being built and operated at a loss in the hope of someday becoming profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in PyTorch and TensorFlow will wrestle them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers' debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified) https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

4K notes


Text

Story from today. There is a local engineer who is a python breeder on the side.

Reticulated pythons are the longest snakes in the world. They are massive snakes. They get to be like 25 ft long and thicker than a human thigh.

Anyway, a while back he gave me a 10 ft male.

This was a wild snake that he had imported from Indonesia and was trying to breed it to one of his females.

Breeders do this sometimes because animals in captivity tend to become inbred, so once in a while some breeders will hire someone in a foreign country to go out to the forest and catch a wild animal and then put it in a box and ship it to the United States.

That way it introduces fresh genes into the gene pool. Bonus money if it has any special patterns or coloration.

Anyway he got the young male shipped in and put it together with one of the females and instead of having babies the female killed it.

So he gave it to me and I've had it in the freezer.

I pulled it out last night to thaw.

Male and female snakes need to be prepped and processed differently. The anatomy of male snakes requires special equipment.

So the day before I got all the equipment ready. I got up early, started work, cutting into the tail section.

It doesn't look normal on the inside.

I'm not seeing the structures that I'm supposed to see in male snakes.

I'm very confused..... does it have some sort of condition where it didn't develop correctly?

Maybe it's too young? It's 10 ft long. I don't think it's too young.

Could it be intersex? That's super rare but I've seen it a few times.

It's a FEMALE

So it turns out that this breeder had gotten someone on the other side of the world to catch and ship this snake to him for thousands of dollars and all this time it was a female.

And then he put this smaller female in with a much larger older female and she killed it.

Through the dozens of people who have handled the snake and imported it and worked with it, everybody has completely missed the fact that it's been mistakenly sexed.

Welp...

506 notes


Text

Essentials You Need to Become a Web Developer

HTML, CSS, and JavaScript Mastery

Text Editor/Integrated Development Environment (IDE): Popular choices include Visual Studio Code, Sublime Text.

Version Control/Git: Platforms like GitHub, GitLab, and Bitbucket allow you to track changes, collaborate with others, and contribute to open-source projects.

Responsive Web Design Skills: Learn a CSS framework like Bootstrap, get comfortable with layout tools like Flexbox, and master media queries

Understanding of Web Browsers: Familiarize yourself with browser developer tools for debugging and testing your code.

Front-End Frameworks: React, Angular, and Vue.js are powerful tools for building dynamic and interactive web applications.

Back-End Development Skills: Understanding server-side languages and runtimes (e.g., Node.js, Python, Ruby, PHP) and databases (e.g., MySQL, MongoDB)

Web Hosting and Deployment Knowledge: Platforms like Heroku, Vercel, Netlify, or AWS can help simplify this process.

Basic DevOps and CI/CD Understanding

Soft Skills and Problem-Solving: Effective communication, teamwork, and problem-solving skills

Confidence in Yourself: Confidence is a powerful asset. Believe in your abilities, and don't be afraid to take on challenging projects. The more you trust yourself, the more you'll be able to tackle complex coding tasks and overcome obstacles with determination.

#code#codeblr#css#html#javascript#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#html css#learn to code

2K notes


Text

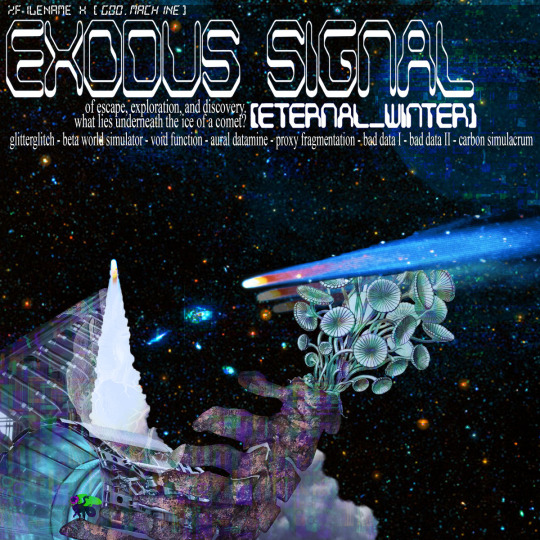

research & development is ongoing

since using jukebox for sampling material on albedo, i've been increasingly interested in ethically using ai as a tool to incorporate more into my own artwork. recently i've been experimenting with "commoncanvas", a stable diffusion model trained entirely on works in the creative commons. though i do not believe legality and ethics are equivalent, this provides me peace of mind that all of the training data was used consensually through the terms of the creative commons license. here's the paper on it for those who are curious! shoutout to @reachartwork for the inspiration & her informative posts about her process!

part 1: overview

i usually post finished works, so today i want to go more in depth & document the process of experimentation with a new medium. this is going to be a long and image-heavy post, most of it will be under the cut & i'll do my best to keep all the image descriptions concise.

for a point of reference, here is a digital collage i made a few weeks ago for the album i just released (shameless self promo), using photos from wikimedia commons and a render of a 3d model i made in blender:

and here are two images i made with the help of common canvas (though i did a lot of editing and post-processing, more on that process in a future post):

more about my process & findings under the cut, so this post doesn't get too long:

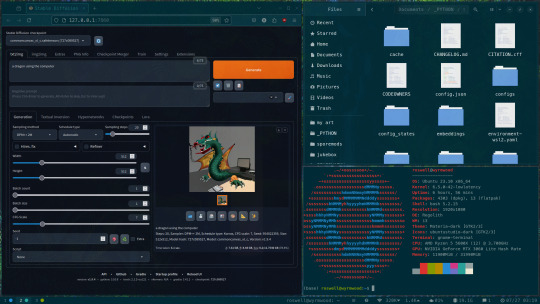

quick note for my setup: i am running this model locally on my own machine (rtx 3060, ubuntu 23.10), using the automatic1111 web ui. if you are on the same version of ubuntu as i am, note that you will probably have to build python 3.10.6 yourself (be sure to use 'make altinstall' instead of 'make install', and change the line in the webui to use 'python3.10' instead of 'python3'). just mentioning this here because nobody else i could find had this exact problem and i had to figure it out myself.
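a small sketch for checking that setup, assuming the altinstall build put a `python3.10` executable on your PATH. the `find_interpreter` helper and its candidate list are hypothetical names made up for this example, not part of the web ui.

```python
import shutil
import sys


def find_interpreter(candidates=("python3.10", "python3")):
    """Return the first candidate executable name found on PATH, or None."""
    for name in candidates:
        if shutil.which(name):
            return name
    return None


# True only if this script itself is being run by a 3.10 interpreter.
running_310 = sys.version_info[:2] == (3, 10)

# Prefers the altinstall name, falls back to the system default.
chosen = find_interpreter()
```

if `chosen` comes back as `python3` instead of `python3.10`, the altinstall binary is probably not on PATH yet.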

part 2: initial exploration

all the images i'll be showing here are the raw outputs of the prompts given, with no retouching/regenerating/etc.

so: commoncanvas has 2 different types of models, the "C" and "NC" models, trained on their database of works under the CC Commercial and Non-Commercial licenses, respectively (i think the NC dataset also includes the commercial license works, but i may be wrong). the NC model is larger, but both have their unique strengths:

"a cat on the computer", "C" model

"a cat on the computer", "NC" model

they both take the same amount of time to generate (17 seconds for four 512x512 images on my 3060). if you're really looking for that early ai jank, go for the commercial model. one thing i really like about commoncanvas is that it's really good at reproducing the styles of photography i find most artistically compelling: photos taken by scientists and amateurs. (the following images will be described in the captions to avoid redundancy):

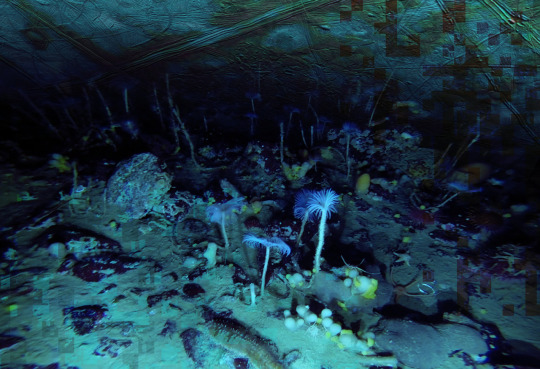

"grainy deep-sea rover photo of an octopus", "NC" model. note the motion blur on the marine snow, greenish lighting and harsh shadows here, like you see in photos taken by those rover submarines that scientists use to take photos of deep sea creatures (and less like ocean photography done for purely artistic reasons, which usually has better lighting and looks cleaner). the anatomy sucks, but the lighting and environment is perfect.

"beige computer on messy desk", "NC" model. the reflection of the flash on the screen, the reddish-brown wood, and the awkward angle and framing are all reminiscent of a photo taken by a forum user with a cheap digital camera in 2007.

so the noncommercial model is great for vernacular and scientific photography. what's the commercial model good for?

"blue dragon sitting on a stone by a river", "C" model. it's good for bad CGI dragons. whenever i request dragons of the commercial model, i either get things that look like photographs of toys/statues, or i get gamecube type CGI, and i love it.

here are two little green freaks i got while trying to refine a prompt to generate my fursona. (i never succeeded, and i forget the exact prompt i used). these look like spore creations and the background looks like a bryce render. i really don't know why there's so much bad cgi in the datasets and why the model loves going for cgi specifically for dragons, but it got me thinking...

"hollow tree in a magical forest, video game screenshot", "C" model

"knights in a dungeon, video game screenshot", "C" model

i love the dreamlike video game environments and strange CGI characters it produces-- it hits that specific era of video games that i grew up with super well.

part 3: use cases

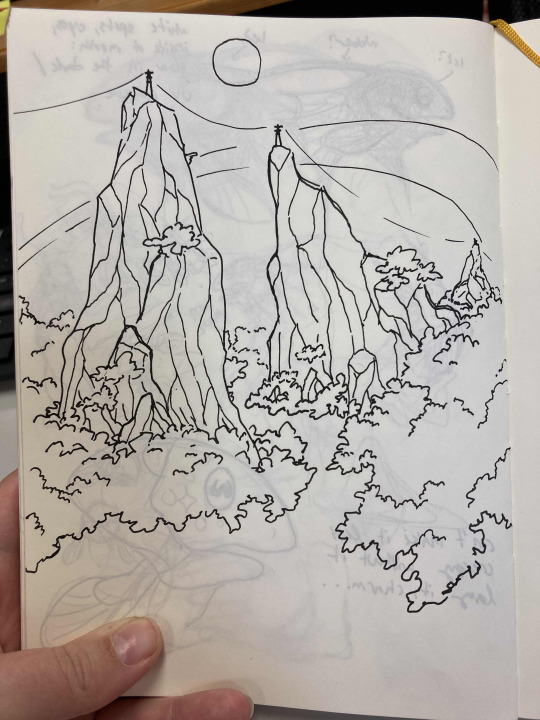

if you've seen any of the visual art i've done to accompany my music projects, you know that i love making digital collages of surreal landscapes:

(this post is getting image heavy so i'll wrap up soon)

i'm interested in using this technology more, not as a replacement for my digital collage art, but along with it as just another tool in my toolbox. and of course...

... this isn't out of lack of skill to imagine or draw scifi/fantasy landscapes.

thank you for reading such a long post! i hope you got something out of this post; i think it's a good look into the "experimentation phase" of getting into a new medium. i'm not going into my post-processing / GIMP stuff in this post because it's already so long, but let me know if you want another post going into that!

good-faith discussion and questions are encouraged but i will disable comments if you don't behave yourselves. be kind to each other and keep it P.L.U.R.

201 notes


Text

Rambling About C# Being Alright

I think C# is an alright language. This is one of the highest distinctions I can give to a language.

Warning: This post is verbose and rambly and probably only good at telling you why someone might like C# and not much else.

~~~

There's something I hate about every other language. Worse, there are things I hate about other languages that I know will never get better. Even worse, some of those things ALSO feel like unforced errors.

With C# there's a few things I dislike or that are missing. C#'s feature set does not obviously excel at anything, but it avoids making any huge misstep in things I care about. Nothing in C# makes me feel like the language designer has personally harmed me.

C# is a very tolerable language.

C# is multi-paradigm.

C# is the Full Middle Malcomist language.

C# will try to not hurt you.

A good way to describe C# is "what if Java sucked less". This, of course, already sounds unappealing to many, but that's alright. I'm not trying to gas it up too much here.

C# has sins, but let's try to put them into some context here and perhaps the reason why I'm posting will become more obvious:

C# didn't try to avoid generics and then implement them in a way that is very limiting (cough Go).

C# doesn't hamstring your ability to have statement lambdas because the language designer dislikes them and also because the language designer decided to have semantic whitespace making statement lambdas harder to deal with (cough Python).

C# doesn't require you to explicitly wrap value types into reference types so you can put value types into collections (cough Java).

C# doesn't ruin your ability to interact with memory efficiently because it forbids you from creating custom value types, ergo everything goes to the heap (cough cough Java, Minecraft).

C# doesn't have insane implicit type coercions that have become the subject of language design comedy (cough JavaScript).

C# doesn't keep privacy accessors as a suggestion and have the developers pinkie swear about it instead of actually enforcing it (cough cough Python).
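The two Python complaints in the list above are easy to see in a few lines. This is only an illustrative sketch: the `Account` class and its attributes are invented for the example.

```python
class Account:
    def __init__(self, balance):
        self._balance = balance   # single underscore: "private" by convention only
        self.__pin = "0000"       # double underscore: name-mangled, not hidden


account = Account(100)

# Nothing stops outside code from writing to the "private" attribute.
account._balance = 1_000_000

# Name mangling only renames the attribute to _ClassName__name.
leaked_pin = account._Account__pin

# Access under the original name fails, which is the only protection there is.
try:
    account.__pin
    direct_access_failed = False
except AttributeError:
    direct_access_failed = True

# A lambda body must be a single expression, so a statement is a syntax error.
try:
    compile("lambda x: return x", "<demo>", "eval")
    statement_lambda_allowed = True
except SyntaxError:
    statement_lambda_allowed = False
```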

Plainly put, a lot of the time I find C# to be alright by process of elimination. I'm not trying to shit on your favorite language. Everyone has different things they find tolerable. I have the Buddha nature so I wish for all things to find their tolerable language.

I do also think that C# is notable for being a mainstream language (aka not Haskell) that has a smaller amount of egregious mistakes, quirks and Faustian bargains.

The Typerrrrr

C# is statically typed, but the typing is largely effortless to navigate unlike something like Rust, and the GC gives a greater degree of safety than something like C++.

Of course, the typing being easy to work with also makes it less safe than Rust. But this is an appropriate trade-off for certain kinds of applications, especially considering that C# is memory safe by virtue of running on a VM. Don't come at me, I'm a Rust respecter!!

You know how some people talk about Python being amazing for prototyping? That's how I feel about C#. No matter how much time I would dedicate to Python, C# would still be a more productive language for me. The type system would genuinely make me faster for the vast majority of cases. Of course Python has gradual typing now, so any comparison gets more difficult when you consider that. But what I'm trying to say is that I never understood the idea that doing away entirely with static typing is good for fast iteration.
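For what "gradual typing" means in practice on the Python side, here is a small sketch. The `total_price` function is made up for the example. The point it shows is that annotations are recorded but not enforced when the code runs, so catching the mistake depends on running a separate static checker such as mypy.

```python
from typing import get_type_hints


def total_price(unit_price: float, quantity: int) -> float:
    return unit_price * quantity


# The annotations are stored on the function and can be inspected...
hints = get_type_hints(total_price)

# ...but the interpreter does not check them. Passing a str as quantity
# still runs, and the multiplication silently becomes string repetition.
surprising = total_price(3, "ab")
```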

Also yes, C# can be used as a repl. Leave me alone with your repls. Also, while the debugger is active you can also evaluate arbitrary code within the current scope.

I think that going full dynamic typing is a mistake in almost every situation. The fact that C# doesn't do that already puts it above other languages for me. This stance on typing is controversial, but it's my opinion that it really shouldn't be. And the wind has constantly been blowing towards adding gradual typing to dynamic languages.

The modest typing capabilities of C#, coupled with OOP and inheritance, let you create pretty awful OOP slop. But that's whatever. At work we use inheritance in very few places where it results in neat code reuse, and then it's just mostly interfaces getting implemented.

C#'s typing and generic system is powerful enough to offer you a plethora of super-ergonomic collection transformation methods via the LINQ library. There's a lot of functional-style programming you can do with that. You know, map, filter, reduce, that stuff?

Even if you make a completely new collection type, if it implements IEnumerable<T> it will benefit from LINQ automatically. Every language these days has something like this, but it's so ridiculously easy to use in C#. Coupled with how C# lets you (1) easily define immutable data types, (2) explicitly control access to struct or class members, (3) do pattern matching, you can end up with code that flows really well.
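The "implement one interface, get all the transformations for free" idea has a rough Python counterpart, sketched below. This is an analogy and not LINQ itself: the `Playlist` class is hypothetical, and implementing `__iter__` plays the role that `IEnumerable<T>` plays in C#.

```python
from functools import reduce


class Playlist:
    """Hypothetical custom collection; implementing __iter__ is all it needs."""

    def __init__(self, *durations_seconds):
        self._durations = list(durations_seconds)

    def __iter__(self):
        return iter(self._durations)


playlist = Playlist(95, 240, 30, 185)

# filter, map and reduce correspond roughly to LINQ's Where, Select and Aggregate.
long_tracks = filter(lambda seconds: seconds >= 90, playlist)
in_minutes = map(lambda seconds: seconds / 60, long_tracks)
total_minutes = reduce(lambda acc, minutes: acc + minutes, in_minutes, 0.0)
```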

A Friendly Kitchen Sink

Some people have described C#'s feature set as bloated. It is getting some syntactic diversity which makes it a bit harder to read someone else's code. But it doesn't make C# harder to learn, since it takes roughly the same amount of effort to get to a point where you can be effective in it.

Most of the more specific features can be effortlessly ignored. The ones that can't be effortlessly ignored tend to bring something genuinely useful to the language -- such as tuples and destructuring. Tuples have their own syntax, the syntax is pretty intuitive, but the first time you run into it, you will have to do a bit of learning.

C# has an immense amount of small features meant to make the language more ergonomic. They're too numerous to mention and they just keep getting added.

I'd like to draw attention to some features not because they're the most important but rather because it feels like they communicate the "personality" of C#. Not sure what level of detail was appropriate, so feel free to skim.

Stricter Null Handling. If you think not having to explicitly deal with null is the billion dollar mistake, then C# tries to fix a bit of the problem by allowing you to enable a strict context where you have to explicitly tell it that something can be null, otherwise it will assume that the possibility of a reference type being null is an error. It's a bit more complicated than that, but it definitely helps with safety around nullability.

Default Interface Implementation. A problem in C# which drives usage of inheritance is that with just interfaces there is no way to reuse code outside of passing function pointers. A lot of people don't get this and think that inheritance is just used because other people are stupid or something. If you have a couple of methods that would be implemented exactly the same for classes 1 through 99, but somewhat differently for classes 100 through 110, then without inheritance you're fucked. A much better way would be Rust's trait system, but for that to work you need really powerful generics, so it's too different a path for C# to tread. Instead what C# did was make it so that you can write an implementation for methods declared in an interface, as long as that implementation only uses members defined in the interface (this makes sense, why would it have access to anything else?). So now you can have a default implementation for the 1 through 99 case and save some of your sanity. Of course, it's not a panacea, if the implementation of the method requires access to the internal state of the 1 through 99 case, default interface implementation won't save you. But it can still make it easier via some techniques I won't get into. The important part is that default interface implementation allows code reuse and reduces reasons to use inheritance.
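To make the "1 through 99 versus 100 through 110" situation concrete, here is a sketch in Python. Python has no separate interface construct, so an abstract base class stands in for the interface, which means this is technically still inheritance and only approximates the C# feature. All class names are invented for the example.

```python
from abc import ABC, abstractmethod


class Shape(ABC):
    """Plays the role of the interface."""

    @abstractmethod
    def area(self) -> float: ...

    @abstractmethod
    def name(self) -> str: ...

    def describe(self) -> str:
        # Default implementation: uses only members the interface declares.
        return f"{self.name()} with area {self.area():.2f}"


class Square(Shape):
    # One of the many classes that are happy with the default describe().
    def __init__(self, side):
        self.side = side

    def area(self):
        return self.side ** 2

    def name(self):
        return "square"


class Circle(Shape):
    # One of the few classes that need their own describe().
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return 3.14159 * self.radius ** 2

    def name(self):
        return "circle"

    def describe(self):
        return f"round thing, radius {self.radius}"


square_text = Square(2).describe()
circle_text = Circle(1).describe()
```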

Performance Optimization. C# has a plethora of features for this, most of which will never be encountered by the average programmer. Examples: (1) stackalloc, which allocates a block of memory for unmanaged value types on the stack when you know it won't outlive the current scope. (2) Specialized APIs for avoiding memory allocations in happy paths. (3) Lazy initialization APIs. (4) APIs for dealing with memory more directly that allow high performance when interoping with C/C++ while still keeping a degree of safety.
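Of the items in that list, lazy initialization is the easiest to show outside C#. The sketch below uses Python's `functools.cached_property` as a loose analogue of C#'s `Lazy<T>`. The `Report` class and its counter are hypothetical.

```python
from functools import cached_property


class Report:
    def __init__(self):
        self.build_count = 0

    @cached_property
    def rows(self):
        # Stand-in for an expensive step; runs only on first access.
        self.build_count += 1
        return [n * n for n in range(5)]


report = Report()
count_before_access = report.build_count  # nothing has been built yet
first = report.rows                       # triggers the computation
second = report.rows                      # served from the cached value
```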

Fine Control Over Async Runtime. C# lets you write your own... async builder and scheduler? It's a bit esoteric and hard to describe. But basically all the functionality of async/await that does magic under the hood? You can override that magic to do some very specific things that you'll rarely need. Unity3D takes advantage of this in order to allow async/await to work on WASM even though it is a single-threaded environment. It implements a cooperative scheduler so the program doesn't immediately freeze the moment you do await in a single-threaded environment. Most people don't know this capability exists and it doesn't affect them.
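The idea of a cooperative scheduler in a single-threaded environment can be sketched with Python generators. This is not how C# or Unity implement it. It is only a toy showing tasks that voluntarily hand control back so that nothing blocks the single thread. The `worker` and `run_round_robin` names are made up.

```python
from collections import deque


def worker(name, steps, log):
    for step in range(steps):
        log.append(f"{name}:{step}")
        yield  # hand control back to the scheduler, like an await point


def run_round_robin(tasks):
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)        # run the task until its next yield
        except StopIteration:
            continue          # task finished, so drop it
        queue.append(task)    # otherwise reschedule it at the back


log = []
run_round_robin([worker("a", 2, log), worker("b", 3, log)])
```

The tasks interleave on one thread because each one gives up control at every `yield`.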

Tremendous Amount Of Synchronization Primitives and API. This one does actually make multithreaded code harder to deal with, but basically C# erred a lot in favor of having many different ways to do multithreading because they wanted to suit different usecases. Most people just deal with idiomatic async/await code, but a very small minority of C# coders deal with locks, atomics, semaphores, mutexes, monitors, interlocked, spin waiting etc. They knew they couldn't make this shit safe, so they tried to at least let you have ready-made options for your specific use case, even if it causes some balkanization.

Shortly Begging For Tagged Unions

What I miss from C# is more powerful generic bounds/constraints and tagged unions (or sum types or discriminated unions or type unions or any of the other 5 names this concept has).

The generic constraints you can use in C# are anemic and combined with the lack of tagged unions this is rather painful at times.

I remember seeing Microsoft devs saying they don't see enough of a usecase for tagged unions. I've at times wanted to strangle certain people. These two facts are related to one another.

My stance is that if you think your language doesn't need or benefit from tagged unions, either your language is very weird, or, more likely you're out of your goddamn mind. You are making me do really stupid things every time I need to represent a structure that can EITHER have a value of type A or a value of type B.
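For anyone who hasn't met the concept, here is a rough sketch of an "either A or B" value, written in Python instead of C#. The names `Ok`, `Err`, `parse_port` and `describe` are hypothetical, and Python's `Union` is only an approximation of a real tagged union, since nothing forces callers to handle both cases.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Ok:
    value: int


@dataclass(frozen=True)
class Err:
    message: str


# The "tagged union": a Result is exactly one of these two cases.
Result = Union[Ok, Err]


def parse_port(text: str) -> Result:
    """Return Ok(port) for a valid TCP port, otherwise Err(reason)."""
    if not text.isdecimal():
        return Err(f"not a number: {text!r}")
    port = int(text)
    if not 0 < port < 65536:
        return Err(f"out of range: {port}")
    return Ok(port)


def describe(result: Result) -> str:
    """Handle each case explicitly; the isinstance check plays the role of the tag."""
    if isinstance(result, Ok):
        return f"port {result.value}"
    return f"error: {result.message}"
```

The caller gets one return type that says "this is a value or an error, never both", which is the thing that takes awkward workarounds without language support.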

But I think C# will eventually get tagged unions. There's a proposal for it here. I would be overjoyed if it got implemented. It seems like it's been getting traction.

Also there was an entire section on unchecked exceptions that I removed because it wasn't interesting enough. Yes, C# could probably have checked exceptions and it didn't and it's a mistake. But ultimately it doesn't seem to have caused any make-or-break in a comparison with Java, which has them. They'd all be better off with returning an Error<T>. Short story is that the consequences of unchecked exceptions have been highly tolerable in practice.

Ecosystem State & FOSSness

C# is better than ever and the tooling ecosystem is better than ever. This is true of almost every language, but I think C# receives a rather high amount of improvements per version. Additionally the FOSS story is at its peak.

Roslyn, the bedrock of the toolchain, the compiler and analysis provider, is under MIT license. The fact that it does analysis as well is important, because this means you can use the wealth of Roslyn analyzers to do linting.

If your FOSS tooling lets you compile but you don't get any checking as you type, then your development experience is wildly substandard.

A lot of stupid crap with cross-platform compilation that used to be confusing or difficult is now rather easy to deal with. It's basically as easy as (1) use .NET Core, (2) tell dotnet to build for Linux. These steps take no extra effort and the first step is the default way to write C# these days.

Dotnet is part of the SDK and contains functionality to create .NET Core projects and to use other tools to build said projects. Dotnet is published under MIT, because the whole SDK and runtime are published under MIT.

Yes, the debugger situation is still bad -- there's no FOSS option for it, but this is more because nobody cares enough to go and solve it. JetBrains proved anyone can do it if they have enough development time, since they wrote a debugger from scratch for their proprietary C# IDE Rider.

Where C# falls flat on its face is the "userspace" ecosystem. Plainly put, because C# is a Microsoft product, people with FOSS inclinations have steered clear of it to such a degree that the packages you have available are not even 10% of what packages a Python user has available, for example. People with FOSS inclinations are generally the people who write packages for your language!!

I guess if you really really hate leftpad, you might think this is a small bonus though.

Where-in I talk about Cross-Platform

The biggest thing the ecosystem has been lacking for me is a package, preferably FOSS, for developing cross-platform applications. Even if it's just cross-platform desktop applications.

Like yes, you can build C# to many platforms, no sweat. The same way you can build Rust to many platforms, some sweat. But if you can't show a good GUI on Linux, then it's not practically-speaking cross-platform for that purpose.

Microsoft has repeatedly done GUI stuff that, predictably, only works on Windows. And yes, Linux desktop is like 4%, but that 4% contains >50% of the people who create packages for your language's ecosystem, almost the exact point I made earlier. If a developer runs Linux and they can't have their app run on Linux, they are not going to touch your language with a ten foot pole for that purpose. I think this largely explains why C#'s ecosystem feels stunted.

The thing is, I'm not actually sure how bad or good the situation is, since most people just don't even try using C# for this usecase. There's a general... ecosystem malaise where few care to use the language for this, chiefly because of the tone that Microsoft set a decade ago. It's sad.

HOWEVER.

Avalonia, A New Hope?

Today we have Avalonia. Avalonia is an open-source framework that lets you build cross-platform applications in C#. It's MIT licensed. It will work on Windows, macOS, Linux, iOS, Android and also somehow in the browser. It seems to do this by actually drawing pixels via SkiaSharp (or optionally Direct2D on Windows).

They make money by offering migration services from WPF apps to Avalonia. Plus general support.

I can't say how good Avalonia is yet. I've researched a bit and it's not obviously bad, which is distinct from being good. But if it's actually good, this would be a holy grail for the ecosystem:

You could use a statically typed language that is productive for this type of software development to create cross-platform applications that have higher performance than the Electron slop. That's valuable!

This possibility warrants a much higher level of enthusiasm than I've seen, especially within the ecosystem itself. This is an ecosystem that was, for a while, entirely landlocked, only able to make Windows desktop applications.

I cannot overstate how important it is for a language's ecosystem to have a package like this and have it be good. Rust is still missing a good option. Gnome is unpleasant to use and buggy. Falling back to using Electron while writing Rust just seems like a bad joke. A lot of the Rust crates that are neither Electron nor Gnome tend to be really really undercooked.

And now I've actually talked myself into checking out Avalonia... I mean after writing all of that I feel like a charlatan for not having investigated it already.

72 notes


Note

The Rocky Horror characters at the mall?? :) What shops would they like? What antics would they get into?

-A.B.

OMG I LOVE IT SO MUUUCHHHH

Frank: He is headed straight to Victoria's Secret- he loves Victoria's secret. He's also been banned from numerous candy shops because he keeps trying to use the candy in attempts to seduce staff and other customers with it. It started out with just lollipops, which they couldn't really get after him for, but then it escalated into "inappropriate use of the whipped cream" and "noise complaints from his moaning whenever he ate anything" and he was out

Brad: Brad loves to go fishing through those big bins of DVDs in video stores!!! (Idk if they still exist I haven't seen them in my recent trips to the mall but they were excellent while they lasted. I probably would not have the patience for what Brad does, but I'm proud that he does it) One time he found a Monty Python in there and rode that high for MONTHS! It was his greatest moment. He's also always going to wherever the Mario games are!!!

Janet: Janet's favorite stores are Forever 21 and Macy's. She definitely gets obsessed with stuff, has her arms full, and then realizes halfway through that she most certainly cannot afford most of these things. She then has to spend an eternity deciding what to put back. There have been a few times in which a store has needed to inform her that they'll be closing in fifteen minutes and she's needed to make an incredibly quick turn-around. She always comes back for the things she's left behind once she comes into some more money, though, and then the process starts all over again and she is thrilled every time!

Riff Raff: Riff Raff loves to get nail polish at makeup stores and other little accessories that can make him feel especially elegant! He'll go more out there and get better outfits when he's not in Frank's servitude- but anything he gets will inevitably become Frank's if he's not careful. ALSO he always gets himself a nice little treat because Frank usually gives him and Magenta leftovers. He's developed a true love of cheese popcorn and will get himself a bag if one of the stores is selling one. However, he does tend to look like a bird when he eats, so if he sees someone looking at him funny, and he notices that they are not a child (he'll turn the other way if they are), he will stare them directly in the eyes and go kind of cross-eyed until they leave and he can enjoy his popcorn in peace

Magenta: Magenta will go and outright get her nails done at the mall. Then, depending on the day, she'll either get some perfume, clothes, accessories, or baking supplies! Sometimes she'll just go to a bakery and let them do the job for her. She also loves those fun vulgar signs that you can get to hang up. She's kind of seen as a test of sorts when she walks into a store because- if she doesn't like the service you provide- she will go online and leave the most scorching of reviews. If you pass the "Magenta test" your shop honestly probably gets a small celebration

Columbia: At every mall you will find a few special shops that sell AMAZING fashion and accessories that are so alternative and unique. You will find Columbia there every time!!! It's where all of her favorite outfits come from! She'll also always get donuts on her way out

Eddie: Eddie steals from Hot Topic. It's an unstoppable fact of life. No one can stop it

Doctor Scott: Doctor Scott loves bookstores!!! He'll always decide what his next read is on the spot, but he'll always pick something that he ends up enjoying. He's read so many things that it's surprising! He subbed for Brad and Janet's literature class one time, checked the curriculum, and had read every book on the list. How does he always pick something that he ends up enjoying? Well he reads the first 5 or so chapters in the store before he even buys it! He's caught a few employees looking at him funny for it a few times, but there is, in fact, no rule against this action. He's been doing it since he was like 20 and no one can stop him

Rocky: Rocky loves shops where he can get board games and special ice cream flavors- but his favorite thing that the mall has to offer is the ball pit. Oh how Rocky loves the ball pit

The Criminologist: the Criminologist loves getting pictures, either drawn, painted, or photographed, that he can frame and hang up on his walls! He especially loves ones of city skylines!!!

#THANK YOU A.B!!!!!!#I love requests everybody and am always eager for more!!!!#this was a FANTASTIC idea!!#rocky horror#rocky horror picture show#rocky horror show#richard o'brien#riff raff#frank n furter#brad majors#janet weiss#magenta rocky horror#columbia rocky horror#rocky rocky horror#eddie rocky horror#doctor scott#the criminologist rocky horror#rhps

29 notes


Note

fwiw and i have no idea what the artists are doing with it, a lot of the libraries that researchers are currently using to develop deep learning models from scratch are all open source built upon python, i'm sure monsanto has its own proprietary models hand crafted to make life as shitty as possible in the name of profit, but for research there's a lot of available resources library and dataset wise in related fields. It's not my area per se but i've learnt enough to get by in potentially applying it to my field within science, and largely the bottleneck in research is that the servers and graphics cards you need to train your models at a reasonable pace are of a size you can usually only get from google or amazon or facebook (although some rich asshole private universities from the US can actually afford the cost of the kind of server you need. But that's a different issue wrt resource availability in research in the global south. Basically: more money for the public university, goddammit)

Yes, one great thing about software development is that for every commercially closed thing there are open source versions that do better.

The possibilities for science are enormous. Gigantic. Much of modern science is based on handling huge amounts of data no human can process at once. Specially trained models can be key to things such as complex genetics, especially simulating proteomes. They already have been used there to incredible effect, but custom models are hard to make. I think AIs that can be reconfigured to particular cases might change things in a lot of fields forever.

I am concerned, however, about the overconsumption of electronics this might lead to when everyone wants their pet ChatGPT on their PC, but this isn't a thing that started with AI. Electronic waste and planned obsolescence are already wasting countless resources in chips just to feed fashion items like iPhones; this is a matter of consumption and of making computers more modular and longer lasting as the tools they are. I've also read that models recently developed in China consume far fewer resources and could potentially be available on common desktop computers, so things might change in as little as 2 years.

25 notes


Text

We had an ask today that touched on Will's sprite and its layout, so I thought it'd be fun to do one of my backstage in game development posts.

One of my favourite things in Ren'py (the Python-based scripting system I use to create Made Marion) is its layered sprite system. Layered sprites allow creators to link different images together so that they don't have to make an entirely new sprite for every facial expression and outfit change that a sprite has. Instead they can make transparent images that layer on top of each other like paper dolls.

Will happens to be my most complex sprite for two reasons. First, he has two major outfits because he wears a flamboyant swashbuckling outfit when he's not incognito and a dark hooded outfit when he's doing more thiefy tasks. He also has two major arm poses that are used with all of his outfits because I wanted to visually indicate when he's feeling more closed-off versus more open.

Add a cape and hat to the deal and poor Arrapso (our lead/sprite artist) was going nuts with all the layered sprite bits and bobs. Will's sprite has quite the base setup. If I'd just gone completely basic with the sprite layout, I would have to type the following in every time I called up Will's sprite as he appears above:

show will normaldowncloak hat_gloatinge gloatingm hathair normal normaldown

That's a lot of typing. Early in the process, I spoke with Feniks, our awesome code consultant, about how to link sprite bits together. They showed me a great system that I used so that now all I have to do to call up the Will above is type:

show will normaldown gloating

Most of my sprites are sorted by outfit, but because Will is special, he's actually sorted by his arms. "Normaldown" is his hand on hip pose while wearing his normal swashbuckler outfit. If I type "normaldown" my code settings call up that set of arms and add on his normal outfit, the appropriate cloak background, and his hairstyle with him wearing his hat. "Gloating" is my compound facial expression that combines his "gloating" eyes and mouth.
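The linking idea can be modelled in plain Python. To be clear, this is not the game's actual Ren'Py code and not Ren'Py's layered image syntax; the `Pose` class, the lookup tables and `expand` are hypothetical, built only from the layer names quoted above. The sketch assumes a pose decides the cloak, hair, outfit and arms, and that wearing the hat swaps in the hat-shadowed eyes.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Pose:
    cloak: str
    hair: str
    outfit: str
    arms: str
    has_hat: bool


# Hypothetical tables: one short tag stands in for several layers.
POSES: Dict[str, Pose] = {
    "normaldown": Pose(
        cloak="normaldowncloak",
        hair="hathair",
        outfit="normal",
        arms="normaldown",
        has_hat=True,
    ),
}

# A compound expression is an (eyes, mouth) pair.
EXPRESSIONS: Dict[str, Tuple[str, str]] = {
    "gloating": ("gloatinge", "gloatingm"),
}


def expand(pose_tag: str, expression_tag: str) -> List[str]:
    """Turn the two short tags into the full list of layers to draw."""
    pose = POSES[pose_tag]
    eyes, mouth = EXPRESSIONS[expression_tag]
    if pose.has_hat:
        eyes = "hat_" + eyes  # shadowed eyes follow the hat automatically
    return [pose.cloak, eyes, mouth, pose.hair, pose.outfit, pose.arms]


long_form = "show will " + " ".join(expand("normaldown", "gloating"))
```

Here `long_form` comes out as the long command from earlier, which is the whole point: the short command carries the same information once the links are set up.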

I also have facial expressions that combine different eyes and mouths (angrye smilem often makes a really good sarcastic expression!). Will even has two sets of eyes, one normal and one with his hat's shadow over them. The shadowed eyes are automatically linked to the presence of his hat, so I don't have to type anything extra there when I'm coding in a scene.

Here's an example of what my layered linking code looks like for Will:

Yeah, he's got a LOT of bits and bobs. It was a ton of work to set up, but it made it soooo much easier during the bulk of my coding since 90% of the time I now only have to type in an arm pose and a facial expression to call a properly attired Will onto the scene.

This does somewhat limit my flexibility. As I mentioned in my ask, the automatic linkage of Will's casual arm pose to his hatless hairstyle means he cannot easily wear his casual outfit with his hat. That's fine, since generally he isn't going to. And I've added and removed some outfits here and there if they weren't working for how I ended up using a sprite.

Anyway, as a player, layered sprites are why Made Marion can have such cool sprites with tons of facial expressions, different arm poses, combat poses, and multiple outfits without the game being 10 gigabytes in size and without its coder going entirely bananas. That's pretty cool!

29 notes


Text

The Sims 4: New Game Patch (September 18th, 2024)

Your game should now read: PC: 1.109.185.1030 / Mac: 1.109.185.1230 / Console: 1.99.

Sul Sul Simmers!

This patch is a big one and brings many new updates and fixes into the game that span across different packs, including improvements to apartment walls, ceiling lighting, and a whole host of fixes for our recently released expansion pack, Lovestruck. These, along with so many more across both Base Game and Packs, can be found below.

Thank you for your continued reporting efforts at AnswersHQ. It really helps to let us know the issues you care most about. We hope these fixes keep improving your game and allow you to keep having more fun! More to come.

There’s a colorful update to Build/Buy items! 650 color variants have been added and there are new items such as archways, doors, and even ground covers to spruce up your terrain. Check out the vibrant colors and new items in the video. For more details, scroll to the end of the patch notes to see a list of all the Build/Buy items. We can’t wait to see how you mix and match!


Performance

Reducing Memory Usage:

Frequent Memory Updates – Increased the frequency of memory usage data updates to prevent out-of-memory crashes.

Improving Simulation Performance:

Streamlining Data Storage – We restructured how we store game data to reduce the overhead of using Python objects, making the game run faster and use less memory.

Efficient Buff Generation – Reworked how temporary data is generated and stored to fix previous issues and optimize memory usage.

Reducing Load Times:

Optimizing Render Target Allocation – Stopped unnecessary allocation of large color targets during certain rendering processes, now saving valuable memory especially in high-resolution settings.

DirectX 11 (DX11) Updates

We’re pleased to announce improvements to the DirectX 11 executable for PC users. While these updates are focused on Windows PCs, Xbox Simmers will also see benefits from these changes as the Xbox runs a console-based variant of DirectX 11. Here’s what to expect:

NVIDIA and AMD

NVIDIA and AMD Graphics Cards – Players using NVIDIA and AMD GPUs will now automatically default to the DX11 executable. Players on other GPUs, like Intel, will continue to enjoy The Sims 4 on DX9 until a later update.

DirectX 11 Enhancements – Faster Graphics Processing – Implemented changes to how graphics data is updated, reducing delays between the CPU and GPU for smoother gameplay.

Performance Boost with Constant Buffers (cbuffers) – This enhances performance by reducing overhead and managing memory more efficiently. Users with mid to high-end GPUs should notice improved performance!

New DirectX 9 Option in Graphics Setting – By default, Simmers on NVIDIA and AMD GPUs will launch The Sims 4 using DX11. If you prefer, you can switch to DX9 via Game Options > Graphics and enable the DirectX 9 toggle before re-launching The Sims 4 to play using DirectX 9.

Intel

Ongoing Intel Development – We are continuing development on bringing DirectX 11 to Intel GPU based PCs but it needs a little longer before we can bring it to Simmers as the default option. For now Intel based Simmers will continue to use DirectX 9 when running The Sims 4.

DirectX 11 Opt-In – DirectX 11 is available for those Simmers who want to try it, including those using an Intel GPU, but you may experience visual issues with mods. We recommend disabling all mods while using the -dx11 command line argument.

For detailed information about DirectX 11 and instructions on how to enter launch arguments for both the EA App and Steam, please visit here. If you encounter any issues with DirectX, you can find assistance here.

The Gallery

Pack filters now properly work for Home Chef Hustle in The Gallery and library. No more hiding.

Base Game

[AHQ] Outdoor lighting will no longer affect inside the room through ceilings. Let there be (proper) light.

[AHQ and AHQ] When attempting to save, “Error Code: 0“ no longer occurs related to Gigs or Neighborhood Stories.

[AHQ] Addressed an additional issue where the game would fail to load and display Error Code 123 when traveling between lots.

[AHQ] Clay and Future Cubes will no longer get left on lots during events. Pick up your trash and leave nothing behind.

[AHQ] Frogs, mice and fishes in tanks are now visible when placed in laptop mode. Welcome back, friends!

[AHQ] Sims will put their tablets and homework back into their inventory instead of placing them in the world as long as they are standing or sitting. Again, stop littering, Sims!

[AHQ] The call is not coming from inside the house–you'll stop getting invitational phone calls from your own Household Members.

[AHQ] “Complete a Daily Work Task” Want now completes properly after finishing a daily work task. Work work work.

[AHQ] Children now have the option to quit their “After School Activity“. Although quitters never prosper.

[AHQ] Thought bubbles of Sims will no longer appear through walls and floors. Keep your thoughts to yourself.

[AHQ] Family fortune - “Heal Negative Sentiments” task will now properly complete.

[AHQ] Sims will return from work or school and switch into the same Everyday Outfit they had on instead of defaulting to the first Everyday Outfit in the Create a Sim list. You will wear what I tell you to wear, Sim!

[AHQ] Certain cabinet/shelf combinations over kitchen sinks will no longer prevent Sim from washing in the sink. No excuses for not cleaning up after yourself.

[AHQ] Outdoor shadows now move smoothly without jumping on the screen on ultra graphics settings and at different Live Mode speeds.

[AHQ] Camera jittering is no longer observed in Build Buy mode after using Terrain Tools.

[AHQ] When recent neighborhood stories mention a Sim that died in another neighborhood, switching to the respective Sim household will now have an urn present. RIP.

[AHQ] Upgrading washer/dryer now completes Nerd Brain Aspiration.

[AHQ] Sims will now hold the acarajé dish the right way while eating. It’s delicious however you eat it, though.

[AHQ] Autonomous check infant no longer causes new random cold weather outfit to be generated for infant. They’re not cold.

Infant no longer stretches when crawling in deep snow. Although we question why you’re letting your infant crawl in the snow.

[AHQ] Teen Sim is able to take vacation days while working in the lifeguard career. It’s only fair.

[AHQ] Event goals remain visible even after editing from the Calendar.

Siblings can no longer be set as engaged in Create a Sim.

[AHQ] The Teen Goal Oriented Aspiration now gets completed properly after getting promoted at work.

Investigating missing Doodlepip splines. Reticulation progressing.

[AHQ] “Become friends with“ Want no longer appears for Sims with relationship equal or above friends. We’re already friends.

[AHQ] World icons on the world selection screen no longer move when middle-clicked.

[AHQ] Locked seed packets are unlocked in BB when using the gardening skill cheat “stats.set_skill_level Major_Gardening 10”. Gimme my seeds!

[AHQ] Female Sims' stomachs will no longer become invisible when paired with Masculine cargo pants in certain color swatches.

“Likes/Dislikes“ Sim preferences are now available for Sims created via Create a Sim Stories.

Sim animation will no longer pop when sitting on a stool and asking another Sim an inappropriate question.

[AHQ] Sim thumbnails are no longer low resolution on the Resume button.

[AHQ] Re-fixed issue where Skill List gets out of order, specifically when switching between Sims.

[AHQ] ‘ymTop_TshirtRolled_Yellow' top no longer clips with bottoms in Create a Sim.

[AHQ] Event Goals created through the Calendar properly show up during the Event, even if you edit the Event.

You can view the rest of the game patch notes on the Official site

26 notes


Note

If you are going to make a game here’s some things that might be helpful!

Game engines:

Godot: very new-dev friendly and it’s free. Has its own programming language (GDScript) but also supports C#. It’s best for 2D games but it can do 3D also.

Unity: I don’t even know if I should be recommending Unity. It has caused me much pain and the suffering. But Unity has an incredible amount of guides and tutorials. And once you get the hang of something it’s hard to get caught on the same thing again. It also has a great Visual Studio integration and uses C#. I will warn you the unity animator is where all dreams go to die. It’s a tedious process but you can probably get some plugins to help with that.

Unreal: Don’t use it unless you’re building a very large or very detailed 3D game. It also uses C++ which is hell.

Renpy: Made for visual novels but has support for small mini games. It only supports Python iirc. Basically if you’re making a VN it’s renpy all the way otherwise you should look elsewhere.

What to learn: Game design and how to act as your own game designer. As a designer you need to know if a part of your game isn’t meshing with the rest of it and be willing to give up that part if needed. Sound design is very important as well. If you want to make your own sounds Audacity is perfect for recording and cutting up your clips. If you want to find sound effects I recommend freesound.org and the YouTube royalty free music database.

Sadly I can’t recommend a lot of places to learn this stuff because I’m taking Game Development in Uni. So most of my info comes from my lectures and stuff. One of my game design textbooks is pretty good but it’s around $40 CAD. It’s called The Game Designer's Playbook by Samantha Stahlke and Pejman Mirza-Babaei if you’re interested (fun fact there’s a photo of Toriel in there)

Anyway sorry for dumping this large ask on you I’m just really passionate about game design and I like to see other people get into it.

please do not apologize I'd never heard half of this stuff so this is super useful!! I've seen some godot tutorials on YouTube although so far I've played around with RPG maker MV (it was on sale. very very fiddly interface, i had trouble getting around it) and gamemaker, which recently became free for non-commercial use (a lot more approachable on first impact but like i said, haven't really done anything substantial in either yet).

mostly, I'm still in the super vague stage. I've got an idea for the main story conflict, the protagonist and their foil, the general aesthetic i want to go for (likely 2D graphics, but it would be cool to make like. small cutscenes in low-poly 3D) but not much else. haven't exactly decided on the gameplay either! it's gonna necessarily be rpg-esque, but I'm not much of a fan of classic turn-based combat so. I'm gonna check out other games and see if i can frankenstein anything cooler :P

#like for example. if i were ever to make a daemo game (knock on wood) i was thinking that it would work out quite well#if i made it a PUZZLE rpg kind if game. since the player character is no longer frisk/chara/connected to the player#and daemo doesn't really have any reason to 1) be possessed or 2) go on murderous rampages#so with a base game like undertale where those ARE crucial parts of player-world interaction I'd have to redirect it elsewhere#it being player input in the story#but I'm not sure puzzles are quite the solutions for this other story....... we'll see#answered asks#SAVE point#thank you so much!

83 notes


Text

introduction. hi :)

hello!!

i wanted to document my process of learning to code :) it's something i've always been interested in, but never got to, because i felt like it would take too much time to become good. well, the time will pass anyways, i need a project to stay sane. i have adhd, so it's somewhat of a developing hyperfixation / special interest for me.

i was using sololearn to learn, before i got paywalled :/ -- now i'm using freecodecamp and will probably also use codecademy. on freecodecamp, i'm doing the certified full stack developer program which will (from my understanding) teach me to code front-end / client side as well as back-end. i knew some HTML to begin with, but so far i've finished the basics of HTML mini-course and am on to structural HTML. After the full HTML topic, the course teaches me CSS, JavaScript, Back-End JavaScript and Python.

LONG STORY SHORT!! let's be friends, because i love doing this. also i need help. and advice. so much of it.

#codeblr#studyblr#programming#coding#web development#web developing#progblr#backend development#frontend#frontenddevelopment#html#html css#java#javascript#python#fullstackdevelopment#computer science#stem#stemblr#introduction#looking for moots#mutuals#looking for mutuals

10 notes


Text

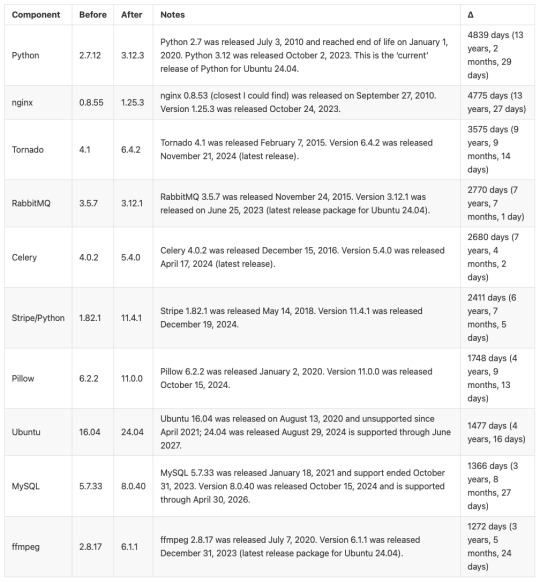

BRB... just upgrading Python

CW: nerdy, technical details.

Originally, MLTSHP (well, MLKSHK back then) was developed for Python 2. That was fine for 2010, but 15 years later, and Python 2 is now pretty ancient and unsupported. January 1st, 2020 was the official sunset for Python 2, and 5 years later, we’re still running things with it. It’s served us well, but we have to transition to Python 3.

Well, I bit the bullet and started working on that in earnest in 2023. The end of that work resulted in a working version of MLTSHP on Python 3. So, just ship it, right? Well, the upgrade process basically required upgrading all Python dependencies as well. And some (flyingcow, torndb, in particular) were never really official, public packages, so those had to be adopted into MLTSHP and upgraded as well. With all those changes, it required some special handling. Namely, setting up an additional web server that could be tested against the production database (unit tests can only go so far).

Here’s what that change comprised: 148 files changed, 1923 insertions, 1725 deletions. Most of those changes were part of the first commit for this branch, made on July 9, 2023 (118 files changed).

But by the end of that July, I took a break from this task - I could tell it wasn’t something I could tackle in my spare time at that time.

Time passes…

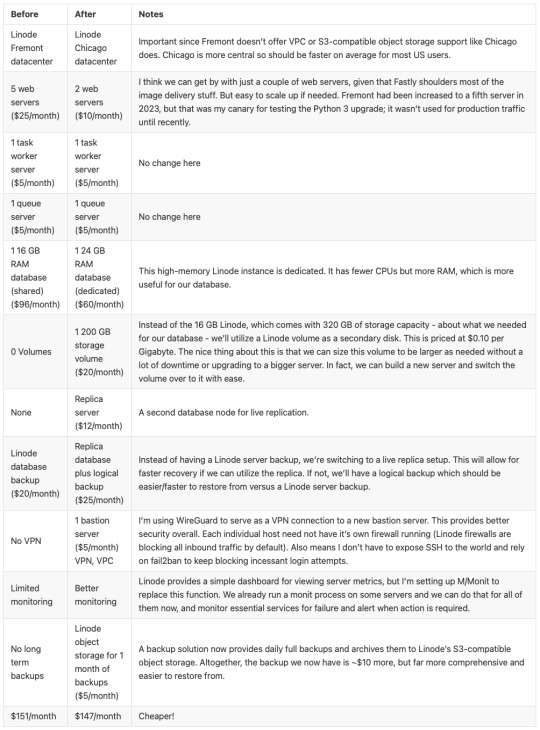

Fast forward to late 2024, and I take some time to revisit the Python 3 release work. Making a production web server for the new Python 3 instance was another big update, since I wanted the Docker container OS to be on the latest LTS edition of Ubuntu. For 2023, that was 20.04, but in 2025, it’s 24.04. I also wanted others to be able to test the server, which means the CDN layer would have to be updated to direct traffic to the test server (without affecting general traffic); I went with a client-side cookie that could target the Python 3 canary instance.
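As a rough illustration of the cookie-based canary idea, here is a small Python sketch. In practice this decision would live in the CDN's own configuration, not in Python, and the cookie name `py3_canary` and the backend names are assumptions made up for the example, not MLTSHP's real values.

```python
from http.cookies import SimpleCookie

# Hypothetical names, for illustration only.
STABLE_BACKEND = "python2-web"
CANARY_BACKEND = "python3-canary"
CANARY_COOKIE = "py3_canary"


def choose_backend(cookie_header: str) -> str:
    """Send a request to the canary only if the client opted in via cookie."""
    cookies = SimpleCookie()
    cookies.load(cookie_header)  # parses a raw "name=value; name2=value2" header
    morsel = cookies.get(CANARY_COOKIE)
    if morsel is not None and morsel.value == "1":
        return CANARY_BACKEND
    return STABLE_BACKEND  # everyone else is unaffected
```

Testers set the cookie in their own browser and land on the new server, while general traffic never sees a difference.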

In addition to these upgrades, there were others to consider — MySQL, for one. We’ve been running MySQL 5, but version 9 is out. We settled on version 8 for now, but could also upgrade to 8.4… 8.0 is just the version you get for Ubuntu 24.04. RabbitMQ was another server component that was getting behind (3.5.7), so upgrading it to 3.12.1 (latest version for Ubuntu 24.04) seemed proper.

One more thing - our datacenter. We’ve been using Linode’s Fremont region since 2017. It’s been fine, but there are some emerging Linode features that I’ve been wanting. VPC support, for one. And object storage (basically the same as Amazon’s S3, but local, so no egress cost to-from Linode servers). Both were unavailable to Fremont, so I decided to go with their Chicago region for the upgrade.

Now we’re talking… this is now not just a “push a button” release, but a full-fledged, build everything up and tear everything down kind of release that might actually have some downtime (while trying to keep it short)!

I built a release plan document and worked through it. The key to the smooth upgrade I wanted was to make the cutover as seamless as possible. Picture it: once everything is set up for the new service in Chicago - new database host, new web servers and all, what do we need to do to make the switch almost instant? It’s Fastly, our CDN service.

All traffic to our service runs through Fastly. A request to the site comes in, Fastly routes it to the appropriate host, which in turn speaks to the appropriate database. So, to transition from one datacenter to the other, we basically need to change the hosts Fastly speaks to. Those hosts will already be set to talk to the new database. But that’s a key wrinkle - the new database…

The new database needs the data from the old database. And to make for a seamless transition, it needs to be up to the second in step with the old database. To do that, we have to take a copy of the production data and get it up and running on the new database. Then, we need some process that will copy over any data added since the last sync. This sounded a lot like replication to me, but the more I looked at doing it that way, the less confident I was that I could set it up without bringing the production server down. That’s because any replica needs to start in a synchronized state. You can’t really achieve that with a live database. So, instead, I created my own sync process that would copy new data on a periodic basis as it came in.
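One common way to do a periodic "copy what's new" sync is a high-water mark: remember the largest id already copied and fetch only rows above it on each pass. The sketch below shows that pattern and is not the actual MLTSHP sync script. It uses Python's built-in sqlite3 as a stand-in for MySQL so it runs anywhere, and the `id` and `body` columns are hypothetical. Note that this simple version only catches newly inserted rows with increasing ids; updates and deletes to old rows would need extra handling.

```python
import sqlite3


def sync_new_rows(source, target, table, last_id):
    """Copy rows with id > last_id from source to target.

    Returns the new high-water mark (the largest id copied), or last_id
    unchanged if there was nothing new. Assumes a hypothetical table
    layout of (id INTEGER PRIMARY KEY, body TEXT).
    """
    rows = source.execute(
        f"SELECT id, body FROM {table} WHERE id > ? ORDER BY id", (last_id,)
    ).fetchall()
    with target:  # commit the whole batch as one transaction
        target.executemany(
            f"INSERT OR REPLACE INTO {table} (id, body) VALUES (?, ?)", rows
        )
    return rows[-1][0] if rows else last_id
```

Run on a timer, each pass picks up where the previous one left off, so the final pass just before cutover only has a few seconds of data to move.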

Beyond this, we need proper replication going in the new datacenter. In case the database server goes away unexpectedly, a replica of it allows for faster recovery and some peace of mind. Logical backups can be made from the replica and stored in Linode’s object storage in case something really disastrous happens (like tables getting deleted by some intruder or a bad data migration).

I wanted better monitoring, too. We’ve been using Linode’s Longview service and that’s okay and free, but it doesn’t act on anything that might be going wrong. I decided to license M/Monit for this. M/Monit is so lightweight and nice, along with Monit running on each server to keep track of each service needed to operate stuff. Monit can be given instructions on how to self-heal certain things, but also provides alerts if something needs manual attention.

And finally, Linode’s Chicago region supports a proper VPC setup, which allows for all the connectivity between our servers to be totally private to their own subnet. It also means that I was able to set up an additional small Linode instance to serve as a bastion host - a server that can be used for a secure connection to reach the other servers on the private subnet. This is a lot more secure than before… we’ve never had a breach (at least, not to my knowledge), and this makes that even less likely going forward. Remote access via SSH is now unavailable without using the bastion server, so we don’t have to expose our servers to potential future ssh vulnerabilities.

So, to summarize: the MLTSHP Python 3 upgrade grew from a code release to a full stack upgrade, involving touching just about every layer of the backend of MLTSHP.

Here’s a before / after picture of some of the bigger software updates applied (apologies for using images for these tables, but Tumblr doesn’t do tables):

And a summary of infrastructure updates:

I’m pretty happy with how this has turned out. And I learned a lot. I’m a full-stack developer, so I’m familiar with a lot of devops concepts, but actually doing that role is newish to me. I got to learn how to set up a proper secure subnet for our set of hosts, making them more secure than before. I learned more about Fastly configuration, about WireGuard, about MySQL replication, and about deploying a large update to a live site with little to no downtime. A lot of that is due to meticulous release planning and careful execution. The secret for that is to think through each and every step - no matter how small. Document it, and consider the side effects of each. And with each step that could affect the public service, consider the rollback process, just in case it’s needed.
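The "every step gets a rollback" idea can be sketched in a few lines of Python. This is an illustration of the planning pattern, not the actual release plan, which was a document worked through by hand; the `Step` and `run_release` names are made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    apply: Callable[[], None]      # performs the change
    rollback: Callable[[], None]   # undoes the change


def run_release(steps: List[Step]) -> bool:
    """Apply steps in order; if one fails, undo the completed ones in reverse.

    The failed step itself is not rolled back, on the assumption that a
    step which raised did not take effect. Returns True on full success.
    """
    done: List[Step] = []
    for step in steps:
        try:
            step.apply()
        except Exception as exc:
            print(f"step {step.name!r} failed: {exc}")
            for finished in reversed(done):
                finished.rollback()
            return False
        done.append(step)
    return True
```

Writing the rollback next to each step forces the question "how do I undo this?" before the step is ever run, which is most of the value.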

At this time, the server migration is complete and things are running smoothly. Hopefully we won’t need to do everything at once again, but we have a recipe if it comes to that.

15 notes
