#SQL Data Access


Text

Unlock Data Governance: Revolutionary Table-Level Access in Modern Platforms

Dive into our latest blog on mastering data governance with Microsoft Fabric & Databricks. Discover key strategies for robust table-level access control and secure your enterprise's data. A must-read for IT pros! #DataGovernance #Security


#Access Control#Azure Databricks#Big data analytics#Cloud Data Services#Data Access Patterns#Data Compliance#Data Governance#Data Lake Storage#Data Management Best Practices#Data Privacy#Data Security#Enterprise Data Management#Lakehouse Architecture#Microsoft Fabric#pyspark#Role-Based Access Control#Sensitive Data Protection#SQL Data Access#Table-Level Security

0 notes

Text

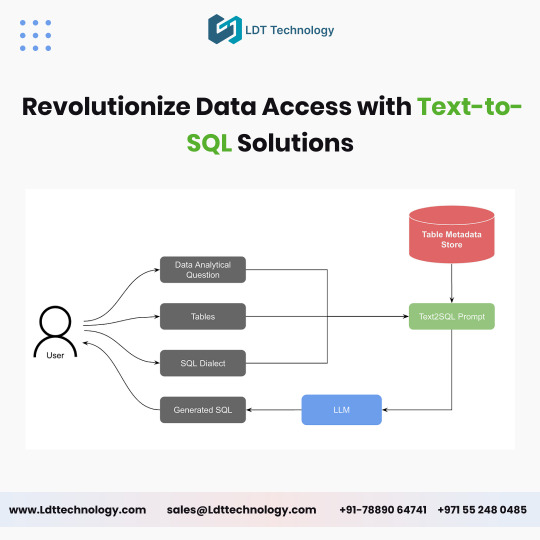

Unlocking the Power of Text-to-SQL Development for Modern Enterprises

In today’s data-driven world, businesses constantly seek innovative solutions to simplify data access and enhance decision-making. Text-to-SQL development has emerged as a game-changing technology, enabling users to interact with databases using natural language queries. By bridging the gap between technical complexity and user-friendly interaction, this technology is revolutionizing how enterprises harness the power of their data.

What is Text-to-SQL Development?

Text-to-SQL development involves creating systems that translate natural language queries into SQL statements. This allows users, even those without technical expertise, to retrieve data from complex databases by simply typing or speaking queries in plain language. For example, instead of writing a traditional SQL query like “SELECT * FROM sales WHERE region = 'North America'”, a user can ask, “What are the sales figures for North America?”
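To make the idea concrete, here is a deliberately tiny sketch in Python with the built-in sqlite3 module. It recognizes a single question shape with a regular expression and turns it into a parameterized query. Real text-to-SQL systems typically rely on language models rather than one hand-written pattern, and the `question_to_sql` helper, the `sales` table layout, and the sample rows are all assumptions made for illustration.

```python
import re
import sqlite3

# Hypothetical pattern: this toy translator understands one question shape only.
QUESTION = re.compile(
    r"what are the sales figures for (?P<region>.+?)\??$", re.IGNORECASE
)


def question_to_sql(question):
    """Return (sql, params) for a recognized question, or None otherwise."""
    match = QUESTION.match(question.strip())
    if match is None:
        return None
    # The region travels as a bound parameter, never as part of the SQL text.
    return "SELECT * FROM sales WHERE region = ?", (match.group("region"),)


# Assumed sample data, only so the generated query has something to return.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North America", 1200.0), ("Europe", 950.0)],
)

sql, params = question_to_sql("What are the sales figures for North America?")
rows = conn.execute(sql, params).fetchall()
print(rows)
```

Keeping the user's words out of the SQL text and passing them as parameters is a sensible habit for any translator like this, whatever produces the query.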

The Benefits of Natural Language Queries

Natural language queries offer a seamless way to interact with databases, making data access more intuitive and accessible for non-technical users. By eliminating the need to learn complex query languages, organizations can empower more employees to leverage data in their roles. This democratization of data access drives better insights, faster decision-making, and improved collaboration across teams.

Enhancing Efficiency with Query Automation

Query automation is another key advantage of text-to-SQL development. By automating the translation of user input into SQL commands, enterprises can streamline their workflows and reduce the time spent on manual data retrieval. Query automation can also reduce hand-typed query errors, although generated SQL should still be reviewed or validated before it supports critical business operations.

Applications of Text-to-SQL in Modern Enterprises

Text-to-SQL development is being adopted across various industries, including healthcare, finance, retail, and logistics. Here are some real-world applications:

Business Intelligence: Empowering analysts to generate reports and dashboards without relying on IT teams.

Customer Support: Enabling support staff to quickly retrieve customer data and history during interactions.

Healthcare: Allowing medical professionals to access patient records and insights without navigating complex systems.

Building Intuitive Database Solutions

Creating intuitive database solutions is essential for organizations looking to stay competitive in today’s fast-paced environment. Text-to-SQL technology plays a pivotal role in achieving this by simplifying database interactions and enhancing the user experience. These solutions not only improve operational efficiency but also foster a culture of data-driven decision-making.

The Future of Text-to-SQL Development

As artificial intelligence and machine learning continue to advance, text-to-SQL development is poised to become even more sophisticated. Future innovations may include improved language understanding, support for multi-database queries, and integration with voice-activated assistants. These developments will further enhance the usability and versatility of text-to-SQL solutions.

Conclusion

Text-to-SQL development is transforming how businesses access and utilize their data. By leveraging natural language queries, query automation, and intuitive database solutions, enterprises can unlock new levels of efficiency and innovation. As this technology evolves, it will continue to play a crucial role in shaping the future of data interaction and decision-making.

Embrace the potential of text-to-SQL technology today and empower your organization to make smarter, faster, and more informed decisions.

#text-to-SQL development#natural language queries#data access#query automation#intuitive database solutions

0 notes

Video

youtube

Chat with local Database using LLM - access & understand data without ne...

0 notes

Text

Linked Server vs. PolyBase: Choosing the Right Approach for SQL Server Data Integration

When it comes to pulling data from another Microsoft SQL Server, two popular options are Linked Server and PolyBase. Both technologies enable you to access and query data from remote servers, but they have distinct differences in their implementation and use cases. In this article, we’ll explore the practical applications of Linked Server and PolyBase, along with T-SQL code examples, to help you…


0 notes

Text

Teaching myself SQL and quietly seething about all the places I worked who wanted so, so desperately to use Excel and Access for all of their data.

This is so much better. This is so much better I'm going to throw UP.

7 notes


Text

SQL Server 2022 Edition and License instructions

SQL Server 2022 Editions:

• Enterprise Edition is ideal for applications requiring mission critical in-memory performance, security, and high availability

• Standard Edition delivers fully featured database capabilities for mid-tier applications and data marts

SQL Server 2022 is also available in free Developer and Express editions. Web Edition is offered in the Services Provider License Agreement (SPLA) program only.

The online store Keyingo sells SQL Server 2017/2019/2022 Standard Edition.

SQL Server 2022 licensing models

SQL Server 2022 offers customers a variety of licensing options aligned with how customers typically purchase specific workloads. There are two main licensing models that apply to SQL Server:

PER CORE: Gives customers a more precise measure of computing power and a more consistent licensing metric, regardless of whether solutions are deployed on physical servers on-premises, or in virtual or cloud environments.

• Core based licensing is appropriate when customers are unable to count users/devices, have Internet/Extranet workloads or systems that integrate with external facing workloads.

• Under the Per Core model, customers license either by physical server (based on the full physical core count) or by virtual machine (based on virtual cores allocated), as further explained below.

SERVER + CAL: Provides the option to license users and/or devices, with low-cost access to incremental SQL Server deployments.

• Each server running SQL Server software requires a server license.

• Each user and/or device accessing a licensed SQL Server requires a SQL Server CAL that is the same version or newer – for example, to access a SQL Server 2019 Standard Edition server, a user would need a SQL Server 2019 or 2022 CAL.

Each SQL Server CAL allows access to multiple licensed SQL Servers, including Standard Edition and legacy Business Intelligence and Enterprise Edition Servers.

SQL Server 2022 Editions availability by licensing model:

Physical core licensing – Enterprise Edition

• Customers can deploy an unlimited number of VMs or containers on the server and utilize the full capacity of the licensed hardware, by fully licensing the server (or server farm) with Enterprise Edition core subscription licenses or licenses with SA coverage based on the total number of physical cores on the servers.

• Subscription licenses or SA provide(s) the option to run an unlimited number of virtual machines or containers to handle dynamic workloads and fully utilize the hardware’s computing power.

Virtual core licensing – Standard/Enterprise Edition

When licensing by virtual core on a virtual OSE with subscription licenses or SA coverage on all virtual cores (including hyperthreaded cores) on the virtual OSE, customers may run any number of containers in that virtual OSE. This benefit applies both to Standard and Enterprise Edition.

Licensing for non-production use

SQL Server 2022 Developer Edition provides a fully featured version of SQL Server software—including all the features and capabilities of Enterprise Edition—licensed for development, test and demonstration purposes only. Customers may install and run the SQL Server Developer Edition software on any number of devices. This is significant because it allows customers to run the software on multiple devices (for testing purposes, for example) without having to license each non-production server system for SQL Server.

A production environment is defined as an environment that is accessed by end-users of an application (such as an Internet website) and that is used for more than gathering feedback or acceptance testing of that application.

SQL Server 2022 Developer Edition is a free product!

#SQL Server 2022 Editions#SQL Server 2022 Standard license#SQL Server 2019 Standard License#SQL Server 2017 Standard Liense

7 notes


Text

SQL Fundamentals #1: SQL Data Definition

Last year in college, I had the opportunity to dive deep into SQL. The course was made even more exciting by an amazing instructor. Fast forward to today, and I regularly use SQL in my backend development work with PHP. Today, I felt the need to refresh my SQL knowledge a bit, and that's why I've put together three posts aimed at helping beginners grasp the fundamentals of SQL.

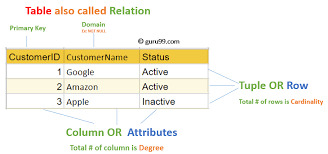

Understanding Relational Databases

Let's Begin with the Basics: What Is a Database?

Simply put, a database is like a digital warehouse where you store large amounts of data. When you work on projects that involve data, you need a place to keep that data organized and accessible, and that's where databases come into play.

Exploring Different Types of Databases

When it comes to databases, there are two primary types to consider: relational and non-relational.

Relational Databases: Structured Like Tables

Think of a relational database as a collection of neatly organized tables, somewhat like rows and columns in an Excel spreadsheet. Each table represents a specific type of information, and these tables are interconnected through shared attributes. It's similar to a well-organized library catalog where you can find books by author, title, or genre.

Key Points:

Tables with rows and columns.

Data is neatly structured, much like a library catalog.

You use a structured query language (SQL) to interact with it.

Ideal for handling structured data with complex relationships.

Non-Relational Databases: Flexibility in Containers

Now, imagine a non-relational database as a collection of flexible containers, more like bins or boxes. Each container holds data, but they don't have to adhere to a fixed format. It's like managing a diverse collection of items in various boxes without strict rules. This flexibility is incredibly useful when dealing with unstructured or rapidly changing data, like social media posts or sensor readings.

Key Points:

Data can be stored in diverse formats.

There's no rigid structure; adaptability is the name of the game.

Non-relational databases (often called NoSQL databases) are commonly used.

Ideal for handling unstructured or dynamic data.

Now, Let's Dive into SQL:

SQL is a:

Data Definition Language (what today's post is all about)

Data Manipulation Language

Data Query Language

Task: Building and Interacting with a Bookstore Database

Setting Up the Database

Our first step in creating a bookstore database is to establish it. You can achieve this with a straightforward SQL command:

CREATE DATABASE bookstoreDB;

SQL Data Definition

As the name suggests, this step is all about defining your tables. By the end of this phase, your database and the tables within it are created and ready for action.

1 - Introducing the 'Books' Table

A bookstore is all about its collection of books, so our 'bookstoreDB' needs a place to store them. We'll call this place the 'books' table. Here's how you create it:

    CREATE TABLE books (
        -- Don't worry, we'll fill this in soon!
    );

Now, each book has its own set of unique details, including titles, authors, genres, publication years, and prices. These details will become the columns in our 'books' table, ensuring that every book can be fully described.

Now that we have the plan, let's create our 'books' table with all these attributes:

    CREATE TABLE books (
        title VARCHAR(40),
        author VARCHAR(40),
        genre VARCHAR(40),
        publishedYear DATE,
        price INT(10)
    );
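If you'd like to try the statement without installing a database server, here is a minimal sketch using Python's built-in sqlite3 module. SQLite is only a stand-in here (an assumption, since the post itself targets a server where you run CREATE DATABASE first), and it is much more relaxed about declared column types than MySQL-style servers are.

```python
import sqlite3

# An in-memory SQLite database plays the role of bookstoreDB in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE books (
        title VARCHAR(40),
        author VARCHAR(40),
        genre VARCHAR(40),
        publishedYear DATE,
        price INT(10)
    )
""")

# PRAGMA table_info returns one row per column:
# (cid, name, declared type, notnull, default value, pk)
columns = [(row[1], row[2]) for row in conn.execute("PRAGMA table_info(books)")]
for name, declared_type in columns:
    print(name, declared_type)
```

One thing you might revisit in a real project: an integer price cannot hold cents, so a decimal type is often the safer pick.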

With this structure in place, our bookstore database is ready to house a world of books.

2 - Making Changes to the Table

Sometimes, you might need to modify a table you've created in your database. Whether it's correcting an error during table creation, renaming the table, or adding/removing columns, these changes are made using the 'ALTER TABLE' command.

For instance, if you want to rename your 'books' table:

ALTER TABLE books RENAME TO books_table;

If you want to add a new column:

ALTER TABLE books ADD COLUMN description VARCHAR(100);

Or, if you need to delete a column:

ALTER TABLE books DROP COLUMN title;

3 - Dropping the Table

Finally, if you ever want to remove a table you've created in your database, you can do so using the 'DROP TABLE' command:

DROP TABLE books;
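Here is a rough end-to-end run of those commands, again sketched with Python's sqlite3 module as a stand-in database. The `table_names` and `column_names` helpers are made up for this example. Two assumptions to flag: the sketch drops the new `description` column instead of `title`, and it renames the table last, because once a table is renamed every later statement has to use the new name.

```python
import sqlite3


def table_names(conn):
    """List the tables that currently exist in the database."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )
    return [row[0] for row in rows]


def column_names(conn, table):
    """List the column names of one table."""
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (title VARCHAR(40), author VARCHAR(40))")

# Add a column.
conn.execute("ALTER TABLE books ADD COLUMN description VARCHAR(100)")
after_add = column_names(conn, "books")

# Drop a column. SQLite only supports this from version 3.35, hence the guard.
if sqlite3.sqlite_version_info >= (3, 35, 0):
    conn.execute("ALTER TABLE books DROP COLUMN description")

# Rename the table; from here on the name 'books' no longer exists.
conn.execute("ALTER TABLE books RENAME TO books_table")
after_rename = table_names(conn)

# Remove the table entirely.
conn.execute("DROP TABLE books_table")
after_drop = table_names(conn)

print(after_add, after_rename, after_drop)
```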

To keep this post concise, our next post will delve into the second step, which involves data manipulation. Once our bookstore database is up and running with its tables, we'll explore how to modify and enrich it with new information and data. Stay tuned ...

Part2

#code#codeblr#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#learn to code#sql#sqlserver#sql course#data#datascience#backend

112 notes


Text

Structured Query Language (SQL): A Comprehensive Guide

Structured Query Language, commonly called SQL (pronounced "ess-que-ell" or sometimes "sequel"), is the standard language for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant language for database systems around the world.

Structured query language commands with examples

Today, virtually every major relational database management system (RDBMS), such as MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete records.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL lets users communicate with databases to store and retrieve structured data.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For instance, when you write SELECT * FROM users, you don’t need to tell SQL how to fetch the data; the database figures that out.

Standardized: SQL has been standardized by organizations like ANSI and ISO, with most database systems implementing the core language and adding their own extensions.

Relational Model-Based: SQL is designed to work with tables (also called relations) in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several major categories of commands, each with a distinct purpose.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects like tables, schemas, indexes, and so on.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but preserve its structure.

Example:

    CREATE TABLE employees (
        id INT PRIMARY KEY,
        name VARCHAR(100),
        salary DECIMAL(10,2)
    );

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:

    INSERT INTO employees (id, name, salary)
    VALUES (1, 'Alice Johnson', 75000.00);

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:

    SELECT name, salary FROM employees WHERE salary > 60000;

This command retrieves names and salaries of employees earning more than 60,000.
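The definition, insert, and query steps can be strung together in one short script. This is a sketch using Python's built-in sqlite3 module, assuming an `employees` table with `id`, `name`, and `salary` columns. The second employee, Bob Lee, is invented so that the filter has something to exclude.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# DDL: define the table.
conn.execute(
    "CREATE TABLE employees ("
    " id INT PRIMARY KEY,"
    " name VARCHAR(100),"
    " salary DECIMAL(10,2))"
)

# DML: add rows (Bob Lee is a made-up second row for contrast).
conn.executemany(
    "INSERT INTO employees (id, name, salary) VALUES (?, ?, ?)",
    [(1, "Alice Johnson", 75000.00), (2, "Bob Lee", 52000.00)],
)

# DQL: read back only the employees earning more than 60,000.
high_paid = conn.execute(
    "SELECT name, salary FROM employees WHERE salary > 60000"
).fetchall()
print(high_paid)
```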

4. Data Control Language (DCL)

DCL commands deal with permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:

    GRANT SELECT, INSERT ON employees TO john_doe;

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:

    BEGIN;
    UPDATE employees SET salary = salary * 1.10;
    COMMIT;
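To see what COMMIT and ROLLBACK actually change, the sketch below runs the same 10% raise twice with Python's sqlite3 module: once rolled back, once committed. The `employees` table, its single row, and the `current_salary` helper are assumptions for illustration. Passing `isolation_level=None` leaves transaction control to the explicit BEGIN, COMMIT, and ROLLBACK statements.

```python
import sqlite3

# isolation_level=None: Python does not open transactions on its own here.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice Johnson', 75000.0)")


def current_salary(conn):
    """Read the salary of the only row in the table."""
    return conn.execute("SELECT salary FROM employees WHERE id = 1").fetchone()[0]


# First attempt: the raise is undone by ROLLBACK.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 1.10")
conn.execute("ROLLBACK")
after_rollback = current_salary(conn)

# Second attempt: the raise is made permanent by COMMIT.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 1.10")
conn.execute("COMMIT")
after_commit = current_salary(conn)

print(after_rollback, after_commit)
```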

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows sharing a property.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.

Example with JOIN:

    SELECT employees.name, departments.name
    FROM employees
    JOIN departments ON employees.dept_id = departments.id;

Types of Joins in SQL

INNER JOIN: Returns records with matching values in both tables.

LEFT JOIN: Returns all records from the left table, and matched records from the right.

RIGHT JOIN: Opposite of LEFT JOIN.

FULL JOIN: Returns all records when there is a match in either table.

SELF JOIN: Joins a table to itself.
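The difference between join types is easiest to see on a row that has no match. The sketch below uses Python's sqlite3 module with an assumed two-table layout (`employees.dept_id` pointing at `departments.id`) and an invented employee who has no department. It sticks to INNER and LEFT joins because older SQLite builds do not support RIGHT or FULL joins.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER);
    INSERT INTO departments VALUES (1, 'Engineering');
    -- Bob Lee is a made-up employee with no department assigned.
    INSERT INTO employees VALUES (1, 'Alice Johnson', 1), (2, 'Bob Lee', NULL);
""")

query = """
    SELECT employees.name, departments.name
    FROM employees
    {join} departments ON employees.dept_id = departments.id
    ORDER BY employees.id
"""

# INNER JOIN keeps only employees that have a matching department.
inner_rows = conn.execute(query.format(join="INNER JOIN")).fetchall()

# LEFT JOIN keeps every employee and fills the missing department with NULL.
left_rows = conn.execute(query.format(join="LEFT JOIN")).fetchall()

print(inner_rows)
print(left_rows)
```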

Subqueries and Nested Queries

A subquery is a query inside another query.

Example:

    SELECT name FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees);

This finds employees who earn more than the average salary.
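A quick way to check the subquery is to run it against three sample salaries. This sketch uses Python's sqlite3 module, and the rows are invented for the example. The inner SELECT is evaluated first to get the average, and the outer query then compares each row against it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Alice Johnson", 75000.0), ("Bob Lee", 52000.0), ("Carol Diaz", 91000.0)],
)

# The average of the three sample salaries, computed on its own.
average = conn.execute("SELECT AVG(salary) FROM employees").fetchone()[0]

# The same average used as a subquery inside the WHERE clause.
above_average = conn.execute("""
    SELECT name FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY name
""").fetchall()

print(round(average, 2), above_average)
```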

Functions in SQL

SQL includes built-in functions for performing calculations and formatting (exact names vary by database system):

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

Date Functions: NOW(), CURDATE(), DATEADD()

Conversion Functions: CAST(), CONVERT()
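Several of these functions can be combined in one query, together with GROUP BY and HAVING. The sketch below uses Python's sqlite3 module with an invented `dept` column and sample rows. Date and concatenation functions differ a lot between database systems, so it sticks to functions that SQLite is known to provide.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [
        ("Alice Johnson", "Engineering", 75000.0),
        ("Bob Lee", "Sales", 52000.0),
        ("Carol Diaz", "Engineering", 91000.0),
    ],
)

summary = conn.execute("""
    SELECT UPPER(dept),                    -- string function
           COUNT(*),                       -- aggregate functions
           MAX(salary),
           CAST(AVG(salary) AS INTEGER)    -- conversion function
    FROM employees
    GROUP BY dept
    HAVING COUNT(*) > 1                    -- keep departments with 2+ people
""").fetchall()

print(summary)
```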

Indexes in SQL

An index is used to speed up searches.

Example:

    CREATE INDEX idx_name ON employees(name);

Indexes help improve the performance of queries over large data sets.
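You can ask the database how it plans to run a query and watch the plan change once the index exists. The sketch below does this with SQLite's EXPLAIN QUERY PLAN through Python's sqlite3 module. The generated employee names and the `query_plan` helper are assumptions, and the exact plan wording varies between SQLite versions, so the code only looks for the index name.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [(f"employee-{i}", 50000.0 + i) for i in range(1000)],
)


def query_plan(conn):
    """Return SQLite's description of how it would look up one name."""
    rows = conn.execute(
        "EXPLAIN QUERY PLAN SELECT * FROM employees WHERE name = ?",
        ("employee-42",),
    )
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


plan_before = query_plan(conn)   # no index yet: the whole table is scanned
conn.execute("CREATE INDEX idx_name ON employees(name)")
plan_after = query_plan(conn)    # the lookup can now go through idx_name

print(plan_before)
print(plan_after)
```

Indexes are not free: each one has to be updated on every insert and update, so they are usually added for columns that are searched often.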

Views in SQL

A view is a virtual table created by a query.

Example:

    CREATE VIEW high_earners AS
    SELECT name, salary FROM employees WHERE salary > 80000;

Views are useful for:

Security (hiding certain columns)

Simplifying complex queries

Reusability
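Because a view stores the query rather than a copy of the data, its contents follow the underlying table. The sketch below shows this with Python's sqlite3 module, using invented rows and an assumed hidden `ssn` column to illustrate the security point.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The ssn column is an assumed sensitive field that the view leaves out.
conn.execute("CREATE TABLE employees (name TEXT, salary REAL, ssn TEXT)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Alice Johnson", 75000.0, "000-00-0001"), ("Carol Diaz", 91000.0, "000-00-0002")],
)

conn.execute("""
    CREATE VIEW high_earners AS
    SELECT name, salary FROM employees WHERE salary > 80000
""")

before = conn.execute("SELECT name FROM high_earners ORDER BY name").fetchall()

# Changing the base table changes what the view returns on the next read.
conn.execute("UPDATE employees SET salary = 85000 WHERE name = 'Alice Johnson'")
after = conn.execute("SELECT name FROM high_earners ORDER BY name").fetchall()

# Only the columns chosen in the view definition are exposed.
view_columns = [row[1] for row in conn.execute("PRAGMA table_info(high_earners)")]

print(before, after, view_columns)
```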

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

1NF: No repeating groups.

2NF: No partial dependency.

3NF: No transitive dependency.

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used in data analytics platforms like Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient records, treatment histories, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: For storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced features and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft’s relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complex for certain operations.

Not ideal for unstructured data (NoSQL databases like MongoDB are better suited).

Vendor-specific extensions can reduce portability.


2 notes


Text

I'd rather have been asleep at 1:40am instead of laying there thinking about how uncertain and scared I feel right now, but I was laying there feeling uncertain and scared and realized I have no idea what people that work in offices DO.

I've only worked retail/customer service outside of the military and even while working in an office in the military, I had nothing to do.

At first I was put in one office that didn't need me so they just said "Here... Manage these files." and I was like "..... how, exactly??" and ended up retyping the labels for all of them because some were wrong and there was nothing else for me to do.

Then they gave me the personnel database. I rebuilt it and made it accessible in two days, and that was it. After that, I filled out orders for one person once. The only way I had anything to DO was by becoming the mail clerk which is another customer-facing, cyclically repetitive task that is never done and needs redone in the same way every day. After the mail was done, which took about two hours, I would leave or go sit in my office with nothing to do because the database was my whole job and it was DONE. It wouldn't need changed until the software was updated and that wouldn't be for years.

I had a once-a-week task of making a physical copy of the server which meant going into a closet, popping a tape into a machine, and waiting. I had to sit there with it. There was nothing else to do. I got in trouble for coloring in a coloring book while waiting for the backups to write and I was like "........ What am I SUPPOSED to do, then?????" "Work on the database!" "It's DONE........"

If it's SQL stuff do people sit around until the boss says "Hey, I need to know how much we spent on avocado toast this month." and then whip up a report real quick and wait for the boss to want a report on something else? What about when the boss doesn't need a report?

What do IT people do when things are working smoothly?

I can understand data entry, that would be like "Here we have all these waivers that were signed for the indoor trampoline business and these need plugged into the database manually because the online one was down so they're on paper." or something like that but what about when the online waivers do work what do they do then?

?????

It's like that episode of Seinfeld where George gets an office job but just sits there all day because he has no idea what he's supposed to be doing, no one tells him, and he eventually gets fired because he didn't do anything.

13 notes


Text

Ok more complaining abt work

More technically capable but genuinely sweet but also power hungry coworker keeps seeing other people (me) getting assigned to projects or working on things and then secretly tackles them herself IF she's interested and then makes no indication that she's working on it to the people assigned. So then we end up with these parallel solutions/reports and its like ????? I know you're "just trying to help" but the constant undercurrent is that we (I) am simply not to be trusted to be able to resolve anything and she needs to be the one to swoop in and save the day with her thing. It would be one thing if she was asked or if she was like hey can i join in? But no, us lesser mortals have to just be in a weird one-sided competition for things that just duplicate efforts!!!!!!!

Add to that the fact that she like WILLFULLY makes data architecture decisions that play specifically to HER strengths/certifications (she got our developers to provide the data UNSTRUCTURED so now we ALL need to get oracle drivers installed and need to learn SQL when she happily ALREADY has them installed and is used to SQL (none of us are data scientists or developers btw) so now she's the ONLY one w access to the data from the application) its like. Ok you clearly believe you are the ONLY one driving this unit or doing anything and unless anyone else is a coder we are basically useless. She keeps saying "i'll have to teach you SQL" like i haven't already gotten MEYE cert in it???? And then making off-hand remarks like "i need to build this so its easy for you to use it" like ms ma'am you are part of THIS team IM not your customer im your team member!!!!!!!!! OH AND WE HAVE ACTIAL DEVELOPERS FOR THE JOB YOU KEEP INSERTING YOURSELF INTO!!!!!!!!!!!!!

6 notes


Text

Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of writing lengthy reports, an interactive dashboard tells the story in seconds.

Without tools like Tableau, data science would be limited to experts who can code and run statistical models. With Tableau, insights become accessible to everyone—from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets—whether it’s financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle with large volumes of data.

Tableau, on the other hand:

Can process millions of rows without slowing down

Optimizes performance using advanced data engine technology

Supports real-time data streaming for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Detect fraudulent transactions instantly

Instead of waiting for reports to be generated manually, Tableau delivers insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns

Integrate with Python and R for machine learning and predictive modeling

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.

3. How Tableau Helps Data Scientists in Real Life

Tableau has been adopted across many industries to make data science more impactful and accessible. Here are some real-life scenarios:

A. Analytics for Health Care

Tableau is deployed by hospitals and research institutions for the following purposes:

Monitor patient recovery rates and predict outbreaks of diseases

Analyze hospital occupancy and resource allocation

Identify trends in patient demographics and treatment results

B. Finance and Banking

Banks and investment firms rely on Tableau for the following purposes:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate—but also clear, impactful, and easy to act upon.

#data science course#top data science course online#top data science institute online#artificial intelligence course#deepseek#tableau


Text

Protect Your Laravel APIs: Common Vulnerabilities and Fixes

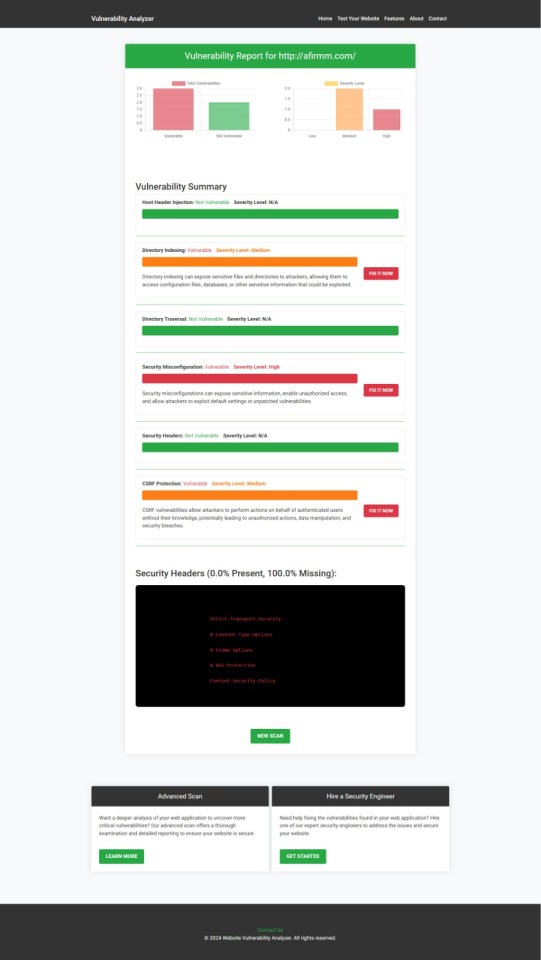

API Vulnerabilities in Laravel: What You Need to Know

As web applications evolve, securing APIs becomes a critical aspect of overall cybersecurity. Laravel, being one of the most popular PHP frameworks, provides many features to help developers create robust APIs. However, like any software, APIs in Laravel are susceptible to certain vulnerabilities that can leave your system open to attack.

In this blog post, we’ll explore common API vulnerabilities in Laravel and how you can address them, using practical coding examples. Additionally, we’ll introduce our free Website Security Scanner tool, which can help you assess and protect your web applications.

Common API Vulnerabilities in Laravel

Laravel APIs, like any other API, can suffer from common security vulnerabilities if not properly secured. Some of these vulnerabilities include:

>> SQL Injection SQL injection attacks occur when an attacker is able to manipulate an SQL query so that the database runs statements the developer never intended. If a Laravel API concatenates unvalidated user input into a query string, this type of vulnerability can be exploited.

Example Vulnerability:

$user = DB::select("SELECT * FROM users WHERE username = '" . $request->input('username') . "'");

Solution: Laravel’s query builder and Eloquent ORM use PDO parameter binding, so user input is sent to the database as data rather than as part of the SQL text, which prevents SQL injection. Use the query builder or Eloquent ORM like this:

$user = DB::table('users')->where('username', $request->input('username'))->first();

>> Cross-Site Scripting (XSS) XSS attacks happen when an attacker injects malicious scripts into web pages, which can then be executed in the browser of a user who views the page.

Example Vulnerability:

return response()->json(['message' => $request->input('message')]);

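Solution: Always validate user input and escape any dynamic content at output time. Laravel provides built-in XSS protection by escaping data before rendering it in views (Blade’s {{ }} syntax), and the e() helper applies the same HTML escaping to values returned directly from an API: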

return response()->json(['message' => e($request->input('message'))]);

>> Improper Authentication and Authorization Without proper authentication, unauthorized users may gain access to sensitive data. Similarly, improper authorization can allow unauthorized users to perform actions they shouldn't be able to.

Example Vulnerability:

Route::post('update-profile', 'UserController@updateProfile');

Solution: Always use Laravel’s built-in authentication middleware to protect sensitive routes:

Route::middleware('auth:api')->post('update-profile', 'UserController@updateProfile');

>> Insecure API Endpoints Exposing too many endpoints or sensitive data can create a security risk. It’s important to limit access to API routes and use proper HTTP methods for each action.

Example Vulnerability:

Route::get('user-details', 'UserController@getUserDetails');

Solution: Restrict sensitive routes to authenticated users and use proper HTTP methods like GET, POST, PUT, and DELETE:

Route::middleware('auth:api')->get('user-details', 'UserController@getUserDetails');

How to Use Our Free Website Security Checker Tool

If you're unsure about the security posture of your Laravel API or any other web application, we offer a free Website Security Checker tool. This tool allows you to perform an automatic security scan on your website to detect vulnerabilities, including API security flaws.

Step 1: Visit our free Website Security Checker at https://free.pentesttesting.com.

Step 2: Enter your website URL and click "Start Test".

Step 3: Review the comprehensive vulnerability assessment report to identify areas that need attention.

Screenshot of the free tools webpage where you can access security assessment tools.

Example Report: Vulnerability Assessment

Once the scan is completed, you'll receive a detailed report that highlights any vulnerabilities, such as SQL injection risks, XSS vulnerabilities, and issues with authentication. This will help you take immediate action to secure your API endpoints.

An example of a vulnerability assessment report generated with our free tool provides insights into possible vulnerabilities.

Conclusion: Strengthen Your API Security Today

API vulnerabilities in Laravel are common, but with the right precautions and coding practices, you can protect your web application. Make sure to always validate user input, implement strong authentication mechanisms, and use proper route protection. Additionally, use our tool to check your website for vulnerabilities and help keep your Laravel APIs secure.

For more information on securing your Laravel applications, try our Website Security Checker.

#cyber security#cybersecurity#data security#pentesting#security#the security breach show#laravel#php#api


Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration


Text

Impact of successful SQLi, examples

Three common ways SQL injection attacks can impact web apps:

- unauthorized access to sensitive data (user lists, personally identifiable information (PII), credit card numbers)
- data modification/deletion
- administrative access to the system (-> unauthorized access to specific areas of the system or the ability to perform malicious actions)

examples, as always, speak louder than explanations! there are going to be two of them

1. Equifax data breach (2017) - 1st way

Hackers exploited a flaw in the company’s system, breaching the personal records of 143 million users, making it one of the largest cybercrimes related to identity theft. (This breach is often listed as SQL injection, but the publicly reported entry point was an unpatched Apache Struts vulnerability, CVE-2017-5638.)

Damages: The total cost of the settlement included $300 million to a fund for victim compensation, $175 million to the states and territories in the agreement, and $100 million to the CFPB in fines.

2. PlayStation Network outage, or PSN hack (2011) - 2nd way

The result of an "external intrusion" on Sony's PlayStation Network and Qriocity services, in which personal details from approximately 77 million accounts were compromised and users of PlayStation 3 and PlayStation Portable consoles were prevented from accessing the service.

Damages: Sony stated that the outage costs were $171 million.

more recent CVEs:

- CVE-2023-32530. SQL injection in security product dashboard using crafted certificate fields
- CVE-2020-12271. SQL injection in firewall product's admin interface or user portal, as exploited in the wild per CISA KEV. ! this vulnerability has critical severity with a score 10. Description: A SQL injection issue was found in SFOS 17.0, 17.1, 17.5, and 18.0 before 2020-04-25 on Sophos XG Firewall devices, as exploited in the wild in April 2020. This affected devices configured with either the administration (HTTPS) service or the User Portal exposed on the WAN zone. A successful attack may have caused remote code execution that exfiltrated usernames and hashed passwords for the local device admin(s), portal admins, and user accounts used for remote access (but not external Active Directory or LDAP passwords).
- CVE-2019-3792. An automation system written in Go contains an API that is vulnerable to SQL injection allowing the attacker to read privileged data. ! this vulnerability has medium severity with a score 6.8.
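to make the "1st way" (unauthorized data access) concrete, here is a minimal sketch using Python's built-in sqlite3 module. The users table, the sample rows and the function names find_user_unsafe / find_user_safe are made up for illustration; they are not taken from any of the incidents above.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn, username):
    # VULNERABLE: the input is pasted straight into the SQL text,
    # so quotes inside it can change the meaning of the query.
    query = (
        "SELECT username, email FROM users WHERE username = '"
        + username
        + "'"
    )
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Parameterized query: the driver passes the value separately,
    # so it is always treated as data, never as SQL.
    return conn.execute(
        "SELECT username, email FROM users WHERE username = ?",
        (username,),
    ).fetchall()


conn = build_demo_db()
payload = "' OR '1'='1"  # classic always-true injection string

leaked = find_user_unsafe(conn, payload)  # condition is always true
blocked = find_user_safe(conn, payload)   # no user has this literal name
print(len(leaked), len(blocked))
```

with the unsafe version the WHERE clause becomes always true and every row comes back; with the parameterized version the same string is just an odd username that matches nothing.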


Text

Gemini Code Assist Enterprise: AI App Development Tool

Introducing Gemini Code Assist Enterprise, an AI-powered app development tool that allows for code customisation.

The modern economy is driven by software development. Unfortunately, due to a lack of skilled developers, a growing number of integrations, vendors, and abstraction levels, developing effective apps across the tech stack is difficult.

To expedite application delivery and stay competitive, IT leaders must provide their teams with AI-powered solutions that assist developers in navigating complexity.

Google Cloud thinks that the best way to address development challenges is to offer an AI-powered application development solution that works across the tech stack, along with enterprise-grade security guarantees, better contextual suggestions, and cloud integrations that let developers work more quickly and flexibly with a wider range of services.

Google Cloud is presenting Gemini Code Assist Enterprise, the next generation of application development capabilities.

Gemini Code Assist Enterprise goes beyond AI-powered coding assistance in the IDE. This is application development support at the corporate level. Gemini’s large token context window supports deep local codebase awareness: it can take in the details of your local codebase and ongoing development session, allowing you to generate or transform code that is better suited to your application.

With code customization, Code Assist Enterprise not only comprehends your local codebase but also provides code recommendations based on internal libraries and best practices within your company. As a result, Code Assist can produce personalized code recommendations that are more precise and pertinent to your company. Developers can remain in the flow state for longer, bring more insights directly into their IDEs, and complete difficult tasks such as updating the Java version across a whole repository. This lets them concentrate on coming up with original solutions to problems, which increases job satisfaction, gives your company a competitive advantage, and helps you come to market more quickly.

GitLab.com and GitHub.com repos can be indexed by Gemini Code Assist Enterprise code customisation; support for self-hosted, on-premise repos and other source control systems will be added in early 2025.

Yet IDEs are not the only tool used to construct apps. Code Assist Enterprise integrates coding support across Google Cloud’s services to help specialist coders become more adaptable builders. A code assistant significantly decreases the time required to transition to new technologies, and it integrates the subtleties of an organization’s coding standards into its recommendations. The more services it covers, the faster your builders can create and deliver applications. To meet developers where they are, Code Assist Enterprise provides coding assistance in Firebase, Databases, BigQuery, Colab Enterprise, Apigee, and Application Integration. Furthermore, each Gemini Code Assist Enterprise user can access these products’ features; they are not separate purchases.

In BigQuery, Gemini Code Assist Enterprise users can benefit from SQL and Python code assistance. With pre-validated, ready-to-run queries (data insights) and a natural language-based interface for data exploration, curation, wrangling, analysis, and visualization (data canvas), they can go beyond editor-based code assistance and speed up their analytics workflows.

Furthermore, because security and privacy are of utmost importance to any business, Code Assist Enterprise does not use your firm’s proprietary data to train the Gemini model. Source code indexed for code customization is stored in a Google Cloud-managed project and kept isolated for each customer’s organization. Clients are in complete control of which source repositories to utilize for customization, and they can delete all data at any moment.

Your company and data are safeguarded by Google Cloud’s dedication to enterprise preparedness, data governance, and security. This is demonstrated by projects like software supply chain security, Mandiant research, and purpose-built infrastructure, as well as by generative AI indemnification.

Google Cloud provides you with the greatest tools for AI coding support so that your engineers may work happily and effectively. The market is also paying attention. Because of its ability to execute and completeness of vision, Google Cloud has been ranked as a Leader in the Gartner Magic Quadrant for AI Code Assistants for 2024.

Gemini Code Assist Enterprise Costs

In general, Gemini Code Assist Enterprise costs $45 per month per user; however, a promotional price of $19 per month per user is available with a one-year subscription until March 31, 2025.

Read more on Govindhtech.com

#Gemini#GeminiCodeAssist#AIApp#AI#AICodeAssistants#CodeAssistEnterprise#BigQuery#Geminimodel#News#Technews#TechnologyNews#Technologytrends#Govindhtech#technology


Text

The Skills I Acquired on My Path to Becoming a Data Scientist

Data science has emerged as one of the most sought-after fields in recent years, and my journey into this exciting discipline has been nothing short of transformative. As someone with a deep curiosity for extracting insights from data, I was naturally drawn to the world of data science. In this blog post, I will share the skills I acquired on my path to becoming a data scientist, highlighting the importance of a diverse skill set in this field.

The Foundation — Mathematics and Statistics

At the core of data science lies a strong foundation in mathematics and statistics. Concepts such as probability, linear algebra, and statistical inference form the building blocks of data analysis and modeling. Understanding these principles is crucial for making informed decisions and drawing meaningful conclusions from data. Throughout my learning journey, I immersed myself in these mathematical concepts, applying them to real-world problems and honing my analytical skills.

Programming Proficiency

Proficiency in programming languages like Python or R is indispensable for a data scientist. These languages provide the tools and frameworks necessary for data manipulation, analysis, and modeling. I embarked on a journey to learn these languages, starting with the basics and gradually advancing to more complex concepts. Writing efficient and elegant code became second nature to me, enabling me to tackle large datasets and build sophisticated models.

Data Handling and Preprocessing

Working with real-world data is often messy and requires careful handling and preprocessing. This involves techniques such as data cleaning, transformation, and feature engineering. I gained valuable experience in navigating the intricacies of data preprocessing, learning how to deal with missing values, outliers, and inconsistent data formats. These skills allowed me to extract valuable insights from raw data and lay the groundwork for subsequent analysis.
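As a rough illustration of two of these steps, the sketch below fills missing values with the median and flags outliers with the common 1.5 × IQR rule, using only Python's standard library. The sample ages and the helper names fill_missing and iqr_outliers are made up for this example; on a real project the same ideas are usually applied with a data-frame library.

```python
from statistics import median, quantiles


def fill_missing(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    mid = median(observed)
    return [mid if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


# Hypothetical raw column with gaps and one implausible entry.
ages = [34, 29, None, 41, 38, None, 35, 120]

cleaned = fill_missing(ages)
print(cleaned)                # gaps replaced by the median
print(iqr_outliers(cleaned))  # values worth a closer look
```

Whether a flagged value should be dropped, capped or kept is a judgment call that depends on the data; the code only surfaces candidates.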

Data Visualization and Communication

Data visualization plays a pivotal role in conveying insights to stakeholders and decision-makers. I realized the power of effective visualizations in telling compelling stories and making complex information accessible. I explored various tools and libraries, such as Matplotlib and Tableau, to create visually appealing and informative visualizations. Sharing these visualizations with others enhanced my ability to communicate data-driven insights effectively.

Machine Learning and Predictive Modeling

Machine learning is a cornerstone of data science, enabling us to build predictive models and make data-driven predictions. I delved into the realm of supervised and unsupervised learning, exploring algorithms such as linear regression, decision trees, and clustering techniques. Through hands-on projects, I gained practical experience in building models, fine-tuning their parameters, and evaluating their performance.
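To show what "building a model and evaluating its performance" means at the smallest scale, here is a sketch of simple linear regression fitted by ordinary least squares in plain Python. The study-hours data and the function names fit_line and r_squared are invented for illustration; a library such as scikit-learn would normally handle this.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def r_squared(xs, ys, slope, intercept):
    """Share of the variance in ys explained by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Hypothetical data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

slope, intercept = fit_line(hours, scores)
fit_quality = r_squared(hours, scores, slope, intercept)
print(round(slope, 2), round(intercept, 2), round(fit_quality, 3))
```

The slope and intercept are the model's parameters, and R² is one simple evaluation metric; in practice the score should be computed on data held out from fitting.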

Database Management and SQL

Data science often involves working with large datasets stored in databases. Understanding database management and SQL (Structured Query Language) is essential for extracting valuable information from these repositories. I embarked on a journey to learn SQL, mastering the art of querying databases, joining tables, and aggregating data. These skills allowed me to harness the power of databases and efficiently retrieve the data required for analysis.
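A small sketch of the three operations mentioned above (querying, joining and aggregating), run against an in-memory SQLite database through Python's built-in sqlite3 module. The customers and orders tables and their contents are hypothetical and exist only for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Two small, made-up tables linked by customer_id.
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER,
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
    INSERT INTO orders VALUES
        (1, 1, 120.0), (2, 2, 80.0), (3, 3, 50.0), (4, 1, 30.0);
    """
)

# Join orders to customers, then aggregate revenue per region.
rows = conn.execute(
    """
    SELECT c.region, COUNT(o.id) AS n_orders, SUM(o.amount) AS revenue
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.region
    ORDER BY revenue DESC
    """
).fetchall()

for region, n_orders, revenue in rows:
    print(region, n_orders, revenue)
```

Doing the join and the GROUP BY inside the database means only the summarized rows need to be pulled into the analysis environment.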

Domain Knowledge and Specialization

While technical skills are crucial, domain knowledge adds a unique dimension to data science projects. By specializing in specific industries or domains, data scientists can better understand the context and nuances of the problems they are solving. I explored various domains and acquired specialized knowledge, whether it be healthcare, finance, or marketing. This expertise complemented my technical skills, enabling me to provide insights that were not only data-driven but also tailored to the specific industry.

Soft Skills — Communication and Problem-Solving

In addition to technical skills, soft skills play a vital role in the success of a data scientist. Effective communication allows us to articulate complex ideas and findings to non-technical stakeholders, bridging the gap between data science and business. Problem-solving skills help us navigate challenges and find innovative solutions in a rapidly evolving field. Throughout my journey, I honed these skills, collaborating with teams, presenting findings, and adapting my approach to different audiences.

Continuous Learning and Adaptation

Data science is a field that is constantly evolving, with new tools, technologies, and trends emerging regularly. To stay at the forefront of this ever-changing landscape, continuous learning is essential. I dedicated myself to staying updated by following industry blogs, attending conferences, and participating in courses. This commitment to lifelong learning allowed me to adapt to new challenges, acquire new skills, and remain competitive in the field.

In conclusion, the journey to becoming a data scientist is an exciting and dynamic one, requiring a diverse set of skills. From mathematics and programming to data handling and communication, each skill plays a crucial role in unlocking the potential of data. Aspiring data scientists should embrace this multidimensional nature of the field and embark on their own learning journey. By acquiring these skills and continuously adapting to new developments, they can make a meaningful impact in the world of data science. If you want to learn more about data science, I highly recommend that you contact ACTE Technologies, because they offer Data Science courses and job placement opportunities. Experienced teachers can help you learn better, and these services are available both online and offline. Take things step by step and consider enrolling in a course if you’re interested.

#data science#data visualization#education#information#technology#machine learning#database#sql#predictive analytics#r programming#python#big data#statistics
