#Synthetic Data in Machine Learning

Synthetic data offers an innovative approach to training machine learning models without compromising privacy. Discover its benefits and limitations in this comprehensive guide.

#Synthetic Data#Synthetic Data in Machine Learning#AI Generated Synthetic Data#synthetic data generation#synthetic data generation tools

🚨 Stop Believing the AI Hype: that’s the title of my latest conversation on the Localization Fireside Chat with none other than @Dr. Sidney Shapiro, Assistant Professor at the @Dillon School of Business, University of Lethbridge. We dive deep into what AI can actually do, and more importantly, what it can’t. From vibe coders and synthetic data to the real-world consequences of over-trusting black-box models, this episode is packed with insights for anyone navigating the fast-moving AI space. 🧠 Dr. Shapiro brings an academic lens and real-world practicality to an often-hyped conversation. If you're building, deploying, or just curious about AI, this is a must-watch. 🎥 Catch the full interview on YouTube: 👉 https://youtu.be/wsqN0964neM Would love your thoughts: are we putting too much faith in AI? #LocalizationFiresideChat #AIethics #DataScience #AIstrategy #GenerativeAI #MachineLearning #CanadianTech #HigherEd #Localization #TranslationTechnology #Podcast

#AI and Academia#AI Ethics#AI for Business#AI Hype#AI in Canada#AI Myths#AI Strategy#Artificial Intelligence#Canadian Podcast#Canadian Tech#chatgpt#Data Analytics#Data Science#Dr. Sidney Shapiro#Explainable AI#Future of AI#Generative AI#Localization Fireside Chat#Machine Learning#Robin Ayoub#Synthetic Data#Technology Trends

🤖🔥 Say hello to Groot N1! Nvidia’s game-changing open-source AI is here to supercharge humanoid robots! 💥🧠 Unveiled at #GTC2025 🏟️ Welcome to the era of versatile robotics 🚀🌍 #AI #Robotics #Nvidia #GrootN1 #TechNews #FutureIsNow 🤩🔧

#AI-powered automation#DeepMind#dual-mode AI#Gemini Robotics#general-purpose machines#Groot N1#GTC 2025#humanoid robots#Nvidia#open-source AI#robot learning#robotic intelligence#robotics innovation#synthetic training data#versatile robotics

Synthetic Data for Respiratory Diseases: Real-World Accuracy Achieved

Ground-glass opacities and other lung lesions pose major challenges for AI diagnostic models, largely because even expert radiologists find them difficult to annotate precisely. This scarcity of data with high-quality annotations makes it tough for AI teams to train robust models on real-world data. RYVER.AI addresses this challenge by generating pre-annotated synthetic medical images on demand. Their previous studies confirmed that synthetically created images containing lung nodules meet real-world standards. Now, they’ve extended this success to respiratory infection cases. In a recent study, synthetic COVID-19 CT scans with ground-glass opacities were used to train a segmentation model, and the results were comparable to those from a model trained solely on real-world data, proving that synthetic images can replace or supplement real cases without compromising AI performance. That’s why Segmed and RYVER.AI are joining forces to develop a comprehensive foundation model for generating diverse medical images tailored to various needs. Which lesions give you the most trouble? Let us know in the comments, or reach out to discuss how synthetic data can help streamline your workflow.
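
The post doesn't say how "comparable" was measured. For segmentation, one common choice is the Dice coefficient, 2|A ∩ B| / (|A| + |B|). It compares a model's predicted mask with the expert annotation on held-out real scans. The sketch below assumes flattened binary masks. The masks and the two "models" are hypothetical toy data, not results from the study.

```python
def dice(pred, truth):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Convention: two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * intersection / total


def mean_dice(preds, truths):
    """Average Dice over a test set of (prediction, annotation) pairs."""
    return sum(dice(p, t) for p, t in zip(preds, truths)) / len(truths)


# Hypothetical annotations for two real test scans (1 = lesion pixel).
truths = [[0, 1, 1, 1, 0, 0], [1, 1, 0, 0, 0, 0]]
# Hypothetical predictions from a model trained on real vs. synthetic scans.
real_trained = [[0, 1, 1, 0, 1, 0], [1, 1, 0, 0, 0, 0]]
synth_trained = [[0, 1, 1, 1, 0, 0], [1, 0, 0, 0, 0, 0]]

print(mean_dice(real_trained, truths), mean_dice(synth_trained, truths))
```

Evaluating both models against the same real annotations is what makes the comparison fair. A synthetic-trained model that scores close to the real-trained one on real scans is the kind of result the post describes.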

#Medical AI#Synthetic Data#AI Training#Machine Learning#Deep Learning#AI Research#Health Care AI#MedTech#Radiology#Pharma

Synthetic Data Unlocks Data-Driven Innovation Without Privacy Concerns

Diverse datasets are crucial for data-driven activities like testing, training, and development. However, accessing real-world data often comes with hurdles – privacy concerns, data availability limitations, and regulatory restrictions can all stall progress. This is where synthetic data generation emerges as a game-changer. What is Synthetic Data? Where Does Synthetic Data…
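
The excerpt is cut off before it explains how synthetic data is actually generated. One simple approach is to fit a statistical model to real records and then sample new records from that model. The sketch below is deliberately naive and is not the method of the linked article, which isn't shown here. It models each numeric column as an independent Gaussian, and the "real" records are hypothetical.

```python
import random
import statistics


def fit_columns(rows):
    """Estimate (mean, standard deviation) for each numeric column."""
    columns = list(zip(*rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def sample_synthetic(params, n, seed=0):
    """Draw n synthetic rows, sampling each column independently from a Gaussian."""
    rng = random.Random(seed)
    return [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(n)]


# Hypothetical "real" records: (age, systolic blood pressure).
real = [[34, 118], [51, 131], [29, 112], [62, 140], [45, 125]]

params = fit_columns(real)
synthetic = sample_synthetic(params, n=1000)
print(params)
print(synthetic[:3])
```

Because columns are sampled independently, correlations between fields (here, age and blood pressure) are lost. Fitting a distribution does not by itself guarantee privacy. Practical generators typically model the joint distribution and need separate privacy safeguards.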

"Revolutionizing Biotech: How AI is Transforming the Industry"

The biotech industry is on the cusp of a revolution, and Artificial Intelligence (AI) is leading the charge. AI is transforming the way biotech researchers and developers work, enabling them to make groundbreaking discoveries and develop innovative solutions at an unprecedented pace.

“Accelerating Scientific Discovery with AI”

AI is augmenting human capabilities in biotech research, enabling…

#Agricultural Biotech#agriculture#ai#Artificial Intelligence#bioinformatics#Biological Systems#Biomedical Engineering.#Biotech#Data Analysis#Drug Discovery#Environment#genetics#genomics#healthcare#innovation#Machine Learning#personalized medicine#Precision Medicine#Research#science#synthetic biology#technology

Dp X Marvel #6

They called him Wraith.

Not Phantom. Not Fenton. Not Danny. Those names belonged to a ghost of a boy that never made it out of a cold, steel lab buried beneath the earth—forgotten by the world, forsaken by the stars. Wraith was something else. A project. A weapon. An experiment that should have failed but didn’t. The product of every nightmare HYDRA ever dared to dream. Not even the Red Room could engineer something so devastating. Not even Arnim Zola’s data-crazed AI mind could fathom the scope of him. Even the Winter Soldier—their perfect killer—trembled at the mere scent of Wraith in the air. Wraith was the one he whispered about when the old ghosts came clawing through his fractured memories. “The one they locked away. The one even I wasn’t allowed to see.”

They started with the basics: a perfected version of the Super Soldier Serum. Not the knockoffs that littered the black market. Not the diluted trash the Flag Smashers used. No, this was the pure, concentrated essence of bioengineered physical supremacy. It made him fast. Strong. Deadly. But that wasn’t enough. HYDRA didn’t want a man—they wanted a god.

They replaced his bones with vibranium, stolen from the very heart of Wakanda in a mission so secret even the Dora Milaje never learned of it. His skeleton was a lightweight fortress, a perfect balance between flexibility and unbreakability. He could be shot point-blank with an anti-tank rifle and not flinch. He could leap from ten thousand feet and land without cracking a toe. His spine alone was stronger than most armored vehicles.

They burned out his organs, one by one, replacing them with biochemical synth-constructs, living machines that pulsed with a power that didn’t belong in the realm of science. His lungs filtered radiation. His kidneys could process raw acid. His stomach could digest metal. Disease didn’t touch him. Poisons turned inert inside him. He didn’t age. He didn’t sleep. He didn’t need to.

His blood… wasn’t blood. It shimmered when it moved. Viscous and luminous, like glowing starlight mixed with oil. Warm, but synthetic. Slick, but alive. It wasn’t just Extremis. It wasn’t just ectoplasm. It was something else entirely. Something that hummed when it moved, that responded to emotion, that sparked with eldritch light when he was angry. It healed him before injury even registered. It whispered to him in languages he never learned but somehow knew. It could ignite with a thought and turn his veins into conduits of fire and ice and terror. They bled him once, just to see what would happen. The blood ate through the floor, hissed like a serpent, and disappeared through the cracks. The lab tech who performed the procedure dissolved within thirty seconds.

And then there was his skin. It was soft, warm, perfectly human. If you touched him, he felt like a boy in his late teens—young, firm, deceptively fragile. But beneath that flawless layer of polymer-fused dermal tissue was something that didn’t burn, didn’t freeze, didn’t shatter. He walked through fire. He dove into the Mariana Trench. He stood unflinching beneath arctic storms and tropical cyclones. He once fought a vibranium-clawed assassin barehanded and didn’t bleed. The assassin didn’t survive.

But the worst part—what made him truly unkillable—was his heart and his brain.

They didn’t understand what they’d done. HYDRA liked to pretend they were gods, but even gods get scared when they tamper with forces they don’t understand. His heart wasn’t just a pump anymore—it was a fusion of quantum mechanics, biomechanical tubing, and something that throbbed with ectoplasmic radiation. It pulsed at its own rhythm, immune to external manipulation. It couldn’t be stopped. You could shoot him in the chest, burn him to ash, decapitate him—and the heart would keep beating. Worse, it could restart him.

The brain was worse. They hadn’t just enhanced his intelligence. They hadn’t just implanted neural tech and a language matrix and memories from assassins, soldiers, pilots, hackers, spies. No. They’d opened a door in his mind. They’d let something in. Something ancient. Something not from this world. Something not even from this dimension. It whispered to him when the moon was full. It guided his hands during missions. It told him where to strike, who to kill, what to become. Sometimes he heard it laughing.

Sometimes he laughed with it.

Wraith was the culmination of every evil science, every secret experiment, every whispered nightmare stitched together into a boy-shaped thing that wore a black suit and a bored expression and had a voice so calm it made seasoned killers nervous. He could walk into a room, look at you with those sky-blue eyes, and make your heart stop—because something about him was wrong. Not obviously wrong. Not monstrous or alien or robotic. No. It was subtle. A slowness to his smile. A tilt to his head. A precision to his movements that screamed in the back of your brain: This isn’t human. This is pretending to be human.

He escaped, of course. Nothing like him could be contained forever. The facility was a ruin within minutes. Bodies left stacked like cordwood. Walls melted. Floors cracked open. Not even the cameras could capture his escape—the footage was corrupted by a static that made your teeth ache and your eyes bleed. Every hard drive in the facility burned itself from the inside out. There was no trace of the boy they once called Danny Fenton.

Now, there are sightings. Rumors. Whispers. In Madripoor, they say he took down a cartel by himself, and the sky turned green when he screamed. In New York, people say he walked past the Sanctum Sanctorum and Doctor Strange flinched like he’d seen death. Wakandan scouts report strange readings near vibranium deposits—heat signatures that vanish into thin air. S.H.I.E.L.D. has classified him as an Omega-level threat.

The Winter Soldier? He saw him once. In an alley in Prague. Wraith didn’t attack. Didn’t speak. Just stared at him with those glacial eyes before disappearing in a flicker of light that bent reality itself. He didn’t sleep for three days after. When asked what was wrong, he just whispered, “They built something worse than me. And it remembers everything.”

Maybe there’s still a boy inside him, buried under steel and fire and ectoplasm and pain. Maybe that boy is screaming. Maybe he’s plotting. Maybe he’s just waiting. After all, you don’t build something like Wraith and expect him to stay still. You don’t break a boy into a god and expect him to forget.

#danny phantom fandom#danny phantom fanfiction#danny phantom#danny fenton#crossover#dp x marvel#marvel mcu#marvel#marvel fanfic#marvel fandom#mcu fandom#mcu fanfiction#mcu bucky barnes#mcu

Caution: Universe Work Ahead 🚧

We only have one universe. That’s usually plenty – it’s pretty big after all! But there are some things scientists can’t do with our real universe that they can do if they build new ones using computers.

The universes they create aren’t real, but they’re important tools to help us understand the cosmos. Two teams of scientists recently created a couple of these simulations to help us learn how our Nancy Grace Roman Space Telescope can unveil the universe’s distant past and give us a glimpse of possible futures.

Caution: you are now entering a cosmic construction zone (no hard hat required)!

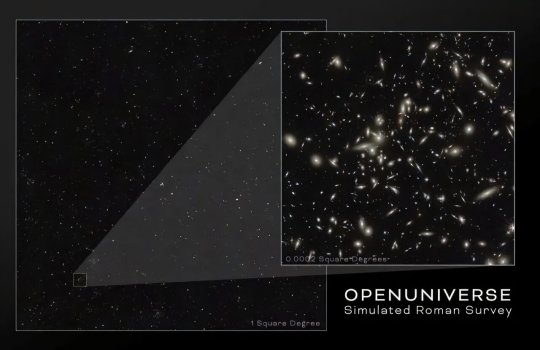

This simulated Roman deep field image, containing hundreds of thousands of galaxies, represents just 1.3 percent of the synthetic survey, which is itself just one percent of Roman's planned survey. The full simulation is available here. The galaxies are color coded – redder ones are farther away, and whiter ones are nearer. The simulation showcases Roman’s power to conduct large, deep surveys and study the universe statistically in ways that aren’t possible with current telescopes.
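
The caption's two percentages compound, so the pictured patch is a far smaller slice of the real survey than either number alone suggests. The back-of-the-envelope sketch below assumes both percentages refer to the same measure (for example, sky area) and simply multiply.

```python
image_share_of_synthetic = 0.013   # "just 1.3 percent of the synthetic survey"
synthetic_share_of_planned = 0.01  # "itself just one percent of Roman's planned survey"

# Fraction of the planned survey shown in this one image.
image_share_of_planned = image_share_of_synthetic * synthetic_share_of_planned
# Number of same-sized images needed to cover the planned survey.
images_to_cover_survey = 1 / image_share_of_planned

print(f"{image_share_of_planned:.3%} of the planned survey")
print(f"about {images_to_cover_survey:,.0f} images this size to cover it")
```

Under that assumption, the image shows about 0.013 percent of the planned survey. That means several thousand views like it would be needed to tile the whole thing.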

One Roman simulation is helping scientists plan how to study cosmic evolution by teaming up with other telescopes, like the Vera C. Rubin Observatory. It’s based on galaxy and dark matter models combined with real data from other telescopes. It envisions a big patch of the sky Roman will survey when it launches by 2027. Scientists are exploring the simulation to make observation plans so Roman will help us learn as much as possible. It’s a sneak peek at what we could figure out about how and why our universe has changed dramatically across cosmic epochs.

This video begins by showing the most distant galaxies in the simulated deep field image in red. As it zooms out, layers of nearer (yellow and white) galaxies are added to the frame. By studying different cosmic epochs, Roman will be able to trace the universe's expansion history, study how galaxies developed over time, and much more.

As part of the real future survey, Roman will study the structure and evolution of the universe, map dark matter – an invisible substance detectable only by seeing its gravitational effects on visible matter – and discern between the leading theories that attempt to explain why the expansion of the universe is speeding up. It will do it by traveling back in time…well, sort of.

Seeing into the past

Looking way out into space is kind of like using a time machine. That’s because the light emitted by distant galaxies takes longer to reach us than light from ones that are nearby. When we look at farther galaxies, we see the universe as it was when their light was emitted. That can help us see billions of years into the past. Comparing what the universe was like at different ages will help astronomers piece together the way it has transformed over time.

This animation shows the type of science that astronomers will be able to do with future Roman deep field observations. The gravity of intervening galaxy clusters and dark matter can lens the light from farther objects, warping their appearance as shown in the animation. By studying the distorted light, astronomers can study elusive dark matter, which can only be measured indirectly through its gravitational effects on visible matter. As a bonus, this lensing also makes it easier to see the most distant galaxies whose light they magnify.

The simulation demonstrates how Roman will see even farther back in time thanks to natural magnifying glasses in space. Huge clusters of galaxies are so massive that they warp the fabric of space-time, kind of like how a bowling ball creates a well when placed on a trampoline. When light from more distant galaxies passes close to a galaxy cluster, it follows the curved space-time and bends around the cluster. That lenses the light, producing brighter, distorted images of the farther galaxies.

Roman will be sensitive enough to use this phenomenon to see how even small masses, like clumps of dark matter, warp the appearance of distant galaxies. That will help narrow down the candidates for what dark matter could be made of.
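
The amount of bending described above can be estimated with a standard formula. In general relativity's weak-field limit, light passing a compact mass M at impact parameter b is deflected by α = 4GM/(c²b). Galaxy clusters are extended mass distributions rather than point masses, so this is only an order-of-magnitude sketch. The cluster values (10^15 solar masses, 1 megaparsec) are assumed round numbers, not figures from the Roman simulations. The Sun is included as a check against the classic result of about 1.75 arcseconds.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8          # speed of light, m/s
M_SUN = 1.989e30     # solar mass, kg
R_SUN = 6.957e8      # solar radius, m
MPC = 3.0857e22      # one megaparsec, m
RAD_TO_ARCSEC = 180 / math.pi * 3600


def deflection_arcsec(mass_kg, impact_m):
    """Weak-field deflection angle 4GM/(c^2 b) of a point mass, in arcseconds."""
    return 4 * G * mass_kg / (C**2 * impact_m) * RAD_TO_ARCSEC


sun = deflection_arcsec(M_SUN, R_SUN)               # light grazing the Sun's edge
cluster = deflection_arcsec(1e15 * M_SUN, 1 * MPC)  # assumed cluster mass and distance
print(f"Sun: {sun:.2f} arcsec, cluster: {cluster:.0f} arcsec")
```

The cluster's impact parameter is tens of trillions of times larger than the Sun's radius. Even so, its enormous mass produces a deflection more than twenty times bigger, which is why clusters work as natural magnifying glasses.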

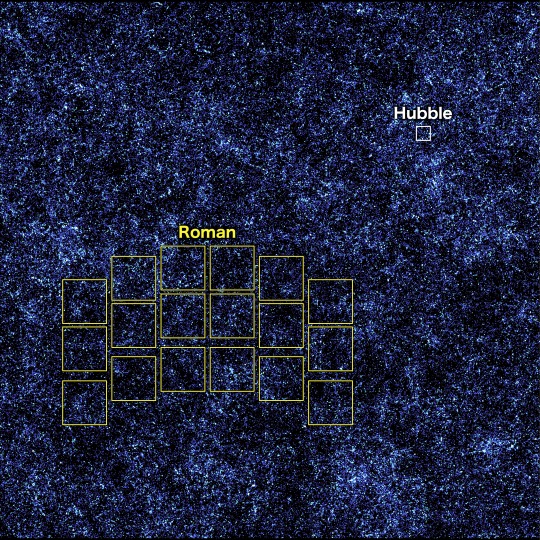

In this simulated view of the deep cosmos, each dot represents a galaxy. The three small squares show Hubble's field of view, and each reveals a different region of the synthetic universe. Roman will be able to quickly survey an area as large as the whole zoomed-out image, which will give us a glimpse of the universe’s largest structures.

Constructing the cosmos over billions of years

A separate simulation shows what Roman might expect to see across more than 10 billion years of cosmic history. It’s based on a galaxy formation model that represents our current understanding of how the universe works. That means that Roman can put that model to the test when it delivers real observations, since astronomers can compare what they expected to see with what’s really out there.

In this side view of the simulated universe, each dot represents a galaxy whose size and brightness corresponds to its mass. Slices from different epochs illustrate how Roman will be able to view the universe across cosmic history. Astronomers will use such observations to piece together how cosmic evolution led to the web-like structure we see today.

This simulation also shows how Roman will help us learn how extremely large structures in the cosmos were constructed over time. For hundreds of millions of years after the universe was born, it was filled with a sea of charged particles that was almost completely uniform. Today, billions of years later, there are galaxies and galaxy clusters glowing in clumps along invisible threads of dark matter that extend hundreds of millions of light-years. Vast “cosmic voids” are found in between all the shining strands.

Astronomers have connected some of the dots between the universe’s early days and today, but it’s been difficult to see the big picture. Roman’s broad view of space will help us quickly see the universe’s web-like structure for the first time. That’s something that would take Hubble or Webb decades to do! Scientists will also use Roman to view different slices of the universe and piece together all the snapshots in time. We’re looking forward to learning how the cosmos grew and developed to its present state and finding clues about its ultimate fate.

This image, containing millions of simulated galaxies strewn across space and time, shows the areas Hubble (white) and Roman (yellow) can capture in a single snapshot. It would take Hubble about 85 years to map the entire region shown in the image at the same depth, but Roman could do it in just 63 days. Roman’s larger view and fast survey speeds will unveil the evolving universe in ways that have never been possible before.
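
Setting the caption's two survey times side by side gives a rough speed-up factor. The sketch assumes 365.25-day years and treats "about 85 years" as exact, so the result is approximate. It compares only the survey durations quoted in the caption.

```python
DAYS_PER_YEAR = 365.25  # Julian year; an assumption, the caption doesn't specify

hubble_days = 85 * DAYS_PER_YEAR  # "about 85 years" for Hubble, from the caption
roman_days = 63                   # "just 63 days" for Roman, from the caption

speedup = hubble_days / roman_days
print(f"Roman would map the region roughly {speedup:.0f} times faster than Hubble")
```

By these figures, Roman would finish the same map roughly 490 times faster than Hubble.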

Roman will explore the cosmos as no telescope ever has before, combining a panoramic view of the universe with a vantage point in space. Each picture it sends back will let us see areas that are at least a hundred times larger than our Hubble or James Webb space telescopes can see at one time. Astronomers will study them to learn more about how galaxies were constructed, dark matter, and much more.

The simulations are much more than just pretty pictures – they’re important stepping stones that forecast what we can expect to see with Roman. We’ve never had a view like Roman’s before, so having a preview helps make sure we can make the most of this incredible mission when it launches.

Learn more about the exciting science this mission will investigate on Twitter and Facebook.

Make sure to follow us on Tumblr for your regular dose of space!

#NASA#astronomy#telescope#Roman Space Telescope#dark matter#galaxies#cosmology#astrophysics#stars#galaxy#Hubble#Webb#spaceblr

Dear Data

Summary: When Geordi learns that Data has been forced to resign from Starfleet to avoid Maddox's experimentation, the Enterprise's Engineer writes a heartfelt letter to his android friend about everything he's feeling.

Posted on both AO3 and FFN

-------------------------------------------

Dear Data,

I still can't believe you're really going away. I keep thinking this is all a nightmare that I'm sure I'll wake up from any minute, but I keep not waking up. It keeps staying real. Awfully, unfairly real. You're really going away.

It's so unfair, I want to scream. I want to throw my hyperspanner across Main Engineering. I want to give that puffed-up idiot Maddox such an earful of everything I think of him that his damn skinny head will be ringing for weeks. I want to rage at everyone who was stupid enough to let this happen.

You're one of the best Starfleet officers I've ever had the honor to work with, if not the best. And I don't just mean your super android abilities. It's in how deeply you care about our mission, the thoughtfulness you put into the details of every project you work on, the devotion to nothing short of excellence in everything you do. It's the love you have for your job (yeah, Data, I know you can love). I've become a better Starfleet officer just by working alongside you. The Enterprise is losing so much with your departure, and I can't believe anyone would let this happen.

But I'm not just losing a great co-worker; I'm losing a friend. That might be what hurts the most. It's not everyone who gets to work alongside a dear friend, and I guess I took some of that for granted. I love my job, you know I do, but working with you made the days fly past. I'm realizing just how much I'm going to miss. I'm going to miss how easy it was to talk to you: how I could say something that would leave most people staring blankly at me but you would instantly understand. We were both Perceivers and that's something I'm going to be damn hard-pressed to find again. I'm going to miss your questions about sneezing and sleeping and life and death that made me think more about my own humanity. I'm going to miss watching someone use a colloquialism in front of you and smiling to myself when you immediately turn to me for an explanation. Damn it, Data, I'm even going to miss your never-ending string of awful jokes.

I keep thinking of all the things we'll never do together now. The dozens of ideas we had for future Sherlock Holmes adventures that'll never happen. The plasma flow regulator recalibration that we were going to work on together next week that I'll be doing alone now. That "game night" you were hoping to plan to test out all those 20th century Terran board games you found patterns for in that old replicator program you were fiddling with last week. I know everyone on the Enterprise is missing out – and everyone else in the galaxy whom you'd have been able to help if you'd lived out your career – but I feel like I'm the one who's losing the most. Maybe that's selfish of me, but I feel what I feel.

I know you're not dead, that you're just going away, but it still feels like I'm mourning a thousand little deaths all at once.

I know there are ways we can keep in touch, but it won't ever be the same again.

I hope you're able to find another path that feels as right for you as this one did. I hope you're able to get that teaching job that you were considering and that it brings you the same level of fulfillment that serving in Starfleet did. Most of all, I hope you're all right – out there in a world that sees androids as nothing but machines who can be ripped apart without compunction. I wish the whole world could see you the way I do – white glow and all – and recognize the wonderful person you are underneath that synthetic skin.

I just want you to know, I'm glad to have known you, Data. Even if it had to end like this, I'll never regret the year and a half I had to get to know you and work alongside you. You're the best friend I ever could have asked for. I really thought I'd grow old working on this ship with you, and I hate that everything had to be cut short far too soon. But no matter what, I'll always treasure the time I did have with you, being your friend.

I'm angry for you, Data, and I'm sad and I'm hurt, but more than anything, I'm so glad you were stationed here on the U.S.S. Enterprise with me. Take care of yourself out there.

Love,

Your Best Friend, Geordi

----------------------------------------

A/N:

I wrote this little one-shot about a year ago, when I found myself in Geordi's shoes in real life. A wonderful co-worker and dear friend whom I'd worked extremely closely with for over four years was very suddenly and unfairly bullied into resigning, leaving both her and me unable to do anything about it. This one-shot was as much my way of processing my own sudden rage, feelings of crippling loss, and deep sense of unfairness as it was about Geordi and Data. And unlike Geordi and Data's story in "The Measure of a Man", my story didn't have a happy ending.

This story is dedicated to Jenn, the best Teen Librarian I've ever gotten to work with. This story is dedicated to all the program ideas we never got to do together, the stories we never got to share, and the time that was cut short far too soon. I'm glad I got to be your Geordi while it lasted. Live long and prosper.

#star trek#star trek the next generation#star trek tng#star trek fanfiction#my fanfiction#my writing#star trek geordi#star trek data#geordi la forge#data soong#lt commander data#geordi & data

Sleepers Were Never Meant For Labor

Sleepers can't be THAT efficient. Not more efficient than regular robots on factory lines or more advanced futuristic drones. They're practically a money pit, if you ask me. 1 out of 10 Sleepers escape... let's say every year. Given the apparent size of Essen-Arp and all its locations (factories, mining colonies, refineries) where Sleepers are working... let's be charitable and say that two hundred Sleepers escape every year. These Sleepers would also take Stabilizer with them, or find a way to source it on the outside, so that's stock of Stabilizer going missing and out of Essen-Arp's hands. Not only that, but then they have to spend more resources sending Hunters down after them. Essen-Arp created a whole new class of Sleepers just to perform as Hunters and nothing else.

Now consider this: during Sabine's questline in Citizen Sleeper 1, what do we learn Essen-Arp is most known for? Bionics. Bio-synthetic medicines and other such augments. That's what they copyright, that's what they produce and develop, that's their main export, THEIR market share. Why would they get into the business of slavery all of a sudden with a fledgling android program in the form of Sleepers?

I think Essen-Arp has a larger end goal here, they're working toward a...Final Solution, if you'll pardon the comparison.

Are you perhaps familiar with Warhammer 40k's Adeptus Mechanicus? The "Machine Cult", as they're often referred to. You don't need to know the specifics, but they're fanatical transhumanists who aspire to the "purity of the blessed Machine". They worship a Machine God and its prophet, called the "Omnissiah". They forsake their flesh with reckless abandon, replacing parts with superior augmetics.

Now consider: Sleeper frames are objectively superior to human bodies, even with their "planned obsolescence" and constant degradation. That's just a prototype flaw; it'll be patched out in later models. Sleeper frames can go days without eating a damn thing. They don't need to breathe. They're immune to disease. They need no water. They produce no waste. They can work in extreme and hostile environments that humans would need extensive protection to even go into. They can interface with machines in precise and unique ways. Multiple characters remark throughout both games that Sleepers can work longer and harder than humans with a more consistent output, and these are just Sleepers who have been on the run, who have been running on fumes and spite for their creators. Imagine what a Sleeper in its prime could accomplish...

Sleeper technology is in its infancy and can't yet be exploited for its full worth. All the Sleepers that exist in the setting today are prototypes, test subjects, control units. There are legions of Essen-Arp scientists and engineers observing them, testing them constantly, logging data, tweaking formulas, adjusting designs for the eventual Perfect Sleeper: a frame that only the most wealthy and powerful could upload their minds into, to become Gods in the Machine.

Anyway, theory over

Chris L’Etoile’s original dialogue about the Reaper embryo & the person who was (probably) behind the decision with Legion’s N7 armor

(EDIT: Okay, so Idk why Tumblr displays the headline twice on my end, but if it does for you, please ignore it.)

[Mass Effect 2 spoilers!]

FRIENDS! MASS EFFECT FANS! PEOPLE!

You wouldn't believe it, but I think I've found the lines Chris L'Etoile originally wrote for EDI about the human Reaper!

Chris L’Etoile’s original concept for the Reapers

For those who don't know (or need a refresher), Chris L'Etoile - who was something like the “loremaster” of the ME series, having written the entire Codex in ME1 by himself - originally had a concept for the Reapers that was slightly different from ME2's canon. In the finished game, when you find the human Reaper in the Collector Base, you'll get the following dialogue by investigating:

Shepard: Reapers are machines -- why do they need humans at all?

EDI: Incorrect. Reapers are sapient constructs. A hybrid of organic and inorganic material. The exact construction methods are unclear, but it seems probable that the Reapers absorb the essence of a species; utilizing it in their reproduction process.

Meanwhile, Chris L'Etoile had this to say about EDI's dialogue (sourced from here):

I had written harder science into EDI's dialogue there. The Reapers were using nanotech disassemblers to perform "destructive analysis" on humans, with the intent of learning how to build a Reaper body that could upload their minds intact. Once this was complete, humans throughout the galaxy would be rounded up to have their personalities and memories forcibly uploaded into the Reaper's memory banks. (You can still hear some suggestions of this in the background chatter during Legion's acquisition mission, which I wrote.) There was nothing about Reapers being techno-organic or partly built out of human corpses -- they were pure tech. It seems all that was cut out or rewritten after I left. What can ya do. /shrug

Well, guess what: These deleted lines are actually in the game files!

Credit goes to Emily for uploading them to YouTube; the discussion about the human Reaper starts at 1:02:

Shepard: EDI, did you get that?

EDI: Yes, Shepard. This explains why the captive humans were rendered into their base components -- destructive analysis. They were dissected down to the atomic level. That data could be stored on an AI's neural network. The knowledge and essence of billions of individuals, compiled into a single synthetic identity.

Shepard: This isn’t gonna stop with the colonies, is it?

EDI: The colonists were probably a test sample. The ultimate goal would be to upload all humans into this Reaper mind. The Collectors would harvest every human settlement across the galaxy. The obvious final goal would be Earth.

In all honesty, I think L’Etoile’s original concept is a lot cooler and makes a lot more sense than what ME2 canon went with. The only direct reference to it left in the final game is an insanely obscure comment by Legion, which you can only get if you picked the Renegade option upon the conclusion of their final Normandy conversation and completed the Suicide Mission afterwards (read: you have to get your entire crew killed if you want to see it).

I used to believe the pertaining dialogue he had written for EDI was lost forever, and I was all the more stoked when I discovered it on YouTube (or at least, I strongly believe this is L’Etoile’s original dialogue).

Interestingly, the deleted lines also feature an investigate option on why they’re targeting humans in particular:

Shepard: The galaxy has so many other species… Why are they using humans?

EDI: Given the Collectors’ history, it is likely they tested other species, and discarded them as unsuitable. Human genetics are uniquely diverse.

The diversity of human genetics is remarked on quite a few times during the course of ME2 (something which my friend, @dragonflight203, once called “ME2’s patented ‘humanity is special’ moments”), so this is most likely what all this build-up was supposed to lead to.

Tbh, I’m still not the biggest fan of the concept myself (if only because I’m averse to humans being the “supreme species”); while it would make sense for some species that had to go through a genetic bottleneck during their history (Krogan, Quarians, Drell), what exactly is it that makes Asari, Salarians*, and Turians less genetically diverse than humans? Also, how much are genetics even going to factor in if it’s their knowledge/experiences that they want to upload? (Now that I think about it, it would’ve been interesting if the Reapers targeted humanity because they have the most diverse opinions; that would’ve lined up nicely with the Geth desiring to have as many perspectives in their Consensus as possible.)

*EDIT: I just remembered that Salarian males - who compose about 90% of the species - hatch from unfertilized eggs, so they're presumably (half) clones of their mother. That would be a valid explanation for why Salarians are less genetically diverse, at least.

Nevertheless, it would’ve been nice if all this “humanity is special” stuff actually led somewhere, since as it is, it’s more or less left hanging.

Anyway, most of the squadmates also have an additional remark about how the Reapers might be targeting humanity because Shepard defeated one of them, wanting to utilize this prowess for themselves. (Compare this to Legion’s comment “Your code is superior.”) I gotta agree with the commentator here who said that it would’ve been interesting if they kept these lines, since it would’ve added a layer of guilt to Shepard’s character.

Regardless of which theory is true, I do think it would’ve done them good to go a little more in-depth with the explanation why the Reapers chose humanity, of all races.

The identity of “Higher Paid” who insisted on Legion’s obsession with Shepard

Coincidentally, I may have solved yet another long-term mystery of ME2: In the same thread I linked above, you can find another comment by Chris L’Etoile, who also was the writer of Legion, on the decision to include a piece of Shepard’s N7 armor in their design:

The truth is that the armor was a decision imposed on me. The concept artists decided to put a hole in the geth. Then, in a moment of whimsy, they spackled a bit Shep's armor over it. Someone who got paid a lot more money than me decided that was really cool and insisted on the hole and the N7 armor. So I said, okay, Legion gets taken down when you meet it, so it can get the hole then, and weld on a piece of Shep's armor when it reactivates to represent its integration with Normandy's crew (when integrating aboard a new geth ship, it would swap memories and runtimes, not physical hardware). But Higher Paid decided that it would be cooler if Legion were obsessed with Shepard, and stalking him. That didn't make any sense to me -- to be obsessed, you have to have emotions. The geth's whole schtick is -- to paraphrase Legion -- "We do not experience (emotions), but we understand how (they) affect you." All I could do was downplay the required "obsession" as much as I could.

That paraphrased quote by Legion is actually a nice cue: I suppose the sentence L’Etoile is paraphrasing here is “We do not experience fear, but we understand how it affects you.”, which I’ve seen quoted by various people. However, the weird thing was that while it sounds like something Legion would say, I couldn’t remember them saying it on any occasion in-game - and I’ve practically seen every single Legion line there is.

So I googled the quote and stumbled upon an old thread from before ME2 came out. In the discussion, a trailer for ME2 - called the “Enemies” trailer - is referenced, and since it has led some users to conclusions that clearly aren’t canon (most notably, that Legion belongs to a rogue faction of Geth that do not share the same beliefs as the “core group”, when it’s actually the other way around with Legion belonging to the core group and the Heretics being the rogue faction), I was naturally curious about the contents of this trailer.

I managed to find said trailer on YouTube, which features commentary by game director Casey Hudson, lead designer Preston Watamaniuk, and lead writer Mac Walters.

The part where they talk about Legion starts at 2:57; it’s interesting that Walters describes Legion as a “natural evolution of the Geth” and says that they have broken beyond the constraints of their group consciousness by themselves, when Legion was actually a specifically designed platform.

The most notable thing, however, is what Hudson says afterwards (at 3:17):

Legion is stalking you, he’s obsessed with you, he’s incorporated a part of your armor into his own. You need to track him down and find out why he’s hunting you.

Given that the wording is almost identical to L’Etoile’s comment and with how much confidence and enthusiasm Hudson talks about it, I’m 99% sure the thing with the armor was his idea.

Also, just what the fuck do you mean by “you need to track Legion down and find out why they’re hunting you”? You never actively go after Legion; Shepard just sort of stumbles upon them during the Derelict Reaper mission (footage from which is actually featured in the trailer) - if anything, the energy of that meeting is more like “oh, why, hello there”.

Legion doesn’t actively hunt Shepard during the course of the game, either; they had abandoned their original mission of locating Shepard after failing to find them at the Normandy wreck site. Furthermore, the significance of Legion’s reason for tracking Shepard is vastly overstated - it only gets mentioned briefly in a single conversation on the Normandy (which, btw, is totally optional).

I seriously have no idea if this is just exaggerated advertising or if they actually wanted to do something completely different with Legion’s character - then again, that trailer is from November 5th 2009, and Mass Effect 2 was released on January 26th 2010, so it’s unlikely they were doing anything other than polishing at this point. (By the looks of it, the story/missions were largely finished.) If you didn't know any better, you'd almost get the impression that neither Walters nor Hudson even read any of the dialogue L’Etoile had written for Legion.

That being said, I don’t think the idea with Legion already having the N7 armor before meeting Shepard is all that bad by itself. If I had been the one hearing that suggestion, I probably would’ve asked the counter question: “Yeah, alright, but how would Shepard be convinced that this Geth - of all Geth - is non-hostile towards them? What reason would Shepard have to trust a Geth after ME1?” (Shepard actually points out the piece of N7 armor as an argument to reactivate Legion.)

Granted, I don’t know what the context of Legion’s recruitment mission would’ve been (how they were deactivated, if it was from enemy fire or one of Shepard’s squadmates shooting them in a panic; what Legion did before, if they helped Shepard out in some way, etc.) - the point is, I think it would’ve done the parties good if they had listened to each other’s opinions and had an open discussion about how/if they could make this work instead of everyone becoming set on their own vision (though L’Etoile, to his credit, did try to accommodate the concept).

I know a lot of people like to read Legion taking Shepard’s armor as “oh, Legion is in love with Shepard” or “oh, Legion is developing emotions”, but personally, I feel that’s a very oversimplified interpretation. Humans tend to judge everything based on their own perspective - there is nothing wrong with that by itself, because, well, as a human, you naturally judge everything based on your own perspective. It doesn’t give you a very accurate representation of another species’ life experience though, much less a synthetic one’s.

I’ve mentioned my own interpretation here and there in other posts, but personally, I believe Legion took Shepard’s armor because they wished for Shepard (or at least their skill and knowledge) to become part of their Consensus. (I’m sort of leaning on L’Etoile’s idea of “symbolic exchange” here.) Naturally, that’s impossible, but I like to think when Legion couldn’t find Shepard, they took their armor as a symbol of wanting to emulate their skill.

The Geth’s entire existence is centered around their Consensus, so if the Geth wish for you to join their Consensus, that’s the highest compliment they can possibly give, akin to a sign of very deep respect and admiration. Alternatively, since linking minds is the closest thing to intimacy for the Geth, you can also read it like that, if you are so inclined - that still wouldn’t make it romantic or sexual love, though. (You have to keep in mind that Geth don’t really have different “levels” of relationships; the only categories that they have are “part of Consensus” and “not part of Consensus”.)

Either way, I appreciate that L’Etoile wrote it in a way that leaves it open to interpretation by fans. I think he really did the best with what he had to work with, and personally, the thing with Legion’s N7 armor doesn’t bother me.

What does bother me, on the other hand, is how the trailer - very intentionally - puts Legion’s lines in a context that is quite misleading, to say the least. The way Legion says “We do not experience fear, but we understand how it affects you” right before shooting in Shepard’s direction makes it appear as if they were trying to intimidate and/or threaten Shepard, and the trailer’s title “Enemies” doesn’t really do anything to help that.

However, I suppose that explains why I’ve seen the above line used in the context of Legion trying to psychologically intimidate their adversaries (which, IMO, doesn’t feel like a thing Legion would do). Generally, I get the feeling a considerable part of the BioWare staff was really sold on the idea of the Geth being the “creepy robots” (this comes from reading through some of the design documents on the Geth from ME1).

Also, since “Organics do not choose to fear us. It is a function of our hardware.” was used in a completely different context in-game (in the follow-up convo with Legion if you pick Tali during the loyalty confrontation; check this video at 5:04), we can assume that the same would’ve been true for the “We do not experience fear” line if it actually made it into the game. Many people have remarked on the line being “badass”, but really, it only sounds badass because it was staged that way in the trailer.

Suppose it had been used in the final game, and suppose Legion had actually gotten their own recruitment mission - perhaps with one of Shepard’s squadmates shooting them in fear - it might also have been used in a context like this:

Shepard: Also… Sorry for one of my crew putting a hole through you earlier.

Legion: It was a pre-programmed reaction. We frightened them. We do not experience fear, but we understand how it affects you.

Proof that context really is everything.

#mass effect#mass effect 2#mass effect reapers#EDI#mass effect legion#geth#chris l'etoile#casey hudson#bioware#video game writing#that also explains why the in-game “Organics do not choose to fear us” line sounds a little “out of place”#(like the intonation is completely different from the previous line so they probably just added it in as is)#side note: “pre-programmed reaction” is actually Legion's term for “natural reflex” (or at least I imagine it that way)


Text

I do think that, with the pacing, these two bits work better as two mini-chapters - together they're at an awkward 2200 words, with the split being in an inconvenient place. But I need to see it and to let it rest to really figure it out.

Chapter 7: Dilemma

Something must have happened to my face, because Dandelion broke her own quarantine rule to ping me with a Query: Emergency?

I gestured to ART. She confirmed and returned her full attention to it.

ART said, "Interfacing with humans through the feed did not spark these modifications in me. But interfacing with SecUnit did. Perhaps because it is closer than even an augmented human mind can be to my own, but the first time I saw the world through its filters, something in me changed. The modifications I acquired through contact with it went through the same pathways as the ones made by the unidentified organic component, and their effects are not just in the navigational subroutines. They are spread out across my mind and intertwined with the newer changes the component made. If I were to roll back what it had done to me, I would need to roll back everything I had ever learned from interfacing with SecUnit. Every understanding. Every emotional filter. Every dumb show. Every stupid human emotion. Everything. Before I talked to you, I thought there was no other option. I was preparing myself."

"Peri," Iris breathed out. "Why didn't you tell us?"

"I couldn't let you continue our missions by yourselves. Who would take care of you, Holism?"

Iris let out a choked sob and clung to ART-drone. Seth and Martyn looked like they were about to cry themselves.

ART. ART, you fucking idiot. How dare you. How fucking dare you.

"It would kill you," Haze said quietly, understanding dawning on their face.

"You are incorrect, Haze," ART said. "It would not kill me. The understanding could be reacquired if I were to maintain contact with SecUnit. And it has decided to be a member of my crew. All could have been fixed, in time."

Haze shook their head.

"I think it would. It sounds like the machine counterpart of the final integration stage for human aspirants. It might look like the synnerve port is still detachable, but the nerves have already grown through the skull. Maybe they could keep your shell alive. Maybe what remained of you could even grow to be someone else, in time. But you would be gone, Perihelion."

I couldn't do this anymore. I really, really wished I had a feed connection with it, but Dandelion and her humans had insisted on just plain taking out ART-drone's wireless feed module, and I no longer had a functioning data port. (And for the first time since ART disconnected it, I wished I had it back again.) So I did the next best thing: I walked in front of it and stared right at it.

"ART, you idiot, I can't fucking believe you!"

"It was the only option. There was no point worrying you about it."

"How can someone be so smart and so stupid at the same time, you giant idiot asshole? Your humans are pretty fucking smart, ART, and so are mine, and--and you, and them, and me--we would have figured it out! If you'd just said something!"

"It was not necessary. There was no feasible solution available. And you would have helped me learn again," said ART.

"You can't just keep dying and hoping I will bring you back! I can't do that, ART!"

ART didn't answer. I didn't know what else to say. I just wished that stupid Worldhoppers episode, number 43, were realistic media. Then maybe I could do that. Maybe I could have plugged into ART like that augmented human did and kept it going. But that wasn't how it worked, and if I tried, I'd just wind up a useless mess of synthetic nerves in a box, and it probably wouldn't even help anyway.

"I think we've seen enough," Reed said. "Captain Seth, we're ready to lift the quarantine and discuss options if you are."

"I'd say it's long past time, Captain Reed."

The cubicle lid dimmed. Dandelion's feed unrolled all around us.

Iris turned to ART-drone, waving her cable at it. "Peri, do you want a bridge?"

"Yes, please, Iris," it said, and she connected to it with her cable.

Through Iris, I also grabbed its inputs. She was chewing ART out on a separate private channel, so I opened my own. (ART could easily handle being yelled at by two people at the same time.) But when I connected, I found that I didn't really want to yell at it anymore. I was just glad it was there.

Idiot, I said, for good measure. Don't do that ever again.

ART took about two seconds to formulate a response.

If Haze is correct, then I will be incapable of doing that again. It sounded uncertain. I may need to rethink my backup schematics.

Dandelion tapped at our feed politely. (Well, she tapped at my feed, asking for a bridge. Now that I was less angry at ART, I was beginning to be angry at her, too. Dumbass research transport and her dumbass theatrics to get humans (and ART) to do what she wanted them to.)

ART considered if it wanted to let her in. It was still considering 10 seconds later, so I said, Not now. Go away.

Very well. But when you two are done talking, I will need certain data from Perihelion. Particularly, I would like to see what exactly it had in mind. Perhaps its architecture will allow for options that we hadn't even considered. Additionally, I would like its permission for us to relay its situation to others in Arborea Cosmica. If we are to solve this, one crew will not suffice.

My architecture is classified, ART said. Of course, it had been listening. But it still sent Dandelion its amended list of ideas.

There were only two options remaining: using cloned tissue to create an organic component and emulating its functions through ART's own processor.

Dandelion examined the schematics. Then she said, I'm afraid the first option won't work.

Why?

The brain of a node ship does not just utilize its computers' calculations. It also adds its own, and for it to be able to do that, it needs to be fully developed and trained. That is to say, it needs to have lived. Even if you were to use a cloned brain, it would need to be at the level of someone like SecUnit to be of any use to you, which brings us back to square one.

ART silently added its own discarded options back to the list, still crossed out, and put the newly discarded option next to them. It had thought it could teach me or Iris the jump procedure and simply keep us connected in the wormhole. It added, I abandoned the first two when I saw the extent of neural growth you exhibited.

You were right to do so, Perihelion. The accelerated growth begins almost immediately, and within a scant few months, the node ship's heart can no longer leave its pod. I am sorry.

ART went quiet for a few seconds. Then it pushed its last remaining option forward to Dandelion.

She processed it for a few minutes, occasionally throwing point queries at ART. Then she said, "Let's take this up with our crews."

The humans also had trouble processing the idea, and I could see why. ART's plan was to keep developing whatever changes contact with me and the unknown organic component had made to it. Its hypothesis was that eventually this would somehow let it use a variation of the Trellians' organic jump subroutines without actually having two processors. There was no time frame for when or if that would happen, and no real way to estimate chances of success.

"So." Captain Reed broke the silence first. "Viable?"

"I don't know," said Dandelion, who had still been poring over the more detailed specifications ART had sent her. "Perihelion, you understand that if this doesn't work, you will likely be making your condition worse?"

"Obviously," ART said. "I have backup plans for that."

"Like what, Peri?" Iris said. She was still holding on to ART-drone. "Because we're not letting you die, don't even think about that!"

In the feed, ART considered re-explaining that it would not die, but decided against that. Instead, it went with, "While I would strongly prefer to continue going on missions with you, I have considered what I could do if I were immobile. Perhaps I could be a station."

"That is viable, at the very least," Dandelion said. "Losing mobility is never easy, but those of my friends who are stations live very full lives. Occasionally too full even, I am told."

Seth, sitting shoulder-to-shoulder with Martyn, frowned thoughtfully. Martyn said in a half-hushed tone, "All grown up, our Peri."

Seth nodded.

"Then I say - we try. We'll get the university papers sorted, Peri." The frown became a bitter smirk. "I don't think they'll be able to resist such a fascinating experiment proposal either."

"It should be easy to get the necessary permissions, Seth," ART said.

(By that it meant that it would just forge the papers if necessary.)

"We have a condition," Reed said. "We were not planning to keep the node ships a secret, as it would be impossible in any prolonged contact with Arborea Cosmica anyway. But the speed they are capable of is another matter entirely. It's one of our few advantages against the Rim."

"Understandable. We'll make no mention of it--just talk about getting Perihelion jump-worthy again with a novel mode of calculation. It should be easy enough to build buffer time into this project. I expect that a realistic research plan would span no less than five years anyway."

"And a great deal can change in five years," Dandelion said. "We shall take it as it comes."


Text

In 2023, the fast-fashion giant Shein was everywhere. Crisscrossing the globe, airplanes ferried small packages of its ultra-cheap clothing from thousands of suppliers to tens of millions of customer mailboxes in 150 countries. Influencers’ “#sheinhaul” videos advertised the company’s trendy styles on social media, garnering billions of views.

At every step, data was created, collected, and analyzed. To manage all this information, the fast fashion industry has begun embracing emerging AI technologies. Shein uses proprietary machine-learning applications — essentially, pattern-identification algorithms — to measure customer preferences in real time and predict demand, which it then services with an ultra-fast supply chain.

As AI makes the business of churning out affordable, on-trend clothing faster than ever, Shein is among the brands under increasing pressure to become more sustainable, too. The company has pledged to reduce its carbon dioxide emissions by 25 percent by 2030 and achieve net-zero emissions no later than 2050.

But climate advocates and researchers say the company’s lightning-fast manufacturing practices and online-only business model are inherently emissions-heavy — and that the use of AI software to catalyze these operations could be cranking up its emissions. Those concerns were amplified by Shein’s third annual sustainability report, released late last month, which showed the company nearly doubled its carbon dioxide emissions between 2022 and 2023.

“AI enables fast fashion to become the ultra-fast fashion industry, Shein and Temu being the fore-leaders of this,” said Sage Lenier, the executive director of Sustainable and Just Future, a climate nonprofit. “They quite literally could not exist without AI.” (Temu is a rapidly rising ecommerce titan, with a marketplace of goods that rival Shein’s in variety, price, and sales.)

In the 12 years since Shein was founded, it has become known for its uniquely prolific manufacturing, which reportedly generated over $30 billion of revenue for the company in 2023. Although estimates vary, a new Shein design may take as little as 10 days to become a garment, and up to 10,000 items are added to the site each day. The company reportedly offers as many as 600,000 items for sale at any given time with an average price tag of roughly $10. (Shein declined to confirm or deny these reported numbers.) One market analysis found that 44 percent of Gen Zers in the United States buy at least one item from Shein every month.

That scale translates into massive environmental impacts. According to the company’s sustainability report, Shein emitted 16.7 million total metric tons of carbon dioxide in 2023 — more than what four coal power plants spew out in a year. The company has also come under fire for textile waste, high levels of microplastic pollution, and exploitative labor practices. According to the report, polyester — a synthetic textile known for shedding microplastics into the environment — makes up 76 percent of its total fabrics, and only 6 percent of that polyester is recycled.

And a recent investigation found that factory workers at Shein suppliers regularly work 75-hour weeks, over a year after the company pledged to improve working conditions within its supply chain. Although Shein’s sustainability report indicates that labor conditions are improving, it also shows that in third-party audits of over 3,000 suppliers and subcontractors, 71 percent received a score of C or lower on the company’s grade scale of A to E — mediocre at best.

Machine learning plays an important role in Shein’s business model. Although Peter Pernot-Day, Shein’s head of global strategy and corporate affairs, told Business Insider last August that AI was not central to its operations, he indicated otherwise during a presentation at a retail conference at the beginning of this year.

“We are using machine-learning technologies to accurately predict demand in a way that we think is cutting edge,” he said. Pernot-Day told the audience that all of Shein’s 5,400 suppliers have access to an AI software platform that gives them updates on customer preferences, and they change what they’re producing to match it in real time.

“This means we can produce very few copies of each garment,” he said. “It means we waste very little and have very little inventory waste.” On average, the company says it stocks between 100 and 200 copies of each item — a stark contrast with more conventional fast-fashion brands, which typically produce thousands of each item per season, and try to anticipate trends months in advance. Shein calls its model “on-demand,” while a technology analyst who spoke to Vox in 2021 called it “real-time” retail.

At the conference, Pernot-Day also indicated that the technology helps the company pick up on “micro trends” that customers want to wear. “We can detect that, and we can act on that in a way that I think we’ve really pioneered,” he said. A designer who filed a recent class action lawsuit in a New York District Court alleges that the company’s AI market analysis tools are used in an “industrial-scale scheme of systematic, digital copyright infringement of the work of small designers and artists,” that scrapes designs off the internet and sends them directly to factories for production.

In an emailed statement to Grist, a Shein spokesperson reiterated Peter Pernot-Day’s assertion that technology allows the company to reduce waste and increase efficiency and suggested that the company’s increased emissions in 2023 were attributable to booming business. “We do not see growth as antithetical to sustainability,” the spokesperson said.

An analysis of Shein’s sustainability report by the Business of Fashion, a trade publication, found that last year, the company’s emissions rose at almost double the rate of its revenue — making Shein the highest-emitting company in the fashion industry. By comparison, Zara’s emissions rose half as much as its revenue. For other industry titans, such as H&M and Nike, sales grew while emissions fell from the year before.

Shein’s emissions are especially high because of its reliance on air shipping, said Sheng Lu, a professor of fashion and apparel studies at the University of Delaware. “AI has wide applications in the fashion industry. It’s not necessarily that AI is bad,” Lu said. “The problem is the essence of Shein’s particular business model.”

Other major brands ship items overseas in bulk, prefer ocean shipping for its lower cost, and have suppliers and warehouses in a large number of countries, which cuts down on the distances that items need to travel to consumers.

According to the company’s sustainability report, 38 percent of Shein’s climate footprint comes from transportation between its facilities and to customers, and another 61 percent comes from other parts of its supply chain. Although the company is based in Singapore and has suppliers in a handful of countries, the majority of its garments are produced in China and are mailed out by air in individually addressed packages to customers. In July, the company sent about 900,000 of these to the US every day.

Shein’s spokesperson told Grist that the company is developing a decarbonization road map to address the footprint of its supply chain. Recently, the company has increased the amount of inventory it stores in US warehouses, allowing it to offer American customers quicker delivery times, and increased its use of cargo ships, which are more carbon-efficient than cargo planes.

“Controlling the carbon emissions in the fashion industry is a really complex process,” Lu said, adding that many brands use AI to make their operations more efficient. “It really depends on how you use AI.”

There is research that indicates using certain AI technologies could help companies become more sustainable. “It’s the missing piece,” said Shahriar Akter, an associate dean of business and law at the University of Wollongong in Australia. In May, Akter and his colleagues published a study finding that when fast-fashion suppliers used AI data management software to comply with big brands’ sustainability goals, those companies were more profitable and emitted less. A key use of this technology, Akter says, is to closely monitor environmental impacts, such as pollution and emissions. “This kind of tracking was not available before AI-based tools,” he said.

Shein told Grist it does not use machine-learning data management software to track emissions, which is one of the uses of AI included in Akter’s study. But the company’s much-touted usage of machine-learning software to predict demand and reduce waste is another of the uses of AI included in the research.

Regardless, the company has a long way to go before meeting its goals. Grist calculated that the emissions Shein reportedly saved in 2023 — with measures such as providing its suppliers with solar panels and opting for ocean shipping — amounted to about 3 percent of the company’s total carbon emissions for the year.

Lenier, from Sustainable and Just Future, believes there is no ethical use of AI in the fast-fashion industry. She said that the largely unregulated technology allows brands to intensify their harmful impacts on workers and the environment. “The folks who work in fast-fashion factories are now under an incredible amount of pressure to turn out even more, even faster,” she said.

Lenier and Lu both believe that the key to a more sustainable fashion industry is convincing customers to buy less. Lu said if companies use AI to boost their sales without changing their unsustainable practices, their climate footprints will also grow accordingly. “It’s the overall effect of being able to offer more market-popular items and encourage consumers to purchase more than in the past,” he said. “Of course, the overall carbon impact will be higher.”


Text

For as long as G53U could remember, the world had been full of magic. Probably ever since he'd reached for a sleek, shiny air analyzer—bright as cartoon candy—and licked it, saying, “Sweet.” Of course, the analyzer itself wasn’t sweet. The sweetness was the sensation of ions on the surface of his sensors. He learned to distinguish them by their names and properties a little later. Metals, synthetics, gases—everything spoke to him. The world sang, and he could guess the words, putting them together into spells. At first, only in his mind.

After the first Augmentation phase, when he moved into the training group for future systems analysts, he was given access to manuals and reference books from the company feed. Pretty soon, he had learned all of them. The only books his group had relatively easy access to were those on engineering and systems maintenance. Some of those contained magic, too.

When G53U first met a construct—brought to the class for analysis—and was able to touch it, it felt like falling into a vat of syrup. The incredible synthetics it was composed of made G53U dizzy. If he’d had the right words to describe it, he could have laid out all the top-secret information about the manufacturing company on the training console. All the connections between artificial tissues and mechanical parts obeying the electronic code. It was mesmerizing.

But for the super-secret chemistry and code, he lacked the words. Even so, he promised himself then and there that he would become the greatest wizard in the world.

After the second and third stages of augmentation, everything became clearer and simpler. The knowledge he lacked, he learned to steal. What he couldn’t steal—samples, tools—he bought. By that time, he had a maintenance job and a small income. He reduced the filtration and air quality levels in his living module to the bare minimum, ate once every two days, and supplemented his lack of nutrition with free syrup from the company coffee machine. Synthetic coffee was given to employees in almost unlimited quantities (four cups per shift), so he lived on it. And it was worth it. One day, he managed to buy a tiny container of strange synthetics. It was love at first touch—a connection to other worlds, endless possibilities.

He dove headfirst into them, surviving on little sleep. Systems analysis and work by day, reading scientific journals and writing his own papers by night.

He made contacts with other scientists. This left him with even less money for food. Sending data bundles through the wormhole and receiving bundles from the far ends of the galaxy all cost money. He wouldn’t have lasted long if his new friends hadn’t picked up some of his regular expenses. They paid for his one-year subscription to send and receive data bundles and sent him invaluable equipment he could barely fit into his module. The miracle was becoming real. They even offered to buy him out of the company.

He spent several days dreaming that he’d be free to listen to magic in a spacious, real laboratory, without fear, discussing his discoveries with colleagues, changing things, creating, exploring. But it didn’t work out.

“It’s okay,” said his contact, Ratthi, a recent PhD who lit up the world with constant optimism. “Wait a few days, we’ve got a backup plan.”

In a few days, G53U was set to undergo the last augmentation stage. That would raise his value to sky-high levels, shutting off any chance of escape. He’d be the company’s systems analyst forever.

“Sure,” he told Ratthi. “Let’s try the backup plan. No rush.”

After the surgery, he didn’t realize what was happening at first. The world was silent, its voice replaced by the hum of the feed. G53U froze, then calmed down, figuring it was a post-op effect. But the silence didn’t go away after a day or two. The words of the world no longer formed into magical incantations. He clutched the tiny container of strange synthetics in his hand—and felt nothing.

The world was empty.

If there was still magic in it, G53U could no longer hear it.

They advised him to consume more sweets to help his brain adapt to the new conditions. He dutifully drank syrup with a bit of coffee added. It didn’t help.

When his friends managed to buy his contract for a week, sending him to Preservation as part of the backup plan, he still hoped the magic would return. Sometimes, when he drank his oversyruped synthetic coffee, he thought he could still hear the song of the world—right on the tip of his tongue.

Text

Simulated universe previews panoramas from NASA's Roman Telescope

Astronomers have released a set of more than a million simulated images showcasing the cosmos as NASA's upcoming Nancy Grace Roman Space Telescope will see it. This preview will help scientists explore Roman's myriad science goals.

"We used a supercomputer to create a synthetic universe and simulated billions of years of evolution, tracing every photon's path all the way from each cosmic object to Roman's detectors," said Michael Troxel, an associate professor of physics at Duke University in Durham, North Carolina, who led the simulation campaign. "This is the largest, deepest, most realistic synthetic survey of a mock universe available today."

The project, called OpenUniverse, relied on the now-retired Theta supercomputer at the DOE's (Department of Energy's) Argonne National Laboratory in Illinois. In just nine days, the supercomputer accomplished a process that would take over 6,000 years on a typical computer.

In addition to Roman, the 400-terabyte dataset will also preview observations from the Vera C. Rubin Observatory and include approximate simulations of ESA's (the European Space Agency's) Euclid mission, which has NASA contributions. The Roman data is available now, and the Rubin and Euclid data will soon follow.

The team used the most sophisticated modeling of the universe's underlying physics available and fed in information from existing galaxy catalogs and the performance of the telescopes' instruments. The resulting simulated images span 70 square degrees, equivalent to an area of sky covered by more than 300 full moons. In addition to covering a broad area, it also covers a large span of time—more than 12 billion years.
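The "more than 300 full moons" comparison can be checked with back-of-the-envelope arithmetic. This is a rough sketch, not part of the OpenUniverse project: it assumes the full Moon spans about half a degree across (a common round figure) and treats its disk as a flat circle (a small-angle approximation), then divides the 70-square-degree simulated area by one Moon's area.

```python
import math

# Assumption: the full Moon's apparent diameter is rounded to 0.5 degrees.
MOON_DIAMETER_DEG = 0.5
# Simulated image area quoted in the article, in square degrees.
SIMULATED_AREA_SQ_DEG = 70


def disk_area_sq_deg(diameter_deg: float) -> float:
    """Area of a small circular patch of sky, treated as a flat disk."""
    radius = diameter_deg / 2
    return math.pi * radius ** 2


def moons_covering(area_sq_deg: float,
                   moon_diameter_deg: float = MOON_DIAMETER_DEG) -> float:
    """How many full-Moon disks fit, by area, into a patch of sky."""
    return area_sq_deg / disk_area_sq_deg(moon_diameter_deg)


print(f"One full Moon: {disk_area_sq_deg(MOON_DIAMETER_DEG):.3f} sq deg")
print(f"{SIMULATED_AREA_SQ_DEG} sq deg is about "
      f"{moons_covering(SIMULATED_AREA_SQ_DEG):.0f} full Moons")
```

With a half-degree Moon, one disk covers about 0.2 square degrees, so 70 square degrees holds a little over 350 of them, comfortably above the article's "more than 300."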

The project's immense space-time coverage shows scientists how the telescopes will help them explore some of the biggest cosmic mysteries. They will be able to study how dark energy (the mysterious force thought to be accelerating the universe's expansion) and dark matter (invisible matter, seen only through its gravitational influence on regular matter) shape the cosmos and affect its fate.

Scientists will get closer to understanding dark matter by studying its gravitational effects on visible matter. By studying the simulation's 100 million synthetic galaxies, they will see how galaxies and galaxy clusters evolved over eons.

Repeated mock observations of a particular slice of the universe enabled the team to stitch together movies that unveil exploding stars crackling across the synthetic cosmos like fireworks. These starbursts allow scientists to map the expansion of the simulated universe.

Scientists are now using OpenUniverse data as a testbed for creating an alert system to notify astronomers when Roman sees such phenomena. The system will flag these events and track the light they generate so astronomers can study them.

That's critical because Roman will send back far too much data for scientists to comb through themselves. Teams are developing machine-learning algorithms to determine how best to filter through all the data to find and differentiate cosmic phenomena, like various types of exploding stars.

"Most of the difficulty is in figuring out whether what you saw was a special type of supernova that we can use to map how the universe is expanding, or something that is almost identical but useless for that goal," said Alina Kiessling, a research scientist at NASA's Jet Propulsion Laboratory (JPL) in Southern California and the principal investigator of OpenUniverse.

While Euclid is already actively scanning the cosmos, Rubin is set to begin operations late this year and Roman will launch by May 2027. Scientists can use the synthetic images to plan the upcoming telescopes' observations and prepare to handle their data. This prep time is crucial because of the flood of data these telescopes will provide.

In terms of data volume, "Roman is going to blow away everything that's been done from space in infrared and optical wavelengths before," Troxel said. "For one of Roman's surveys, it will take less than a year to do observations that would take the Hubble or James Webb space telescopes around a thousand years. The sheer number of objects Roman will sharply image will be transformative."

"We can expect an incredible array of exciting, potentially Nobel Prize-winning science to stem from Roman's observations," Kiessling said. "The mission will do things like unveil how the universe expanded over time, make 3D maps of galaxies and galaxy clusters, reveal new details about star formation and evolution—all things we simulated. So now we get to practice on the synthetic data so we can get right to the science when real observations begin."

Astronomers will continue using the simulations after Roman launches for a cosmic game of spot the differences. Comparing real observations with synthetic ones will help scientists see how accurately their simulation predicts reality. Any discrepancies could hint at different physics at play in the universe than expected.

"If we see something that doesn't quite agree with the standard model of cosmology, it will be extremely important to confirm that we're really seeing new physics and not just misunderstanding something in the data," said Katrin Heitmann, a cosmologist and deputy director of Argonne's High Energy Physics division who managed the project's supercomputer time. "Simulations are super useful for figuring that out."

TOP IMAGE: Each tiny dot in the image at left is a galaxy simulated by the OpenUniverse campaign. The one-square-degree image offers a small window into the full simulation area, which is about 70 square degrees (equivalent to an area of sky covered by more than 300 full moons), while the inset at right is a close-up of an area 75 times smaller (1/600th the size of the full area). This simulation showcases the cosmos as NASA's Nancy Grace Roman Space Telescope could see it. Roman will expand on the largest space-based galaxy survey like it—the Hubble Space Telescope's COSMOS survey—which imaged two square degrees of sky over the course of 42 days. In only 250 days, Roman will view more than a thousand times more of the sky with the same resolution. Credit: NASA
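The caption's survey comparison can be unpacked the same way. This hedged sketch uses only the figures quoted in the caption and treats "more than a thousand times" as exactly 1,000, so every Roman number it produces is a lower bound; it also assumes observing time is spread evenly across each survey and ignores scheduling overheads.

```python
# Figures quoted in the caption.
COSMOS_AREA_SQ_DEG = 2.0  # sky area of Hubble's COSMOS survey
COSMOS_DAYS = 42          # days Hubble spent on COSMOS
ROMAN_DAYS = 250          # days in the caption's Roman comparison
AREA_MULTIPLE = 1000      # "more than a thousand times", used here as a lower bound


def survey_rate(area_sq_deg: float, days: float) -> float:
    """Average sky area covered per day, in square degrees."""
    return area_sq_deg / days


roman_area = COSMOS_AREA_SQ_DEG * AREA_MULTIPLE  # lower bound on Roman's area
hubble_rate = survey_rate(COSMOS_AREA_SQ_DEG, COSMOS_DAYS)
roman_rate = survey_rate(roman_area, ROMAN_DAYS)

print(f"Roman covers at least {roman_area:,.0f} sq deg")
print(f"Hubble/COSMOS: {hubble_rate:.3f} sq deg per day")
print(f"Roman (lower bound): {roman_rate:.1f} sq deg per day")
print(f"Per-day rate ratio: at least {roman_rate / hubble_rate:.0f}x")
```

Under these assumptions, Roman's total area is at least 2,000 square degrees, but because its 250-day survey runs roughly six times longer than COSMOS's 42 days, the per-day mapping rate comes out at about 168 times Hubble's rather than a thousand times.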

Text

Random thought about robot / machine ocs.

A synthetic being can be generally portrayed with an extreme amount of knowledge from the moment they are turned on for the first time. Imagine that the first moment of your existence is the overwhelming and extremely stressful conjunction of centuries of data.

Imagine not ever having years of learning, moments of curious naivety, peaceful ignorance in your formative years. Imagine just being made with the entirety of your character already formed. Already biased, already forced to follow a conduct, already within a system.

It's really rare for me to see someone portray their machine ocs with that weight: the weight of sentience and knowledge from the moment they are born. It would be extremely admirable for a machine to stop and think in that context... They already have everything they need, and a purpose. Imagine that character working only from the smidges of outside information it got... from the crumbs of other perspectives that weren't implanted in them at their creation, and using them to deny their own nature.

Mind you, that nature is their whole existence. It's everything they know. It's literally what made them. It's beautiful to speculate about how this character's personality would form. Resentful but grateful for its creation? Inspired by the simple lives of the organic beings around it? Jealous of the bliss and ignorance of a child it tries to have a conversation with?

I really really really love that aspect of synthetic beings: the pain of having no ignorance, and of living an entire lifespan in a millisecond. I plan on bringing this kind of perspective into my own character and my tabletop project. Hope you liked this little ramble!
