#Variational Auto-encoders


Deep Learning: Generative AI

#GenerativeAI#GANs (Generative Adversarial Networks)#VAEs (Variational Auto encoders)#ArtificialIntelligence#MachineLearning#DeepLearning#NeuralNetworks#AIApplications#CreativeAI#NaturalLanguageGeneration (NLG)#ImageSynthesis#TextGeneration#ComputerVision#DeepfakeTechnology#AIArt#GenerativeDesign#AutonomousSystems#ContentCreation#TransferLearning#ReinforcementLearning#CreativeCoding#AIInnovation#TDM#health#healthcare#bootcamp#llm#youtube#branding#artwork


AI tool finds gene groups behind complex diseases and could help create personalized treatments

- By Nuadox Crew -

Northwestern University biophysicists have developed a new AI-powered tool to identify gene combinations that cause complex diseases like cancer, diabetes, and asthma.

Unlike single-gene disorders, these illnesses result from multiple genes interacting, making them harder to study.

The new model, called TWAVE (Transcriptome-Wide conditional Variational auto-Encoder), uses generative AI to amplify limited gene expression data and detect patterns linked to disease traits.

Rather than focusing on individual genes, TWAVE identifies gene groups responsible for shifting cells between healthy and diseased states. It also works from gene expression rather than DNA sequence: expression data reflect environmental and lifestyle factors and avoid the privacy concerns associated with DNA sequences.

In tests across various diseases, TWAVE outperformed traditional methods by pinpointing disease-causing genes—including some previously missed—and showing that different people can develop the same disease from different genetic pathways.

This paves the way for more personalized treatments based on an individual's specific gene expression profile.
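TWAVE is built on a conditional variational auto-encoder. Its actual architecture is described in the PNAS paper. The sketch below is a generic plain-Python illustration, not TWAVE's code. It shows the pieces every (conditional) VAE shares: the reparameterized latent sample, the closed-form KL term that pulls the encoder's Gaussian toward a standard normal, and a condition vector appended to the inputs. All function names are hypothetical.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def conditional_input(x, condition):
    """Conditioning in a CVAE: append the condition (e.g. a one-hot trait label)
    to the encoder input; the decoder receives z with the same condition appended."""
    return list(x) + list(condition)


def negative_elbo(recon_error, mu, log_var):
    """Training loss: reconstruction error plus the KL regularizer."""
    return recon_error + kl_to_standard_normal(mu, log_var)
```

Sampling several z values for the same condition and decoding them is, broadly, how a generative model like this can produce additional synthetic expression profiles from limited data.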

Header image credit: Camila Felix.

Scientific paper: Motter, Adilson E., Generative prediction of causal gene sets responsible for complex traits, Proceedings of the National Academy of Sciences (2025). DOI: 10.1073/pnas.2415071122

Read more at Northwestern University


Generative AI Market Size, Share, Scope, Analysis, Forecast, Regional Outlook and Industry Report 2032

The Generative AI Market Size was valued at USD 20.21 billion in 2023 and is expected to reach USD 440 billion by 2032, growing at a CAGR of 41.31% over the forecast period 2024-2032.
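The headline figures can be checked with the standard CAGR formula. This sketch assumes growth compounds annually from the 2023 base over the nine years to 2032. On that assumption, the implied rate comes out near 40.8%. Compounding at the stated 41.31% would instead give roughly USD 454 billion rather than 440. The report does not say how its rate was derived, so this only checks the arithmetic.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value, rate, years):
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the report (USD billions); 2023 -> 2032 assumed to be nine years of growth.
implied_rate = cagr(20.21, 440.0, 9)
projected_2032 = project(20.21, 0.4131, 9)
print(f"implied CAGR: {implied_rate:.2%}")             # about 40.8%
print(f"2032 value at 41.31%: {projected_2032:.0f}")   # about 454
```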

Generative AI is rapidly transforming industries with its ability to create text, images, audio, and video content. Fueled by breakthroughs in machine learning and deep learning, this technology is reshaping productivity and creativity. From startups to tech giants, investments in generative AI are accelerating at an unprecedented pace.

The generative AI market has become a central focus across sectors such as media, healthcare, marketing, and finance. Businesses are using the technology to streamline operations, enhance customer experience, and unlock new revenue streams. With user-friendly tools and APIs now widely available, generative AI is no longer limited to tech-savvy developers; it is accessible to creators, marketers, and businesses of all sizes.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/4490

Market Key Players:

Synthesia

IBM

Microsoft

Rephrase.ai

Genei AI Ltd

Google LLC

Adobe

Runway

Capgemini

Accenture

Mistral AI

OpenAI

Trends Driving the Generative AI Market

AI-as-a-Service Models: Cloud-based platforms like OpenAI, Google Cloud, and AWS offer generative AI tools as plug-and-play services, making integration simpler for businesses.

Creative Automation: Generative AI is transforming content creation—from writing articles to generating code, music, and design prototypes—boosting productivity in creative industries.

Personalized Experiences: Brands are using generative AI to craft personalized emails, ads, and product recommendations at scale, increasing customer engagement.

Ethical AI and Regulation: As usage grows, concerns about misinformation, deepfakes, and data privacy are prompting governments and organizations to push for ethical AI practices and regulatory frameworks.

Enquire About This Report: https://www.snsinsider.com/enquiry/4490

Market Segmentation:

BY PRODUCT TYPE

Software

Service

BY TECHNOLOGY

Variational Auto-encoders

Diffusion Networks

GANs

Transformers

BY APPLICATION

Computer Vision

Predictive Analysis

Content Generation

NLP

Robotics & Automation

Chatbots & Intelligent Virtual Assistants

Others

BY MODEL

Image & Video Generative Models

Chatbots & Intelligent Virtual Assistants

Large Language Models

Others

BY END USER

Media & Entertainment

BFSI

Automotive & Transportation

Gaming

IT & Telecommunication

Market Analysis: Key Insights

Sector Adoption: High adoption rates are seen in marketing & advertising, software development, healthcare diagnostics, and finance, where generative AI is improving efficiency and customer service.

Investment Surge: Venture capital and corporate investments are pouring into generative AI startups, with several billion-dollar valuations recorded in 2024 alone.

Talent Demand: The need for AI engineers, data scientists, and prompt designers has skyrocketed, reflecting a broader shift in required workforce skills.

Future Prospects

The future of the Generative AI Market is bright, with ongoing innovations paving the way for new use cases and business models. We can expect deeper integration of generative AI in enterprise workflows—from automated legal drafting and medical imaging analysis to real-time customer service and product prototyping.

As open-source models become more powerful and accessible, smaller companies will also compete on innovation. Multi-modal generative AI—where text, image, and video generation converge—will enable more seamless, immersive applications across industries. Additionally, developments in edge computing will allow AI models to run locally on devices, enhancing privacy and reducing latency.

In education, generative AI will support personalized learning, adaptive assessments, and content generation for diverse student needs. In entertainment, AI will play a key role in co-creating scripts, visuals, and even virtual actors. Cross-disciplinary applications like biotech and engineering design will benefit from simulation and testing powered by generative models.

Access Complete Report: https://www.snsinsider.com/reports/generative-ai-market-4490

Conclusion

The Generative AI Market is evolving at an extraordinary pace, offering transformative potential across industries. While challenges around ethical use and regulation remain, the technology’s ability to unlock creativity, speed up innovation, and reduce operational costs makes it a driving force in the digital economy.

As businesses continue to explore its full potential, generative AI is set to become an essential tool—not just for automation, but for imagination. Those who adapt early will gain a strong competitive edge in the age of intelligent creation.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)


IEEE Transactions on Emerging Topics in Computational Intelligence Volume 9, Issue 1, February 2025

1) A Survey of Human-Object Interaction Detection With Deep Learning

Author(s): Geng Han, Jiachen Zhao, Lele Zhang, Fang Deng

Pages: 3 - 26

2) Exploring the Horizons of Meta-Learning in Neural Networks: A Survey of the State-of-the-Art

Author(s): Asit Barman, Swalpa Kumar Roy, Swagatam Das, Paramartha Dutta

Pages: 27 - 42

3) Micro Many-Objective Evolutionary Algorithm With Knowledge Transfer

Author(s): Hu Peng, Zhongtian Luo, Tian Fang, Qingfu Zhang

Pages: 43 - 56

4) MoAR-CNN: Multi-Objective Adversarially Robust Convolutional Neural Network for SAR Image Classification

Author(s): Hai-Nan Wei, Guo-Qiang Zeng, Kang-Di Lu, Guang-Gang Geng, Jian Weng

Pages: 57 - 74

5) Prescribed-Time Optimal Consensus for Switched Stochastic Multiagent Systems: Reinforcement Learning Strategy

Author(s): Weiwei Guang, Xin Wang, Lihua Tan, Jian Sun, Tingwen Huang

Pages: 75 - 86

6) SR-ABR: Super Resolution Integrated ABR Algorithm for Cloud-Based Video Streaming

Author(s): Haiqiao Wu, Dapeng Oliver Wu, Peng Gong

Pages: 87 - 98

7) 3D-IMMC: Incomplete Multi-Modal 3D Shape Clustering via Cross Mapping and Dual Adaptive Fusion

Author(s): Tianyi Qin, Bo Peng, Jianjun Lei, Jiahui Song, Liying Xu, Qingming Huang

Pages: 99 - 108

8) A Co-Evolutionary Dual Niching Differential Evolution Algorithm for Nonlinear Equation Systems Optimization

Author(s): Shuijia Li, Rui Wang, Wenyin Gong, Zuowen Liao, Ling Wang

Pages: 109 - 118

9) A Collaborative Multi-Component Optimization Model Based on Pattern Sequence Similarity for Electricity Demand Prediction

Author(s): Xiaoyong Tang, Juan Zhang, Ronghui Cao, Wenzheng Liu

Pages: 119 - 130

10) A Deep Reinforcement Learning-Based Adaptive Large Neighborhood Search for Capacitated Electric Vehicle Routing Problems

Author(s): Chao Wang, Mengmeng Cao, Hao Jiang, Xiaoshu Xiang, Xingyi Zhang

Pages: 131 - 144

11) A Diversified Population Migration-Based Multiobjective Evolutionary Algorithm for Dynamic Community Detection

Author(s): Lei Zhang, Chaofan Qin, Haipeng Yang, Zishan Xiong, Renzhi Cao, Fan Cheng

Pages: 145 - 159

12) A Hub-Based Self-Organizing Algorithm for Feedforward Small-World Neural Network

Author(s): Wenjing Li, Can Chen, Junfei Qiao

Pages: 160 - 175

13) A New-Type Zeroing Neural Network Model and Its Application in Dynamic Cryptography

Author(s): Jingcan Zhu, Jie Jin, Chaoyang Chen, Lianghong Wu, Ming Lu, Aijia Ouyang

Pages: 176 - 191

14) FeaMix: Feature Mix With Memory Batch Based on Self-Consistency Learning for Code Generation and Code Translation

Author(s): Shuai Zhao, Jie Tian, Jie Fu, Jie Chen, Jinming Wen

Pages: 192 - 201

15) MUSTER: A Multi-Scale Transformer-Based Decoder for Semantic Segmentation

Author(s): Jing Xu, Wentao Shi, Pan Gao, Qizhu Li, Zhengwei Wang

Pages: 202 - 212

16) Feature Selection Using Generalized Multi-Granulation Dominance Neighborhood Rough Set Based on Weight Partition

Author(s): Weihua Xu, Qinyuan Bu

Pages: 213 - 227

17) A Collaborative Neurodynamic Algorithm for Quadratic Unconstrained Binary Optimization

Author(s): Hongzong Li, Jun Wang

Pages: 228 - 239

18) Global Cross-Attention Network for Single-Sensor Multispectral Imaging

Author(s): Nianzeng Yuan, Junhuai Li, Bangyong Sun

Pages: 240 - 252

19) OccludedInst: An Efficient Instance Segmentation Network for Automatic Driving Occlusion Scenes

Author(s): Hai Wang, Shilin Zhu, Long Chen, Yicheng Li, Yingfeng Cai

Pages: 253 - 270

20) 3D Skeleton-Based Non-Autoregressive Human Motion Prediction Using Encoder-Decoder Attention-Based Model

Author(s): Mayank Lovanshi, Vivek Tiwari, Rajesh Ingle, Swati Jain

Pages: 271 - 280

21) GF-LRP: A Method for Explaining Predictions Made by Variational Graph Auto-Encoders

Author(s): Esther Rodrigo-Bonet, Nikos Deligiannis

Pages: 281 - 291

22) Neuromorphic Auditory Perception by Neural Spiketrum

Author(s): Huajin Tang, Pengjie Gu, Jayawan Wijekoon, MHD Anas Alsakkal, Ziming Wang, Jiangrong Shen, Rui Yan, Gang Pan

Pages: 292 - 303

23) Semi-Supervised Contrastive Learning for Time Series Classification in Healthcare

Author(s): Xiaofeng Liu, Zhihong Liu, Jie Li, Xiang Zhang

Pages: 318 - 331

24) Bi-Level Model Management Strategy for Solving Expensive Multi-Objective Optimization Problems

Author(s): Fei Li, Yujie Yang, Yuhao Liu, Yuanchao Liu, Muyun Qian

Pages: 332 - 346

25) Transfer Optimization for Heterogeneous Drone Delivery and Pickup Problem

Author(s): Xupeng Wen, Guohua Wu, Jiao Liu, Yew-Soon Ong

Pages: 347 - 364

26) Comprehensive Multisource Learning Network for Cross-Subject Multimodal Emotion Recognition

Author(s): Chuangquan Chen, Zhencheng Li, Kit Ian Kou, Jie Du, Chen Li, Hongtao Wang, Chi-Man Vong

Pages: 365 - 380

27) Graph Learning With Riemannian Optimization for Multi-View Integrative Clustering

Author(s): Aparajita Khan, Pradipta Maji

Pages: 381 - 393

28) Applying a Higher Number of Output Membership Functions to Enhance the Precision of a Fuzzy System

Author(s): Salah-ud-din Khokhar, Akif Nadeem, Arslan A. Rizvi, Muhammad Yasir Noor

Pages: 394 - 405

29) Tensorlized Multi-Kernel Clustering via Consensus Tensor Decomposition

Author(s): Fei Qi, Junyu Li, Yue Zhang, Weitian Huang, Bin Hu, Hongmin Cai

Pages: 406 - 418

30) Balancing Security and Correctness in Code Generation: An Empirical Study on Commercial Large Language Models

Author(s): Gavin S. Black, Bhaskar P. Rimal, Varghese Mathew Vaidyan

Pages: 419 - 430

31) Camouflage Is All You Need: Evaluating and Enhancing Transformer Models Robustness Against Camouflage Adversarial Attacks

Author(s): Álvaro Huertas-García, Alejandro Martín, Javier Huertas-Tato, David Camacho

Pages: 431 - 443

32) Deep Learning Surrogate Models of JULES-INFERNO for Wildfire Prediction on a Global Scale

Author(s): Sibo Cheng, Hector Chassagnon, Matthew Kasoar, Yike Guo, Rossella Arcucci

Pages: 444 - 454

33) Dual Completion Learning for Incomplete Multi-View Clustering

Author(s): Qiangqiang Shen, Xuanqi Zhang, Shuqin Wang, Yuanman Li, Yongsheng Liang, Yongyong Chen

Pages: 455 - 467

34) Active Learning-Based Backtracking Attack Against Source Location Privacy of Cyber-Physical System

Author(s): Zhen Hong, Minjie Chen, Rui Wang, Mingyuan Yan, Dehua Zheng, Changting Lin, Jie Su, Meng Han

Pages: 468 - 479

35) Hierarchical Encoding Method for Retinal Segmentation Evolutionary Architecture Search

Author(s): Huangxu Sheng, Hai-Lin Liu, Yutao Lai, Shaoda Zeng, Lei Chen

Pages: 480 - 493

36) CycleFusion: Automatic Annotation and Graph-to-Graph Transaction Based Cycle-Consistent Adversarial Network for Infrared and Visible Image Fusion

Author(s): Yueying Luo, Wenbo Liu, Kangjian He, Dan Xu, Hongzhen Shi, Hao Zhang

Pages: 494 - 508

37) Explicit and Implicit Box Equivariance Learning for Weakly-Supervised Rotated Object Detection

Author(s): Linfei Wang, Yibing Zhan, Xu Lin, Baosheng Yu, Liang Ding, Jianqing Zhu, Dapeng Tao

Pages: 509 - 521

38) Deep Reinforcement Learning-Based Feature Extraction and Encoding for Finger-Vein Verification

Author(s): Yantao Li, Chao Fan, Huafeng Qin, Shaojiang Deng, Mounim A. El-Yacoubi, Gang Zhou

Pages: 522 - 536

39) Global Bipartite Exact Consensus of Unknown Nonlinear Multi-Agent Systems With Switching Topologies: Iterative Learning Approach

Author(s): Mengdan Liang, Junmin Li

Pages: 537 - 551

40) APR-Net Tracker: Attention Pyramidal Residual Network for Visual Object Tracking

Author(s): Bing Liu, Di Yuan, Xiaofang Li

Pages: 552 - 564

41) Symmetric Regularized Sequential Latent Variable Models With Adversarial Neural Networks

Author(s): Jin Huang, Ming Xiao

Pages: 565 - 575

42) StreamSoNGv2: Online Classification of Data Streams Using Growing Neural Gas

Author(s): Jeffrey J. Dale, James M. Keller, Aquila P. A. Galusha

Pages: 576 - 589

43) FCPFS: Fuzzy Granular Ball Clustering-Based Partial Multilabel Feature Selection With Fuzzy Mutual Information

Author(s): Lin Sun, Qifeng Zhang, Weiping Ding, Tianxiang Wang, Jiucheng Xu

Pages: 590 - 606

44) An Automatic Paper-Reviewer Recommendation Algorithm Based on Depth and Breadth

Author(s): Xiulin Zheng, Peipei Li, Xindong Wu

Pages: 607 - 616

45) Efficient and Robust Sparse Linear Discriminant Analysis for Data Classification

Author(s): Jingjing Liu, Manlong Feng, Xianchao Xiu, Wanquan Liu, Xiaoyang Zeng

Pages: 617 - 629

46) Detail Reinforcement Diffusion Model: Augmentation Fine-Grained Visual Categorization in Few-Shot Conditions

Author(s): Tianxu Wu, Shuo Ye, Shuhuang Chen, Qinmu Peng, Xinge You

Pages: 630 - 640

47) Efficient Low-Light Light Field Enhancement With Progressive Feature Interaction

Author(s): Xin Luo, Gaosheng Liu, Zhi Lu, Kun Li, Jingyu Yang

Pages: 641 - 653

48) Circuit Implementation of Memristive Fuzzy Logic for Blood Pressure Grading Quantification

Author(s): Ya Li, Shaojun Ji, Qinghui Hong

Pages: 654 - 667

49) Learning From Pairwise Confidence Comparisons and Unlabeled Data

Author(s): Junpeng Li, Shuying Huang, Changchun Hua, Yana Yang

Pages: 668 - 680

50) Subspace Sequentially Iterative Leaning for Semi-Supervised SVM

Author(s): Jiajun Wen, Xi Chen, Heng Kong, Junhong Zhang, Zhihui Lai, Linlin Shen

Pages: 681 - 694

51) Clickbait Detection via Prompt-Tuning With Titles Only

Author(s): Ye Wang, Yi Zhu, Yun Li, Jipeng Qiang, Yunhao Yuan, Xindong Wu

Pages: 695 - 705

52) TROPE: Triplet-Guided Feature Refinement for Person Re-Identification

Author(s): Divya Singh, Jimson Mathew, Mayank Agarwal, Mahesh Govind

Pages: 706 - 716

53) Deep Spiking Neural Networks Driven by Adaptive Interval Membrane Potential for Temporal Credit Assignment Problem

Author(s): Jiaqiang Jiang, Haohui Ding, Haixia Wang, Rui Yan

Pages: 717 - 728

54) Transfer Learning-Based Region Statistical Data Completion via Double Graphs

Author(s): Shengwen Li, Suzhen Huang, Xuyang Cheng, Renyao Chen, Yi Zhou, Shunping Zhou, Hong Yao, Junfang Gong

Pages: 729 - 739

55) COT: A Generative Approach for Hate Speech Counter-Narratives via Contrastive Optimal Transport

Author(s): Linhao Zhang, Li Jin, Guangluan Xu, Xiaoyu Li, Xian Sun

Pages: 740 - 756

56) Least Information Spectral GAN With Time-Series Data Augmentation for Industrial IoT

Author(s): Joonho Seon, Seongwoo Lee, Young Ghyu Sun, Soo Hyun Kim, Dong In Kim, Jin Young Kim

Pages: 757 - 769

57) Gp3Former: Gaussian Prior Tri-Cascaded Transformer for Video Instance Segmentation in Livestreaming Scenarios

Author(s): Wensheng Li, Jing Zhang, Li Zhuo

Pages: 770 - 784

58) Open-Space Emergency Guiding With Individual Density Prediction Based on Internet of Things Localization

Author(s): Lien-Wu Chen, Hao-Wei Huang, Yi-Ju Chen, Ming-Fong Tsai

Pages: 785 - 797

59) Consistency and Diversity Induced Tensorized Multi-View Subspace Clustering

Author(s): Chunming Xiao, Yonghui Huang, Haonan Huang, Qibin Zhao, Guoxu Zhou

Pages: 798 - 809

60) Convert Cross-Domain Classification Into Few-Shot Learning: A Unified Prompt-Tuning Framework for Unsupervised Domain Adaptation

Author(s): Yi Zhu, Hui Shen, Yun Li, Jipeng Qiang, Yunhao Yuan, Xindong Wu

Pages: 810 - 821

61) Differentiable Collaborative Patches for Neural Scene Representations

Author(s): Heng Zhang, Lifeng Zhu

Pages: 822 - 831

62) Adaptive Neural Network Optimal Backstepping Control of Strict Feedback Nonlinear Systems via Reinforcement Learning

Author(s): Mei Zhong, Jinde Cao, Heng Liu

Pages: 832 - 847

63) ARC: A Layer Replacement Compression Method Based on Fine-Grained Self-Attention Distillation for Compressing Pre-Trained Language Models

Author(s): Daohan Yu, Liqing Qiu

Pages: 848 - 860

64) Generative Adversarial Network Based Image-Scaling Attack and Defense Modeling

Author(s): Junjian Li, Honglong Chen, Zhe Li, Anqing Zhang, Xiaomeng Wang, Xingang Wang, Feng Xia

Pages: 861 - 873

65) Global and Cluster Structural Balance via a Priority Strategy Based Memetic Algorithm

Author(s): Yifei Sun, Zhuo Liu, Yaochu Jin, Xin Sun, Yifei Cao, Jie Yang

Pages: 874 - 888

66) Efficient Message Passing Algorithm and Architecture Co-Design for Graph Neural Networks

Author(s): Xiaofeng Zou, Cen Chen, Luochuan Zhang, Shengyang Li, Joey Tianyi Zhou, Wei Wei, Kenli Li

Pages: 889 - 903

67) Targeted Mining Precise-Positioning Episode Rules

Author(s): Jian Zhu, Xiaoye Chen, Wensheng Gan, Zefeng Chen, Philip S. Yu

Pages: 904 - 917

68) Two-Stage Deep Feature Selection Method Using Voting Differential Evolution Algorithm for Pneumonia Detection From Chest X-Ray Images

Author(s): Haibin Ouyang, Dongmei Liu, Steven Li, Weiping Ding, Zhi-Hui Zhan

Pages: 918 - 932

69) Learning EEG Motor Characteristics via Temporal-Spatial Representations

Author(s): Tian-Yu Xiang, Xiao-Hu Zhou, Xiao-Liang Xie, Shi-Qi Liu, Hong-Jun Yang, Zhen-Qiu Feng, Mei-Jiang Gui, Hao Li, De-Xing Huang, Xiu-Ling Liu, Zeng-Guang Hou

Pages: 933 - 945

70) DualC: Drug-Drug Interaction Prediction Based on Dual Latent Feature Extractions

Author(s): Lin Guo, Xiujuan Lei, Lian Liu, Ming Chen, Yi Pan

Pages: 946 - 960

71) ESAI: Efficient Split Artificial Intelligence via Early Exiting Using Neural Architecture Search

Author(s): Behnam Zeinali, Di Zhuang, J. Morris Chang

Pages: 961 - 971

72) Early Time Series Anomaly Prediction With Multi-Objective Optimization

Author(s): Ting-En Chao, Yu Huang, Hao Dai, Gary G. Yen, Vincent S. Tseng

Pages: 972 - 987

73) Enhancing Accuracy-Privacy Trade-Off in Differentially Private Split Learning

Author(s): Ngoc Duy Pham, Khoa T. Phan, Naveen Chilamkurti

Pages: 988 - 1000

74) Evolutionary Optimization for Proactive and Dynamic Computing Resource Allocation in Open Radio Access Network

Author(s): Gan Ruan, Leandro L. Minku, Zhao Xu, Xin Yao

Pages: 1001 - 1018

75) Evolutionary Sequential Transfer Learning for Multi-Objective Feature Selection in Classification

Author(s): Jiabin Lin, Qi Chen, Bing Xue, Mengjie Zhang

Pages: 1019 - 1033

76) Feature Autonomous Screening and Sequence Integration Network for Medical Image Classification

Author(s): Hongfeng You, Xiaobing Chen, Kun Yu, Guangbo Fu, Fei Mao, Xin Ning, Xiao Bai, Weiwei Cai

Pages: 1034 - 1048

77) FedLaw: Value-Aware Federated Learning With Individual Fairness and Coalition Stability

Author(s): Jianfeng Lu, Hangjian Zhang, Pan Zhou, Xiong Wang, Chen Wang, Dapeng Oliver Wu

Pages: 1049 - 1062

78) From Bag-of-Words to Transformers: A Comparative Study for Text Classification in Healthcare Discussions in Social Media

Author(s): Enrico De Santis, Alessio Martino, Francesca Ronci, Antonello Rizzi

Pages: 1063 - 1077

79) Fuzzy Composite Learning Control of Uncertain Fractional-Order Nonlinear Systems Using Disturbance Observer

Author(s): Zhiye Bai, Shenggang Li, Heng Liu

Pages: 1078 - 1090


Stable Diffusion Upscale Pipeline Using PyTorch & Diffusers

Stable Diffusion is a cutting-edge technique that uses latent diffusion models to produce high-quality images from text descriptions. The Hugging Face diffusers package provides easy-to-use pipelines for deploying and using Stable Diffusion models, including for creating, editing, and upscaling images.

How to upscale Stable Diffusion images

This article shows how to use the Stable Diffusion Upscale Pipeline from the diffusers library to upscale images produced by Stable Diffusion. It goes over the rationale behind upscaling. It also shows how to use the Intel Extension for PyTorch to optimize this process for better performance on Intel Xeon processors. The Intel Extension for PyTorch is a Python package where Intel releases its latest optimizations and features before upstreaming them into open-source PyTorch.

How can the Stable Diffusion Upscale Pipeline be made more efficient for inference?

The Stable Diffusion Upscale Pipeline from the Hugging Face diffusers library uses a Stable Diffusion model to increase the resolution of an input image, specifically by a factor of four. The pipeline combines several components:

- a frozen CLIP text model for text encoding;
- a Variational Auto-Encoder (VAE) for encoding and decoding images;
- a UNet for denoising image latents;
- multiple schedulers that control the diffusion process during image generation.

The pipeline is well suited to bringing out detail in synthetic or real-world photos. It is especially useful for applications that must produce high-quality outputs from lower-resolution inputs. Users can set a variety of parameters, such as the number of denoising steps and the guidance scale, to balance fidelity to the input text against image quality and speed. The pipeline also supports custom callbacks during inference, which allow the generation to be monitored or modified.
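The following is a sketch of a monitoring callback. It assumes the legacy diffusers callback signature, callback(step, timestep, latents), used together with the callback_steps argument. Newer diffusers pipelines use callback_on_step_end instead, so check which one your installed version of this pipeline accepts. The helper below is hypothetical. It records a progress line each time it is called and only reads the latents, so it monitors the generation without modifying it.

```python
def make_progress_logger(total_steps):
    """Return (callback, log); the callback appends one progress line per call."""
    log = []

    def callback(step, timestep, latents):
        # step is 0-based; latents is only inspected (its shape), never changed.
        shape = tuple(getattr(latents, "shape", ()))
        log.append(f"step {step + 1}/{total_steps} (t={int(timestep)}) latents={shape}")

    return callback, log
```

A typical call would then look like pipeline(prompt=prompt, image=low_res_img, num_inference_steps=75, callback=cb, callback_steps=5). Here prompt, low_res_img and cb are placeholders.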

To improve the pipeline's performance, optimize each of its components separately before combining them. The Intel Extension for PyTorch is central to this. It extends PyTorch with optimizations that give a further speedup on Intel hardware by using the Vector Neural Network Instructions (VNNI), Intel Advanced Vector Extensions 512 (Intel AVX-512), and Intel Advanced Matrix Extensions (Intel AMX) of Intel CPUs. Its Python API, ipex.optimize(), automatically optimizes a pipeline module so that it can take advantage of these hardware instructions for better performance.

Sample Code

Using the Intel Extension for PyTorch for performance enhancements, the code sample below shows how to upscale a picture using the Stable Diffusion Upscale Pipeline from the diffusers library. The pipeline’s U-Net, VAE, and text encoder components are all individually targeted and CPU inference-optimized.

Configuring the environment

We recommend carrying out the installations in a Conda virtual environment. Install PyTorch, the Intel Extension for PyTorch, diffusers, and their dependencies:

python -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu
python -m pip install intel-extension-for-pytorch
python -m pip install oneccl_bind_pt --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/cpu/us/
pip install transformers
pip install diffusers
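A quick way to confirm that the main packages are importable in the active environment is to look them up without importing them. This is a small sketch. The module list is an assumption: these are the import names of the pip packages above, and the Intel extension imports as intel_extension_for_pytorch.

```python
import importlib.util

# Import names for the packages installed above (assumed; pip and import names can differ).
REQUIRED_MODULES = ("torch", "intel_extension_for_pytorch", "transformers", "diffusers")


def check_modules(names=REQUIRED_MODULES):
    """Map each module name to True if it can be found on the import path."""
    return {name: importlib.util.find_spec(name) is not None for name in names}
```

Calling check_modules() after installation should return True for every entry.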

How to Optimize the Pipeline

First, let’s load the sample image that you want to upscale and import all required packages, including the Intel Extension for PyTorch.

Let’s now examine how the PyTorch Intel Extension capabilities can be used to optimize the upscaling pipeline.

To optimize the pipeline, target each component independently. First, configure the text encoder, VAE, and UNet to use the channels-last memory format. In this format, tensor data is laid out in memory in batch, height, width, channels order. This layout better matches the memory access patterns of many CPU kernels, which improves performance. Channels-last is especially helpful for convolutional neural networks because it minimizes the need to reorder data during operations, which can substantially increase processing speed.
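To make the layout concrete: PyTorch keeps a channels-last tensor's logical shape as (N, C, H, W) and changes only its strides. The pure-Python sketch below computes both sets of strides. It shows that in channels-last order, the C values of a single pixel sit next to each other in memory. To our understanding, these are the same strides tensor.stride() reports after .to(memory_format=torch.channels_last). The helper names are illustrative.

```python
def nchw_strides(shape):
    """Element strides of a contiguous (N, C, H, W) tensor."""
    n, c, h, w = shape
    return (c * h * w, h * w, w, 1)


def channels_last_strides(shape):
    """Strides of the same logical (N, C, H, W) tensor stored in NHWC order."""
    n, c, h, w = shape
    return (c * h * w, 1, w * c, c)


def offset(index, strides):
    """Flat memory offset of element index = (n, c, h, w)."""
    return sum(i * s for i, s in zip(index, strides))


shape = (2, 3, 4, 5)
# Offsets of the 3 channel values of pixel (h=1, w=2) in batch item 0:
print([offset((0, c, 1, 2), nchw_strides(shape)) for c in range(3)])           # [7, 27, 47]
print([offset((0, c, 1, 2), channels_last_strides(shape)) for c in range(3)])  # [21, 22, 23]
```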

Similarly, the Intel Extension for PyTorch optimizes each component with ipex.optimize(), with the data type set to BFloat16. BFloat16 operations are accelerated by Intel AMX, which is available on 4th generation Intel Xeon Scalable processors and later. Intel AMX is an integrated artificial intelligence accelerator. IPEX's optimize() function lets you use it for lower-precision data types such as BFloat16 and INT8.
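BFloat16 keeps float32's sign bit and 8-bit exponent, so it has the same numeric range. It has only 7 explicit mantissa bits, so it is less precise. The sketch below is a pure-Python FP32-to-BF16 conversion that makes this trade-off visible. It rounds to nearest even on the discarded bits, which is the usual convention. It handles finite inputs only (NaN handling is omitted), and the helper names are illustrative.

```python
import struct


def float_to_bf16_bits(x):
    """Round a finite float to bfloat16 (round-to-nearest-even); return the 16-bit pattern.
    x is first rounded to float32, as when an FP32 tensor is cast."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # IEEE float32 bit pattern
    lsb = (bits >> 16) & 1                                # lowest bit that survives
    bits += 0x7FFF + lsb                                  # round half to even
    return (bits >> 16) & 0xFFFF


def bf16_bits_to_float(b):
    """Widen a bfloat16 bit pattern back to a float (dropped mantissa bits become 0)."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


def to_bf16(x):
    return bf16_bits_to_float(float_to_bf16_bits(x))


print(to_bf16(3.14159))  # 3.140625: only about 2-3 significant decimal digits survive
print(to_bf16(1e30))     # still finite: same exponent range as FP32 (FP16 tops out at 65504)
```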

Finally, to get the best upscaling results, use mixed precision. It combines the numerical stability of higher precision (e.g., FP32) with the computational speed and memory savings of lower-precision arithmetic (e.g., BF16). Running the pipeline inside a torch.cpu.amp.autocast() context applies mixed precision automatically. Once the pipeline has been optimized with the Intel Extension for PyTorch, it can be used to upscale images with minimal latency.
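The sketch below walks through the steps just described: loading the pipeline, channels-last conversion, ipex.optimize() with BF16 for the text encoder, VAE and UNet, and autocast inference. It assumes diffusers, torch, intel_extension_for_pytorch and Pillow are installed. The model ID stabilityai/stable-diffusion-x4-upscaler is the public x4 upscaler checkpoint on the Hugging Face Hub. The function names and file paths are hypothetical. Heavy imports are placed inside the functions so the module can be imported without them.

```python
MODEL_ID = "stabilityai/stable-diffusion-x4-upscaler"  # public x4 upscaler checkpoint


def load_pipeline(model_id=MODEL_ID):
    """Load the Stable Diffusion Upscale Pipeline (FP32 weights, CPU)."""
    from diffusers import StableDiffusionUpscalePipeline

    return StableDiffusionUpscalePipeline.from_pretrained(model_id)


def optimize_pipeline(pipeline):
    """Optimize the text encoder, VAE and UNet one component at a time."""
    import torch
    import intel_extension_for_pytorch as ipex

    for name in ("text_encoder", "vae", "unet"):
        module = getattr(pipeline, name).eval()
        # Channels-last affects 4D parameters/buffers; other tensors are left unchanged.
        module = module.to(memory_format=torch.channels_last)
        # Let IPEX prepare the module for BF16 inference (AMX is used where available).
        module = ipex.optimize(module, dtype=torch.bfloat16, inplace=True)
        setattr(pipeline, name, module)
    return pipeline


def upscale(pipeline, image_path, prompt, output_path, **call_kwargs):
    """Upscale one image under BF16 autocast and save the result."""
    import torch
    from PIL import Image

    low_res = Image.open(image_path).convert("RGB")
    # Mixed precision: autocast runs eligible ops in BF16 and keeps the rest in FP32.
    with torch.cpu.amp.autocast(enabled=True, dtype=torch.bfloat16):
        result = pipeline(prompt=prompt, image=low_res, **call_kwargs)
    upscaled = result.images[0]
    upscaled.save(output_path)
    return upscaled
```

A hypothetical end-to-end call, with placeholder file names, is upscale(optimize_pipeline(load_pipeline()), "low_res_input.png", "a white cat", "upscaled.png"). Extra keyword arguments such as num_inference_steps are forwarded to the pipeline. Newer PyTorch releases prefer torch.amp.autocast("cpu", dtype=torch.bfloat16) over torch.cpu.amp.autocast.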

Configuring an Advanced Environment

This section explains how to configure environment variables and settings that are tuned for Intel Xeon processor performance, particularly for memory management and parallel processing, to gain further performance. The script 'env_activate.sh' configures several environment variables specific to the Intel OpenMP library. It also uses LD_PRELOAD to specify shared libraries that are loaded before all others. The script builds the paths to these libraries dynamically so that they are loaded at runtime before the application starts.
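After the script has been sourced, you can inspect LD_PRELOAD from Python to confirm that it took effect. This is a small sketch under a stated assumption. We assume the script preloads the Intel OpenMP runtime (libiomp5.so) and tcmalloc (libtcmalloc.so), because intel-openmp and gperftools are the two dependencies it needs. Check the script itself for the exact list. The dynamic loader accepts LD_PRELOAD entries separated by colons or spaces.

```python
import os

# Assumption: libraries env_activate.sh is expected to preload (see lead-in).
EXPECTED_LIBRARIES = ("libiomp5.so", "libtcmalloc.so")


def preloaded_libraries(env=None):
    """Return the file names listed in LD_PRELOAD (colon- or space-separated)."""
    env = os.environ if env is None else env
    entries = env.get("LD_PRELOAD", "").replace(":", " ").split()
    return [os.path.basename(entry) for entry in entries]


def missing_libraries(env=None, expected=EXPECTED_LIBRARIES):
    """Expected libraries not preloaded; prefix match allows versioned names like .so.4."""
    loaded = preloaded_libraries(env)
    return [lib for lib in expected if not any(name.startswith(lib) for name in loaded)]
```

An empty list from missing_libraries() in the shell where the script was sourced suggests the preload step worked.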

How to configure the advanced environment on Intel Xeon CPUs for optimal performance:

Install the two packages the script depends on:

pip install intel-openmp
conda install -y gperftools -c conda-forge

Clone the Intel Extension for PyTorch repository, check out the v2.3.100+cpu tag, and change to the example directory that contains the script:

git clone https://github.com/intel/intel-extension-for-pytorch.git
cd intel-extension-for-pytorch
git checkout v2.3.100+cpu
cd examples/cpu/inference/python/llm

Activate environment variables

source ./tools/env_activate.sh

Run a script with the code from the previous section

python run_upscaler_pipeline.py

Your environment is now configured to run the Stable Diffusion Upscale Pipeline with the performance settings from the previous steps. Running inference with the pipeline optimized by the Intel Extension for PyTorch provides additional performance gains.

Read more on Govindhtech.com

#StableDiffusionUpscale#IntelExtension#PyTorch#StableDiffusion#neuralnetworks#IntelXeonprocessor#news#technews#technology#technologynews#technologytrends#govindhtech


Biologically Interpretable VAE with Supervision for Transcriptomics Data Under Ordinal Perturbations

Latent variable models such as Variational Auto-Encoders (VAEs) have shown impressive performance for inferring expression patterns for cell subtyping and biomarker identification from transcriptomics data. However, the limited interpretability of their latent variables makes it difficult to derive meaningful biological understanding of cellular responses to different external and internal perturbations. Here we propose a novel deep learning framework, EXPORT (EXPlainable VAE for ORdinally perturbed Transcriptomics data), for analyzing ordinally perturbed transcriptomics data that can incorporate any biological pathway knowledge in the VAE latent space. With the corresponding pathway-informed decoder, the learned latent expression patterns can be explained as pathway-level responses to perturbations, offering direct interpretability with biological understanding. More importantly, we explicitly build the ordinal nature of many real-world perturbations into the EXPORT framework. We do this by training an auxiliary ordinal regressor neural network to capture corresponding expression changes in the VAE latent representations, for example under different dosage levels of radiation exposure. By incorporating ordinal constraints during the training of our proposed framework, we further enhance the model interpretability by guiding the VAE latent space to organize perturbation responses in a hierarchical manner. We demonstrate the utility of the inferred guided latent space for downstream tasks, such as identifying key regulatory pathways associated with specific perturbation changes, by analyzing transcriptomics datasets on both bulk and single-cell data. Overall, we envision that our proposed approach can unravel unprecedented biological intricacies in cellular responses to various perturbations while bringing an additional layer of interpretability to biology-inspired deep learning models. http://dlvr.it/T4qSzM
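EXPORT's auxiliary ordinal regressor is a neural network whose details are in the paper. The sketch below illustrates what an ordinal constraint on a latent space can look like, using a plain-Python cumulative-link formulation. This is one common choice for ordinal regression, not necessarily EXPORT's. A latent vector z is mapped to a scalar score. Each of K-1 ascending thresholds b_k then defines a binary task, "is the dose level above k?", with P(level > k) = sigmoid(score - b_k). The linear latent_score head and all names are hypothetical.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def latent_score(z, weights):
    """Hypothetical linear head mapping a latent vector to one ordinal score."""
    return sum(zi * wi for zi, wi in zip(z, weights))


def exceedance_probs(score, thresholds):
    """P(level > k) = sigmoid(score - b_k), computed as exp(-softplus(-(score - b_k)))."""
    return [math.exp(-softplus(-(score - b))) for b in thresholds]


def ordinal_loss(score, level, thresholds):
    """Sum of binary cross-entropies over the K-1 threshold tasks."""
    loss = 0.0
    for k, b in enumerate(thresholds):
        logit = score - b
        # -log sigmoid(logit) if the true level exceeds k, else -log(1 - sigmoid(logit))
        loss += softplus(-logit) if level > k else softplus(logit)
    return loss


def predict_level(score, thresholds):
    """Predicted level: number of thresholds the score exceeds (P(level > k) > 0.5)."""
    return sum(score > b for b in thresholds)
```

Because every level shares one score and ordered thresholds, minimizing this loss pushes samples with higher perturbation levels toward higher scores. This is a simple way to impose the kind of ordered structure the abstract describes.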


This paper introduces the Deep Recurrent Attentive Writer (DRAW) neural network architecture for image generation. DRAW networks combine a novel spatial attention mechanism that mimics the foveation of the human eye, with a sequential variational auto-encoding framework that allows for the iterative construction of complex images. The system substantially improves on the state of the art for generative models on MNIST, and, when trained on the Street View House Numbers dataset, it generates images that cannot be distinguished from real data with the naked eye.

[1502.04623] DRAW: A Recurrent Neural Network For Image Generation


Text

A Time Series Is Worth 64 Words: Long-Term Forecasting With Transformers

PEER: A Collaborative Language Model

Multi-Time Attention Networks for Irregularly Sampled Time Series

TimesNet: Temporal 2D-Variation Modeling for General Time Series Analysis

C2-CRS: Coarse-to-Fine Contrastive Learning for CRS

Re2G: Retrieve, Rerank, Generate

Asymmetric Student-Teacher Networks for Industrial Anomaly Detection

TARNet: Task-Aware Reconstruction for Time-Series Transformer

Deep Learning for Anomaly Detection: A Review

TFAD: A Decomposition Time Series Anomaly Detection Architecture with Time-Frequency Analysis

AER: Auto-Encoder with Regression for Time Series Anomaly Detection

Phraseformer: Multimodal Key-Phrase Extraction Using Transformer and Graph Embedding

Rethinking Calibration of Deep Neural Network: Do Not Be Afraid of Overconfidence

MetaFormer: A Unified Meta Framework for Fine-Grained Recognition

A Class-Rebalancing Self-Training Framework for Imbalanced Semi-Supervised Learning

Learning Dense Representations of Phrases at Scale

Multi-Label Image Recognition with GNN

Various Methods to Develop Verbalizers in Prompt-Based Learning (KPT, WARP)

ADTR: Anomaly Detection Transformer with Feature Reconstruction


Text

Generative AI Market Share, Trend & Growth Forecast to 2032

According to a recent research report, the Generative AI market is expected to surpass USD 119 billion by 2032.

According to the study, the primary driver of market growth is the development of sophisticated models and algorithms capable of generating high-quality, realistic content across various industries. From generating art and music to creating virtual characters and landscapes, Generative AI has revolutionized the creative process, offering new possibilities and opportunities for businesses. As consumers seek more tailored experiences, businesses are leveraging Generative AI technologies to create customized content that resonates with individual preferences. Whether it's generating personalized product recommendations, designing unique marketing campaigns, or crafting interactive user interfaces, Generative AI empowers businesses to deliver personalized experiences that drive customer engagement and satisfaction. The increasing demand for personalized content is propelling the growth of the Generative AI industry.

Request a sample copy of the report @ https://www.gminsights.com/request-sample/detail/6094

Considering the technology spectrum, the market is segmented into diffusion models, variational auto-encoders, transformer models, and GANs. The variational auto-encoder (VAE) technology segment of the Generative AI market is poised to gain traction during the forecast period. VAEs are deep learning models that generate high-quality synthetic data by learning the underlying structure of the input data. This technology finds applications in diverse domains, including image synthesis, natural language processing, and drug discovery. VAEs have gained significant attention due to their ability to generate novel and realistic outputs, making them a valuable tool for research, product development, and creative applications. The increasing popularity of the technology across various application sectors is adding to the segment's share.
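What "learning the underlying structure of the input data" means can be made concrete for a standard Gaussian VAE. That model choice is an assumption here; the report does not specify one. The encoder outputs a mean and a log-variance for each latent dimension. A latent is sampled with the reparameterization trick, and a KL term pulls the approximate posterior toward N(0, I). New synthetic data comes from decoding z drawn from N(0, I). The NumPy sketch below covers only those latent-space pieces. The encoder and decoder networks are omitted, and the function names are hypothetical.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I).

    In an autodiff framework, this form lets gradients flow to mu and log_var.
    """
    eps = rng.standard_normal(np.shape(mu))
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions, per sample."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1)

def negative_elbo(recon_error, mu, log_var):
    """Training loss = reconstruction error + KL term, averaged over the batch."""
    return np.mean(recon_error + kl_to_standard_normal(mu, log_var))

rng = np.random.default_rng(0)
mu = np.array([[0.0, 0.0], [1.0, 0.0]])
log_var = np.zeros_like(mu)
z = reparameterize(mu, log_var, rng)      # one latent sample per row
# Generation: draw z from the prior and pass it through a (here omitted) decoder.
z_new = rng.standard_normal((3, 2))
```

A posterior that already equals N(0, I) has zero KL cost. Shifting its mean by 1 in a single dimension costs 0.5 nats. This trade-off between reconstruction and staying close to the prior is what makes sampling from the prior yield plausible outputs.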

Based on components, the Generative AI market is divided into service and solution. The service segment is expected to witness significant growth through 2032 as service providers play a vital role in implementing and maintaining Generative AI solutions, ensuring their seamless integration into existing systems. With the growing adoption of the technology across industries, the demand for services such as consulting, system integration, and support is on the rise. Service providers are focusing on offering tailored solutions to address specific business requirements, enhancing the overall user experience and optimizing the benefits derived from Generative AI.

Request customization of this report @ https://www.gminsights.com/roc/6094

The APAC region is expected to emerge as a lucrative avenue for Generative AI market players during the forecast period. Rapid technological advancements, increasing digitalization, and the growing adoption of AI technologies across various industries are driving demand for these solutions in the region. Moreover, countries such as China, Japan, and South Korea are at the forefront of AI R&D. The increasing focus on exploring potential uses of AI in healthcare, manufacturing, finance, and marketing, coupled with a large talent pool and supportive government policies, is shaping the industry dynamics in the APAC region.

Partial table of contents (TOC) of the report:

Chapter 2 Executive Summary

2.1 Generative AI market 360º synopsis, 2018 - 2032

2.2 Business trends

2.2.1 TAM

2.3 Regional trends

2.4 Component trends

2.5 Deployment model trends

2.6 Technology trends

2.7 End-user trends

Chapter 3 Generative AI Market Insights

3.1 Impact of COVID-19

3.2 Russia-Ukraine war impact

3.3 Industry ecosystem analysis

3.4 Vendor matrix

3.5 Profit margin analysis

3.6 Technology & innovation landscape

3.7 Patent analysis

3.8 Key news and initiatives

3.9 Regulatory landscape

3.10 Impact forces

3.10.1 Growth drivers

3.10.1.1 The increasing use of AI-integrated systems across multiple industries

3.10.1.2 The rise of creative applications & content creation

3.10.1.3 Increasing use of generative AI in media & entertainment industry

3.10.1.4 Growing demand for personalized and customized solutions

3.10.1.5 Advancements in deep learning and neural networks

3.10.2 Industry pitfalls & challenges

3.10.2.1 Ethical and legal concerns

3.10.2.2 Data quality and bias

3.11 Growth potential analysis

3.12 Porter’s analysis

3.13 PESTEL analysis

About Global Market Insights:

Global Market Insights, Inc., headquartered in Delaware, U.S., is a global market research and consulting service provider, offering syndicated and custom research reports along with growth consulting services. Our business intelligence and industry research reports offer clients penetrating insights and actionable market data specially designed and presented to aid strategic decision making. These exhaustive reports are designed via a proprietary research methodology and are available for key industries such as chemicals, advanced materials, technology, renewable energy, and biotechnology.

Contact us:

Aashit Tiwari, Corporate Sales, USA

Global Market Insights Inc.

Toll Free: +1-888-689-0688

USA: +1-302-846-7766

Europe: +44-742-759-8484

APAC: +65-3129-7718


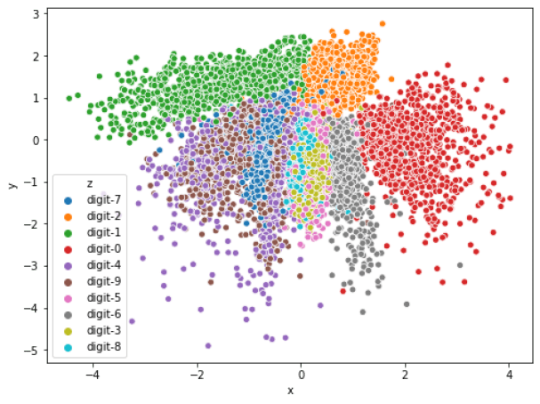

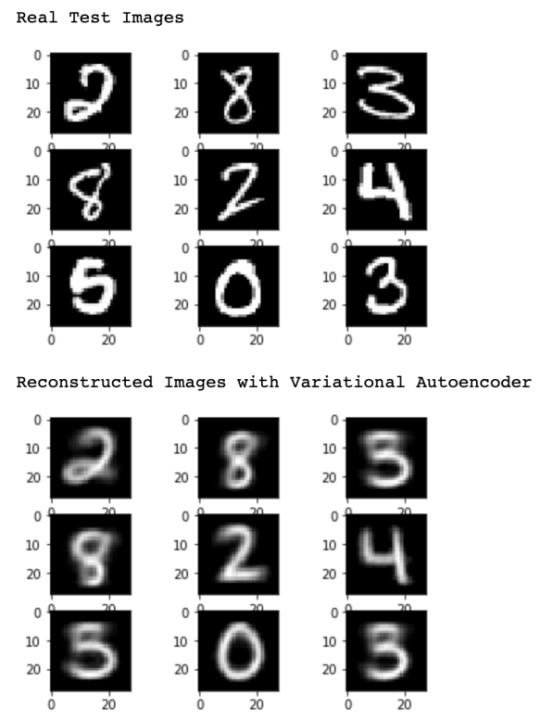

Photo

(via Variational AutoEncoders and Image Generation with Keras)

#machine learning#data science#artificial intelligence#keras#tensorflow#deep learning#auto encoder#variational autoencoders#dropsofai


Text

youtube

#ai #generativeai #llm #machinelearning #chatgpt #datascience Understand Large Language Models (LLMs) in this video. Join us as we delve into the inspiring technology behind these Generative AI methods. Discover how Artificial Intelligence and Machine Learning merge to revolutionize communication, creativity, and problem-solving. Witness LLMs' remarkable ability to generate intricate and coherent language, showcasing the true potential of Creative AI. Prepare to be amazed as we explore the limitless possibilities of LLMs in Healthcare, Education, and Proptech. Don't miss out on this educational journey to the forefront of technological advancement. What Is an LLM | What Is a Large Language Model | How LLMs Work | AI Expert Arshad Khan. #GenerativeAI #GANs (Generative Adversarial Networks) #VAEs (Variational Auto encoders) #ArtificialIntelligence #MachineLearning #DeepLearning #NeuralNetworks #AIApplications #CreativeAI #NaturalLanguageGeneration (NLG) #ImageSynthesis #TextGeneration #ComputerVision #DeepfakeTechnology #AIArt #GenerativeDesign #AutonomousSystems #ContentCreation #TransferLearning #ReinforcementLearning #CreativeCoding #AIInnovation #TDM #health #healthcare #bootcamp #llm


Text

Paper with Code Series: Adversarial Latent Autoencoders

Generative Adversarial Networks continue to be one of the main deep learning techniques in current computer vision and machine learning research. However, they have been shown to have some issues with the quality of the images a generator outputs from its input latent space. This may be explained in part by the way GANs encode and decode from a known probability distribution…


#Computer Vision#Deep Learning#GANs#Machine Learning#Papers with Code#PyTorch#Variational Auto-encoders


Text

How to Convert OGG to MP3?

OGG is an open, free container format for digital multimedia, but the term is commonly used to mean the high-quality, lossy, size-compressed audio file format known as Ogg Vorbis (Vorbis-encoded audio inside an OGG container). Once the conversion is complete, all your OGG audio files will be converted to MP3 format and saved in the local folder you designated. OGG to MP3 Converter is straightforward. Here's one using mplayer; I find it quicker than avconv. That said, Firefox should play MP3 files natively. We convert from over 40 source audio formats: M4A to OGG, MP3 to OGG, FLAC to OGG, FLV to OGG, WAV to OGG, WMA to OGG, AAC to OGG, AIFF to OGG, MOV to OGG, MKV to OGG, AVI to OGG, MP4 to OGG, and many more! Just try it out; your format will probably work, and if it doesn't, let us know on social media. Conversion: as mentioned above, the software is used to convert audio files in OGG format. The process is simple: the user just imports the file, selects the output format and destination folder, and the program does the rest. Once the files have been converted, you can download them individually or together in a ZIP file. There's also an option to save them to your Dropbox account.

Like MP3s, the quality of an AAC file is measured by its bit rate, expressed in kbps. Also like MP3, common bit rates for AAC files include 128 kbps, 192 kbps, and 256 kbps.

Convert more than just WAV to MP3. SUPER is an especially popular free audio converter. The best thing about SUPER is the long list of audio formats it supports. If you have an uncommon audio file you need to convert to something more common (or the other way around), you should try the SUPER audio converter. Hamster is a free audio converter that installs quickly, has a minimal interface, and isn't hard to use.

Choosing between OGG Vorbis and MP3 is difficult, so you should familiarize yourself with these two audio formats. What follows is a summary of their differences. I recommend this free OGG converter, which lets you convert virtually all video and audio files to a variety of mainstream formats. You may like it.

MP3 to MP3 conversion is also supported, to change the bitrate. Another option is adding tags for some formats (AAC, AIFF, FLAC, M4A, M4R, MMF, MP3, OGG, OPUS, WAV, WMA). Changing the title, track, album, and even artist is all supported by the online audio converter. To MP3 Converter converts most video and audio input file types, such as MP4, WMA, M4A, FLAC, AMR, CDA, OGG, AVI, WMV, AIFF, FLV, WAV, and others. In addition to encoding local media files, the application can download online video and audio content and convert it to MP3.

Allowing the streaming service to compress and convert your audio files doesn't necessarily mean the quality will degrade, but some compression algorithms boost peak signals enough that the audio can sound distorted. When you use a trusted program to convert your files to the correct format for each platform, you can listen to each one to get a good idea of how it will sound once it's published.

Another reason you might want to convert to a different format is that you have saved your original music library in a lossless format. Such audio files are often large and not well suited to storing on portable devices such as smartphones, so in this case you may want to convert to a lossy format like MP3 before syncing.

I'm not certain whether it is open source, but the Firefox add-on "Media Converter and Muxer - Audio 0.1.9", which uses FFmpeg as its base, is worth looking into. It can convert audio files, and it can also extract and convert the audio from video files. The clean, simple interface makes converting files fast and easy, and it also comes with a basic player for listening to tracks. However, the free version doesn't support lossless formats like FLAC unless you upgrade. Still, if all you want to do is convert to MP3, for example, it's a useful tool.

This update adds support for the LAME MP3 encoder and fixes some minor issues with the AAC encoders. If you don't want to set the audio bitrate manually, select Auto in the Bitrate list. The program will then set the audio bitrate to a value close to the bitrate of the source file, and the resulting MP3 file should be close in size to your Ogg file.

This audio converter mobile app is only available for Android devices, but it's free and has an impressive toolbox for converting and manipulating audio files. It supports all the popular audio formats, including MP3, WAV, and AAC, and has an extensive list of features for editing and merging audio files once they're converted. The audio editing software is simple to use and lets you zoom in on a portion of the audio file, trim the clip, and set it as a ringtone or notification tone. The app also has a function that merges several songs together to make a customized remix.
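The bitrate discussion above comes down to simple arithmetic. A constant-bitrate stream at R kbps stores R x 1000 / 8 bytes per second, so output size scales linearly with bitrate and duration. The sketch below estimates file sizes for the common bitrates and builds an FFmpeg command for an OGG to MP3 conversion. It assumes FFmpeg is installed with the libmp3lame encoder. The helper names are hypothetical, and real files will differ slightly because of container overhead and tags.

```python
import subprocess

def estimated_size_bytes(bitrate_kbps, duration_s):
    """Approximate audio payload size for a constant-bitrate stream."""
    return bitrate_kbps * 1000 / 8 * duration_s

def ogg_to_mp3_command(src, dst, bitrate_kbps=192):
    """Build an FFmpeg argument list that re-encodes src to MP3 with LAME."""
    return ["ffmpeg", "-i", str(src), "-vn",          # drop any video stream
            "-codec:a", "libmp3lame", "-b:a", f"{bitrate_kbps}k", str(dst)]

def convert(src, dst, bitrate_kbps=192):
    """Run the conversion; raises CalledProcessError if FFmpeg fails."""
    subprocess.run(ogg_to_mp3_command(src, dst, bitrate_kbps), check=True)

for kbps in (128, 192, 256):
    mb = estimated_size_bytes(kbps, 4 * 60) / 1e6     # a 4-minute track
    print(f"{kbps} kbps -> about {mb:.2f} MB")
```

For example, a 4-minute track at 192 kbps works out to 5,760,000 bytes, roughly 5.8 MB. Because OGG to MP3 goes from one lossy format to another, picking a bitrate near the source's (as the "Auto" setting does) avoids inflating the file without gaining any quality.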


Text

How To Convert APE To FLAC On Mac

How can you convert APE to FLAC online with an APE to FLAC converter? We often run into the following trouble: you want to convert APE to FLAC with no quality loss; you are looking for a suitable APE to FLAC converter to start converting; you need a way to convert APE to FLAC on both Mac and Windows; and you don't have much time to spend trying out and choosing among different APE to FLAC conversion tools. Click the "Browse" button to choose the destination folder for your converted FLAC files. On Mono, however, only WAV audio is supported in this scenario, as the other codecs have not yet been ported to C#.

Considering storage space and multiplatform support, it's a great idea to convert APE files to MP3. That's what the video is all about. To convert APE files you will also need to install mac from rpmfusion-nonfree in order to read the files. Now you have successfully converted your APE track to FLAC. The program is also suitable for batch conversion and does the job perfectly. If you want a wider range of functions, though (like a broader range of output quality settings), please check the Alternative Downloads section.

But you don't get something for nothing. The MP3 codec, and others that achieve comparable reductions in file size, are "lossy"; i.e., they necessarily discard some of the musical information. The degree of this degradation depends on the data rate. Fewer bits always means less music. CD players and other legacy audio players don't support ReplayGain metadata. However, some lossy audio formats are structured so that they encode the volume of each compressed frame in a stream, and tools such as MP3Gain take advantage of this to change the volume of all frames in a stream in a reversible way, without adding noise.

MP3, OGG, WMA, ASF, MPC, FLAC, AAC, and APE Multimedia Library Manager and Tag Editor with FreeDB support (Tag Editor + Music Organizer + Report Builder). Input formats: AAC, AC3, AIF, AIFF, ALAW, DTS, FLAC, M4A, M4B, M4R, MP2, MP3, WAV, WMA, and many others.

And before anyone flags this question as a duplicate of this one: "Convert all .ape files to .flac in several subfolders", I would like to point out that that user did not need to split the .ape into multiple .flac files.

You might also consider that, although the charts in your article look dramatically different and show obvious disturbances in the energy, the perceptual coders are just that: perceptual. You should expect to see differences when data is discarded. That is a given, and the charts will reflect it. The researchers who developed the algorithms worked very hard to reduce the perceptual trade-off. They did pretty well with MP3 and got significantly better with AAC.

I have some FLAC albums that I ripped as one large file to save some space (lossless CD rips are roughly 500 MB each); now that I have more storage, I want to split them back into their original files. Multiple predefined conversion profiles. Ability to save your own settings to an INI file.

The APE to FLAC Converter recommended here was tested and selected from among many APE to FLAC conversion tools on the market. This APE to FLAC conversion software is the most professional and ideal tool for solving all APE to FLAC problems. Added FLAC to WAV converter (PCM 8, 16, 24, 32 bits, DSP, GSM, IMA ADPCM, MS ADPCM, AC3, MP3, MP2, OGG, A-law, u-law) files. Added smooth fade in and out for the player. Support for MP3 files is improved.

One thing I noticed about the APE files is that the sound quality is significantly better than the MP3s. I did a comparison between the 320 kbps MP3 and the APE file of the same track and found the APE files to be clearer and less clinical-sounding than the MP3s.
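For the batch case (APE files spread across several subfolders), a script can mirror the folder tree and convert each file in turn. This is a minimal sketch, assuming FFmpeg is installed; FFmpeg includes a Monkey's Audio decoder and a native FLAC encoder. The function names are hypothetical. The script does not split one large .ape into tracks via a cue sheet, which requires a CUE-aware splitter.

```python
import subprocess
from pathlib import Path

def flac_command(ape_path, src_root, dst_root, compression_level=5):
    """Build an FFmpeg command that converts one .ape file to .flac,
    preserving its position relative to src_root under dst_root."""
    ape_path, src_root, dst_root = Path(ape_path), Path(src_root), Path(dst_root)
    out = dst_root / ape_path.relative_to(src_root).with_suffix(".flac")
    return ["ffmpeg", "-i", str(ape_path), "-c:a", "flac",
            "-compression_level", str(compression_level), str(out)]

def convert_tree(src_root, dst_root, compression_level=5):
    """Convert every .ape under src_root (recursively); return the output paths."""
    outputs = []
    for ape in sorted(Path(src_root).rglob("*.ape")):
        cmd = flac_command(ape, src_root, dst_root, compression_level)
        out = Path(cmd[-1])
        out.parent.mkdir(parents=True, exist_ok=True)   # FFmpeg won't create folders
        subprocess.run(cmd, check=True)
        outputs.append(out)
    return outputs
```

Both APE and FLAC are lossless, so the decoded audio is identical whichever FLAC compression level you pick. The level only trades encoding time against file size. Note that `rglob("*.ape")` is case-sensitive on most Linux filesystems, so files named `*.APE` would need an extra pattern.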

Play FLAC on iPhone: it's really nice to enjoy the flawless quality of FLAC on an iPhone. However, FLAC can't be opened on an iPhone directly. Before playing FLAC on an iPhone, you need to convert it to a compatible file format. One program you can use is Apowersoft Free Online Video Converter, which is very convenient. If you prefer an offline solution for converting FLAC, you may consider Video Converter Studio, which can convert files without losing quality. Use your favorite BitTorrent client, such as Vuze or uTorrent, to search for the music you want and then download versions that are in FLAC format (Free Lossless Audio Codec). FLAC is the most popular lossless audio compression format. It is open source, free, and well maintained by a community of enthusiasts. During playback, FLAC decompresses to the original recorded soundtrack (in digital form, of course), whereas MP3 makes psychoacoustic trade-offs to achieve higher compression.


Text

VideoPrism: A foundational visual encoder for video understanding

New Post has been published on https://thedigitalinsider.com/videoprism-a-foundational-visual-encoder-for-video-understanding/

Posted by Long Zhao, Senior Research Scientist, and Ting Liu, Senior Staff Software Engineer, Google Research

An astounding number of videos are available on the Web, covering a variety of content from everyday moments people share to historical moments to scientific observations, each of which contains a unique record of the world. The right tools could help researchers analyze these videos, transforming how we understand the world around us.

Videos offer dynamic visual content far richer than static images, capturing movement, changes, and dynamic relationships between entities. Analyzing this complexity, along with the immense diversity of publicly available video data, demands models that go beyond traditional image understanding. Consequently, many of the best-performing approaches to video understanding still rely on specialized models tailor-made for particular tasks. Recently, there has been exciting progress in this area using video foundation models (ViFMs), such as VideoCLIP, InternVideo, VideoCoCa, and UMT. However, building a ViFM that handles the sheer diversity of video data remains a challenge.

With the goal of building a single model for general-purpose video understanding, we introduce “VideoPrism: A Foundational Visual Encoder for Video Understanding”. VideoPrism is a ViFM designed to handle a wide spectrum of video understanding tasks, including classification, localization, retrieval, captioning, and question answering (QA). We propose innovations in both the pre-training data as well as the modeling strategy. We pre-train VideoPrism on a massive and diverse dataset: 36 million high-quality video-text pairs and 582 million video clips with noisy or machine-generated parallel text. Our pre-training approach is designed for this hybrid data, to learn both from video-text pairs and the videos themselves. VideoPrism is incredibly easy to adapt to new video understanding challenges, and achieves state-of-the-art performance using a single frozen model.

VideoPrism is a general-purpose video encoder that enables state-of-the-art results over a wide spectrum of video understanding tasks, including classification, localization, retrieval, captioning, and question answering, by producing video representations from a single frozen model.

Pre-training data

A powerful ViFM needs a very large collection of videos on which to train — similar to other foundation models (FMs), such as those for large language models (LLMs). Ideally, we would want the pre-training data to be a representative sample of all the videos in the world. While naturally most of these videos do not have perfect captions or descriptions, even imperfect text can provide useful information about the semantic content of the video.

To give our model the best possible starting point, we put together a massive pre-training corpus consisting of several public and private datasets, including YT-Temporal-180M, InternVid, VideoCC, WTS-70M, etc. This includes 36 million carefully selected videos with high-quality captions, along with an additional 582 million clips with varying levels of noisy text (like auto-generated transcripts). To our knowledge, this is the largest and most diverse video training corpus of its kind.

Statistics on the video-text pre-training data. The large variations of the CLIP similarity scores (the higher, the better) demonstrate the diverse caption quality of our pre-training data, which is a byproduct of the various ways used to harvest the text.

Two-stage training

The VideoPrism model architecture stems from the standard vision transformer (ViT) with a factorized design that sequentially encodes spatial and temporal information following ViViT. Our training approach leverages both the high-quality video-text data and the video data with noisy text mentioned above. To start, we use contrastive learning (an approach that minimizes the distance between positive video-text pairs while maximizing the distance between negative video-text pairs) to teach our model to match videos with their own text descriptions, including imperfect ones. This builds a foundation for matching semantic language content to visual content.
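The contrastive step described above is commonly implemented as a symmetric InfoNCE (CLIP-style) loss over a batch. The i-th video and the i-th text form the positive pair, and every other pairing in the batch serves as a negative. The post does not give VideoPrism's exact loss, so this NumPy sketch assumes that standard form and an arbitrary temperature of 0.07. The encoders are omitted and the embeddings are taken as given.

```python
import numpy as np

def _log_softmax(x, axis):
    """Numerically stable log-softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def video_text_contrastive_loss(video_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE loss; row i of each input is a positive pair."""
    v = video_emb / np.linalg.norm(video_emb, axis=1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = v @ t.T / temperature        # (B, B) cosine similarities, scaled
    idx = np.arange(len(v))               # positives sit on the diagonal
    loss_v2t = -_log_softmax(logits, axis=1)[idx, idx].mean()  # video -> text
    loss_t2v = -_log_softmax(logits, axis=0)[idx, idx].mean()  # text -> video
    return 0.5 * (loss_v2t + loss_t2v)

emb = np.eye(4)                                                        # 4 toy pairs
aligned = video_text_contrastive_loss(emb, emb)                        # matching texts
shuffled = video_text_contrastive_loss(emb, np.roll(emb, 1, axis=0))   # mismatched
```

When each video's embedding matches its own caption, the loss is close to zero. Shifting the captions by one row makes every positive pair dissimilar, and the loss jumps to roughly 1 / temperature. Minimizing this loss is what pulls positive pairs together and pushes negatives apart.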

After video-text contrastive training, we leverage the collection of videos without text descriptions. Here, we build on the masked video modeling framework to predict masked patches in a video, with a few improvements. We train the model to predict both the video-level global embedding and token-wise embeddings from the first-stage model to effectively leverage the knowledge acquired in that stage. We then randomly shuffle the predicted tokens to prevent the model from learning shortcuts.
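The second stage can be sketched as masked-token distillation. A random subset of video tokens is masked, and the student predicts the first-stage (teacher) token embeddings at the masked positions while also matching the video-level global embedding. This is a simplified assumption, not VideoPrism's published recipe. The cosine-distance loss, the 75% mask ratio, the stand-in global embedding and the function names are all illustrative, and the token-shuffling step is not modelled.

```python
import numpy as np

def random_token_mask(num_tokens, mask_ratio, rng):
    """Boolean mask with round(num_tokens * mask_ratio) randomly chosen True entries."""
    num_masked = int(round(num_tokens * mask_ratio))
    mask = np.zeros(num_tokens, dtype=bool)
    mask[rng.permutation(num_tokens)[:num_masked]] = True
    return mask

def _cosine_distance(a, b):
    """1 - cosine similarity along the last axis."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return 1.0 - np.sum(a * b, axis=-1)

def masked_distillation_loss(pred_tokens, teacher_tokens, pred_global, teacher_global, mask):
    """Token loss on masked positions only, plus a video-level global-embedding loss."""
    token_loss = _cosine_distance(pred_tokens[mask], teacher_tokens[mask]).mean()
    global_loss = _cosine_distance(pred_global, teacher_global)
    return float(token_loss + global_loss)

rng = np.random.default_rng(0)
teacher_tokens = rng.standard_normal((16, 8))    # 16 tokens, 8-dim, from stage 1
teacher_global = teacher_tokens.mean(axis=0)     # stand-in global embedding
mask = random_token_mask(16, 0.75, rng)
perfect = masked_distillation_loss(teacher_tokens, teacher_tokens,
                                   teacher_global, teacher_global, mask)
noisy = masked_distillation_loss(rng.standard_normal((16, 8)), teacher_tokens,
                                 -teacher_global, teacher_global, mask)
```

The loss is computed only where tokens were hidden. This forces the student to infer masked content from the visible context rather than copy it, while the teacher targets carry over what stage one learned from text.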

What is unique about VideoPrism’s setup is that we use two complementary pre-training signals: text descriptions and the visual content within a video. Text descriptions often focus on what things look like, while the video content provides information about movement and visual dynamics. This enables VideoPrism to excel in tasks that demand an understanding of both appearance and motion.

Results

We conduct extensive evaluation of VideoPrism across four broad categories of video understanding tasks: video classification and localization, video-text retrieval, video captioning and question answering, and scientific video understanding. VideoPrism achieves state-of-the-art performance on 30 out of 33 video understanding benchmarks, all with minimal adaptation of a single, frozen model.

VideoPrism compared to the previous best-performing FMs.

Classification and localization

We evaluate VideoPrism on an existing large-scale video understanding benchmark (VideoGLUE) covering classification and localization tasks. We find that (1) VideoPrism outperforms all of the other state-of-the-art FMs, and (2) no other single model consistently came in second place. This tells us that VideoPrism has learned to effectively pack a variety of video signals into one encoder — from semantics at different granularities to appearance and motion cues — and it works well across a variety of video sources.

Combining with LLMs

We further explore combining VideoPrism with LLMs to unlock its ability to handle various video-language tasks. In particular, when paired with a text encoder (following LiT) or a language decoder (such as PaLM-2), VideoPrism can be utilized for video-text retrieval, video captioning, and video QA tasks. We compare the combined models on a broad and challenging set of vision-language benchmarks. VideoPrism sets the new state of the art on most benchmarks. From the visual results, we find that VideoPrism is capable of understanding complex motions and appearances in videos (e.g., the model can recognize the different colors of spinning objects on the window in the visual examples below). These results demonstrate that VideoPrism is strongly compatible with language models.

We show qualitative results using VideoPrism with a text encoder for video-text retrieval (first row) and adapted to a language decoder for video QA (second and third row). For video-text retrieval examples, the blue bars indicate the embedding similarities between the videos and the text queries.
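Once videos and text queries share an embedding space, text-to-video retrieval reduces to ranking videos by their similarity to the query, which is what the similarity bars in the figure convey. The post does not specify the similarity function, so this minimal sketch assumes precomputed embeddings and cosine similarity. The toy vectors are made up.

```python
import numpy as np

def retrieve(query_emb, video_embs, k=5):
    """Return indices and cosine similarities of the top-k videos for one text query."""
    q = query_emb / np.linalg.norm(query_emb)
    v = video_embs / np.linalg.norm(video_embs, axis=1, keepdims=True)
    scores = v @ q                      # one similarity per video
    top = np.argsort(-scores)[:k]       # highest similarity first
    return top, scores[top]

videos = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]])
idx, sims = retrieve(np.array([0.1, 1.0, 0.0]), videos, k=2)
```

Here the query is closest to video 1, and video 2 comes second because it lies partly along the same direction. With a frozen encoder, video embeddings can be computed once and reused for every new query.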

Scientific applications

Finally, we test VideoPrism on datasets used by scientists across domains, including fields such as ethology, behavioral neuroscience, and ecology. These datasets typically require domain expertise to annotate, for which we leverage existing scientific datasets open-sourced by the community including Fly vs. Fly, CalMS21, ChimpACT, and KABR. VideoPrism not only performs exceptionally well, but actually surpasses models designed specifically for those tasks. This suggests tools like VideoPrism have the potential to transform how scientists analyze video data across different fields.

VideoPrism outperforms the domain experts on various scientific benchmarks. We show the absolute score differences to highlight the relative improvements of VideoPrism. We report mean average precision (mAP) for all datasets, except for KABR which uses class-averaged top-1 accuracy.
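Class-averaged top-1 accuracy (the metric reported for KABR) weights every class equally. Plain accuracy, by contrast, is dominated by frequent classes, which matters for imbalanced behaviour datasets. This short sketch uses the common definition, the mean over classes of per-class recall. The label arrays are made-up toy data.

```python
import numpy as np

def class_averaged_top1(y_true, y_pred):
    """Mean, over classes present in y_true, of the fraction of that class predicted correctly."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    per_class = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(per_class))

y_true = [0, 0, 0, 1]
y_pred = [0, 0, 0, 0]          # a model that always predicts the majority class
plain = float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
balanced = class_averaged_top1(y_true, y_pred)
```

In this example plain accuracy is 0.75, but the class-averaged score is 0.5 because the minority class is never recognised.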

Conclusion

With VideoPrism, we introduce a powerful and versatile video encoder that sets a new standard for general-purpose video understanding. Our emphasis on both building a massive and varied pre-training dataset and innovative modeling techniques has been validated through our extensive evaluations. Not only does VideoPrism consistently outperform strong baselines, but its unique ability to generalize positions it well for tackling an array of real-world applications. Because of its potential broad use, we are committed to continuing further responsible research in this space, guided by our AI Principles. We hope VideoPrism paves the way for future breakthroughs at the intersection of AI and video analysis, helping to realize the potential of ViFMs across domains such as scientific discovery, education, and healthcare.

Acknowledgements

This blog post is made on behalf of all the VideoPrism authors: Long Zhao, Nitesh B. Gundavarapu, Liangzhe Yuan, Hao Zhou, Shen Yan, Jennifer J. Sun, Luke Friedman, Rui Qian, Tobias Weyand, Yue Zhao, Rachel Hornung, Florian Schroff, Ming-Hsuan Yang, David A. Ross, Huisheng Wang, Hartwig Adam, Mikhail Sirotenko, Ting Liu, and Boqing Gong. We sincerely thank David Hendon for their product management efforts, and Alex Siegman, Ramya Ganeshan, and Victor Gomes for their program and resource management efforts. We also thank Hassan Akbari, Sherry Ben, Yoni Ben-Meshulam, Chun-Te Chu, Sam Clearwater, Yin Cui, Ilya Figotin, Anja Hauth, Sergey Ioffe, Xuhui Jia, Yeqing Li, Lu Jiang, Zu Kim, Dan Kondratyuk, Bill Mark, Arsha Nagrani, Caroline Pantofaru, Sushant Prakash, Cordelia Schmid, Bryan Seybold, Mojtaba Seyedhosseini, Amanda Sadler, Rif A. Saurous, Rachel Stigler, Paul Voigtlaender, Pingmei Xu, Chaochao Yan, Xuan Yang, and Yukun Zhu for the discussions, support, and feedback that greatly contributed to this work. We are grateful to Jay Yagnik, Rahul Sukthankar, and Tomas Izo for their enthusiastic support for this project. Lastly, we thank Tom Small, Jennifer J. Sun, Hao Zhou, Nitesh B. Gundavarapu, Luke Friedman, and Mikhail Sirotenko for the tremendous help with making this blog post.

#ai#Analysis#applications#approach#architecture#Art#benchmark#benchmarks#Blog#Blue#Building#challenge#colors#Community#complexity#content#data#datasets#Design#diversity#domains#dynamics#easy#Ecology#education#embeddings#emphasis#Engineer#excel#Foundation


Text

How To Rip CDs To FLAC Using Exact Audio Copy (Lossless)

CD ripper extract compact disk audio knowledge to quantity codecs, convert CDs to digital music library. Convert Recordsdata helps you change FLAC to MP3 file format simply. You just must upload a FLAC file, then choose MP3 as the output file format and click on convert. After the conversion is complete, download the transformed file in MP3 format and also you also have an option to send it to your e-mail for storage through a singular download link that nobody else can access. If your CDs are like mine then some are scratched or have plenty of finger-marks. These could cause pops and crackles in the ripped file. Rippers range greatly of their skill to deal with these problems. Some will simply get caught whereas others will skip ahead over the problem or even create a silent gap. The very best applications will attempt repeatedly to fix the problem with no audible effects. I'd suggest that you just run the identical checks that you just made with MP3s on cassette tape, and examine those outcomes to MP3 or AAC. I think the digital codecs even with all their faults, would win arms down. Wow, flutter, and frequency response - as well as noise and distortion, would be a lot worse on cassette tape. Perspective, perspective, perspective. You need a robust audio converter to convert WAV to FLAC, I would suggest Leawo WAV to FLAC Converter , also known as Leawo Video Converter. It is a powerful video and audio converter that can convert video and audio between all formats and free download of cda to flac converter software full version among the finest WAV to FLAC Converter. To burrrn a CD, just drop the audio information, playlists or cue sheets on the record, select the writing pace and press Burrrn. A window will pop up, the place you can see the burrrning progress. Program bardzo dobrze radzi sobie z konwersją pomiędzy najpopularniejszymi formatami wideo (AVI, MPEG, WMV, MOV, MP4, RM, RMVB, ASF, FLV) i audio (MP3, M4A, WMA, WAV, FLAC, OGG, AU, MP2 i AC3). 
For video files, Any Audio Converter can extract the audio and save it in an audio format. You can use this option, among other things, to convert videos saved in Flash format (downloaded from sites such as YouTube, Google and NFLV) to the popular MP3 audio format. Any Audio Converter also has a task-queuing feature.

You can also cut smaller sound clips from large MP3 or WAV files instantly. You need a CDA to MP3 converter to extract audio tracks from an audio CD. Simply download this professional CDA to MP3 Converter and give it a try. If .cue files are already associated with a program on your computer, open the .cue file with Medieval CUE Splitter. When you're ready to split the large .ape file into individual tracks, click the "Split" button in the bottom right corner of the window.

Playing on MP3 players: if you want to play the audio tracks (CDA) stored on your CD on your MP3 player, you have to convert them to MP3 format. For this you can use a CDA to MP3 converter.

FLAC stands for Free Lossless Audio Codec. As its name suggests, it is a patent-free way of encoding audio without quality loss. FLAC files are usually larger than MP3s, but keep in mind that MP3 is a lossy format, which means that some quality is lost every time you convert audio to MP3. That is not the case with FLAC, so you can encode music into FLAC and keep exactly the same quality as the source audio CD. That (and being patent-free) is why FLAC is becoming more and more popular, and why even hardware devices such as media players are adding support for the format.
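Cue splitters work from the INDEX 01 times in the .cue file. Those times are written as MM:SS:FF, where FF counts CD frames (sectors) at 75 per second, so each frame is 588 sample frames at 44.1 kHz. The Python sketch below is not Medieval CUE Splitter's code; it is an illustration, assuming a cue sheet with a single FILE entry, that turns each track's INDEX 01 into the sample offset where a split would happen. INDEX 00 pregaps are ignored.

```python
import re
from typing import Dict, Optional

CD_FRAMES_PER_SECOND = 75    # cue sheet "frames" are CD sectors, 75 per second
SAMPLES_PER_CD_FRAME = 588   # 44100 Hz / 75 = 588 sample frames per sector

TRACK_RE = re.compile(r"^\s*TRACK\s+(\d+)\s+AUDIO\s*$")
INDEX_RE = re.compile(r"^\s*INDEX\s+01\s+(\d+):(\d{2}):(\d{2})\s*$")

def track_start_samples(cue_text: str) -> Dict[int, int]:
    """Map track number -> first sample of that track in the big audio file."""
    starts: Dict[int, int] = {}
    current: Optional[int] = None
    for line in cue_text.splitlines():
        track = TRACK_RE.match(line)
        if track:
            current = int(track.group(1))
            continue
        index = INDEX_RE.match(line)  # only INDEX 01 (track start) is matched
        if index and current is not None:
            minutes, seconds, frames = map(int, index.groups())
            total_frames = (minutes * 60 + seconds) * CD_FRAMES_PER_SECOND + frames
            starts[current] = total_frames * SAMPLES_PER_CD_FRAME
    return starts
```

Each track then runs from its own start offset up to the next track's start (or the end of the file for the last track). Working in whole samples avoids rounding errors at the cut points, which is what makes the split gapless.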
A CD Audio Track or .cda file is a small (44-byte) file generated by Microsoft Windows for each track on an audio CD. It is a virtual file that Windows software can read, but it is not actually present on the CD audio media. The file contains indexing information that programs can use to play or rip the disc, and it is named in the format Track##.cda. The .cda files do not contain the actual PCM wave data; instead, they tell where on the disc the track begins and ends. If the file is "copied" from the CD-ROM to the computer, it becomes useless, since it is only a shortcut to part of the disc. However, some audio editing and CD creation programs will, from the user's perspective, load .cda files as if they were actual audio data files.
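To make the "shortcut" idea concrete, here is a Python sketch that reads the position fields out of a .cda file. The 44-byte layout used (a RIFF header with form type CDDA, then a track number, a serial number, the start sector and the length in sectors, followed by the same positions in minute/second/frame form) follows how the format is commonly described rather than an official specification, so treat it as an assumption. It shows that the file holds only where the track starts and how long it is, never the audio itself.

```python
import struct
from typing import NamedTuple

# Assumed 44-byte little-endian layout: "RIFF", size, "CDDA", "fmt ", chunk size,
# version, track number, serial, start sector, length in sectors, then 8 bytes of
# minute/second/frame copies that this sketch skips.
CDA_FORMAT = "<4sI4s4sIHHIII8x"
SECTORS_PER_SECOND = 75    # CD audio plays 75 sectors per second
BYTES_PER_SECTOR = 2352    # PCM bytes in one audio sector

class CdaTrack(NamedTuple):
    track_number: int
    start_sector: int
    length_sectors: int

    @property
    def duration_seconds(self) -> float:
        return self.length_sectors / SECTORS_PER_SECOND

    @property
    def pcm_bytes(self) -> int:
        # How much audio the shortcut points at; none of it is inside the .cda file.
        return self.length_sectors * BYTES_PER_SECTOR

def parse_cda(data: bytes) -> CdaTrack:
    if len(data) != struct.calcsize(CDA_FORMAT):
        raise ValueError("a .cda shortcut should be exactly 44 bytes")
    (riff, _riff_size, form, fmt_id, _fmt_size, _version,
     track, _serial, start, length) = struct.unpack(CDA_FORMAT, data)
    if riff != b"RIFF" or form != b"CDDA" or fmt_id != b"fmt ":
        raise ValueError("not a CDDA shortcut file")
    return CdaTrack(track, start, length)

# A synthetic shortcut for track 3, starting at sector 15000, 18000 sectors long.
sample = struct.pack(CDA_FORMAT, b"RIFF", 36, b"CDDA", b"fmt ", 24,
                     1, 3, 0x1234ABCD, 15000, 18000)
info = parse_cda(sample)
```

The 44 bytes describe roughly 42 MB of audio in this example, which is why copying Track##.cda to your hard drive gets you nothing playable: a ripper still has to go to those sectors on the disc and read them.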

How to convert audio and video files using the free program AIMP3 for Windows. As others have suggested, you could rip to WAV, then convert that WAV file to the various formats and compare the results. This technique eliminates the possibility of differences in how the disc is read. Supported output formats: AAC, AC3, FLAC, M4A, MP3, OGG, WAV, M4R.
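One way to run that comparison for the lossless formats is to decode each file back to WAV (with whatever decoder you prefer) and compare the raw samples with the original rip rather than the files themselves, since headers and tags will differ. Here is a minimal Python sketch using the standard `wave` module; the function names are illustrative, not part of any tool mentioned above.

```python
import hashlib
import wave
from typing import Tuple

def pcm_fingerprint(path: str) -> Tuple[Tuple[int, int, int], str]:
    """Format parameters plus a SHA-256 of the raw sample data (headers and tags ignored)."""
    with wave.open(path, "rb") as w:
        params = (w.getnchannels(), w.getsampwidth(), w.getframerate())
        frames = w.readframes(w.getnframes())
    return params, hashlib.sha256(frames).hexdigest()

def same_audio(path_a: str, path_b: str) -> bool:
    """True when both WAV files hold bit-identical audio."""
    return pcm_fingerprint(path_a) == pcm_fingerprint(path_b)
```

For example, `same_audio("rip.wav", "rip_from_flac.wav")` (hypothetical file names) should be True for a FLAC round trip. A file decoded from MP3 or AAC will normally not match, because those encoders discard information and often add padding at the start or end.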
