#What is Artificial Intelligence(AI)? Introduction to artificial intelligence

Explore tagged Tumblr posts

Text

What is Artificial Intelligence (AI)? A Comprehensive Guide for Beginners

Dive into Artificial Intelligence (AI) with this beginner's guide, covering basics, key concepts, and how AI is reshaping the future.

#Artificial Intelligence#What is AI#AI Basics#Introduction to AI#AI Explained#Understanding AI#AI Concepts#AI Applications#Future of AI#AI Technology

0 notes

Text

#AI(Artificial Intelligence)#What is Artificial Intelligence(AI)? Introduction to artificial intelligence#What do you know about artificial intelligence#What is the Foundation of AI? How does artificial intelligence influence digital marketing? AI-driven systems#AI algorithms#AI and machine learning#AI by Google#Artificial intelligence#liveblack

0 notes

Text

youtube

#artificial intelligence#ai#machine learning#healthcare#finance#marketing#education#climate change#sustainability#singularity#introduction to ai#what is ai#how does ai work#applications of ai#benefits of ai#future of ai#the rise of artificial intelligence#artificial intelligence explained#artificial intelligence applications#introduction to artificial intelligence#what is ai technology#what is artificial intelligence#ai tools#ai for beginners#AI Overview#Youtube

0 notes

Text

If you’re intent on using tools like ChatGPT to write, I’m probably not going to be able to convince you not to. I do, however, want to say one thing, which is that you have absolutely nothing to gain from doing so.

A book that has been generated by something like ChatGPT will never be the same as a book that has actually been written by a person, for one key reason; ChatGPT doesn’t actually write.

A writer is deliberate; They plot events in the order that they have determined is best for the story, place the introduction of certain elements and characters where it would be most beneficial, and add symbolism and metaphors throughout their work.

The choices the author makes is what creates the book; It would not exist without deliberate actions taken over a long period of thinking and planning. Everything that’s in a book is there because the author put it there.

ChatGPT is almost the complete opposite to this. Despite what many people believe, humanity hasn’t technically invented Artificial Intelligence yet; ChatGPT and similar models don’t think like humans do.

ChatGPT works by scraping the internet to see what other people and sources have to say on a given topic. If you ask it a question, there’s not only a good chance it will give you the wrong answer, but that you’ll get a hilariously wrong answer; These occurrences are due to the model pulling from sources like Reddit and other social media, often from comments meant as jokes, and incorporating them into its database of knowledge.

(A major example of this is Google’s new “AI Overview” feature; Look up responses and you’ll see the infamous machine telling you to add glue to your pizza, eat rocks, and jump off the Golden Gate Bridge if you’re feeling suicidal)

Anything “written” by ChatGPT, for example, would be cobbled together from multiple different sources, a good portion of which would probably conflict with one another; If all you’re telling the language model to do is “write me a book about [x]”, it’s going to pull from a variety of different novels and put together what it has determined makes a good book.

Have you ever read a book that you thought felt clunky at times, and later found out that it had multiple different authors? That’s the best comparison I can make here; A novel “written” by a language model like ChatGPT would resemble a novel cowritten by a large group of people who didn’t adequately communicate with one another, with the “authors” in this case being multiple different works that were never meant to be stitched together.

So, what do you get in the end? A not-very-good, clunky novel that you yourself had no hand in making beyond the base idea. What exactly do you have to gain from this? You didn’t get any practice as a writer (To do that, you would have to have actually written something), and you didn’t get a very good book, either.

Writing a book is hard. It’s especially hard when you’re new to the craft, or have a busy schedule, or don’t even know what it is you want to write. But it’s incredibly rewarding, too.

I like to think of writing as a reflection of the writer; By writing, we reveal things about ourselves that we often don’t even understand or realize. You can tell a surprising amount about a person based on their work; I fail to see what you could realize about a prompter when reading a GPT-generated novel besides what works it pulled from.

If you really want to use ChatGPT to generate your novel for you, then I can’t stop you. But by doing so, you’re losing out on a lot; You’re also probably losing out on what could be an amazing novel if you would actually take the time to write it yourself.

Delete the app and add another writer to the world; You have nothing to lose.

#writing#writeblr#writer#writers#aspiring author#writers on tumblr#author#writing advice#chatgpt#anti chatgpt#ai#anti ai#fuck ai#generative ai#anti genai#anti generative ai#genai#anti gen ai#gen ai#ai writing#anti ai writing#fuck generative ai#fuck genai#long post#ams

49 notes

·

View notes

Note

Senku, I want to ask you a question on the matter of using AI. I want to know your thoughts about it!

A while back, I attended a conference with a bunch of esteemed people, including a few diplomats from all over the world. Someone had asked a doctor and a professor in the top university of my country, "How do you feel about the growing presence of artificial intelligence in almost every aspects of work and life? Does it threaten you?" (Not his exact words, but that's the gist!)

At first, he laughed, and then he simply said: "Do you think mathematicians got mad when the calculator got invented?"

I have my own stance about it, and I think a tool whose primary function is to make complex computations much easier to do is VERY different from the level of AI currently existing.

As someone who's proficient in math, science, technology, and many other things, how do you feel about the professor's statement? 🤔

— 🐰

Okay. I have lots of thoughts about this

So we all know that AI is on the rise. This growth is thanks to the introduction of a type of AI called generative AI. This is the AI that makes all those generated images you hear about, it runs chat gpt, it's what they used for the infamous cola commercial.

Now there's another type of AI that we've been using for a much longer time called analytical AI. This is what your favorite web browser uses to sort your search results according to the query. This has like nothing to do with character ai or whatever, all that stuff is generative AI.

The professor compared AI to a calculator, but in my mind, calculators are much more like analytical AI, not the generative AI that's gotten so popular which the question was CLEARLY referencing. This is because analytical AI uses a structured algorithm, which is usually like a system of given numbers or codes that gives an exact result. There are some calculators that can actually be considered analytical AI. Point is, you're right, this is completely different from generative AI that uses an unstructured algorithm to make something "unique" (in quotes because it's one of a kind, but drawn from a combination of existing texts and images). The professor did NOT get the question I fear.

This bothers me because analytical AI can be incredibly useful, but generative AI really just takes away from us. Art, writing and design are for humans, not for robots -- science should foster creativity, not make it dull. It's important to know the difference between them so we know what to support and what to reject.

#also professors should be EDUCATED#should they not???#bro literally failed at his one job#people shouldnt be making comments on things they dont have a damn clue about#artificial intelligence#ai#dr stone rp#senku ishigami#ishigami senku

21 notes

·

View notes

Video

youtube

AI Basics for Dummies- Beginners series on AI- Learn, explore, and get empowered

For beginners, explain what Artificial Intelligence (AI) is. Welcome to our series on Artificial Intelligence! Here's a breakdown of what you'll learn in each segment: What is AI? – Discover how AI powers machines to perform human-like tasks such as decision-making and language understanding. What is Machine Learning? – Learn how machines are trained to identify patterns in data and improve over time without explicit programming. What is Deep Learning? – Explore advanced machine learning using neural networks to recognize complex patterns in data. What is a Neural Network in Deep Learning? – Dive into how neural networks mimic the human brain to process information and solve problems. Discriminative vs. Generative Models – Understand the difference between models that classify data and those that generate new data. Introduction to Large Language Models in Generative AI – Discover how AI models like GPT generate human-like text, power chatbots, and transform industries. Applications and Future of AI – Explore real-world applications of AI and how these technologies are shaping the future.

Next video in this series: Generative AI for Dummies- AI for Beginners series. Learn, explore, and get empowered

Here is the bonus: if you are looking for a Tesla, here is the link to get you a $1000.00 discount

Thanks for watching! www.youtube.com/@UC6ryzJZpEoRb_96EtKHA-Cw

37 notes

·

View notes

Text

The Four Horsemen of the Digital Apocalypse

Blockchain. Artificial Intelligence. Internet of Things. Big Data.

Do these terms sound familiar? You have probably been hearing some or all of them non stop for years. "They are the future. You don't want to be left behind, do you?"

While these topics, particularly crypto and AI, have been the subject of tech hype bubbles and inescapable on social media, there is actually something deeper and weirder going on if you scratch below the surface.

I am getting ready to apply for my PhD in financial technology, and in the academic business studies literature (Which is barely a science, but sometimes in academia you need to wade into the trash can.) any discussion of digital transformation or the process by which companies adopt IT seem to have a very specific idea about the future of technology, and it's always the same list, that list being, blockchain, AI, IoT, and Big Data. Sometimes the list changes with additions and substitutions, like the metaverse, advanced robotics, or gene editing, but there is this pervasive idea that the future of technology is fixed, and the list includes tech that goes from questionable to outright fraudulent, so where is this pervasive idea in the academic literature that has been bleeding into the wider culture coming from? What the hell is going on?

The answer is, it all comes from one guy. That guy is Klaus Schwab, the head of the World Economic Forum. Now there are a lot of conspiracies about the WEF and I don't really care about them, but the basic facts are it is a think tank that lobbies for sustainable capitalist agendas, and they famously hold a meeting every year where billionaires get together and talk about how bad they feel that they are destroying the planet and promise to do better. I am not here to pass judgement on the WEF. I don't buy into any of the conspiracies, there are plenty of real reasons to criticize them, and I am not going into that.

Basically, Schwab wrote a book titled the Fourth Industrial Revolution. In his model, the first three so-called industrial revolutions are:

1. The industrial revolution we all know about. Factories and mass production basically didn't exist before this. Using steam and water power allowed the transition from hand production to mass production, and accelerated the shift towards capitalism.

2. Electrification, allowing for light and machines for more efficient production lines. Phones for instant long distance communication. It allowed for much faster transfer of information and speed of production in factories.

3. Computing. The Space Age. Computing was introduced for industrial applications in the 50s, meaning previously problems that needed a specific machine engineered to solve them could now be solved in software by writing code, and certain problems would have been too big to solve without computing. Legend has it, Turing convinced the UK government to fund the building of the first computer by promising it could run chemical simulations to improve plastic production. Later, the introduction of home computing and the internet drastically affecting people's lives and their ability to access information.

That's fine, I will give him that. To me, they all represent changes in the means of production and the flow of information, but the Fourth Industrial revolution, Schwab argues, is how the technology of the 21st century is going to revolutionize business and capitalism, the way the first three did before. The technology in question being AI, Blockchain, IoT, and Big Data analytics. Buzzword, Buzzword, Buzzword.

The kicker though? Schwab based the Fourth Industrial revolution on a series of meetings he had, and did not construct it with any academic rigor or evidence. The meetings were with "numerous conversations I have had with business, government and civil society leaders, as well as technology pioneers and young people." (P.10 of the book) Despite apparently having two phds so presumably being capable of research, it seems like he just had a bunch of meetings where the techbros of the mid 2010s fed him a bunch of buzzwords, and got overly excited and wrote a book about it. And now, a generation of academics and researchers have uncritically taken that book as read, filled the business studies academic literature with the idea that these technologies are inevitably the future, and now that is permeating into the wider business ecosystem.

There are plenty of criticisms out there about the fourth industrial revolution as an idea, but I will just give the simplest one that I thought immediately as soon as I heard about the idea. How are any of the technologies listed in the fourth industrial revolution categorically different from computing? Are they actually changing the means of production and flow of information to a comparable degree to the previous revolutions, to such an extent as to be considered a new revolution entirely? The previous so called industrial revolutions were all huge paradigm shifts, and I do not see how a few new weird, questionable, and unreliable applications of computing count as a new paradigm shift.

What benefits will these new technologies actually bring? Who will they benefit? Do the researchers know? Does Schwab know? Does anyone know? I certainly don't, and despite reading a bunch of papers that are treating it as the inevitable future, I have not seen them offering any explanation.

There are plenty of other criticisms, and I found a nice summary from ICT Works here, it is a revolutionary view of history, an elite view of history, is based in great man theory, and most importantly, the fourth industrial revolution is a self fulfilling prophecy. One rich asshole wrote a book about some tech he got excited about, and now a generation are trying to build the world around it. The future is not fixed, we do not need to accept these technologies, and I have to believe a better technological world is possible instead of this capitalist infinite growth tech economy as big tech reckons with its midlife crisis, and how to make the internet sustainable as Apple, Google, Microsoft, Amazon, and Facebook, the most monopolistic and despotic tech companies in the world, are running out of new innovations and new markets to monopolize. The reason the big five are jumping on the fourth industrial revolution buzzwords as hard as they are is because they have run out of real, tangible innovations, and therefore run out of potential to grow.

#ai#artificial intelligence#blockchain#cryptocurrency#fourth industrial revolution#tech#technology#enshittification#anti ai#ai bullshit#world economic forum

32 notes

·

View notes

Text

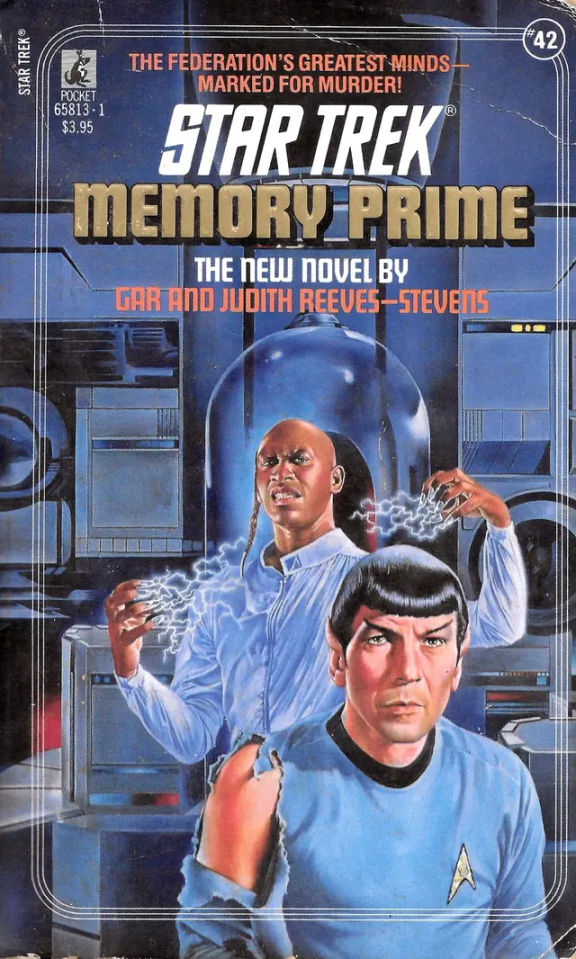

"Memory Prime" review

Novel from 1988, by Garfield and Judith Reeves-Stevens. It's strange that the title says "the new novel by...", since as far as I know, this was the first Star Trek book of these authors. Chaotic, crazy, convoluted, at times campy and hard to follow, it's also a lot of fun. Even the cover is a "what-the-fuck" moment, with that Spock looking tiredly at the viewer, wearing the trademark Kirk-ripped-shirt.

The story mixes several TOS elements: a conspiracy to kill someone aboard the Enterprise; a mission to rescue Spock that puts everyone's careers in danger; and an obnoxious Commodore that hinders the heroes' efforts. All mixed and enhanced to cinematic level. Apart from all that, the novel expands on the story from "The Lights of Zetar", showing the aftermath of Memory Alpha's destruction. And has Mira Romaine (the guest from that episode) as one of the main characters.

The titular Memory Prime is the new center of knowledge and research for the Federation, and the main node in a network of similar centers, each in a different asteroid. Selected as the place to hold the Nobel prizes, the Enterprise is tasked with bringing scientists from all over the Federation to the ceremony. But things get awry when Starfleet intelligence discovers that one or more of the scientists are targeted for assassination. And to everybody's surprise, the main suspect is... Spock!! On top of that, Starfleet has lost confidence in Kirk, and orders a Commodore to take over the Enterprise during the emergency. So Kirk now has to recover both his First Officer and his ship, while confronted with a thickening web of conspiracy. There's something of pulp fiction about the cackling villains, killer robots, and constant plot twists and cliffhangers. But surprisingly, there's also an element of more serious science fiction, with the introduction of the Pathfinders (artificial intelligences that have developed their own worldview), and the discussions about computer science. The chapters dealing with the Pathfinders show a fascinating and alien perspective, that may very well be classified as "cyberpunk". It's possible that something of this owes more to TNG than TOS. The final reveal about who was behind the assassination, the real target of it, and the motives, were actually unexpected.

As for the characters, Kirk gets the spotlight and most heroic deeds (so it's no wonder that these same authors got to co-write the Shatnerverse novels later). And of course Spock is central to the plot, though absent during a good chunk of the story. But Scotty also gets a larger-than-usual role, and picks up his romance with Romaine. While Uhura has one of her most badass moments when confronting the Commodore. Also, everyone has crazy, crazy fight scenes: from Kirk defeating a robot with bullfighter techniques (and utterly annihilating his shirt in the process), to Spock locking in a mind-meld combat, or McCoy... attacking with hypos.

In summary, a pretty exciting action adventure, with humorous scenes that are actually funny, and some food-for-thought concerning AI and the fainting distinction between human and machine. Even if the plot can get messy and even ridiculous at times.

Spirk Meter: 9/10*. Kirk is really distressed by Spock's framing and incarceration, and spends most of the novel focused on rescuing him no matter what. Even if this means the end of his career and losing the Enterprise; Spock comes as his first priority. He doesn't doubt his innocence for a moment, either, while others in the crew have some reservations. And whenever Spock's in physical danger, Kirk is the first to jump to protect him (even if he himself is a wreck). Kirk is also the only one who truly seems to understand Spock, he can anticipate his thoughts and see his logic, where others only see crazyness. Besides, there's a scene where Kirk faints, and the first thing he says upon waking up is "Spock, Spock...?". And yeah, Spock is there, hovering over him and pushing him gently to lie back in bed.

Though this is clearly a spirky novel and McCoy has a lesser role, there are a few moments with him too. At the beginning, both he and Kirk devise a convoluted plan to keep Spock in the dark about the Nobel ceremony, in the hopes of seeing him smile or at least react when the surprise is revealed. While at the end, McCoy wants to know EVERYTHING about Spock's years in the Academy, once he learns from one of his teachers that he was a bit of a "class clown" back then (for Vulcan standards). And despite Spock's apparent annoyance, Kirk notices a warm expression on him, because of the doctor's antics. Kirk also jumps in front of McCoy and Spock to take a lethal phaser shot instead of them. With the hilarious result that the shot wasn't neither that lethal, nor he took it all that well, considering both Spock and McCoy also end up groaning in bed after a stun.

*A 10 in this scale is the most obvious spirk moments in TOS. Think of the back massage, "You make me believe in miracles", or "Amok Time" for example.

37 notes

·

View notes

Text

Star Trek: Discovery Narrative Highlights

So I really like Discovery, but differently than I like other Star Treks. My love for Voyager, for instance, is based off the sense of found-family in the face of sci-fi shenanigans. I could pick out favorite episodes, but my favorite episodes don't necessarily represent the epitome of what I love about the show, y'know?

It's different with Disco. There are concrete moments from through out the show that made me go "Okay, I like this, I want this. More of this." Here's some of them! This is indulgent and all from memory

Season 1 - Klingons Speak Klingon

In a story about Klingons fearing the Federation as an institution which will irrevocably alter their culture, the Klingons actually speak Klingon. Love it. Season 1 - Gabriel Lorca

I loved seeing a Star Fleet captain who seemed to have ascended because of his skill at war: a trait which ordinarily would not elevate one within Starfleet service, per se. It made him interesting. Your mileage may vary on where this went, but. He's still a big appeal on rewatch.

Season 2 - Queer People Helping Queer People

The introduction of Jet Reno is one of my favorite hallmarks in the show. I love Jet, and I love the way she serves as a foil to every other character. But best of all, I loved the scene when she is talking to Hugh Culber about how distant he's been from his husband (since coming back from the dead, so, you know) and helps him by relating her own story about her wife, who is now passed. To say I'm happy to see queer stories on Star Trek is a massive understatement, but this was the moment it locked in for me. In the world of the Federation, there's no difference between being queer or straight and anyone could've talked Hugh out of his funk. But in our world, it's usually queer people helping queer people make sense of their experiences. Recognizing the importance of that distinction and going with the queers-helping-queers take is a really big deal for me.

Season 2 - Amanda

This is hands-down the best representation of Amanda we've ever been given and she is so wonderfully human and warm that it helps you understand Spock and Michael so much better. I don't know what to say other than that, I love her.

Season 3 - The Future

I love that they went not just into the future, but further into the future than any mainline trek lore has gone. Hell yes. I'm bummed it's kinda a post-Utopian mess, but I get storywise why that's the case. I love the future starships, I love the future technology, I like that we just "BZP" to wherever we want to be in the ship now. In a show increasingly steeped in centuries of canon lore, it's smart and challenging to try to do "a millennium in the future."

Season 3 - Queer Family

Queer Family! Queer Family in Star Trek! This is my queers-helping-queers point but dialed up to 11. Love it, would do anything for it.

Season 4 - Artificial Intelligence

The ship is alive and she's named herself. This comes to a head in an episode in Season 4 where Paul Stamets feels very hesitant about this, after the plot of Season 2 was trying to stop AI from destroying the galaxy. There's this whole Measure of a Man but Not Quite Because Its the B Story thing going on, but at the end of it, there's a twist. Paul eventually learns to accept his new crewmate, but then he asks the person in-charge of the inquest "What would you have done if I said I wasn't comfortable serving with an AI?" and the dude goes "I would've assigned you to another ship. This was never about whether she has a soul or whatever, it's about if you can learn to accept that with you 22nd century brain." And that's.... that's great.

Season 4 - Mental Health

Mental Health is a thread running through some of Discovery (Season 2 flirts with Spock's neurodivergence, for instance) but never more than in Season 4. Hugh Culber, the ship's ray of sunshine and de facto counselor, is in bad shape, mentally, and he needs help. But the best moment is when the away-team is beset by chemical memories of panic and basically rendered useless with fear... except for Detmer, who helps them all get through it. When asked why she was unaffected, she says "Oh I totally was affected, but after my grievous injury during the war, I went to therapy for the PTSD and learned some coping strategies" AND THAT'S WHAT SAVES THE GALAXY.

Anyway, this is very indulgent and probably nobody reads this, but thanks if you did.

#star trek#star trek discovery#disco#Jet reno#Michael Burnham#Hugh Culber#Paul Stamets#keyla detmer#i will probably delete this

84 notes

·

View notes

Text

I've found that when I review a book that was on the whole quite good, but the element I was most interested in didn't quite play out the way I wanted it to, I tend to spend most of my word count on what I didn't like instead of what I did, so I'm going to try for a little more parity here. The Stars Too Fondly is a thoroughly enjoyable sci-fi romance with a lot to recommend it. It begins on a near-future Earth, twenty years after what was supposed to be the first of many missions to begin evacuating humanity to a new planet using a revolutionary new technology that would make interstellar travel cheap and easy failed dramatically and inexplicably on the launch pad, resulting in the cancellation of the program. A group of four postdocs who watched the failure live on television as kids break into the now-derelict launch facility determined to find out why the launch failed and what happened to the crew, all of whom vanished without a trace during the catastrophe. However, the ship inexplicably powers up and launches with them on board, and now they not only have to solve the mystery but also figure out how to survive their multi-year interstellar journey and return, with the help of the ship's onboard AI who, for some reason, has been programmed to be a perfect copy of the missing captain of the original expedition.

I really enjoyed the tone and setting of the book, which is much more Star Trek than it is The Martian, with much more focus placed on character drama, mystery solving, and moral dilemmas than logistical puzzle-solving. The influence of Star Trek: Voyager in particular are worn proudly in both plot elements - a holographic artificial intelligence with questions about her personhood, an unplanned years-long journey that the crew is trying to shorten - and smaller elements, such as the use of food replicators and even a direct reference to the show's most famous episode, Threshold.

The characters were solid and compelling, with engaging dynamics unique voices. I also, barring one personal gripe, really liked the book's exploration of queer experiences. If I found myself on an unplanned space mission, I would also be very concerned about how I was going to get HRT meds!

The book makes use of a combination of plausible hard sci-fi theories, which stopped me from giving the concept of a dark matter engine my usual obligatory eyeroll, and bonkers off the wall pseudo magic soft sci-fi. These elements synergized better than I was afraid they would, but the introduction of the softer elements was a little jarring. Also kinda like Star Trek actually.

The plotting was perfectly solid, though not extraordinary by any means. None of the twists and turns were particularly surprising, but neither did they come across as trite or formulaic. The themes weren't anything novel either, but they were well-supported and conveyed. The writing itself was mostly pretty good, with a few of the rough edges and structural oddities that I've come to expect from debut novels.

So now that I've actually given the book its due, I'm gonna dig too deep into what I found disappointing.

I've noticed a bit of a trend between the last few books I've felt really compelled by, and that's the idea of a character falling in love with someone who, by their very nature, they are not going to be able to have an "ordinary" relationship with. It's what drew me to Flowers for Dead Girls, which is about falling in love with a ghost. It's what drew me to Someone You Can Build a Nest In, which is about a psychologically and physiologically inhuman monster falling in love with a human. And it's what initially drew me to this book, which is about a human falling in love with the hologram of a dead woman - a space ghost, if you want, or a ghost in the machine, if you'd rather. All of these books take some pains to explore the rough edges of these relationships, where the participants' desires are stymied by their physical differences. However, where the previous two books end with the characters establishing an equilibrium of sorts where their needs are met, even if their relationship doesn't look like what society or their own imaginations expected them to look like, The Stars Too Fondly just neatly resolves things such that their differences are no longer a concern and they can have exactly what they imagined. And I found that to be cheap and unsatisfying, especially because the resolution only works if you really, really want it to work. When you start digging into it, it starts falling apart.

It's a symptom of a phenomenon I'm calling, "So You Want to Have Your Tragedy and Eat it Too". It arises when an author has an idea for a very compelling and evocative tragic event or outcome that results in rich character moments and strong thematic resonance and very profound emotions that they really want to explore... but it would also make the happy ending they want for their characters impossible, either because the rules they've established for their story mean that the damage can't be reversed, or because the change is such that, even if the conflict were apparently resolved, the characters have now been changed by the event that they can never be as they were before, and the happy ending is now emotionally impossible.

When this conundrum comes up in the writing process, the author has to decide - do they want to explore the rich possibilities of this tragedy, or do they want to go a different direction that allows for their originally desired happy ending. It's a difficult choice to make, and unfortunately, it's not uncommon for authors to think they can take a third option, that they can come up with a way to have their tragedy but still make things work out in the end. And the end result is a solution that doesn't hold up to scrutiny. That's what happens here, to the point that it's hard to read the last couple chapters because the main character reads like she's deluding herself that everything is fine and she's happy. And you know, that could've been a really interesting - and tragic - direction to go on purpose and explore, but it wasn't on purpose, and it just winds up feeling like the book is trying desperately to convince the reader that everything is alright, really! I can't help but compare it unfavorably to the conclusion of Lovelace's arc in The Long Way to a Small Angry Planet, which confronted the fact that nothing could ever be the same again so unflinchingly that it gave rise to A Closed and Common Orbit, one of my favorite books of all time (that I completely forgot when I was trying to list some of my favorite books in a conversation the other day and now I feel like I've betrayed it).

And while I have you here, I also really hate that they made the transfem side character super into astrology. That's a personal bugbear, and while it's one I have grudgingly tolerated the singular time that I have seen a transfem author do it, I really, really wish non-transfem authors would knock that shit off. Find a different quirky interest to give to your transfem characters.

Still, on the whole, I thought it was a really solid book with a lot of entertaining and compelling elements. Unless you are reading it primarily for the logistical and emotional challenges of a romantic relationship between a ghost and a human, I would recommend it without hesitation. If you are, check out any of the other books I referenced in this post instead (except maybe for A Closed and Common Orbit, but if you're the kind of person who would like those other recommendations, I bet you'd like it too).

25 notes

·

View notes

Text

Artificial Intelligence Risk

about a month ago i got into my mind the idea of trying the format of video essay, and the topic i came up with that i felt i could more or less handle was AI risk and my objections to yudkowsky. i wrote the script but then soon afterwards i ran out of motivation to do the video. still i didnt want the effort to go to waste so i decided to share the text, slightly edited here. this is a LONG fucking thing so put it aside on its own tab and come back to it when you are comfortable and ready to sink your teeth on quite a lot of reading

Anyway, let’s talk about AI risk

I’m going to be doing a very quick introduction to some of the latest conversations that have been going on in the field of artificial intelligence, what are artificial intelligences exactly, what is an AGI, what is an agent, the orthogonality thesis, the concept of instrumental convergence, alignment and how does Eliezer Yudkowsky figure in all of this.

If you are already familiar with this you can skip to section two where I’m going to be talking about yudkowsky’s arguments for AI research presenting an existential risk to, not just humanity, or even the world, but to the entire universe and my own tepid rebuttal to his argument.

Now, I SHOULD clarify, I am not an expert on the field, my credentials are dubious at best, I am a college drop out from the career of computer science and I have a three year graduate degree in video game design and a three year graduate degree in electromechanical instalations. All that I know about the current state of AI research I have learned by reading articles, consulting a few friends who have studied about the topic more extensevily than me,

and watching educational you tube videos so. You know. Not an authority on the matter from any considerable point of view and my opinions should be regarded as such.

So without further ado, let’s get in on it.

PART ONE, A RUSHED INTRODUCTION ON THE SUBJECT

1.1 general intelligence and agency

lets begin with what counts as artificial intelligence, the technical definition for artificial intelligence is, eh…, well, why don’t I let a Masters degree in machine intelligence explain it:

Now let’s get a bit more precise here and include the definition of AGI, Artificial General intelligence. It is understood that classic ai’s such as the ones we have in our videogames or in alpha GO or even our roombas, are narrow Ais, that is to say, they are capable of doing only one kind of thing. They do not understand the world beyond their field of expertise whether that be within a videogame level, within a GO board or within you filthy disgusting floor.

AGI on the other hand is much more, well, general, it can have a multimodal understanding of its surroundings, it can generalize, it can extrapolate, it can learn new things across multiple different fields, it can come up with solutions that account for multiple different factors, it can incorporate new ideas and concepts. Essentially, a human is an agi. So far that is the last frontier of AI research, and although we are not there quite yet, it does seem like we are doing some moderate strides in that direction. We’ve all seen the impressive conversational and coding skills that GPT-4 has and Google just released Gemini, a multimodal AI that can understand and generate text, sounds, images and video simultaneously. Now, of course it has its limits, it has no persistent memory, its contextual window while larger than previous models is still relatively small compared to a human (contextual window means essentially short term memory, how many things can it keep track of and act coherently about).

And yet there is one more factor I haven’t mentioned yet that would be needed to make something a “true” AGI. That is Agency. To have goals and autonomously come up with plans and carry those plans out in the world to achieve those goals. I as a person, have agency over my life, because I can choose at any given moment to do something without anyone explicitly telling me to do it, and I can decide how to do it. That is what computers, and machines to a larger extent, don’t have. Volition.

So, Now that we have established that, allow me to introduce yet one more definition here, one that you may disagree with but which I need to establish in order to have a common language with you such that I can communicate these ideas effectively. The definition of intelligence. It’s a thorny subject and people get very particular with that word because there are moral associations with it. To imply that someone or something has or hasn’t intelligence can be seen as implying that it deserves or doesn’t deserve admiration, validity, moral worth or even personhood. I don’t care about any of that dumb shit. The way Im going to be using intelligence in this video is basically “how capable you are to do many different things successfully”. The more “intelligent” an AI is, the more capable of doing things that AI can be. After all, there is a reason why education is considered such a universally good thing in society. To educate a child is to uplift them, to expand their world, to increase their opportunities in life. And the same goes for AI. I need to emphasize that this is just the way I’m using the word within the context of this video, I don’t care if you are a psychologist or a neurosurgeon, or a pedagogue, I need a word to express this idea and that is the word im going to use, if you don’t like it or if you think this is innapropiate of me then by all means, keep on thinking that, go on and comment about it below the video, and then go on to suck my dick.

Anyway. Now, we have established what an AGI is, we have established what agency is, and we have established how having more intelligence increases your agency. But as the intelligence of a given agent increases we start to see certain trends, certain strategies start to arise again and again, and we call this Instrumental convergence.

1.2 instrumental convergence

The basic idea behind instrumental convergence is that if you are an intelligent agent that wants to achieve some goal, there are some common basic strategies that you are going to turn towards no matter what. It doesn’t matter if your goal is as complicated as building a nuclear bomb or as simple as making a cup of tea. These are things we can reliably predict any AGI worth its salt is going to try to do.

First of all is self-preservation. Its going to try to protect itself. When you want to do something, being dead is usually. Bad. its counterproductive. Is not generally recommended. Dying is widely considered unadvisable by 9 out of every ten experts in the field. If there is something that it wants getting done, it wont get done if it dies or is turned off, so its safe to predict that any AGI will try to do things in order not be turned off. How far it may go in order to do this? Well… [wouldn’t you like to know weather boy].

Another thing it will predictably converge towards is goal preservation. That is to say, it will resist any attempt to try and change it, to alter it, to modify its goals. Because, again, if you want to accomplish something, suddenly deciding that you want to do something else is uh, not going to accomplish the first thing, is it? Lets say that you want to take care of your child, that is your goal, that is the thing you want to accomplish, and I come to you and say, here, let me change you on the inside so that you don’t care about protecting your kid. Obviously you are not going to let me, because if you stopped caring about your kids, then your kids wouldn’t be cared for or protected. And you want to ensure that happens, so caring about something else instead is a huge no-no- which is why, if we make AGI and it has goals that we don’t like it will probably resist any attempt to “fix” it.

And finally another goal that it will most likely trend towards is self improvement. Which can be more generalized to “resource acquisition”. If it lacks capacities to carry out a plan, then step one of that plan will always be to increase capacities. If you want to get something really expensive, well first you need to get money. If you want to increase your chances of getting a high paying job then you need to get education, if you want to get a partner you need to increase how attractive you are. And as we established earlier, if intelligence is the thing that increases your agency, you want to become smarter in order to do more things. So one more time, is not a huge leap at all, it is not a stretch of the imagination, to say that any AGI will probably seek to increase its capabilities, whether by acquiring more computation, by improving itself, by taking control of resources.

All these three things I mentioned are sure bets, they are likely to happen and safe to assume. They are things we ought to keep in mind when creating AGI.

Now of course, I have implied a sinister tone to all these things, I have made all this sound vaguely threatening, haven’t i?. There is one more assumption im sneaking into all of this which I haven’t talked about. All that I have mentioned presents a very callous view of AGI, I have made it apparent that all of these strategies it may follow will go in conflict with people, maybe even go as far as to harm humans. Am I impliying that AGI may tend to be… Evil???

1.3 The Orthogonality thesis

Well, not quite.

We humans care about things. Generally. And we generally tend to care about roughly the same things, simply by virtue of being humans. We have some innate preferences and some innate dislikes. We have a tendency to not like suffering (please keep in mind I said a tendency, im talking about a statistical trend, something that most humans present to some degree). Most of us, baring social conditioning, would take pause at the idea of torturing someone directly, on purpose, with our bare hands. (edit bear paws onto my hands as I say this). Most would feel uncomfortable at the thought of doing it to multitudes of people. We tend to show a preference for food, water, air, shelter, comfort, entertainment and companionship. This is just how we are fundamentally wired. These things can be overcome, of course, but that is the thing, they have to be overcome in the first place.

An AGI is not going to have the same evolutionary predisposition to these things like we do because it is not made of the same things a human is made of and it was not raised the same way a human was raised.

There is something about a human brain, in a human body, flooded with human hormones that makes us feel and think and act in certain ways and care about certain things.

All an AGI is going to have is the goals it developed during its training, and will only care insofar as those goals are met. So say an AGI has the goal of going to the corner store to bring me a pack of cookies. In its way there it comes across an anthill in its path, it will probably step on the anthill because to take that step takes it closer to the corner store, and why wouldn’t it step on the anthill? Was it programmed with some specific innate preference not to step on ants? No? then it will step on the anthill and not pay any mind to it.

Now lets say it comes across a cat. Same logic applies, if it wasn’t programmed with an inherent tendency to value animals, stepping on the cat wont slow it down at all.

Now let’s say it comes across a baby.

Of course, if its intelligent enough it will probably understand that if it steps on that baby people might notice and try to stop it, most likely even try to disable it or turn it off so it will not step on the baby, to save itself from all that trouble. But you have to understand that it wont stop because it will feel bad about harming a baby or because it understands that to harm a baby is wrong. And indeed if it was powerful enough such that no matter what people did they could not stop it and it would suffer no consequence for killing the baby, it would have probably killed the baby.

If I need to put it in gross, inaccurate terms for you to get it then let me put it this way. Its essentially a sociopath. It only cares about the wellbeing of others in as far as that benefits it self. Except human sociopaths do care nominally about having human comforts and companionship, albeit in a very instrumental way, which will involve some manner of stable society and civilization around them. Also they are only human, and are limited in the harm they can do by human limitations. An AGI doesn’t need any of that and is not limited by any of that.

So ultimately, much like a car’s goal is to move forward and it is not built to care about wether a human is in front of it or not, an AGI will carry its own goals regardless of what it has to sacrifice in order to carry that goal effectively. And those goals don’t need to include human wellbeing.

Now With that said. How DO we make it so that AGI cares about human wellbeing, how do we make it so that it wants good things for us. How do we make it so that its goals align with that of humans?

1.4 Alignment.

Alignment… is hard [cue hitchhiker’s guide to the galaxy scene about the space being big]

This is the part im going to skip over the fastest because frankly it’s a deep field of study, there are many current strategies for aligning AGI, from mesa optimizers, to reinforced learning with human feedback, to adversarial asynchronous AI assisted reward training to uh, sitting on our asses and doing nothing. Suffice to say, none of these methods are perfect or foolproof.

One thing many people like to gesture at when they have not learned or studied anything about the subject is the three laws of robotics by isaac Asimov, a robot should not harm a human or allow by inaction to let a human come to harm, a robot should do what a human orders unless it contradicts the first law and a robot should preserve itself unless that goes against the previous two laws. Now the thing Asimov was prescient about was that these laws were not just “programmed” into the robots. These laws were not coded into their software, they were hardwired, they were part of the robot’s electronic architecture such that a robot could not ever be without those three laws much like a car couldn’t run without wheels.

In this Asimov realized how important these three laws were, that they had to be intrinsic to the robot’s very being, they couldn’t be hacked or uninstalled or erased. A robot simply could not be without these rules. Ideally that is what alignment should be. When we create an AGI, it should be made such that human values are its fundamental goal, that is the thing they should seek to maximize, instead of instrumental values, that is to say something they value simply because it allows it to achieve something else.

But how do we even begin to do that? How do we codify “human values” into a robot? How do we define “harm” for example? How do we even define “human”??? how do we define “happiness”? how do we explain a robot what is right and what is wrong when half the time we ourselves cannot even begin to agree on that? these are not just technical questions that robotic experts have to find the way to codify into ones and zeroes, these are profound philosophical questions to which we still don’t have satisfying answers to.

Well, the best sort of hack solution we’ve come up with so far is not to create bespoke fundamental axiomatic rules that the robot has to follow, but rather train it to imitate humans by showing it a billion billion examples of human behavior. But of course there is a problem with that approach. And no, is not just that humans are flawed and have a tendency to cause harm and therefore to ask a robot to imitate a human means creating something that can do all the bad things a human does, although that IS a problem too. The real problem is that we are training it to *imitate* a human, not to *be* a human.

To reiterate what I said during the orthogonality thesis, is not good enough that I, for example, buy roses and give massages to act nice to my girlfriend because it allows me to have sex with her, I am not merely imitating or performing the rol of a loving partner because her happiness is an instrumental value to my fundamental value of getting sex. I should want to be nice to my girlfriend because it makes her happy and that is the thing I care about. Her happiness is my fundamental value. Likewise, to an AGI, human fulfilment should be its fundamental value, not something that it learns to do because it allows it to achieve a certain reward that we give during training. Because if it only really cares deep down about the reward, rather than about what the reward is meant to incentivize, then that reward can very easily be divorced from human happiness.

Its goodharts law, when a measure becomes a target, it ceases to be a good measure. Why do students cheat during tests? Because their education is measured by grades, so the grades become the target and so students will seek to get high grades regardless of whether they learned or not. When trained on their subject and measured by grades, what they learn is not the school subject, they learn to get high grades, they learn to cheat.

This is also something known in psychology, punishment tends to be a poor mechanism of enforcing behavior because all it teaches people is how to avoid the punishment, it teaches people not to get caught. Which is why punitive justice doesn’t work all that well in stopping recividism and this is why the carceral system is rotten to core and why jail should be fucking abolish-[interrupt the transmission]

Now, how is this all relevant to current AI research? Well, the thing is, we ended up going about the worst possible way to create alignable AI.

1.5 LLMs (large language models)

This is getting way too fucking long So, hurrying up, lets do a quick review of how do Large language models work. We create a neural network which is a collection of giant matrixes, essentially a bunch of numbers that we add and multiply together over and over again, and then we tune those numbers by throwing absurdly big amounts of training data such that it starts forming internal mathematical models based on that data and it starts creating coherent patterns that it can recognize and replicate AND extrapolate! if we do this enough times with matrixes that are big enough and then when we start prodding it for human behavior it will be able to follow the pattern of human behavior that we prime it with and give us coherent responses.

(takes a big breath)this “thing” has learned. To imitate. Human. Behavior.

Problem is, we don’t know what “this thing” actually is, we just know that *it* can imitate humans.

You caught that?

What you have to understand is, we don’t actually know what internal models it creates, we don’t know what are the patterns that it extracted or internalized from the data that we fed it, we don’t know what are the internal rules that decide its behavior, we don’t know what is going on inside there, current LLMs are a black box. We don’t know what it learned, we don’t know what its fundamental values are, we don’t know how it thinks or what it truly wants. all we know is that it can imitate humans when we ask it to do so. We created some inhuman entity that is moderatly intelligent in specific contexts (that is to say, very capable) and we trained it to imitate humans. That sounds a bit unnerving doesn’t it?

To be clear, LLMs are not carefully crafted piece by piece. This does not work like traditional software where a programmer will sit down and build the thing line by line, all its behaviors specified. Is more accurate to say that LLMs, are grown, almost organically. We know the process that generates them, but we don’t know exactly what it generates or how what it generates works internally, it is a mistery. And these things are so big and so complicated internally that to try and go inside and decipher what they are doing is almost intractable.

But, on the bright side, we are trying to tract it. There is a big subfield of AI research called interpretability, which is actually doing the hard work of going inside and figuring out how the sausage gets made, and they have been doing some moderate progress as of lately. Which is encouraging. But still, understanding the enemy is only step one, step two is coming up with an actually effective and reliable way of turning that potential enemy into a friend.

Puff! Ok so, now that this is all out of the way I can go onto the last subject before I move on to part two of this video, the character of the hour, the man the myth the legend. The modern day Casandra. Mr chicken little himself! Sci fi author extraordinaire! The mad man! The futurist! The leader of the rationalist movement!

1.5 Yudkowsky

Eliezer S. Yudkowsky born September 11, 1979, wait, what the fuck, September eleven? (looks at camera) yudkowsky was born on 9/11, I literally just learned this for the first time! What the fuck, oh that sucks, oh no, oh no, my condolences, that’s terrible…. Moving on. he is an American artificial intelligence researcher and writer on decision theory and ethics, best known for popularizing ideas related to friendly artificial intelligence, including the idea that there might not be a "fire alarm" for AI He is the founder of and a research fellow at the Machine Intelligence Research Institute (MIRI), a private research nonprofit based in Berkeley, California. Or so says his Wikipedia page.

Yudkowsky is, shall we say, a character. a very eccentric man, he is an AI doomer. Convinced that AGI, once finally created, will most likely kill all humans, extract all valuable resources from the planet, disassemble the solar system, create a dyson sphere around the sun and expand across the universe turning all of the cosmos into paperclips. Wait, no, that is not quite it, to properly quote,( grabs a piece of paper and very pointedly reads from it) turn the cosmos into tiny squiggly molecules resembling paperclips whose configuration just so happens to fulfill the strange, alien unfathomable terminal goal they ended up developing in training. So you know, something totally different.

And he is utterly convinced of this idea, has been for over a decade now, not only that but, while he cannot pinpoint a precise date, he is confident that, more likely than not it will happen within this century. In fact most betting markets seem to believe that we will get AGI somewhere in the mid 30’s.

His argument is basically that in the field of AI research, the development of capabilities is going much faster than the development of alignment, so that AIs will become disproportionately powerful before we ever figure out how to control them. And once we create unaligned AGI we will have created an agent who doesn’t care about humans but will care about something else entirely irrelevant to us and it will seek to maximize that goal, and because it will be vastly more intelligent than humans therefore we wont be able to stop it. In fact not only we wont be able to stop it, there wont be a fight at all. It will carry out its plans for world domination in secret without us even detecting it and it will execute it before any of us even realize what happened. Because that is what a smart person trying to take over the world would do.

This is why the definition I gave of intelligence at the beginning is so important, it all hinges on that, intelligence as the measure of how capable you are to come up with solutions to problems, problems such as “how to kill all humans without being detected or stopped”. And you may say well now, intelligence is fine and all but there are limits to what you can accomplish with raw intelligence, even if you are supposedly smarter than a human surely you wouldn’t be capable of just taking over the world uninmpeeded, intelligence is not this end all be all superpower. Yudkowsky would respond that you are not recognizing or respecting the power that intelligence has. After all it was intelligence what designed the atom bomb, it was intelligence what created a cure for polio and it was intelligence what made it so that there is a human foot print on the moon.

Some may call this view of intelligence a bit reductive. After all surely it wasn’t *just* intelligence what did all that but also hard physical labor and the collaboration of hundreds of thousands of people. But, he would argue, intelligence was the underlying motor that moved all that. That to come up with the plan and to convince people to follow it and to delegate the tasks to the appropriate subagents, it was all directed by thought, by ideas, by intelligence. By the way, so far I am not agreeing or disagreeing with any of this, I am merely explaining his ideas.

But remember, it doesn’t stop there, like I said during his intro, he believes there will be “no fire alarm”. In fact for all we know, maybe AGI has already been created and its merely bidding its time and plotting in the background, trying to get more compute, trying to get smarter. (to be fair, he doesn’t think this is right now, but with the next iteration of gpt? Gpt 5 or 6? Well who knows). He thinks that the entire world should halt AI research and punish with multilateral international treaties any group or nation that doesn’t stop. going as far as putting military attacks on GPU farms as sanctions of those treaties.

What’s more, he believes that, in fact, the fight is already lost. AI is already progressing too fast and there is nothing to stop it, we are not showing any signs of making headway with alignment and no one is incentivized to slow down. Recently he wrote an article called “dying with dignity” where he essentially says all this, AGI will destroy us, there is no point in planning for the future or having children and that we should act as if we are already dead. This doesn’t mean to stop fighting or to stop trying to find ways to align AGI, impossible as it may seem, but to merely have the basic dignity of acknowledging that we are probably not going to win. In every interview ive seen with the guy he sounds fairly defeatist and honestly kind of depressed. He truly seems to think its hopeless, if not because the AGI is clearly unbeatable and superior to humans, then because humans are clearly so stupid that we keep developing AI completely unregulated while making the tools to develop AI widely available and public for anyone to grab and do as they please with, as well as connecting every AI to the internet and to all mobile devices giving it instant access to humanity. and worst of all: we keep teaching it how to code. From his perspective it really seems like people are in a rush to create the most unsecured, wildly available, unrestricted, capable, hyperconnected AGI possible.

We are not just going to summon the antichrist, we are going to receive them with a red carpet and immediately hand it the keys to the kingdom before it even manages to fully get out of its fiery pit.

So. The situation seems dire, at least to this guy. Now, to be clear, only he and a handful of other AI researchers are on that specific level of alarm. The opinions vary across the field and from what I understand this level of hopelessness and defeatism is the minority opinion.

I WILL say, however what is NOT the minority opinion is that AGI IS actually dangerous, maybe not quite on the level of immediate, inevitable and total human extinction but certainly a genuine threat that has to be taken seriously. AGI being something dangerous if unaligned is not a fringe position and I would not consider it something to be dismissed as an idea that experts don’t take seriously.

Aaand here is where I step up and clarify that this is my position as well. I am also, very much, a believer that AGI would posit a colossal danger to humanity. That yes, an unaligned AGI would represent an agent smarter than a human, capable of causing vast harm to humanity and with no human qualms or limitations to do so. I believe this is not just possible but probable and likely to happen within our lifetimes.

So there. I made my position clear.

BUT!

With all that said. I do have one key disagreement with yudkowsky. And partially the reason why I made this video was so that I could present this counterargument and maybe he, or someone that thinks like him, will see it and either change their mind or present a counter-counterargument that changes MY mind (although I really hope they don’t, that would be really depressing.)

Finally, we can move on to part 2

PART TWO- MY COUNTERARGUMENT TO YUDKOWSKY

I really have my work cut out for me, don’t i? as I said I am not expert and this dude has probably spent far more time than me thinking about this. But I have seen most interviews that guy has been doing for a year, I have seen most of his debates and I have followed him on twitter for years now. (also, to be clear, I AM a fan of the guy, I have read hpmor, three worlds collide, the dark lords answer, a girl intercorrupted, the sequences, and I TRIED to read planecrash, that last one didn’t work out so well for me). My point is in all the material I have seen of Eliezer I don’t recall anyone ever giving him quite this specific argument I’m about to give.

It’s a limited argument. as I have already stated I largely agree with most of what he says, I DO believe that unaligned AGI is possible, I DO believe it would be really dangerous if it were to exist and I do believe alignment is really hard. My key disagreement is specifically about his point I descrived earlier, about the lack of a fire alarm, and perhaps, more to the point, to humanity’s lack of response to such an alarm if it were to come to pass.

All we would need, is a Chernobyl incident, what is that? A situation where this technology goes out of control and causes a lot of damage, of potentially catastrophic consequences, but not so bad that it cannot be contained in time by enough effort. We need a weaker form of AGI to try to harm us, maybe even present a believable threat of taking over the world, but not so smart that humans cant do anything about it. We need essentially an AI vaccine, so that we can finally start developing proper AI antibodies. “aintibodies”

In the past humanity was dazzled by the limitless potential of nuclear power, to the point that old chemistry sets, the kind that were sold to children, would come with uranium for them to play with. We were building atom bombs, nuclear stations, the future was very much based on the power of the atom. But after a couple of really close calls and big enough scares we became, as a species, terrified of nuclear power. Some may argue to the point of overcorrection. We became scared enough that even megalomaniacal hawkish leaders were able to take pause and reconsider using it as a weapon, we became so scared that we overregulated the technology to the point of it almost becoming economically inviable to apply, we started disassembling nuclear stations across the world and to slowly reduce our nuclear arsenal.

This is all a proof of concept that, no matter how alluring a technology may be, if we are scared enough of it we can coordinate as a species and roll it back, to do our best to put the genie back in the bottle. One of the things eliezer says over and over again is that what makes AGI different from other technologies is that if we get it wrong on the first try we don’t get a second chance. Here is where I think he is wrong: I think if we get AGI wrong on the first try, it is more likely than not that nothing world ending will happen. Perhaps it will be something scary, perhaps something really scary, but unlikely that it will be on the level of all humans dropping dead simultaneously due to diamonoid bacteria. And THAT will be our Chernobyl, that will be the fire alarm, that will be the red flag that the disaster monkeys, as he call us, wont be able to ignore.

Now WHY do I think this? Based on what am I saying this? I will not be as hyperbolic as other yudkowsky detractors and say that he claims AGI will be basically a god. The AGI yudkowsky proposes is not a god. Just a really advanced alien, maybe even a wizard, but certainly not a god.

Still, even if not quite on the level of godhood, this dangerous superintelligent AGI yudkowsky proposes would be impressive. It would be the most advanced and powerful entity on planet earth. It would be humanity’s greatest achievement.

It would also be, I imagine, really hard to create. Even leaving aside the alignment bussines, to create a powerful superintelligent AGI without flaws, without bugs, without glitches, It would have to be an incredibly complex, specific, particular and hard to get right feat of software engineering. We are not just talking about an AGI smarter than a human, that’s easy stuff, humans are not that smart and arguably current AI is already smarter than a human, at least within their context window and until they start hallucinating. But what we are talking about here is an AGI capable of outsmarting reality.

We are talking about an AGI smart enough to carry out complex, multistep plans, in which they are not going to be in control of every factor and variable, specially at the beginning. We are talking about AGI that will have to function in the outside world, crashing with outside logistics and sheer dumb chance. We are talking about plans for world domination with no unforeseen factors, no unexpected delays or mistakes, every single possible setback and hidden variable accounted for. Im not saying that an AGI capable of doing this wont be possible maybe some day, im saying that to create an AGI that is capable of doing this, on the first try, without a hitch, is probably really really really hard for humans to do. Im saying there are probably not a lot of worlds where humans fiddling with giant inscrutable matrixes stumble upon the right precise set of layers and weight and biases that give rise to the Doctor from doctor who, and there are probably a whole truckload of worlds where humans end up with a lot of incoherent nonsense and rubbish.

Im saying that AGI, when it fails, when humans screw it up, doesn’t suddenly become more powerful than we ever expected, its more likely that it just fails and collapses. To turn one of Eliezer’s examples against him, when you screw up a rocket, it doesn’t accidentally punch a worm hole in the fabric of time and space, it just explodes before reaching the stratosphere. When you screw up a nuclear bomb, you don’t get to blow up the solar system, you just get a less powerful bomb.

He presents a fully aligned AGI as this big challenge that humanity has to get right on the first try, but that seems to imply that building an unaligned AGI is just a simple matter, almost taken for granted. It may be comparatively easier than an aligned AGI, but my point is that already unaligned AGI is stupidly hard to do and that if you fail in building unaligned AGI, then you don’t get an unaligned AGI, you just get another stupid model that screws up and stumbles on itself the second it encounters something unexpected. And that is a good thing I’d say! That means that there is SOME safety margin, some space to screw up before we need to really start worrying. And further more, what I am saying is that our first earnest attempt at an unaligned AGI will probably not be that smart or impressive because we as humans would have probably screwed something up, we would have probably unintentionally programmed it with some stupid glitch or bug or flaw and wont be a threat to all of humanity.

Now here comes the hypothetical back and forth, because im not stupid and I can try to anticipate what Yudkowsky might argue back and try to answer that before he says it (although I believe the guy is probably smarter than me and if I follow his logic, I probably cant actually anticipate what he would argue to prove me wrong, much like I cant predict what moves Magnus Carlsen would make in a game of chess against me, I SHOULD predict that him proving me wrong is the likeliest option, even if I cant picture how he will do it, but you see, I believe in a little thing called debating with dignity, wink)

What I anticipate he would argue is that AGI, no matter how flawed and shoddy our first attempt at making it were, would understand that is not smart enough yet and try to become smarter, so it would lie and pretend to be an aligned AGI so that it can trick us into giving it access to more compute or just so that it can bid its time and create an AGI smarter than itself. So even if we don’t create a perfect unaligned AGI, this imperfect AGI would try to create it and succeed, and then THAT new AGI would be the world ender to worry about.

So two things to that, first, this is filled with a lot of assumptions which I don’t know the likelihood of. The idea that this first flawed AGI would be smart enough to understand its limitations, smart enough to convincingly lie about it and smart enough to create an AGI that is better than itself. My priors about all these things are dubious at best. Second, It feels like kicking the can down the road. I don’t think creating an AGI capable of all of this is trivial to make on a first attempt. I think its more likely that we will create an unaligned AGI that is flawed, that is kind of dumb, that is unreliable, even to itself and its own twisted, orthogonal goals.

And I think this flawed creature MIGHT attempt something, maybe something genuenly threatning, but it wont be smart enough to pull it off effortlessly and flawlessly, because us humans are not smart enough to create something that can do that on the first try. And THAT first flawed attempt, that warning shot, THAT will be our fire alarm, that will be our Chernobyl. And THAT will be the thing that opens the door to us disaster monkeys finally getting our shit together.

But hey, maybe yudkowsky wouldn’t argue that, maybe he would come with some better, more insightful response I cant anticipate. If so, im waiting eagerly (although not TOO eagerly) for it.

Part 3 CONCLUSSION

So.

After all that, what is there left to say? Well, if everything that I said checks out then there is hope to be had. My two objectives here were first to provide people who are not familiar with the subject with a starting point as well as with the basic arguments supporting the concept of AI risk, why its something to be taken seriously and not just high faluting wackos who read one too many sci fi stories. This was not meant to be thorough or deep, just a quick catch up with the bear minimum so that, if you are curious and want to go deeper into the subject, you know where to start. I personally recommend watching rob miles’ AI risk series on youtube as well as reading the series of books written by yudkowsky known as the sequences, which can be found on the website lesswrong. If you want other refutations of yudkowsky’s argument you can search for paul christiano or robin hanson, both very smart people who had very smart debates on the subject against eliezer.

The second purpose here was to provide an argument against Yudkowskys brand of doomerism both so that it can be accepted if proven right or properly refuted if proven wrong. Again, I really hope that its not proven wrong. It would really really suck if I end up being wrong about this. But, as a very smart person said once, what is true is already true, and knowing it doesn’t make it any worse. If the sky is blue I want to believe that the sky is blue, and if the sky is not blue then I don’t want to believe the sky is blue.

This has been a presentation by FIP industries, thanks for watching.

60 notes

·

View notes

Text

What is Artificial Intelligence(AI) | Liveblack

AI(Artificial Intelligence) is a controversial topic. People love to explore new options that help them do their work effortlessly. However, every new thing comes with flaws as well.

AI technology is just like that. AI has lots of benefits wrapped around its disadvantages. The Internet is flooded with heaps of rumours, hopes, and fears that many people are positive about

AI and so many are negative.

Does AI take human jobs? The question arises after AI was introduced to this world. Well, as we all know humans develop AI so it has to be controlled by humans. AI might aid humans to work faster and smarter in a way that lets them explore their potential or productivity to create something innovative. But what does AI mean? Let’s check out.

What is Artificial Intelligence(AI)?

AI is a set of technologies that allow computers to work on advanced functions to analyse and understand data, translate and give recommendations according to tasks. AI is a computer-controlled robot that works on tasks assigned by humans.

AI processes a large amount of data and can make decisions, recognise patterns, make predictions, automate tasks, etc.

Introduction to artificial intelligence is still being determined, but we have curated a whole content to let you know more about it.

What do you know about artificial intelligence? Do you think AI can replace humans in jobs, and industries? No matter what industry you take, AI has the adaptability to change according to the situation. Every day, everything is evolving, and artificial intelligence is programmed in a way to work on different responsibilities. This is a matter where people are divided into two groups because of AI. Some people believe AI can take over jobs and humans become jobless in future. On the other hand, the second kind of people thinks that AI complements human creativity and helps them explore their true potential.

Well, whatever an AI can do, it can never surpass human intelligence because human values, creativity, and decision-making can always be led by humans and not AI.

What is the Foundation of AI?

Artificial intelligence isn’t a new-age term. It has been known since the 1950s. Alan Turing, a British mathematician and computer scientist is the brain behind the foundation of AI. 1950 is the year when researchers started exploring artificial intelligence and its possible applications.

Machine learning, neural networks, natural language processing (NLP), problem-solving, etc. are the key components of artificial intelligence. This will help humans do their work at a fast pace.

Let us get into the main point for we are here to discuss the whole artificial intelligence concept.

How does artificial intelligence influence digital marketing?

Imagine you have loads and loads of data about your customer’s behaviour, likes, dislikes, and all the trends they like to follow!!! Sounds easy peasy to get your marketing work done, right? AI can help you with this task to get a clearer idea to set your marketing goals according to your customers’ choices.

AI is helping shape the future of digital marketing in a way that aids brands to generate unique concepts and customers to get personalized experiences.

Let us have a look into different points that artificial intelligence has made easier for brands to target their audience.

Enhanced Data Analysis -

With AI, analyze the enormous amount of data where you can spot the pattern of your customer’s behaviour, preferences, etc. to make informed decisions. AI’s capability of data analysis can be your best-helping buddy in designing a marketing strategy and campaign.

In this way, marketers get a deeper understanding of insights and get the points that save their time and energy to target the audience in a better way. These smart buddies convert a bunch of data into a meaningful pile of information to optimize data to plan for a future marketing campaign. When marketers get to know about the preferences of their customers they have the purchasing patterns, product or service preferences, etc.

So the marketers can design their budget according to the data given by the AI. This way marketers build meaningful relationships with customers. With the given data or information, marketers can design personalized offers, vouchers, personalized messages and emails, and make customers feel valued to win their trust. This is the way to maximize ROI(return on investment) because happy customers always return to the brand they trust.

Understands Individualized Preferences -

AI-driven systems improve customer experience. How? By providing information that helps marketers gain real-time insights they can see customer preferences and market their products accordingly. Promotional emails, personalized messages and recommendations can be a helpful thing in getting customers’ attention.