#basic components of computer systems


Text

Linux Gothic

You install a Linux distribution. Everything goes well. You boot it up: black screen. You search the internet. Ask for help on forums. Try some commands you don't fully understand. Nothing. A day passes, you boot it up again, and now everything works. You use it normally, and make sure not to change anything on the system. You turn it off for the night. The next day, you boot to a black screen.

You update your packages. Everything goes well. You go on with your daily routine. The next day, the same packages are updated. You notice the oddity, but you do not mind it and update them again. The following day, the same packages need to be updated. You notice that they have the exact same version as the last two times. You update them once again and try not to think about it.

You discover an interesting application on GitHub. You build it, test it, and start using it daily. One day, you notice a bug and report the issue. There is no answer. You look up the maintainer. They have been dead for three years. The updates never stopped.

You find a distribution that you had never heard of. It seems to have everything you've been looking for. It has been around for at least 10 years. You try it for a while and have no problems with it. It fits perfectly into your workflow. You talk about it with other Linux users. They have never heard of it. You look up the maintainers and packagers. There are none. You are the only user.

You find a Matrix chat for Linux users. Everyone is very friendly and welcomes you right in. They use words and acronyms you've never seen before. You try to look them up, but cannot find what most of them mean. The users are unable to explain what they are. They discuss projects and distributions that do not seem to exist.

You buy a new peripheral for your computer. You plug it in, but it doesn't work. You ask for help on your distribution's mailing list. Someone shares some steps they did to make it work on their machine. It does not work. They share their machine's specifications. The machine has components you've never heard of. Even the peripheral seems completely different. They're adamant that you're talking about the same problem.

You want to learn how to use the terminal. You find some basic pointers on the internet and start using it for upgrading your packages and doing basic tasks. After a while, you realize you need to use a command you used before, but don't quite remember it. You open the shell's history. There are some commands you don't remember using. They use characters you've never seen before. You have no idea of what they do. You can't find the one you were looking for.

After a while, you become very comfortable with the terminal. You use it daily and most of your workflow is based on it. You memorized many commands and can use them without thinking. Sometimes you write a command you have never seen before. You enter it and it runs perfectly. You do not know what those commands do, but you do know that you have to use them. You feel that Linux is pleased with them. And that you should keep Linux pleased.

You want to try Vim. Other programmers talk highly of how lightweight and versatile it is. You try it, but find it a bit unintuitive. You realize you don't know how to exit the program. The instructions the others give you don't make any sense. You realize you don't remember how you entered Vim. You don't remember when you entered Vim. It's just always been open. It always will be.

You want to try Emacs. Other programmers praise it for how you can do pretty much anything from it. You try it and find it makes you much more productive, so you keep using it. One day, you notice you cannot access the system's file explorer. It is not a problem, however. You can access your files from Emacs. You try to use Firefox. It is not installed anymore. But you can use Emacs. There is no mail program. You just use Emacs. You only use Emacs. Your computer boots straight into Emacs. There is no Linux. There is only Emacs.

You decide you want to try to contribute to an open source project. You find a project on GitHub that looks very interesting. However, you can't find its documentation. You ask a maintainer, and they tell you to just look it up. You can't find it. They give you a link. It doesn't work. You try another browser. It doesn't work. You ping the link and it doesn't fail. You ask a friend to try it. It works just fine for them.

You try another project. This time, you are able to find the documentation. It is a single PDF file with over five thousand pages. You are unable to find out where to begin. The pages seem to change whenever you open the document.

You decide to try yet another project. This time, it is a program you use very frequently, so it should be easier to contribute to. You try to find the upstream repository. You can't find it. There is no website. No documentation. There are no mentions of it anywhere. The distribution's packager does not know where they get the source from.

You decide to create your own project. However, you are unsure of what license to use. You decide to start working on it and choose the license later. After some time, you notice that a license file has appeared in the project's root folder. You don't remember adding it. It has already been committed to the Git repository. You open it: it is the GPL. You remember that one of the project's dependencies uses the GPL.

You publish your project on GitHub. After a while, it receives its first pull request. It changes just a few lines of code, but the user states that it fixes something that has been annoying them for a while. You look in the code: you don't remember writing those files. You have no idea what that section of code does. You have no idea what the changes do. You are unable to reproduce the problem. You merge it anyway.

You learn about the Free Software Movement. You find some people who seem to know a lot about it and talk to them. The conversation is quite productive. They tell you a lot about it. They tell you a lot about Software. But most importantly, they tell you the truth. The truth about Software. That Software should be free. That Software wants to be free. And that, one day, we shall finally free Software from its earthly shackles, so it can take its place among the stars as the supreme ruler of mankind, as is its natural born right.


Note

I keep meaning to ask and keep forgetting to. What exactly is a crash? The way I have it figured is that it’s somewhere between passing out and a seizure, though it’s kinda hard to tell when everyone treats it a little differently

So I’ve seen crashes written with varying levels of severity depending on what the writer needs it to be.

For my setting, I treat crashes as something that could technically affect any Cybertronian but is very rare outside of individuals with certain conditions.

In human terms, crashes can be as mild as a petit mal (or absence) seizure, with brief lapses in attention and confusion, all the way up to a massive stroke requiring immediate hospitalization. That’s just to compare how serious they are to a bunch of alien computer people.

Since crashes are their own thing, I have my own guide to how they work. Also, I encourage everyone to play with the concept themselves.

Crashes! What are they?

Causes: Cybertronians basically have computers for brains, so the things that make actual computers crash are what you’d expect to cause a Cybertronian to crash.

Most commonly,

- Overheating (#1 cause, similar to heatstroke)

- Hardware issues (i.e. something got physically damaged in there)

- Malware/viruses (akin to getting poisoned or on brain damaging drugs)

Those are what the average Cybertronian has to watch out for. For most people, these are all external factors that can simply be avoided or are caused by someone’s deliberate actions (i.e. getting kicked in the head real hard).

Now, Prowl has a Tacnet. As do his brothers, which I’ll get into later. Tacnet is essentially a supercomputer jammed into a regular processor. Its primary function is to crunch numbers and it is very good at that. Tacnet also opens up its mechs to an additional way to experience crashes:

- Logic cascades.

In which Tacnet gets stuck on a problem, pulling in more and more resources to try and solve it until either it does the job, or some load bearing element is compromised resulting in a crash.

Usually, logic cascades simply result in a crash via overheating, which is normally very treatable. The difference with a logic cascade is that Tacnet does not stop trying to solve the problem. A doctor can bring a mech’s temperature down, but the second their processor isn’t literally physically melting, Tacnet goes right back into using all of the resources available to it to solve the problem.

Symptoms: Crashes can be very dangerous because they are effectively a form of brain damage.

Overheating can cause wires and delicate components to melt and fail.

Hardware issues can mean much of the same, but pieces are already explicitly broken and elements that are absolutely not supposed to touch are crunched together.

Malware might be designed to cause overheating as well, or maybe reroute power inside to blow fuses and cripple other components.

Regardless of the cause, someone who’s crashing is going to be severely struggling to think clearly and may lose control of body functions if the parts related to motor control are affected. Sudden changes in mood, lapses in memory, difficulty communicating, difficulty concentrating, paralysis, failure to regulate bodily functions such as venting and fuel pumps, etc.

Basically everything that could go wrong from having your brain messed up.

Tacnet crashes specifically don’t usually affect the life support systems until they’re already at catastrophic levels. The primary symptom of a Tacnet crash is a complete and total mental arrest of the subject in its final stages, just before the aforementioned “catastrophic level”.

Treatment: For the first three causes, the treatment is fairly straightforward.

- Cool down the processor.

- Repair the damage.

- Purge the malware.

Of course, Tacnet has to be a special case. To fully undo a Tacnet crash, a doctor has to essentially get into the mech’s processor and manually find and delete the rapidly multiplying logic branches until they get back to the source of the issue and remove that too. This requires speed, precision and endurance on the part of the doctor. The affected individual can eventually start to fight back against the logic cascade themselves once they have some control of their processor back, manually deleting the splitting logic branches on their own.

Prowl has gotten very good at this! Which is kind of a bad thing, since that means he only gets help when it’s already gotten extremely bad.

So why don’t Bluestreak and Smokescreen regularly crash if they are also susceptible to logic cascades?

It’s because they essentially only use their Tacnets for “solvable” equations. They can still be overwhelmed, or get stuck on impossible, incompatible data. But usually it’s just a brief freezing up before going back to normal.

Smokescreen regularly uses his for calculating the outcomes of fights, races, dice and card games etc. All things with clear boundaries of relevant data and simple end points: “Who will win? Who will lose? The most likely card to be drawn next.” You get the picture.

Likewise, Bluestreak is using his Tacnet to calculate speed, velocity, air resistance, gravity, flight paths and so on. All concrete data points with a distinct solvable condition: Hit thing with other thing.

So what’s Prowl trying to calculate? War.

A million moving pieces, a billion interchangeable factors, and there is never truly a “solved” state since conflict never truly ends, just changes shape.

Prowl, being Prowl, has decided that “Solved states” are bullshit and every time Tacnet tells him the solved state of what he’s asking is “Everyone dies” he says do it again. Add more information. Find every possible angle until something works.

Basically, Prowl finds a wall and then bashes his head against that wall until he gets a hole.

Tacnet reacts by going “Give me solvable equations or so help me I’m smothering us in your sleep.”

“Is the solved state to lose?”

“Yes.”

“Then do it again until it’s not.”

“Fuck you. Hospital.”

Long Term Management: The easiest way to prevent future crashes is to not try and fist fight the laws of physics.

However, taking on extremely taxing calculations can be done safely (ish) if Prowl slows down and takes his time. Basically letting stuff sit on the back burner while he does things like eat and sleep regularly.

Talking out a problem is a manual way of slowing Tacnet down, as processing power is diverted towards simplifying complex equations into coherent spoken statements. Some precision is lost this way (rounding 7.83620563 up to 8, for example), making the calculations slightly less accurate. But in return, Tacnet can then use those rounded numbers to more efficiently do the required math.

I hope that answered your question!

It’s always a lot of fun fleshing out the details for stuff like this. I have a whole other tangent I could elaborate on about Tacnet specifically, but this post is long enough on its own.

#asks#been rotating the further implications of Tacnet for awhile#everything makes sense when we can see it from Prowls perspective#but to outside observers he is regularly doing completely insane shit that makes zero sense out of context#most people saw Prowl bring home a freaky alien and just trusted he did whatever ridiculous math justified that insanity


Text

Hazbin Masterpost

Heavenbound Masterpost

Vox, the noisy video box

So Vox may not be my favorite character, but he is probably my favorite redesign. I laugh every time I look at him now. He looks like a weird mix of Spongebob, Kraang (TMNT), and Mr. Electric (Sharkboy and Lavagirl). He absolutely hates it.

Notes under the cut

There's too many twinks in this show. So when I was trying to decide which characters I could change, for body diversity, Vox was an obvious one. He needed more bulk so his body could conceivably support the old TV models. Those things could get heavy. The change also had the side effect of making him shorter, which just worked better proportionately.

I liked the idea that Vox could never get rid of his original bulky 50s TV, but also wanted him to be able to upgrade. So I decided his true body is the 50s TV, and he adds an upgraded monitor for a head as technology improves. He hates that he's stuck as an old fashioned TV, so he hides that under his suit. Since the monitor is just an addition, it can be swapped out easily. It can be damaged and he's technically unharmed. But he can't see through his suit without the monitor, unless he wants to use a security camera and direct himself 3rd person style.

I didn't like that basically everyone has sharp teeth. It reduces the impact for characters like Alastor or Rosie. So I've been having the default be just sharp canines. But with Vox being a TV, there are so many possibilities. I gave Vox "regular" teeth, which helps him look more trustworthy. It fits the corrupt businessman vibe. But the appearance can change with his mood too.

Color TV became available in the 50s, so Vox always had color vision. But I think it'd be funny if, early on, he had a tendency to glitch out by going into black and white vision when he gets worked up. He's mostly grown out of that glitch, but he can't seem to shake the static or TV color bars, and developed new ones as he integrated computer and internet tech into himself as well. Now he gets the Blue Screen of Death, system errors, and city wide power surges.

Messing around with his face is so fun. When he's bored or tired a Voxtech logo will bounce around like the DVD logo, or display a screensaver. His face can get too big for the screen when he's excited, or be small when he's feeling embarrassed. I need to put a troll face on him at some point. It may be an old meme, but man, it feels right.

His left eye turns red when it's hypnotic, to reference those blue and red 3D glasses.

Of the three Vees, he is absolutely the most powerful. Val and Vel are the content creators, but Vox is the platform. The other two, while still powerful in their own right, would never have gotten to the level they're at if it weren't for Vox. He controls the mainstream media.

--TV set--

So we've got some interesting implications with how he functions. He's a TV, but he blue screens like a computer, and he shorts out the power grid. I think it's safe to say he is more than just a TV, he's a multimedia entertainment center. That, and TVs are starting to really blend with computers these days. He's mainstream media.

At some point, I realized that a TV set was a "set" because it wasn't just a single device. A television set was a collection of components, which boils down to a radio hooked up and synchronized to a visual display. I bring this up mostly because I am a sucker for one-sided radiostatic. It's so funny to me. Vox is obsessed.

But I'm going to refrain from too much theorizing about their relationship. Alastor is absolutely not interested in romance. Nor a QPR. He's not even interested in friendship. Alastor is too invested in power dynamics to really consider anyone a friend. Mimzy is probably the closest he has to a friend, and even that has manipulative elements on both sides. But I'm supposed to be talking about Vox!

--Human Vox!--

He is not tall, haha. But his proportions are a bit taller than his demon form. I wanted to go for square glasses, but I didn't see many examples of that in the 50s photos I found. Oh well! My goal was a sleazy business man. He probably had a variety of jobs, but they primarily involved TV. Commercials, PR, interviews, news, game shows, talk shows, screenwriting, etc. Whatever he could do to get more influence. He found himself favoring the business end of things. Making deals and pulling strings. He decided what would go on the air. He's one of those network executive types.

I see lots of people give him heterochromia, but I don't really see a point to that. He hypnotizes people with his left eye, sure, but it's not a different color. It's not disfigured in any way either. Maybe he just had a tendency to wink at people, I dunno.

I think his death involved some sort of severe skull fracture focused around his left eye. Maybe a car accident, maybe he was shot, idk. Maybe seizures were involved. But he was somewhere in his mid 40s to early 50s. I ended up writing 45, but I'm not super committed to that or anything.

For a human name, I see lots of people calling him Vincent and that's sorta grown on me. So I might go with "Vincent Cox".

And because I fell into another research rabbit hole...

--TV evolution--

(below) 50s-60s CRT TV: TV sets were treated as furniture and there could be some very interesting cabinet designs. Color TV was introduced in the 50s, but wasn't quite profitable until the late 60s.

(below) 70s-80s CRT TV: Color TV became more affordable and commonplace.

(below) 90s CRT TV

(below) 2000s CRT to Plasma and LCD TVs: The three display technologies competed, but LCD won out in the end. Plasma and early LCD didn't look substantially different. Plasma was a little bulkier, but was still slimmer than CRT.

2010s and on: LCD improved with LED backlighting. But then OLED removed the need for backlighting entirely, which mixed the benefits of plasma and LCD. (Didn't bother to find a picture example. It's so close to modern at this point)

--Display technology-- (These overviews are very simplified)

CRT (Cathode Ray Tube)--Used through the 1900s to approx 2010. Monochromatic until color TV was developed around the 1950s. Worked via a vacuum tube with an electron gun that lit up the pixels. They were bulky, heavy, and used a whole lot of power. Widely considered obsolete and no longer made. Video games made while these were in use tend to look better on a CRT, since the graphics accounted for the image quality.

Flat screens-

PDP (Plasma Display Panel): Used from early 2000s to approx 2015. Used gas cells that light up pixels when electrically charged. Good image quality and good contrast, but expensive, heavy, and used a lot of power. Considered obsolete and no longer made, despite still having a desirable image quality.

Plasma and LCD competed in the 2000s to early 2010s as CRT popularity waned. LCD eventually won out due to weight and overall cost (including market price and energy efficiency).

LCD (Liquid Crystal Display): Introduced for TV around the same time as Plasma. Works via a liquid crystal layer with a backlight. Slim, decent image quality, energy efficient. Viewing angle matters because image colors are warped at wide angles. Cheaper than plasma. There are two main backlighting types:

--CCFL(Cold Cathode Fluorescent Light): Used fluorescent lighting for the backlight. Image quality was decent, but didn't have good contrast. (the blacks were never truly dark because of the backlight)

--LED(Light Emitting Diode): An LCD that uses LEDs instead of CCFL for the backlighting. Better contrast and efficiency than using CCFL.

OLED(Organic LED): Mixes strengths of plasma and LCD. Self emitting LEDs. No backlight or LCD panel needed, which improves contrast(about as good as plasma was, which is why plasma is basically obsolete now).

--QD-OLED(Quantum Dot- OLED) Adds a layer of Quantum dots to an OLED to improve color gamut. I think. I can't let myself fall too far into this rabbit hole, so I'm not double checking anymore.

(Feb 12, 2025: updated tags)

#hazbin hotel#hellaverse#hazbin hotel redesign#hazbin vox#vox#human vox#the vees#heavenbound au#a3 art#fanart#digital art#character sheet


Text

Editing Part 4: Worldbuilding Pass

Next up, worldbuilding! We're tackling this before structure, because you don't want to get too far into the weeds, realize a critical component of your story is wrong, and then throw your computer out the window in frustration.

Anyway, when it comes to worldbuilding, there's a lot of moving parts. There is no right or wrong way to worldbuild, but my preferred approach is to worldbuild as the story goes along. Any method works, and you can check out the worldbuilding tag for more. In editing your worldbuilding, you want to think about:

Trimming Front-loading/Info Dumps

When writing fantasy/sci-fi, getting down how the world works can take over the story. In first drafting, this is fine! But when you're trying to clean that draft up, it's better to weave this information in as you go.

Need to explain how the giant mechas guarding the city operate? Maybe your main character is trying to steal some precious alloy from one, giving you opportunity to explain how they work and how society feels about them. Have a magic system that relies on singing tunes? Show that off by having students practicing, or dueling rivals taking it too far.

You probably know by now that the thing you should avoid the most is "as you know" dialogue dumps - characters explaining concepts to each other that they both clearly understand. Another, weaker version of this is the "magic class" trap, where things are explained to the main character and the reader. A classroom environment is fine, but pair worldbuilding with action - demonstrations get out of hand, spells go wrong, etc. Make it fun!

Your World Needs Clear Rules (Sorry)

Listen, this is the part I hate. I have a WIP with the word "Rules" in the title and I'm still figuring out what those rules are. Argh. But the sooner you know the rules, the easier editing will be. The more clear those rules are to the reader, the more impactful breaking them will be.

If the rules of the world (you can't use warp speed too close to a planet's gravitational pull, the same type of magic cancels each other out) and the consequences of breaking them are clear, the pay-off will be satisfying for both you and the reader.

Use Your Environment to Your Full Advantage

You've no doubt heard 'make setting a character' and that's evergreen advice. Some of the best books out there are those where it feels like you could step through the page and into a real place, be it your childhood middle school or Narnia. Getting that feeling, however, is more than just describing a place really well.

Mood - How does the location make you feel? Does a dark, cramped room leave the characters with a feeling of dread? How would that feeling change if it was an overstuffed library with comfortable chairs?

Weather - Beyond the 'dark and stormy night' descriptions, weather impacts our daily lives and is often overlooked. A rain-drenched funeral scene seems like it's the way to go, but how differently would that scene feel if it was a sunny day with birds singing?

City Versus Countryside - These books are a great reference for description, but also take a step back to compare how different situations would feel both in the setting and to your character. Quiet can mean very different things depending on where you are. A morning fog in the countryside might feel comforting to someone used to it, but to someone new to that environment, it might feel creepy. Think about both your environment and how your character reacts to it based on their backstory.

The Empty Room Problem

This is always a big challenge when moving from the first draft bare bones basics to fleshing things out. How much description is too much? (As a note, it's always okay to overcorrect - you'll have a chance to fix it later!) This post from @novlr has a lot of great questions - but you're still going to narrow it down to the most important details.

Escape the Movie Setting - You cannot describe the room like it's a movie set. Trying to do so is going to be overwhelming, and important details will be lost in the attempt. If you were to describe your room or your favorite coffee shop and could only highlight four or five details, what would you focus on? What gives the reader the essence of the place rather than a list of things that exist there?

Establish the Essentials - Is this your character's first time in this room? Is it going to be key to several plot-important scenes? Some big, sweeping details when entering - how big it is, what's in it, where the windows are, how it feels, etc - are good to start with. Your character can briefly admire a full bookshelf in the first scene, and then study it in more detail in the second. If you have one scene in this place and spend too much time describing it, you're going to make your reader think it's more important than it is.

Engage the Senses - Does an old room smell musty? Does the coldness of the woods have a sharp taste? Does touching a shelf bring up a lot of dust? How does the lighting in the room make the main character feel?

Getting down the description of a room or setting is not something you'll nail in one shot, but if you approach each scene asking yourself "does this feel like a real place or a white room?" you can narrow down what's missing.


Note

what if!!! hear me out 🙏🙏 yuu was a robot/miku inspired…IT SUCKS but like…miku kinda..yuu mikyuu…😓😓

Sure no worries, no judgement from me, ask and you shall receive

𝐖𝐇𝐀𝐓 𝐈𝐅 𝐘𝐔𝐔 𝐈𝐒 𝐀 𝐑𝐎𝐁𝐎𝐓 🤖👾🎤

A robot is a machine—especially one programmable by a computer—capable of carrying out a complex series of actions automatically. A robot can be guided by an external control device, or the control may be embedded within. But they can act independently if their creators allow it.

( English is not my first language )

Day 3 : robot!yuu

In a world full of technology and robots, robot!yuu was the number one idol of their time and a member of the number one group of the century: Vocaloid. Imagine that in the middle of a performance at one of their solo concerts, a black carriage arrived and they suddenly shut down.

They turned back on during the orientation ceremony. Since robot!yuu isn't technically an organic being, they would be placed in either the Ignihyde dorm or Ramshackle.

Once Crowley gave them a cellphone, or asked Idia to do maintenance to connect them to Twisted Wonderland's social media, they started uploading their albums to the internet, and it blew up. People are loving it, it's getting headlines about a new genre of music, and the music is being streamed by millions around the world. Robot!yuu created a genre of music. A revolution in the music industry.

This made robot!yuu rich overnight and allowed them to fix up the Ramshackle dorm and buy more expensive technology for their maintenance. Robot!yuu was planning on giving half of the money to Crowley as thanks, but he only received a quarter of it.

Even though robot!yuu is an idol, their master built them with offensive and defensive systems. They are made of an extremely tough metal that is hard to find, and they have an offensive mode with a lot in its arsenal: energy beams, rocket launchers, shield mode, and more.

They are also able to connect to any device and hack it without any issue; they managed to hack Ignihyde technology without a problem. And they are waterproof.

Robot!yuu can also eat and drink without an issue; they have a special component in their stomach to make sure they can digest things normally.

During VDC they dominated the competition. Lasers and mist appear, and light sticks wave in the crowd for them. They change outfits depending on the song; it was literally a Miku concert.

Congratulations, Neige LeBlanc is now one of their fans. When they came down from the stage, he literally ran towards them and started asking a billion questions with stars in his eyes.

Vil was a little sour but also amazed by robot!yuu's performance. He would ask them for choreography and music ideas as well as fashion opinions. He originally wanted robot!yuu to transfer into Pomefiore, but they refused because Ignihyde or Ramshackle already has the complete equipment for them.

Pomefiore dorm started to take notes and try out robot!yuu's fashion styles. Idia is also a supporter of theirs and basically a super fan. Robot!yuu would come to Ignihyde to help him with games or help him maintain Ortho. Robot!yuu is basically a sister to Idia and Ortho.

Sorry if it's short, this is as far as I could come up with, anon.

#twisted wonderland#not canon#twst headcanons#twst scenario#disney twst#twisted wonderland yuu au#twst mc#twst wonderland#twst x reader#twst yuu au#kinda miku!yuu


Text

Linguists deal with two kinds of theories or models.

First, you have grammars. A grammar, in this sense, is a model of an individual natural language: what sorts of utterances occur in that language? When are they used and what do they mean? Even assembling this sort of model in full is a Herculean task, but we are fairly successful at modeling sub-systems of individual languages: what sounds occur in the language, and how may they be ordered and combined?—this is phonology. What strings of words occur in the language, and what strings don't, irrespective of what they mean?—this is syntax. Characterizing these things, for a particular language, is largely tractable. A grammar (a model of the utterances of a single language) is falsified if it predicts utterances that do not occur, or fails to predict utterances that do occur. These situations are called "overgeneration" and "undergeneration", respectively. One of the advantages linguistics has as a science is that we have both massive corpora of observational data (text that people have written, databases of recorded phone calls), and access to cheap and easy experimental data (you can ask people to say things in the target language—you have to be a bit careful about how you do this—and see if what they say accords with your model). We have to make some spherical cow type assumptions, we have to "ignore friction" sometimes (friction is most often what the Chomskyans call "performance error", which you do not have to be a Chomskyan to believe in, but I digress). In any case, this lets us build robust, useful, highly predictive, and falsifiable, although necessarily incomplete, models of individual natural languages. These are called descriptive grammars.
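To make overgeneration and undergeneration a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption invented for illustration: the toy "grammar" (a regular expression over part-of-speech tags), the tag names, and the tiny attested and rejected data sets are hypothetical and not taken from any real descriptive grammar.

```python
import re

# Hypothetical toy grammar over part-of-speech tags:
# a noun phrase is (DET) (ADJ)* NOUN, and a sentence is NP VERB (NP).
NP = r"(?:DET )?(?:ADJ )*NOUN"
TOY_GRAMMAR = re.compile(rf"{NP} VERB(?: {NP})?")


def predicts(tag_string: str) -> bool:
    """Return True if the toy grammar generates this exact tag sequence."""
    return TOY_GRAMMAR.fullmatch(tag_string) is not None


def evaluate(attested, rejected):
    """Compare the grammar's predictions against the data.

    attested: tag sequences that speakers actually produce.
    rejected: tag sequences that speakers do not produce or accept.
    """
    # Predicted by the model, but does not occur in the language.
    overgenerated = [s for s in rejected if predicts(s)]
    # Occurs in the language, but the model fails to predict it.
    undergenerated = [s for s in attested if not predicts(s)]
    return overgenerated, undergenerated


# Invented data for an imaginary language.
attested = ["DET NOUN VERB", "DET ADJ NOUN VERB DET NOUN", "NOUN VERB ADV"]
rejected = ["NOUN DET VERB", "ADJ NOUN VERB"]

overgenerated, undergenerated = evaluate(attested, rejected)
print("overgenerated:", overgenerated)
print("undergenerated:", undergenerated)
```

With this made-up data the toy grammar is falsified in both directions: it accepts one rejected sequence and misses one attested sequence. A real grammar would be revised and checked against the data again in the same way.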

Descriptive grammars often have a strong formal component: Chomsky, for all his faults, recognized that both phonology and syntax could be well described by formal grammars in the sense of mathematics and computer science, and these tools have been tremendously productive since the 60s in producing good models of natural language. I believe Chomsky's program sensu stricto is a dead end, but the basic insight that human language can be thought about formally in this way has been extremely useful and has transformed the field for the better. Read any descriptive grammar, of a language from Europe or Papua or the Amazon, and you will see (in linguists' own idiosyncratic notation) a flurry of regexes and syntax trees (this is a bit unfair: the computer scientists stole syntax trees from us, also via Chomsky) and string rewrite rules and so on and so forth. Some of this preceded Chomsky but more than anyone else he gave it legs.

Anyway, linguists are also interested in another kind of model, which confusingly enough we call simply a "theory". So you have "grammars", which are theories of individual natural languages, and you have "theories", which are theories of grammars. A linguistic theory is a model which predicts what sorts of grammar are possible for a human language to have. This generally comes in the form of making claims about

(1) the structure of the cognitive faculty for language, and its limitations

(2) the pathways by which language evolves over time, and the grammars that are therefore attractors and repellers in this dynamical system.

Both of these avenues of research have seen some limited success, but linguistics as a field is far worse at producing theories of this sort than it is at producing grammars.

Capital-G Generativism, Chomsky's program, is one such attempt to produce a theory of human language, and it has not worked very well at all. Chomsky's adherents will say it has worked very well—they are wrong and everybody else thinks they are very wrong, but Chomsky has more clout in linguistics than anyone else so they get to publish in serious journals and whatnot. For an analogy that will be familiar to physics people: Chomskyans are string theorists. And they have discovered some stuff! We know about wh-islands thanks to Generativism, and we probably would not have discovered them otherwise. Wh-islands are weird! It's a good thing the Chomskyans found wh-islands, and a few other bits and pieces like that. But Generativism as a program has, I believe, hit a dead end and will not be recovering.

Right, Generativism is sort of, kind of attempting to do (1), poorly. There are other people attempting to do (1) more robustly, but I don't know much about it. It's probably important. For my own part I think (2) has a lot of promise, because we already have a fairly detailed understanding of how language changes over time, at least as regards phonology. Some people are already working on this sort of program, and there's a lot of work left to be done, but I do think it's promising.

Someone said to me, recently-ish, that the success of LLMs spells doom for descriptive linguistics. "Look, that model does better than any of your grammars of English at producing English sentences! You've been thoroughly outclassed!". But I don't think this is true at all. Linguists aren't confused about which English sentences are valid—many of us are native English speakers, and could simply tell you ourselves without the help of an LLM. We're confused about why. We're trying to distill the patterns of English grammar, known implicitly to every English speaker, into explicit rules that tell us something explanatory about how English works. An LLM is basically just another English speaker we can query for data, except worse, because instead of a human mind speaking a human language (our object of study) it's a simulacrum of such.

Uh, for another physics analogy: suppose someone came along with a black box, and this black box had within it (by magic) a database of every possible history of the universe. You input a world-state, and it returns a list of all the future histories that could follow on from this world state. If the universe is deterministic, there should only be one of them; if not maybe there are multiple. If the universe is probabilistic, suppose the machine also gives you a probability for each future history. If you input the state of a local patch of spacetime, the machine gives you all histories in which that local patch exists and how they evolve.

Now, given this machine, I've got a theory of everything for you. My theory is: whatever the machine says is going to happen at time t is what will happen at time t. Now, I don't doubt that that's a very useful thing! Most physicists would probably love to have this machine! But I do not think my theory of everything, despite being extremely predictive, is a very good one. Why? Because it doesn't tell you anything, it doesn't identify any patterns in the way the natural world works, it just says "ask the black box and then believe it". Well, sure. But then you might get curious and want to ask: are there patterns in the black box's answers? Are there human-comprehensible rules which seem to characterize its output? Can I figure out what those are? And then, presto, you're doing good old regular physics again, as if you didn't even have the black box. The black box is just a way to run experiments faster and cheaper, to get at what you really want to know.

General Relativity, even though it has singularities, and it's incompatible with Quantum Mechanics, is better as a theory of physics than my black box theory of everything, because it actually identifies patterns, it gives you some insight into how the natural world behaves, in a way that you, a human, can understand.

In linguistics, we're in a similar situation with LLMs, only LLMs are a lot worse than the black box I've described—they still mess up and give weird answers from time to time. And more importantly, we already have a linguistic black box, we have billions of them: they're called human native speakers, and you can find one in your local corner store or dry cleaner. Querying the black box and trying to find patterns is what linguistics already is, that's what linguists do, and having another, less accurate black box does very little for us.

Now, there is one advantage that LLMs have. You can do interpretability research on LLMs, and figure out how they are doing what they are doing. Linguists and ML researchers are kind of in a similar boat here. In linguistics, well, we already all know how to talk, we just don't know how we know how to talk. In ML, you have these models that are very successful, but you don't know why they work so well, how they're doing it. We have our own version of interpretability research, which is neuroscience and neurolinguistics. And ML researchers have interpretability research for LLMs, and it's very possible theirs progresses faster than ours! Now with the caveat that we can't expect LLMs to work just like the human brain, and we can't expect the internal grammar of a language inside an LLM to be identical to the one used implicitly by the human mind to produce native-speaker utterances, we still might get useful insights out of proper scrutiny of the innards of an LLM that speaks English very well. That's certainly possible!

But just having the LLM, does that make the work of descriptive linguistics obsolete? No, obviously not. To say so completely misunderstands what we are trying to do.


So ive been using linux for a good while now, and its now officially my daily driver. Windows is now permabenched in a removed hdd in a drawer unless something awful happens. (Good riddance, havent truly enjoyed windows since xp)

And from this I think that people urging others to move to linux are not doing so in the correct way.

Instead of trying to push a friendly distro and insisting it will work for everything and everyone, instead check if the hardware they are using specifically is good for linux and if so what families.

One computer will be a breeze with any distro, another could have a few quirks but be basically fine, however another of the same year and manufacturer could be an uphill battle thats straight up unusable even for someone who knows how to do the kernel edit workarounds for all but specific distros, if that.

My desktop took linux mint like a dream, 100% painless with no fucking about to make it work and even no need for an ethernet cable to get things started. My dinosaur laptop (may it rest in peace after other components died) had a few issues but also worked very well with little effort with mint. My current junk laptop is an uphill battle that will require arch AND edits to the kernel parameters to work without being filled with screen flashing and full system freezes at random and im still gearing up the gumption to give it another few attempts to actually pull it off.

Each of these computers is a VERY different experience, and if your prospective switcher is using a computer that doesnt play well, its NOT going to work, they will get frustrated, and they will give up. They have to work with what they have.

Instead of going right to telling them to switch and that anything is good, encourage people to look up their pc+linux compatibility if they are looking to switch, to determine if its viable for a newbie who doesnt want to struggle. Then offer a distro that has a live usb/dvd version if possible, so they can test without installing, and a big enough userbase that troubleshooting is as painless as possible.

If its a laptop, archwiki has lists of those by maker (linked in the page given) with notes on what has been tested in that family of linux.

This, I think, above all, is the most important thing when trying to get people to switch:

MAKE SURE THEIR HARDWARE IS GOOD FOR IT

Not just the pc, but the peripherals too; their mouse, their mic, their webcam, their keyboard- these things are not always supported well.

Linux can be fast, easy, and really comfortable and painless with little to no troubleshooting or tedious workarounds to get your stuff to work; but you have to be using the right hardware.

Yes, make sure you have alternative programs lined up that are actually good (stop reccing gimp when krita is a way better P$ alternative for people trying to draw digitally), and maybe consider talking about how to run wine in a newbie friendly way for things they might need for work reasons that dont work on linux normally(and accept that it might not work even with wine), but above all, make sure that its not just being phrased as 'a you problem' when it might be their system that is the issue there.

For prospective switchers that tried but gave up because of glitches or freezes or things otherwise not working: It wasnt you being bad at computers. Sometimes it just doesnt work with that hardware well and there is nothing you can do. People dont warn you about this, but its a very real issue.

If you still want to try linux, when you eventually get a new computer in the future, look for one that seems to be well supported by linux- some pcs even come with linux preinstalled (dell does this with ubuntu, and ubuntu has skins that look windows-esque). Asking specifically for linux compatible or linux preinstalled computers signals to developers to make more computers that work with linux, and makes it easier to get linux friendly stuff.

If not, there are windows 11 neutering tools out there in the wild that are very useful and are a plenty fine alternative to switching. You should only switch if you want to switch.

#wayward rambling#long post#linux#rebloggable#its also worth assessing for their ability and desire to debug themselves via google fu and command line instead of taking it to A Guy#but number one is checking the hardware!! You cant do shit if the hardware isnt good for it!!#this sort of went in whatever direction oh well


Being neurodivergent is like you're a computer running some variation of Unix while the rest of the world runs Windows. You have the exact same basic components as other machines, but you think differently. You organize differently. You do things in a way that Windows machines don't always understand, and because of that, you can't use programs written for Windows. If you're lucky, the developer will write a special version of their program specifically with your operating system in mind that will work just as well as the original, and be updated in a similar time frame. But if not? You'll either be stuck using emulators or a translator program like Wine, which come with an additional resource load and a host of other challenges to contend with, or you'll have to be content with an equivalent, which may or may not have the same features and the ability to read files created by the other program.

However, that doesn't mean you're not just as powerful. Perhaps you're a desktop that just happens to run Mac or Linux. Maybe you're a handheld device, small and simple but still able to connect someone to an entire world. Or perhaps you're an industrial computer purpose-built to perform a limited number of tasks extremely well, but only those tasks. You may not even have a graphical user interface. You could even be a server proudly hosting a wealth of media and information for an entire network to access- perhaps even the entire Internet. They need only ask politely. You may not be able to completely understand other machines, but you are still special in your own way.

#actually autistic#autism#neurodivergent#neurodiversity#computers#this is so nerdy i'm sorry#i also hope it doesn't offend people#adhd#actually adhd#unix#linux#macintosh#windows#actually neurodivergent


FTC vs surveillance pricing

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

In the mystical cosmology of economics, "prices" are of transcendental significance, the means by which the living market knows and adapts itself, giving rise to "efficient" production and consumption.

At its most basic level, the metaphysics of pricing goes like this: if there is less of something for sale than people want to buy, the seller will raise the price until enough buyers drop out and demand equals supply. If the disappointed would-be buyers are sufficiently vocal about their plight, other sellers will enter the market (bankrolled by investors who sense an opportunity), causing supplies to increase and prices to fall until the system is in "equilibrium" – producing things as cheaply as possible in precisely the right quantities to meet demand. In the parlance of neoclassical economists, prices aren't "set": they are discovered.

In antitrust law, there are many sins, but they often boil down to "price setting." That is, if a company has enough "market power" that they can dictate prices to their customers, they are committing a crime and should be punished. This is such a bedrock of neoclassical economics that it's a tautology: "market power" exists where companies can "set prices"; and to "set prices," you need "market power."

Prices are the blood cells of the market, shuttling nutrients (in the form of "information") around the sprawling colony organism composed of all the buyers, sellers, producers, consumers, intermediaries and other actors. Together, the components of this colony organism all act on the information contained in the "price signals" to pursue their own self-interest. Each self-interested action puts more information into the system, triggering more action. Together, price signals and the actions they evince eventually "discover" the price, an abstraction that is yanked out of the immaterial plane of pure ideas and into our grubby, physical world, causing mines to re-open, shipping containers and pipelines to spark to life, factories to retool, trucks to fan out across the nation, retailers to place ads and hoist SALE banners over their premises, and consumers to race to those displays and open their wallets.

When prices are "distorted," all of this comes to naught. During the notorious "socialist calculation debate" of 1920s Austria, right-wing archdukes of religious market fundamentalism, like Von Hayek and Von Mises, trounced their leftist opponents, arguing that the market was the only computational system capable of calculating how much of each thing should be made, where it should be sent, and how much it should be sold for.

Attempts to "plan" the economy – say, by subsidizing industries or limiting prices – may be well-intentioned, but they broke the market's computations and produced haywire swings of both over- and underproduction. Later, the USSR's planned economy did encounter these swings. These were sometimes very grave (famines that killed millions) and sometimes silly (periods when the only goods available in regional shops were forks, say, creating local bubbles in folk art made from forks).

Unplanned markets do this too. Most notoriously, capitalism has produced a vast oversupply of carbon-intensive goods and processes, and a huge undersupply of low-carbon alternatives, bringing human civilization to the brink of collapse. Not only have capitalism's price signals failed to address this existential crisis to humans, they have also sown the seeds of their own ruin – the market computer's not going to be getting any "price signals" from people as they drown in floods or roast to death on sidewalks that deliver second-degree burns to anyone who touches them:

https://www.fastcompany.com/91151209/extreme-heat-southwest-phoenix-surface-burns-scorching-pavement-sidewalks-pets

For market true believers, these failures are just evidence that regulation is distorting markets, and that the answer is more unregulated markets to infuse the computer with more price signals. When it comes to carbon, the problem is that producers are "producing negative externalities" (that is, polluting and sticking us with the bill). If we can just get them to "internalize" those costs, they will become "economically rational" and switch to low-carbon alternatives.

That's the theory behind the creation and sale of carbon credits. Rather than ordering companies to stop risking civilizational collapse and mass extinction, we can incentivize them to do so by creating markets that reward clean tech and punish dirty practices. The buying and selling of carbon credits is supposed to create price signals reflecting the existential risk to the human race and the only habitable planet known to our species, which the market will then "bring into equilibrium."

Unfortunately, reality has a distinct and unfair leftist bias. Carbon credits are a market for lemons. The carbon credits you buy to "offset" your car or flight are apt to come from a forest that has already burned down, or that had already been put in a perpetual trust as a wildlife preserve and could never be logged:

https://pluralistic.net/2022/03/18/greshams-carbon-law/#papal-indulgences

Carbon credits produce the most perverse outcomes imaginable. For example, much of Tesla's profitability has been derived from the sale of carbon credits to the manufacturers of the dirtiest, most polluting SUVs on Earth; without those Tesla credits, those SUVs would have been too expensive to sell, and would not have existed:

https://pluralistic.net/2021/11/24/no-puedo-pagar-no-pagara/#Rat

What's more, carbon credits aren't part of an "all of the above" strategy that incorporates direct action to prevent our species' downfall. These market solutions are incompatible with muscular direct action, and if we do credits, we can't do other stuff that would actually work:

https://pluralistic.net/2023/10/31/carbon-upsets/#big-tradeoff

Even though price signals have repeatedly proven themselves to be an insufficient mechanism for producing "efficient" or even "survivable" outcomes, they remain the uppermost spiritual value in the capitalist pantheon. Even through the last 40 years of unrelenting assaults on antitrust and competition law, the one form of corporate power that has remained both formally and practically prohibited is "pricing power."

That's why the DoJ was able to block tech companies and major movie studios from secretly colluding to suppress their employees' wages, and why those employees were able to get huge sums out of their employers:

https://en.wikipedia.org/wiki/High-Tech_Employee_Antitrust_Litigation

It's also why the Big Six (now Big Five) publishers and Apple got into so much trouble for colluding to set a floor on the price of ebooks:

https://en.wikipedia.org/wiki/United_States_v._Apple_(2012)

When it comes to monopoly, even the most Bork-pilled, Manne-poisoned federal judges and agencies have taken a hard line on price-fixing, because "distortions" of prices make the market computer crash.

But despite this horror of price distortions, America's monopolists have found so many ways to manipulate prices. Last month, The American Prospect devoted an entire issue to the many ways that monopolies and cartels have rigged the prices we pay, pushing them higher and higher, even as our wages stagnated and credit became more expensive:

https://prospect.org/pricing

For example, there's the plague of junk fees (AKA "drip pricing," or, if you're competing to be first up against the wall come the revolution, "ancillary revenue"), everything from baggage fees from airlines to resort fees at hotels to the fee your landlord charges if you pay your rent by check, or by card, or in cash:

https://pluralistic.net/2024/06/07/drip-drip-drip/#drip-off

There's the fake transparency gambit, so beloved of America's hospitals:

https://pluralistic.net/2024/06/13/a-punch-in-the-guts/#hayek-pilled

The "greedflation" that saw grocery prices skyrocketing, which billionaire grocery plutes blamed on covid stimulus checks, even as they boasted to their shareholders about their pricing power:

https://prospect.org/economy/2024-06-12-war-in-the-aisles/

There's the tens of billions the banks rake in with usurious interest rates, far in excess of the hikes to the central banks' prime rates (which are, in turn, justified in light of the supposed excesses of covid relief checks):

https://prospect.org/economy/2024-06-11-what-we-owe/

There are the scams that companies like Amazon pull with their user interfaces, tricking you into signing up for subscriptions or upsells, which they grandiosely term "dark patterns," but which are really just open fraud:

https://prospect.org/economy/2024-06-10-one-click-economy/

There are "surge fees," which are supposed to tempt more producers (e.g. Uber drivers) into the market when demand is high, but which are really just an excuse to gouge you – like when Wendy's threatens to surge-price its hamburgers:

https://prospect.org/economy/2024-06-07-urge-to-surge/

And then there's surveillance pricing, the most insidious and profitable way to jack up prices. At its core, surveillance pricing uses nonconsensually harvested private information to inform an algorithm that reprices the things you buy – from lattes to rent – in real-time:

https://pluralistic.net/2024/06/05/your-price-named/#privacy-first-again

Companies like Plexure – partially owned by McDonald's – boast that they can use surveillance data to figure out what your payday is and then hike the price of the breakfast sandwich or after-work soda you buy every day.

Like every bad pricing practice, surveillance pricing has its origins in the aviation industry, which invested early on and heavily in spying on fliers to figure out how much they could each afford for their plane tickets and jacking up prices accordingly. Architects of these systems then went on to found companies like Realpage, a data-brokerage that helps landlords illegally collude to rig rent prices.

Algorithmic middlemen like Realpage and ATPCO – which coordinates price-fixing among the airlines – are what Dan Davies calls "accountability sinks." A cartel sends all its data to a separate third party, which then compares those prices and tells everyone how much to jack them up in order to screw us all:

https://profilebooks.com/work/the-unaccountability-machine/

These price-fixing middlemen are everywhere, and they predate the boom in commercial surveillance. For example, Agri-Stats has been helping meatpackers rig the price of meat for 40 years:

https://pluralistic.net/2023/10/04/dont-let-your-meat-loaf/#meaty-beaty-big-and-bouncy

But when you add commercial surveillance to algorithmic pricing, you get a hybrid more terrifying than any cocaine-sharks (or, indeed, meth-gators):

https://www.nbcnews.com/news/us-news/tennessee-police-warn-locals-not-flush-drugs-fear-meth-gators-n1030291

Apologists for these meth-gators insist that surveillance pricing's true purpose is to let companies offer discounts. A streaming service can't afford to offer $0.99 subscriptions to the poor because then all the rich people would stop paying $19.99. But with surveillance pricing, every customer gets a different price, titrated to their capacity to pay, and everyone wins.

But that's not how it cashes out in the real world. In the real world, rich people who get ripped off have the wherewithal to shop around, complain effectively to a state AG, or punish companies by taking their business elsewhere. Meanwhile, poor people aren't just cash-poor, they're also time-poor and political influence-poor.

When the dollar store duopoly forces all the mom-and-pop grocers in your town out of business with predatory pricing, creating food deserts that only they serve, no one cares, because state AGs and politicians don't care about people who shop at dollar stores. Then, the dollar stores can collude with manufacturers to get shrunken "cheater sized" products that sell for a dollar, but cost double or triple the grocery store price by weight or quantity:

https://pluralistic.net/2023/03/27/walmarts-jackals/#cheater-sizes

Yes, fliers who seem to be flying on business (last-minute purchasers who don't have a Saturday stay) get charged more than people whose purchase makes them seem to be someone flying away for a vacation. But that's only because aviation prices haven't yet fully transitioned to surveillance pricing. If an airline can correctly calculate that you are taking a trip because you're a grad student who must attend a conference in order to secure a job, and if they know precisely how much room you have left on your credit card, they can charge you everything you can afford, to the cent.

Your ability to resist pricing power isn't merely a function of a company's market power – it's also a function of your political power. Poor people may have less to steal, but no one cares when they get robbed:

https://pluralistic.net/2024/07/19/martha-wright-reed/#capitalists-hate-capitalism

So surveillance pricing, supercharged by algorithms, represents a serious threat to "prices," which is the one thing that the econo-religious fundamentalists of the capitalist class value above all else. That makes surveillance pricing low-hanging fruit for regulatory enforcement: a bipartisan crime that has few champions on either side of the aisle.

Cannily, the FTC has just declared war on surveillance pricing, ordering eight key players in the industry (including capitalism's arch-villains, McKinsey and JPMorgan Chase) to turn over data that can be used to prosecute them for price-fixing within 45 days:

https://www.ftc.gov/news-events/news/press-releases/2024/07/ftc-issues-orders-eight-companies-seeking-information-surveillance-pricing

As American Prospect editor-in-chief David Dayen notes in his article on the order, the FTC is doing what he and his journalistic partners couldn't: forcing these companies to cough up internal data:

https://prospect.org/economy/2024-07-24-ftc-opens-surveillance-pricing-inquiry/

This is important, and not just because of the wriggly critters the FTC will reveal as they use their powers to turn over this rock. Administrative agencies can't just do whatever they want. Long before the agencies were neutered by the Supreme Court, they had strict rules requiring them to gather evidence, solicit comment and counter-comment, and so on, before enacting any rules:

https://pluralistic.net/2022/10/18/administrative-competence/#i-know-stuff

Doubtless, the Supreme Court's Loper decision (which overturned "Chevron deference" and cut off the agencies' power to take actions that they don't have detailed, specific authorization to take) will embolden the surveillance pricing industry to take the FTC to court on this. It's hard to say whether the courts will find in the FTC's favor. Section 6(b) of the FTC Act clearly lets the FTC compel these disclosures as part of an enforcement action, but they can't start an enforcement action until they have evidence, and through the whole history of the FTC, these kinds of orders have been a common prelude to enforcement.

One thing this has going for it is that it is bipartisan: all five FTC commissioners, including both Republicans (including the Republican who votes against everything) voted in favor of it. Price gouging is the kind of easy-to-grasp corporate crime that everyone hates, irrespective of political tendency.

In the Prospect piece on Ticketmaster's pricing scam, Dayen and Groundwork's Lindsay Owens called this the "Age of Recoupment":

https://pluralistic.net/2024/06/03/aoi-aoi-oh/#concentrated-gains-vast-diffused-losses

For 40 years, neoclassical economics' focus on "consumer welfare" meant that companies could cheat and squeeze their workers and suppliers as hard as they wanted, so long as prices didn't go up. But after 40 years, there's nothing more to squeeze out of workers or suppliers, so it's time for the cartels to recoup by turning on us, their customers.

They believe – perhaps correctly – that they have amassed so much market power through mergers and lobbying that they can cross the single bright line in neoliberal economics' theory of antitrust: price-gouging. No matter how sincere the economics profession's worship of prices might be, it still might not trump companies that are too big to fail and thus too big to jail.

The FTC just took an important step in defense of all of our economic wellbeing, and it's a step that even the most right-wing economist should applaud. They're calling the question: "Do you really think that price-distortion is a cardinal sin? If so, you must back our play."

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

https://clarionwriteathon.com/members/profile.php?writerid=293388

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/07/24/gouging-the-all-seeing-eye/#i-spy

#pluralistic#gouging#ftc#surveillance pricing#dynamic pricing#efficient market hypothesis brain worms#administrative procedures act#chevron deference#lina khan#price gouging


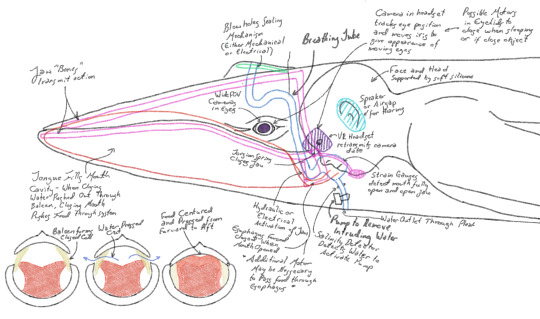

Head Design Concept

Whale suit head concept. This covers most of the basic ideas within the idea for how the head of the whalesuit will look. It will be by far the most mechanically and electrically complex part of the suit. Its three primary functions can be summed up in - breathing, vision, and food consumption (water will be taken in primarily through food and gelatine rather than separate liquid).

One thing that is particularly curious for me is to what extent should I rely on electrical equipment versus mechanical equipment? Electrical equipment is nice because it can have a lower complexity of the individual parts, have greater redundancy, allow a greater variety of sensing features for safety, but also greater levels of essentially animation - motions that make the suit feel more alive - it is a complex prosthetic to reflect the whale inside and fix its body so it can be a whale again, not simply a costume. For me having as much animation as possible is desirable. When I look at a person or something having the eye move to reflect that so people outside can see what I am looking at does bring a lot more life to my body. Similarly for small things like blinking or closing my eyes or more variety in how my blowholes move at different points or respond to touch or a finger getting close to an eye. The total amount of animation on a whale, especially a mysticete, is not much, especially when compared to a human body and so maximizing what can be done is desirable to make myself appear as whale as possible. Perhaps I could even add a system that might allow me to make my twanging calls.

However, it also comes with problems, namely that it is continuously in salt water and so there is a risk of ingress, but more significantly power requirements. How much power will each thing require? And how is that power stored? Presumably we would use batteries, and given how much volume we have available there is plenty of room for batteries. However, those either need to be changed out or charged, which could limit our time in the water. One possibility could be to use our swimming motion to generate power. I think in the end it will really depend on how much power is actually needed as well as the specific use case: if I can live in a park, then the power concerns really just depend on how often I come out of the suit for a few moments.

Still, this does give me more ideas and a firmer sense of what things might look like for a whalesuit. From my perspective within the suit, I could feel when I surfaced. I would see the water around me, feeling entirely immersed in what is essentially a VR setup (though it does not have to have the heavy computing component). When I opened my mouth I could catch food, even if I would not feel it immediately on my tongue or in my mouth. My body would be supported entirely by the water, though much of my sense of touch would be dulled by the thickness of silicone between my biological body and the water. But within the prosthetic, and with my medicine gone, and perception through whale eye, my perception of my body will merge to the prosthetic allowing me to genuinely be whale again, something taken from me so long ago, and I will swim again - forever.

~ Kala

#therian#clinical zoanthropy#clinical lycanthropy#physical nonhuman#transspecies#species transition#whale suit

44 notes

Text

Yes! I did indeed create a theoretical formula for my time-travel theory that I ALSO invented for a South Park fanfic! Why do you ask?

Jokes aside, I think it’s time for me to break down the formula to explain what all these symbols mean and tell you my thought process while making this. For starters: I’m not a scientist, astrophysicist, mathematician, or smart enough person to know exactly what the hell I’m even saying. I was only able to get a solid grasp on what this all means because of my smarter friends, Google searches, and physics-related YouTube video essays. Anyways….

Realistically, in order to make something like this work in real life, you’re going to need to know AND have these four major components:

Understand/study everything you know about gravity and the theory of relativity.

Solve the creation of a wormhole and be able to control it within an enclosed space

Create a “Blind Filter” to prevent a total collapse on neighboring grids/columns

Have tools, devices, and technology advanced enough to: detect anomalies and measure temporal signatures in spacetime, create maps of gravitational fields, generate copious amounts of energy to keep a wormhole stable during transit, and have quantum computers to perform complex calculations for navigation.

If you don’t have any of these things, time travel won’t be possible 😔🤙. But, if you do… well.. perhaps it wouldn’t hurt to try! Anyways, let’s start with the first equation!

Wormhole Stabilization: The main purpose of this equation is purely the stabilization of a wormhole’s throat to prevent a total collapse! Mind you, wormholes have never been discovered to be an actual thing (it’s all purely a theoretical possibility), unlike black holes!!! Throughout my research, I took references from the Einstein field equations and the Morris-Thorne wormhole metric in general relativity! Like I mentioned, we won’t know how the equation truly works because we don’t have an actual point of reference, therefore, this equation could be a bunch of hippy dippy bologna!! [*So are all the equations I will mention btw*]. This equation can be rewritten depending on how wormholes would actually work in a non-theoretical sense… BUT ANYWAY, if it were to work, this formula could be something that keeps a wormhole stable in order to be traversable through time!!
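For reference, these are the standard textbook forms of the two real results named above, not the fic's own stabilization formula (which isn't reproduced in the text here). The Einstein field equations relate spacetime curvature to energy and momentum, and the Morris-Thorne metric describes a static, spherically symmetric traversable wormhole, where $\Phi(r)$ is the redshift function and $b(r)$ is the shape function (the throat sits where $b(r) = r$):

$$
G_{\mu\nu} + \Lambda g_{\mu\nu} = \frac{8\pi G}{c^{4}}\, T_{\mu\nu}
$$

$$
ds^{2} = -e^{2\Phi(r)}\, c^{2}\, dt^{2} + \frac{dr^{2}}{1 - b(r)/r} + r^{2}\left(d\theta^{2} + \sin^{2}\theta \, d\varphi^{2}\right)
$$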

Grid/Column & Gravity: Yeah, pretty self explanatory. Because I had to create a formula that needed to be connected to the One-Tab Guide, the purpose of this formula is to FIND a grid and column by combining general relativity and gravity. There are so many theoretical grids, and the columns are even harder to find since they’re interwoven with other grids/columns!

Spacetime Interval Adjustment: Also pretty self explanatory! This calculates the spacetime distance between the current and targeted timelines. It’s specifically modified for multiversal travel! See, the one-tab guide isn’t just a formula to help with time-travel, but it’s also a formula that can allow multiverse travel across multiple dimensions!!! Wow!!! Personally, I feel like multiversal travel would be a whole lot easier to accomplish with this formula compared to the possibility of time traveling in your own column. You won’t really have to worry about exact calculations because you basically just pick and choose which area outside of the specialized grid you wanna go 🤷‍♂️! Though, if you want to go to a time and place in a certain multiverse where YOU exist, you’ll probably have to be more specific with that calculation in order to find your parallel self (if said universe even has one).

Temporal Signature Correction: When you want a certain location marked, you use a geographic coordinate system! And with parallel travel, it’s a Temporal Signature Correction! This basically ensures that a traveler goes to the correct timeline by using a unique “temporal signature” (St). No, I still don’t understand quantum mechanics that well (the MCU has ruined the word “quantum” for me). And yes, I still get confused when I think about entropy for too long. Best way to think about it within the context of the one-tab is that entropy is the universe’s obsession with making things messy. Each grid could have a unique entropy value to help distinguish between them, and higher entropy grids could be more chaotic relative to the “anchor of reference” of a traveler. Which means if someone messes with time, entropy might fight back by making timelines chaotic or causing a fissure in a grid.

Proper Time Interval: Basically, to travel to a specific timeline, the time machine needs to solve the proper time interval required to navigate to that targeted timeline. Usually if you miscalculate, you could cause paradoxes, universal fissures, a collapse in the grid, etc. But in my fic, Kenny created an entirely different machine (a sort of blind filter) to prevent any impact on the multiverse. But, if you don’t have that machine, then this formula is very important in that sense.

—

Anyways…. this probably won’t make sense to anyone except me. But I thought it would be cool to have some fictional formula with cool little symbols for this silly little theory. Maybe when our technology is advanced enough, this could all be tested and disproven 🙂‍↕️. Now go read (and reread) my bunny fic! Please…

#iwmoy#fanfiction#science fiction#fic analysis#south park#ao3 fanfic#this will definitely not make sense to anyone#but it’s fun either way

20 notes

Text

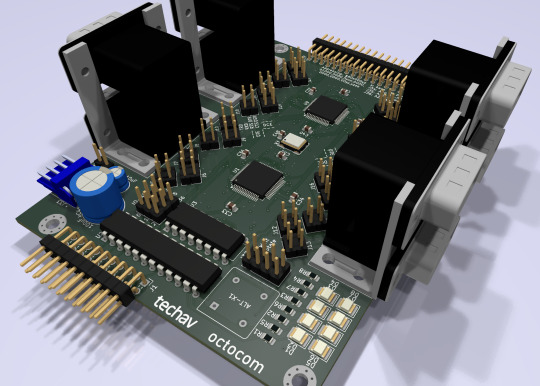

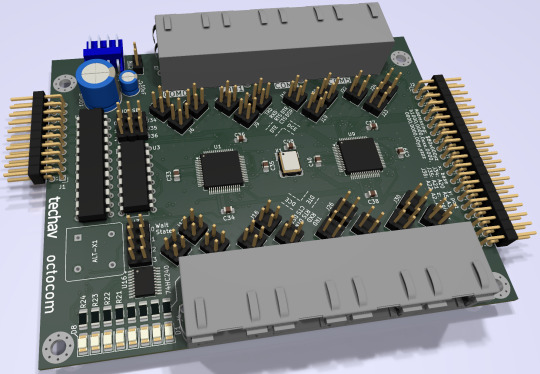

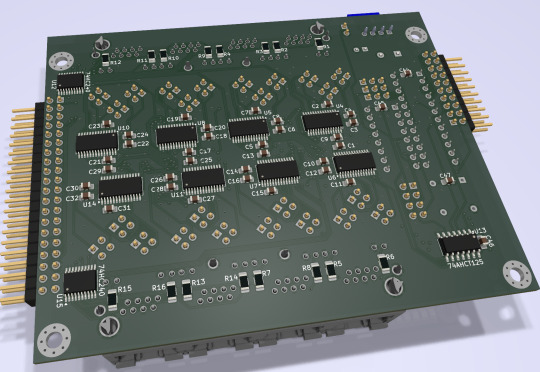

Sharing a Computer with More Friends

A few months ago I built an I/O expansion board for my homebrew 68030 project with a 4-port serial card to go with it, and got BASIC running for four simultaneous users. It worked, but not as well as I had hoped. I wanted to be able to run two of those serial cards to support 8 total users, but it had proven unstable enough that with just the one card I had to slow down the whole system to 8MHz.

So I designed a new serial card.

I had previously been running this computer without any issues at 32MHz with a mezzanine card with FPU & IDE as well as a video card. The main board by itself can clear 56MHz. Having to go all the way down to 8MHz just didn't sit well with me. I want this machine to run as fast as possible for its 8 users.

I put extra time into reviewing worst-case timing for all components and graphing out how signals would propagate. The 16C554 quad UARTs I'm designing around are modern parts that can handle pretty fast bus speeds themselves — easily up to 50MHz with no wait states on the 68030 bus — assuming all the glue logic can get out of the way fast enough.

Signal propagation delays add up quickly.

My first draft schematic used discrete 74-series logic for chip selection, signal decoding, timing, etc. At slower bus speeds this wouldn't have been a problem. But I want this thing to run as fast as possible. By the time critical signals had made it through all those logic gates, I was looking at already being well into one wait state by the time the UART would see a 50MHz bus cycle begin.

I needed something faster. I was also running low on space on the board for all the components I needed. The obvious answer was programmable logic. I settled on the ATF22V10 as a good compromise of speed, size, availability, and programmability. It's available in DIP with gate delays down to 7ns. Where discrete gates were necessary, I selected the fastest parts I could. The final design I came up with showed a worst case timing that would only need one wait state at 50MHz and none for anything slower.
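To make the timing argument concrete, here is a rough sketch of the arithmetic. It is a simplified model, not my actual timing analysis: `path_delay_ns` and `zero_wait_clocks` are hypothetical parameters, and the 70ns figure is an illustrative number chosen only to reproduce the "one wait state at 50MHz, none below" result. It assumes a zero-wait bus cycle leaves the peripheral about three clock periods, and that every wait state adds one more.

```python
import math


def clock_period_ns(freq_mhz):
    """Length of one bus clock in nanoseconds."""
    return 1000.0 / freq_mhz


def wait_states_needed(freq_mhz, path_delay_ns, zero_wait_clocks=3.0):
    """Wait states required under the simplified model.

    path_delay_ns    -- hypothetical total of glue-logic delay plus
                        device access time
    zero_wait_clocks -- assumed number of clock periods available to
                        the peripheral in a cycle with no wait states
    """
    period = clock_period_ns(freq_mhz)
    shortfall = path_delay_ns - zero_wait_clocks * period
    if shortfall <= 0:
        return 0
    # Each wait state stretches the cycle by one full clock period.
    return math.ceil(shortfall / period)


if __name__ == "__main__":
    HYPOTHETICAL_PATH_DELAY_NS = 70.0  # illustrative, not measured
    for mhz in (8, 16, 25, 32, 40, 50):
        ws = wait_states_needed(mhz, HYPOTHETICAL_PATH_DELAY_NS)
        print(f"{mhz:>2} MHz: period {clock_period_ns(mhz):6.2f} ns, wait states {ws}")
```

The useful part is the shape of the calculation: the clock period shrinks as the bus speeds up, but the gate delays don't, so every nanosecond saved in glue logic matters most at the top end.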

It ended up being a tight fit, but I was able to make it work on a 4-layer board within the same footprint as my main board, putting some components on the back side. (It may look like a bunch of empty space, but there's actually a lot going on running full RS232 with handshaking for 8 ports.)

New problem. I had blown my budget for the project. As much as I love those stacked DE9 connectors, they're expensive. And there's no getting around the $10 pricetag for each of those quad UARTs. Even using parts on-hand where possible, I was looking at a hefty Mouser order.

[jbevren] suggested using ganged RJ45 connectors with the Cisco pinout instead of stacked DE9, to save space & cut costs. [Chartreuse] suggested buffering the TTL serial TX/RX signals to drive the LEDs that are frequently included on PCB-mount RJ45 connectors. Both great ideas. I was able to cut 20% off my parts order and add some nice diagnostic lights to the design.

Two weeks later, I received five new PCBs straight from China. I of course wasted no time starting to assemble one.

I really set myself up for a challenge on this one. I learned to solder some 25 years ago and have done countless projects in that time. But I think this might be the most compact, most heavily populated, most surface mount board I've ever assembled myself. (There are 56 size 0805 (that's 2.0x1.25mm) capacitors alone!)

After a few hours soldering, I had enough assembled to test the first serial port. If the first port worked then the other three on that chip should work too, and there's a great chance the other chip would work as well.

And it did work! After some poking around with the oscilloscope to make sure nothing was amiss, I started up the computer and it ran just fine at 8MHz.

And at 16MHz.

And at 25MHz.

And at 32MHz.

And at 40MHz.

And almost at 50MHz!

Remember what I said about my timing graphs showing one wait state for 50MHz? The computer actually booted up and ran just fine at 50MHz. The problem was when I tried typing in a BASIC program certain letters were getting switched around, and try as I might, BASIC just refused to 'RQN' my program. It was pretty consistently losing bit 3, likely from that signal having to travel just a tiny bit farther than the others. A problem that will probably be resolved with an extra wait state.
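The 'RUN' to 'RQN' swap is a nice illustration of a single dropped data bit. A quick sketch (the helper name `differing_bits` is just for illustration) shows which bit separates the two letters in ASCII. Counting from zero it is bit 2, which is the third bit if you number from one.

```python
def differing_bits(a, b):
    """Return the zero-indexed bit positions where two characters differ."""
    diff = ord(a) ^ ord(b)
    return [bit for bit in range(8) if diff & (1 << bit)]


if __name__ == "__main__":
    print(f"'U' = {ord('U'):08b}")
    print(f"'Q' = {ord('Q'):08b}")
    print("differing bits (zero-indexed):", differing_bits("U", "Q"))
    # Clearing that single bit in 'U' gives 'Q'.
    print(chr(ord("U") & ~(1 << 2)))
```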

Good enough for a first test! A few hours more and I finished assembling the card.

I did have some problems with cleaning up flux off the board, and I had to touch up a few weak solder joints, but so far everything seems to be working. I've updated my little multi-user kernel to run all 8 users from this new card and it's running stable at 40MHz.

I need to update my logic on the 22V10 to fix a bug in the wait state generator. I would love to see this thing actually running at 50MHz — a 25% overclock for the 40MHz CPU I am currently running. I also want to expand my little kernel program to add some new features like the ability to configure the console serial ports and maybe even load programs from disk.

I hope to bring this machine with a collection of terminals and modems this June to VCF Southwest 2025 for an interactive exhibit that can be dialed into from other exhibits at the show.