#data modeling in python

Text

What is Data Science? A Comprehensive Guide for Beginners

In today’s data-driven world, the term “Data Science” has become a buzzword across industries. Whether it’s in technology, healthcare, finance, or retail, data science is transforming how businesses operate, make decisions, and understand their customers. But what exactly is data science? And why is it so crucial in the modern world? This comprehensive guide is designed to help beginners understand the fundamentals of data science, its processes, tools, and its significance in various fields.
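The workflow a guide like this covers (cleaning, exploring, modeling, evaluating) fits in a few lines of plain Python. This is only an illustration under made-up assumptions: the dataset is invented, the model is an ordinary least-squares line, and the error is measured on the training data to keep it short. A real project would typically use libraries such as pandas or scikit-learn and evaluate on held-out data.

```python
from statistics import mean

# Made-up raw data for illustration: (hours_studied, exam_score); None marks a missing value.
raw = [(1.0, 52.0), (2.0, 55.0), (None, 60.0), (3.0, 61.0),
       (4.0, 64.0), (5.0, None), (6.0, 70.0)]

# Cleaning: drop any record with a missing value.
clean = [(x, y) for x, y in raw if x is not None and y is not None]
xs = [x for x, _ in clean]
ys = [y for _, y in clean]

# Exploration: quick summary statistics.
print(f"{len(clean)} records, mean hours {mean(xs):.2f}, mean score {mean(ys):.2f}")


# Modeling: fit score = slope * hours + intercept by ordinary least squares.
def fit_line(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


slope, intercept = fit_line(xs, ys)

# Evaluation: mean absolute error (on the training data here, only to keep the sketch short).
mae = mean(abs(slope * x + intercept - y) for x, y in zip(xs, ys))
print(f"score = {slope:.2f} * hours + {intercept:.2f}, MAE {mae:.2f}")
```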

#Data Science#Data Collection#Data Cleaning#Data Exploration#Data Visualization#Data Modeling#Model Evaluation#Deployment#Monitoring#Data Science Tools#Data Science Technologies#Python#R#SQL#PyTorch#TensorFlow#Tableau#Power BI#Hadoop#Spark#Business#Healthcare#Finance#Marketing

0 notes

Photo

Document-Oriented Agents: A Journey with Vector Databases, LLMs, Langchain, FastAPI, and Docker

Leveraging ChromaDB, Langchain, and ChatGPT: Enhanced Responses and Cited Sources from Large Document Databases

Continue reading on Towards Data Science » https://towardsdatascience.com/document-oriented-agents-a-journey-with-vector-databases-llms-langchain-fastapi-and-docker-be0efcd229f4
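The core idea behind answers with cited sources is retrieval: embed the question, find the most similar stored chunks, and hand them to the LLM together with their source labels. Below is a toy sketch of that step in plain Python. The three-dimensional vectors and document names are made up; in the setup the article describes, embeddings would come from an embedding model and be stored and searched by ChromaDB, with Langchain wiring the pieces together. None of those libraries' actual APIs are shown here.

```python
import math

# Made-up chunks: source label -> (embedding vector, text).
# A real pipeline would get embeddings from a model and store them in a vector database.
chunks = {
    "report.pdf#p3": ([0.9, 0.1, 0.0], "Quarterly revenue grew in the retail segment."),
    "manual.pdf#p12": ([0.1, 0.8, 0.3], "Reset the device by holding the power button."),
    "faq.md#returns": ([0.2, 0.1, 0.9], "Returns are accepted within 30 days."),
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vec, k=2):
    """Return the k most similar chunks as (source, text) pairs."""
    ranked = sorted(chunks.items(), key=lambda item: cosine(query_vec, item[1][0]), reverse=True)
    return [(source, text) for source, (_, text) in ranked[:k]]


def build_prompt(question, query_vec):
    """Put retrieved text in the prompt, tagged with its source so the answer can cite it."""
    context = "\n".join(f"[{source}] {text}" for source, text in retrieve(query_vec))
    return f"Answer using only these sources and cite them:\n{context}\n\nQuestion: {question}"


# In practice the query vector would be the embedding of the question itself.
print(build_prompt("How long do I have to return an item?", [0.15, 0.05, 0.95]))
```

Because the source label travels with each chunk into the prompt, the model's answer can point back to where its information came from.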

#python#artificial-intelligence#data-science#machine-learning#large-language-models#Luís Roque#Artificial Intelligence on Medium

0 notes

Text

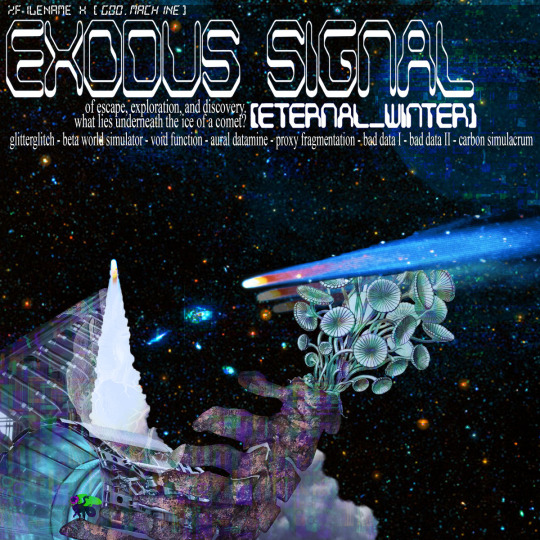

research & development is ongoing

since using jukebox for sampling material on albedo, i've been increasingly interested in using ai ethically, as a tool i can incorporate more into my own artwork. recently i've been experimenting with "commoncanvas", a stable diffusion model trained entirely on works in the creative commons. though i do not believe legality and ethics are equivalent, this gives me peace of mind that all of the training data was used consensually under the terms of the creative commons license. here's the paper on it for those who are curious! shoutout to @reachartwork for the inspiration & her informative posts about her process!

part 1: overview

i usually post finished works, so today i want to go more in depth & document the process of experimenting with a new medium. this is going to be a long and image-heavy post; most of it will be under the cut & i'll do my best to keep all the image descriptions concise.

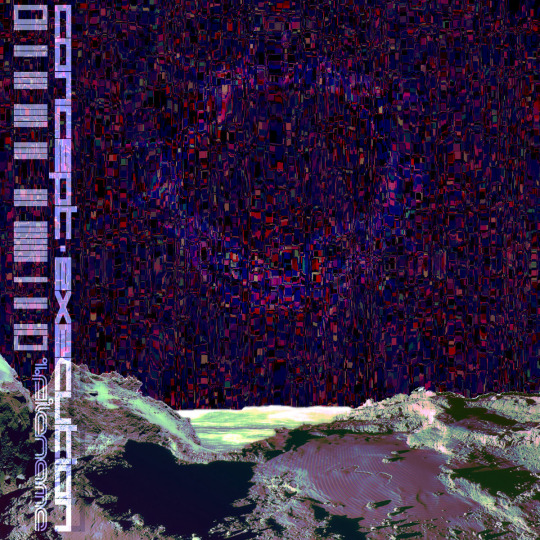

for a point of reference, here is a digital collage i made a few weeks ago for the album i just released (shameless self promo), using photos from wikimedia commons and a render of a 3d model i made in blender:

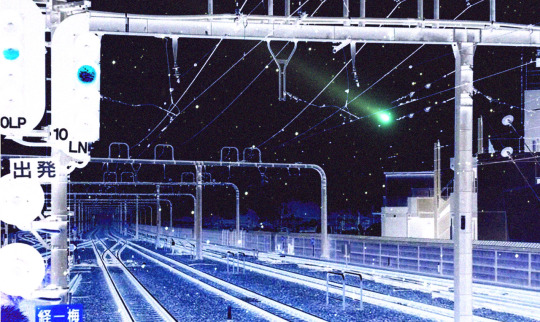

and here are two images i made with the help of common canvas (though i did a lot of editing and post-processing, more on that process in a future post):

more about my process & findings under the cut, so this post doesn't get too long:

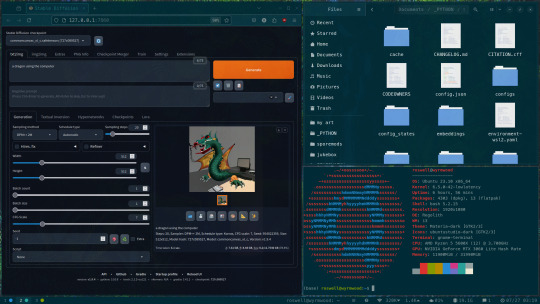

quick note for my setup: i am running this model locally on my own machine (rtx 3060, ubuntu 23.10), using the automatic1111 web ui. if you are on the same version of ubuntu as i am, note that you will probably have to build python 3.10.6 yourself. be sure to use 'make altinstall' instead of 'make install' (so the new build doesn't replace the system's python3), and change the line in the webui to use 'python3.10' instead of 'python3'. just mentioning this here because nobody else i could find had this exact problem and i had to figure it out myself.

part 2: initial exploration

all the images i'll be showing here are the raw outputs of the prompts given, with no retouching/regenerating/etc.

so: commoncanvas has 2 different types of models, the "C" and "NC" models, trained on their database of works under the CC Commercial and Non-Commercial licenses, respectively (i think the NC dataset also includes the commercial license works, but i may be wrong). the NC model is larger, but both have their unique strengths:

"a cat on the computer", "C" model

"a cat on the computer", "NC" model

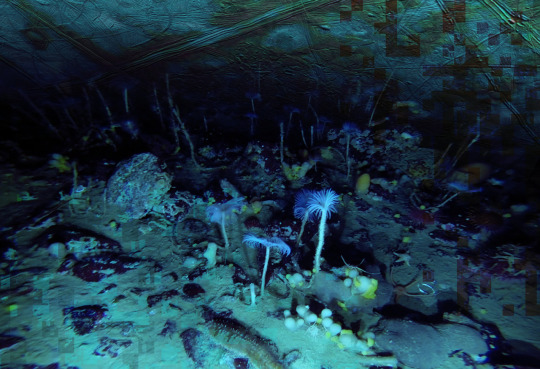

they both take the same amount of time to generate (17 seconds for four 512x512 images on my 3060). if you're really looking for that early ai jank, go for the commercial model. one thing i really like about commoncanvas is that it's really good at reproducing the styles of photography i find most artistically compelling: photos taken by scientists and amateurs. (the following images will be described in the captions to avoid redundancy):

"grainy deep-sea rover photo of an octopus", "NC" model. note the motion blur on the marine snow, greenish lighting and harsh shadows here, like you see in photos taken by those rover submarines that scientists use to take photos of deep sea creatures (and less like ocean photography done for purely artistic reasons, which usually has better lighting and looks cleaner). the anatomy sucks, but the lighting and environment are perfect.

"beige computer on messy desk", "NC" model. the reflection of the flash on the screen, the reddish-brown wood, and the awkward angle and framing are all reminiscent of a photo taken by a forum user with a cheap digital camera in 2007.

so the noncommercial model is great for vernacular and scientific photography. what's the commercial model good for?

"blue dragon sitting on a stone by a river", "C" model. it's good for bad CGI dragons. whenever i request dragons from the commercial model, i either get things that look like photographs of toys/statues, or i get gamecube type CGI, and i love it.

here are two little green freaks i got while trying to refine a prompt to generate my fursona. (i never succeeded, and i forget the exact prompt i used). these look like spore creations and the background looks like a bryce render. i really don't know why there's so much bad cgi in the datasets and why the model loves going for cgi specifically for dragons, but it got me thinking...

"hollow tree in a magical forest, video game screenshot", "C" model

"knights in a dungeon, video game screenshot", "C" model

i love the dreamlike video game environments and strange CGI characters it produces-- it hits that specific era of video games that i grew up with super well.

part 3: use cases

if you've seen any of the visual art i've done to accompany my music projects, you know that i love making digital collages of surreal landscapes:

(this post is getting image heavy so i'll wrap up soon)

i'm interested in using this technology more, not as a replacement for my digital collage art, but along with it as just another tool in my toolbox. and of course...

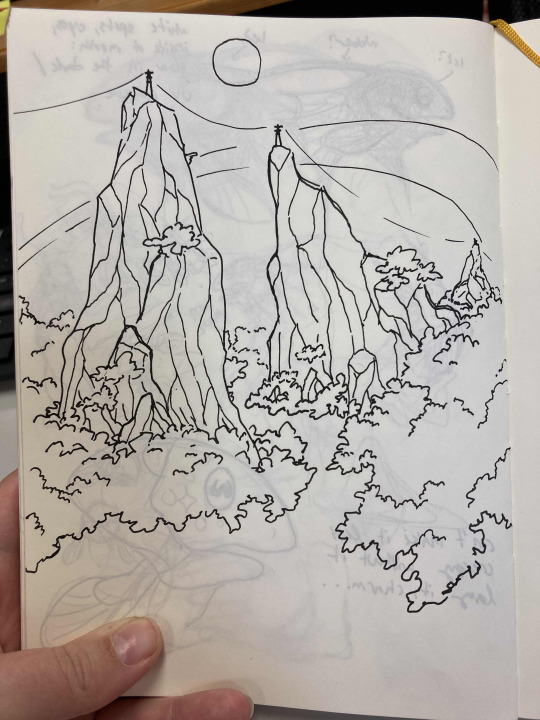

... this isn't out of lack of skill to imagine or draw scifi/fantasy landscapes.

thank you for reading such a long post! i hope you got something out of this post; i think it's a good look into the "experimentation phase" of getting into a new medium. i'm not going into my post-processing / GIMP stuff in this post because it's already so long, but let me know if you want another post going into that!

good-faith discussion and questions are encouraged but i will disable comments if you don't behave yourselves. be kind to each other and keep it P.L.U.R.

201 notes

Text

13/100 days of productivity

i am slowly getting my head above water, not only by getting things done but by realizing people don't secretly hate me (i know but be patient i only realized this yesterday)

academically speaking: python python python different types of regressions different models python python data tables APIs python python pyt*loses her mind*

#studyblr#university#uni#coffee#college#uni student#university student#bookblr#dark academia#information management#information science#library science#study inspiration#study studyspo#study motivation#100 days of productivity#uni inspiration#uni life#food#books#barista#uni motivation#international student#python#coding#data science#student#uni student aesthetic#studyblr aesthetic#uni aesthetic

45 notes

Text

Tierlist part 3: Rust

But first, corrections. As some have pointed out, I of course should have interpreted C++ as an increment. I will note that I model the system as signed integers, putting C at 0, and not as chars, so that the ordering reflects the ranking. Thus C++ increments C but evaluates to the same value as C, putting them both at B tier. This has been corrections. Now Rust. I absolutely love Rust, so it's an easy S tier. The way you structure data with its structs and enums, combined with the trait system, is just a delight to work with. So... Wait. Uhmmm, it seems that I have reached a problem. Due to previous indiscretions, Rust does not trust me anymore and has locked itself away from me. Due to its memory safety there is not really anything I can do, so let's just leave it there for now.

Part 2

40 notes

Note

fwiw, and i have no idea what the artists are doing with it, a lot of the libraries that researchers are currently using to develop deep learning models from scratch are open source and built on python. i'm sure monsanto has its own proprietary models hand crafted to make life as shitty as possible in the name of profit, but for research there are a lot of available resources, library- and dataset-wise, in related fields. It's not my area per se, but i've learnt enough to get by in potentially applying it to my field within science, and largely the bottleneck in research is that the servers and graphics cards you need to train your models at a reasonable pace are of a size you can usually only get from google or amazon or facebook (although some rich asshole private universities in the US can actually afford the kind of server you need. But that's a different issue wrt resource availability for research in the global south. Basically: more money for public universities, for fuck's sake)

Yes, one great thing about software development is that for every commercially closed thing there are open source versions that do better.

The possibilities for science are enormous. Gigantic. Much of modern science is based on handling huge amounts of data no human can process at once. Specially trained models can be key to things such as complex genetics, especially simulating proteomes. They already have been used there to incredible effect, but custom models are hard to make. I think AIs that can be reconfigured for particular cases might change things in a lot of fields forever.

I am concerned, however, about the overconsumption of electronics this might lead to when everyone wants their pet ChatGPT on their PC. But this isn't a thing that started with AI: electronic waste and planned obsolescence are already wasting countless resources in chips just to feed fashion items like iPhones. This is a matter of consumption, and of making computers more modular and longer lasting, as the tools they are. I've also read that models recently developed in China use far fewer resources and could potentially run on common desktop computers, so things might change in as little as 2 years.

25 notes

Text

I'm really struggling here. There are so many things I want and need to be. SO many things I should study, so many career paths I need to take, so many things in life that I need to get to. By studying it all, I'm getting nothing done. How do I get myself together? I need to be able to prioritize what I'd like to study and where I want to be in life, so I'm writing this post to puke it all out and hopefully fix it with a little glitter. I'm making a list and categorizing it with emojis: what I should put on a longer-term pause, what I should put up next, and what I should study now.

Stuff I should study now:
✒️ Python for data analysis and machine learning
✒️ Using statistical models in Python
✒️ JavaScript/React for web development
✒️ Azure AZ-900 exam prep

Stuff I should get to soon but not now:
📜 Data structures & algorithms
📜 A new language

Stuff that would be better to pause for now:
🤎 GMAT, for my future MBA
🤎 Blender, to create 3D images and interactive tools

With things like my GMAT exam prep, I can practice 30 minutes a day or 10 pages a day instead of making it a major focus of my day and missing out on the things I really want to study right now. Thus, it may be better to turn my 150 days of GMAT prep into just 150 days of productivity ☕

I hope you'll understand, and I hope you're also getting to a place where you can truly focus on what you want to focus on in life.

#study blog#studyspo#study motivation#daily journal#studyblr#to do list#coding#chaotic academia#chaotic thoughts#getting my shit together#realistic studyblr#studying#study tips

15 notes

Text

English SDK for Apache Spark

Are you tired of dealing with complex code and confusing commands when working with Apache Spark? Well, get ready to say goodbye to all that hassle! The English SDK for Spark is here to save the day.

#AI#Apache Spark#ChatGPT#code#Coding#compiler#Creative content#Data Frames#databricks apache spark#english#English SDK#generative AI#GPT-4#Large Language Model#LLM#OpenAI#pyspark#Python#SDK#Source code#Spark#Spark Session

0 notes

Text

Your Printer is (Probably) a Snitch

In these bewildering times you might want to put up flyers or posters around a neighborhood, or mail a strongly-worded letter to someone. You might be scared of reprisals and wish to do that sort of thing anonymously, but it turns out that governments would prefer that they be able to track you down anyway.

By the 2000s, a few different innovations had made their way into scanners and printers. One was the EURion constellation, a set of dots that you'll recognize in the constellations on Euro notes or in the distribution of the little numeral 20 speckled around the back of a twenty-dollar bill. Many scanners and copiers are programmed to look for dots in exactly that pattern and refuse to scan the paper that those dots are on. Can't make a copy of it! Can't scan it in. We'll get back to that.

The other innovation is **tracking dots**. The Electronic Frontier Foundation (EFF) estimates that in 2025, basically every color laser printer on the market embeds a barely-visible forensic code on each of its printouts, which encodes information about the printer, including its serial number. (Some codes carry more: the Xerox DocuColor codes the EFF decoded also recorded the date and time of printing.) Maybe you remember Reality Winner, who leaked classified intelligence to journalists? The journalists received high-quality copies (maybe originals) of the documents that were leaked. When officials were able to examine them, they were able to combine regular IT forensics ("how many users printed documents on these dates, to printers of this make & model") with working backwards from the other end, to get a narrow list of suspects.

To defeat those signatures, you either need to use a printer that doesn't embed those features (challenging! The EFF says basically all color laser printers do this now) or you need to be careful to employ countermeasures like this Python tool. The tool can spray lots of nonsense codes into a PDF that make forensic extraction harder (or impossible).

What does this have to do with the EURion constellation? Well, before anyone can even start to extract little invisible yellow dots from your poster... they have to scan it into their system. And their very law-abiding scanner (unless it's owned by an agency that fights counterfeiting, like the Secret Service) probably doesn't have its EURion brainwashing disabled. So if your poster or strongly-worded letter carries a EURion constellation, it will probably stop them from scanning it.

#EURion#steganography#printers and scanners#tracking#cybersecurity#sigils#anonymity#electronic frontier foundation

12 notes

Text

28/3/25 AI Development

So I made a GAN image generation AI, a really simple one, but it did take me a lot of hours. I used a library for python (a programming language) called tensorflow, which is a general-purpose machine learning library, not just for LMs (language models). The dataset I used is made up of 12 composite photos I made in 2023. I put my focus this week into making sure the AI works, so I know my idea is viable. If it didn't work I would have to pivot to another idea, but it's looking like it does, thank god.

A GAN pretty much creates images similar to the training data, which works well with my concept because it ties into how AI tries to replicate art and culture. I called it Johnny2000. It doesn't actually matter how effective Johnny is at creating realistic output; the message still works. The only thing I don't want is for the output to be insanely realistic, which it shouldn't be, because I purposefully haven't trained Johnny to recognise and categorise things. I want him to try to make something similar to the stuff I showed him and see what happens when he doesn't understand the 'rules' of the human world, so he outputs what a world based on a program would look like, that kind of thing.
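Under the hood, "creating images similar to the training data" comes from two networks trained against each other: a generator that makes images and a discriminator that scores how real an image looks. Johnny's actual code isn't shown in the post, so this is only a minimal plain-Python sketch of the standard adversarial losses, assuming the discriminator outputs a probability between 0 and 1. (TensorFlow's DCGAN tutorial computes the same binary cross-entropy terms with `tf.keras.losses.BinaryCrossentropy`, working on logits rather than probabilities.)

```python
import math


def bce(prob, label):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1 - prob))


def discriminator_loss(d_real, d_fake):
    # The discriminator is rewarded for scoring real images near 1 and generated ones near 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    # The generator is rewarded when the discriminator scores its images as real (near 1).
    return bce(d_fake, 1.0)


# Early in training the discriminator easily spots fakes:
print(discriminator_loss(0.9, 0.1), generator_loss(0.1))  # low, high
# At the theoretical equilibrium it can only guess (0.5 for everything):
print(discriminator_loss(0.5, 0.5), generator_loss(0.5))  # 2*ln 2, ln 2
```

Training alternates between updating the discriminator to lower the first loss and updating the generator to lower the second; an epoch is one pass of these updates over the whole dataset.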

I ran into heaps of errors, like everyone does with a coding project, and downloading tensorflow itself literally took me around 4 hours from how convoluted it was.

As of writing this paragraph, Johnny is training in the background. I have two levels of output. The first (the grey box) is what Johnny gives me when I show him the dataset and tell him to create an image right away with no training; he has no idea what to do, so he gives me a grey box with slight variations in colour. The second (colourful) is after 10 rounds of training (called epochs), so pockets of colour are appearing, but still in a random, noise-like way. I'll make a short amendment to this post with the third image he's generating, which will be after 100 more rounds. Hopefully some sort of structure will form. I'm not sure how many epochs I'll need to get the output I want, so while I continue the actual proposal I can have Johnny working away in the background until I find a good level of training.

Edit, same day: Johnny finished the 100 epoch version. It's still very noisy as you can see, but the colours are starting to show, and the forms are very slowly coming through. Looking at these 3 versions, I'm not expecting any decent output until 10000+ epochs. Considering this 3rd version took over an hour to render, I'm gonna need to let it work overnight. I've been getting errors that the GPU isn't being used, so I could try to look at that; I think it's because my version of tensorflow is too low. (Newer versions don't support the GPU on native Windows, so I'd need to use Linux, which is possible on my computer, but I've done all this work to get it working here... so....)

how tf do i make it display smaller...

anyways, here's a peek at my dataset, so you can see that the colours are now being used (b/w + red and turquoise).

11 notes

Text

Python Objects and Classes | Resources ✨

Understanding classes and objects makes you better prepared to use Python's data model and full feature set, which will lead to cleaner and more “pythonic” code! The way I be forgetting about Python objects and classes, I need this really 😭🙌🏾
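As a quick taste of what "Python's data model" means: defining special ("dunder") methods lets your own objects work with built-in syntax like `len()`, `for` loops, `in` and `+`. The `Playlist` class below is a made-up example, not taken from the linked slides.

```python
from dataclasses import dataclass, field


@dataclass
class Playlist:
    """@dataclass writes __init__, __repr__ and __eq__ for us."""
    name: str
    songs: list[str] = field(default_factory=list)

    def __len__(self):              # len(playlist)
        return len(self.songs)

    def __iter__(self):             # for song in playlist / list(playlist)
        return iter(self.songs)

    def __contains__(self, title):  # "title" in playlist
        return title in self.songs

    def __add__(self, other):       # playlist_a + playlist_b
        return Playlist(f"{self.name} + {other.name}", self.songs + other.songs)


focus = Playlist("focus", ["lofi 1", "lofi 2"])
gym = Playlist("gym", ["track 9"])
mix = focus + gym
print(len(mix))          # 3
print("track 9" in mix)  # True
print(mix)               # Playlist(name='focus + gym', songs=['lofi 1', 'lofi 2', 'track 9'])
```

None of these methods are called by name; Python calls them for you when you use the matching syntax, which is what makes code like this feel "pythonic".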

Here is a link to the slideshow: LINK 🐍

#resources#codeblr#coding#programming#progblr#studyblr#studying#comp sci#tech#programmer#python#python resource

132 notes

Text

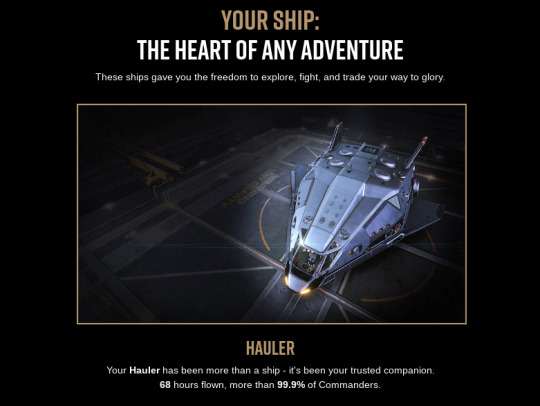

10 years of elite dangerous!

game is 10 years old now! and even tho i haven't played in a good year or two, i used to invest most of college into the game AND i was a backer. I wanna ramble about my journey below the cut, but first: Frontier sent me an email with my backer account's stats! which is really interesting!

So before i do a big ramble below the cut, i want everyone to know that i'm in the top 0.1% of people who have flown a hauler in the past 10 years. so take that! (for those who don't play, this is like the third cheapest ship, considered by many the worst, or a taxi to get to your better ships)

Thanks for reading my ramble! I wanna tell you my storyyyyyyyy. So first i must tell you just how big of a game this was for me. just like minecraft! I was really into space back then and i saw a video by scott manley about this new game on kickstarter which allowed you to fly to earth and promised to let you fly inside a 1:1 scale of our galaxy! billions of stars! And how realistic the flight model was and how guuuuuud the sounds were. Right after that video i asked my mom if she could buy it for me, with me paying her back later. I'm telling you i was HOOKED at the tutorial, in my tiny sidewinder. I saw the weapons pop out and the gatling gun, ooohhh the sounds. and just how big and detailed the space stations were! I'll never forget that. I even have an accidental screenshot of it on a hard drive somewhere. I remember my first jump to another star system: i had my curtains closed and my speakers set a bit louder and i was just like, this is giving me goosebumps, this is epic!

(the screenshot in question, circa 2014)

So what did i do after everything was explained to me by the tutorial? I started bounty hunting! And after the game released later in 2014, I got my kickstarter reward of a free eagle fighter (second cheapest ship in the game) and went back to bounty hunting! I dreamt of owning the biggest ship in the game, the Anaconda. But each ship kill was only about 3k credits for me back then, and i had school and played minecraft and call of duty with friends, so i didn't get the conda until many years later. But i had so much fun! I ended up flying a cobra mk3 for most of my time back then. Then around 2017 I met an artist friend online who also played! which was my first multiplayer experience in elite. I had just done the Sothis/Ceos runs, which allowed me to get a python haha. For those who remember hauling biowaste back then due to the mission reward bug being insane, o7 to you!

(my biowaste luxury vessel circa 2017)

Later i remember hauling passengers with some friends i made after joining a community for the first time and that gave me so much money (another mission reward bug after the biowaste got patched) that i finally was able to just buy every ship in the game! I went and grinded all the military ranks to get both a federal corvette and an imperial cutter. And i was set for life! I got an exploration ship to go to the galactic core which was about 8 hours of realtime pressing J to jump to another star haha, it got so boring after a while! i wanted to return to combat but i had to fly all the way back too! (netted me so much money tho from selling star data oh lordy! i had about 1 billion credits and if you remember the anaconda i wanted years ago that one is about 120 million)

(my imperial cutter and federal corvette (the cutter cost me about 1.2 billion if i remember correctly))

In my last year of elite before i quit that account (due to drama in the player group i was in; i won't say too much, but it was baaaad), I got really into mining. Combat had grown stale and hunting aliens got too easy. I was basically sitting still in a difficult spot with the ship on turret mode, and was able to make lunch and come back victorious. I liked seeing the little mining drones pick up ores and put them into my ship! Also, back then it was the most profitable thing you could do.

But after you have everything, and so much money that you don't know what to do with it, what do you do? I bought a hauler as a joke. It was known as a meme ship. And i had some laughs! I went to a hauler meetup with a few players, went to community missions and gave people free stuff and repairs. Even went to the galactic core again in it! And it was around that time that i realized that the hauler is actually the best ship in the game! Everything you do in it is a challenge; you have to carefully build it for a specific job. It dies super easily in combat and you can only give it 1 small weapon, making every encounter a fun and skillful engagement.

This post loops back to one of my previous blog posts about how space sandbox games like this reflect real life. When i started out, i wanted to have it all! big moneys, big ships. but in the end? I just want to do my favorite activities in a small starter boat that i love way more than anything else in the entire game! Like i said, i stopped playing on that account years ago after a falling out with a friend group, and after epic offered the game for free, i bought the odyssey expansion there, and now i have an alt account where i just fly a hauler every once in a while, doing combat or mining or sometimes trading. Thanks Elite for being probably one of my top 3 games ever! Maybe i'll install it again to fly my ship some more.

Thanks for reading, and before i go, have some pictures of my hauler.

12 notes

Text

I think I could get away with pretending to be an AI robot. At least for a few years. Lord knows I’d figure out how to shut the emotion in my voice off immediately, and if I’ve got a face of steel I don’t need to worry about people reading my emotions. My ability to take things literally will be dismissed as standard Python coding. I might be able to even get away with oversharing information as just supplying the data the humans asked for.

What’s gonna get me caught is my inability to stand a quiet room for longer than 30 seconds. Maybe I could develop a subsonic pattern only my robot ears could catch, or I could focus inward. Literally. Listen to my gears and cooling fans. But the quiet and the boredom is what will get me. Maybe I could Murderbot it out and just watch shows all day long, but it got caught so I don’t know if I want to view it as a role model.

I just know at some point I’m going to break the monotony and do something that would absolutely break my cover and someone I didn’t realize could see me, well, sees me. But then I’d get to indulge in my second favorite fantasy: running away from the authorities and escaping!

19 notes

Text

New data model paves way for seamless collaboration among US and international astronomy institutions

Software engineers have been hard at work to establish a common language for a global conversation. The topic—revealing the mysteries of the universe. The U.S. National Science Foundation National Radio Astronomy Observatory (NSF NRAO) has been collaborating with U.S. and international astronomy institutions to establish a new open-source, standardized format for processing radio astronomical data, enabling interoperability between scientific institutions worldwide.

When telescopes are observing the universe, they collect vast amounts of data—for hours, months, even years at a time, depending on what they are studying. Combining data from different telescopes is especially useful to astronomers, to see different parts of the sky, or to observe the targets they are studying in more detail, or at different wavelengths. Each instrument has its own strengths, based on its location and capabilities.

"By setting this international standard, NRAO is taking a leadership role in ensuring that our global partners can efficiently utilize and share astronomical data," said Jan-Willem Steeb, the technical lead of the new data processing program at the NSF NRAO. "This foundational work is crucial as we prepare for the immense data volumes anticipated from projects like the Wideband Sensitivity Upgrade to the Atacama Large Millimeter/submillimeter Array and the Square Kilometer Array Observatory in Australia and South Africa."

The new data model establishes a foundation for seamless data sharing and processing across various radio telescope platforms, both current and future.
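The article doesn't show the format itself, so here is a purely hypothetical toy illustrating why a shared data model helps: once each instrument's native output is converted into one common schema, with the same fields and units, combining observations becomes simple. The `Visibility` record, the two reader functions and their input layouts are all invented for this sketch; they are not the NSF NRAO model or its Python API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Visibility:
    """Hypothetical common record: one visibility sample in shared units."""
    telescope: str
    time_s: float              # seconds since a shared reference epoch
    baseline: tuple[str, str]  # the pair of antennas that measured it
    freq_hz: float             # always Hz, whatever the instrument's native unit
    value: complex             # complex visibility


def from_instrument_a(rows):
    # Hypothetical instrument A: (time_s, ant1, ant2, freq_ghz, value)
    return [Visibility("A", t, (a1, a2), f_ghz * 1e9, v) for t, a1, a2, f_ghz, v in rows]


def from_instrument_b(rows):
    # Hypothetical instrument B: (time_s, "ant1-ant2", freq_mhz, value)
    out = []
    for t, pair, f_mhz, v in rows:
        a1, a2 = pair.split("-")
        out.append(Visibility("B", t, (a1, a2), f_mhz * 1e6, v))
    return out


def combine(*datasets):
    # With one schema, merging is concatenation plus a sort on shared fields.
    merged = [vis for dataset in datasets for vis in dataset]
    return sorted(merged, key=lambda vis: (vis.time_s, vis.freq_hz))


a = from_instrument_a([(0.0, "ant1", "ant2", 1.4, 1 + 1j)])
b = from_instrument_b([(1.0, "m001-m002", 1420.0, 2 - 1j)])
for vis in combine(b, a):
    print(vis.telescope, vis.time_s, vis.baseline, vis.freq_hz)
```

All the instrument-specific quirks live in the small reader functions; everything downstream only ever sees one record type.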

International astronomy institutions collaborating with the NSF NRAO on this process include the Square Kilometer Array Observatory (SKAO), the South African Radio Astronomy Observatory (SARAO), the European Southern Observatory (ESO), the National Astronomical Observatory of Japan (NAOJ), and the Joint Institute for Very Long Baseline Interferometry European Research Infrastructure Consortium (JIVE).

The new data model was tested with example datasets from approximately 10 different instruments, including existing telescopes like the Australian Square Kilometer Array Pathfinder and simulated data from proposed future instruments like the NSF NRAO's Next Generation Very Large Array. This broader collaboration ensures the model meets diverse needs across the global astronomy community.

Extensive testing completed throughout this process ensures compatibility and functionality across a wide range of instruments. By addressing these aspects, the new data model establishes a more robust, flexible, and future-proof foundation for data sharing and processing in radio astronomy, significantly improving upon historical models.

"The new model is designed to address the limitations of aging models, in use for over 30 years, and created when computing capabilities were vastly different," adds Jeff Kern, who leads software development for the NSF NRAO.

"The new model updates the data architecture to align with current and future computing needs, and is built to handle the massive data volumes expected from next-generation instruments. It will be scalable, which ensures the model can cope with the exponential growth in data from future developments in radio telescopes."

As part of this initiative, the NSF NRAO plans to release additional materials, including guides for various instruments and example datasets from multiple international partners.

"The new data model is completely open-source and integrated into the Python ecosystem, making it easily accessible and usable by the broader scientific community," explains Steeb. "Our project promotes accessibility and ease of use, which we hope will encourage widespread adoption and ongoing development."

10 notes
