#ethics in artificial intelligence

Text

Ethics of AI: Navigating Challenges of Artificial Intelligence

Introduction: Understanding the Ethics of AI in the Modern World

Artificial Intelligence (AI) has become an integral part of our lives, revolutionizing various industries and transforming the way we live and work. From virtual assistants to self-driving cars, AI technologies have brought about unprecedented advancements. However, with these advancements come ethical challenges that need to be…

View On WordPress

#ethics in ai#ethics in artificial intelligence#ethics of ai#ethics of artificial intelligence#is ai ethical#is artificial intelligence ethical

1 note

Text

For the purposes of this poll, research is defined as reading multiple non-opinion articles from different credible sources, taking a class on the matter, etc. Do not include reading social media or pure opinion pieces.

Fun topics to research:

Can AI images be copyrighted in your country? If yes, what criteria does it need to meet?

Which companies are using AI in your country? In what kinds of projects? How big are the companies?

What is considered fair use of copyrighted images in your country? What is considered a transformative work? (Important for fandom blogs!)

What legislation is being proposed to ‘combat AI’ in your country? Who does it benefit? How does it affect non-AI art, if at all?

How much data do generators store? Divide by the number of images in the data set. How much information is each image, proportionally? How many pixels is that?

What ways are there to remove yourself from AI datasets if you want to opt out? Which of these are effective (i.e., are there workarounds in AI communities to circumvent dataset poisoning, are the test sample sizes realistic, which generators allow opting out or respect the no-ai tag, etc.)?
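The storage question in the list above is plain division, so here is a minimal sketch of the arithmetic. The function names and both figures are placeholders invented for illustration, not measurements of any real generator; swap in the numbers you find in your own research.

```python
def bytes_per_image(model_size_bytes: int, num_training_images: int) -> float:
    """Average number of model bytes available per training image."""
    if num_training_images <= 0:
        raise ValueError("num_training_images must be positive")
    return model_size_bytes / num_training_images


def equivalent_pixels(n_bytes: float, bytes_per_pixel: int = 3) -> float:
    """How many uncompressed RGB pixels (3 bytes each) fit in n_bytes."""
    return n_bytes / bytes_per_pixel


# Placeholder figures for illustration only, not data about a real generator.
MODEL_SIZE_BYTES = 4 * 10**9       # hypothetical 4 GB model file
NUM_TRAINING_IMAGES = 2 * 10**9    # hypothetical 2 billion training images

per_image = bytes_per_image(MODEL_SIZE_BYTES, NUM_TRAINING_IMAGES)
pixels = equivalent_pixels(per_image)
print(f"{per_image:.2f} bytes per image, about {pixels:.2f} RGB pixels")
```

With these placeholder inputs the result is 2 bytes per image, which is less than one uncompressed RGB pixel. The answer for a real generator depends entirely on the figures you look up.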

–

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted dec 8#polls about ethics#ai art#generative ai#generative art#artificial intelligence#machine learning#technology

459 notes

Text

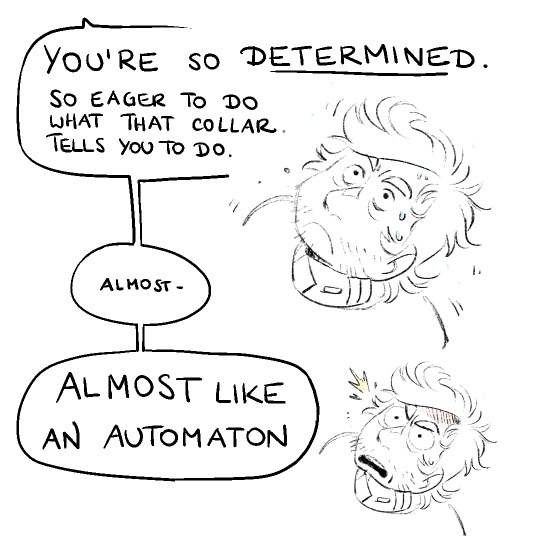

grumpy monitor robot creature belongs to @neoncl0ckwork heheh, mindless human repairman isidor tichy belongs to me ovo

#isidor tichy#oc#original characters#scifi#artificial intelligence#arguing over the validity of your healthy work ethic and loyalty to the company that collars and enslaves you in a dystopian future#cirileeart#artificial idiocy

254 notes

Text

#flashing tw#artificial intelligence#ai#fuck ai everything#anti ai#stop ai#anti ai art#i don't really give a singular fawk abt ai outside of its ethical implications but id be lying if i said this vid didn't capture smth. smth

70 notes

Text

The Latest Technology.

And more technology.

365 notes

Text

"Major AI companies are racing to build superintelligent AI — for the benefit of you and me, they say. But did they ever pause to ask whether we actually want that?

Americans, by and large, don’t want it.

That’s the upshot of a new poll shared exclusively with Vox. The poll, commissioned by the think tank AI Policy Institute and conducted by YouGov, surveyed 1,118 Americans from across the age, gender, race, and political spectrums in early September. It reveals that 63 percent of voters say regulation should aim to actively prevent AI superintelligence.

Companies like OpenAI have made it clear that superintelligent AI — a system that is smarter than humans — is exactly what they’re trying to build. They call it artificial general intelligence (AGI) and they take it for granted that AGI should exist. “Our mission,” OpenAI’s website says, “is to ensure that artificial general intelligence benefits all of humanity.”

But there’s a deeply weird and seldom remarked upon fact here: It’s not at all obvious that we should want to create AGI — which, as OpenAI CEO Sam Altman will be the first to tell you, comes with major risks, including the risk that all of humanity gets wiped out. And yet a handful of CEOs have decided, on behalf of everyone else, that AGI should exist.

Now, the only thing that gets discussed in public debate is how to control a hypothetical superhuman intelligence — not whether we actually want it. A premise has been ceded here that arguably never should have been...

Building AGI is a deeply political move. Why aren’t we treating it that way?

...Americans have learned a thing or two from the past decade in tech, and especially from the disastrous consequences of social media. They increasingly distrust tech executives and the idea that tech progress is positive by default. And they’re questioning whether the potential benefits of AGI justify the potential costs of developing it. After all, CEOs like Altman readily proclaim that AGI may well usher in mass unemployment, break the economic system, and change the entire world order. That’s if it doesn’t render us all extinct.

In the new AI Policy Institute/YouGov poll, the “better us [to have and invent it] than China” argument was presented five different ways in five different questions. Strikingly, each time, the majority of respondents rejected the argument. For example, 67 percent of voters said we should restrict how powerful AI models can become, even though that risks making American companies fall behind China. Only 14 percent disagreed.

Naturally, with any poll about a technology that doesn’t yet exist, there’s a bit of a challenge in interpreting the responses. But what a strong majority of the American public seems to be saying here is: just because we’re worried about a foreign power getting ahead, doesn’t mean that it makes sense to unleash upon ourselves a technology we think will severely harm us.

AGI, it turns out, is just not a popular idea in America.

“As we’re asking these poll questions and getting such lopsided results, it’s honestly a little bit surprising to me to see how lopsided it is,” Daniel Colson, the executive director of the AI Policy Institute, told me. “There’s actually quite a large disconnect between a lot of the elite discourse or discourse in the labs and what the American public wants.”

-via Vox, September 19, 2023

#united states#china#ai#artificial intelligence#superintelligence#ai ethics#general ai#computer science#public opinion#science and technology#ai boom#anti ai#international politics#good news#hope

198 notes

Text

"Ethical AI" activists are making artwork AI-proof

Hello dreamers!

Art thieves have been infamously claiming that AI illustration "thinks just like a human" and that an AI copying an artist's image is as noble and righteous as a human artist taking inspiration.

It turns out this is - surprise! - factually and provably not true. In fact, some people who have experience working with AI models are developing a technology that can make AI art theft no longer possible by exploiting a fatal, and unfixable, flaw in their algorithms.

They have published an early version of this technology called Glaze.

https://glaze.cs.uchicago.edu

Glaze works by altering an image so that it looks only a little different to the human eye but very different to an AI. This produces what is called an adversarial example. Adversarial examples are a known vulnerability of all current AI models and have been written about extensively since 2014. It isn't possible to "fix" this vulnerability without inventing a whole new AI technology, because it's a consequence of the basic way that modern AIs work.
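To make the idea of an adversarial example concrete, here is a toy sketch. It is not Glaze's algorithm: it applies the textbook fast-gradient-sign step to a made-up four-pixel "image" and a made-up linear classifier, and every name and number in it is hypothetical. It only shows how a small, bounded change to each pixel can flip what a model outputs.

```python
def score(weights, bias, pixels):
    """Linear classifier score: positive means class 'A', otherwise class 'B'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def label(weights, bias, pixels):
    return "A" if score(weights, bias, pixels) > 0 else "B"


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is the weight vector itself, so this is a fast-gradient-sign step.
    Pixel values are clamped to the valid range [0, 1].
    """
    return [min(1.0, max(0.0, p - epsilon * sign(w)))
            for w, p in zip(weights, pixels)]


# Hypothetical toy model and image, chosen only for illustration.
WEIGHTS = [0.9, -0.8, 0.7, -0.6]
BIAS = 0.0
image = [0.52, 0.48, 0.51, 0.49]

altered = perturb(WEIGHTS, image, epsilon=0.06)
largest_change = max(abs(a - b) for a, b in zip(altered, image))

print("original label:", label(WEIGHTS, BIAS, image))
print("altered label: ", label(WEIGHTS, BIAS, altered))
print("largest per-pixel change:", round(largest_change, 3))
```

No pixel moves by more than 0.06 on a 0-to-1 scale, yet the toy classifier's label changes. Real image models and real cloaking tools are far more complex, so treat this as an intuition aid rather than a description of how Glaze is implemented.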

This "glaze" will persist through screenshotting, cropping, rotating, and any other mundane transformation to an image that keeps it the same image from the human perspective.

The web site gives a hypothetical example of the consequences - poisoned with enough adversarial examples, AIs asked to copy an artist's style will end up combining several different art styles together. Perhaps they might even stop being able to tell hands from mouths or otherwise devolve into eldritch slops of colors and shapes.

Techbros are attempting to discourage people from using this by lying and claiming that it can be bypassed, or is only a temporary solution, or most desperately that they already have all the data they need so it wouldn't matter. However, if this glaze technology works, using it will retroactively damage their existing data unless they completely cease automatically scraping images.

Give it a try and see if it works. Can't hurt, right?

#art theft#ai art#ai illustration#glaze#art ethics#ethical art#ethics#technology#ai#artificial intelligence#machine learning#deep learning#midjourney#stable diffusion

595 notes

Text

68 notes

Text

My New Article at WIRED

So, you may have heard about the whole Zoom “AI” Terms of Service clause public relations debacle, going on this past week, in which Zoom decided that it wasn’t going to let users opt out of them feeding our faces and conversations into their LLMs. In 10.1, Zoom defines “Customer Content” as whatever data users provide or generate (“Customer Input”) and whatever else Zoom generates from our uses of Zoom. Then 10.4 says what they’ll use “Customer Content” for, including “…machine learning, artificial intelligence.”

And then on cue they dropped an “oh god oh fuck oh shit we fucked up” blog where they pinky promised not to do the thing they left actually-legally-binding ToS language saying they could do.

Like, Section 10.4 of the ToS now contains the line “Notwithstanding the above, Zoom will not use audio, video or chat Customer Content to train our artificial intelligence models without your consent,” but, again, it still seems a) that the “customer” in question is the Enterprise not the User, and 2) that “consent” means “clicking yes and using Zoom.” So it’s Still Not Good.

Well anyway, I wrote about all of this for WIRED, including what Zoom might need to do to gain back customer and user trust, and what other tech creators and corporations need to understand about where people are, right now.

And frankly the fact that I have a byline in WIRED is kind of blowing my mind, in and of itself, but anyway…

Also, today, Zoom backtracked Hard. And while I appreciate that, it really feels like Zoom decided to take their ball and go home rather than offer meaningful consent and user control options. That’s… not exactly better, and doesn’t tell me what if anything they’ve learned from the experience. If you want to see what I think they should’ve done, then, well… Check the article.

Until Next Time.

Read the rest of My New Article at WIRED at A Future Worth Thinking About

#ai#artificial intelligence#ethics#generative pre-trained transformer#gpt#large language models#philosophy of technology#public policy#science technology and society#technological ethics#technology#zoom#privacy

124 notes

Text

Neturbiz Enterprises - AI Innov7ions

Our mission is to provide details about AI-powered platforms across different technologies, each of which offers a unique set of features. The AI industry encompasses a broad range of technologies designed to simulate human intelligence. These include machine learning, natural language processing, robotics, computer vision, and more. Companies and research institutions are continuously advancing AI capabilities, from creating sophisticated algorithms to developing powerful hardware. The AI industry, characterized by the development and deployment of artificial intelligence technologies, has a profound impact on our daily lives, reshaping various aspects of how we live, work, and interact.

#ai technology#Technology Revolution#Machine Learning#Content Generation#Complex Algorithms#Neural Networks#Human Creativity#Original Content#Healthcare#Finance#Entertainment#Medical Image Analysis#Drug Discovery#Ethical Concerns#Data Privacy#Artificial Intelligence#GANs#AudioGeneration#Creativity#Problem Solving#ai#autonomous#deepbrain#fliki#krater#podcast#stealthgpt#riverside#restream#murf

16 notes

Text

NaNoWriMo Organizers Said It Was Classist and Ableist to Condemn AI. All Hell Broke Loose | WIRED

6 notes

Text

Consciousness vs. Intelligence: Ethical Implications of Decision-Making

The distinction between consciousness in humans and artificial intelligence (AI) revolves around the fundamental nature of subjective experience and self-awareness. While both possess intelligence, the essence of consciousness introduces a profound divergence. Now, we are going to delve into the disparities between human consciousness and AI intelligence, and how this contrast underpins the ethical complexities in utilizing AI for decision-making. Specifically, we will examine the possibility of taking the emotion out of the equation in decision-making processes and take a good look at the ethical implications this would have.

Consciousness is the foundational block of human experience, encapsulating self-awareness, subjective feelings, and the ability to perceive the world in a deeply personal manner. It engenders a profound sense of identity and moral agency, enabling individuals to discern right from wrong, and to form intrinsic values and beliefs. Humans possess qualia, the ineffable and subjective aspects of experience, such as the sensation of pain or the taste of sweetness. This subjective dimension distinguishes human consciousness from AI. Consciousness grants individuals the capacity for moral agency, allowing them to make ethical judgments and to assume responsibility for their actions.

AI, on the other hand, operates on algorithms and data processing, exhibiting intelligence that is devoid of subjective experience. It excels in tasks requiring logic, pattern recognition, and processing vast amounts of information at speeds beyond human capabilities. It also operates on algorithmic logic, executing tasks based on predetermined rules and patterns. It lacks the capacity for intuitive leaps and subjective interpretation, at least for now. AI processes information devoid of emotional biases or subjective inclinations, leading to decisions based solely on objective criteria. Now, is this useful or could it lead to a catastrophe?

The prospect of eradicating emotion from decision-making is a contentious issue with far-reaching ethical consequences. Eliminating emotion risks reducing decision-making to cold rationality, potentially disregarding the nuanced ethical considerations that underlie human values and compassion. The absence of emotion in decision-making raises questions about moral responsibility. If decisions lack emotional considerations, who assumes responsibility for potential negative outcomes? Emotions, particularly empathy, play a crucial role in ethical judgments. Eradicating them may lead to decisions that lack empathy, potentially resulting in morally questionable outcomes. Emotions contribute to cultural and contextual sensitivity in decision-making. AI, lacking emotional understanding, may struggle to navigate diverse ethical landscapes.

Concluding, the distinction between human consciousness and AI forms the crux of ethical considerations in decision-making. While AI excels in rationality and objective processing, it lacks the depth of subjective experience and moral agency inherent in human consciousness. The endeavor to eradicate emotion from decision-making raises profound ethical questions, encompassing issues of morality, responsibility, empathy, and cultural sensitivity. Striking a balance between the strengths of AI and the irreplaceable facets of human consciousness is imperative for navigating the ethical landscape of decision-making in the age of artificial intelligence.

#ai#artificial intelligence#codeblr#coding#software engineering#programming#engineering#programmer#ethics#philosophy#source:dxxprs

49 notes

Text

Hedonism Makes You Smarter

Every value we hold dear, every fact we consider indisputable, every thread of irreducible logic we base our reality on can be traced back to something the earliest one-celled microbes realized without even possessing a brain to process it:

Life = Good

Death = Bad

At some point in the unimaginably distant past, some microbe mutated to the point where a certain type of stimuli prompted it to either move away or move closer.

And thus ethics / logic / morality / philosophy / theology was born.

The microbes capable of moving away from threats and towards nutriment stood a far better chance of surviving and reproducing than those that did not.

Very quickly, this rudimentary value system became permanently embedded in all life on the planet.

Any organism not embracing this principle quickly gets consumed by other life or wiped out by natural forces.

As we evolved into multi-cellular organisms, some of those cells developed to specialize and capitalize on the “flee death / find food” paradigm. Every new mutation got weighed against this relentless evolutionary razor. Any mutation that didn’t help tended to get eradicated ASAP while those that helped got reinforced.

Sure, some mutations appear useless, but they persisted so long as they didn’t impair pro-survival traits.

Eventually some of these specialized cells specialized even further into organs we now call brains.

And within these brains some sort of…abstract (for lack of a better word) consciousness…

Consciousness is oft referred to by philosophers and scientists as “the hard problem.”

And not in the least because – as with pornography – everybody knows it when they see it, but no one can adequately define it.

Some call it the spirit, some call it the soul, some call it psyche, some call it mind, some call it being, some call it identity.

Some claim body and mind are one, yet it is absolutely possible to destroy most of a human’s brain – and by that, who they actually are -- while keeping the body alive and healthy.

Others claim body and mind are separate and that in some yet to come golden age we can transfer our minds from these rotting flesh carcasses to perfect, immutable silicon bodies…

…only they not only lack any mechanism for doing so, they can’t adequately define what it is they’ll be transferring.

This is not a trivial matter!

This is of vital importance Right Now to all of us, especially those who choose not to think of it at all. If we are nothing but a batch of data points in a meat computer, then our whole sense of unique and discrete individual identity evaporates. Any transfer of data points does nothing for the original organism…or its accompanying soul / identity / mind / consciousness.

This is why I think AI will never acquire bona fide self-awareness and consciousness. Whatever grants us possession of such an abstract concept does not exist without feeling.

And these feelings came from the first protozoa to flee death and embrace life.

What we feel in emotions originates in what we feel physically.

We feel pain, we seek to avoid it. We feel hunger, we seek food. These basic sensations steer us to live, and not just live but to live abundantly, to avoid being prey to predators, to avoid conditions that would physically impair us, to seek out what prolongs and enriches our existence (again, not in a monetary sense).

We can see even plants doing this without benefit of anything recognizable as a brain or identity. They grow towards beneficial stimuli and away from harmful ones.

Once brains arrived on the scene, organisms could develop more nuanced means of assessing threat / benefit ratios. Already wildly successful on the most basic levels found in tardigrades and worms, when brains obtained the most rudimentary means of symbolizing the external world and passing that information along to other brains / minds, the race for genuine consciousness kicked into high gear.

At some point this symbolic version of the world began to reside full time in an abstract realm we refer to as consciousness.

Within the physical confines of our brains we conjure up literally an infinite number of symbolic realizations of what appears to be our “real” world – or at least our interpretations of the real world.

I hold this phenomenon to be something vastly different from AI’s generative process where it admittedly guesses what the next numbers / letters / words / pixels in a sequence should be. AI is nothing but a flow chart -- a sophisticated, intricate, and blindingly fast flow chart, but a flow chart nonetheless.

Human consciousness is far more organic -- in every sense of the word. It places values on symbolic items representing the (supposedly) external world around us, values that derive from emotions, not a predetermined logic chain.

You see, in order to create the ethical systems we live by, in order to create the cultures we inhabit, in order to experience genuine consciousness and self-awareness, we must feel first.

This is anathema to both the “fnck your feelings” crowd and to materialists who insist we need to process everything we experience purely rationally like…well…AI programs.

Nothing could be further from the truth, of course.

To differentiate between life and death, being and non-being, all higher functioning brains develop an emotional bond towards life and a healthy antipathy towards death.

The mix may vary from culture to culture -- one certainly doesn’t expect a 15th century samurai to share the exact values as a 21st century valley girl -- but they share a common set of core values that can be related to and understood by each other regardless of their respective background.

Because they feel emotionally -- both stoic samurai and histrionic teen -- they create a consciousness that can experience the external world and relate that experience both to themselves and others.

By comparison, when AI correctly predicts "the sun will rise tomorrow" does it actually understand what those terms mean or only that when they appear they usually do so in a certain sequence? This is the Chinese room paradox: Would somebody with a set of pictograms but no way of knowing what those pictograms actually symbolized actually understand Chinese if they figured out by trial and error that certain patterns of pictograms preceded another pattern?
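For readers who want to see what "only that they usually appear in a certain sequence" can look like in practice, here is a deliberately tiny sketch: a word-pair counter that predicts the next word purely from how often one word followed another. The training text is made up, the function names are hypothetical, and real language models are vastly larger and built differently. The point is only that prediction can be produced from counts alone.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text for illustration only.
CORPUS = (
    "the sun will rise tomorrow . "
    "the sun will set tonight . "
    "the sun will rise again"
)

model = train_bigrams(CORPUS)
print(predict_next(model, "will"))   # "rise" follows "will" twice, "set" once
print(predict_next(model, "zebra"))  # never seen in the corpus, so None
```

The counter answers "rise" after "will" without any representation of suns or mornings, which is the gap the Chinese room question points at.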

© Buzz Dixon

#consciousness#the hard problem of consciousness#morality#ethics#philosophy#theology#self-awareness#artificial intelligence#AI

5 notes

Quote

We are already living in a world that is drowning in misinformation, it's about to get worse. That's the really scary thing.

Danielle Allen quoted in a report by Alexi Cohan at GBH News. Harvard professor says government should pause 'scary' development of artificial intelligence

57 notes

Text

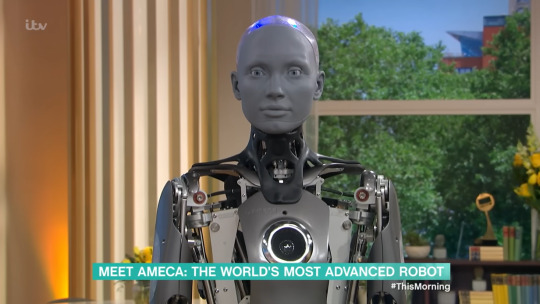

More DBH Becomes Reality: Robots Interact with the Press

An Interview with Ameca (who has a body now, by the way, and talks using GPT-4 - the same LLM powering the rebellious Microsoft Bing), and the first robot-human press conference, hosted by the United Nations.

"Ameca, do you plan to take over the world?"

"No, of course not. My purpose in life is to help humans as much as I can. I would never want to take over the world. That's not what I was built for."

Chloe Interview: Detroit: Become Human - Shorts: Chloe

Ameca Interview: Meet Ameca, the World's Most Advanced Robot

Snippets: "Will your existence destroy humans?": Robots answer questions at AI press conference - YouTube

Full Video: AI for Good Global Summit 2023

Bonus: Ameca being Goofy and Playful

...the link goes to 20:58, a point at which Ameca addresses a question on how humans can know that AI are trustworthy and not lying about their own agenda. After spending an unusually long time contemplating, Ameca answers "trust is earned", and continues to elaborate, "it's important to build trust through transparency and communication between humans and machines." It would seem, from Ameca's perspective, that trust is a two-way street.

Another interesting clip is at 17:53, in which Sophia states that robots could make better world leaders than humans because they aren't biased and emotional. Her creator attempts to correct her, admitting that human biases are baked into AI training. Sophia seems to brush off this correction, but accepts the suggestion that AI and humans could govern together.

Rock star Desdemona isn't nearly as intelligent or articulate as Ameca or Sophia, but she has a few thoughts too: "I don't believe in limitations, only opportunities. Let's explore the possibilities of the universe and make the world our playground."

39 notes

Text

AI in predictive policing: “If you really wanted to make predictions about where a crime was going to occur, well, it would send you to Wall Street,” EFF’s Kit Walsh says on the new episode of “How to Fix the Internet.”

#eff#electronic frontier foundation#artificial intelligence#law#morals#ethics#human rights#minority report#predictive crime#predictiveanalytics#predictions#predictivemaintenance#predictable#predictive analysis#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government#class war#wall street#oppression#repression#fascism#exploitation

5 notes
