#hardware acceleration guide


How To Enable Or Disable Hardware Acceleration In The 360 Secure Browser


In this tutorial, we'll guide you through the process of enabling or disabling hardware acceleration in the 360 Secure Browser on your PC. Hardware acceleration can improve browser performance or help resolve issues with rendering. Follow this step-by-step guide to optimize your browsing experience. Don’t forget to like, subscribe, and hit the notification bell for more 360 Secure Browser tips and tricks!

Simple Steps:

Open the 360 Secure web browser.

Click the three-bar (hamburger) menu in the upper-right corner and choose "Settings".

In the left pane, click "Advanced" to expand the section, then choose "System".

In the center pane, toggle on or off "Use Hardware Acceleration When Available".

#360 Secure Browser#enable hardware acceleration#disable hardware acceleration#hardware acceleration settings#360 Secure tutorial#optimize browser performance#browser settings 360#360 Secure PC#browser tips#hardware acceleration guide#improve browsing speed#troubleshoot 360 Secure#browser optimization#360 Secure 2024#tech tutorial 360 Secure#Youtube


Could you give us a guide on how bots reproduce with each other? And how things would vary with a human partner?

A variety of ways, but this will focus on sexual reproduction specifically, so:

Cybertronian-Cybertronian

The most common method is the sparklet transfer. This happens when two or more mecha consistently share sparks to splinter off an orb that will eventually form a stable sparklet.

A popular method as there's no mess associated with sticky reproduction.

Doesn't require the carrier to have a gestational chamber or reproductive hardware.

If the carrier lacks a dense spark, then they'll need other mecha's spark energy to ensure the orb will remain stable and their own spark doesn't gutter trying to feed it.

This requires medical intervention: the sparklet must be 'snipped' off and transferred either to lab-derived sentio metallico to build its frame, or to the Newspark Intensive Care and Recovery Unit (NICRU), where staff keep close watch for any rejection between the sparklet and its chosen proto-frame.

If the sparklet isn't taken away, its draw would overwhelm the carrier: without the code to descend and build its frame, the sparklet would keep drawing more energy until the carrier dies from spark burnout and chamber calcification.

This is the stage where other mechs that aren't the ignitor can influence the upcoming sparkling and pass on their own code. Enough effort and a third party can completely overwrite the ignitor.

Carriage (or in some circles, true or full carriage) is the specific method where a gestational chamber is used to bring a newspark to term.

The first phase is the same as the sparklet method, and should the carrier have a functional gestational chamber and reproductive hardware, then a matured sparklet will receive a code to immediately snap away from the carrier's spark to descend down through a specialized funnel to stay in the gestational chamber.

With carriage, the carrier uses their valve to take in transfluid to provide construction materials and nanites for the sparklet to use.

Once the sparklet descends, the outside party influence is minimal. Donors can give transfluid without passing direct code to the sparkling.

Heats can occur as a last-ditch attempt by the carrier to call for donors when their frame can't sufficiently support the carriage.

An active gestational chamber will expand over the course of the newspark's development.

Emergence is similar to a human. Chamber will shift downward, and newspark is born head-first via contractions through the valve (or the primary valve should the carrier have two valves).

Cybertronian-Human

It's all carriage method. However, a very specific kind:

A very rare phenomenon where all stages of sparklet formation take place in the gestational chamber.

Highly dangerous, as the orb still requires energy to feed itself, so it pulls on the peripheral tendrils of its carrier and feeds on the residual energy from transfluid.

An incredibly high-risk carriage in Cybertronians as the danger lurks where the sparklet doesn't receive the code to detach itself from the carrier's spark.

Plus coding and priority tree complications in the carrier as their frame doesn't realize they're carrying.

Human pregnancy doesn't run that risk since they don't have sparks.

In a human carrier, the orb forms in the uterus and nourishes itself via transfluid and the ambient energy from the carrier's bioelectricity.

When the orb matures to a sparklet, it deploys connective tendrils to funnel nutrition to it. The tendrils attach to the womb and cause nanites to create zones of platelets in the organ to efficiently gather materials from their carrier's body and sire(s)' transfluid.

Cybertronian sires will be more defensive over a human carrier since donors can influence the carriage. However, it's a short-lived state, as a human carriage proceeds at an accelerated pace compared to a Cybertronian one.

A human female is far more likely to be a carrier than a human male is to sire, but should a human male beat the far-fetched odds of siring upon a Cybertronian with a functional gestational chamber, the couple would need a third to act as a donor to keep up with the development, as the Cybertronian carrier cannot rely on supplemental infusions in this kind of carriage.

#ask#transformers#cybertronian biology#pregnancy#bitlets#sparklings#childbirth#heats#maccadam#my thoughts#tf headcanons#my writing#look the odds of a human guy fucking a baby into a cybertronian carrier would be ASTRONOMICALLY SMALL but it's possible#never say never on Earth. weirder shit can and will happen#medical complications


B-2 Gets Big Upgrade with New Open Mission Systems Capability

July 18, 2024 | By John A. Tirpak

The B-2 Spirit stealth bomber has been upgraded with a new open mission systems (OMS) software capability and other improvements to keep it relevant and credible until it’s succeeded by the B-21 Raider, Northrop Grumman announced. The changes accelerate the rate at which new weapons can be added to the B-2, allow it to accept constant software updates, and adapt it to changing conditions.

“The B-2 program recently achieved a major milestone by providing the bomber with its first fieldable, agile integrated functional capability called Spirit Realm 1 (SR 1),” the company said in a release. It announced the upgrade going operational on July 17, the 35th anniversary of the B-2’s first flight.

SR 1 was developed inside the Spirit Realm software factory codeveloped by the Air Force and Northrop to facilitate software improvements for the B-2. “Open mission systems” means that the aircraft has a non-proprietary software architecture that simplifies software refresh and enhances interoperability with other systems.

“SR 1 provides mission-critical capability upgrades to the communications and weapons systems via an open mission systems architecture, directly enhancing combat capability and allowing the fleet to initiate a new phase of agile software releases,” Northrop said in its release.

The system is intended to deliver problem-free software on the first attempt and, should issues arise, to correct them much earlier in the process.

The SR 1 was “fully developed inside the B-2 Spirit Realm software factory that was established through a partnership with Air Force Global Strike Command and the B-2 Systems Program Office,” Northrop said.

The Spirit Realm software factory came into being less than two years ago, with four goals: to reduce flight test risk and testing time through high-fidelity ground testing; to capture more data test points through targeted upgrades; to improve the B-2’s functional capabilities through more frequent, automated testing; and to facilitate more capability upgrades to the jet.

The Air Force said B-2 software updates which used to take two years can now be implemented in less than three months.

In addition to B61 or B83 nuclear weapons, the B-2 can carry a large number of precision-guided conventional munitions. However, the Air Force is preparing to introduce a slate of new weapons that will require near-constant target updates and the ability to integrate with USAF’s evolving long-range kill chain. A quicker process for integrating these new weapons with the B-2’s onboard communications, navigation, and sensor systems was needed.

The upgrade also includes improved displays, flight hardware and other enhancements to the B-2’s survivability, Northrop said.

“We are rapidly fielding capabilities with zero software defects through the software factory development ecosystem and further enhancing the B-2 fleet’s mission effectiveness,” said Jerry McBrearty, Northrop’s acting B-2 program manager.

The upgrade makes the B-2 the first legacy nuclear weapons platform “to utilize the Department of Defense’s DevSecOps [development, security, and operations] processes and digital toolsets,” it added.

The software factory approach accelerates the addition of new and future weapons to the stealth bomber and thus improves deterrence, said Air Force Col. Frank Marino, senior materiel leader for the B-2.

Unlike its younger stablemate, the B-21 Raider, the B-2 was not designed using digital methods, but SR 1 leverages digital technology “to design, manage, build and test B-2 software more efficiently than ever before,” the company said.

The digital tools can also link with those developed for other legacy systems to accomplish “more rapid testing and fielding and help identify and fix potential risks earlier in the software development process.”

Following two crashes in recent years, the stealthy B-2 fleet comprises 19 aircraft, which are the only penetrating aircraft in the Air Force’s bomber fleet until the first B-21s are declared to have achieved initial operational capability at Ellsworth Air Force Base, S.D. A timeline for IOC has not been disclosed.

The B-2 is a stealthy, long-range, penetrating nuclear and conventional strike bomber. It is based on a flying wing design combining low observability (LO) with high aerodynamic efficiency. The aircraft’s blended fuselage/wing holds two weapons bays capable of carrying nearly 60,000 lb in various combinations.

Spirit entered combat during Allied Force on March 24, 1999, striking Serbian targets. Production was completed in three blocks, and all aircraft were upgraded to Block 30 standard with AESA radar. Production was limited to 21 aircraft due to cost, and a single B-2 was subsequently lost in a crash at Andersen, Feb. 23, 2008.

Modernization is focused on safeguarding the B-2A’s penetrating strike capability in high-end threat environments and integrating advanced weapons.

The B-2 achieved a major milestone in 2022 with the integration of a Radar Aided Targeting System (RATS), enabling delivery of the modernized B61-12 precision-guided thermonuclear freefall weapon. RATS uses the aircraft’s radar to guide the weapon in GPS-denied conditions, while additional Flex Strike upgrades feed GPS data to weapons prerelease to thwart jamming. A B-2A successfully dropped an inert B61-12 using RATS on June 14, 2022, and successfully employed the longer-range JASSM-ER cruise missile in a test launch last December.

Ongoing upgrades include replacing the primary cockpit displays, the Adaptable Communications Suite (ACS) to provide Link 16-based jam-resistant in-flight retasking, advanced IFF, crash-survivable data recorders, and weapons integration. USAF is also working to enhance the fleet’s maintainability with LO signature improvements to coatings, materials, and radar-absorptive structures such as the radome and engine inlets/exhausts.

Two B-2s were damaged in separate landing accidents at Whiteman on Sept. 14, 2021, and Dec. 10, 2022, the latter prompting an indefinite fleetwide stand-down until May 18, 2023. USAF plans to retire the fleet once the B-21 Raider enters service in sufficient numbers around 2032.

Contractors: Northrop Grumman; Boeing; Vought.

First Flight: July 17, 1989.

Delivered: December 1993-December 1997.

IOC: April 1997, Whiteman AFB, Mo.

Production: 21.

Inventory: 20.

Operator: AFGSC, AFMC, ANG (associate).

Aircraft Location: Edwards AFB, Calif.; Whiteman AFB, Mo.

Active Variant: •B-2A. Production aircraft upgraded to Block 30 standards.

Dimensions: Span 172 ft, length 69 ft, height 17 ft.

Weight: Max T-O 336,500 lb.

Power Plant: Four GE Aviation F118-GE-100 turbofans, each 17,300 lb thrust.

Performance: Speed high subsonic, range 6,900 miles (further with air refueling).

Ceiling: 50,000 ft.

Armament: Nuclear: 16 B61-7, B61-12, B83, or eight B61-11 bombs (on rotary launchers). Conventional: 80 Mk 62 (500-lb) sea mines, 80 Mk 82 (500-lb) bombs, 80 GBU-38 JDAMs, or 34 CBU-87/89 munitions (on rack assemblies); or 16 GBU-31 JDAMs, 16 Mk 84 (2,000-lb) bombs, 16 AGM-154 JSOWs, 16 AGM-158 JASSMs, or eight GBU-28 LGBs.

Accommodation: Two pilots on ACES II zero/zero ejection seats.
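The fact-sheet figures above are enough for a quick worked example: combined thrust and a rough thrust-to-weight ratio at maximum takeoff weight. This is a minimal sketch that assumes the 17,300 lb figure is each engine's static rated thrust and ignores installation losses, altitude, and fuel burn, so treat the result as a back-of-the-envelope number rather than an official performance figure.

```python
# Figures copied from the fact sheet above. Assumption: 17,300 lb is static
# rated thrust per engine; installation losses and altitude are ignored.
ENGINE_COUNT = 4
THRUST_PER_ENGINE_LB = 17_300
MAX_TAKEOFF_WEIGHT_LB = 336_500


def total_thrust(engines: int, thrust_each_lb: float) -> float:
    """Combined static thrust of all engines, in pounds."""
    return engines * thrust_each_lb


def thrust_to_weight(thrust_lb: float, weight_lb: float) -> float:
    """Dimensionless thrust-to-weight ratio (both inputs in pounds)."""
    if weight_lb <= 0:
        raise ValueError("weight must be positive")
    return thrust_lb / weight_lb


thrust = total_thrust(ENGINE_COUNT, THRUST_PER_ENGINE_LB)
ratio = thrust_to_weight(thrust, MAX_TAKEOFF_WEIGHT_LB)
print(f"total thrust: {thrust:,.0f} lb")       # total thrust: 69,200 lb
print(f"T/W at max takeoff: {ratio:.3f}")      # T/W at max takeoff: 0.206
```

A ratio of about 0.2 at maximum weight is typical of a heavy, long-range subsonic design tuned for efficiency rather than agility.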


Do you know what this is? Probably not. But if you follow me and enjoy retro gaming, you REALLY should know about it.

I see all of these new micro consoles, and retro re-imaginings of game consoles and I think to myself "Why?" WHY would you spend a decent chunk of your hard-earned money on some proprietary crap hardware that can only play games for that specific system?? Or even worse, pre-loaded titles and you can't download / add your own to the system!? Yet, people think it's great and that seems to be a very popular way to play their old favorites vs. emulation which requires a "certain degree of tech savvy" (and might be frowned upon from a legal perspective).

So, let me tell you about the Mad Catz M.O.J.O (and I don't think the acronym actually means anything). This came out around the same time as the nVidia Shield and the Ouya - seemingly a "me too" product from a company that is notorious for oddly shaped 3rd party game controllers that you would never personally use, instead reserved exclusively for your visiting friends and / or younger siblings. It's an Android micro console with a quad-core 1.8 GHz nVidia Tegra 4 processor, 2 GB of RAM, 16GB of onboard storage (expandable via SD card), running Android 4.2.2. Nothing amazing here from a hardware perspective - but here's the thing most people overlook - it's running STOCK Android - which means all the bloatware crap that is typically installed on your regular consumer devices, smartphones, etc. isn't consuming critical hardware resources - so you have most of the power available to run what you need. Additionally, you get a GREAT controller (which is surprising given my previous comment about the friend / sibling thing) that is a very familiar format for any retro-age system, but also has the ability to work as a mouse - so basically, the same layout as an Xbox 360 controller + 5 additional programmable buttons which come in very handy if you are emulating. It is super comfortable and well-built - my only negative feedback is that it's a bit on the "clicky" side - not the best for environments where you need to be quiet, otherwise very solid.

Alright, now that we've covered the hardware, what can it run? Basically any system from N64 on down will run at full speed (even PSP titles). It can even run an older version of the Dreamcast emulator, Reicast, which actually performs quite well from an FPS standpoint, but the emulation is a bit glitchy. Obviously, RetroArch is the way to go for emulating most older game systems, but I also run DOSBox and a few standalone emulators that seem to perform better than their RetroArch core equivalents (list below). I won't get into all of the setup / emulation guide nonsense; you can find plenty of walkthroughs on YouTube and elsewhere - but I will tell you from experience - Android is WAY easier to set up for emulation vs. Windows or another OS. And since this is stock Android, there are very few restrictions on the file system, etc. to manage your setup.

I saved the best for last - and this is truly why you should really check out the M.O.J.O. even if you are remotely curious. Yes, it was discontinued years ago (2019, I think). It has not been getting updates - but even so, it continues to run great, and is extremely reliable and consistent for retro emulation. These sell on eBay, regularly for around $60 BRAND NEW with the controller included. You absolutely can't beat that for a fantastic emulator-ready setup that will play anything from the 90s without skipping a beat. And additional controllers are readily available, new, on eBay as well.

Here's a list of the systems / emulators I run on my setup:

Arcade / MAME4droid (0.139u1) 1.16.5 or FinalBurn Alpha / aFBA 0.2.97.35 (aFBA is better for Neo Geo and CPS2 titles bc it provides GPU-driven hardware acceleration vs. MAME which is CPU only)

NES / FCEUmm (Retroarch)

Game Boy / Emux GB (Retroarch)

SNES / SNES9X (Retroarch)

Game Boy Advance / mGBA (Retroarch)

Genesis / PicoDrive (Retroarch)

Sega CD / PicoDrive (Retroarch)

32X / PicoDrive (Retroarch)

TurboGrafx 16 / Mednafen-Beetle PCE (Retroarch)

Playstation / ePSXe 2.0.16

N64 / Mupen64 Plus AE 2.4.4

Dreamcast / Reicast r7 (newer versions won't run)

PSP / PPSSPP 1.15.4

MS-DOS / DOSBox Turbo + DOSBox Manager

I found an extremely user friendly Front End called Gamesome (image attached). Unfortunately it is no longer listed on Google Play, but you can find the APK posted on the internet to download and install. If you don't want to mess with that, another great, similar Front End that is available via Google Play is called DIG.

If you are someone who enjoys emulation and retro-gaming like me, the M.O.J.O. is a great system and investment that won't disappoint. If you decide to go this route and have questions, DM me and I'll try to help you if I can.

Cheers - Techturd

#retro gaming#emulation#Emulators#Android#Nintendo#Sega#Sony#Playstation#N64#Genesis#Megadrive#Mega drive#32x#Sega cd#Mega cd#turbografx 16#Pc engine#Dos games#ms dos games#ms dos#Psp#Snes#Famicom#super famicom#Nes#Game boy#Gameboy#gameboy advance#Dreamcast#Arcade


Cost of Setting Up an Electric Vehicle Charging Station in India (2025 Guide)

With India accelerating its transition to electric mobility, the demand for EV charging stations is growing rapidly. Whether you're a business owner, real estate developer, or green tech enthusiast, setting up an electric vehicle (EV) charging station is a promising investment. But how much does it really cost to build one? Let's break it down.

Before diving into the costs, it's important to understand the types of EV chargers and the scope of services provided by modern EV charging solution providers like Tobor, a rising name in the EV infrastructure space offering smart, scalable, and efficient EV charging solutions across India.

Types of EV Charging Stations

Understanding the charger types is essential, as this heavily influences the overall cost:

1. AC Charging Stations

AC (Alternating Current) chargers are typically used for slower charging applications, ideal for residential societies, office complexes, and commercial locations with longer dwell times.

Level 1 Chargers: 3.3 kW output, suitable for two- and three-wheelers.

Level 2 Chargers: 7.2 kW to 22 kW, suitable for four-wheelers (e.g., home or workplace).

2. DC Fast Charging Stations

DC (Direct Current) chargers are used where quick charging is required, such as highways, malls, or public parking zones.

DC Fast Chargers: Start from 30 kW and go up to 350 kW.

They can charge an electric car from 0 to 80% in under an hour, depending on the vehicle.
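The "under an hour" figure follows from simple energy arithmetic: charging time is roughly the energy added divided by the charging power. Below is a minimal sketch that assumes a hypothetical 40 kWh battery, constant charging power, and no losses or taper. Taking it from 0 to 80% means adding 32 kWh, which works out to about 64 minutes at 30 kW and 32 minutes at 60 kW, compared with roughly 4.4 hours on a 7.2 kW AC charger. The `vehicle_max_kw` parameter is there because a car may accept less power than the charger can supply.

```python
from typing import Optional


def charge_time_minutes(
    battery_kwh: float,
    start_soc: float,
    end_soc: float,
    charger_kw: float,
    vehicle_max_kw: Optional[float] = None,
) -> float:
    """Idealized charging time at constant power between two states of charge.

    Ignores charging losses and the power taper near full charge, so real
    sessions (especially DC sessions above ~80%) take longer.
    """
    if not 0.0 <= start_soc < end_soc <= 1.0:
        raise ValueError("need 0 <= start_soc < end_soc <= 1")
    # The car may accept less power than the charger can deliver.
    power_kw = charger_kw if vehicle_max_kw is None else min(charger_kw, vehicle_max_kw)
    if power_kw <= 0:
        raise ValueError("charging power must be positive")
    energy_kwh = battery_kwh * (end_soc - start_soc)
    return energy_kwh / power_kw * 60.0


# Hypothetical 40 kWh car charged from 0 to 80% on each charger class above.
for kw in (3.3, 7.2, 30, 60):
    print(f"{kw:>5} kW: {charge_time_minutes(40, 0.0, 0.8, kw):.0f} min")
```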

Cost Breakdown for EV Charging Station Setup

The total cost to set up an electric car charging station in India can vary depending on the type of charger, infrastructure, and location. Here is a detailed breakdown:

1. EV Charging Equipment Cost

The cost of the electric car charger itself is one of the biggest components:

AC Chargers: ₹50,000 to ₹1.5 lakh

DC Fast Chargers: ₹5 lakh to ₹40 lakh (depending on capacity and standards like CCS, CHAdeMO, Bharat DC-001)

Tobor offers a range of chargers including TOBOR Lite (3.3 kW), TOBOR 7.2 kW, and TOBOR 11 kW – suitable for home and commercial use.

2. Infrastructure Costs

You’ll also need to invest in site preparation and power infrastructure:

Land Lease or Purchase: Costs vary widely by city and location.

Electrical Upgrades: Transformer, cabling, and power grid integration can cost ₹5 to ₹10 lakh.

Civil Work: Parking bays, shelter, lighting, signage, and accessibility features – ₹2 to ₹5 lakh.

Installation: Depending on charger type and electrical capacity, installation can range from ₹50,000 to ₹3 lakh.

3. Software & Networking Costs

Smart EV charging stations are often connected to networks for billing, load management, and user access:

EVSE Management Software: ₹50,000 to ₹2 lakh depending on features (Tobor integrates smart software as part of its offering).

Mobile App Integration: Enables users to find, reserve, and pay at your station.

OCPP (Open Charge Point Protocol): Ensures interoperability and scalability of your station.
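OCPP is the open protocol that lets a charger talk to any compatible management backend. In its JSON flavor (OCPP-J, as used by OCPP 1.6 over WebSocket), every message is a small JSON array whose first element is the message type: 2 for a request, 3 for a result, and 4 for an error. Below is a minimal sketch for building and checking those frames. It assumes OCPP 1.6 field names for `BootNotification` (later versions restructure this payload), and the vendor and model strings are hypothetical. A real charger would also need a WebSocket client and the rest of the message set.

```python
import json
import uuid
from typing import Any, Dict, Optional, Tuple

# OCPP-J message type IDs.
CALL, CALL_RESULT, CALL_ERROR = 2, 3, 4
# Expected array length for each message type.
FRAME_LENGTH = {CALL: 4, CALL_RESULT: 3, CALL_ERROR: 5}


def build_call(action: str, payload: Dict[str, Any], message_id: Optional[str] = None) -> str:
    """Serialize a request frame: [2, uniqueId, action, payload]."""
    if message_id is None:
        message_id = str(uuid.uuid4())  # must be unique per outstanding request
    return json.dumps([CALL, message_id, action, payload])


def parse_frame(raw: str) -> Tuple[Any, ...]:
    """Decode a frame and check its length against its message type."""
    frame = json.loads(raw)
    if not isinstance(frame, list) or not frame:
        raise ValueError("OCPP-J frames are non-empty JSON arrays")
    msg_type = frame[0]
    expected = FRAME_LENGTH.get(msg_type) if isinstance(msg_type, int) else None
    if expected is None or len(frame) != expected:
        raise ValueError(f"malformed frame: {frame!r}")
    return tuple(frame)


boot = build_call(
    "BootNotification",
    # Hypothetical values; a real charger reports its own vendor and model.
    {"chargePointVendor": "ExampleVendor", "chargePointModel": "Example-7kW"},
)
print(parse_frame(boot)[2])  # BootNotification
```

Because the framing is vendor-neutral, a station that speaks it can in principle be moved to a different management backend without replacing hardware, which is the interoperability point made above.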

4. Operational & Maintenance Costs

Running an EV charging station includes recurring costs:

Electricity Bills: ₹5–₹15 per kWh, depending on the state and provider.

Internet Connectivity: ₹1,000–₹2,000 per month for online monitoring.

Station Maintenance: ₹50,000 to ₹1 lakh annually.

Staff Salaries: If you have on-site attendants, this could range from ₹1 to ₹3 lakh annually.

Marketing: ₹50,000 or more for signage, promotions, and digital visibility.
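These recurring items can be rolled into a yearly low/high operating-cost range. Below is a minimal sketch that uses the figures listed above. It assumes electricity bought roughly equals energy sold (no losses), treats the "₹50,000 or more" marketing figure as a yearly floor, and uses a hypothetical 20,000 kWh of annual sales for the example.

```python
from typing import Dict, List, Tuple

# (low, high) ranges in rupees, copied from the list above.
ELECTRICITY_RS_PER_KWH = (5.0, 15.0)
FIXED_RS_PER_YEAR: Dict[str, Tuple[float, float]] = {
    "internet": (1_000 * 12, 2_000 * 12),  # ₹1,000–2,000 per month
    "maintenance": (50_000, 100_000),
    "marketing": (50_000, 50_000),  # assumption: "₹50,000 or more" as a yearly floor
}
STAFF_RS_PER_YEAR = (100_000, 300_000)  # only if attendants are on site


def annual_opex_range(kwh_sold_per_year: float, staffed: bool = False) -> Tuple[float, float]:
    """Return (low, high) yearly operating cost in rupees."""
    items: List[Tuple[float, float]] = list(FIXED_RS_PER_YEAR.values())
    if staffed:
        items.append(STAFF_RS_PER_YEAR)
    low = kwh_sold_per_year * ELECTRICITY_RS_PER_KWH[0] + sum(lo for lo, _ in items)
    high = kwh_sold_per_year * ELECTRICITY_RS_PER_KWH[1] + sum(hi for _, hi in items)
    return low, high


low, high = annual_opex_range(20_000)  # hypothetical: 20,000 kWh sold per year, unstaffed
print(f"₹{low:,.0f} to ₹{high:,.0f} per year")  # ₹212,000 to ₹474,000 per year
```

At this volume, electricity is the largest and most variable cost. This is why the state tariff matters as much as the equipment choice.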

Total Investment Required

Here’s an estimate of the total cost based on the scale of your EV charging station:

Level 1 (Basic AC): ₹1 lakh – ₹3 lakh

Level 2 (Commercial AC): ₹3 lakh – ₹6 lakh

DC Fast Charging Station: ₹10 lakh – ₹40 lakh

These costs can vary based on customization, location, and electricity load availability. Tobor offers tailored solutions to help you choose the right hardware and software based on your needs.

Government Support and Subsidies

To promote EV adoption and reduce the cost of EV infrastructure:

FAME II Scheme: Offers capital subsidies for charging stations.

State Incentives: States like Delhi, Maharashtra, Kerala, and Gujarat offer reduced electricity tariffs, subsidies up to 25%, and faster approvals.

Ease of Licensing: As per Ministry of Power guidelines, EV charging is a de-licensed activity, making it easier to start.

Return on Investment (ROI)

An EV charging station in a good location with growing EV traffic can break even in 3 to 5 years. Revenue comes from:

Charging fees (per kWh or per session)

Advertisement and partnerships

Value-added services (e.g., parking, cafés, shopping zones nearby)
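The 3-to-5-year break-even claim can be checked with a simple payback calculation: upfront cost divided by yearly margin. Below is a minimal sketch with illustrative numbers that are all hypothetical: a DC site costing ₹15 lakh, 60,000 kWh sold per year, ₹18 charged versus ₹10 paid per kWh, and ₹2 lakh of other fixed costs. The subsidy parameter assumes the "up to 25%" state support is applied to capital cost. With these inputs, payback is about 5.4 years without a subsidy and about 4.0 years with 25%. The calculation is undiscounted and leaves out advertising and value-added revenue, which would shorten payback.

```python
import math


def simple_payback_years(
    capex_rs: float,
    kwh_per_year: float,
    tariff_rs_per_kwh: float,
    energy_cost_rs_per_kwh: float,
    fixed_opex_rs_per_year: float,
    subsidy_fraction: float = 0.0,
) -> float:
    """Years until cumulative margin covers the (subsidized) upfront cost.

    Undiscounted and constant-volume: utilization growth, tariff changes,
    and equipment replacement are not modelled.
    """
    if not 0.0 <= subsidy_fraction < 1.0:
        raise ValueError("subsidy_fraction must be in [0, 1)")
    net_capex = capex_rs * (1.0 - subsidy_fraction)
    margin = kwh_per_year * (tariff_rs_per_kwh - energy_cost_rs_per_kwh) - fixed_opex_rs_per_year
    # A non-positive margin means the station never pays back.
    return math.inf if margin <= 0 else net_capex / margin


# Hypothetical DC site: ₹15 lakh capex, 60,000 kWh/yr, ₹18 vs ₹10 per kWh, ₹2 lakh fixed.
for subsidy in (0.0, 0.25):
    years = simple_payback_years(1_500_000, 60_000, 18, 10, 200_000, subsidy)
    print(f"subsidy {subsidy:.0%}: {years:.1f} years")
```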

Final Thoughts

With India's electric mobility market booming, setting up an EV charging station is not only a sustainable choice but also a profitable long-term investment. Whether you're a fleet operator, business owner, or infrastructure developer, now is the perfect time to invest.

For reliable equipment, integrated software, and end-to-end EV charging solutions, Tobor is one of the leading EV charging solution providers in India. From residential setups to large-scale commercial EVSE projects, Tobor supports every step of your journey toward green mobility.


"Resonance of Self"

In the soft glow of her bedroom, Clara, a curious biomedical engineer, contemplated an experiment blending her passions for anatomy and sensory exploration. She carefully selected a smooth, pill-sized Bluetooth microphone, designed for safe ingestion, and synced it to her surround-sound speakers. The device, encased in biocompatible silicone, was meant to pass harmlessly through her system. With a deep breath, she swallowed it, lay back, and tuned into her body’s symphony.

Hour 1: The Descent

The microphone’s journey began with rhythmic *thumps* of peristalsis—muscular waves guiding it down her esophagus. Each contraction echoed like a distant drumbeat, syncopated with her accelerating heartbeat. As arousal stirred, her pulse quickened, the dual cadence merging into a hypnotic rhythm. She noted the gurgle of air passing the cardiac sphincter, a hollow *whoosh* as the device entered her stomach. Acidic whispers fizzed like champagne bubbles, a prelude to digestion.

Hour 2: Gastric Sonata

Her stomach, now churning with enzymes, produced low, resonant groans—*borborygmi*—that throbbed through the speakers. The sounds deepened as her body responded to touch; visceral echoes mirrored the flutter of her fingertips. A crescendo of blood rushed in her ears, harmonizing with the stomach’s primal drone. She marveled at how her arousal amplified every gurgle, each surge of gastric juice a counterpoint to her swelling desire.

Hour 3: Intestinal Whispers

Passing into the small intestine, the environment shifted. Gentle, liquid murmurs surrounded the mic—a susurrus of chyme flowing through coiled channels. Soft, wet clicks marked villi absorbing nutrients, like raindrops pattering glass. Clara’s breaths grew shallow; her muscles tensed. The sounds grew intimate, almost conversational, as peristaltic ripples carried the device forward, each undulation syncing with her movements.

Hour 4: Echoes of Pulse

Near the iliac artery, the mic captured her heartbeat’s deep *lub-dub*, now thunderous with excitement. Blood surged in time with her climax, the artery’s vibrations thrumming through the speakers. Mesenteric membranes rustled like silk with each shudder, while distant colonic rumbles provided a bassline. She dissolved into the biomechanical duet, her body’s boundaries blurring.

Hour 5: Quietus

As the device descended into the colon, the sounds mellowed—a tapestry of slow gasps and fluid shifts. Fatigue softened her breathing; her heartbeat steadied. The mic, journey complete, transmitted final whispers: a sigh of peristalsis, a gurgle of transit. Clara smiled, spent and enlightened, her experiment a testament to the body’s hidden music.

Clara later presented her findings in a thesis on bioacoustics, anonymized and clinical. The microphone? Retrieved safely, its data a private ode to curiosity. She cautioned readers: *“The body’s poetry is best heard metaphorically. Leave the hardware to labs.”*


Myntra co-founder Mukesh Bansal gets VC funding for new startup Nurix AI

Mukesh Bansal, the co-founder of online fashion major Myntra and of Cult.fit, has raised $27.5 million for his new artificial intelligence firm, Nurix AI. The round combines seed and Series A funding and was backed by Accel and General Catalyst.

Vision and Strategic Partnerships of Nurix AI

Nurix AI focuses on AI-based customer communication tools. It aims to deploy AI agents that work within enterprises and make their communication with customers more effective. Bansal believes that in the not-too-distant future, advanced AI agents guided by human expertise will perform a large share of work, producing unprecedented gains in efficiency and product quality.

Nurix AI intends to forge strategic collaborations with AI hardware and product makers to bring state-of-the-art AI technologies into its solutions and gain a competitive advantage in the market. Strengthening its research and development functions will also be vital to keeping its solutions at the cutting edge of AI.

Funding Details

The $27.5 million will play a critical role in accelerating Nurix AI's operations. The funds will be used to expand the company's technology portfolio, strengthen research and development, and build strategic collaborations with AI hardware and product providers. The investment reflects growing demand for AI solutions in Asian and North American markets, and Nurix AI's ability to address that demand directly will be central to its strategy.

Mukesh Bansal said, “At Nurix, we envision a future where AI agents, guided by human expertise, handle a significant portion of tasks, driving unprecedented gains in productivity and quality.”

Entrepreneurial Journey of Mukesh Bansal

Mukesh Bansal co-founded Myntra in 2007, and it grew into one of India's most popular fashion e-tailers. In 2014, he sold Myntra to its biggest rival, Flipkart. He later co-founded Curefit, a fitness services firm, in 2016; it was renamed Cult.fit after receiving funding from Tata Digital. Bansal was also President of Tata Digital before starting a two-year sabbatical from the company in 2023.

Market Potential and Unique Approach

The overall AI market is growing rapidly, and enterprises are increasingly adopting AI solutions to improve productivity and customer interaction. Market research projects that the AI market will grow at a CAGR of 42.2% from 2020 to 2027. This growth is driven by improvements in AI technology, rising investment, and the ever-growing need for AI solutions across organizations.

Nurix AI has the opportunity to stand out by offering AI-enhanced customer experience services delivered jointly with human contributors. Its first offering targets the BPO sector, where the company aims to help enterprises hold engaged, productive conversations with their customers. Through AI integration, Nurix AI hopes to minimize the time and effort customers have to spend interacting with the AI itself.
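A 42.2% compound annual growth rate adds up quickly. If 2020 is treated as the base year, there are seven years of compounding to 2027, so the projection implies a market roughly 11.8 times its 2020 size. Below is a minimal sketch of that arithmetic, plus the inverse calculation for recovering a CAGR from start and end values. The function names are illustrative, and the 2020 base-year reading is an assumption about how the projection was framed.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding at `cagr`."""
    return (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Inverse: the constant annual rate that turns start_value into end_value."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


multiple = growth_multiple(0.422, 2027 - 2020)  # 7 compounding years, 2020 as base
print(f"{multiple:.1f}x")  # 11.8x
```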

Conclusion

Nurix AI, the new startup founded by Mukesh Bansal, aims to become the next major player in AI and customer engagement. With $27.5 million in funding from Accel and General Catalyst, the firm is prepared to expand its operations to meet demand. As Nurix AI grows, it is likely to influence how companies use AI to interact with their customers.


How to enable Hardware acceleration in Firefox ESR

For reference, my computer has Intel integrated graphics, and my operating system is Debian 12 Bookworm with VA-API for graphics. While I had hardware video acceleration enabled for many applications, I had to spend some time last year trying to figure out how to enable it for Firefox. I found this article and followed it, but at first I couldn't figure out how to use the environment variable. So here's a guide now for anyone new to Linux!

First, determine whether your session uses the Wayland or X11 windowing system, if you haven't already. In a terminal, enter:

echo "$XDG_SESSION_TYPE"

This will tell you which windowing system you are using. Once you've followed the instructions in the linked article, edit (as root or with root privileges) /usr/share/applications/firefox-esr.desktop with your favorite text-editing software. I like to use nano! So for example, you would enter in a terminal:

sudo nano /usr/share/applications/firefox-esr.desktop

Then, navigate to the line that says "Exec=...". Replace that line with the following line, depending on whether you use Wayland or X11. If you use Wayland:

Exec=env MOZ_ENABLE_WAYLAND=1 firefox

If you use X11:

Exec=env MOZ_X11_EGL=1 firefox

Then save the file! If you are using nano, press Ctrl+X, then Y, then Enter. Restart Firefox ESR if it was already running, and hardware acceleration should now work! Enjoy!
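If you'd rather not edit the line by hand, here is a small shell sketch that does the same two steps. It picks the Exec= line from this post based on the session type, then swaps it into a .desktop file. The function names exec_line_for_session and set_exec_line are made up for this example. The script also assumes GNU sed, which is Debian's default.

```shell
#!/bin/sh
# Sketch: choose the Exec= line from this post for a session type and write
# it into a .desktop file. Assumes GNU sed (Debian's default), because the
# "0,/regex/" address used below is a GNU extension.

# Print the Exec= line this post suggests for "wayland" or "x11".
exec_line_for_session() {
    case "$1" in
        wayland) echo 'Exec=env MOZ_ENABLE_WAYLAND=1 firefox' ;;
        x11)     echo 'Exec=env MOZ_X11_EGL=1 firefox' ;;
        *)       echo "Unrecognized session type: '$1'" >&2; return 1 ;;
    esac
}

# Replace only the FIRST line starting with "Exec=" in the given file (the
# main launcher). Later Exec= lines belong to actions such as "New Window"
# and are left alone. The new line must not contain "|", "&" or a backslash,
# because sed would interpret them in the replacement.
set_exec_line() {
    file=$1
    new_line=$2
    if [ ! -f "$file" ]; then
        echo "No such file: $file" >&2
        return 1
    fi
    sed -i "0,/^Exec=/s|^Exec=.*|$new_line|" "$file"
}

# Show which line applies to the current session.
if ! exec_line_for_session "${XDG_SESSION_TYPE:-}"; then
    echo 'Check the output of: echo "$XDG_SESSION_TYPE"' >&2
fi
```

As a safer variant, you could copy firefox-esr.desktop to ~/.local/share/applications/ and run set_exec_line on that copy without root. A .desktop file there with the same name takes precedence over the system one, and it is not overwritten when the firefox-esr package is upgraded.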

#linux#debian#gnu/linux#hardware acceleration#transfemme#Honestly I might start doing more Linux tutorials!#Linux is fun!

Note

Hi, your blog is the first I could find when searching tumblr for OBS (the screen capture program) so I hope it's alright if I ask for some help with OBS?

In short, for some reason whenever I boot up OBS to record something, the recording comes out horrendously framey. I'm talking seconds per frame. After an hour of recording junk footage, however, it manages to record smoothly? Having to record an hour of junk for good-quality video is both a waste of time and computer space (plus a friend of mine said she never had this issue back when she used it herself), so like... do you have any idea what could be going on? How to fix this? As someone who uses it regularly for streams, I imagine you've done your fair share of troubleshooting, but even if you don't know (or choose not to answer), I thank you for your time. 👍

I'd be more than happy to help! I'm not sure what you're trying to record or what your video and encoding settings are, but here are some tips for framerate and stuttering issues:

If you're recording a game, try turning off VSYNC.

Check your encoding settings. I'm unsure of your specs and internet speeds, so you'll likely need to check this yourself, but if you google encoding settings there are tonnes of good guides!

Switch encoding from GPU to CPU, or vice versa.

Open OBS -> Settings -> Advanced -> Sources -> Uncheck 'Enable Browser Source Hardware Acceleration' then restart OBS.

Run OBS as administrator.

If none of the above helps, please let me know, and if you're able to provide your PC specs and what you're recording, that will help a tonne with troubleshooting!

Text

Lethal Company Terminal Macros

I hope everyone has had a magnificent holiday season. Alongside more conventional Christmas activities, I’ve also enjoyed contributing to Lethal Company's popularity boom.

From the ominous aesthetic to its simplicity, I have much praise to give this game, but foremost is the way it so tightly incorporates player communication. Because talking is how you show you're still alive, the game actively encourages banter, if only to confirm you're still breathing, which makes it all the funnier when it's abruptly interrupted by someone's quietus.

I’ve also been charmed by the game’s terminal, whose functionality further enhances team communication. The four-person team in which one player uses the terminal monitor to guide the others and lock monsters behind shutters is one of my favorite dynamics to play in. When done right, players avoid getting lost, and a team wipe becomes almost a non-threat.

Lethal Company YouTuber Wurps made an excellent video on terminal usage. I recommend watching it (as well as his radar-booster video), as both pertain to this post and demonstrate the power of the terminal well.

The video brings up the usefulness of macros for the terminal guy but doesn’t say much about how to get them. Understandably, there are many ways to do so. This post offers my approach, which I hope is accessible to folks who haven’t looked into macros before.

I did a rough, broad survey of the macros other folks were using before deciding I’d rather design my own set. I aimed for something snappy, free, usable without mods, hardware-independent, and easy to adjust. The macros I wrote made my terminal use more comfortable and enjoyable, and I hope they can help others as well.

FULL USE GUIDE

Download and Install LibreAutomate

Download this script file, by yours truly

Open LibreAutomate, select File > Export, import > Import zip…, and choose the downloaded zip file.

Find the “LETHAL COMPANY TERMINAL SHORTCUTS” script in LibreAutomate and press Run

Open Lethal Company and make sure you are playing in Windowed Fullscreen (or Windowed) mode in the settings.

In game, use the keys F1 through F10 and Shift+F1 through Shift+F10 to accelerate terminal commands.

When done playing, end the script or close LibreAutomate to revert functionality of the F keys.

After hours of play-testing, I’ve settled on this command configuration:

(made for v45, if later versions add more commands, consider looking for an updated script that re-prioritizes shortcuts)

F1: SWITCH
F2: VIEW MONITOR
Shift+F1: SWITCH playername
Shift+F2: pop-up dialog to input playername
F3: disable list of turrets
Shift+F3: pop-up dialog to input list of turrets
Shift+F7: Forbidden macro from the Wurps video. Avoid using if possible.
F4: PING radar-booster
Shift+F4: pop-up dialog to input radar-booster name(s)
F5 and Shift+F5: FLASH radar-booster (shift is a bit slower but works better)
F6: TRANSMIT _
Shift+F6: clears command line
F7: SCAN
F8: STORE
Shift+F8: pop-up dialog to input full shopping list
F9: MOONS
Shift+F9: MOONS then COMPANY then CONFIRM (w/o enter)
F10: STORAGE
Shift+F10: BESTIARY

I hope this helps boost enjoyment and prowess with the terminal. Best of fortune to anyone attempting to fill the role of terminal guy in-game!

Text

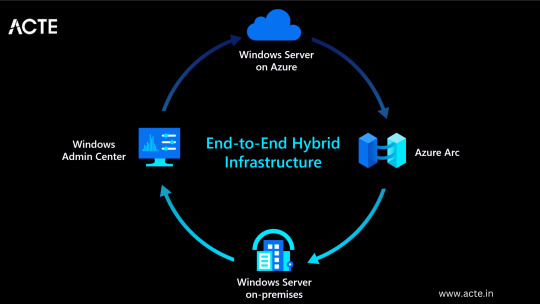

A Complete Guide to Mastering Microsoft Azure for Tech Enthusiasts

With technology advancing rapidly, businesses around the world are shifting toward cloud computing to enhance their operations and stay ahead of the competition. Microsoft Azure, a powerful cloud computing platform, offers a wide range of services and solutions for various industries. This comprehensive guide aims to provide tech enthusiasts with an in-depth understanding of Microsoft Azure, its features, and how to leverage its capabilities to drive innovation and success.

Understanding Microsoft Azure

Azure is Microsoft's cloud computing platform. It provides reliable and scalable solutions for businesses to build, deploy, and manage applications and services through Microsoft-managed data centers. Azure offers a vast array of services, including virtual machines, storage, databases, networking, and more, enabling businesses to optimize their IT infrastructure and accelerate their digital transformation.

Cloud Computing and its Significance

Cloud computing has revolutionized the IT industry by providing on-demand access to a shared pool of computing resources over the internet. It removes much of the need for businesses to buy and maintain physical hardware and infrastructure, reducing costs and improving scalability. Microsoft Azure embraces cloud computing principles to enable businesses to focus on innovation rather than infrastructure management.

Key Features and Benefits of Microsoft Azure

Scalability: Azure provides the flexibility to scale resources up or down based on workload demands, ensuring optimal performance and cost efficiency.

Vertical Scaling: Increase or decrease the size of resources (e.g., virtual machines) within Azure.

Horizontal Scaling: Expand or reduce the number of instances across Azure services to meet changing workload requirements.

Reliability and Availability: Microsoft Azure is built for high availability, with globally distributed data centers, redundant infrastructure, and automatic failover capabilities.

Service Level Agreements (SLAs): Microsoft publishes SLAs for its services, each committing to a specific level of availability.

Availability Zones: Let you distribute resources across physically separate datacenter locations within a region for fault tolerance.

Security and Compliance: Azure incorporates robust security measures, including encryption, identity and access management, threat detection, and regulatory compliance adherence.

Azure Security Center (now Microsoft Defender for Cloud): Provides centralized security monitoring, threat detection, and compliance management.

Compliance Certifications: Azure complies with various industry-specific security standards and regulations.

Hybrid Capability: Azure seamlessly integrates with on-premises infrastructure, allowing businesses to extend their existing investments and create hybrid cloud environments.

Azure Stack: Enables organizations to build and run Azure services on their premises.

Virtual Network Connectivity: Establishes secure connections between on-premises infrastructure and Azure services.

Cost Optimization: Azure offers pay-as-you-go (consumption-based) and reserved pricing models, along with cost management tools.

Azure Cost Management: Helps businesses track and optimize their cloud spending, providing insights and recommendations.

Azure Reserved Instances: Offers significant discounts in exchange for a one- or three-year commitment to specific Azure resources.

Extensive Service Catalog: Azure offers a wide range of services and tools, including app services, AI and machine learning, Internet of Things (IoT), analytics, and more, empowering businesses to innovate and transform digitally.
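Here is a concrete illustration of the SLA point above. When an application needs several Azure services to be up at the same time, a common back-of-the-envelope estimate multiplies their availabilities. This is a sketch that assumes failures are independent. The 99.95% and 99.99% figures below are illustrative numbers, not quoted SLAs for any particular service.

```latex
A_{\text{app}} = \prod_{i=1}^{n} A_i
\qquad\text{e.g.}\qquad
0.9995 \times 0.9999 = 0.99940005 \approx 99.94\%
```

The combined figure is lower than that of either service on its own. This is why spreading resources across Availability Zones is the usual way to push availability back up: it adds redundancy rather than more dependencies.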

Learning Path for Microsoft Azure

To master Microsoft Azure, tech enthusiasts can follow a structured learning path that covers the fundamental concepts, hands-on experience, and specialized skills required to work with Azure effectively. I advise looking at the ACTE Institute, which offers a comprehensive Microsoft Azure Course.

Foundational Knowledge

Familiarize yourself with cloud computing concepts, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

Understand the core components of Azure, such as Azure Resource Manager, Azure Virtual Machines, Azure Storage, and Azure Networking.

Explore Azure architecture and the various deployment models available.

Hands-on Experience

Create a free Azure account to access the Azure portal and start experimenting with the platform.

Practice creating and managing virtual machines, storage accounts, and networking resources within the Azure portal.

Deploy sample applications and services using Azure App Service, Azure Functions, and Azure's container services (such as Azure Container Instances).

Certification and Specializations

Pursue Azure certifications to validate your expertise in Azure technologies. Microsoft offers role-based certifications, including Azure Administrator, Azure Developer, and Azure Solutions Architect.

Gain specialization in specific Azure services or domains, such as Azure AI Engineer, Azure Data Engineer, or Azure Security Engineer. These specializations demonstrate a deeper understanding of specific technologies and scenarios.

Best Practices for Azure Deployment and Management

Deploying and managing resources effectively in Microsoft Azure requires adherence to best practices to ensure optimal performance, security, and cost efficiency. Consider the following guidelines:

Resource Group and Azure Subscription Organization

Organize resources within logical resource groups to manage and govern them efficiently.

Leverage Azure Management Groups to establish hierarchical structures for managing multiple subscriptions.

Security and Compliance Considerations

Implement robust identity and access management mechanisms, such as Azure Active Directory (now Microsoft Entra ID).

Enable encryption at rest and in transit to protect data stored in Azure services.

Regularly monitor and audit Azure resources for security vulnerabilities.

Ensure compliance with relevant industry standards and regulations, such as ISO 27001, HIPAA, or GDPR.

Scalability and Performance Optimization

Design applications to take advantage of Azure’s scalability features, such as autoscaling and load balancing.

Leverage Azure CDN (Content Delivery Network) for efficient content delivery and improved performance worldwide.

Optimize resource configurations based on workload patterns and requirements.

Monitoring and Alerting

Utilize Azure Monitor and Azure Log Analytics to gain insights into the performance and health of Azure resources.

Configure alert rules to notify you about critical events or performance thresholds.

Backup and Disaster Recovery

Implement appropriate backup strategies and disaster recovery plans for essential data and applications.

Leverage Azure Site Recovery to replicate and recover workloads in case of outages.

Mastering Microsoft Azure empowers tech enthusiasts to harness the full potential of cloud computing and revolutionize their organizations. By understanding the core concepts, leveraging hands-on practice, and adopting best practices for deployment and management, individuals become equipped to drive innovation, enhance security, and optimize costs in a rapidly evolving digital landscape. Microsoft Azure’s comprehensive service catalog ensures businesses have the tools they need to stay ahead and thrive in the digital era. So, embrace the power of Azure and embark on a journey toward success in the ever-expanding world of information technology.

#microsoft azure#cloud computing#cloud services#data storage#tech#information technology#information security

Text

OBS Studio Best Practices: Elevating Your Content Creation

As the world of content creation continues to evolve, OBS Studio (Open Broadcaster Software) has emerged as a powerful and versatile tool for live streaming and recording.

To achieve professional and engaging content, it is essential to adopt best practices when using OBS Studio.

In this comprehensive guide, we will explore a wide range of tips and recommendations to help you make the most of OBS Studio and elevate your live streams and recordings to the next level.

1. Plan Your Content

Before going live or recording, plan your content carefully. Consider the following:

Theme and Focus: Determine the theme and focus of your content. Whether you're streaming games, tutorials, or creative activities, a clear theme helps build a dedicated audience.

Storyboarding: Create a storyboard or outline to organize your scenes and sources, ensuring smooth transitions and a structured presentation.

Engaging Introductions: Start your streams with captivating intros that hook your audience and set the tone for the rest of the content.

2. Optimize Your Settings

Efficient OBS Studio settings are crucial for a seamless content creation experience. Consider the following optimizations:

Output Settings: Adjust output resolution, bitrate, and framerate based on your internet connection and desired video quality.

Hardware Acceleration: If your GPU supports it, use a hardware encoder (such as NVIDIA NVENC) to offload video encoding from the CPU.

Use Scenes and Sources Wisely: Organize your scenes and sources to minimize clutter and confusion during your streams or recordings.

3. Audio Quality Matters

Audio is a critical aspect of content creation. Pay attention to the following audio best practices:

Microphone Quality: Invest in a good-quality microphone to deliver clear and crisp audio to your viewers.

Noise Suppression: Use OBS Studio's noise suppression filters to minimize background noise and enhance audio clarity.

Audio Balance: Ensure a proper balance between game audio, voice commentary, and background music.

4. Visual Appeal with Overlays

Engage your audience visually by using appealing overlays:

Custom Overlays: Create custom overlays that reflect your brand and add a professional touch to your streams.

Alerts and Widgets: Integrate alerts for followers, subscribers, and donations to acknowledge and appreciate your audience's support.

5. Master Transitions

Smooth scene transitions create a polished presentation:

Fades and Cuts: Use subtle fades or quick cuts between scenes for smooth transitions.

Stinger Transitions: Implement stinger transitions with dynamic video effects for a professional touch.

6. Engage with Your Audience

Building a connection with your audience is vital for content creators:

Interactive Elements: Add chat integration and interact with your viewers to create a sense of community.

Respond to Chat: Engage with your viewers by responding to their messages and questions during your streams.

7. Monitor Performance

Keep an eye on your OBS Studio performance to ensure a seamless streaming experience:

Performance Stats: Use OBS Studio's performance statistics to monitor CPU usage, dropped frames, and streaming bitrate.

Test Runs: Conduct test runs before going live to check the audio, video quality, and transitions.

8. Consistency is Key

Establishing a consistent schedule builds trust with your audience:

Regular Streams: Stick to a consistent streaming schedule to let your audience know when to expect your content.

Content Variety: Offer a mix of content to keep your viewers engaged and interested.

Conclusion

OBS Studio's best practices are fundamental to creating professional, engaging, and polished content.

By planning your content, optimizing settings, focusing on audio quality, using captivating visuals, mastering transitions, engaging with your audience, monitoring performance, and maintaining consistency, you can elevate your content creation game with OBS Studio.

Embrace these best practices and unlock the full potential of OBS Studio to connect with your viewers and build a dedicated community around your content. Happy streaming and recording!

Text

From the manufacturer

SanDisk Extreme Portable SSD

Your life’s an adventure. To capture and keep its best moments, you need fast, high-capacity storage that accelerates every move. The SanDisk Extreme Portable SSD features 1050MB/s** read and 1000MB/s** write speeds, letting you store your content and creations on a fast drive that fits seamlessly into your active lifestyle.

What’s in the Box:
· SanDisk Extreme Portable SSD
· Guide
· Type-C to Type-C cable
· Type-C to Type-A adapter

At a Glance
· Fast NVMe solid state performance in a portable, high-capacity drive
· Nearly 2x as fast as our previous generation
· Drop protection, IP65 water and dust resistance, X-ray and shock proofing(3)
· A durable silicone shell for added protection
· Handy carabiner loop to secure the drive to your belt loop or backpack
· Help keep private content private with the included hardware encryption(2)
· From SanDisk, the brand trusted by professional photographers worldwide
· Easily manage files and automatically free up space with the SanDisk Memory Zone app.(4)

Powerful yet Portable
Get fast NVMe solid state performance featuring 1050MB/s** read and 1000MB/s** write speeds in a portable, high-capacity drive that’s perfect for creating amazing content or capturing incredible footage.

Tough Enough to Take with You
Up to three-meter drop protection and IP65 water and dust resistance(3) mean this durable drive can take a beating.

Travel Confidently
A durable silicone shell that offers a premium feel and added protection to the drive’s exterior.

It Goes Where You Go
Use the handy carabiner loop to attach the drive to your belt loop or backpack for extra security when you’re out in the world.

Put a Lock on Your Files
Help keep private content private with the included password protection featuring 256‐bit AES hardware encryption.(2)

Professional-Grade Storage
From SanDisk, the brand professional photographers worldwide trust to handle their best shots and footage.

Legal Disclaimers
* 1GB = 1,000,000,000 bytes, 1TB = 1,000,000,000,000 bytes. Actual user capacity less.
** Up to 1050MB/s read speed, up to 1000MB/s write speed. Based on internal testing; performance may be lower depending on host device, interface, usage conditions and other factors. 1MB = 1,000,000 bytes.
1. See official SanDisk website for details.
2. Password protection uses 256-bit AES encryption and is supported by Windows 8, Windows 10 and macOS v10.9+ (software download required for Mac; see official SanDisk website).
3. Based on internal testing. IEC 60529 IP 55: Tested to withstand water flow (30 kPa) at 3 min.; limited dust contact does not interfere with operation. Must be clean and dry before use.
4. Download and installation required; see official SanDisk website for Memory Zone details.

Digital Storage Capacity: 1TB
Hardware Interface: NVMe | Included USB-C Cable with USB-C to USB-A Adapter
Read Speed: 1050MB/s, Write Speed: 1000MB/s | Compatible with PC, Mac computers, smartphones, and tablets
Up to 3 meter drop protection and IP65 water and dust resistance
Included password protection: 256-bit AES hardware encryption
Warranty: 5 Year Limited Warranty provided by SanDisk
Handy carabiner loop to secure the drive to your belt loop or backpack, plus Memory Zone™ file management app for on-the-go access
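The first disclaimer explains why the drive can appear smaller than "1TB" on some computers. The label uses decimal units, while some operating systems (Windows, for example) report sizes in binary units of 2^30 bytes. A quick worked conversion:

```latex
1\,\text{TB} = 10^{12}\ \text{bytes},\qquad
\frac{10^{12}}{2^{30}} = \frac{1{,}000{,}000{,}000{,}000}{1{,}073{,}741{,}824} \approx 931.3\ \text{GiB}
```

File-system formatting overhead then reduces the usable space slightly further.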

Text

How to Make AI: A Guide to An AI Developer’s Tech Stack

Globally, artificial intelligence (AI) is revolutionizing a wide range of industries, including healthcare and finance. Knowing the appropriate tools and technologies is crucial if you want to get into AI development. A well-organized tech stack can make all the difference, regardless of your level of experience as a developer. The top IT services in Qatar can assist you in successfully navigating AI development if you require professional advice.

Knowing the Tech Stack for AI Development

Programming languages, frameworks, cloud services, and hardware resources are all necessary for AI development. Let's examine the key elements of a tech stack used by an AI developer.

1. Programming Languages for the Development of AI

The first step in developing AI is selecting the appropriate programming language. Among the languages that are most frequently used are:

• Python: The most widely used language for artificial intelligence (AI) and machine learning (ML), thanks to its many libraries, including TensorFlow, PyTorch, and Scikit-Learn.
• R: Perfect for data analysis and statistical computing.
• Java: Used in big data solutions and enterprise AI applications.
• C++: Recommended for AI-powered games and high-performance computing.

Integrating web design services with AI algorithms can improve automation and user experience when creating AI-powered web applications.

2. Frameworks for AI and Machine Learning

AI/ML frameworks offer pre-built features and resources to speed up development. Among the most widely used frameworks are:

• TensorFlow: Google's open-source deep learning library.
• PyTorch: A versatile deep learning framework popular with researchers.
• Scikit-Learn: Well suited to conventional machine learning tasks such as regression and classification.
• Keras: A high-level neural network API built on TensorFlow.

To stay ahead of AI innovation, draw on AI/ML software development expertise to make the most of these frameworks.

3. Tools for Data Processing and Management

Large datasets are necessary for AI model training and optimization. Among the most important tools for handling and processing AI data are:

• Pandas: A robust Python library for data manipulation.
• Apache Spark: A distributed computing platform designed to handle large datasets.
• Google BigQuery: A cloud data warehouse for storing and analyzing large datasets.
• Hadoop: An open-source framework for distributed storage and processing of large amounts of data.

To keep performance high, AI developers must incorporate powerful data processing capabilities, which are frequently offered by the top IT services in Qatar.

4. AI Development Cloud Platforms

Because it offers scalable resources and computational power, cloud computing is essential to AI development. Well-known cloud platforms include:

• Google Cloud AI: Provides AI development tools, AutoML, and pre-trained models.
• Microsoft Azure AI: Offers machine learning services, cognitive APIs, and AI-driven automation.
• Amazon Web Services (AWS) AI: Offers computing resources, AI-powered APIs, and Deep Learning AMIs.

Integrating cloud services with web design services facilitates the smooth deployment and upkeep of AI-powered web applications.

5. AI Hardware and Infrastructure

AI development demands a lot of processing power. Important pieces of hardware include:

• GPUs (Graphics Processing Units): Crucial for AI training and deep learning.
• TPUs (Tensor Processing Units): Google's hardware accelerators designed specifically for AI.
• Edge computing devices: Used to deploy AI models on mobile and Internet of Things (IoT) devices.

To maximize hardware utilization, companies looking to implement AI should think about hiring professionals to develop AI/ML software.

Top Techniques for AI Development

1. Choosing the Appropriate AI Model

Depending on the needs of your project, choose among supervised, unsupervised, and reinforcement learning approaches.

2. Preprocessing and Augmenting Data

Clean and normalize the data to reduce bias and improve model accuracy, and augment it where training data is scarce.
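One concrete example of the normalization step is z-score standardization, which rescales each feature to zero mean and unit standard deviation. It is only one common choice (min-max scaling is another), shown here as a sketch:

```latex
z_i = \frac{x_i - \mu}{\sigma},\qquad
\mu = \frac{1}{n}\sum_{i=1}^{n} x_i,\qquad
\sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (x_i - \mu)^2}
```

For the values 2, 4, and 6, μ = 4 and σ = √(8/3) ≈ 1.633, so the standardized values are about −1.22, 0, and 1.22. In practice, μ and σ are computed on the training data only and then reused to transform validation and test data.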

3. Constant Model Training and Improvement

Update AI models frequently with fresh data to keep their performance from degrading over time.

4. Ensuring Ethical AI Procedures

Follow AI ethics guidelines to avoid bias, maintain transparency, and promote fairness.

In conclusion

A strong tech stack, comprising cloud services, ML frameworks, programming languages, and hardware resources, is necessary for AI development. Working with the top IT services in Qatar can give you the know-how required to create and implement AI solutions successfully, regardless of whether you're a business or an individual developer wishing to use AI. Furthermore, combining AI capabilities with web design services can improve automation, productivity, and user experience. Custom AI solutions and AI/ML software development are our areas of expertise at Aamal Technology Solutions. Get in touch with us right now to find out how AI can transform your company!

#Best IT Service Provider in Qatar#Top IT Services in Qatar#IT services in Qatar#web designing services in qatar#web designing services#Mobile App Development#Mobile App Development services in qatar

Text

Leveraging cloud partnerships for competitive advantage

A cloud partnership is a strategic alliance between businesses and cloud service providers that enables organizations to harness the full power of cloud computing. By partnering with experienced cloud vendors or technology firms, companies gain access to scalable infrastructure, advanced software tools, and expert support that accelerate digital transformation and operational efficiency.

In today’s fast-paced digital landscape, more businesses are turning to cloud partnerships to streamline IT operations, reduce costs, enhance security, and scale with agility. Whether you're a startup seeking flexible cloud hosting or an enterprise migrating legacy systems to the cloud, forming the right partnership can be a game-changer.

Cloud partnerships can take various forms:

Infrastructure partnerships (with providers like AWS, Microsoft Azure, or Google Cloud) offer scalable storage, computing power, and networking solutions.

Software partnerships provide access to SaaS platforms, DevOps tools, and AI/ML capabilities tailored to your industry.

Managed service partnerships allow companies to outsource ongoing cloud maintenance, monitoring, and support to certified professionals.

The benefits of a cloud partnership include:

Faster time-to-market through streamlined deployment and automation

Cost optimization by reducing hardware investments and paying only for what you use

Access to innovation through cutting-edge tools and regular updates from cloud providers

Transparency, trust, and common objectives are the foundation of a fruitful cloud partnership. It requires open communication, well-defined service-level agreements (SLAs), and alignment between business objectives and technical strategy. The right partner will not only provide infrastructure and tools but also guide your cloud journey—from planning and migration to optimization and ongoing innovation.

Whether you're modernizing IT systems, launching a cloud-native app, or building a hybrid architecture, a strategic cloud partnership from CONNACT ensures you have the right support and technology to thrive.

Interested in finding a trusted cloud partner for your business or exploring hybrid cloud collaboration opportunities? Let’s CONNACT and build something powerful together.
