#hardware acceleration


Text

"Netflix is using hardware acceleration to stop you from streaming to discord"

I AM BEGGING YOU TO LEARN WHAT HARDWARE ACCELERATION IS. MOST OF THE TIME A WEBSITE IS GOING TO SAY "HEY DISPLAY THIS IMAGE" AND YOUR BROWSER HAS YOUR CPU DO THE WORK OF DRAWING IT, SO THE FINISHED PICTURE ENDS UP IN AN ORDINARY SCREEN BUFFER. THAT BUFFER IS THE SAME FUCKING PLACE THAT DISCORD PULLS FROM TO SHARE YOUR SCREEN WHEN YOU'RE IN VC WITH YOUR FRIENDS. SO IF SOMETHING MEANS THE PICTURE NEVER LANDS THERE, FOR INSTANCE IF YOU HAVE HARDWARE ACCELERATION ENABLED IN YOUR BROWSER, WHICH LETS YOUR GPU DECODE AND DRAW THE VIDEO DIRECTLY TO YOUR SCREEN INSTEAD OF HANDING THE TASK OFF TO YOUR CPU, WELL THEN DISCORD IS JUST GONNA COME UP EMPTY, ISN'T IT?

IT IS NOT A PLOY BY NETFLIX TO MAKE YOUR FRIENDS PAY FOR SUBSCRIPTIONS I SWEAR TO FUCKING GOD IT IS A LEGITIMATE FUNCTION BECAUSE MOST PEOPLE DO NOT HAVE A CAPABLE PC AND THEY NEED TO SAVE CPU RESOURCES

I WORDED SOME OF THIS WRONG, BUT I'M JUST TIRED OF EXPLAINING WHAT HARDWARE ACCELERATION IS. HOLY SHIT.

69 notes


Text

FYI, if you're watching things on streaming services (like The Legend of Vox Machina) and you can't screenshot or screenshare because it goes black, that's caused by hardware acceleration in your web browser and can be turned off.

This article details how to disable hardware acceleration in Discord and multiple web browsers. It's a very simple setting to just toggle.

Once you disable this, screenshotting will work just fine and you can have a screensharing watch party with your friends over Discord.

Also, please switch to Firefox, because Chromium-based browsers (including Chrome, Opera, Vivaldi, and Edge) are getting increasingly hostile to user rights in everything, but especially in media playback. Firefox is the only browser out there focused on users over corporations.

Amazon will artificially lower the video quality to 1920 × 1080 on Firefox, specifically because Firefox blocks Amazon’s invasive video DRM. But that resolution is just fine, and fuck Amazon.

12 notes


Text

How to enable Hardware acceleration in Firefox ESR

For reference, my computer has Intel integrated graphics, and my operating system is Debian 12 Bookworm with VA-API for video acceleration. While I had hardware video acceleration enabled for many applications, I had to spend some time last year trying to figure out how to enable it for Firefox. I found this article and followed it, but at first I couldn't figure out how to use the environment variable. So here's a guide for anyone new to Linux!

First, if you haven't already, determine whether your session uses the Wayland or X11 windowing system. In a terminal, enter:

echo "$XDG_SESSION_TYPE"

This prints your session type, typically wayland or x11. Once you've followed the instructions in the linked article, edit /usr/share/applications/firefox-esr.desktop as root (or with root privileges) in your favorite text editor. I like to use nano! For example, you would enter in a terminal:

sudo nano /usr/share/applications/firefox-esr.desktop

Then, navigate to the line that says "Exec=...". Replace that line with the following line, depending on whether you use Wayland or X11. If you use Wayland:

Exec=env MOZ_ENABLE_WAYLAND=1 firefox

If you use X11:

Exec=env MOZ_X11_EGL=1 firefox

Then save the file! If you are using the nano editor, press Ctrl+X, then Y, then Enter. Restart Firefox ESR if it was already open, and hardware acceleration should now work! Enjoy!
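If you'd rather script the edit than do it by hand, here is a minimal sketch in Python. `patch_exec_lines` is a hypothetical helper, not part of Debian or Firefox. It uses the same two variables as the guide, picked by the session type that `echo "$XDG_SESSION_TYPE"` prints, but with one deliberate difference: it prefixes the existing command (including any `%u` argument) instead of replacing it with a bare `firefox`, so the launcher keeps opening links passed to it. It only returns the new text; writing it back to the file under /usr/share/applications still needs root, just like the nano step.

```python
# Variable for each session type, as listed in the guide above.
ENV_FOR_SESSION = {
    "wayland": "MOZ_ENABLE_WAYLAND=1",
    "x11": "MOZ_X11_EGL=1",
}


def patch_exec_lines(desktop_text: str, session_type: str) -> str:
    """Prefix each Exec= command with `env <VAR>=1`, keeping the original command.

    Lines that already start with "Exec=env " are left alone, so running the
    helper twice does not stack the variable.
    """
    key = session_type.strip().lower()
    if key not in ENV_FOR_SESSION:
        raise ValueError(f"unsupported session type: {session_type!r}")
    var = ENV_FOR_SESSION[key]
    patched = []
    for line in desktop_text.splitlines():
        if line.startswith("Exec=") and not line.startswith("Exec=env "):
            line = f"Exec=env {var} {line[len('Exec='):]}"
        patched.append(line)
    return "\n".join(patched) + "\n"


if __name__ == "__main__":
    # Hypothetical sample entry; a real .desktop file has more keys.
    sample = "[Desktop Entry]\nName=Firefox ESR\nExec=firefox-esr %u\n"
    # Use whatever `echo "$XDG_SESSION_TYPE"` printed on your machine.
    print(patch_exec_lines(sample, "wayland"), end="")
```

One design note: files under /usr/share/applications belong to the package and can be overwritten when firefox-esr is upgraded. Copying the file to ~/.local/share/applications/ and patching the copy keeps the change, since per-user entries take precedence over system ones.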

#linux#debian#gnu/linux#hardware acceleration#transfemme#Honestly I might start doing more Linux tutorials!#Linux is fun!

6 notes


Text

I took this off a long time ago just because I wanted to be able to do screenshots that weren’t just black boxes.

firefox just started doing this too, so remember, kids: if you want to stream things like netflix or hulu over discord without the video being blacked out, you just have to disable hardware acceleration in your browser settings!

158K notes


Text

https://electronicsbuzz.in/hardware-acceleration-enhancing-performance-in-industrial-computing/

#hardware acceleration#precision#GPUs#automation#reduce downtime#HardwareAcceleration#ManufacturingTech#Industry40#AI#SmartFactories#Automation#powerelectronics#powermanagement#powersemiconductor

0 notes

Text

AutoBNN: Probabilistic time series forecasting with compositional bayesian neural networks

New Post has been published on https://thedigitalinsider.com/autobnn-probabilistic-time-series-forecasting-with-compositional-bayesian-neural-networks/


Posted by Urs Köster, Software Engineer, Google Research

Time series problems are ubiquitous, from forecasting weather and traffic patterns to understanding economic trends. Bayesian approaches start with an assumption about the data’s patterns (prior probability), collect evidence (e.g., new time series data), and continuously update that assumption to form a posterior probability distribution. Traditional Bayesian approaches like Gaussian processes (GPs) and Structural Time Series are extensively used for modeling time series data, e.g., the commonly used Mauna Loa CO2 dataset. However, they often rely on domain experts to painstakingly select appropriate model components and may be computationally expensive. Alternatives such as neural networks lack interpretability, making it difficult to understand how they generate forecasts, and don’t produce reliable confidence intervals.
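The prior-to-posterior loop described above can be made concrete with the simplest conjugate case: an unknown mean with a Gaussian prior and Gaussian observation noise of known variance. This is a textbook illustration, not part of AutoBNN, and the function name and numbers are hypothetical. Feeding the data in as one batch or one observation at a time gives the same posterior, which is what "continuously updating" means here.

```python
def update_normal_mean(prior_mean: float, prior_var: float,
                       observations: list[float],
                       noise_var: float) -> tuple[float, float]:
    """Posterior (mean, variance) of an unknown mean after seeing `observations`.

    Prior: mu ~ Normal(prior_mean, prior_var).
    Likelihood: each y ~ Normal(mu, noise_var), independently.
    """
    # Precisions (inverse variances) add under a conjugate Gaussian update.
    post_precision = 1.0 / prior_var + len(observations) / noise_var
    post_var = 1.0 / post_precision
    # The posterior mean is a precision-weighted average of prior mean and data.
    post_mean = post_var * (prior_mean / prior_var + sum(observations) / noise_var)
    return post_mean, post_var


if __name__ == "__main__":
    # Batch update vs. two sequential updates (each posterior becomes the next prior).
    batch = update_normal_mean(0.0, 1.0, [2.0, 4.0], noise_var=1.0)
    m1, v1 = update_normal_mean(0.0, 1.0, [2.0], noise_var=1.0)
    sequential = update_normal_mean(m1, v1, [4.0], noise_var=1.0)
    print(batch, sequential)
```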

To that end, we introduce AutoBNN, a new open-source package written in JAX. AutoBNN automates the discovery of interpretable time series forecasting models, provides high-quality uncertainty estimates, and scales effectively for use on large datasets. We describe how AutoBNN combines the interpretability of traditional probabilistic approaches with the scalability and flexibility of neural networks.

AutoBNN

AutoBNN is based on a line of research that over the past decade has yielded improved predictive accuracy by modeling time series using GPs with learned kernel structures. The kernel function of a GP encodes assumptions about the function being modeled, such as the presence of trends, periodicity or noise. With learned GP kernels, the kernel function is defined compositionally: it is either a base kernel (such as Linear, Quadratic, Periodic, Matérn or ExponentiatedQuadratic) or a composite that combines two or more kernel functions using operators such as Addition, Multiplication, or ChangePoint. This compositional kernel structure serves two related purposes. First, it is simple enough that a user who is an expert on their data, but not necessarily on GPs, can construct a reasonable prior for their time series. Second, techniques like Sequential Monte Carlo can be used for discrete searches over small structures and can output interpretable results.
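To make the compositional idea concrete, here is a stdlib-only Python sketch of a few base kernels and the Addition/Multiplication operators acting on them. It is not AutoBNN or GP-library code: the function names, the exp-sine-squared form chosen for the periodic kernel, and the example hyperparameters are assumptions for illustration, and ChangePoint is omitted.

```python
import math
from typing import Callable

# A kernel maps a pair of inputs to a covariance.
Kernel = Callable[[float, float], float]


def linear(variance: float = 1.0) -> Kernel:
    return lambda x, y: variance * x * y


def exponentiated_quadratic(lengthscale: float = 1.0) -> Kernel:
    return lambda x, y: math.exp(-0.5 * ((x - y) / lengthscale) ** 2)


def periodic(period: float, lengthscale: float = 1.0) -> Kernel:
    # Exp-sine-squared periodic kernel: inputs one period apart are fully correlated.
    return lambda x, y: math.exp(
        -2.0 * math.sin(math.pi * abs(x - y) / period) ** 2 / lengthscale ** 2)


def add(*kernels: Kernel) -> Kernel:
    # Addition operator: sum of the component covariances.
    return lambda x, y: sum(k(x, y) for k in kernels)


def multiply(*kernels: Kernel) -> Kernel:
    # Multiplication operator: product of the component covariances.
    return lambda x, y: math.prod(k(x, y) for k in kernels)


def gram(kernel: Kernel, xs: list[float]) -> list[list[float]]:
    """Covariance matrix K[i][j] = kernel(xs[i], xs[j])."""
    return [[kernel(a, b) for b in xs] for a in xs]


if __name__ == "__main__":
    # "Trend plus yearly seasonality" for monthly data, e.g. a CO2-like series.
    k = add(linear(0.01), periodic(period=12.0))
    print(gram(k, [0.0, 6.0, 12.0]))
```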

AutoBNN improves upon these ideas, replacing the GP with Bayesian neural networks (BNNs) while retaining the compositional kernel structure. A BNN is a neural network with a probability distribution over weights rather than a fixed set of weights. This induces a distribution over outputs, capturing uncertainty in the predictions. BNNs bring the following advantages over GPs: First, training large GPs is computationally expensive, and traditional training algorithms scale as the cube of the number of data points in the time series. In contrast, for a fixed width, training a BNN will often be approximately linear in the number of data points. Second, BNNs lend themselves better to GPU and TPU hardware acceleration than GP training operations. Third, compositional BNNs can be easily combined with traditional deep BNNs, which have the ability to do feature discovery. One could imagine “hybrid” architectures, in which users specify a top-level structure of Add(Linear, Periodic, Deep), and the deep BNN is left to learn the contributions from potentially high-dimensional covariate information.
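A BNN's "distribution over outputs" can be seen by drawing weights from the prior and running the same input through each sampled network. The sketch below does this for a single-hidden-layer tanh network in plain Python. The Gaussian priors, the tanh activation and the names are illustrative assumptions rather than AutoBNN's choices, and it only samples the prior (no training). Each forward pass costs O(width) per input point, the kind of per-point cost behind the roughly linear scaling mentioned above.

```python
import math
import random
import statistics


def sample_one_layer_bnn(width: int, rng: random.Random):
    """Draw one network from a simple prior over weights (one tanh hidden layer).

    Prior (an assumption for illustration): input weights and biases ~ N(0, 1),
    output weights ~ N(0, 1/width) so the output variance stays O(1) as width grows.
    """
    w = [rng.gauss(0.0, 1.0) for _ in range(width)]
    b = [rng.gauss(0.0, 1.0) for _ in range(width)]
    v = [rng.gauss(0.0, 1.0 / math.sqrt(width)) for _ in range(width)]
    return lambda x: sum(vi * math.tanh(wi * x + bi) for wi, bi, vi in zip(w, b, v))


def prior_predictive(x: float, width: int = 10, n_samples: int = 2000, seed: int = 0):
    """Sample the output at x under the weight prior; return the 5%, 50%, 95% quantiles."""
    rng = random.Random(seed)
    outputs = [sample_one_layer_bnn(width, rng)(x) for _ in range(n_samples)]
    cuts = statistics.quantiles(outputs, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return cuts[0], statistics.median(outputs), cuts[-1]


if __name__ == "__main__":
    low, mid, high = prior_predictive(0.5)
    print(f"5%: {low:.3f}  median: {mid:.3f}  95%: {high:.3f}")
```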

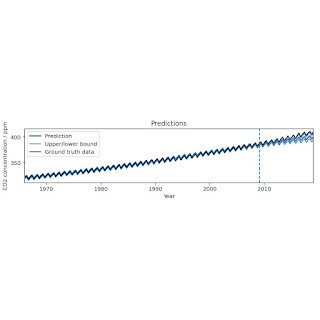

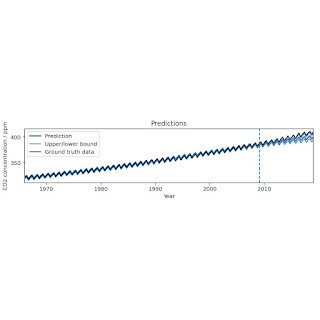

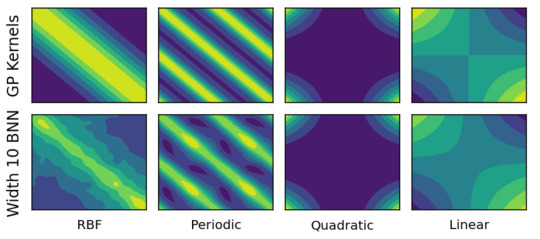

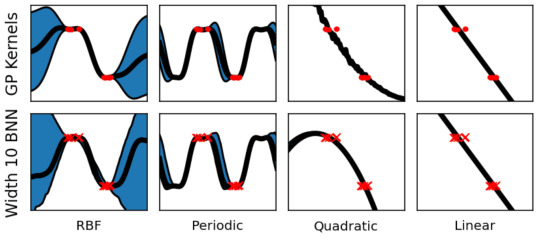

How might one translate a GP with compositional kernels into a BNN, then? A single-hidden-layer neural network with randomly drawn weights will typically converge to a GP as the number of neurons (or “width”) goes to infinity. More recently, researchers have discovered a correspondence in the other direction: many popular GP kernels (such as Matérn, ExponentiatedQuadratic, Polynomial or Periodic) can be obtained as infinite-width BNNs with appropriately chosen activation functions and weight distributions. Furthermore, these BNNs remain close to the corresponding GP even when the width is far from infinite. For example, the figures below show the difference in the covariance between pairs of observations, and regression results of the true GPs and their corresponding width-10 neural network versions.

Comparison of Gram matrices between true GP kernels (top row) and their width-10 neural network approximations (bottom row).

Comparison of regression results between true GP kernels (top row) and their width-10 neural network approximations (bottom row).
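One well-known instance of the "appropriate activation and weight distribution" idea is the random Fourier features construction: a network with a cosine activation, input weights w ~ N(0, 1/l^2), biases b ~ Uniform(0, 2*pi) and output weights of variance 2/width has the ExponentiatedQuadratic kernel exp(-(x - y)^2 / (2 l^2)) as its prior covariance. The sketch below estimates that covariance by Monte Carlo for a width-10 network, the width used in the figures. Whether AutoBNN builds its ExponentiatedQuadratic BNN this way is an assumption; the construction only illustrates the correspondence. Under this particular prior the covariance matches the kernel at any width; what a small width changes is that the outputs are not exactly Gaussian.

```python
import math
import random


def sample_cosine_network(width: int, lengthscale: float, rng: random.Random):
    """One draw from a cosine-activation network prior (random Fourier features)."""
    w = [rng.gauss(0.0, 1.0 / lengthscale) for _ in range(width)]
    b = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(width)]
    v = [rng.gauss(0.0, math.sqrt(2.0 / width)) for _ in range(width)]
    return lambda x: sum(vi * math.cos(wi * x + bi) for wi, bi, vi in zip(w, b, v))


def empirical_gram(xs, width=10, lengthscale=1.0, n_samples=20000, seed=0):
    """Monte Carlo estimate of E[f(x_i) f(x_j)]; the prior mean is zero, so this is the covariance."""
    rng = random.Random(seed)
    n = len(xs)
    acc = [[0.0] * n for _ in range(n)]
    for _ in range(n_samples):
        f = sample_cosine_network(width, lengthscale, rng)
        ys = [f(x) for x in xs]
        for i in range(n):
            for j in range(n):
                acc[i][j] += ys[i] * ys[j]
    return [[a / n_samples for a in row] for row in acc]


def exp_quadratic_gram(xs, lengthscale=1.0):
    """Exact ExponentiatedQuadratic Gram matrix, for comparison."""
    return [[math.exp(-((a - c) ** 2) / (2 * lengthscale ** 2)) for c in xs] for a in xs]


if __name__ == "__main__":
    xs = [0.0, 0.5, 2.0]
    print(empirical_gram(xs))
    print(exp_quadratic_gram(xs))
```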

Finally, the translation is completed with BNN analogues of the Addition and Multiplication operators over GPs, and input warping to produce periodic kernels. BNN addition is straightforwardly given by adding the outputs of the component BNNs. BNN multiplication is achieved by multiplying the activations of the hidden layers of the BNNs and then applying a shared dense layer. We are therefore limited to multiplying only BNNs with the same hidden width.
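The Addition and Multiplication rules above, plus input warping, can be sketched in plain Python using a single weight draw per component. This is not AutoBNN's implementation (which is written in JAX with flax.linen); the class and function names, the tanh activation and the priors are hypothetical. The width check in `bnn_multiply` shows why only BNNs of equal hidden width can be multiplied. For brevity, the periodic warp feeds the network only sin(2*pi*x/p); a fuller version would feed both sine and cosine.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class OneLayerBNNSample:
    """One weight draw of a single-hidden-layer network: x -> tanh(w*x + b) -> v."""
    w: list[float]
    b: list[float]
    v: list[float]

    @classmethod
    def draw(cls, width: int, rng: random.Random) -> "OneLayerBNNSample":
        return cls(w=[rng.gauss(0.0, 1.0) for _ in range(width)],
                   b=[rng.gauss(0.0, 1.0) for _ in range(width)],
                   v=[rng.gauss(0.0, 1.0 / math.sqrt(width)) for _ in range(width)])

    def hidden(self, x: float) -> list[float]:
        return [math.tanh(wi * x + bi) for wi, bi in zip(self.w, self.b)]

    def __call__(self, x: float) -> float:
        return sum(vi * hi for vi, hi in zip(self.v, self.hidden(x)))


def bnn_add(f, g):
    # Addition: add the outputs of the component networks.
    return lambda x: f(x) + g(x)


def bnn_multiply(f: OneLayerBNNSample, g: OneLayerBNNSample, shared_out: list[float]):
    # Multiplication: elementwise product of the hidden activations, then one
    # shared dense layer. Both components must have the same hidden width.
    if not (len(f.w) == len(g.w) == len(shared_out)):
        raise ValueError("multiplied BNNs must have the same hidden width")
    return lambda x: sum(u * a * c for u, a, c in zip(shared_out, f.hidden(x), g.hidden(x)))


def periodic_warp(f, period: float):
    # Input warping: map x onto sin(2*pi*x/period) first, so the result repeats every period.
    return lambda x: f(math.sin(2.0 * math.pi * x / period))


if __name__ == "__main__":
    rng = random.Random(0)
    f, g = OneLayerBNNSample.draw(8, rng), OneLayerBNNSample.draw(8, rng)
    u = [rng.gauss(0.0, 1.0 / math.sqrt(8)) for _ in range(8)]
    print(bnn_add(f, g)(0.3), bnn_multiply(f, g, u)(0.3), periodic_warp(f, 12.0)(1.0))
```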

Using AutoBNN

The AutoBNN package is available within TensorFlow Probability. It is implemented in JAX and uses the flax.linen neural network library. It implements all of the base kernels and operators discussed so far (Linear, Quadratic, Matern, ExponentiatedQuadratic, Periodic, Addition, Multiplication) plus one new kernel and three new operators:

a OneLayer kernel, a single hidden layer ReLU BNN,

a ChangePoint operator that allows smoothly switching between two kernels,

a LearnableChangePoint operator which is the same as ChangePoint except position and slope are given prior distributions and can be learnt from the data, and

a WeightedSum operator.

WeightedSum combines two or more BNNs with learnable mixing weights, where the learnable weights follow a Dirichlet prior. By default, a flat Dirichlet distribution with concentration 1.0 is used.
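A WeightedSum's prior can be sketched by drawing mixing weights from a Dirichlet distribution (here via normalised Gamma draws, a standard construction) and using them to mix component outputs. The sketch uses plain Python with hypothetical names; in AutoBNN the weights are learned during training, whereas this only samples them from the prior. A concentration of 1.0 for every component gives the flat Dirichlet mentioned above, which is uniform over all weight vectors that sum to 1.

```python
import random


def sample_dirichlet(concentration: list[float], rng: random.Random) -> list[float]:
    """Dirichlet draw: independent Gamma(alpha_i, 1) variates, normalised to sum to 1."""
    draws = [rng.gammavariate(alpha, 1.0) for alpha in concentration]
    total = sum(draws)
    return [d / total for d in draws]


def weighted_sum(components, weights):
    """Mix component outputs with non-negative weights that sum to 1."""
    return lambda x: sum(wt * f(x) for wt, f in zip(weights, components))


if __name__ == "__main__":
    rng = random.Random(0)
    # Stand-ins for three component models (trend, curvature, constant).
    components = [lambda x: x, lambda x: x * x, lambda x: 1.0]
    weights = sample_dirichlet([1.0] * len(components), rng)  # flat Dirichlet
    print(weights, weighted_sum(components, weights)(2.0))
```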

WeightedSums allow a “soft” version of structure discovery, i.e., training a linear combination of many possible models at once. In contrast to structure discovery with discrete structures, such as in AutoGP, this allows us to use standard gradient methods to learn structures, rather than using expensive discrete optimization. Instead of evaluating potential combinatorial structures in series, WeightedSum allows us to evaluate them in parallel.

To easily enable exploration, AutoBNN defines a number of model structures that contain either top-level or internal WeightedSums. The names of these models can be used as the first parameter in any of the estimator constructors, and include things like sum_of_stumps (the WeightedSum over all the base kernels) and sum_of_shallow (which adds all possible combinations of base kernels with all operators).

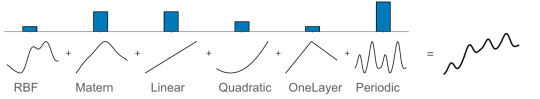

Illustration of the sum_of_stumps model. The bars in the top row show the amount by which each base kernel contributes, and the bottom row shows the function represented by the base kernel. The resulting weighted sum is shown on the right.

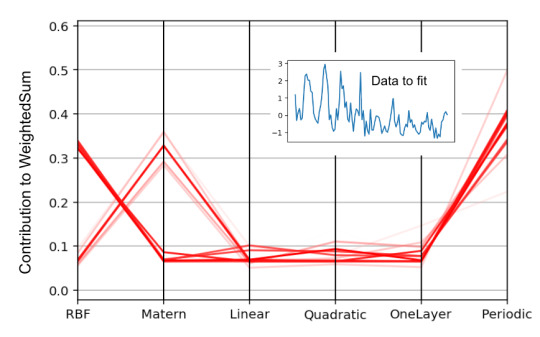

The figure below demonstrates the technique of structure discovery on the N374 series (a time series of yearly financial data starting in 1949) from the M3 dataset. The six base structures were ExponentiatedQuadratic (which is the same as the Radial Basis Function kernel, or RBF for short), Matérn, Linear, Quadratic, OneLayer and Periodic kernels. The figure shows the MAP estimates of their weights over an ensemble of 32 particles. All of the high-likelihood particles gave a large weight to the Periodic component, low weights to Linear, Quadratic and OneLayer, and a large weight to either RBF or Matérn.

Parallel coordinates plot of the MAP estimates of the base kernel weights over 32 particles. The sum_of_stumps model was trained on the N374 series from the M3 dataset (insert in blue). Darker lines correspond to particles with higher likelihoods.

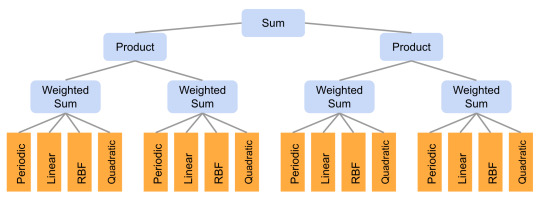

By using WeightedSums as the inputs to other operators, it is possible to express rich combinatorial structures while keeping models compact and the number of learnable weights small. As an example, we include the sum_of_products model (illustrated in the figure below), which first creates a pairwise product of two WeightedSums, and then a sum of the two products. By setting some of the weights to zero, we can create many different discrete structures. The total number of possible structures in this model is 2^16 = 65,536, since there are 16 base kernels that can each be turned on or off. All these structures are explored implicitly by training just this one model.

Illustration of the “sum_of_products” model. Each of the four WeightedSums has the same structure as the “sum_of_stumps” model.

We have found, however, that certain combinations of kernels (e.g., the product of Periodic and either the Matern or ExponentiatedQuadratic) lead to overfitting on many datasets. To prevent this, we have defined model classes like sum_of_safe_shallow that exclude such products when performing structure discovery with WeightedSums.

For training, AutoBNN provides AutoBnnMapEstimator and AutoBnnMCMCEstimator to perform MAP and MCMC inference, respectively. Either estimator can be combined with any of the six likelihood functions, including four based on normal distributions with different noise characteristics for continuous data and two based on the negative binomial distribution for count data.
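To see the difference between the two kinds of inference on something small, the sketch below runs both on a one-parameter toy posterior (a Gaussian prior on a mean and a Gaussian likelihood). MAP returns a single most-probable value; MCMC returns draws whose spread expresses uncertainty. It is plain Python with hypothetical names and does not use AutoBnnMapEstimator or AutoBnnMCMCEstimator; random-walk Metropolis stands in for whatever sampler AutoBNN actually uses. Because this toy posterior is Gaussian, the MAP value and the MCMC mean should agree.

```python
import math
import random


def log_posterior(mu, data, prior_var=10.0, noise_var=1.0):
    """Unnormalised log posterior: mu ~ Normal(0, prior_var), y ~ Normal(mu, noise_var)."""
    log_prior = -0.5 * mu * mu / prior_var
    log_lik = -0.5 * sum((y - mu) ** 2 for y in data) / noise_var
    return log_prior + log_lik


def map_estimate(data, prior_var=10.0, noise_var=1.0, lr=0.01, steps=2000):
    """MAP: gradient ascent on the log posterior (lr must be small relative to len(data)/noise_var)."""
    mu = 0.0
    for _ in range(steps):
        grad = -mu / prior_var + sum(y - mu for y in data) / noise_var
        mu += lr * grad
    return mu


def mcmc_samples(data, n=20000, step=0.5, seed=0, prior_var=10.0, noise_var=1.0):
    """MCMC: random-walk Metropolis draws from the same posterior."""
    rng = random.Random(seed)
    mu = 0.0
    lp = log_posterior(mu, data, prior_var, noise_var)
    out = []
    for _ in range(n):
        prop = mu + rng.gauss(0.0, step)
        lp_prop = log_posterior(prop, data, prior_var, noise_var)
        # Accept with probability min(1, exp(lp_prop - lp)); 1 - random() avoids log(0).
        if math.log(1.0 - rng.random()) < lp_prop - lp:
            mu, lp = prop, lp_prop
        out.append(mu)
    return out


if __name__ == "__main__":
    data = [1.2, 0.8, 1.5, 0.9, 1.1]
    draws = mcmc_samples(data)[1000:]  # drop burn-in
    print(map_estimate(data), sum(draws) / len(draws))
```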

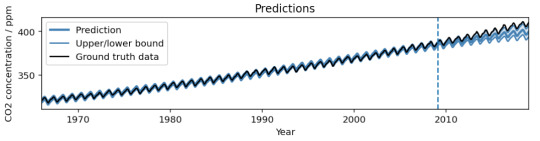

Result from running AutoBNN on the Mauna Loa CO2 dataset in our example colab. The model captures the trend and seasonal components in the data. Extrapolating into the future, the mean prediction slightly underestimates the actual trend, while the 95% confidence interval gradually widens.

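To fit a model like the one in the figure above, all it takes is the following few lines of code, using the scikit-learn-inspired estimator interface:

```python
import jax
import autobnn as ab

# Compose a model: Periodic + Linear + Matern components, each of width 50.
model = ab.operators.Add(
    bnns=(ab.kernels.PeriodicBNN(width=50),
          ab.kernels.LinearBNN(width=50),
          ab.kernels.MaternBNN(width=50)))

# MAP estimator; periods=[12] is the period used by the periodic component
# (12 for monthly data with yearly seasonality).
estimator = ab.estimators.AutoBnnMapEstimator(
    model, 'normal_likelihood_logistic_noise', jax.random.PRNGKey(42),
    periods=[12])

# my_training_data_xs / my_training_data_ys are placeholders for your own data.
estimator.fit(my_training_data_xs, my_training_data_ys)
low, mid, high = estimator.predict_quantiles(my_training_data_xs)
```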

Conclusion

AutoBNN provides a powerful and flexible framework for building sophisticated time series prediction models. By combining the strengths of BNNs and GPs with compositional kernels, AutoBNN opens a world of possibilities for understanding and forecasting complex data. We invite the community to try the colab, and leverage this library to innovate and solve real-world challenges.

Acknowledgements

AutoBNN was written by Colin Carroll, Thomas Colthurst, Urs Köster and Srinivas Vasudevan. We would like to thank Kevin Murphy, Brian Patton and Feras Saad for their advice and feedback.

#Advice#Algorithms#Blue#Building#classes#CO2#code#CoLab#Combinatorial#Community#comparison#continuous#data#datasets#direction#economic#Engineer#express#financial#flax#form#framework#Future#Google#GPS#gpu#Hardware#hardware acceleration#how#hybrid

0 notes

Text

Fine, Thailand is pretty. No one was arguing with that! Now make the plot better. Fifa literally collapses and... he wakes up, and neither he nor Hem seems to care that he collapsed? Why? I mean, the rest of it is dumb enough, and I'm still annoyed that the show has decided Fifa just needs to bury himself in dirt and stop thinking about all those dreams of leaving and stuff. And also the idea that, because on his first day he gave someone the wrong lunch (and they didn't check anything), everyone is gonna hold it against him forever, at a job he doesn't even want...

But anyway that's a very pretty forest shot and I like it. Too bad the rest of the show is not gonna live up to it.

#lost in the woods#thai series#bl drama#thai bl#asianlgbtqdramas#asian lgbtq dramas#thai bl series#thai drama#thaibl#thai bl drama#bl series#i had to spend two hours triyng to get this to play in firefox#to nothing#and then get it to play in edge#and then turn off hardware acceleration so i could screenshot this#but i did it!#not worth it

16 notes


Text

Also, the reason you can't take screenshots on Netflix isn't because they're being dicks; it's because of something called Hardware Acceleration. Basically, Netflix is drawing to your screen using your GPU, but your screenshot program reads the screen using your CPU, and never the twain shall meet. Of course, you can screenshot games and other programs that use the GPU, so I'm sure the streaming platforms prefer it this way, but the upshot is this:

You can screenshot streaming services if you turn hardware acceleration off! It's a setting in your browser and you'll have to restart it but then you can screenshot away!

“To protect their copyright, streaming sites do not allow for screenshotting of any kind.”

Hey remember VHS where you bought a box to plug into your tv and you could legally record whatever was playing and then own it for free forever

#hardware acceleration#fuck netflix#netflix#screenshot#copyright#firefox#get firefox btw#seriously chrome is stupid and evil#like google tracks you thru chrome even when websites don't#also firefox is open source and thats beautiful#im not gonna read the open source code but surely somebody did

80K notes


Text

honestly the Shat's (first) character in Columbo would do numbers here

image ID: two screenshots of William Shatner from Columbo 6.01, in the first he's wearing a very seventies wide-collar blue shirt and necklace with the caption: "All indicate at least the possibility of a doubt as to the gender," in the second he's wearing a white pinstripe suit with an ascot and a carnation with a blank expression and the caption: "If you tell me that one more time, I'm gonna kill myself."

#Doubt The Gender#william shatner#fade in to murder#columbo#i had to fight with firefox drm and hardware acceleration a LOT to get these screenshots lmao

16 notes


Text

beyond live changing their streaming policy so people can't screen record the video is so....

#all videos you can find of it online are super short or a screen recorded with a phone like...#apparently screens go black if you don't stream the concert in the way they implemented earlier this month#kinda like streaming netflix on discord#doesn't work unless hardware acceleration is on#and even then it could still be blocked

19 notes


Text

Part of the reason I post less now is because I'm a little more likely to let people be wrong on the internet

a little

(just saw a post thread making a lot of valid points, but the original post was based on another post that turned out to be a misunderstanding. I understand, though, that me jumping in and saying that would not be helpful to the discussion that developed.)

7 notes


Text

THANK YOU. I searched for it a few times when I saw this recommended, and then stalled out when I couldn't find it. Now that I know there are extra steps involved for Firefox, I will try again!

hbo max blocks screenshots even when I use the snipping tool AND firefox AND ublock, which is a fucking first. i will never understand streaming services blocking the ability to take screenshots; that's literally free advertising for your show right there. HOW THE HELL IS SOMEBODY GONNA PIRATE YOUR SHOW THROUGH SCREENSHOTS. JACKASS

124K notes


Text

Lower Decks is Over and it's Given Me Hope

It's time for me to admit my greatest shame...

I am in my 30s.

That's right, I'm the weird one who won't get off the internet. I'm supposed to be having kids and working a Job™ and instead I'm on Tumblr, the website that is very much so only populated by minors and not a collection of millennials and gen xers who will never move on.

One of the things I think I have to grapple with the most as an adult is endings. And not the "oh god oh god I'm reaching the end" kind of ending, but just stuff like the things I love, which had been a constant in my life, just... Concluding. Gone. I thought as a Firefly fan I could handle a show being cancelled, but the fact is I was a fraud: I didn't watch it until it was over.

But I'm talking long running, decade long loves. The things that I couldn't even think about ending. Like fucking Sesame Street.

What I'm saying is Nanami Kento was the realest dude.

The Venture Brothers ended after 15 years. Final Fantasy XIV hit its big ending after 10. Destiny had the best ending it possibly could have had with the Final Shape. Naruto ended for me twice, first the Manga, then the Anime. I didn't even watch the Anime, but I watched those final episodes, and just like finding out who gave birth to the Venture Bros, and just like watching the credits roll on my Warrior of Light, I felt an immense sense of catharsis. This was it. It was over. My investment was satisfied.

Here FF14 players might be contractually obligated to post the screenshot after mentioning the screenshot and I don't wanna take chances.

There was a big part of me that thought the reason I had such an emotional reaction to these endings was the investment, and that I'd probably never experience anything like it again. I wouldn't have that wave of memories, the joy of seeing however many years of history culminate in one big ending.

So Lower Decks was only like, 3 years old? And as the final minutes of the finale panned over all of the characters on the Cerritos we've grown attached to over the years, I felt the catharsis. I felt that my investment was satisfied.

And I'm going to feel it again, because I was a dumb idiot wrapped up in despair over being officially Old. I didn't feel satisfied by the endings of the things I loved because they were old, I felt satisfied because they were satisfying endings.

So, thanks Lower Decks. I look forward to seeing what replaces you.

#rambling#star trek#lower decks#endings#being old#The reason there's no Star Trek screenshots is because paramount+ goes black when I try to take one#and it's like 1am and I'm too tired to turn off hardware acceleration or whatever the fuck you're supposed to do

9 notes


Text

every time i have to open chrome for something i can't do on firefox i feel dirty

#finally figured out how to take screenshots of streaming sites on a mac#unfortunately the cost is a sliver of my soul#(deactivate hardware acceleration doesn't get it done on firefox for mac alas but it does work on chrome)#(and on edge i imagine as a chromium browser but that would be. seriously funny.)#(make up a guy: guy who uses microsoft edge on a mac)

21 notes


Text

For some reason gagaoolala no longer works with Firefox and that just makes me want to not watch anything at all instead of switching to Chrome.

#jane watches stuff#(or not)#it's not ublock or hardware acceleration - i tried#imagine paying 9 quid for streaming and then having to sail the seven seas anyway :(((

2 notes


Text

alright so how are you guys screen recording this

5 notes
