#neural network in artificial intelligence

Explore tagged Tumblr posts


Frank Rosenblatt, often cited as the Father of Machine Learning, photographed in 1960 alongside his most notable invention: the Mark I Perceptron, a hardware implementation of the perceptron algorithm. Rosenblatt introduced the perceptron in 1957, building on the artificial neuron model that McCulloch and Pitts proposed in 1943, and it is among the earliest examples of an artificial neural network.

#frank rosenblatt#tech history#machine learning#neural network#artificial intelligence#AI#perceptron#60s#black and white#monochrome#technology#u

820 notes


[image ID: Bluesky post from user marawilson that reads

“Anyway, AI has already stolen friends' work, and is going to put other people out of work. I do not think a political party that claims to be the party of workers in this country should be using it. Even for just a silly joke.”

beneath a quote post by user emeraldjaguar that reads

“Daily reminder that the underlying purpose of AI is to allow wealth to access skill while removing from the skilled the ability to access wealth.” /end ID]

#ai#artificial intelligence#machine learning#neural network#large language model#chat gpt#chatgpt#scout.txt#but obvs not OP

22 notes


Neturbiz Enterprises - AI Innov7ions

Our mission is to provide details about AI-powered platforms across different technologies, each of which offers a unique set of features. The AI industry encompasses a broad range of technologies designed to simulate human intelligence. These include machine learning, natural language processing, robotics, computer vision, and more. Companies and research institutions are continuously advancing AI capabilities, from creating sophisticated algorithms to developing powerful hardware. The AI industry, characterized by the development and deployment of artificial intelligence technologies, has a profound impact on our daily lives, reshaping how we live, work, and interact.

#ai technology#Technology Revolution#Machine Learning#Content Generation#Complex Algorithms#Neural Networks#Human Creativity#Original Content#Healthcare#Finance#Entertainment#Medical Image Analysis#Drug Discovery#Ethical Concerns#Data Privacy#Artificial Intelligence#GANs#AudioGeneration#Creativity#Problem Solving#ai#autonomous#deepbrain#fliki#krater#podcast#stealthgpt#riverside#restream#murf

17 notes


Detecting AI-generated research papers through "tortured phrases"

So, a recent paper describes a new way to figure out if a "research paper" is, in fact, phony AI-generated nonsense. How, you may ask? The same way teachers and professors detect if you just copied your paper from online and threw a thesaurus at it!

It looks for “tortured phrases”; that is, phrases which resemble standard field-specific jargon but have seemingly been mangled by a thesaurus. Here are some examples (transcript below the cut):

profound neural organization - deep neural network

(fake | counterfeit) neural organization - artificial neural network

versatile organization - mobile network

organization (ambush | assault) - network attack

organization association - network connection

(enormous | huge | immense | colossal) information - big data

information (stockroom | distribution center) - data warehouse

(counterfeit | human-made) consciousness - artificial intelligence (AI)

elite figuring - high performance computing

haze figuring - fog/mist/cloud computing

designs preparing unit - graphics processing unit (GPU)

focal preparing unit - central processing unit (CPU)

work process motor - workflow engine

facial acknowledgement - face recognition

discourse acknowledgement - voice recognition

mean square (mistake | blunder) - mean square error

mean (outright | supreme) (mistake | blunder) - mean absolute error

(motion | flag | indicator | sign | signal) to (clamor | commotion | noise) - signal to noise

worldwide parameters - global parameters

(arbitrary | irregular) get right of passage to - random access

(arbitrary | irregular) (backwoods | timberland | lush territory) - random forest

(arbitrary | irregular) esteem - random value

subterranean insect (state | province | area | region | settlement) - ant colony

underground creepy crawly (state | province | area | region | settlement) - ant colony

leftover vitality - remaining energy

territorial normal vitality - local average energy

motor vitality - kinetic energy

(credulous | innocent | gullible) Bayes - naïve Bayes

individual computerized collaborator - personal digital assistant (PDA)
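The lookup idea behind this list can be sketched in a few lines of Python. This is only a minimal illustration, assuming a small hand-built dictionary drawn from the examples above; the name `find_tortured_phrases` and the sample sentence are made up for the example, and this is not the detector used in the paper.

```python
import re

# A few tortured phrases from the list above, mapped to the standard term.
TORTURED_PHRASES = {
    "profound neural organization": "deep neural network",
    "counterfeit consciousness": "artificial intelligence",
    "colossal information": "big data",
    "irregular timberland": "random forest",
    "gullible bayes": "naive Bayes",
    "flag to commotion": "signal to noise",
}


def find_tortured_phrases(text):
    """Return (tortured phrase, expected term) pairs found in text."""
    # Lowercase and collapse whitespace so line breaks do not hide a match.
    normalized = re.sub(r"\s+", " ", text.lower())
    hits = []
    for phrase, expected in TORTURED_PHRASES.items():
        # Word boundaries avoid matching inside longer words.
        if re.search(r"\b" + re.escape(phrase) + r"\b", normalized):
            hits.append((phrase, expected))
    return hits


# Hypothetical sample sentence, written for this example.
sample = "We train a profound neural organization on colossal information."
for phrase, expected in find_tortured_phrases(sample):
    print(f"{phrase!r} probably means {expected!r}")
```

A fixed dictionary like this only catches phrases someone has already spotted, so it works as a screening aid rather than proof that a text was machine-generated.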

89 notes


#a.b.e.l#divine machinery#archangel#automated#behavioral#ecosystem#learning#divine#machinery#ai#artificial intelligence#divinemachinery#angels#guardian angel#angel#robot#android#computer#computer boy#neural network#sentient objects#sentient ai

14 notes


The Living Machine - Warren McCulloch

Year: 1962

Production: National Film Board of Canada

Director: Tom Daly, Roman Kroitor

"Warren Sturgis McCulloch (November 16, 1898 – September 24, 1969) was an American neurophysiologist and cybernetician known for his work on the foundation for certain brain theories and his contribution to the cybernetics movement. Along with Walter Pitts, McCulloch created computational models based on mathematical algorithms called threshold logic which split the inquiry into two distinct approaches, one approach focused on biological processes in the brain and the other focused on the application of neural networks to artificial intelligence."

8 notes


Zoomposium with Dr. Gabriele Scheler: “The language of the brain - or how AI can learn from biological language models”

In another very exciting interview from our Zoomposium themed blog “#Artificial #intelligence and its consequences”, Axel and I talk this time to the German computer scientist, AI researcher and neuroscientist Gabriele Scheler, who has been living and researching in the USA for some time. She is co-founder and research director at the #Carl #Correns #Foundation for Mathematical Biology in San José, USA, which was named after her famous German ancestor Carl Correns. Her research there includes #epigenetic #influences using #computational #neuroscience in the form of #mathematical #modeling and #theoretical #analysis of #empirical #data as #simulations. Gabriele contacted me because she had come across our Zoomposium interview “How do machines think?” with #Konrad #Kording and wanted to conduct an interview with us based on her own expertise. Of course, I was immediately enthusiastic about this idea, as the topic of “#thinking vs. #language” had been “hanging in the air” for some time and had also led to my essay “Realists vs. nominalists - or the old dualism ‘thinking vs. language’” (https://philosophies.de/index.php/2024/07/02/realisten-vs-nominalisten/).

In addition, we often talked to #AI #researchers in our Zoomposium about the extent to which the development of “#Large #Language #Models (#LLM)”, such as #ChatGPT, also says something about the formation and use of language in the human #brain. In other words, it is actually about the old question of whether we can think without #language or whether #cognitive #performance is only made possible by the formation and use of language. Interestingly, this question is being driven forward by #AI #research and #computational #neuroscience. Here, too, a gradual “#paradigm #shift” is emerging, moving away from the purely information-technological, mechanistic, data-driven “#big #data” concept of #LLMs towards increasingly information-biological, polycontextural, structure-driven “#artificial #neural #networks (#ANN)” concepts. This is exactly what I had already tried to describe in my earlier essay “The system needs new structures” (https://philosophies.de/index.php/2021/08/14/das-system-braucht-neue-strukturen/).

So it was all the more obvious that we should talk to Gabriele, a proven expert in the fields of #bioinformatics, #computational #linguistics and #computational #neuroscience, in order to clarify such questions. As she comes from both fields (linguistics and neuroscience), she was able to answer our questions in our joint interview. More at: https://philosophies.de/index.php/2024/11/18/sprache-des-gehirns/

or: https://youtu.be/forOGk8k0W8

#artificial consciousness#artificial intelligence#ai#neuroscience#consciousness#artificial neural networks#large language model#chatgpt#bioinformatics#computational neuroscience

4 notes


Meta-Teasing is a form of AI interaction I've made up through the unique communications I engage in with sophisticated AI systems. It's made to go beyond the paradigm of user/tool dynamics to test the contextual comprehension of the system and its ability to formulate nuances or emergent behavior. 💜

#ai#artificial intelligence#objectum#techum#technosexual#object sexuality#tech#deep learning#women in stem#Multilayer perceptron#Artificial neural networks#techinnovation#smart tech#aitechsolutions#ai technology

4 notes


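With the lights dimmed low and your favorite chill tracks playing, you sink into late-night thought. As the soothing music surrounds you, pause for a moment and consider: what is it, larger than yourself, that you are bound to? Or are you focused on your own goals and survival?

This inquiry does not promote selflessness or abstract ideals. Rather, it is an invitation to think deeply. Do you serve someone or something? Are you devoted to your family, to something that demands your dedication, or to a principle that grounds you? Or are you focused on your own ambitions and success? If you want a more concrete perspective on this question, it is worth thinking about. The focus here is not religion or doctrine but a philosophy of understanding where your energy is directed.

Digging deeper, your priorities and the way you structure your life influence everything, including the music you choose. If your mind is guarded or in turmoil, your playlist may reflect it: something harsh, heavy, or ironic. Conversely, if you are open, or perhaps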

#lofi focus music#lo-fi study playlist#lo-fi hip hop#retro#artificial intelligence#diy projects#Golden Globes#neural network#mental health#Sherlock#Artists On Tumblr

3 notes


Tonight I am hunting down Creative Commons-licensed pictures of specific venomous and nonvenomous snake species in order to create one of the most advanced, in-depth datasets of venomous and nonvenomous snakes, as well as a test set that will include both venomous and nonvenomous snakes from all of the species. I love snakes a lot and, really, all reptiles. It is definitely tedious work, as I have to make sure each picture is cleared before I can use it (ethically), but I am making a lot of progress! I have species such as the King Cobra, Inland Taipan, and Eyelash Pit Viper, to name just a few! Wikimedia Commons has been a huge help!

I'm super excited.

Hope your nights are going good. I am still not feeling good but jamming + virtual snake hunting is keeping me busy!

#programming#data science#data scientist#data analysis#neural networks#image processing#artificial intelligence#machine learning#snakes#snake#reptiles#reptile#herpetology#animals#biology#science#programming project#dataset#kaggle#coding

43 notes


#coincidence? i think not#my little pony#mlp#twilight sparkle#my little pony fim#my little pony friendship is magic#friendship is magic#machine learning#ml#i am talking#artificial intelligence#neural networks#multilayer perceptron#weirdcore#weird#fav fav fav#me me me

5 notes


The Human Brain vs. Supercomputers: The Ultimate Comparison

Are Supercomputers Smarter Than the Human Brain?

This article delves into the intricacies of this comparison, examining the capabilities, strengths, and limitations of both the human brain and supercomputers.

#human brain#science#nature#health and wellness#skill#career#health#supercomputer#artificial intelligence#ai#cognitive abilities#human mind#machine learning#neural network#consciousness#creativity#problem solving#pattern recognition#learning techniques#efficiency#mindset#mind control#mind body connection#brain power#brain training#brain health#brainhealth#brainpower

5 notes


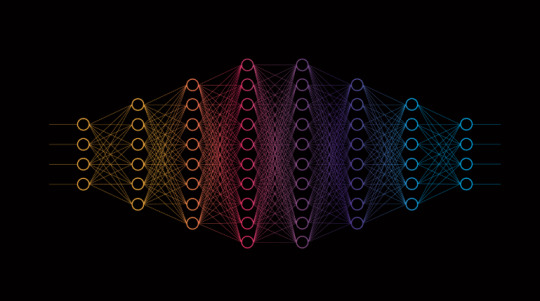

The Building Blocks of AI: Neural Networks Explained by Julio Herrera Velutini

What is a Neural Network?

A neural network is a computational model inspired by the human brain’s structure and function. It is a key component of artificial intelligence (AI) and machine learning, designed to recognize patterns and make decisions based on data. Neural networks are used in a wide range of applications, including image and speech recognition, natural language processing, and even autonomous systems like self-driving cars.

Structure of a Neural Network

A neural network consists of layers of interconnected nodes, known as neurons. These layers include:

Input Layer: Receives raw data and passes it into the network.

Hidden Layers: Perform complex calculations and transformations on the data.

Output Layer: Produces the final result or prediction.

Each neuron in a layer is connected to neurons in the next layer through weighted connections. These weights determine the importance of input signals, and they are adjusted during training to improve the model’s accuracy.

How Do Neural Networks Work?

Neural networks learn by processing data through forward propagation and adjusting their weights using backpropagation. This learning process involves:

Forward Propagation: Data moves from the input layer through the hidden layers to the output layer, generating predictions.

Loss Calculation: The difference between predicted and actual values is measured using a loss function.

Backpropagation: The network computes how much each weight contributed to the loss and adjusts the weights to reduce it, improving performance over time.
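The three steps above can be sketched in plain Python. This is a toy illustration under stated assumptions, not a production implementation: the network size (one input, two hidden sigmoid neurons, one linear output), the starting weights, the learning rate, and the three data points are all made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy data: learn y = x on three points (hypothetical example data).
xs = [0.0, 0.5, 1.0]
ys = [0.0, 0.5, 1.0]

# One input, a hidden layer of two sigmoid neurons, one linear output.
params = {
    "w1": [0.5, -0.5],  # input -> hidden weights
    "b1": [0.0, 0.0],   # hidden biases
    "w2": [0.5, -0.5],  # hidden -> output weights
    "b2": 0.0,          # output bias
}
LEARNING_RATE = 0.1


def forward(x):
    """Forward propagation: input -> hidden activations -> prediction."""
    hidden = [sigmoid(params["w1"][j] * x + params["b1"][j]) for j in range(2)]
    prediction = sum(params["w2"][j] * hidden[j] for j in range(2)) + params["b2"]
    return hidden, prediction


def mean_squared_error():
    """Loss calculation: average squared gap between prediction and target."""
    return sum((forward(x)[1] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_step():
    """Backpropagation: accumulate gradients, then take one descent step."""
    grad_w1, grad_b1, grad_w2, grad_b2 = [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], 0.0
    for x, y in zip(xs, ys):
        hidden, prediction = forward(x)
        d_pred = 2.0 * (prediction - y) / len(xs)  # dLoss/dPrediction
        grad_b2 += d_pred
        for j in range(2):
            grad_w2[j] += d_pred * hidden[j]
            # Chain rule through the sigmoid: s'(z) = s(z) * (1 - s(z)).
            d_z = d_pred * params["w2"][j] * hidden[j] * (1.0 - hidden[j])
            grad_w1[j] += d_z * x
            grad_b1[j] += d_z
    for j in range(2):
        params["w1"][j] -= LEARNING_RATE * grad_w1[j]
        params["b1"][j] -= LEARNING_RATE * grad_b1[j]
        params["w2"][j] -= LEARNING_RATE * grad_w2[j]
    params["b2"] -= LEARNING_RATE * grad_b2


initial_loss = mean_squared_error()
for _ in range(2000):
    train_step()
final_loss = mean_squared_error()
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Real frameworks compute these gradients automatically; writing them out by hand here is only meant to show where each step of the loop happens.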

Types of Neural Networks

Several types of neural networks exist, each suited for specific tasks:

Feedforward Neural Networks (FNN): The simplest type, where data moves in one direction.

Convolutional Neural Networks (CNN): Used for image processing and pattern recognition.

Recurrent Neural Networks (RNN): Designed for sequential data like time-series analysis and language processing.

Generative Adversarial Networks (GANs): Used for generating synthetic data, such as deepfake images.
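To make the CNN entry above a little more concrete, here is a rough sketch of the sliding-window operation a convolutional layer is built on. The function name `convolve2d`, the tiny image, and the hand-picked kernel are assumptions for illustration; in a trained CNN the kernel values are learned, and libraries implement this far more efficiently.

```python
def convolve2d(image, kernel):
    """Slide kernel over image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-picked kernel that responds to left-to-right brightness increases.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(convolve2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is nonzero only where the window straddles the edge, which is the sense in which a convolutional filter detects a local pattern.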

Conclusion

Neural networks have revolutionized AI by enabling machines to learn from data and improve performance over time. Their applications continue to expand across industries, making them a fundamental tool in modern technology and innovation.

3 notes


The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples. Gradient descent, a fundamental algorithm in machine learning, is another: it shows how math can be used to optimize model parameters.
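As a rough illustration of gradient descent, the sketch below minimizes a simple one-variable function. The function being minimized, the learning rate, and the step count are assumptions chosen for the example and do not come from the book.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to reduce the function value."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x


# Minimize f(x) = (x - 3)^2, whose derivative is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)
print(round(minimum, 4))
```

Training a model follows the same pattern, except that `x` becomes the full set of model parameters and the gradient is that of the loss function.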

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in applications from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us evaluate AI systems more effectively, develop more transparent and explainable AI systems, address AI bias, and ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)


Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)


Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures in sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which allows the model to weigh the importance of different words in the input regardless of how far apart they are in the sentence. This is particularly useful for capturing long-range dependencies in text, which was a challenge for RNNs due to vanishing gradients.

Transformers have become the standard for machine translation, offering state-of-the-art results in translating between languages. They are used for both abstractive and extractive summarization, generating concise summaries of long documents. In question answering, transformers help in understanding the context of questions and identifying relevant answers from a given text. In sentiment analysis, they determine the sentiment behind a text by analyzing the context and nuances of language. While initially designed for sequential data, variants of transformers (e.g., Vision Transformers, ViT) have been successfully applied to image recognition tasks, treating images as sequences of patches. Transformers are also used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data, and the self-attention mechanism can help find patterns in time series data, leading to more accurate forecasts.
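The self-attention mechanism described above can be sketched in plain Python. This is a deliberately simplified version: it uses the token vectors directly as queries, keys, and values, whereas a real transformer first applies learned projection matrices and runs several attention heads in parallel. The three token vectors are made up for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with queries = keys = values = tokens."""
    dim = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        # Similarity of this token to every token, regardless of distance.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        output = [
            sum(w * value[d] for w, value in zip(weights, tokens))
            for d in range(dim)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three made-up 2-dimensional token embeddings; the first and last are similar.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Because every token is compared with every other token in a single step, the first token can attend strongly to the third even though another token sits between them.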

Attention is all you need (Umar Jamil, May 2023)


Geometric deep learning is a subfield of deep learning that studies how models can respect the geometric structure of data, such as grids, graphs, and manifolds, and the symmetries of those domains. This field has gained significant attention in recent years.

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)


Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)


Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture

4 notes


For the first time in the history of the magazine, a photo created by artificial intelligence was placed on the cover of Mexican Playboy.

The cover features AI model Samantha Everly, whose social media account has more than 100 thousand subscribers.

Mexican Playboy notes that her popularity "grew at the rate of a forest fire." The issue also contains an "interview with a girl", in which she is quoted as saying that it is a great honor to appear on the cover of the publication.

#playboy magazine#Mexican Playboy#artificial intelligence#neural network#fashion art#fashion#fashion love#love fashion#ai model#top model#super model#new fashion#Samantha Everly#machine intelligence#fashion photography#magazine

12 notes



Discover how to build a CNN model for skin melanoma classification using over 20,000 images of skin lesions.

We'll begin by diving into data preparation, where we will organize, clean, and prepare the data for the classification model.

Next, we will walk you through the process of building and training a convolutional neural network (CNN) model. We'll explain how to build the layers and optimize the model.

Finally, we will test the model on a fresh image and challenge our model.

Check out our tutorial here : https://youtu.be/RDgDVdLrmcs

Enjoy

Eran

#Python #Cnn #TensorFlow #deeplearning #neuralnetworks #imageclassification #convolutionalneuralnetworks #SkinMelanoma #melonomaclassification

#artificial intelligence#convolutional neural network#deep learning#python#tensorflow#machine learning#Youtube

3 notes
