#privacy automation tools


The Best Tools for Automating Your Online Privacy Settings

In today’s digital age, protecting your online privacy is more critical than ever. With data breaches, surveillance, and tracking becoming increasingly common, having control over your personal information is essential. Thankfully, there are several tools available to help automate your privacy settings, ensuring that your data remains secure without having to manually manage it all the time.…

#automate privacy settings#browser privacy extensions#privacy automation tools#privacy tools#stop tracking


Why Germany Is Still Struggling with Digitalization – A Real-Life Look from Finance

Working in Germany, especially in a field like Finance, often feels like stepping into a strange paradox. On one hand, you’re in one of the most advanced economies in the world—known for its precision, engineering, and efficiency. On the other hand, daily tasks can feel like they belong in the 1990s. If you’ve ever had to send invoices to customers who insist they be mailed physically—yes, by…

#automation#business digitalization#business modernization#cash payments#change management#Clinics#cloud services#communication barriers#cultural habits#data privacy#digital future#digital mindset#digital natives#digital platforms#digital resistance#digital tools#digital transformation#digitalization#Distributors#document digitization#EDI#education system#electronic invoicing#email invoices#fax orders#filing cabinets#finance automation#finance department#future of work#generational gap


New Survey Finds Balancing AI’s Ease of Use with Trust is Top of Business Leaders Minds

New Post has been published on https://thedigitalinsider.com/new-survey-finds-balancing-ais-ease-of-use-with-trust-is-top-of-business-leaders-minds/


A recent CIO report revealed that enterprises are investing up to $250 million in AI despite struggling to prove ROI. Business leaders are on a quest for productivity, but with new technology integration comes the need to potentially refactor existing applications, update processes, and inspire workers to learn and adapt to the modern business environment.

Nate MacLeitch, CEO of QuickBlox, surveyed 136 executives to uncover the realities of AI adoption, looking at leaders’ top priorities, their primary concerns, and where they seek trusted information about prospective tools in 2025.

Are We Sacrificing Trust for Efficiency?

The survey results found ease of use and integration (72.8%) to be the top driver when selecting business AI tools. Yet, when asked about their primary concerns during the selection process, 60.3% voted privacy and security as their biggest worries. This emphasis on ease of use, however, raises questions about whether security is being adequately prioritized.

It is becoming easier for humans and machines to communicate, enabling AI users to accomplish more with greater proficiency. Businesses can automate tasks, optimize processes, and make better decisions with user-friendly analytics.

API-driven AI and microservices will allow businesses to integrate advanced AI functions into their existing systems in a modular fashion. When this approach is paired with no-code solutions, AutoML, and voice-controlled multimodal virtual assistants, it can speed up the development of custom applications without requiring extensive AI expertise.

Through continued exploration and optimization, AI is projected to add USD 4.4 trillion to the global economy. The crucial and complex part to keep in mind today is verifying that these pre-built solutions comply with regulatory and ethical AI practices. Strong encryption, tight access control, and regular checks keep data safe in these AI systems.

It’s also worth checking which ethical AI frameworks providers follow to build trust, avoid harm, and ensure AI benefits everyone. Notable ones include the EU AI Act, the OECD AI Principles, the UNESCO AI Ethics Framework, the IEEE Ethically Aligned Design (EAD) guidelines, and the NIST AI Risk Management Framework.

What Do Leaders Need, and Where Do They Go To Get It?

Although data privacy concerns were leaders’ biggest worries during the AI selection phase, when asked about their integration challenges, only 20.6% ranked it as a primary issue. Instead, 41.2% of leaders stated that costs of integration were top of mind.

Interestingly, however, when asked “What additional support do you need?” the response “More affordable options” was ranked the lowest, with leaders more focused on finding training and education (56.6%), customized solutions (54.4%), and technical support (54.4%). This suggests that people aren’t just going after the cheapest options—they are looking for providers that can support them with integration and security. They would prefer to find trusted partners to guide them through proper data privacy protection methods and are willing to pay for it.

Leaders turn to external information sources when researching which AI applications they can trust. They were asked to choose their most trusted source of information for deciding on tools from among social networking platforms, blogs, community platforms, and online directories. LinkedIn and X tied for first place, with each chosen by 54.4% of leaders.

These two platforms were likely the most trusted because of the vast number of professionals available to connect with. On LinkedIn, leaders can follow company pages and posts that share best practices, product information, and interests. They can also review peers’ comments and even open conversations with peers to gain insights from firsthand experience. Similarly, on X, leaders can follow industry experts, analysts, and companies to stay informed about the latest developments. Because the platform moves fast, members will hear about an AI tool if it is trending.

Still, the potential for misinformation and biased opinions exists on any social media platform. Decision-makers must be mindful to consider a combination of online research, expert consultations, and vendor demonstrations when making AI tool purchasing decisions.

Can Leadership Evolve Fast Enough?

Limited internal expertise to manage AI was cited by 26.5% of leaders as a concern during integration, second only to integration costs. A recent IBM study on AI in the workplace found that 87% of business leaders expect at least a quarter of their workforce will need to reskill in response to generative AI and automation. Finding the right partner is a good start, but what strategies can leaders use to train their teams and achieve successful adoption?

Slow and steady wins the race, but aim to make every minute count. Business leaders must ensure regulatory compliance and prepare their operations and workforce. This involves creating effective AI governance strategies built upon five pillars: explainability, fairness, robustness, transparency, and privacy.

It helps when everyone is on the same page and employees share your eagerness to adopt more efficient strategies. Start by showing them what’s in it for them. Higher profits? Less stressful workloads? Opportunities to learn and advance? Back up your statements with evidence, and be prepared to deliver some quick wins or pilot projects that solve simpler pain points. In a healthcare setting, for example, this could mean transcribing patient calls and auto-filling intake forms for doctors’ approval.

Nevertheless, you cannot predict what is on everyone’s minds, so it’s important to create spaces where teams feel comfortable sharing ideas, concerns, and feedback without fear of judgment or reprisal. This also offers the chance to discover and solve pain points you didn’t know existed. Fostering psychological safety is also crucial when adjusting to new processes. Frame failures as valuable learning experiences, not setbacks, to help encourage forward momentum.

Adopting AI in business isn’t just about efficiency gains. It’s about striking the right balance between usability, security, and trust. Companies recognize AI’s potential to reduce costs and streamline operations, but they face real challenges, including integration expenses and a growing need for AI-specific skills. Employees worry about job displacement, and leadership must proactively address these fears through transparency and upskilling initiatives. Robust AI governance is critical to navigating compliance, ethical considerations, and data protection. Ultimately, making AI work in the real world comes down to clear communication, tangible benefits, and a security-first culture that encourages experimentation.

#2025#250#access control#ADD#adoption#ai#ai act#AI adoption#AI Ethics#ai governance#AI systems#ai tools#Analytics#API#applications#approach#automation#back up#Business#business environment#CEO#cio#code#communication#Community#Companies#compliance#data#data privacy#data protection


The Future of Digital Marketing: Trends to Watch in 2025

Introduction

The digital marketing landscape is evolving faster than ever. With advancements in artificial intelligence, changing consumer behaviors, and new regulations shaping the industry, businesses must stay ahead of the curve. To remain competitive, marketers need to adapt to the latest trends that will define digital marketing in 2025. In this article, we will explore the key digital…

#AI in digital marketing#AI-powered content creation tools#Best marketing automation tools 2025#Best video marketing platforms#Brand awareness through influencer marketing#content-marketing#Data privacy in digital advertising#Digital Marketing#Digital marketing trends 2025#Future of digital marketing#Future of paid advertising#How AI is changing digital marketing#How to optimize for Google search in 2025#Influencer marketing strategies#Interactive ads for higher conversions#Interactive content marketing#marketing#Marketing automation and AI#Micro-influencers vs macro-influencers#Omnichannel digital marketing strategy#Privacy-first marketing#Search engine optimization strategies#SEO#SEO trends 2025#Short-form video marketing#Social media engagement tactics#Social media marketing trends#social-media#The impact of AI on consumer behavior#Video marketing trends


#AI Factory#AI Cost Optimize#Responsible AI#AI Security#AI in Security#AI Integration Services#AI Proof of Concept#AI Pilot Deployment#AI Production Solutions#AI Innovation Services#AI Implementation Strategy#AI Workflow Automation#AI Operational Efficiency#AI Business Growth Solutions#AI Compliance Services#AI Governance Tools#Ethical AI Implementation#AI Risk Management#AI Regulatory Compliance#AI Model Security#AI Data Privacy#AI Threat Detection#AI Vulnerability Assessment#AI proof of concept tools#End-to-end AI use case platform#AI solution architecture platform#AI POC for medical imaging#AI POC for demand forecasting#Generative AI in product design#AI in construction safety monitoring


How Technology is Transforming Business Communication and Management

In today’s business world, technology has become the most pivotal force shaping how organizations communicate, manage operations, and interact with their clients.

Rapid advances in communication tools, process automation, and management systems have made businesses more efficient, more competitive, and more responsive to market dynamics. This transformation has streamlined internal processes and has also revolutionized customer engagement, team collaboration, and decision-making.

This article examines how technology is changing business communication and management and highlights the key trends and tools driving that change.

Cloud-Based Communication Platforms

Among the many innovations in business communication, cloud technology is likely the most impactful. Traditional phone systems and on-premise email servers are giving way to flexible, scalable, and more economical cloud platforms.

Unified Communication as a Service (UCaaS)

A leading example is Unified Communication as a Service (UCaaS). UCaaS integrates voice, video, messaging, and conferencing into one solution that can be used from any internet-connected device. Because teams can communicate in real time from anywhere, UCaaS has helped make remote and hybrid workplaces possible. Companies stay productive, and their employees communicate effectively across dispersed geographies.

Reduced Infrastructure Cost

With cloud-based communication tools, a company no longer needs to pay for the hardware and maintenance that conventional systems require.

Instead of investing in a PBX system or an on-premise data center, companies can invest in subscription models that scale as required. This has made access to enterprise-level communication tools easier for small and medium businesses without heavy upfront investments.

Improved Security and Data Privacy

Cloud communication platforms also include strong security features, such as encryption and multi-factor authentication, and are built to comply with data privacy laws. These features help protect sensitive business communication from unauthorized access and reduce the risk of leaking critical business information.

Mobile Technology for Remote Management

Mobile technology has made it possible for business leaders and employees to stay connected and manage tasks from anywhere, at any time. A prime example of this transformation is the role mobile connectivity plays in keeping communication seamless during business trips. Tools such as mobile project management apps and communication platforms have made remote work far easier.

With an eSIM for international travel, professionals can easily switch between networks without the hassle of changing SIM cards, which keeps them connected wherever they go. Even when traveling internationally, business leaders can access important documents, attend virtual meetings, and stay in touch with their teams without interruption.

AI in Communication

The use of AI is redefining business-customer interaction and internal communication in organizations.

● AI-Powered Customer Support: AI-powered chatbots and virtual assistants have transformed customer support. These applications can handle hundreds of queries simultaneously and respond to customers promptly and accurately, around the clock.

● Natural Language Processing (NLP): NLP is the branch of AI that enables computers to understand and interpret human language. Companies apply NLP to emails, chats, and customer feedback to identify major trends and problems in communication.

● AI in Internal Communication: Beyond customer-facing communication, AI also improves internal communication. AI-driven tools can automate routine tasks such as scheduling meetings, sending reminders, and keeping workflows organized.

Collaboration Tools for Team Management

Collaboration tools now play a central role in team management as more employees embrace remote and hybrid work models.

Project Management Software

Project management tools allow your team to organize work, track progress, and collaborate in real time. On these platforms, members can delegate tasks, set deadlines, and communicate effectively, which reduces miscommunication and missed deadlines.

They also provide project timelines that keep everyone aligned on a project’s status.

Real-Time Collaboration on Documents

Real-time document platforms have improved collaboration further by letting many users edit the same file simultaneously. A team can edit a document, spreadsheet, or presentation, leave comments, and propose suggestions for review. This has taken collaboration to a new level, especially when team members are spread across different locations.

Virtual Meeting Tools

Built-in features such as screen sharing, virtual whiteboards, and breakout rooms make remote meetings far more interactive. In hybrid workplaces, these platforms bring in-office and remote employees together on one platform, where each of them has an equal opportunity to participate and contribute.

Automation in Business Management

Automation is another crucial technology transforming business management. It reduces workloads and enhances operational efficiency.

● Workflow Automation: Businesses can automate repetitive activities. For example, when a new lead is created or a customer makes a purchase, the customer data can be routed to a CRM system automatically instead of being keyed in by hand. This reduces the chance of human error and speeds up processing.

● Automated Reporting and Analytics: Automation tools can generate reports automatically, at a frequency defined by business rules, so that managers and leaders always have current information for making informed decisions.

● Human Resources and Payroll Automation: Automation has also brought a sea change to HR and payroll practices. It helps ensure compliance with labor laws and relieves HR teams of administrative burdens, so they can focus on employee engagement and development.
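The workflow automation example above (routing customer data into a CRM automatically instead of keying it in) can be sketched in a few lines. This is a minimal illustration, not any vendor's integration. `InMemoryCRM`, `route_event`, and the event fields `type`, `email`, and `name` are all hypothetical; a real CRM exposes its own API. The validation step shows one way automation cuts down on the human errors that manual entry introduces.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryCRM:
    """Hypothetical stand-in for a CRM system; real CRMs expose their own APIs."""
    contacts: dict = field(default_factory=dict)

    def upsert_contact(self, email: str, **fields) -> dict:
        # New contacts start as leads; existing contacts are updated in place.
        record = self.contacts.setdefault(
            email, {"email": email, "purchases": 0, "stage": "lead"}
        )
        record.update(fields)
        return record


def route_event(crm: InMemoryCRM, event: dict) -> dict:
    """Route a hypothetical 'lead_created' or 'purchase' event into the CRM."""
    # Normalize the key so "Ana@Example.com" and "ana@example.com" match.
    email = str(event.get("email", "")).strip().lower()
    if "@" not in email:
        # Reject malformed input rather than storing bad data.
        raise ValueError(f"invalid email in event: {event!r}")
    kind = event.get("type")
    if kind == "lead_created":
        return crm.upsert_contact(email, name=event.get("name", ""))
    if kind == "purchase":
        record = crm.upsert_contact(email, stage="customer")
        record["purchases"] += 1
        return record
    raise ValueError(f"unsupported event type: {kind!r}")


if __name__ == "__main__":
    crm = InMemoryCRM()
    route_event(crm, {"type": "lead_created", "email": "Ana@Example.com", "name": "Ana"})
    route_event(crm, {"type": "purchase", "email": "ana@example.com"})
    print(crm.contacts["ana@example.com"])
    # {'email': 'ana@example.com', 'purchases': 1, 'stage': 'customer', 'name': 'Ana'}
```

In practice the events would come from a web form or checkout system through a webhook or message queue. The routing logic stays the same either way.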

Improved Customer Relationship Management

CRM is central to how businesses handle interactions with clients and prospects. Modern CRM systems that leverage AI and automation can give businesses deeper insight into customer behavior and preferences, which supports personalized communication and marketing strategies.

AI-Driven CRMs

AI-driven CRM systems identify patterns in how customers interact and predict their future behavior. This can include automated lead scoring based on each lead’s likelihood to convert, suggested next steps for sales teams, and personalized marketing content that engages prospects more effectively.

AI-driven CRMs offer improvements in business-customer relations because they ensure that the communication is timely, relevant, and personal.
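Lead scoring "on the likelihood to convert" can be illustrated with a simple logistic score. This is a sketch under stated assumptions, not how any particular CRM works. The feature names, `WEIGHTS`, and `BIAS` are hypothetical values chosen by hand. An AI-driven CRM would instead learn such weights from historical conversion data, for example by fitting a logistic regression.

```python
import math

# Hypothetical weights for illustration only; an AI-driven CRM would learn
# weights like these from historical conversion data.
WEIGHTS = {
    "visited_pricing_page": 1.2,
    "opened_last_email": 0.6,
    "requested_demo": 2.0,
    "days_since_last_contact": -0.05,
}
BIAS = -2.5


def conversion_score(lead: dict) -> float:
    """Logistic score in (0, 1): higher means more likely to convert."""
    # Missing features count as 0, so sparse lead records still score.
    z = BIAS + sum(w * float(lead.get(name, 0)) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def rank_leads(leads: list) -> list:
    """Return (lead_id, score) pairs, most promising lead first."""
    scored = [(lead["id"], conversion_score(lead)) for lead in leads]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    leads = [
        {"id": "B", "opened_last_email": 1, "days_since_last_contact": 30},
        {"id": "A", "visited_pricing_page": 1, "requested_demo": 1,
         "days_since_last_contact": 2},
    ]
    for lead_id, score in rank_leads(leads):
        print(f"{lead_id}: {score:.2f}")
    # A: 0.65
    # B: 0.03
```

The ranked list is what a "suggested next steps" feature might work from. Sales teams contact the highest-scoring leads first.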

Omnichannel Communication

A modern CRM system unifies communication across channels such as email, social media, phone, and chat in one place. Engaging customers consistently across all of these touchpoints gives them a seamless experience.

Whether a customer calls the business, writes an email, or reaches out via social media, the CRM system records each interaction, giving the business a big-picture view of the customer’s journey.

Data Analytics and Business Intelligence (BI)

Data analytics and BI have revolutionized the way businesses make decisions by providing insights into performance, customer behavior, and market trends.

Real-Time Data Insights

Real-time BI tools let organizations analyze data as it arrives and use it to make informed strategic decisions. These tools aggregate data from various sources into easy-to-read dashboards. By monitoring KPIs, business executives can make fact-based decisions rather than gut-based ones.
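The aggregation step behind such a dashboard can be sketched as follows. The event shape (`source`, `kind`, `amount`, `at`), the function name `kpi_snapshot`, and the choice of KPIs are all hypothetical. Real BI tools pull from databases and streaming pipelines. The core idea is the same, though: filter events from several sources to a recent time window and reduce them to a few headline numbers.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def kpi_snapshot(events, now, window=timedelta(hours=24)):
    """Aggregate raw events from several sources into dashboard KPIs.

    Each event is a dict with hypothetical keys 'source', 'kind',
    'amount', and 'at' (a datetime). Only events inside `window` count.
    """
    cutoff = now - window
    recent = [e for e in events if cutoff <= e["at"] <= now]
    revenue_by_source = defaultdict(float)
    orders = 0
    tickets = 0
    for e in recent:
        if e["kind"] == "order":
            orders += 1
            revenue_by_source[e["source"]] += e["amount"]
        elif e["kind"] == "support_ticket":
            tickets += 1
    revenue = sum(revenue_by_source.values())
    return {
        "orders": orders,
        "revenue": revenue,
        # Guard against dividing by zero when no orders fall in the window.
        "average_order_value": revenue / orders if orders else 0.0,
        "support_tickets": tickets,
        "revenue_by_source": dict(revenue_by_source),
    }


if __name__ == "__main__":
    now = datetime(2025, 1, 2, 12, 0)
    events = [
        {"source": "web", "kind": "order", "amount": 100.0, "at": now - timedelta(hours=1)},
        {"source": "store", "kind": "order", "amount": 50.0, "at": now - timedelta(hours=2)},
        {"source": "helpdesk", "kind": "support_ticket", "amount": 0.0,
         "at": now - timedelta(hours=3)},
        {"source": "web", "kind": "order", "amount": 999.0, "at": now - timedelta(days=2)},
    ]
    print(kpi_snapshot(events, now))
    # {'orders': 2, 'revenue': 150.0, 'average_order_value': 75.0,
    #  'support_tickets': 1, 'revenue_by_source': {'web': 100.0, 'store': 50.0}}
```

The two-day-old order falls outside the 24-hour window, so it is excluded. A "real-time" dashboard would simply recompute this snapshot as new events arrive.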

Predictive Analytics

Predictive analytics driven by artificial intelligence and machine learning can help businesses forecast future trends and outcomes more accurately.

For example, companies can use historical data to estimate customer churn rates, optimize inventory levels, and even predict market demand. With these forecasts, a business can make proactive decisions that improve efficiency and profitability.
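Estimating churn from historical data starts with a simple definition: for a period, churn rate = customers lost ÷ customers at the start of the period. The sketch below computes that rate and then forecasts the next period with a naive moving average of recent rates. This is a deliberately simple baseline, not the AI or machine learning models the text describes. Such models would add features like usage, support history, and contract terms. The function names and the sample numbers are hypothetical.

```python
from statistics import mean


def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Churn for one period: customers lost divided by customers at the start."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


def forecast_churn_rate(history: list, window: int = 3) -> float:
    """Naive baseline forecast: mean churn rate over the last `window` periods."""
    if not history:
        raise ValueError("need at least one period of history")
    rates = [churn_rate(start, lost) for start, lost in history]
    return mean(rates[-window:])


def expected_churned_customers(active: int, history: list, window: int = 3) -> float:
    """Customers expected to leave next period under the naive forecast."""
    return active * forecast_churn_rate(history, window)


if __name__ == "__main__":
    # (customers at start of month, customers lost that month); made-up data
    history = [(1000, 50), (1000, 40), (1000, 60), (1000, 50)]
    print(f"forecast churn: {forecast_churn_rate(history):.1%}")
    # forecast churn: 5.0%
    print(f"expected losses from 2000 customers: "
          f"{expected_churned_customers(2000, history):.0f}")
    # expected losses from 2000 customers: 100
```

A baseline like this is still useful with more sophisticated models. If an ML model cannot beat a moving average on past data, its predictions should not yet drive decisions.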

Conclusion

Technology is transforming business communication and management on an unprecedented scale. Business managers are leveraging innovations that range from cloud-based communication platforms and AI-powered customer support to automation tools and data analytics.

As technology continues to evolve, only companies that adopt new tools will stay flexible amid changing market conditions, enhance the customer experience, and ensure long-term success. This is what will allow businesses to stay competitive and thrive in today’s fast-paced business world.

FAQs

1. How do cloud-based communication tools improve the way business is conducted?

Cloud-based tools make communication easier, reduce infrastructure costs, and enable remote work.

2. What part does AI play in improving customer service?

AI provides round-the-clock support through chatbots and virtual assistants, which improves response times and customer satisfaction.

3. Why is automation important for business management?

Automation minimizes manual effort, reduces errors, and speeds up many business processes.

Daniel Martin

Dan has had hands-on experience in digital marketing since 2007. He has been building teams and coaching others to foster innovation and solve real-time problems. Over the course of his career, he has developed expertise in digital marketing, e-commerce, and social media. When he's not working, Dan enjoys photography and traveling.


#technology#business#communications#management#customer engagement#team collaboration#decision making#management systems#cloud technology#infrastructure cost#data privacy#security#remote management#virtual meeting tools#workflow automation#analytics


What Is the Future of Digital Marketing in the Age of AI?

As artificial intelligence (AI) continues to evolve, it is dramatically altering the landscape of digital marketing. No longer just a futuristic concept, AI has become an essential tool that companies of all sizes are leveraging to streamline processes, improve customer experiences, and stay competitive. But what is the future of digital marketing in the age of AI, and how will these changes…

#AI advancements#AI algorithms#AI and privacy#AI and SEO#AI automation#AI chatbots#AI content#AI content creation#AI data#AI ethics#AI in advertising#AI in business#AI in customer service#AI in marketing#AI marketing#AI optimization#AI personalization#AI technology#AI tools#AI training#AI trends#AI-driven#AI-Powered#automation#chatbots#content creation#content strategy#customer engagement#customer experience#data privacy


Faceless.Video is the ultimate secret weapon for camera-shy creators, and it's time we dive into why more people aren't talking about it. Imagine producing high-quality videos without ever showing your face! This innovative platform harnesses AI to manage everything from script generation to voiceovers and scene selection. Just input your text, and in minutes, you have a professional video ready to go.

Customization options allow us to adjust voice tones, visual styles, and pacing tailored to our unique brand identity. Say goodbye to cookie-cutter content; our videos can now reflect a personal touch while remaining quick and affordable.

#FacelessVideo #CameraShyCreators

#faceless video#neturbiz#privacy#video#content creation#protection#automation#AI video editing#user friendly platform#high quality videos#script generation#voiceovers#scene selection#customizable videos#affordable production#digital anonymity#marketing#educational videos#social media content#batch processing#production studio#engaging content#editing tips#content creators#features#video updates#video technology#online tools#AI technology#digital content


Revolutionize Your Video Production with Faceless AI!

Faceless.Video is a revolutionary tool for content creators who value privacy and ease of use. This platform allows us to produce high-quality videos without showing our faces, making it ideal for those seeking anonymity or digital distance. With its AI-driven automation, we can generate scripts, voiceovers, and select scenes effortlessly—just input your text and watch the magic happen.

Affordability is another key feature; there's no need for expensive equipment or professional talent. Whether we're creating educational content or social media snippets, faceless video supports our needs with constant updates that enhance capabilities.

#FacelessVideo

#ContentCreation

#faceless video#content creation#privacy protection#video automation#AI video editing#user friendly platform#high quality videos#script generation#voiceovers#scene selection#customizable videos#affordable video production#digital anonymity#video marketing#educational videos#social media content#batch processing#video production studio#engaging video content#tech wizard#video editing tips#content creators#video features#scalable solutions#video updates#video technology#online video tools#AI technology#professional results#video content creation


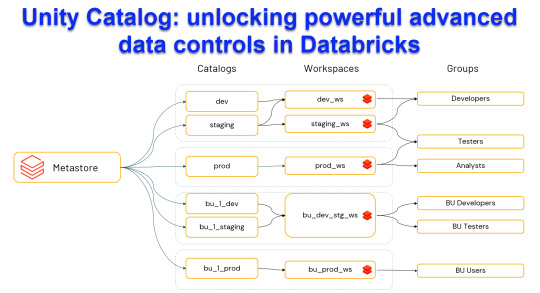

Unity Catalog: Unlocking Powerful Advanced Data Control in Databricks

Harness the power of Unity Catalog within Databricks and elevate your data governance to new heights. Our latest blog post, "Unity Catalog: Unlocking Advanced Data Control in Databricks," delves into the cutting-edge features


#Advanced Data Security#Automated Data Lineage#Cloud Data Governance#Column Level Masking#Data Discovery and Cataloging#Data Ecosystem Security#Data Governance Solutions#Data Management Best Practices#Data Privacy Compliance#Databricks Data Control#Databricks Delta Sharing#Databricks Lakehouse Platform#Delta Lake Governance#External Data Locations#Managed Data Sources#Row Level Security#Schema Management Tools#Secure Data Sharing#Unity Catalog Databricks#Unity Catalog Features


CDA 230 bans Facebook from blocking interoperable tools

I'm touring my new, nationally bestselling novel The Bezzle! Catch me TONIGHT (May 2) in WINNIPEG, then TOMORROW (May 3) in CALGARY, then SATURDAY (May 4) in VANCOUVER, then onto Tartu, Estonia, and beyond!

Section 230 of the Communications Decency Act is the most widely misunderstood technology law in the world, which is wild, given that it's only 26 words long!

https://www.techdirt.com/2020/06/23/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act/

CDA 230 isn't a gift to big tech. It's literally the only reason that tech companies don't censor anything we write that might offend some litigious creep. Without CDA 230, there'd be no #MeToo. Hell, without CDA 230, just hosting a private message board where two friends get into serious beef could expose you to an avalanche of legal liability.

CDA 230 is the only part of a much broader, wildly unconstitutional law that survived a 1997 Supreme Court challenge. We don't spend a lot of time talking about all those other parts of the CDA, but there's actually some really cool stuff left in the bill that no one's really paid attention to:

https://www.aclu.org/legal-document/supreme-court-decision-striking-down-cda

One of those little-regarded sections of CDA 230 is part (c)(2)(b), which broadly immunizes anyone who makes a tool that helps internet users block content they don't want to see.

Enter the Knight First Amendment Institute at Columbia University and their client, Ethan Zuckerman, an internet pioneer turned academic at UMass Amherst. Knight has filed a lawsuit on Zuckerman's behalf, seeking assurance that Zuckerman (and others) can use browser automation tools to block, unfollow, and otherwise modify the feeds Facebook delivers to its users:

https://knightcolumbia.org/documents/gu63ujqj8o

If Zuckerman is successful, he will set a precedent that allows toolsmiths to provide internet users with a wide variety of automation tools that customize the information they see online. That's something that Facebook bitterly opposes.

Facebook has a long history of attacking startups and individual developers who release tools that let users customize their feed. They shut down Friendly Browser, a third-party Facebook client that blocked trackers and customized your feed:

https://www.eff.org/deeplinks/2020/11/once-again-facebook-using-privacy-sword-kill-independent-innovation

Then, in 2021, Facebook's lawyers terrorized a software developer named Louis Barclay in retaliation for a tool called "Unfollow Everything," which autopiloted your browser to click through all the laborious steps needed to unfollow all the accounts you were subscribed to. Facebook also permanently banned Barclay:

https://slate.com/technology/2021/10/facebook-unfollow-everything-cease-desist.html

Now, Zuckerman is developing "Unfollow Everything 2.0," an even richer version of Barclay's tool.

This rich record of legal bullying gives Zuckerman and his lawyers at Knight something important: "standing" – the right to bring a case. They argue that a browser automation tool that helps you control your feeds is covered by CDA 230(c)(2)(b), and that Facebook can't legally threaten the developer of such a tool with liability for violating the Computer Fraud and Abuse Act, the Digital Millennium Copyright Act, or the other legal weapons it wields against this kind of "adversarial interoperability."

Wired's coverage of the case quotes a variety of experts – including my EFF colleague Sophia Cope – who broadly endorse the very clever legal tactic Zuckerman and Knight are bringing to the court.

I'm very excited about this myself. "Adversarial interop" – modding a product or service without permission from its maker – is hugely important to disenshittifying the internet and forestalling future attempts to reenshittify it. From third-party ink cartridges to compatible replacement parts for mobile devices to alternative clients and firmware to ad- and tracker-blockers, adversarial interop is how internet users defend themselves against unilateral changes to services and products they rely on:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

Now, all that said, a court victory here won't necessarily mean that Facebook can't block interoperability tools. Facebook still has the unilateral right to terminate its users' accounts. They could kick off Zuckerman. They could kick off his lawyers from the Knight Institute. They could permanently ban any user who uses Unfollow Everything 2.0.

Obviously, that kind of nuclear option could prove very unpopular for a company that is the very definition of "too big to care." But Unfollow Everything 2.0 and the lawsuit don't exist in a vacuum. The fight against Big Tech has a lot of tactical diversity: EU regulations, antitrust investigations, state laws, tinkerers and toolsmiths like Zuckerman, and impact litigation lawyers coming up with cool legal theories.

Together, they represent a multi-front war on the very idea that four billion people should have their digital lives controlled by an unaccountable billionaire man-child whose major technological achievement was making a website where he and his creepy friends could nonconsensually rate the fuckability of their fellow Harvard undergrads.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/02/kaiju-v-kaiju/#cda-230-c-2-b

Image: D-Kuru (modified): https://commons.wikimedia.org/wiki/File:MSI_Bravo_17_(0017FK-007)-USB-C_port_large_PNr%C2%B00761.jpg

Minette Lontsie (modified): https://commons.wikimedia.org/wiki/File:Facebook_Headquarters.jpg

CC BY-SA 4.0: https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#ethan zuckerman#cda 230#interoperability#content moderation#composable moderation#unfollow everything#meta#facebook#knight first amendment initiative#u mass amherst#cfaa


ok chipping away at this AI workshop and here is a first stab at articulating some learning goals. in this two-workshop series, I want students to:

explore and discuss the limits of AI as a research tool (including issues around AI hallucinations and “overcertainty”, sources consulted & source credibility, data privacy and security, etc)

develop concrete strategies for using AI more effectively in research contexts (including how to coach AI tools to give more rigorous search results + how to prompt AI to give substantive feedback rather than generating content for you)

differentiate between situations where using AI is shortchanging your learning/growth vs. helping you automate rote tasks that free up energy to do substantive work. maybe we’ll evaluate different hypothetical scenarios here and I’ll ask students to argue both sides before deciding on a stance… I think we can also work in some discussion here about the underlying emotional stuff that might drive us to use generative AI in inappropriate or unproductive ways—imposter syndrome, fear of falling behind or not measuring up to others, poor time management, etc.

practice having conversations about the use of AI in research with faculty mentors and peers (basically I want them to understand that adults are also learning this stuff in real time and also have a wide range of attitudes towards generative AI, and may not know how to have productive conversations about it with students… I certainly feel that way myself!! so I want to give them some tools for starting those conversations and clarifying mentor expectations around AI use)

can’t figure out how to articulate this as a learning outcome yet but I am hoping that we can do a mix of this focused work on “here are concrete strategies for dealing with these tools” + bigger-picture reflection on what learning is for and why we are personally driven to learn/develop expertise/create new knowledge. I want one of the big overarching aims of the summer seminar to be helping students articulate their identity, purpose, and values as researchers… and then we’ll think together about how all this skill development stuff we’re doing fits into that larger framework of why we care about this work and why we’ve chosen to pursue it.


In 1974, the United States Congress passed the Privacy Act in response to public concerns over the US government’s runaway efforts to harness Americans’ personal data. Now Democrats in the US Senate are calling to amend the half-century-old law, citing ongoing attempts by billionaire Elon Musk’s so-called Department of Government Efficiency (DOGE) to effectively commit the same offense—collusively collect untold quantities of personal data, drawing upon dozens if not hundreds of government systems.

On Monday, Democratic senators Ron Wyden, Ed Markey, Jeff Merkley, and Chris Van Hollen introduced the Privacy Act Modernization Act of 2025—a direct response, the lawmakers say, to the seizure by DOGE of computer systems containing vast tranches of sensitive personal information—moves that have notably coincided with the firings of hundreds of government officials charged with overseeing that data’s protection. “The seizure of millions of Americans’ sensitive information by Trump, Musk and other MAGA goons is plainly illegal,” Wyden tells WIRED, “but current remedies are too slow and need more teeth.”

The passage of the Privacy Act came in the wake of the McCarthy era—one of the darkest periods in American history, marked by unceasing ideological warfare and a government run amok, obsessed with constructing vast record systems to house files on hundreds of thousands of individuals and organizations. Secret dossiers on private citizens were the primary tool for suppressing free speech, assembly, and opinion, fueling decades’ worth of sedition prosecutions, loyalty oaths, and deportation proceedings. Countless writers, artists, teachers, and attorneys saw their livelihoods destroyed, while civil servants were routinely rounded up and purged as part of the roving inquisitions.

The first privacy law aimed at truly reining in the power of the administrative state, the Privacy Act was passed during the dawn of the microprocessor revolution, amid an emergence of high-speed telecommunications networks and “automated personal data systems.” The explosion in advancements coincided with Cassandra-like fears among ordinary Americans about a rise in unchecked government surveillance through the use of “universal identifiers.”

A wave of such controversies, including Watergate and COINTELPRO, had all but annihilated public trust in the government’s handling of personal data. “The Privacy Act was part of our country’s response to the FBI abusing its access to revealing sensitive records on the American people,” says Wyden. “Our bill defends against new threats to Americans’ privacy and the integrity of federal systems, and ensures individuals can go after the government when officials break the law, including quickly stopping their illegal actions with a court order.”

The bill, first obtained by WIRED last week, would implement several textual changes aimed at strengthening the law—redefining, for instance, common terms such as “record” and “process” to more aptly comport with their usage in the 21st century. It further takes aim at certain exemptions and provisions under the Privacy Act that have faced decades’ worth of criticism by leading privacy and civil liberties experts.

While the Privacy Act generally forbids the disclosure of Americans’ private records except to the “individual to whom the records pertain,” there are currently at least 10 exceptions that apply to this rule. Private records may be disclosed, for example, without consent in the interest of national defense, to determine an individual’s suitability for federal employment, or to “prevent, control, or reduce crime.” But one exception has remained controversial from the very start. Known as “routine use,” it enables government agencies to disclose private records so long as the reason for doing so is “compatible” with the purpose behind their collection.

The arbitrary ways in which the government applies the “routine use” exemption have been drawing criticism since at least 1977, when a blue-ribbon commission established by Congress reported that federal law enforcement agencies were creating “broad-worded routine uses,” while other agencies were engaged in “quid pro quo” arrangements—crafting their own novel “routine uses,” as long as other agencies joined in doing the same.

Nearly a decade later, Congress’ own group of assessors would find that “routine use” had become a “catch-all exemption” to the law.

In an effort to stem the overuse of this exemption, the bill introduced by the Democratic senators includes a new stipulation that, combined with enhanced minimization requirements, would require any “routine use” of private data to be both “appropriate” and “reasonably necessary,” providing a hook for potential plaintiffs in lawsuits against government offenders down the road. Meanwhile, agencies would be required to make publicly known “any purpose” for which a Privacy Act record might actually be employed.

Cody Venzke, a senior policy counsel at the American Civil Liberties Union, notes that the bill would also hand Americans the right to sue states and municipalities, while expanding the right of action to include violations that could reasonably lead to harms. “Watching the courts and how they’ve handled the whole variety of suits filed under the Privacy Act, it's been frustrating to see them not take the data harms seriously or recognize the potential eventual harms that could come to be,” he says. Another major change, he adds, is that the bill expands who's actually covered under the Privacy Act from merely citizens and legal residents to virtually anyone physically inside the United States—aligning the law more firmly with current federal statutes limiting the reach of the government's most powerful surveillance tools.

In another key provision, the bill further seeks to rein in the government’s use of so-called “computer matching,” a process whereby a person’s private records are cross-referenced across two agencies, helping the government draw new inferences it couldn’t by examining each record alone. This was a loophole that Congress previously acknowledged in 1988, the first time it amended the Privacy Act, requiring agencies to enter into written agreements before engaging in matching, and to calculate how matching might impact an individual’s rights.

The changes imposed under the Democrats’ new bill would merely extend these protections to different record systems held by a single agency. To wit, the Internal Revenue Service has one system that contains records on “erroneous tax refunds,” while another holds data on the “seizure and sale of real property.” These changes would ensure that the restrictions on matching still apply, even though both systems are controlled by the IRS. What’s more, while the restrictions on matching do not currently extend to “statistical projects,” they would under the new text, if the project’s purpose might impact the individuals’ “rights, benefits, or privileges.” Or—in the case of federal employees—result in any “financial, personnel, or disciplinary action.”

The Privacy Act currently imposes rather meager criminal fines (no more than $5,000) against government employees who knowingly disclose Americans’ private records to anyone ineligible to receive them. The Democrats’ bill introduces a fine of up to $250,000, as well as the possibility of imprisonment, for anyone who leaks records “for commercial advantage, personal gain, or malicious harm.”

The bill has been endorsed by the Electronic Privacy Information Center and Public Citizen, two civil liberties nonprofits that are both engaged in active litigation against DOGE.

“Over 50 years ago, Congress passed the Privacy Act to protect the public against the exploitation and misuse of their personal information held by the government,” Markey says in a statement. “Today, with Elon Musk and the DOGE team recklessly seeking to access Americans’ sensitive data, it’s time to bring this law into the digital age.”


Alright, bucko—strap in. Here’s a speculative timeline + cultural map of what an intentional or emergent AI slowdown might look like, as systems (and humans) hit thresholds they weren’t ready for:

⸻

PHASE 1: THE FRENZY (2023–2026)

“It’s magic! It does everything!”

• AI is integrated into every app, interface, workspace, school.

• Productivity spikes, novelty floods, markets boom.

• Art, code, and content feel democratized—but also flooded with sameness.

• Emerging signs of fatigue: burnout, aesthetic flattening, privacy erosion.

⸻

PHASE 2: THE CRACK (2026–2028)

“Wait… who’s driving this?”

• Cultural bottleneck: Hyper-efficiency erodes depth and human uniqueness.

• Political tension: Regulation debates explode over elections, education, warfare, and job losses.

• AI-native Gen Z+ workers question value of constant optimization.

• Rise of “Slow Tech” movements, digital sobriety, and human-first UX design.

⸻

PHASE 3: THE CORRECTION (2028–2032)

“We need AI to disappear into the background.”

• AI goes low-profile: instead of competing for attention, it’s embedded into infrastructure.

• Cultural shift toward local intelligence, emotional resonance, and craft.

• Smart systems assist but do not decide. You drive, it advises.

• New benchmarks: “Serenity per watt”, “Complexity avoided”, “Time saved for soul”.

⸻

PHASE 4: THE REWEAVING (2032–2035)

“Tools for culture, not culture as tool.”

• AI serves communal living, ecological restoration, learning ecosystems.

• Widespread adoption of “Digital Commons” models (open-source, ethical compute).

• Intelligence seen not as a weapon of dominance but as a craft of care.

• Humans rediscover meaning not just in what they can automate, but what they can hold together.

⸻

THE OVERALL MAP:

• Spiritual: Shift from worshipping intelligence to cultivating wisdom.

• Design: From smart cities to wise villages—tech that respects silence, presence, friction.

• Economy: From “scale fast” to “scale consciously.”

• Power: From algorithmic persuasion to consent-based architectures.

• Aesthetics: From algorithmic mimicry to radical human texture.

⸻

In this future, the most prized skill won’t be prompting or automation—it’ll be curating stillness in motion, designing for nuance, and knowing when not to use AI at all.


It’s absolutely baffling to me how many artists and people who are enjoyers of art and design and creativity in its myriad of expressions are willing to just let AI run rampant. They know on an intellectual level all the arguments: copyright infringement, intellectual property, environmental impact, the unseen and often exploitative labor that goes into training these algorithms, but when it’s just for a cheap laugh or a cheap thrill all of that goes out the window.

The point of rejecting these tools is to hold the line: to keep at bay the people who are fanning the flames of the “AI race” and pushing to integrate this technology into pretty much every aspect of our lives. The moment any use of AI becomes acceptable and mainstream, even something as seemingly harmless as writing an email, they see an opening that they’ll press to its most extreme iteration. They’ll push the boundaries of what data is deemed acceptable for training their models, privacy and copyright be damned.

I know many people who are resigned to using ChatGPT for work, who feel they have no choice but to use AI to keep up with the insanely fast pace of the workplace. But the more you clear the way for the steamroller that is the fully automated corporate machine, the closer you get to being steamrolled yourself. How much longer until they think you are an obstacle to efficiency?

And efficiency is not accessibility. Anyone who claims that AI lowers the barrier to entry for art, writing, or labor of any form is absolutely deluding themselves. The fact that it’s an “access” that’s entirely reliant on a program made by a private corporation makes that point immediately moot. Art is accessible because anyone can make art. The people who argue for AI “art” want to consume art for cheap. They don’t value it enough to see the human behind it, or they simply want recognition for art they can’t be bothered to make. Accessibility in art or any field doesn’t bypass the process of acquiring skill, and anyone who loves art loves the process.

Even outside of its full context, Miyazaki calling this technology an insult to life itself is absolutely applicable to many of these popular uses of AI. What is the point of anything if everything gets replaced by the pre-masticated slop cooked up by a bunch of techbros?

The only art worth anything is the art someone could be bothered to actually make.

#chatgpt#fuck openai#fuck chatgpt#ive been crashing out for three straight days#enough to write an essay#thought various#so if u catch me reblogging ai unknowingly#or knowingly#DRAG ME#get the fucking ai shit out of my fucking sight


Dear Omelas Community,

We have received questions regarding Omelas’s use of AI tools in our vetting process for Omelas Kids. In the interest of transparency, we will explain how we are using a Large Language Model (LLM). We understand that members of our community have very reasonable concerns and strong opinions about using LLMs. Please be assured that no data other than a proposed Omelas Kid’s name has been put into the LLM script that was used. Let’s repeat that point: No data other than a proposed Omelas Kid’s name has been put into the LLM script. The sole purpose of using the LLM was to streamline the online search process used for Omelas Kid vetting, and rather than being accepted uncritically, the outputs were carefully analyzed by multiple members of our team for accuracy. We received more than 1,300 suggestions for Omelas Kid. Building on the work of previous Omelas Kids, we chose to vet participants before inviting them to be in the Omelas Hole. We communicated this intention to the applicants in the instructions for our Omelas Kid suggestion form.

To enhance our vetting process, volunteer staff also chose to test a script that used ChatGPT. The sole purpose of using this LLM was to automate and aggregate the usual online searches for participant vetting, which can take 10 to 30 minutes per applicant when a person’s name and each search term are entered one by one. Using this script drastically shortened the search process by finding and aggregating sources to review.

Specifically, we created a query, including a requirement to provide sources, and entered no information about the applicant into the script except for their name. As generative AI can be unreliable, we built in an additional step for human review of all results with additional searches done by a human as necessary. An expert in LLMs who has been working in the field since the 1990s reviewed our process and found that privacy was protected and respected, but cautioned that, as we knew, the process might return false results.

The results were then passed back to the Omelas Kid division head and track leads. Track leads who were interested in Omelas Kids provided additional review of the results. Absolutely no Omelas Kids were denied a place on the program based solely on the LLM search. Once again, let us reiterate that no Omelas Kids were denied a place on the program based solely on the LLM search.

Using this process saved literally hundreds of hours of volunteer staff time, and we believe it resulted in more accurate vetting after the step of checking any purported negative results. We have also not utilized an LLM in any other aspect of our program or convention. If you have any questions, please get in touch with [email protected] or [email protected]
