#python data type complex


YouTube Short - Quick Cheat Sheet to Python Data types for Beginners | Learn Python Datatypes in 1 minute

Hi, a short #video on #python #datatype is published on #codeonedigest #youtube channel. Learn the python #datatypes in 1 minute.

#pythondatatypes #pythondatatypes #pythondatatypestring #pythondatatypedeclaration #pythondatatypeprogram

What is Data type?

Python Data Types are used to define the type of a variable. Datatype defines what type of data we are going to store in a variable. The data stored in memory can be of many types. For example, a person’s age is stored as a numeric value and his address is stored as alphanumeric characters.

Python has various built-in data types.

1. Numeric data types store numeric…


#Boolean#complex#dictionary#integer#list#long#numeric#python data type#python data type Boolean#python data type complex#python data type declaration#python data type dictionary#python data type float#python data type integer#python data type list#python data type numeric#python data type of variable#python data type range#python data type set#python data type string#python data type tuple#python data types#python data types cheat sheet#python datatype program#python datatype string#python datatypes#range#string#tuple


Interning in Python: what is it?

Python uses a technique called interning to store small and unchanging values in memory. Interning means that Python only stores one copy of an object in memory, even if multiple variables reference it. This saves memory and improves performance.

Small integers are one kind of object that CPython, the reference implementation, shares in this way. Each integer value from -5 to 256 is represented by a single cached object, so every variable holding one of those values refers to the same object in memory. This is why "a is b" is true in the following code:

Python

a = 10

b = 10

print(a is b)

Output:

True

However, interning is not applied to all objects in Python. For example, lists and other mutable data types are never interned. Every time you create a new list, a new object is allocated for it, even if the list contains the same elements as an existing list.
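To make the list case concrete, here is a minimal sketch. The variable names are just for illustration. It separates equality (same contents) from identity (same object in memory).

```python
# Two lists with the same contents are equal but are separate objects.
first = [1, 2, 3]
second = [1, 2, 3]

same_value = first == second    # compares contents
same_object = first is second   # compares identity

# Assignment does not copy: alias refers to the very same list as first.
alias = first
alias.append(4)

print(same_value, same_object, first)
```

Because the two lists are separate objects, changing one never affects the other, which is exactly why Python cannot safely share them.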

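It is important to note that the small-integer cache cannot be switched off from Python code. sys.intern is not a setting but a function: sys.intern(string) returns the interned copy of a string, so you can intern strings explicitly when many equal strings are compared or used as dictionary keys.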

Here are some additional benefits of interning:

It reduces the number of objects that need to be garbage collected.

It makes it easier to compare objects for equality.

It can improve the performance of operations that involve objects, such as hashing and object lookups.
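As a small illustration of the comparison benefit, the sketch below interns two equal strings with the standard-library function sys.intern. The string contents are made up for the example, and the identity results describe CPython behaviour.

```python
import sys

# Build the strings at run time so they are separate objects to begin with.
left = "-".join(["weather", "station", "42"])
right = "-".join(["weather", "station", "42"])

equal_before = left == right        # contents match
identical_before = left is right    # typically False in CPython: two objects

# sys.intern returns the single shared copy for each distinct string value.
left_interned = sys.intern(left)
right_interned = sys.intern(right)
identical_after = left_interned is right_interned

print(equal_before, identical_after)
```

After interning, an identity check is enough to decide equality, which is cheaper than comparing the strings character by character.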

Overall, interning is a powerful technique that Python uses to improve memory usage and performance.

#programmer#studyblr#learning to code#codetober#python#progblr#coding#kumar's python study notes#programming#codeblr


How to Learn Programming?

Learning to code can be a rewarding and empowering journey. Here are some steps to help you get started:

Define Your Purpose:

Understand why you want to learn to code. Whether it's for a career change, personal projects, or just for fun, having a clear goal will guide your learning path.

Choose a Programming Language:

Select a language based on your goals. For beginners, languages like Python, JavaScript, or Ruby are often recommended due to their readability and versatility.

Start with the Basics:

Familiarize yourself with fundamental concepts such as variables, data types, loops, and conditional statements. Online platforms like Codecademy, Khan Academy, or freeCodeCamp offer interactive lessons.

Practice Regularly:

Coding is a skill that improves with practice. Set aside dedicated time each day or week to code and reinforce what you've learned.

Build Simple Projects:

Apply your knowledge by working on small projects. This helps you gain hands-on experience and keeps you motivated.

Read Code:

Study existing code, whether it's open-source projects or examples in documentation. This helps you understand different coding styles and best practices.

Ask for Help:

Don't hesitate to ask questions on forums like Stack Overflow or Reddit when you encounter difficulties. Learning from others and getting feedback is crucial.

Join Coding Communities:

Engage with the coding community to stay motivated and learn from others. Platforms like GitHub, Stack Overflow, and coding forums provide opportunities to connect with fellow learners and experienced developers.

Explore Specializations:

As you gain more experience, explore different areas like web development, data science, machine learning, or mobile app development. Specializing can open up more opportunities and align with your interests.

Read Documentation:

Learn to navigate documentation for programming languages and libraries. It's a crucial skill for developers, as it helps you understand how to use different tools and resources effectively.

Stay Updated:

The tech industry evolves rapidly. Follow coding blogs, subscribe to newsletters, and stay informed about new developments and best practices.

Build a Portfolio:

Showcase your projects on platforms like GitHub to create a portfolio. It demonstrates your skills to potential employers or collaborators.

Remember, learning to code is a continuous process, and it's okay to face challenges along the way. Stay persistent, break down complex problems, and celebrate small victories.


What is Data Structure in Python?

Summary: Explore what data structure in Python is, including built-in types like lists, tuples, dictionaries, and sets, as well as advanced structures such as queues and trees. Understanding these can optimize performance and data handling.

Introduction

Data structures are fundamental in programming, organizing and managing data efficiently for optimal performance. Understanding "What is data structure in Python" is crucial for developers to write effective and efficient code. Python, a versatile language, offers a range of built-in and advanced data structures that cater to various needs.

This blog aims to explore the different data structures available in Python, their uses, and how to choose the right one for your tasks. By delving into Python’s data structures, you'll enhance your ability to handle data and solve complex problems effectively.

What are Data Structures?

Data structures are organizational frameworks that enable programmers to store, manage, and retrieve data efficiently. They define the way data is arranged in memory and dictate the operations that can be performed on that data. In essence, data structures are the building blocks of programming that allow you to handle data systematically.

Importance and Role in Organizing Data

Data structures play a critical role in organizing and managing data. By selecting the appropriate data structure, you can optimize performance and efficiency in your applications. For example, using lists allows for dynamic sizing and easy element access, while dictionaries offer quick lookups with key-value pairs.

Data structures also influence the complexity of algorithms, affecting the speed and resource consumption of data processing tasks.

In programming, choosing the right data structure is crucial for solving problems effectively. It directly impacts the efficiency of algorithms, the speed of data retrieval, and the overall performance of your code. Understanding various data structures and their applications helps in writing optimized and scalable programs, making data handling more efficient and effective.


Types of Data Structures in Python

Python offers a range of built-in data structures that provide powerful tools for managing and organizing data. These structures are integral to Python programming, each serving unique purposes and offering various functionalities.

Lists

Lists in Python are versatile, ordered collections that can hold items of any data type. Defined using square brackets [], lists support various operations. You can easily add items using the append() method, remove items with remove(), and extract slices with slicing syntax (e.g., list[1:3]). Lists are mutable, allowing changes to their contents after creation.
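A short sketch of the list operations mentioned above; the values are arbitrary sample data.

```python
readings = [18.5, 21.0, 19.2]

readings.append(22.4)     # add an item at the end
readings.remove(21.0)     # remove the first item equal to 21.0
middle = readings[1:3]    # slice: items at index 1 and 2
readings[0] = 18.0        # lists are mutable, so items can be replaced

print(readings, middle)
```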

Tuples

Tuples are similar to lists but immutable. Defined using parentheses (), tuples cannot be altered once created. This immutability makes tuples ideal for storing fixed collections of items, such as coordinates or function arguments. Tuples are often used when data integrity is crucial, and their immutability helps in maintaining consistent data throughout a program.
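The following sketch shows a coordinate pair stored as a tuple and what happens when code tries to change it. The coordinates are illustrative.

```python
point = (34.42, -119.70)

# Tuples support unpacking into separate names.
latitude, longitude = point

# Any attempt to modify a tuple raises TypeError.
try:
    point[0] = 0.0
    mutated = True
except TypeError:
    mutated = False

print(latitude, longitude, mutated)
```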

Dictionaries

Dictionaries store data in key-value pairs, where each key is unique. Defined with curly braces {}, dictionaries provide quick access to values based on their keys. Common operations include retrieving values with the get() method and updating entries using the update() method. Dictionaries are ideal for scenarios requiring fast lookups and efficient data retrieval.
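Here is a minimal sketch of get() and update(); the names and ages are invented sample data.

```python
ages = {"alice": 31, "bob": 27}

alice_age = ages.get("alice")       # value for an existing key
carol_age = ages.get("carol", 0)    # default when the key is missing

# update() changes existing entries and adds new ones in a single call.
ages.update({"bob": 28, "carol": 45})

print(alice_age, carol_age, ages)
```

Using get() with a default avoids the KeyError that ages["carol"] would raise for a missing key.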

Sets

Sets are unordered collections of unique elements, defined using curly braces with at least one element (for example {1, 2}) or the set() function. An empty pair of braces {} creates a dictionary, so an empty set must be written as set(). Sets automatically discard duplicate entries, which ensures that each element is unique. Key operations include union (combining sets) and intersection (finding common elements). Sets are particularly useful for membership testing and eliminating duplicates from collections.
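The set operations described above can be sketched as follows, using arbitrary sample values.

```python
tags = {"python", "data", "python"}   # the duplicate is dropped
empty = set()                         # {} would create an empty dict instead

a = {1, 2, 3}
b = {3, 4}
union = a | b        # elements in either set
common = a & b       # elements in both sets

# Removing duplicates from a list, then sorting for a stable order.
unique_sorted = sorted(set([3, 1, 3, 2, 1]))

print(len(tags), union, common, unique_sorted, 2 in a)
```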

Each of these data structures has distinct characteristics and use cases, enabling Python developers to select the most appropriate structure based on their needs.


Advanced Data Structures

In advanced programming, choosing the right data structure can significantly impact the performance and efficiency of an application. This section explores some essential advanced data structures in Python, their definitions, use cases, and implementations.

Queues

A queue is a linear data structure that follows the First In, First Out (FIFO) principle. Elements are added at one end (the rear) and removed from the other end (the front).

This makes queues ideal for scenarios where you need to manage tasks in the order they arrive, such as task scheduling or handling requests in a server. In Python, you can implement a queue using collections.deque, which provides an efficient way to append and pop elements from both ends.
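A minimal FIFO sketch with collections.deque; the request names are placeholders.

```python
from collections import deque

pending = deque()

# Enqueue at the rear.
pending.append("request-1")
pending.append("request-2")
pending.append("request-3")

# Dequeue from the front: the oldest item comes out first.
first_served = pending.popleft()
second_served = pending.popleft()

print(first_served, second_served, list(pending))
```

popleft() on a deque runs in constant time, whereas list.pop(0) has to shift every remaining element.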

Stacks

Stacks operate on the Last In, First Out (LIFO) principle. This means the last element added is the first one to be removed. Stacks are useful for managing function calls, undo mechanisms in applications, and parsing expressions.

In Python, you can implement a stack using a list, with append() and pop() methods to handle elements. Alternatively, collections.deque can also be used for stack operations, offering efficient append and pop operations.
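As one example of expression parsing with a stack, this sketch checks whether brackets are balanced. The function name is_balanced is just an illustrative choice.

```python
def is_balanced(text):
    """Return True if every bracket in text is closed in the right order."""
    closing_to_opening = {")": "(", "]": "[", "}": "{"}
    stack = []
    for character in text:
        if character in closing_to_opening.values():
            stack.append(character)      # push an opening bracket
        elif character in closing_to_opening:
            # A closing bracket must match the most recent opening one.
            if not stack or stack.pop() != closing_to_opening[character]:
                return False
    return not stack                     # nothing may be left open


print(is_balanced("(a[b]{c})"), is_balanced("(]"), is_balanced("(("))
```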

Linked Lists

A linked list is a data structure consisting of nodes, where each node contains a value and a reference (or link) to the next node in the sequence. Once the position is known, inserting or deleting a node only requires updating a few references, whereas an array has to shift every element that follows.

A singly linked list has nodes with a single reference to the next node. Basic operations include traversing the list, inserting new nodes, and deleting existing ones. While Python does not have a built-in linked list implementation, you can create one using custom classes.
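One possible custom-class implementation of a singly linked list is sketched below. The class and method names (Node, LinkedList, push_front, delete) are illustrative choices, not a standard API.

```python
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next_node = next_node


class LinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        # The new node points at the old head and becomes the new head.
        self.head = Node(value, self.head)

    def delete(self, value):
        # Unlink the first node holding value; return True if one was found.
        previous, current = None, self.head
        while current is not None:
            if current.value == value:
                if previous is None:
                    self.head = current.next_node
                else:
                    previous.next_node = current.next_node
                return True
            previous, current = current, current.next_node
        return False

    def __iter__(self):
        # Traverse from the head, following the links.
        current = self.head
        while current is not None:
            yield current.value
            current = current.next_node


numbers = LinkedList()
for item in (3, 2, 1):
    numbers.push_front(item)
before = list(numbers)
removed = numbers.delete(2)
after = list(numbers)
print(before, removed, after)
```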

Trees

Trees are hierarchical data structures with a root node and child nodes forming a parent-child relationship. They are useful for representing hierarchical data, such as file systems or organizational structures.

Common types include binary trees, where each node has up to two children, and binary search trees, where nodes are arranged in a way that facilitates fast lookups, insertions, and deletions.
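A minimal binary search tree sketch follows. The names TreeNode, insert, contains, and in_order are illustrative, and the tree is not self-balancing.

```python
class TreeNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    # Smaller keys go to the left subtree, larger keys to the right.
    if root is None:
        return TreeNode(key)
    if key < root.key:
        root.left = insert(root.left, key)
    elif key > root.key:
        root.right = insert(root.right, key)
    return root


def contains(root, key):
    # Each comparison discards one whole subtree.
    while root is not None:
        if key == root.key:
            return True
        root = root.left if key < root.key else root.right
    return False


def in_order(root):
    # Left subtree, node, right subtree: yields the keys in sorted order.
    if root is None:
        return []
    return in_order(root.left) + [root.key] + in_order(root.right)


root = None
for key in (8, 3, 10, 1, 6):
    root = insert(root, key)
print(in_order(root), contains(root, 6), contains(root, 7))
```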

Graphs

Graphs consist of nodes (or vertices) connected by edges. They are used to represent relationships between entities, such as social networks or transportation systems. Graphs can be represented using an adjacency matrix or an adjacency list.

The adjacency matrix is a 2D array where each cell indicates the presence or absence of an edge between two nodes, while the adjacency list stores, for each node, the list of nodes it is connected to.
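Both representations can be built from the same edge list. The sketch below uses a small made-up undirected graph.

```python
edges = [("A", "B"), ("A", "C"), ("B", "D")]

nodes = sorted({node for edge in edges for node in edge})
index = {node: position for position, node in enumerate(nodes)}

adjacency_list = {node: [] for node in nodes}
adjacency_matrix = [[0] * len(nodes) for _ in nodes]

for u, v in edges:
    # Undirected graph: record the edge in both directions.
    adjacency_list[u].append(v)
    adjacency_list[v].append(u)
    adjacency_matrix[index[u]][index[v]] = 1
    adjacency_matrix[index[v]][index[u]] = 1

print(adjacency_list)
print(adjacency_matrix)
```

The list form uses memory proportional to the number of edges, while the matrix always needs one cell per pair of nodes but answers "is there an edge?" with a single lookup.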


Choosing the Right Data Structure

Selecting the appropriate data structure is crucial for optimizing performance and ensuring efficient data management. Each data structure has its strengths and is suited to different scenarios. Here’s how to make the right choice:

Factors to Consider

When choosing a data structure, consider performance, complexity, and specific use cases. Performance involves understanding time and space complexity, which impacts how quickly data can be accessed or modified. For example, lists and tuples offer quick access but differ in mutability.

Tuples are immutable and slightly more compact in memory, which can make them marginally cheaper to create and iterate over, while lists allow for dynamic changes.

Use Cases for Data Structures:

Lists are versatile and ideal for ordered collections of items where frequent updates are needed.

Tuples are perfect for fixed collections of items, providing an immutable structure for data that doesn’t change.

Dictionaries excel in scenarios requiring quick lookups and key-value pairs, making them ideal for managing and retrieving data efficiently.

Sets are used when you need to ensure uniqueness and perform operations like intersections and unions efficiently.

Queues and stacks are used for scenarios needing FIFO (First In, First Out) and LIFO (Last In, First Out) operations, respectively.

Choosing the right data structure based on these factors helps streamline operations and enhance program efficiency.


Frequently Asked Questions

What is a data structure in Python?

A data structure in Python is an organizational framework that defines how data is stored, managed, and accessed. Python offers built-in structures like lists, tuples, dictionaries, and sets, each serving different purposes and optimizing performance for various tasks.

Why are data structures important in Python?

Data structures are crucial in Python as they impact how efficiently data is managed and accessed. Choosing the right structure, such as lists for dynamic data or dictionaries for fast lookups, directly affects the performance and efficiency of your code.

What are advanced data structures in Python?

Advanced data structures in Python include queues, stacks, linked lists, trees, and graphs. These structures handle complex data management tasks and improve performance for specific operations, such as managing tasks or representing hierarchical relationships.

Conclusion

Understanding "What is data structure in Python" is essential for effective programming. By mastering Python's data structures, from basic lists and dictionaries to advanced queues and trees, developers can optimize data management, enhance performance, and solve complex problems efficiently.

Selecting the appropriate data structure based on your needs will lead to more efficient and scalable code.

#What is Data Structure in Python?#Data Structure in Python#data structures#data structure in python#python#python frameworks#python programming#data science


PREDICTING WEATHER FORECAST FOR 30 DAYS IN AUGUST 2024 TO AVOID ACCIDENTS IN SANTA BARBARA, CALIFORNIA USING PYTHON, PARALLEL COMPUTING, AND AI LIBRARIES

Introduction

Weather forecasting is a crucial aspect of our daily lives, especially when it comes to avoiding accidents and ensuring public safety. In this article, we will explore how to predict the weather for 30 days in August 2024 in Santa Barbara, California, using Python, parallel computing, and AI libraries. We will also define the technologies involved and explain the code step by step.

Concepts and Definitions

Parallel Computing: Parallel computing is a type of computation where many calculations or processes are carried out simultaneously. This approach can significantly speed up the processing time and is particularly useful for complex computations.

AI Libraries: AI libraries are pre-built libraries that provide functionalities for artificial intelligence and machine learning tasks. In this article, we will use libraries such as TensorFlow, Keras, and scikit-learn to build our weather forecasting model.

Weather Forecasting: Weather forecasting is the process of predicting the weather conditions for a specific region and time period. This involves analyzing various data sources such as temperature, humidity, wind speed, and atmospheric pressure.

Code Explanation

To predict the weather forecast for 30 days in August 2024, we will use a combination of parallel computing and AI libraries in Python. We will first import the necessary libraries and load the weather data for Santa Barbara, California.

import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from joblib import Parallel, delayed

# Load weather data for Santa Barbara, California
weather_data = pd.read_csv('Santa Barbara California_weather_data.csv')

Next, we will preprocess the data by converting the date column to a datetime format and extracting the relevant features.

# Preprocess data
weather_data['date'] = pd.to_datetime(weather_data['date'])
weather_data['month'] = weather_data['date'].dt.month
weather_data['day'] = weather_data['date'].dt.day
weather_data['hour'] = weather_data['date'].dt.hour

# Extract relevant features
X = weather_data[['month', 'day', 'hour', 'temperature', 'humidity', 'wind_speed']]
y = weather_data['weather_condition']

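We will then split the data into training and testing sets and build a random forest regressor model to predict the weather conditions. A regressor needs a numeric target, so this assumes weather_condition is stored as a numeric severity score; if it holds text labels, RandomForestClassifier is the appropriate model instead.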

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Build random forest regressor model
rf_model = RandomForestRegressor(n_estimators=100, random_state=42)
rf_model.fit(X_train, y_train)

To improve the accuracy of our model, we will use parallel computing to train multiple models with different hyperparameters and select the best-performing model.

# Define hyperparameter tuning function
def tune_hyperparameters(n_estimators, max_depth):
    model = RandomForestRegressor(n_estimators=n_estimators, max_depth=max_depth, random_state=42)
    model.fit(X_train, y_train)
    # Return the fitted model with its score so the best model itself can be kept
    return model.score(X_test, y_test), model

# Use parallel computing to tune hyperparameters
results = Parallel(n_jobs=-1)(
    delayed(tune_hyperparameters)(n_estimators, max_depth)
    for n_estimators in [100, 200, 300]
    for max_depth in [None, 5, 10]
)

# Select best-performing model
best_model = rf_model
best_score = rf_model.score(X_test, y_test)
for score, model in results:
    if score > best_score:
        best_model = model
        best_score = score

Finally, we will use the best-performing model to predict the weather conditions for 30 days in August 2024.

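# Predict weather conditions for 30 days in August 2024
future_dates = pd.date_range(start='2024-08-01', end='2024-08-30')
future_data = pd.DataFrame({'month': future_dates.month, 'day': future_dates.day, 'hour': future_dates.hour})
# The model expects all six training features; as a placeholder assumption,
# the weather columns are filled with the historical August averages
august = weather_data[weather_data['month'] == 8]
for column in ['temperature', 'humidity', 'wind_speed']:
    future_data[column] = august[column].mean()
future_data['weather_condition'] = best_model.predict(future_data)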

Color Alerts

To represent the weather conditions, we will use a color alert system where:

Red represents severe weather conditions (e.g., heavy rain, strong winds)

Orange represents very bad weather conditions (e.g., thunderstorms, hail)

Yellow represents bad weather conditions (e.g., light rain, moderate winds)

Green represents good weather conditions (e.g., clear skies, calm winds)

We can use the following code to generate the color alerts:

# Define color alert function
def color_alert(weather_condition):
    if weather_condition == 'severe':
        return 'Red'
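The function above stops after the first case. A minimal sketch of the full four-colour mapping could look like this, assuming the condition labels are the strings 'severe', 'very bad', 'bad', and 'good' (only 'severe' appears in the code above; the other labels and the name color_for_condition are assumptions based on the list of alert levels).

```python
# Assumed labels: only 'severe' appears in the original code.
ALERT_COLORS = {
    "severe": "Red",
    "very bad": "Orange",
    "bad": "Yellow",
    "good": "Green",
}


def color_for_condition(weather_condition):
    # Unknown labels are reported explicitly instead of silently mapped.
    return ALERT_COLORS.get(weather_condition, "Unknown")


alerts = [color_for_condition(label) for label in ("severe", "bad", "good", "foggy")]
print(alerts)
```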

MY SECOND CODE SOLUTION PROPOSAL

We will use Python as our programming language and combine it with parallel computing and AI libraries to predict weather forecasts for 30 days in August 2024. We will use the following libraries:

OpenWeatherMap API: A popular API for retrieving weather data.

Scikit-learn: A machine learning library for building predictive models.

Dask: A parallel computing library for processing large datasets.

Matplotlib: A plotting library for visualizing data.

Here is the code:

```python
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
import dask.dataframe as dd
import matplotlib.pyplot as plt
import requests

# Load weather data from OpenWeatherMap API (5-day / 3-hour forecast endpoint)
url = "https://api.openweathermap.org/data/2.5/forecast"
params = {"q": "Santa Barbara,CA,US", "units": "metric", "appid": "YOUR_API_KEY"}
response = requests.get(url, params=params, timeout=30)
response.raise_for_status()

# The forecast entries are stored under the "list" key; flatten them into columns
raw = pd.json_normalize(response.json()["list"])
# Assumption: the numeric "weather" target is not defined in this article, so the
# probability of precipitation ("pop", 0-1) scaled to 0-100 stands in for it
weather_data = pd.DataFrame({
    "temperature": raw["main.temp"],
    "humidity": raw["main.humidity"],
    "weather": raw["pop"] * 100,
})

# Convert data to Dask DataFrame
weather_df = dd.from_pandas(weather_data, npartitions=4)

# Train the random forest regressor once; scikit-learn needs in-memory data,
# so the Dask DataFrame is materialized with .compute()
train_df = weather_df.compute()
model = RandomForestRegressor(n_estimators=100, random_state=42)
model.fit(train_df[["temperature", "humidity"]], train_df["weather"])

# Define a function to predict weather forecasts
def predict_weather(date, temperature, humidity):
    # date only labels the result; the model uses temperature and humidity
    features = pd.DataFrame({"temperature": [temperature], "humidity": [humidity]})
    return float(model.predict(features)[0])

# Define a function to generate color-coded alerts
def generate_alerts(prediction):
    if prediction > 80:
        return "RED"  # Severe weather condition
    elif prediction > 60:
        return "ORANGE"  # Very bad weather condition
    elif prediction > 40:
        return "YELLOW"  # Bad weather condition
    else:
        return "GREEN"  # Good weather condition

# Predict weather forecasts for 30 days in August 2024
# Note: every day uses the same average inputs, so the predictions are identical
mean_temperature = weather_df["temperature"].mean().compute()
mean_humidity = weather_df["humidity"].mean().compute()
predictions = []
for i in range(30):
    date = f"2024-08-{i + 1:02d}"
    prediction = predict_weather(date, mean_temperature, mean_humidity)
    alerts = generate_alerts(prediction)
    predictions.append((date, prediction, alerts))

# Visualize predictions using Matplotlib
plt.figure(figsize=(12, 6))
plt.plot([x[0] for x in predictions], [x[1] for x in predictions], marker="o")
plt.xlabel("Date")
plt.ylabel("Weather Prediction")
plt.title("Weather Forecast for 30 Days in August 2024")
plt.xticks(rotation=45)
plt.tight_layout()
plt.show()
```

Explanation:

1. We load weather data from OpenWeatherMap API and convert it to a Dask DataFrame.

2. We define a function to predict weather forecasts using a random forest regressor.

3. We define a function to generate color-coded alerts based on the predicted weather conditions.

4. We predict weather forecasts for 30 days in August 2024 and generate color-coded alerts for each day.

5. We visualize the predictions using Matplotlib.

Conclusion:

In this article, we have demonstrated how parallel computing and AI libraries can be used to predict the weather for 30 days in August 2024, specifically for Santa Barbara, California. We used TensorFlow, Keras, and scikit-learn in the first code and the OpenWeatherMap API, scikit-learn, Dask, and Matplotlib in the second code to build a weather forecasting system. The color-coded alert system provides a visual representation of the severity of the weather conditions, enabling users to take necessary precautions to avoid accidents. This technology has the potential to improve weather forecasting by providing timely predictions that support public safety.

RDIDINI PROMPT ENGINEER


Python: What is it all about?

Python is a high-level, interpreted programming language.

A programming language is a formal language that is used to create instructions that can be executed by a computer. Programming languages are used to develop a wide range of software applications, from simple scripts to complex operating systems.

It is widely used for a variety of applications.

What is Python?

Python is a dynamic, object-oriented programming language.

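A dynamically typed programming language is one in which the type of a variable is not known until the script is run, and the same variable can hold values of different types over time. This is in contrast to static typing, in which the type of a variable is fixed before the program runs, usually because it is declared explicitly.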
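A short sketch of what this means in practice; the variable and function names are only for illustration.

```python
value = 42
first_type = type(value).__name__     # the name is bound to an int

value = "forty-two"
second_type = type(value).__name__    # the same name is now bound to a str


def double(x):
    # No declared parameter type: any object that supports * 2 works.
    return x * 2


print(first_type, second_type, double(21), double("ab"))
```

The type belongs to the value rather than to the variable name, so it is only checked when an operation is actually executed.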

It is an interpreted language: the reference implementation, CPython, compiles source code to bytecode and runs it on a virtual machine, rather than compiling it ahead of time into machine code like some other languages. There is no separate build step, which makes it easy to develop and test Python programs quickly.

Python is also a general-purpose language, meaning that it can be used for a wide range of tasks. It comes with a comprehensive standard library that provides modules for common tasks such as file handling, networking, and data manipulation. This makes it easy to get started with Python and to develop complex applications without having to write a lot of code from scratch.

Origin of Python

Python was created by Guido van Rossum in the late 1980s as a successor to the ABC programming language. ABC was a simple, interpreted language that was designed for teaching programming concepts. However, van Rossum felt that ABC was too limited, and he wanted to create a more powerful and versatile language.

Python was influenced by a number of other programming languages, including C, Modula-3, and Lisp. Van Rossum wanted to create a language that was simple and easy to learn, but also powerful enough to be used for a variety of applications. He also wanted to create a language that was portable across different platforms.

Python was first released in 1991, and it quickly gained popularity as a teaching language and for scripting tasks. In the late 1990s and early 2000s, Python began to be used for more complex applications, such as web development and data science. Today, Python is one of the most popular programming languages in the world, and it is used for a wide variety of applications.

The name "Python" is a reference to the British comedy group Monty Python. Van Rossum was a fan of the group, and he thought that the name "Python" was appropriate for his new language because it was "short, unique, and slightly mysterious."

How is Python Used?

Python is used in a wide variety of applications, including:

Web development: Python is a popular choice for web development, thanks to its simplicity and the availability of powerful frameworks such as Django, Flask, and FastAPI.

Data science: Python is widely used for data science, thanks to its extensive data analysis and visualization libraries such as NumPy, pandas, Dask, Matplotlib, Plotly, seaborn, and Altair.

Machine learning: Python is a popular choice for machine learning, thanks to its support for a wide range of machine learning algorithms and libraries such as scikit-learn, TensorFlow, Keras, transformers, PyTorch.

Scripting: Python is often used for automating repetitive tasks or creating custom tools.

Advantages

Python offers a number of advantages over other programming languages, including:

Simplicity: Python is a relatively simple language to learn and use, making it a good choice for beginners.

Readability: Python code is known for its readability, making it easy to understand and maintain.

Extensibility: Python is highly extensible, thanks to its large community of developers and the availability of numerous libraries and frameworks.

Portability: Python is a cross-platform language, meaning that it can be run on a variety of operating systems without modification.

Drawbacks

Despite all of its advantages, Python also has several drawbacks.

Speed: Python is an interpreted language, which means that it is generally slower than compiled languages such as C++ and Java for CPU-heavy work. This can be a disadvantage for applications that require high performance.

Memory usage: Python programs can use a lot of memory, especially when working with large datasets. This can be a disadvantage for applications that need to run on devices with limited memory.

Lack of type checking: Python is a dynamically typed language, which means that it does not check the types of variables at compile time. This can lead to errors that are difficult to find and debug.

Global interpreter lock (GIL): In standard CPython builds, the GIL is a lock that allows only one thread at a time to execute Python bytecode. This can be a disadvantage for CPU-bound applications that need to use multiple cores or processors; such programs usually rely on multiple processes instead.


AvatoAI Review: Unleashing the Power of AI in One Dashboard

Here's what AvatoAI can do for you:

Data Analysis:

Analyze CSV, Excel, or JSON files using Python and libraries like pandas or matplotlib.

Clean data, calculate statistical information and visualize data through charts or plots.

Document Processing:

Extract and manipulate text from text files or PDFs.

Perform tasks such as searching for specific strings, replacing content, and converting text to different formats.

Image Processing:

Upload image files for manipulation using libraries like OpenCV.

Perform operations like converting images to grayscale, resizing, and detecting shapes or

Machine Learning:

Utilize Python's machine learning libraries for predictions, clustering, natural language processing, and image recognition by uploading

Versatile & Broad Use Cases:

An incredibly diverse range of applications. From creating inspirational art to modeling scientific scenarios, to designing novel game elements, and more.

User-Friendly API Interface:

Access and control the power of this advanced AI technology through a user-friendly API.

Even if you're not a machine learning expert, using the API is easy and quick.

Customizable Outputs:

Lets you create custom visual content by inputting a simple text prompt.

The AI will generate an image based on your provided description, enhancing the creativity and efficiency of your work.

Stable Diffusion API:

Enrich Your Image Generation to Unprecedented Heights.

Stable diffusion API provides a fine balance of quality and speed for the diffusion process, ensuring faster and more reliable results.

Multi-Lingual Support:

Generate captivating visuals based on prompts in multiple languages.

Set the panorama parameter to 'yes' and watch as our API stitches together images to create breathtaking wide-angle views.

Variation for Creative Freedom:

Embrace creative diversity with the Variation parameter. Introduce controlled randomness to your generated images, allowing for a spectrum of unique outputs.

Efficient Image Analysis:

Save time and resources with automated image analysis. The feature allows the AI to sift through bulk volumes of images and sort out vital details or tags that are valuable to your context.

Advanced Recognition:

The Vision API integration recognizes prominent elements in images - objects, faces, text, and even emotions or actions.

Interactive "Image within Chat" Feature:

Say goodbye to going back and forth between screens and focus only on productive tasks.

Here's what you can do with it:

Visualize Data:

Create colorful, informative, and accessible graphs and charts from your data right within the chat.

Interpret complex data with visual aids, making data analysis a breeze!

Manipulate Images:

Want to demonstrate the raw power of image manipulation? Upload an image, and watch as our AI performs transformations, like resizing, filtering, rotating, and much more, live in the chat.

Generate Visual Content:

Creating and viewing visual content has never been easier. Generate images, simple or complex, right within your conversation

Preview Data Transformation:

If you're working with image data, you can demonstrate live how certain transformations or operations will change your images.

This can be particularly useful for fields like data augmentation in machine learning or image editing in digital graphics.

Effortless Communication:

Say goodbye to static text as our innovative technology crafts natural-sounding voices. Choose from a variety of male and female voice types to tailor the auditory experience, adding a dynamic layer to your content and making communication more effortless and enjoyable.

Enhanced Accessibility:

Break barriers and reach a wider audience. Our Text-to-Speech feature enhances accessibility by converting written content into audio, ensuring inclusivity and understanding for all users.

Customization Options:

Tailor the audio output to suit your brand or project needs.

From tone and pitch to language preferences, our Text-to-Speech feature offers customizable options for the truest personalized experience.

#digital marketing#Avato AI Review#Avato AI#AvatoAI#ChatGPT#Bing AI#AI Video Creation#Make Money Online#Affiliate Marketing

Day 16: Object Oriented Programming (OOP)

Today, I learned how to use Object Oriented Programming (OOP) in Python. Up until now, I had been learning something called procedural programming, where the code runs through the main function step by step and keeps its data in standalone variables. This is one of the earliest ways of writing code. It works really well for languages like Fortran. However, once the code gets complex enough, this method can get tangled and hard to read.

With OOP, instead of defining every piece of information as a variable, you can create a lump of information called an "object". For example, rather than having a different variable for each part of a car, you could just have one object, car1, which has all these variables already attached to it and defined. Also, this lets you easily create multiple different iterations of the same type of object, rather than painstakingly define each data set individually.

I was able to retry the coffee machine project again, with OOP in mind, and this setup made the project much easier! :)

(weird cropping so I don't accidentally flash my computer file address) Here is a pic of what the finished coffee simulator comes out with! See you tomorrow! :)

More specifics on OOP under the cut --

The things attached to an object are split into two types: attributes (what an object HAS, its variables) and methods (what an object can DO, its functions). In other words, a function that belongs to an object is called a 'method'.

A 'class' is the type of object which is generated. For example, if we generated a bunch of waiters, the class would be 'waiter' and the object would be, for example, 'kevin the waiter'. The class is the "blueprint" of an object.

To define an object, you would use the following syntax:

car = CarBlueprint(speed,gas,color)

where car is the object, CarBlueprint is the class, and speed, gas and color are all values which can be different between different cars. Note that the first letter of each word in the class name is capitalized as a matter of convention (PascalCase), not because the syntax requires it.

If you want to access a variable or method of an object, use the same dot structure as with referring to modules: car.speed, car.stop(), etc.
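A minimal sketch of how such a class could be written and used. The post only shows the call CarBlueprint(speed, gas, color) and the names car.speed and car.stop(); the class body, the sample values, and the assumption that stop() sets the speed to zero are illustrative, not taken from the lesson.

```python
class CarBlueprint:
    """The class is the blueprint; each car built from it is an object."""

    def __init__(self, speed, gas, color):
        # Attributes: what a car HAS.
        self.speed = speed
        self.gas = gas
        self.color = color

    def stop(self):
        # Method: what a car can DO. Assumed behaviour: stopping zeroes the speed.
        self.speed = 0


# Two separate objects built from the same blueprint (values are made up).
car1 = CarBlueprint(speed=60, gas=40, color="red")
car2 = CarBlueprint(speed=30, gas=10, color="blue")

print(car1.speed)              # attribute access with dot notation
car1.stop()                    # method call with dot notation
print(car1.speed, car2.speed)  # stopping car1 leaves car2 unchanged
```

Each object keeps its own copy of the attributes, which is why creating a second car needs only one more line instead of a new set of variables.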

I also learned about a Python module called turtle (Turtle Graphics), which has a turtle object inside that can be told to paint a line on the screen in a variety of different colors. Part of the lesson today was learning how to read code documentation, so I could figure out how my turtle worked. The turtle module ships with Python's standard library, and you can find a TON more on PyPI.org, the Python Package Index, which has lots of useful predefined packages that other people have created for public use.

To install packages from PyPI, in PyCharm: file -> settings -> projectName -> Python Interpreter -> plus sign on the upper left, and then enter the name of whatever package you want in the search bar -> Install Package on the lower left.

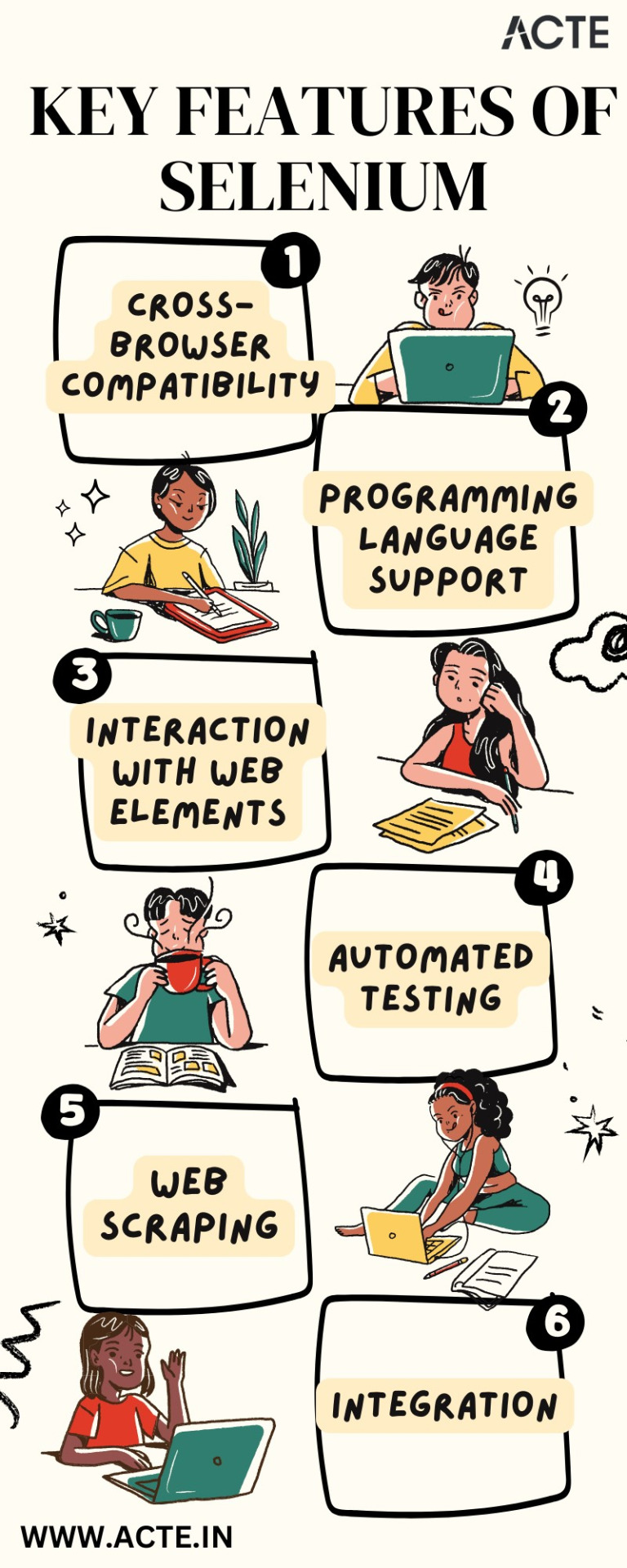

The Trendy Path to Web Automation: Selenium's Effortless Approach

In the vast expanse of the digital landscape, automation has emerged as the North Star, guiding us toward efficiency, precision, and productivity. It's in this realm of automated wonders that Selenium, a powerful open-source framework, takes center stage. Selenium isn't just a tool; it's a beacon of possibilities, a bridge between human intent and machine execution. As we embark on this journey, we'll dive deep into the multifaceted world of Selenium, exploring its key role in automating web browsers and unleashing its full potential across various domains.

Selenium: The Backbone of Web Automation

Selenium is not just a tool in your toolkit; it's the backbone that supports your web automation aspirations. It empowers a diverse community of developers, testers, and data enthusiasts to navigate the complex web of digital interactions with precision and finesse. It's more than lines of code; it's the key to unlocking a world where repetitive tasks melt away, and possibilities multiply.

1. Cross-Browser Compatibility: Bridging the Browser Divide

One of Selenium's defining strengths is its cross-browser compatibility. It extends a welcoming hand to an array of web browsers, from the familiarity of Chrome to the reliability of Firefox, the edge of Edge, and beyond. With Selenium as your ally, you can be assured that your web automation scripts will seamlessly traverse the digital landscape, transcending the vexing barriers of browser compatibility.

2. Programming Language Support: Versatility Unleashed

Selenium's versatility is the cornerstone of its appeal. It doesn't tie you down to a specific programming language; instead, it opens a world of possibilities. Whether you're fluent in the elegance of Java, the simplicity of Python, the resilience of C#, the agility of Ruby, or others, Selenium stands ready to complement your expertise.

3. Interaction with Web Elements: Crafting User Experiences

Web applications are complex ecosystems, abundant with buttons, text fields, dropdown menus, and a myriad of interactive elements. Selenium's prowess shines as it empowers you to interact with these web elements as if you were sitting in front of your screen, performing actions like clicking, typing, and scrolling with surgical precision. It's the tool you need to craft seamless user experiences through automation.

4. Automated Testing: Elevating Quality Assurance

In the realm of quality assurance, Selenium assumes the role of a vigilant guardian. Its automated testing capabilities are a testament to its commitment to quality. As a trusted ally, it carefully examines web applications, identifying issues, pinpointing regressions, and uncovering functional anomalies during the development phase. With Selenium by your side, you ensure the delivery of software that stands as a benchmark of quality and reliability.

5. Web Scraping: Harvesting Insights from the Digital Terrain

In the era of data-driven decision-making, web scraping is a strategic endeavor, and Selenium is your trusty companion. It equips you with the tools to extract data from websites, scrape valuable information, and store it for in-depth analysis or integration into other applications. With Selenium's data harvesting capabilities, you transform the digital terrain into a fertile ground for insights and innovation.

6. Integration: The Agile Ally

Selenium is not an isolated entity; it thrives in collaboration. Seamlessly integrating with an expansive array of testing frameworks and continuous integration (CI) tools, it becomes an agile ally in your software development lifecycle. It streamlines testing and validation processes, reducing manual effort, and fostering a cohesive development environment.

In conclusion, Selenium is not just a tool; it's the guiding light that empowers developers, testers, and data enthusiasts to navigate the complex realm of web automation. Its adaptability, cross-browser compatibility, and support for multiple programming languages have solidified its position as a cornerstone of modern web development and quality assurance.

Yet, Selenium is merely one part of your journey in the realm of technology. In a world that prizes continuous learning and professional growth, ACTE Technologies emerges as your trusted partner. Whether you're embarking on a new career, upskilling, or staying ahead in your field, ACTE Technologies offers tailored solutions and world-class resources.

Your journey to success commences here, where your potential knows no bounds. Welcome to a future filled with endless opportunities, fueled by Selenium and guided by ACTE Technologies. As you navigate this web automation odyssey, remember that the path ahead is illuminated by your curiosity, determination, and the unwavering support of your trusted partners.

What is array_diff() Function in PHP and How to Use.

Introduction

array_diff — Computes the difference of arrays

Supported Versions: — (PHP 4 >= 4.0.1, PHP 5, PHP 7, PHP 8)

In today’s blog, we are going to discuss the array_diff() function in PHP. When working with arrays in PHP, developers often need to compare arrays and find the differences between them. This is where the array_diff() function comes to the rescue. In this guide, we will look at the array_diff() function's syntax, functionality, and usage with examples.

Understanding the array_diff() Function:

When working with arrays in PHP, the array_diff function emerges as a powerful tool for array comparison and manipulation. array_diff function enables developers to identify the disparities between arrays effortlessly, facilitating streamlined data processing and analysis.

The array_diff function allows you to compare arrays, pinpointing differences across elements while efficiently managing array operations. By leveraging this function, developers can identify unique values present in one array but absent in another, paving the way for comprehensive data management and validation.

One remarkable feature of array_diff is its ability to perform comparisons based on the string representation of elements. For instance, values like 1 and ‘1’ are considered equivalent during the comparison process. This flexibility empowers developers to handle diverse data types seamlessly.

Moreover, array_diff simplifies array comparisons regardless of element repetition. Whether an element is repeated several times in one array or occurs only once in another, the function ensures accurate differentiation, contributing to consistent and reliable results.

For more intricate data structures, such as multi-dimensional arrays, array_diff needs more care: it checks only one dimension of an n-dimensional array. To compare nested data, call it on the inner arrays directly (for example, array_diff($array1[0], $array2[0])) or use array_udiff() with a custom comparison callback.

Incorporating the array_diff function into your PHP arsenal enhances your array management capabilities, streamlining the identification of differences and enabling efficient data manipulation. By seamlessly integrating array_diff into your codebase, you unlock a world of possibilities for effective array handling and optimization.

The array_diff function in PHP is a powerful tool that allows developers to compare two or more arrays and return the values that exist in the first array but not in the subsequent arrays. It effectively finds the differences between arrays, making it an essential function for tasks like data validation, data synchronization, and more.

Note

Version 8.0.0: This function can now be called with only one parameter. Formerly, at least two parameters were required.

Source: https://www.php.net/

Syntax:

array_diff(array $array1, array $array2 [, array $... ])

Parameters:

array1: The base array for comparison.

array2: The array to compare against array1.

…: Additional arrays to compare against array1.

Example 1: Basic Usage:

$array1 = [1, 2, 3, 4, 5];
$array2 = [3, 4, 5, 6, 7];
$differences = array_diff($array1, $array2);
print_r($differences);

Output

Array ( [0] => 1 [1] => 2 )

Example 2: Associative Arrays:

$fruits1 = ["apple" => 1, "banana" => 2, "orange" => 3];
$fruits2 = ["banana" => 2, "kiwi" => 4, "orange" => 3];
// Note: this uses array_diff_assoc(), which compares keys as well as values
$differences = array_diff_assoc($fruits1, $fruits2);
print_r($differences);

Output

Array ( [apple] => 1 )

Example 3: Multi-dimensional Arrays:

$books1 = [
    ["title" => "PHP Basics", "author" => "John Doe"],
    ["title" => "JavaScript Mastery", "author" => "Jane Smith"],
];
$books2 = [
    ["title" => "PHP Basics", "author" => "John Doe"],
    ["title" => "Python Fundamentals", "author" => "Michael Johnson"],
];
// Note: this uses array_udiff() with a callback; two books match when their titles are equal
$differences = array_udiff($books1, $books2, function ($a, $b) {
    return strcmp($a["title"], $b["title"]);
});
print_r($differences);

Output

Array ( [1] => Array ( [title] => JavaScript Mastery [author] => Jane Smith ) )

Important Points

It performs a comparison based on the string representation of elements. In other words, both 1 and ‘1’ are considered equal when using the array_diff function.

The frequency of element repetition in the initial array is not a determining factor. For instance, if an element appears 3 times in $array1 but only once in other arrays, all 3 occurrences of that element in the first array will be excluded from the output.

In the case of multi-dimensional arrays, array_diff checks only one dimension, so each deeper level has to be compared separately. For instance, to compare the nested arrays at index 2, call array_diff($array1[2], $array2[2]).

Conclusion

The array_diff() function in PHP proves to be an invaluable tool for comparing arrays and extracting their differences. It works directly on one-dimensional arrays, and relatives such as array_diff_assoc() and array_udiff() cover key-aware and custom comparisons. By understanding its syntax and exploring the examples above, developers can use array_diff() to streamline their array manipulation tasks and ensure data accuracy. Incorporating this function into your PHP toolkit can enhance your coding efficiency and productivity.

Remember, mastering the array_diff() function is just the beginning of your journey into PHP’s array manipulation capabilities. With this knowledge, you’re better equipped to tackle diverse programming challenges and create more robust and efficient applications.

Software Development: Essential Terms for Beginners to Know

Here are some essential terms related to software development that beginners, including software developers in India, should know:

Algorithm: A step-by-step set of instructions to solve a specific problem or perform a task, often used in programming and data processing.

Code: The written instructions in a programming language that computers can understand and execute.

Programming Language: A formal language used to write computer programs, like Python, Java, C++, etc.

IDE (Integrated Development Environment): A software suite that combines code editor, debugger, and compiler tools to streamline the software development process.

Version Control: The management of changes to source code over time, allowing multiple developers to collaborate on a project and reconcile conflicting changes.

Git: A popular distributed version control system used to track changes in source code during software development.

Repository: A storage location for version-controlled source code and related files, often hosted on platforms like GitHub or GitLab.

Debugging: The process of identifying and fixing errors or bugs in software code.

API (Application Programming Interface): A set of protocols and tools for building software applications. It specifies how different software components should interact.

Framework: A pre-built set of tools, libraries, and conventions that simplifies the development of specific types of software applications.

Database: A structured collection of data that can be accessed, managed, and updated. Examples include MySQL, PostgreSQL, and MongoDB.

Frontend: The user-facing part of a software application, typically involving the user interface (UI) and user experience (UX) design.

Backend: The server-side part of a software application that handles data processing, database interactions, and business logic.

API Endpoint: A specific URL where an API can be accessed, allowing applications to communicate with each other.

Deployment: The process of making a software application available for use, typically on a server or a cloud platform.

DevOps (Development and Operations): A set of practices that aim to automate and integrate the processes of software development and IT operations.

Agile: A project management and development approach that emphasizes iterative and collaborative work, adapting to changes throughout the development cycle.

Scrum: An Agile framework that divides work into time-boxed iterations called sprints and emphasizes collaboration and adaptability.

User Story: A simple description of a feature from the user's perspective, often used in Agile methodologies.

Continuous Integration (CI) / Continuous Deployment (CD): Practices that involve automatically integrating code changes and deploying new versions of software frequently and reliably.

Sprint: A fixed time period (usually 1-4 weeks) in Agile development during which a specific set of tasks or features are worked on.

Algorithm Complexity: The measurement of how much time or memory an algorithm requires to solve a problem based on its input size.

Full Stack Developer: A developer who is proficient in both frontend and backend development.

Responsive Design: Designing software interfaces that adapt and display well on various screen sizes and devices.

Open Source: Software that is made available with its source code, allowing anyone to view, modify, and distribute it.
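To make the Algorithm Complexity entry above a little more concrete, here is a small sketch that counts how many steps two search strategies take as the input grows. The function names and the choice of linear versus binary search are illustrative assumptions, not part of the glossary.

```python
def linear_search_steps(items, target):
    """Count the comparisons made by a left-to-right scan."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count the probes binary search makes on a sorted list."""
    steps = 0
    low, high = 0, len(items) - 1
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return steps


# Search for the last element, the worst case for the linear scan.
for size in (10, 1_000, 100_000):
    data = list(range(size))
    target = size - 1
    print(size, linear_search_steps(data, target), binary_search_steps(data, target))
```

The linear scan's step count grows in proportion to the input size, while binary search's grows only with its logarithm; that difference in growth is what complexity measures.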

These terms provide a foundational understanding of software development concepts for beginners, including software developers in India.

#software app#software development#software developers#software development in India#Indian software developers

Learning About Different Types of Functions in R Programming

Summary: Learn about the different types of functions in R programming, including built-in, user-defined, anonymous, recursive, S3, S4 methods, and higher-order functions. Understand their roles and best practices for efficient coding.

Introduction

Functions in R programming are fundamental building blocks that streamline code and enhance efficiency. They allow you to encapsulate code into reusable chunks, making your scripts more organised and manageable.

Understanding the various types of functions in R programming is crucial for leveraging their full potential, whether you're using built-in, user-defined, or advanced methods like recursive or higher-order functions.

This article aims to provide a comprehensive overview of these different types, their uses, and best practices for implementing them effectively. By the end, you'll have a solid grasp of how to utilise these functions to optimise your R programming projects.

What is a Function in R?

In R programming, a function is a reusable block of code designed to perform a specific task. Functions help organise and modularise code, making it more efficient and easier to manage.

By encapsulating a sequence of operations into a function, you can avoid redundancy, improve readability, and facilitate code maintenance. Functions take inputs, process them, and return outputs, allowing for complex operations to be performed with a simple call.

Basic Structure of a Function in R

The basic structure of a function in R includes several key components:

Function Name: A unique identifier for the function.

Parameters: Variables listed in the function definition that act as placeholders for the values (arguments) the function will receive.

Body: The block of code that executes when the function is called. It contains the operations and logic to process the inputs.

Return Statement: Specifies the output value of the function. If omitted, R returns the result of the last evaluated expression by default.

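Here's the general syntax for defining a function in R:

function_name <- function(parameter1, parameter2) {
  # body: operations on the parameters
  return(value)
}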

Syntax and Example of a Simple Function

Consider a simple function that calculates the square of a number. This function takes one argument, processes it, and returns the squared value:

square_number <- function(x) {
  result <- x^2
  return(result)
}

In this example:

square_number is the function name.

x is the parameter, representing the input value.

The body of the function calculates x^2 and stores it in the variable result.

The return(result) statement provides the output of the function.

You can call this function with an argument, like so:

square_number(4)  # returns 16

This function is a simple yet effective example of how you can leverage functions in R to perform specific tasks efficiently.

Types of Functions in R

In R programming, functions are essential building blocks that allow users to perform operations efficiently and effectively. Understanding the various types of functions available in R helps in leveraging the full power of the language.

This section explores different types of functions in R, including built-in functions, user-defined functions, anonymous functions, recursive functions, S3 and S4 methods, and higher-order functions.

Built-in Functions

R provides a rich set of built-in functions that cater to a wide range of tasks. These functions are pre-defined and come with R, eliminating the need for users to write code for common operations.

Examples include mathematical functions like mean(), median(), and sum(), which perform statistical calculations. For instance, mean(x) calculates the average of numeric values in vector x, while sum(x) returns the total sum of the elements in x.

These functions are highly optimised and offer a quick way to perform standard operations. Users can rely on built-in functions for tasks such as data manipulation, statistical analysis, and basic operations without having to reinvent the wheel. The extensive library of built-in functions streamlines coding and enhances productivity.

User-Defined Functions

User-defined functions are custom functions created by users to address specific needs that built-in functions may not cover. Creating user-defined functions allows for flexibility and reusability in code. To define a function, use the function keyword. The syntax for creating a user-defined function is as follows:

my_function <- function(arg1, arg2) {
  result <- arg1 + arg2
  return(result)
}

In this example, my_function takes two arguments, arg1 and arg2, adds them, and returns the result. User-defined functions are particularly useful for encapsulating repetitive tasks or complex operations that require custom logic. They help in making code modular, easier to maintain, and more readable.

Anonymous Functions

Anonymous functions, also known as lambda functions, are functions without a name. They are often used for short, throwaway tasks where defining a full function might be unnecessary. In R, anonymous functions are created using the function keyword without assigning them to a variable. Here is an example:

sapply(1:5, function(x) x^2)

In this example, sapply() applies the anonymous function function(x) x^2 to each element in the vector 1:5. The result is a vector containing the squares of the numbers from 1 to 5.

Anonymous functions are useful for concise operations and can be utilised in functions like apply(), lapply(), and sapply() where temporary, one-off computations are needed.

Recursive Functions

Recursive functions are functions that call themselves in order to solve a problem. They are particularly useful for tasks that can be divided into smaller, similar sub-tasks. For example, calculating the factorial of a number can be accomplished using recursion. The following code demonstrates a recursive function for computing factorial:

factorial <- function(n) {
  if (n == 1) {
    return(1)  # base case
  }
  return(n * factorial(n - 1))
}

Here, the factorial() function calls itself with n - 1 until it reaches the base case where n equals 1. Recursive functions can simplify complex problems but may also lead to performance issues if not implemented carefully. They require a clear base case to prevent infinite recursion and potential stack overflow errors.

S3 and S4 Methods

R supports object-oriented programming through the S3 and S4 systems, each offering different approaches to object-oriented design.

S3 Methods: S3 is a more informal and flexible system. Functions in S3 are used to define methods for different classes of objects. For instance:

In this example, print.my_class is a method that prints a custom message for objects of class my_class. S3 methods provide a simple way to extend functionality for different object types.

S4 Methods: S4 is a more formal and rigorous system with strict class definitions and method dispatch. It allows for detailed control over method behaviors. For example:

Here, setClass() defines a class with a numeric slot, and setMethod() defines a method for displaying objects of this class. S4 methods offer enhanced functionality and robustness, making them suitable for complex applications requiring precise object-oriented programming.

Higher-Order Functions

Higher-order functions are functions that take other functions as arguments or return functions as results. These functions enable functional programming techniques and can lead to concise and expressive code. Examples include apply(), lapply(), and sapply().

apply(): Used to apply a function to the rows or columns of a matrix.

lapply(): Applies a function to each element of a list and returns a list.

sapply(): Similar to lapply(), but returns a simplified result.

Higher-order functions enhance code readability and efficiency by abstracting repetitive tasks and leveraging functional programming paradigms.

Best Practices for Writing Functions in R

Writing efficient and readable functions in R is crucial for maintaining clean and effective code. By following best practices, you can ensure that your functions are not only functional but also easy to understand and maintain. Here are some key tips and common pitfalls to avoid.

Tips for Writing Efficient and Readable Functions

Keep Functions Focused: Design functions to perform a single task or operation. This makes your code more modular and easier to test. For example, instead of creating a function that processes data and generates a report, split it into separate functions for processing and reporting.

Use Descriptive Names: Choose function names that clearly indicate their purpose. For instance, use calculate_mean() rather than calc() to convey the function’s role more explicitly.

Avoid Hardcoding Values: Use parameters instead of hardcoded values within functions. This makes your functions more flexible and reusable. For example, instead of using a fixed threshold value within a function, pass it as a parameter.

Common Mistakes to Avoid

Overcomplicating Functions: Avoid writing overly complex functions. If a function becomes too long or convoluted, break it down into smaller, more manageable pieces. Complex functions can be harder to debug and understand.

Neglecting Error Handling: Failing to include error handling can lead to unexpected issues during function execution. Implement checks to handle invalid inputs or edge cases gracefully.

Ignoring Code Consistency: Consistency in coding style helps maintain readability. Follow a consistent format for indentation, naming conventions, and comment style.

Best Practices for Function Documentation

Document Function Purpose: Clearly describe what each function does, its parameters, and its return values. Use comments and documentation strings to provide context and usage examples.

Specify Parameter Types: Indicate the expected data types for each parameter. This helps users understand how to call the function correctly and prevents type-related errors.

Update Documentation Regularly: Keep function documentation up-to-date with any changes made to the function’s logic or parameters. Accurate documentation enhances the usability of your code.

By adhering to these practices, you’ll improve the quality and usability of your R functions, making your codebase more reliable and easier to maintain.

Frequently Asked Questions

What are the main types of functions in R programming?

In R programming, the main types of functions include built-in functions, user-defined functions, anonymous functions, recursive functions, S3 methods, S4 methods, and higher-order functions. Each serves a specific purpose, from performing basic tasks to handling complex operations.

How do user-defined functions differ from built-in functions in R?

User-defined functions are custom functions created by users to address specific needs, whereas built-in functions come pre-defined with R and handle common tasks. User-defined functions offer flexibility, while built-in functions provide efficiency and convenience for standard operations.

What is a recursive function in R programming?

A recursive function in R calls itself to solve a problem by breaking it down into smaller, similar sub-tasks. It's useful for problems like calculating factorials but requires careful implementation to avoid infinite recursion and performance issues.

Conclusion

Understanding the types of functions in R programming is crucial for optimising your code. From built-in functions that simplify tasks to user-defined functions that offer customisation, each type plays a unique role.

Mastering recursive, anonymous, and higher-order functions further enhances your programming capabilities. Implementing best practices ensures efficient and maintainable code, leveraging R’s full potential for data analysis and complex problem-solving.

#Different Types of Functions in R Programming#Types of Functions in R Programming#r programming#data science

Lodi Palle – Types and Future of Software Developer

There are various types of software developers, according to Lodi Palle, each specializing in different areas of software development. Here are a few common types:

Front-end Developer: Front-end developers focus on creating the user interface and user experience of software applications. They work with HTML, CSS, JavaScript, and front-end frameworks to build visually appealing and interactive interfaces that users interact with directly.

Back-end Developer: Back-end developers are responsible for the server-side development of applications. They work with server-side languages like Python, Java, or Ruby, and databases to handle data storage, processing, and server-side logic. They build the behind-the-scenes functionality that powers web and mobile applications.

Full-stack Developer: Full-stack developers are proficient in both front-end and back-end development. They have the skills to work on both the client-side and server-side components of an application. Full-stack developers can handle all aspects of software development, from designing user interfaces to implementing complex server-side logic.

#lode palle#lodi emmanuel palle#lodi palle#emmanuel palle#lode emmanuel palle#lode emmanuelle palle#emmanuelpalle

Data Science Made Easy: How the Best Online Training Institute in Hyderabad Can Help You Succeed

Are you searching for an apt Data Science course that will provide you with additional skills? Then you have arrived at the correct location. Data science courses are state-of-the-art courses that are in demand currently. Data Science is a branch that combines algorithms, scientific computing, and statistics to extract insights from unstructured data.

The data science course at E Vidya Technologies has several lessons structured in order, from an introduction to data science and analytics, through Python and pandas, to Machine Learning at the end. This course will help you grasp several Data Science, NLP and Machine Learning concepts. Furthermore, with data science training in Hyderabad, you will get clarity on the basics and good knowledge of advanced Python and web scraping.

Expert Training

Learning is a skill that takes time, and the process is different for every student. Thus, you should look for courses taught by certified professionals with expertise in the field. Usually, these trainers plan a complete schedule and curriculum for every student. Students should not feel under pressure, and the sessions should be interactive.

Training Videos

Students prefer training videos that they can watch at flexible hours, which leaves them free for other activities. Regular intervals between the lectures also give them time to recall and practice the material and to work through their doubts. For that reason, there should be a team available 24/7 to train students and clarify their queries.

Resume Building

The goal after completing a course is to secure a job, and that requires a strong resume and interview practice. The E Vidya Technologies course offers both technical and non-technical mock interviews, which will help you gain command of the subject and feel confident applying for a job. The technical mock interviews include questions on the subject, while the non-technical ones focus on your communication skills. Students can improve by acting on the feedback they receive from these interviews.

Small Batch Sizes

In every course, a limited number of students per batch benefits both students and teachers. Students can discuss topics with their peers more easily, and teachers can focus on every student's progress. A small group also encourages healthy competition among students.

These factors will give you a good hold on data science. Because data science is a vast subject with numerous sub-sections, it will take time to understand its complex structure. It is best to have a tutor who can structure the course at your convenience.

Conclusion

In short, to select the best online data science course, look for flexible study hours, quizzes and tests, quality training videos by experts, and reliable job assistance. One such option is E Vidya Technologies, which offers some of the best software training in Hyderabad. So, what are you waiting for? Enroll and start a fantastic journey in data science!

#Data Science#Online Training Institute#Hyderabad#Resume Building#E Vidya Technologies#Learning#communication#Machine


Text

Django Best Practices for Building Secure and Scalable Web Applications

Django is a popular high-level Python web framework that makes it easy to build secure and scalable web applications. With its clean and simple syntax, Django allows developers to quickly and easily build complex web applications without having to worry about the underlying infrastructure. In this article, we will outline the best practices for building secure and scalable Django web applications.

1. Keep Your Django Version Up to Date

One of the most important things you can do to ensure the security of your Django web application is to keep your Django version up to date. This is because new releases of Django often include security updates that fix known vulnerabilities. Additionally, new releases of Django often include new features and performance improvements that can help you build better and faster web applications.

2. Use Secure Passwords

It is important to use strong passwords when building Django web applications. This includes not only the passwords used by users, but also the passwords used by administrators and other system accounts. Strong passwords should be at least 12 characters long and include a mix of upper and lowercase letters, numbers, and special characters.
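As a rough sketch of how the length rule above might be enforced for user accounts, Django's `AUTH_PASSWORD_VALIDATORS` setting takes a list of validators. The fragment below would sit in a project's `settings.py`; the `min_length` of 12 mirrors the recommendation above, and the particular selection of validators is an assumption made for illustration. Django's built-in validators do not check for a mix of character classes, so that rule would need a custom validator.

```python
# settings.py fragment (illustrative; adjust to your project's policy)
AUTH_PASSWORD_VALIDATORS = [
    {
        # Reject passwords shorter than 12 characters.
        "NAME": "django.contrib.auth.password_validation.MinimumLengthValidator",
        "OPTIONS": {"min_length": 12},
    },
    {
        # Reject passwords that appear in a list of common passwords.
        "NAME": "django.contrib.auth.password_validation.CommonPasswordValidator",
    },
    {
        # Reject passwords made up only of digits.
        "NAME": "django.contrib.auth.password_validation.NumericPasswordValidator",
    },
    {
        # Reject passwords too similar to the user's own attributes.
        "NAME": "django.contrib.auth.password_validation.UserAttributeSimilarityValidator",
    },
]
```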

3. Validate User Input

One of the most common ways that attackers can compromise a Django web application is by exploiting vulnerabilities in user input. This is why it is important to validate all user input before accepting it. This includes validating the format, length, and type of input, as well as checking for malicious content.
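To make the idea of checking format, length, and type concrete, here is a minimal framework-agnostic sketch in plain Python. The `validate_username` function and its rules are hypothetical, chosen only for illustration; in a real Django project this kind of check would usually live in a form or model validator instead.

```python
import re

# Hypothetical rule: 3 to 30 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,30}")


def validate_username(raw):
    """Return a cleaned username, or raise ValueError if it is not acceptable."""
    # Type check: only strings are accepted.
    if not isinstance(raw, str):
        raise ValueError("username must be a string")
    value = raw.strip()
    # Format and length check in one step; fullmatch rejects any stray characters.
    if not USERNAME_PATTERN.fullmatch(value):
        raise ValueError("username must be 3-30 letters, digits, or underscores")
    return value
```

Using an allow-list pattern like this rejects markup such as `<script>` without having to enumerate every dangerous input.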

4. Use SSL/TLS Encryption

Another important aspect of building a secure Django web application is to use SSL/TLS encryption to protect sensitive information in transit. This includes information such as passwords, credit card numbers, and other sensitive data. SSL/TLS encryption ensures that the information transmitted between the user and the server is encrypted, so an attacker who intercepts the traffic cannot read it.
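Once a TLS certificate is in place on the server, a few Django settings can push all traffic onto HTTPS. The fragment below is a sketch of what that might look like in `settings.py`; the one-year HSTS value is an assumption, and it is usually safer to start with a much smaller number while testing.

```python
# settings.py fragment (illustrative values)

# Redirect plain HTTP requests to HTTPS.
SECURE_SSL_REDIRECT = True

# Only send session and CSRF cookies over HTTPS connections.
SESSION_COOKIE_SECURE = True
CSRF_COOKIE_SECURE = True

# Ask browsers to use HTTPS for this site for the next year (in seconds).
# Assumed value; begin with a short duration until the setup is proven.
SECURE_HSTS_SECONDS = 31536000
```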

5. Implement Access Control

Access control is an important aspect of building a secure web application. In Django, access control can be implemented using a combination of authentication and authorization. Authentication is the process of verifying that a user is who they claim to be, while authorization is the process of determining what actions a user is allowed to perform.
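The difference between the two checks can be sketched in plain Python. Everything here (`User`, `AccessDenied`, `require_permission`, the `reports.view` permission name) is hypothetical and is not Django's API; Django ships its own `login_required` and `permission_required` decorators in `django.contrib.auth.decorators` that play a similar role.

```python
from dataclasses import dataclass, field
from functools import wraps


@dataclass
class User:
    """Hypothetical stand-in for a framework user object."""
    name: str
    is_authenticated: bool = False
    permissions: set = field(default_factory=set)


class AccessDenied(Exception):
    """Raised when a user may not run the requested action."""


def require_permission(permission):
    """Decorator: check authentication first, then authorization."""
    def decorator(view):
        @wraps(view)
        def wrapper(user, *args, **kwargs):
            # Authentication: is this a verified, logged-in user?
            if not user.is_authenticated:
                raise AccessDenied("login required")
            # Authorization: is this user allowed to perform the action?
            if permission not in user.permissions:
                raise AccessDenied(f"missing permission: {permission}")
            return view(user, *args, **kwargs)
        return wrapper
    return decorator


@require_permission("reports.view")
def view_report(user):
    return f"report for {user.name}"
```

A logged-in user without the permission is still refused, which is the point of keeping the two checks separate.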

6. Use the Django Debug Toolbar

The Django Debug Toolbar is a powerful tool that can help you identify and fix performance issues in your Django web application during development. It provides a wealth of information about your application, including timing data, the SQL queries run for each request, and other details that can help you optimize your application. Because it exposes internal details, it should only be enabled in development, never in production.

7. Monitor Your Logs

Monitoring your logs is an important part of building a secure and scalable Django web application. Logs can provide valuable information about the performance and security of your application, including error messages, access logs, and performance metrics. Regularly reviewing your logs can help you identify and fix issues with your application before they become major problems.
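Django's `LOGGING` setting uses the same dictionary format as Python's standard `logging.config.dictConfig`, so a configuration can be sketched and tried out with the standard library alone. The logger name `myapp` and the message below are placeholders, not part of any real project.

```python
import logging
import logging.config

# Same structure a Django project would place in settings.LOGGING.
LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "simple": {"format": "%(asctime)s %(levelname)s %(name)s %(message)s"},
    },
    "handlers": {
        # Write log records to the console (stderr by default).
        "console": {"class": "logging.StreamHandler", "formatter": "simple"},
    },
    "loggers": {
        # "myapp" is a placeholder logger name; warnings and above are recorded.
        "myapp": {"handlers": ["console"], "level": "WARNING"},
    },
}

logging.config.dictConfig(LOGGING)
logger = logging.getLogger("myapp")

# Example record worth reviewing later: a failed login attempt.
logger.warning("failed login for user_id=%s", 42)
```

In production the console handler would typically be replaced or supplemented by a file or log-aggregation handler so the records can be reviewed regularly.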

8. Regularly Back Up Your Data

Regularly backing up your data is an important part of building a secure and scalable Django web application. Backups can protect your data in the event of a hardware failure, a security breach, or other unexpected event. It is important to store backups in a secure location, such as an off-site server or a cloud-based storage service.

9. Use a Content Delivery Network (CDN)

Using a content delivery network (CDN) can help you improve the performance and scalability of your Django web application. A CDN caches your content on servers around the world, so that it is closer to users, reducing the time it takes for content to be delivered. This can help reduce server load and improve the overall performance of your application, making it more scalable.

10. Test Your Web Application Regularly

Regularly testing your web application is an important part of building a secure and scalable Django web application. This includes both functional testing, which verifies that the application is working as expected, and security testing, which identifies potential vulnerabilities in the application. By testing your web application regularly, you can identify and fix issues before they become major problems.
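The two kinds of test can sit side by side in the same test case. The sketch below uses Python's standard `unittest` module, which Django's own `TestCase` builds on; `render_greeting` is a hypothetical helper invented for this example, not part of Django.

```python
import html
import unittest


def render_greeting(name):
    """Hypothetical view helper: escape user input before embedding it in HTML."""
    return f"<p>Hello, {html.escape(name)}!</p>"


class GreetingTests(unittest.TestCase):
    def test_renders_name(self):
        # Functional test: normal input produces the expected output.
        self.assertEqual(render_greeting("Ada"), "<p>Hello, Ada!</p>")

    def test_escapes_markup(self):
        # Security test: injected markup must come out escaped, not raw.
        rendered = render_greeting("<script>alert(1)</script>")
        self.assertNotIn("<script>", rendered)
        self.assertIn("&lt;script&gt;", rendered)


def run_tests():
    """Run the test case and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(GreetingTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_tests()
```

Running tests like these on every change, for example in a CI job, is what makes the testing "regular" rather than occasional.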

Conclusion

Building a secure and scalable Django web application requires a combination of good coding practices, regular maintenance, and attention to detail. By following the best practices outlined in this article, you can help ensure that your Django web application is robust, reliable, and secure. With a focus on security, performance, and scalability, you can build a high-quality Django web application that will meet the needs of your users for years to come.

At Capital Numbers, we offer comprehensive Django development services to help businesses build secure and scalable web applications. Our team of experienced Django developers leverages the latest technologies and best practices to deliver customized solutions that meet your unique business needs. From concept to deployment, we work closely with our clients to understand their requirements and provide tailored solutions that meet their goals. With a focus on quality, efficiency, and innovation, we help businesses build robust web applications that drive growth and improve user engagement. Whether you need a simple website or a complex web application, our team of experts is ready to help you achieve your goals with Django. Connect with us and hire Django developers today.

#django#django development agencies#hire django developer#django development#django developers for hire#python django development


Text

Most Useful Data Visualization Libraries in Python

Unlock the power of your data with top data visualization libraries in Python for data analysis. From Matplotlib to Seaborn, discover tools that will help you effectively communicate your findings and make data-driven decisions.

In today's data-driven world, we are constantly bombarded with large amounts of information. But raw data alone can be difficult to understand and make sense of. That's where data visualization comes in. With data visualization, we can take complex data sets and transform them into clear, easy-to-understand graphical representations like charts, graphs, and plots.

How To Use Python For Data Visualization?

If you're looking to dive into the world of data science, you should definitely consider learning Python. It's quickly becoming the go-to language for data scientists everywhere, and it's an essential skill to have. One of the best things about Python is the wide variety of libraries available for data visualization.

These libraries are specifically designed to help you make sense of your data through different types of graphical representations. By taking a Business Intelligence training course, you'll gain valuable insights into how to use these libraries to uncover important information from your data. It's definitely a skill worth investing in.

Importing Packages

When using Python for data visualization, the first step is to import the necessary packages. These include pandas for processing your data, Matplotlib for creating basic visualizations, Seaborn for more advanced visualizations, and NumPy for calculations.

Read the full post: https://www.hinditechbate.in/2023/01/data-visualization-libraries-Python.html
