#AI Use Case Development Platform

Explore tagged Tumblr posts

Text

youtube

#AI Factory#UnifyCloud#CloudAtlas#AI Use Case Development Platform#AI Solution Gallary#AI#ML#Youtube

0 notes

Text

At the California Institute of the Arts, it all started with a videoconference between the registrar’s office and a nonprofit.

One of the nonprofit’s representatives had enabled an AI note-taking tool from Read AI. At the end of the meeting, it emailed a summary to all attendees, said Allan Chen, the institute’s chief technology officer. They could have a copy of the notes, if they wanted — they just needed to create their own account.

Next thing Chen knew, Read AI’s bot had popped up in about a dozen of his meetings over a one-week span. It was in one-on-one check-ins. Project meetings. “Everything.”

The spread “was very aggressive,” recalled Chen, who also serves as vice president for institute technology. And it “took us by surprise.”

The scenario underscores a growing challenge for colleges: Tech adoption and experimentation among students, faculty, and staff, especially as it pertains to AI, are outpacing institutions’ governance of these technologies and may even violate their data-privacy and security policies.

That has been the case with note-taking tools from companies including Read AI, Otter.ai, and Fireflies.ai. They can integrate with platforms like Zoom, Google Meet, and Microsoft Teams to provide live transcriptions, meeting summaries, audio and video recordings, and other services.

Higher-ed interest in these products isn’t surprising. For those bogged down with virtual rendezvouses, a tool that can ingest long, winding conversations and spit out key takeaways and action items is alluring. These services can also aid people with disabilities, including those who are deaf.

But the tools can quickly propagate unchecked across a university. They can auto-join any virtual meetings on a user’s calendar, even if that person is not in attendance. And that’s a concern, administrators say, if it means third-party products that an institution hasn’t reviewed may be capturing and analyzing personal information, proprietary material, or confidential communications.

“What keeps me up at night is the ability for individual users to do things that are very powerful, but they don’t realize what they’re doing,” Chen said. “You may not realize you’re opening a can of worms.”

The Chronicle documented both individual and universitywide instances of this trend. At Tidewater Community College, in Virginia, Heather Brown, an instructional designer, unwittingly gave Otter.ai’s tool access to her calendar, and it joined a Faculty Senate meeting she didn’t end up attending. “One of our [associate vice presidents] reached out to inform me,” she wrote in a message. “I was mortified!”

24K notes


Text

(Read on our blog)

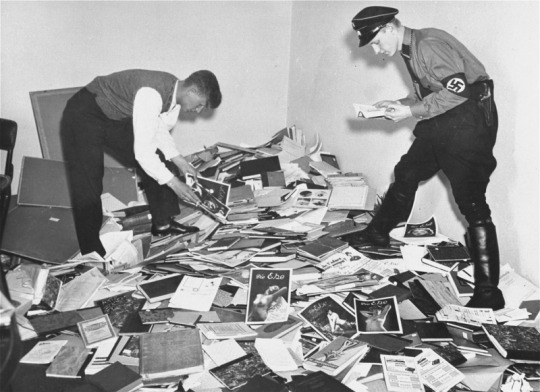

Beginning in 1933, the Nazis burned books to erase the ideas they feared—works of literature, politics, philosophy, criticism; works by Jewish and leftist authors, and research from the Institute for Sexual Science, which documented and affirmed queer and trans identities.

(Nazis collect "anti-German" books to be destroyed at a Berlin book-burning on May 10, 1933. Source)

Stories tell truths.

These weren’t just books; they were lifelines.

Writing by, for, and about marginalized people isn’t just about representation, but survival. Writing has always been an incredibly powerful tool—perhaps the most resilient form of resistance, as fascism seeks to disconnect people from knowledge, empathy, history, and finally each other. Empathy is one of the most valuable resources we have, and in the darkest times writers armed with nothing but words have exposed injustice, changed culture, and kept their communities connected.

(A Nazi student and a member of the SA raid the Institute for Sexual Science's library in Berlin, May 6, 1933. Source)

Less than two weeks after the US presidential inauguration, the nightmare of Project 2025 is starting to unfold. What these proposals will mean for creative freedom and freedom of expression is uncertain, but the intent is clear. A chilling effect on subjects that writers engage with every day—queer narratives, racial justice, and critiques of power—is already manifest. The places where these works are published and shared may soon face increased pressure, censorship, and legal jeopardy.

And with speed-run fascism comes a rising tide of misinformation and hostility. The tech giants that facilitate writing, sharing, publishing, and communication—Google, Microsoft, Amazon, the-hellscape-formerly-known-as-Twitter, Facebook, TikTok—have folded like paper in a light breeze. OpenAI, embroiled in lawsuits for training its models on stolen works, is now positioned as the AI of choice for the administration, bolstered by a $500 billion investment. And privacy-focused companies are showing a newfound willingness to align with a polarizing administration, chilling news for writers who rely on digital privacy to protect their work and sources; even their personal safety.

Where does that leave writers?

Writing communities have always been a creative refuge, but they’re more than that now—they are a means of continuity. The information landscape is shifting rapidly, so staying informed on legal and political developments will be essential for protecting creative freedom and pushing back against censorship wherever possible. Direct your energy to the communities that need it, stay connected, check in on each other—and keep backup spaces in case platforms become unsafe.

We can’t stress this enough—support tools and platforms that prioritize creative freedom. The systems we rely on are being rewritten in real time, and the future of writing spaces depends on what we build now. We at Ellipsus will continue working to provide space for our community—one that protects and facilitates creative expression, not undermines it.

Above all—keep writing.

Keep imagining, keep documenting, keep sharing—keep connecting. Suppression thrives on silence, but words have survived every attempt at erasure.

- The Ellipsus team

#writeblr#writers on tumblr#writing#fiction#fanfic#fanfiction#us politics#american politics#lgbtq community#lgbtq rights#trans rights#freedom of expression#writers

4K notes


Note

What is your current opinion on Unreal Engine 5? Between Digital Foundry, content creators, and people on social media, everyone appears to be constantly attacking UE5 for performance issues (stuttering, frame rate, etc.). Is this criticism warranted, or is it more a case of developers still getting used to UE5 and its complexities (meaning it will likely improve in time)?

Everything improves with time as the engineers learn the details and optimize their work. This is true of every tech platform ever and won't be any more different with Unreal Engine 5 than it has been with UE4, 3, or anything else. That said... after having very recently worked with UE5 for enough time to get used to some of its foibles and having looked into some core engineering issues in a project utilizing some of the new tech introduced in UE5 (and the caveats and side effects of using that tech), I can say with fair confidence that (some) complaints about the performance issues are definitely warranted. These aren't global to all UE5 projects, but they are major performance issues we ran into and had to solve.

One major issue we ran into was with Nanite. Nanite is the new tech that allows incredibly detailed high poly models, a sort of [LOD system] on steroids. The Entity Component System of the Unreal Engine (every actor is a bag of individual components) allows developers to glom nanite meshes onto just about anything and everything including characters, making it very powerful and quick to stand up various different visuals. However, this also requires significant time spent optimizing that geometry for lighting and for use in game - interpenetrating bits and pieces that don't need lighting, normals, or shadows calculated add unnecessary performance cost and must be purged from those nanite models. Nanite looks great, but has issues that need to be ironed out and the documentation on those issues isn't fully formed because they're still being discovered (and Epic is still working on fixing them). We had major performance issues on any characters we built using nanite, which meant that our long-term goal for performance was actually to de-nanite our characters completely.

Another major issue I ran into was with the new UE5 World Partition system. World Partition is essentially their replacement for their old World Composition system; it's a means of handling level streaming for large contiguous world spaces. In any large open world, you're going to have to have individual tiles that get streamed in as the player approaches them - there's no reason to fit the entire visible world into memory at any given time with all the bells and whistles when the player can only see a small part of it. The World Partition system is supposed to stream in the necessary bits piecemeal and allow for seamless play. Unfortunately, there are a lot of issues with it that are just not documented and/or not fixed yet. I personally ran into issues with navmesh generation (the map layer used for AI pathfinding) when using World Partition that I had to ask Epic about, and their engineers responded with "Thanks for finding this bug. We'll fix it eventually, likely not in the next patch."
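To make the tile-streaming idea concrete, here's a minimal sketch in Python. To be clear, this is not Unreal's World Partition API and says nothing about how Epic implements it; the function names, the square grid, the 100-unit cell size, and the one-cell load radius are all assumptions made up for illustration.

```python
def cell_of(x, y, cell_size):
    # Map a world position to the (col, row) grid cell that contains it.
    return (int(x // cell_size), int(y // cell_size))


def wanted_cells(player_pos, cell_size, radius_cells):
    # Every cell within radius_cells grid steps of the player's current cell.
    cx, cy = cell_of(player_pos[0], player_pos[1], cell_size)
    return {
        (cx + dx, cy + dy)
        for dx in range(-radius_cells, radius_cells + 1)
        for dy in range(-radius_cells, radius_cells + 1)
    }


def update_streaming(loaded, player_pos, cell_size=100.0, radius_cells=1):
    # Compare what is loaded with what should be loaded around the player.
    # Returns (cells to stream in, cells to stream out, new loaded set).
    wanted = wanted_cells(player_pos, cell_size, radius_cells)
    to_load = wanted - loaded
    to_unload = loaded - wanted
    return to_load, to_unload, wanted


loaded = set()
to_load, to_unload, loaded = update_streaming(loaded, (50.0, 50.0))
print(len(to_load), "cells streamed in at spawn")

# The player walks one tile east: one column streams in, one streams out.
to_load, to_unload, loaded = update_streaming(loaded, (150.0, 50.0))
print("stream in:", sorted(to_load))
print("stream out:", sorted(to_unload))
```

Only the difference between the old and new sets gets touched each update, which is the whole point: the cost scales with how far the player moved, not with the size of the world.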

Most of these issues will eventually get ironed out, documented, and/or fixed as they come to light. That's pretty normal for any major piece of technology - things improve and mature as more people use it and the dev team has the time and bandwidth to fix bugs, document things better, and add quality of life features. Because this tech is still fairly new, all of the expected bleeding edge problems are showing up. You're seeing those results - the games that are forced to use the new less-tested systems are uncovering the issues (performance, bugs, missing functionality, etc.) as they go. Epic is making fixes and improvements, but we third-party game devs must still ship our games and this kind of issue is par for the course.

[Join us on Discord] and/or [Support us on Patreon]

Got a burning question you want answered?

Short questions: Ask a Game Dev on Twitter

Short questions: Ask a Game Dev on BlueSky

Long questions: Ask a Game Dev on Tumblr

Frequent Questions: The FAQ

64 notes


Text

Jason Koebler at 404 Media:

[Update: After this article was published, Bluesky restored Kabas' post and told 404 Media the following: "This was a case of our moderators applying the policy for non-consensual AI content strictly. After re-evaluating the newsworthy context, the moderation team is reinstating those posts."]

Bluesky deleted a viral, AI-generated protest video in which Donald Trump is sucking on Elon Musk’s toes because its moderators said it was “non-consensual explicit material.”

The video was broadcast on televisions inside the office of Housing and Urban Development earlier this week, and quickly went viral on Bluesky and Twitter.

Independent journalist Marisa Kabas obtained a video from a government employee and posted it on Bluesky, where it went viral. Tuesday night, Bluesky moderators deleted the video because they said it was “non-consensual explicit material.”

“A Bluesky account you control (@marisakabas.bsky.social) posted content or shared a link that contains non-consensual explicit material, which is in violation of our Community Guidelines. As a result of this violation, we have taken down your post,” an email Kabas received from Bluesky moderation reads. “We trust that you will understand the necessity of these measures and the gravity of the situation. Bluesky explicitly prohibits the sharing of non-consensual sexual media. You cannot use Bluesky to break the law or cause harm to others. All users must be treated with respect.”

“Hello, the post you have taken down was a video broadcast inside a government building to protest a fascist regime,” Kabas wrote in an email back to Bluesky seen by 404 Media. “It is in the public interest and it is legitimate news. Taking it down is an attempt to bury the story and an alarming form of censorship. I love this platform but I’m shocked by this decision. I ask you to reconsider it.”

Other Bluesky users said that versions of the video they uploaded were also deleted, though it is still possible to find the video on the platform.

Technically speaking, the AI video of Trump sucking Musk’s toes, which had the words “LONG LIVE THE REAL KING” shown on top of it, is a nonconsensual AI-generated video, because Trump and Musk did not agree to it. But social media platform content moderation policies have always had carve-outs that allow for the criticism of powerful people, especially the world’s richest man and the literal president of the United States.

Bluesky briefly deleted AI video depicting Donald Trump sucking on Elon Musk’s toes in protest of DOGE’s purges on the basis it was “non-consensual explicit material”, before reversing that decision due to the newsworthiness of the video.

44 notes


Text

when i was a teenager i used to spend too much time on social media. since i've always been into anime and otaku things, a lot of the japanese and south korean artists i looked up to were kind of distant; they didn't talk about their lives nor followed people back often. to my eyes, this was peak cool. these people drew so good that they didn't need to adapt to algorithms or foreign languages to succeed! i used to think that if i had so much power i wouldn't be able to resist the urge to use it to change the world.

at the same time, i also hated it. i hated when artists were so secretive about their process, so ungrateful towards the people who always supported them. i especially hated pick me artists who were constantly complaining about how hard it was to draw profiles and the other eye and who would mostly draw trends and memes and didn't understand the depth of the pieces of media they liked.

now i understand that my vision back then was blurred by a mix of admiration, bitterness and of course the language barrier. artists on social media are just random people. very few get to the point where someone else manages their account, or they start working for a studio, and even then it's still someone behind the screen.

i still have my takes about how certain types of online artists behave, but lately i've been trying to avoid those bad feelings and be more grateful for the fact that people are still creating, despite everything that's going on in the world. i'd rather be forced to follow a thousand amateur and/or attention-seeking artists than a shitty genAI account.

it's been about 8 years since i started posting my fanart online and i've met so many artists from completely different backgrounds. i've seen my friends grow and make art i would have never imagined they could make. i'm mutuals with people i've admired for almost a decade and i'm regularly told that my art inspires others. some manga authors and videogame developers have seen my art of their characters. it's been like this for years and i'm still not used to it. it's so nice!! but it's still unbelievable.

i realized some time ago that i am now that type of unreachable artist to a lot of people. i feel guilty about it, but i don't know what to be sorry for. i guess that it was never about trying to be mysterious or forcing myself to hold back my opinions, it's just that the world is too big for one person to be in the spotlight. now that i have a job and i'm busy, i'm very comfortable in a spotlight i can turn off whenever i want.

(side note i still draw a lot but it's mostly my ocs and i'm embarrassed to post them)

if there's something i know for certain, it's that people will always want to see cool art. my family always asks for my drawings even if they're always girls making out and not the kind of paintings they wish i made. my friends who aren't artists still struggle to put into words why they like what i do, even if they don't know the characters; but the fact that they keep trying to communicate it makes me happy, because it shows that they really want me to continue doing this.

i don't need to hope that humanity keeps making art because i already know it will happen. but i wish people who are already on this path don't feel discouraged about the future, even with the rise of generative AI and fascism and the decline of social media platforms. the world is much more beautiful with everyone's creations in it and there's always room for more, we will always yearn for more.

i have no plans to stop making art unless i go blind, in which case i would probably learn to make music. i want to get better, i will get better, but i'm just a random person who happens to be alive at a time when random people post their art online. no matter where you are in your artistic journey, if you decide to keep moving forward, i'll meet you here in the spotlight.

40 notes


Text

Weekend links, June 8, 2025

My posts

Seasonal reblog: a post about Donna Summer and Disco Demolition Night.

I seem to have crashed a bit and taken a week I couldn't really spare to rest a little (while still going to physical therapy three times). This compilation of Maria getting in my way for five and a half minutes shows a bit of the next SH2 commentary, but that's about as far as I've gotten.

I am now developing quite a little Steam library of deep-discount games I have no time to play, as is traditional. This week I learned that the Epic platform is a thing, and also that it has Alan Wake 2 as an exclusive, or else I would own the latter by now. The rest of Alan Wake, I got for $5. When will I play all these games? Nobody knows, including my physical therapist who wants me to get up and stretch every fifteen minutes.

Meanwhile, Ian's band has a new YouTube channel; he talks about a special song here. He doesn't know when I have time to play these games either; he's the one who got me to buy Silent Hill 4 off GOG.com.

Reblogs of interest

Remembering Marsha P. Johnson, Stonewall, and her activism (I hadn't seen the Pay It No Mind arch before; it's beautiful).

Remembering muppeteer Richard Hunt ("he originated the characters of Scooter, Beaker, Statler, Sweetums, and Wayne, but also became the primary performer of Janice and is responsible for the flower child personality she is now known for"), a joyous performer lost to AIDS in 1992.

Sir Ian McKellen on the trans community: "The connection between us all is we come under the queer umbrella – we are queer. [...] The problems that transgender people have with the law are not dissimilar from what used to be the case for us, so I think we should all be allies really."

Writings on what queer masculinity can be

Aro Books For Pride

Community support isn't rainbow capitalism

Disability aids from Active Hands (here's the website; I haven't tried their products, but the posters are very happy with them)

A tale of two Mondays: sweet and beautiful and weary of life at age 0.

(Not What I'm Called: Manul Edition)

"Builder.AI just declared bankruptcy after admitting that they were faking their AI tool with 700 humans"

CatGPT is just as reliable.

Xuanji Tu, the Chinese poem that can be read 8,000 ways

"sometimes you just want to look at the qing dynasty jadeite cabbage again"

Poll: Which setting is sexier, lighthouse or clock tower?

Tallulah Bankhead: blonde and ambisextrous

Beautifully colored dice, and also, it's a painting

Always reblog the sunwoof

Out-of-focus summer fun

Perfectly synchronized with mama (I realized that "let's eat shit with mama" isn't something you say out of context)

Two very different artistic kinds of bats

The majestic Steller's jay (not sarcasm)

I should not exclude the Swedish blue tit

Pride for one thousand years

Video

New gameplay trailer for Silent Hill f; it looks hard as fuck and twice as scary. I watched it again and said, ".....I bet I could do that," which is how we know that gaming has fully eaten my brain.

Happy Los Jibbities!

I don't know why this made me laugh so hard, but it IS very ewok-coded, yes

Puzzle the tree kangaroo loves cauliflower. Our lives are so rich

Now, this starts off as a discussion of sign language in a production of Hamilton, but ends up as a master class on translating "Not Like Us" to ASL

The sacred texts

"MY NAME, IS FRICKIN MOON MOON"

Personal tag of the week

Let's say House of Leaves, because I never get tired of house jokes.

16 notes


Text

Anyone who has spent even 15 minutes on TikTok over the past two months will have stumbled across more than one creator talking about Project 2025, a nearly thousand-page policy blueprint from the Heritage Foundation that outlines a radical overhaul of the government under a second Trump administration. Some of the plan’s most alarming elements—including severely restricting abortion and rolling back the rights of LGBTQ+ people—have already become major talking points in the presidential race.

But according to a new analysis from the Tech Oversight Project, Project 2025 includes hefty handouts and deregulation for big business, and the tech industry is no exception. The plan would roll back environmental regulation to the benefit of the AI and crypto industries, quash labor rights, and scrap whole regulatory agencies, handing a massive win to big companies and billionaires, including many of Trump’s own supporters in tech and Silicon Valley.

“Their desire to eliminate whole agencies that are the enforcers of antitrust, of consumer protection is a huge, huge gift to the tech industry in general,” says Sacha Haworth, executive director at the Tech Oversight Project.

One of the most drastic proposals in Project 2025 suggests abolishing the Federal Reserve altogether, which would allow banks to back their money using cryptocurrencies, if they so choose. And though some conservatives have railed against the dominance of Big Tech, Project 2025 also suggests that a second Trump administration could abolish the Federal Trade Commission (FTC), which currently has the power to enforce antitrust laws.

Project 2025 would also drastically shrink the role of the National Labor Relations Board (NLRB), the independent agency that protects employees’ ability to organize and enforces fair labor practices. This could have a major knock-on effect for tech companies: In January, Musk’s SpaceX filed a lawsuit in a Texas federal court claiming that the NLRB was unconstitutional after the agency said the company had illegally fired eight employees who sent a letter to the company’s board saying that Musk was a “distraction and embarrassment.” Last week, a Texas judge ruled that the structure of the NLRB, which includes a director that can’t be fired by the president, was unconstitutional, and experts believe the case may wind its way to the Supreme Court.

This proposal from Project 2025 could help quash the nascent unionization efforts within the tech sector, says Darrell West, a senior fellow at the Brookings Institution’s Center for Technology Innovation. “Tech, of course, relies a lot on independent contractors,” says West. “They have a lot of jobs that don't offer benefits. It's really an important part of the tech sector. And this document seems to reward those types of business.”

For emerging technologies like AI and crypto, a rollback in environmental regulations proposed by Project 2025 would mean that companies would not be accountable for the massive energy and environmental costs associated with bitcoin mining and running and cooling the data centers that make AI possible. “The tech industry can then backtrack on emission pledges, especially given that they are all in on developing AI technology,” says Haworth.

The Republican Party’s official platform for the 2024 elections is even more explicit, promising to roll back the Biden administration’s early efforts to ensure AI safety and “defend the right to mine Bitcoin.”

All of these changes would conveniently benefit some of Trump’s most vocal and important backers in Silicon Valley. Trump’s running mate, Republican senator J.D. Vance of Ohio, has long had connections to the tech industry, particularly through his former employer, billionaire founder of Palantir and longtime Trump backer Peter Thiel. (Thiel’s venture capital firm, Founders Fund, invested $200 million in crypto earlier this year.)

Thiel is one of several other Silicon Valley heavyweights who have recently thrown their support behind Trump. In the past month, Elon Musk and David Sacks have both been vocal about backing the former president. Venture capitalists Marc Andreessen and Ben Horowitz, whose firm a16z has invested in several crypto and AI startups, have also said they will be donating to the Trump campaign.

“They see this as their chance to prevent future regulation,” says Haworth. “They are buying the ability to avoid oversight.”

Reporting from Bloomberg found that sections of Project 2025 were written by people who have worked or lobbied for companies like Meta, Amazon, and undisclosed bitcoin companies. Both Trump and independent candidate Robert F. Kennedy Jr. have courted donors in the crypto space, and in May, the Trump campaign announced it would accept donations in cryptocurrency.

But Project 2025 wouldn’t necessarily favor all tech companies. In the document, the authors accuse Big Tech companies of attempting “to drive diverse political viewpoints from the digital town square.” The plan supports legislation that would eliminate the immunities granted to social media platforms by Section 230, which protects companies from being legally held responsible for user-generated content on their sites, and pushes for “anti-discrimination” policies that “prohibit discrimination against core political viewpoints.”

It would also seek to impose transparency rules on social platforms, saying that the Federal Communications Commission (FCC) “could require these platforms to provide greater specificity regarding their terms of service, and it could hold them accountable by prohibiting actions that are inconsistent with those plain and particular terms.”

And despite Trump’s own promise to bring back TikTok, Project 2025 suggests the administration “ban all Chinese social media apps such as TikTok and WeChat, which pose significant national security risks and expose American consumers to data and identity theft.”

West says the plan is full of contradictions when it comes to its approach to regulation. It’s also, he says, notably soft on industries where tech billionaires and venture capitalists have put a significant amount of money, namely AI and cryptocurrency. “Project 2025 is not just to be a policy statement, but to be a fundraising vehicle,” he says. “So, I think the money angle is important in terms of helping to resolve some of the seemingly inconsistencies in the regulatory approach.”

It remains to be seen how impactful Project 2025 could be on a future Republican administration. On Tuesday, Paul Dans, the director of the Heritage Foundation’s Project 2025, stepped down. Though Trump himself has sought to distance himself from the plan, reporting from the Wall Street Journal indicates that while the project may be lower profile, it’s not going away. Instead, the Heritage Foundation is shifting its focus to making a list of conservative personnel who could be hired into a Republican administration to execute the party’s vision.

65 notes


Text

#AI Factory#AI Use Case Gallary#AI Development Platform#AI In Healthcare#AI in recruitment#Artificial Intellegence

0 notes

Text

Thoughts and opinion on Switch 2

Before I start, do know I really like tech and generally, I like interesting implementations of it, so I do have a bias towards handheld consoles in general because they have much more interesting limitations to start with. I'll go through all my thoughts, good and bad, on the switch 2.

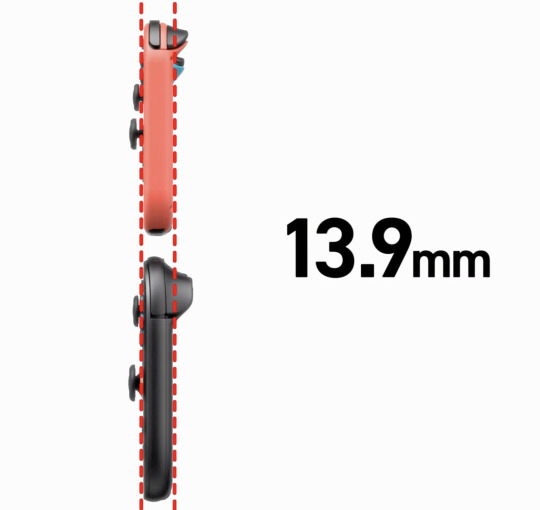

I know the bigger screen does mean more internal area to work with, but how the hell did they manage to keep the thickness nearly identical to the switch 1? Actually impressive, I desperately want to see inside if it is all just because of smaller chip size.

Ok, so where do I properly begin excluding the shock that they pulled off the same thickness? Oh, the screen!

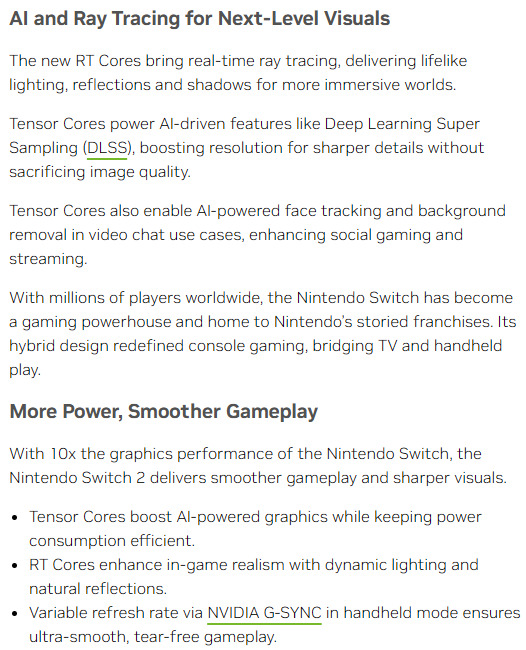

This screen is just a welcome addition overall. 1080p, better brightness and better panel tech that allows for HDR (Microsoft fix your god damn hdr implementation even nintendo has it) while being 120hz and VRR (Nvidia confirmed that it's using their implementation of g-sync via a blog post, here: https://blogs.nvidia.com/blog/nintendo-switch-2-leveled-up-with-nvidia-ai-powered-dlss-and-4k-gaming/). It now matches and slightly exceeds a lot of displays on handhelds, which funnily enough puts it in a similar position as the og switch screen back in 2017, but at least the baseline is much higher, so I think this will make the eventual OLED either more meh or much better in comparison. It may be closer to the meh side, at least going by the first impressions I'm seeing on youtube, viewed through my 2013 gaming monitor with clearly faded pixels, which is its own issue if I do end up deciding to get a switch 2. Still +$100 I'm calling it. However, the fact it's 120hz natively means that you can now run games at 40fps without screen tearing because 40 divides cleanly into 120, with it looking noticeably better than 30fps but not as draining as 60fps or above, which is going to help with more titles' battery life. Speaking of Nvidia, let's talk a bit about that blog post.
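Quick sanity check on the 40fps thing, as a tiny Python sketch. The function names are just mine for illustration, and it only covers the fixed-refresh case (it ignores VRR, which changes the picture).

```python
def refreshes_per_frame(refresh_hz, fps):
    # How many display refreshes each game frame stays on screen.
    return refresh_hz / fps


def paces_evenly(refresh_hz, fps):
    # True when every frame is held for the same whole number of refreshes.
    return refresh_hz % fps == 0


def frame_time_ms(fps):
    # Time budget the game has to render one frame, in milliseconds.
    return 1000 / fps


for fps in (30, 40, 60):
    print(
        f"{fps}fps: {frame_time_ms(fps):.1f} ms per frame, "
        f"even on 120hz: {paces_evenly(120, fps)}, "
        f"even on 60hz: {paces_evenly(60, fps)}"
    )
```

On a 120hz panel each 40fps frame is held for exactly 3 refreshes, while on a 60hz panel it would need 1.5, which is why 40fps was never a clean option on the og switch screen. The 25 ms frame time also sits exactly halfway between 30fps (33.3 ms) and 60fps (16.7 ms).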

I do not know how much people like this (See recent RTX 40xx and 50xx launches to see how happy people are with Nvidia), but I think they are very much the ideal partners in crime with Nintendo this time around. As long as the Tensor hardware is as power efficient as they claim, this will just be a boon for handheld gaming times, even if they're stuck at the same 2-6.5 hours as the launch model og switch. It also means it can be updated with time to improve games potentially, so if developers decide to take advantage of it and nvidia/nintendo makes it easy to just update the codebase of a game to get to a newer version, we may actually have a case of games becoming more performant and/or better looking with time, assuming they are not running at native 4k already. That's neglecting the processing offloading for stuff like that goofy camera, microphone quality and other stuff.

(seriously, if you got a recent enough Nvidia GPU try the Nvidia Broadcast app, it does basically all the audio/video stuff nintendo showed recently, but now with a focused platform I think this is going to become excellent)

oh ya, it is odd that the framerate of the stream from a friend is like 12 fps. best guess is that it's because it uses the on-board video encoder and decoder to handle it, and if each stream is a separate channel, along with one for background recording/screenshots, plus whatever the games may need for their own dedicated use, I am not too surprised it got cut down on such a slim package. Sucks though.

That leads into the dock.

I think the dock is fine. Does the job, has USB ports on the side to connect controllers and charge them (USB 2 tho, so I guess the camera attachment had to go to the top USB C port. CORRECTION: I just saw MKBHD's recent switch 2 impression video, it can actually be attached elsewhere maybe. That's interesting, photo attached below). I think it's as inoffensive as the Switch Oled dock, which does all it needed to do too plus ethernet. It's fine.

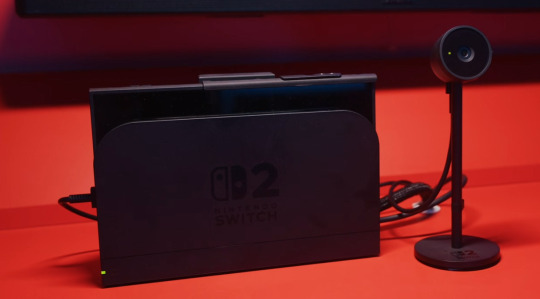

I guess while I'm here, let's touch upon the microSD Express card slot.

Let me preface this by saying, I knew that the standard was compatible with prior SD cards, so I found it odd they didn't make it clear if older cards could be used to at least get video/screenshots off, but they did!

Talking about the microSD express cards themselves, I may be in the minority here, but I am very very happy to see this get adopted. For those who are not in the know, it's basically much faster flash (that can be made for slightly more than a normal SD card) that runs off of the reader's PCIe Gen 4, allowing up to 2 GB/s of read/write. Which is crazy, and if they're mandating it for games, that means the current 800 MB/s read ones on the market right now are what the console will be using, and games will finally load up so much faster. And, unlike the original SD cards, higher capacities like a terabyte already exist AND are not insanely more expensive than base sd cards. (I mean, $20-25 for 256GB sd cards to $60 for the same in microSD express is still a jump, but do you guys remember how fucking much 256GB sd cards cost originally? It was like $150, and I think 256GB of storage will get further mileage than the original 32GB of storage the og switch had. And 1TB already costs $200 for when it comes out with the switch 2. Those prices will drop unless, uh... not within the scope of what I want to talk about)
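To put those speeds in perspective, here's a rough sketch in Python of how long it takes just to read a chunk of game data at the two speeds mentioned above. The 20 GB size is a made-up example, and real load times also depend on decompression and CPU work, so treat this as a best-case read time and not a benchmark.

```python
def read_seconds(size_gb, speed_mb_s):
    """Best-case time to read size_gb of data at a sustained speed_mb_s (decimal units)."""
    return size_gb * 1000 / speed_mb_s


game_data_gb = 20  # hypothetical amount of data, not a real game's size

for label, speed_mb_s in (("current 800 MB/s cards", 800), ("2 GB/s ceiling", 2000)):
    seconds = read_seconds(game_data_gb, speed_mb_s)
    print(f"{label}: {seconds:.1f} s to read {game_data_gb} GB")
```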

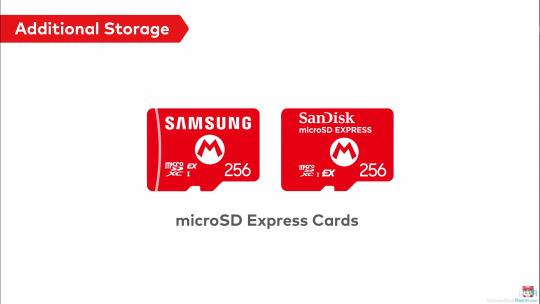

Since we're on storage, 256GB of UFS storage? Like on phones, that can go multiple gigabytes per second read/write? Hell ya, we bout to get load times not much worse than other modern consoles.

Let's touch upon the joycons now.

I like them, but more in the sense of an improvement over the og switch joycons. Which, honestly, that's all they needed to do. The standout weird feature is the mouse on both, but I only see this as a plus. I want more of my PC games on portable systems, and as much as I want the steamdeck too, the switch form factor is still more portable. Just... please let the bigger joysticks be actually good and resistant to stick drift, I do not want to open them up just to replace the sticks with hall effect ones again. As a plus, I can't wait to see what insanity warioware is going to become now.

Oh ya, chat.

Surprised it took them 2 decades to finally do it, but they seem to have a lot of restraint there still, with extensive parental controls to help mitigate any issues, so I'll only give them slightly less shit. Slightly. I'm going to call it though, there is going to be controversy about what eventually will occur over the gamechat. Can't wait!

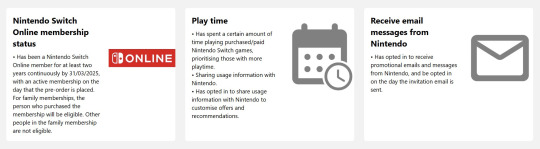

Switch online services

I mean, it was inevitable. This, with the Wii games that are almost absolutely coming later, is going to be good. I suspect a price bump is going to occur with NSO that's going to make it cost almost a full game per year, but at least the retro selection is going to start going from crazy to insane, making it probably worth it. I just hope that the voucher program (shown below) gets expanded to more third parties, because as much as I like nintendo games for $50 a piece with this program, it needs to be way more expansive to make the $20 cost at this time to be online, let alone getting the 2 vouchers for $100, worth it. If they do that, and keep on adding new, actually fun (looking at you Zelda Notes... what the fuck are you) and nice-to-have features to the service, I think it could become worth it in the near future.

I ALMOST FORGOT ONLINE DOWNLOAD PLAY, seriously that alone may be worth it because with both local and online, it means you do not have to force your friends to buy games they may otherwise never touch outside of playing with you. This is straight up a good, pro-consumer thing if others don't ignore it 24/7. I just hope the streaming quality will not be dogshit ahhaha....

(Shout out to nintendo actually using this as part of preventing scalpers, I don't like it much because I didn't pay for NSO on the og switch, but at least this is a verifiable way to prevent scalpers. I just wish accounts from the Wii U/3ds era got special treatment :^) )

Since we're getting more into software, shout out to how sad the UI is still.

Nintendo, the literal bare minimum here is not just black and white customizable themes. You haven't done that for a generation. I would have much rather had the UI present from the DSi/Wii to Wii U/3ds era than this bland nothing soup. God. Now, onto the most divisive one, and the one that makes or breaks it for me. The games, and their prices.

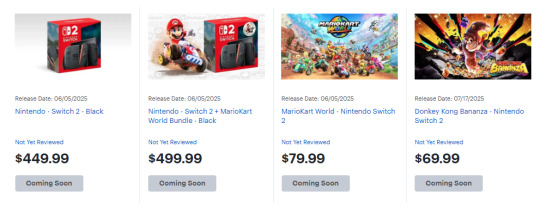

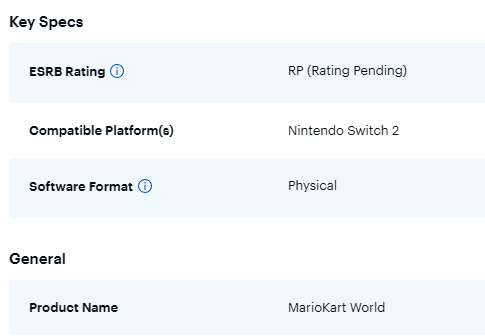

Let's get a few things clear, the $90 physical switch 2 game thing (which I had fallen for too) is in europe. If you're a suffering US citizen, the price is $80 for what seems to be big, high budget nintendo games, with everything else being $70, at least from the list of prices I've seen online.

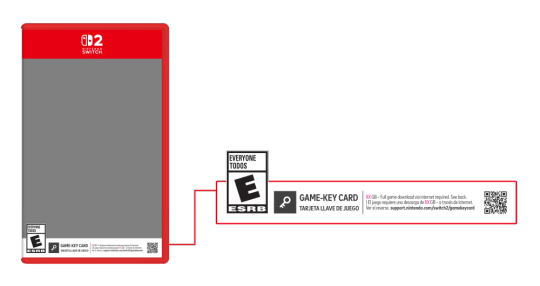

About Game key cards, ya it's just the 'download play' games on the switch, but instead of a piece of paper that's one time, it's a re-usable cartridge that allows multiple downloads on other switches and acts like a physical one otherwise. This, in my opinion, is objectively better, and is not the default option. Other games will be on the cartridge like og switch, see Cyberpunk 2077 on a 64GB one (seriously how the fuck they did that).

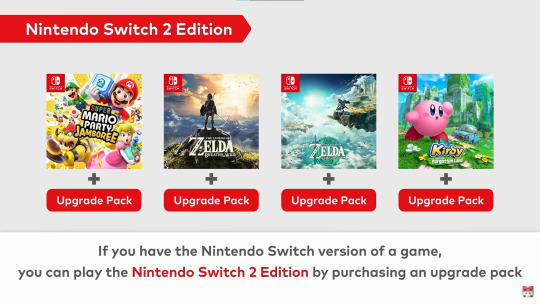

The switch 2 edition upgrades are on a case by case basis, so if it is just an fps/resolution bump, it is likely to be free. If it has a bit more, like some added extras, and they know people would pay for the fps/resolution (see botw and totk), it will be $10, and if it has basically DLC + all of the above, it's $20. Also, lol at welcome tour.

Seeing all of that, I'm iffy. I'm worried that the panic and reactions from everyone will lock the prices for everyone else at $80 since the publishers will take any chance to push prices up further, but if $70 stays I would reluctantly accept it. Unlike some people on the other platforms may say, in the past before $60 standardization, we had other options to play games like with rental stores, actually being around friends, and frequent discounts and bundles to get inventory moving. That does mean nowadays, especially with no sale nintendo, this price is here to stay, and if this is the cost to actually keep games good and not need astronomical sales to make back development costs, so be it. I am just not happy knowing that a lot of publishers will be using machine created/generated stuff each year for that price or $80 and expect no issues with it. The only thing I am very curious about is the capabilities of the switch 2 being somewhere between a PS4 and PS5, but able to handle PS5 ports, potentially making it the best way to play a lot of newer games on the go until valve decides in 2030 to make the steam deck 2.

Now everyone's favorite issue, price. I think it's reasonable, sucks a bit but reasonable. We're now dealing in a world where Nvidia has their focus on AI stuff, and knows that Nintendo wants backward compat + better stuff, so Nvidia likely is charging way more on parts. This world also includes inflation (seriously, $300 in 2017 is now closer to $400, and now there's all the extra nicer stuff slapped on top to justify a next gen). This world also includes ungodly uncertainty because of a group in the wrong place at the wrong time. Considering all of this, honestly, $450 is fine. It sucks, I know it has pushed a few friends out from buying it w/o someone assisting them in the purchase, but it's fine. It's going to be a great refinement, which is all a sequel console had to be. The thing you have to know, nintendo is doing the thing again with previous controllers being compatible with the current system, so in reality (especially with me and my hall-effect modded controllers) the price to play with others will not be much more. I touched upon this already, but the game prices are iffy for me, and it's absolutely going to prevent me from buying as many games as I had for the og switch, so it's a dampener.

I will need more time to simmer (and see how my financial situation is going to be), but I am currently leaning towards trying to get it. I'm on the fence, and I have a good chance of flipping to waiting until later, or just not getting it.

I may add more thoughts as I think them and remember I can use tumblr like this, but I think this is everything I wanted to get out that has been simmering in my head for a bit. Oh ya I almost forgot the most important thing, Homebrew. I love homebrew, it has given me extra life and enjoyment out of my og switch. If there is another launch edition vuln that allows homebrew, I want in.


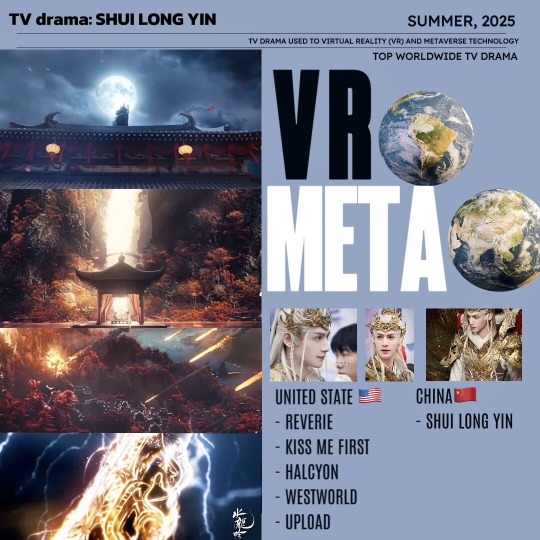

Shui Long Yin VR Metaverse: Technologies and Digital Assets, and the melon about it being released in Summer 2025

What is the metaverse?

The Shui Long Yin metaverse is a parallel world closely resembling the real world, built through the use of digital modelling and technologies such as VR and AR, and designed to exist permanently.

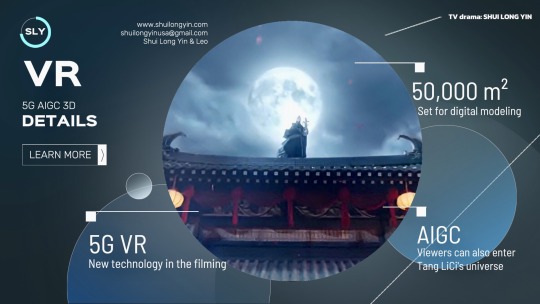

This virtual realm integrates cutting-edge advancements, including blockchain, augmented reality, 5G, big data, artificial intelligence, and 3D engines.

When you acquire a ticket to this world, you gain a digital asset, allowing you to become an immersive citizen within the Shui Long Yin Metaverse.

Every item within Shui Long Yin can be experienced through Augmented Reality using VR devices, providing a seamless blend of the physical and digital realms. These digital assets are permanent, and in some cases, overseas users may trade or transfer their tickets to enter the Shui Long Yin world.

What is VR and AR?

Virtual Reality (VR) is a technology that enables users to interact within a computer-simulated environment.

Augmented Reality (AR), on the other hand, combines elements of VR by merging the real world with computer-generated simulations. A well-known example of AR is the popular game Pokémon Go, where virtual objects are integrated into real-world surroundings.

Tang LiCi's universe

The Shui Long Yin film crew has digitally modeled the core art assets, covering 50,000 square meters.

What technologies does China Mobile & Migu bring to the table?

China Mobile served as the lead organizer for the 2023 World VR Industry Conference. Its subsidiary, Migu, has also been dedicated to advancing projects in this area.

Shui Long Yin is their first priority this summer 2025.

5G+AI: VR world in Metaverse

AIGC AR 3D: Using AI technology in computer graphics, with the best trained AI system in China.

Video ringtones as a globally pioneering service introduced by China Mobile.

Shui Long Yin from Screen to Metaverse to Real Life: Epic Battles and Intricate Plotlines

The United States and China are world pioneers in the development of TV drama integration VR Metaverse. Notably, Shui Long Yin is the sixth TV drama map worldwide to be merged into the Metaverse.

How can we enjoy these technologies?

-- First we need 5G -- According to a report by the Global Mobile Suppliers Association (GSA), by June 2022:

》70 countries around the world had active 5G networks. In those countries, you can fully experience all the technology featured in Shui Long Yin.

Example: South Korea, China, and the United States have been at the forefront. Following them are Japan, United Kingdom, Switzerland, Australia, Taiwan, United Arab Emirates, Saudi Arabia, Viet Nam...

》No worries—even in countries without 5G, you can still watch the drama and enjoy AIGC and 3D technology through the streaming platforms Migu Video and Mango.

•Mango available on iOS, Android, and Harmony OS

•Migu (Mobile HD) soon available on iOS, Android, and Harmony OS

-- Second, we need VR devices --

In countries where VR is already commonplace, such as the United States, owning a VR device is considered entirely normal. Users can select devices that best suit their preferred forms of home entertainment.

European countries have also become fairly familiar with VR technology.

However, it is still relatively new to many parts of Asia. When choosing a VR device, it’s important to select one that is most compatible with your intended activities, whether that’s gaming, watching movies, or working.

It's no surprise to us that the drama Shui Long Yin will be released in summer 2025, but the release will also coincide with offline tourism to Long Yin Town in Chengdu, online VR Metaverse travel, and 3D experiences on the new streaming platforms Migu and Mango. Stay tuned!

TV drama: SHUI LONG YIN Shui Long Yin & Leo All music and images are copyrighted and belong to their respective owners, including the official film crew SHUILONGYIN.

#shui long yin#tang lici#水龙吟#唐俪辞#luo yunxi#luo yun xi#leo luo#罗云熙#cdrama#chinese drama#long yin town#long yin town vr


pulling out a section from this post (a very basic breakdown of generative AI) for easier reading;

AO3 and Generative AI

There are unfortunately some massive misunderstandings in regards to AO3 being included in LLM training datasets. This post was semi-prompted by the ‘Knot in my name’ AO3 tag (for those of you who haven’t heard of it, it’s supposed to be a fandom anti-AI event where AO3 writers help “further pollute” AI with Omegaverse), so let’s take a moment to address AO3 in conjunction with AI. We’ll start with the biggest misconception:

1. AO3 wasn’t used to train generative AI.

Or at least not any more than any other internet website. AO3 was not deliberately scraped to be used as LLM training data.

The AO3 moderators found traces of the Common Crawl web crawler in their servers. The Common Crawl is an open data repository of raw web page data, metadata extracts and text extracts collected from 10+ years of web crawling. Its collective data is measured in petabytes. (As a note, it also only features samples of the available pages on a given domain in its datasets, because its data is freely released under fair use and this is part of how they navigate copyright.) LLM developers use it and datasets derived from it, like Google’s C4, to bulk up the overall amount of pre-training data.

AO3 is big to an individual user, but it’s actually a small website when it comes to the amount of data used to pre-train LLMs. It’s also just a bad candidate for training data. As a comparison example, Wikipedia is often used as high quality training data because it’s a knowledge corpus and its moderators put a lot of work into maintaining a consistent quality across its web pages. AO3 is just a repository for all fanfic -- it doesn’t have any of that quality maintenance nor any knowledge density. Just in terms of practicality, even if people could get around the copyright issues, the sheer amount of work that would go into curating and labeling AO3’s data (or even a part of it) to make it useful for the fine-tuning stages most likely outstrips any potential usage.

Speaking of copyright, AO3 is a terrible candidate for training data just based on that. Even if people (incorrectly) think fanfic doesn’t hold copyright, there are plenty of books and texts that are public domain that can be found in online libraries that make for much better training data (or rather, there is a higher consistency in quality for them that would make them more appealing than fic for people specifically targeting written story data). And for any scrapers who don’t care about legalities or copyright, they’re going to target published works instead. Meta is in fact currently getting sued for including published books from a shadow library in its training data (note, this case is not in regards to any copyrighted material that might’ve been caught in the Common Crawl data, it’s regarding a book repository of published books that was scraped specifically to bring in some higher quality data for the first training stage). In a similar case, there’s an anonymous group suing Microsoft, GitHub, and OpenAI for training their LLMs on open source code.

Getting back to my point, AO3 is just not desirable training data. It’s not big enough to be worth scraping for pre-training data, it’s not curated enough to be considered for high quality data, and its data comes with copyright issues to boot. If LLM creators are saying there was no active pursuit in using AO3 to train generative AI, then there was (99% likelihood) no active pursuit in using AO3 to train generative AI.

AO3 has some preventative measures against being included in future Common Crawl datasets, which may or may not work, but there’s no way to remove any previously scraped data from that data corpus. And as a note for anyone locking their AO3 fics: that might potentially help against future AO3 scrapes, but it is rather moot if you post the same fic in full to other platforms like ffn, twitter, tumblr, etc. that have zero preventative measures against data scraping.

2. A/B/O is not polluting generative AI

…I’m going to be real, I have no idea what people expected to prove by asking AI to write Omegaverse fic. At the very least, people know A/B/O fics are not exclusive to AO3, right? The genre isn’t even exclusive to fandom -- it started in fandom, sure, but it expanded to general erotica years ago. It’s all over social media. It has multiple Wikipedia pages.

More to the point though, omegaverse would only be “polluting” AI if LLMs were spewing omegaverse concepts unprompted or like…associated knots with dicks more than rope or something. But people asking AI to write omegaverse and AI then writing omegaverse for them is just AI giving people exactly what they asked for. And…I hate to point this out, but LLMs writing for a niche the LLM trainers didn’t deliberately train the LLMs on is generally considered to be a good thing to the people who develop LLMs. The capability to fill niches developers didn’t even know existed increases LLMs’ marketability. If I were a betting man, what fandom probably saw as a GOTCHA moment, AI people probably saw as a good sign of LLMs’ future potential.

3. Individuals cannot affect LLM training datasets.

So back to the fandom event, with the stated goal of sabotaging AI scrapers via omegaverse fic.

…It’s not going to do anything.

Let’s add some numbers to this to help put things into perspective:

LLaMA’s 65 billion parameter model was trained on 1.4 trillion tokens. Of that 1.4 trillion tokens, about 67% of the training data was from the Common Crawl (roughly ~3 terabytes of data).

3 terabytes is 3,000,000,000 kilobytes.

That’s 3 billion kilobytes.

According to a news article I saw, there has been ~450k words total published for this campaign (*this was while it was going on, that number has probably changed, but you’re about to see why that still doesn’t matter). So, roughly speaking, ~450k of text is ~1012 KB (I’m going off the document size of a plain text doc for a fic whose word count is ~440k).

So 1,012 out of 3,000,000,000.

Aka 0.000034%.

And that 0.000034% of 3 billion kilobytes is only 2/3s of the data for the first stage of training.

And not to beat a dead horse, but 0.000034% is still grossly overestimating the potential impact of posting A/B/O fic. Remember, only parts of AO3 would get scraped for Common Crawl datasets. Which are also huge! The October 2022 Common Crawl dataset is 380 tebibytes. The April 2021 dataset is 320 tebibytes. The 3 terabytes of Common Crawl data used to train LLaMA was randomly selected data that totaled to less than 1% of one full dataset. Not to mention, LLaMA’s training dataset is currently on the (much) larger side as compared to most LLM training datasets.
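If you want to check the math yourself, here's a quick sketch in Python. It uses the figures quoted above (~1,012 KB of campaign text, ~3 terabytes of Common Crawl data in LLaMA's training set, 380 tebibytes for the October 2022 crawl) and assumes decimal units for terabytes/kilobytes and binary units for tebibytes. The variable names are just for illustration.

```python
KB = 1_000                   # bytes in a kilobyte (decimal)
TB = 1_000_000_000_000       # bytes in a terabyte (decimal)
TIB = 2**40                  # bytes in a tebibyte (binary)

campaign_kb = 1_012          # ~450k words of plain text, per the estimate above
llama_cc_kb = 3 * TB // KB   # ~3 terabytes of Common Crawl data, in kilobytes

# Share of LLaMA's Common Crawl portion that the campaign text would represent
share_percent = campaign_kb / llama_cc_kb * 100
print(f"{llama_cc_kb:,} KB")      # 3,000,000,000 KB
print(f"{share_percent:.6f}%")    # 0.000034%

# Share of one full Common Crawl dataset that LLaMA's 3 TB sample represents
sample_percent = (3 * TB) / (380 * TIB) * 100
print(f"{sample_percent:.2f}% of the October 2022 crawl")
```

And that's the generous version, since it assumes every word of the campaign ended up in the sampled data.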

I also feel the need to point out again that AO3 is trying to prevent any Common Crawl scraping in the future, which would include protection for these new stories (several of which are also locked!).

Omegaverse just isn’t going to do anything to AI. Individual fics are going to do even less. Even if all of AO3 suddenly became omegaverse, it’s just not prominent enough to influence anything in regards to LLMs. You cannot affect training datasets in any meaningful way doing this. And while this might seem really disappointing, this is actually a good thing.

Remember that anything an individual can do to LLMs, the person you hate most can do the same. If it were possible for fandom to corrupt AI with omegaverse, fascists, bigots, and just straight up internet trolls could pollute it with hate speech and worse. AI already carries a lot of biases even while developers are actively trying to flatten that out, it’s good that organized groups can’t corrupt that deliberately.

#generative ai#pulling this out wasnt really prompted by anything specific#so much as heard some repeated misconceptions and just#sighs#nope#incorrect#u got it wrong#sorry#unfortunately for me: no consistent tag to block#sigh#ao3


Future of LLMs (or, "AI", as it is improperly called)

Posted a thread on bluesky and wanted to share it and expand on it here. I'm tangentially connected to the industry as someone who has worked in game dev, but I know people who work at more enterprise focused companies like Microsoft, Oracle, etc. I'm a developer who is highly AI-critical, but I'm also aware of where it stands in the tech world and thus I think I can share my perspective. I am by no means an expert, mind you, so take it all with a grain of salt, but I think that since so many creatives and artists are on this platform, it would be of interest here. Or maybe I'm just rambling, idk.

LLM art models ("AI art") will eventually crash and burn. Even if they win their legal battles (which if they do win, it will only be at great cost), AI art is a bad word almost universally. Even more than that, the business model hemorrhages money. Every time someone generates art, the company loses money -- it's a very high energy process, and there's simply no way to monetize it without charging like a thousand dollars per generation. It's environmentally awful, but it's also expensive, and the sheer cost will mean they won't last without somehow bringing energy costs down. Maybe this could be doable if they weren't also being sued from every angle, but they just don't have infinite money.

Companies that are investing in "ai research" to find a use for LLMs in their company will, after years of research, come up with nothing. They will blame their devs and lay them off. The devs, worth noting, aren't necessarily to blame. I know an AI developer at Meta (LLM, really, because again AI is not real), and the morale of that team is at an all time low. Their entire job is explaining patiently to product managers that no, what you're asking for isn't possible, nothing you want me to make can exist, we do not need to pivot to LLMs. The product managers tell them to try anyway. They write an LLM. It is unable to do what was asked for. "Hm let's try again" the product manager says. This cannot go on forever, not even for Meta. Worst part is, the dev who was more or less trying to fight against this will get the blame, while the product manager moves on to the next thing. Think of how NFTs suddenly disappeared, but then every company moved to AI. It will be annoying and people will lose jobs, but not the people responsible.

ChatGPT will probably go away as something public facing as the OpenAI foundation continues to be mismanaged. However, while people using ChatGPT to, like, write scripts and stuff will become less frequent as the public facing ChatGPT becomes unmaintainable, internal ChatGPT-based LLMs will continue to exist.

This is the only sort of LLM that actually has any real practical use case. Basically, companies like Oracle, Microsoft, Meta etc license an AI company's model, usually ChatGPT. They are given more or less a version of ChatGPT they can then customize and train on their own internal data. These internal LLMs are then used by developers and others to assist with work. Not in the "write this for me" kind of way but in the "Find me this data" kind of way, or asking it how a piece of code works. "How does X software that Oracle makes do Y function, take me to that function" and things like that. Also asking it to write SQL queries and RegExes. Everyone I talk to who uses these internal LLMs talks about how that's like, the biggest thing they ask it to do, lol.

This still has some ethical problems. It's bad for the environment, but it's not being done in some datacenter in god knows where and vampiring off of a power grid -- it's running on the existing servers of these companies. Their power costs will go up, contributing to global warming, but it's profitable and actually useful, so companies won't care and only do token things like carbon credits or whatever. Still, it will be less of an impact than now, so there's something. As for training on internal data, I personally don't find this unethical, not in the same way as training off of external data. Training a language model to understand a C++ project and then asking it for help with that project is not quite the same thing as taking a bot that has scanned all of GitHub against the consent of developers and asking it to write an entire project for me, you know? It will still sometimes hallucinate and give bad results, but nowhere near as badly as the massive, public bots do since it's so specialized.

The only one I'm actually unsure and worried about is voice acting models, aka AI voices. It gets far less pushback than AI art (it should get more, but it's not as caustic to a brand as AI art is. I have seen people willing to overlook an AI voice in a youtube video, but will have negative feelings on AI art), as the public is less educated on voice acting as a profession. This has all the same ethical problems that AI art has, but I do not know if it has the same legal problems. It seems legally unclear who owns a voice when they voice act for a company; obviously, if a third party trains on your voice from a product you worked on, that company can sue them, but can you directly? If you own the work, then yes, you definitely can, but if you did a role for Disney and Disney then trains off of that... this is morally horrible, but legally, without stricter laws and contracts, they can get away with it.

In short, AI art does not make money outside of venture capital so it will not last forever. ChatGPT's main income source is selling specialized LLMs to companies, so the public facing ChatGPT is mostly like, a showcase product. As OpenAI the company continues to deathspiral, I see the company shutting down, and new companies (with some of the same people) popping up and pivoting to exclusively catering to enterprises as an enterprise solution. LLM models will become like, idk, SQL servers or whatever. Something the general public doesn't interact with directly but is everywhere in the industry. This will still have environmental implications, but LLMs are actually good at this, and the data theft problem disappears in most cases.

Again, this is just my general feeling, based on things I've heard from people in enterprise software or working on LLMs (often not because they signed up for it, but because the company is pivoting to it so i guess I write shitty LLMs now). I think artists will eventually be safe from AI but only after immense damages, I think writers will be similarly safe, but I'm worried for voice acting.


Have you considered going to Pillowfort?

Long answer down below:

I have been to the Sheezys, the Buzzlys, the Mastodons, etc. These platforms all saw a surge of new activity whenever big sites did something unpopular. But they always quickly died because of mismanagement or users going back to their old haunts due to lack of activity or digital Stockholm syndrome.

From what I have personally seen, a website that was purely created as an alternative to another has little chance of taking off. If it's going to work, it needs to be developed naturally and must fill a different niche. I mean look at Zuckerberg's Threads; died as fast as it blew up. Will Pillowfort be any different?

The only alternative that I found with potential was the fediverse (mastodon) because of its decentralized nature. So people could make their own rules. If Jack Dorsey's new dating app Bluesky gets integrated into this system, it might have a chance. Although decentralized communities will be faced with unique challenges of their own (egos being one of the biggest, I think).

Trying to build a new platform right now might be a waste of time anyway because AI is going to completely reshape the Internet as we know it. This new technology is going to send shockwaves across the world akin to those caused by the invention of the Internet itself over 40 years ago. I'm sure most people here are aware of the damage it is doing to artists and writers. You have also likely seen the other insidious applications. Social media is being bombarded with a flood of fake war footage/other AI-generated disinformation. If you posted a video of your own voice online, criminals can feed it into an AI to replicate it and contact your bank in an attempt to get your financial info. You can make anyone who has recorded themselves say and do whatever you want. Children are using AI to make revenge porn of their classmates as a new form of bullying. Politicians are saying things they never said in their lives. Google searches are being poisoned by people who use AI to data scrape news sites to generate nonsensical articles and clickbait. Soon video evidence will no longer be used in court because we won't be able to tell real footage from deep fakes.

50% of the Internet's traffic is now bots. In some cases, websites and forums have been reduced to nothing more than different chatbots talking to each other, with no humans in sight.

I don't think we have to count on government intervention to solve this problem. The Western world could ban all AI tomorrow and other countries that are under no obligation to follow our laws or just don't care would continue to use it to poison the Internet. Pandora's box is open, and there's no closing it now.

Yet I cannot stand an Internet where I post a drawing or comic and the only interactions I get are from bots that are so convincing that I won't be able to tell the difference between them and real people anymore. Where all that remains of art platforms are waterfalls of AI sludge, and my work is drowned out by a virtually infinite amount of pictures that are generated in a fraction of a second, while I had to spend 40+ hours for a visually inferior result.

If that is what I can expect to look forward to, I might as well delete what remains of my Internet presence today. I don't know what to do and I don't know where to go. This is a depressing post. I wish, after the countless hours I spent looking into this problem, I would be able to offer a solution.

All I know for sure is that artists should not remain on "Art/Creative" platforms that deliberately steal their work to feed it to their own AI or sell their data to companies that will. I left Artstation and DeviantArt for those reasons and I want to do the same with Tumblr. It's one thing when social media like Xitter, Tik Tok or Instagram do it, because I expect nothing less from the filth that runs those. But creative platforms have the obligation to, if not protect, at least not sell out their users.

But good luck convincing the entire collective of Tumblr, Artstation, and DeviantArt to leave. Especially when there is no good alternative. The Internet has never been more centralized into a handful of platforms, yet also never been more lonely and scattered. I miss the sense of community we artists used to have.

The truth is that there is nowhere left to run. Because everywhere is the same. You can try using Glaze or Nightshade to protect your work. But I don't know if I trust either of them. I don't trust anything that offers solutions that are 'too good to be true'. And even if you take those preemptive measures, what is to stop the tech bros from updating their scrapers to work around Glaze and steal your work anyway? I will admit I don't entirely understand how the technology works so I don't know if this is a legitimate concern. But I'm just wondering if this is going to become some kind of digital arms race between tech bros and artists? Because that is a battle where the artists lose.

29 notes


Text

Realization of a cold atom gyroscope in space

High-precision space-based gyroscopes are important in space science research and space engineering applications. In fundamental physics research, they can be used to test general relativity effects such as frame dragging. These tests probe the limits of validity of general relativity and search for potential new physical theories. Several satellite projects have been implemented, including Gravity Probe B (GP-B) and the Laser Relativity Satellite (LARES), which tested the frame-dragging effect using electrostatic gyroscopes and satellite orbit data, with accuracies of 19% and 3%, respectively. No violation of this general relativity effect was observed. Atom interferometers (AIs) use matter waves to measure inertial quantities. In space, thanks to the quiet satellite environment and long interference time, AIs are expected to achieve much higher acceleration and rotation measurement accuracies than those on the ground, making them important candidates for high-precision space-based inertial sensors. Europe and the United States have proposed related projects and have already conducted preliminary AI experiments on microgravity platforms such as drop towers, sounding rockets, parabolic-flight aircraft, and the International Space Station.

The research team led by Mingsheng Zhan from the Innovation Academy for Precision Measurement Science and Technology of the Chinese Academy of Sciences (APM) developed a payload named China Space Station Atom Interferometer (CSSAI) [npj Microgravity 2023, 9 (58): 1-10], which was launched in November 2022 and installed inside the High Microgravity Level Research Rack in the China Space Station (CSS) to carry out scientific experiments. This payload enables atomic interference experiments of 85Rb and 87Rb and features an integrated design. The overall size of the payload is only 46 cm × 33 cm × 26 cm, with a maximum power consumption of approximately 75 W.

Recently, Zhan’s team used CSSAI to realize cold atom gyroscope measurements in space and systematically analyzed the instrument's performance. Based on the 87Rb atomic shearing interference fringes obtained in orbit, the team derived the optimal shearing angle relationship that eliminates rotational measurement errors and proposed methods to calibrate these angles, realizing precise in-orbit rotation and acceleration measurements. The uncertainty of the rotational measurement is better than 3.0×10⁻⁵ rad/s, and the resolution of the acceleration measurement is better than 1.1×10⁻⁶ m/s². The team also identified various errors that affect rotation measurements in space. This research provides a basis for the future development of high-precision space quantum inertial sensors. The work was published in the 4th issue of National Science Review in 2025, under the title "Realization of a cold atom gyroscope in space". Professors Xi Chen, Jin Wang, and Mingsheng Zhan are the co-corresponding authors.

The research team analyzed and solved the dephasing problem of the cold atom shearing interference fringes. In general, the period and phase of shearing fringes are affected by the initial position and velocity distribution of the cold atom cloud, which introduces errors in rotation and acceleration measurements. Through detailed analysis of the phase of the shearing fringes, the team found a magic shearing angle relationship that eliminates the dephasing caused by the parameters of the atom cloud. They also proposed a scheme to calibrate the shearing angle precisely in orbit. The team then carried out precision in-orbit rotation and acceleration measurements based on the shearing interference fringes. Using fringes with an interference time of 75 ms, they achieved a rotation measurement resolution of 50 μrad/s and an acceleration measurement resolution of 1.0 μm/s² for a single experiment. A long-term rotation measurement resolution of 17 μrad/s was achieved through data integration. Finally, the team studied the error terms of the in-orbit atom interference rotation measurement, analyzing systematic effects from the imaging magnification factor, shearing angle, interference time sequence, laser wavelength, atom cloud parameters, magnetic field distribution, and other sources. They found that the shearing angle error is one of the main factors limiting the measurement accuracy of future high-precision cold atom gyroscopes in space. The rotation measured by CSSAI was compared with that measured by the gyroscope of the CSS, and the two values are in good agreement, further demonstrating the reliability of the rotation measurement.
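To give a feel for the scale of the numbers quoted above, here is a rough back-of-the-envelope sketch in Python. It is not the team's shearing-fringe analysis. It assumes the textbook light-pulse interferometer relation for the acceleration phase, φ = k_eff·a·T², a two-photon effective wave vector k_eff = 4π/λ with λ taken as the Rb D2 wavelength (about 780.241 nm), and uncorrelated noise that averages down as 1/√N. The function names and those assumptions are illustrative and do not come from the paper.

```python
import math

# Assumption: Rb D2 transition wavelength, not stated in the text above.
RB_D2_WAVELENGTH_M = 780.241e-9


def effective_wave_vector(wavelength_m: float) -> float:
    """Effective wave vector (rad/m) for a two-photon transition
    driven by counter-propagating beams: k_eff = 4*pi / wavelength."""
    return 4.0 * math.pi / wavelength_m


def acceleration_phase(accel_m_s2: float, interference_time_s: float,
                       wavelength_m: float = RB_D2_WAVELENGTH_M) -> float:
    """Textbook three-pulse interferometer phase: k_eff * a * T**2 (rad)."""
    return effective_wave_vector(wavelength_m) * accel_m_s2 * interference_time_s ** 2


def shots_to_reach(single_shot_resolution: float, target_resolution: float) -> float:
    """Number of averaged shots needed if noise falls as 1/sqrt(N)."""
    return (single_shot_resolution / target_resolution) ** 2


# Values quoted in the text: T = 75 ms, 1.0 um/s^2, 50 urad/s, 17 urad/s.
phase_rad = acceleration_phase(1.0e-6, 0.075)
equivalent_shots = shots_to_reach(50e-6, 17e-6)

print(f"Phase for 1.0 um/s^2 at T = 75 ms: {phase_rad:.4f} rad")
print(f"Shots implied by 50 -> 17 urad/s under white noise: {equivalent_shots:.1f}")
```

Under these assumptions an acceleration of 1.0 μm/s² corresponds to a phase shift of roughly 0.09 rad. The step from 50 μrad/s to 17 μrad/s would correspond to averaging on the order of nine independent shots, though the real integration may not follow ideal white-noise scaling.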

This work not only realized the world's first cold atom gyroscope in space but also laid foundations for future space quantum inertial sensors in engineering design, inertial quantity extraction, and error evaluation.

UPPER IMAGE: (Left) Rotation and acceleration measurements using the CSSAI in-orbit and (Right) Rotation comparison between the CSSAI and the classical gyroscopes of the CSS. Credit ©Science China Press

LOWER IMAGE: Atom interferometer and data analysis with it. (a) The China Space Station Atom interferometer. (b) Analysis of the dephasing of shearing fringes. (c) Calibration of the shearing angle. Credit ©Science China Press

6 notes


Text

Unlock the other 99% of your data - now ready for AI

New Post has been published on https://thedigitalinsider.com/unlock-the-other-99-of-your-data-now-ready-for-ai/


For decades, companies of all sizes have recognized that the data available to them holds significant value, both for improving user and customer experiences and for developing strategic plans based on empirical evidence.

As AI becomes increasingly accessible and practical for real-world business applications, the potential value of available data has grown exponentially. Successfully adopting AI requires significant effort in data collection, curation, and preprocessing. Moreover, important aspects such as data governance, privacy, anonymization, regulatory compliance, and security must be addressed carefully from the outset.

In a conversation with Henrique Lemes, Americas Data Platform Leader at IBM, we explored the challenges enterprises face in implementing practical AI in a range of use cases. We began by examining the nature of data itself, its various types, and its role in enabling effective AI-powered applications.

Henrique highlighted that referring to all enterprise information simply as ‘data’ understates its complexity. The modern enterprise navigates a fragmented landscape of diverse data types and inconsistent quality, particularly between structured and unstructured sources.

In simple terms, structured data refers to information that is organized in a standardized and easily searchable format, one that enables efficient processing and analysis by software systems.

Unstructured data is information that does not follow a predefined format or organizational model, making it more complex to process and analyze. Unlike structured data, it includes diverse formats like emails, social media posts, videos, images, documents, and audio files. While it lacks the clear organization of structured data, unstructured data holds valuable insights that, when effectively managed through advanced analytics and AI, can drive innovation and inform strategic business decisions.

Henrique stated, “Currently, less than 1% of enterprise data is utilized by generative AI, and over 90% of that data is unstructured, which directly affects trust and quality.”

Trust in data is important. Decision-makers in an organization need to be confident that the information at their fingertips is complete, reliable, and properly obtained. But evidence suggests that less than half of the data available to businesses is used for AI, with unstructured data often ignored or sidelined because of the complexity of processing it and examining it for compliance, especially at scale.

To open the way to better decisions that are based on a fuller set of empirical data, the trickle of easily consumed information needs to be turned into a firehose. Automated ingestion is the answer in this respect, Henrique said, but the governance rules and data policies still must be applied – to unstructured and structured data alike.

Henrique set out the three processes that let enterprises leverage the inherent value of their data. “Firstly, ingestion at scale. It’s important to automate this process. Second, curation and data governance. And the third [is when] you make this available for generative AI. We achieve over 40% of ROI over any conventional RAG use-case.”
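The three steps Henrique describes can be sketched as a toy Python pipeline. This is a minimal illustration under stated assumptions, not IBM's tooling or method: the `Document` record, the `ingest`, `govern`, and `build_index` functions, the source allow-list, and the email-redaction rule are all hypothetical, and a real deployment would replace the keyword index with whatever retrieval layer feeds the generative model.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately simple pattern for spotting email addresses.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Document:
    doc_id: str
    text: str
    source: str
    tags: set = field(default_factory=set)


def ingest(raw_items):
    """Step 1 (ingestion): normalize raw items into uniform Document records."""
    return [
        Document(doc_id=str(i), text=item["body"].strip(), source=item["source"])
        for i, item in enumerate(raw_items)
    ]


def govern(docs, allowed_sources):
    """Step 2 (curation and governance): keep only approved sources
    and redact email addresses before anything reaches a model."""
    curated = []
    for doc in docs:
        if doc.source not in allowed_sources:
            continue  # unreviewed source: exclude from the AI-ready set
        redacted = EMAIL_RE.sub("[REDACTED_EMAIL]", doc.text)
        tags = set(doc.tags)
        if redacted != doc.text:
            tags.add("pii_redacted")
        curated.append(Document(doc.doc_id, redacted, doc.source, tags))
    return curated


def build_index(docs):
    """Step 3 (make available): a toy inverted index standing in for
    the retrieval layer a generative AI application would query."""
    index = {}
    for doc in docs:
        for token in set(re.findall(r"[a-z0-9]+", doc.text.lower())):
            index.setdefault(token, set()).add(doc.doc_id)
    return index


raw = [
    {"source": "crm", "body": "Contact jane@example.com about the renewal."},
    {"source": "unknown_share", "body": "Unreviewed meeting transcript."},
]
curated = govern(ingest(raw), allowed_sources={"crm"})
index = build_index(curated)
print(curated[0].text)
print(sorted(index))
```

The point of the ordering is that governance sits between ingestion and retrieval, so the same rules apply to every document, structured or unstructured, before it can influence a generated answer.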

IBM provides a unified strategy, rooted in a deep understanding of the enterprise’s AI journey, combined with advanced software solutions and domain expertise. This enables organizations to efficiently and securely transform both structured and unstructured data into AI-ready assets, all within the boundaries of existing governance and compliance frameworks.

“We bring together the people, processes, and tools. It’s not inherently simple, but we simplify it by aligning all the essential resources,” he said.

As businesses scale and transform, the diversity and volume of their data increase. To keep up, the AI data ingestion process must be both scalable and flexible.

“[Companies] encounter difficulties when scaling because their AI solutions were initially built for specific tasks. When they attempt to broaden their scope, they often aren’t ready, the data pipelines grow more complex, and managing unstructured data becomes essential. This drives an increased demand for effective data governance,” he said.

IBM’s approach is to thoroughly understand each client’s AI journey, creating a clear roadmap to achieve ROI through effective AI implementation. “We prioritize data accuracy, whether structured or unstructured, along with data ingestion, lineage, governance, compliance with industry-specific regulations, and the necessary observability. These capabilities enable our clients to scale across multiple use cases and fully capitalize on the value of their data,” Henrique said.

Like anything worthwhile in technology implementation, it takes time to put the right processes in place, gravitate to the right tools, and have the necessary vision of how any data solution might need to evolve.

IBM offers enterprises a range of options and tooling to enable AI workloads in even the most regulated industries, at any scale. With international banks, finance houses, and global multinationals among its client roster, there are few substitutes for Big Blue in this context.

To find out more about enabling data pipelines for AI that drive business and offer fast, significant ROI, head over to this page.

#ai#AI-powered#Americas#Analysis#Analytics#applications#approach#assets#audio#banks#Blue#Business#business applications#Companies#complexity#compliance#customer experiences#data#data collection#Data Governance#data ingestion#data pipelines#data platform#decision-makers#diversity#documents#emails#enterprise#Enterprises#finance

2 notes
