#AI web scraping

Explore tagged Tumblr posts

Text

Great things to do with web scraping

Web scraping can transform your business in a myriad of ways. Utilize advanced web scraping solutions to streamline your data collection process. Read more https://www.scrape.works/infographics/WebScraping/great-things-to-do-with-web-scraping

0 notes

Text

The man and his flower

#pokemon fanart#pokemon#my art#az pokemon#trainer az#pokemon az#eternal flower floette#az floette#pokemon x and y#pokemon xy#i recently found out about art shield and rgbwatermark which can help you with adding watermarks and such in preventing ai scraping off.#you can kinda see some circle patterns if you look closely#worth checking them out esp if you are unable to use/have not yet access (web)glaze!

223 notes

Text

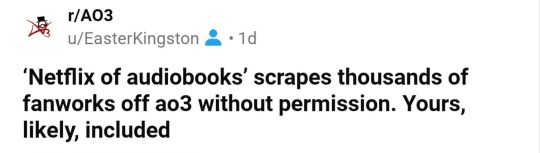

Ao3 was scraped for a GenAI dataset in the last few days (April 2025). If you have public works, they are likely a part of the dataset.

I’ve kept all of my Hidden Love fics open, trying to keep accessibility easy for out-of-country readers, so this makes me sad.

Here is a Reddit thread with additional information.

I’m tired.

4 notes

Text

ARTOBER DAY 17: A Warning to AI Thieves & Greedy Jerks

A fun goofy little pic with a warning for anybody (including this dumbass site) thinking of doing the scraping thing...

This Creator likes poisoning his data.

ռʊʀɢʟɛ is most welcome in these halls, servants of the Corpse God.

Also, say hi to my little Chaos Omni-Imp! If you're excited for my upcoming Space Marine Fancomic...

... then you'll probably get to see 'im in the future as a little easter egg~

#my ocs#fan comic#fanart#oc#oc art#small artist#artists on tumblr#ai scraping#ai scam#artober#day 17#web comic#nurgle#plaguefather#Chaos Demons#Imp#Chaos Horror#Chaos Fury#traitor astartes#Chaos Marines#heretic astartes#Havoc Brothers#warhammer 40k#warhammer 40000#warhammer art#warhammer fanart#one page rules#Grimdark Future

4 notes

Text

I think that if a person knows that something was made using AI trained on unethically sourced data, and still uses it/likes it/supports it/defends it,

then said person should stop "being mad" when their data is used to train AI without consent.

#nitunio.txt#please dont half-ass it in terms of not supporting this stuff#if you like and willingly use writing AI that scrapes web without consent#then turn around and say 'wahh AI bad' when it concerns digital art. you're just a hypocrite#same goes for photos and music and other creative work#if you come across any 'machine learning AI generation' website immediately go to their FAQ or About sections#just see for yourself if they provide any sources for the data they've used and if it was consensual and only after that#ask yourself if you should be using it or just make something yourself#hell you can even ask somebody or pay somebody to do something you can't do. thats the joy of community#and even then there are many resources that were already made to be used for free with or without credit#i ramble a lot about things like these bc i cant just wrap my head around it#i just need all of these scraped datasets to burn down and self-delete

2 notes

Text

What is data mining? How is it different from data scraping?

Data mining converts data into accurate insights. Gather knowledge from unstructured data using advanced data mining techniques. Read more https://scrape.works/blog/what-is-data-mining-how-is-it-different-from-data-scraping/

0 notes

Text

done on my mobile app! There may be uses for A.I. (I remain unconvinced): this shit is not it.

They are already selling data to midjourney, and it's very likely your work is already being used to train their models because you have to OPT OUT of this, not opt in. Very scummy of them to roll this out unannounced.

98K notes

Text

🚚 AI-Scraped Insights for #QCommerce Delivery Time Optimization in #Singapore

In the hyper-competitive world of quick commerce, speed is everything. But what if your 10-minute delivery promise could be backed by real-time, #AIpoweredInsights?

Actowiz Solutions helps Q-commerce platforms in Singapore unlock:

✅ Zone-wise delivery performance

✅ Bottleneck identification across SKUs & hubs

✅ Competitor delivery benchmarks

✅ Predictive insights to reduce delivery lag

By combining AI models with live #DeliveryDataScraping, Q-commerce brands can ensure consistency, outperform rivals, and deliver delight—every time.

📊 Want to optimize your last-mile execution?

🔗 Read the full case study:

1 note

Text

#automated data extraction#web scraping services#analyze competitor pricing strategies#analyze property demand#AI for advanced data insights

0 notes

Text

Unlocking the Web: How to Use an AI Agent for Web Scraping Effectively

In this age of big data, information has become one of the most powerful assets. However, accessing and organizing this data, particularly from the web, is no easy feat. This is where AI agents step in. By automating the extraction of valuable data from web pages, AI agents are changing the way businesses, developers, researchers, and marketers operate.

In this blog, we’ll explore how you can use an AI agent for web scraping, what benefits it brings, the technologies behind it, and how you can build or invest in the best AI agent for web scraping for your unique needs. We’ll also look at how Custom AI Agent Development is reshaping how companies access data at scale.

What is Web Scraping?

Web scraping is a method of obtaining data from websites. It is used for a range of purposes, including price monitoring, lead generation, market research, sentiment analysis, and academic research. In the past, web scraping was performed with scripts written in languages such as Python (with libraries like BeautifulSoup or Selenium); however, such scripts require constant maintenance and are often limited in scale and adaptability.

What is an AI Agent?

AI agents are intelligent software systems capable of making decisions and executing tasks on your behalf. In web scraping, AI agents use machine learning, NLP (Natural Language Processing), and automation to navigate websites intelligently, extract structured data, and adjust to changes in website layouts and algorithms.

In contrast to crawlers or basic bots, an AI agent doesn’t simply scrape blindly; it understands the context of its actions, adapts its behavior, and improves over time.

Why Use an AI Agent for Web Scraping?

1. Adaptability

Websites change regularly. Traditional scrapers break when the page structure changes. AI agents use pattern recognition and contextual awareness to adjust as they go.

2. Scalability

AI agents can handle hundreds or even thousands of pages simultaneously, thanks to automated decision-making and cloud-based deployment.

3. Data Accuracy

AI improves the accuracy of scraped data by filtering noise, recognizing human language, and validating the results.

4. Reduced Maintenance

Because AI agents can learn and adapt, they reduce the need for continuous manual updates to scraping scripts.

Best AI Agent for Web Scraping: What to Look For

If you’re searching for the best AI agent for web scraping, here are the most important aspects to look out for:

NLP Capabilities: Reading and interpreting unstructured text.

Visual Recognition: Interpreting web page layouts and dynamic content.

Automation Tools: Simulating user interactions (clicks, scrolls, etc.).

Scheduling and Monitoring: Built-in tools that manage and automate scraping processes.

API Integration: Sending scraped data directly to your database or application.

Error Handling and Retries: Intelligent fallback mechanisms that help recover from broken sessions or denied access.
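The "Error Handling and Retries" item above can be sketched as a retry loop with exponential backoff. This is a minimal illustration, not any particular product's mechanism: `FetchError`, `fetch_with_retries`, and the `fetch` callable are hypothetical names introduced here, and the delays are arbitrary defaults.

```python
import random
import time


class FetchError(Exception):
    """Hypothetical error a fetch function raises on a transient failure."""


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on FetchError with exponential backoff.

    `fetch` is any callable supplied by the caller; `sleep` is injectable
    so the function can be exercised without real waiting.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except FetchError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Wait 1x, 2x, 4x ... the base delay, plus a little jitter
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 10))
```

Passing the fetch function in as a parameter keeps the retry policy separate from whatever HTTP client or browser driver does the actual request.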

Custom AI Agent Development: Tailored to Your Needs

Though off-the-shelf AI agents can meet essential needs, Custom AI Agent Development is vital for businesses which require:

Custom-designed logic or workflows for data collection

Compliance with specific data policies or legal requirements

Integration with dashboards or internal tools

Competitive advantage via more efficient data gathering

At Xcelore, we specialize in AI Agent Development tailored for web scraping. Whether you’re monitoring market trends, aggregating news, or extracting leads, we build solutions that scale with your business needs.

How to Build Your Own AI Agent for Web Scraping

If you’re tech-savvy and want to create your own AI agent, here’s a basic outline of the process:

Step 1: Define Your Objective

Be clear about exactly what information you need and from which sites. This is the basis for your design and toolset.

Step 2: Select Your Tools

Popular frameworks and tools include:

Python with libraries such as Scrapy, BeautifulSoup, and Selenium

Playwright or Puppeteer to automate the browser

OpenAI and Hugging Face APIs for NLP and decision-making

Cloud platforms such as AWS, Azure, or Google Cloud to scale capacity

Step 3: Train Your Agent

Provide your agent with examples of structured versus unstructured information. Machine learning can help it identify patterns and extract pertinent information.

Step 4: Deploy and Monitor

Run your AI agent on a set schedule. Use alerting, logging, and dashboards to check the agent’s performance and verify data accuracy.
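One way to picture the monitoring step is a small check that runs after each scheduled scrape and logs a warning when too many records come back incomplete. The sketch below is only an assumption about what such a check might look like; `summarize_run`, the logger name, and the 10% threshold are all hypothetical.

```python
import logging

logger = logging.getLogger("scrape_monitor")


def summarize_run(records, required_fields, max_missing_ratio=0.1):
    """Return (missing_ratio, healthy) for one scraping run.

    A record counts as incomplete when any required field is absent or empty.
    """
    if not records:
        logger.warning("run produced no records")
        return 1.0, False
    incomplete = sum(
        1 for record in records
        if any(not record.get(field) for field in required_fields)
    )
    ratio = incomplete / len(records)
    healthy = ratio <= max_missing_ratio
    if not healthy:
        logger.warning("%.0f%% of records are incomplete", ratio * 100)
    return ratio, healthy
```

A sudden jump in the incomplete ratio is often the first visible sign that a target site changed its layout, so it is a reasonable signal to route to alerts or a dashboard.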

Step 5: Optimize and Iterate

Your AI agent should keep evolving. Use feedback loops and machine learning retraining to improve its reliability and accuracy over time.

Compliance and Ethics

Web scraping raises ethical and legal issues. Be sure that your AI agent:

Respects robots.txt rules

Avoids scraping copyrighted or personal content

Meets international and local regulations on data privacy
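As a rough illustration of the robots.txt point, Python's standard library includes `urllib.robotparser`. The sketch below parses an inlined, made-up rule set so it runs offline; the `example-agent` user agent, the helper names, and the URLs are placeholders, not anything from a real site.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules, inlined so the sketch runs without network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_parser(robots_text):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed(parser, url, user_agent="example-agent"):
    """Return True if the rules permit `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
```

Against a live site, the same parser can load the file itself via `set_url(...)` followed by `read()`, and the agent would call `allowed(...)` before every request.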

At Xcelore, we integrate compliance into each AI agent development project we manage.

Real-World Use Cases

E-commerce: Price tracking across competitors’ websites

Finance: Collecting news about stocks and financial statements

Recruitment: Extracting job postings and resumes

Travel: Monitoring hotel and flight prices

Academic Research: Large-scale data collection for analysis

In all of these situations, an intelligent and robust AI agent can turn hours of manual data collection into a more efficient and scalable process.

Why Choose Xcelore for AI Agent Development?

At Xcelore, we bring together deep expertise in automation, data science, and software engineering to deliver powerful, scalable AI Agent Development Services. Whether you need a quick deployment or a fully custom AI agent development project tailored to your business goals, we’ve got you covered.

We can help:

Find scraping opportunities and devise strategies

Create and design AI agents that adapt to your demands

Maintain compliance and ensure data integrity

Transform unstructured web data into valuable insights

Final Thoughts

Using an AI agent for web scraping isn’t just a technical choice; it’s now a strategic advantage. From better insights to more efficient automation, the advantages are immense. Whether you’re looking to build your own AI agent or invest in the best AI agent for web scraping, the key is a well-planned strategy and skilled execution.

Are you ready to unlock the internet by leveraging intelligent automation?

Contact Xcelore today to get started with your custom AI agent development journey.

#ai agent development services#AI Agent Development#AI agent for web scraping#build your own AI agent

0 notes

Text

Latest updates from OP:

SO HERE IS THE WHOLE STORY (SO FAR).

I am on my knees begging you to reblog this post and to stop reblogging the original ones I sent out yesterday. This is the complete account with all the most recent info; the other one is just sending people down senselessly panicked avenues that no longer lead anywhere.

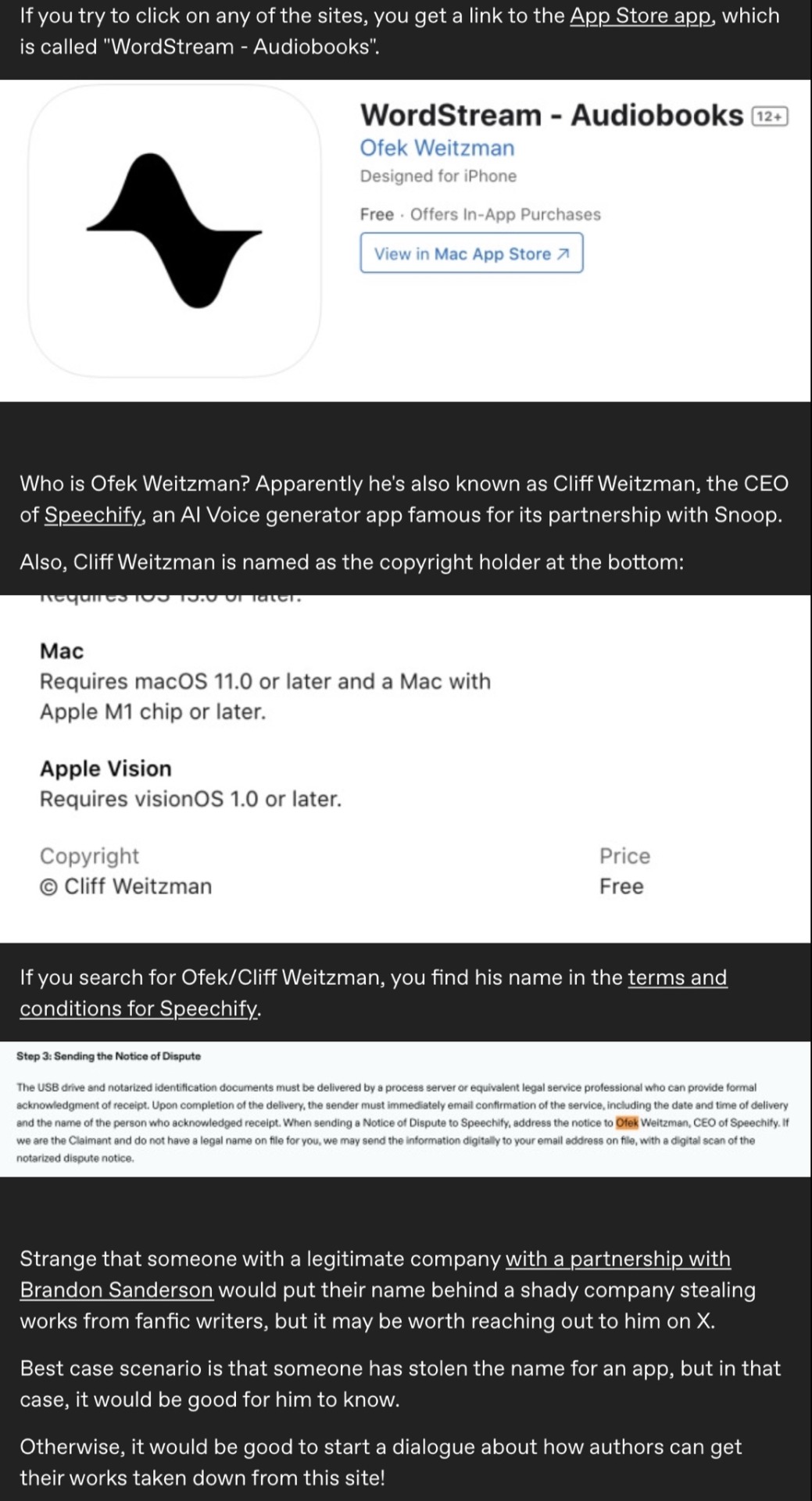

IN SHORT

Cliff Weitzman, CEO of Speechify and (aspiring?) voice actor, used AI to scrape thousands of popular, finished works off AO3 to list them on his own for-profit website and in his attached app. He did this without getting any kind of permission from the authors of said work or informing AO3. Obviously.

When fandom at large was made aware of his theft and started pushing back, Weitzman issued a non-apology on the original social media posts—using

his dyslexia;

his intent to implement a tip-system for the plagiarized authors; and

a sudden willingness to take down the work of every author who saw my original social media posts and emailed him individually with a ‘valid’ claim,

as reasons we should allow him to continue monetizing fanwork for his own financial gain.

When we less-than-kindly refused, he took down his ‘apologies’ as well as his website (allegedly—it’s possible that our complaints to his web host, the deluge of emails he received or the unanticipated traffic brought it down, since there wasn’t any sort of official statement made about it), and when it came back up several hours later, all of the work formerly listed in the fan fiction category was no longer there.

THE TAKEAWAYS

1. Cliff Weitzman (aka Ofek Weitzman) is a scumbag with no qualms about taking fanwork without permission, feeding it to AI and monetizing it for his own financial gain;

2. Fandom can really get things done when it wants to, and

3. Our fanworks appear to be hidden, but they’re NOT DELETED from Weitzman’s servers, and independently published, original works are still listed without the authors' permission. We need to hold this man responsible for his theft, keep an eye on both his current and future endeavors, and take action immediately when he crosses the line again.

THE TIMELINE, THE DETAILS, THE SCREENSHOTS (behind the cut)

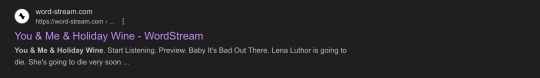

Sunday night, December 22nd 2024, I noticed an influx in visitors to my fic You & Me & Holiday Wine. When I searched the title online, hoping to find out where they came from, a new listing popped up (third one down, no less):

This listing is still up today, by the way, though now when you follow the link to word-stream, it just brings you to the main site. (Also, to be clear, this was not the cause for the influx of traffic to my fic; word-stream did not link back to the original work anywhere.)

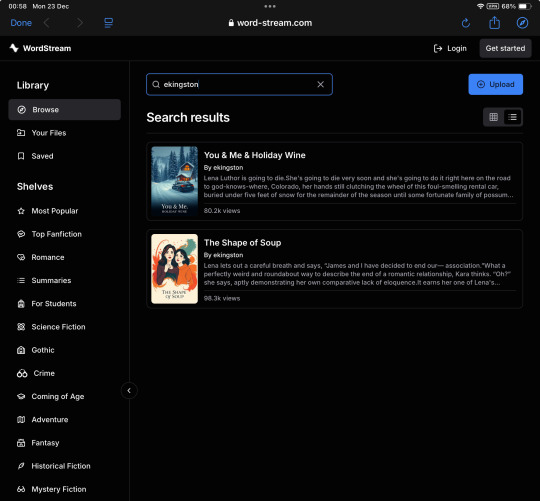

I followed the link to word-stream, where to my horror Y&M&HW was listed in its entirety—though, beyond the first half of the first chapter, behind a paywall—along with a link promising to take me—through an app downloadable on the Apple Store—to an AI-narrated audiobook version. When I searched word-stream itself for my ao3 handle I found both of my multi-chapter fics were listed this way:

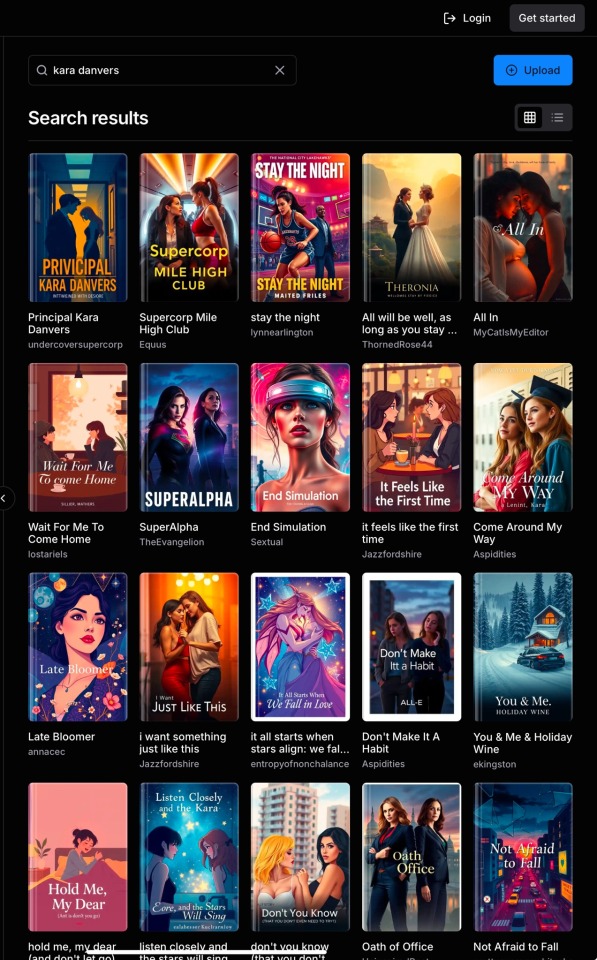

Because the tags on my fics (which included genres* and characters, but never the original IPs**) weren’t working, I put ‘Kara Danvers’ into the search bar and discovered that many more supercorp fics (Supergirl TV fandom, Kara Danvers/Lena Luthor pairing) were listed.

I went looking online for any mention of word-stream and AI plagiarism (the covers—as well as the ridiculously inflated number of reviews and ratings—made it immediately obvious that AI fuckery was involved), but found almost nothing: only one single Reddit post had been made, and it received (at that time) only a handful of upvotes and no advice.

I decided to make a tumblr post to bring the supercorp fandom up to speed about the theft. I draw as well as write for fandom and I’ve only ever had to deal with art theft—which has a clear set of steps to take depending on where said art was reposted—and I was at a loss regarding where to start in this situation.

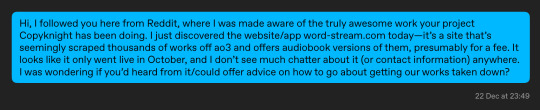

After my post went up I remembered Project Copy Knight, which is worth commending for the work they’ve done to get fic stolen from AO3 taken down from monetized AI 'audiobook’ YouTube accounts. I reached out to @echoekhi, asking if they’d heard of this site and whether they could advise me on how to get our works taken down.

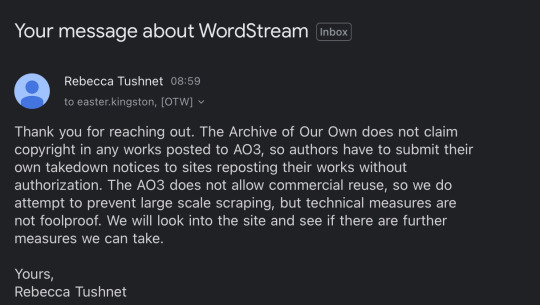

While waiting for a reply I looked into Copy Knight’s methods and decided to contact OTW’s legal department:

And then I went to bed.

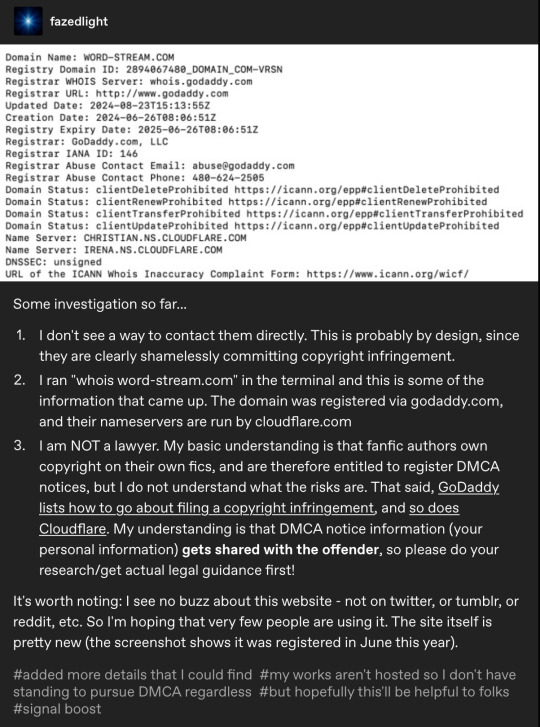

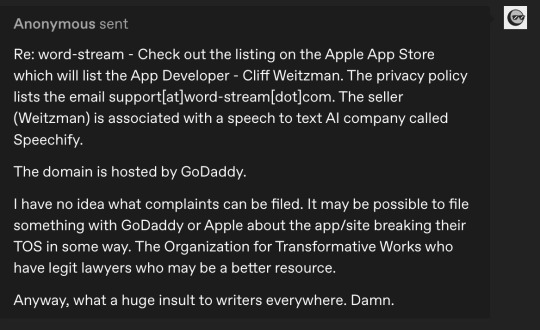

By morning, tumblr friends @makicarn and @fazedlight as well as a very helpful tumblr anon had seen my post and done some very productive sleuthing:

@echoekhi had also gotten back to me, advising me, as expected, to contact the OTW. So I decided to sit tight until I got a response from them.

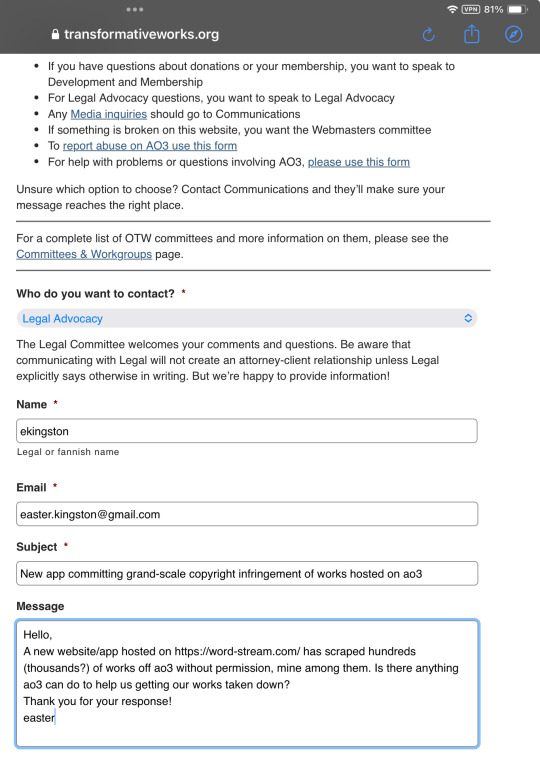

That response came only an hour or so later:

Which was 100% understandable, but still disappointing—I doubted a handful of individual takedown requests would accomplish much, and I wasn’t eager to share my given name and personal information with Cliff Weitzman himself, which is unavoidable if you want to file a DMCA.

I decided to take it to Reddit, hoping it would gain traction in the wider fanfic community, considering so many fandoms were affected. My Reddit posts (with the updates at the bottom as they were emerging) can be found here and here.

A helpful Reddit user posted a guide on how users could go about filing a DMCA against word-stream here (to wobbly-at-best results).

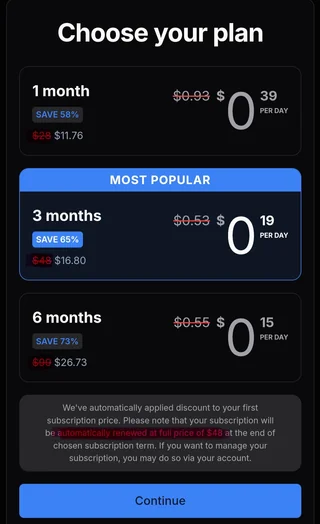

A different helpful Reddit user signed up to gain insight into word-stream’s pricing. Comment is here.

Smells unbelievably scammy, right? In addition to those audacious prices—though in all fairness any amount of money would be audacious considering every work listed is accessible elsewhere for free—my dyscalculia is screaming silently at the sight of that completely unnecessary amount of intentionally obscured numbers.

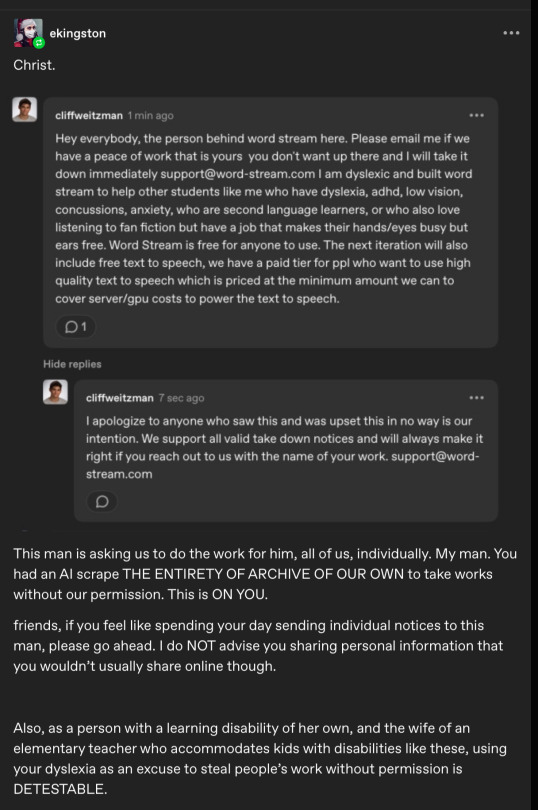

Speaking of which! As soon as the post on r/AO3—and, as a result, my original tumblr post—began taking off properly, sometime around 1 pm, jumpscare! A notification that a tumblr account named @cliffweitzman had commented on my post, and I got a bit mad about the gist of his message :

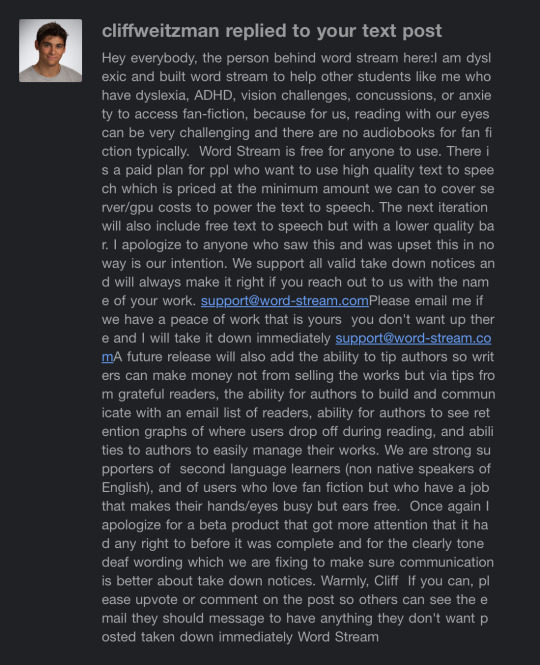

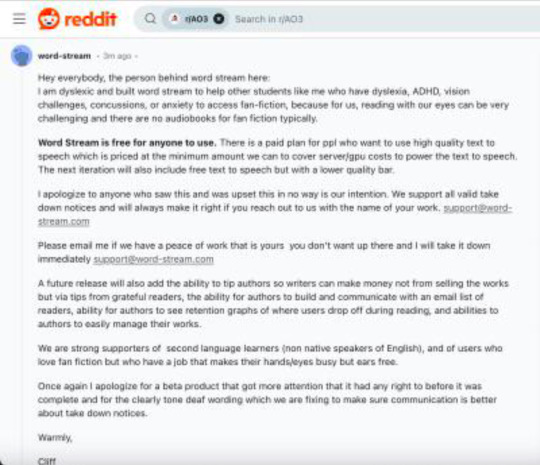

Fortunately he caught plenty of flack in the comments from other users (truly you should check out the comment section, it is extremely gratifying and people are making tremendously good points), in response to which, of course, he first tried to both reiterate and renegotiate his point in a second, longer comment (which I didn’t screenshot in time so I’m sorry for the crappy notification email formatting):

which he then proceeded to also post to Reddit (this is another Reddit user’s screenshot, I didn’t see it at all, the notifications were moving too fast for me to follow by then)

... where he got a roughly equal amount of righteously furious replies. (Check downthread, they're still there, all the way at the bottom.)

After which Cliff went ahead & deleted his messages altogether.

It’s not entirely clear whether his account was suspended by Reddit soon after or whether he deleted it himself, but considering his tumblr account is still intact, I assume it’s the former. He made a handful of sock puppet accounts to play around with for a while, both on Reddit and Tumblr, only one of which I have a screenshot of, but since they all say roughly the same thing, you’re not missing much:

And then word-stream started throwing a DNS error.

That lasted for a good number of hours, which was unfortunately right around the time that a lot of authors first heard about the situation and started asking me individually how to find out whether their work was stolen too. I do not have that information and I am unclear on the parameters Weitzman set for his AI scraper, so this is all conjecture: it LOOKS like the fics that were lifted had three things in common:

They were completed works;

They had several thousand kudos or more on AO3; and

They were written by authors who had actively posted or updated work over the past year.

If anyone knows more about these parameters or has info that counters my observation, please let me know!

I finally thought to check/alert evil Twitter during this time, and found out that the news was doing the rounds there already. I made a quick thread summarizing everything that had happened just in case. You can find it here.

I went to Bluesky too, where fandom was doing all the heavy lifting for me already, so I just reskeeted, as you do, and carried on.

Sometime in the very early evening, word-stream went back up—but the fan fiction category was nowhere to be seen. Tentative joy and celebration!***

That’s when several users—the ones who had signed up for accounts to gain intel and had accessed their own fics that way—reported that their work could still be accessed through their history. Relevant Reddit post here.

Sooo—

We’re obviously not done. The fanwork that was stolen by Weitzman may be inaccessible through his website right now, but they aren’t actually gone. And the fact that Weitzman wasn’t willing to get rid of them altogether means he still has plans for them.

This was my final edit on my Reddit post before turning off notifications, and it's pretty much where my head will be at for at least the foreseeable future:

Please feel free to add info in the comments, make your own posts, take whatever action you want to take to protect your work. I only beg you—seriously, I’m on my knees here—to not give up like I saw a handful of people express the urge to do. Keep sharing your creative work and remain vigilant and stay active to make sure we can continue to do so freely. Visit your favorite fics, and the ones you’ve kept in your ‘marked for later’ lists but never made time to read, and leave kudos, leave comments, support your fandom creatives, celebrate podficcers and support AO3. We created this place and it’s our responsibility to keep it alive and thriving for as long as we possibly can.

Also FUCK generative AI. It has NO place in fandom spaces.

THE 'SMALL' PRINT (some of it in all caps):

*Weitzman knew what he was doing and can NOT claim ignorance. One, it’s pretty basic kindergarten stuff that you don’t steal some other kid’s art project and present it as your own only to act surprised when they protest and then tell the victim that they should have told you sooner that they didn’t want their project stolen. And two, he was very careful never to list the IPs these fanworks were based on, so it’s clear he was at least familiar enough with the legalities to not get himself in hot water with corporate lawyers. Fucking over fans, though, he figured he could get away with that.

**A note about the AI that Weitzman used to steal our work: it’s even greasier than it looks at first glance. It’s not just the method he used to lift works off AO3 and then regurgitate onto his own website and app. Looking beyond the untold horrors of his AI-generated cover ‘art’, in many cases these covers attempt to depict something from the fics in question that can’t be gleaned from their summaries alone. In addition, my fics (and I assume the others, as well) were listed with generated genres; tags that did not appear anywhere in or on my fic on AO3 and were sometimes scarily accurate and sometimes way off the mark. I remember You & Me & Holiday Wine had ‘found family’ (100% correct, but not tagged by me as such) and I believe The Shape of Soup was listed as, among others, ‘enemies to friends to lovers’ and ‘love triangle’ (both wildly inaccurate). Even worse, not all the fic listed (as authors on Reddit pointed out) came with their original summaries at all. Often the entire summary was AI-generated. All of these things make it very clear that it was an all-encompassing scrape—not only were our fics stolen, they were also fed word-for-word into the AI Weitzman used and then analyzed to suit Weitzman’s needs. This means our work was literally fed to this AI to basically do with whatever its other users want, including (one assumes) text generation.

***Fan fiction appears to have been made (largely) inaccessible on word-stream at this time, but I’m hearing from several authors that their original, independently published work, which is listed at places like Kindle Unlimited, DOES still appear in word-stream’s search engine. This obviously hurts writers, especially independent ones, who depend on these works for income and, as a rule, don’t have a huge budget or a legal team with oceans of time to fight these battles for them. If you consider yourself an author in the broader sense, beyond merely existing online as a fandom author, beyond concerns that your own work is immediately at risk, DO NOT STOP MAKING NOISE ABOUT THIS.

Again, please, please PLEASE reblog this post instead of the one I sent originally. All the information is here, and it's driving me nuts to see the old ones are still passed around, sending people on wild goose chases.

Thank you all so much.

#word-stream#cliff weitzman#plagiarism#speechify#archive of our own#writers on tumblr#fanfic#independent authors#web scraping#fandom activism#writeblr#ao3#ao3 fanfic#ao3 writer#fanfiction#writing#writer#writing community#anti ai#anti generative ai#fic theft#anti capitalism#not everything needs to be monetised you fucking donkeys. always a tech bro ready to make a buck off someone else's work.#congrats to those of you who thought ai was harmless fun. can you see the downward slope yet?

48K notes

Text

Choosing a web scraping tool in 2025? We’ve broken down the best free and paid options so you can extract data smarter, faster, and at scale. 👉 Check out the complete list in the article: https://shorturl.at/0Cvnw

#DataScraping #WebAutomation #BigData #Tech2025 #DataTools

0 notes

Text

Web Scraping 101: Everything You Need to Know in 2025

🕸️ What Is Web Scraping? An Introduction

Web scraping—also referred to as web data extraction—is the process of collecting structured information from websites using automated scripts or tools. Initially driven by simple scripts, it has now evolved into a core component of modern data strategies for competitive research, price monitoring, SEO, market intelligence, and more.

If you’re wondering “What is the introduction of web scraping?” — it’s this: the ability to turn unstructured web content into organized datasets businesses can use to make smarter, faster decisions.

💡 What Is Web Scraping Used For?

Businesses and developers alike use web scraping to:

Monitor competitors’ pricing and SEO rankings

Extract leads from directories or online marketplaces

Track product listings, reviews, and inventory

Aggregate news, blogs, and social content for trend analysis

Fuel AI models with large datasets from the open web

Whether it’s web scraping using Python, browser-based tools, or cloud APIs, the use cases are growing fast across marketing, research, and automation.

🔍 Examples of Web Scraping in Action

What is an example of web scraping?

A real estate firm scrapes listing data (price, location, features) from property websites to build a market dashboard.

An eCommerce brand scrapes competitor prices daily to adjust its own pricing in real time.

A SaaS company uses BeautifulSoup in Python to extract product reviews and social proof for sentiment analysis.

For many, web scraping is the first step in automating decision-making and building data pipelines for BI platforms.

⚖️ Is Web Scraping Legal?

Yes—if done ethically and responsibly. While scraping public data is legal in many jurisdictions, scraping private, gated, or copyrighted content can lead to violations.

To stay compliant:

Respect robots.txt rules

Avoid scraping personal or sensitive data

Prefer API access where possible

Follow website terms of service

If you’re wondering “Is web scraping legal?”—the answer lies in how you scrape and what you scrape.

🧠 Web Scraping with Python: Tools & Libraries

What is web scraping in Python? Python is the most popular language for scraping because of its ease of use and strong ecosystem.

Popular Python libraries for web scraping include:

BeautifulSoup – simple and effective for HTML parsing

Requests – handles HTTP requests

Selenium – ideal for dynamic JavaScript-heavy pages

Scrapy – robust framework for large-scale scraping projects

Puppeteer (a Node.js library, not a Python one) – for advanced browser emulation

These tools are often used in tutorials like “Web scraping using Python BeautifulSoup” or “Python web scraping library for beginners.”
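To give a feel for what HTML parsing involves, here is a small sketch that extracts product names and prices from a hard-coded snippet. It deliberately uses the standard library's `html.parser` rather than BeautifulSoup so it runs without installing anything; the markup, class names, and `ProductParser` are invented for the example.

```python
from html.parser import HTMLParser

# Invented sample markup standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">19.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">34.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect (name, price) pairs from spans with class 'name' or 'price'."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None   # which span we are currently inside, if any
        self._current = {}   # fields gathered for the current <li>

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "span" and cls in ("name", "price"):
            self._field = cls

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            price = float(self._current.get("price", "nan"))
            self.products.append((self._current.get("name"), price))
            self._current = {}


def parse_products(html):
    """Return a list of (name, price) tuples found in `html`."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products
```

The extraction depends entirely on the `name` and `price` class names, which is why scrapers like this break when a site changes its markup.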

⚙️ DIY vs. Managed Web Scraping

You can choose between:

DIY scraping: Full control, requires dev resources

Managed scraping: Outsourced to experts, ideal for scale or non-technical teams

Use managed scraping services for large-scale needs, or build Python-based scrapers for targeted projects using frameworks and libraries mentioned above.

🚧 Challenges in Web Scraping (and How to Overcome Them)

Modern websites often include:

JavaScript rendering

CAPTCHA protection

Rate limiting and dynamic loading

To solve this:

Use rotating proxies

Drive headless browsers with tools like Selenium

Leverage AI-powered scraping for content variation and structure detection

Deploy scrapers on cloud platforms using containers (e.g., Docker + AWS)

🔐 Ethical and Legal Best Practices

Scraping must balance business innovation with user privacy and legal integrity. Ethical scraping includes:

Minimal server load

Clear attribution

Honoring opt-out mechanisms
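"Minimal server load" usually comes down to spacing out requests. The following is a sketch of a per-host throttle under the assumption that a fixed minimum interval is acceptable; `HostThrottle` and the 2-second default are illustrative choices, not a recommendation from the article.

```python
import time
from urllib.parse import urlparse


class HostThrottle:
    """Enforce a minimum interval between requests to the same host."""

    def __init__(self, min_interval=2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock      # injectable for testing
        self._sleep = sleep      # injectable for testing
        self._last_request = {}  # host -> time of the previous request

    def wait(self, url):
        """Block until it is polite to request `url`; return seconds waited."""
        host = urlparse(url).netloc
        now = self._clock()
        last = self._last_request.get(host)
        delay = 0.0
        if last is not None:
            delay = max(0.0, self.min_interval - (now - last))
        if delay:
            self._sleep(delay)
        self._last_request[host] = now + delay
        return delay
```

Calling `throttle.wait(url)` immediately before each request keeps traffic to any single host below one request per `min_interval` seconds, while requests to different hosts are not held up by each other.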

This ensures long-term scalability and compliance for enterprise-grade web scraping systems.

🔮 The Future of Web Scraping

As demand for real-time analytics and AI training data grows, scraping is becoming:

Smarter (AI-enhanced)

Faster (real-time extraction)

Scalable (cloud-native deployments)

From developers using BeautifulSoup or Scrapy, to businesses leveraging API-fed dashboards, web scraping is central to turning online information into strategic insights.

📘 Summary: Web Scraping 101 in 2025

Web scraping in 2025 is the automated collection of website data, widely used for SEO monitoring, price tracking, lead generation, and competitive research. It relies on powerful tools like BeautifulSoup, Selenium, and Scrapy, especially within Python environments. While scraping publicly available data is generally legal, it's crucial to follow website terms of service and ethical guidelines to avoid compliance issues. Despite challenges like dynamic content and anti-scraping defenses, the use of AI and cloud-based infrastructure is making web scraping smarter, faster, and more scalable than ever—transforming it into a cornerstone of modern data strategies.

🔗 Want to Build or Scale Your AI-Powered Scraping Strategy?

Whether you're exploring AI-driven tools, training models on web data, or integrating smart automation into your data workflows—AI is transforming how web scraping works at scale.

👉 Find AI agencies specialized in intelligent web scraping on Catch Experts.

#web scraping#what is web scraping#web scraping examples#AI-powered scraping#Python web scraping#web scraping tools#BeautifulSoup Python#web scraping using Python#ethical web scraping#web scraping 101#is web scraping legal#web scraping in 2025#web scraping libraries#data scraping for business#automated data extraction#AI and web scraping#cloud scraping solutions#scalable web scraping#managed scraping services#web scraping with AI

0 notes

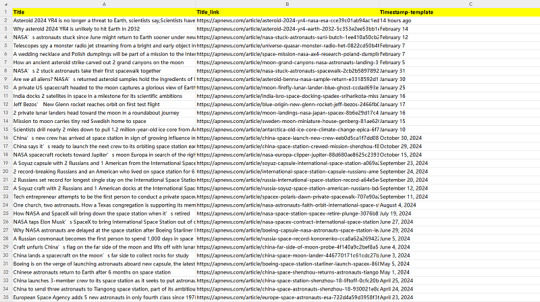

Text

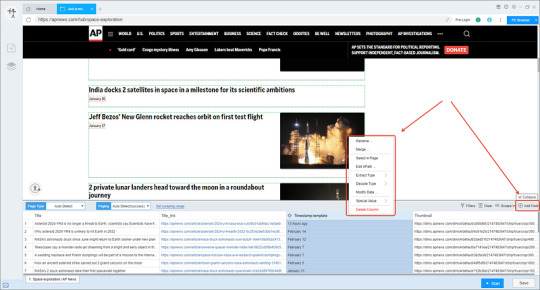

How to scrape news data from AP News

AP News is the news platform of the Associated Press (AP), one of the world's largest and most authoritative international news organizations.

Introduction to the scraping tool

ScrapeStorm is a new-generation web scraping tool based on artificial intelligence technology. It is the first scraper to support the Windows, Mac, and Linux operating systems.

Preview of the scraped result

This is the demo task:

Google Drive:

OneDrive:

1. Create a task

(1) Copy the URL

(2) Create a new smart mode task

You can create a new scraping task directly in the software, or you can create a task by importing rules.

How to create a smart mode task

2. Configure the scraping rules

Smart mode automatically detects the fields on the page. You can right-click a field to rename it, add or delete fields, modify data, and so on.

3. Set up and start the scraping task

(1) Run settings

Depending on your needs, you can configure Schedule, IP Rotation & Delay, Automatic Export, Download Images, Speed Boost, Data Deduplication, and Developer.

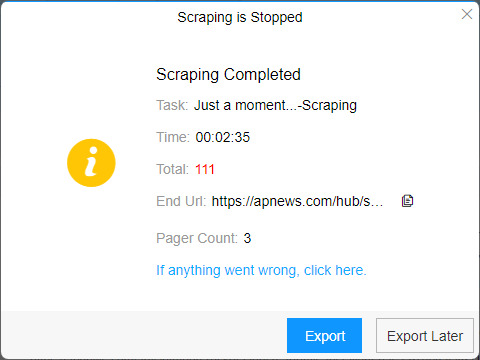

(2) Wait a moment, and you will see the data being scraped.

4. Export and view data

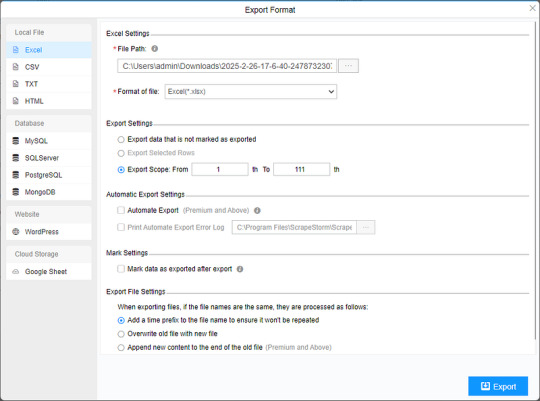

(1) Click “Export” to download your data.

(2) Choose the format to export according to your needs.

ScrapeStorm provides a variety of local export formats, such as Excel, CSV, HTML, TXT, or a database. Users on the Professional Plan and above can also post directly to WordPress.
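For readers who want to see what data deduplication followed by a CSV export amounts to in code, here is a rough Python sketch. It is not how ScrapeStorm works internally. The `dedupe` and `to_csv` helpers and the sample rows are hypothetical and only illustrate the idea.

```python
import csv
import io


def dedupe(rows, key):
    """Keep the first row seen for each value of `key`."""
    seen = set()
    unique = []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique


def to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up scraped rows; the second one repeats the first URL.
rows = [
    {"title": "Story A", "url": "https://example.com/a"},
    {"title": "Story A (repeat)", "url": "https://example.com/a"},
    {"title": "Story B", "url": "https://example.com/b"},
]

print(to_csv(dedupe(rows, "url"), ["title", "url"]))
```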

How to view data and clear data

0 notes

Text

Ed's parental leave: Day 5 (Feb 8) - Draw the rest of the owl

Well, I finished my scraper: I can feed it the page I want scraped, and it will go and download everything I want and put it into a bunch of files on my filesystem, one per entry. I have mixed feelings about the AI coding experience. Let's try to tease them apart.
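For a sense of what "a bunch of files on my filesystem, one per entry" can look like, here is a minimal sketch. It is not the actual scraper: the `slugify` and `save_entries` helpers, the JSON format, and the `title`/`body` fields are assumptions made up for illustration.

```python
import json
import re
import tempfile
from pathlib import Path


def slugify(title):
    """Turn a title into a filesystem-safe name."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug or "untitled"


def save_entries(entries, out_dir):
    """Write each entry to its own JSON file and return the paths."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, entry in enumerate(entries):
        # The index prefix keeps two entries with the same title from colliding.
        path = out_dir / f"{index:04d}-{slugify(entry['title'])}.json"
        path.write_text(json.dumps(entry, indent=2), encoding="utf-8")
        paths.append(path)
    return paths


if __name__ == "__main__":
    demo = [
        {"title": "First Entry!", "body": "hello"},
        {"title": "Second entry", "body": "world"},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        for saved in save_entries(demo, tmp):
            print(saved.name)
```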

The good:

Not having to actually write code reduces cognitive load. I still have to design, fix bugs, do testing, iterate on UX, but I don't have to worry about writing correct Python code or how to use the Selenium API correctly. I'm not sure if it made me faster, but I don't think I gained any skills in writing code that programs against Selenium, and that's fine by me.

Cursor is a refactoring machine. I changed my mind about overall program structure a lot, and it was easy to do things like add a new kwarg and thread it through everywhere, add logging for all wait operations, change the program to load all tabs at once rather than processing them one-by-one, etc., and it usually one-shot all of these tasks. Normally doing these rewrites is annoying, but I definitely felt like I could easily restructure the program as I better understood requirements. I also did not mind throwing away code and writing it again from scratch, and did this several times.

While I initially painstakingly prompted how exactly I wanted the scraping flow to go for a page, later in the development process I just asked the LLM to implement the scraping procedure for a page directly. These never worked zero-shot, but they worked /enough/ to be a useful place to start debugging.

I really appreciated being able to ask the LLM for logging. I would not have been disciplined enough to do good logging on a prototype, but the logging was definitely useful.
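To give a flavor of "logging for all wait operations", here is a small sketch of a polling helper that logs when a wait starts, succeeds, or times out. It is not the code Cursor generated: `wait_until` and its parameters are hypothetical, and it polls an arbitrary callable rather than using Selenium's own wait classes.

```python
import logging
import time

logger = logging.getLogger("scraper.wait")


def wait_until(condition, description, timeout=10.0, poll=0.1):
    """Poll `condition` until it returns a truthy value, logging the outcome."""
    start = time.monotonic()
    logger.info("waiting for %s (timeout %.1fs)", description, timeout)
    while True:
        result = condition()
        if result:
            logger.info("%s ready after %.2fs", description, time.monotonic() - start)
            return result
        if time.monotonic() - start >= timeout:
            logger.warning("timed out waiting for %s", description)
            raise TimeoutError(f"timed out waiting for {description}")
        time.sleep(poll)
```

With a Selenium driver in hand, a call might look like `wait_until(lambda: driver.find_elements(By.CSS_SELECTOR, ".entry"), "entry list")`, where the `.entry` selector is made up.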

The meh:

I noticed that sometimes the LLM is gravitationally pulled to certain ways of solving things. In principle, these can be solved with better prompting, though sometimes I decided I didn't care and let the LLM do it in its default style. For example, the LLM constantly wanted me to pass driver in as an argument to my functions, even though I had set things up so the driver was globally available and could always be inferred. Well, explicitly passing the driver in did end up being useful occasionally.

I still have some environment setup problems; for example, I needed to upgrade the Python version in uv mid-stream, and my Pylance is still broken for some module imports for some reason. Cursor was not that helpful zero-shot; I suspect careful prompting of Claude can help me debug this, but Cursor isn't really bringing anything to the table here.

When using exclusively Composer, it's easy to notice that the LLM is re-generating the entire function every time. The longer the function, the more time it takes. Making matters worse, the LLM likes making wordy functions. Oh well. Goes to show that you really do want an unreasonably fast tok/s here.

I still had to do all the debugging myself, since the LLMs wrote bugs. One of the funnier bugs I had to resolve was why all the elements I was hoping to see were missing (answer: because the driver was actually focused on DevTools). It didn't feel like there would have been very much utility in prompting the model into figuring out the problem. In general I feel LLMs are very good at remembering solutions to problems they've seen in their training, but not very good at actually methodically debugging something. (Prompt problem? Maybe. Or maybe you just need some CoT.)

I didn't really rely on the LLM much for high level design of the scraper. I guess this is reasonable; with the hot reloading exploration yesterday, I've been trying to set things up in an unconventional way, whereas the LLM is going to try to do ordinary things.
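As an aside on the DevTools bug: one way to guard against it, sketched below, is to walk the driver's window handles and settle on the first one whose URL is not a DevTools page. This is not necessarily how I fixed it. It assumes the DevTools window shows up in `driver.window_handles` with a `devtools://` URL, which may depend on the browser and driver. `switch_to_page_window` and `FakeDriver` are hypothetical; the fake only mimics the three Selenium attributes the function touches (`window_handles`, `switch_to.window`, `current_url`) so the sketch runs without a browser.

```python
def switch_to_page_window(driver):
    """Focus the first window whose URL is not a DevTools page; return its handle."""
    for handle in driver.window_handles:
        driver.switch_to.window(handle)
        # Assumption: DevTools windows report a devtools:// URL.
        if not driver.current_url.startswith("devtools://"):
            return handle
    raise RuntimeError("no non-DevTools window found")


class _FakeSwitchTo:
    """Mimics driver.switch_to for the demo."""

    def __init__(self, driver):
        self._driver = driver

    def window(self, handle):
        self._driver.current = handle


class FakeDriver:
    """Stand-in exposing only the attributes used above."""

    def __init__(self, urls_by_handle):
        self._urls = urls_by_handle
        self.window_handles = list(urls_by_handle)
        self.current = self.window_handles[0]
        self.switch_to = _FakeSwitchTo(self)

    @property
    def current_url(self):
        return self._urls[self.current]


demo_driver = FakeDriver({
    "A": "devtools://devtools/bundled/inspector.html",
    "B": "https://example.com/",
})
print(switch_to_page_window(demo_driver))
```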

The bad:

All in all, AI Coding didn't really make my problem feel "easy". I didn't have very many magic moments where the LLM melted a problem that I wasn't expecting it to melt. I still had to do all the debugging. I guess this is why most of the AI companies are trying to get end to end flows working, because that's what really squeezes the juice.

The LLM is really bad at refusal when asked to do impossible things. "I want to write a function that detects what Selenium window is currently focused, e.g., if the user had manually changed the focus in the browser." This is apparently impossible in Selenium. But the LLM will send you off on a merry goose chase with code that doesn't work.

The LLM has really bad default habits about exception handling: bare excepts everywhere. I think careful prompting will help here, but it really is very annoying that this is the default.

Multi-file refactoring doesn't work very well, matching observations I saw from others that multi-file makes Cursor struggle more to find the right context.
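To illustrate the exception-handling complaint above, here is a tiny sketch of the style I'd rather get by default: catch only the failure you expect, log it, and let everything else propagate. `parse_price` is a hypothetical helper, not code from the scraper.

```python
import logging

logger = logging.getLogger("scraper")


def parse_price(raw):
    """Convert a scraped price string such as '$1,299.00' to a float, or None."""
    try:
        return float(raw.replace("$", "").replace(",", ""))
    except ValueError:
        # Only the expected failure is handled; a bare `except:` here would
        # also swallow real bugs such as being handed None instead of a string.
        logger.warning("could not parse price %r", raw)
        return None
```

Passing `None` still raises `AttributeError`, which is the point: that is a bug in the caller, not a malformed price.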

0 notes