#Neural Network Development

Text

Applied AI - Integrating AI With a Roomba

AKA. What have I been doing for the past month and a half

Everyone loves Roombas. Cats. People. Cat-people. There have been a number of Roomba hacks posted online over the years, but an often overlooked point is how very easy it is to use Roombas for cheap applied robotics projects.

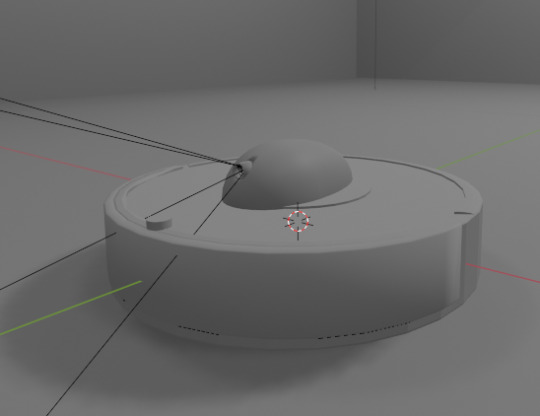

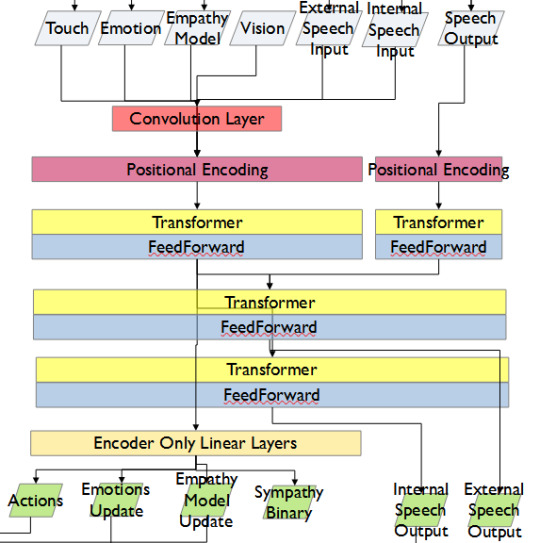

Continuing on from a project done for academic purposes, today's showcase is a work in progress: a real-world application of speech-to-text, actionable, transformer-based AI models. MARVINA (Multimodal Artificial Robotics Verification Intelligence Network Application) is being applied, in this case, to this Roomba, modified with a Raspberry Pi 3B, a 1080p camera, and a combined mic and speaker system.

The hardware specifics have been a fun challenge over the past couple of months, especially relating to the construction of the 3D mounts for the camera and audio input/output system.

Roomba models are particularly well suited to tinkering - the serial connector lets you interface external hardware, and iRobot (the manufacturer) publishes a full manual of the commands that can be sent to the Roomba itself. It can even play entire songs! (Highly recommend)
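For anyone curious what those commands look like on the wire, here is a minimal sketch that only builds the byte sequences. The opcodes and value ranges are the ones I remember from iRobot's Open Interface manual, so treat them as assumptions and check them against the manual for your model; the function names are made up for illustration, and the special "drive straight" radius codes are left out.

```python
import struct

# Opcodes as listed in iRobot's Open Interface manual
# (assumption: they match the Roomba model in use).
START, SAFE, DRIVE, SONG, PLAY = 128, 131, 137, 140, 141


def drive(velocity_mm_s, radius_mm):
    """Build a Drive command: opcode, then velocity and turn radius,
    each packed as a signed 16-bit big-endian integer."""
    if not -500 <= velocity_mm_s <= 500:
        raise ValueError("velocity must be within -500..500 mm/s")
    if not -2000 <= radius_mm <= 2000:
        raise ValueError("radius must be within -2000..2000 mm")
    return struct.pack(">Bhh", DRIVE, velocity_mm_s, radius_mm)


def song(number, notes):
    """Build a Song command: opcode, song slot, note count, then
    (MIDI note number, duration in 1/64 s) pairs."""
    if not 1 <= len(notes) <= 16:
        raise ValueError("a song holds 1 to 16 notes")
    payload = [SONG, number, len(notes)]
    for note, duration in notes:
        payload += [note, duration]
    return bytes(payload)  # raises ValueError if any value is outside 0..255


def play(number):
    """Build a Play command for a previously stored song slot."""
    return bytes([PLAY, number])


# Wake the interface, enter Safe mode, store a two-note song and play it.
startup = bytes([START, SAFE]) + song(0, [(60, 32), (64, 32)]) + play(0)
```

The resulting bytes would then be written to the Roomba's serial port (for example with pyserial), at whatever baud rate the manual gives for the model.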

Scope:

Current:

The aim of this project is to, initially, replicate the verbal command system which powers the current virtual environment based system.

This has been achieved with the custom MARVINA AI system, which is interfaced with both the PocketSphinx speech-to-text (SpeechRecognition · PyPI) and Piper-TTS text-to-speech (GitHub - rhasspy/piper: A fast, local neural text to speech system) AI systems. This gives the AI the ability to carry out one of 8 commands, give verbal output, and use a limited-training version of the emotional-empathy system.

This has mostly been achieved. Now that I know it's functional I can now justify spending money on a better microphone/speaker system so I don't have to shout at the poor thing!

The latency of the Raspberry Pi 3B for each output is a very sprightly 75 ms! This leaves plenty of headroom within the current AI input "framerate" of one input every 500 ms.

Future - Software:

Subsequent testing will imbue the Roomba with a greater sense of abstracted "emotion" - the AI having a ground set of emotional state variables which decide how it, and the interacting person, are "feeling" at any given point in time.

This, ideally, is to give the AI system a sense of motivation. The AI is essentially being given separate drives for social connection, curiosity and other emotional states. The programming will be designed to optimise for those, while the emotional model will regulate this on a separate, biologically based system of under- and over-stimulation.

In other words, a motivational system that incentivises only up to a point.
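To make "only up to a point" concrete, here is a toy sketch of one way such a drive could be scored. None of this is MARVINA's actual code: the triangular reward shape, the `set_point` and `tolerance` values, and the function names are all my assumptions for illustration.

```python
def drive_reward(stimulation, set_point=0.5, tolerance=0.5):
    """Reward peaks at the set point and falls off linearly on either side,
    so both under- and over-stimulation score lower (hypothetical shape)."""
    distance = abs(stimulation - set_point)
    return max(0.0, 1.0 - distance / tolerance)


def most_pressing(drives):
    """Return the name of the drive whose current stimulation level
    earns the lowest reward, i.e. the one most in need of attention."""
    return min(drives, key=lambda name: drive_reward(drives[name]))


# Social connection is at its set point; curiosity is under-stimulated.
levels = {"social": 0.5, "curiosity": 0.1}
needs_attention = most_pressing(levels)
```

With a shape like this, pushing a drive past its set point stops paying off, which is the regulating behaviour described above.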

The current build does have such a system implemented, but it has only very limited testing data. One of the key parts of this project's success will be to generatively create a training data set which will allow for high-quality interactions.

The future of MARVINA-R will relate to expanding the abstracted equivalent of "Theory-of-Mind". In other words, having MARVINA-R "imagine" a future which could exist in order to consider its choices, and what actions it wishes to take.

This system is based, in part, upon the Dynalang model created by Lin et al. 2023 at UC Berkeley ([2308.01399] Learning to Model the World with Language (arxiv.org)) but with a key difference - MARVINA-R will be running with two neural networks - one based on short-term memory and the second based on long-term memory. Decisions will be made based on which is most appropriate, and on how similar the current input data is to the generated world-model of each network.
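As a rough illustration of the "which network is most appropriate" step, the sketch below picks whichever network's predicted world-state is closest to the current input. How similarity will actually be measured isn't decided here; cosine similarity, the flat-vector representation, and the function names are assumptions for the sake of the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def choose_network(observation, short_term_prediction, long_term_prediction):
    """Return the label and similarity score of the network whose
    predicted state best matches the current observation."""
    short_score = cosine_similarity(observation, short_term_prediction)
    long_score = cosine_similarity(observation, long_term_prediction)
    if short_score >= long_score:
        return "short_term", short_score
    return "long_term", long_score
```

Ties go to the short-term network here, which is an arbitrary choice.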

Once at rest, MARVINA-R will effectively "sleep", essentially keeping the most important memories, and consolidating them into the long-term network if they lead to better outcomes.

This will allow the system to be tailored beyond its current limitations - where it can be designed to be motivated by multiple emotional "pulls" for its attention.

This does, however, also increase the number of AI outputs required per action (by a factor of about 10 to 100), so this will need to be carefully considered in terms of the software and hardware requirements.
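A quick back-of-the-envelope check shows why. This uses the 75 ms per-output latency and the 500 ms input interval quoted earlier, and assumes (my simplification, not a measurement) that outputs run one after another and each costs the same 75 ms.

```python
LATENCY_MS = 75   # per-output latency reported for the Raspberry Pi 3B
FRAME_MS = 500    # current interval between AI inputs


def time_per_action_ms(outputs_per_action, latency_ms=LATENCY_MS):
    """Total time for one action if outputs are computed sequentially."""
    return outputs_per_action * latency_ms


def fits_in_frame(outputs_per_action, frame_ms=FRAME_MS):
    """True if all outputs for one action finish within one input interval."""
    return time_per_action_ms(outputs_per_action) <= frame_ms


# Largest number of sequential outputs that still fits in one interval.
max_outputs = FRAME_MS // LATENCY_MS

for n in (1, 10, 100):
    print(n, "outputs:", time_per_action_ms(n), "ms, fits:", fits_in_frame(n))
```

Under those assumptions only about 6 outputs fit in one interval, so a 10x increase already overruns it and a 100x increase takes several seconds per action.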

Results So Far:

Here is the current prototyping setup for MARVINA-R. As of a couple of weeks ago, I was able to run the entire RaspberryPi and applied hardware setup and successfully interface with the robot with the components disconnected.

I'll upload a video of the final stage of initial testing in the near future - it's great fun!

The main issues really do come down to hardware limitations. The microphone is a cheap ~$6 thing from Amazon and requires you to shout at the poor robot to get it to do anything! The second limitation currently comes from outputting the text-to-speech, which has a time lag of around 4 seconds between the spoken input and the spoken output. Not terrible, but it can be improved.

To my mind, the proof of concept has been created - this is possible. Now I can justify further time, and investment, for better parts and for more software engineering!

#robot#robotics#roomba#roomba hack#ai#artificial intelligence#machine learning#applied hardware#ai research#ai development#cybernetics#neural networks#neural network#raspberry pi#open source

8 notes

Text

Romanian AI Helps Farmers and Institutions Get Better Access to EU Funds - Technology Org

New Post has been published on https://thedigitalinsider.com/romanian-ai-helps-farmers-and-institutions-get-better-access-to-eu-funds-technology-org/

A Romanian state agency overseeing rural investments has adopted artificial intelligence to aid farmers in accessing European Union funds.

Gardening based on aquaculture technology. Image credit: sasint via Pixabay, free license

The Agency for Financing Rural Investments (AFIR) revealed that it integrated robots from software automation firm UiPath approximately two years ago. These robots have assumed the arduous task of accessing state databases to gather land registry and judicial records required by farmers, entrepreneurs, and state entities applying for EU funding.

George Chirita, director of AFIR, emphasized that AI-driven automation was groundbreaking in expediting the most important organizational processes for farmers, thereby enhancing their efficiency. Since the introduction of these robots, AFIR has managed financing requests totaling 5.32 billion euros ($5.75 billion) from over 50,000 beneficiaries, including farmers, businesses, and local institutions.

The implementation of robots has notably saved AFIR staff approximately 784 days’ worth of document searches. Over the past two decades, AFIR has disbursed funds amounting to 21 billion euros.

Despite Romania’s burgeoning status as a technology hub with a highly skilled workforce, the nation continues to lag behind its European counterparts in offering digital public services to citizens and businesses, and in effectively accessing EU development funds. Eurostat data from 2023 indicated that only 28% of Romanians possessed basic digital skills, significantly below the EU average of 54%. Moreover, Romania’s digital public services scored 45, well below the EU average of 84.

UiPath, the Romanian company valued at $13.3 billion following its public listing on the New York Stock Exchange, also provides automation solutions to agricultural agencies in other countries, including Norway and the United States.

Written by Vytautas Valinskas

#000#2023#A.I. & Neural Networks news#ai#aquaculture#artificial#Artificial Intelligence#artificial intelligence (AI)#Authored post#automation#billion#data#databases#development#efficiency#eu#EU funds#european union#Featured technology news#Fintech news#Funding#gardening#intelligence#investments#it#new york#Norway#Other#Robots#Romania

2 notes

Text

Project "ML.Pneumonia": Finale

Final accuracy metrics:

Project model - 83%

Basic model - 75%

Basic model with larger dataset - 83%

Overall results:

A good autoencoder model has been developed

A classifier has been created which surpasses the basic model in accuracy

Both models scale well (the larger the datasets used in training, the better the results that can be obtained)

Development proposals:

Increase hardware resources

Consider the need to create larger models (increase the number of CNN layers, filters…)

Use larger datasets

Improve the preprocessing of the datasets used (a CNN may not handle incorrectly rotated scans well, and the datasets do not always contain the expected type of scan; e.g. in one of the datasets I noticed a longitudinal CT scan instead of a transverse one)

The link to the GitHub repository with clean project code and details of results is left below.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries

0 notes

Text

#cyber security#ai#chatgpt#devops#neural networks#bug bounty#cyberpunk 2077#cybersecurity#information security#information technology#5g technology#5g network#5g services#books and reading#books#development#software#services#developer

0 notes

Text

Because I'm wondering what happens to the human brain between birth and adulthood and adultier adulthood, I'm tempted to get into the neuroscience of human development, how we become and continue to become who we are. Cortexes. Neurons. Axons. Synapses. Receptors and Neurotransmitters. But I'm afraid I'll get stuck in a metaphor that involves switchboards and switchboard operators from which there's no escape.

I just don't know enough to handle the explanation elegantly.

However.

I did go to school. Elementary. Junior. Senior. Two degrees across five years at the university. A year and a half studying the music and video industry, the business side and the production side for an Associate of Arts chaser.

I'm a product of a traditional education model who's currently integrating AI tools into that body of knowledge.

So.

My question yesterday was a riff on whether AI tools might be enough in the absence of that body of knowledge, that education, or some reduction of it.

Is there a difference between understanding a thing and retrieving that knowledge on the fly through a Large Language Model in the moment you need that knowledge?

In short: what need is there for general knowledge in our minds when we're carrying around the sum total of all human knowledge in our pockets?

Are we at a point where we don't need to know as much as we used to? To carry that amount in our minds?

So we can read less?

So we can write less?

So we can study less.

Which, let's be honest, we already do less reading and writing in comparison to previous generations.

Is there a price to leaving those skills behind or are we good to go boldly and successfully into the future?

Hmmm.

Okay so I do wanna get into the neuroscience and that switchboard/switchboard operators metaphor because what we learn, how we learn it, and the degree to which we study it biologically wires our brains in specific ways, biologically optimizes our brains in specific ways. Our required and chosen mental activities from infant to toddler to child to pre-teen to teen fire certain neurons and not others. Our required and chosen mental activities muscle up certain pathways, connections between certain neurons and not others. All of which, all of which, all of which has implications for the people we are and the professionals we become.

How?

We'll start that piece tomorrow...

#brain#mind#birth#adulthood#neuroscience#human development#neurons#wiring#switchboard#AI#knowledge on demand#understanding#knowing#knowledge#large language model#general knowledge#neural networks

0 notes

Text

From Science Fiction to Daily Reality: Unveiling the Wonders of AI and Deep Learning

Deep learning is like teaching a child to understand the world. Just as a child learns to identify objects by observing them repeatedly, deep learning algorithms learn by analyzing vast amounts of data. At the heart of deep learning is a neural network—layers upon layers of algorithms that mimic the human brain’s neurons and synapses. Imagine you’re teaching a computer to recognize cats. You’d…

#AI Ethics#AI in Healthcare#AI Research#Algorithm Development#Artificial Intelligence#Autonomous Vehicles#Computer Vision#Data Science#Deep Learning#Machine Learning#Natural Language Processing (NLP)#Neural Networks#PyTorch#Robotics#TensorFlow

0 notes

Text

HWM: Game Design Log (25) Finding the Right “AI” System

Hey! I’m Omer from Oba Games, and I’m in charge of the game design for Hakan’s War Manager. I share a weekly game design log, and this time, I’ll be talking about AI and AI systems. Since I’m learning as I go, I thought, why not learn together? So, let’s get into it! Do We Need AI? Starting off with a simple question: Do we need AI? Now, I didn’t ask the most fundamental concept in game history…

#AI system#Artificial Intelligence#Bayesian networks#decision tree#FSM#game design#game designer#game development#game mechanics#Hakan's War Manager#Neural networks#state#state machine#strategy game

0 notes

Text

DAILY DOSE: Neural network excels where ChatGPT lags;

NEW SHERIFF IN TOWN. Scientists have developed a neural network capable of systematic generalization, a crucial aspect of human cognition where newly learned words are seamlessly integrated and utilized in different contexts. This AI performs comparably to humans, unlike ChatGPT which lagged in tests. This advancement, discussed in a study published on 25 October in Nature, signifies a…

#Africa#artificial intelligence#Asia#Australia#dengue#drug development#environment#epidemiology#Europe#Featured#neural networks#North America#social media#South America#technology

0 notes

Text

Harnessing the Power of Artificial Intelligence in Web Development

Artificial Intelligence (AI) has emerged as a transformative force in various industries, and web development is no exception. From enhancing user experience to optimizing content, AI offers a plethora of benefits for web developers and businesses alike.

Introduction to Artificial Intelligence (AI) in Web Development

Understanding the basics of AI

AI involves the development of computer systems that can perform tasks that typically require human intelligence. These tasks include learning, reasoning, problem-solving, perception, and language understanding.

Evolution of AI in web development

In recent years, AI has revolutionized web development by enabling developers to create more dynamic and personalized websites and applications.

➦ Benefits of Integrating AI in Web Development

Enhanced user experience

By analyzing user data and behavior, AI algorithms can personalize content and recommend products or services tailored to individual preferences, thereby enhancing the overall user experience.

Personalization and customization

AI-powered algorithms can analyze user data in real-time to provide personalized recommendations, such as product suggestions, content recommendations, and targeted advertisements.
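As a simple illustration of the idea, the sketch below ranks catalog items by how often their tags appear in a visitor's viewing history. It is a deliberately minimal, hypothetical example (the function name, data shapes, and scoring rule are assumptions), not a description of any particular product's recommendation engine.

```python
from collections import Counter


def recommend(viewed_tags, catalog, top_n=3):
    """Rank catalog items by overlap with the tags a visitor has viewed.

    viewed_tags: list of tag lists, one per page the visitor viewed.
    catalog: dict mapping item name to its list of tags.
    """
    # Count how often each tag appears in the visitor's history.
    profile = Counter(tag for tags in viewed_tags for tag in tags)
    # Score each item by the summed frequency of its tags.
    scored = [(sum(profile[tag] for tag in tags), name)
              for name, tags in catalog.items()]
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in ranked if score > 0][:top_n]


history = [["shoes", "running"], ["running", "socks"]]
catalog = {
    "trail shoes": ["shoes", "running"],
    "wool socks": ["socks"],
    "desk lamp": ["lighting"],
}
suggestions = recommend(history, catalog)
```

Production systems typically use learned models rather than raw tag counts, but the input (behavior data) and output (a ranked list) have the same shape.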

Improved efficiency and productivity

AI automation tools can streamline various web development tasks, such as code generation, testing, and debugging, leading to increased efficiency and productivity for developers.

➦ AI-Powered Web Design

Responsive design and adaptation

AI algorithms can analyze user devices and behavior to dynamically adjust website layouts and designs for optimal viewing experiences across various platforms and screen sizes.

Automated layout generation

AI-powered design tools can generate website layouts and templates based on user preferences, content requirements, and design trends, saving developers time and effort in the design process.

➦ AI in Content Creation and Optimization

Natural language processing (NLP) for content generation

AI-driven NLP algorithms can generate high-quality content, such as articles, blog posts, and product descriptions, based on user input, keywords, and topic relevance.

SEO optimization through AI tools

AI-powered SEO tools can analyze website content, keywords, and search engine algorithms to optimize website rankings and improve visibility in search engine results pages (SERPs).

➦ AI-Driven User Interaction

Chatbots and virtual assistants

AI-powered chatbots and virtual assistants can engage with website visitors in real-time, answering questions, providing assistance, and guiding users through various processes, such as product selection and checkout.

Predictive analytics for user behavior

AI algorithms can analyze user data and behavior to predict future actions and preferences, enabling businesses to anticipate user needs and tailor their offerings accordingly.

➦ Security Enhancement with AI

Fraud detection and prevention

AI algorithms can analyze user behavior and transaction data to detect and prevent fraudulent activities, such as unauthorized access, identity theft, and payment fraud.

Cybersecurity measures powered by AI algorithms

AI-driven cybersecurity tools can identify and mitigate potential security threats, such as malware, phishing attacks, and data breaches, by analyzing network traffic and patterns of suspicious behavior.

➦ Challenges and Considerations

Ethical implications of AI in web development

The use of AI in web development raises ethical concerns regarding privacy, bias, and the potential for misuse or abuse of AI technologies.

Data privacy concerns

AI algorithms rely on vast amounts of user data to function effectively, raising concerns about data privacy, consent, and compliance with regulations such as the General Data Protection Regulation (GDPR).

Future Trends in AI and Web Development

Advancements in machine learning algorithms

Continued advancements in machine learning algorithms, such as deep learning and reinforcement learning, are expected to further enhance AI capabilities in web development.

Integration of AI with IoT and blockchain

The integration of AI with the Internet of Things (IoT) and blockchain technologies holds the potential to create more intelligent and secure web applications and services.

➦ Conclusion

In conclusion, harnessing the power of artificial intelligence in web development offers numerous benefits, including enhanced user experience, improved efficiency, and personalized interactions. However, it is essential to address ethical considerations and data privacy concerns to ensure responsible and ethical use of AI technologies in web development.

➦ FAQs

1. How does AI enhance user experience in web development?

2. What are some examples of AI-powered web design tools?

3. How can AI algorithms optimize content for search engines?

4. What are the main challenges associated with integrating AI into web development?

5. What are some future trends in AI and web development?

At Zapperr, our AI and Machine Learning Mastery services open up a universe of possibilities in the digital realm. We understand that in the age of data, harnessing the power of artificial intelligence and machine learning can be a game-changer. Our dedicated team of experts is equipped to take your business to the next level by leveraging data-driven insights, intelligent algorithms, and cutting-edge technologies.

#AI Services#Machine Learning Solutions#Artificial Intelligence Expertise#Advanced Data Analytics#Deep Learning Mastery#Predictive Analytics#Intelligent Automation#Neural Network Development#Data-driven Decision Making#Cognitive Computing Services#web development#zapper

0 notes

Text

There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an AI image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from Star Trek, or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)

Language model: (LM or LLM) is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion Models: Models that learn the probability distribution of a given dataset. In image generation, a neural network is trained to denoise images with added Gaussian noise by learning to remove the noise. After the training is complete, it can then be used for image generation by starting with a random noise image and denoising it. (This is the more common technology behind AI images, including DALL-E and Stable Diffusion. I added this one to the post after as it was brought to my attention it is now more common than GANs.)
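To show how un-magical the "language model" definition above is, here's a tiny sketch of one: a bigram model that counts which word follows which and turns the counts into probabilities. It's my own toy example with a made-up three-sentence corpus, and it's vastly simpler than ChatGPT, but it is the same basic idea of predicting the next word from text it was trained on.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of each sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the follow-up counts for one word into probabilities."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    return {word: n / total for word, n in following.items()} if total else {}


model = train_bigram(["the cat sat", "the dog sat", "the cat ran"])
probs = next_word_probs(model, "the")  # "cat" is twice as likely as "dog"
```

No understanding involved: it's counting and dividing. The big models swap the counting for a neural network and the three sentences for a huge text corpus.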

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes

Text

#hugging face#space#neural networks#linklist#development#data management#data science#weltenschmid#knowledge base

0 notes

Text

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/

In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”
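To put the 8-month halving figure in concrete terms, the following sketch works through the arithmetic. It assumes a clean exponential with a constant halving time, which is a simplification for illustration rather than the fitted model from the paper, and the function names are hypothetical.

```python
HALVING_MONTHS = 8  # halving time for required compute, as reported above


def compute_needed(initial_compute, months_elapsed, halving_months=HALVING_MONTHS):
    """Compute required to reach a fixed performance level after a given
    number of months, assuming a constant halving time."""
    return initial_compute * 0.5 ** (months_elapsed / halving_months)


def yearly_efficiency_gain(halving_months=HALVING_MONTHS):
    """Factor by which required compute shrinks over 12 months."""
    return 2 ** (12 / halving_months)


after_two_years = compute_needed(1.0, 24)  # three halvings: one eighth of the original
per_year = yearly_efficiency_gain()        # roughly 2.8x per year
```

Under this simplification, a model that needed one unit of training compute would need about an eighth of that two years later to reach the same performance.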

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.

Based on this fitted model, the authors do a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing, where they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light on how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology

#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes

Text

Project "ML.Pneumonia": Classifier

Using the weights obtained from the autoencoder training, the classifier was trained. Thrice. The recorded accuracy was unstable during training, so the training was run three times to get the best result. The first attempt was the best one. The final accuracy is about 83%.

P.S.: The graphs of the best attempt metrics are shown below.

P.S.2: And here is the graph of the original model (basic method) "performance". Apparently, learning instability is a common problem in this classification task.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries

0 notes

Text

To everyone that wants to stay updated with AI.

AI Seeker

AI Explorer - $1

Instant AI News: Trending updates from the tech and industry realms that spark my interest.

Model Spotlight: Dive into a featured AI model (covering text, image, video, etc.)

Tool Talk: A close-up look at a notable AI tool.

Prompt Engineering Insights: Dive into a specific technique, complemented with examples.

News Digest: A compiled summary of the week's top AI news.

AI Explorer Compilation: A curated collection of the week's content.

AI Innovator - $10

Extended AI News: All from the AI Explorer, plus highlights on emerging startups and innovative AI solutions.

Research Review: Deep dive into a significant AI research paper.

Tool Tutorial: Step-by-step guide on utilizing a specific AI tool.

Idea Lab: Explore a promising concept for an AI solution.

Access to all AI Explorer posts and compilations.

AI Developer - $15

Comprehensive AI News: Incorporating insights from both Explorer and Innovator tiers, and adding updates on the latest development tools, frameworks, and techniques.

Dev Spotlight: A focused look at a trending AI development tool, framework, or technique.

Implementation Showcase: A practical walk-through of an AI solution brought to life.

Access to all AI Innovator posts and compilations.

#ai#artificial intelligence#aiexploration#ai news#ai learning#ai development#ai solutions#ai ideas#llm#machine learning#deep learning#generative ai#ai tools#prompt engineering#neural networks

0 notes