#Visual Configuration Tool

From Inquiry to Invoice: How CPQ Configurators Accelerate the Furniture Sales Funnel

In today’s fast-paced, customer-centric marketplace, furniture businesses are under increasing pressure to deliver personalized products, accurate quotes, and streamlined transactions — all without compromising speed or accuracy. With complex product catalogs, customizable options, and variable pricing, the traditional furniture sales funnel can be slow, error-prone, and frustrating for both buyers and sellers. Enter CPQ configurators, the digital accelerators that are transforming the furniture industry from the first inquiry to the final invoice.

What is a CPQ Configurator?

CPQ stands for Configure, Price, Quote: a category of software tools designed to streamline the sales process for complex and customizable products. A CPQ configurator allows sales teams (and, in many cases, customers themselves) to configure a product, calculate an accurate price based on the selected options, and generate a formal quote instantly.

In the furniture industry, where every detail matters, from fabric and finish to size and add-ons, CPQ tools eliminate guesswork, reduce back-and-forth, and enable a seamless sales experience.

How CPQ Configurators Streamline the Furniture Sales Funnel

Let’s look at how a CPQ configurator streamlines and accelerates each phase of the sales funnel:

1. Inquiry & Product Discovery

Modern CPQ tools often come integrated with 3D visual configurators that allow buyers to explore products interactively. Instead of flipping through physical catalogs or static PDFs, customers can visualize a chair in walnut finish with a leather seat or a modular sofa in various layouts — all in real time.

This interactive experience increases engagement, shortens the discovery phase, and gives customers confidence in their choices.

2. Product Configuration with Accuracy

Furniture CPQs are built with business logic and product rules that ensure every configuration is manufacturable. If a particular leg design isn’t compatible with a certain tabletop or material, the system prevents the user from selecting it.

This rule-based intelligence reduces costly errors, speeds up approvals, and ensures consistency across the sales team. It also frees up sales reps to focus on closing rather than correcting.
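To make the rule idea concrete, here is a minimal Python sketch of how compatibility rules might be checked. It is not any vendor's actual engine. The option catalogue (`OPTIONS`), the forbidden pairs (`INCOMPATIBLE`) and the function names are all hypothetical, and real CPQ products express their rules in their own formats.

```python
# Hypothetical catalogue: each configurable attribute and its allowed values.
OPTIONS: dict[str, set[str]] = {
    "tabletop": {"oak", "glass", "marble"},
    "legs": {"hairpin", "pedestal", "trestle"},
}

# Hypothetical rules: pairs of (attribute, value) choices that cannot be combined.
INCOMPATIBLE: set[tuple[tuple[str, str], tuple[str, str]]] = {
    (("tabletop", "marble"), ("legs", "hairpin")),  # e.g. heavy top on thin legs
    (("tabletop", "glass"), ("legs", "trestle")),
}


def violations(config: dict[str, str]) -> list[str]:
    """List every problem with a configuration; an empty list means it passes all rules."""
    problems = []
    for attr, value in config.items():
        if attr not in OPTIONS:
            problems.append(f"unknown attribute: {attr}")
        elif value not in OPTIONS[attr]:
            problems.append(f"{value!r} is not a valid {attr}")
    for (a1, v1), (a2, v2) in INCOMPATIBLE:
        if config.get(a1) == v1 and config.get(a2) == v2:
            problems.append(f"{a1}={v1} cannot be combined with {a2}={v2}")
    return problems


def allowed_values(config: dict[str, str], attr: str) -> set[str]:
    """Values of `attr` that keep the current selection valid, so a UI can hide the rest."""
    return {v for v in OPTIONS[attr] if not violations({**config, attr: v})}


if __name__ == "__main__":
    print(violations({"tabletop": "marble", "legs": "hairpin"}))
    # → ['tabletop=marble cannot be combined with legs=hairpin']
    print(sorted(allowed_values({"tabletop": "marble"}, "legs")))
    # → ['pedestal', 'trestle']
```

In this sketch, `allowed_values` lets a configurator grey out incompatible choices up front. `violations` can then re-check a finished configuration before it is priced.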

3. Real-Time Pricing

Pricing in the furniture industry can be highly variable, influenced by raw material costs, regional taxes, volume discounts, and more. A CPQ system can automatically calculate the correct price based on the customer’s selections in real time.

Dynamic pricing not only ensures quote accuracy but also helps sales reps respond to pricing inquiries faster and more confidently.
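Here is a similarly hedged sketch of real-time pricing, in which the price is recomputed from the current selections whenever they change. Every figure below (base price, surcharges, volume tiers, tax rates) is invented for illustration. `quote_total` is a hypothetical helper, not part of any CPQ product. It uses `Decimal` rather than floats so that currency amounts round predictably.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

# Hypothetical price list; every figure is invented for illustration.
BASE_PRICE = {"dining_table": Decimal("800.00")}
SURCHARGE = {  # extra cost per selected (attribute, value); unlisted choices cost nothing
    ("tabletop", "marble"): Decimal("350.00"),
    ("tabletop", "glass"): Decimal("120.00"),
    ("legs", "pedestal"): Decimal("90.00"),
}
# Volume discount tiers as (minimum quantity, rate), ordered from the largest tier down.
VOLUME_TIERS = [(50, Decimal("0.15")), (10, Decimal("0.05")), (1, Decimal("0"))]
TAX_RATE = {"UK": Decimal("0.20"), "DE": Decimal("0.19")}  # hypothetical regional rates


def quote_total(product: str, options: dict[str, str], qty: int, region: str) -> dict[str, Decimal]:
    """Recompute the price from the current selections (call again whenever they change)."""
    if qty < 1:
        raise ValueError("quantity must be at least 1")
    unit = BASE_PRICE[product] + sum(
        (SURCHARGE.get(choice, Decimal("0")) for choice in options.items()), Decimal("0")
    )
    # First tier whose minimum the order meets; the (1, 0) tier guarantees a match.
    discount = next(rate for min_qty, rate in VOLUME_TIERS if qty >= min_qty)
    net = (unit * qty * (1 - discount)).quantize(CENT, rounding=ROUND_HALF_UP)
    tax = (net * TAX_RATE[region]).quantize(CENT, rounding=ROUND_HALF_UP)
    return {"unit_price": unit, "discount_rate": discount, "net": net, "tax": tax, "total": net + tax}


if __name__ == "__main__":
    q = quote_total("dining_table", {"tabletop": "marble", "legs": "pedestal"}, 12, "UK")
    print(q["total"])  # → 16963.20
```

Under these made-up figures, twelve marble-top tables on pedestal legs for the UK come to a unit price of 1240.00. A 5% volume discount gives a net of 14136.00, plus 2827.20 tax, for a total of 16963.20.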

4. Instant Quoting

With CPQ software, quotes are generated instantly, often complete with product visuals, specifications, and terms. This drastically reduces turnaround time from days to minutes.

A faster quote means a faster decision, which is especially important in B2B scenarios where budget approval windows are narrow and buying timelines are short.

5. Order Processing and Invoicing

Once a quote is accepted, CPQ tools can integrate directly with ERP and CRM systems to automatically generate orders and invoices. No need to re-enter data, no risk of clerical errors, and no delay in kicking off production or delivery schedules.

End-to-end integration ensures that from the moment a customer makes a selection to the final payment, every step is tracked, documented, and executed efficiently.

CPQ in Action: Real-World Example

Consider a commercial furniture manufacturer that deals with hundreds of SKUs and highly customized products for office and hospitality spaces. Before CPQ, their sales reps spent hours on manual configuration, pricing approvals, and quote documentation.

After implementing a CPQ solution with 3D configuration and ERP integration, they reduced their quote-to-order time by over 70%, increased average order value by 18%, and saw a 50% drop in errors related to product configuration. Most importantly, their customers reported a more streamlined and enjoyable buying experience.

From inquiry to invoice, CPQ configurators empower furniture companies to meet modern expectations and stay ahead in a digital-first marketplace.

If you’re looking to optimize your furniture sales funnel with a CPQ solution, now’s the time. Streamline your quoting process, reduce costly mistakes, and close deals faster than ever.

Ready to explore what a CPQ configurator could do for your business?

Let’s talk - https://prototechsolutions.com/3d-services/3d-product-configurator/

#CPQ configurator for furniture#furniture CPQ software#furniture sales funnel#visual product configurator#furniture quote software#configure price quote tools#CPQ for furniture manufacturers#real-time furniture pricing

3D Furniture Configurator: Revolutionizing Interior Design

In the rapidly evolving world of interior design, technology plays a pivotal role in transforming how designers and consumers approach spaces. The Zolak 3D furniture configurator stands at the forefront of this transformation, offering a cutting-edge solution that seamlessly blends technology with creativity. This innovative tool empowers users to visualize, customize, and perfect their furniture choices, ensuring every design decision is informed and inspired.

What is the Zolak 3D Furniture Configurator?

The 3D furniture configurator is an advanced digital tool designed to assist users in creating detailed, lifelike representations of their interior spaces. Whether you’re a professional designer or a homeowner with a vision, this configurator allows you to experiment with various furniture pieces, colors, materials, and layouts in a realistic 3D environment.

Key Features and Benefits

Realistic Visualization

The configurator uses high-quality 3D rendering to produce realistic images of furniture in your chosen space. This feature helps users to see exactly how different pieces will look and fit within their rooms, eliminating guesswork and ensuring satisfaction with final choices.

Customization Options

Users can customize nearly every aspect of the furniture, from size and color to material and finish. This flexibility ensures that each piece fits perfectly with the desired aesthetic and functional requirements of the space.

User-Friendly Interface

Designed with user experience in mind, the Zolak configurator is intuitive and easy to navigate. Even those without technical expertise can quickly get the hang of it and start designing their ideal spaces.

Space Planning

The tool also offers space planning capabilities, allowing users to place furniture in their room’s exact dimensions. This helps in visualizing the flow and functionality of the space, ensuring that the layout is both practical and aesthetically pleasing.

Cost Efficiency

By providing a clear and accurate visualization, the Zolak configurator reduces the risk of costly mistakes. Users can make informed decisions before making any purchases, ensuring that every investment in furniture is a wise one.

How to Use the Zolak 3D Furniture Configurator

Sign Up or Log In

Start by signing up for a Zolak account or logging in if you already have one. This gives you access to all the features and allows you to save your projects.

Choose Your Room

Select the room you want to design. The configurator offers various templates or the option to input your room’s dimensions for a more personalized experience.

Select Furniture

Browse through Zolak’s extensive catalog of furniture. You can filter by type, style, and more to find the perfect pieces.

Customize and Place

Customize your chosen pieces to match your vision. Adjust colors, materials, and sizes, then place them in your room to see how they look.

Save and Share

Save your design for future reference or share it with others for feedback. The configurator makes it easy to export your design or share it directly from the platform.

Conclusion

The 3D furniture configurator is a game-changer in the realm of interior design. By combining advanced technology with user-friendly features, it empowers users to create beautiful, functional spaces with confidence. Whether you’re planning a complete overhaul or just looking to add a few new pieces, Zolak’s configurator is your go-to tool for making informed, inspired decisions. Embrace the future of interior design and start creating your dream space today.

#couch#sofa#3D#art#3D Furniture Configurator#Interior Design Technology#Furniture Visualization#Home Decor#Interior Design Tools#Furniture Customization#Space Planning#3D Rendering#Room Design#Zolak Tech#Virtual Interior Design#Digital Design Tools#E-commerce Furniture#Online Furniture Configurator#Furniture Design Software

Ladies and gentlemen, developers and dreamers — The moment we've all been waiting for has finally arrived!

After two years of pixel-pushing, code-crunching, and more coffee than I’d like to admit, I’m thrilled to present to you the next major step in the evolution of our beloved game engine:

🎉ResusBox 0.12.7 is officially LIVE! 🎉

This update is more than just new features — it's a heartbeat of passion, a breath of creativity, and a defibrillator-powered jolt to the imagination!

Packed with enhanced mechanics, fresh customization tools, smoother animations, and plenty of surprises (including a few *shocking* ones), this version brings us one step closer to making resuscitation-themed games a unique, expressive, and memorable experience.

What's New in ResusBox 0.12.7?

This version is packed with exciting new features and improvements that push the engine to a whole new level:

- New UI design — Clean, modern, and more intuitive than ever

- Help Center — Includes How to Play, controls, and settings guide

- Advanced graphics rendering system — Now with full overlay support

- Image support — JPG, PNG, BMP, and GIF images

- Cutscene Player — Story scene animations, including looping and autoskip features

- MP3 background music support — Play music in game and story mode

- New configuration file format — More readable, more powerful

- New game physics — Now powered by improved physics for smoother, more dynamic gameplay

- Custom inventory icons — Personalize your game visuals even more

- Terminal — Perfect for debugging, experimenting, or just having fun with commands

- Developer Manual — Official documentation to help you master game development

Thank you all for your patience, feedback, and support.

Stay creative and have fun, ResusKing 230

Developer of ResusBox.

DOWNLOAD RESUSBOX v0.12.7

Required OS: Windows XP SP3 or higher.

Required RAM: 512 MB or higher.

Required .NET Framework: v3.5 or higher.

Download:

Date: June 5, 2025.

What's your process like for making the pages/script/comic in general? Any advice you could give?

Hii:D

I'm gonna ramble about this a lot, so I'm adding this read more <3

That way this post won't be super long on the main page

If you DO want to see everything go ahead!

So! Right now I work on the pages from Monday to Saturday :]

I divide work like this:

Monday-Tuesday: Script, storyboard and dialogue bubbles!

Wednesday-Thursday: Lineart for the 4 pages! 2 pages each day

Friday-Saturday: Color the 4 pages! 2 pages each day

Talking about writing

I don't have the full script ready yet because I realized

I SUCK AT WRITING, NOT IN A "my writing is so bad" WAY BUT IN AN "I can't write words without getting confused" WAY

That's one of the reasons why it took me SO long to start this thing! Because I wanted the script to be fully ready! And I couldn't do that because whenever I'm writing I get super confused😭😭I don't know how to explain it but I NEED visuals ??? I need to see how the dialogue I'm writing is gonna look???

So now, whenever I'm writing, I'm also drawing at the same time! AND FOR SOME REASON THAT WORKS, AND SUDDENLY I CAN WRITE

I REALLY DON'T KNOW WHY THAT IS BUT, UH, IT WORKS FOR ME!!! THAT'S ALL I NEED!!

This does NOT mean I'm improvising the story! I do have the full story ready and outlined! I'm following that outline :]

I realized having everything ready might work for some people, but it wasn't working for me D:

So, uh, my advice for writing is to trust yourself and to try different methods! You'll find something that works for you :]

I DO recommend having an outline of the story before beginning!! You don't need to know everything from the beginning but you need to know what NEEDS to happen and a basic idea of how it should end :]

Now about the making of the comic pages

Pls look for references constantly!! Very important!!

There are many different ways to make comics!

I always look on Pinterest for panel layout/color palette inspiration

I use Clip Studio Paint to make everything. It's super useful cause it has a LOT of features that make the process MUCH easier (vector layers, a paint bucket that actually works, special comic configurations, a panel tool, 3D viewing which is super fun, predetermined speech bubbles, the story editor, etc.)

It takes me like?? Approximately 2 and a half hours to make one page?? Some take more and some less

But I'm also an easily distracted person so sometimes 2 hours turn into 3 because I spent 1 hour getting distracted with other stuff 😭

Uhhm, so yeah!! I think the layout is my favorite part, my least favorite part is adding the speech bubbles...ESPECIALLY if I have to add Wingdings

Andd I think that covers most of it? If you all have more specific questions let me know because there's a LOT of stuff that goes into making these😭😭but I get better and faster each time! My first pages took me like 4 hours on average...some would take me 6 hours...THAT WENT DOWN A LOT :D

#i don't know what to add here in tags hehe#answered ask#making a comic is great but oh my god does it take time to get used to it ....and to build a schedule that actually works....

Sir Roger Penrose

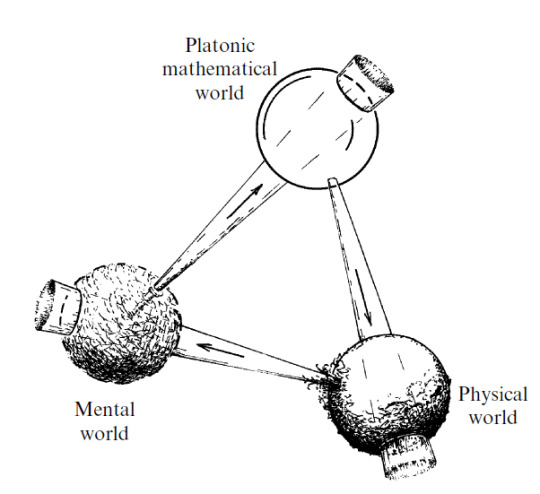

To me, the world of perfect forms is primary (as was Plato’s own belief) — its existence being almost a logical necessity — and both the other two worlds are its shadows.

Sir Roger Penrose, born on August 8, 1931, in Colchester, Essex, England, is a luminary in the realm of mathematical physics. His journey began with a Ph.D. in algebraic geometry from the University of Cambridge in 1957, and his career has spanned numerous prestigious posts at universities in both England and the United States. His work in the 1960s on the fundamental features of black holes (celestial bodies whose gravity is so immense that nothing, not even light, can escape) earned him a share of the 2020 Nobel Prize in Physics.

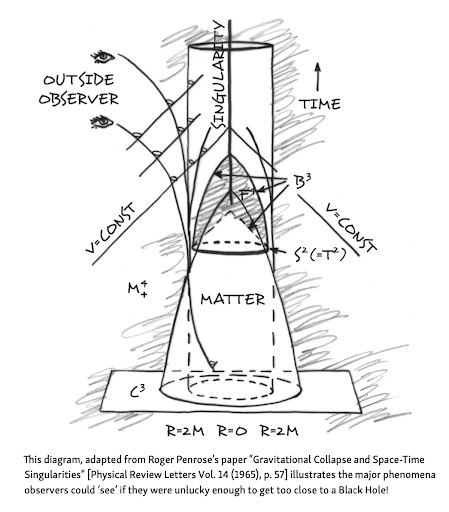

Penrose’s work on black holes, in collaboration with Stephen Hawking, led to the ground-breaking discovery that all matter within a black hole collapses to a singularity, a point in space where mass is compressed to infinite density and zero volume. This revelation illuminated our understanding of these enigmatic cosmic entities.

Beyond the singularity result, he also developed a method of mapping the regions of space-time surrounding a black hole, known as a Penrose diagram. This tool allows us to visualize the effects of gravitation upon an entity approaching a black hole, providing a window into the heart of these celestial mysteries.

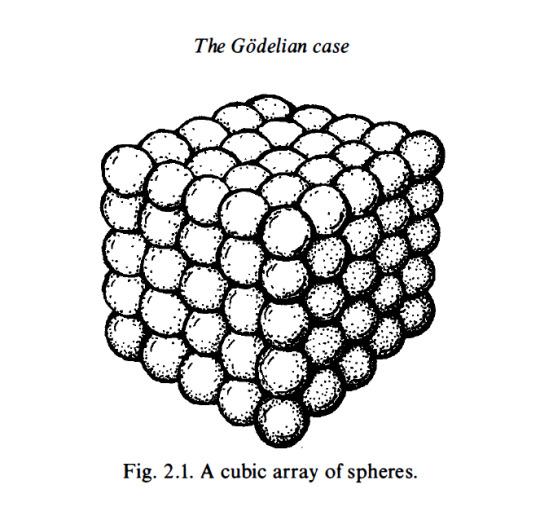

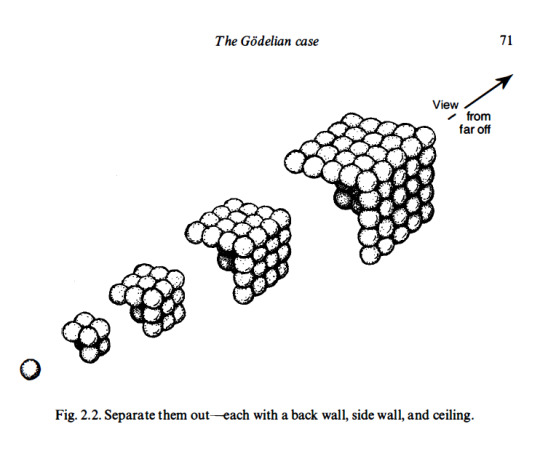

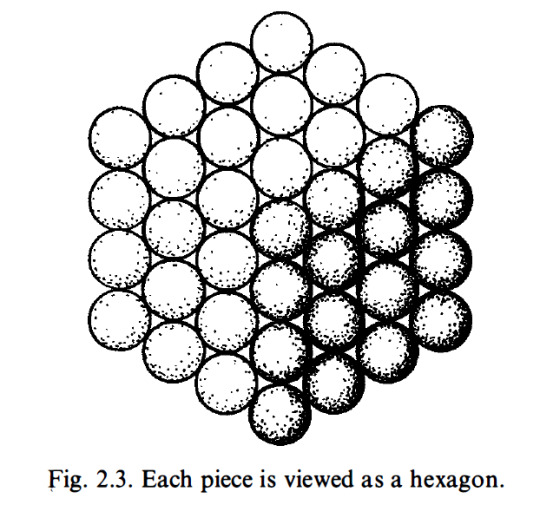

In the chapter “The Gödelian Case” (from “Shadows of the Mind”), Penrose examines the profound implications of Kurt Gödel’s incompleteness theorems in relation to the connection between mathematics and geometry. Specifically, his attention centers on the model depicted in Figure 2.1, which portrays a cubic array of spheres. Through this visual representation, Penrose explores the intricate relationship between geometry and mathematical understanding.

By introducing the model of a cubic array of spheres, Penrose highlights the fundamental role of spatial arrangements in mathematical cognition. This geometrical structure serves as a metaphorical embodiment of mathematical concepts, illustrating how spatial configurations can stimulate cognitive processes and facilitate intuitive comprehension of mathematical truths. The intricate interplay between the arrangement of spheres within the model and the underlying principles of mathematics encourages contemplation on the deep-rooted connections between geometry, spatial reasoning, and abstract mathematical thought.

Penrose’s utilization of the cubic array of spheres underscores his broader philosophical framework, which challenges reductionist accounts of human cognition that rely solely on formal systems or computational models. Through this geometrical representation, he advocates for a more holistic understanding of mathematical insight, one that recognizes the essential role of geometric intuition in shaping human understanding.

By looking at the intricate connection between mathematics and geometry, Penrose prompts a re-evaluation of the mechanistic view of cognition, emphasizing the need to incorporate spatial reasoning and intuitive geometrical understanding into comprehensive models of human thought.

(E) Find a sum of successive hexagonal numbers, starting from 1, that is not a cube. I am going to try to convince you that this computation will indeed continue for ever without stopping. First of all, a cube is called a cube because it is a number that can be represented as a cubic array of points as depicted in Fig. 2.1. I want you to try to think of such an array as built up successively, starting at one corner and then adding a succession of three-faced arrangements each consisting of a back wall, side wall, and ceiling, as depicted in Fig. 2.2. Now view this three-faced arrangement from a long way out, along the direction of the corner common to all three faces. What do we see? A hexagon as in Fig. 2.3. The marks that constitute these hexagons, successively increasing in size, when taken together, correspond to the marks that constitute the entire cube. This, then, establishes the fact that adding together successive hexagonal numbers, starting with 1, will always give a cube. Accordingly, we have indeed ascertained that (E) will never stop.
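Penrose’s picture can also be checked algebraically. Take his “hexagonal numbers” to be 1, 7, 19, 37, …, the sequence usually called the centered hexagonal numbers. The $k$-th of these is exactly the number of points in the $k$-th three-faced shell, which is the difference of two consecutive cubes. The sum therefore telescopes:

```latex
\begin{aligned}
H_k &= 3k(k-1) + 1 = k^3 - (k-1)^3, \\
\sum_{k=1}^{n} H_k &= \sum_{k=1}^{n} \bigl[k^3 - (k-1)^3\bigr] = n^3 - 0^3 = n^3 .
\end{aligned}
```

For example, $1 + 7 + 19 = 27 = 3^3$, so no partial sum can ever fail to be a cube.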

Penrose’s work is characterized by a profound appreciation for geometry. His father, a biologist with a passion for mathematics, introduced him to the beauty of geometric shapes and patterns at a young age. This early exposure to geometry shaped Penrose’s unique approach to scientific problems, leading him to develop new mathematical notations and diagrams that have become indispensable tools in the field. His creation of the Penrose tiling, a method of covering a plane with a set of shapes without using a repeating pattern, is a testament to his innovative thinking and his deep understanding of geometric principles.

His fascination with geometry extended beyond the realm of mathematics and into the world of art. He was deeply influenced by the work of Dutch artist M.C. Escher, whose intricate drawings of impossible structures and infinite patterns captivated Penrose’s imagination. This encounter with Escher’s art led Penrose to explore the interplay between geometry and art, culminating in his own contributions to the field of mathematical art. His work in this area, like his scientific research, is characterized by a deep appreciation for the beauty and complexity of geometric forms.

In geometric cognition, Penrose’s work has the potential to make significant contributions. His unique perspective on the role of geometry in understanding the physical world, the mind, and even art, offers a fresh approach to this emerging field. His belief in the power of geometric thinking, as evidenced by his own ground-breaking work, suggests that a geometric approach to cognition could yield valuable insights into the nature of thought and consciousness.

Objective mathematical notions must be thought of as timeless entities and are not to be regarded as being conjured into existence at the moment that they are first humanly perceived.

I argue that the phenomenon of consciousness cannot be accommodated within the framework of present-day physical theory.

His Orch OR (orchestrated objective reduction) theory, developed with the anesthesiologist Stuart Hameroff, posits that consciousness arises from quantum computations in microtubules within the brain’s neurons. This bold hypothesis, bridging the gap between the physical and the mental, has sparked intense debate and research in the scientific community.

Penrose’s work on twistor theory, a geometric framework that seeks to unify quantum mechanics and general relativity, is a testament to his belief in the primacy of geometric structures. This theory, which represents particles and fields in a way that emphasizes their geometric and topological properties, can be seen as a metaphor for his views on cognition. Just as twistor theory seeks to represent complex physical phenomena in terms of simpler geometric structures, Penrose suggests that human cognition may also be understood in terms of fundamental geometric and topological structures.

This perspective has significant implications for the field of cognitive geometry, which studies how humans and other animals understand and navigate the geometric properties of their environment. If Penrose’s ideas are correct, our ability to understand and manipulate geometric structures may be a fundamental aspect of consciousness, rooted in the quantum geometry of the brain itself.

The final conclusion of all this is rather alarming. For it suggests that we must seek a non-computable physical theory that reaches beyond every computable level of oracle machines (and perhaps beyond). — Roger Penrose, Shadows of the Mind: A Search for the Missing Science of Consciousness

#geometrymatters#roger penrose#geometric cognition#cognitive geometry#consciousness#science#research#math#geometry

Easy IDs to do for beginners

[Plain text: Easy IDs to do for beginners /end PT.]

Disability Pride Month is here! And so I think it'd be neat to encourage people to describe more images, as advocacy for accessibility.

But I get that describing images (visual stuff) in *your own words* may seem a bit challenging, especially if you've never done it before, so I decided to gather some easy things you can describe to start!

1 - Text transcripts

What is a text transcript? A text transcript is when you have an image whose only component is text, and you take the text from it and write it out for people who, for whatever reason, can't access the image themselves. For example, blind people who use a text-to-speech device to read what's on the screen can't get at the text inside an image. People with low vision who set their text to display in a bigger font can't enlarge the text inside an image either, so it stays too tiny for them to read. An example of a text transcript:

[ID: Text: An adult frog has a stout body, protruding eyes, anteriorly-attached tongue, limbs folded underneath, and no tail (the tail of tailed frogs is an) /end ID.]

(this is from the Wikipedia page on frogs.)

Text transcripts are easy to do because you only have to take the already existing text from an image and type it out. For longer text-only images, you can also use a text recognition AI tool, such as Google Lens, to select the text for you, and then you just have to copy and paste it into the description.

2 - Memes

Despite what you may think, most memes (especially 1-panel memes) are incredibly easy to describe, because they come from a well-known template. Take this one, for example:

[ID: The "Epic Handshake" meme. One of the people in the handshake is labelled "black people", and the other is labelled "tall people". The place where they shake hands has the caption "constantly being asked if you play basketball". /end ID.]

They are easy to describe because, despite having many elements, you can sum them all up in a few words, like "the Loss meme", "the Is This a Pigeon meme", "the Bernie Sanders meme", and so on. When you describe memes, you don't have to worry about every single detail (@lierdumoa explains this better in this post), only about 'what makes this meme funny?' If you are describing one, just say which meme it is and how it differs from its template, like the captions or anyone's face that may have been edited in.

3 - One Single Thing

Images with "one single thing" are, I think, the easiest thing in the world to describe. When you describe things, what you're supposed to do is "describe what you see". If there's only one thing to see, then you can easily describe it! Quick example:

[ID: A banana. /end ID.]

See?

You could also describe this image as "a single yellow banana on a plain white background", but this extra information is not really important. Everyone knows a banana is yellow. That is not unusual, and neither that nor the color of the background changes anything in the image. So in these types of descriptions, you can keep things very short and simple and deliver your message just as well.

An exception would be something like this:

[ID: A blue banana. /end ID.]

In this case, where there is something unusual about the object, describing it will be more useful. When you say "banana", one would assume the banana is yellow, so to clarify, you say that this specific banana is blue.

When your One Single Thing is unusual in some way, like a giant cat, a blue banana, or a rotten slice of bread, pointing out its unusual characteristic is the best way to go.

3.5 - A famous character or person

This one is actually similar to the One Single Thing type of ID. When you are describing, say, a random person or an OC, you'd want to describe things like their clothes, their hair color, etc. But with an already well-known figure, like Naruto or Madonna, just saying their name delivers the message very well. Like this, for example:

[ID: Taylor Swift, singing. /end ID.]

or

[ID: Alastor from Hazbin Hotel, leaning on his desk to pick up a cup. /end ID.]

In both of these cases, you technically could describe them as "a blonde woman with light skin...", "a cartoon character with animal ears and a suit..." but you will be more straight to the point if you just say "Taylor Swift" and "Alastor". In these cases, it's usually very useful to describe what they're doing as well, like "singing" and "leaning on his desk to pick up a cup", or whatever else.

An extra tip I can give you for describing characters specifically is to point out if they are wearing anything different. Most cartoon characters have a signature outfit and hairstyle that one would expect them to be in. So, similarly to the blue banana case, if they are wearing something different than usual, it comes in handy to state that in your description. Like this:

[ID: Sakura from Naruto wearing a nurse outfit. /end ID.]

Sakura's usual outfit is not a nurse one, so since she is wearing one, pointing it out is very helpful.

The last tip I have for describing characters is pointing out which franchise they are from. For example, if I just said "Sakura", you'd probably assume it was this one, since she is famous, but you wouldn't be able to be sure, because how many Sakuras are out there? So, saying "Emma from the X-Men" and "Emma from The Promised Neverland" is gonna be very helpful.

Helpful resources and final considerations:

A masterpost I did with many tutorials and tips for doing image descriptions in general

Why are image descriptions important (even for sighted people)?

And a few tips about formatting:

Putting "ID" and "end ID" at the start and end of your description helps the people reading it know where the description starts and where it ends, so they don't read, say, your caption and think it is still part of the description.

Customized fonts, colored text, italics, bold text, and tiny text aren't things you should write your ID in. Most customized fonts are pretty hard to understand, and most text-to-speech devices can't recognize them. Tiny text is hard to see for people who need big fonts, and italicized text has the same issue because it makes the words smaller. Full lines or paragraphs of colored text can cause eyestrain when people try to read them. Bolded text squeezes the letters too close together, which can make it even harder to read for people who already have trouble reading.

And that's it! Happy describing, folks!

#acessibility#disability pride month#image desciptions#alt text#described#once you are more familiarized with doing ids its always good to move on to more detailed descriptions#like all skills describing images is a think you have to harbor to get better at#but describing simple things is better than describing nothing!#it's easy AND it's good!#and also please note that i am a sighted person#what can always help is ask the blind people/people with low vision themselves what is helpful in an id#long post

How I Customize Windows and Android

Windows: Rainmeter

Rainmeter Skins

Rainmeter | Deviantart

r/Rainmeter

Rainmeter is where I get nifty desktop widgets (skins). There are a ton of skins online and you can spend hours just getting caught up in customizing. There are clocks, disk information, music visualizers, weather widgets*, and more.

I get most of my skins from the links I posted, but they are by no means the only resources for Rainmeter skins. r/Rainmeter and Deviantart have some awesome inspiration.

This is what my desktop looks like right now:

Dock: Dock 2 v1.5

Icons: icons8 - this is probably the best free resource for icons I know of

"Good Evening [name]": Simple Clean

Clock: Simplony

* Note about weather widgets: Older Rainmeter skins that use old weather APIs will likely not work. The Rainmeter forums have lists of weather skins that do work.

Windows: Useful Things for Workflow

Flow Launcher - this is basically a search bar, app launcher, and even easy-access terminal all in one. The default hotkey is Alt+Space. I mostly use it for quick calculations. There are a ton of plugins and I've barely scratched the surface of what it can do.

ShareX - This is my screenshot tool and I love it. Admittedly, I find it difficult to configure, but once I had it set up, I didn't really have to adjust it. You can create custom hotkeys to screenshot your entire screen, or to select your screen, or even use OCR. This has saved me a ton of time copying over text in images and making it searchable.

Bonus - Get Rid of Windows Web Search in the Start Menu: If you're comfortable with editing your registry, and you want to get rid of the pesky web results in Windows search, this fix is what I used to get rid of it.

Android: Nova Launcher

This is my main Android launcher that I've been using for almost as long as I've owned a smartphone, and it's super customizable. The best part is that it's free with no ads, and you can purchase premium at a one-time cost.

The main things I use it for are app drawer tabs, renaming apps, hiding apps, and changing the icons.

I've had premium for so long that I've forgotten what the features were, but looking at the website, the one feature I use is app folders.

This is what my phone homescreen and app drawers look like:

Time/Weather: Breezy Weather

Calendar: Month: Calendar Widget (I got this on sale for like 30 cents once but there are a plethora of good calendar apps out there)

Icons: Whicons - White Icon Pack

Advanced Customization

Further things to enhance your customization experience to look into include:

Flashing a custom Android ROM (e.g. LineageOS)

Give up on Windows & install Linux instead (Ubuntu is a good one to start with)

Android app modification: ReVanced apps (includes Tumblr), Distraction Free Instagram

Miscellaneous notes under the cut:

None of these links are affiliate links. These are all tools I happen to use on a daily basis and I'm not being paid to promote them.

Install Rainmeter skins and programs I recommend at your own risk. Before altering Windows, such as editing the registry, make sure you have everything backed up.

The Windows web search fix works on my Windows 11 machine. I don't know if it works for Windows 10, but I do know I was able to disable it in Windows 10 at some point, so your mileage may vary.

Install non-Play Store apps at your own risk. (Although in my opinion, open source APKs are less sketchy than some apps on the Play Store...) Always check where you're downloading APKs from!

The wallpapers on my desktop and phone are custom ones I made myself.

(At the request of @christ-chan-official)


Rodimus gets pregnant with a conjunxed mech's baby, serious/angsty version. We need more fucked up dratchrod fr

I can't believe the first ever fanfiction I write is Ratchet doing medical malpractice lmao

Fuckin,,, uh,,, warning for mention of miscarriage/imagined threat to an unborn child??? I feel like I should warn for that.

Ratchet liked to believe he tolerated Rodimus with the same grace he gave to the rest of Drift's most ridiculous eccentricities. Just because they were conjunxed, didn't mean they were perfectly in tune and of the same opinion on everything. Every relationship had to have some give and take.

At the very least, Rodimus was cheaper than the crystal collection.

But that did mean he had to hear more about Rodimus than he ever really wanted to. And when Drift started expressing his concerns about "Roddy not feeling 'himself'"...

A suspicion had crept over him, like a coolant rupture slowly freezing his energon lines.

Rodimus walked into the medbay, aiming for flippantly casual and falling just short of the mark. Rodimus tended to avoid the medbay when Ratchet was on shift. Even discounting that, he could see what Drift meant about that "disturbed energy" nonsense. Rodimus's field was noticeably (at least, for a medic) pulsing at a lower frequency.

"So what did you call me down for, doc?"

Ratchet got straight to the point. He didn't have the patience for anything else.

"I need to do an examination." He gestured vaguely to the table. "Up you go."

Rodimus took one look at the medberth configuration and snickered. Climbing up and putting his legs into position, he started, "If this is your way of saying you want a threesome-"

"Can it," he snapped, working the latches on the stirrups.

Rodimus, legs spread, reclined on the exam table. Ratchet found he had even less of a tolerance than usual for Rodimus's chatter, though it was more in the vein of nervous rambling.

"I don't have a virus, doc, you would know," he said, with an obnoxious little browplate wiggle. Ratchet deliberately tuned him out, especially the uptick of irrelevant and vaguely sexual comments once he brought out the speculum. Thankfully, Rodimus still retracted his panel without a fight.

Rodimus had a larger than average anterior node, and a line of biolights trailing down his valve lips that matched the node's vibrant red color. As Ratchet spread his slit and inserted the speculum, he saw that the internal lights were the same.

It's a very pretty valve. He can see why Drift would like it.

Unfortunately, he can't confirm the absence of what he's looking for with a visual alone. (Not to mention that the pulsing and flaring of internal biolights wasn't helping visibility any.)

Ratchet gently removed the speculum, ignoring the strings of lubricant that stretched and snapped between the tool and Rodimus.

"I'm going to have to do a manual examination. Try not to flex your calipers or pelvic floor."

Rodimus squeaked out something affirmative. Ratchet pressed two fingers into his valve, but was unsurprised to note that he'd need to insert more to find what he was looking for.

...Rodimus was silken smooth to the touch. He straightened four fingers and slowly pushed in further, firmly ignoring how the soft and wet valve lining trembled around his hand, until Rodimus's anterior node met the dip between his thumb and palm.

With most patients, he wouldn't insert something this big in one go, but it was Rodimus. Ratchet would bet he'd taken something bigger in the past day.

His fingertips ghosted across Rodimus's ceiling node, before finally finding the forge iris.

And confirming his suspicion.

"Congratulations. You're sparked." Even with how distant his own voice sounded to his audials, he could tell it was bitingly cold. Unfortunately, it's not viable, he doesn't say, fingertips gently pressed to the seal, soft, perfectly intact. The gestational seal that protects the protoform appears to have already ruptured. Your systems will register the breach as a confirmed contamination of foreign bodies, and terminate the protoform.

Ratchet looks up, finally, to see Rodimus.

There's a subtle tremor on his lips. Fear, in his matrix-blue eyes. His spark, suddenly, feels flayed open under them.

It could be in reaction to the news. Ratchet knows it isn't. He knows:

Rodimus is scared of him.

His fingers were absentmindedly stroking over the seal, and he nearly snatched them back like they'd been burned. The hand that calmly pulls out of Rodimus's valve doesn't feel like his.

For one delirious moment, he wondered if Rodimus would do just that. Light up his ridiculous mod, and burn the three of them to death together. All of them gone, in one final moment of complete and total devastation.

Drift, entirely alone, with only the memory of a conjunx to cling to. Nothing left to tell him of the sparkling he would never know he had.

The moment ends. Ratchet finds that he's the one rambling now, statistics about carriages, essential nutrients, general hazards. Somewhere in all of that, Rodimus gets unbuckled from the medberth.

He rattles out something about scheduling him regular check-ups with First Aid.

And,

"I'll leave you to tell Drift the good news."

Ratchet can't look at him when he says it.

Rodimus leaves his medbay without any further commentary.

#valveplug#mine#3nthusiast writes#do i. maintag this...#mechpreg#ratchet#rodimus#if you saw the half done version of this that i accidentally posted while editing. no you didnt#functional webbed site


will you ever make janitor ai or character ai bots out of any of the characters from your fanfics? if not.. can people make them? (please i’m dying to make a Dream Blob au bot i’m on my hands and knees begging here)

In short: no. Please, please, please do not.

In long: please do not feed my fics or posts to A.I. To do so would be actively against my wishes, and even the thought of it is upsetting and angering to me.

A.I. has a lot of potential to do good – in the medical field, in the sciences, etc. There is nuance to the subject of A.I. in general. Regarding specifically A.I. art, the technology is not yet advanced enough to be used as a tool in the way that a tool is meant to be used – it is not a brush you can download, or a digital model you can pose. It is “trained” through being fed lots and lots of real, human-created pieces of art, and copying that art.

It does not learn how to use specific brush strokes, or specific colours, or how or why certain details are included or left out. It is wholesale lifting from what it is fed and mashing it together into new configurations, Frankenstein style – there is nothing creative about it. Similarly, when fed fictional writing, it does the same: it copies and pastes common tropes, common story beats, common plots, common phrasings – there is nothing of creativity in there at all.

There are currently no legal protections for artists of any kind against A.I. algorithms; the technology is still too new, and already it is causing harm. Even just on the practical side, the environmental impacts of the excessive electricity usage needed to run the A.I. are immense.

A.I. generated art is theft, pure and simple. It cannot be currently described as anything other than that. And creative writing is a form of art. A book that you pick up in an airport, or a fanfic you open in a tab on your phone, or a well-thumbed novel you found on a shelf in a café – these are pieces of art. Perhaps you do not think of them that way, in the same way you might look at a painting and say, “Yes, that is a piece of art,” but they are.

I was talking to a friend of mine some time ago, and they said (and I agreed) that writing is often devalued as a form of art, because the idea that “anyone can write a book” is so pervasive. And, yes, anyone can write a book – or anyone can write letters onto a page in a specific order. In much the same way, anyone can draw a picture, or paint a mural. It doesn’t mean that there isn’t a creative process involved, and it doesn’t mean that there isn’t the development of skills and immense amounts of knowledge and experience going into story-crafting as much as there is visual arts. Quite frankly, anyone who says, “Oh, anyone can write a book,” has almost certainly never actually tried to write a book themselves.

My friend went on to say that very often books are considered objects, just things, not pieces of art that have been handcrafted just for you, just for someone to pick up and immerse themselves in and enjoy. In much the same way, fanfic has also become a commodity – perhaps even more so, because its content is based on a pre-existing canon that does not belong to the writer. But fanfiction is still art, in much the same way that fanart is still art, and the devaluation of it and its creators is upsetting and frustrating.

I am not a machine. I do not press some buttons, pull some levers, and start outputting fanfic. This is something I do for fun, because I enjoy it. It is something I post online because I want other people to come enjoy it, too, and for it to be an expression of art meant as a part of a fan community’s expression of love for a canon. That is what being a fan is all about.

I am a real human being, and I don’t deserve to have my art stolen from me, fed to a shambling corpse spouting out things it has “learnt” from both my art and from every other piece of art that has been stolen to feed it. Anything it would say – that would not be my story, because it doesn’t come from me. It would just be an amalgamation of thousands of people’s stories, cut down into something mainstream and palatable because the point of so-called A.I. art is not to create unique and interesting stories – it is to create generic ones that will sell easily under the model of late stage capitalism.

You know, I got the email notification for this ask last night. I have my email notifs on because I spent so long being shadow-banned on this blog, and I fear missing things in my inbox. I checked my phone in the middle of the night because I couldn’t sleep, and while I was reading the ask I could hear my mother breathing in her sleep just nearby – we’re in a caravan together, because it’s been a while since I went away with my parents. I am typing this answer up from that same caravan, and I’m squinting a little because the sun is reflecting off my screen. We’re going to have a barbecue later for dinner – we just bought the food for it not three hours ago. Did you think of that? Did you think about the way that I am a person, living my own life, and now I am being forced to beg for you to respect me as one?

Because that’s what you would be doing, if you did this: you would commodify me, and you would commodify my art. It would be just another machine-made thing, not something that’s handmade for others’ enjoyment; not something that work – my time, my energy – has gone into, that my passion and love has gone into. But I am not a thing, and I resent being implicitly treated like one.

If you really want to know more about people’s fanfics – talk to them! Leave a comment, send in an ask, engage with them in some way. Fanfic is created by fans for the enjoyment of other fans, and fan communities are still communities, which means there is a social element to them. Stealing from others, as one might expect, is frowned upon greatly – they gave that to you, for free. You pay nothing for it – and shouldn’t – and now you want to plagiarise and thieve what was shown in good faith?

I suppose that, ultimately, if you were truly determined, there is nothing that I can do to stop you. You could copy/paste my works into your A.I. bot creator and go on your merry way, despite how I’ve told you that such would make me extremely upset, and that it isn’t something I want. I can say, “I forbid you to do this,” - and make no mistake I do forbid you – and ultimately I have no power to actually stop you, because there is no law in place to prevent you from doing exactly as you please.

I can do nothing to stop you except this: I am asking you not to. Please.


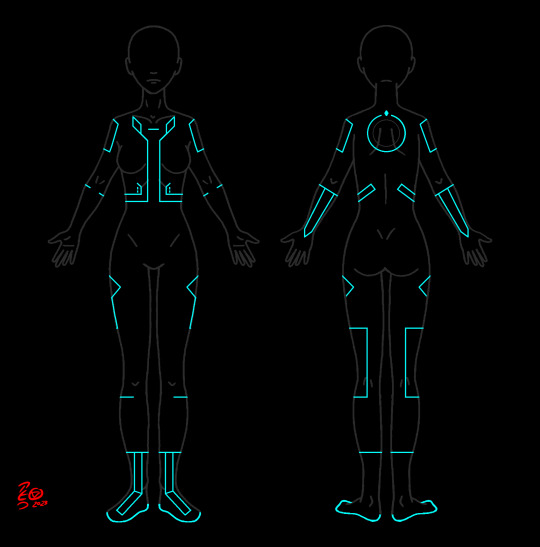

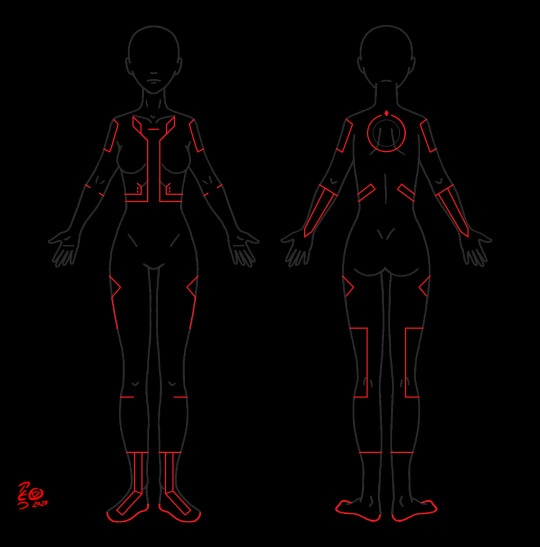

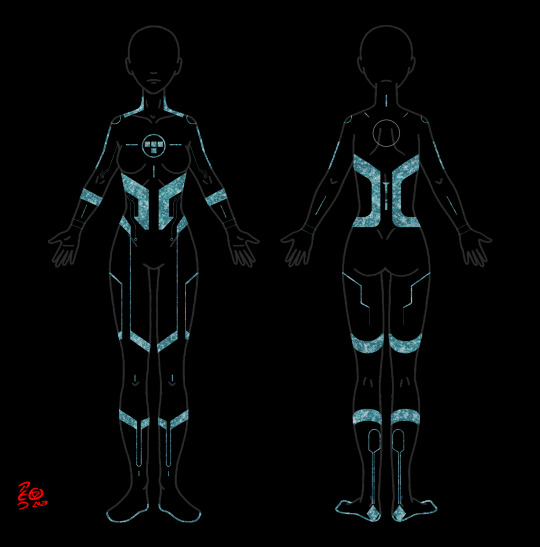

In honor of Tron Day, here's my project for my Tron OC Ark that I've been working on for a while now!

I used this base with some modifications to it for everything:

Iyzel Reaar's F2U Female Ref Base

The main modifications I did were to mirror half of it so I didn't have to figure out how to draw the circuits twice, erase certain features I'm not sure Basic programs have, and make the lines solid.

I used Windows 11 Paint's line and shape tools to draw the circuits, adding a few pixels here and there when needed.

I used the GNU Image Manipulation Program for a lot of the color editing I did and for layering the textures.

-

The first two are Ark's base circuits, along with the red rectified version of them for Mars.

I used my friend @quesadillawizard's art and design for Ark - Ark's circuits are based off ones from the DS version of Evolution and Wolfy added more features to them.

I used the reference he made for me to make the circuits and referenced this sketch as well.

-

The next two are the Tron/Renegade circuits from Uprising, in both gray and black, as seen in the show.

@tucatalog was super helpful for references!

I mainly used two posts of theirs:

I looked at a few others as well, but I have a list of 50+ of their posts and was flipping between them, so I'm not entirely sure which others I used.

-

The next two are Grid scar versions of the Tron/Renegade circuits.

In Ark's backstory, she was captured and tortured by the Occupation. I've played around with different scar configurations for her and I love the idea of them literally carving the Tron circuits out/off of her.

I decided to do something a little different for the scars: I'd tried another technique that wasn't much fun, and I'm not sure how to mimic the scars from Uprising.

So, I took some textures I got from Etsy and layered them over each other to try to make something interesting.

Textures I used:

Gemstone Crush

Iridescent Textures

Glassy Textures

Iridescent Glass

Sparkly Glitter

Fake Glitter Particles

I'm not sure most of these came through. I found that the glitter ones did a lot of the heavy lifting I wanted, so they're the most visible ones and the ones on top.

Anyway, one is just the Renegade scars and the other is the scars overlaid on Ark's circuits.

-

The last two are the circuits and scars over a skintone for circumstances when Ark ends up in the User world.

I like the thought of program circuits being barely visible in the User world, with just a hint of faint iridescence when the light hits them just right.

One of the textures from this pack fit my idea fairly well, so that's what I used:

Iridescent Textures

The scars are the ones I made earlier with a skin tone color overlay to make them blend in.

This is what I started this project for, as I wanted a visual for an RP and a potential fic.

-

I've done a smaller-scale version of this before. That time, I made the scars by repeatedly scribbling over the circuits with multiple different colors, which hurt my hand, lol.

-

Anyway, that's my project! It's not perfect, but I'm pretty pleased with how it came out!

A big thanks to @quesadillawizard and @proto-actual for looking them over!

Happy Tron Day!

#Tron#Tron Legacy#Tron Uprising#Renegade#Tron OC#Ark#Tron: Legacy#Tron: Uprising#OC#OCs#idea bag#my art


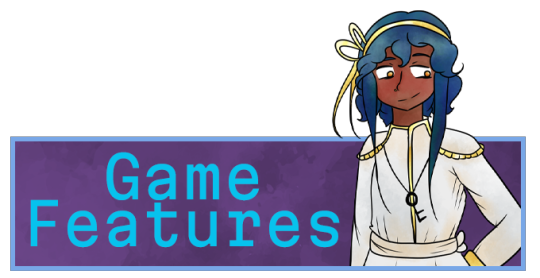

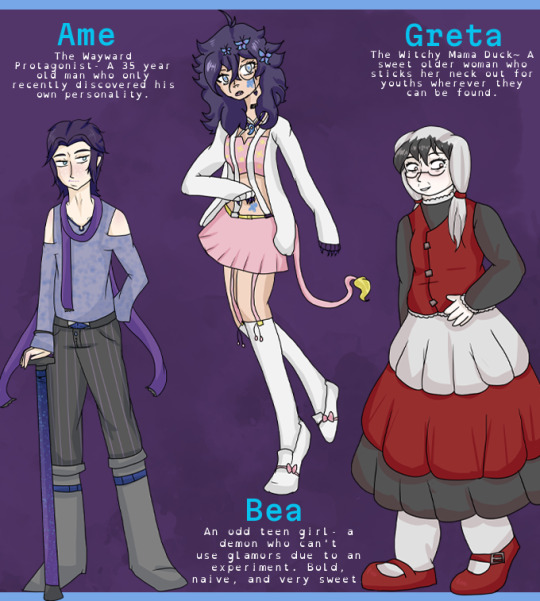

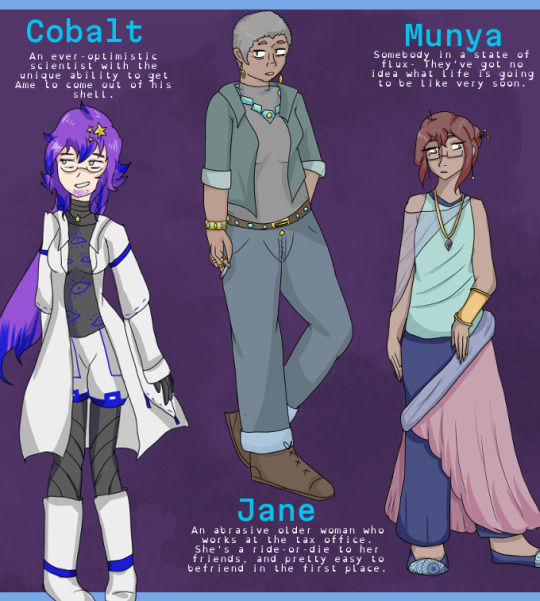

Entropic Float 2 Demo and Kickstarter Launch!

Picture a point-and-click adventure game. Now picture a branching, time-looping ontological mystery visual novel. Entropic Float 2 combines those into its gameplay concept: A point-and-click where you can never solve every puzzle at once. Where you have one key that fits two locks... And the only way to see what's behind the lock you didn't choose is to die, repeat the day, and try something different this time. With only you and your protagonist aware of the loop, seek an answer that earns new information. Through trial and error, solutions and murder... Can you find the way through this new Anomaly?

In early 2021, I started working on a project just for fun. Using the character creator and scene assembly tools of Illusion's Koikatsu, paired with the visual novel engine Ren'Py, I decided to learn to make a VN. A year and a half, over three hundred thousand words, and quite a bit of pocket money later, I released 'Entropic Float: This World Will Decay And Disappear' for free on Steam and itch.io.

Something that was never meant to be more than a fun exploration of a concept grew into a huge endeavor for me, a visual novel as long as any two Umineko episodes combined! I got guest artists, made plenty of art myself, and learned a lot more about coding in Ren'Py than I ever thought I would. After releasing the game, my friends were proud of me, and I've heard from a lot of people it connected with. It became the basis of what I want to keep doing creatively: expanding on this world, telling its story, and finding the people it will resonate with.

That brings us to Entropic Float 2: Land Of The Witch. A sequel I've been planning since the first game released a little over a year ago now. It's a game that can't be free (though if you, for any reason, cannot purchase it, I care more about sharing it than I do about making money) and a game I want to create to that standard. There won't be assets made in a character creator or royalty-free music this time. I hope to bring the Pine Creek Anomaly to the screen with my own two hands, and a little bit of help from freelancers. I want to make a point-and-click adventure worth paying fifteen dollars for, an artistic experience that constitutes a huge step up from the first game.

A demo is available to download and play from itch.io! Features already reflected in the demo include:

The general structure of the point-and-click gameplay; including travel between maps, item collection and use, and conversations with other characters.

Hand-drawn backgrounds (with minor animations) and talksprites.

Profile screens for each character and a memory-seeking system improved from the first game. Exposition is also improved: it now sits mainly in a linear-locked part of the story, so it doesn't need to be repeated in different places and players on different paths don't get confused!

Planned features yet to be implemented include:

Loop Randomization- The demo includes only the very first loop of the story, but in the actual timelooping gameplay, many factors will be randomized between loops. Characters and items will appear and disappear, characters will have different trustworthiness scores, and eventually, the weather will even change and impact puzzles and character behaviors.

Zones- Minigame areas that abstractly inform you more about a character and are locked behind obtaining certain profile notes and memories. The rewards for completing zones will be tools to access additional areas, such as Area 2 waiting beyond the broken bridge.

Characters- Several of EF2's characters don't appear yet in the demo, due to having their introductions tied to maps that aren't accessible in Area 1.

General Improvements- Characters currently only have one talksprite. The preferences page is still in the Ren'Py default configuration. Some music tracks recycled from the first game aren't the best fit for the situation. All of these are a result of the current version being a demo, and all of them will improve sooner if this Kickstarter is a success.
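As a rough illustration of how loop randomization like this could stay reproducible, here is a minimal sketch that derives each loop's state from a base seed plus the loop number, so replaying the same loop gives the same layout while different loops vary. It is plain Python because Ren'Py scripts embed Python, but it is not the game's code: every name here (`CHARACTERS`, `ITEMS`, `WEATHER`, `roll_loop`), the 0-100 trust scale, the 70% appearance chance, and the fixed weather on loop 1 are hypothetical.

```python
import random
from dataclasses import dataclass

# Hypothetical placeholders, not the game's actual roster or items.
CHARACTERS = ["npc_a", "npc_b", "npc_c"]
ITEMS = ["key", "lantern", "map"]
WEATHER = ["clear", "rain", "fog"]


@dataclass
class LoopState:
    loop_number: int
    present_characters: list
    present_items: list
    trust: dict
    weather: str


def roll_loop(base_seed: int, loop_number: int) -> LoopState:
    """Build one loop's randomized state; same inputs always give the same state."""
    # A dedicated Random instance per loop, so the global random module is untouched.
    rng = random.Random(base_seed * 1_000_003 + loop_number)
    # Each character independently has a 70% chance to appear this loop (assumption).
    present = [c for c in CHARACTERS if rng.random() < 0.7]
    # Between one and all items are available this loop.
    items = rng.sample(ITEMS, k=rng.randint(1, len(ITEMS)))
    # Trust scores only for characters who are present.
    trust = {c: rng.randint(0, 100) for c in present}
    # Assumption: the first loop is fixed, later loops roll the weather.
    weather = "clear" if loop_number == 1 else rng.choice(WEATHER)
    return LoopState(loop_number, present, items, trust, weather)
```

Inside Ren'Py, functions like these could live in an `init python:` block. Ren'Py also provides `renpy.random`, which cooperates with rollback; `random.Random` is used here only so the sketch runs outside the engine.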

Shortly after handling the Anomalous Clocktower in the Black Rock Desert, Kanatsune Ame was promoted. Now, as an agent of the Wish Task Force at a rank equipped to handle more complex Anomalies, he's received an assignment for another one above his pay grade. Pine Creek, an entire town that was wiped from the map and from all memory several years ago, only to suddenly begin dragging new victims inside within the last few months... Somebody inside this forgotten piece of miserable Americana decided to call out to the world that left it behind, and it's up to you to find out who that could be.

Thank you, as always, for reading!

#entropic float#visual novel#official content#visual novel gamedev#gamedev#indie games#indie gamedev#vndev#entropic float 2: land of the witch#kickstarter#crowdfunding


How Unreal Engine Empowers Real-Time 3D Product Configurators

In today's fast-paced digital landscape, businesses are constantly seeking innovative solutions to enhance customer engagement and streamline the purchasing process. One such solution is the real-time 3D product configurator, a powerful tool that allows customers to visualize, customize, and order products directly from their devices. At the forefront of this technology is Unreal Engine, a versatile game engine renowned for its exceptional rendering capabilities and user-friendly interface. This blog post explores how Unreal Engine empowers 3D product configurators, revolutionizing the way consumers interact with products.

What Is a 3D Product Configurator?

A 3D product configurator is an interactive application that enables users to customize products in real time. These configurators are widely used across various industries, including automotive, fashion, and furniture. By letting customers manipulate product features such as colors, materials, and sizes, these tools enhance the user experience and can increase conversion rates.

The effectiveness of a product configurator largely depends on the underlying technology used to create it. Unreal Engine stands out as a top choice due to its advanced features and capabilities that cater specifically to the needs of product visualization.

Key Advantages of Using Unreal Engine

High-Fidelity Visuals: Unreal Engine is renowned for its stunning graphics and high-quality rendering capabilities. It supports advanced features like ray tracing and volumetric clouds, allowing businesses to create photorealistic representations of their products. This level of detail enhances customer trust and satisfaction as they can see exactly what they are purchasing.

Real-Time Rendering: One of the most significant advantages of Unreal Engine is its real-time rendering capability. This feature allows users to see changes immediately as they customize products, creating an interactive experience that keeps customers engaged. Quick feedback loops enable rapid iterations during the design process, which is crucial for businesses looking to refine their offerings.

Cross-Platform Compatibility: Unreal Engine supports a wide range of platforms, including desktop, mobile devices, consoles, and VR headsets, and configurators can reach web browsers through Pixel Streaming, which renders on a server and streams the result to the browser. This versatility ensures that businesses can reach their customers wherever they are, whether on a desktop or a mobile device. The ability to deploy across multiple platforms from one project is a significant advantage for any organization.

User-Friendly Interface: The Blueprint visual scripting system in Unreal Engine allows developers to create complex interactions without extensive programming knowledge. This accessibility enables teams to develop sophisticated configurators quickly while maintaining flexibility in design and functionality.

Robust Community Support: With over 7.5 million developers using Unreal Engine globally, there is an extensive community providing resources, tutorials, and support. This vast network makes it easier for businesses to find skilled developers and troubleshoot issues as they arise.

Types of Configurators Built with Unreal Engine

Unreal Engine can be used to create various types of product configurators tailored to specific industry needs:

Automotive Configurators: Car manufacturers use Unreal Engine to allow customers to customize vehicles by selecting colors, wheels, interiors, and more. These configurators provide an immersive experience where users can visualize their dream car before making a purchase.

Fashion Configurators: In the fashion industry, brands utilize 3D configurators to let customers personalize clothing and accessories. Users can choose fabrics, colors, and styles in real-time, enhancing their shopping experience.

Furniture Configurators: Furniture retailers leverage Unreal Engine's capabilities to allow customers to design their ideal living spaces by selecting different furniture pieces, colors, and arrangements. This interactive approach helps customers envision how products will fit into their homes.

Building a 3D Product Configurator with Unreal Engine

Creating a successful 3D product configurator involves several key steps:

Choosing the Right 3D Models: High-quality 3D models are essential for an effective configurator. Businesses should invest in accurate representations of their products using software like Blender or Maya before importing them into Unreal Engine.

Implementing Product Logic: The configurator must incorporate logic that governs how users interact with the product features. This includes defining customizable options and ensuring that changes made by users are reflected in real time.

Designing an Intuitive User Interface (UI): A well-designed UI enhances user experience by making navigation easy and intuitive. Designers should focus on creating clear menus and buttons that allow users to customize products seamlessly.

Testing for Performance: Before launching the configurator, thorough testing is essential to ensure that it performs well across all intended platforms. This includes checking for load times, responsiveness, and visual fidelity.

Integrating E-commerce Capabilities: To complete the customer journey from customization to purchase, businesses should integrate their configurator with e-commerce platforms like Shopify or WooCommerce. This step ensures a seamless transition from product visualization to ordering.
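To make the "Implementing Product Logic" step more concrete, here is a minimal, engine-agnostic sketch of the rules layer a configurator front end might call: a catalog of options with price deltas, a table of incompatible combinations, and a function that validates a selection and totals its price. The option names, prices, and the `INCOMPATIBLE` rule format are invented for illustration; in an Unreal project this logic would more likely live in Blueprints, C++, or a backend service.

```python
# Hypothetical catalog: prices are integer cents to avoid float rounding.
BASE_PRICE_CENTS = 49_900
OPTIONS = {
    "color": {"red": 0, "black": 0, "pearl_white": 4_500},
    "wheels": {"standard": 0, "sport": 12_000},
    "interior": {"cloth": 0, "leather": 20_000},
}

# Hypothetical rule format: each frozenset lists (group, choice) pairs
# that may not appear together in one configuration.
INCOMPATIBLE = [
    frozenset({("wheels", "sport"), ("interior", "cloth")}),
]


class ConfigurationError(ValueError):
    """Raised when a selection cannot be built or priced."""


def validate(selection: dict[str, str]) -> None:
    """Check that every group has a known choice and no rule is violated."""
    missing = set(OPTIONS) - set(selection)
    if missing:
        raise ConfigurationError(f"missing choices for: {sorted(missing)}")
    for group, choice in selection.items():
        if choice not in OPTIONS.get(group, {}):
            raise ConfigurationError(f"unknown option {group}={choice}")
    chosen = set(selection.items())
    for rule in INCOMPATIBLE:
        if rule <= chosen:  # every pair in the rule was selected
            raise ConfigurationError(f"incompatible combination: {sorted(rule)}")


def price_cents(selection: dict[str, str]) -> int:
    """Validate a selection, then sum the base price and each option's delta."""
    validate(selection)
    return BASE_PRICE_CENTS + sum(OPTIONS[g][c] for g, c in selection.items())


print(price_cents({"color": "pearl_white", "wheels": "sport", "interior": "leather"}))
# 86400
```

The same `validate` call could run on every UI change, so the 3D view only offers buildable combinations, and again on the server before the order is handed to the e-commerce platform, since client-side checks can be bypassed.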

Conclusion

Unreal Engine has emerged as a leading platform for developing real-time 3D product configurators due to its powerful rendering capabilities, user-friendly interface, and extensive community support. By leveraging these advantages, businesses can create immersive experiences that captivate customers and drive sales.

As consumer expectations continue to evolve in the digital age, adopting technologies like Unreal Engine will be crucial for companies looking to stay competitive in their respective markets. Whether in automotive design or fashion retailing, the ability to visualize and customize products in real-time not only enhances customer satisfaction but also fosters brand loyalty—an essential ingredient for success in today's marketplace.

Incorporating a real-time 3D product configurator powered by Unreal Engine can transform how businesses engage with their customers while providing an innovative solution that meets modern demands for personalization and interactivity. As this technology continues to evolve, we can expect even more exciting developments in the realm of product visualization and customization in the years ahead.

#3D Product Configurator#3D Product Configurator Development Services#3D Product Configurator Tool#Visualization Tool#product configurator


Some Fortune 500 companies have begun testing software that can spot a deepfake of a real person in a live video call, following a spate of scams involving fraudulent job seekers who take a signing bonus and run.

The detection technology comes courtesy of GetReal Labs, a new company founded by Hany Farid, a UC Berkeley professor and renowned authority on deepfakes and image and video manipulation.

GetReal Labs has developed a suite of tools for spotting images, audio, and video that are generated or manipulated either with artificial intelligence or manual methods. The company’s software can analyze the face in a video call and spot clues that may indicate it has been artificially generated and swapped onto the body of a real person.

“These aren’t hypothetical attacks, we’ve been hearing about it more and more,” Farid says. “In some cases, it seems they're trying to get intellectual property, infiltrating the company. In other cases, it seems purely financial, they just take the signing bonus.”

The FBI issued a warning in 2022 about deepfake job hunters who assume a real person’s identity during video calls. UK-based design and engineering firm Arup lost $25 million to a deepfake scammer posing as the company’s CFO. Romance scammers have also adopted the technology, swindling unsuspecting victims out of their savings.

Impersonating a real person on a live video feed is just one example of the kind of reality-melting trickery now possible thanks to AI. Large language models can convincingly mimic a real person in online chat, while short videos can be generated by tools like OpenAI’s Sora. Impressive AI advances in recent years have made deepfakery more convincing and more accessible. Free software makes it easy to hone deepfakery skills, and easily accessible AI tools can turn text prompts into realistic-looking photographs and videos.

But impersonating a person in a live video is a relatively new frontier. Creating this type of a deepfake typically involves using a mix of machine learning and face-tracking algorithms to seamlessly stitch a fake face onto a real one, allowing an interloper to control what an illicit likeness appears to say and do on screen.

Farid gave WIRED a demo of GetReal Labs’ technology. When shown a photograph of a corporate boardroom, the software analyzes the metadata associated with the image for signs that it has been modified. Several major AI companies including OpenAI, Google, and Meta now add digital signatures to AI-generated images, providing a solid way to confirm their inauthenticity. However, not all tools provide such stamps, and open source image generators can be configured not to. Metadata can also be easily manipulated.
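A metadata check of this kind can be illustrated with a minimal standard-library sketch that walks the chunks of a PNG file and reports the metadata-bearing ones. This is not GetReal Labs' method. It assumes text chunks (`tEXt`, `iTXt`, `zTXt`), `eXIf`, and `caBX` (the chunk type in which, as we understand the C2PA specification, PNG files carry a Content Credentials manifest) are worth flagging. As the article notes, metadata can be stripped or forged, so what this finds is only a weak signal.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Chunk types worth a closer look (illustrative, not exhaustive).
METADATA_CHUNKS = {b"tEXt", b"iTXt", b"zTXt", b"eXIf", b"caBX"}


def png_chunks(data: bytes):
    """Yield (chunk_type, payload) for each chunk, verifying lengths and CRCs."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        if pos + 12 + length > len(data):
            raise ValueError(f"truncated {ctype!r} chunk")
        payload = data[pos + 8:pos + 8 + length]
        (crc,) = struct.unpack(">I", data[pos + 8 + length:pos + 12 + length])
        # The CRC covers the chunk type and payload, not the length field.
        if zlib.crc32(ctype + payload) != crc:
            raise ValueError(f"bad CRC in {ctype!r} chunk")
        yield ctype, payload
        pos += 12 + length
        if ctype == b"IEND":
            break


def metadata_report(data: bytes) -> dict:
    """Collect tEXt key/value pairs and list other metadata chunk types found."""
    report = {}
    for ctype, payload in png_chunks(data):
        if ctype == b"tEXt":
            key, _, value = payload.partition(b"\x00")
            report.setdefault("text", {})[key.decode("latin-1")] = value.decode("latin-1")
        elif ctype in METADATA_CHUNKS:
            report.setdefault("other", []).append(ctype.decode("ascii"))
    return report


# Usage (hypothetical file name):
# with open("boardroom.png", "rb") as f:
#     print(metadata_report(f.read()))
```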

GetReal Labs also uses several AI models, trained to distinguish between real and fake images and video, to flag likely forgeries. Other tools, a mix of AI and traditional forensics, help a user scrutinize an image for visual and physical discrepancies, for example highlighting shadows that point in different directions despite having the same light source, or that do not appear to match the object that cast them.

Lines drawn along parallel edges of different objects shown in perspective will also reveal whether they converge on a common vanishing point, as they would in a real image.
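Both the vanishing-point test and the shadow test above come down to asking whether several 2D lines pass through one common point (for shadows, the lines run from each shadow point through the matching point on the object that cast it). Here is a minimal sketch of that geometry, not GetReal Labs' implementation: each line is built from two image points in homogeneous form, pairwise intersections are computed with cross products, and the spread of those intersections is measured. The 5-pixel tolerance and the use of maximum spread as the score are assumptions; lines that are parallel in the image (a vanishing point at infinity) and distant vanishing points need more careful handling in real tools.

```python
from itertools import combinations


def line_through(p, q):
    """Homogeneous line (a, b, c), with a*x + b*y + c = 0, through points p and q."""
    (x1, y1), (x2, y2) = p, q
    # Cross product of (x1, y1, 1) and (x2, y2, 1).
    return (y1 - y2, x2 - x1, x1 * y2 - x2 * y1)


def intersect(l1, l2):
    """Intersection point of two homogeneous lines, or None if (nearly) parallel."""
    a1, b1, c1 = l1
    a2, b2, c2 = l2
    w = a1 * b2 - a2 * b1
    if abs(w) < 1e-12:
        return None
    return ((b1 * c2 - b2 * c1) / w, (c1 * a2 - c2 * a1) / w)


def convergence_spread(segments):
    """Max distance between pairwise intersections; needs 3+ lines, since any 2 meet."""
    lines = [line_through(p, q) for p, q in segments]
    points = []
    for l1, l2 in combinations(lines, 2):
        pt = intersect(l1, l2)
        if pt is not None:
            points.append(pt)
    if len(points) < 2:
        return None
    return max(
        ((ax - bx) ** 2 + (ay - by) ** 2) ** 0.5
        for (ax, ay), (bx, by) in combinations(points, 2)
    )


def consistent(segments, tolerance=5.0):
    """True if the lines agree on a common point to within `tolerance` pixels."""
    spread = convergence_spread(segments)
    return spread is not None and spread <= tolerance


# Three edges that all head toward (100, 50), then one edge that misses it.
good = [((0, 0), (20, 10)), ((0, 100), (50, 75)), ((50, 0), (75, 25))]
bad = [((0, 0), (20, 10)), ((0, 100), (50, 75)), ((50, 0), (75, 30))]
print(consistent(good), consistent(bad))
```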

Other startups that promise to flag deepfakes rely heavily on AI, but Farid says manual forensic analysis will also be crucial to flagging media manipulation. “Anybody who tells you that the solution to this problem is to just train an AI model is either a fool or a liar,” he says.

The need for a reality check extends beyond Fortune 500 firms. Deepfakes and manipulated media are already a major problem in the world of politics, an area where Farid hopes his company’s technology could do real good. The WIRED Elections Project is tracking deepfakes used to boost or trash political candidates in elections in India, Indonesia, South Africa, and elsewhere. In the United States, a fake Joe Biden robocall was deployed last January in an effort to dissuade people from turning out to vote in the New Hampshire Presidential primary. Election-related “cheapfake” videos, edited in misleading ways, have gone viral of late, while a Russian disinformation unit has promoted an AI-manipulated clip disparaging Joe Biden.

Vincent Conitzer, a computer scientist at Carnegie Mellon University in Pittsburgh and coauthor of the book Moral AI, expects AI fakery to become more pervasive and more pernicious. That means, he says, there will be growing demand for tools designed to counter it.

“It is an arms race,” Conitzer says. “Even if you have something that right now is very effective at catching deepfakes, there's no guarantee that it will be effective at catching the next generation. A successful detector might even be used to train the next generation of deepfakes to evade that detector.”

GetReal Labs agrees it will be a constant battle to keep up with deepfakery. Ted Schlein, a cofounder of GetReal Labs and a veteran of the computer security industry, says it may not be long before everyone is confronted with some form of deepfake deception, as cybercrooks become more conversant with the technology and dream up ingenious new scams. He adds that manipulated media is a top topic of concern for many chief security officers. “Disinformation is the new malware,” Schlein says.

Because media manipulation has significant potential to poison political discourse, Farid notes, it can be considered a more challenging problem than malware. “I can reset my computer or buy a new one,” he says. “But the poisoning of the human mind is an existential threat to our democracy.”


I'm quite happy that Rider IDE is now free for personal use. This is a recent development.

Wherein I talk about IDEs a bit

Rider is a C# IDE that is in direct competition with Visual Studio. It's a bit surprising that Microsoft gave enough wiggle room in the ecosystem to allow a competitor like this to exist.

Of course, both Rider and VS are non-free software, but I find Rider to be an addition to the ecosystem that makes things healthier overall.

Ultimately, even if Rider wasn't free, I don't mind paying for this kind of tool. It is a good tool. What I mind is the lack of control and recourse if the company decides to fuck me over. And that's less likely when you have two IDEs in direct competition like this.

(Though to be clear, this is extremely far from a bulletproof defense and your long-term future as a programmer is always at risk if you don't have FOSS tooling available.)

(Also, it would be cool if we found a way to pay people for tools that doesn't require them holding the kind of power they can use to fuck you over later.)

I think it's generally unlikely that the FOSS community will develop IDEs this comprehensive, especially since most programmers in that community have an inherent distaste for IDEs. I think that, at least for some use cases, the distaste is misguided.

Trying to get emacs to give you roughly the capabilities of a proprietary IDE can be really painful. Understanding how to configure it and setting everything up is a short full-time job. Then maintaining it becomes a constant endeavor, depending on which packages you've decided to rely on and integrate. It will work wonderfully, then you update your packages, something stops working, and debugging it can be frustrating and time-consuming. Sometimes it's not from updating: you notice some quirky behaviour or bad performance you want to fix, and this sends you down a rabbit hole.

By comparison, Rider works mostly how I want it to work. It's had some minor misbehavior, but nothing that would make me have to stop and expend a lot of time. The time saved is really psychologically significant. On some days debugging my tools is fine and even fun. On other days it is devastating.

Don't get me wrong. The stuff you can do with emacs is incredible. The level of customization, the ecosystem. If you want to be a power user among power users, emacs is your uncle, your sister, your estranged half-brother, and your time-travelling son. But it definitely comes at a cost.

Wherein I talk about VSCode a bit

All of this rambling also reminds me of VSCode.

VSCode masquerades as being free software, but the moment you fork it in any way:

Microsoft's C# and C++ debuggers are licensed so restrictively that they cannot be run with a VSCode fork. (Bonus fact: when developing Rider, JetBrains had to write a debugger from scratch!)

Microsoft forbids the VSCode extension marketplace from being used by any VSCode fork.

Microsoft allows proprietary extensions to be published to the extension marketplace, and these are configured to refuse to work with a non-official build even if you obtain them separately.

In response to this, Open VSX appeared, operated by the Eclipse Foundation. This permits popular FOSS builds of VSCode, such as VSCodium, to still offer an extension marketplace.

Open VSX has an adapter to Microsoft's marketplace API, which is what permits a build of VSCode to use Open VSX as a replacement for Microsoft's marketplace.

Open VSX does not have every extension that Microsoft's marketplace has and will always lack the proprietary ones. But the fact that a FOSS alternative exists is encouraging and heartwarming.


NASA’s TESS spots record-breaking stellar triplets

Professional and amateur astronomers teamed up with artificial intelligence to find an unmatched stellar trio called TIC 290061484, thanks to cosmic “strobe lights” captured by NASA’s TESS (Transiting Exoplanet Survey Satellite).

The system contains a set of twin stars orbiting each other every 1.8 days, and a third star that circles the pair in just 25 days. The discovery smashes the record for shortest outer orbital period for this type of system, set in 1956, which had a third star orbiting an inner pair in 33 days.

“Thanks to the compact, edge-on configuration of the system, we can measure the orbits, masses, sizes, and temperatures of its stars,” said Veselin Kostov, a research scientist at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, and the SETI Institute in Mountain View, California. “And we can study how the system formed and predict how it may evolve.”

A paper, led by Kostov, describing the results was published in The Astrophysical Journal Oct. 2.

Flickers in starlight helped reveal the tight trio, which is located in the constellation Cygnus. The system happens to be almost flat from our perspective. This means the stars each cross right in front of, or eclipse, each other as they orbit. When that happens, the nearer star blocks some of the farther star’s light.

Using machine learning, scientists filtered through enormous sets of starlight data from TESS to identify patterns of dimming that reveal eclipses. Then, a small team of citizen scientists filtered further, relying on years of experience and informal training to find particularly interesting cases.
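The eclipse search itself relied on machine learning, which is beyond a short example. As a much cruder stand-in (a plain threshold, not the method used in the study), the Python sketch below shows what "patterns of dimming" look like in data. It flags runs of brightness measurements that fall a chosen fraction below the star's typical level. The function name `find_dips` and the 1% default depth are illustrative assumptions.

```python
from statistics import median


def find_dips(flux, depth=0.01):
    """Return (start, end) index ranges where flux drops more than `depth`
    (as a fraction) below the median baseline. A crude threshold, not ML."""
    baseline = median(flux)
    threshold = baseline * (1.0 - depth)
    dips, start = [], None
    for i, f in enumerate(flux):
        if f < threshold and start is None:
            start = i                      # entering a dip
        elif f >= threshold and start is not None:
            dips.append((start, i - 1))    # leaving a dip
            start = None
    if start is not None:                  # series ended mid-dip
        dips.append((start, len(flux) - 1))
    return dips


# A flat light curve with a deep two-sample eclipse and a shallower one.
flux = [1.0, 1.0, 1.0, 0.95, 0.95, 1.0, 1.0, 1.0, 0.97, 1.0]
print(find_dips(flux))  # [(3, 4), (8, 8)]
```

In a real light curve, the spacing between repeated dips of the same depth is what hints at an orbital period.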

These amateur astronomers, who are co-authors on the new study, met as participants in an online citizen science project called Planet Hunters, which was active from 2010 to 2013. The volunteers later teamed up with professional astronomers to create a new collaboration called the Visual Survey Group, which has been active for over a decade.

“We’re mainly looking for signatures of compact multi-star systems, unusual pulsating stars in binary systems, and weird objects,” said Saul Rappaport, an emeritus professor of physics at MIT in Cambridge. Rappaport co-authored the paper and has helped lead the Visual Survey Group for more than a decade. “It’s exciting to identify a system like this because they’re rarely found, but they may be more common than current tallies suggest.” Many more likely speckle our galaxy, waiting to be discovered.

Partly because the stars in the newfound system orbit in nearly the same plane, scientists say it’s likely very stable despite its tight configuration (the trio’s orbits fit within a smaller area than Mercury’s orbit around the Sun). Each star’s gravity doesn’t perturb the others too much, as it could if their orbits were tilted in different directions.
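A quick Kepler's-third-law estimate shows why a 25-day outer orbit can fit inside Mercury's. In units of astronomical units, years, and solar masses, the law reads a^3 = M * P^2, where M is the total mass inside the orbit. The stars' masses are not given here, so rather than assume them, this Python sketch asks how massive the trio would have to be before a 25-day orbit reached Mercury's distance (taken as about 0.387 AU). It treats the inner pair plus the third star as a simple two-body system, which is an approximation, and the function names are hypothetical.

```python
MERCURY_AU = 0.387        # Mercury's semi-major axis, approximate
DAYS_PER_YEAR = 365.25


def semi_major_axis_au(period_days, total_mass_msun):
    """Kepler's third law in solar units: a^3 = M * P^2 (a in AU, P in years)."""
    period_years = period_days / DAYS_PER_YEAR
    return (total_mass_msun * period_years**2) ** (1 / 3)


def max_mass_inside(radius_au, period_days):
    """Total mass (solar masses) at which an orbit of this period has a = radius_au."""
    period_years = period_days / DAYS_PER_YEAR
    return radius_au**3 / period_years**2


print(round(semi_major_axis_au(365.25, 1.0), 6))  # 1.0, the Earth-Sun check
print(round(max_mass_inside(MERCURY_AU, 25), 1))  # about 12.4
```

So unless the three stars together outweigh roughly a dozen Suns, a 25-day outer orbit lies inside Mercury's.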

But while their orbits will likely remain stable for millions of years, “no one lives here,” Rappaport said. “We think the stars formed together from the same growth process, which would have disrupted planets from forming very closely around any of the stars.” The exception could be a distant planet orbiting the three stars as if they were one.

As the inner stars age, they will expand and ultimately merge, triggering a supernova explosion in around 20 to 40 million years.

In the meantime, astronomers are hunting for triple stars with even shorter orbits. That’s hard to do with current technology, but a new tool is on the way.

Images from NASA’s upcoming Nancy Grace Roman Space Telescope will be much more detailed than TESS’s. The same area of the sky covered by a single TESS pixel will fit more than 36,000 Roman pixels. And while TESS took a wide, shallow look at the entire sky, Roman will pierce deep into the heart of our galaxy where stars crowd together, providing a core sample rather than skimming the whole surface.
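The 36,000 figure follows from the two cameras' pixel scales. Assuming the commonly quoted values of about 21 arcseconds per TESS pixel and 0.11 arcseconds per Roman Wide Field Instrument pixel (neither number appears in this article), a quick Python check compares pixel areas:

```python
TESS_ARCSEC_PER_PIXEL = 21.0   # assumed, approximate
ROMAN_ARCSEC_PER_PIXEL = 0.11  # assumed, approximate

# Pixels are square, so the area ratio is the square of the linear ratio.
ratio = (TESS_ARCSEC_PER_PIXEL / ROMAN_ARCSEC_PER_PIXEL) ** 2
print(f"{ratio:,.0f} Roman pixels per TESS pixel")  # about 36,446
```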

“We don’t know much about a lot of the stars in the center of the galaxy except for the brightest ones,” said Brian Powell, a co-author and data scientist at Goddard. “Roman’s high-resolution view will help us measure light from stars that usually blur together, providing the best look yet at the nature of star systems in our galaxy.”

And since Roman will monitor light from hundreds of millions of stars as part of one of its main surveys, it will help astronomers find more triple star systems in which all the stars eclipse each other.

“We’re curious why we haven’t found star systems like these with even shorter outer orbital periods,” said Powell. “Roman should help us find them and bring us closer to figuring out what their limits might be.”

Roman could also find eclipsing stars bound together in even larger groups — half a dozen, or perhaps even more all orbiting each other like bees buzzing around a hive.

“Before scientists discovered triply eclipsing triple star systems, we didn’t expect them to be out there,” said co-author Tamás Borkovits, a senior research fellow at the Baja Observatory of the University of Szeged in Hungary. “But once we found them, we thought, well why not? Roman, too, may reveal never-before-seen categories of systems and objects that will surprise astronomers.”

TESS is a NASA Astrophysics Explorer mission managed by NASA Goddard and operated by MIT in Cambridge, Massachusetts. Additional partners include Northrop Grumman, based in Falls Church, Virginia; NASA’s Ames Research Center in California’s Silicon Valley; the Center for Astrophysics | Harvard & Smithsonian in Cambridge, Massachusetts; MIT’s Lincoln Laboratory; and the Space Telescope Science Institute in Baltimore. More than a dozen universities, research institutes, and observatories worldwide are participants in the mission.

IMAGE: This artist’s concept illustrates how tightly the three stars in the system called TIC 290061484 orbit each other. If they were placed at the center of our solar system, all the stars’ orbits would be contained within a space smaller than Mercury’s orbit around the Sun. The sizes of the triplet stars and the Sun are also to scale. Credit: NASA’s Goddard Space Flight Center


The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration