#fix website errors


Text

How Do I Fix Website Errors & Website Loading Issues Fast? » Quickwebsitefix.com

In today's digital era, a website serves as a vital tool for businesses, organizations, and individuals to establish an online presence and connect with their target audience. However, encountering errors or issues with a website is not uncommon. From broken links and slow loading times to compatibility problems and design glitches, these issues can negatively impact user experience and hinder the website's overall performance. In this article, we will explore effective strategies to fix website errors and address common issues that website owners may encounter.

Identify the Problem:

The first step in resolving website errors is to identify the underlying issue. Thoroughly analyze the website's functionality, design, and performance to pinpoint the exact problem. Some common website issues include:

a) Broken links: Use website crawlers or online tools to identify broken links and fix them by updating the URL or removing the link altogether.

b) Slow loading times: Optimize website performance by compressing images, minimizing HTTP requests, and enabling caching mechanisms.

c) Compatibility issues: Test the website across multiple browsers, devices, and screen sizes to ensure compatibility. Make necessary adjustments using responsive design techniques.

d) Code errors: Review the website's code for any syntax errors or bugs. Utilize developer tools and debuggers to identify and fix coding issues.

e) Security vulnerabilities: Regularly scan your website for security vulnerabilities, and ensure that your software, plugins, and scripts are up to date.
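For the broken-link check in item (a), here is a minimal Python sketch of what a link checker does under the hood. It is an illustration, not a replacement for a real crawler: the function names (`find_broken_links`, `get_status`) are hypothetical, and the status lookup is passed in as a function so the example can run without touching the network. In practice you would supply a function that performs a real HTTP request.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_broken_links(
    html: str, base_url: str, get_status: Callable[[str], int]
) -> list[str]:
    """Return absolute URLs linked from `html` whose status code is 400 or higher.

    `get_status` is a hypothetical callback that maps a URL to an HTTP status.
    """
    collector = LinkCollector()
    collector.feed(html)
    broken = []
    for href in collector.links:
        url = urljoin(base_url, href)  # resolve relative links against the page URL
        if not url.startswith(("http://", "https://")):
            continue  # skip mailto:, tel:, javascript: and similar schemes
        if get_status(url) >= 400:
            broken.append(url)
    return broken


if __name__ == "__main__":
    page = '<a href="/about">About</a> <a href="/old-page">Old page</a>'
    # Stand-in for real HTTP requests, so the demo runs offline.
    fake_statuses = {
        "https://example.com/about": 200,
        "https://example.com/old-page": 404,
    }
    print(find_broken_links(page, "https://example.com/", fake_statuses.__getitem__))
```

Passing the status lookup as a parameter keeps the parsing logic separate from the network code, which also makes it easy to test.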

Backup Your Website:

Before making any significant changes to your website, it is crucial to create a backup. Backing up your website ensures that you have a restore point in case anything goes wrong during the fixing process. Use your hosting provider's backup tools or employ backup plugins to create a copy of your website's files and databases.

Update Website Software and Plugins:

Outdated software and plugins can cause compatibility issues and security vulnerabilities. Regularly update your content management system (CMS), themes, and plugins to their latest versions. Developers often release updates that fix bugs and enhance performance, ensuring your website operates smoothly.

Test and Optimize Website Performance:

Website speed is a critical factor affecting user experience and search engine rankings. Perform regular speed tests using online tools like Google PageSpeed Insights or GTmetrix to identify performance bottlenecks. Optimize images, minify CSS and JavaScript files, enable caching, and leverage content delivery networks (CDNs) to resolve website loading issues.

Fix Broken Links and Redirects:

Broken links can frustrate users and negatively impact your website's search engine optimization (SEO). Conduct regular link audits using tools like Google Search Console or online link checkers. Fix broken links by updating them or replacing them with links to relevant content. For pages on your own site that have moved or been removed, implement proper 301 redirects so that old URLs still lead visitors somewhere useful.
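One thing to watch when you add 301 redirects over time is redirect chains, where an old URL points to another old URL that redirects again. The sketch below is a rough illustration of how a redirect map could be flattened so every old path points straight at its final destination. The helper names and the example paths are made up, and how you load the result into your server or CMS depends on your setup.

```python
def resolve_redirect(path: str, redirects: dict[str, str], max_hops: int = 10) -> str:
    """Follow the redirect map from `path` until a path with no redirect is reached."""
    seen = {path}
    for _ in range(max_hops):
        if path not in redirects:
            return path
        path = redirects[path]
        if path in seen:
            raise ValueError(f"redirect loop detected at {path}")
        seen.add(path)
    raise ValueError("too many redirect hops")


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Return a map in which each source points directly at its final target."""
    return {source: resolve_redirect(source, redirects) for source in redirects}


if __name__ == "__main__":
    # Hypothetical redirect map: /old was moved twice.
    redirect_map = {"/old": "/newer", "/newer": "/newest"}
    print(flatten_redirects(redirect_map))
```

The loop check matters: two pages that redirect to each other will never load, so it is better to catch that when building the map than after deploying it.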

Enhance Website Security:

Website security is of paramount importance to protect sensitive data and maintain user trust. Implement a robust security protocol that includes using strong passwords, enabling two-factor authentication, regularly scanning for malware, and employing a firewall. Secure your website with an SSL certificate to encrypt data transmitted between your website and users.

Seek Professional Help:

If you encounter complex website errors or lack the technical expertise, it may be beneficial to seek professional help. Web developers and designers can efficiently diagnose and repair website problems, saving you time and effort.

Conclusion:

Fixing website errors is a crucial task to ensure your website's optimal performance and user satisfaction. By following the strategies outlined in this article, you can effectively address common website issues and enhance the overall functionality, security, and performance of your website. Remember to regularly maintain and monitor your website to identify and fix any new issues that may arise. With a well-maintained and error-free website,

#fix my website#website issues#fix coding issues#fix website#website loading issues#website repair#fixing a website#fix website errors#website fix

0 notes

Text

i'm currently distracting myself from the hell that is the Arbeitsagentur website with comforting thoughts of Adam & Leo :')

#what's the ship name for these two anyway?#is there anything other than#spatort#?#tatort saarbrücken#tatort#i'm currently throwing such a tantrum over such a small thing#pls don't make fun of me :(#i entered all my info to register as a job seeker#but i made an error while entering my phone info#and didn't notice until after i'd submitted it#and then when i went to correct my submitted info on the website#it said some shit like 'You do not have access to this service'#(i don't remember the exact words but it was sth like that)#even spent ages on hold with the technical support phone line#but couldn't get through to anyone#but you can get your unemployment benefits reduced if they can't contact you by phone#SO THAT'S FUCKING GREAT#i really hope they don't try to call me until i've been able to fix this#i am broke already and my alg1 is not going to be much#cosmo gyres#personal#text#o hear my sad complaint

14 notes


Text

Going to become a dc writer not out of dreams or ambition but to fucking. Fix what they're doing to my boy

3 notes


Text

great news everyone i can now post images again on mobile :)

#nash.txt#it fixed itself after i switched my main's url back after pride month so i guess that's what was wrong? maybe like a database error or smth#functional website 👍

36 notes


Text

attack for slashersilly on artfight

#max arts#deltarune#i can't tag your blog. find it#the rose leaf is a bit too big im realizing.. oh well#i nitpick small errors a lot and fix them so if you want a fully 'updated' one you can find it in original high quality on my website like#later tonight or tomorrow

24 notes


Text

.

#sorry i haven’t/will not be as active or not fully myself#school started and even though it’s only been a week it’s taking a terrible toll on me#i’m having to relearn that maybe i shouldn’t try to do an entire class’s work in a week & then break down when i can’t do the advanced—#assignments for a coding language i quite literally did the equivalent of hello world in two days prior#one of my classes has a garbage website and the prof wants to be helpful & i appreciate it but i had her last semester & her hopping on—#a zoom and yelling/ordering me around/stressing me out isn’t going to fix the errors the site itself has#also i don’t want to draw attention to myself lest anyone find out i don’t really know shit about coding and have been cheating—#the whole time#but yeah. im too stressed to sleep for no reason even though i have work#and i feel guilty for relaxing whenever i know i have schoolwork even if im entirely exhausted#What’s wrong with me#anyways#rose.txt#tw vent

7 notes


Text

feeling pretty wretched but today i made some images 👍

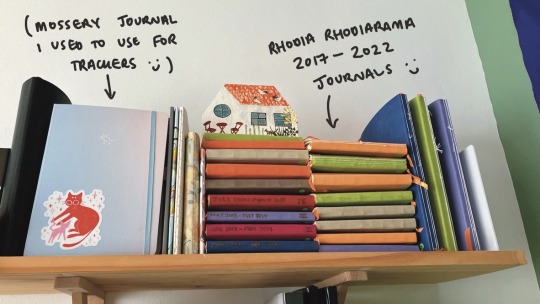

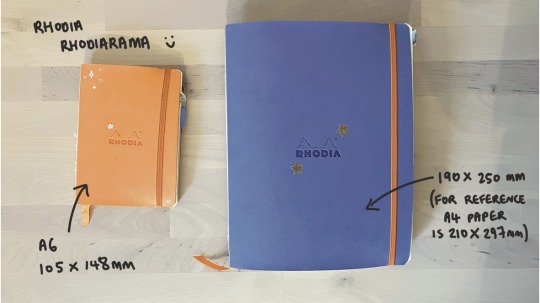

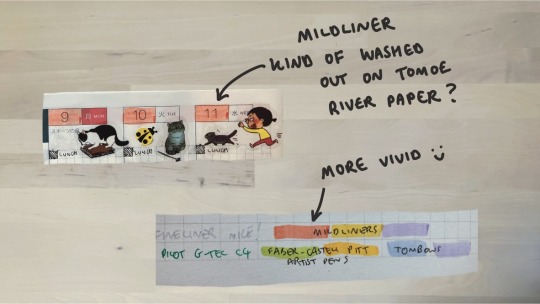

#i am working on da video (: it's Basic™ which is my m.o at the moment (: working on 'doing things' rather than 'imagining a perfect thing'!#as testament to how big my headache is i spent an annoyingly long time trying to synthesise that middle image from copy pasted#product pictures from a website of the notebooks and a blank photo of my table trying to figure out how i could get them to scale#before i was like wait a minute........... i could take this photo in real life.................!#EDIT if you saw the version with an error no you didn't... figured out how to fix it with my friend the colour picker tool#another wonderful day to be a beautiful genius

9 notes


Text

the thing about adhd is that sometimes i will forget something i was supposed to be upset about. .....unfortunately i usually remember right when it would make me most upset. so that blows

#anime life#just remembered i have no idea when I'll get more adderall#bc there was an issue w my payment for it#and the website for the mail order pharmacy gives me an error message whenever i try to fix it

5 notes


Text

tumblr app found a new, special, and unique way in which to torture me personally, in that videos will freeze and be broken when i try to look at them, but as soon as i start scrolling they’ll autoplay.

4 notes


Text

you will never experience a more broken website than when you're registering for college courses

#colleges: our website experiences error due to heavy traffic? lets not fix that#errors*#sorry i keep posting abt this im stressed and annoyed#personal

3 notes


Text

How HTTP Status Codes & DNS Errors Impact Google Search

Learn how HTTP status codes, network failures, and DNS errors affect Google Search indexing and crawling. Fix soft 404s, 5xx issues, and debug DNS problems.

How HTTP Status Codes, Network, and DNS Errors Affect Google Search

Google Search relies on efficient and accurate crawling of web content to provide the most relevant results to users. This crawling process is governed by how websites…

#4xx errors#5xx errors#canonical URLs#crawl rate#crawling issues#debugging DNS#DNS errors#fixing soft 404#Google Search indexing#Googlebot crawl#HTTP 301#HTTP 302#HTTP status codes#network errors#redirect errors#Search Console errors#SEO errors#server errors#soft 404#website SEO

0 notes

Text

the most difficult thing about ffxiv is Mogstation fr

subscribing to the game? lol good luck

cancelling your sub? sqex overlords say 🙅

#bloody error codes#i got ONE message re: something about their payment system currently “undergoing processes”#but shouldn't that info be on the fkn lodestone!??#i still remember when they/their payment processor halted payments with visa etc. and that took AGES to be fixed#why are jp websites/UI so outdated 💀#lyna rambles

0 notes

Text

Hi tumblr staff, stop breaking tumblr, thanks

#🦈 blahajyapping#powder's vents#i can't even look at reblog notes or likes now#it just does the thing where it loads the post again but in that 'blinding you with white background' way#and when i again try to click the reblog notes and the likes all i get is crickets#literally zero response from tumblr#error 404 but without displaying the error LOL#no but fr someone please fix this i love snooping in the reblog notes and to be deprived of that is like being deprived of water#I NEED MY FIX OF FUNNEE PEOPLE IN THE NOTES :(#EDIT: i had to reinstall the extension we use to make tumblr look normal and less like the dumb bird website#and that seems to have fixed the problem#i'm still blaming tumblr though bc i can :)

0 notes

Text

this is making some serious rounds again for some reason and i'd just like to say that if any of the horror girlies in my notes can recommend me a printer that would actually willingly put this many words on a pair of booty shorts without me having to spend half my life savings i would 100% have them made lol

so i watched the fly (1986). um

#107 txt#the fly#david cronenberg#i would fix the grammatical errors too. i copy pasted this from a mobie website and there are definitely some commas where they shouldn't b

2K notes


Text

How to Fix Crawl Errors and Boost Your Website’s Performance

As a website owner or SEO professional, keeping your website healthy and optimized for search engines is crucial. One of the key elements of a well-optimized website is ensuring that search engine crawlers can easily access and index your pages. However, when crawl errors arise, they can prevent your site from being fully indexed, negatively impacting your search rankings.

In this blog, we’ll discuss how to fix crawl errors, why they occur, and the best practices for maintaining a crawl-friendly website.

What Are Crawl Errors?

Crawl errors occur when a search engine's crawler (like Googlebot) tries to access a page on your website but fails to do so. When these crawlers can’t reach your pages, they can’t index them, which means your site won’t show up properly in search results. Crawl errors are usually classified into two categories: site errors and URL errors.

Site Errors: These affect your entire website and prevent the crawler from accessing any part of it.

URL Errors: These are specific to certain pages or files on your site.

Understanding the types of crawl errors is the first step in fixing them. Let’s dive deeper into the common types of errors and how to fix crawl errors on your website.

Common Crawl Errors and How to Fix Them

1. DNS Errors

A DNS error occurs when the crawler can’t resolve your domain name and therefore can’t communicate with your site’s server. This usually happens because the DNS server is down or unreachable, or because your DNS settings are misconfigured.

How to Fix DNS Errors:

Check if your website is online.

Use a DNS testing tool to ensure your DNS settings are correctly configured.

If the issue persists, contact your web hosting provider to resolve any server problems.
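If you’d rather check resolution yourself before reaching for an online DNS tool, a quick sketch in Python looks something like this. It only uses the standard library’s `socket.getaddrinfo`, and the `resolve_host` helper name is just for illustration. Keep in mind it asks whatever resolver your own machine uses, so a result here doesn’t guarantee Googlebot sees the same thing.

```python
import socket


def resolve_host(hostname: str) -> list[str]:
    """Return the sorted IP addresses a hostname resolves to, or [] on failure."""
    try:
        infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # Raised when the name cannot be resolved (e.g. a misconfigured record).
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Replace with your own domain; example.com is only a placeholder.
    addresses = resolve_host("example.com")
    print(addresses if addresses else "DNS lookup failed")
```

An empty list means the lookup failed on your machine, which is a good cue to review your DNS records or contact your host.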

2. Server Errors (5xx)

Server errors occur when your server takes too long to respond, or when it crashes, resulting in a 5xx error code (e.g., 500 Internal Server Error, 503 Service Unavailable). These errors can lead to temporary crawl issues.

How to Fix Server Errors:

Ensure your hosting plan can handle your website’s traffic load.

Check server logs for detailed error messages and troubleshoot accordingly.

Contact your hosting provider for assistance if you’re unable to resolve the issue on your own.
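To make the “check server logs” step more concrete, here’s a small Python sketch that counts 5xx responses per URL path. It assumes your access log uses the common Apache/Nginx style where the request line is quoted and followed by the status code. Your log format may differ, so treat the regular expression and the sample lines as assumptions to adjust.

```python
import re
from collections import Counter

# Matches the quoted request line and the status code that follows it, e.g.
# "GET /checkout HTTP/1.1" 503
LOG_PATTERN = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3})\b')


def count_server_errors(log_lines) -> Counter:
    """Count 5xx responses per requested path in access-log lines."""
    errors = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and match.group("status").startswith("5"):
            errors[match.group("path")] += 1
    return errors


if __name__ == "__main__":
    # Made-up sample lines for illustration only.
    sample = [
        '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /checkout HTTP/1.1" 503 512',
        '203.0.113.5 - - [10/Oct/2024:13:55:40 +0000] "GET /checkout HTTP/1.1" 500 128',
        '203.0.113.9 - - [10/Oct/2024:13:56:01 +0000] "GET /about HTTP/1.1" 200 4096',
    ]
    for path, count in count_server_errors(sample).most_common():
        print(path, count)
```

Sorting by count points you at the pages that fail most often, which is usually where troubleshooting should start.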

3. 404 Not Found Errors

A 404 error occurs when a URL on your website no longer exists, but is still being linked to or crawled by search engines. This is one of the most common crawl errors and can occur if you’ve deleted a page without properly redirecting it.

How to Fix 404 Errors:

Use Google Search Console to identify all 404 errors on your site.

Set up 301 redirects for any pages that have been permanently moved or deleted.

If the page is no longer relevant, ensure it returns a proper 404 response, and remove any internal links to it.

4. Soft 404 Errors

A soft 404 occurs when a page returns a 200 OK status code, but the content on the page is essentially telling users (or crawlers) that the page doesn’t exist. This confuses crawlers and can impact your site’s performance.

How to Fix Soft 404 Errors:

Ensure that any page that no longer exists returns a true 404 status code.

If the page is valuable, update the content to make it relevant, or redirect it to another related page.
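As a rough illustration of what “200 OK but the page says it doesn’t exist” means, the sketch below flags responses that look like soft 404s. The phrase list and the `looks_like_soft_404` name are assumptions made for this example. Google’s own detection is not public and is certainly more sophisticated, so use this only as a first-pass audit of your own pages.

```python
# Hypothetical phrases that suggest an error page; extend for your own templates.
NOT_FOUND_PHRASES = ("page not found", "no longer exists", "nothing was found")


def looks_like_soft_404(status_code: int, body: str) -> bool:
    """Return True when a 200 response carries typical 'not found' wording."""
    if status_code != 200:
        return False  # a real 404/410 is not a *soft* 404
    text = body.lower()
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)


if __name__ == "__main__":
    print(looks_like_soft_404(200, "<h1>Sorry, page not found</h1>"))
    print(looks_like_soft_404(404, "<h1>Sorry, page not found</h1>"))
```

Any page this flags should either return a true 404 status or get real content, as described above.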

5. Robots.txt Blocking Errors

The robots.txt file tells search engines which pages they can or can’t crawl. If certain pages are blocked unintentionally, they won’t be indexed, leading to crawl issues.
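To see which of your URLs a robots.txt file blocks, you can test the rules locally. This sketch uses Python’s standard `urllib.robotparser`; the `blocked_urls` helper and the sample rules are made up for the example. One caveat: the standard-library parser uses simple prefix matching and doesn’t handle the `*` and `$` wildcards Google supports, so results can differ for rules that use them.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(
    robots_txt: str, urls: list[str], user_agent: str = "Googlebot"
) -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network needed
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    # Hypothetical rules that accidentally block the whole blog.
    rules = "User-agent: *\nDisallow: /blog/\n"
    pages = ["https://example.com/blog/post-1", "https://example.com/about"]
    print(blocked_urls(rules, pages))
```

If a page you want indexed shows up in the result, the matching `Disallow` line is the one to fix.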

0 notes

Text

#benefits of HTTPS for SEO#best practices for mobile-friendly websites#crawlability#fixing crawl errors in Google Search Console#Google ranking#how to optimize site speed for SEO#HTTPS#implementing schema markup for SEO#mobile-friendliness#schema markup#search engine optimization#site speed#structured data#technical SEO#website performance

1 note
