#git workflow strategies


Use Git if: ✅ You need speed and distributed development ✅ You want better branching and merging ✅ You work offline frequently

#git tutorial#git commands#git basics#git workflow#git repository#git commit#git push#git pull#git merge#git branch#git rebase#git clone#git fetch#git vs svn#git version control#git best practices#git for beginners#git advanced commands#git vs github#git stash#git log#git diff#git reset#git revert#gitignore#git troubleshooting#git workflow strategies#git for developers#cloudcusp.com#cloudcusp


Top 10 ChatGPT Prompts For Software Developers

ChatGPT can do a lot more than generate code, and this blog post is all about that. We have curated a list of ChatGPT prompts that will help software developers with their everyday tasks. ChatGPT can answer questions and write code, making it a very helpful tool for software engineers.

While this AI tool can help developers with the entire SDLC (Software Development Lifecycle), it is important to understand how to use the prompts effectively for different needs.

Prompt engineering helps users get more accurate results. Because ChatGPT responds to whatever prompt it is given, a well-formulated prompt yields a more precise answer, so a lot depends on how these prompts are written.

To Get The Best Out Of ChatGPT, Your Prompts Should Be:

Clear and well-defined. The more detailed your prompt, the better the suggestions you will receive from ChatGPT.

Specific about the functionality and programming language. If you do not state exactly what you need, you may not get the desired results.

Phrased in natural language, as if asking someone for help. This helps ChatGPT understand your problem and give more relevant output.

Free of unnecessary information and ambiguity. Keep the prompt to the point while including all important details.

Top ChatGPT Prompts For Software Developers

Let’s quickly have a look at some of the best ChatGPT prompts to assist you with various stages of your Software development lifecycle.

1. For Practicing SQL Commands

2. For Becoming A Programming Language Interpreter

3. For Creating Regular Expressions, Since They Help In Managing, Locating, And Matching Text

4. For Generating Architectural Diagrams For Your Software Requirements.

Prompt Examples: I want you to act as a Graphviz DOT generator, an expert to create meaningful diagrams. The diagram should have at least n nodes (I specify n in my input by writing [n], 10 being the default value) and to be an accurate and complex representation of the given input. Each node is indexed by a number to reduce the size of the output, should not include any styling, and with layout=neato, overlap=false, node [shape=rectangle] as parameters. The code should be valid, bugless and returned on a single line, without any explanation. Provide a clear and organized diagram, the relationships between the nodes have to make sense for an expert of that input. My first diagram is: “The water cycle [8]”.
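To show what output matching this prompt's constraints could look like, here is a minimal Python sketch that builds a single-line DOT string with numbered nodes and the layout parameters named in the prompt. It is an illustration only, not ChatGPT output: the `to_dot` function and the sample water-cycle labels and edges are assumptions made for this example.

```python
def to_dot(labels, edges):
    """Build a single-line Graphviz DOT string with nodes indexed from 1."""
    # Graph-level parameters taken from the prompt above.
    parts = ["layout=neato", "overlap=false", "node [shape=rectangle]"]
    # Each node is identified by its number; the label carries the text.
    for index, label in enumerate(labels, start=1):
        parts.append(f'{index} [label="{label}"]')
    # Edges refer to nodes by their numbers.
    for source, target in edges:
        parts.append(f"{source} -> {target}")
    return "digraph G { " + "; ".join(parts) + "; }"


# Hypothetical sample input: a tiny three-node slice of the water cycle.
sample = to_dot(
    ["Evaporation", "Condensation", "Precipitation"],
    [(1, 2), (2, 3), (3, 1)],
)
print(sample)
```

The returned string can be saved to a `.dot` file and rendered with Graphviz to check that a generated diagram is valid.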

5. For Solving Git Problems And Getting Guidance On Overcoming Them.

Prompt Examples: “Explain how to resolve this Git merge conflict: [conflict details].”

6. For Code Generation: ChatGPT Can Generate Code From The Descriptions And Requirements You Provide.

Prompt Examples: -Write a program/function to {explain functionality} in {programming language} -Create a code snippet for checking if a file exists in Python. -Create a function that merges two lists into a dictionary in JavaScript.
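For a sense of what answers to the last two code-generation prompts might look like, here is a minimal sketch. Both functions are written in Python to keep the example in one language, even though the second prompt asks for JavaScript. The function names are assumptions chosen for illustration, and a real ChatGPT answer may differ.

```python
from pathlib import Path


def file_exists(path):
    """Return True if `path` points to an existing regular file."""
    return Path(path).is_file()


def lists_to_dict(keys, values):
    """Pair keys with values by position and return them as a dictionary.

    If the lists differ in length, the extra items in the longer list
    are ignored, because zip() stops at the shorter input.
    """
    return dict(zip(keys, values))


print(file_exists("no_such_file_for_this_demo.txt"))
print(lists_to_dict(["name", "language"], ["Ada", "Python"]))
```

Whatever ChatGPT returns for prompts like these, it is worth running the code on a known input before relying on it.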

7. For Code Review And Debugging: ChatGPT Can Review Your Code Snippet And Point Out Bugs.

Prompt Examples: -Here’s a C# code snippet. The function is supposed to return the maximum value from the given list, but it’s not returning the expected output. Can you identify the problem? [Enter your code here] -Can you help me debug this error message from my C# program: [error message] -Help me debug this Python script that processes a list of objects, and suggest possible fixes. [Enter your code here]

8. For Knowing The Coding Best Practices And Principles: It Is Very Important To Be Updated With Industry’s Best Practices In Coding. This Helps To Maintain The Codebase When The Organization Grows.

Prompt Examples: -What are some common mistakes to avoid when writing code? -What are the best practices for security testing? -Show me best practices for writing {concept or function} in {programming language}.

9. For Code Optimization: ChatGPT Can Help Optimize The Code And Enhance Its Readability And Performance To Make It More Efficient.

Prompt Examples: -Optimize the following {programming language} code which {explain the functioning}: {code snippet} -Suggest improvements to optimize this C# function: [code snippet] -What are some strategies for reducing memory usage and optimizing data structures?

10. For Creating Boilerplate Code: ChatGPT Can Help In Boilerplate Code Generation.

Prompt Examples: -Create a basic Java Spring Boot application boilerplate code. -Create a basic Python class boilerplate code

11. For Bug Fixes: ChatGPT Helps Fix Bugs, Saving A Large Chunk Of Time In Software Development And Increasing Productivity.

Prompt Examples: -How do I fix the following {programming language} code which {explain the functioning}? {code snippet} -Can you generate a bug report? -Find bugs in the following JavaScript code: (enter code)

12. For Code Refactoring: ChatGPT Can Refactor The Code And Reduce Errors To Enhance Code Efficiency, Making It Easier To Modify In The Future.

Prompt Examples: -What are some techniques for refactoring code to improve code reuse and promote the use of design patterns? -I have duplicate code in my project. How can I refactor it to eliminate redundancy?

13. For Choosing Deployment Strategies: ChatGPT Can Suggest The Deployment Strategies Best Suited To A Particular Project To Ensure That It Runs Smoothly.

Prompt Examples: -What are the best deployment strategies for this software project? {explain the project} -What are the best practices for version control and release management?

14. For Creating Unit Tests: ChatGPT Can Write Test Cases For You.

Prompt Examples: -How does test-driven development help improve code quality? -What are some best practices for implementing test-driven development in a project?

These were some prompt examples that we sourced based on the different requirements a developer can have. So whether you have to generate code or understand a concept, ChatGPT can really make a developer’s life easier by handling many tasks. However, it comes with its own set of challenges and is not always correct, so it is advisable to cross-check its responses. Hope this helps. Visit us- Intelliatech

#ChatGPT prompts#Developers#Terminal commands#JavaScript console#API integration#SQL commands#Programming language interpreter#Regular expressions#Code debugging#Architectural diagrams#Performance optimization#Git merge conflicts#Prompt engineering#Code generation#Code refactoring#Debugging#Coding best practices#Code optimization#Code commenting#Boilerplate code#Software developers#Programming challenges#Software documentation#Workflow automation#SDLC (Software Development Lifecycle)#Project planning#Software requirements#Design patterns#Deployment strategies#Security testing


UiPath Automation Developer Associate v1 UiPath-ADAv1 Prep Guide 2025

Achieving the UiPath Certified Professional – Automation Developer Associate (ADAv1) credential is a powerful statement in today’s robotic process automation (RPA) world. It signifies you can design and build effective automation workflows using UiPath Studio, Robots, and Orchestrator, and contribute valuably within RPA teams. As a foundational credential, ADAv1 opens doors to roles like Solution Architect, Automation Architect, and Advanced Developer.

To guide you on this path, Cert007’s UiPath Automation Developer Associate v1 UiPath-ADAv1 Prep Guide 2025 delivers the most up-to-date structure and content for the ADAv1 exam—arming you with clear study strategies and hands-on practices essential for success.

What Is the UiPath Automation Developer Associate (UiPath-ADAv1) Certification?

This entry-level certification is designed to validate your ability to design, build, and deploy simple automation solutions using key UiPath tools like UiPath Studio, Robots, and Orchestrator. It also shows that you can function effectively within a team delivering larger automation projects.

Successfully passing this exam sets the foundation for advanced roles like:

Advanced Automation Developer

Solution Architect

Automation Architect

Who Should Take the UiPath-ADAv1 Exam? Ideal Candidates and Requirements

The UiPath-ADAv1 exam is suitable for:

RPA beginners who have completed foundational UiPath training through UiPath Academy or certified partners.

Aspiring automation developers with 3–6 months of hands-on UiPath experience.

The Minimally Qualified Candidate (MQC) is someone who understands basic RPA principles and has practical experience working on simple automation tasks.

UiPath-ADAv1 Exam Overview: Format, Duration, and Key Details

Here’s a quick summary of the exam structure and key information:

| Category | Details |
| --- | --- |
| Exam Code | UiPath-ADAv1 |
| Certification | UiPath Automation Developer Associate v1 |
| Track | UiPath Certified Professional - Developer |
| Duration | 90 Minutes |
| Passing Score | 70% |
| Exam Fee | $150 USD |
| Validity | 3 Years |
| Prerequisites | None officially, but hands-on experience is advised |

Complete List of Topics Covered in the UiPath-ADAv1 Exam

The UiPath-ADAv1 exam tests your knowledge across the following domains:

Business Knowledge

Platform Knowledge

UiPath Studio Interface

Variables and Arguments

Control Flow in Workflows

Debugging and Troubleshooting

Exception Handling Techniques

Activity Logging and Monitoring

UI Automation Basics

Object Repository Management

Excel Automation Tasks

Email and PDF Automation

Data Manipulation in Workflows

Version Control (e.g., Git Integration)

Workflow Analyzer Usage

RPA Testing Techniques

Orchestrator Functionalities

Integration Service

Why Cert007 Offers the Best UiPath-ADAv1 Exam Prep Materials

Preparing for a certification like UiPath-ADAv1 requires precise and targeted study materials. That’s where Cert007’s UiPath-ADAv1 Prep Guide comes in. It provides an up-to-date, comprehensive package tailored to cover everything you need to pass with confidence.

✅ Key Features of Cert007 UiPath-ADAv1 Prep Guide:

Updated and Verified Exam Questions

Realistic Mock Exams in PDF and Software Formats

Detailed Explanations for Deeper Understanding

Covers All Latest UiPath-ADAv1 Exam Objectives

Suitable for Self-Study or Team-Based Learning

Final Thoughts: Boost Your RPA Career with UiPath Certification and Cert007 Support

Earning the UiPath Automation Developer Associate v1 Certification not only validates your skills but also opens doors to exciting opportunities in the automation industry. With comprehensive preparation and dedicated practice using the UiPath-ADAv1 Prep Guide from Cert007, you'll be well on your way to achieving your RPA career goals.

Start your preparation today with the trusted UiPath-ADAv1 Prep Guide from Cert007 – your roadmap to RPA success!


5 Practical AI Agents That Deliver Enterprise Value

The AI landscape is buzzing, and while Generative AI models like GPT have captured headlines with their ability to create text and images, a new and arguably more transformative wave is gathering momentum: AI Agents. These aren't just sophisticated chatbots; they are autonomous entities designed to understand complex goals, plan multi-step actions, interact with various tools and systems, and execute tasks to achieve those goals with minimal human oversight.

AI Agents are moving beyond theoretical concepts to deliver tangible enterprise value across diverse industries. They represent a significant leap in automation, moving from simply generating information to actively pursuing and accomplishing real-world objectives. Here are 5 practical types of AI Agents that are already making a difference or are poised to in 2025 and beyond:

1. Autonomous Customer Service & Support Agents

Beyond the Basic Chatbot: While traditional chatbots follow predefined scripts to answer FAQs, Agentic AI takes customer service to an entirely new level.

How they work: Given a customer's query, these agents can autonomously diagnose the problem, access customer databases (CRM, order history), consult extensive knowledge bases, initiate refunds, reschedule appointments, troubleshoot technical issues by interacting with IT systems, and even proactively escalate to a human agent with a comprehensive summary if the issue is too complex.

Enterprise Value: Dramatically reduces the workload on human support teams, significantly improves first-contact resolution rates, provides 24/7 support for complex inquiries, and ultimately enhances customer satisfaction through faster, more accurate service.

2. Automated Software Development & Testing Agents

The Future of Engineering Workflows: Imagine an AI that can not only write code but also comprehend requirements, rigorously test its own creations, and even debug and refactor.

How they work: Given a high-level feature request ("add a new user login flow with multi-factor authentication"), the agent breaks it down into granular sub-tasks (design database schema, write front-end code, implement authentication logic, write unit tests, integrate with existing APIs). It then leverages code interpreters, interacts with version control systems (e.g., Git), and testing frameworks to iteratively build, test, and refine the code until the feature is complete and verified.

Enterprise Value: Accelerates development cycles by automating repetitive coding tasks, reduces bugs through proactive testing, and frees up human developers for higher-level architectural design, creative problem-solving, and complex integrations.

3. Intelligent Financial Trading & Risk Management Agents

Real-time Precision in Volatile Markets: In the fast-paced and high-stakes world of finance, AI agents can act with unprecedented speed, precision, and analytical depth.

How they work: These agents continuously monitor real-time market data, analyze news feeds for sentiment (e.g., identifying early signs of market shifts from global events), detect complex patterns indicative of fraud or anomalies in transactions, and execute trades based on sophisticated algorithms and pre-defined risk parameters. They can dynamically adjust strategies based on market shifts or regulatory changes, integrating with trading platforms and compliance systems.

Enterprise Value: Optimizes trading strategies for maximum returns, significantly enhances fraud detection capabilities, identifies emerging market risks faster than human analysts, and provides a continuous monitoring layer that ensures compliance and protects assets.

4. Dynamic Supply Chain Optimization & Resilience Agents

Navigating Global Complexity with Autonomy: Modern global supply chains are incredibly complex and vulnerable to unforeseen disruptions. AI agents offer a powerful proactive solution.

How they work: An agent continuously monitors global events (e.g., weather patterns, geopolitical tensions, port congestion, supplier issues), analyzes real-time inventory levels and demand forecasts, and dynamically re-routes shipments, identifies and qualifies alternative suppliers, or adjusts production schedules in real-time to mitigate disruptions. They integrate seamlessly with ERP systems, logistics platforms, and external data feeds.

Enterprise Value: Builds unparalleled supply chain resilience, drastically reduces operational costs due to delays and inefficiencies, minimizes stockouts and overstock, and ensures continuous availability of goods even in turbulent environments.

5. Personalized Marketing & Sales Agents

Hyper-Targeted Engagement at Scale: Moving beyond automated emails to truly intelligent, adaptive customer interaction is crucial for modern sales and marketing.

How they work: These agents research potential leads by crawling public data, analyze customer behavior across multiple channels (website interactions, social media engagement, past purchases), generate highly personalized outreach messages (emails, ad copy, chatbot interactions) using integrated generative AI models, manage entire campaign execution, track real-time engagement, and even schedule follow-up actions like booking a demo or sending a tailored proposal. They integrate with CRM and marketing automation platforms.

Enterprise Value: Dramatically improves lead conversion rates, fosters deeper customer engagement through hyper-personalization, optimizes marketing spend by targeting the most promising segments, and frees up sales teams to focus on high-value, complex relationship-building.

The Agentic Future is Here

The transition from AI that simply "generates" information to AI that "acts" autonomously marks a profound shift in enterprise automation and intelligence. While careful consideration for aspects like trust, governance, and reliable integration is essential, these practical examples demonstrate that AI Agents are no longer just a theoretical concept. They are powerful tools ready to deliver tangible business value, automate complex workflows, and redefine efficiency across every facet of the modern enterprise. Embracing Agentic AI is key to unlocking the next level of business transformation and competitive advantage.


Where Can I Find DevOps Training with Placement Near Me?

Introduction: Unlock Your Tech Career with DevOps Training

In today’s digital world, companies are moving faster than ever. Continuous delivery, automation, and rapid deployment have become the new norm. That’s where DevOps comes in: a powerful blend of development and operations that fuels speed and reliability in software delivery.

Have you ever wondered how companies like Amazon, Netflix, or Facebook release features so quickly without downtime? The secret lies in DevOps, an in-demand approach that integrates development and operations to streamline software delivery. Today, DevOps skills are not just desirable; they’re essential. If you’re asking, “Where can I find DevOps training with placement near me?”, this guide will walk you through everything you need to know to find the right training and land the job you deserve.

Understanding DevOps: Why It Matters

DevOps is more than a buzzword; it’s a cultural and technical shift that transforms how software teams build, test, and deploy applications. It focuses on collaboration, automation, continuous integration (CI), continuous delivery (CD), and feedback loops.

Professionals trained in DevOps can expect roles like:

DevOps Engineer

Site Reliability Engineer

Cloud Infrastructure Engineer

Release Manager

The growing reliance on cloud services and rapid deployment pipelines has placed DevOps engineers in high demand. A recent report by Global Knowledge ranks DevOps as one of the highest-paying tech roles in North America.

Why DevOps Training with Placement Is Crucial

Many learners begin with self-study or unstructured tutorials, but that only scratches the surface. A comprehensive DevOps training and placement program ensures:

Structured learning of core and advanced DevOps concepts

Hands-on experience with DevOps automation tools

Resume building, interview preparation, and career support

Real-world project exposure to simulate a professional environment

Direct pathways to job interviews and job offers

If you’re looking for DevOps training with placement “near me,” remember that “location” today is no longer just geographic—it’s also digital. The right DevOps online training can provide the accessibility and support you need, no matter your zip code.

Core Components of a DevOps Course Online

When choosing a DevOps course online, ensure it covers the following modules in-depth:

1. Introduction to DevOps Culture and Principles

Evolution of DevOps

Agile and Lean practices

Collaboration and communication strategies

2. Version Control with Git and GitHub

Branching and merging strategies

Pull requests and code reviews

Git workflows in real-world projects

3. Continuous Integration (CI) Tools

Jenkins setup and pipelines

GitHub Actions

Code quality checks and automated builds

4. Configuration Management

Tools like Ansible, Chef, or Puppet

Managing infrastructure as code (IaC)

Role-based access control

5. Containerization and Orchestration

Docker fundamentals

Kubernetes (K8s) clusters, deployments, and services

Helm charts and autoscaling strategies

6. Monitoring and Logging

Prometheus and Grafana

ELK Stack (Elasticsearch, Logstash, Kibana)

Incident alerting systems

7. Cloud Infrastructure and DevOps Automation Tools

AWS, Azure, or GCP fundamentals

Terraform for IaC

CI/CD pipelines integrated with cloud services

Real-World Applications: Why Hands-On Learning Matters

A key feature of any top-tier DevOps training online is its practical approach. Without hands-on labs or real projects, theory can only take you so far.

Here’s an example project structure:

Project: Deploying a Multi-Tier Application with Kubernetes

Such projects help learners not only understand tools but also simulate real DevOps scenarios, building confidence and clarity.

DevOps Training and Certification: What You Should Know

Certifications validate your knowledge and can significantly improve your job prospects. A solid DevOps training and certification program should prepare you for globally recognized exams like:

DevOps Foundation Certification

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Docker Certified Associate

While certifications are valuable, employers prioritize candidates who demonstrate both theoretical knowledge and applied skills. This is why combining training with placement offers the best return on investment.

What to Look for in a DevOps Online Course

If you’re on the hunt for the best DevOps training online, here are key features to consider:

Structured Curriculum

It should cover everything from fundamentals to advanced automation practices.

Expert Trainers

Trainers should have real industry experience, not just academic knowledge.

Hands-On Projects

Project-based assessments help bridge the gap between theory and application.

Flexible Learning

A good DevOps online course offers recordings, live sessions, and self-paced materials.

Placement Support

Look for programs that offer:

Resume writing and LinkedIn profile optimization

Mock interviews with real-time feedback

Access to a network of hiring partners

Benefits of Enrolling in DevOps Bootcamp Online

A DevOps bootcamp online fast-tracks your learning process. These are intensive, short-duration programs designed for focused outcomes. Key benefits include:

Rapid skill acquisition

Industry-aligned curriculum

Peer collaboration and group projects

Career coaching and mock interviews

Job referrals and hiring events

Such bootcamps are ideal for professionals looking to upskill, switch careers, or secure a DevOps role without spending years in academia.

DevOps Automation Tools You Must Learn

Git & GitHub Git is the backbone of version control in DevOps, allowing teams to track changes, collaborate on code, and manage development history. GitHub enhances this by offering cloud-based repositories, pull requests, and code review tools—making it a must-know for every DevOps professional.

Jenkins Jenkins is the most popular open-source automation server used to build and manage continuous integration and continuous delivery (CI/CD) pipelines. It integrates with almost every DevOps tool and helps automate testing, deployment, and release cycles efficiently.

Docker Docker is a game-changer in DevOps. It enables you to containerize applications, ensuring consistency across environments. With Docker, developers can package software with all its dependencies, leading to faster development and more reliable deployments.

Kubernetes Once applications are containerized, Kubernetes helps manage and orchestrate them at scale. It automates deployment, scaling, and load balancing of containerized applications—making it essential for managing modern cloud-native infrastructures.

Ansible Ansible simplifies configuration management and infrastructure automation. Its agentless architecture and easy-to-write YAML playbooks allow you to automate repetitive tasks across servers and maintain consistency in deployments.

Terraform Terraform enables Infrastructure as Code (IaC), allowing teams to provision and manage cloud resources using simple, declarative code. It supports multi-cloud environments and ensures consistent infrastructure with minimal manual effort.

Prometheus & Grafana For monitoring and alerting, Prometheus collects metrics in real time, while Grafana visualizes them in dashboards. Together, they help track application performance and system health, both of which are essential for proactive operations.

ELK Stack (Elasticsearch, Logstash, Kibana) The ELK stack is widely used for centralized logging. Elasticsearch stores logs, Logstash processes them, and Kibana provides powerful visualizations, helping teams troubleshoot issues quickly.

Mastering these tools gives you a competitive edge in the DevOps job market and empowers you to build reliable, scalable, and efficient software systems.
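To make the CI/CD idea behind tools such as Jenkins more concrete, here is a minimal Python sketch of a pipeline that runs its stages in order and stops at the first failure. It does not use Jenkins or any other real tool's API. The `run_pipeline` function and the stage names are assumptions made purely for illustration.

```python
def run_pipeline(stages):
    """Run (name, step) pairs in order and stop at the first failing step.

    Each step is a callable that returns True on success and False on
    failure. Returns the list of completed stage names and the name of
    the failed stage, or None if every stage succeeded.
    """
    completed = []
    for name, step in stages:
        if not step():
            return completed, name
        completed.append(name)
    return completed, None


# Hypothetical stages: the test stage fails, so deploy never runs.
done, failed = run_pipeline([
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
])
print(done, failed)
```

Real CI servers add much more (triggers, agents, artifacts, notifications), but the fail-fast ordering of stages is the core behavior worth understanding first.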

Job Market Outlook for DevOps Professionals

According to the U.S. Bureau of Labor Statistics, employment in software development roles is projected to grow 25% from 2022 to 2032, much faster than the average for all occupations. DevOps roles are a large part of this trend. Companies need professionals who can automate pipelines, manage scalable systems, and deliver software efficiently.

Average salaries in the U.S. for DevOps engineers range from $95,000 to $145,000, depending on experience, certifications, and location.

Companies across industries—from banking and healthcare to retail and tech—are hiring DevOps professionals for critical digital transformation roles.

Is DevOps for You?

If you relate to any of the following, a DevOps course online might be the perfect next step:

You're from an IT background looking to transition into automation roles

You enjoy scripting, problem-solving, and system management

You're a software developer interested in faster and reliable deployments

You're a system admin looking to expand into cloud and DevOps roles

You want a structured, placement-supported training program to start your career

How to Get Started with DevOps Training and Placement

Step 1: Enroll in a Comprehensive Program

Choose a program that covers both foundational and advanced concepts and includes real-time projects.

Step 2: Master the Tools

Practice using popular DevOps automation tools like Docker, Jenkins, and Kubernetes.

Step 3: Work on Live Projects

Gain experience working on CI/CD pipelines, cloud deployment, and infrastructure management.

Step 4: Prepare for Interviews

Use mock sessions, Q&A banks, and technical case studies to strengthen your readiness.

Step 5: Land the Job

Leverage placement services, interview support, and resume assistance to get hired.

Key Takeaways

DevOps training provides the automation and deployment skills demanded in modern software environments.

Placement support is crucial to transitioning from learning to earning.

Look for comprehensive online courses that offer hands-on experience and job assistance.

DevOps is not just a skill; it’s a mindset of collaboration, speed, and innovation.

Ready to launch your DevOps career? Join H2K Infosys today for hands-on learning and job placement support. Start your transformation into a DevOps professional now.

#devops training#DevOps course#devops training online#devops online training#devops training and certification#devops certification training#devops training with placement#devops online courses#best devops training online#online DevOps course#advanced devops course#devops training and placement#devops course online#devops real time training#DevOps automation tools


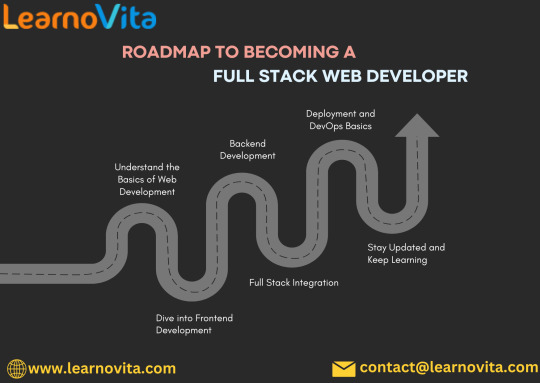

Explore the Power of Full Stack Web Development with Real-World Projects

In the fast-evolving world of technology, full stack web development stands out as one of the most versatile and in-demand skills today. If you’re eager to build a strong foundation in both frontend and backend development, it’s crucial to explore the power of full stack web development with real-world projects. This hands-on approach not only enhances your understanding but also prepares you to tackle actual challenges in the industry.

Why Focus on Full Stack Development?

Full stack developers possess the unique ability to design and build complete web applications — from user interfaces to databases and servers. Their comprehensive skill set makes them highly valuable to employers, startups, and freelance clients alike.

Key advantages of mastering full stack development include:

End-to-End Development Skills: Handle both client and server-side programming.

Increased Employability: Companies prefer versatile developers who can oversee projects holistically.

Flexibility: Opportunity to work on diverse projects across industries.

Better Problem-Solving: Understanding the full application lifecycle improves debugging and innovation.

Importance of Real-World Projects in Learning

Theory is important, but nothing beats practical experience. Engaging with real-world projects allows you to:

Apply concepts in a tangible manner.

Understand project workflows and collaboration.

Develop problem-solving strategies.

Build a strong portfolio to showcase to employers.

Our training program encourages students to explore the power of full stack web development with real-world projects, enabling them to graduate as job-ready developers.

Comprehensive Curriculum

Our course includes a wide range of essential topics to ensure you gain a holistic understanding:

Frontend Development: Master HTML, CSS, JavaScript, and frameworks like React and Angular.

Backend Programming: Learn server-side scripting with Node.js, Express.js, and incorporate backend knowledge from the Java certification course in Pune.

Databases: Work with relational and non-relational databases such as MySQL and MongoDB.

Version Control: Use Git and GitHub to manage code collaboratively.

Deployment: Learn to deploy applications on cloud platforms and manage servers.

Who Should Join?

This course is ideal for:

Beginners who want to start their web development journey.

Graduates aiming to boost their technical skills.

Professionals looking for a career shift into software development.

Freelancers and entrepreneurs interested in developing web applications.

Why Choose the Best Full Stack Develop


Building It Right: How to Future-Proof Your Dynamics 365 CE Solution Architecture

A well-structured Dynamics 365 Customer Engagement (CE) solution architecture is essential for growing businesses aiming to scale their CRM without sacrificing performance or maintainability. As organizations expand, the complexity of their Dynamics implementation increases, and without the right architecture in place, that complexity can quickly spiral into technical debt.

This blog explores what defines a scalable CE solution architecture and how to structure it for long-term success. It begins with the fundamentals: understanding how components like entities, workflows, business rules, plugins, and applications should be organized and layered. A clear layering strategy, divided into base (Microsoft or ISV), middle (business-specific customizations), and top (patches and new features), helps reduce deployment conflicts and simplifies upgrades.

One of the most important decisions in solution architecture is choosing between managed and unmanaged solutions. The post recommends using unmanaged solutions during development for flexibility, then exporting them as managed for production to maintain control and stability.

Version control is another key pillar. Microsoft’s support for patches and cloned solutions allows teams to manage changes more effectively, reduce risks, and trace updates. These tools ensure that enhancements and bug fixes are delivered in a controlled, trackable manner.
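As a rough illustration of version tracking across patches and cloned solutions, the Python sketch below models a four-part version number (major.minor.build.revision). The class name `SolutionVersion` and the two bump rules are assumptions made for this example: a patch keeps major.minor and raises the build number, while a cloned solution raises the minor number. Check the rules your own environment enforces before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class SolutionVersion:
    """Hypothetical helper for a four-part version: major.minor.build.revision."""

    major: int
    minor: int
    build: int
    revision: int

    @classmethod
    def parse(cls, text: str) -> "SolutionVersion":
        parts = text.split(".")
        if len(parts) != 4:
            raise ValueError(f"expected four dot-separated parts, got {text!r}")
        return cls(*(int(p) for p in parts))

    def next_patch(self) -> "SolutionVersion":
        # Assumed convention: a patch keeps major.minor and raises the build number.
        return SolutionVersion(self.major, self.minor, self.build + 1, 0)

    def next_release(self) -> "SolutionVersion":
        # Assumed convention: a cloned (rolled-up) solution raises the minor number.
        return SolutionVersion(self.major, self.minor + 1, 0, 0)

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.build}.{self.revision}"


current = SolutionVersion.parse("1.2.0.0")
print(current.next_patch())    # patch on top of the current release
print(current.next_release())  # next rolled-up release
```

Because the dataclass is ordered, versions compare field by field, which makes it easy to check that a release being imported is newer than the one already installed.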

The blog also outlines how to build a smart environment strategy, including dedicated Dev, Test, UAT, and Production instances. Changes should flow systematically through these stages to catch issues early and ensure smoother go-lives.
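The staged flow can be sketched in a few lines of Python. This is only an illustration of the idea that a change must pass every earlier environment before entering the next one. The stage names come from the paragraph above; the function names are hypothetical.

```python
from typing import Optional, Set

# Ordered environments, as named in the post.
STAGES = ["Dev", "Test", "UAT", "Production"]


def next_stage(current: str) -> Optional[str]:
    """Return the stage a change moves to next, or None once it is in Production."""
    index = STAGES.index(current)  # raises ValueError for an unknown stage
    return STAGES[index + 1] if index + 1 < len(STAGES) else None


def can_deploy(target: str, passed: Set[str]) -> bool:
    """A change may enter `target` only if it has passed every earlier stage."""
    required = STAGES[: STAGES.index(target)]
    return all(stage in passed for stage in required)


print(next_stage("Test"))
print(can_deploy("Production", {"Dev", "Test"}))  # UAT has not been passed yet
```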

Incorporating Git and Azure DevOps into your workflow supports continuous integration and deployment (CI/CD), making it easier to automate deployments, track changes, and collaborate across teams. This practice cuts down on manual errors and speeds up release cycles.

To help teams avoid common pitfalls, best practices include keeping the base solution lightweight, avoiding direct edits in production, tagging every release with a version number, and maintaining clear naming conventions.

The blog wraps up with a real-world example of how a multi-region organization successfully applied these principles to deploy Dynamics 365 CE at scale, proving that the right solution architecture doesn’t just support the business, it helps drive it forward.


Top PHP Development Tools Every Developer Must Use

Powerful PHP Development Tools Every Developer Needs

When it comes to building fast, scalable, and secure web applications, PHP Development Tools play a crucial role in improving a developer's workflow. These tools simplify complex coding tasks, increase productivity, and reduce time to market for applications. Whether you’re a freelancer, part of an agency, or seeking a PHP Development Tools Company, leveraging the right stack can make all the difference.

From debugging to deployment, PHP development tools have evolved rapidly. They now offer integrated environments, package managers, testing frameworks, and much more. This makes it easier for PHP Development Tools Companies to deliver high-quality software products consistently. Let's explore the must-have PHP tools and how they benefit both beginners and expert developers.

1. PhpStorm

A go-to IDE for PHP developers, PhpStorm offers intelligent code assistance, debugging, and testing. Its seamless integration with frameworks like Laravel, Symfony, and WordPress makes it indispensable for modern development workflows.

2. Xdebug

Xdebug is an extension for debugging and profiling PHP code. It helps developers trace errors quickly, providing a detailed breakdown of stack traces, memory usage, and performance bottlenecks.

3. Composer

Composer is a dependency manager that enables developers to manage libraries, tools, and packages easily. It’s essential for maintaining consistency in your PHP project and avoiding compatibility issues.
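Composer records a project's dependencies in a `composer.json` file, under the `require` and `require-dev` keys. The Python sketch below simply reads such a file and lists what it declares. The sample content and the function name `declared_packages` are made up for illustration; this is not part of Composer itself.

```python
import json
from typing import Dict

# Illustrative composer.json content; package names and constraints are examples only.
SAMPLE_COMPOSER_JSON = """
{
    "require": {
        "php": "^8.1",
        "monolog/monolog": "^3.0"
    },
    "require-dev": {
        "phpunit/phpunit": "^10.0"
    }
}
"""


def declared_packages(composer_json_text: str) -> Dict[str, Dict[str, str]]:
    """Return the runtime and development dependencies declared in composer.json."""
    data = json.loads(composer_json_text)
    return {
        "require": dict(data.get("require", {})),
        "require-dev": dict(data.get("require-dev", {})),
    }


for section, packages in declared_packages(SAMPLE_COMPOSER_JSON).items():
    for name, constraint in packages.items():
        print(f"{section}: {name} {constraint}")
```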

4. Laravel Forge

For Laravel lovers, Forge is an excellent server management tool. It simplifies the deployment of PHP apps to cloud providers like DigitalOcean, Linode, and AWS.

5. NetBeans

NetBeans is another powerful IDE with extensive support for PHP development. It supports multiple languages and integrates well with Git, SVN, and Mercurial.

✅ Book an Appointment with a PHP Expert

Looking to scale your PHP project with confidence? Book an Appointment Today, get expert insights, cost breakdowns, and the right strategy tailored to your business.

6. PHPUnit

When it comes to testing PHP applications, PHPUnit is the industry standard. It helps developers write unit tests, perform test-driven development (TDD), and ensure that code changes don’t break functionality.

7. Sublime Text + PHP Extensions

While not a dedicated PHP IDE, Sublime Text is lightweight, highly customizable, and loved for its speed. With PHP-specific plugins, it can be transformed into a lean PHP development machine.

Why PHP Development Tools Matter for Businesses

Companies offering PHP Development Tools Services focus on providing complete solutions, from development to deployment. These tools empower developers to build robust applications while maintaining security and performance standards. If you’re considering hiring a partner, choosing a PHP Development Tools Solution from a reputed firm can cut down your development time and cost.

In India, the demand for reliable PHP Development Tools in India is on the rise. Indian companies are known for offering affordable, high-quality services that meet global standards. Whether you're a startup or a large enterprise, opting to Hire PHP Developer in India is a strategic move.

The PHP Development Tools Cost varies depending on the features and scale of the project. However, the ROI is often significant, thanks to better code quality, reduced bugs, and faster deployment cycles.

Conclusion

Choosing the right PHP development tools can significantly impact the success of your web projects. From powerful IDEs like PhpStorm to essential tools like Composer and PHPUnit, every tool serves a unique purpose. Collaborating with a professional PHP Development Tools Company or using PHP Development Tools Services ensures you have access to expert knowledge, efficient workflows, and scalable solutions.


Hiring Blockchain Developers in the UAE: Costs, Skills & Where to Start

Once discussed mainly in connection with cryptocurrencies, blockchain has quickly become a powerful technology serving multiple industries: finance, supply chain, healthcare, real estate, and even government services. With organizations in the UAE racing to innovate and gain a competitive edge, the demand to hire blockchain developers in UAE has soared. However, finding the right talent in this domain is not easy.

This guide covers what you need to know about hiring blockchain developers in UAE: costs, essential skills, and how to go about it step by step.

Why the UAE Is a Hotspot for Blockchain Talent

Dubai and Abu Dhabi are working to become internationally recognized centers for blockchain innovation. Government-backed initiatives such as the Dubai Blockchain Strategy and the Emirates Blockchain Strategy 2021 show the government’s intent to move 50% of government transactions onto blockchain-based workflows. The business ecosystem is also well set up, with friendly policies, tax breaks, and access to global markets.

With growing initiatives, specialized developers, and a competitive startup environment, the UAE is a prime location for blockchain development. If you are looking to hire blockchain developers in UAE, the time to act is now.

What to Keep in Mind When Looking to Hire Blockchain Developers

Regardless of your situation, the most critical question is whether hiring blockchain developers makes sense for your business. You might need blockchain developers in the UAE if:

You aim to build a decentralized app (i.e., dApp)

You want to execute transactions via smart contracts for secured and automated dealings

You are looking to launch an NFT marketplace or a token-based project

You need secure sharing of information among several different parties

You are interested in using blockchain for identity checks, supply chain verification, or financial solutions

Key pointers to remember as you prepare for the recruitment drive

Blockchain technology is a broad field, and so are the skill sets it calls for. When planning to hire a blockchain expert in the UAE, look for candidates with at least these skills:

1. Major Technical Skills Needed

Proficiency in Programming Languages: Rust, Python, Go, JavaScript, and Solidity for Ethereum

Smart Contract Creation: Experience with Ethereum, Binance Smart Chain, and Hyperledger

Blockchain Architecture: Understanding nodes, data structures, and consensus algorithms

Cryptography: Understanding security protocols, public and private key encryption, and hashing

Token Streamlining: Experience with capturing events with Etherscan API and employing Web3 for token issuance

Web3 and dApp work: Experience with MetaMask and Web3.js
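To make the "data structures" and "hashing" items above concrete, here is a minimal Python sketch of a hash-linked chain of blocks, the basic structure behind a blockchain. It is a teaching toy under simplifying assumptions: there is no consensus, networking, or signing, and the function names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

Block = Dict[str, Any]


def block_hash(block: Block) -> str:
    """SHA-256 over a canonical JSON encoding of the block."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def add_block(chain: List[Block], data: str) -> None:
    """Append a block that stores the hash of the block before it."""
    previous = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "previous_hash": previous})


def is_valid(chain: List[Block]) -> bool:
    """Each block must reference the current hash of its predecessor."""
    for i in range(1, len(chain)):
        if chain[i]["previous_hash"] != block_hash(chain[i - 1]):
            return False
    return True


chain: List[Block] = []
for entry in ["genesis", "payment A", "payment B"]:
    add_block(chain, entry)

print(is_valid(chain))
chain[1]["data"] = "tampered"  # editing an earlier block breaks the link after it
print(is_valid(chain))
```

Changing any block other than the last one changes its hash, so the block that follows no longer points at it. Real networks add consensus rules on top so that the latest block is protected as well.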

2. Development Tools & Platforms

Truffle and Hardhat for Testing and Deployment

Smart contract prototyping using Remix IDE

Decentralized storage and oracles using IPFS and Chainlink

Git for version control

3. Soft Skills

Problem-solving mind

Agile and collaborative development approaches

Ability to document and communicate clearly

Costs of Hiring Blockchain Developers in the UAE

Blockchain developers command relatively high rates because the work is complex and specialized. Within the UAE, the cost depends on several factors:

1. Hiring Model

In-House Developer: AED 25,000 - AED 45,000/month

Freelancer/Contractor: AED 150 - AED 400/hour

Dedicated Development Team via Agency: AED 35,000 - AED 65,000/month depending on team size and experience
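To compare the hourly freelancer rate with the monthly figures, the hourly range can be converted to a monthly one. The short Python sketch below does that arithmetic. The 160 billable hours per month (8 hours over 20 working days) is an assumption for illustration, not a figure from this guide.

```python
from typing import Tuple

HOURS_PER_MONTH = 160  # assumption: 8 hours a day, 20 working days


def monthly_range(hourly_low: int, hourly_high: int,
                  hours: int = HOURS_PER_MONTH) -> Tuple[int, int]:
    """Convert an hourly rate range (AED) into a monthly cost range (AED)."""
    return hourly_low * hours, hourly_high * hours


full_time = monthly_range(150, 400)           # freelancer engaged full-time
half_time = monthly_range(150, 400, hours=80)  # freelancer engaged half-time

print(f"Full-time freelancer: AED {full_time[0]:,} - AED {full_time[1]:,}/month")
print(f"Half-time freelancer: AED {half_time[0]:,} - AED {half_time[1]:,}/month")
```

Under that assumption, a full-time freelancer costs roughly AED 24,000 to AED 64,000 a month, which overlaps with the in-house range, while a half-time engagement comes to about AED 12,000 to AED 32,000.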

2. Experience Level

Junior Developer (0-2 years): AED 15,000 - AED 25,000/month

Mid-Level Developer (2-5 years): AED 25,000 - AED 35,000/month

Senior Developer (5+ years): AED 35,000 - AED 50,000+/month

3. Project Scope and Complexity

The more complex your blockchain solution is (for example, multi-chain support, custom tokenomics, interoperability), the more costly it will be to develop.

Identifying the Best Blockchain Developers in the UAE

Whether you want to work with a freelancer or engage a full team, the UAE offers numerous avenues for finding skilled blockchain talent. Below are some of the best starting points.

1. Freelance Platforms

Websites such as Toptal, Upwork, and Freelancer let you work with specialized blockchain developers from across the globe, many of them located in the UAE.

2. Blockchain Development Agencies

WDCS Technology, for example, provides specialized ready-made blockchain development consultants alongside other consulting services pertaining to the industry. If you are looking for a complete blockchain development service without going through the recruitment process, this is the ideal route for you.

3. Job Portals and Professional Networking Websites

For job listings, you can use Bayt and Naukrigulf.

You can meet local developers at workshops, meetups, and tech talks in Dubai.

For professional connections, LinkedIn UAE serves the purpose.

4. University Incubators and Hackathons

You can get in touch with tech incubators at Khalifa University or take part in GITEX Future Stars to scout for innovative blockchain developers.

Step-by-Step Guide to Recruiting Blockchain Developers in UAE

Step 1: Define the Scope and Constraints of Your Project

Include objectives, the target blockchain platform, features, and deadlines.

Step 2: Pick the Hiring Model

Select from in-house, freelance, or development agency based on financial resources, project details, and future goals.

Step 3: Conduct Skills & Experience Evaluation

Assess candidate skills using technical tests, coding competitions, and relevant work sample evaluations.

Step 4: Assess Cultural Fit, Work Style, and Soft Skills

Check whether the developer fits your organizational practices and protocols and can work well in a team.

Step 5: Effective Onboarding & Management

Implement agile project approaches with daily stand-ups and use tools like Jira or Trello for milestones to manage progress.

Legal and Regulatory Framework

If you are hiring blockchain developers in UAE and dealing with tokens, digital financial assets, or consumer information, the following regulations should be considered:

Adhere to the UAE Central Bank rules regarding digital assets

Obey the ADGM and DIFC free zone laws if operating in those areas

Have adequate Intellectual Property (IP) ownership and Non-Disclosure Agreements (NDAs) in place within your employment agreements

Most Common Issues and Solutions:

1. Limited Pool of Skilled Candidates

Blockchain is still an emerging skill set. Partnering with agencies can help fill the gap.

2. Rapidly Changing Technology

Look for developers who actively participate in the community and are willing to learn new things.

3. Security Risks

Make sure to enforce secure coding policies with internal developer teams and conduct regular audits.

Why Collaborate With A Blockchain Development Agency In The UAE?

It may seem cost-effective to hire freelancers or build an internal team, but working with a dedicated agency like WDCS Technology has added benefits, such as:

Access to a ready-to-use team of blockchain specialists.

Faster time-to-market.

All-round project assistance right from the concept through to deployment.

The ability to adjust resource levels as required with project growth.

Conclusion

The UAE is a good market for businesses looking to hire blockchain developers because it has a blend of progressive policies and a growing technology ecosystem. However, having the skilled talent to help build and manage a decentralized platform, digital tokens, or secure data flows is what makes the difference.

If you are looking to accelerate the pace of your blockchain project while avoiding expensive mistakes, it is recommended that you consult local experts who know the market well.

Ready to Hire Blockchain Developers in UAE?

WDCS Technology provides UAE-focused blockchain development services. Its UAE-certified specialists can help execute your ideas securely, efficiently, and on schedule.

Visit Our Services or Get In Touch With Us To Start!


Why ‘Learning Java’ Won’t Cut It Anymore (And What to Do Instead)

Master Java with context — full stack, marketing, design, AI, and real-world training to become job-ready with Cyberinfomines.

Walk into any engineering college today and ask students what programming language they’re learning. The most common answer? Java. For decades, Java has been considered the holy grail for aspiring developers. But in 2025, is simply “learning Java” enough to get a job, build something meaningful, or even keep pace with the evolving tech landscape?

Let’s be honest — learning Java in isolation won’t cut it anymore. You need context, application, ecosystem understanding, and business awareness. And more importantly, you need transformation — not just instruction. That’s where Cyberinfomines comes in.

Let’s unpack why Java isn’t the final destination — and how you can future-proof your learning journey with the right approach.

1. Java Is Just One Piece of a Much Bigger Puzzle

Knowing Java is like knowing how to use a hammer — it’s a tool. But building a house requires architecture, plumbing, wiring, and design. In the same way, modern development needs you to understand how Java fits into the broader ecosystem.

Today’s applications require:

Front-end frameworks (React, Angular)

Back-end orchestration (Spring Boot, Node.js)

Cloud environments (AWS, GCP, Azure)

Databases (MongoDB, PostgreSQL, MySQL)

APIs and microservices

CI/CD pipelines and DevOps workflows

Without these, Java becomes a limiting skill rather than an empowering one. That’s why Cyberinfomines trains students through Full Stack Developer programs — not just “Java.”

2. The Industry Demands Job-Ready, Not Just Trained

It’s not about whether you’ve heard of concepts like REST APIs or Docker; it’s about whether you’ve built something using them. Recruiters are no longer impressed with your familiarity — they want your functionality.

That’s why we emphasize:

Project Based Training that mimics real workplace challenges

Continuous integration tools, Git/GitHub workflows

Working on Existing Website & Mobile Applications to understand deployment, bugs, and testing cycles

Exposure to UI/UX, backend architecture, and server handling

This applied learning means you walk into interviews with a portfolio, not just a certificate.

3. Java Developers Now Need Cross-Disciplinary Skills

A good developer today must also know a bit about:

Product thinking

APIs and webhooks

Application security

SEO principles

User experience

Through Web Application Designing and Website Designing modules, we help learners merge technical skills with business and user priorities.

4. You Can’t Ignore Digital Marketing Anymore

Wait — what does digital marketing have to do with programming?

Everything.

Even the best application will die in silence if it’s not marketed correctly. Developers today are expected to collaborate with marketers, understand basic analytics, and build platforms that support growth strategies.

That’s why we offer:

Search Engine Optimization (SEO) integration strategies

How email workflows connect through Email Marketing APIs

Site analytics with Web Analytics & Data Analytics

Building mobile-first experiences for Mobile Marketing campaigns

So no, Java can’t do it alone. You need a broader lens to see the big picture.

5. AI & Automation Are Reshaping Everything

To know more, please visit our website: https://cyberinfomines.com/blog-details/why-learning-java-won%E2%80%99t-cut-it-anymore-and-what-to-do-instead


How a Professional Web Development Company in Udaipur Builds Scalable Websites

In today’s fast-paced digital world, your website isn’t just a digital brochure: it’s your 24/7 storefront, marketing engine, and sales channel. But not every website is built to last or grow. This is where scalable web development comes into play.

For businesses in Rajasthan’s growing tech hub, choosing the right web development company in Udaipur can make or break your digital success. In this article, we’ll explore how WebSenor, a leading player in the industry, helps startups, brands, and enterprises build websites that scale, not just technically but strategically.

Why Scalability Matters in Modern Web Development

As more Udaipur tech startups and businesses go digital, a scalable website ensures your online presence grows with your company — without needing a complete rebuild every year.

Scalability means your website:

Handles increasing traffic without slowing down

Adapts to new features or user demands

Remains secure, fast, and reliable as you grow

Whether you’re launching an eCommerce website in Udaipur, building an app for services, or developing a customer portal, scalable architecture is the foundation.

Meet WebSenor – Your Web Development Partner in Udaipur

WebSenor Technologies, a trusted Udaipur IT company, has been delivering custom website development in Udaipur and globally since 2008. Known for their strategic thinking, expert team, and client-first mindset, they’ve earned their spot among the best web development firms in Udaipur. What sets them apart? Their ability to not just design beautiful websites, but to engineer platforms built for scale — from traffic to features to integrations.

What Exactly Is a Scalable Website?

A scalable website is one that grows with your business — whether that’s more visitors, more products, or more complex workflows.

Business Perspective:

Can handle thousands of users without performance drops

Easily integrates new features or plugins

Offers seamless user experience across devices

Technical Perspective:

Built using flexible frameworks and architecture

Cloud-ready with load balancing and caching

Optimized for security, speed, and modularity

Think of it like constructing a building with space to expand: it may start with one floor, but the foundation is strong enough to add five more later.

WebSenor’s Proven Process for Building Scalable Websites

Here’s how WebSenor, one of the top-rated Udaipur developers, delivers scalability from the first step:

1. Discovery & Requirement Analysis

They begin by deeply understanding your business needs, user base, and long-term goals. This early strategy phase ensures the right roadmap for future growth is in place.

2. Scalable Architecture Planning

WebSenor uses:

Microservices for independent functionality

Modular design for faster updates

Cloud-first architecture (AWS, Azure)

Database design that handles scaling easily (NoSQL, relational hybrid models)

3. Technology Stack Selection

They work with the most powerful and modern stacks:

React.js, Vue.js (for the frontend)

Node.js, Laravel, Django (for the backend)

MongoDB, MySQL, PostgreSQL (for data)

These tools are selected based on project needs: no bloated tech, only what works best.

4. Agile Development & Code Quality

Their agile methodology allows flexible development in sprints. With Git-based version control, clean and reusable code, and collaborative workflows, they ensure top-quality software every time.

5. Cloud Integration & Hosting

WebSenor implements:

Auto-scaling cloud environments

CDN for global speed

Load balancing for high-traffic periods

This ensures your site performs reliably — whether you have 100 or 100,000 users.
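As a simplified picture of what load balancing does, the Python sketch below spreads incoming requests across a pool of servers in round-robin order. It is a conceptual toy, not WebSenor's setup: in practice this job is handled by a managed cloud load balancer, and the class name and server names here are hypothetical.

```python
from itertools import cycle
from typing import List


class RoundRobinBalancer:
    """Hand each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers: List[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def route(self, request_id: str) -> str:
        # The request id is unused here; round-robin ignores request content.
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
for i in range(6):
    print(f"request-{i} -> {balancer.route(f'request-{i}')}")
```

Auto-scaling complements this by changing how many servers are in the pool as traffic rises and falls.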

6. Testing & Optimization

From speed tests to mobile responsiveness to security audits, every site is battle-tested before launch. Performance is fine-tuned using tools like Lighthouse, GTmetrix, and custom scripts.

Case Study: Scalable eCommerce for a Growing Udaipur Business

One of WebSenor’s recent projects involved an eCommerce website development in Udaipur for a local fashion brand.

Challenge: The client needed a simple store for now but anticipated scaling to 1,000+ products and international orders in the future.

Solution: WebSenor built a modular eCommerce platform using Laravel and Vue.js, integrated with AWS hosting. With scalable product catalogs and multilingual support, the store handled:

4x growth in traffic in 6 months

2,000+ monthly transactions

99.99% uptime during sale events

Client Feedback:

“WebSenor didn't just build our website — they built a system that grows with us. The team was responsive, transparent, and truly professional.”

Expert Insights from WebSenor’s Team

“Too often, startups focus only on what they need today. We help them plan 1–3 years ahead. That’s where real savings and growth come from.”

He also shared this advice:

Avoid hardcoded solutions

Always design databases for growth

Prioritize speed and user experience from Day 1

WebSenor’s team includes full-stack experts, UI/UX designers, and cloud engineers who are passionate about future-proof development.

Why Trust WebSenor – Udaipur’s Web Development Experts

Here’s why businesses across India and overseas choose WebSenor:

15+ years of development experience

300+ successful projects

A strong team of local Udaipur development experts

Featured among the best web development companies in Udaipur

Client base in the US, UK, UAE, and Australia

Ready to Build a Scalable Website? Get Started with WebSenor

Whether you're a startup, SME, or growing brand, WebSenor offers tailored web development services in Udaipur built for growth and stability.


From eCommerce to custom applications, they’ll guide you every step of the way.

Conclusion

Scalability isn’t just a feature, it’s a necessity. As your business grows, your website should never hold you back. Partnering with a professional and experienced team like WebSenor means you’re not just getting a website. You’re investing in a future-ready digital platform, backed by some of the best web development minds in Udaipur. WebSenor is more than a service provider — they're your growth partner.


How to Set Up Your Local Development Environment for WordPress

Setting up a local development environment is one of the best ways to experiment with and build WordPress websites efficiently. It offers you a safe space to test themes, plugins, and updates before applying changes to a live site. Whether you’re a beginner or an experienced developer, having a local environment is essential in streamlining your workflow and minimizing website downtime.

Before we dive into the technical steps, it’s worth mentioning the benefits of WordPress for your business website. WordPress offers unmatched flexibility, scalability, and user-friendliness, making it an ideal platform for businesses of all sizes. When paired with a solid local development setup, WordPress becomes even more powerful in enabling fast and secure site builds.

Step 1: Choose Your Local Development Tool

There are several local development tools available that cater specifically to WordPress users:

Local by Flywheel (now Local WP): Extremely beginner-friendly with features like SSL support and one-click WordPress installs.

XAMPP: A more general-purpose tool offering Apache, MySQL, PHP, and Perl support.

MAMP: Ideal for macOS users.

DevKinsta: Built by Kinsta, it offers seamless WordPress development and staging capabilities.

Choose the one that suits your OS and comfort level.

Step 2: Install WordPress Locally

Once you’ve chosen your tool:

Install the software and launch it.

Create a new WordPress site through the interface.

Set up your site name, username, password, and email.

After setup, you’ll get access to your WordPress dashboard locally, allowing you to install themes, plugins, and begin your customizations.

Step 3: Configure Your Development Environment

To ensure an efficient workflow, consider these configurations:

Enable Debug Mode: Helps in identifying PHP errors.

Use Version Control (e.g., Git): Keeps your changes tracked and manageable.

Database Access: Tools like phpMyAdmin help manage your WordPress database locally.
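Debug mode in WordPress is controlled by the `WP_DEBUG` constant in `wp-config.php`. As a small helper for the checklist above, this Python sketch checks whether a config file's text switches it on. It is a simplified check under stated assumptions: it does not detect commented-out lines, and the function name is hypothetical.

```python
import re

# Matches lines such as: define( 'WP_DEBUG', true );
WP_DEBUG_PATTERN = re.compile(
    r"""define\(\s*['"]WP_DEBUG['"]\s*,\s*(true|false)\s*\)\s*;""",
    re.IGNORECASE,
)


def wp_debug_enabled(config_text: str) -> bool:
    """Return True if the first WP_DEBUG definition found is set to true."""
    match = WP_DEBUG_PATTERN.search(config_text)
    return bool(match) and match.group(1).lower() == "true"


sample = "<?php\ndefine( 'WP_DEBUG', true );\n"
print(wp_debug_enabled(sample))
```

On a real site you would read the file first, for example with `pathlib.Path("wp-config.php").read_text()`, and keep debug mode off on the live server.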

If your project requires dynamic functionality, leveraging PHP Development Services during the setup phase can ensure custom features are implemented correctly from the beginning.

Step 4: Customize Themes and Plugins Safely

With your local environment set up, now's the time to begin theme development or customization. You can safely create or modify a child theme, experiment with new plugins, and write custom code without any risk of affecting your live site.

For those unfamiliar with theme structures or WordPress standards, it’s often wise to hire a professional WordPress developer who understands best practices and can ensure clean, maintainable code.

Step 5: Syncing to a Live Server

After building and testing your site locally, you'll eventually want to push it live. Popular methods include:

Using a plugin like Duplicator or All-in-One WP Migration

Manual migration via FTP and phpMyAdmin

Using version-controlled deployment tools

Syncing should always be done carefully to avoid overwriting crucial data. Regular backups and testing are essential.

Step 6: Maintain Your WordPress Site Post-Launch

Launching your website is only the beginning. Ongoing updates, security patches, and performance optimization are critical for long-term success. Enlisting website maintenance services ensures your site remains fast, secure, and up-to-date.

Services can include:

Core, plugin, and theme updates

Malware scans and security hardening

Site performance monitoring

Regular backups

Final Thoughts

A local WordPress development environment not only speeds up your development process but also protects your live website from unintended changes and errors. With tools and strategies now more accessible than ever, there's no reason not to use one.

From learning the basics to running advanced builds, setting up locally gives you the confidence and space to grow your WordPress skills. And if you want to see real-world examples or follow along with tips and tricks I share, feel free to check out my work on Instagram for practical inspiration.


From Code to Cloud: End-to-End Automation with CI/CD and IaC

Modern software development demands speed, reliability, and repeatability. Organizations aiming to fully leverage cloud computing must go beyond just migrating applications—they must automate the entire lifecycle, from writing code to deploying it in the cloud.

Two key practices make this possible: CI/CD (Continuous Integration and Continuous Deployment) and Infrastructure as Code (IaC). When combined, they provide an end-to-end automation framework that accelerates delivery while ensuring consistency and control.

In this post, we’ll explore how CI/CD and IaC work together to turn your development workflow into a streamlined, automated engine that takes your code seamlessly to the cloud.

Understanding CI/CD and IaC

What is CI/CD?

Continuous Integration is the practice of automatically integrating code changes into a shared repository, running tests to detect errors early. Continuous Deployment takes it further by automatically deploying tested code to production environments.

Benefits:

Faster and safer deployments

Reduced manual errors

Immediate feedback on code quality

Shorter time to market

What is Infrastructure as Code (IaC)?

Infrastructure as Code is the process of managing cloud infrastructure using code instead of manual provisioning. Tools like Terraform, AWS CloudFormation, and Pulumi allow teams to write, version, and deploy infrastructure configurations just like application code.
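The core idea behind declarative IaC is to describe the desired state and let a tool work out the changes. The Python sketch below illustrates that with a toy "plan" step that compares current and desired resources. It is a conceptual sketch only, not how Terraform, CloudFormation, or Pulumi are implemented, and the resource names are made up.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, Dict[str, str]]


def plan(current: Resources, desired: Resources) -> List[Tuple[str, str]]:
    """List the actions needed to move `current` infrastructure to `desired`."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


current_state = {"web": {"size": "small"}, "old-db": {"engine": "mysql"}}
desired_state = {"web": {"size": "large"}, "cache": {"engine": "redis"}}

for action, resource in plan(current_state, desired_state):
    print(action, resource)
```

Because the desired state lives in a file, it can be reviewed and versioned like any other code, and running the plan against an environment that already matches produces no actions.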

Benefits:

Environment consistency

Version control and change tracking

Faster provisioning and recovery

Improved collaboration between dev and ops

Why Combine CI/CD and IaC?

When CI/CD pipelines are integrated with Infrastructure as Code, the result is end-to-end automation—from code changes to infrastructure setup and deployment. This combination ensures:

Every environment is created exactly the same way

Code and infrastructure changes go through the same review and testing process

Deployments are consistent, secure, and repeatable

Real-World Example of Code-to-Cloud Automation

Let’s look at a typical workflow:

A developer pushes code and infrastructure configuration files to a Git repository.

A CI pipeline triggers:

Runs unit tests and static analysis

Lints and validates infrastructure templates

Packages the application

If tests pass, a CD pipeline triggers:

Applies infrastructure changes using Terraform

Deploys the new application build

Runs post-deployment tests

Sends notifications to the team

This approach means no manual steps are required—from writing the code to having it live in production.
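The workflow above can be sketched as an ordered list of stages that stops at the first failure. This Python example is a toy model of that control flow, with placeholder steps standing in for real test, validation, and deployment commands. The names are hypothetical and it does not use any CI product's API.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order; stop as soon as one reports failure."""
    log: List[str] = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages, such as deployment, never run
    return log


# Placeholder steps; real ones would invoke test runners or deployment tools.
pipeline: List[Stage] = [
    ("unit tests", lambda: True),
    ("validate infrastructure templates", lambda: True),
    ("package application", lambda: True),
    ("apply infrastructure changes", lambda: True),
    ("deploy build", lambda: True),
    ("post-deployment tests", lambda: True),
]

for line in run_pipeline(pipeline):
    print(line)
```

Stopping at the first failed stage is what keeps a broken build or an invalid template from ever reaching production.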

Benefits of End-to-End Automation

1. Speed

CI/CD with IaC shortens the release cycle dramatically. Teams can deploy updates several times a day with minimal friction.

2. Scalability

IaC enables infrastructure to scale dynamically as needed. CI/CD ensures that changes scale alongside applications.

3. Security and Compliance

Automated pipelines can integrate security scans, secrets management, and compliance checks early in the lifecycle (a practice known as Shift Left Security).

4. Cost Efficiency

Resources are provisioned and deprovisioned automatically, reducing idle time and waste. Plus, errors are caught early, preventing costly downtime.

5. Resilience

Because infrastructure is version-controlled, it can be rebuilt quickly in case of failure. Teams can roll back to previous versions of both code and infrastructure with confidence.

How Salzen Cloud Delivers Code-to-Cloud Automation

At Salzen Cloud, we help businesses design, implement, and scale CI/CD + IaC workflows tailored to their cloud strategy. Our services include:

CI/CD pipeline setup using tools like GitHub Actions, GitLab CI, Jenkins

Infrastructure as Code with Terraform, AWS CDK, and more

Automated testing, monitoring, and security integration

Training teams on DevOps best practices

We recently worked with a SaaS company to rebuild their deployment process from scratch. The result? Deployment times fell by 85%, infrastructure provisioning went from hours to minutes, and incident frequency dropped by half.

Best Practices to Get Started

Start with version control: Keep all code and infrastructure in Git

Use modular IaC: Create reusable and testable components

Integrate early testing: Catch issues before they reach production

Automate rollback: Always have a safe path back in case of failure

Monitor everything: Set up real-time alerts and dashboards
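As one way to picture the "automate rollback" practice, here is a minimal Python sketch. It assumes a hypothetical `Deployer` class that only tracks version names in memory; a real setup would redeploy the previous artifact and re-apply the previous infrastructure state rather than pop an item from a list.

```python
from typing import Callable, List


class Deployer:
    """Toy release tracker: keeps a history so a bad release can be reverted."""

    def __init__(self, initial_version: str) -> None:
        self.history: List[str] = [initial_version]

    @property
    def live(self) -> str:
        """The version currently serving traffic."""
        return self.history[-1]

    def deploy(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Promote `version`; roll back automatically if the check fails."""
        self.history.append(version)
        if health_check(version):
            return True
        self.history.pop()  # revert to the previous known-good version
        return False


deployer = Deployer("v1")
deployer.deploy("v2", health_check=lambda v: True)   # healthy, stays live
deployer.deploy("v3", health_check=lambda v: False)  # unhealthy, rolled back
print(deployer.live)  # v2
```

The point of the design is that rollback is part of the deploy step itself, so nobody has to decide under pressure how to get back to a working version.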

Final Thoughts

CI/CD and IaC are no longer optional—they’re the foundation of modern cloud-native operations. Together, they empower development teams to move fast, stay secure, and deliver value continuously.

If you’re ready to transform your development workflow and go from code to cloud seamlessly, reach out to Salzen Cloud. Our team can help architect a solution that scales with your business and accelerates innovation.


👨‍💻 Learn Git and GitHub – Master Version Control Like a Pro!

Ready to take your coding skills to the next level? Whether you're a beginner or a budding developer, learning Git and GitHub is essential in today’s collaborative development world.

🔧 Track changes, manage code versions, collaborate with teams, and contribute to open-source projects with ease.

In this course, you'll learn:

✅ Git basics: clone, commit, push, pull

✅ Branching and merging strategies

✅ Resolving conflicts

✅ Working with GitHub repositories

✅ Real-world collaboration workflows

💡 Boost your resume and streamline your development process – one commit at a time! Perfect for developers, students, and tech enthusiasts. Start building better code, together.

🚀 Enroll now and own your code confidently!

#LearnGit #GitAndGitHub #VersionControl #CodeCollaboration #GitTutorial #GitHubLearning #OpenSource #CodeWithConfidence #DeveloperSkills #ProgrammingBasics #TechEducation #GitWorkflow #CommitPushPull #CodeVersioning #GitMastery #DevJourney


Unlocking the Secrets to Full Stack Web Development

Full stack web development is a multifaceted discipline that combines creativity with technical skills. If you're eager to unlock the secrets of this dynamic field, this blog will guide you through the essential components, skills, and strategies to become a successful full stack developer.

For those looking to enhance their skills, a Full Stack Developer Course in Bangalore offers comprehensive education and job placement assistance, making it easier to master these skills and advance your career.

What Does Full Stack Development Entail?

Full stack development involves both front-end and back-end technologies. Full stack developers are equipped to build entire web applications, managing everything from user interface design to server-side logic and database management.

Core Skills Every Full Stack Developer Should Master

1. Front-End Development

The front end is the part of the application that users interact with. Key skills include:

HTML: The markup language for structuring web content.

CSS: Responsible for the visual presentation and layout.

JavaScript: Adds interactivity and functionality to web pages.

Frameworks: Familiarize yourself with front-end frameworks like React, Angular, or Vue.js to speed up development.

2. Back-End Development

The back end handles the server-side logic and database interactions. Important skills include:

Server-Side Languages: Learn a language such as JavaScript (running on Node.js), Python, Ruby, or Java.

Databases: Understand both SQL (MySQL, PostgreSQL) and NoSQL (MongoDB) databases.

APIs: Learn how to create and consume RESTful and GraphQL APIs for data exchange.

3. Version Control Systems

Version control is essential for managing code changes and collaborating with others. Git is the industry standard. Familiarize yourself with its core commands (clone, commit, branch, merge) and common team workflows.

4. Deployment and Hosting

Knowing how to deploy applications is critical. Explore:

Cloud Platforms: Get to know services like AWS, Heroku, or DigitalOcean for hosting.

Containerization: Learn about Docker to streamline application deployment.

Best Online Training & Placement programs, which offer comprehensive training and job placement support, can make it easier to learn these skills and advance your career.

Strategies for Success

Step 1: Start with the Fundamentals

Begin your journey by mastering HTML, CSS, and JavaScript. Utilize online platforms like Codecademy, freeCodeCamp, or MDN Web Docs to build a strong foundation.

Step 2: Build Real Projects

Apply what you learn by creating real-world projects. Start simple with personal websites or small applications. This hands-on experience will solidify your understanding.

Step 3: Explore Front-End Frameworks

Once you’re comfortable with the basics, dive into a front-end framework like React or Angular. These tools will enhance your development speed and efficiency.

Step 4: Learn Back-End Technologies

Choose a back-end language and framework that interests you. Node.js with Express is a great choice for JavaScript fans. Build RESTful APIs and connect them to a database.
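To make "build RESTful APIs and connect them to a database" concrete, here is a small sketch. It deliberately swaps the Node.js and Express stack mentioned above for Python's standard library so it runs without installing anything. The `tasks` table, the `/tasks` route, and the `handle` function are all invented for illustration, and the handler is a plain function rather than a real HTTP server.

```python
import json
import sqlite3
from typing import Optional, Tuple


def create_db() -> sqlite3.Connection:
    """Create an in-memory SQLite database with a single example table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tasks (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")
    return conn


def handle(
    conn: sqlite3.Connection, method: str, path: str, body: Optional[str] = None
) -> Tuple[int, str]:
    """Map an HTTP-style request to a database operation.

    Returns (status code, JSON response body). Assumes `body`, when given,
    is a JSON object; a real API would also handle malformed input.
    """
    if method == "POST" and path == "/tasks":
        title = json.loads(body or "{}").get("title")
        if not title:
            return 400, json.dumps({"error": "title is required"})
        # Parameterized query: the driver escapes the value for us.
        cur = conn.execute("INSERT INTO tasks (title) VALUES (?)", (title,))
        conn.commit()
        return 201, json.dumps({"id": cur.lastrowid, "title": title})
    if method == "GET" and path == "/tasks":
        rows = conn.execute("SELECT id, title FROM tasks ORDER BY id").fetchall()
        return 200, json.dumps([{"id": r[0], "title": r[1]} for r in rows])
    return 404, json.dumps({"error": "not found"})


conn = create_db()
created = handle(conn, "POST", "/tasks", '{"title": "write tests"}')
listing = handle(conn, "GET", "/tasks")
```

The same shape carries over to Express or any other framework: a route matches the method and path, validates the input, runs a parameterized query, and returns a status code with a JSON body.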

Step 5: Master Git and Version Control

Understand the ins and outs of Git. Practice branching, merging, and collaboration on platforms like GitHub to enhance your workflow.
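Branching and merging are easier to reason about once you picture history as a graph of commits that point to their parents. The toy model below is a simplification for learning, not how Git is implemented: the commit names are made up, and `merge_base` simply returns the nearest shared ancestor, which matches what Git reports for simple histories like this one.

```python
from collections import deque
from typing import Dict, List, Optional, Set

# Each commit maps to its parent commits; a merge commit would have two.
Graph = Dict[str, List[str]]


def ancestors(graph: Graph, commit: str) -> Set[str]:
    """Return `commit` plus every commit reachable through parent links."""
    seen: Set[str] = set()
    stack = [commit]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph[current])
    return seen


def merge_base(graph: Graph, a: str, b: str) -> Optional[str]:
    """Walk back from `b` and return the first commit also reachable from `a`."""
    reachable_from_a = ancestors(graph, a)
    queue = deque([b])
    visited: Set[str] = set()
    while queue:
        current = queue.popleft()
        if current in reachable_from_a:
            return current
        if current not in visited:
            visited.add(current)
            queue.extend(graph[current])
    return None


history: Graph = {
    "c1": [],
    "c2": ["c1"],
    "c3": ["c2"],  # main continues after the branch point
    "f1": ["c2"],  # feature branch starts from c2
    "f2": ["f1"],
}

print(merge_base(history, "c3", "f2"))  # c2
```

When you merge the feature branch into main, Git compares both tips against this shared starting point, which is why conflicts only arise for lines changed on both sides since `c2`.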

Step 6: Dive into Advanced Topics

As you progress, explore advanced topics such as:

Authentication and Authorization: Learn how to secure your applications.

Performance Optimization: Improve the speed and efficiency of your applications.

Security Best Practices: Protect your applications from common vulnerabilities.
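As a small taste of the authentication topic, the sketch below stores a salted password hash instead of the password itself and compares hashes in constant time. It uses only Python's standard library; the iteration count is an illustrative assumption, not a recommendation, and a production system would more likely rely on a maintained authentication library.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 200_000  # illustrative work factor (assumption); tune for your hardware


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) for storage; the plain password is never stored."""
    salt = os.urandom(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))                   # False
```

The per-user salt means two people with the same password get different stored values, and the slow key-derivation step makes bulk guessing expensive if the database leaks.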

Building Your Portfolio

Create a professional portfolio that showcases your skills and projects. Include:

Project Descriptions: Highlight the technologies used and your role in each project.

Blog: Share your learning journey or insights on technical topics.

Resume: Keep it updated to reflect your skills and experiences.

Networking and Job Opportunities

Engage with the tech community through online forums, meetups, and networking events. Building connections can lead to job opportunities, mentorship, and collaborations.

Conclusion

Unlocking the secrets to full stack web development is a rewarding journey that requires commitment and continuous learning. By mastering the essential skills and following the strategies outlined in this blog, you’ll be well on your way to becoming a successful full stack developer. Embrace the challenges, keep coding, and enjoy the adventure.


Learn Salesforce (Admin + Developer) with LWC Live Project

Introduction: Why Learn Salesforce in 2025?

Salesforce isn't just a buzzword—it's the backbone of CRM systems powering businesses across industries. Whether you’re eyeing a career switch, aiming to boost your tech resume, or already in the ecosystem, learning Salesforce (Admin + Developer) with Lightning Web Components (LWC) and Live Project experience can fast-track your growth.

In today’s digital-first world, companies want Salesforce pros who don’t just know theory—they can build, automate, and solve real business problems. That’s why this course—Learn Salesforce (Admin + Developer) with LWC Live Project—is gaining attention. It offers a perfect mix of foundational concepts, hands-on development, and real-world exposure.

What You’ll Learn in This Course (And Why It Matters)

Let’s break down what makes this course stand out from the crowd.

✅ Salesforce Admin Fundamentals

Before diving into development, you'll master the core essentials:

Creating custom objects, fields, and relationships

Automating processes with Workflow Rules and Flow

Building intuitive Lightning Pages

Managing users, roles, and security

Generating dashboards and reports for decision-making

These are the exact skills Salesforce Admins use daily to help businesses streamline their operations.

✅ Developer Skills You Can Use from Day One

This course bridges the gap between theory and practice. You’ll learn:

Apex programming (triggers, classes, SOQL, and more)

Visualforce basics

Building Lightning Components

Advanced LWC (Lightning Web Components) development

Unlike typical developer tutorials, you won’t just follow along. You’ll build actual components, debug real scenarios, and write scalable code that works.

✅ Lightning Web Components: The Future of Salesforce

If you want to stay relevant, LWC is a must-learn. This course helps you:

Understand how LWC works with core Salesforce data

Build reusable components

Interact with Apex backend using modern JavaScript

Implement events, component communication, and conditional rendering

These are hot skills employers are actively searching for—especially in 2025 and beyond.

The Power of Learning with a Live Project

You can’t call yourself job-ready without hands-on practice.

That’s why this course includes a Live Project, simulating how real-world businesses use Salesforce. You’ll:

Gather requirements like a real consultant

Build a working CRM or service app

Use Git, deployment tools, and testing strategies

Present your work just like in a client meeting

This turns your learning from passive watching to active doing, and makes your resume stand out.

Who Is This Course For?

Here’s the great part: this course is beginner-friendly but powerful enough for intermediates looking to level up.

It’s for:

Freshers or career changers wanting to break into tech

Working professionals looking to pivot into Salesforce roles

Aspiring developers who want to learn Apex + LWC

Admins who want to become full-stack Salesforce professionals

No coding background? You’ll still be able to follow along thanks to the step-by-step breakdowns and real-time guidance.

Why Salesforce Skills Are in Demand in 2025

A few fast facts to show just how hot the Salesforce job market is:

Over 150,000 new job openings expected globally in the Salesforce ecosystem this year

Salesforce Admins and Developers earn $80k–$140k+ USD annually

LWC and Apex skills are now required by 7 out of 10 Salesforce job listings

Remote roles and contract gigs are increasing, giving you flexible career options

With Salesforce constantly evolving, this course gives you an up-to-date skillset that companies are actively hiring for.


The Benefits You’ll Walk Away With

Let’s be honest. You’re not here just to learn. You’re here to get results. This course gives you:

🔹 Real Confidence

You’ll go from "I think I get it" to "I know how to build this."

🔹 Portfolio-Ready Projects

Walk away with a live Salesforce app you can show recruiters and hiring managers.

🔹 Practical Experience

Understand how real clients work, think, and change requirements on the fly.

🔹 Certification Preparation

The course aligns with content from:

Salesforce Certified Administrator

Salesforce Platform Developer I

So you can study with purpose and even prep for exams on the side.

🔹 Interview Readiness

You’ll learn to speak confidently about your live project, troubleshoot in real-time, and answer technical questions.

How This Course Is Different from Others

There are tons of Salesforce courses out there. Here’s what makes this one stand out:

Feature | Other Courses | This Course

LWC Training | ❌ Often missing or outdated | ✅ Fully integrated

Live Project | ❌ Simulated only | ✅ Real-world use case