#saml and oauth2


SAML and OAuth2

SAML and OAuth 2.0 - Same same but different! Explore the key differences and learn how to implement these authentication and authorization protocols in Drupal for enhanced security and user experience.


What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a Continuous Delivery (CD) tool that has become popular for deploying applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

More user-oriented documentation is available for additional features. If you want to upgrade your Argo CD installation, refer to the upgrade guide. Developer-oriented documentation is available for those interested in building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Plain directory of YAML/JSON manifests

Any custom configuration management tool configured as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and provides ways to sync the live state back to the desired target state, either automatically or manually. Any modifications made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
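The comparison described above can be illustrated with a small Python sketch. It is not Argo CD's actual diffing logic: the function names, the resource key format, and the plain dictionary comparison are assumptions made for illustration only.

```python
from typing import Any, Dict, List

Manifest = Dict[str, Any]


def resource_key(manifest: Manifest) -> str:
    """Identify a resource by kind, namespace, and name (hypothetical key format)."""
    meta = manifest.get("metadata", {})
    return f'{manifest.get("kind")}/{meta.get("namespace", "default")}/{meta.get("name")}'


def sync_status(target: List[Manifest], live: List[Manifest]) -> Dict[str, Any]:
    """Compare target manifests (from Git) with live manifests (from the cluster)."""
    target_by_key = {resource_key(m): m for m in target}
    live_by_key = {resource_key(m): m for m in live}

    # Resources defined in Git but absent from the cluster.
    missing = sorted(k for k in target_by_key if k not in live_by_key)
    # Resources running in the cluster but not defined in Git.
    extra = sorted(k for k in live_by_key if k not in target_by_key)
    # Resources present in both places whose content differs.
    changed = sorted(
        k for k in target_by_key
        if k in live_by_key and target_by_key[k] != live_by_key[k]
    )

    status = "Synced" if not (missing or extra or changed) else "OutOfSync"
    return {"status": status, "missing": missing, "extra": extra, "changed": changed}
```

With a target Deployment that declares three replicas and a live one running two, `sync_status` reports `OutOfSync` and lists the Deployment under `changed`. A real controller would also have to ignore fields that the cluster fills in on its own.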

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its duties include the following:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repository). It detects the OutOfSync application state and can optionally take corrective action. It is responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

Integration of SSO (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

RBAC and multi-tenancy authorization policies

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Applications can be synced manually or automatically to their desired state.

Web UI that provides a real-time view of application activity

CLI for CI integration and automation

Integration of webhooks (GitHub, BitBucket, GitLab)

Access tokens for automation

Hooks for PreSync, Sync, and PostSync to facilitate intricate application rollouts (such as canary and blue/green upgrades)

Application event and API call audit trails

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com


Optimizing Digital Identity with the Right CIAM Partner

In today’s hyper-connected digital economy, ensuring secure and seamless user access is more than a technical requirement—it’s a business necessity. Enterprises are investing in Customer Identity and Access Management (CIAM) solutions to provide personalized experiences, meet regulatory obligations, and safeguard customer data. However, the marketplace is flooded with CIAM vendors, each claiming to offer the most innovative platform. That’s why understanding the Best Practices for Evaluating CIAM Providers is critical to making the right decision.

The right CIAM solution should do more than just handle logins—it should empower your business to scale, secure digital touchpoints, and improve user experience. This blog offers a detailed breakdown of the Best Practices for Evaluating CIAM Providers, helping businesses navigate complexity and choose strategically.

Start with Business Objectives and Use Cases

Before comparing technical features or pricing, businesses must align their identity strategy with core objectives. This is one of the essential Best Practices for Evaluating CIAM Providers. Determine what success looks like in your CIAM initiative.

Consider the following:

What are the key identity touchpoints across web, mobile, and APIs?

Are you serving B2C, B2B, or B2E users?

Do you need support for multi-brand, multi-region, or multi-language experiences?

What’s your roadmap for expansion, integrations, and identity-driven personalization?

Clear articulation of goals streamlines vendor selection and minimizes mismatches later.

Security and Compliance Must Be Non-Negotiable

Security is the foundation of CIAM. Among the Best Practices for Evaluating CIAM Providers, ensuring enterprise-grade security capabilities is a top priority. Threats such as credential stuffing, phishing, and account takeovers are on the rise.

Look for a provider that offers:

Multi-factor authentication (MFA)

Passwordless authentication support

Risk-based access controls

Advanced threat intelligence

End-to-end encryption

Role-based access management

Additionally, regulatory compliance cannot be an afterthought. Ensure the provider helps you comply with:

GDPR

CCPA

HIPAA

SOC 2

ISO/IEC 27001

Choose a provider with a proven record in privacy, data residency, and audit trails to ensure global compliance.

Scalability and Uptime Assurance

One of the core Best Practices for Evaluating CIAM Providers is evaluating scalability. As your user base grows, so should your identity platform—without downtime or degradation.

Evaluate providers on:

Cloud-native infrastructure

Global data centers

CDN support

Horizontal scaling

High availability (99.99% uptime SLA)

The platform should support rapid onboarding of millions of users, seasonal peaks, and business expansions without re-architecture.
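To make an uptime figure such as 99.99% concrete, it helps to convert the percentage into a downtime budget. The helper below is a minimal arithmetic sketch. The function name and the 365-day default are assumptions, and individual vendors may define their measurement windows differently.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 365.0) -> float:
    """Return the downtime, in minutes, that an uptime SLA permits over a period."""
    if not 0.0 <= sla_percent <= 100.0:
        raise ValueError("sla_percent must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1.0 - sla_percent / 100.0)
```

Under these assumptions, a 99.99% SLA allows roughly 52.6 minutes of downtime per year, or about 4.3 minutes in a 30-day month. That is a useful baseline when comparing vendor commitments.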

Integration Capabilities Across the Ecosystem

Your CIAM platform must integrate with your digital infrastructure. This is among the most strategic Best Practices for Evaluating CIAM Providers, especially for enterprise environments.

Evaluate whether the CIAM provider offers:

RESTful APIs and SDKs

Webhooks and event triggers

Federation protocols (OAuth2, SAML, OpenID Connect)

Pre-built connectors to tools like Salesforce, Adobe, and Microsoft Azure

Compatibility with CI/CD pipelines

Robust integrations future-proof your identity management and accelerate value realization across departments.

Delivering a Seamless User Experience

Modern consumers expect frictionless, secure interactions. One of the most user-focused Best Practices for Evaluating CIAM Providers is ensuring that the platform can deliver intuitive identity journeys.

Key UX features to evaluate:

Social logins (Google, Facebook, Apple ID)

Branded and customizable login screens

Progressive profiling

Self-service account recovery

Passwordless options (biometric, magic link, OTP)

A positive user experience reduces abandonment, improves engagement, and strengthens brand loyalty.

Privacy Management and Consent Control

Today’s users are privacy-conscious and demand control over their data. Among the Best Practices for Evaluating CIAM Providers, a robust privacy management engine is a must.

Ensure your CIAM solution offers:

Real-time consent capture

Preference management dashboards

Data minimization tools

Support for data portability and deletion

Legal versioning of consent forms

Automated compliance workflows

These capabilities are crucial not only for compliance but for maintaining customer trust.

Customization and Workflow Orchestration

Not all businesses are the same—and neither are their CIAM needs. As part of the Best Practices for Evaluating CIAM Providers, check if the platform offers customization without heavy development work.

Evaluate flexibility in:

Theming and branding

Custom attributes and registration fields

Workflow design (drag-and-drop or code-based)

Event hooks and triggers

Conditional logic (e.g., location-based MFA)

The provider should allow you to modify onboarding flows, authentication rules, and profile enrichment strategies without vendor lock-in.
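As an illustration of the conditional logic mentioned above, here is a minimal Python sketch of a location-based MFA rule. The `LoginContext` fields and the `requires_mfa` function are hypothetical and do not correspond to any particular CIAM vendor's API.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class LoginContext:
    """Hypothetical attributes a CIAM platform might expose at login time."""
    user_id: str
    country: str        # ISO country code resolved from the request
    known_device: bool  # True if this device has been seen for the user before


def requires_mfa(ctx: LoginContext, trusted_countries: FrozenSet[str]) -> bool:
    """Step up to MFA when the login comes from an untrusted country or an unknown device."""
    return ctx.country not in trusted_countries or not ctx.known_device
```

When evaluating a provider, the question is whether a rule like this can be expressed in its workflow designer or hook system without custom development.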

Analytics and Reporting

Understanding how users interact with your system is vital for improving both security and experience. A key element in the Best Practices for Evaluating CIAM Providers is the presence of embedded analytics tools.

Seek CIAM platforms that provide:

Real-time dashboards

Login and registration funnel analysis

Session intelligence

Anomaly detection and alerting

Export and API access for external BI tools

Data-driven insights can optimize journeys, identify threats, and uncover opportunities for personalization.

Vendor Support and Community Ecosystem

Strong vendor support can be the difference between a successful rollout and a failed project. Among the critical Best Practices for Evaluating CIAM Providers is reviewing the quality of vendor support.

Look for:

24/7 global support

Dedicated success managers

Extensive documentation and API guides

Developer forums and Slack communities

Transparent product roadmaps

A vendor invested in your success will accelerate deployment and simplify scaling.

Transparent Pricing and Clear ROI

Pricing models for CIAM vary—by MAU (monthly active users), API calls, feature tiers, or enterprise licenses. One of the most practical Best Practices for Evaluating CIAM Providers is clarity in cost structure.

Before signing:

Understand the pricing model

Identify additional or hidden fees

Calculate cost per user vs. benefit

Forecast scale-related charges

Ask for detailed usage analytics

The right CIAM partner should provide ROI in reduced support tickets, improved user retention, and faster onboarding.

Check Real-World Performance and Customer Stories

Proof matters. The final among the Best Practices for Evaluating CIAM Providers is validation through case studies and references.

Request:

Industry-specific success stories

Performance benchmarks

Case studies on global scalability

Testimonials from enterprise clients

Implementation timelines

This ensures you’re choosing a provider with experience in real-world, complex environments—not just PowerPoint capabilities.

Read Full Article : https://bizinfopro.com/webinars/best-practices-for-evaluating-ciam-providers/

About Us : BizInfoPro is a modern business publication designed to inform, inspire, and empower decision-makers, entrepreneurs, and forward-thinking professionals. With a focus on practical insights and in‑depth analysis, it explores the evolving landscape of global business—covering emerging markets, industry innovations, strategic growth opportunities, and actionable content that supports smarter decision‑making.


Unlocking the Power of Keycloak for Identity and Access Management (IAM)

In the ever-evolving digital landscape, managing identity and access securely is paramount. Businesses need a robust, scalable, and flexible solution to safeguard their applications and services. Enter Keycloak, an open-source Identity and Access Management (IAM) tool that has emerged as a game-changer for organizations worldwide.

What is Keycloak?

Keycloak is an open-source IAM solution developed by Red Hat, designed to provide secure authentication and authorization for applications. It simplifies user management while supporting modern security protocols such as OAuth2, OpenID Connect, and SAML. With its rich feature set and community support, Keycloak is a preferred choice for developers and administrators alike.

Key Features of Keycloak

1. Single Sign-On (SSO)

Keycloak enables users to log in once and gain access to multiple applications without re-authenticating. This enhances user experience and reduces friction.

2. Social Login Integration

With out-of-the-box support for social login providers like Google, Facebook, and Twitter, Keycloak makes it easy to offer social authentication for applications.

3. Fine-Grained Access Control

Keycloak allows administrators to define roles, permissions, and policies to control access to resources. This ensures that users only access what they’re authorized to.
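On the application side, a role check might look like the sketch below. It assumes that the access token is a JWT and that realm roles appear under a `realm_access.roles` claim. The helper names are made up for illustration. The payload is decoded without verifying the signature, which is acceptable only for demonstration: a real application must validate the token first.

```python
import base64
import json
from typing import Any, Dict


def decode_jwt_payload(token: str) -> Dict[str, Any]:
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    payload_b64 = token.split(".")[1]
    # base64url segments in JWTs omit padding, so restore it before decoding.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def has_realm_role(claims: Dict[str, Any], role: str) -> bool:
    """Check for a role in the assumed `realm_access.roles` claim."""
    return role in claims.get("realm_access", {}).get("roles", [])
```

A handler could then call `has_realm_role(decode_jwt_payload(token), "admin")` before serving an administrative resource, after the token has passed signature and expiry validation.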

4. User Federation

Keycloak supports integrating with existing user directories like LDAP and Active Directory. This simplifies the adoption process by leveraging existing user bases.

5. Extensibility

Its plugin-based architecture allows customization to fit unique business requirements. From custom themes to additional authentication mechanisms, the possibilities are vast.

6. Multi-Factor Authentication (MFA)

For enhanced security, Keycloak supports MFA, adding an extra layer of protection to user accounts.

Why Choose Keycloak for IAM?

Open Source Advantage: Being open source, Keycloak eliminates vendor lock-in and offers complete transparency.

Scalability: It handles millions of users and adapts to growing business needs.

Developer-Friendly: Its RESTful APIs and SDKs streamline integration into existing applications.

Active Community: Keycloak’s active community ensures continuous updates, bug fixes, and feature enhancements.

Real-World Use Cases

E-Commerce Platforms: Managing customer accounts, providing social logins, and enabling secure payments.

Enterprise Applications: Centralized authentication for internal tools and systems.

Educational Portals: Facilitating SSO for students and staff across multiple applications.

Cloud and DevOps: Securing APIs and managing developer access in CI/CD pipelines.

Getting Started with Keycloak

Installation: Keycloak can be deployed on-premises or in the cloud. It supports Docker and Kubernetes for easy containerized deployment.

Configuration: After installation, configure realms, clients, roles, and users to set up your IAM environment.

Integration: Use Keycloak’s adapters or APIs to integrate it with your applications.

A Glimpse into the Future

As organizations embrace digital transformation, IAM solutions like Keycloak are becoming indispensable. With its commitment to security, scalability, and community-driven innovation, Keycloak is set to remain at the forefront of IAM solutions.

Final Thoughts

Keycloak is not just an IAM tool; it’s a strategic asset that empowers businesses to secure their digital ecosystem. By simplifying user authentication and access management, it helps organizations focus on what truly matters: delivering value to their customers.

So, whether you’re a developer building a new application or an administrator managing enterprise security, Keycloak is a tool worth exploring. Its features, flexibility, and community support make it a standout choice in the crowded IAM space.

For more information visit : https://www.hawkstack.com/


API Integration Services

Jellyfish Technologies is a digital product and software development company with more than 150 experts and over 13 years of experience. We have completed over 4,000 web, mobile, and software projects.

We provide custom API integration services for iOS, Android, and web applications, using a variety of authentication methods, including OAuth 1.0, OAuth 2.0, JSON Web Token (JWT), and SAML. Our focus is on modernization and on building efficient digital ecosystems. From consulting to development, Jellyfish Technologies offers full-scale API development services to drive your project’s success.


Innovating on Authentication Standards

By George Fletcher and Lovlesh Chhabra

When Yahoo and AOL came together a year ago as a part of the new Verizon subsidiary Oath, we took on the challenge of unifying their identity platforms based on current identity standards. Identity standards have been a critical part of the Internet ecosystem over the last 20+ years. From single-sign-on and identity federation with SAML; to the newer identity protocols including OpenID Connect, OAuth2, JOSE, and SCIM (to name a few); to the explorations of “self-sovereign identity” based on distributed ledger technologies; standards have played a key role in providing a secure identity layer for the Internet.

As we navigated this journey, we ran across a number of different use cases where there was either no standard or no best practice available for our varied and complicated needs. Instead of creating entirely new standards to solve our problems, we found it more productive to use existing standards in new ways.

One such use case arose when we realized that we needed to migrate the identity stored in mobile apps from the legacy identity provider to the new Oath identity platform. For most browser (mobile or desktop) use cases, this doesn’t present a huge problem; some DNS magic and HTTP redirects and the user will sign in at the correct endpoint. Also it’s expected for users accessing services via their browser to have to sign in now and then.

However, for mobile applications it's a completely different story. The normal user pattern for mobile apps is for the user to sign in (via OpenID Connect or OAuth2) and for the app to then be issued long-lived tokens (well, the refresh token is long lived) and the user never has to sign in again on the device (entering a password on the device is NOT a good experience for the user).

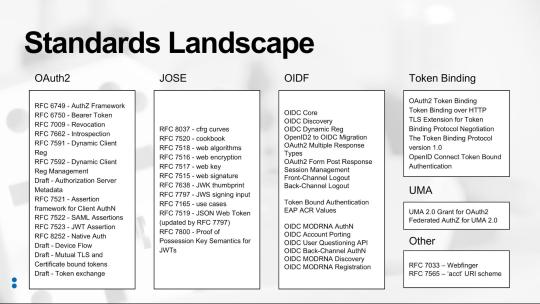

So the issue is, how do we allow the mobile app to move from one identity provider to another without the user having to re-enter their credentials? The solution came from researching what standards currently exist that might address this use case (see figure “Standards Landscape” below) and finding the OAuth 2.0 Token Exchange draft specification (https://tools.ietf.org/html/draft-ietf-oauth-token-exchange-13).

The Token Exchange draft allows for a given token to be exchanged for new tokens in a different domain. This could be used to manage the “audience” of a token that needs to be passed among a set of microservices to accomplish a task on behalf of the user, as an example. For the use case at hand, we created a specific implementation of the Token Exchange specification (a profile) to allow the refresh token from the originating Identity Provider (IDP) to be exchanged for new tokens from the consolidated IDP. By profiling this draft standard we were able to create a much better user experience for our consumers and do so without inventing proprietary mechanisms.
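For readers unfamiliar with the wire format, a token exchange request is an ordinary form-encoded POST to the token endpoint. The sketch below builds such a body for the refresh-token migration described above. The `grant_type` and `subject_token_type` URNs come from the Token Exchange specification. The function name, the choice of parameters, and the example values are assumptions, not the profile the authors actually deployed.

```python
from urllib.parse import parse_qs, urlencode

TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
REFRESH_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:refresh_token"


def build_token_exchange_body(legacy_refresh_token: str, client_id: str, audience: str) -> str:
    """Build the form-encoded body asking the new IDP to exchange a legacy refresh token."""
    params = {
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": legacy_refresh_token,   # token issued by the originating IDP
        "subject_token_type": REFRESH_TOKEN_TYPE,
        "audience": audience,                    # hypothetical target service
        "client_id": client_id,
    }
    return urlencode(params)


if __name__ == "__main__":
    body = build_token_exchange_body("legacy-rt-123", "mail-app", "https://api.example.com")
    print(parse_qs(body)["grant_type"][0])
```

The mobile app would send this body to the consolidated IDP's token endpoint and receive new tokens in response, so the user never sees a sign-in prompt.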

During this identity technical consolidation we also had to address how to support sharing signed-in users across mobile applications written by the same company (technically, signed with the same vendor signing key). Specifically, how can a user who is signed in to Yahoo Mail avoid signing in again when they start using the Yahoo Sports app? The current best practice for this is captured in OAuth 2.0 for Native Apps (RFC 8252). However, the flow described by this specification requires that the mobile device system browser hold the user’s authenticated sessions. This has some drawbacks such as users clearing their cookies, or using private browsing mode, or even worse, requiring the IDPs to support multiple users signed in at the same time (not something most IDPs support).

While RFC 8252 provides a mechanism for single-sign-on (SSO) across mobile apps provided by any vendor, we wanted a better solution for apps provided by Oath. So we looked at how we could enable mobile apps signed by the vendor to share the signed-in state in a more “back channel” way. One important fact is that mobile apps cryptographically signed by the same vendor can securely share data via the device keychain on iOS and Account Manager on Android.

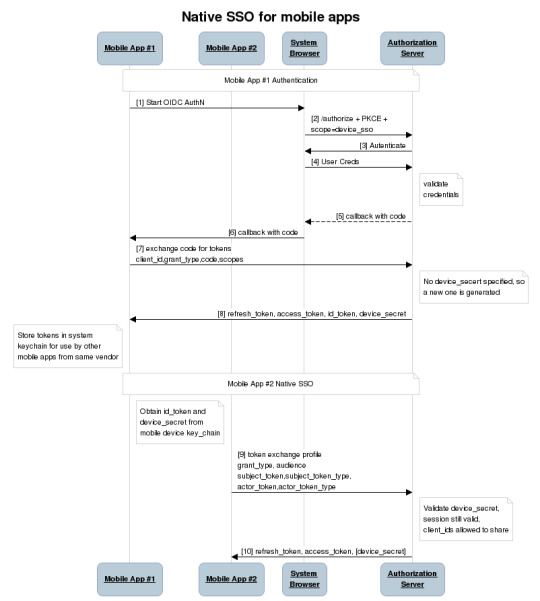

Using this as a starting point we defined a new OAuth2 scope, device_sso, whose purpose is to require the Authorization Server (AS) to return a unique “secret” assigned to that specific device. The precedent for using a scope to define specification behavior is OpenID Connect itself, which defines the “openid” scope as the trigger for the OpenID Provider (an OAuth2 AS) to implement the OpenID Connect specification. The device_secret is returned to a mobile app when the OAuth2 code is exchanged for tokens. The mobile app then stores it in the device keychain, along with the id_token identifying the user who signed in.

At this point, a second mobile app signed by the same vendor can look in the keychain and find the id_token, ask the user if they want to use that identity with the new app, and then use a profile of the token exchange spec to obtain tokens for the second mobile app based on the id_token and the device_secret. The full sequence of steps looks like this:
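The steps described above can be sketched roughly in Python. This is not the authors' sequence diagram or their actual profile. The `SharedKeychain` class stands in for the iOS keychain or Android Account Manager. Carrying the device_secret in the `actor_token` parameter, and the `urn:example:...` token type, are assumptions made purely for illustration.

```python
from typing import Dict, Optional
from urllib.parse import urlencode

TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ID_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:id_token"
# Hypothetical identifier: the article does not name a token type for device_secret.
DEVICE_SECRET_TYPE = "urn:example:token-type:device-secret"


class SharedKeychain:
    """Stand-in for storage shared by apps signed with the same vendor key."""

    def __init__(self) -> None:
        self._items: Dict[str, str] = {}

    def store(self, key: str, value: str) -> None:
        self._items[key] = value

    def load(self, key: str) -> Optional[str]:
        return self._items.get(key)


def first_app_sign_in(keychain: SharedKeychain, token_response: Dict[str, str]) -> None:
    """App 1: after the code-for-token exchange (scope included device_sso), persist shared state."""
    keychain.store("id_token", token_response["id_token"])
    keychain.store("device_secret", token_response["device_secret"])


def second_app_exchange_body(
    keychain: SharedKeychain, client_id: str, user_consented: bool
) -> Optional[str]:
    """App 2: build a token exchange request from the shared id_token and device_secret."""
    id_token = keychain.load("id_token")
    device_secret = keychain.load("device_secret")
    if not user_consented or id_token is None or device_secret is None:
        return None  # fall back to a normal interactive sign-in
    return urlencode({
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": id_token,
        "subject_token_type": ID_TOKEN_TYPE,
        "actor_token": device_secret,           # assumed parameter mapping
        "actor_token_type": DEVICE_SECRET_TYPE,
        "client_id": client_id,
    })
```

The second app only proceeds when the user agrees to reuse the stored identity. Otherwise it returns `None` and the app would present a regular sign-in.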

As a result of our identity consolidation work over the past year, we derived a set of principles identity architects should find useful for addressing use cases that don’t have a known specification or best practice. Moreover, these are applicable in many contexts outside of identity standards:

Spend time researching the existing set of standards and draft standards. As the diagram shows, there are a lot of standards out there already, so understanding them is critical.

Don’t invent something new if you can just profile or combine already existing specifications.

Make sure you understand the spirit and intent of the existing specifications.

For those cases where an extension is required, make sure to extend the specification based on its spirit and intent.

Ask the community for clarity regarding any existing specification or draft.

Contribute back to the community via blog posts, best practice documents, or a new specification.

As we learned during the consolidation of our Yahoo and AOL identity platforms, and as demonstrated in our examples, there is no need to resort to proprietary solutions for use cases that at first look do not appear to have a standards-based solution. Instead, it’s much better to follow these principles, avoid the NIH (not-invented-here) syndrome, and invest the time to build solutions on standards.


CVE-2022-25262 PoC + vulnerability details for CVE-2022-25262 | JetBrains Hub single-click SAML...

CVE-2022-25262 PoC + vulnerability details for CVE-2022-25262 | JetBrains Hub single-click SAML response takeover. https://github.com/yuriisanin/CVE-2022-25262 #redteam #hackers #exploit #inject #cve #poc

JetBrains Hub single-click SAML response takeover (CVE-2022-25262) - YouTube OAuth2 authorization code takeover using Slack Integration service + usage of authorization code pool during OAuth to SAML exchange leading to SAML response ...


DC Engineer Consultant

Company: Hexaware

Experience: 10 to 14

Location: India, Chennai

Ref: 29035741

Summary: Job Description: Experience in Azure AD and AD B2C template design, creation, and custom template modification; LDAP and Kerberos; SAML, OAuth2, OpenID Connect; experience in PowerShell and .NET/C#; architect, design, and implement solutions….


Purpose of Pivotal Cloud Foundry

Cloud platforms let anyone deploy network apps or services and make them available to the world in a few minutes. When an app becomes popular, the cloud easily scales it to handle more traffic, replacing with a few keystrokes the build-out and migration efforts that once took months. Cloud platforms represent the next step in the evolution of IT, enabling you to focus exclusively on your applications and data without worrying about the underlying infrastructure.

Not all cloud platforms are created equal. Some have limited language and framework support, lack key app services, or restrict deployment to a single cloud. Cloud Foundry (CF) has become the industry standard. It is an open-source platform that you can deploy to run your apps on your own computing infrastructure, or deploy on an IaaS like AWS, vSphere, or OpenStack. You can also use a PaaS deployed by a commercial CF cloud provider. A broad community contributes to and supports Cloud Foundry. The platform's openness and extensibility prevent its users from being locked into a single framework, set of app services, or cloud.

Cloud Foundry is ideal for anyone interested in removing the cost and complexity of configuring infrastructure for their apps. Developers can deploy their apps to Cloud Foundry using their existing tools and with zero modification to their code.

How Cloud Foundry Works

To flexibly serve and scale apps online, Cloud Foundry has subsystems that perform specialized functions. Here's how some of those main subsystems work.

How a Cloud Balances Its Load

Clouds balance their processing loads over multiple machines, optimizing for efficiency and resilience against point failure. A Cloud Foundry installation accomplishes this at three levels:

BOSH creates and deploys virtual machines (VMs) on top of a physical computing infrastructure, and deploys and runs Cloud Foundry on top of this cloud. To configure the deployment, BOSH follows a manifest document.

The CF Cloud Controller runs the apps and other processes on the cloud's VMs, balancing demand and managing app lifecycles.

The router routes incoming traffic from the world to the VMs that are running the apps that the traffic demands, usually working with a customer-provided load balancer.

How Apps Run Anywhere

Cloud Foundry designates two types of VMs: the component VMs that constitute the platform's infrastructure, and the host VMs that host apps for the outside world. Within CF, the Diego system distributes the hosted app load over all of the host VMs and keeps it running and balanced through demand surges, outages, and other changes. Diego accomplishes this through an auction algorithm.
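To give a feel for auction-style placement, here is a deliberately simplified Python sketch. It is not Diego's actual algorithm or scoring. The `Cell` class, the memory-only bid, and the tie-breaking rule are assumptions chosen to keep the example short.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cell:
    """A host VM that can run app instances (simplified model)."""
    name: str
    free_memory_mb: int
    instances: int = 0


def place_instance(cells: List[Cell], required_mb: int) -> Optional[str]:
    """Run one auction round: every cell with enough memory bids, the best bid wins."""
    # Each bid is the memory the cell would have left after hosting the instance.
    bids = [(c.free_memory_mb - required_mb, c) for c in cells if c.free_memory_mb >= required_mb]
    if not bids:
        return None  # no cell can host the instance
    # The cell with the most headroom wins; ties go to the first cell in the list.
    _, winner = max(bids, key=lambda bid: bid[0])
    winner.free_memory_mb -= required_mb
    winner.instances += 1
    return winner.name
```

Calling `place_instance` repeatedly spreads instances toward the least-loaded cells, which is the balancing effect the paragraph above describes. A production scheduler would weigh more factors, such as disk space and spreading instances of the same app across zones.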

To meet demand, multiple host VMs run duplicate instances of the same app. This means that apps must be portable. Cloud Foundry distributes app source code to VMs with everything the VMs need to compile and run the apps locally. This includes the OS stack that the app runs on, and a buildpack containing all languages, libraries, and services that the app uses. Before sending an app to a VM, the Cloud Controller stages it for delivery by combining stack, buildpack, and source code into a droplet that the VM can unpack, compile, and run. For simple, standalone apps with no dynamic pointers, the droplet can contain a pre-compiled executable instead of source code, languages, and libraries.

How CF Organizes Users and Workspaces

CF manages user accounts through two User Authentication and Authorization (UAA) servers, which support access control as OAuth2 services and can store user information internally, or connect to external user stores through LDAP or SAML.

One UAA server grants access to BOSH and holds accounts for the CF operators who deploy runtimes, services, and other software onto the BOSH layer directly. The other UAA server controls access to the Cloud Controller and determines who can tell it to do what. The Cloud Controller UAA defines different user roles, such as admin, developer, or auditor, and grants them different sets of privileges to run CF commands. The Cloud Controller UAA also scopes the roles to separate, compartmentalized Orgs and Spaces within an installation, to manage and track use.

Where CF Stores Resources

Cloud Foundry uses the git system on GitHub to version-control source code, buildpacks, documentation, and other resources. Developers on the platform also use GitHub for their own apps, custom configurations, and other resources. To store large binary files, such as droplets, CF maintains an internal or external blobstore. CF uses MySQL and Consul to store and share temporary information, such as internal component states.

How CF Components Communicate

Cloud Foundry components communicate with each other by posting messages internally using the HTTP and HTTPS protocols, and by sending NATS messages to each other directly.

How to Monitor and Analyze a CF Deployment

system logs are generated by Cloud Foundry from Cloud Foundry components and app logs from hosted apps

As CF runs, its component and host VMs generate logs and metrics. Cloud Foundry apps also typically generate logs. The Loggregator system aggregates the component metrics and app logs into a structured, usable form, the Firehose. You can use all of the output of the Firehose, or direct the output to specific uses, such as monitoring system internals, triggering alerts, or analyzing user behavior, by applying nozzles.
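Conceptually, a nozzle is a consumer that selects the part of the Firehose stream it cares about. The Python sketch below models that idea with a generator. The envelope fields (`type`, `source`, `message`) and the `nozzle` function are hypothetical, and this is not the real Loggregator client API.

```python
from typing import Callable, Dict, Iterable, Iterator

Envelope = Dict[str, str]  # hypothetical envelope shape: type, source, message


def nozzle(firehose: Iterable[Envelope], keep: Callable[[Envelope], bool]) -> Iterator[Envelope]:
    """Yield only the envelopes that the given predicate selects."""
    for envelope in firehose:
        if keep(envelope):
            yield envelope


if __name__ == "__main__":
    stream = [
        {"type": "log", "source": "app/web", "message": "GET / 200"},
        {"type": "metric", "source": "router", "message": "latency_ms=12"},
        {"type": "log", "source": "app/web", "message": "ERROR timeout"},
    ]
    # An alerting nozzle that forwards only app log lines containing errors.
    for env in nozzle(stream, lambda e: e["type"] == "log" and "ERROR" in e["message"]):
        print(env["message"])
```

Separate nozzles with different predicates could feed monitoring, alerting, and analytics systems from the same stream.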

The component logs follow a different path. They stream from rsyslog agents, and the cloud operator can configure them to stream out to a syslog drain.

Using Services with CF

Typical apps depend on free or metered services such as databases or third-party APIs. To incorporate these into an app, a developer writes a Service Broker, an API that publishes to the Cloud Controller the ability to list service offerings, provision the service, and enable apps to make calls out to it.
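The three responsibilities named above (listing offerings, provisioning, and enabling app access) can be modeled with a small in-memory class. This is only a sketch: the class, method names, and credential format are hypothetical, and a real broker exposes these operations over HTTP rather than as Python methods.

```python
import uuid
from typing import Any, Dict, List


class InMemoryServiceBroker:
    """Toy broker that keeps service instances and bindings in memory."""

    def __init__(self, offerings: List[Dict[str, str]]) -> None:
        self._offerings = list(offerings)
        self._instances: Dict[str, Dict[str, Any]] = {}

    def catalog(self) -> Dict[str, List[Dict[str, str]]]:
        """List the service offerings this broker advertises."""
        return {"services": self._offerings}

    def provision(self, instance_id: str, service_name: str) -> None:
        """Create a service instance for a known offering."""
        if service_name not in {o["name"] for o in self._offerings}:
            raise ValueError(f"unknown service: {service_name}")
        self._instances[instance_id] = {"service": service_name, "bindings": {}}

    def bind(self, instance_id: str, app_name: str) -> Dict[str, str]:
        """Issue credentials that let an app call the provisioned service."""
        instance = self._instances[instance_id]  # KeyError if never provisioned
        credentials = {"uri": f"example://{instance_id}", "token": uuid.uuid4().hex}
        instance["bindings"][app_name] = credentials
        return credentials
```

In this model the Cloud Controller would call `catalog()` to discover offerings, `provision()` when a developer creates a service instance, and `bind()` when the instance is attached to an app.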

How Pivotal Cloud Foundry Differs from Open Source Cloud Foundry

Open-source software provides the basis for the Pivotal Cloud Foundry platform. Pivotal Application Service (PAS) is the Pivotal distribution of Cloud Foundry software for hosting apps. Pivotal offers additional commercial features, enterprise services, support, documentation, certificates, and other value-adds.


RT @NativeScript: Just released! Connect to Open ID, Active Directory, OAuth2 or SAML in 5 min. https://t.co/3qujsE7Lzg https://t.co/ReuWWKwaaB

0 notes

Text

Auth - everything

OAuth2, OpenID Connect (OIDC), SAML

OAuth 2.0 is a framework that controls authorization to a protected resource such as an application or a set of files, while OpenID Connect and SAML are both industry standards for federated authentication.

OpenID Connect is built on the OAuth 2.0 protocol and uses an additional JSON Web Token (JWT), called an ID token, to standardize areas that OAuth 2.0 leaves up to choice, such as scopes and endpoint discovery. It is specifically focused on user authentication and is widely used to enable user logins on consumer websites and mobile apps.
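To make the ID token less abstract, here is a minimal sketch of reading the claims out of a JWT using only the Python standard library. The function names, the demo issuer URL, and the unsigned demo token are assumptions made for illustration; the sketch deliberately does not verify the signature, which any real client must do before trusting the claims.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    """Encode bytes as base64url without padding, as JWT segments are."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def make_demo_token(claims: dict) -> str:
    """Build an UNSIGNED demo token (hypothetical helper, for illustration only)."""
    header = b64url_encode(json.dumps({"alg": "none", "typ": "JWT"}).encode("utf-8"))
    payload = b64url_encode(json.dumps(claims).encode("utf-8"))
    return f"{header}.{payload}.demo-signature"


def read_id_token_claims(id_token: str) -> dict:
    """Return the claims of a JWT WITHOUT verifying its signature."""
    parts = id_token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    return json.loads(b64url_decode(parts[1]))


if __name__ == "__main__":
    # Issuer, subject, and audience values are made up for the demo.
    token = make_demo_token(
        {"iss": "https://idp.example", "sub": "user-123", "aud": "my-client"}
    )
    print(read_id_token_claims(token))
```

The `iss`, `sub`, and `aud` claims shown are standard ID token claims; everything else in the demo is a placeholder.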

SAML is independent of OAuth. It authenticates through an exchange of messages in the XML-based SAML format, as opposed to JWT. It is more commonly used to help enterprise users sign in to multiple applications using a single login.

OAuth is a general framework for permissions (authorization); OpenID Connect is an implementation on top of it for authentication (it hands out the token); SAML stands apart; FIDO is the newcomer.

OpenID Connect (OIDC) is an authentication layer on top of OAuth 2.0, an authorization framework.

OAuth (code flow - frontend + backend, implicit flow - frontend JS only)

Authentication - WHO
Authorization - WHAT

IdentityServer is an open-source authentication server that implements OpenID Connect (OIDC) and OAuth 2.0 standards for ASP.NET Core

Active Directory is a database based system that provides authentication, directory, policy, and other services in a Windows environment

LDAP (Lightweight Directory Access Protocol) is an application protocol for querying and modifying items in directory service providers like Active Directory, which supports a form of LDAP.

Short answer: AD is a directory services database, and LDAP is one of the protocols you can use to talk to it.

Lightweight Directory Access Protocol or LDAP, is a standards based specification for interacting with directory data. Directory Services can implement support of LDAP to provide interoperability among 3rd party applications.

Active Directory is Microsoft's implementation of a directory service that, among other protocols, supports LDAP to query its data.

Kerberos is a network authentication protocol. It is designed to provide strong authentication for client/server applications by using secret-key cryptography. Clients authenticate to Active Directory using the Kerberos protocol.

AD is the database; Kerberos is the protocol (Kerberos and OpenID can be compared, since both are protocols).
Azure Active Directory is that database in Azure, including the services; it is an identity provider and presumably supports OpenID.
IdentityServer is a framework I would use to write a server, but it lacks the data management and UI that AD has; I have to assemble those myself.

OAuth flows

Implicit flow - no longer recommended, pure SPAs only
The application opens a browser to send the user to the OAuth server.
The user sees the authorization prompt and approves the app’s request.
The user is redirected back to the application with an access token in the URL fragment. *****

Code flow
The application opens a browser to send the user to the OAuth server.
The user sees the authorization prompt and approves the app’s request.
The user is redirected back to the application with an authorization code in the query string. ******
The application exchanges the authorization code for an access token. ******
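The code flow steps above can be sketched with the Python standard library. This is only an outline under stated assumptions: the authorization endpoint URL, client ID, and redirect URI are hypothetical, and the final step only builds the form body of the token request rather than sending it.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical endpoint; a real one comes from the provider's documentation.
AUTHORIZE_ENDPOINT = "https://auth.example.com/authorize"


def build_authorization_url(client_id: str, redirect_uri: str, scope: str, state: str) -> str:
    """Step 1: the URL the application opens in the browser."""
    query = urlencode({
        "response_type": "code",  # asks for an authorization code, not a token
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,  # random value echoed back to detect forged redirects
    })
    return f"{AUTHORIZE_ENDPOINT}?{query}"


def extract_code(redirect_url: str, expected_state: str) -> str:
    """Step 3: read the authorization code from the redirect's query string."""
    params = parse_qs(urlsplit(redirect_url).query)
    if params.get("state", [None])[0] != expected_state:
        raise ValueError("state mismatch")
    if "code" not in params:
        raise ValueError("no authorization code in redirect")
    return params["code"][0]


def build_token_request(code: str, client_id: str, redirect_uri: str) -> dict:
    """Step 4: form fields the backend would POST to the token endpoint."""
    return {
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": redirect_uri,
        "client_id": client_id,
    }


if __name__ == "__main__":
    state = secrets.token_urlsafe(16)
    callback = "https://app.example.com/callback"  # hypothetical redirect URI
    print(build_authorization_url("my-client", callback, "openid profile", state))
    code = extract_code(f"{callback}?code=demo-code&state={state}", state)
    print(build_token_request(code, "my-client", callback))
```

The exchange in step 4 happens on the backend, which is why the code flow needs a frontend plus a backend while the implicit flow hands the token straight to the browser.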

0 notes

Video

youtube

(via YouTube Short | What is Difference Between OAuth2 and SAML | Quick Guide to SAML Vs OAuth2)

Hi, a short #video on #oauth2 Vs #SAML #authentication & #authorization is published on #codeonedigest #youtube channel. Learn OAuth2 and SAML in 1 minute.

#saml #oauth #oauth2 #samlvsoauth2 #samlvsoauth #samlvsoauth2.0 #samlvsoauth2vsjwt #samlvsoauthvssso #oauth2vssaml #oauth2vssaml2 #oauth2vssaml2.0 #oauth2authentication #oauth2authenticationspringboot #oauth2authorizationseverspringboot #oauth2authorizationcodeflowspringboot #oauth2authenticationpostman #oauth2authorization #oauth2authorizationserver #oauth2authorizationcode #samltutorial #samlauthentication #saml2registration #saml2 #samlvsoauth #oauth2authenticationflow #oauth2authenticationserver #Oauth #oauth2 #oauth2explained #oauth2springboot #oauth2authorizationcodeflow #oauth2springbootmicroservices #oauth2tutorial #oauth2springbootrestapi #oauth2withjwtinspringboot

1 note

Text

Collective #416

Inspirational Website of the Week: Hypergiant

An awesome retro design with a great flow and nice details. Our pick this week.

Get inspired

Our Sponsor Want Enterprise Mobile Auth Simplified?

With 5 minutes and a few bits of config, you can build an app connected to OpenID, Active Directory, OAuth2 or SAML.

Learn more

A Strategy Guide To CSS Custom Properties

A fantastic guide for…

View On WordPress

0 notes

Text

YouTube Short | What is Difference Between OAuth2 and SAML | Quick Guide to SAML Vs OAuth2

Hi, a short #video on #oauth2 Vs #SAML #authentication & #authorization is published on #codeonedigest #youtube channel. Learn OAuth2 and SAML in 1 minute. #saml #oauth #oauth2 #samlvsoauth2 #samlvsoauth

What is SAML? SAML is an acronym used to describe the Security Assertion Markup Language (SAML). Its primary role in online security is that it enables you to access multiple web applications using single sign-on (SSO). What is OAuth2? OAuth2 is an open-standard authorization protocol or framework that provides applications the ability for “secure designated access.” OAuth2 doesn’t share…

View On WordPress

#Oauth#oauth2#oauth2 authentication#oauth2 authentication flow#oauth2 authentication postman#oauth2 authentication server#oauth2 authentication spring boot#oauth2 authorization#oauth2 authorization code#oauth2 authorization code flow#oauth2 authorization code flow spring boot#oauth2 authorization server#oauth2 authorization sever spring boot#oauth2 explained#oauth2 spring boot#oauth2 spring boot microservices#oauth2 spring boot rest api#oauth2 tutorial#oauth2 vs saml#oauth2 vs saml 2.0#oauth2 vs saml2#saml#saml 2.0#saml 2.0 registration#saml authentication#saml tutorial#saml vs oauth#saml vs oauth 2.0#saml vs oauth vs sso#saml vs oauth2

0 notes

Text

Ignite 2018

affinity - with a load balancer, the same client keeps going to the same server; maybe also by geography for caches and the like?
retention - e.g. for backups, how old the data must be before it is deleted
WebJob - a feature of Azure App Service that enables you to run a program or script in the same context as a web app; background process, long running
CQRS - Command Query Responsibility Segregation: a separate API/model for writes and for reads; the opposite is CRUD
webhook - a reversed API: it calls the client when something changes (the client receives an event)

Docker - one app can have several containers, docker-compose.yml
Azure has a container registry: an image (e.g. a website) is uploaded there and then deployed somewhere, e.g. App Service, though other images need not be
container registry - not public, unlike Docker's; someone else on the team can pull the image

Azure Functions 2 - in GA

Xamarin - the UI is essentially access to the native API, but in C#; projects - one shared, plus specific ones for iOS and Android

ML.NET - a framework for machine learning

hosting an SPA on an Azure Storage static website - in theory many advantages: caching, cheap hosting

devops - automate everything you can

sponge - learn constantly
multi-talented - a few things amazing, the rest good
conversation, teaching, presenting, positivity, control
share everything

PowerShell - the future, object-based CLI to MS tech
PowerShell ISE - editor, already included in Windows, but now VS Code
cmdlets - mini commands, the main part; .NET classes, e.g. [console]::beep()
function neco { param([int]$Seconds) }
pipeline - chain processing: the output is the input for the next command, and so on, e.g. dir neco | Select-Object
modules - functions bundled together, plus a manifest

web single sign-on = federation - someone else vouches that I am allowed to do something and that I am who I say I am
federation = trust; the data lives in one place
SAML - Security Assertion Markup Language; web only, complex
API security - Authorization header with Basic (username and password, encoded)
OAuth2 - tokens instead (like an admission ticket)
OpenID Connect - ID token, access token; all platforms; code flow recommended, others such as implicit flow?
FIDO - Fast IDentity Online; an abstraction (uh, wtf); private/public key pair per origin, so phishing does not work
LDAP, Kerberos - how do they fit in?
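The Basic `Authorization` header from the notes above is simple enough to sketch directly: the username and password are joined with a colon and Base64-encoded, which is an encoding and not encryption. The function names below are assumptions for illustration; the credentials are the well-known example pair from the HTTP Basic authentication RFC.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of an HTTP Authorization header for the Basic scheme."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def parse_basic_auth_header(value: str) -> tuple:
    """Reverse the encoding; anyone who sees the header can do the same."""
    scheme, _, encoded = value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic authorization header")
    username, _, password = base64.b64decode(encoded).decode("utf-8").partition(":")
    return username, password


if __name__ == "__main__":
    header = basic_auth_header("Aladdin", "open sesame")
    print(header)
    print(parse_basic_auth_header(header))
```

Because the header is trivially reversible, it is only acceptable over HTTPS, which is one reason the notes move on to tokens.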

httprepl - cli swagger

asm.js - polyfill for WebAssembly; WebAssembly is native code in the browser, e.g. .NET = Blazor

a world without passwords
Windows Hello - Windows login by face or fingerprint
MS Authenticator - mobile app; I match the generated code
FIDO2 - new security standard: I hold the private key, the server sends something (a nonce), I encrypt (sign) it with the private key and send it back; the server checks it with the public key and has its confirmation; then the same with the token

Cosmos DB
transactions - only by using a stored procedure, single partition
default index on everything; can be restricted when creating the collection
change feed - log of changes, in order
trick to get the document count quickly - read the meta info about the collection and parse the key-value pairs
Cosmos advantages - global distribution, events, multi-model; probably for big data

AKS
container - the app; orchestrator - communication between containers, container management, health checks, updates
AKS - orchestrator: the most common orchestrator, a standard, extensible, self-healing
mental model: something like a CLI or client - I say which containers, how many, and so on, and internally it gets arranged somehow (API server, workers, etc.)
it is managed Kubernetes in Azure; the customer only cares about what to deploy and when - CI/CD
AKS = Azure Kubernetes Service

Dev Spaces - share an AKS cluster for development (not CI/CD), with real dependencies (no mocks of other services and so on)
extension for VS; I work locally and sync to Azure; it uses namespaces in AKS (everyone has their own version of a service)
normally frontend.com; I have ondra.frontend.com and my own API; when a URL is requested, it checks whether the service runs locally, and if not it asks the team version
or rather, the whole thing is in Azure, but my version of the application is there

Kubernetes
master (API server) - one
nodes - several, VMs; inside them pods - containers, each with a unique IP
networking - basic for dev, advanced for live
nodes and pods are internal; access from outside goes through services
Helm - something like a worker that takes care of it? like docker-compose - multiple images; Helm is for AKS??; ARM template for AKS (a script describing how to build the environment)

event notification pattern - orders go into a queue and other systems process them; put as much information as possible in the event
event sourcing - store the changes (inserted, updated, updated, updated) instead of get-update-save; can also be done via events
materialized view - compute the state from those events; can be done once in a while
Event Grid - event routing
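The event sourcing and materialized view notes above can be illustrated with a small sketch: the stored data is the list of changes, and the current state is computed by replaying them. The `Event` shape, the event kinds, and the order fields are assumptions chosen to mirror the "inserted, updated, updated" wording, not any particular Azure API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str       # "inserted" or "updated" (hypothetical event kinds)
    order_id: str
    data: dict


def materialize(events) -> dict:
    """Replay events in order and return the current state per order."""
    view = {}
    for event in events:
        if event.kind == "inserted":
            view[event.order_id] = dict(event.data)
        elif event.kind == "updated":
            # An update only carries the changed fields.
            view.setdefault(event.order_id, {}).update(event.data)
        else:
            raise ValueError(f"unknown event kind: {event.kind}")
    return view


if __name__ == "__main__":
    log = [
        Event("inserted", "o1", {"status": "new", "qty": 1}),
        Event("updated", "o1", {"qty": 2}),
        Event("updated", "o1", {"status": "shipped"}),
    ]
    print(materialize(log))
```

Since the log is never overwritten, the view can be rebuilt at any time, which is why it is fine to recompute it only periodically.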

Azure Functions - deployed as a zip and run from it (avoids the problems of updating file by file), enabled via variables, now the default in 2.0
there is a startup where DI can be prepared, and functions then get their dependencies through the constructor
can now be combined with containers, AKS, etc.
Durable Functions - more complex things with dependencies between functions; long running, local state, code-only
orchestrator function - calls activity functions and has internal state: it wakes up, runs to the first activity, starts it, sleeps, wakes up, checks whether it has finished, and continues
Logic Apps - workflow design, visual
the Azure Functions runtime can theoretically be hosted on AKS?
in DevOps, extensions for non-.NET languages need to be installed separately
v2 - more languages, .NET Core (runs everywhere), bindings as extensions
Key Vault - in 2008 not even in preview
function hosting - consumption = shared, App Service = dedicated
microservice = 1 function app, one language, one scale
API Management = gateway for microservices; can be split across different services
Azure Storage tiers - premium (big data), hot (applications; cheap transactions, expensive storage), cool (backup; cheap storage, expensive transactions), archive (long-term archive); different prices and speeds
soft delete - deleted data can be restored during the retention period
data lifecycle management - automatically move data between tiers; JSON configuration

hybrid cloud - integration between on-premises and the cloud
Azure Stack - Azure running on-premises somewhere
use cases: we need it very fast / we are offline, compliance with laws, model

instead of new HttpClient, prefer services.AddHttpClient(addretry, addcircuitbreaker, etc.) and then get the client through the constructor; it uses a factory; use Polly (retry and so on) for GET; for POST, put the requests into a queue
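The retry idea behind the note above can be shown in a language-neutral way. Polly itself is a .NET library, so the following Python sketch is not its API; it is only an assumed minimal illustration of retrying an idempotent call (such as a GET) with exponential backoff, with hypothetical function and parameter names.

```python
import time


def retry(operation, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call operation(); on failure wait base_delay * 2**n and try again.

    The last failure is re-raised once the attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


if __name__ == "__main__":
    calls = []

    def flaky_get():
        # Stand-in for an HTTP GET that fails twice before succeeding.
        calls.append(1)
        if len(calls) < 3:
            raise ConnectionError("temporary failure")
        return "ok"

    print(retry(flaky_get), "after", len(calls), "calls")
```

Retrying is safe here because repeating a GET does not change anything on the server; a POST repeated blindly could create duplicates, which is presumably why the notes suggest queueing POST requests instead.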

people led, technology empowered

Service Fabric - 3 variants: standalone (on-prem), Azure (VM clusters on Azure), Mesh (serverless); some JSON configuration again; supports autoscale (trigger and mechanism in the JSON config); more of an internal thing - services in Azure run on it; predecessor of AKS, simpler, proprietary, stateful, autoscale, etc.

an important point about microservices - each owns its data; no shared DB
principles: async publish/subscribe communication, health checks, resilience (retry, circuit breaker), API gateway, orchestrator (scale-out, dev)
architecture - through an API gateway to the individual microservices (microservices also talk to each other) - Ocelot
orchestrator - Kubernetes: it is given a cluster of VMs and manages them itself
Helm = package manager for Kubernetes, it does the deploy; Helm chart = a description of how to deploy; standard

Key Vault - central place for all secrets, scalable security; the application must have an MSI (some identity through which access is granted)

Application Insights - Kusto language, Azure Monitor
search in (collection) "neco"
where neco >= ago(30d)
summarize makelist(eventId) by Computer - returns Computer with a list of eventId; or makeset
supports functions, roughly: let fce=(){...};
join kind=inner ... bla
let variable - e.g. a datatable
lots of data - evaluate autocluster_v2() makes approximate groups; similarly evaluate basket(0.01)
pin to dashboard; next to it, set alert

AI-oriented architecture: program logic + AI; the trend is to get AI in there somehow

0 notes