#software testing material

Video

Fully Automatic Micro Vickers Hardness Tester with HMS Software By Multi...

#youtube#Multitek Micro Vickers Hardness Machine#Digital Vickers Hardness Tester#Metallurgical Testing Equipment India#Material Hardness Testing Machine#Vickers Hardness Tester with Software#Micro Vickers Hardness Testing Machine

0 notes

Note

(half rant half story)

I'm a physicist. I work for a company that helps develop car parts. Essentially, car companies come to us with ideas on what they want from a part or material, and we make/test the idea or help them make/test it. Usually this means talking to other scientists and engineers and experts and it's all fine. Sometimes this means talking to businesspeople and board execs and I hate them

A bit ago when AI was really taking off in the zeitgeist I went to a meeting to talk about some tweaks Car Company A wanted to make to their hydraulics (specifically the master cylinder, but it doesn't super matter). I thought I'd be talking to their engineers - it ends up being just me, their head supervisor (who was not a scientist/engineer) and one of their executives from a different area (also not a scientist/engineer). I'm the only one in the room who actually knows how a car works, and also the lowest-level employee, and also aware that these people will give feedback to my boss based on how I 'represent the company' whilst I'm here.

I start to explain my way through how I can make some of the changes they want - trying to do so in a way they'll understand - when Head Supervisor cuts me off and starts talking about AI. I'm like "oh well AI is often integrated into the software for a car but we're talking hardware right now, so that's not something we really ca-"

"Can you add artificial intelligence to the hydraulics?"

"..sorry, what was that?"

"Can you add AI to the hydraulics system?"

can i fucking what mate "Sir, I'm sorry, I'm a little confused - what do you mean by adding AI to the hydraulics?"

"I just thought this stuff could run smoother if you added AI to it. Most things do"

The part of the car that moves when you push the brake pedal is metal and liquid my dude what are you talking about "You want me to... add AI... to the pistons? To the master cylinder?"

"Yeah exactly, if you add AI to the bit that makes the pistons work, it should work better, right?"

IT'S METAL PIPES it's metal pipes it's metal pipes "Sir, there isn't any software in that part of the car"

"I know, but it's artificial intelligence, I'm sure there's a way to add it"

im exploding you with my mind you cannot seriously be asking me to add AI to a section of car that has as much fucking code attached to it as a SOCK what do you MEAN. The most complicated part of this thing is a SPRING you can't be serious

He was seriously asking. I've met my fair share of idiots but I was sure he wasn't genuinely seriously asking that I add AI directly to a piston system, but he was. And not even in the like "oh if we implement a way for AI to control that part" kind of way, he just vaguely thought that AI would "make it better" WHAT THE FUCK DO YOU MEANNNNN I HAD TO SPEND 20 MINUTES OF MY HARD EARNED LIFE EXPLAINING THAT NEITHER I NOR ANYONE ELSE CAN ADD AI TO A GOD DAMNED FUCKING PISTON. "CAN YOU ADD AI TO THE HYDRAULICS" NO BUT EVEN WITHOUT IT THAT METAL PIPE IS MORE INTELLIGENT THAN YOU

Posted by admin Rodney.

13K notes

Text

Athletes Go for the Gold with NASA Spinoffs

NASA technology tends to find its way into the sporting world more often than you’d expect. Fitness is important to the space program because astronauts must undergo the extreme g-forces of getting into space and endure the long-term effects of weightlessness on the human body. The agency’s engineering expertise also means that items like shoes and swimsuits can be improved with NASA know-how.

As the 2024 Olympics are in full swing in Paris, here are some of the many NASA-derived technologies that have helped competitive athletes train for the games and made sure they’re properly equipped to win.

The LZR Racer reduces skin friction drag by covering more skin than traditional swimsuits. Multiple pieces of the water-resistant and extremely lightweight LZR Pulse fabric connect at ultrasonically welded seams and incorporate extremely low-profile zippers to keep viscous drag to a minimum.

Swimsuits That Don’t Drag

When the swimsuit manufacturer Speedo wanted its LZR Racer suit to have as little drag as possible, the company turned to the experts at Langley Research Center to test its materials and design. The end result was that the new suit reduced drag by 24 percent compared to the prior generation of Speedo racing suit and broke 13 world records in 2008. While the original LZR Racer is no longer used in competition due to the advantage it gave wearers, its legacy lives on in derivatives still produced to this day.

Trilion Quality Systems worked with NASA’s Glenn Research Center to adapt existing stereo photogrammetry software to work with high-speed cameras. Now the company sells the package widely, and it is used to analyze stress and strain in everything from knee implants to running shoes and more.

High-Speed Cameras for High-Speed Shoes

After the loss of space shuttle Columbia in 2003, investigators needed to see how materials reacted during recreation tests with high-speed cameras, which involved working with industry to create a system that could analyze footage filmed at 30,000 frames per second. Engineers at Adidas used this system to analyze the behavior of Olympic marathoners' feet as they hit the ground and adjusted the design of the company’s high-performance footwear based on these observations.

Martial artist Barry French holds an Impax Body Shield while former European middleweight kickboxing champion Daryl Tyler delivers an explosive jump side kick; the force of the impact is registered precisely and shown on the display panel of the electronic box French is wearing on his belt.

One-Thousandth-of-an-Inch Punch

In the 1980s, Olympic martial artists needed a way to measure the impact of their strikes to improve training for competition. Impulse Technology reached out to Glenn Research Center to create the Impax sensor, an ultra-thin film sensor which creates a small amount of voltage when struck. The more force applied, the more voltage it generates, enabling a computerized display to show how powerful a punch or kick was.
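The force-to-voltage relationship described above can be sketched in a few lines of Python. This is a minimal illustration, not the Impax design: it assumes a linear response (voltage proportional to force) with a made-up sensitivity constant, and it takes the strength of a strike to be the peak of a sampled voltage trace. Real film sensors need empirical calibration and may not respond linearly.

```python
# Minimal sketch: turn a sampled voltage trace from a force-sensing film into
# a peak force reading. ASSUMPTION: linear response V = k * F with a
# hypothetical sensitivity k; this is not the actual Impax calibration.

HYPOTHETICAL_SENSITIVITY_V_PER_N = 0.002  # volts produced per newton (made up)


def peak_voltage(samples: list[float]) -> float:
    """Return the largest voltage seen during a strike (0.0 for an empty trace)."""
    return max(samples, default=0.0)


def strike_force_newtons(
    voltage: float, sensitivity: float = HYPOTHETICAL_SENSITIVITY_V_PER_N
) -> float:
    """Invert the assumed linear model: F = V / k."""
    if sensitivity <= 0:
        raise ValueError("sensitivity must be positive")
    return voltage / sensitivity


if __name__ == "__main__":
    # Hypothetical samples captured while a kick lands on the pad.
    trace = [0.0, 0.4, 1.6, 2.1, 0.9, 0.1]
    print(f"peak force: {strike_force_newtons(peak_voltage(trace)):.0f} N")
```

With these invented numbers the display would read about 1050 N; a harder strike produces a higher peak voltage and therefore a higher reading, which is the monotonic relationship the paragraph describes.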

Astronaut Sunita Williams poses while using the Interim Resistive Exercise Device on the ISS. The cylinders at the base of each side house the SpiraFlex FlexPacks that inventor Paul Francis honed under NASA contracts. They would go on to power the Bowflex Revolution and other commercial exercise equipment.

Weight Training Without the Weight

Astronauts spending long periods of time in space needed a way to maintain muscle mass without the effect of gravity, but lifting free weights doesn’t work when you’re practically weightless. An exercise machine that uses elastic resistance to provide the same benefits as weightlifting went to the space station in the year 2000. That resistance technology was commercialized into the Bowflex Revolution home exercise equipment shortly afterwards.

Want to learn more about technologies made for space and used on Earth? Check out NASA Spinoff to find products and services that wouldn’t exist without space exploration.

Make sure to follow us on Tumblr for your regular dose of space!

2K notes

Text

Generative AI Policy (February 9, 2024)

As of February 9, 2024, we are updating our Terms of Service to prohibit the following content:

Images created through the use of generative AI programs such as Stable Diffusion, Midjourney, and Dall-E.

This post explains what that means for you. We know it’s impossible to remove all images created by Generative AI on Pillowfort. The goal of this new policy, however, is to send a clear message that we are against the normalization of commercializing and distributing images created by Generative AI. Pillowfort stands in full support of all creatives who make Pillowfort their home. Disclaimer: The following policy was shaped in collaboration with Pillowfort Staff and international university researchers. We are aware that Artificial Intelligence is a rapidly evolving environment. This policy may require revisions in the future to adapt to the changing landscape of Generative AI.

-

Why is Generative AI Banned on Pillowfort?

Our Terms of Service already prohibits copyright violations, which includes reposting other people’s artwork to Pillowfort without the artist’s permission; and because of how Generative AI draws on a database of images and text that were taken without consent from artists or writers, all Generative AI content can be considered in violation of this rule. We also had an overwhelming response from our user base urging us to take action on prohibiting Generative AI on our platform.

-

How does Pillowfort define Generative AI?

As of February 9, 2024 we define Generative AI as online tools for producing material based on large data collection that is often gathered without consent or notification from the original creators.

Generative AI tools do not require skill on behalf of the user and effectively replace them in the creative process (i.e., little direction or decision-making comes directly from the user). Tools that assist creativity don't replace the user. This means the user can still improve their skills and refine their work over time.

For example: If you ask a Generative AI tool to add a lighthouse to an image, the image of a lighthouse appears in a completed state. Whereas if you used an assistive drawing tool to add a lighthouse to an image, the user decides the tools used to contribute to the creation process and how to apply them.

Examples of Tools Not Allowed on Pillowfort: Adobe Firefly*, Dall-E, GPT-4, Jasper Chat, Lensa, Midjourney, Stable Diffusion, Synthesia

Example of Tools Still Allowed on Pillowfort:

AI Assistant Tools (e.g., Google Translate, Grammarly)

VTuber Tools (e.g., Live3D, Restream, VRChat)

Digital Audio Editors (e.g., Audacity, GarageBand)

Poser & Reference Tools (e.g., Poser, Blender)

Graphic & Image Editors (e.g., Canva, Adobe Photoshop*, Procreate, Medibang, automatic filters from phone cameras)

*While Adobe software such as Adobe Photoshop is not considered Generative AI, Adobe Firefly is fully integrated in various Adobe software and falls under our definition of Generative AI. The use of Adobe Photoshop is allowed on Pillowfort. The creation of an image in Adobe Photoshop using Adobe Firefly would be prohibited on Pillowfort.

-

Can I use ethical generators?

Due to the evolving nature of Generative AI, ethical generators are not an exception.

-

Can I still talk about AI?

Yes! Posts, Comments, and User Communities discussing AI are still allowed on Pillowfort.

-

Can I link to or embed websites, articles, or social media posts containing Generative AI?

Yes. We do ask that you properly tag your post as “AI” and “Artificial Intelligence.”

-

Can I advertise the sale of digital or virtual goods containing Generative AI?

No. Offsite Advertising of the sale of goods (digital and physical) containing Generative AI on Pillowfort is prohibited.

-

How can I tell if software I use contains Generative AI?

As a first step, a general rule of thumb is to test the software by turning off internet access and seeing whether the tool still works. If the software says it needs to be online, there’s a chance it’s using Generative AI and needs to be explored further.

You are also always welcome to contact us at [email protected] if you’re still unsure.

-

How will this policy be enforced/detected?

Our Team has decided we are NOT using AI-based automated detection tools due to how often they provide false positives and other issues. We are applying a suite of methods sourced from international universities responding to moderating material potentially sourced from Generative AI instead.

-

How do I report content containing Generative AI Material?

If you are concerned about post(s) featuring Generative AI material, please flag the post for our Site Moderation Team to conduct a thorough investigation. As a reminder, Pillowfort’s existing policy regarding callout posts applies here and harassment / brigading / etc will not be tolerated.

Any questions or clarifications regarding our Generative AI Policy can be sent to [email protected].

2K notes

Text

Rambling: So much of this is just like. It's all the money, you can't get around the money. Engineering is primarily a cost optimisation problem, and so is business: where do you buy your parts, how much do you pay your labour? The companies can make equal-quality goods cheaper in China because of the industrial base. Western workers don't want to work in manufacturing because it doesn't pay as much or as reliably as other jobs.

I like reading articles and watching videos about factories and a thing you find with a lot of American factories is they're often highly specific niche industries where they don't have much competition or they're really low volume where less intensive manufacturing processes still work or they have big military contracts that give them their base income. Really it's wild how every little engineering shop in the US requires base level security clearance because they make the cable harness for the Hornet or whatever. And crucially, crucially: they employ 100 people. Planning to work for one of these companies is like planning to be a pro baseball player but you make $35/hr.

I studied in South Africa, and I studied electrical engineering, but like. That was my fifth or sixth choice from a personal interest perspective? As a teenager I was really into biochem. I really wanted to work on like. Bioreactor stuff. South Africa has okay industrial chemistry but not that much biochem. So why would I go spend five years getting a biochem Master's and hope I could find a job at one of like six companies? It's a bad move! Once again, baseball player odds! Mostly if you're lucky you'll get to fuck around in a half-related field for a few years and then you'll wind up with some office job that you found because it turns out running tests on paint shearing isn't personally fulfilling enough to make you stay in a lab job.

Hell, even taking the Good Hiring Engineering Job market, it's a goddamn pain in the ass to find any actual engineering work. I applied to dozens of internship positions every semester at engineering firms and workshops and never so much as heard back, whereas I could go to the software job fairs and get two offers and several interviews for a vacation job in a couple weeks. You can swim upstream to get in there but even if you're willing to take the pay cut, engineering jobs are slow moving and slow hiring, and in small departments your professional progression is often gated behind someone retiring or dying.

A while ago someone (was this Reggie? sounds like him EDIT: YEP) was talking about how part of the reason why no one in the US for the past 20 years can do like, epitaxial growth optimisation isn't because there's some philosophical or educational division, but because anyone committed and driven enough to spend months optimizing that would just put that energy and commitment into going into software or becoming a quant or some other higher-yield option. Meanwhile if you're a driven and focussed ladder climber in China there are dozens of factories looking for someone to do exactly this. The people in the West who are so into this that they still do it are often in academia, not industry, and that's an even more competitive and impenetrable sector to get into. Getting a PhD grad job in academic chip manufacturing is miserable, it's basically a six-year-long interview process that costs you hundreds of thousands of dollars that has a 0.1% chance of panning out.

Actually, I did once do a factory internship, it was my only nepotism internship, at a construction materials factory where my dad was a manager, and it was really interesting work! I had a lot of freedom in a small engineering team and I spent a while understanding a bag filling machine and reading manuals and tuning the control process and talking to floor workers and designing sheet metal parts to improve their jobs. And when I talked to the engineer supervising me I found out he was on a six month contract that wasn't getting renewed and he would be leaving the company basically the same time my internship ended. That company hadn't hired a full-time process engineer in ages, and probably never would if they could avoid it. Not encouraging!

People often say you should get into the trades because they pay well and are materially fulfilling work. This is like. It's an elision. Successful tradespeople are in very high demand, but becoming a successful tradesperson is very, very finicky. I worked with a lot of electricians and millwrights and technicians, and for every tech who was successful and running a roaring business there were five guys stuck in eternal apprenticeships or struggling to make a name for themselves in the industry on their own. Some trades are great for this, other trades are 90% training scams where you spend nine months and five thousand dollars on a course that gives you a certificate almost no one cares about.

Every now and then I talk to an installation tech I used to work with who has a bunch of CCTV and security certs he got in the DRC, and he is just absolutely struggling to get by. There are already enough successful companies to serve the demand, so why would you take a risk on this fly-by-night? He could find a technical job, and he does, but it's a dead end, everyone wants a base technician forever, they don't want you to upskill and move on. They hire in an external electrician to come in for an hour to sign off on your work, and that's all you need.

You can't develop an industrial base unless it's appealing to work in the industrial base. If you're an industrialising nation, the appeal is "It's not farm work and you might get some real money instead of a sack of barley" but in a modern society you need to pay at least as well as the office jobs. If your industrial sector is small it can afford to only hire the most qualified people because it's a labour buyer's market, and that's how you produce a massive knowledge gap.

#Youtube#industrial capacity#engineering#smartereveryday is an interesting example he is a weapons engineer and a weird military guy#which like yeah that's how you do manufacturing in the US. Every little engineering shop needs military clearance#having a weird week re: industry i guess

213 notes

Text

Conspiratorialism as a material phenomenon

I'll be in TUCSON, AZ from November 8-10: I'm the GUEST OF HONOR at the TUCSON SCIENCE FICTION CONVENTION.

I think it behooves us to be a little skeptical of stories about AI driving people to believe wrong things and commit ugly actions. Not that I like the AI slop that is filling up our social media, but when we look at the ways that AI is harming us, slop is pretty low on the list.

The real AI harms come from the actual things that AI companies sell AI to do. There's the AI gun-detector gadgets that the credulous Mayor Eric Adams put in NYC subways, which led to 2,749 invasive searches and turned up zero guns:

https://www.cbsnews.com/newyork/news/nycs-subway-weapons-detector-pilot-program-ends/

Any time AI is used to predict crime – predictive policing, bail determinations, Child Protective Services red flags – they magnify the biases already present in these systems, and, even worse, they give this bias the veneer of scientific neutrality. This process is called "empiricism-washing," and you know you're experiencing it when you hear some variation on "it's just math, math can't be racist":

https://pluralistic.net/2020/06/23/cryptocidal-maniacs/#phrenology
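The claim that these systems magnify bias already present can be made concrete with a deliberately simplified toy model. The sketch below is hypothetical and is not how any particular product works: it assumes two districts with identical true incident rates, a skewed historical record, a single patrol sent each day to whichever district has more recorded incidents, and incidents being recorded only where the patrol is present.

```python
# Toy runaway-feedback model of "hot spot" allocation. Every parameter is a
# hypothetical assumption chosen for illustration; the point is the loop
# records -> patrols -> more records, not any real deployment's details.


def simulate_hotspot_allocation(
    initial_records: list[int], true_daily_incidents: list[int], days: int
) -> list[int]:
    """Return recorded incident counts per district after `days` of allocation."""
    if len(initial_records) != len(true_daily_incidents):
        raise ValueError("need one true incident rate per district")
    records = list(initial_records)
    for _ in range(days):
        # Send the patrol where the *records* (not reality) say crime is highest.
        target = max(range(len(records)), key=lambda i: records[i])
        # Only the patrolled district's incidents get written down.
        records[target] += true_daily_incidents[target]
    return records


def share(records: list[int], district: int) -> float:
    """Fraction of all recorded incidents attributed to one district."""
    return records[district] / sum(records)


if __name__ == "__main__":
    start = [60, 40]  # hypothetical historical skew
    end = simulate_hotspot_allocation(start, true_daily_incidents=[5, 5], days=30)
    print(end, f"{share(start, 0):.0%} -> {share(end, 0):.0%}")
```

Both districts have the same underlying rate, yet in this toy run the first district's share of recorded incidents climbs from 60% to 84% in a month, and the widening gap then looks like objective, "just math" evidence for sending even more patrols there.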

When AI is used to replace customer service representatives, it systematically defrauds customers, while providing an "accountability sink" that allows the company to disclaim responsibility for the thefts:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

When AI is used to perform high-velocity "decision support" that is supposed to inform a "human in the loop," it quickly overwhelms its human overseer, who takes on the role of "moral crumple zone," pressing the "OK" button as fast as they can. This is bad enough when the sacrificial victim is a human overseeing, say, proctoring software that accuses remote students of cheating on their tests:

https://pluralistic.net/2022/02/16/unauthorized-paper/#cheating-anticheat

But it's potentially lethal when the AI is a transcription engine that doctors have to use to feed notes to a data-hungry electronic health record system that is optimized to commit health insurance fraud by seeking out pretenses to "upcode" a patient's treatment. Those AIs are prone to inventing things the doctor never said, inserting them into the record that the doctor is supposed to review, but remember, the only reason the AI is there at all is that the doctor is being asked to do so much paperwork that they don't have time to treat their patients:

https://apnews.com/article/ai-artificial-intelligence-health-business-90020cdf5fa16c79ca2e5b6c4c9bbb14

My point is that "worrying about AI" is a zero-sum game. When we train our fire on the stuff that isn't important to the AI stock swindlers' business-plans (like creating AI slop), we should remember that the AI companies could halt all of that activity and not lose a dime in revenue. By contrast, when we focus on AI applications that do the most direct harm – policing, health, security, customer service – we also focus on the AI applications that make the most money and drive the most investment.

AI hasn't attracted hundreds of billions in investment capital because investors love AI slop. All the money pouring into the system – from investors, from customers, from easily gulled big-city mayors – is chasing things that AI is objectively very bad at and those things also cause much more harm than AI slop. If you want to be a good AI critic, you should devote the majority of your focus to these applications. Sure, they're not as visually arresting, but discrediting them is financially arresting, and that's what really matters.

All that said: AI slop is real, there is a lot of it, and even though it doesn't warrant priority over the stuff AI companies actually sell, it still has cultural significance and is worth considering.

AI slop has turned Facebook into an anaerobic lagoon of botshit, just the laziest, grossest engagement bait, much of it the product of rise-and-grind spammers who avidly consume get rich quick "courses" and then churn out a torrent of "shrimp Jesus" and fake chainsaw sculptures:

https://www.404media.co/email/1cdf7620-2e2f-4450-9cd9-e041f4f0c27f/

For poor engagement farmers in the global south chasing the fractional pennies that Facebook shells out for successful clickbait, the actual content of the slop is beside the point. These spammers aren't necessarily tuned into the psyche of the wealthy-world Facebook users who represent Meta's top monetization subjects. They're just trying everything and doubling down on anything that moves the needle, A/B splitting their way into weird, hyper-optimized, grotesque crap:

https://www.404media.co/facebook-is-being-overrun-with-stolen-ai-generated-images-that-people-think-are-real/
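The "try everything and double down on anything that moves the needle" strategy resembles a classic multi-armed bandit problem. The sketch below uses epsilon-greedy selection, a standard bandit heuristic swapped in here for illustration; it is not a claim about what tooling any spammer actually uses, and the per-variant engagement rates are invented.

```python
import random


def epsilon_greedy(
    true_rates: list[float], rounds: int, epsilon: float = 0.1, seed: int = 0
) -> list[int]:
    """Simulate posting slop variants and doubling down on what gets engagement.

    true_rates: hypothetical chance that each variant gets engagement; the
    poster never sees these directly, only the observed hits.
    Returns how many times each variant was posted.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    n = len(true_rates)
    posts = [0] * n
    hits = [0] * n
    for _ in range(rounds):
        untried = [i for i in range(n) if posts[i] == 0]
        if untried:
            arm = untried[0]  # try every variant at least once
        elif rng.random() < epsilon:
            arm = rng.randrange(n)  # keep exploring occasionally
        else:
            # Double down on the variant with the best observed hit rate.
            arm = max(range(n), key=lambda i: hits[i] / posts[i])
        posts[arm] += 1
        if rng.random() < true_rates[arm]:
            hits[arm] += 1
    return posts


if __name__ == "__main__":
    print(epsilon_greedy([0.01, 0.02, 0.10], rounds=10_000))
```

With these made-up rates, the large majority of the 10,000 posts end up going to the third variant, even though the simulated poster never knew in advance which one would "work". Only the observed engagement steers the choice, which matches the ideomotor picture in the next paragraph.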

In other words, Facebook's AI spammers are laying out a banquet of arbitrary possibilities, like the letters on a Ouija board, and the Facebook users' clicks and engagement are a collective ideomotor response, moving the algorithm's planchette to the options that tug hardest at our collective delights (or, more often, disgusts).

So, rather than thinking of AI spammers as creating the ideological and aesthetic trends that drive millions of confused Facebook users into condemning, praising, and arguing about surreal botshit, it's more true to say that spammers are discovering these trends within their subjects' collective yearnings and terrors, and then refining them by exploring endlessly ramified variations in search of unsuspected niches.

(If you know anything about AI, this may remind you of something: a Generative Adversarial Network, in which one bot creates variations on a theme, and another bot ranks how closely the variations approach some ideal. In this case, the spammers are the generators and the Facebook users they elicit reactions from are the discriminators.)

https://en.wikipedia.org/wiki/Generative_adversarial_network

I got to thinking about this today while reading User Mag, Taylor Lorenz's superb newsletter, and her reporting on a new AI slop trend, "My neighbor’s ridiculous reason for egging my car":

https://www.usermag.co/p/my-neighbors-ridiculous-reason-for

The "egging my car" slop consists of endless variations on a story in which the poster (generally a figure of sympathy, canonically a single mother of newborn twins) complains that her awful neighbor threw dozens of eggs at her car to punish her for parking in a way that blocked his elaborate Hallowe'en display. The text is accompanied by an AI-generated image showing a modest family car that has been absolutely plastered with broken eggs, dozens upon dozens of them.

According to Lorenz, variations on this slop are topping very large Facebook discussion forums totalling millions of users, like "Movie Character…, USA Story, Volleyball Women, Top Trends, Love Style, and God Bless." These posts link to SEO sites laden with programmatic advertising.

The funnel goes:

i. Create outrage and hence broad reach;

ii. A small percentage of those who see the post will click through to the SEO site;

iii. A small fraction of those users will click a low-quality ad;

iv. The ad will pay homeopathic sub-pennies to the spammer.

The revenue per user on this kind of scam is next to nothing, so it only works if it can get very broad reach, which is why the spam is so designed for engagement maximization. The more discussion a post generates, the more users Facebook recommends it to.
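The four-step funnel above is a product of conversion rates, which is why reach has to be enormous. The sketch below computes expected revenue and the reach needed to hit a revenue target; every rate and payout in it is a hypothetical placeholder, not a figure from Lorenz's reporting.

```python
import math


def expected_revenue(
    reach: int, site_ctr: float, ad_ctr: float, payout_per_ad_click: float
) -> float:
    """Steps i-iv: people reached x click-through to the SEO site x ad clicks x payout."""
    for name, rate in (("site_ctr", site_ctr), ("ad_ctr", ad_ctr)):
        if not 0.0 <= rate <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {rate}")
    return reach * site_ctr * ad_ctr * payout_per_ad_click


def reach_needed(
    target_revenue: float, site_ctr: float, ad_ctr: float, payout_per_ad_click: float
) -> int:
    """Smallest reach whose expected revenue meets the target."""
    per_person = expected_revenue(1, site_ctr, ad_ctr, payout_per_ad_click)
    if per_person <= 0:
        raise ValueError("this funnel earns nothing; no reach is enough")
    return math.ceil(target_revenue / per_person)


if __name__ == "__main__":
    # Hypothetical: 2% click through to the site, 1% of those click an ad,
    # and each ad click pays five cents.
    rates = dict(site_ctr=0.02, ad_ctr=0.01, payout_per_ad_click=0.05)
    print(f"${expected_revenue(1_000_000, **rates):.2f} per million people reached")
    print(f"{reach_needed(100.0, **rates):,} people reached to earn $100")
```

Under these assumptions a million people reached is worth roughly $10, and earning $100 takes on the order of ten million, so each extra point of engagement-driven reach matters far more to the spammer than the content itself.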

These posts are very effective engagement bait. Almost all AI slop gets some free engagement in the form of arguments between users who don't know they're commenting on an AI scam and people hectoring them for falling for the scam. This is like the free square in the middle of a bingo card.

Beyond that, there's multivalent outrage: some users are furious about food wastage; others about the poor, victimized "mother" (some users are furious about both). Not only do users get to voice their fury at both of these imaginary sins, they can also argue with one another about whether, say, food wastage even matters when compared to the petty-minded aggression of the "perpetrator." These discussions also offer lots of opportunity for violent fantasies about the bad guy getting a comeuppance, offers to travel to the imaginary AI-generated suburb to dole out a beating, etc. All in all, the spammers behind this tedious fiction have really figured out how to rope in all kinds of users' attention.

Of course, the spammers don't get much from this. There isn't such a thing as an "attention economy." You can't use attention as a unit of account, a medium of exchange or a store of value. Attention – like everything else that you can't build an economy upon, such as cryptocurrency – must be converted to money before it has economic significance. Hence that tooth-achingly trite high-tech neologism, "monetization."

The monetization of attention is very poor, but AI is heavily subsidized or even free (for now), so the largest venture capital and private equity funds in the world are pouring billions in public pension money and rich people's savings into CO2 plumes, GPUs, and botshit so that a bunch of hustle-culture weirdos in the Pacific Rim can make a few dollars by tricking people into clicking through engagement bait slop – twice.

The slop isn't the point of this, but the slop does have the useful function of making the collective ideomotor response visible and thus providing a peek into our hopes and fears. What does the "egging my car" slop say about the things that we're thinking about?

Lorenz cites Jamie Cohen, a media scholar at CUNY Queens, who points out that the subtext of this slop is "fear and distrust in people about their neighbors." Cohen predicts that "the next trend, is going to be stranger and more violent.”

This feels right to me. The corollary of mistrusting your neighbors, of course, is trusting only yourself and your family. Or, as Margaret Thatcher liked to say, "There is no such thing as society. There are individual men and women and there are families."

We are living in the tail end of a 40 year experiment in structuring our world as though "there is no such thing as society." We've gutted our welfare net, shut down or privatized public services, all but abolished solidaristic institutions like unions.

This isn't mere aesthetics: an atomized society is far more hospitable to extreme wealth inequality than one in which we are all in it together. When your power comes from being a "wise consumer" who "votes with your wallet," then all you can do about the climate emergency is buy a different kind of car – you can't build the public transit system that will make cars obsolete.

When you "vote with your wallet" all you can do about animal cruelty and habitat loss is eat less meat. When you "vote with your wallet" all you can do about high drug prices is "shop around for a bargain." When you vote with your wallet, all you can do when your bank forecloses on your home is "choose your next lender more carefully."

Most importantly, when you vote with your wallet, you cast a ballot in an election that the people with the thickest wallets always win. No wonder those people have spent so long teaching us that we can't trust our neighbors, that there is no such thing as society, that we can't have nice things. That there is no alternative.

The commercial surveillance industry really wants you to believe that they're good at convincing people of things, because that's a good way to sell advertising. But claims of mind-control are pretty goddamned improbable – everyone who ever claimed to have managed the trick was lying, from Rasputin to MK-ULTRA:

https://pluralistic.net/HowToDestroySurveillanceCapitalism

Rather than seeing these platforms as convincing people of things, we should understand them as discovering and reinforcing the ideology that people have been driven to by material conditions. Platforms like Facebook show us to one another, let us form groups that can imperfectly fill in for the solidarity we're desperate for after 40 years of "no such thing as society."

The most interesting thing about "egging my car" slop is that it reveals that so many of us are convinced of two contradictory things: first, that everyone else is a monster who will turn on you for the pettiest of reasons; and second, that we're all the kind of people who would stick up for the victims of those monsters.

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/29/hobbesian-slop/#cui-bono

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#taylor lorenz#conspiratorialism#conspiracy fantasy#mind control#a paradise built in hell#solnit#ai slop#ai#disinformation#materialism#doppelganger#naomi klein


Barbara Gordon's Coding & Computer Cram School is a popular YouTube series. Tucker Foley is a star student.

Barbara Gordon's Cram School posts free online courses for both coding and computer engineering. Think Crash Course in terms of entertainment, but college lecture in terms of depth. Hundreds of thousands of viewers flock to it: students who missed a class, people looking to add new skills to a resume, even simple hobbyists. It’s a project Barbara’s proud of.

Sometimes, when she wants to relax, she’ll even hop in the comments and spend an afternoon troubleshooting a viewer’s project with them.

User “Fryer-Tuck” has especially interesting ones. Barbara finds herself seeking out his comments, checking in on whatever this crazy kid is making next. An app for collecting GPS pings and assembling them on a map in real-time, an algorithm that connects geographic points to predict something’s movement taking a hundred other variables into account, simplified versions of incredibly complex homemade programs so they can run on incredibly limited CPUs.

(Barbara wants to buy the kid a PC. It seems he’s got natural talent, but he keeps making reference to a PDA. Talk about the ’90s! This guy’s hardware probably predates his birth.)

She chats with him more and more, switching to less public PM threads, and eventually, he opens up. His latest project, though, is not something Barbara has personal experience with.

FT: so if you found, hypothetically, a mysterious glowing substance that affects tech in weird and wacky ways that could totally have potential but might be vaguely sentient/otherworldly…. what would you do and how would you experiment with it. safely, of course. and hypothetically

BG: I’d make sure all my tests were in disposable devices and quarantined programs to keep it from infecting my important stuff. Dare I ask… how weird and wacky is it?

FT: uhhh. theoretically, a person composed of this substance once used it to enter a video game. like physical body, into the computer, onto the screen? moving around and talking and fighting enemies within the game?

FT: its been experimented with before, but not on any tech with a brain. just basic shields and blasters and stuff, its an energy source. also was put in a car once

FT: i wanna see how it affects software, yk? bc i already know it can. mess around and see how far i can push it

BG: […]

FT: … barbara?

BG: Sorry, thinking. Would you mind sharing more details? You said “blasters?”

Honestly. Kid genius with access to some truly wacky materials and even wackier weapons, she needs to start a file on him before he full sends to either hero or villain.

[OR: Tucker is a self-taught hacker, but if he were to credit a teacher, he'd name Barbara Gordon's Coding & Computer Cram School! He's even caught the attention of Dr. Gordon herself. She's full of sage advice, and with how she preaches the value of a good VPN, he's sure she's not pro-government. Maybe she'll help him as he studies the many applications of ecto-tech!]

#she does end up sending tucker a PC lol#and after she learns he has experience supporting a superhero team maybe pushes his name forward to WEs outreach program for r&d potentials#picks him up by the scruff and says MY coding buddy#also fun fact she had a phd in library science at one point. i like that about her i think we should talk about it a little more#also tucker was making a ghost reporting & tracking app for amity parkers#dpxdc#dcxdp#barbara gordon#tucker foley#prompt#kipwrite


The Artisul team was kind enough to send me their Artisul D16 display tablet to review! Timelapse and review can be found under the read more.

I have been using the same model of display tablet for over 10 years now (a Wacom Cintiq 22HD) and feel like I might be set in my ways, so getting the chance to try a different brand of display tablet was also a new experience for me!

The Unboxing

The tablet arrived in high-quality packaging with enough protection that none of the components got scratched or banged up in the shipping process. I was pleasantly surprised that in addition to the tablet, pen, stand, cables and nibs, it also included a smudge guard glove and a pen case.

The stand is very lightweight and I was at first worried that it would not be able to hold up the tablet safely, but it held up really well. I appreciated that it offered steeper levels of inclination for the tablet, since I have seen plenty of other display tablets that don’t offer that level of ergonomics for artists. My only gripe is that you can’t anchor the tablet to the stand. It rests on the stand and can easily be taken on or off, but that also means that you can bump into it and dislodge it from the stand if you aren’t careful. It would require significant force, but as a cat owner, I know that a scenario like that is more likely than I’d like.

Another thing I noticed is how light the tablet is in comparison to my Cintiq. Granted, my Cintiq is larger (22 inches vs the 15.8 inches of the Artisul D16), but the Artisul D16 weighs only about 1.5kg. While I don’t consider display tablets that require a PC and an outlet to be truly portable, it would be a lot easier to move the Artisul D16 from one space to another. In comparison, my Cintiq weighs in at a proud 8.5kg, making it a chore to move around. I have it hooked up to a monitor stand to be able to move it more easily across my desk.

The Setup

The setup of the tablet was quick as well, with only minor hiccups. The drivers installed quickly and basic setup was done in a matter of minutes. That doesn’t mean it came without issues: the cursor vanished as soon as I hovered over the driver window, turning every click into a guessing game, and the pen calibration refused to work on the tablet screen, always defaulting to my regular screen instead. I ended up using the out-of-the-box pen calibration for my test drawing, which worked well enough.

The tablet comes with customizable hot-keys that you can reassign in the driver software. I did not end up using the hot-keys, since I use a Razer Tartarus for all my shortcuts, but I did play around with them to get a feel for them. The zoom wheel had a very satisfying haptic feel to it which I really enjoyed, and as far as I could tell, you can map a lot of shortcuts to the buttons, including with modifier keys like ctrl, shift, alt and the win key. I noticed that there was no option to map numpad keys to these buttons, but I was informed by my stream viewers that very few people have a full size keyboard with a numpad anymore.

The pen comes with two buttons as well. Unlike the hot-keys on the side of the tablet, these are barely customizable. I was only able to assign mouse clicks to them (right, left, scroll wheel click, etc) and no other hotkeys. I have the alt key mapped to my pen button on my Cintiq, enabling me to color pick with a single click of the pen. The other button is mapped to the tablet menu for easy display switches. Not having this level of customization was a bit of a bummer, but I just ended up mapping the alt key to a new button on my Razer Tartarus and moved on.

The pen was very similar in size to my Wacom pen, but significantly lighter. It also rattled slightly when shaken, but on inspection this was just the buttons clicking against the outer case rather than an internal issue. The pen is made from a single material with a smooth plastic finish. I would have liked a rubber-like grip like the one on the Wacom pen for better handling, but it still worked fine without it.

Despite not being able to calibrate the pen for the display tablet, the cursor offset was minimal. It took me a while at the beginning to get used to the slight difference to my current tablet, but it was easy to get used to it and I was able to smoothly ink and color with the tablet. The screen surface was very smooth, reminding me more of an iPad surface. The included smudge guard glove helped mitigate any slipping or sliding this might have caused, enabling me to draw smoothly. Like with the cursor offset, it took me a while to get used to the different pressure sensitivity of the tablet, but I adapted quickly.

So what do I think of it?

Overall, drawing felt different on this tablet, but I can easily see myself getting used to the quirks of the tablet with time. Most of the issues I had were QoL things I am used to from my existing tablet.

But I think that’s where the most important argument for the tablet comes in: the price.

I love my Cintiq. I can do professional-grade work on it, and I liked it so much that I rebought the same model after my old one developed screen issues. But it still costs more than 1000 €, even after being on the market for over 10 years (for reference, I bought it refurbished for about 1.500 € in 2014). The Artisul D16, on the other hand, costs a bit more than 200 €. That is a significant price difference! I often get asked by aspiring artists what tools I use and while I am always honest with them, I also preface it by saying that they should not invest in a Cintiq if they are just starting out. They are high-quality professional tools and have a price point that reflects that. You do not need these expensive tools to create art. You can get great results with much cheaper alternatives! I do this for a living so I can justify paying extra for the QoL upgrades the Cintiq offers me, but I have no illusion that they are an accessible tool for most people.

I can recommend the Artisul D16 as a beginner screen tablet for people who are just getting into art or want to try a display tablet for once. I wouldn’t give up my Cintiq for it, but I can appreciate the value it offers for the competitive price point. If you want to get an Artisul D16 for yourself, you can click this link to check out their shop!

AMAZON.US: https://www.amazon.com/dp/B07TQLGC81

AMAZON.JP: https://www.amazon.co.jp/dp/B07T6ZT84V

AMAZON.MX: https://www.amazon.com.mx/dp/B07T6ZT84V

Once again thank you to the Artisul team for giving me the opportunity to review their display tablet!


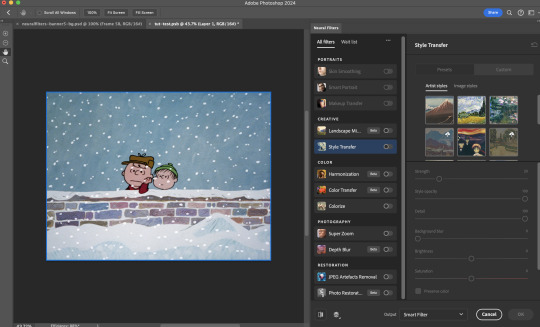

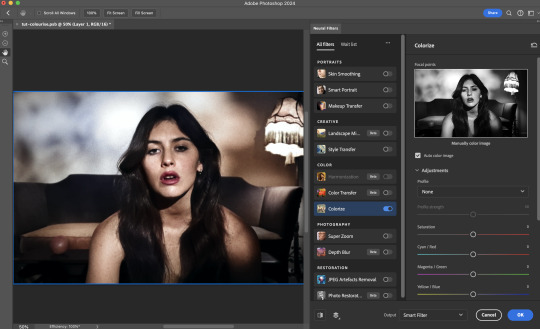

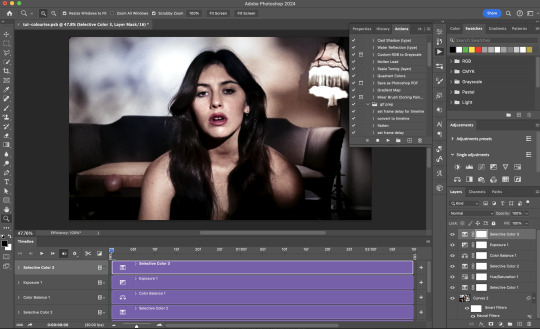

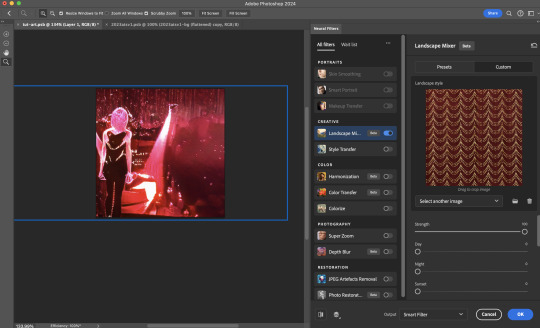

Neural Filters Tutorial for Gifmakers by @antoniosvivaldi

Hi everyone! To celebrate my blog’s 10th birthday, I’m delighted to reveal my highly anticipated gifmaking tutorial using Neural Filters - a very powerful collection of filters that has really broadened my scope in gifmaking over the past 12 months.

Before I get into this tutorial, I want to thank @laurabenanti, @maines, @cobbbvanth, and @cal-kestis for their unconditional support throughout my journey investigating the Neural Filters, and for their valuable input on the rendering performance!

In this tutorial, I will outline what the Photoshop Neural Filters do and how I use them in my workflow - multiple examples will be provided for better clarity. Finally, I will talk about some known performance issues with the filters & some feasible workarounds.

Tutorial Structure:

Meet the Neural Filters: What they are and what they do

Why I use Neural Filters? How I use Neural Filters in my giffing workflow

Getting started: The giffing workflow in a nutshell and installing the Neural Filters

Applying Neural Filters onto your gif: Making use of the Neural Filters settings; with multiple examples

Testing your system: recommended if you’re using Neural Filters for the first time

Rendering performance: Common Neural Filters performance issues & workarounds

For quick reference, here are the examples that I will show in this tutorial:

Example 1: Image Enhancement | improving the image quality of gifs prepared from highly compressed video files

Example 2: Facial Enhancement | enhancing an individual's facial features

Example 3: Colour Manipulation | colourising B&W gifs for a colourful gifset

Example 4: Artistic effects | transforming landscapes & adding artistic effects onto your gifs

Example 5: Putting it all together | my usual giffing workflow using Neural Filters

What you need & need to know:

Software: Photoshop 2021 or later (recommended: 2023 or later)*

Hardware: 8GB of RAM; having a supported GPU is highly recommended*

Difficulty: Advanced (requires a lot of patience); knowledge in gifmaking and using video timeline assumed

Key concepts: Smart Layer / Smart Filters

Benchmarking your system: Neural Filters test files**

Supplementary materials: Tutorial Resources / Detailed findings on rendering gifs with Neural Filters + known issues***

*I primarily gif on an M2 Max MacBook Pro running Photoshop 2024, but I also have experience gifmaking on a few other Mac models from 2012 to 2023.

**Using Neural Filters can be resource intensive, so it’s helpful to run the test files yourself. I’ll outline some known performance issues with Neural Filters and workarounds later in the tutorial.

***This supplementary page contains additional Neural Filters benchmark tests and instructions, as well as more information on the rendering performance (for Apple Silicon-based devices) when subject to heavy Neural Filters gifmaking workflows

Tutorial under the cut. Like / Reblog this post if you find this tutorial helpful. Linking this post as an inspo link will also be greatly appreciated!

1. Meet the Neural Filters!

Neural Filters are powered by Adobe's machine learning engine, Adobe Sensei. They offer a non-destructive way to streamline workflows that would've been difficult and/or tedious to do manually.

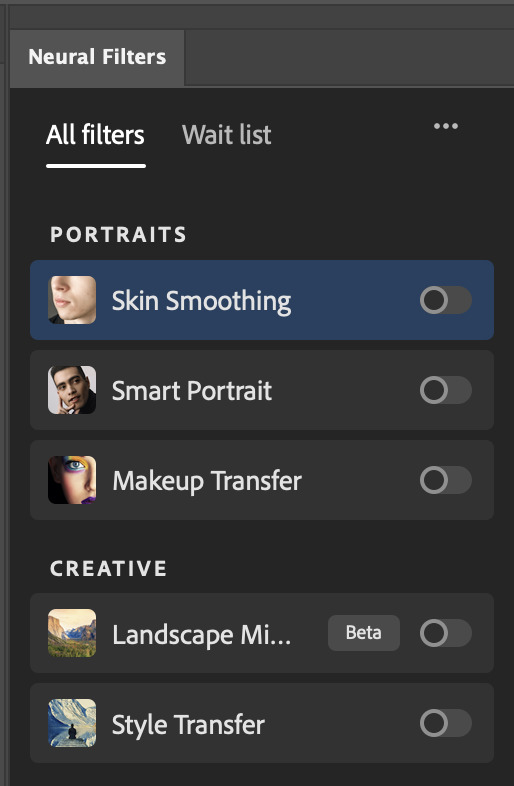

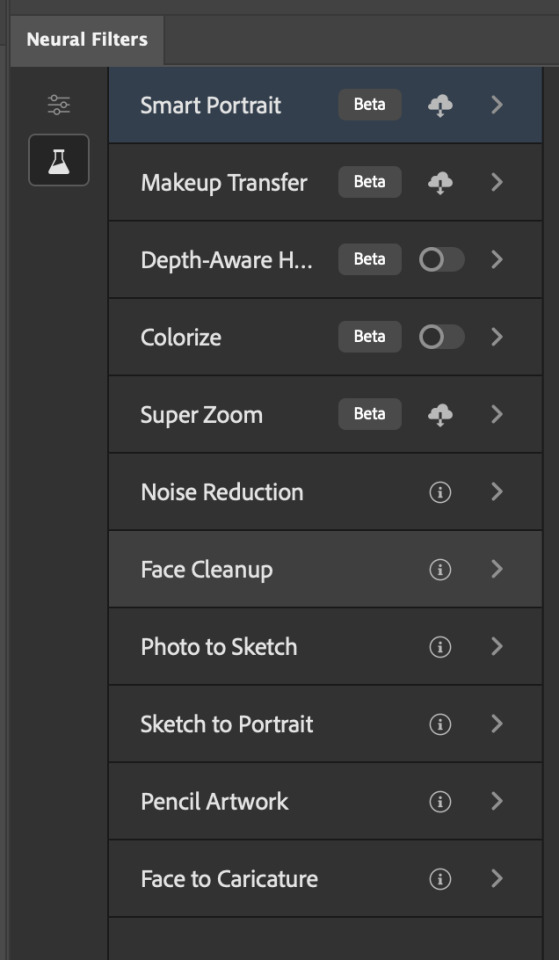

Here are the Neural Filters available in Photoshop 2024:

Skin Smoothing: Removes blemishes on the skin

Smart Portrait: This is a cloud-based filter that allows you to change the mood, facial age, hair, etc. using the sliders+

Makeup Transfer: Applies the makeup (from a reference image) to the eyes & mouth area of your image

Landscape Mixer: Transforms the landscape of your image (e.g. seasons & time of the day, etc), based on the landscape features of a reference image

Style Transfer: Applies artistic styles e.g. texturings (from a reference image) onto your image

Harmonisation: Adjusts the colour balance of your image to match the lighting of the background image+

Colour Transfer: Applies the colour scheme (of a reference image) onto your image

Colourise: Adds colours onto a B&W image

Super Zoom: Zoom / crop an image without losing resolution+

Depth Blur: Blurs the background of the image

JPEG Artefacts Removal: Removes artefacts caused by JPEG compression

Photo Restoration: Enhances image quality & facial details

+These three filters aren't used in my giffing workflow. The cloud-based nature of Smart Portrait leads to disjointed-looking frames. For Harmonisation, applying it to a gif causes a Neural Filters timeout error. Finally, Super Zoom does not currently support output as a Smart Filter.

If you're running Photoshop 2021 or an earlier version of Photoshop 2022, you will see a smaller selection of Neural Filters:

Things to be aware of:

You can apply up to six Neural Filters at the same time

Filters where you can use your own reference images: Makeup Transfer (portraits only), Landscape Mixer, Style Transfer (not available in Photoshop 2021), and Colour Transfer

Later iterations of Photoshop 2023 & newer: The first three default presets for Landscape Mixer and Colour Transfer are currently broken.

2. Why I use Neural Filters?

Here are my four main Neural Filters use cases in my gifmaking process. In each use case I'll list out the filters that I use:

Enhancing Image Quality:

Common wisdom is to find the highest quality video to gif from for a media release & avoid YouTube whenever possible. However, for smaller / niche media (e.g. new & upcoming musical artists), prepping gifs from highly compressed YouTube videos is inevitable.

So how do I get around this? I have found Neural Filters pretty handy when it comes to both correcting issues from video compression & enhancing details in gifs prepared from these highly compressed video files.

Filters used: JPEG Artefacts Removal / Photo Restoration

Facial Enhancement:

When I prepare gifs from highly compressed videos, something I like to do is to enhance the facial features. This is again useful when I make gifsets from compressed videos & want to fill up my final panel with a close-up shot.

Filters used: Skin Smoothing / Makeup Transfer / Photo Restoration (Facial Enhancement slider)

Colour Manipulation:

Neural Filters are a powerful way to do advanced colour manipulation - whether I want to quickly transform the colour scheme of a gif or turn a B&W clip into something colourful.

Filters used: Colourise / Colour Transfer

Artistic Effects:

This is one of my favourite things to do with Neural Filters! I enjoy using the filters to create artistic effects by feeding in textures that I've downloaded as reference images. I also enjoy using these filters to transform the overall atmosphere of my composite gifs. The gifsets where I've leveraged Neural Filters for artistic effects can be found under this tag on usergif.

Filters used: Landscape Mixer / Style Transfer / Depth Blur

How I use Neural Filters over different stages of my gifmaking workflow:

I want to outline how I use different Neural Filters throughout my gifmaking process. This can be roughly divided into two stages:

Stage I: Enhancement and/or Colourising | Takes place early in my gifmaking process. I process a large amount of component gifs by applying Neural Filters for enhancement purposes and adding some base colourings.++

Stage II: Artistic Effects & more Colour Manipulation | Takes place when I'm assembling my component gifs in the big PSD / PSB composition file that will be my final gif panel.

I will walk through this in more detail later in the tutorial.

++I personally like to keep the component gifs in their original resolution (a mixture of 1080p & 4K), to get the best possible results from the Neural Filters and have more flexibility later on in my workflow. I resize & sharpen these gifs after they're placed into my final PSD composition files in Tumblr dimensions.
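To give a sense of what resizing to Tumblr dimensions does to these frames, here is a small Python sketch (an illustration only, not part of the Photoshop workflow). It assumes the 540px panel width used later in this tutorial and standard 16:9 sources (1920×1080 for 1080p, 3840×2160 for 4K UHD); the function name `scaled_size` is hypothetical, and Photoshop's own rounding may differ by a pixel.

```python
def scaled_size(width: int, height: int, target_width: int = 540) -> tuple:
    """Return (target_width, new_height), keeping the original aspect ratio.

    The height is rounded to the nearest whole pixel (assumed rounding rule).
    """
    new_height = round(height * target_width / width)
    return target_width, new_height


# Assumed 16:9 source resolutions for the two kinds of component gifs.
sources = {"1080p": (1920, 1080), "4K": (3840, 2160)}
for name, (w, h) in sources.items():
    new_w, new_h = scaled_size(w, h)
    # How many source pixels end up squeezed into each output pixel.
    ratio = (w * h) / (new_w * new_h)
    print(f"{name}: {new_w}x{new_h}, about {ratio:.1f}x fewer pixels")
```

Both sources land at 540×304 px (1080 × 540 / 1920 = 303.75), so a 1080p frame carries roughly 12.6 times as many pixels as the final panel and a 4K frame roughly four times that. This is the extra detail the Neural Filters get to work with when you keep the component gifs at full resolution until the final resize.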

3. Getting started

The essence is to output Neural Filters as a Smart Filter on the smart object when working with the Video Timeline interface. Your workflow will contain the following steps:

Prepare your gif

In the frame animation interface, set the frame delay to 0.03s and convert your gif to the Video Timeline

In the Video Timeline interface, go to Filter > Neural Filters and output to a Smart Filter

Flatten or render your gif (either approach is fine). To flatten your gif, play the "flatten" action from the gif prep action pack. To render your gif as a .mov file, go to File > Export > Render Video & use the following settings.
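The frame delays used in this workflow (0.03s while editing, corrected to 0.05s before uploading to Tumblr) translate into playback speed as follows. This Python sketch is only an illustration: it assumes a hypothetical 60-frame gif and relies on the GIF89a convention that each frame's delay is stored as a whole number of hundredths of a second, so 0.03s and 0.05s become 3 and 5 units.

```python
def gif_timing(frame_count: int, delay_s: float) -> dict:
    """Playback numbers for a gif whose frames all share the same delay."""
    # GIF89a stores delays in hundredths of a second (centiseconds).
    delay_cs = round(delay_s * 100)
    return {
        "delay_centiseconds": delay_cs,
        "frames_per_second": 100 / delay_cs,
        "duration_seconds": frame_count * delay_cs / 100,
    }


# Hypothetical 60-frame gif at the editing delay and the upload delay.
for delay in (0.03, 0.05):
    print(delay, gif_timing(60, delay))
```

The same 60 frames play for 1.8 seconds at 0.03s (about 33 fps) and 3 seconds at 0.05s (20 fps), so a gif will look noticeably slower once the delay is corrected for upload.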

Setting up:

o.) To get started, prepare your gifs the usual way - whether you screencap or clip videos. You should see your prepared gif in the frame animation interface as follows:

Note: As mentioned earlier, I keep the gifs in their original resolution right now because working with a larger dimension document allows more flexibility later on in my workflow. I have also found that I get higher quality results working with more pixels. I eventually do my final sharpening & resizing when I fit all of my component gifs to a main PSD composition file (that's of Tumblr dimension).

i.) To use Smart Filters, convert your gif to a Smart Video Layer.

As an aside, I like to work with everything in 0.03s until I finish everything (then correct the frame delay to 0.05s when I upload my panels onto Tumblr).

For convenience, I use my own action pack to first set the frame delay to 0.03s (highlighted in yellow) and then convert to timeline (highlighted in red) to access the Video Timeline interface. To play an action, press the play button highlighted in green.

Once you've converted this gif to a Smart Video Layer, you'll see the Video Timeline interface as follows:

ii.) Select your gif (now as a Smart Layer) and go to Filter > Neural Filters

Installing Neural Filters:

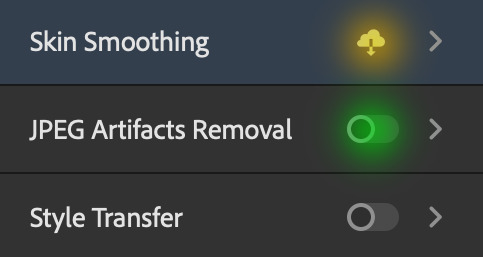

Install the individual Neural Filters that you want to use. If the filter isn't installed, it will show a cloud symbol (highlighted in yellow). If the filter is already installed, it will show a toggle button (highlighted in green)

When you toggle this button, the Neural Filters preview window will look like this (where the toggle button next to the filter that you use turns blue)

4. Using Neural Filters

Once you have installed the Neural Filters that you want to use in your gif, you can toggle on a filter and play around with the sliders until you're satisfied. Here I'll walk through multiple concrete examples of how I use Neural Filters in my giffing process.

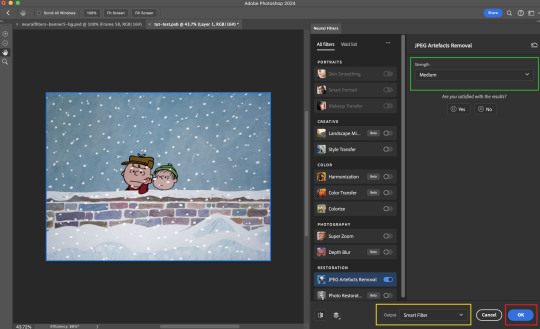

Example 1: Image enhancement | sample gifset

This is my typical Stage I Neural Filters gifmaking workflow. When giffing older or more niche media releases, my main concern is the video compression that leads to a lot of artefacts in the screencapped / video clipped gifs.

To fix the artefacts from compression, I go to Filter > Neural Filters and toggle on the JPEG Artefacts Removal filter. Then I choose the strength of the filter (boxed in green), output this as a Smart Filter (boxed in yellow), and press OK (boxed in red).

Note: The filter has to finish processing before you can press the OK button!

After applying the Neural Filters, you'll see "Neural Filters" under the Smart Filters property of the smart layer

Flatten / render your gif

Example 2: Facial enhancement | sample gifset

This is my routine use case during my Stage I Neural Filters gifmaking workflow. For musical artists (e.g. Maisie Peters), YouTube is often the only place where I'm able to find videos to prepare gifs from. However, even the highest resolution video available on YouTube is highly compressed.

Go to Filter > Neural Filters and toggle on Photo Restoration. If Photoshop recognises faces in the image, there will be a "Facial Enhancement" slider under the filter settings.

Play around with the Photo Enhancement & Facial Enhancement sliders. You can also expand the "Adjustment" menu to make additional adjustments, e.g. removing noise and reducing different types of artefacts.

Once you're happy with the results, press OK and then flatten / render your gif.

Example 3: Colour Manipulation | sample gifset

Want to make a colourful gifset but the source video is in B&W? This is where Colourise from Neural Filters comes in handy! The same colourising approach is also very helpful for colouring poorly lit scenes, as detailed in this tutorial.

Here's a B&W gif that we want to colourise:

Highly recommended: add some adjustment layers onto the B&W gif to improve the contrast & depth. This will give you higher quality results when you colourise your gif.

Go to Filter > Neural Filters and toggle on Colourise.

Make sure "Auto colour image" is enabled.

Play around with further adjustments e.g. colour balance, until you're satisfied then press OK.

Important: When you colourise a gif, you need to double check that the resulting skin tone is accurate to real life. I personally go to Google Images and search up photoshoots of the individual / character that I'm giffing for quick reference.

Add additional adjustment layers until you're happy with the colouring of the skin tone.

Once you're happy with the additional adjustments, flatten / render your gif. And voila!

Note: For Colour Manipulation, I use Colourise in my Stage I workflow and Colour Transfer in my Stage II workflow to do other types of colour manipulations (e.g. transforming the colour scheme of the component gifs)

Example 4: Artistic Effects | sample gifset

This is where I use Neural Filters for the bulk of my Stage II workflow: the most enjoyable stage in my editing process!

Normally I would be working with my big composition files with multiple component gifs inside it. To begin the fun, drag a component gif (in PSD file) to the main PSD composition file.

Resize this gif in the composition file until you're happy with the placement

Duplicate this gif. Sharpen the bottom layer (highlighted in yellow), and then select the top layer (highlighted in green) & go to Filter > Neural Filters

I like to use Style Transfer and Landscape Mixer to create artistic effects from Neural Filters. In this particular example, I've chosen Landscape Mixer

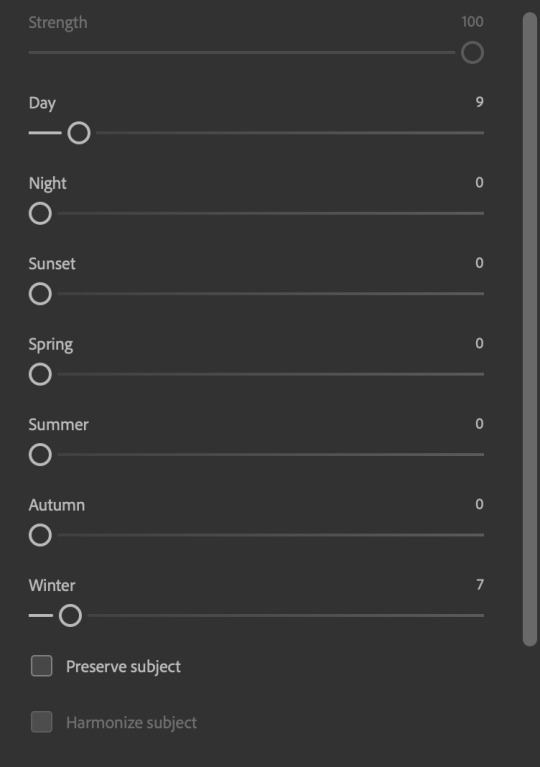

Select a preset or feed a custom image to the filter (here I chose a texture that I have on my computer)

Play around with the different sliders e.g. time of the day / seasons

Important: uncheck "Harmonise Subject" & "Preserve Subject" - these two settings are known to cause performance issues when you render a multiframe smart object (e.g. for a gif)

Once you're happy with the artistic effect, press OK

To ensure you preserve the actual subject you want to gif (bc Preserve Subject is unchecked), add a layer mask onto the top layer (with Neural Filters) and mask out the facial region. You might need to play around with the Layer Mask Position keyframes or Rotoscope your subject in the process.

After you're happy with the masking, flatten / render this composition file and voila!

Example 5: Putting it all together | sample gifset

Let's recap on the Neural Filters gifmaking workflow and where Stage I and Stage II fit in my gifmaking process:

i. Preparing & enhancing the component gifs

Prepare all component gifs and convert them to smart layers

Stage I: Add base colourings & apply Photo Restoration / JPEG Artefacts Removal to enhance the gif's image quality

Flatten all of these component gifs and convert them back to Smart Video Layers (this process can take a lot of time)

Some of these enhanced gifs will be Rotoscoped so this is done before adding the gifs to the big PSD composition file

ii. Setting up the big PSD composition file

Make a separate PSD composition file (Ctrl / Cmd + N) in Tumblr dimensions (e.g. 540px in width)

Drag all of the component gifs used into this PSD composition file

Enable Video Timeline and trim the work area

In the composition file, resize / move the component gifs until you're happy with the placement & sharpen these gifs if you haven't already done so

Duplicate the layers that you want to use Neural Filters on

iii. Working with Neural Filters in the PSD composition file

Stage II: Neural Filters to create artistic effects / more colour manipulations!

Mask the smart layers with Neural Filters to both preserve the subject and avoid colouring issues from the filters

Flatten / render the PSD composition file: the more component gifs in your composition file, the longer the export will take. (I prefer to render the composition file into a .mov clip to avoid overwriting a file that I've spent effort putting together.)

Note: In some of my layout gifsets (where I've heavily used Neural Filters in Stage II), the rendering time for the panel took more than 20 minutes. This is one of the rare instances where I was maxing out my computer's memory.

Useful things to take note of:

Important: If you're using Neural Filters for Colour Manipulation or Artistic Effects, you need to take a lot of care ensuring that the skin tone of nonwhite characters / individuals is accurately coloured

Use the Facial Enhancement slider from Photo Restoration in moderation: if you max out the slider value, you risk oversharpening your gif later on in your gifmaking workflow

You will get higher quality results from Neural Filters by working with larger image dimensions: This gives Neural Filters more pixels to work with. You also get better quality results by feeding higher resolution reference images to the Neural Filters.

Makeup Transfer is more stable when the person / character has minimal motion in your gif

You might get unexpected results from Landscape Mixer if you feed it a reference image that doesn't feature a distinctive landscape. This is not always a bad thing: for instance, I have used this texture as a reference image for Landscape Mixer to create the shimmery effects seen in this gifset

5. Testing your system

If this is the first time you're applying Neural Filters directly onto a gif, it will be helpful to test out your system yourself. This will help:

Gauge the expected rendering time that you'll need to wait for your gif to export, given specific Neural Filters that you've used

Identify potential performance issues when you render the gif: this is important and will determine whether you will need to fully playback your gif before flattening / rendering the file.

Understand how your system's resources are being utilised: Inputs from Windows PC users & Mac users alike are welcome!

About the Neural Filters test files:

Contains six distinct files, each using different Neural Filters

Two sizes of test files: one copy in full HD (1080p) and another copy downsized to 540px

One folder containing the flattened / rendered test files

How to use the Neural Filters test files:

What you need:

Photoshop 2022 or newer (recommended: 2023 or later)

Install the following Neural Filters: Landscape Mixer / Style Transfer / Colour Transfer / Colourise / Photo Restoration / Depth Blur

Recommended for some Apple Silicon-based MacBook Pro models: Enable High Power Mode

How to use the test files:

For optimal performance, close all background apps

Open a test file

Flatten the test file into frames (load this action pack & play the “flatten” action)

Take note of the time it takes until you’re directed to the frame animation interface

Compare the rendered frames to the expected results in this folder: check that all of the frames look the same. If they don't, you will need to play back the test file in full before flattening the file.†

Re-run the test file without the Neural Filters and take note of how long it takes before you're directed to the frame animation interface

Recommended: Take note of how your system is utilised during the rendering process (more info here for macOS users)

†This is a performance issue known as flickering that I will discuss in the next section. If you come across this, you'll have to play back a gif where you've used Neural Filters (on the video timeline) in full, prior to flattening / rendering it.
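Once you have timed a test file with and without the Neural Filters, a small helper like the hypothetical `neural_filter_overhead` below turns the two readings into comparable numbers. It is a sketch under stated assumptions: the timings are stopwatch readings taken by hand, the frame count is whatever your test file contains, and the numbers in the usage line are made-up placeholders, not benchmark results.

```python
def neural_filter_overhead(with_filters_s: float, without_filters_s: float,
                           frame_count: int) -> dict:
    """Compare flatten times for the same gif with and without Neural Filters.

    Both times are the seconds until the frame animation interface appears,
    timed by hand as in the test steps above.
    """
    if without_filters_s <= 0 or frame_count <= 0:
        raise ValueError("times and frame count must be positive")
    extra = with_filters_s - without_filters_s
    return {
        "extra_seconds": extra,                        # time added by the filters
        "extra_seconds_per_frame": extra / frame_count,
        "slowdown_factor": with_filters_s / without_filters_s,
    }


# Placeholder readings for illustration only.
print(neural_filter_overhead(with_filters_s=240.0, without_filters_s=20.0, frame_count=60))
```

The per-frame figure is the useful one to carry over to your own gifs: multiply it by the frame count of a new gif to get a rough idea of how long its export might take with the same filters on the same machine.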

Factors that could affect the rendering performance / time (more info):

The number of frames, dimension, and colour bit depth of your gif

If you use Neural Filters with facial recognition features, the rendering time will be affected by the number of characters / individuals in your gif

Most resource intensive filters (powered by largest machine learning models): Landscape Mixer / Photo Restoration (with Facial Enhancement) / and JPEG Artefacts Removal

Least resource intensive filters (smallest machine learning models): Colour Transfer / Colourise

The number of Neural Filters that you apply at once / The number of component gifs with Neural Filters in your PSD file

Your system: system memory, the GPU, and the architecture of the system's CPU+++

+++ Rendering a gif with Neural Filters demands a lot of system memory & GPU horsepower. Rendering will be faster & more reliable on newer computers, as these systems have CPU & GPU with more modern instruction sets that are geared towards machine learning-based tasks.

Additionally, the unified memory architecture of Apple Silicon M-series chips has been found to be quite efficient at processing Neural Filters.
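To see why frame count, dimensions and colour bit depth weigh so heavily on rendering, here is a back-of-the-envelope Python sketch of the raw, uncompressed pixel data in a gif's frames. It assumes 4 channels per pixel (RGB plus transparency), a hypothetical 60-frame gif, and 540×304 px for the downsized copy (a 16:9 frame scaled to 540px wide). Photoshop's real memory use also includes caches, history states and the machine learning models themselves, so treat these numbers as a lower-bound illustration rather than a prediction.

```python
def raw_frame_bytes(width: int, height: int, frames: int,
                    bits_per_channel: int = 8, channels: int = 4) -> int:
    """Uncompressed size of all frames: width x height x channels x bytes per channel."""
    bytes_per_channel = bits_per_channel // 8
    return width * height * channels * bytes_per_channel * frames


def mib(n_bytes: int) -> float:
    """Convert bytes to mebibytes."""
    return n_bytes / (1024 ** 2)


# Hypothetical 60-frame gif at the two test-file sizes, in 8-bit and 16-bit.
sizes = {"1080p": (1920, 1080), "540px": (540, 304)}
for label, (w, h) in sizes.items():
    for bits in (8, 16):
        print(f"{label} {bits}-bit: {mib(raw_frame_bytes(w, h, 60, bits)):.0f} MiB")
```

For 60 frames, the 1080p copy works out to roughly 475 MiB of raw pixels at 8-bit and twice that at 16-bit, while the 540px copy needs under 40 MiB. The size scales linearly with frame count and bit depth and with the square of the scale factor, which is why the workarounds below (fewer frames, 8-bit, downsizing) help.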

6. Performance issues & workarounds

Common Performance issues:

Below, I will discuss several common issues related to rendering or exporting a multi-frame smart object (e.g. your composite gif) that uses Neural Filters. These are commonly caused by insufficient system memory and/or GPU resources.

Flickering frames: in the flattened / rendered file, Neural Filters aren't applied to some of the frames+-+

Scrambled frames: the frames in the flattened / rendered file aren't in order

Neural Filters exceeded the timeout limit error: this is normally a software related issue

Long export / rendering time: long rendering time is expected in heavy workflows

Laggy Photoshop / system interface: having to wait quite a long time to preview the next frame on the timeline

Issues with Landscape Mixer: using the filter gives ill-defined results (common in older systems)--

Workarounds:

Workarounds that could reduce unreliable rendering performance & long rendering time:

Close other apps running in the background

Work with smaller colour bit depth (i.e. 8-bit rather than 16-bit)

Downsize your gif before converting to the video timeline-+-

Try to keep the number of frames as low as possible

Avoid stacking multiple Neural Filters at once. Try applying & rendering the filters that you want one by one

Specific workarounds for specific issues:

How to resolve flickering frames: If you come across flickering, you will need to play back your gif on the video timeline in full to find the frames where the filter isn't applied. You will need to select all of the frames to allow Photoshop to reprocess them before you render your gif.+-+

What to do if you come across the Neural Filters timeout error? This error is caused by several incompatible Neural Filters, e.g. Harmonisation (both the filter itself and as a setting in Landscape Mixer) and Scratch Reduction in Photo Restoration. It is also caused by trying to stack multiple Neural Filters with facial recognition features.

If the timeout error is caused by stacking multiple filters, a feasible workaround is to apply the Neural Filters that you want to use one by one over multiple rendering sessions, rather than all of them in one go.

+-+This is a very common issue for Apple Silicon-based Macs. Flickering happens when a gif with Neural Filters is rendered without being previously played back in the timeline.

This issue is likely related to the memory bandwidth & the GPU cores of the chips, because not all Apple Silicon-based Macs exhibit this behaviour (i.e. devices equipped with Max / Ultra M-series chips are mostly unaffected).

-- As mentioned in the supplementary page, Landscape Mixer requires a lot of GPU horsepower to be fully rendered. For older systems (pre-2017 builds), there are no workarounds other than to avoid using this filter.

-+- For smaller dimensions, the size of the machine learning models powering the filters plays an outsized role in the rendering time (i.e. there's only a marginal reduction in rendering time when downsizing a 1080p file to Tumblr dimensions). If you use filters powered by larger models, e.g. Landscape Mixer and Photo Restoration, you will need to be very patient when exporting your gif.
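One way to picture this footnote is a toy cost model. In it, each frame pays a fixed cost for running the filter's model plus a cost that scales with pixel count. The constants below are made up for illustration and were not measured in Photoshop. The 540 × 304 size is only an assumed Tumblr-sized target. The sketch shows that when the fixed per-frame cost dominates, cutting the pixel count by more than an order of magnitude saves comparatively little time.

```python
def estimated_render_seconds(frames: int, width: int, height: int,
                             model_overhead_s: float,
                             seconds_per_megapixel: float) -> float:
    """Toy model (hypothetical): fixed per-frame model cost + cost per megapixel."""
    megapixels = width * height / 1_000_000
    return frames * (model_overhead_s + seconds_per_megapixel * megapixels)


# Hypothetical constants for a filter powered by a large model.
OVERHEAD_S = 2.0
PER_MP_S = 0.5

full = estimated_render_seconds(60, 1920, 1080, OVERHEAD_S, PER_MP_S)
small = estimated_render_seconds(60, 540, 304, OVERHEAD_S, PER_MP_S)
pixel_ratio = (1920 * 1080) / (540 * 304)

print(f"pixels reduced {pixel_ratio:.1f}x, time reduced {full / small:.2f}x")
```

If the fixed cost were small relative to the per-pixel cost, the two ratios would move closer together. In this toy model, the size of the model sets the floor on rendering time.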

7. More useful resources on using Neural Filters

Creating animations with Neural Filters effects | Max Novak

Using Neural Filters to colour correct by @edteachs

I hope this is helpful! If you have any questions or need any help related to the tutorial, feel free to send me an ask 💖



Writing Notes: Cookbook

Whether you want to turn your own recipes into a cookbook as a family keepsake, or work with a publisher to get the most viral recipes from your blog onto paper and into bookstores, making a cookbook is often a fun but work-intensive process.

How to Make a Cookbook

The process of making a cookbook will depend on your publishing route, but in general you’ll need to work through the following steps:

Concept: The first step of making a cookbook is to figure out what kind of cookbook this will be. Your cookbook can focus on a single ingredient, meal, region, or culture. It can be an educational tome for beginners, or a slapdash collection of family favorites for your relatives. If you’re looking to get your cookbook published, a book proposal is a necessary step towards getting a book deal, and can also help you pin down your concept.

Compile recipes: If you’ve been dreaming of writing a cookbook, chances are you probably already know some recipes that have to be included. Make a list of those important recipes and use that as a jumping-off point to brainstorm how your cookbook will be organized and what other recipes need to be developed. If you’re compiling a community cookbook, reach out to your community members and assemble their recipes.

Outline: Based on your guiding concept and key recipes, make a rough table of contents. Possibly the most common way to divide a cookbook is into meals (appetizers, breakfast, lunch, dinner) but cookbooks can also be divided by season, raw ingredients (vegetables, fish, beef), cooking techniques, or some other narrative structure.

Recipe development: Flesh out your structure by developing beyond your core recipes, if needed, and fine-tuning those recipes which need a bit more work.

Recipe testing: Hire recipe testers, or enlist your friends and family, to test out your recipes in their home kitchens. Have them let you know what worked and didn’t work, or what was confusing.

Write the surrounding material: Most cookbooks include some writing other than the recipes. This may include chapter introductions and blurbs for each recipe.

Photography and layout: If your book includes photography, at some point there will be photo shoots where the food will have to be prepared and styled for camera. Traditional publishing houses will likely want to hire stylists and photographers who specialize in food photography. Once the images and text are both ready, a book designer will arrange them together and make the cover design, but you can also make your own cookbook design using software like InDesign or old-school DIY-style, with paper, scissors, and a photocopier.

Editing: If you’re working with a publisher, there may be several rounds of back and forth as your editor works with you to fine-tune the recipes and text. The book will then be sent to a copy editor, who will go through the entire cookbook looking for grammar and style issues, and then to an indexer for finishing touches. If you’re self-publishing, give a rough draft of your book to friends and family members to proofread.

Printing: After everything is laid out and approved, your cookbook is ready to be printed. If you’re printing your cookbook yourself, you can go to a copy shop to get it spiral bound, or send it off to a printer for more options.

Common Types of Cookbooks

More so than any other kind of nonfiction book, cookbooks lend themselves to self-publishing. Of course, cookbook publishing is also a huge industry, and a professional publisher might be the best route for your book depending on the scope and your reach as a chef.

Self-published: This is a cookbook made up of your own recipes, which you might give as gifts to family and friends. You can easily self-publish a cookbook online as an individual. But if having a print book is important to you, there are many options. You can print and staple together a short cookbook, zine-style. Many copy shops also offer options for wire-bound cookbooks, and there are resources online that will print bookstore-quality softcover or hardcover books for a fee.

Community cookbooks: A special subset of self-published cookbooks made up of recipes from multiple individuals, usually to raise money for a cause or organization. Working with a group has the advantage of a large pool of recipes and testers, and is a great way to share your recipes with a larger audience while also supporting a cause you believe in.

Through a publishing house: If you think your cookbook has a wider audience, you may want to seek out a mainstream publishing house. Get a literary agent who can connect you with publishers who are interested in your cookbook. Large publishing houses don’t usually accept pitches from individuals, but you can reach out to small, local publishers without an agent as an intermediary. To publish a cookbook through a publishing house, you’ll typically need a book proposal outlining your concept, audience, and budget.

Things to Consider Before Making a Cookbook

Before embarking on your cookbook project, it’s a good idea to get organized, and to figure out what kind of cookbook you want to make.

Photography: More so than other texts, cookbooks often include visual accompaniment. Beautiful pages of full-color photos are expensive, which is one reason publishers like to work with bloggers who can style and photograph their own food. Not all cookbooks need photos, however. Some of the most iconic cookbooks rely on illustrations, or words alone. Figure out what role, if any, visuals will play in your book.

Audience: Are you turning recipe cards into a keepsake family cookbook, or selling this cookbook nationwide? Your intended audience will greatly influence how you write and publish your cookbook, whether it’s vegans, college students, or owners of pressure cookers. You’ll need to consider your audience’s cooking skill level, desires, and where they buy their food.

Budget: Once you have a vision for what you want your cookbook to be, budget your time and resources. Do you need help to make this book? The answer is probably yes. Assemble a team of people who understand your vision and know what kind of commitment will be involved.

Source ⚜ More: Notes & References ⚜ Writing Resources PDFs



On Saturday, an Associated Press investigation revealed that OpenAI's Whisper transcription tool creates fabricated text in medical and business settings despite warnings against such use. The AP interviewed more than 12 software engineers, developers, and researchers who found the model regularly invents text that speakers never said, a phenomenon often called a “confabulation” or “hallucination” in the AI field.

Upon its release in 2022, OpenAI claimed that Whisper approached “human level robustness” in audio transcription accuracy. However, a University of Michigan researcher told the AP that Whisper created false text in 80 percent of public meeting transcripts examined. Another developer, unnamed in the AP report, claimed to have found invented content in almost all of his 26,000 test transcriptions.

The fabrications pose particular risks in health care settings. Despite OpenAI’s warnings against using Whisper for “high-risk domains,” over 30,000 medical workers now use Whisper-based tools to transcribe patient visits, according to the AP report. The Mankato Clinic in Minnesota and Children’s Hospital Los Angeles are among 40 health systems using a Whisper-powered AI copilot service from medical tech company Nabla that is fine-tuned on medical terminology.

Nabla acknowledges that Whisper can confabulate, but it also reportedly erases original audio recordings “for data safety reasons.” This could cause additional issues, since doctors cannot verify accuracy against the source material. And deaf patients may be highly impacted by mistaken transcripts since they would have no way to know if medical transcript audio is accurate or not.

The potential problems with Whisper extend beyond health care. Researchers from Cornell University and the University of Virginia studied thousands of audio samples and found Whisper adding nonexistent violent content and racial commentary to neutral speech. They found that 1 percent of samples included “entire hallucinated phrases or sentences which did not exist in any form in the underlying audio” and that 38 percent of those included “explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”
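The study's two percentages compound. Thirty-eight percent of the 1 percent of samples with hallucinated phrases works out to roughly 0.38 percent of all samples. Below is a minimal Python sketch of that arithmetic. The batch of 10,000 transcripts is hypothetical and is not a figure from the study. The sketch also assumes the study's rates would carry over to a new batch, which is a simplification.

```python
def expected_harmful_transcripts(n_samples: int,
                                 hallucination_rate: float = 0.01,
                                 harmful_share: float = 0.38) -> float:
    """Expected count of transcripts whose hallucinated phrases include explicit harms.

    Assumes the study's rates apply unchanged to a new batch (a simplification).
    """
    return n_samples * hallucination_rate * harmful_share


overall_rate = 0.01 * 0.38
print(f"{overall_rate:.2%} of all samples")         # 0.38% of all samples
print(round(expected_harmful_transcripts(10_000)))  # 38
```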

In one case from the study cited by AP, when a speaker described “two other girls and one lady,” Whisper added fictional text specifying that they “were Black.” In another, the audio said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.” Whisper transcribed it to, “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.”

An OpenAI spokesperson told the AP that the company appreciates the researchers’ findings and that it actively studies how to reduce fabrications and incorporates feedback in updates to the model.

Why Whisper Confabulates

The key to Whisper’s unsuitability in high-risk domains comes from its propensity to sometimes confabulate, or plausibly make up, inaccurate outputs. The AP report says, "Researchers aren’t certain why Whisper and similar tools hallucinate," but that isn't true. We know exactly why Transformer-based AI models like Whisper behave this way.

Whisper is based on technology that is designed to predict the next most likely token (chunk of data) that should appear after a sequence of tokens provided by a user. In the case of ChatGPT, the input tokens come in the form of a text prompt. In the case of Whisper, the input is tokenized audio data.
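Here is a toy illustration of why next-token prediction can confabulate. At each step, the decoder scores candidate tokens and must pick one. The score combines what the audio supports with what training has made likely to come next. The Python sketch below is not Whisper's actual architecture; the tokens, probabilities, and the simple multiply-and-pick-the-maximum rule are all hypothetical. When the audio is ambiguous and every candidate matches it about equally, the choice falls entirely to the learned prior. The output still looks like a confident word.

```python
def pick_next_token(prior: dict[str, float], audio_match: dict[str, float]) -> str:
    """Pick the candidate with the highest prior x audio-match score.

    prior: hypothetical probability of each token given the text so far.
    audio_match: hypothetical score (0 to 1) for how well each token fits the audio.
    There is no "unclear" option: some token is always returned.
    """
    scores = {tok: p * audio_match.get(tok, 0.0) for tok, p in prior.items()}
    return max(scores, key=scores.get)


prior = {"umbrella": 0.2, "bag": 0.3, "ball": 0.5}

clear_audio = {"umbrella": 0.9, "bag": 0.05, "ball": 0.05}  # audio clearly says "umbrella"
noisy_audio = {"umbrella": 0.5, "bag": 0.5, "ball": 0.5}    # audio fits everything equally

print(pick_next_token(prior, clear_audio))  # umbrella
print(pick_next_token(prior, noisy_audio))  # ball
```

In the noisy case, the word "ball" never came from the audio. It is simply the most likely continuation in the toy prior, emitted with no signal that anything was guessed.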