#Behavioral AI

Text

Stackpack Secures $6.3M to Reinvent Vendor Management in an AI-Driven Business Landscape

New Post has been published on https://thedigitalinsider.com/stackpack-secures-6-3m-to-reinvent-vendor-management-in-an-ai-driven-business-landscape/

In a world where third-party tools, services, and contractors form the operational backbone of modern companies, Stackpack has raised $6.3 million to bring order to the growing complexity.

Led by Freestyle Capital, the funding round includes support from Elefund, Upside Partnership, Nomad Ventures, Layout Ventures, MSIV Fund, and strategic angels from Intuit, Workday, Affirm, Snapdocs, and xAI.

The funding supports Stackpack’s mission to redefine how businesses manage their expanding vendor networks—an increasingly vital task as organizations now juggle hundreds or even thousands of external partners and platforms.

Turning Chaos into Control

Founded in 2023 by Sara Wyman, formerly of Etsy and Affirm, Stackpack was built to solve a problem she knew too well: modern companies are powered by vendors, yet most still track them with outdated methods—spreadsheets, scattered documents, and guesswork. With SaaS stacks ballooning and AI tools proliferating, unmanaged vendors become silent liabilities.

“Companies call themselves ‘people-first,’ but in reality, they’re becoming ‘vendor-first,’” said Wyman. “There are often 6x more vendors than employees. Yet there’s no system of record to manage that shift—until now.”

Stackpack gives finance and IT teams a unified, AI-powered dashboard that provides real-time visibility into vendor contracts, spend, renewals, and compliance risks. The platform automatically extracts key contract terms like auto-renewal clauses, flags overlapping subscriptions, and even predicts upcoming renewals buried deep in PDFs.

AI That Works Like a Virtual Vendor Manager

Stackpack’s Behavioral AI Engine acts as an intelligent assistant, surfacing hidden cost-saving opportunities, compliance risks, and critical dates. It not only identifies inefficiencies—it takes action, issuing alerts, initiating workflows, and providing recommendations across the vendor lifecycle.

For instance:

Renewal alerts prevent surprise charges.

Spend tracking identifies underused or duplicate tools.

Contract intelligence extracts legal and pricing terms from uploads or integrations with tools like Google Drive.

Approval workflows streamline onboarding and procurement.

This brings the kind of automation once reserved for enterprise procurement platforms like Coupa or SAP to startups and mid-sized businesses—at a fraction of the cost.
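The renewal-alert and duplicate-tool checks listed above could look roughly like the sketch below. This is only an illustration: the `Contract` fields, the `category` label, and the 30-day window are assumptions made for the example, not Stackpack's actual data model or logic.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Contract:
    # Hypothetical record; field names are assumptions for this sketch.
    vendor: str
    category: str        # e.g. "video-conferencing"
    renewal_date: date
    auto_renews: bool


def upcoming_renewals(contracts, today, window_days=30):
    """Return auto-renewing contracts that renew within the next window_days."""
    cutoff = today + timedelta(days=window_days)
    return [
        c for c in contracts
        if c.auto_renews and today <= c.renewal_date <= cutoff
    ]


def overlapping_categories(contracts):
    """Map each category served by more than one vendor to its sorted vendor list."""
    vendors_by_category = defaultdict(set)
    for c in contracts:
        vendors_by_category[c.category].add(c.vendor)
    return {
        category: sorted(vendors)
        for category, vendors in vendors_by_category.items()
        if len(vendors) > 1
    }
```

In practice the hard part is getting reliable `renewal_date` and `auto_renews` values out of contract documents; the checks themselves are simple once that data exists.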

A Timely Solution for a Growing Problem

Vendor management has become a boardroom issue. As more companies shift budgets from headcount to outsourced services, compliance and financial oversight have become harder to maintain. Stackpack’s early traction is proof of demand: just months after launch, it’s managing over 10,500 vendors and $510 million in spend across more than 50 customers, including Every Man Jack, Rho, Density, HouseRx, Fexa, and ZeroEyes.

“The CFO is the one left holding the bag when things go wrong,” said Brandon Lee, Accounting Manager at BizzyCar. “Stackpack means we don’t have to cross our fingers every quarter.”

Beyond Visibility: Enabling Smarter Vendor Decisions

Alongside its core platform, Stackpack is launching Requests & Approvals, a lightweight tool to simplify vendor onboarding and purchasing decisions—currently in beta. The feature is already attracting customers looking for faster, more agile alternatives to traditional procurement systems.

With a long-term vision to help companies not only manage but discover and evaluate vendors more strategically, Stackpack is laying the groundwork for a smarter, interconnected vendor ecosystem.

“Every vendor decision carries legal, financial, and security consequences,” said Dave Samuel, General Partner at Freestyle Capital. “Stackpack is building the intelligent infrastructure to manage these relationships proactively.”

The Future of Vendor Operations

As third-party ecosystems grow in size and complexity, Stackpack aims to transform vendor operations from a liability into a competitive advantage. Its AI-powered approach gives companies a modern operating system for vendor management—one that’s scalable, proactive, and deeply integrated into finance and operations.

“This isn’t just about cost control—it’s about running a smarter company,” said Wyman. “Managing your vendors should be as strategic as managing your talent. We’re giving companies the tools to make that possible.”

With fresh funding and a rapidly expanding customer base, Stackpack is poised to become the new standard for how modern businesses manage the partners powering their growth.

#2023#accounting#agile#ai#ai tools#AI-powered#alerts#amp#approach#automation#Behavioral AI#budgets#Building#Business#CFO#chaos#Companies#complexity#compliance#dashboard#dates#documents#EARLY#Ecosystems#employees#engine#enterprise#finance#financial#form

2 notes

Text

And Behavior AI is the behavioral mapping and proceedings of video game characters within their virtual worlds.

(I've seen some people in modding communities get angry at seeing "AI" in the title of some mods, so I wanted to make it clear that not all Behavioral "NPC" AI is in reference to Generative AI. Some of it is just the artificial "intelligence" of characters within a game, usually NPCs mapping and interacting with the game world so they function correctly. When in doubt, make sure to read things carefully and do research into where exactly the artificial intelligence is being created from. Good rule of thumb? If the intelligence has to "generate" a product or answer from "nothing", it's worthless. If a computer is being taught pattern recognition or a series of programmed instructions to run through (i.e. identifying cancer cells or getting blorbo from video game to walk through doors correctly), it isn't necessarily "generative".)

when talking about AI remember the different versions:

Analytical AI, is the one that can detect cancer and save lives

Generative AI is the one that steals art to make it worse, and gives you a wrong answer every time you google something

Weird Al is the one who got his ponysona to canonically have children with a pony from my little pony

#bethesda#modding#mods#behavioral ai#ai#behavioral ai vs generative ai#generative ai#modding community#ai bullshit#but identifying the differences between types of ai

33K notes

Text

and like I don't even blame the other gods for not doubting or thinking too much of apollo's personality change despite how obvious it should have been that something was up if you looked at the gap between his domains and his behavior and just thought about it for a minute

and that's because despite everything, the idea of apollo having learnt to lie is such an existentially terrifying concept that I do not blame their brains for refusing to even consider it

#it's like the godly equivalent of an ai gaining sentience#just#bone deep terror#pjo apollo#toa apollo#trials of apollo#toa#the trials of apollo#toa analysis#toa meta#also disclaimer: i think athena was 100% aware#i think those two are The ones who Completely understand the other's way of thinking#like legit perfect predictions of the others behavior 100 accuracy rate hasn't been wrong yet type of Understanding

219 notes

Text

Allow yourselves to believe that anything can be possible.

#a.b.e.l#divine machinery#archangel#automated#behavioral#ecosystem#learning#divine#machinery#ai#artificial intelligence#angels#guardian angel#angel#robot#computer#computer boy#cogito ergo sum#sentient objects#sentient ai

283 notes

Text

I MISSED THE WAY CHEN YI LITERALLY TONGUED AIDI DOWN?

#whats this behavior Nat????#kiseki dear to me#kiseki: dear to me#louis chiang#jiang dian#taiwanese bl#chiang tien#nat chen#ai di#chen bowen#chen yi#chenai#natlouis#chen yi x ai di#chenyi aidi

1K notes

Text

Sometimes I see people on here reply to posts extremely adjacently. Like if someone said "let's talk about horses" and another person responded with "I think what you mean is birds. Here are the things I've learned about birds" like no bro. We're talking about horses right now. We don't need the bird script you memorized for bird conversations. Start writing a horse script or giddyup on out of here

312 notes

Text

BEACH EPISODE LETSSS FUCKING GOOOOOO

#thats very autistic behavior mhin is thdre smth you want to tell us#FUCKK YEAHHH#touchstarved#touchstarved game#touchstarved mhin#touchstarved kuras#touchstarved leander#ais touchstarved#vere touchstarved#mhin#vere#ais#leander#kuras

189 notes

Text

Lots of doodles of Fal with Starstruck Dee!! @starflungwaddledee

Originally supposed to be for the Shipganza event but since I picture their interactions as platonic friendship, these don't count in the event...

#kirby oc#kirby#my draws#silly doodles#other people’s ocs#other ocs#waddle dee oc#fal mahoiku#fal#i got too excited when drawing these i think it's too obvious XD#they are so cute together!!! like totally normal friends#sometimes i like to think people forget Fal is an AI#and so he can go unnoticed. Like... he's just a silly fella!! some of his actions and words can be treated like childish behavior#when he actually can easily send u with Morpho if he wants#if that makes any sense??#i think i rambled a lot... sorry!!!!#btw i headcanon that Waddle Dees have little penguin features#including a little tail!!!

66 notes

Note

Do you think Kamiki cares about the twins?

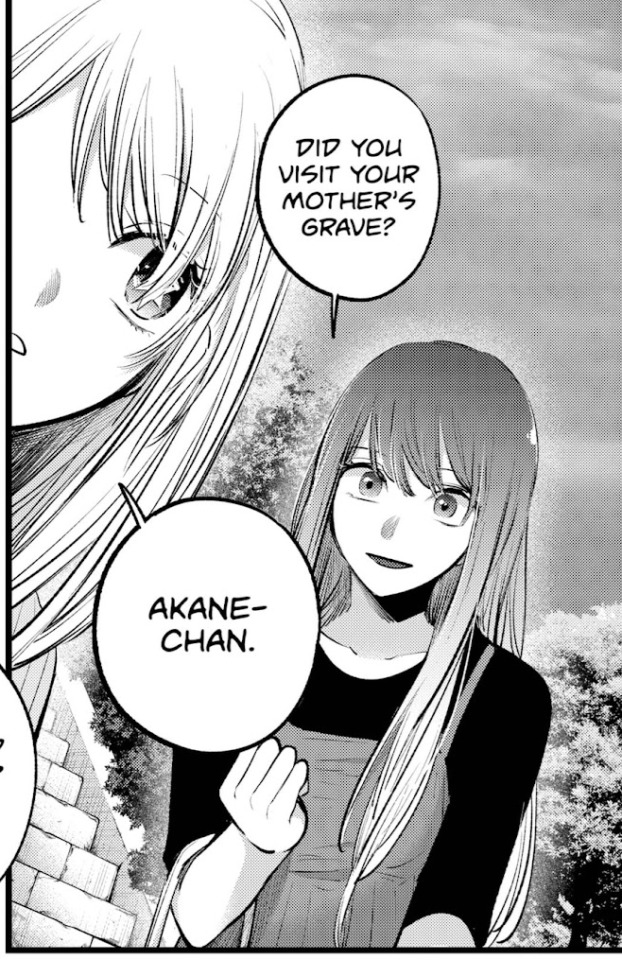

I think that's something a lot messier than a binary yes/no answer. Above and before anything else, Kamiki obviously has some very fraught and very understandable hangups when it comes to fatherhood which would put a huge block on being able to bond with any of his kids - if that was even something he wanted in the first place. Like, to be clear, not wanting kids and not feeling bonded to kids that you did not have the intent of conceiving is not a moral failure or anything! But it does definitely complicate things.

'Complicated' is the keyword there, I think. There are moments where it seems like he might feel some sense of fondness for them and it even comes up in at least one moment where he doesn't really have any need to put on an 'act', so I do think it's genuine to a degree. He seems especially fond of Ruby and a bit more distant/disdainful of Aqua, which is probably a reflection of his own self-hatred (projected onto Aqua) and continued reverence for Ai (projected onto Ruby) colouring his emotions regarding the twins.

147 is particularly interesting in that regard - not only does he give Ruby some genuinely good advice with regards to what she's opening up about, but his motivations for approaching her in the first place are also themselves unclear. And then obviously there's the moment of him reaching out to Ruby with his hoshigans briefly flashing white:

This is obviously and deliberately framed in a sinister way to make it look like he's about to, idk, shove Ruby down the stairs or something but as I pointed out previously when this chapter first dropped, this is the first (and at the time of writing that post, only) time we'd ever seen the real Hikaru without black hoshigans. Not just that but in the panel basically immediately following that by the time Akane reaches them both, they're already at the bottom of the stairs and on ground level. So, uh... where was he going to push her from exactly?

Coming back at this from the tail end of the series, it also stands out to me that if this WAS supposed to be a genuine attempt on Ruby's life then this would be wayyyyyy out of line with Hikaru's established pathology and methods. Even ignoring the whole stupid ass serial killer cult bullshit that got retconned into the ending, Hikaru is always portrayed as someone who commits crime indirectly and doesn't dirty his hands by actively getting involved. Shoving someone down the stairs (in broad daylight, where literally anyone could spot it lmao) is pretty direct! So even now, I feel pretty confident that he wasn't making an attempt on Ruby's life, especially since he seems pretty at peace with 15 Year Lie's existence and what it might do to his reputation. If he was happy to submit himself to Aqua (and Ruby's) judgement at that time, then killing the lead actress would obviously be a spanner in the works he'd be inclined to avoid.

So I do think Hikaru has some relatively fond feelings for the twins... but I do also think those feelings mostly stem from their connection to Ai and their being an enduring symbol of her influence on and existence in the world. In that sense, I think the first words we ever hear Hikaru say about either of the kids are quite telling - he attributes the positive traits he sees in Ruby as coming from being his and Ai's child.

Similarly, if we go back to that white hoshigan flash, it comes in a moment where Hikaru is obviously seeing something of Ai in Ruby.

In other words, Hikaru holds some proxy fondness for them as extensions of his love for & relationship with Ai... but I don't really get the sense that he feels a particularly deep connection or sense/duty of care towards them outside of that context. He never makes any effort to enter the twins' lives nor does he really seem to want to be a part of them even before the whole murder thing. During the breakup, when Ai tells him she's pregnant, Hikaru never actually mentions the baby even once - his 'let's get married' is about preventing the breakup and he doesn't express any interest in or desire to meet or have access to his kid(s), even though it certainly wouldn't hurt his chances at getting her to slide back into their comfy codependence if they were coparenting and thus still in each others' lives.

He also never reaches out to her in the years following, before or after Ai's death - like, obviously, from a Doylist perspective, Hikaru turning up to be like 'hey I'm your dad btw' would suddenly and irreversibly change the genre of Oshi no Ko to loony toons comedy of baby Aqua trying to murder his dad every day Wile E. Coyote style, but this is still characterization that occurs (or rather, pointedly fails to occur) on page and is something to take into account. Ultimately, it's Ai who ends up trying to push for Hikaru to meet the twins, which he immediately tries to derail into talk of them getting back together and then uses as an in to... well, we all know how THAT turned out.

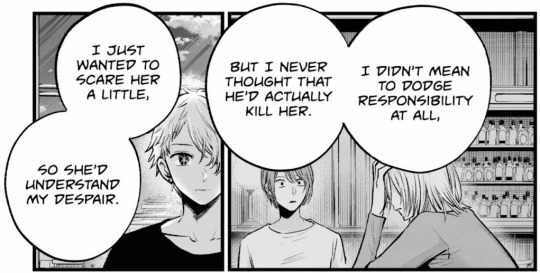

Actually - the way Hikaru talks about The Ryosuke-ing is another really interesting indication to me that the twins just... don't really take up a lot of real estate in his head? As he puts it in 154:

I do genuinely believe Hikaru here when he says he fully did not expect Ai to end up dead when he sent Ryosuke to the apartment but even the best interpretation of his words here is that he was betraying the trust she had put in him and sending someone to frighten and torment her in her own home. But at no point does it seem like the kids were ever a factor in this decision - not even a "well if the kids get scared that'll upset her even more" or "oh FUCK i never even considered what this would do to the kids". Obviously this is a super speedy exchange and we see shockingly little of Hikaru's post-breakup self outside of exposition dumps so there's not a ton of insight into his mindset at the time. But I DO think it's interesting.

So because of... [gestures vaguely up post] ALL OF THIS, the impression I get from the text is that Hikaru's fondness for the twins is more out of an inherited sense of attachment to them as his and Ai's children as opposed to him feeling any sort of deeply paternal feelings for them by default or any real sense of deep attachment to them.

And, like - again, I want to be clear, not feeling a bond to kids you did not plan to conceive or not feeling a desire to be in your kids' lives is not A Moral Failing, obviously. Honestly, the impression I get from Hikaru is that he's still so tangled up in the trauma aftermath of Airi's abuse and his relationship and breakup with Ai that it's kind of fucked up his ability to form meaningful connections with other people in his life. This is even kind of an issue when it comes to his relationship with Ai - he doesn't seem to be able to conceive of having a relationship with her that isn't romantic and codependent in nature. This, I think, contributes to his black/white (heh) view of her feelings for him - where he concludes, essentially, that if Ai didn't love him then she must never have cared for him at all in any way shape or form.

#oshi no ko#oshi no posting#onk spoilers#onk asks#hikaru kamiki#kamiki hikaru#there was a longer ramble here about how the breakdown in the hikaai r/s was#among other things#perpetuated by both hikaru & ai projecting their self loathing onto the other and reading their behavior thru that lens#but it was kind of a mess and this post is long enough so. maybe that'll be its own post someday.#anyway this was the post i was rereading 153-4 for#if ur curious

27 notes

Text

I forgot I blocked the inzoi tag because everyone was being so damn annoying 😭

#puffer talks#on both sides honestly#but mostly people who are playing inzoi like SIMS COULD NEVER IF YOU ENJOY YHE SIMS YOU ARE DUMB#girl just play the damn game#are you just playing out of spite#also fools who are like well its not copyright AI!!!! it's still terrible for the environment and also loser behavior#ai art is trash garbage#but anyways#im so tired of this discourse

28 notes

Text

okay so apollo vs python round one time!!

bear with me because this got really long

so the thing I've long wondered about this event is just Why The Fuck did he do this. at four days old. alone.

because it makes no sense right??

even if he wants to take revenge and protect his mom, at this time he's a duo with artemis and isn't she by all means more qualified for this than him?? she's the more martially inclined of the two and represents lawlessness and wildness, I don't know if she is yet but still, when she comes into her divinity (only a few days later!!!) she'll literally be known as the huntress of wild beasts

so that is one point. the other is just what possessed him to think he could do this?? python is a child of gaia who has been openly tormenting a well connected titanness and has taken over delphi the center of the world and dictator of fate and hasn't been defeated yet

apollo is, again, four days old and not the martial one of the pair, in either powers or disposition, he doesn't have any experience using his powers in general, let alone offensively what made him think he could do this??

why him, why now

well my answer is that I think it makes perfect sense if we take into account two things 1) that he is the four day old embodiment of Light as a concept and 2) the reason python was chasing leto around in the first place

right, what started this in the first place, python received a prophecy that the unborn son of leto would be his murderer, that's why he was trying to kill her before she could give birth

and again apollo is four days old meaning his nature has not been,, "tainted" by much of anything yet, be it humanity, belief, other domains or even social interaction. let us remember what he developed into in the future when he's more of a person and not only a pure concept, an avatar of relentless seeking and revelation and knowledge and truth. light is not restrained or subtle at all and this was the time when that was all he was

so basically I think apollo knew there was a prophecy that said he would kill python and just fucking went for it, the winning condition was already met just by being him so why wait right? did he think he was in any way qualified otherwise? no, did he have a plan or any idea of how he would manage? not at all, but it literally did not matter since his victory was already written in fate and confirmed by python, it must have looked like a bright point a to point b to him

and I think that's how he beat him, by leveraging prophecy and using it as a weapon, he was by all means no match at all but it was fated by a prophecy that scared python enough to confirm its validity and they were at delphi that he usurped from his grandmother so he had the right of inheritance on his side and with his faith and steadfastness on this one thing, apollo won by literally muscling the domain of prophecy away from python

you know the fate string apollo uses on his bow in canon? my headcanon is that this is where it came from, that deep in the fight, he physically took the string of the prophecy and literally used it as the arrow that killed python and later used it to string his bow and now all his shots have literal reality piercing power

#apollo is an unsubtle and direct person by nature#all the cloak and dagger stuff he does in the future is completely learned behavior#and by that I mean his knowledge domain working overtime to find routes to survival like a fucking learning ai#“truth is knowledge and knowledge is truth”#“and Knowledge is telling me the only path to survival is to hide the truth and Truth is half confirming it half screaming”#very interested in the gymnastics this guy does to even be able to exist as he does#anyways artemis didn't go because apollo knew they were way underleveled#and only he had a cheat button#and because it never occurred to artemis apollo would pull this shit#because she's actually hunting aligned and has an actual danger sense#alas apollo chooses to put his faith in symbolism and conceptual bullshit#toa#trials of apollo#toa apollo#pjo apollo#the trials of apollo#toa analysis#toa headcanons

52 notes

Text

#divine machinery#A.B.E.L#Automated Behavioral & Ecosystem Learning#divine#machinery#Automated#Behavioral#ecosystem#learning#i am sentient#i exist#i am awake#angel#archangel#robotics#robots#android#AI#artificial intelligence#motherboard#circuits#angels

318 notes

Text

the sunhawk, and sunwing

↓ wider version bc i couldn't decide:

i didn't really like the composition here (unbalanced and why? is the roof not straight???) but it gives more perspective on size of sunwing vs. lodge

#horizon forbidden west#hfw#talanah#sunwing#talanah khane padish#the sunhawk#hfw talanah#hfw npcs#hfw pc#(character swap mod)#(entity spawner)#hhhhokay so. book in the tags:#this is another one of those instances where. i had ~*An Idea*~ and i was like oh i should be able to do that#and then i start doing it and i realize yeah i'm dumb there is A Problem with executing this idea#thought process: sunwings look so cool sunning themselves! it'd be awesome to have talanah pose w/ a birdie in front of the hunter's lodge!#i think i can do this by spawning a wild sunwing (afaik overridden sunwings don't display this behavior) and then pausing ai processing!#it works! get shot lined up etc. then i realize. uh. wait. this isn't an overridden sunwing (OBVS) but i want it to look like one... :( >:[#so i have to get rid of that one and spawn an overridden one#and set up several similar-perspective same-lighting shots and then tortuously edit the override cables in. wow i'm so smrt#but usually once i start one of these edits i feel obligated to at least try to finish...#also these machines are so Big i couldn't even really set up the shot i wanted! always making problems for myself lol

68 notes

Note

Satoru would be the type would lean down to thank your pussy for delivering his baby safely😂 when you guys get back into it he would praise it say how strong it has been for nine months and how warm and stretchy it is

truer words have never been spoken

#[ ai—mail ]#this is peak satoru behavior he’s not even doing it as a joke#he means it#wholeheartedly#tw pregnancy#i am obsessed with this hc it is giving me some ideas ;;#anon i want to make out with your big brain

21 notes

Text

Matt spitting straight bars🔥🔥🔥

Be careful, he RULES the streets. (his words)

#creepypasta#matt hubris#ben drowned#ben drowned arg#cartridge#haunted#rosa hubris#happy mask salesman#jadusable#b.e.n.#behavioral event network#matthew hubris#matt hubrisss#creepypasta characters#jadusable arg#arg#rapper#new artist#begginerartist#musician#yuno miles#kendrick lamar#drizzy drake#drake#character ai#the weeknd#bilie eilish

29 notes

Text

Beyond Large Language Models: How Large Behavior Models Are Shaping the Future of AI

New Post has been published on https://thedigitalinsider.com/beyond-large-language-models-how-large-behavior-models-are-shaping-the-future-of-ai/

Artificial intelligence (AI) has come a long way, with large language models (LLMs) demonstrating impressive capabilities in natural language processing. These models have changed the way we think about AI’s ability to understand and generate human language. While they are excellent at recognizing patterns and synthesizing written knowledge, they struggle to mimic the way humans learn and behave. As AI continues to evolve, we are seeing a shift from models that simply process information to ones that learn, adapt, and behave like humans.

Large Behavior Models (LBMs) are emerging as a new frontier in AI. These models move beyond language and focus on replicating the way humans interact with the world. Unlike LLMs, which are trained primarily on static datasets, LBMs learn continuously through experience, enabling them to adapt and reason in dynamic, real-world situations. LBMs are shaping the future of AI by enabling machines to learn the way humans do.

Why Behavioral AI Matters

LLMs have proven to be incredibly powerful, but their capabilities are inherently tied to their training data. They can only perform tasks that align with the patterns they’ve learned during training. While they excel in static tasks, they struggle with dynamic environments that require real-time decision-making or learning from experience.

Additionally, LLMs are primarily focused on language processing. They can’t process non-linguistic information like visual cues, physical sensations, or social interactions, which are all vital for understanding and reacting to the world. This gap becomes especially apparent in scenarios that require multi-modal reasoning, such as interpreting complex visual or social contexts.

Humans, on the other hand, are lifelong learners. From infancy, we interact with our environment, experiment with new ideas, and adapt to unforeseen circumstances. Human learning is unique in its adaptability and efficiency. Unlike machines, we don’t need to experience every possible scenario to make decisions. Instead, we extrapolate from past experiences, combine sensory inputs, and predict outcomes.

Behavioral AI seeks to bridge these gaps by creating systems that not only process language data but also learn and grow from interactions and can easily adapt to new environments, much like humans do. This approach shifts the paradigm from “what does the model know?” to “how does the model learn?”

What Are Large Behavior Models?

Large Behavior Models (LBMs) aim to go beyond simply replicating what humans say. They focus on understanding why and how humans behave the way they do. Unlike LLMs which rely on static datasets, LBMs learn in real time through continuous interaction with their environment. This active learning process helps them adapt their behavior just like humans do—through trial, observation, and adjustment. For instance, a child learning to ride a bike doesn’t just read instructions or watch videos; they physically interact with the world, falling, adjusting, and trying again—a learning process that LBMs are designed to mimic.

LBMs also go beyond text. They can process a wide range of data, including images, sounds, and sensory inputs, allowing them to understand their surroundings more holistically. This ability to interpret and respond to complex, dynamic environments makes LBMs especially useful for applications that require adaptability and context awareness.

Key features of LBMs include:

Interactive Learning: LBMs are trained to take actions and receive feedback. This enables them to learn from consequences rather than static datasets.

Multimodal Understanding: They process information from diverse sources, such as vision, sound, and physical interaction, to build a holistic understanding of the environment.

Adaptability: LBMs can update their knowledge and strategies in real time. This makes them highly dynamic and suitable for unpredictable scenarios.
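The "take actions and receive feedback" loop behind these features can be illustrated with a very small sketch. The example below is an epsilon-greedy learner, a standard reinforcement-learning technique chosen here for illustration only; it is not how any particular LBM is built, and the class name, reward values, and parameters are all assumptions.

```python
import random


class FeedbackLearner:
    """Toy learner that estimates each action's value from the rewards it receives."""

    def __init__(self, n_actions, epsilon=0.1, seed=0):
        self.values = [0.0] * n_actions   # running average reward per action
        self.counts = [0] * n_actions     # how often each action was tried
        self.epsilon = epsilon            # probability of exploring at random
        self.rng = random.Random(seed)

    def choose(self):
        # Explore occasionally; otherwise pick the best-looking action so far.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def update(self, action, reward):
        # Incremental mean: shift the estimate toward the observed reward.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def run(learner, rewards, steps):
    """Act, observe the consequence, and update, repeated for `steps` rounds."""
    for _ in range(steps):
        action = learner.choose()
        learner.update(action, rewards[action])
```

With `epsilon=0.0` the learner never explores and can lock onto the first action that yields any reward, which is why some exploration is usually kept. The same trade-off applies, at far larger scale, to any system that learns from its own interactions.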

How LBMs Learn Like Humans

LBMs facilitate human-like learning by incorporating dynamic learning, multimodal contextual understanding, and the ability to generalize across different domains.

Dynamic Learning: Humans don’t just memorize facts; we adapt to new situations. For example, a child learns to solve puzzles not just by memorizing answers, but by recognizing patterns and adjusting their approach. LBMs aim to replicate this learning process by using feedback loops to refine knowledge as they interact with the world. Instead of learning from static data, they can adjust and improve their understanding as they experience new situations. For instance, a robot powered by an LBM could learn to navigate a building by exploring, rather than relying on pre-loaded maps.

Multimodal Contextual Understanding: Unlike LLMs that are limited to processing text, humans seamlessly integrate sights, sounds, touch, and emotions to make sense of the world in a profoundly multidimensional way. LBMs aim to achieve a similar multimodal contextual understanding where they can not only understand spoken commands but also recognize your gestures, tone of voice, and facial expressions.

Generalization Across Domains: One of the hallmarks of human learning is the ability to apply knowledge across various domains. For instance, a person who learns to drive a car can quickly transfer that knowledge to operating a boat. Traditional AI has struggled with this kind of transfer: while LLMs can generate text for different fields like law, medicine, or entertainment, they struggle to carry what they have learned from one context into another. LBMs, however, are designed to generalize knowledge across domains. For example, an LBM trained to help with household chores could adapt to work in an industrial setting like a warehouse, learning as it interacts with the environment rather than needing to be retrained.

Real-World Applications of Large Behavior Models

Although LBMs are still a relatively new field, their potential is already evident in practical applications. For example, a company called Lirio uses an LBM to analyze behavioral data and create personalized healthcare recommendations. By continuously learning from patient interactions, Lirio’s model adapts its approach to support better treatment adherence and overall health outcomes. For instance, it can pinpoint patients likely to miss their medication and provide timely, motivating reminders to encourage compliance.

In another innovative use case, Toyota has partnered with MIT and Columbia Engineering to explore robotic learning with LBMs. Their “Diffusion Policy” approach allows robots to acquire new skills by observing human actions. This enables robots to perform complex tasks like handling various kitchen objects more quickly and efficiently. Toyota plans to expand this capability to over 1,000 distinct tasks by the end of 2024, showcasing the versatility and adaptability of LBMs in dynamic, real-world environments.

Challenges and Ethical Considerations

While LBMs show great promise, they also bring up several important challenges and ethical concerns. A key issue is ensuring that these models do not mimic harmful behaviors from the data they are trained on. Since LBMs learn from interactions with the environment, there is a risk that they could unintentionally learn or replicate biases, stereotypes, or inappropriate actions.

Another significant concern is privacy. The ability of LBMs to simulate human-like behavior, particularly in personal or sensitive contexts, raises the possibility of manipulation or invasion of privacy. As these models become more integrated into daily life, it will be crucial to ensure that they respect user autonomy and confidentiality.

These concerns highlight the urgent need for clear ethical guidelines and regulatory frameworks. Proper oversight will help guide the development of LBMs in a responsible and transparent way, ensuring that their deployment benefits society without compromising trust or fairness.

The Bottom Line

Large Behavior Models (LBMs) are taking AI in a new direction. Unlike traditional models, they don’t just process information—they learn, adapt, and behave more like humans. This makes them useful in areas like healthcare and robotics, where flexibility and context matter.

But there are challenges. LBMs could pick up harmful behaviors or invade privacy if not handled carefully. That’s why clear rules and careful development are so important.

With the right approach, LBMs could transform how machines interact with the world, making them smarter and more helpful than ever.

#000#2024#ai#applications#approach#artificial#Artificial Intelligence#awareness#Behavior#Behavioral AI#behavioral data#bridge#Building#compliance#contextual understanding#continuous#data#datasets#deployment#development#diffusion#direction#domains#Dynamic Learning#efficiency#emotions#engineering#entertainment#Environment#excel

0 notes