#Computer Hardware (Industry)


Text

Panasonic FS-A1GT (1992)

The Panasonic FS-A1GT MSX turbo R is the last MSX computer ever produced and follows the final standard of the MSX line.

Originally, in 1990, Yamaha and ASCII announced the V9978 Video Display Processor, the video chip for the MSX3. It was a very capable video IC, with two different sets of video modes. In bitmap modes, it was capable of up to 768 x 240 resolution (up to 768 x 480 in interlace mode), up to 32,768 colors, superimposition, hardware scrolling, and even a hardware cursor for Windows-like operating systems. However, the most impressive feature of these modes was a fast hardware bit-block data mover. The MSX2 video IC was also equipped with a hardware bit mover, but the new one would be 20 times faster!

In pattern mode, it offered SNES-class features: multiple layers, 16k patterns, different palettes, and 128 sprites with a maximum of 16 sprites per scanline. So basically an SNES without Mode 7. However, something went wrong and the project was cancelled. Probably because of waning interest in marketing MSX machines and growing interest in game consoles and powerful PC-like computers (mainly for word processing), companies were less enthusiastic about making a new MSX machine. MSX's biggest software supporters defected to Nintendo and other computers and gaming machines. Sony chose to make its own game console.

Instead came the MSX Turbo-R, a supercharged MSX2+. Some people say that ASCII failed to deliver the new VDP in time for the 1990 release, so they only opted for the new CPU (named R800). However, the specifications and pinout of the V9978 were in some data books of the era.

Panasonic was the only company to manufacture and market MSX turbo R computers, and they were sold only in Japan. Some machines reached Europe through gray-market imports.

The FS-A1ST was the first and was succeeded by the FS-A1GT, which had more RAM and MIDI IN and OUT.

After these two machines, the MSX line ended when Panasonic moved on to its 3DO game console.

Detailed Specifications

CPU R800 (DAR800-X0G), with 28.63630 MHz external clock and 16-bit ALU.

CPU Z80A 3.579545MHz compatible (included in the MSX-Engine)

256kB of main RAM (can be natively expanded to 512kB internally)

16kB SRAM for backup (used internally)

32kB BASIC/BIOS ROM and 32kB sub-ROM

TC8566AF disk controller

3.5" double sided double density (720kB) disk drive

S1990 MSX Bus-controller

T9769C MSX-ENGINE (also contains Z80A & AY-3-8910)

MSX-JE (a simple Kanji input interface)

Kanji-ROM with approx. 32000 characters

MSX-Music (Yamaha YM-2413 OPLL)

8-bit PCM DAC, sample rate up to 16kHz

Internal microphone for the PCM unit
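Two of the figures in the list above can be sanity-checked with simple arithmetic. The sketch below assumes the conventional 720kB double-density geometry (2 sides, 80 tracks per side, 9 sectors per track, 512 bytes per sector), which the list itself does not spell out, and it only compares the two quoted clock frequencies. The function names are illustrative.

```python
# Figures quoted in the specification list above.
EXTERNAL_CLOCK_HZ = 28_636_300   # "28.63630 MHz external clock"
Z80_CLOCK_HZ = 3_579_545         # "Z80A 3.579545MHz"

# Assumption: the usual 720kB double-density floppy geometry.
SIDES = 2
TRACKS_PER_SIDE = 80
SECTORS_PER_TRACK = 9
BYTES_PER_SECTOR = 512


def clock_ratio(external_hz: int, derived_hz: int) -> float:
    """Return how many times faster the external clock is than a derived clock."""
    return external_hz / derived_hz


def disk_capacity_bytes(sides: int, tracks: int, sectors: int, sector_size: int) -> int:
    """Return the raw formatted capacity of a floppy with the given geometry."""
    return sides * tracks * sectors * sector_size


ratio = clock_ratio(EXTERNAL_CLOCK_HZ, Z80_CLOCK_HZ)
capacity = disk_capacity_bytes(SIDES, TRACKS_PER_SIDE, SECTORS_PER_TRACK, BYTES_PER_SECTOR)

print(f"external clock / Z80 clock = {ratio:.3f}")
print(f"disk capacity = {capacity} bytes = {capacity // 1024} KiB")
```

Under these assumptions the external clock is almost exactly eight times the Z80 clock, and the geometry gives 737,280 bytes, which is the 720kB quoted for the drive.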

More info: https://www.msx.org/wiki/Panasonic_FS-A1ST

#msx#msx2#old tech#panasonic#vintage computer#computer#8bit#z80#industrial design#retrogaming#retro gaming#hardware#electronics#retrocomputing#retro computing#retro tech#retro games#japan#japan only#japan computer#90s

20 notes


Text

Why Quantum Computing Will Change the Tech Landscape

The technology industry has seen significant advancements over the past few decades, but nothing quite as transformative as quantum computing promises to be. That quantum computing will change the tech landscape is not just a matter of speculation; it’s grounded in the science of how we compute and the immense potential of quantum mechanics to revolutionise various sectors. As traditional…

#AI#AI acceleration#AI development#autonomous vehicles#big data#classical computing#climate modelling#complex systems#computational power#computing power#cryptography#cybersecurity#data processing#data simulation#drug discovery#economic impact#emerging tech#energy efficiency#exponential computing#exponential growth#fast problem solving#financial services#Future Technology#government funding#hardware#Healthcare#industry applications#industry transformation#innovation#machine learning

3 notes


Text

You could say that what humans selected for are genetics selected for memetics—our genetics selected for radical neuroplasticity and the capacity to have much more significant software upgrades that could change our capacity without needing hardware upgrades.

— Daniel Schmachtenberger

#society#culture#history#economics#climate change#environment#world#quotes#daniel schmachtenberger#software#hardware#genetics#upgrade#computer#nature#industry

5 notes


Text

Understanding Embedded Computing Systems and their Role in the Modern World

Embedded systems are specialized computer systems designed to perform dedicated functions within larger mechanical or electrical systems. Unlike general-purpose computers like laptops and desktop PCs, embedded systems are designed to operate on specific tasks and are not easily reprogrammable for other uses.

Embedded System Hardware

At the core of any embedded system is a microcontroller or microprocessor chip that acts as the processing brain. In a microcontroller, the CPU is integrated with RAM, ROM, I/O ports and other components on a single chip. Peripherals like sensors, displays and network ports are connected to the microcontroller through its input/output ports. Embedded systems also contain supporting hardware like power supply circuits and timing crystal oscillators.

Operating Systems for Embedded Devices

While general-purpose computers run full-featured operating systems like Windows, Linux or macOS, embedded systems commonly use specialized Real-Time Operating Systems (RTOS). RTOS are lean and efficient kernels optimized for real-time processing with minimal overhead. Popular RTOS include FreeRTOS, QNX and VxWorks. Some simple devices run without an OS; their firmware initializes the hardware and then accesses it directly.

Programming Embedded Systems

Embedded computing systems are programmed using low-level languages like C and C++ for maximum efficiency and control over hardware. Assembly language is also used in some applications. Programmers need expertise in microcontroller architecture, peripherals and memory management. Tools include compilers, linkers, simulators and debuggers tailored for embedded development.

Applications of Embedded Computing

Embedded systems have revolutionized various industries by bringing intelligence and connectivity to everyday devices. Some key application areas include:


Alice Mutum is a seasoned senior content editor at Coherent Market Insights, leveraging extensive expertise gained from her previous role as a content writer. With seven years in content development, Alice masterfully employs SEO best practices and cutting-edge digital marketing strategies to craft high-ranking, impactful content. As an editor, she meticulously ensures flawless grammar and punctuation, precise data accuracy, and perfect alignment with audience needs in every research report. Alice's dedication to excellence and her strategic approach to content make her an invaluable asset in the world of market insights.

(LinkedIn: www.linkedin.com/in/alice-mutum-3b247b137 )

#Embedded Computing#Embedded Systems#Microcontrollers#Embedded Software#Iot#Embedded Hardware#Embedded Programming#Edge Computing#Embedded Applications#Industrial Automation

0 notes

Text

Dell and Palantir to join S&P 500; shares of both jump

Alex Karp, CEO of Palantir Technologies, speaks at the World Economic Forum in Davos, Switzerland, Jan. 18, 2023. Arnd Wiegmann | Reuters

Dell and Palantir both jumped about 7% in extended trading Friday after S&P Global announced that the companies would join the S&P 500 index. Software maker Palantir will take the place of American Airlines, and Dell is replacing Etsy, according to a…

#American Airlines Group Inc#Breaking News: Technology#business news#Computer hardware#CrowdStrike Holdings Inc#Dell Technologies Inc#ETSY Inc#NVIDIA Corp#Palantir Technologies Inc#Semiconductor device manufacturing#Software#Software industry#Stock indices and averages#Stock markets#technology#Workday Inc

0 notes

Text

RN42 Bluetooth Module: A Comprehensive Guide

The RN42 Bluetooth module was developed by Microchip Technology. It’s designed to provide Bluetooth connectivity to devices and is commonly used in various applications, including wireless communication between devices.

Features Of RN42 Bluetooth Module

The RN42 Bluetooth module comes with several key features that make it suitable for various wireless communication applications. Here are the key features of the RN42 module:

Bluetooth Version:

The RN42 module is based on Bluetooth version 2.1 + EDR (Enhanced Data Rate).

Profiles:

Supports a range of Bluetooth profiles including Serial Port Profile (SPP), Human Interface Device (HID), Audio Gateway (AG), and others. The availability of profiles makes it versatile for different types of applications.

Frequency Range:

Operates in the 2.4 GHz ISM (Industrial, Scientific, and Medical) band, the standard frequency range for Bluetooth communication.

Data Rates:

Offers data rates of up to 3 Mbps, providing a balance between speed and power consumption.

Power Supply Voltage:

Operates with a power supply voltage in the range of 3.3V to 6V, making it compatible with a variety of power sources.

Low Power Consumption:

Designed for low power consumption, making it suitable for battery-powered applications and energy-efficient designs.

Antenna Options:

Provides options for both internal and external antennas, offering flexibility in design based on the specific requirements of the application.

Interface:

Utilizes a UART (Universal Asynchronous Receiver-Transmitter) interface for serial communication, facilitating easy integration with microcontrollers and other embedded systems.

Security Features:

Implements authentication and encryption mechanisms to ensure secure wireless communication.
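The UART interface listed above is also how the module is usually configured. The sketch below is a minimal illustration, assuming the ASCII command set commonly documented for these modules: `$$$` to enter command mode, `SN,<name>` to set the device name, and `---` to leave command mode. The helper name `rn42_rename_sequence` and the 20-character name limit are assumptions for illustration, and the exact commands should be checked against the module's command reference. The function only builds the byte strings; writing them to a serial port is left to whatever UART library the host uses.

```python
def rn42_rename_sequence(name: str) -> list[bytes]:
    """Build the byte strings a host would write to the UART to rename the module.

    Assumed command set: "$$$" enters command mode, "SN,<name>" sets the
    device name, "---" leaves command mode. Verify against the data sheet.
    """
    # Assumption: names are short ASCII strings (limit chosen for illustration).
    if not name or len(name) > 20 or not name.isascii():
        raise ValueError("name must be 1-20 ASCII characters")
    return [
        b"$$$",                           # enter command mode (no line ending)
        f"SN,{name}\r".encode("ascii"),   # set the device name
        b"---\r",                         # leave command mode
    ]


for chunk in rn42_rename_sequence("lab-sensor"):
    print(chunk)
```

Keeping the byte construction separate from the serial I/O makes the sequence easy to inspect and test without hardware attached.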


#rn42-bluetooth-module#bluetooth-module#rn42#bluetooth-low-energy#ble#microcontroller#arduino#raspberry-pi#embedded-systems#IoT#internet-of-things#wireless-communication#data-transmission#sensor-networking#wearable-technology#mobile-devices#smart-homes#industrial-automation#healthcare#automotive#aerospace#telecommunications#networking#security#software-development#hardware-engineering#electronics#electrical-engineering#computer-science#engineering

0 notes

Text

UTL IT SOLUTION

Rent-to-own your Home desktop computer at Rent-A-Center and you also receive some amazing benefits. Our Worry-Free Guarantee means you don't have to worry about credit**. Pick out your Home desktop computer and choose from a weekly, bi-weekly, semi-monthly, or monthly payment option. And if you need delivery and professional set up in your home ASAP, we're here to help with that, too. We'll deliver your new computer straight to your home and get it ready for you to use without charging you an extra dime — even on the same day in some cases. Also, if a better computer comes onto the market, you can take advantage of it quickly. Call your local Rent-A-Center, and we'll assist you in upgrading your computer to a newer model.

Stop dreaming about a new computer, and start owning one! Explore Home desktop computers online, or visit a Rent-A-Center near you to shop top-of-the-line computers near you. www.utlitsolution.com

0 notes

Link

Decoding OEM: Unraveling the Mystery Behind the Acronym

When it comes to the world of manufacturing and technology, the term "OEM" often pops up. OEM stands for Original Equipment Manufacturer and plays a significant role in various industries. In this article, we will delve into the concept of OEM, employing the MECE (Mutually Exclusive, Collectively Exhaustive) Framework to provide a comprehensive understanding of its implications and significance.

Understanding OEM

What Does OEM Stand For?

OEM stands for Original Equipment Manufacturer. It refers to a company that produces components or products that are used in the manufacturing of another company's end product. The term "equipment" refers to the physical or digital parts, devices, or software that are integrated into the final product by the OEM.

The Role of OEM in Different Industries

OEM is prevalent in various industries, including automotive, electronics, software, and more. In these sectors, OEM ensures that the products manufactured by different companies meet the required quality, reliability, and compatibility standards. OEM plays a crucial role in maintaining consistency and standardization within the industry.

OEM vs. Aftermarket: Key Differences

OEM products are different from aftermarket products. OEM components are produced by the original manufacturer of the end product, ensuring a high level of quality, compatibility, and warranty. Aftermarket products, on the other hand, are produced by third-party manufacturers and may not meet the same quality standards as OEM products. While aftermarket products may be cheaper, they often lack the same level of reliability and compatibility as OEM products.

OEM in Practice

OEM in the Automotive Industry

In the automotive industry, OEM components are seamlessly integrated into vehicles during the manufacturing process. These components, such as engines, transmissions, and electronic systems, are specifically designed and manufactured by the original vehicle manufacturer. Using OEM parts in vehicles ensures optimal performance, warranty coverage, and safety. OEM parts are built to the exact specifications of the vehicle, providing a perfect fit and maintaining the integrity of the vehicle's design.

OEM in the Electronics Industry

The electronics industry heavily relies on OEM components to create various devices. OEM electronics, such as integrated circuits, displays, and sensors, are designed and produced by the original manufacturer. These components are known for their compatibility with the devices they are intended for, ensuring reliable performance and seamless integration. Using OEM electronics in devices enhances their functionality and reliability, as they are specifically engineered to work harmoniously with the overall system.

OEM in the Software Industry

In the software industry, OEM software refers to software packages that are licensed by original manufacturers to be bundled with other products or services. OEM software is often pre-installed on computers, smartphones, or other electronic devices. It provides users with a legitimate and authorized version of the software, along with regular updates and technical support. OEM software is an integral part of the overall user experience, offering enhanced functionality and compatibility.

FAQs:

What are the advantages of choosing OEM products?

Choosing OEM products comes with several advantages. OEM products are known for their high quality, as they are produced by the original manufacturer and designed to meet specific standards. They offer compatibility with the intended system, ensuring seamless integration and optimal performance. Additionally, OEM products often come with warranties, providing peace of mind to consumers.

Are OEM products more expensive than aftermarket alternatives?

OEM products may be slightly more expensive than aftermarket alternatives due to their higher quality and compatibility. However, it is important to consider the long-term benefits of choosing OEM, such as better performance and reliability. The cost difference is often justified by the enhanced user experience and reduced risk of compatibility issues.

Can OEM parts be used to upgrade existing products?

Yes, OEM parts can be used to upgrade existing products in many cases. OEM manufacturers often provide compatible upgrade options for their products, allowing users to enhance the performance or functionality of their devices. Whether it's upgrading a vehicle with OEM performance parts or upgrading a computer with OEM components, using OEM parts ensures compatibility and maintains the integrity of the original product design. However, it's important to check the compatibility and specifications provided by the OEM to ensure a successful upgrade.

How can consumers identify OEM products?

Identifying genuine OEM products can be crucial to ensure quality and compatibility. Here are a few tips:

Check the packaging and labels for the OEM branding and logos.

Look for authorized OEM retailers or purchase directly from the OEM's official website.

Read product descriptions and specifications to ensure they match the OEM's official information.

Verify the warranty and support provided, as OEM products usually come with manufacturer-backed warranties.

Is OEM limited to physical products, or does it extend to services as well?

While OEM is commonly associated with physical products, it can also extend to services. In service-oriented industries, OEM refers to companies that provide specialized services or solutions to other businesses. These OEM service providers offer expertise, resources, and support to help other companies enhance their operations or deliver specific services. For example, in the IT industry, OEM service providers may offer white-label services or customized solutions that can be resold by other businesses under their brand.

Conclusion:

OEM, or Original Equipment Manufacturer, is a fundamental concept in various industries. Understanding OEM helps us recognize the importance of quality, compatibility, and standardization in the products and services we use. Whether it's automotive, electronics, software, or other sectors, OEM plays a crucial role in ensuring optimal performance, reliability, and customer satisfaction. By choosing OEM products, consumers can enjoy the benefits of high-quality components and seamless integration, ultimately enhancing their overall experience. So, the next time you make a purchasing decision, consider the value that OEM brings to the table.

#appliances#assembly#Automotive#branding#components#computers#contracts#customization#devices#distribution#electronics#hardware#industry#machinery#manufacturing#OEM#Original_Equipment_Manufacturer#outsourcing#Partnerships#parts#production#products#software#specifications#suppliers#technology#telecommunications

0 notes

Photo

Nvidia has announced the availability of DGX Cloud on Oracle Cloud Infrastructure. DGX Cloud is a fast, easy and secure way to deploy deep learning and AI applications. It is the first fully integrated, end-to-end AI platform that provides everything you need to train and deploy your applications.

#AI#Automation#Data Infrastructure#Enterprise Analytics#ML and Deep Learning#AutoML#Big Data and Analytics#Business Intelligence#Business Process Automation#category-/Business & Industrial#category-/Computers & Electronics#category-/Computers & Electronics/Computer Hardware#category-/Computers & Electronics/Consumer Electronics#category-/Computers & Electronics/Enterprise Technology#category-/Computers & Electronics/Software#category-/News#category-/Science/Computer Science#category-/Science/Engineering & Technology#Conversational AI#Data Labelling#Data Management#Data Networks#Data Science#Data Storage and Cloud#Development Automation#DGX Cloud#Disaster Recovery and Business Continuity#enterprise LLMs#Generative AI

0 notes

Text

every gamedev company that touts their latest hundred-gigabyte release as a "technical marvel" or a "revolutionary piece of gaming" should be forced to have all its programmers work on making a fun and functional and complete game on hardware from the 90s. make them work on a game under the constraints of a fantasy console like the pico-8 or the tic-80, even. just something that would fall over and die instantly at the mere presence of an rtx-capable gpu.

i think it should be a regular thing, too. make sure they stay humble.

as i was typing this i remembered that a lot of people get into game dev because they're passionate about it, not because they like making games with such massive filesizes that any kind of computer that isn't cutting edge won't have the space for it. in this case i would like to instead propose that we make the stockholders of these companies make a game. make it a mandatory regular renewal thing. if you're gonna be making money off an industry, you should at least have some idea what goes into the industry in question. plus if it's mandatory and it's recurring, stockholders might be able to sway big execs into improving work conditions. sure it'll probably just be motivated by "i refuse to be put through the industrial wringer" but so long as the improvements aren't just for stockholders then i'd still call that a win

618 notes


Text

It’s Time to Pass the AI Baton From Software to Hardware

New Post has been published on https://thedigitalinsider.com/its-time-to-pass-the-ai-baton-from-software-to-hardware/


It’s unlikely that we’re going to encounter any technology more consequential and important than AI in our lifetimes. The presence of artificial intelligence has already altered the human experience and how technology can reshape our lives, and its trajectory of impact is only getting wider.

With that in mind, AI innovators and leaders have spent the past quarter of a century aggregating data and advancing the models to attain the software that powers generative AI. AI represents the peak of software: An amorphous tool that can reproduce tools to solve problems across abstraction layers. Companies building compute empires or those acquiring LLMs to bolster their software offering are now common sights.

So, where do we go from here?

Even with limitless compute, the collection of deductions using all existing data will asymptotically approach the existing body of human knowledge. Just as humans need to experiment with the external world, the next frontier in AI lies in having the technology interact meaningfully with the physical realm to generate novel data and push the boundaries of knowledge.

Interaction through experimentation

Exploring AI’s potential requires transcending its usage on personal computers or smartphones. Yes, these tools are likely to remain the easiest access points for AI technology, but it does put a limit on what the technology can achieve.

Although the execution left much to be desired, the Ray-Ban Smart Sunglasses powered by Meta’s AI system demonstrated a proof of concept in wearables infused with AI technology. These examples of hardware-first integrations are critical to building the familiarity and usability of AI outside of a device setting because they illustrate how to make these grand technological advancements seamless.

Not every experiment with AI in the real world is going to be a success; that’s precisely why they’re experiments. However, demonstrating the potential of hardware-first AI applications broadens the spectrum of how this technology can be both useful and applicable outside of the “personal assistant” box it’s put in now.

Ultimately, companies showcasing how to make AI practical and legitimate will be the ones to generate experimental data points that you simply cannot get from web applications. Of course, all of this requires compute and infrastructure to properly function, which necessitates a greater influx of investment in building out AI’s physical infrastructure.

But are AI companies ready and willing to do that?

The hardware and software dialogue

It’s easy to say that computationally intense AI applications in physical products will become the norm eventually, but making it a reality demands much more rigor. There are only so many resources and so much will available to go down the road less traveled.

What we’re seeing today is a form of short-term AI overexuberance, mirroring the typical market reaction to disruptive technologies poised to create new industries. So, it’s clear why there may be hesitancy from companies building AI software or dabbling in it to embark on costly and computationally intense hardware outings.

But anyone with a wider outlook can see why this might be a myopic approach to innovation.

Unsurprisingly, there are plenty of comparisons made between the AI boom and the early internet’s dot-com bubble, where projects focused on short-term goals did die off once it burst. But if we were to collectively write off the internet because of the dot-com bubble’s aftermath instead of refocusing on the long-term ideas that have survived long past it, we would be nowhere near the technological landscape we’re in today. Great ideas outlast any trend.

Additionally, compute is the linchpin for any AI innovation to keep progressing. And as any AI developer will tell you—compute is worth its weight in gold. However, that also puts a limit on how many projects can feasibly afford to explore real-world AI applications when model development alone already eats up resources. But no company can maintain market dominance on software alone—no matter how impressive their LLM is.

It’s comfortable for AI companies to lead with software and wait patiently for a hardware provider to swoop in and acquire or license its technology. Not only is this severely limiting, it leaves many incredible projects at the mercy of outsiders who may never come knocking.

AI is a multi-generational technology that will only become more customized and designed for individuals as time progresses. However, it’s up to projects to take advantage of a mostly-even playing field software-wise to take real strides into the physical realm. Without bold experimentation, and even failure, there will be no path forward for AI technology to realize its full potential in improving the human experience.

#ai#AI innovation#AI technology#applications#approach#artificial#Artificial Intelligence#box#Building#Companies#computers#course#data#Developer#development#easy#experimental#form#Full#generative#generative ai#gold#Hardware#how#how to#human#humans#Ideas#impact#Industries

1 note


Note

Is AWAY using its own program or is this just a voluntary list of guidelines for people using programs like DALL-E? How does AWAY address the environmental concerns of how the companies making those AI programs conduct themselves (energy consumption, exploiting impoverished areas for cheap electricity, destruction of the environment to rapidly build and get the components for data centers etc.)? Are members of AWAY encouraged to contact their gov representatives about IP theft by AI apps?

What is AWAY and how does it work?

AWAY does not "use its own program" in the software sense—rather, we're a diverse collective of ~1000 members that each have their own varying workflows and approaches to art. While some members do use AI as one tool among many, most of the people in the server are actually traditional artists who don't use AI at all, yet are still interested in ethical approaches to new technologies.

Our code of ethics is a set of voluntary guidelines that members agree to follow upon joining. These emphasize ethical AI approaches (preferably open-source models that can run locally), respecting artists who oppose AI by not training styles on their art, and refusing to use AI to undercut other artists or work for corporations that similarly exploit creative labor.

Environmental Impact in Context

It's important to place environmental concerns about AI in the context of our broader extractive, industrialized society, where there are virtually no "clean" solutions:

The water usage figures for AI data centers (200-740 million liters annually) represent roughly 0.00013% of total U.S. water usage. This is a small fraction compared to industrial agriculture or manufacturing. For example, golf course irrigation alone in the U.S. consumes approximately 2.08 billion gallons of water per day, which is about 7.87 billion liters per day, or roughly 2.87 trillion liters annually. This makes AI's water usage roughly 0.01% to 0.03% of just golf course irrigation.
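The golf course comparison comes down to a unit conversion, sketched below. The only inputs are the figures quoted in the paragraph above (2.08 billion gallons per day, and 200-740 million liters per year for AI data centers) plus the standard size of a US gallon in liters. The variable names are illustrative.

```python
LITERS_PER_US_GALLON = 3.785411784   # exact definition of the US liquid gallon
GOLF_GALLONS_PER_DAY = 2.08e9        # figure quoted in the post
AI_LITERS_PER_YEAR_LOW = 200e6       # lower bound quoted in the post
AI_LITERS_PER_YEAR_HIGH = 740e6      # upper bound quoted in the post

# Convert the daily golf irrigation figure to liters, then annualize it.
golf_liters_per_day = GOLF_GALLONS_PER_DAY * LITERS_PER_US_GALLON
golf_liters_per_year = golf_liters_per_day * 365

# Express AI data center water use as a percentage of golf irrigation.
share_low_pct = AI_LITERS_PER_YEAR_LOW / golf_liters_per_year * 100
share_high_pct = AI_LITERS_PER_YEAR_HIGH / golf_liters_per_year * 100

print(f"golf irrigation: {golf_liters_per_day:.3g} L/day, {golf_liters_per_year:.3g} L/year")
print(f"AI share of golf irrigation: {share_low_pct:.4f}% to {share_high_pct:.4f}%")
```

With these inputs, the daily golf figure is about 7.87 billion liters and the annual figure about 2.87 trillion liters, so AI's share falls between roughly 0.007% and 0.026% of golf course irrigation.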

Looking into individual usage, the average American consumes about 26.8 kg of beef annually, which takes around 1,608 megajoules (MJ) of energy to produce. Making 10 ChatGPT queries daily for an entire year (3,650 queries) consumes just 38.1 MJ—about 42 times less energy than eating beef. In fact, a single quarter-pound beef patty takes 651 times more energy to produce than a single AI query.
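The ratios in the paragraph above can be reproduced from its own figures. The sketch below uses only the numbers quoted there, plus the standard conversion of a quarter pound to kilograms; the variable names are illustrative and nothing here is measured independently.

```python
BEEF_KG_PER_YEAR = 26.8          # quoted annual beef consumption
BEEF_MJ_PER_YEAR = 1608.0        # quoted energy to produce that beef
QUERIES_PER_YEAR = 10 * 365      # 10 queries a day for a year
QUERIES_MJ_PER_YEAR = 38.1       # quoted energy for those queries
KG_PER_POUND = 0.45359237        # exact definition of the avoirdupois pound

# Energy intensities implied by the quoted figures.
beef_mj_per_kg = BEEF_MJ_PER_YEAR / BEEF_KG_PER_YEAR
mj_per_query = QUERIES_MJ_PER_YEAR / QUERIES_PER_YEAR
wh_per_query = mj_per_query * 1e6 / 3600   # 1 Wh = 3600 J

# Annual comparison: a year of beef versus a year of queries.
annual_ratio = BEEF_MJ_PER_YEAR / QUERIES_MJ_PER_YEAR

# Single-item comparison: one quarter-pound patty versus one query.
patty_mj = 0.25 * KG_PER_POUND * beef_mj_per_kg
patty_ratio = patty_mj / mj_per_query

print(f"beef: {beef_mj_per_kg:.1f} MJ/kg, query: {wh_per_query:.2f} Wh")
print(f"annual ratio: {annual_ratio:.1f}x, patty vs query: {patty_ratio:.0f}x")
```

The quoted numbers are internally consistent: they imply about 60 MJ per kilogram of beef and about 2.9 Wh per query, which gives the 42x annual ratio and a patty-to-query ratio just under 652.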

Overall, power usage specific to AI represents just 4% of total data center power consumption, which itself is a small fraction of global energy usage. Current annual energy usage for data centers is roughly 9-15 TWh globally—comparable to producing a relatively small number of vehicles.

The consumer environmentalism narrative around technology often ignores how imperial exploitation pushes environmental costs onto the Global South. The rare earth minerals needed for computing hardware, the cheap labor for manufacturing, and the toxic waste from electronics disposal disproportionately burden developing nations, while the benefits flow largely to wealthy countries.

While this pattern isn't unique to AI, it is fundamental to our global economic structure. The focus on individual consumer choices (like whether or not one should use AI, for art or otherwise) distracts from the much larger systemic issues of imperialism, extractive capitalism, and global inequality that drive environmental degradation at a massive scale.

They are not going to stop building the data centers, and they weren't going to even if AI never got invented.

Creative Tools and Environmental Impact

In actuality, all creative practices have some sort of environmental impact in an industrialized society:

Digital art software (such as Photoshop, Blender, etc.) generally draws 60-300 watts while running, or 60-300 watt-hours for each hour of use, depending on your computer's specifications. This is typically more energy than dozens, if not hundreds, of AI image generations (maybe even thousands if you are using a particularly low-quality one).

Traditional art supplies rely on similar if not worse scales of resource extraction, chemical processing, and global supply chains, all of which come with their own environmental impact.

Paint production requires roughly thirteen gallons of water to manufacture one gallon of paint.

Many oil paints contain toxic heavy metals and solvents, which have the potential to contaminate ground water.

Synthetic brushes are made from petroleum-based plastics that take centuries to decompose.
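The digital art software figure at the top of this list can be put on the same scale as the per-query energy quoted earlier in the post (38.1 MJ for 3,650 queries). The sketch below makes one loud assumption: that a single AI generation costs about as much as that quoted text query, which the post does not establish, so the result is only a rough order of magnitude. The names are illustrative.

```python
QUERIES_MJ_PER_YEAR = 38.1            # quoted earlier in the post
QUERIES_PER_YEAR = 3650               # 10 queries a day for a year
ART_POWER_WATTS = (60.0, 300.0)       # quoted draw range for digital art software

# Energy per query in watt-hours (1 Wh = 3600 J).
# Assumption: one AI generation costs about the same as one quoted query.
wh_per_query = QUERIES_MJ_PER_YEAR * 1e6 / QUERIES_PER_YEAR / 3600

# One hour at P watts consumes P watt-hours.
hours = 1.0
equivalents = [watts * hours / wh_per_query for watts in ART_POWER_WATTS]

low, high = equivalents
print(f"one hour of digital art software is roughly {low:.0f} to {high:.0f} queries")
```

Under that assumption, an hour of digital art work corresponds to roughly 20 to 100 queries, which sits in the "dozens, if not hundreds" range the post describes.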

That being said, the point of this section isn't to deflect criticism of AI by criticizing other art forms. Rather, it's important to recognize that we live in a society where virtually all artistic avenues have environmental costs. Focusing exclusively on the newest technologies while ignoring the environmental costs of pre-existing tools and practices doesn't help to solve any of the issues with our current or future waste.

The largest environmental problems come not from individual creative choices, but rather from industrial-scale systems, such as:

Industrial manufacturing (responsible for roughly 22% of global emissions)

Industrial agriculture (responsible for roughly 24% of global emissions)

Transportation and logistics networks (responsible for roughly 14% of global emissions)

Making changes on an individual scale, while meaningful on a personal level, can't address systemic issues without broader policy changes and overall restructuring of global economic systems.

Intellectual Property Considerations

AWAY doesn't encourage members to contact government representatives about "IP theft" for multiple reasons:

We acknowledge that copyright law overwhelmingly serves corporate interests rather than individual creators

Creating new "learning rights" or "style rights" would further empower large corporations while harming individual artists and fan creators

Many AWAY members live outside the United States, and many of them have been directly harmed by the US, so they understand that intellectual property regimes are often tools of imperial control that benefit wealthy nations

Instead, we emphasize respect for artists who are protective of their work and style. Our guidelines explicitly prohibit imitating the style of artists who have voiced their distaste for AI. We work on an opt-in model under which traditional artists can give, and later revoke, permission as they see fit. This approach is about respect, not legal enforcement. We are not a pro-copyright group.

In Conclusion

AWAY aims to cultivate thoughtful, ethical engagement with new technologies, while also holding respect for creative communities outside of itself. As a collective, we recognize that real environmental solutions require addressing concepts such as imperial exploitation, extractive capitalism, and corporate power—not just focusing on individual consumer choices, which do little to change the current state of the world we live in.

When discussing environmental impacts, it's important to keep perspective on a relative scale, and to avoid ignoring major issues in favor of smaller ones. We promote balanced discussions based in concrete fact, with the belief that they can lead to meaningful solutions, rather than misplaced outrage that ultimately serves to maintain the status quo.

If this resonates with you, please feel free to join our Discord. :)

Works Cited:

USGS Water Use Data: https://www.usgs.gov/mission-areas/water-resources/science/water-use-united-states

Golf Course Superintendents Association of America water usage report: https://www.gcsaa.org/resources/research/golf-course-environmental-profile

Equinix data center water sustainability report: https://www.equinix.com/resources/infopapers/corporate-sustainability-report

Environmental Working Group's Meat Eater's Guide (beef energy calculations): https://www.ewg.org/meateatersguide/

Hugging Face AI energy consumption study: https://huggingface.co/blog/carbon-footprint

International Energy Agency report on data centers: https://www.iea.org/reports/data-centres-and-data-transmission-networks

Goldman Sachs "Generational Growth" report on AI power demand: https://www.goldmansachs.com/intelligence/pages/gs-research/generational-growth-ai-data-centers-and-the-coming-us-power-surge/report.pdf

Artists Network's guide to eco-friendly art practices: https://www.artistsnetwork.com/art-business/how-to-be-an-eco-friendly-artist/

The Earth Chronicles' analysis of art materials: https://earthchronicles.org/artists-ironically-paint-nature-with-harmful-materials/

Natural Earth Paint's environmental impact report: https://naturalearthpaint.com/pages/environmental-impact

Our World in Data's global emissions by sector: https://ourworldindata.org/emissions-by-sector

"The High Cost of High Tech" report on electronics manufacturing: https://goodelectronics.org/the-high-cost-of-high-tech/

"Unearthing the Dirty Secrets of the Clean Energy Transition" (on rare earth mineral mining): https://www.theguardian.com/environment/2023/apr/18/clean-energy-dirty-mining-indigenous-communities-climate-crisis

Electronic Frontier Foundation's position paper on AI and copyright: https://www.eff.org/wp/ai-and-copyright

Creative Commons research on enabling better sharing: https://creativecommons.org/2023/04/24/ai-and-creativity/

Text

The Metaverse: A New Frontier in Digital Interaction

The concept of the metaverse has captivated the imagination of technologists, futurists, and businesses alike. Envisioned as a collective virtual shared space, the metaverse merges physical and digital realities, offering immersive experiences and unprecedented opportunities for interaction, commerce, and creativity. This article delves into the metaverse, its potential impact on various sectors, the technologies driving its development, and notable projects shaping this emerging landscape.

What is the Metaverse?

The metaverse is a digital universe that encompasses virtual and augmented reality, providing a persistent, shared, and interactive online environment. In the metaverse, users can create avatars, interact with others, attend virtual events, own virtual property, and engage in economic activities. Unlike traditional online experiences, the metaverse aims to replicate and enhance the real world, offering seamless integration of the physical and digital realms.

Key Components of the Metaverse

Virtual Worlds: Virtual worlds are digital environments where users can explore, interact, and create. Platforms like Decentraland, Sandbox, and VRChat offer expansive virtual spaces where users can build, socialize, and participate in various activities.

Augmented Reality (AR): AR overlays digital information onto the real world, enhancing user experiences through devices like smartphones and AR glasses. Examples include Pokémon GO and AR navigation apps that blend digital content with physical surroundings.

Virtual Reality (VR): VR provides immersive experiences through headsets that transport users to fully digital environments. Product lines like Meta's Oculus, HTC's Vive, and Sony's PlayStation VR are leading the way in developing advanced VR hardware and software.

Blockchain Technology: Blockchain plays a crucial role in the metaverse by enabling decentralized ownership, digital scarcity, and secure transactions. NFTs (Non-Fungible Tokens) and cryptocurrencies are integral to the metaverse economy, allowing users to buy, sell, and trade virtual assets.

Digital Economy: The metaverse features a robust digital economy where users can earn, spend, and invest in virtual goods and services. Virtual real estate, digital art, and in-game items are examples of assets that hold real-world value within the metaverse.

Potential Impact of the Metaverse

Social Interaction: The metaverse offers new ways for people to connect and interact, transcending geographical boundaries. Virtual events, social spaces, and collaborative environments provide opportunities for meaningful engagement and community building.

Entertainment and Gaming: The entertainment and gaming industries are poised to benefit significantly from the metaverse. Immersive games, virtual concerts, and interactive storytelling experiences offer new dimensions of engagement and creativity.

Education and Training: The metaverse has the potential to revolutionize education and training by providing immersive, interactive learning environments. Virtual classrooms, simulations, and collaborative projects can enhance educational outcomes and accessibility.

Commerce and Retail: Virtual shopping experiences and digital marketplaces enable businesses to reach global audiences in innovative ways. Brands can create virtual storefronts, offer unique digital products, and engage customers through immersive experiences.

Work and Collaboration: The metaverse can transform the future of work by providing virtual offices, meeting spaces, and collaborative tools. Remote work and global collaboration become more seamless and engaging in a fully digital environment.

Technologies Driving the Metaverse

5G Connectivity: High-speed, low-latency 5G networks are essential for delivering seamless and responsive metaverse experiences. Enhanced connectivity enables real-time interactions and high-quality streaming of immersive content.

Advanced Graphics and Computing: Powerful graphics processing units (GPUs) and cloud computing resources are crucial for rendering detailed virtual environments and supporting large-scale metaverse platforms.

Artificial Intelligence (AI): AI enhances the metaverse by enabling realistic avatars, intelligent virtual assistants, and dynamic content generation. AI-driven algorithms can personalize experiences and optimize virtual interactions.

Wearable Technology: Wearable devices, such as VR headsets, AR glasses, and haptic feedback suits, provide users with immersive and interactive experiences. Advancements in wearable technology are critical for enhancing the metaverse experience.

Notable Metaverse Projects

Decentraland: Decentraland is a decentralized virtual world where users can buy, sell, and develop virtual real estate as NFTs. The platform offers a wide range of experiences, from gaming and socializing to virtual commerce and education.

Sandbox: Sandbox is a virtual world that allows users to create, own, and monetize their gaming experiences using blockchain technology. The platform's user-generated content and virtual real estate model have attracted a vibrant community of creators and players.

Facebook's Meta: Facebook's rebranding to Meta underscores its commitment to building the metaverse. Meta aims to create interconnected virtual spaces for social interaction, work, and entertainment, leveraging its existing social media infrastructure.

Roblox: Roblox is an online platform that enables users to create and play games developed by other users. With its extensive user-generated content and virtual economy, Roblox exemplifies the potential of the metaverse in gaming and social interaction.

Sexy Meme Coin (SEXXXY): Sexy Meme Coin integrates metaverse elements by offering a decentralized marketplace for buying, selling, and trading memes as NFTs. This unique approach combines humor, creativity, and digital ownership, adding a distinct flavor to the metaverse landscape.

The Future of the Metaverse

The metaverse is still in its early stages, but its potential to reshape digital interaction is immense. As technology advances and more industries explore its possibilities, the metaverse is likely to become an integral part of our daily lives. Collaboration between technology providers, content creators, and businesses will drive the development of the metaverse, creating new opportunities for innovation and growth.

Conclusion

The metaverse represents a new frontier in digital interaction, offering immersive and interconnected experiences that bridge the physical and digital worlds. With its potential to transform social interaction, entertainment, education, commerce, and work, the metaverse is poised to revolutionize various aspects of our lives. Notable projects like Decentraland, Sandbox, Meta, Roblox, and Sexy Meme Coin are at the forefront of this transformation, showcasing the diverse possibilities within this emerging digital universe.

For those interested in the playful and innovative side of the metaverse, Sexy Meme Coin offers a unique and entertaining platform. Visit Sexy Meme Coin to explore this exciting project and join the community.

Note

Since some people might want a Mac, I'll offer a Mac equivalent of your laptop guide from the perspective of a Mac/Linux person.

Even the cheapest Macs cost more than Windows laptops, but part of that is Apple not making anything for the low end of the tech spectrum. There is no Mac equivalent to an Intel i3 with 4 gigabytes of RAM. This makes it a lot easier to find the laptop you need.

That said, it is possible to buy the wrong Mac for you, and the wrong Mac for you is the 13-inch MacBook Pro with the Touch Bar. Get literally anything else. If it has an M2 chip in it, it's the most recent model and will serve you well for several years. Any new MacBook Air is a good pick.

(You could wait for new Macs with M3, but I wouldn't bother. If you are reading these guides, the M3 isn't going to do anything you need done that an M2 couldn't.)

Macs now have integrated storage and memory, so you should be aware that whatever internal storage and RAM you get, you'll be stuck with. But if you would be willing to get a 256 gig SSD in a Windows laptop, the Mac laptop with 256 gigs of storage will be just as good, and if you'd be willing to get 8 gigs of RAM in a Windows laptop the Mac will perform slightly better with the same amount of memory.

Buy a small external hard drive and hook it up so Time Machine can make daily backups of your laptop. Turn on iCloud Drive so your documents are available anywhere you can use a web browser. And get AppleCare because it will almost certainly be a waste of money but wooooooow will you be glad it's there if you need it.

I get that you are trying to help and I am not trying to be mean to you specifically, but people shouldn't buy apple computers. That's why I didn't provide specs for them. Apple is a company that is absolutely terrible to its customers and its customers deserve better than what apple is willing to offer.

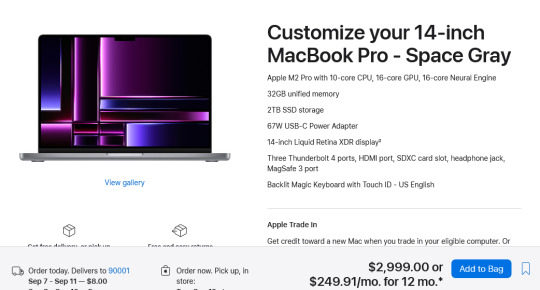

Apple charges $800 to upgrade the onboard storage from a 256GB SSD to a 2TB SSD.

A 2TB SSD costs between $75-100.

I maintain that any company that would charge you more than half the cost of a new device to install a $100 part on day one is a company making the wrong computer for you.

The point of being willing to tolerate a 256GB SSD or 8GB RAM in a Windows laptop is that you're deferring some of the cost to save money at the time of purchase so that you can spend a little bit in three years instead of having to replace the entire computer. Because, you see, many people cannot afford to pay $1000 for a computer and need to buy a computer that costs $650 and will add $200 worth of hardware at a later date.

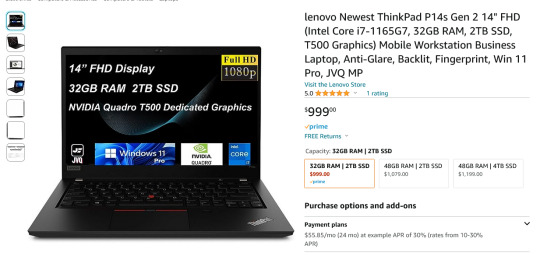

My minimum specs recommendations for a mac would be to configure one with the max possible RAM and SSD, look at the cost, and choose to go buy three i7 windows laptops with the same storage and RAM for less than the sticker price of the macs.

So let's say you want to get a 14" Macbook pro with the lowest-level processor. That's $2000. Now let's bump that from 16GB RAM and a 512GB SSD to 32GB and 2TB. That gets you to $3000. (The SSD is $200 less than on the lower model, and they'll let you put in an 8TB SSD for $1800 on this model; that's not available on the 13" because apple's product development team is entirely staffed by assholes who think you deserve a shitty computer if you can't afford to pay the cost of two 1991 Jeep Cherokee Laredos for a single laptop).

For $3000 you can get 3 Lenovo Workstation laptops with i7 processors, 32GB RAM, and a 2TB SSD.

And look, for just $200 more I could go up to 48GB RAM and get a 4TB SSD - it costs $600 to upgrade the 14" mac from a 2TB SSD to a 4TB SSD so you could still get three laptops with more ram and the same amount of storage for the cost of one macbook.
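The arithmetic behind that comparison can be sketched in a few lines. Every price is one quoted in this post; the $1000 per Lenovo laptop is just the "3 for $3000" figure divided by three, and the function names are made up for illustration.

```python
def markup(upgrade_price: float, part_price: float) -> float:
    """How many times the retail part price the upgrade costs."""
    return upgrade_price / part_price


def units_for_budget(budget: int, unit_price: int) -> int:
    """How many whole machines a budget buys at a given unit price."""
    return budget // unit_price


# Apple's $800 upgrade to a 2TB SSD vs. a $75-100 retail 2TB SSD.
print(round(markup(800, 100), 1))   # 8.0
print(round(markup(800, 75), 1))    # 10.7

# The $3000 configured MacBook Pro vs. $1000 laptops with the same RAM and storage.
print(units_for_budget(3000, 1000))  # 3
```

This only compares sticker prices; it says nothing about build quality, software, or resale value.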

I get that some people need to use Final Cut and Logic Pro, but hoo boy they sure are charging you through the nose to use products that have become industry standard. The words "capture" and "monopoly" come to mind even though they don't quite apply here.

"Hostile" does, though, especially since Mac users end up locked into the ecosystem through software and cloud services and become uncertain how to leave it behind if they ever decide that a computer should cost less than a month's rent on a shitty studio apartment in LA.

There's a very good reason I didn't give mac advice and that's because my mac advice is "DON'T."

#sorry i swear i know you're being nice #i am incapable of being nice when talking about apple #i was a total apple fangirl until the unibody #which is the domino that started all the other companies pulling shit like soldered RAM #they said 'fuck you - users shouldn't service their own computers' and I say 'fuck apple - users shouldn't use macs' #and that has been my stance on the matter since 2012 #which was the last time i bought a macbook because i knew i'd never buy a computer that would fight me to change my own battery

Text

One of the things enterprise storage and destruction company Iron Mountain does is handle the archiving of the media industry's vaults. What it has been seeing lately should be a wake-up call: Roughly one-fifth of the hard disk drives dating to the 1990s it was sent are entirely unreadable.

Music industry publication Mix spoke with the people in charge of backing up the entertainment industry. The resulting tale is part explainer on how music is so complicated to archive now, part warning about everyone's data stored on spinning disks.

"In our line of work, if we discover an inherent problem with a format, it makes sense to let everybody know," Robert Koszela, global director for studio growth and strategic initiatives at Iron Mountain, told Mix. "It may sound like a sales pitch, but it's not; it's a call for action."

Hard drives gained popularity over spooled magnetic tape as digital audio workstations and mixing and editing software took hold, and as the perceived downsides of tape, including deterioration from substrate separation and fire, became harder to ignore. But hard drives present their own archival problems. Standard hard drives were not designed for long-term archival use. You can almost never decouple the magnetic disks from the reading hardware inside, so if either fails, the whole drive dies.

There are also general computer storage issues, including the separation of samples and finished tracks, or proprietary file formats requiring archival versions of software. Still, Iron Mountain tells Mix that “if the disk platters spin and aren’t damaged,” it can access the content.

But "if it spins" is becoming a big question mark. Musicians and studios now digging into their archives to remaster tracks often find that drives, even when stored at industry-standard temperature and humidity, have failed in some way, with no partial recovery option available.

“It’s so sad to see a project come into the studio, a hard drive in a brand-new case with the wrapper and the tags from wherever they bought it still in there,” Koszela says. “Next to it is a case with the safety drive in it. Everything’s in order. And both of them are bricks.”

Entropy Wins

Mix's passing along of Iron Mountain's warning hit Hacker News earlier this week, which spurred other tales of faith in the wrong formats. The gist of it: You cannot trust any medium, so you copy important things over and over, into fresh storage. "Optical media rots, magnetic media rots and loses magnetic charge, bearings seize, flash storage loses charge, etc.," writes user abracadaniel. "Entropy wins, sometimes much faster than you’d expect."
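Copying to fresh storage only helps if the copy is actually intact. One common way to check that is to compare checksums before and after the copy. The sketch below is an illustration of that idea, not Iron Mountain's process or anything described in the Mix article; the function names and the demo file name are made up.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_and_verify(src: Path, dst: Path) -> str:
    """Copy src to dst, then confirm both files hash to the same value."""
    expected = sha256_of(src)
    shutil.copy2(src, dst)  # copy2 also preserves timestamps where it can
    actual = sha256_of(dst)
    if actual != expected:
        raise IOError(f"checksum mismatch copying {src} to {dst}")
    return actual


# Demo on a throwaway file in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "master.wav"
    src.write_bytes(b"example audio bytes")
    dst = Path(tmp) / "master_copy.wav"
    checksum = copy_and_verify(src, dst)
    print(dst.name, checksum[:12])
```

Reading a file back right after writing it may be served from the operating system's cache rather than the disk, so a check like this is a floor, not a guarantee. Storing the checksum next to the archive lets you re-verify the copy years later.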

There is discussion of how SSDs are not archival at all; how floppy disk quality varied greatly between the 1980s, 1990s, and 2000s; how Linear Tape-Open, a format specifically designed for long-term tape storage, loses compatibility over successive generations; how the binder sleeves we put our CD-Rs and DVD-Rs in have allowed them to bend too much and stop being readable.

Knowing that hard drives will eventually fail is nothing new. Ars wrote about the five stages of hard drive death, including denial, back in 2005. Last year, backup company Backblaze shared failure data on specific drives, showing that drives that fail tend to fail within three years, that no drive was totally exempt, and that time does, generally, wear down all drives. Google's server drive data showed in 2007 that HDD failure was mostly unpredictable, and that temperatures were not really the deciding factor.

So Iron Mountain's admonition to music companies is yet another warning about something we've already heard. But it's always good to get some new data about just how fragile a good archive really is.

Text

Teeeni (1988) Nanyang Technological Institute (NTI), School of Electrical and Electronic Engineering (EEE), Singapore.

"Prof Goh said the institute's School of Electrical and Electronic Engineering first started experimenting with micromouse building in 1987, using a commercially available kit from Japan. The result, named Rattus-Rodee, was not only bulky and clumsy, but extremely slow. Undaunted by the poor performance, NTI rounded up a group of lecturers with expertise ranging from microprocessor hardware, computer software, motor control, sensor technology to artificial intelligence. At least 80 students at different levels of study were recruited for the programme. What helped most, Prof Goh recalled, was the in-house programme which required second-year students to undergo 10 weeks of intensive practical training during their long vacation. This exposed them to different training modules which included industrial talks, factory visits, mechanical workshop and workstation education. Having been taught the basics, the students were challenged to build micromouses and compete for prizes in campus contests. This resulted in successive generations of micromouses, each of which was an improvement of the previous one. "Ruth", which came after Rattus-Rodee, was reduced in size and weight and travelled more accurately, but was still slow. Though sleek, the third-generation model "Teeeni" was still heavy and slow. But both performed creditably in the National Micromouse Contest last year. Ruth was in fourth position and Teeeni was placed second."

– Mightiest Mouse, by Nancy Koh, The Straits Times, 6 November 1989.

The video is an excerpt from "UK Micromouse 1989."
